A Gaussian-Distributed Quantum Random Number Generator Using Vacuum Shot Noise

Abstract

1. Introduction

2. Analysis of Gaussian Distribution QRNG Scheme

2.1. Gaussian Random Source and Entropy Estimation

2.1.1. Vacuum Fluctuation

2.1.2. Entropy Estimation

2.2. Impact of Sampling Device

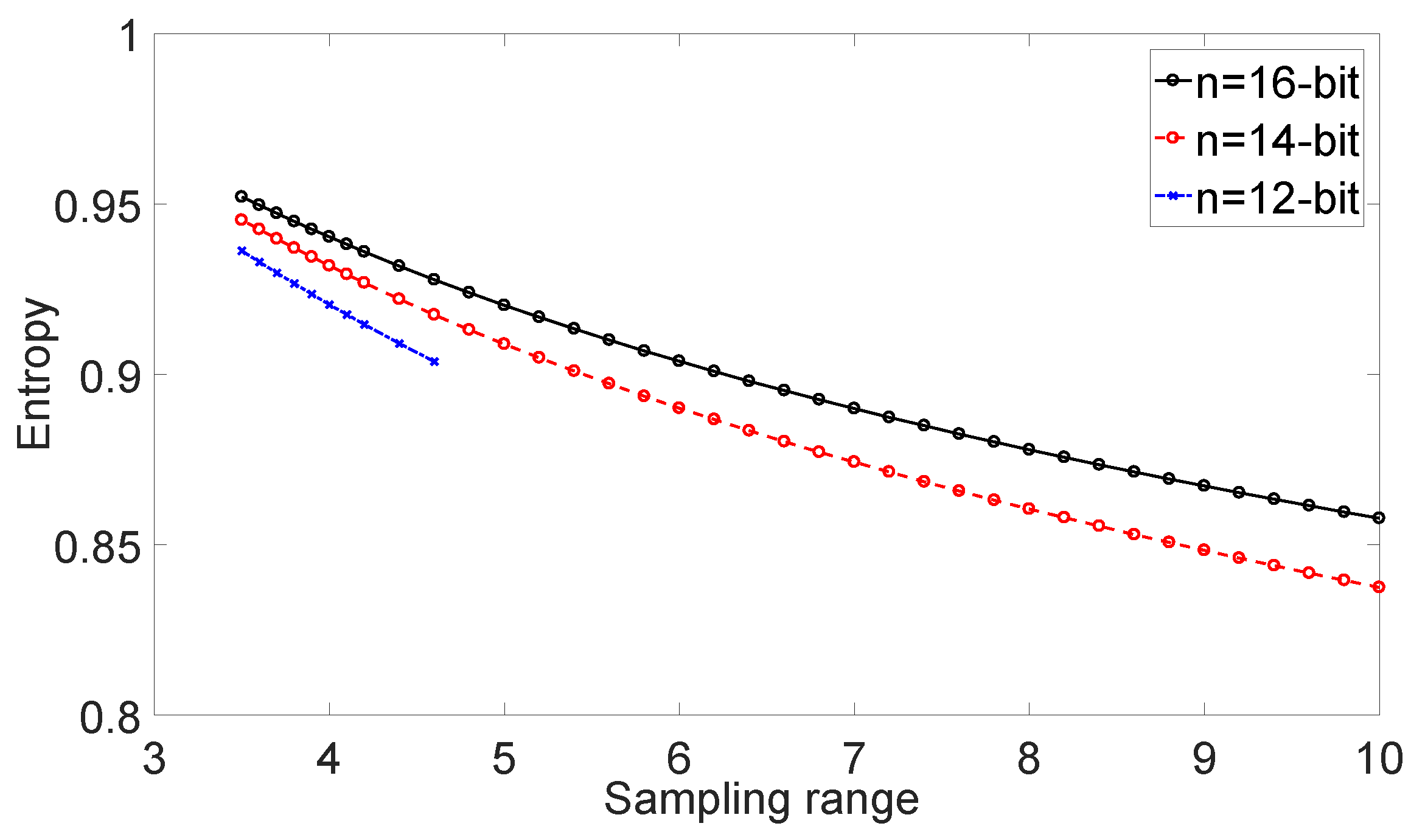

2.2.1. Sampling Range

- If k is too small, saturation (samples clipped into the boundary bins) will occur too often, making the random variable more predictable and reducing the entropy. Furthermore, the distorted Gaussian profile is more likely to fail the GoF test, which does not meet our requirements for post-processing and applications;

- If k is too large, most signals will fall within a small range of sampling bins, making the most significant bits (MSBs) of the samples more predictable and also reducing the entropy. Moreover, many sampling bins remain unoccupied, which wastes the dynamic range of the device and substantially reduces the sampling precision.
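The trade-off above can be made concrete with a small numerical sketch. It is not the paper's entropy estimator: it assumes (our assumption, since the exact definition of k is not restated here) that the ADC covers [−kσ, kσ] with 2^n equal bins, that out-of-range samples are clipped into the two edge bins, and it reports the min-entropy −log2 max_i p_i of the resulting discrete distribution. The function names are hypothetical.

```python
import math


def norm_cdf(x: float) -> float:
    """Standard normal CDF via the error function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def bin_probabilities(k: float, n_bits: int) -> list[float]:
    """Probability of each ADC code for N(0, 1) samples, assuming a sampling
    range of [-k, k] (i.e. [-k*sigma, k*sigma] with sigma = 1) split into
    2**n_bits equal bins; samples outside the range are clipped into the
    first or last bin (assumed saturation behaviour)."""
    n_bins = 2 ** n_bits
    width = 2.0 * k / n_bins
    edges = [-k + i * width for i in range(n_bins + 1)]
    probs = [norm_cdf(edges[i + 1]) - norm_cdf(edges[i]) for i in range(n_bins)]
    probs[0] += norm_cdf(-k)        # lower tail saturates at the lowest code
    probs[-1] += 1.0 - norm_cdf(k)  # upper tail saturates at the highest code
    return probs


def min_entropy(probs: list[float]) -> float:
    """Min-entropy in bits: -log2 of the most likely outcome."""
    return -math.log2(max(probs))


if __name__ == "__main__":
    for k in (0.5, 1.0, 2.0, 3.0, 4.0, 8.0, 20.0):
        h = min_entropy(bin_probabilities(k, n_bits=8))
        print(f"k = {k:5.1f}  H_min = {h:.3f} bits per 8-bit sample")
```

Sweeping k for an 8-bit ADC shows the min-entropy falling off at both ends: at small k the clipped edge bins become the most likely outcomes, while at large k a few central bins dominate.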

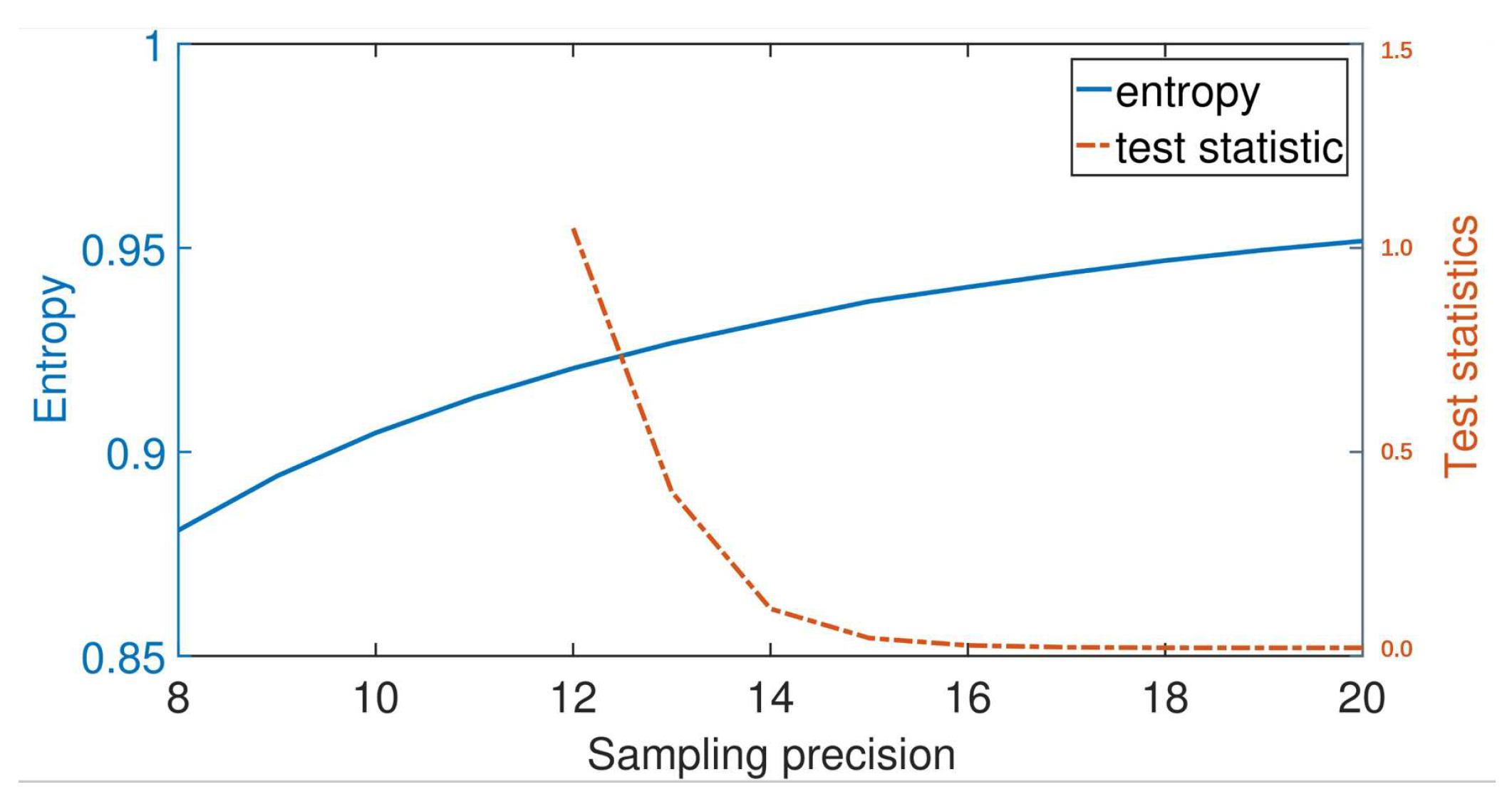

2.2.2. Sampling Resolution

2.2.3. Sampling Depth

3. Post-Processing

- The elements of the matrix, which are the weights in Equation (10), are not fixed, as long as they obey a few basic rules. For the basic matrix, each row (column) should contain three positive elements and one negative element, or one positive and three negative elements, and the positions of these lone-sign elements should differ from row to row; likewise, the absolute values within each row and each column should all be different. This leaves a family of hundreds of admissible matrices;

- The size of the matrix can also be designed; it determines how many raw numbers are combined to generate each final number. We take the basic matrix above as the simplest example for demonstration. However, when the precision after m-MSB pre-processing is inadequate, a larger matrix should be used. For instance, in the implementation section, we generate 12-bit Gaussian-distributed numbers from 5-bit pre-processed data by using a larger matrix. Larger matrices offer the potential for even higher precision: for example, 5-bit pre-processed data combined through a still larger matrix yields 20-bit Gaussian-distributed random numbers for applications that require accurate tails at many standard deviations;

- The values of the matrix elements can also be designed; they indicate how many bits each pre-processed number is shifted. In the discussion above, the weights of adjacent numbers always follow powers of 2, which means that adjacent input numbers are shifted by one bit relative to each other in the summation. If the base is changed to 4, adjacent input numbers are instead shifted by two bits. Note that, according to Equation (17), the normalization coefficient must be recalculated for the chosen design so that the input and output share the same variance.
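The matrix rules and the variance-matching step can be illustrated together with a minimal Python sketch. Everything in it is an assumption for illustration: `is_admissible` encodes our reading of the rules for the basic matrix (rows and columns of four weights), `shift_matrix` builds one hypothetical admissible example whose rows are cyclic shifts of base^0, ..., base^3 with one negative entry per row, and the normalization coefficient c is chosen so that c² Σ_j A_ij² = 1, which keeps the output variance equal to the input variance for independent, zero-mean inputs. It stands in for, and does not reproduce, Equations (10) and (17); the inputs are idealized continuous Gaussians rather than 5-bit pre-processed samples.

```python
import math
import random


def is_admissible(matrix: list[list[int]]) -> bool:
    """Check a candidate weight matrix against the rules as read here:
    a square matrix with rows of four nonzero weights; every row and column
    has exactly one negative entry (or, for the whole matrix, exactly one
    positive entry), so the lone-sign entries occupy distinct positions;
    and no absolute value repeats within any row or column."""
    n = len(matrix)
    if n != 4 or any(len(row) != n for row in matrix):
        return False
    if any(a == 0 for row in matrix for a in row):
        return False
    columns = [[matrix[i][j] for i in range(n)] for j in range(n)]
    lines = matrix + columns
    negatives = {sum(a < 0 for a in line) for line in lines}
    if negatives not in ({1}, {3}):
        return False
    return all(len({abs(a) for a in line}) == n for line in lines)


def shift_matrix(base: int = 2, n: int = 4) -> list[list[int]]:
    """Hypothetical example: row i holds base**((i + j) % n) in column j,
    negated on the anti-diagonal (one negative per row and column)."""
    rows = []
    for i in range(n):
        row = [base ** ((i + j) % n) for j in range(n)]
        row[n - 1 - i] = -row[n - 1 - i]
        rows.append(row)
    return rows


def normalization(matrix: list[list[int]]) -> float:
    """Coefficient c with c**2 * sum_j A_ij**2 == 1 for every row i."""
    energies = {sum(a * a for a in row) for row in matrix}
    if len(energies) != 1:
        raise ValueError("all rows must have the same sum of squared weights")
    return 1.0 / math.sqrt(energies.pop())


def combine(matrix: list[list[int]], x: list[float]) -> list[float]:
    """Normalized weighted sums y_i = c * sum_j A_ij * x_j."""
    c = normalization(matrix)
    return [c * sum(a * xj for a, xj in zip(row, x)) for row in matrix]


if __name__ == "__main__":
    rng = random.Random(2020)
    for base in (2, 4):  # one-bit vs. two-bit shifts between adjacent weights
        A = shift_matrix(base)
        ys = []
        for _ in range(25_000):
            ys.extend(combine(A, [rng.gauss(0.0, 1.0) for _ in range(4)]))
        var = sum(y * y for y in ys) / len(ys)  # outputs are zero-mean since inputs are
        print(f"base {base}: admissible={is_admissible(A)}, "
              f"c={normalization(A):.5f}, output variance={var:.3f}")
```

For base 2 every row holds the weights {1, 2, 4, 8} in some order, so Σ_j A_ij² = 85 and c = 1/√85; switching to base 4 (two-bit shifts) raises the sum to 4369. The sketch checks only the output variance, not correlations between outputs of the same block.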

4. Implementation and Results

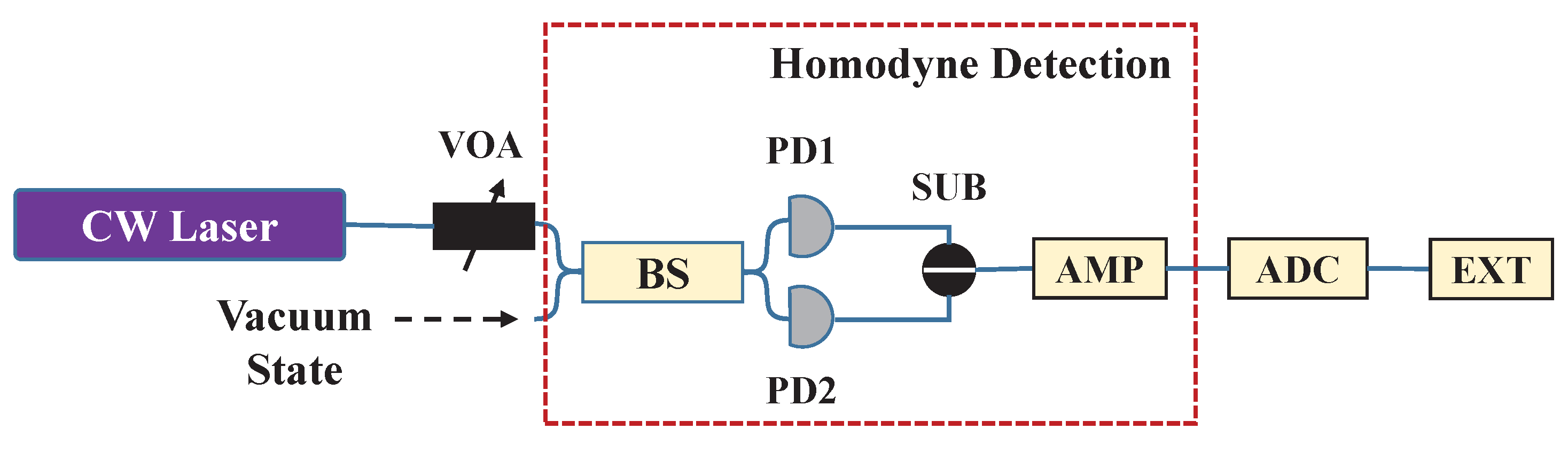

4.1. Experimental Setup

4.2. Test Results

Normality Tests

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| QRNG | Quantum Random Number Generator |
| QKD | Quantum Key Distribution |
| PDF/CDF | Probability Density Function / Cumulative Distribution Function |
| ADC | Analog-to-Digital Converter |
| QCNR | Quantum-to-Classical Noise Ratio |
| GoF | Goodness of Fit |
| MSB/LSB | Most/Least Significant Bit |

Appendix A. Goodness of Fit Tests

Appendix B. PDF Conversion between Uniform and Gaussian Distribution

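- Box-Muller [63]: uniform and Gaussian variables can be easily converted into each other through the mapping between rectangular and polar coordinates. Assuming that $U_1, U_2$ are independent uniform variables on $(0, 1]$ and $X_1, X_2$ are independent standard Gaussian variables, there exist:

  $$X_1 = \sqrt{-2\ln U_1}\,\cos(2\pi U_2), \qquad X_2 = \sqrt{-2\ln U_1}\,\sin(2\pi U_2),$$

  while for the inverse conversion:

  $$U_1 = \exp\!\left(-\frac{X_1^2 + X_2^2}{2}\right), \qquad U_2 = \frac{\operatorname{atan2}(X_2, X_1)}{2\pi} \bmod 1.$$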

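- CDF method [54]: uniform and Gaussian variables can be converted by the cumulative distribution function (CDF) and its inverse function, the ICDF. Assuming that $U$ is a uniform variable on $(0, 1)$ and $X$ is a standard Gaussian variable, there exist:

  $$U = \Phi(X), \qquad X = \Phi^{-1}(U),$$

  where $\Phi$ is denoted as:

  $$\Phi(x) = \frac{1}{\sqrt{2\pi}}\int_{-\infty}^{x} e^{-t^2/2}\,\mathrm{d}t = \frac{1}{2}\left[1 + \operatorname{erf}\!\left(\frac{x}{\sqrt{2}}\right)\right].$$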
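Both conversions can be checked numerically with a short round-trip sketch. It uses only the Python standard library (`statistics.NormalDist`, available since Python 3.8, supplies the Gaussian CDF and its inverse); the function names are illustrative and are not taken from the paper's implementation, and a standard Gaussian N(0, 1) is assumed throughout.

```python
import math
from statistics import NormalDist

STD_NORMAL = NormalDist(mu=0.0, sigma=1.0)


def box_muller(u1: float, u2: float) -> tuple[float, float]:
    """Two uniforms (u1 in (0, 1], u2 in [0, 1)) -> two independent N(0, 1)."""
    r = math.sqrt(-2.0 * math.log(u1))
    theta = 2.0 * math.pi * u2
    return r * math.cos(theta), r * math.sin(theta)


def inverse_box_muller(x1: float, x2: float) -> tuple[float, float]:
    """Recover (u1, u2) from a Box-Muller output pair."""
    u1 = math.exp(-(x1 * x1 + x2 * x2) / 2.0)
    u2 = (math.atan2(x2, x1) / (2.0 * math.pi)) % 1.0  # map angle back into [0, 1)
    return u1, u2


def uniform_to_gaussian(u: float) -> float:
    """CDF method: X = Phi^{-1}(U); u must lie strictly inside (0, 1)."""
    return STD_NORMAL.inv_cdf(u)


def gaussian_to_uniform(x: float) -> float:
    """CDF method, inverse direction: U = Phi(X)."""
    return STD_NORMAL.cdf(x)


if __name__ == "__main__":
    x1, x2 = box_muller(0.3, 0.7)
    print("Box-Muller:", (x1, x2), "->", inverse_box_muller(x1, x2))
    x = uniform_to_gaussian(0.975)
    print("CDF method:", x, "->", gaussian_to_uniform(x))
```

The Box-Muller pair needs two uniforms per two Gaussian outputs but only elementary functions, while the CDF method maps one uniform to one Gaussian at the cost of evaluating the inverse CDF.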

References

1. Gisin, N.; Ribordy, G.; Tittel, W.; Zbinden, H. Quantum cryptography. Rev. Mod. Phys. 2002, 74, 145.

2. Scarani, V.; Bechmann-Pasquinucci, H.; Cerf, N.J.; Dušek, M.; Lütkenhaus, N.; Peev, M. The security of practical quantum key distribution. Rev. Mod. Phys. 2009, 81, 1301.

3. Xu, F.; Ma, X.; Zhang, Q.; Lo, H.K.; Pan, J.W. Secure quantum key distribution with realistic devices. arXiv 2019, arXiv:1903.09051.

4. Pirandola, S.; Andersen, U.; Banchi, L.; Berta, M.; Bunandar, D.; Colbeck, R.; Englund, D.; Gehring, T.; Lupo, C.; Ottaviani, C.; et al. Advances in quantum cryptography. arXiv 2019, arXiv:1906.01645.

5. Brent, R.P. Algorithm 488: A Gaussian pseudo-random number generator. Commun. ACM 1974, 17, 704–706.

6. Herrero-Collantes, M.; Garcia-Escartin, J.C. Quantum random number generators. Rev. Mod. Phys. 2017, 89, 015004.

7. Ma, X.F.; Yuan, X.; Cao, Z.; Qi, B.; Zhang, Z. Quantum random number generation. npj Quantum Inf. 2016, 2, 16021.

8. Jennewein, T.; Achleitner, U.; Weihs, G.; Weinfurter, H.; Zeilinger, A. A fast and compact quantum random number generator. Rev. Sci. Instrum. 2000, 71, 1675.

9. Stefanov, A.; Gisin, N.; Guinnard, O.; Guinnard, L.; Zbinden, H. Optical quantum random number generator. J. Mod. Opt. 2000, 47, 595.

10. Wang, P.X.; Long, G.L.; Li, Y.S. Scheme for a quantum random number generator. J. Appl. Phys. 2006, 100, 056107.

11. Ma, H.Q.; Xie, Y.J.; Wu, L.A. Random number generation based on the time of arrival of single photons. Appl. Opt. 2005, 44, 7760.

12. Stipčević, M.; Rogina, B.M. Quantum random number generator based on photonic emission in semiconductors. Rev. Sci. Instrum. 2007, 78, 045104.

13. Dynes, J.F.; Yuan, Z.L.; Sharpe, A.W.; Shields, A.J. A high speed, postprocessing free, quantum random number generator. Appl. Phys. Lett. 2008, 93, 031109.

14. Wayne, M.A.; Jeffrey, E.R.; Akselrod, G.M.; Kwiat, P.G. Photon arrival time quantum random number generation. J. Mod. Opt. 2009, 56, 516.

15. Wahl, M.; Leifgen, M.; Berlin, M.; Rohlicke, T.; Rahn, H.J.; Benson, O. An ultrafast quantum random number generator with provably bounded output bias based on photon arrival time measurements. Appl. Phys. Lett. 2011, 98, 171105.

16. Nie, Y.Q.; Zhang, H.F.; Zhang, Z.; Wang, J.; Ma, X.F.; Zhang, J.; Pan, J.W. Practical and fast quantum random number generation based on photon arrival time relative to external reference. Appl. Phys. Lett. 2014, 104, 051110.

17. Wei, W.; Guo, H. Bias-free true random-number generator. Opt. Lett. 2009, 34, 1876.

18. Ren, M.; Wu, E.; Liang, Y.; Jian, Y.; Wu, G.; Zeng, H.P. Quantum random-number generator based on a photon-number-resolving detector. Phys. Rev. A 2011, 83, 023820.

19. Guo, H.; Tang, W.Z.; Liu, Y.; Wei, W. Truly random number generation based on measurement of phase noise of a laser. Phys. Rev. E 2010, 81, 051137.

20. Qi, B.; Chi, Y.M.; Lo, H.K.; Qian, L. High-speed quantum random number generation by measuring phase noise of a single-mode laser. Opt. Lett. 2010, 35, 312.

21. Jofre, M.; Curty, M.; Steinlechner, F.; Anzolin, G.; Torres, J.P.; Mitchell, M.W.; Pruneri, V. True random numbers from amplified quantum vacuum. Opt. Express 2011, 19, 20665.

22. Yuan, Z.L.; Lucamarini, M.; Dynes, J.F.; Frohlich, B.; Plews, A.; Shields, A.J. Robust random number generation using steady-state emission of gain-switched laser diodes. Appl. Phys. Lett. 2014, 104, 261112.

23. Nie, Y.Q.; Huang, L.L.; Liu, Y.; Payne, F.; Zhang, J.; Pan, J.W. The generation of 68 Gbps quantum random number by measuring laser phase fluctuations. Rev. Sci. Instrum. 2015, 86, 063105.

24. Yang, J.; Liu, J.L.; Su, Q.; Li, Z.Y.; Fan, F.; Xu, B.J.; Guo, H. 5.4 Gbps real time quantum random number generator with simple implementation. Opt. Express 2016, 24, 27475–27481.

25. Huang, M.; Chen, Z.Y.; Zhang, Y.C.; Guo, H. A phase fluctuation based practical quantum random number generator scheme with delay-free structure. Appl. Sci. 2020, 10, 2431.

26. Wei, W.; Xie, G.D.; Dang, A.H.; Guo, H. High-speed and bias-free optical random number generator. IEEE Photon. Technol. Lett. 2012, 24, 437.

27. Williams, C.R.S.; Salevan, J.C.; Li, X.W.; Roy, R.; Murphy, T.E. Fast physical random number generator using amplified spontaneous emission. Opt. Express 2010, 18, 23584.

28. Li, X.W.; Cohen, A.B.; Murphy, T.E.; Roy, R. Scalable parallel physical random number generator based on a superluminescent LED. Opt. Lett. 2011, 36, 1020.

29. Martin, A.; Sanguinetti, B.; Lim, C.C.W.; Houlmann, R.; Zbinden, H. Quantum Random Number Generation for 1.25 GHz Quantum Key Distribution Systems. IEEE J. Lightwave Technol. 2015, 33, 2855.

30. Gabriel, C.; Wittmann, C.; Sych, D.; Dong, R.F.; Mauerer, W.; Andersen, U.L.; Marquardt, C.; Leuchs, G. A generator for unique quantum random numbers based on vacuum states. Nat. Photon. 2010, 4, 711.

31. Shen, Y.; Tian, L.A.; Zou, H.X. Practical quantum random number generator based on measuring the shot noise of vacuum states. Phys. Rev. A 2010, 81, 063814.

32. Symul, T.; Assad, S.M.; Lam, P.K. Real time demonstration of high bitrate quantum random number generation with coherent laser light. Appl. Phys. Lett. 2011, 98, 231103.

33. Haw, J.Y.; Assad, S.M.; Lance, A.M.; Ng, N.H.Y.; Sharma, V.; Lam, P.K.; Symul, T. Maximization of extractable randomness in a quantum random-number generator. Phys. Rev. Appl. 2015, 3, 054004.

34. Zheng, Z.; Zhang, Y.; Huang, W.; Yu, S.; Guo, H. 6 Gbps real-time optical quantum random number generator based on vacuum fluctuation. Rev. Sci. Instrum. 2019, 90, 043105.

35. Katsoprinakis, G.E.; Polis, M.; Tavernarakis, A.; Dellis, A.T.; Kominis, I.K. Quantum random number generator based on spin noise. Phys. Rev. A 2008, 77, 054101.

36. Zhang, Q.; Deng, X.W.; Tian, C.X.; Su, X.L. Quantum random number generator based on twin beams. Opt. Lett. 2017, 42, 895–898.

37. Bell, J.S. On the Einstein Podolsky Rosen paradox. Physics 1964, 1, 195.

38. Clauser, J.F.; Horne, M.A.; Shimony, A.; Holt, R.A. Proposed experiment to test local hidden-variable theories. Phys. Rev. Lett. 1969, 23, 880.

39. Pironio, S.; Acin, A.; Massar, S.; de la Giroday, A.B.; Matsukevich, D.N.; Maunz, P.; Olmschenk, S.; Hayes, D.; Luo, L.; Manning, T.A.; et al. Random numbers certified by Bell’s theorem. Nature 2010, 464, 1021.

40. Colbeck, R.; Kent, A. Private randomness expansion with untrusted devices. J. Phys. A Math. Theor. 2011, 44, 095305.

41. Fehr, S.; Gelles, R.; Schaffner, C. Security and composability of randomness expansion from Bell inequalities. Phys. Rev. A 2013, 87, 012335.

42. Miller, C.A.; Shi, Y.Y. Universal security for randomness expansion from the spot-checking protocol. SIAM J. Comput. 2017, 46, 1304–1335.

43. Liu, Y.; Yuan, X.; Li, M.; Zhang, W.; Zhao, Q.; Zhong, J.; Cao, Y.; Li, Y.; Chen, L.; Li, H.; et al. High-speed device-independent quantum random number generation without a detection loophole. Phys. Rev. Lett. 2018, 120, 010503.

44. Colbeck, R.; Renner, R. Free randomness can be amplified. Nat. Phys. 2012, 8, 450.

45. Gallego, R.; Masanes, L.; de la Torre, G.; Dhara, C.; Aolita, L.; Acin, A. Full randomness from arbitrarily deterministic events. Nat. Commun. 2013, 4, 2654.

46. Cao, Z.; Zhou, H.Y.; Yuan, X.; Ma, X.F. Source-independent quantum random number generation. Phys. Rev. X 2016, 6, 011020.

47. Marangon, D.G.; Vallone, G.; Villoresi, P. Source-Device-Independent Ultrafast Quantum Random Number Generation. Phys. Rev. Lett. 2017, 118, 060503.

48. Cao, Z.; Zhou, H.Y.; Ma, X.F. Loss-tolerant measurement-device-independent quantum random number generation. New J. Phys. 2015, 17, 125011.

49. Nie, Y.Q.; Guan, J.Y.; Zhou, H.Y.; Zhang, Q.; Ma, X.F.; Zhang, J.; Pan, J.W. Experimental measurement-device-independent quantum random-number generation. Phys. Rev. A 2016, 94, 060301.

50. Xu, B.; Chen, Z.; Li, Z.; Yang, J.; Su, Q.; Huang, W.; Zhang, Y.; Guo, H. High speed continuous variable source-independent quantum random number generation. Quantum Sci. Technol. 2019, 4, 025013.

51. Michel, T.; Haw, J.Y.; Marangon, D.G.; Thearle, O.; Vallone, G.; Villoresi, P.; Lam, P.K.; Assad, S.M. Real-time Source-Independent Quantum Random Number Generator with Squeezed States. Phys. Rev. Appl. 2019, 12, 034017.

52. Zhang, Y.; Li, Z.; Chen, Z.; Weedbrook, C.; Zhao, Y.; Wang, X.; Huang, Y.; Xu, C.; Zhang, X.; Wang, Z.; et al. Continuous-variable QKD over 50 km commercial fiber. Quantum Sci. Technol. 2019, 4, 035006.

53. Zhang, Y.C.; Chen, Z.; Pirandola, S.; Wang, X.; Zhou, C.; Chu, B.; Zhao, Y.; Xu, B.; Yu, S.; Guo, H. Long-distance continuous-variable quantum key distribution over 202.81 km fiber. arXiv 2020, arXiv:2001.02555.

54. Muller, M.E. An inverse method for the generation of random normal deviates on large-scale computers. Math. Tables Other Aids Comput. 1958, 12, 167–174.

55. Wallace, C.S. Fast pseudorandom generators for normal and exponential variates. ACM Trans. Math. Software (TOMS) 1996, 22, 119–127.

56. Thomas, D.B.; Luk, W.; Leong, P.H.W.; Villasenor, J.D. Gaussian random number generators. ACM Comput. Surv. (CSUR) 2007, 39, 11.

57. Xu, F.H.; Qi, B.; Ma, X.F.; Xu, H.; Zheng, H.X.; Lo, H.K. Ultrafast quantum random number generation based on quantum phase fluctuations. Opt. Express 2012, 20, 12366.

58. Ma, X.F.; Xu, F.H.; Xu, H.; Tan, X.Q.; Qi, B.; Lo, H.K. Postprocessing for quantum random-number generators: Entropy evaluation and randomness extraction. Phys. Rev. A 2013, 87, 062327.

59. Chen, Z.; Li, Z.; Xu, B.; Zhang, Y.; Guo, H. The m-least significant bits operation for quantum random number generation. J. Phys. B At. Mol. Opt. 2019, 52, 195501.

60. Massey, F.J., Jr. The Kolmogorov-Smirnov test for goodness of fit. J. Am. Stat. Assoc. 1951, 46, 68–78.

61. Anderson, T.W.; Darling, D.A. A Test of Goodness of Fit. J. Am. Stat. Assoc. 1954, 49, 765–769.

62. Razali, N.M.; Bee, W.Y. Power Comparisons of Shapiro-Wilk, Kolmogorov-Smirnov, Lilliefors and Anderson-Darling Tests. J. Stat. Modeling and Anal. 2011, 2, 21–33.

63. Box, G.E.P.; Muller, M.E. A note on the generation of random normal deviates. Ann. Math. Stat. 1958, 29, 610–611.

| QCNR (dB) | Normal (Before) | Normal (After) | t-Dist. (Before) | t-Dist. (After) | Uniform (Before) | Uniform (After) | Rayleigh (Before) | Rayleigh (After) |
|---|---|---|---|---|---|---|---|---|
| 3 | 1.2225 | 1.1653 | 1.2966 | 1.3338 | 64.036 | 4.6896 | 179.40 | 1.4993 |
| 6 | 1.2582 | 1.2320 | 1.4416 | 1.4348 | 9.0556 | 1.4507 | 39.991 | 1.2510 |
| 10 | 1.1920 | 1.2031 | 1.2478 | 1.2741 | 1.4064 | 1.3917 | 4.5185 | 1.1799 |
| 20 | 1.2717 | 1.2455 | 1.2132 | 1.2510 | 1.1764 | 1.1996 | 1.2150 | 1.1964 |

| Function | Mean | AD Test | JB Test | t-Test |
|---|---|---|---|---|
| Calculated result | − | p = 0.4788 | p = 0.3678 | p = 0.2023 |
| Confidence Interval | [, 0.0036] | NULL | NULL | NULL |
| Hypothesis value | | | | |
| Status | Pass | Pass | Pass | Pass |

| Test Name | p-Value | Proportion | Status |
|---|---|---|---|
| Frequency | 0.811993 | 394 | Success |
| Block Frequency | 0.719747 | 396 | Success |
| Cumulative Sums | 0.785103 (KS) | 395.5 (avg) | Success |
| Runs | 0.270275 | 396 | Success |
| Longest Run | 0.788728 | 397 | Success |
| Rank | 0.375313 | 396 | Success |
| FFT | 0.272297 | 395 | Success |
| Non-overlapping | 0.647530 (KS) | 394 (avg) | Success |
| Overlapping | 0.830808 | 396 | Success |
| Universal | 0.451234 | 393 | Success |
| Approx. Entropy | 0.739918 | 397 | Success |
| Excursions | 0.726852 (KS) | 392 (avg) | Success |
| Excursions Var. | 0.670396 (KS) | 395 (avg) | Success |
| Serial | 0.589359 (KS) | 392.5 (avg) | Success |
| Complexity | 0.124115 | 392 | Success |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Huang, M.; Chen, Z.; Zhang, Y.; Guo, H. A Gaussian-Distributed Quantum Random Number Generator Using Vacuum Shot Noise. Entropy 2020, 22, 618. https://doi.org/10.3390/e22060618
