Abstract

Dynamic correlation is the correlation between two time series across time. Two approaches that currently exist in neuroscience literature for dynamic correlation estimation are the sliding window method and dynamic conditional correlation. In this paper, we first show the limitations of these two methods especially in the presence of extreme values. We present an alternate approach for dynamic correlation estimation based on a weighted graph and show using simulations and real data analyses the advantages of the new approach over the existing ones. We also provide some theoretical justifications and present a framework for quantifying uncertainty and testing hypotheses.

1. Introduction

Dynamic bivariate correlation, or dynamic correlation for short, is correlation between a pair of time series, which itself changes over time. Assessing dynamic correlation is of importance in many areas within neuroscience. Dynamic correlation estimation is of interest in neuroimaging because many studies have identified dynamic changes in functional connectivity during the course of a functional magnetic resonance imaging (fMRI) experiment [1,2,3,4,5,6,7], especially during resting state. Estimating dynamic correlation is of interest in neurophysiology as well. For example, dynamic correlation between local field potential time series obtained from different brain regions could be used to explore how certain brain regions work in tandem during certain specific behaviors. When identifying such changes, it is of importance to make sure that the dynamic shifts in the correlations observed are not due to spurious fluctuations inherent to the estimation method used.

A commonly used method for dynamic correlation estimation is the sliding window (SW) method. Although easy to understand and implement, the main limitation of the SW method is uncertainty regarding the choice of the window size. Another well-established method for dynamic correlation estimation is dynamic conditional correlation (DCC) developed by Engle and Sheppard in the field of econometrics [8,9] and widely used in finance literature. A few years ago DCC was introduced as a useful tool for neuroimaging researchers by Lindquist and co-authors [10]. Although DCC has many desirable features compared to SW method, it has certain limitations.

Time series measurements occurring in neuroscience literature occasionally have a few extreme values, as seen, for example, in the local field potential time series data, which is one of the data sets analyzed in this paper. Such values can be ignored only if they are an anomaly that does not make sense with the underlying scientific framework. In this paper, among other things, we show that the performance of DCC is not robust in the presence of extreme values. Another limitation of DCC is related to its practical implementation using numerical algorithms. Optimization procedures in DCC parameter estimation involves inversion of matrices which could lead to numerical instability. Although this computational issue is compounded in the presence of extreme values, it could occasionally be an issue even when there are no extreme values present in the pair of time series under consideration.

To address these problems, we propose a novel, robust approach for estimating dynamic correlation estimation. Our method is based on converting time series into a weighted graph and hence we refer to it as weighted graph algorithm (WGA). We illustrate the utility of the new method via simulations and real data examples. We also present theoretical justifications and provide a framework for calculating confidence intervals and conducting hypothesis tests for dynamical correlation based on the WGA approach.

2. Methods

We consider three methods for estimating dynamic correlation in this paper. The first two methods—the SW and DCC—are currently existing methods. The third method based on a weighted graph algorithm (WGA) is a new approach to the dynamic-correlation-estimation problem. We illustrate the methods using two time series generated as in Lindquist et al’s [10] simulations study . Random data were generated for each time point using a mean-zero bivariate normal distribution, with covariance matrix set to

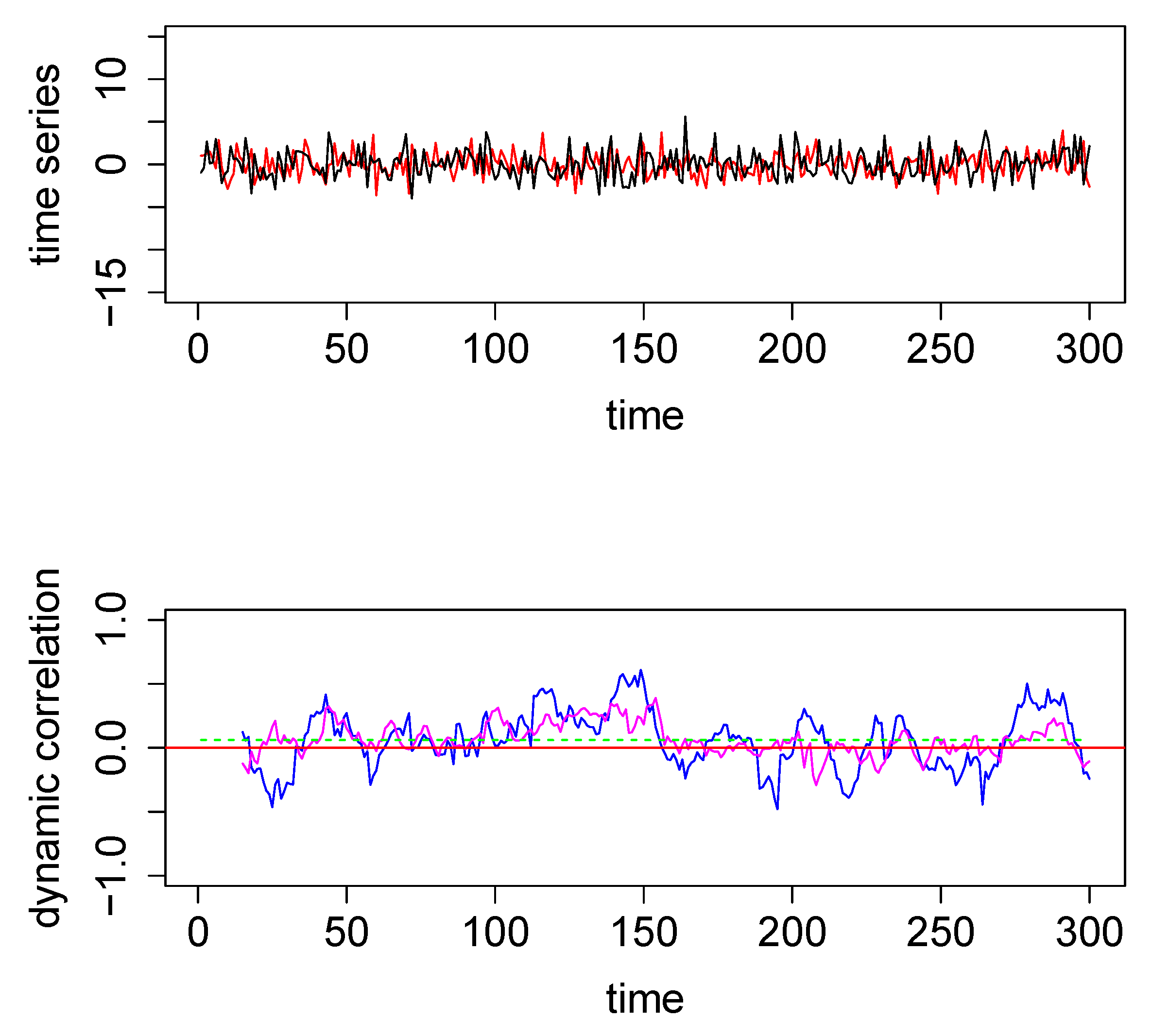

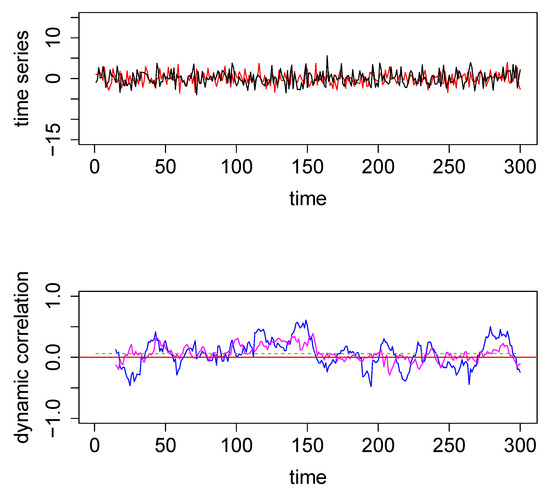

A bivariate time series generated using the above mechanism with time points is shown in the top panel in Figure 1 below. The dynamic correlation was a priori set to zero in this simulated example. That is, the underlying correlation equals zero at all time points in this simulated bivariate time series.

Figure 1.

Top panel: The two time series used for the first illustrative simulated example; data were generated from a bivariate normal distribution. Bottom Panel—Dynamic correlations estimated for the above two time series using 3 different methods—method #1, sliding window (SW) (blue); method #2, dynamic conditional correlation (DCC) (green) (see also Figure A1); and method #3, weighted graph algorithm (WGA) (magenta). The red horizontal line in the center through zero represents the underlying true dynamic correlation for this example.

2.1. Method # 1—Sliding-Window (SW) Technique

SW technique [1,2,4] is easy to explain and implement. We choose a window-frame of size = 15 for the example considered in Figure 1. At the first iteration, we used the window-frame to frame the first () 15 time points of the bivariate time series, and find the correlation between the values of the two series within this window-frame. Then we repeat the process by sliding the window to the right, one unit at a time. Thus, in general, at iteration i, the right-end of the SW is placed at the time point of both time series, and the correlation between the two time series within the window-frame was calculated. The series of correlations obtained as the right-end of the SW is moved from the time-point all the way to time-point of the two time series form the blue curve plotted in the bottom-panel of Figure 1.

To make it clearer, the 1st correlation value is the correlation between two vectors each of length 15: the 1st and the 2nd vectors containing the values corresponding to time points between 1 and 15 (end points included) of the 1st and the 2nd time series, respectively. To obtain the second correlation, we move the window one unit to the right, so that for this second iteration the right-end of the window-frame is at time-point 16, and the left end is at time-point 2. Thus the 2nd correlation value will be the correlation between two vectors each of length 15: the 1st and the 2nd vectors containing the values corresponding to time points between 2 and 16 (end points included) of the 1st and the 2nd time series, respectively. We continue moving the SW one unit to the right at each iteration and calculating the corresponding correlation until the final iteration (that is, when the right-end of the SW is at time point). All the consecutive correlations thus obtained are plotted as the blue curve in the bottom-panel. The performance of this method depends on the size of the window: a larger provides smoother estimates of dynamic correlation, while smaller is more sensitive to variations in the underlying data. Lindquist et al. [10] discusses this issue in more detail, and also mentions variations of the above technique such as tapered sliding window [1].

2.2. Method # 2—Dynamic Conditional Correlation

Here we outline the key steps involved in the DCC method. A good source with a detailed exposition is [11]. Let us denote the two time series under consideration by and . If the conditional correlation between and at time t is denoted by , then the corresponding correlation matrix may be denoted by :

If the conditional variances at time t for the two series and are and , respectively, then the corresponding conditional variance-covariance matrix

which may also be written as

or equivalently . If we denote

and without loss of generality assume (–otherwise, we can always center the time series data at the respective means to make this assumption valid) then Here the prime symbol right next to a vector or a matrix denotes its transpose. Hence,

Thus, if we can somehow estimate (that is, estimate and ) as a first step, then we may determine . Furthermore, the sample variance-covariance matrix of will be an estimate of based on Equation (1). Hence the first question is: ‘Is there a way to estimate and ?’ Extensive work in the econometrics field has shown that one of the best ways to address this question is using generalized autoregressive conditional heteroscedasticity (GARCH) modeling [12,13,14]. In econometrics literature the standard deviations and are known as ‘volatilities’ of the series and at time t, respectively, and is known as ‘volatility-adjusted data’. In the GARCH(1,1) setting for each is estimated recursively as the weighted sum of the unconditional estimated variance at (i.e., ) and :

Thus the six parameters to be estimated in this model are As mentioned before, once the first step is completed we will have an estimate of with which we will be able to calculate (the latter calculation is known as “DE-GARCHING" in econometrics literature). In the next step we estimate the variance-covariance matrix of using a recursive approach (-similar to the recursive approach in GARCH (1,1))

as a weighted sum of the contribution at , and the estimate that we obtained by . is an intercept matrix which has ones in the diagonal. Ideally, we should be done by the end of this second step because based on the theory we know that is an estimate of . However, in practice may deviate slightly from satisfying all the properties of a correlation matrix. Hence is labelled as a quasi-correlation matrix. Note that the number of parameters to be estimated in Equation (2) are three: a, b and the one off-diagonal parameter in the symmetric matrix . Although we deal with only two time series here, all the steps in the DCC algorithm can be easily extended to the case of N time series, . For larger N, the number of parameters in , can get very large. An alternate method (known as first-order correlation targeting) used in practice is to estimate of using

is the sample unconditional correlation matrix of the standardized residuals. If we use instead of , then in Equation (2) we will have 2 parameters rather than parameters. Although first-order correlation targeting helps computationally, it may induce a small downward bias in a for larger N. There are methods to correct this bias, but for the bias is negligible and hence in this paper we will apply correlation targeting in the second step without bias correction. For general a and b, Equation (2) models a mean-reverting process. Typically, for most pairs of time series occurring in finance, changes in correlations appear to be temporary and mean-reverting. However, this need not be always the case for time series pairs in neuroimaging or neuroscience literature. To model such a scenario, an alternate to Equation (2) could be used:

a process which has no tendency to have correlations revert to a constant value and should be particularly useful in modeling correlation that jumps one or more times.

The matrix estimated in the second step may not yield a proper correlation matrix in practice (e.g., certain elements could be outside ). Thus, in the final and third step of DCC, is rescaled to get a proper estimate of :

The most commonly used version of DCC, outlined above, may be summarized as follows:

- 1st step:

- Estimate using GARCH(1,1)

- DE-GARCH data:

- 2nd step:

- Estimate quasi-correlation matrix , where

- 3rd step:

- Rescale to get a proper correlation matrix:

Estimation of the parameters in practice is done using a 2-step process under the quasi-maximum-likelihood framework. The log-likelihood L can be split as a sum , where

consists of only variance parameters and consists of only correlation parameters, thereby facilitating a 2-step process. In the first step, the variance part of L (i.e., ) is maximized to obtain estimates of the corresponding parameters and based on these estimates standardized residuals ’s are obtained. In the second step the standard residuals from the first step is plugged-in into and then is then maximized with respect to the correlation parameters. Note that the second step involves inversion of the full matrix for each t; this could lead to non-convergence of the numerical optimization involved in estimation. Such numerical issues are aggravated when N is large, but they are less of a problem for normal data points when N = 2. However, even when N = 2, in the presence of extreme values in the data, the corresponding matrices may get close to singular which could lead to numerical instability and inaccurate results. All DCC analysis for this paper was conducted in Matlab using the DCC toolbox (https://github.com/canlab/Lindquist_Dynamic_Correlation).

2.3. Method # 3—Weighted Graph Algorithm (WGA)

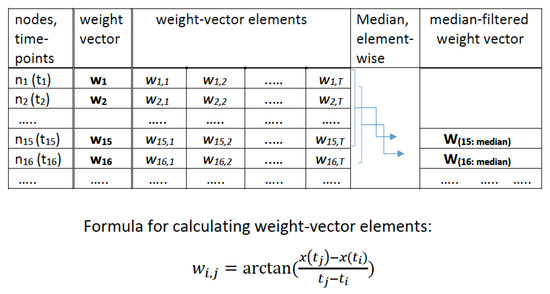

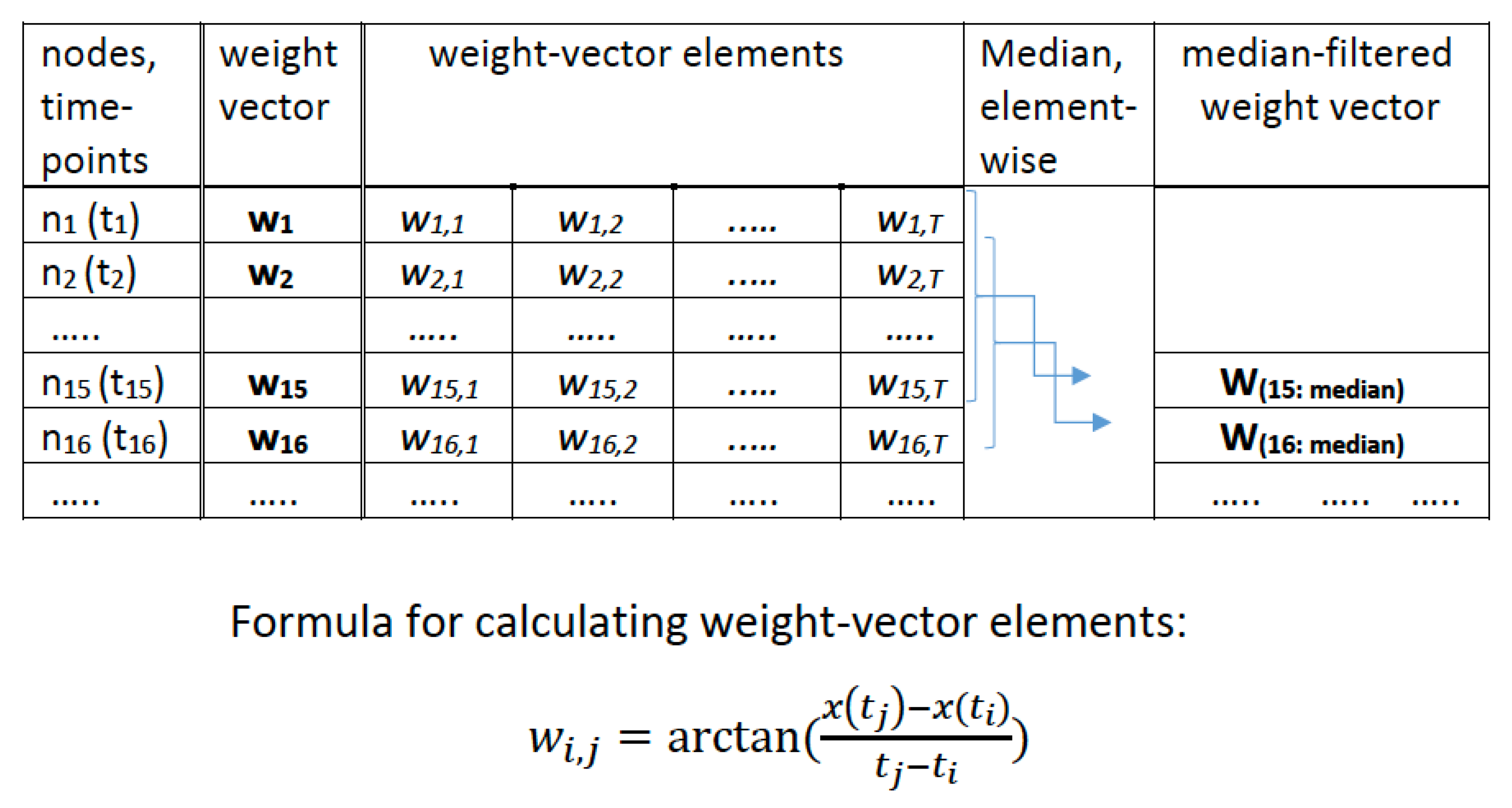

The new approach proposed in this paper is best explained using a fully connected weighted graph associated with each time series. For a given time series with values we consider a graph with T nodes, where the node corresponds to the time point . Any pair of nodes () (corresponding to the pair of time points ()) is connected by an edge with a weight associated with it. We define the weight to be

Our graph construction is inspired by, yet different from, the Weighted Visibility Graphs (WVGs) presented in Supriya et al. [15]. WVGs are weighted versions of visibility graphs introduced by Lacasa et al. [16]. Visibility graph algorithm, which converts a time series into a graph based on a visibility criterion, has found wide applications in physics and many other fields. (See Appendix B for more details about the visibility criterion defined by Lacasa et al). Methods based on visibility graphs became popular after the original concept of mapping time series to a complex network was introduced in Zhang and Small [17]. The main difference between the above-mentioned constructions from existing literature and ours is that we do not use a visibility criterion, and hence, in particular, our graph is fully connected.

Our Weighted Graph Algorithm (WGA) for dynamic correlation estimation is also based on a sliding window. As done for method 1, we pick a window of size 15 for illustration. Associated with each time point (that is, with each node in the WGA graph) is a weight-vector of length T consisting of the weights of the edges from all the other nodes of the graph to . We named this weight vector as . Note that we considered the weight between to itself as zero. That is, element of is zero, for all i. For each time series, we place the right-end of a sliding window (of size 15) at time point i, considered all the weight vectors

and obtained a new vector by taking the median element-wise. That is element of is the median of the elements of The steps so far are illustrated in the schematic diagram presented in Figure A7 in the Appendix B.

If we use super-scripts to denote the vectors corresponding to the two time series, then the above steps may be summarized using the following formula:

where denotes the element of each vector and denote the time series. The dynamic correlation estimate at the time point (where i ranges from 15 to T) in our approach is the correlation between the vectors and . A heuristic justification and further theoretical considerations are provided in the Appendix C.

Illustration of the Methods

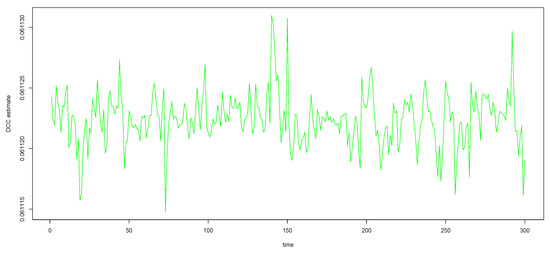

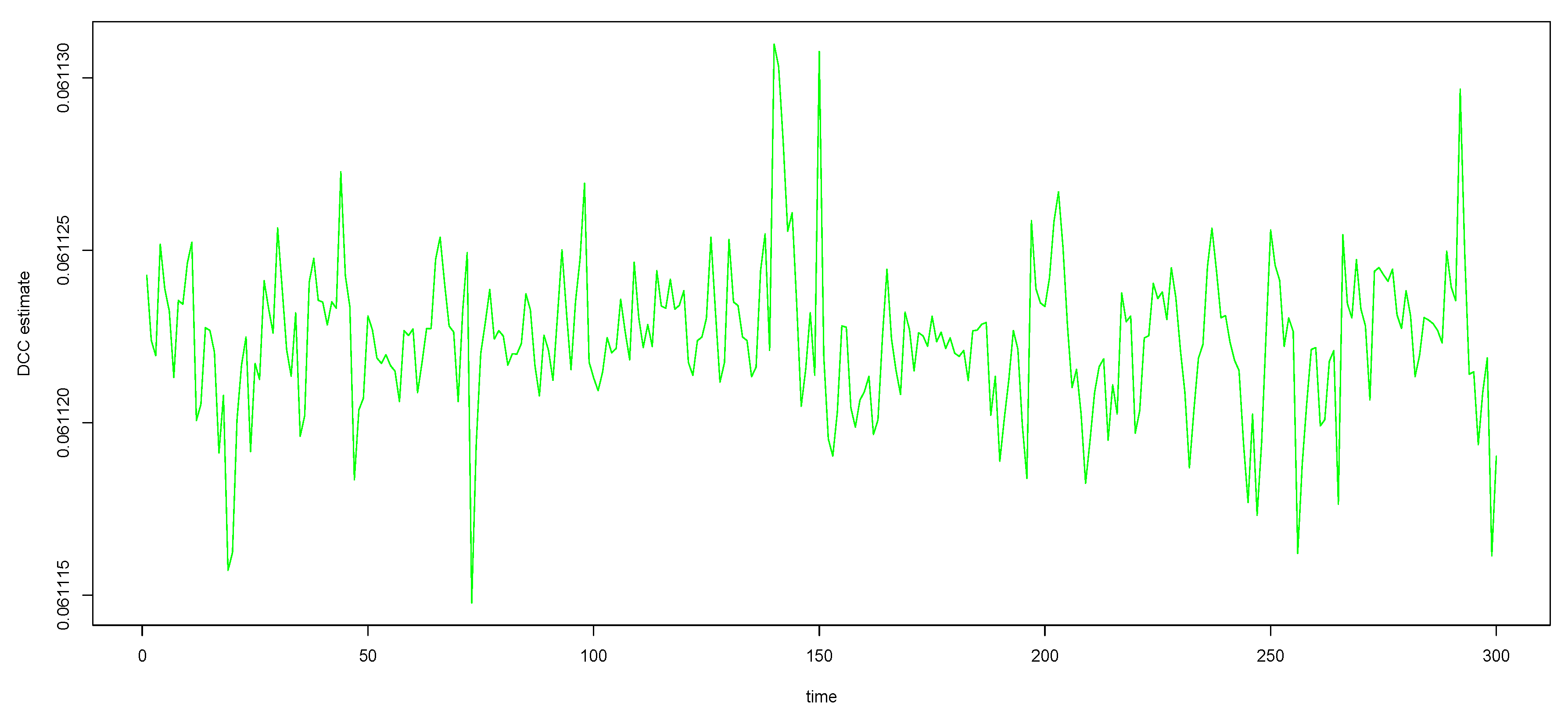

To illustrate graphically how the three methods perform, we computed the three dynamical correlations and plotted them together in the bottom panel of Figure 1. The underlying dynamic correlation, which is equal to zero at all time points, is plotted as the red line. The correlations obtained through the SW, WGA and DCC methods are plotted as blue, magenta and green curves in bottom panel in Figure 1. The dynamic correlation estimates based on DCC (green curve) may appear to be a straight line; but this is not in fact the case (see Figure A1 in the Appendix A where we used a different scale for the y-axis). SW method (blue curve) results in the worst estimate, DCC method (green curve) results in the best estimate, and the WGA method (magenta curve) performs somewhere in between. This qualitative estimation is confirmed quantitatively by the following summary statistics: the maximum absolute value for the blue, magenta and green curves are, respectively, 0.61, 0.39, and 0.06.

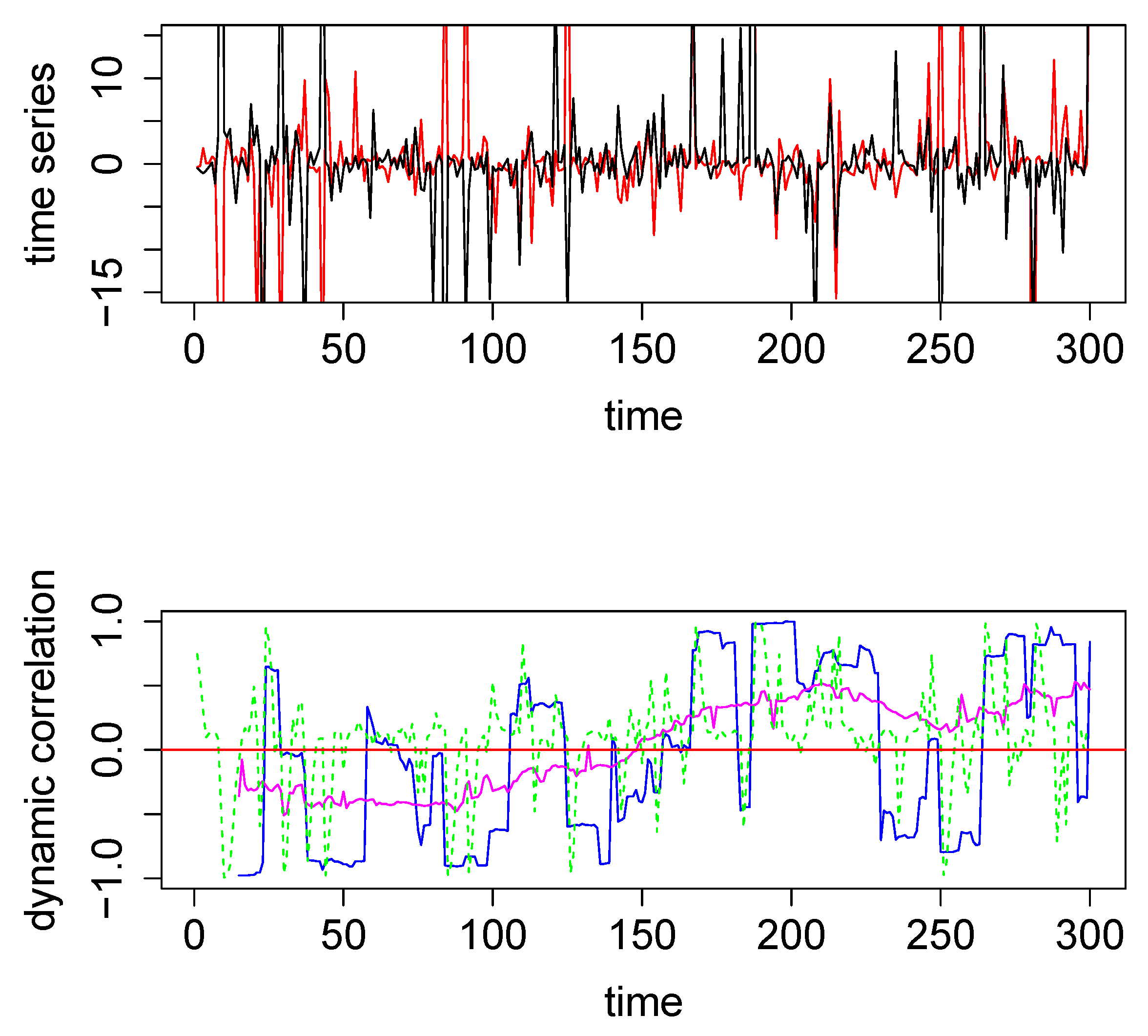

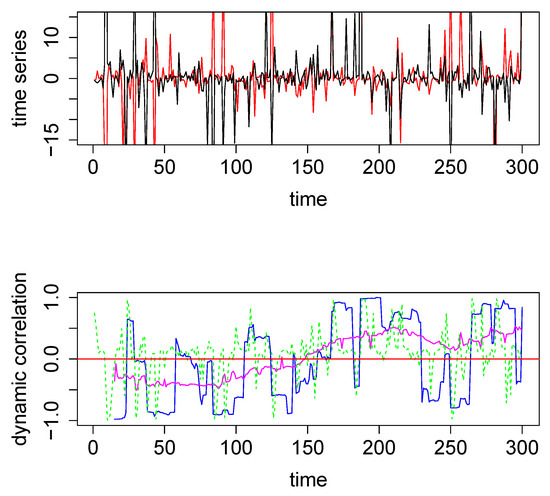

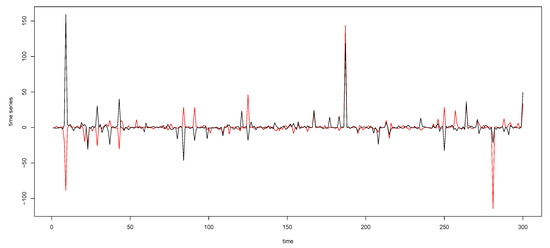

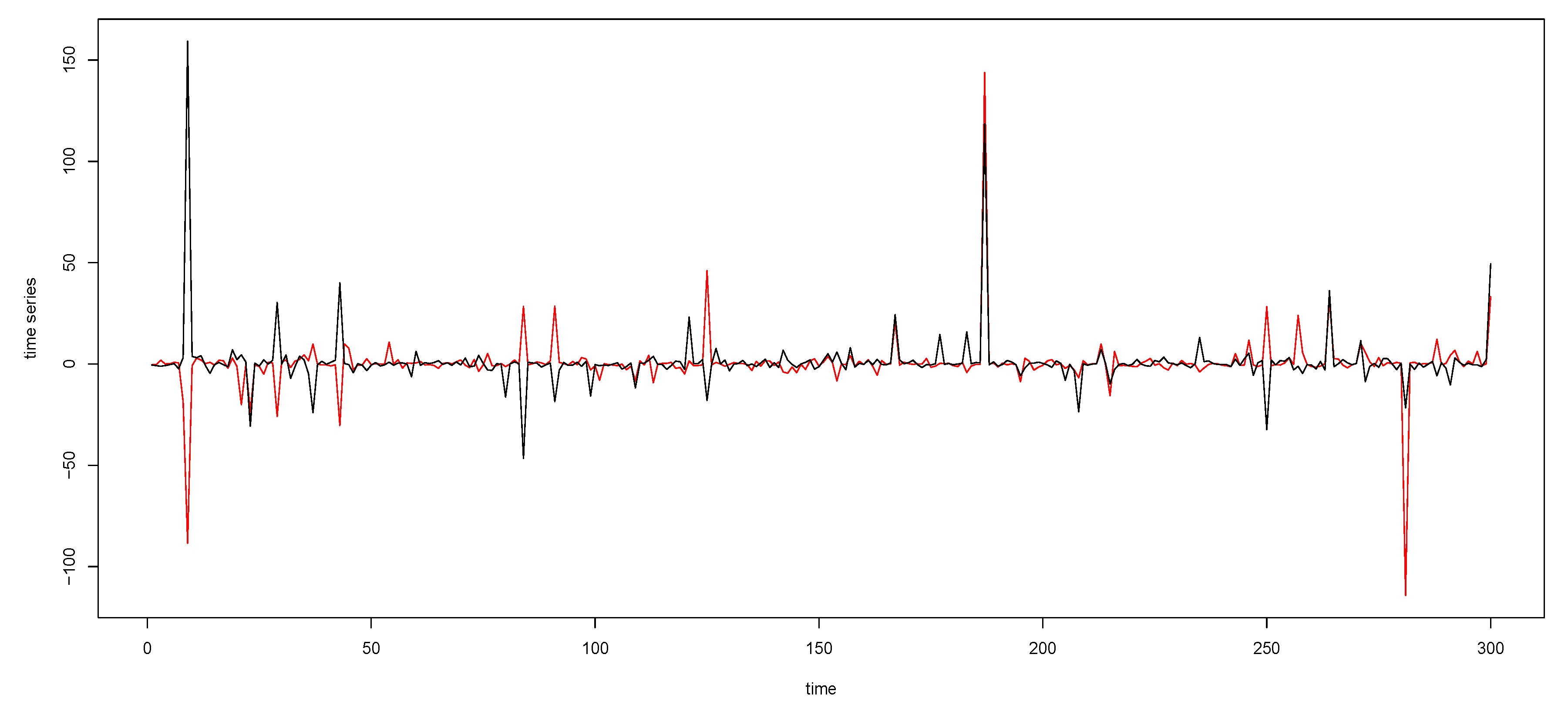

In the above example, DCC performs better than both the SW technique and the new method. However, the data in this example are a pair of nicely behaved time series with no outliers (since they were generated from a bivariate normal density). However, in the presence of outliers DCC does not perform as well. We generated two time series from a bivariate Cauchy density (by using the R function rcauchy2d from the package fMultivar) [18]. The correlation parameter for the bivariate Cauchy density () was set to zero, so that the underlying dynamic correlation for this example was also zero for all time points. The two time series thus generated are plotted in the top panel in Figure 2. Because Cauchy density has very heavy tails, the generated data contain several extreme values. We applied the same three analytical methods to this data set and the results are plotted in the bottom panel in Figure 2, with the same color coding as in Figure 1. For this example, the new method easily outperforms the other two methods, as seen visually. The maximum absolute values for the blue, green, and magenta curves are, respectively, 0.9986, 0.9989 and 0.5298, respectively. Thus the new method was more robust when extreme values were present in the data. As a minor note, the y-axes for the top panels had the same scale for Figure 1 and Figure 2, which cut off the plot for some extreme values in Figure 2. In order to see the actual extreme values, we plotted the same pair time series with extended y-margins in Appendix A Figure A2.

Figure 2.

Top panel: The two time series used for the second illustrative simulated example; data were generated from a bivariate Cauchy distribution. Bottom Panel: Dynamic correlations estimated for the above two time series using various methods. Method #1, SW (blue), method #2, DCC (green), and method #3, based on WGA (magenta). The red horizontal line in the center through zero represents the underlying true dynamic correlation for this example.

2.4. Simulation Design

To assess the performance of the three methods under various scenarios, we ran extensive simulations using scenarios aimed to closely match the work of Lindquist et al. [10]. We therefore generated data by using bivariate normal distribution, as in Lindquist et al. [10], but also bivariate Cauchy distribution, to obtain data sets with extreme values. In all the simulations involving bivariate Cauchy, values exceeding 50 were reset to 50, and values below –50 were reset to –50. The means for all pairs of time series in the simulation study was set to across all time points and the covariance matrix at time point t was

By considering three different forms for we obtained three different simulations scenarios, as in Lindquist et al. [10]. For simulations involving the sliding window technique (method #1) and the WGA (method #3), the window size was set to 15.

Simulations Design D1: In this case, we set for all t as in the illustrative examples above. We considered four time series of different lengths ( and 1000) for both bivariate normal and bivariate Cauchy distributions. The metrics used to assess the performance of the methods for this scenario were the mean and maximum absolute value of correlations across all time points.

Simulations Design D2: The scenarios in are designed to assess the performance of the methods when the underlying correlation varies slowly over time. We set , , with . We obtained two different (sub-)scenarios corresponding to and 4, which we refer to as and . We set for both of these sub-scenarios. The metric used to assess the performance was mean-squared-error (MSE) as in Lindquist et. al [10].

Simulations Design D3: The scenarios in are designed to assess the performance of the methods when there is a rapid change in the underlying correlation; here, the rapid change occurs near time point 250. We set equal to a Gaussian kernel with mean 250 and standard deviation 15k, where k = 3 and 4. The two k’s resulted in two different (sub-)scenarios, and ; for both cases. MSE was used to compare the performance of the methods.

2.5. Real Data Analysis

To illustrate the advantages and disadvantages of the methods considered in this paper, we applied our analysis to two different empirical data sets. The first data set used for illustration was fMRI data collected from one healthy adult subject while the subject performed a color-word Stroop task [19]. The data were part of a sample collected for a study of the underlying mechanisms based on distributed network of brain regions involved in congruency sequencing effect in cue-conflict paradigms [20]. Time series from six different brain regions were used in our analysis, and dynamic correlations between each pair of regions were obtained. The second data set used for illustration consisted of local field potential (LFP) time series [21,22] obtained from four different electrodes implanted in the brain of a rat. The recording occurred while the rat was awake and placed on a small platform in the lab. Three of the recordings were from the CA1 field of the hippocampus, and one recording from the medial dorsal striatum. Dynamic correlations of data from each pair of recordings were computed. To make the results of our analysis accessible, we did not apply any pre-processing to the recorded LFPs except downsampling from 2 kHz to 1 kHz. This procedure presented the movement artifacts which are prominent in the displayed signals. Although the behavior of the dynamic correlations of pairwise time series was of intrinsic interest for the background scientific research question related to both fMRI and LFP data sets, the main purpose in the current analysis was to illustrate various aspects/issues associated with the estimation methods considered in this paper. Specifically, the Stroop task fMRI data set illustrated the convergence issues related to DCC and LFP data illustrated the performance of the methods in the presence of extreme values. Details about data extraction for fMRI data set and recording for the LFP data are presented below. Codes used for all analysis and simulations are available at https://github.com/janinaf/WGA-DC.

fMRI data. Sample fMRI data from one healthy human adult subject were downloaded from OpenfMRI.org. We used Stroop task fMRI data (https://openfmri.org/dataset/ds000164/) [20], since activation patterns for the Stroop task is relatively well known. Preliminary data processing followed previous publication [23]. Using FMRIB Software Library (FSL) as well as Analysis of Functional NeuroImages (AFNI), anatomical volume was skull stripped, segmented (gray matter, white matter and CSF), and registered to the MNI 2mm standard brain. Processing of fMRI echo planar image (EPI) volume included the following. The first four EPI volumes were removed; transient signal spikes were removed; head motion was corrected; the volumes were smoothed with a 6mm FWHM Gaussian kernel; the volumes were resampled, spatially transformed and aligned to the MNI 2mm standard brain space; volumes with excess motion are scrubbed. In order to extract the time series for six regions chosen a priori, the frontal medial cortex, subcallosal frontal region, insular cortex, Heschl’s gyrus, and amygdala were defined using the Harvard-Oxford atlas and the Caudate Head was defined using WFU PickAtlas. The EPI time series was extracted within each of these six regions.

LFP data. The local field potential (LFP) data were recorded from an awake rat placed on a small platform. Previous to the recording, the animal had been implanted with a 16 tetrode hyperdrive assembly intended for recording in the CA1 field of the hippocampus, combined with two cannula/electrode systems (PlasticsOne, Inc) aimed bilaterally at the medial dorsal striatum. The tetrodes were gradually lowered in the CA1 layer across 8 to 10 days after recovery from surgery, and their tip was positioned based on the configurations of sharp waves and ripples. The electrodes aimed at the dorsal striatum were implanted directly in the position from which the recording was obtained (AP: + 2.5 mm, ML: +/–2.4 mm; DV: –5.4 mm; tilted 22 degrees from the vertical in the sagittal plane). The single wire electrodes located in the striatum and the 16 tetrodes were connected through a PCB to a group of four headstages with a total of 64 unity gain channels and 2 color LEDs for position tracking. The LFP signals were sampled at 2K Hz, amplified (1000 x), band-pass filtered (1–1000 Hz), digitized (30K Hz), and stored together with LED positions on hard disk (Cheetah Data Acquisition System, Neuralynx, Inc.). Subsequently the signal was downsampled to 1K Hz, in which form it was used for the current analysis.

3. Results

3.1. Simulations Results

Simulations Design D1: Results from simulations with design D1 are presented in Table 1 and Table 2. Table 1 presents the results when the pair of time series was generated from a bivariate normal distribution and Table 2 presents the results related to bivariate Cauchy. In the bivariate normal case, across all T values, DCC performed best, SW performed worst, and WGA performance was in between. In the bivariate normal case, DCC’s performance improved with larger T as measured both by bias and variance, while that of SW and WGA methods remained roughly the same for all T. In the bivariate Cauchy case, the SW method performed consistently worst across all T, while for the other two methods performance improved with larger T. At small T values, the WGA method performed better than the DCC method. As T got larger, the DCC performance overtook that of WGA, and at T = 1000, DCC performed better than WGA based on the metrics used in Table. Even when MSE was used as the metric for comparison, DCC performed better than WGA when T = 1000: [ for WGA versus [ for DCC. Thus, in the presence of extreme values, DCC is robust as long as the number of time points is large enough, for this particular simulation design; when the number of time points is small, WGA-based evaluation is preferable to DCC.

Table 1.

Bivariate Normal in design D1.

Table 2.

Bivariate Cauchy in design D1.

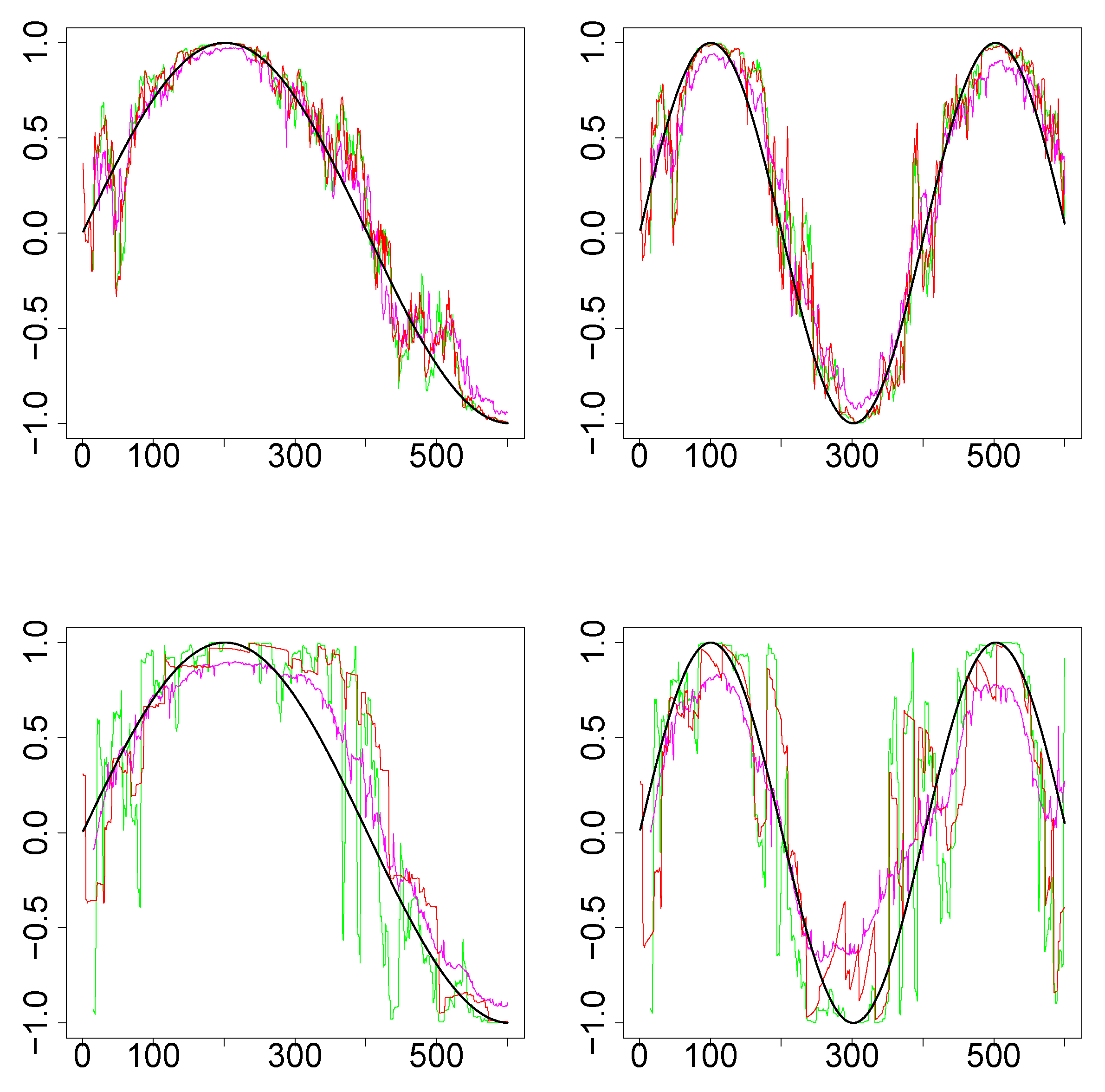

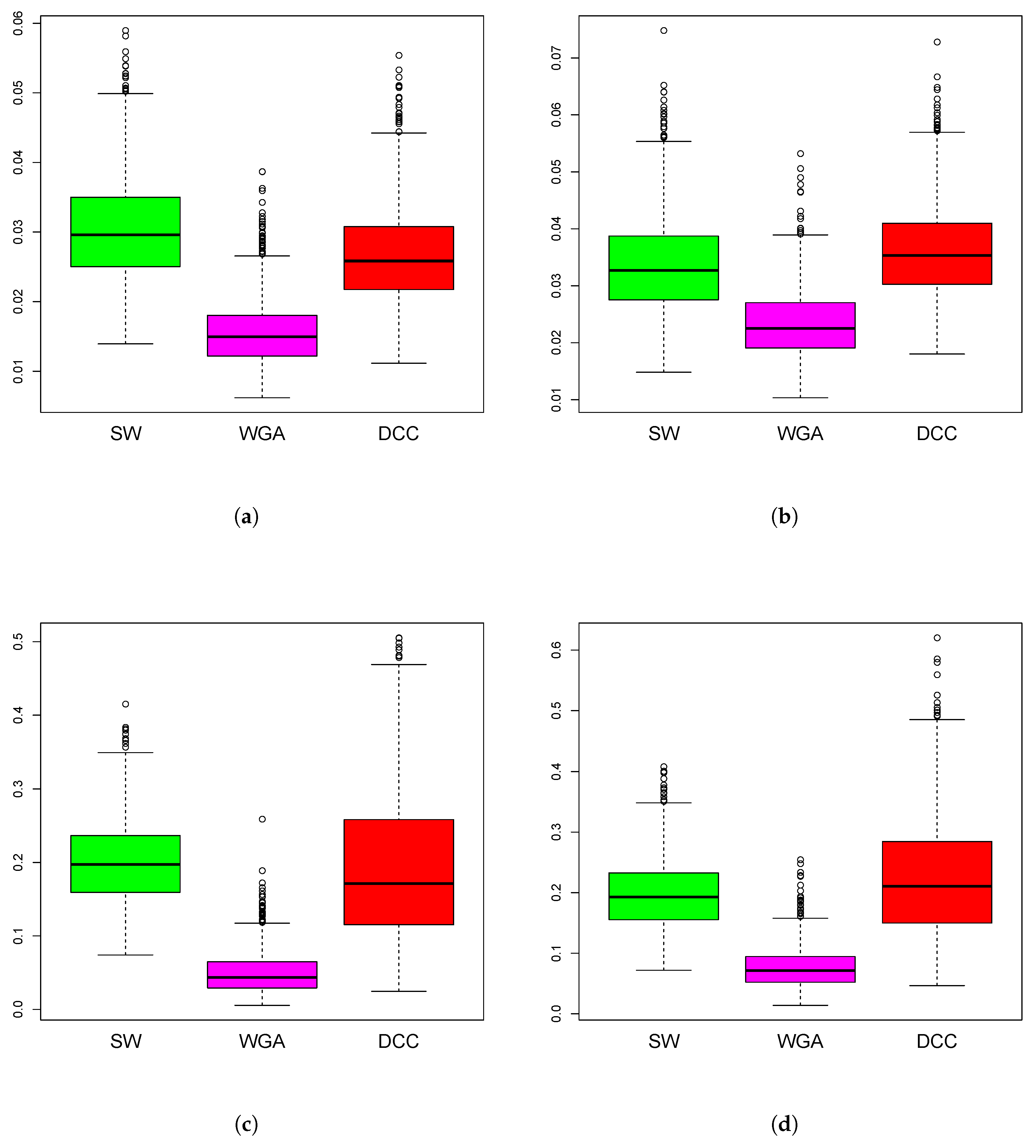

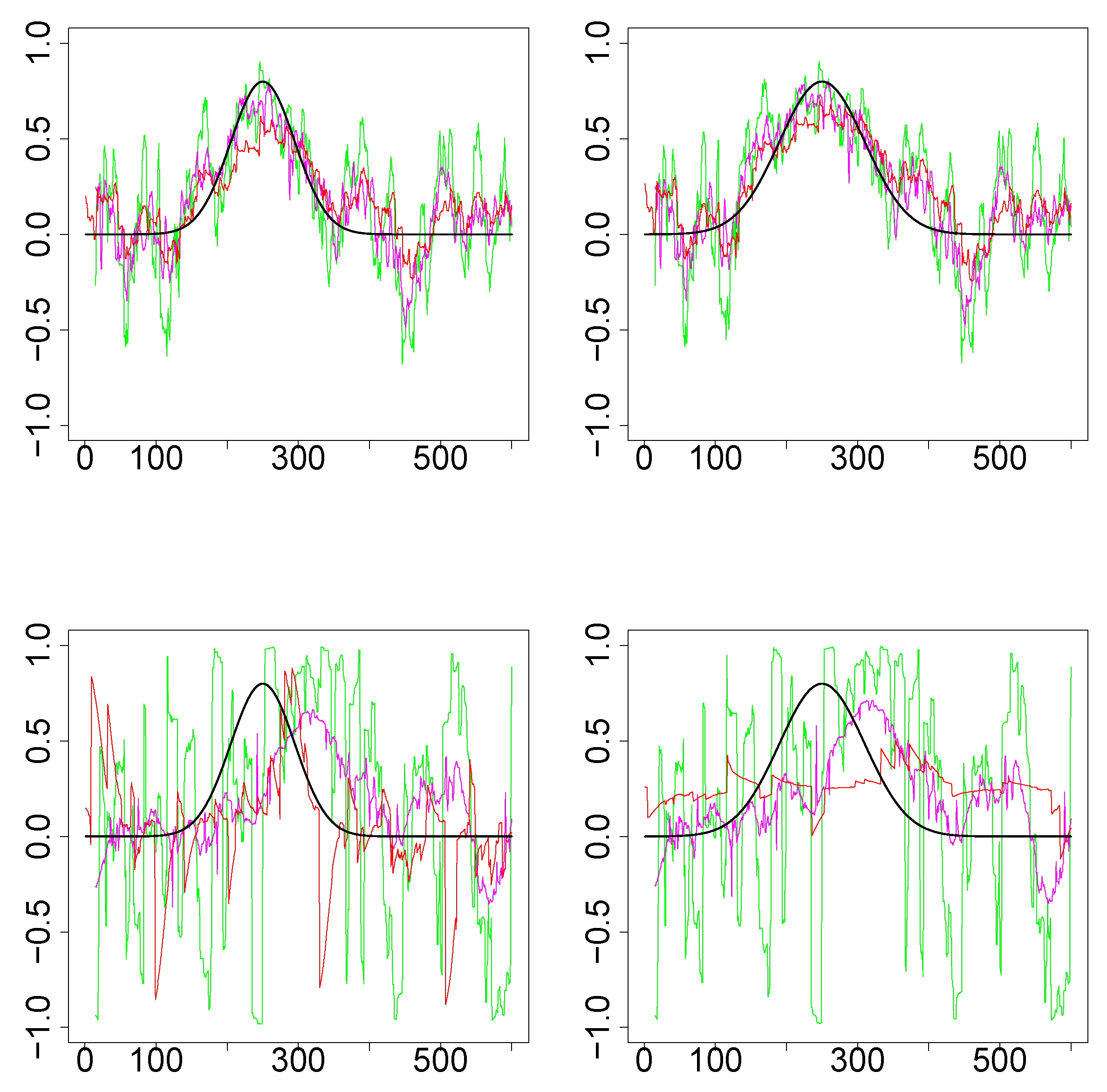

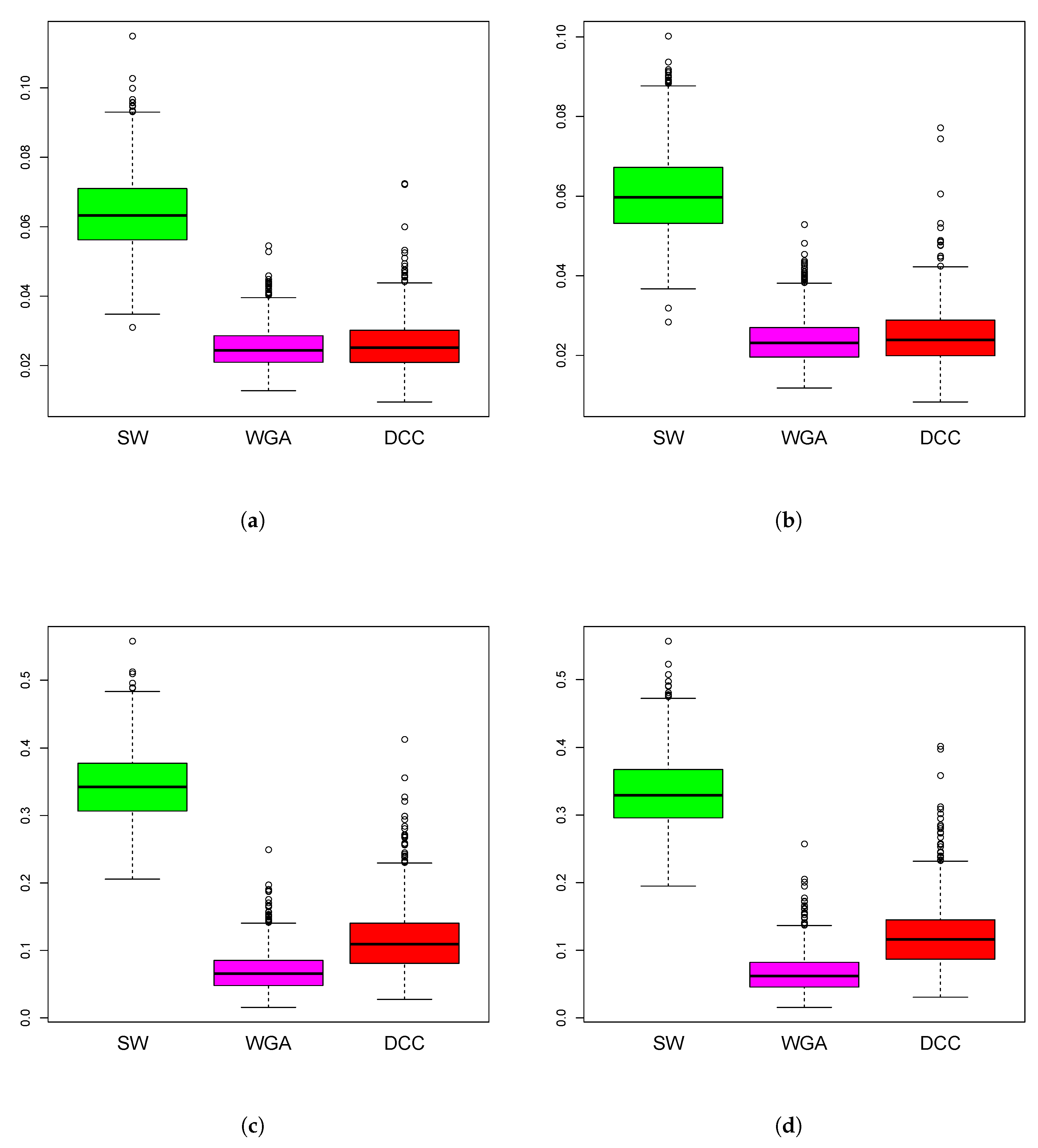

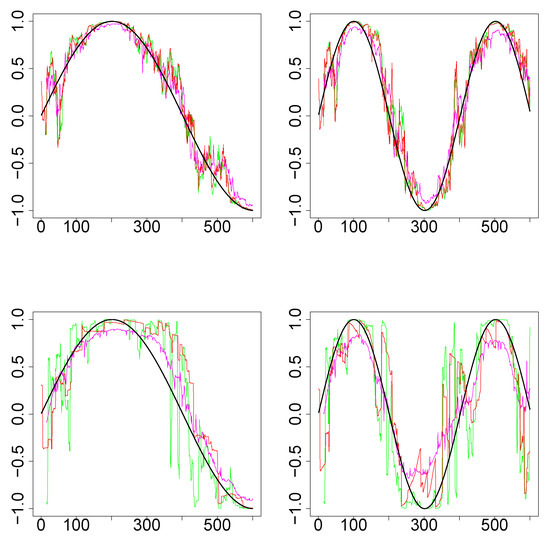

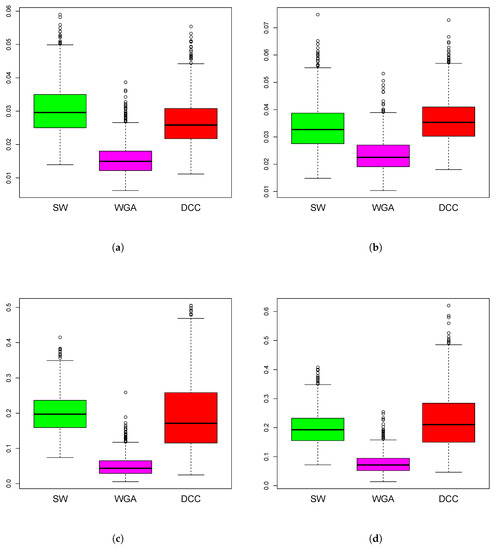

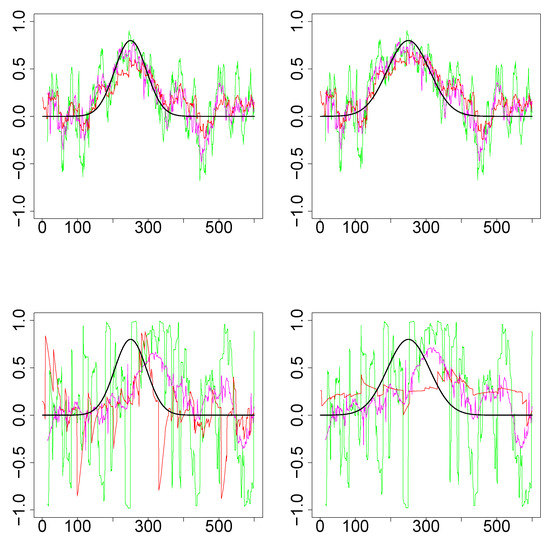

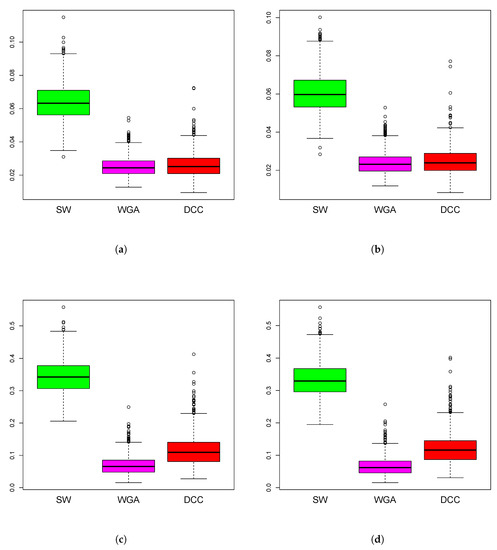

Simulations Design D2: In Figure 3, the black curves in the left panels are the underlying true dynamic correlations corresponding to simulations design D2a, the black curves in the right panels correspond to that of the simulations design D2b. The estimates of the dynamic correlations obtained via the three methods presented in this paper, for a single simulation iteration are plotted as colored curves. The top panels correspond to the bivariate normal scenario and the bottom panels correspond to the bivariate Cauchy scenario. Results from 1000 simulations for each scenario are plotted in Figure 4. In this design, the WGA-based method is far superior to the other two methods, in both bivariate normal and bivariate Cauchy cases.

Figure 3.

Results for single iteration from simulations design D2. (Top row): Underlying pair of time series from bivariate normal. (Bottom row): Underlying pair of time series from bivariate Cauchy. (Left column): Design 2a (that is, ). (Right column): Design 2b (). The underlying true dynamic correlations are plotted as the black curve. Green (SW), magenta (WGA) and red (DCC) represents the estimates of dynamic correlation.

Figure 4.

Boxplots of mean squared error of the estimation methods based on 1000 iterations from simulations design D2. The panels correspond to bivariate Normal (a,b), bivariate Cauchy (c,d), design D2a (a,c) and design D2b (b,d), as in Figure 3.

Simulations Design D3: Figure 5 plots the underlying true dynamic correlations and the estimates based on the three methods, for a single iteration corresponding to the simulation design D3. The results based on 1000 iterations are plotted in Figure 6. In all four scenarios for the design (D3a and D3b, bivariate normal and Cauchy), the performance of SW is substantially worse compared to the other two methods. DCC’s performance is comparable to that of WGA method in the bivariate normal scenario, although the WGA method has a slight edge over DCC. In the bivariate Cauchy case (bottom panels) the improvement in performance for the WGA-based method is more substantial. Thus, the results of the simulations indicate that when the underlying dynamic correlations are not constant across time, WGA performed better than DCC in many of the scenarios, and never worse than DCC in any of the scenarios considered.

Figure 5.

Results for single iteration from simulations design D2. (Top row): Underlying pair of time series from bivariate Normal. (Bottom row): Underlying pair of time series from bivariate Cauchy. (Left column): Design 3a (that is, ). (Right column): Design 3b (). The underlying true dynamic correlations are plotted as the black curve. Green (SW), magenta (WGA) and red (DCC) represents the estimates of dynamic correlation.

Figure 6.

Boxplots of mean squared error of the estimation methods based on 1000 iterations from simulations design D3. The panels correspond to bivariate Normal (a,b), bivariate Cauchy (c,d), design D3a (a,c) and design D3b (b,d), as in Figure 5.

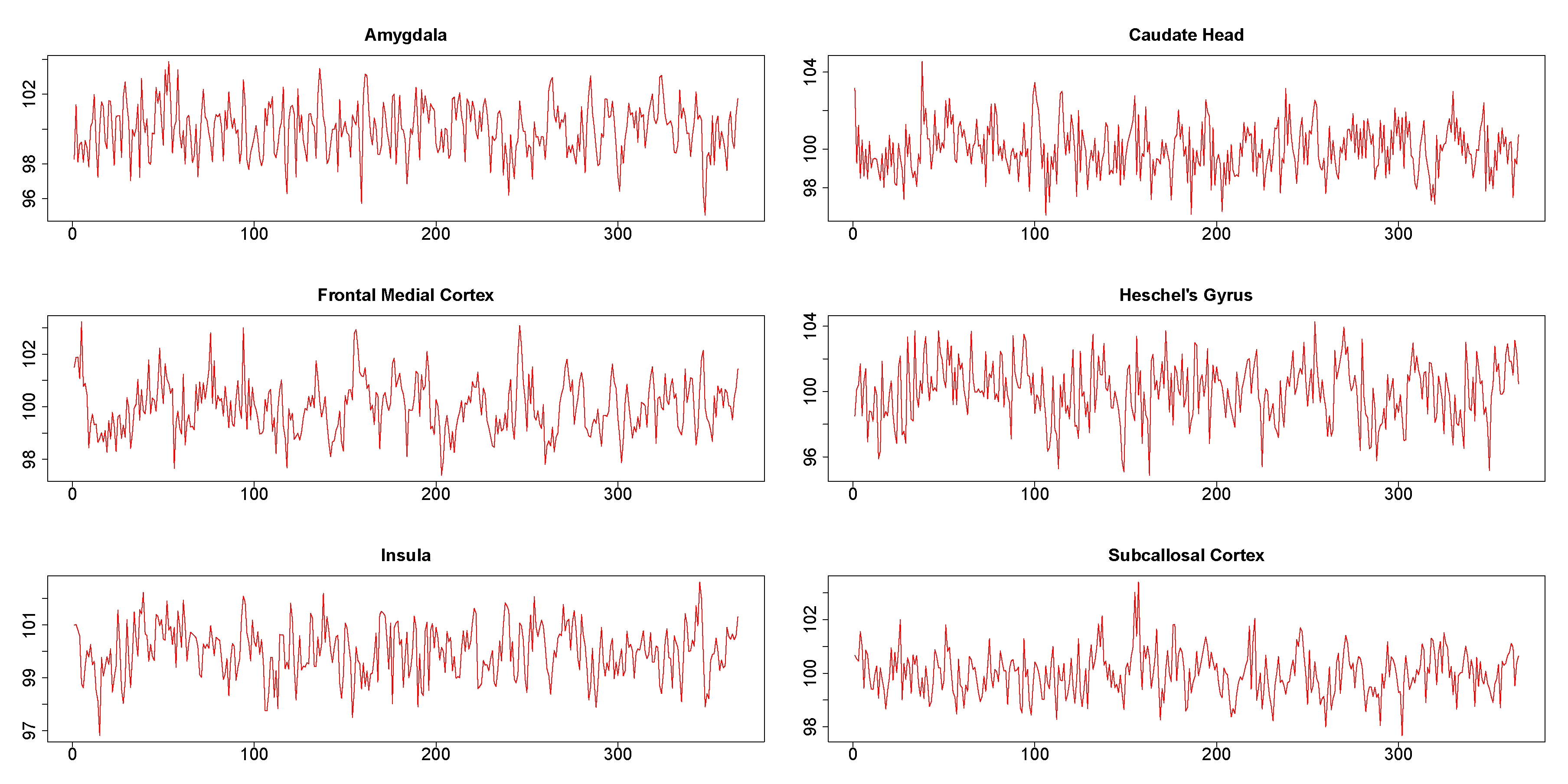

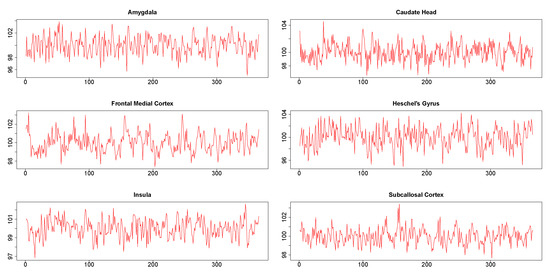

3.2. Stroop Task Data Results

The six times series obtained from six different brain regions of a single subject are shown in Figure 7. It may be noted in particular that the plots did not show the presence of any extreme values. The results from this data analysis are useful in illustrating certain numerical issues that arises during practical implementation of DCC.

Figure 7.

Time series from six regions in Stroop task functional magnetic resonance (fMRI) data.

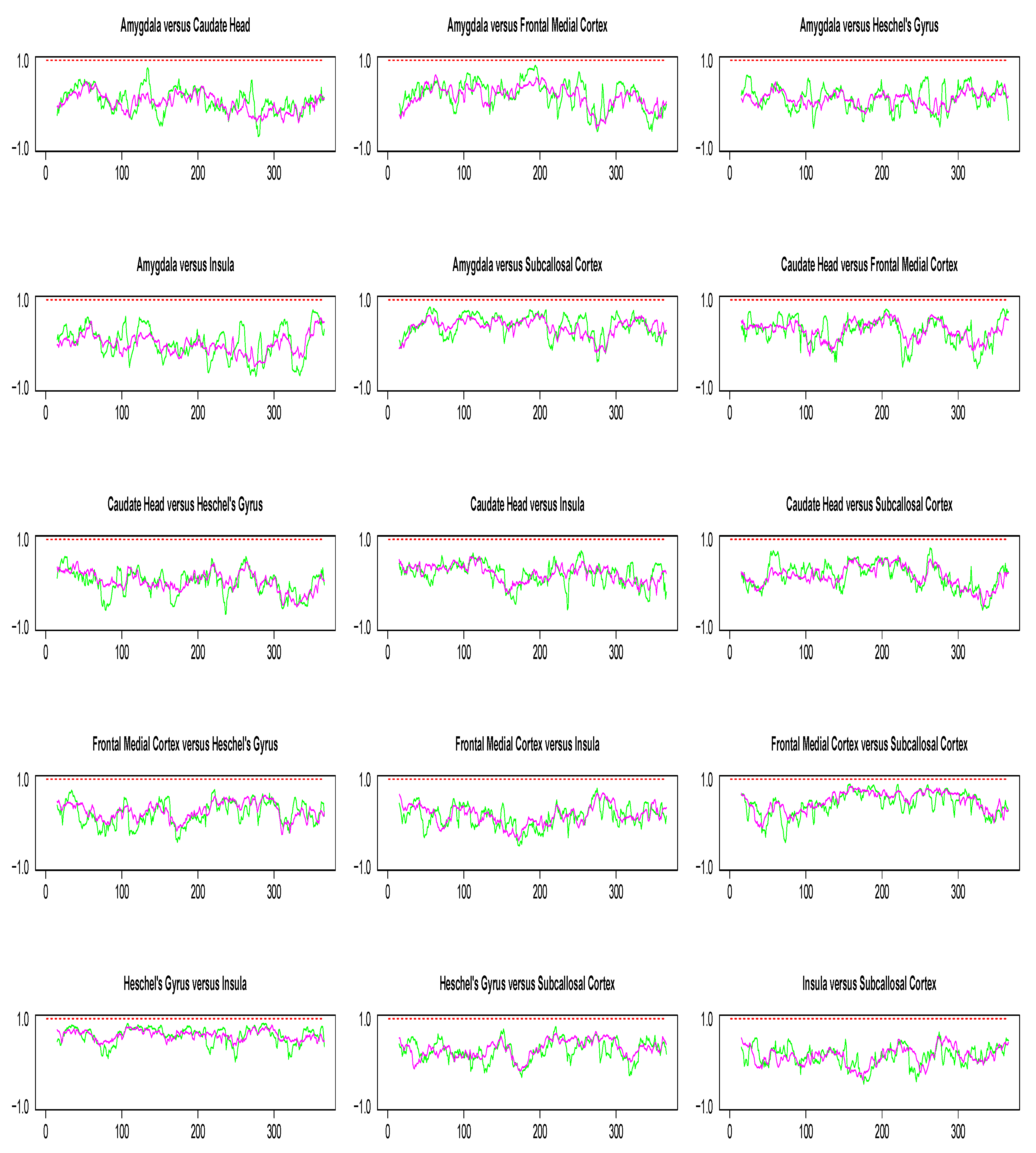

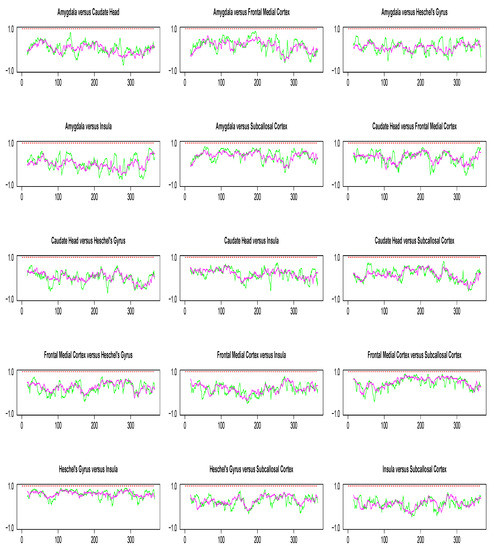

Pairwise dynamic correlation plots are shown in Figure 8. In the absence of extreme values, the conclusions from our simulation study suggest DCC method to be the best candidate for dynamic correlation. However, as seen from Figure 8, the DCC estimate obtained in each pairwise analysis is consistently a flat line with mean value near 1. Since there is no biological explanation for such a result, our best guess is that it is due to the limitations of numerical recipes required for the implementation of optimization algorithms involved in DCC estimation. Such numerical issues did not occur in the implementation of the WGA method.

Figure 8.

Pairwise dynamic correlation estimates for the Stroop task fMRI data. Green (SW), magenta (WGA), red (DCC).

The data analysis presented in this subsection was mainly to illustrate the performance of the different estimation methods for dynamic correlation. Since the data presented originated from only one subject, not much can be read, interpreted or generalized from the results. Nevertheless, the highest correlation occurred between Heschel’s gyrus and insula (see Table 3 for the mean correlation across all time points for all pairwise analyses and Table A1 in the Appendix A for the corresponding maximum values of the correlations). Heschel’s gyrus contains the primary auditory cortex (PAC), the first cortical structure to process incoming auditory information [24]. In turn, PAC is in close proximity to the posterior insular cortex [24], to which it is also directly connected [25]. If we use the estimate of dynamic correlation as a proxy measure of functional connectivity, the data would imply that the Heschel’s gyrus and insula are functionally interconnected. This conclusion has clinical relevance because reduction in the functional connectivity between PAC and insula has been linked to prosody dysfunction in patients with schizophrenia [26].

Table 3.

Results from Stroop data analysis.

LFP Data Analysis Results

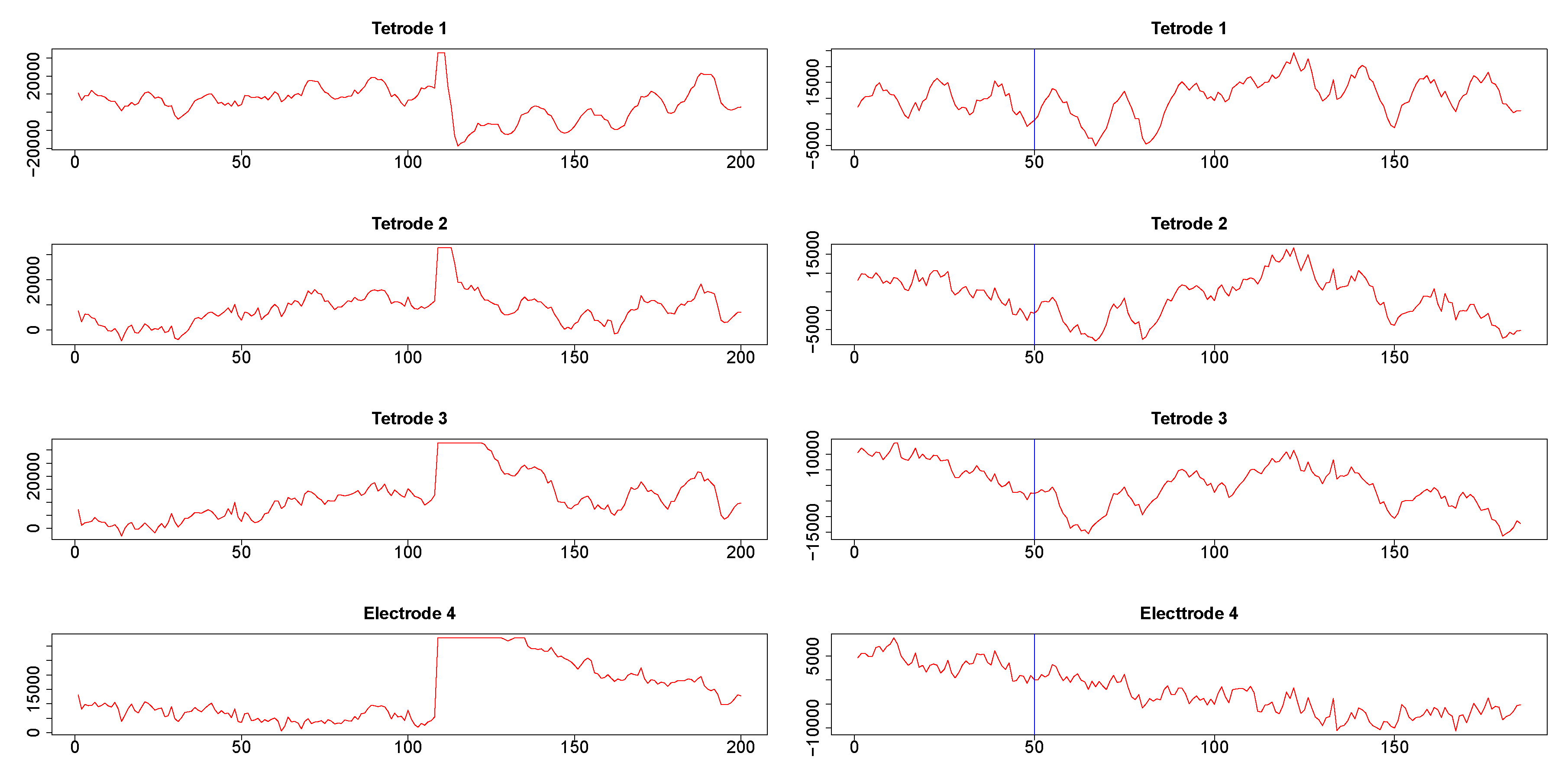

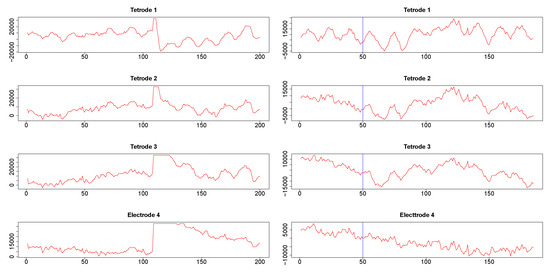

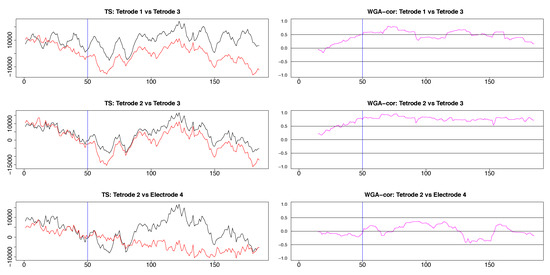

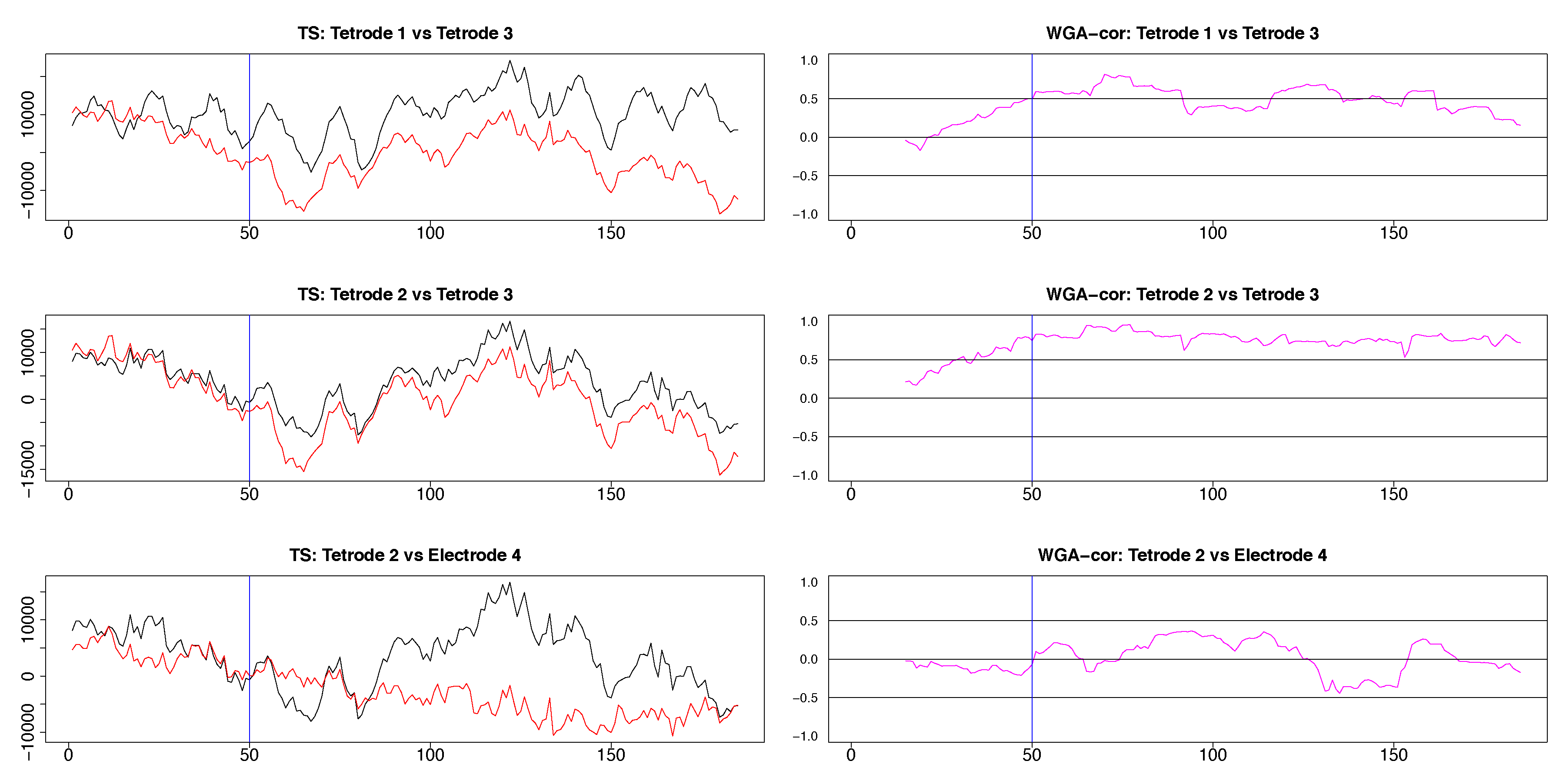

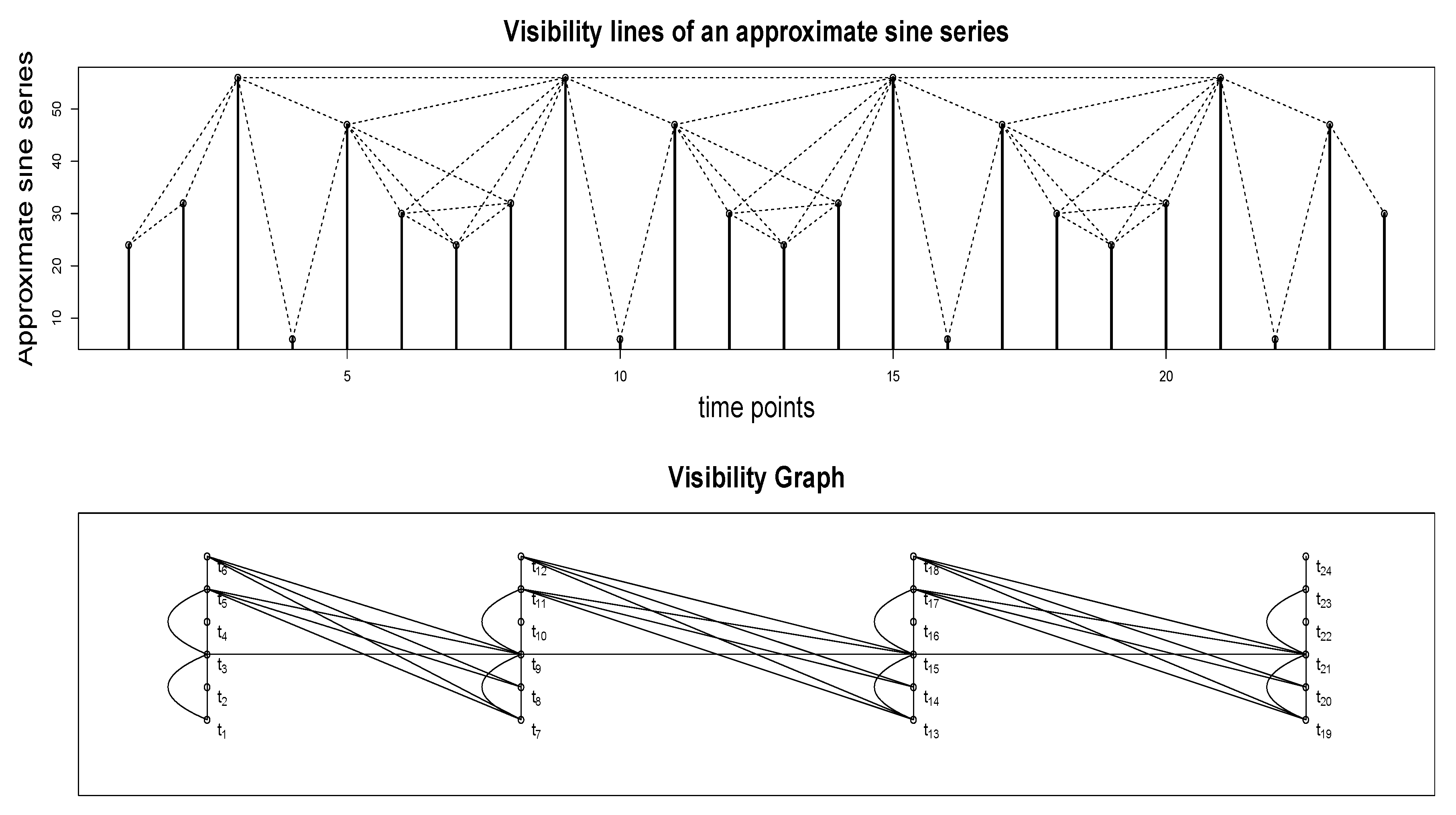

Time series corresponding to s (1024 data points) from the three tetrodes and one single wire electrode are plotted in appendix Figure A3. Tetrodes 1, 2 and 3 were placed in the CA1 field of the hippocampus, while electrode 4 was located in the medial dorsal striatum. All hippocampal tetrodes recorded a theta oscillation (7–14 Hz) typical for this brain area. Signals on tetrodes 2 and 3 were most similar, and included, along with theta, oscillations of much higher frequency; these oscillations were missing in the signal recorded on tetrode 1. The signal on electrode 4, recorded in the medial dorsal striatum, lacked the theta rhythm and was visibly different from the other three LFP’s. However, in all four recordings a large jump corresponding to a movement artifact occurred approximately near the 300th time point. The configurations of the four signals were used to evaluate the performance of SW, DCC, and WGA methods discussed above. To estimate the dynamic correlations between the signals in the presence of extreme values, we cut out segments of data between time points 200 and 400 for each of the four time series (left panels of Figure 9). We expected to obtain high correlations between all segments around the 300th data point, where the movement artifact registered in all four recordings. Outside of this point, we expected high correlation between LFPs 2 and 3, somewhat lower correlation between LFP 1 and either LFP 2 or LFP 3, and low correlations between LFP 4 and the signals on any of the three tetrodes. Furthermore, because of the presence of extreme values, we also expected that WGA would generate the best estimate.

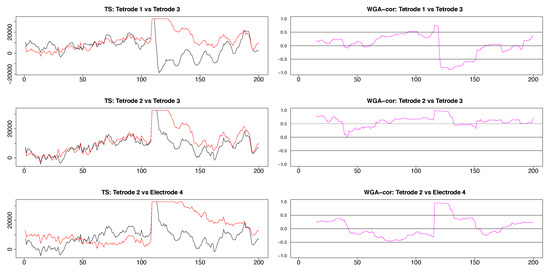

Figure 9.

Segments of local field potential (LFP) time series from four electrodes implanted in the CA1 field of the hippocampus (tetrodes 1-3) and medial dorsal striatum (electrode 4) in the brain of the same rat. Left column: Segments between time points 200 and 400 from the respective time series plotted in appendix Figure A3. Right column: segments between time points 415 and 600 of time series seen in Figure A3. 50th time point within these segments is marked by a blue vertical line.

Second, we also cut segments between time points 415 and 600 (plotted in the right panels in Figure 9). Visual inspection suggested a change in signal configuration around the 50th point of these fragments which should be reflected in changes in the dynamic correlations among LFPs 1-3 (left vs. the right of the 50th time point in Figure 9, right panels). At the same time, we expected little correlation between any of the LFPs 1-3 on one hand and LFP 4 on the other hand.

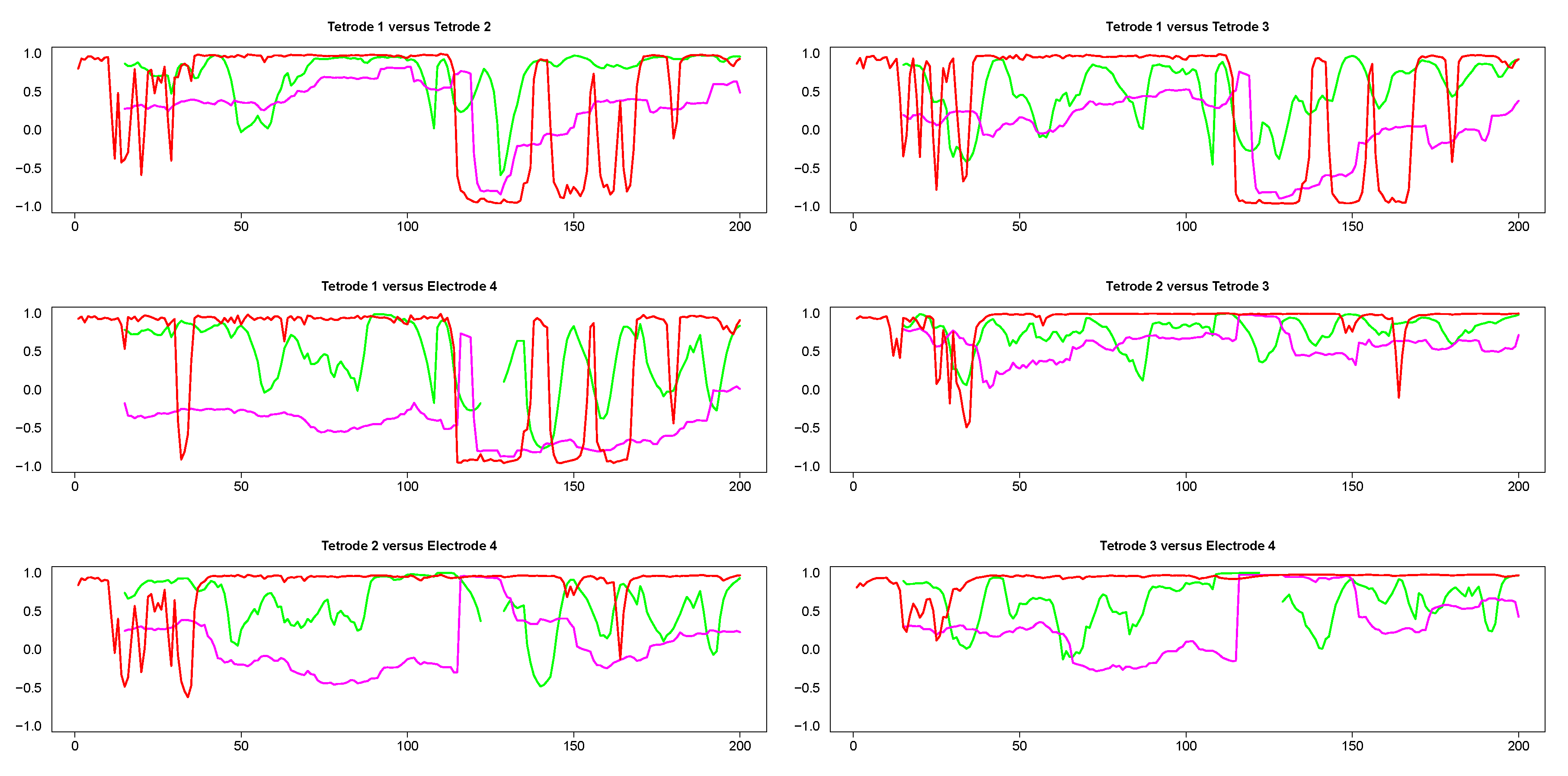

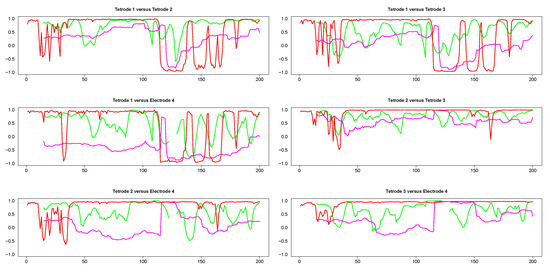

The results from pairwise analyses of dynamic correlations for the segments in the left side of Figure 9 are plotted in Figure 10. In all these plots, the estimates generated by the SW and DCC methods seem to be fluctuating very rapidly compared to WGA, suggesting that the presence of extreme values affected SW and DCC much more than WGA. It is also interesting to note that the SW method did not always result in a correlation value (see discontinuities in the green curve in Figure 10) because in some portions the original signals consisted of series of equal numbers with zero standard deviation. Thus, the WGA method generated the most reasonable estimates for this set of time series.

Figure 10.

Pairwise dynamic correlations for time series plotted in the left column panels in Figure 9. Green (SW), red (DCC) and magenta (WGA).

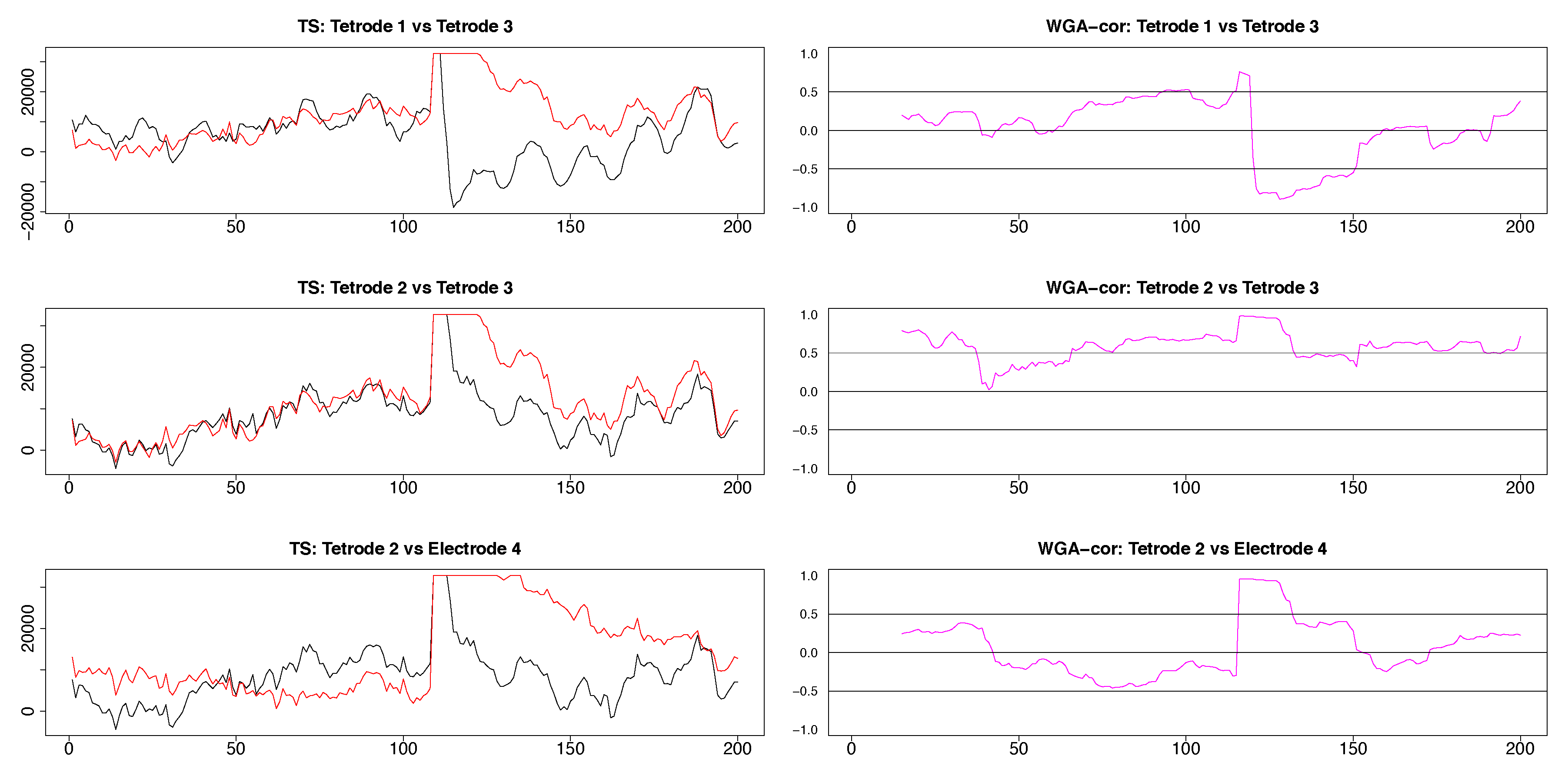

Visual comparison of LFPs 1 and 3 (first and third left panels in Figure 9 top left panel in Figure A4) indicated that although both signals were dominated by the theta rhythm and showed a movement artifact, they were overall dissimilar, particularly at the later time points. The WGA estimates (top right in Figure 10, top right in Figure A4) reflected accurately these variations, showing low correlation at the beginning of the signals raising to a maximum correlation at the point of the extreme values corresponding to the movement artifact, dipping to negative values in the immediate aftermath of the peaks, and returning to positive values at the very end. Similarly, visual inspection of the tetrodes 2 and 3 signals (middle two panels in the left side of Figure 9; middle left panel in Figure A4), suggested consistent high correlations across all time points within the segments. The results of the WGA analysis showed that this was indeed the case (magenta curve in the middle right panel of Figure 10; middle right panel of Figure A4). There was no significant dynamic shift for the magenta curve and its values were above 0.5 for a significant portion of the segment. In contrast, the other estimates fluctuated rapidly (green and red curves, middle right panel in Figure 10) which neither makes sense biologically, nor verifies our understanding based on visual inspection of the original pair of LFPs. Finally, the WGA estimate indicated that the dynamic correlation between any of the hippocampal signals (LFPs 1–3) and the striatal signal (LFP4) was consistently low except in the section of extreme values, where, not surprisingly, the values are high (middle left and bottom both panels in Figure 10, bottom right in Figure A4). This assessment corresponded with our expectation that the LFPs originating in the two different brain areas would be uncorrelated.

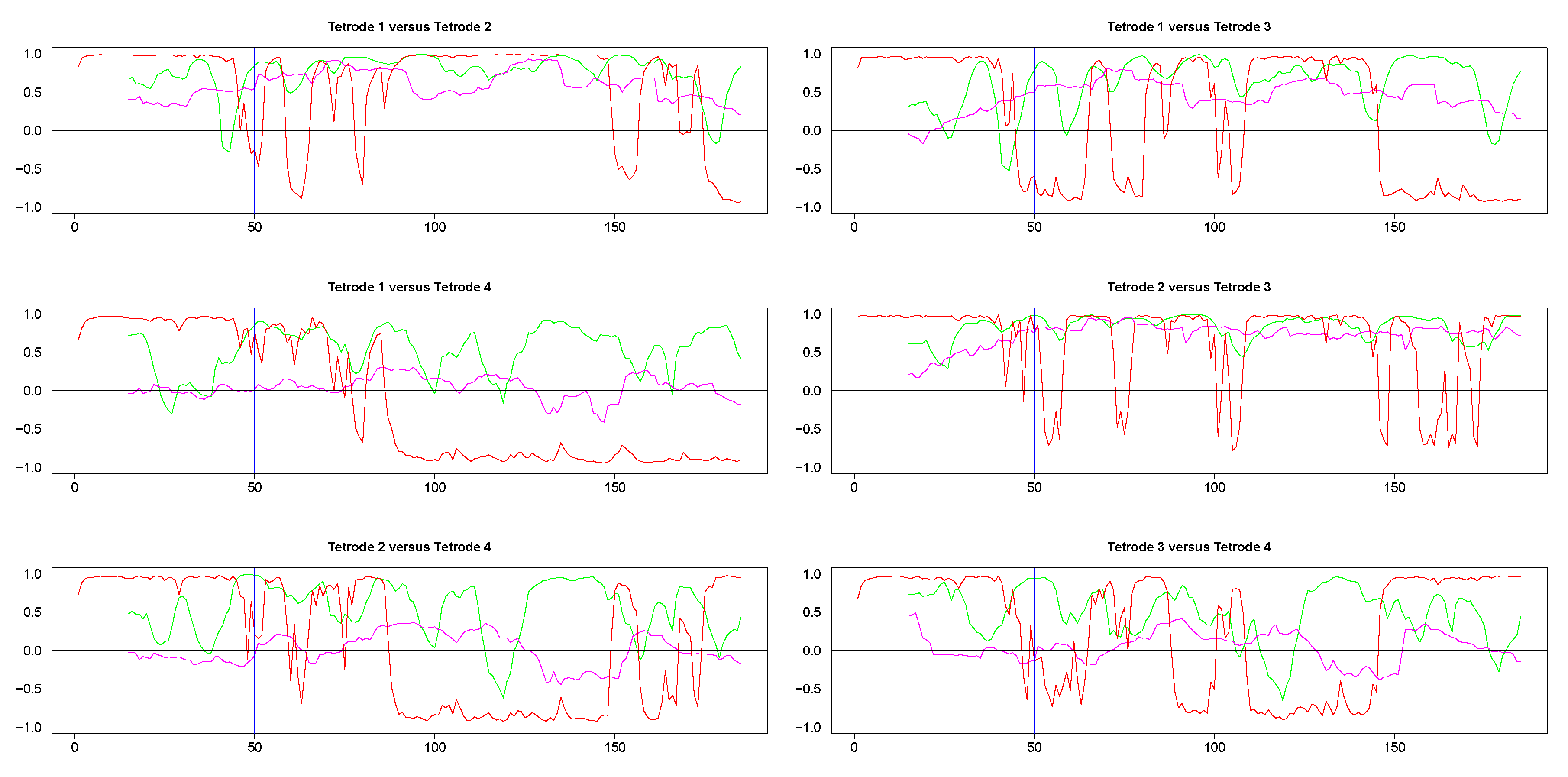

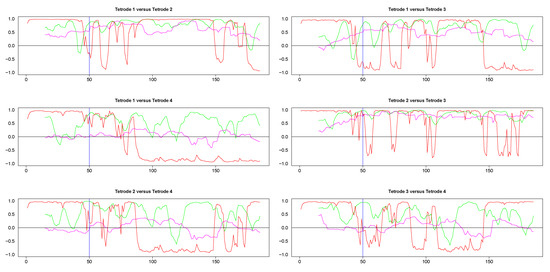

The results from pairwise analyses of dynamic correlations for the segments in the right side of Figure 9 are plotted in Figure 11 and Figure A5. As in the previous case, the estimates based on SW and DCC fluctuated rapidly. In contrast, WGA analysis reflected correctly the switch in dynamic correlation before and after the 50th time point and the higher similarity between the signals on tetrodes 2 and 3 vs. signal on tetrode 1 (top left panel and top two right panels in Figure 11, top two rows in Figure A5). Except for the segment before the 50th point, the WGA estimate of the dynamic correlation between tetrodes 2 and 3 was consistently above 0.5, confirming our visual evaluation. The estimate for the same time points of the dynamic correlations of electrode 1 with electrodes 2 and 3, respectively, showed near zero values in the beginning that gradually rose and after the 50th time point, the values were mostly above 0.5. These results again confirmed our visual observation of the original time series. Finally, as expected, the WGA estimates of dynamic correlations between hippocampal and striatal LFPs were near zero for most of the time points. Thus, the WGA method led to meaningful results.

Figure 11.

Pairwise dynamic correlations for time series plotted in the right column panels in Figure 9. Blue (SW), magenta (WGA), green (DCC). 50th time point within these segments is marked by a light blue vertical line.

4. Quantifying Uncertainty and Testing Hypothesis

In this section, we present methods to obtain confidence intervals (CIs) for our dynamic correlation estimates. We also present a statistical testing framework to assess whether correlation is dynamic or not. We calculate confidence intervals based on bootstrap samples of the original pair of time series. To be more specific, we first obtain WGA dynamic correlation estimates for each bootstrap sample of the original pair, and 95% CI at each time point is then obtained by calculating the 2.5th and 97.5th percentiles among all bootstrap based estimates. Our bootstrap sampling method is based on adapting slightly the ‘multivariate linear process bootstrap’ (MLPB) method developed and presented in [27]. The core idea behind our adaptation is same as in ‘DCBootCB’ algorithm presented in [28]. The hypothesis testing framework is based on adapting the statistical tests proposed in [29]. Details of all the approaches are given below.

4.1. Confidence Intervals

A simple bootstrap based re-sampling will destroy the autocovariance structure of the original pair of time series. A specialized re-sampling method such as MLPB algorithm that preserves the covariance structure between and within each time series in the pair is necessary. MLPB algorithm, developed by Jentsch and Politis have been shown [27] to give consistent estimates for statistics of order two or higher, in particular consistent estimates for autocovariance and autocorrelation. We provide a summary sketch of the key ingredients involved in the MLPB algorithm. Let us denote the two time series under consideration by and denote the vectorized version; this vector is centered in the first step. The main idea in the algorithm is to estimate the covariance matrix of centered properly, and use this estimate to de-correlate the centered . Standardized version of the de-correlated vector is just white noise and hence the standard bootstrap scheme could be used to resample from the standardized, decorrelated series. Inverse (transform) of the de-correlation operation is done to correlate the bootstrap sample pair. Thus at the end of this step we get a bootstrap sample pair that preserves the covariance structure of the original pair. The final step involves de-centering so that the bootstrap pair will have the same means as the original pair.

The key step in MLPB algorithm is the proper estimation of the covariance matrix of . A naive estimate for could be obtained using the sample autocovariance function , with ()th element being . Here

However, Jentsch and Politis [27] showed that the naive estimator is not consistent. A consistent estimator proposed in [27] is , where the ()th element is Here is a tapering function that leaves the diagonal elements unchanged and downweights the elements farther away from the diagonal. The simplest example (the one used in the simulation study in [27], and also the one used in this paper) is the trapezoid function which leaves the diagonal, super- and supra-diagonal elements unchanged and sets all other elements to zero. This estimation procedure involves a few more technical (but easy to understand) details, mainly to ensure that is positive definite. We skip these details; a reader interested in these details are referred to [28] or [27]. The steps in MLPB algorithm may be summarized as follows.

MLPB algorithm:

- Step 1:

- Let denote the centered where the centering for elements in and in are done separately.

- Step 2:

- Compute where L denotes the lower left matrix in Cholesky decomposition () of .

- Step 3:

- Let be the standardized version of obtained by subtracting the mean and dividing by the standard deviation (SD). Here mean and SD are computed from all elements in .

- Step 4:

- Generate by performing i.i.d resampling from .

- Step 5:

- Compute . Split into two separate vectors with containing the first T elements and containing the last T elements of .

- Step 6:

- Add mean of to each element of to obtain . Similarly, add mean of to each element of to obtain . The pair of vectors and are the bootstrap sample pair outputted.

Let X denote the data matrix with the elements of the row being In order to calculate the bootstrap based confidence intervals we employ the same strategy as in ‘DCBootCB’ algorithm presented in [28]. Steps are given below.

BootCI algorithm:

- Step 1:

- Divide the data matrix X into K adjacent blocks, where each block is a matrix.

- Step 2:

- For each block run the MLPB algorithm to get a bootstrap sample pair corresponding to that block.

- Step 3:

- Combine the K adjacent blocks of bootstrap sample pairs and apply the WGA algorithm on the combined bootstrap sample pair.

- Step 4:

- Repeat the above steps B times to get B dynamic correlation time series based on the WGA algorithm.

- Step 5:

- At each time point calculate the 2.5th and 97.5th percentiles among the bootstrap samples to obtain the bootstrap based 95% confidence interval.

A computationally simpler approach to calculate the 95% confidence interval is based on Fisher’s z-transformation of the correlation coefficient:

where is the estimate of dynamic correlation based on WGA at time t. Asymptotic standard error for is . The back transformation to obtain is , where tanh represents the hyperbolic tangent function. Based on this, the corresponding 95% confidence intervals can be calculated as

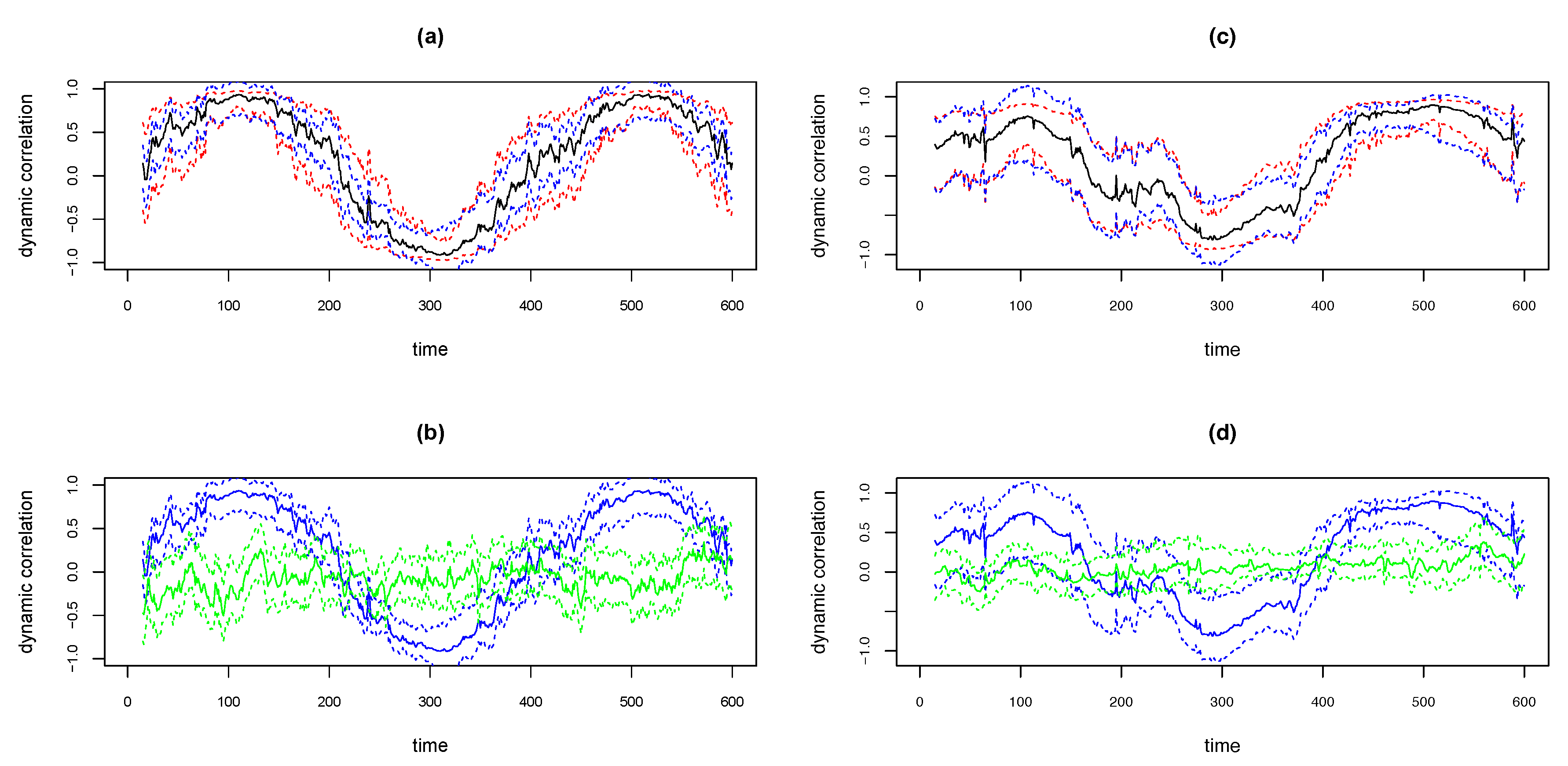

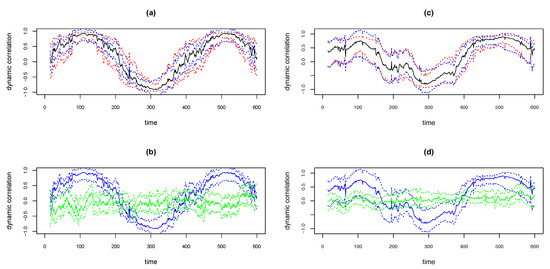

We illustrate the above methods using the simulated examples shown in the top panels in Figure 12. The simulated data is based on the . That is, the covariance matrix of the bivariate time series is of the form as in Equation (3), with , . The sample size . Thus the underlying true dynamic correlation is the same as depicted by the solid black lines in the right panels in Figure 3. The time series pair for the simulation in the left panel in Figure 12 was based on bivariate Normal and for the right panel based on bivariate Cauchy. The solid black curves in the top panels in Figure 12 are the estimates of dynamic correlation obtained using the WGA algorithm. The blue curves are the 95% confidence intervals obtained using the ‘BootCI’ algorithm and the red curves are the ones using the z-transformation method. As seen in the from the plots in the two top panels, the red and blue curves are close to each other. However, based on extensive simulations, Kudela et al. [28] determined that the coverage for the bootstrap based confidence interval is better than that based on z-transformation. We assume that the same result hold in our case too.

Figure 12.

Plots of WGA estimates and 95% confidence intervals for simulated data with D2b design, with bivariate normal (a,b) and bivariate Cauchy (c,d). Upper panels plot WGA estimate (black), 95% CI with ‘BootCI’ algorithm (blue) and with z-transformation (red). Lower panels plot WGA estimate and BootCI 95% CI (both blue), and corresponding estimates for randomly permuted series (green).

At any time point we may determine whether the dynamic correlation is significantly different from zero based on the bootstrap based confidence intervals and permutation based approaches described as follows. If we randomly permute both time series, the correlation between the resulting pair will be zero. We may obtain bootstrap based confidence interval for the dynamic correlation (which is an estimate of zero) between the randomly permuted pair using the ‘BootCI’ algorithm. The solid green lines in the bottom panels in Figure 12 show the WGA based dynamic correlation estimate of the randomly permuted series, and dotted green lines show the 95% confidence intervals for the corresponding solid green line using the ‘BootCI’ algorithm. The blue lines depicted in the bottom panels are same as the dynamic correlation estimate (solid line) for the original pair and the corresponding bootstrap based 95% confidence intervals (dotted lines) shown in the top panels. That is, the blue solid curve in the bottom panel is the exact replica of the black solid curve in the top panel and the blue dotted lines in the bottom panel is the exact replica of the blue dotted lines above. The time points at which the two confidence intervals do not overlap are the points at which the dynamic correlation is statistically different from zero at a 5% significance level.

4.2. Hypothesis Testing

In the above we presented methods to quantify uncertainty and a method to assess the time points at which the dynamic correlation is significantly different from zero. In this subsection we present a statistical hypothesis testing framework to assess whether ‘dynamic’ correlation detected is really dynamic or static. We consider the two statistical tests presented in [29] and show how they can be applied for the methods presented in this paper. The null and the alternate hypotheses under consideration are

where is the variance of the dynamic correlation time series. Thus, the null hypothesis correspond to ‘static’ case and the alternate correspond to the ‘dynamic’ case.

One obvious estimate of is the sample variance of estimated , which is the basis for the first test statistic proposed in [29]:

We propose a slightly modified version of the above statistic:

Here denotes the dynamic correlation estimated by the WGA method at time t, and denotes the window-size used. We also consider another statistic originally proposed by Zalesky et al. [30] which is described as follows. Let denote the time points at which the series crosses its median value . Let and . Thus we get a partition of the interval [] with segments [, ], , and within each segment the corresponding is completely above the median or completely below the median. Let denote the length of the interval and define

Then the test statistic proposed by Zalesky et al. [30] is

where and are tuning parameters. Zalesky et al. [30] recommended setting and .

Hypothesis testing can be done based on the standard error (SE) of each test statistic calculated from bootstrap samples obtained from the original pair of time series. Lower limit of 95% confidence intervals can be obtained by subtracting 2.5th percentile value and adding 97.5th percentile to the corresponding test statistic; here, the percentiles are obtained from among the test statistic values calculated from bootstrap samples. Null hypothesis may be rejected if the 95% confidence interval does not contain zero.

We illustrate the strategy for the simulated data in Figure 12 right panels (i.e., design and bivariate Cauchy; dynamic correlation estimated by WGA). The 95% confidence interval (CI) calculated for the test statistic for the above simulated example was (0.221, 0.679) based on which we may reject the null hypothesis since the CI does not contain zero. For the second test statistic, optimal values of and may be obtained also from bootstrap samples. For and , we considered a grid ranging from 0.05 to 1.50 with step size 0.05, and for each (, ) pair in the 2-dimensional grid calculated the sum-squared error based on the bootstrap samples. We picked the optimal (, ) pair as the one for which the bootstrap based sum-squared error was minimal. For the simulated example above, we obtained the optimal and . 95% confidence interval for the second test statistic based on this (, ) pair was (0.982, 6.659). Since this interval does not contain zero, we reject the null hypothesis based on the second test statistic as well. Thus, for the simulation example, we correctly reject the null hypothesis of ‘no dynamic correlation’ based on both hypothesis testing strategies presented in this section.

5. Conclusions and Discussion

Understanding the dynamic correlation underlying a pair of time series is of interest in many areas within neuroscience. Currently, existing techniques such as sliding window (SW) or GARCH-based DCC can be applied to this estimation problem. Linquist and co-authors [10] evaluated the existing methods and concluded that DCC is an attractive option for effective dynamic correlation estimation in fMRI data. Our analysis confirmed that DCC performs well for nicely behaved time series from a bivariate normal distribution. However, for time series with extreme values, common in neuroscience, DCC’s performance was sub-optimal. Our analyses also revealed that in practice DCC estimation is prone to numerical instability. To address these problems, in this paper, we proposed a novel approach to the estimation of dynamic correlation based on a weighted graph.

To study how our new method, the WGA, compares with the SW and DCC methods, we conducted extensive simulations. Results from our simulation study showed that WGA’s performance was superior to the existing methods in the presence of extreme values, especially when underlying correlation is truly dynamic. We also compared the results obtained by applying the three methods to one real fMRI data set and to one LFP data set with known properties and extreme values recorded in an awake rat. These analyses indicated that the WGA based method led to more biologically meaningful results and performed better in the presence of outliers than SW or DCC. We also provided a theoretical framework to better understand our method and presented approaches to quantify uncertainty and test hypotheses.

One factor that may contribute to the optimal performance of the WGA method may be the combination of local and global aspects of its algorithm. Method (SW) is based on only local information - correlation at each time point is based only on the values within the sliding window. As mentioned in Lindquist et al. [10], in these conditions the dynamic profiles of even random noise may show compelling changes in correlation across time. The local nature of SW could be transformed into a more global nature by increasing the window-size, but increasing the window-size beyond a particular level will lead to the method overlooking real dynamic changes. Method is much more of a global estimation approach in the sense that the entire data is used for estimating the parameters in DCC. The new approach based on WGA incorporates both local and global components. Since it is window-based, it is partly local in nature like SW. However, for time points within each window the correlations calculated involve median weight vectors with similar length as that of the original time series and consisting of weights from all time points from the entire series. This approach confers global character to the new method. The combined local and global aspects of the WGA method may result in optimal performance on data sets that do not conform to normality and contain extreme values. Our simulation study shows that extreme values do not pose a problem for WGA, which is based on a weighted graph where the weights are calculated using an arctan function. Arctan, like other functions used in robust estimation such as Huber’s function, trimming function or Tukey’s bisquare function reduces the influence of outlying values by applying a cap (see section 14.5 in [31]). Variations of our method could involve these other functions from the robust statistics literature.

Recently, a copula based dynamic conditional correlation approach for estimating time-varying correlation was proposed in [32]. Copulas are multivariate cumulative distribution functions that could be used to capture distribution-free dependence structure among random variables [33]. As pointed out in [32], copula framework can be combined with DCC-GARCH model to alleviate the sensitivity of DCC-GARCH approach on distributional assumptions. Based on a simulation study, it was showed in [32] that when bivariate time series data are generated from lognormal or beta densities, the copula-DCC-GARCH approach shows substantial improvement over DCC-GARCH method alone. In addition to the difference in the types of densities considered in [32], the design for simulations was also quite different: underlying dynamic correlation considered in their paper was much more rapidly varying than those considered in our paper or in [10]. In this regard, we acknowledge that the simulation study is limited in this introductory paper in which we present our new approach. Second, for all simulations in our paper, we considered only one fixed window size ( = 15). Extensive empirical studies comparing the outcomes of SW, DCC, copula-DCC, and VGA methods while varying the density functions, the rate of change of the underlying dynamic correlation, and window size will be needed in order to provide comprehensive recommendations for the practitioner aiming to utilize one of these analyses.

Building upon the mapping of multivariate time series into a multilayer graph proposed in [34], Sannino and co-authors described an approach to capture modulations across resting-state networks [35]. Sannino et al. observed that the networks within the multiplex layers have a modular structure which is an indication of different temporal regimes. Partitioning the visibility graph based on these modules provides a natural decomposition of the time series into time intervals. The approach presented in [35] is to capture the similarity between neural events using Sörensen similarity index between each pair of modules in the two time series. One difference from our approach is that we did not use a visibility criterion; another difference is that the approach in [35] is based on decomposing time series into time intervals. As in our case, we consider the approach in [35] could also be robust towards extreme values. Systematically exploring the differences and similarities between these two approaches will be an interesting topic for future work.

In this study, we used a task-based fMRI dataset to validate our method. Since the dataset is task-based, the dynamics may have been emphasized when compared to fMRI acquisition without tasks, namely resting state fMRI. It is a limitation of our work that we did not validate our finding by incorporating resting state fMRI data, but the Stroop task on which our dataset was based, is irrelevant for the method we proposed. In other words, we did not use the method to explore functional modifications introduced by performing the Stroop task, but we used fMRI data generated during the Stroop task to show that our method can capture such functional modifications. It is anticipated that similar validation can be obtained from resting state data.

Focusing on the pairwise bivariate correlations alone may exclude the potential effects of other regions on the pair of brain regions under consideration. There are new directions proposed recently in the neuroscience literature using partial correlations to account for such confounding effects [36,37]. To control for false positives due to partial correlations in the presence of colliders, [36,37] proposed a combined approach that includes both partial correlations and pairwise bivariate correlations. The approach presented in this paper focused only on bivariate correlations. Extending our method to a combined approach that takes into account confounding effects will also be an interesting direction for future work.

Author Contributions

Conceptualization, M.J. and Y.W.; Data curation, T.I. and J.F.; Formal analysis, M.J. and Y.W.; Funding acquisition, J.F.; Methodology, M.J., Y.W. and M.N.; Validation, A.J.; Writing—original draft, M.J.; Writing—review & editing, J.F. All authors have read and agree to the published version of the manuscript.

Funding

Majnu John’s work was supported in part by the following National Institute of Mental Health (NIMH) grants to PI, Anil K Malhotra: R01MH109508 and R01MH108654. Toshikazu Ikuta would like to acknowledge Brain and Behavior Research Foundations’s NARSAD Young Investigator Award. Aparna John would like to acknowledge Open Cloud Endowment Fellowship from the Open Cloud Institute at UTSA. Janina Ferbinteanu’s research was supported by NIMH grant R21MH106708. Stroop task research was sponsored in part by Pennsylvania Department of Health Formula Award SAP4100062201 and by the Army Research Laboratory under Cooperative Agreement Number W911NF-10-2-0022.

Acknowledgments

We sincerely thank the editors and the anonymous reviewers for their comments which led to improving the manuscript. We also thank Brian Caffo and Martin Lindquist for briefly reviewing an earlier version of this manuscript.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A. Figures and Tables

Figure A1.

The green curves (DCC estimates) in the bottom panel of Figure 1, with y-axis zoomed in, to see that the curves are not really straight lines.

Figure A1.

The green curves (DCC estimates) in the bottom panel of Figure 1, with y-axis zoomed in, to see that the curves are not really straight lines.

Figure A2.

The time series plot in the top panel in Figure 2 with the y-axis margins expanded.

Figure A2.

The time series plot in the top panel in Figure 2 with the y-axis margins expanded.

Table A1.

Results from Stroop Data Analysis.

Table A1.

Results from Stroop Data Analysis.

| Maximum Value of Correlations Across Time | |||

|---|---|---|---|

| SW | WGA | DCC | |

| Amygdala vs. Caudate Head | |||

| Amygdala vs. Frontal Medial Cortex | |||

| Amygdala vs. Heschel’s Gyrus | |||

| Amygdala vs. Insula | |||

| Amygdala vs. Subcallosal Cortex | |||

| Caudate Head vs. Frontal Medial Cortex | |||

| Caudate Head vs. Heschel’s Gyrus | |||

| Caudate Head vs. Insula | |||

| Caudate Head vs Subcallosal Cortex | |||

| Frontal Medial Cortex vs. Heschel’s Gyrus | |||

| Frontal Medial Cortex vs. Insula | |||

| Frontal Medial Cortex vs. Subcallosal Cortex | |||

| Heschel’s Gyrus vs. Insula | |||

| Heschel’s Gyrus vs. Subcallosal Cortex | |||

| Insula versus Subcallosal Cortex | |||

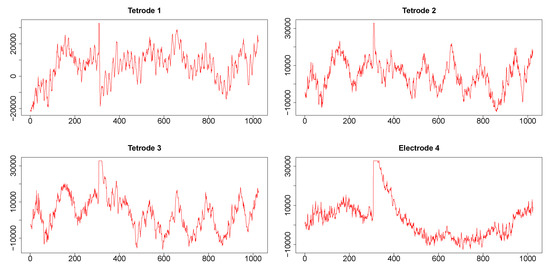

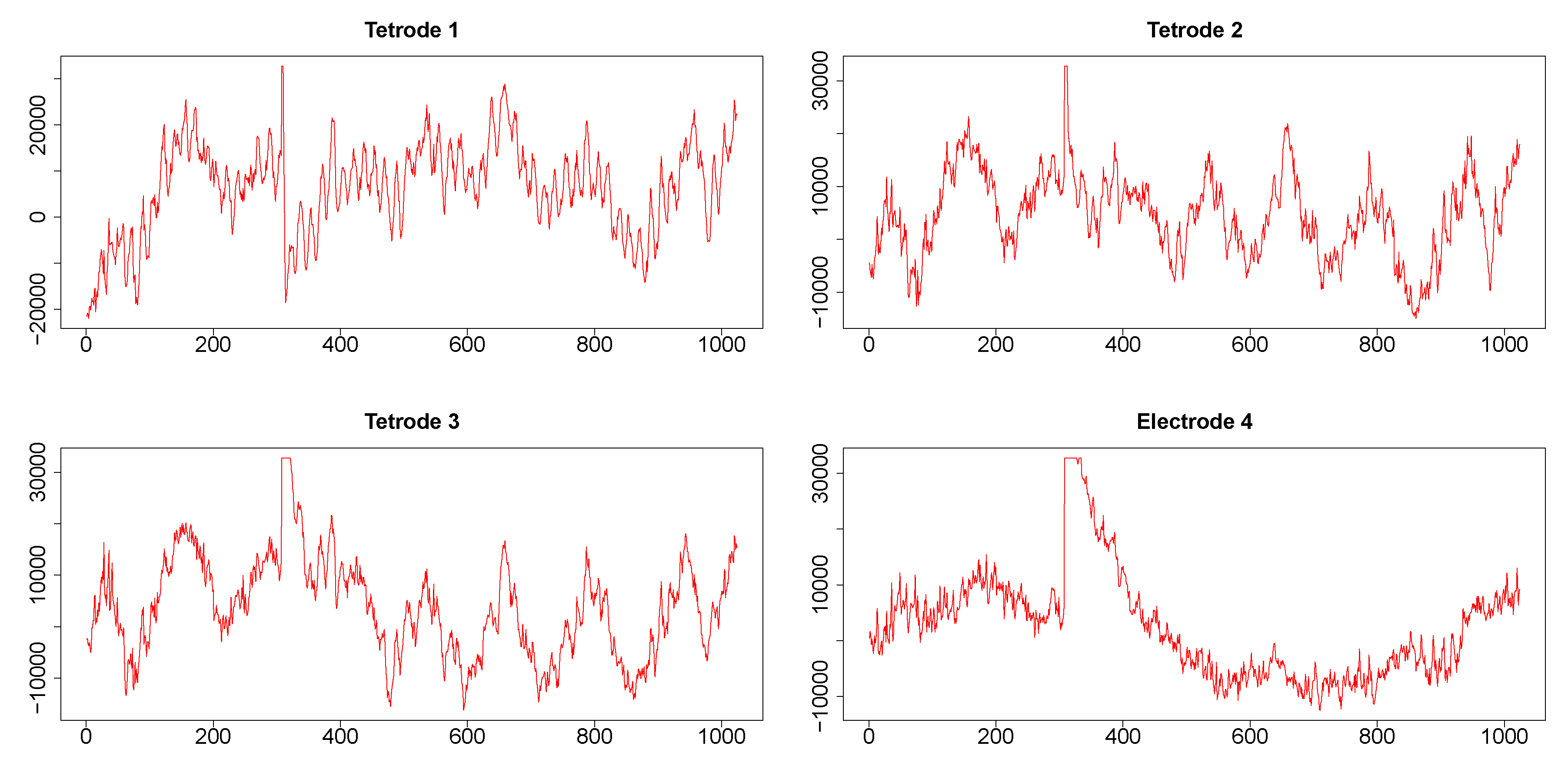

Figure A3.

LFP time series related to two consecutive time stamps (1024 data points sampled at 1 KHz, approximately 1 sec) from three tetrodes and one electrode. From each of these time series, segments between time points 200 and 400, and between 415 and 600 were cut out and plotted in left and right panels, respectively, of Figure 9. These segments were analyzed for illustrating the dynamic correlation estimation methods.

Figure A3.

LFP time series related to two consecutive time stamps (1024 data points sampled at 1 KHz, approximately 1 sec) from three tetrodes and one electrode. From each of these time series, segments between time points 200 and 400, and between 415 and 600 were cut out and plotted in left and right panels, respectively, of Figure 9. These segments were analyzed for illustrating the dynamic correlation estimation methods.

Figure A4.

Three plots selected from the pairwise plots in Figure 10. LFP pairs are shown in the left column, the resulting dynamic correlations computed by using the WGA method are shown in the right column. In all cases, the maximum correlation occurs at the time point corresponding to the stimulus artifact. Overall, the highest correlations resulted for the tetrodes 2 and 3 LFPs which are the most similar, while the dynamic correlation between the very different LFPs of tetrode 2 and electrode 4 were dominated by low values.

Figure A4.

Three plots selected from the pairwise plots in Figure 10. LFP pairs are shown in the left column, the resulting dynamic correlations computed by using the WGA method are shown in the right column. In all cases, the maximum correlation occurs at the time point corresponding to the stimulus artifact. Overall, the highest correlations resulted for the tetrodes 2 and 3 LFPs which are the most similar, while the dynamic correlation between the very different LFPs of tetrode 2 and electrode 4 were dominated by low values.

Figure A5.

Three plots selected from the pairwise plots in Figure 11. LFP pairs are shown in the left column, the resulting dynamic correlations computed by using the WGA method are shown in the right column. The hippocampal LFPs become more similar after the 50th time point and the dynamic correlations in the top two panels reflect adequately this change. The LFP’s recorded in hippocampus and medial dorsal striatum are very different, a characteristic reflected in the low values of the corresponding dynamic correlation.

Figure A5.

Three plots selected from the pairwise plots in Figure 11. LFP pairs are shown in the left column, the resulting dynamic correlations computed by using the WGA method are shown in the right column. The hippocampal LFPs become more similar after the 50th time point and the dynamic correlations in the top two panels reflect adequately this change. The LFP’s recorded in hippocampus and medial dorsal striatum are very different, a characteristic reflected in the low values of the corresponding dynamic correlation.

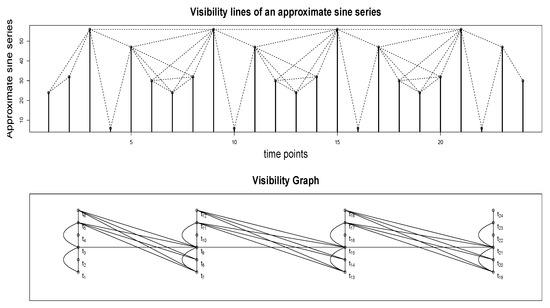

Appendix B. Visibility Criteria

Visibility graph algorithm (VGA) is a method that extends usefulness of the techniques and focus of mathematical graph theory to characterize time series. It has been shown that the visibility graph inherits several properties of the time series [16,38], and its study reveals nontrivial information about the time series itself.

Figure A6 top panel illustrates how the visibility algorithm works. The time series plotted in the upper panel is an approximate sine series; specifically, a sine series with Gaussian white noise added. The values at 24 time points are plotted as vertical bars. One may imagine these vertical bars as, for example, buildings along a straight line in a city landscape (i.e., a city block). Each node in the associated visibility graph (shown in the bottom panel) corresponds to each time point in the series. So, the graph in Figure A6 has 24 nodes. We draw a link or an edge between a pair of nodes, say and , if the visual line of sight from the top of the building (vertical bar) situated at towards the top of the building/bar at is not blocked by any intermediate buildings - that is, if we were to draw a line from the top of the vertical bar at to the top of the vertical bar at , it should not intersect any intermediate vertical bars. Visibility lines corresponding to the edges in the graph are plotted as dotted lines in the figure in the upper panel. For example, there is no edge between and since the line of sight (not shown) between the top points of the vertical bars at these two time points is blocked by the vertical bar at . On the other hand, there is an edge between and since the corresponding visibility line (shown as a dotted line) does not intersect the vertical bar at .

Figure A6.

A time series and the corresponding visibility graph. and so forth denote the time points as well as the corresponding nodes in the visibility graph.

Figure A6.

A time series and the corresponding visibility graph. and so forth denote the time points as well as the corresponding nodes in the visibility graph.

More formally, the following visibility criteria can be established: two arbitrary data values (, ) and () will have visibility, and consequently will become two connected nodes of the associated graph, if any other data () placed between them fulfills:

In our new approach both time series were converted into graphs using a modified version of the weighted visibility graph algorithm (WVGA), introduced in Supriya et al. [15]. WVGA is an extension of the visibility graph algorithm (originally developed by Lacasa et al. [16]) that converts a time series to a graph. The nodes of the WVGA-based graphs correspond to the time points and therefore, the graph generated from each time series consists of T nodes. The weight of the edge between the nodes corresponding to time points and , with , is calculated using Equation (2) given in p.6558 in Supriya et al. [15]:

Here and are the values of the time series at time points and , respectively. All edge weights are calculated in radians.

We modified WVGA in several ways. First, strictly speaking, for the graph to be a visibility graph, there should be no edge between two nodes if there is no “visibility" between them, where “visibility" is defined based on the criterion spelled out above. However, in our version of the algorithm we kept all the edges, and each edge had an assigned weight based on the arctan function mentioned above. Second, while Supriya et al. [15] used only the absolute values of the weights, we retained the signs of the weights calculated, as is. The reason we introduced these modifications is that the shape of the arctan function, rather than the visibility criterion, provides a suitable solution for our purpose.

Schematic Diagram

Figure A7.

Schematic diagram illustrating the median-filtered weight vectors used in the algorithm presented in this paper.

Figure A7.

Schematic diagram illustrating the median-filtered weight vectors used in the algorithm presented in this paper.

Appendix C. Theoretical Considerations

Appendix C.1. Heuristic Justification

A heuristic justification based on an approximation argument for the new approach (WGA) is as follows. Let and denote the first and second time series. Arctan function and median filtering in WGA were used to robustify the method. If we ignore the arctan function in the algorithm for a moment, and use mean instead of median, then the element in (–in this case it should be called , rather–) is

Here is the window size () that we used in the WGA algorithm. Note that we also assumed spacing for convenience. Re-arranging and collecting terms, we may write

Similarly, the element in (–, rather-) may be written as

We also write

Thus, without the arctan function, and with mean instead of median in the algorithm, our estimate for dynamic correlation at time t will be

The term depends on i and hence cannot be factored out of the sums in the numerator and denominator. For a heuristic argument, if we take to be approximately a constant that depends only on t, say , and also assume and , then term in the numerator and the denominator of Equation (A1) cancels out, so that in the case without arctan and with mean instead of median we get

This does not depend on t (i.e., not dynamic) but it is in the ballpark because it is an estimate of the overall average correlation across all time points. If we use median instead of mean, the term does not cancel out in Equation (A1) and hence the final estimate will depend on t (i.e., will be dynamic) and the arctan function will make the method more robust to outliers. We emphasize that the arguments presented in this subsection is just heuristic and the real justification of our algorithm is based on empirical simulations that we conducted and presented in the paper.

Appendix C.2. A Theoretical Framework

We present a theoretical framework to better understand our estimate for dynamic correlation. For convenience with notation, let us write . In the new notation, the two time series under consideration are and . We also assume for convenience that the two time series are centered. Consider the function defined by

with 0 at the spot, and the function defined by

Similarly define as

and

Let

where , , and are matrices defined as

Based on the above definitions, we have the following lemma.

Lemma A1.

K is symmetric and positive semidefinite.

Proof.

Symmetry is easy to check using the definition. To check positive semi-definiteness, let be a -vector of real numbers, where and . Then

□

Note that our dynamic correlation estimate sans arctan and median filtering is Framing our estimate in the above manner makes it easy to check that it is a proper correlation estimate that takes values in [].

Lemma A2.

, for all .

Proof.

Proof follows easily from the fact that for any symmetric positive semidefinite matrix M, , since all principal submatrices of a positive semidefinite matrix are also positive semi-definite (see p.398 in [39]). Here denotes the element of M. (Proof also follows from a simple application of Cauchy-Schwartz inequality directly to and , which does not require the matrix framework.) □

Note that , are “projections” into a larger dimensional feature space. Although the theoretical framework that we outlined above has some similarities with kernel method framework in machine learning, the two frameworks are not the same. The and functions in our framework are known functions, while as in the kernel setting, they are typically unknown functions. Also, , and are not exactly kernel functions because they do not depend on their inputs alone. For instance, in order to evaluate we use all other () as well. Hence, in particular, we cannot use the “kernel trick”. Nevertheless K is still symmetric and positive semidefinite like a Gram matrix and it is interesting to observe that our dynamic correlation estimate is a true correlation estimate in a higher dimensional space.

References

- Allen, E.A.; Damaraju, E.; Plis, S.M.; Erhardt, E.B.; Eichele, T.; Calhoun, V.D. Tracking whole-brain connectivity dynamics in the resting state. Cereb. Cortex. Available online: http://dx.doi.org/10.1093/cercor/bhs352 (accessed on 1 August 2017). [CrossRef] [PubMed]

- Chang, C.; Glover, G.H. Time—frequency dynamics of resting-state brain connectivity measured with fMRI. Neuroimage 2010, 50, 81–98. [Google Scholar] [CrossRef] [PubMed]

- Cribben, I.; Haraldsdottir, R.; Atlas, L.Y.; Wager, T.D.; Lindquist, M.A. Dynamic connectivity regression: Determining state-related changes in brain connectivity. Neuroimage 2012, 61, 907–920. [Google Scholar] [CrossRef] [PubMed]

- Handwerker, D.A.; Roopchansingh, V.; Gonzalez-Castillo, J.; Bandettini, P.A. Periodic changes in fMRI connectivity. Neuroimage 2012, 63, 1712–1719. [Google Scholar] [CrossRef]

- Hutchison, R.M.; Womelsdorf, T.; Allen, E.A.; Bandettini, P.A.; Calhoun, V.D.; Corbetta, M.; Penna, S.D.; Duyn, J.; Glover, G.; Gonzalez-Castillo, J.; et al. Dynamic functional connectivity: Promises, issues, and interpretations. NeuroImage 2013, 80, 360–378. [Google Scholar] [CrossRef]

- Jones, D.T.; Vemuri, P.; Murphy, M.C.; Gunter, J.L.; Senjem, M.L.; Machulda, M.M.; Przybelski, S.A.; Gregg, B.E.; Kantarci, K.; Knopman, D.S.; et al. Non-stationarity in the “resting brain’s” modular architecture. PLoS ONE 2012, 7, e39731. [Google Scholar] [CrossRef]

- Kiviniemi, V.; Vire, T.; Remes, J.; Elseoud, A.A.; Starck, T.; Tervonen, O.; Nikkinen, J. A sliding time-window ICA reveals spatial variability of the default mode network in time. Brain Connect. 2011, 1, 339–347. [Google Scholar] [CrossRef]

- Engle, R. Dynamic conditional correlation: A simple class of multivariate generalized autoregressive conditional heteroskedasticity models. J. Bus. Econ. Stat. 2002, 20, 339–350. [Google Scholar] [CrossRef]

- Engle, R.F.; Sheppard, K. Theoretical and Empirical Properties of Dynamic Conditional Correlation Multivariate Garch; Technical Report; National Bureau of Economic Research: Cambridge, MA, USA, 2001. [Google Scholar]

- Lindquist, M.A.; Xu, Y.; Nebel, M.B.; Caffo, B.S. Evaluating dynamic bivariate correlations in resting-state fMRI: A comparison study and a new approach. NeuroImage 2014, 101, 531–546. [Google Scholar] [CrossRef]

- Engle, R.F. Anticipating Correlations; Princeton University Press: Princeton, NJ, USA, 2009. [Google Scholar]

- Bollerslev, T. Generalized autoregressive conditional heteroskedasticity. J. Econ. 1986, 31, 307–327. [Google Scholar] [CrossRef]

- Francq, C.; Zakoian, J.-M. GARCH Models: Structure, Statistical Inference and Financial Applications; Wiley: Hoboken, NJ, USA, 2010; ISBN 978-0-470-68391-0. [Google Scholar]

- Purdon, P.L.; Solo, V.; Weisskoff, R.M.; Brown, E.N. Locally regularized spatiotemporalmodeling and model comparison for functional MRI. Neuroimage 2001, 14, 912–923. [Google Scholar] [CrossRef] [PubMed]

- Supriya, S.; Siuly, S.; Wang, H.; Cao., J.; Zhang, Y. Weighted visibility graph with complex network features in the detection of epilepsy. IEEE Access. 2016, 4, 6554–6566. [Google Scholar] [CrossRef]

- Lacasa, L.; Luque, B.; Ballesteros, F.; Luque, J.; Nuno, J.C. From time series to complex networks: The visibilty graph. Proc. Natl. Acad. Sci. USA 2008, 105, 4972–4975. [Google Scholar] [CrossRef] [PubMed]

- Zhang, J.; Small, M. Complex network from pseudoperiodic time series: Topology versus dynamics. Phys. Rev. Lett. 2006, 96, 238701. [Google Scholar] [CrossRef] [PubMed]

- Wuertz, D.; Setz, T.; Chalabi, Y. fMultivar: Rmetrics-Analysing and Modeling Multivariate Financial Return Distributions. Available online: https://CRAN.R-project.org/package=fMultivar (accessed on 1 August 2017).

- Stroop, J.R. Studies of interference in serial verbal reactions. Available online: https://psycnet.apa.org/record/1936-01863-001 (accessed on 1 August 2017).

- Verstynen, T.D. The organization and dynamics of corticostriatal pathways link the medial orbitofrontal cortex to future behavioral responses. J. Neurophysiol. 2014, 112, 2457–2469. [Google Scholar] [CrossRef][Green Version]

- Legatt, A.D.; Arezzo, J.; Vaughan, H.G., Jr. Averaged multiple unit activity as an estimate of phasic changes in local neuronal activity: Effects of volume-conducted potentials. J. Neurosci. Methods 1980, 2, 203–217. [Google Scholar] [CrossRef]

- Gray, C.M.; Maldonado, P.E.; Wilson, M.; McNaughton, B. Tetrodes markedly improve the reliability and yield of multiple single-unit isolation from multi-unit recordings in cat striate cortex. J. Neurosci. Methods 1995, 63, 43–54. [Google Scholar] [CrossRef]

- Kiparizoska, S.; Ikuta, T. Disrupted Olfactory Integration in Schizophrenia: Functional Connectivity Study. Int. J. Neuropsychopharmacol. 2017, 20, 740–746. [Google Scholar] [CrossRef]

- Palaniyappan, L.; Liddle, P.F. Does the salience network play a cardinal role in psychosis? An emerging hypothesis of insular dysfunction. J. Psychiatry Neurosci. 2012, 37, 17–27. [Google Scholar] [CrossRef]

- Pang, L.; Kennedy, D.; Wei, Q.; Lv, L.; Gao, J.; Li, H.; Quan, M.; Li, X.; Yang, Y.; Fan, X.; et al. Decreased Functional Connectivity of Insular Cortex in Drug Naïve First Episode Schizophrenia: In Relation to Symptom Severity. PLoS ONE 2017, 12, e0167242. [Google Scholar] [CrossRef]

- Leitman, D.I.; Hoptman, M.J.; Foxe, J.J.; Saccente, E.; Wylie, G.R.; Nierenberg, J.; Jalbrzikowski, M.; Lim, K.O.; Javitt, D.C. The neural substrates of impaired prosodic detection in schizophrenia and its sensorial antecedents. Am. J. Psychiatry 2007, 164, 474–482. [Google Scholar] [CrossRef] [PubMed]

- Jentsch, C.; Politis, D.N. Covariance matrix estimation and linear process bootstrap for multivariate time series of possibly increasing dimension. Ann. Stat. 2015, 43, 1117–1140. [Google Scholar] [CrossRef]

- Kudela, M.; Harezlak, J.; Lindquist, M.A. Assessing uncertainty in dynamic functional connectivity. NeuroImage 2017, 149, 165–177. [Google Scholar] [CrossRef] [PubMed]

- Hindriks, R.; Adhikari, M.H.; Murayama, Y.; Ganzetti, M.; Mantini, D.; Logothetis, N.K.; Deco, G. Can sliding-window correlations reveal dynamic functional connectivity in resting-state fMRI? NeuroImage 2016, 127, 242–256. [Google Scholar] [CrossRef]

- Zalesky, A.; Fornito, A.; Cocchi, L.; Gollo, L.L.; Breakspear, M. Time-resolved resting-state brain networks. Proc. Natl. Acad. Sci. USA 2014, 111, 10341–10346. [Google Scholar] [CrossRef]

- Pawitan, Y. All Likelihood, 1 ed.; Oxford University Press: Oxford, UK, 2013. [Google Scholar]

- Lee, N.; Kim, J.-M. Dynamic functional connectivity analysis of resting-state fMRI based on copula time-varying correlation. J. Neurosci. Methods 2019, 323, 32–47. [Google Scholar] [CrossRef]