Abstract

In this paper, we introduce the notion of “learning capacity” for algorithms that learn from data, which is analogous to the Shannon channel capacity for communication systems. We show how “learning capacity” bridges the gap between statistical learning theory and information theory, and we use it to derive generalization bounds for finite hypothesis spaces, differential privacy, and countable domains, among others. Moreover, we prove that under the Axiom of Choice, the existence of an empirical risk minimization (ERM) rule that has a vanishing learning capacity is equivalent to the assertion that the hypothesis space has a finite Vapnik–Chervonenkis (VC) dimension, thus establishing an equivalence between two of the most fundamental concepts in statistical learning theory and information theory. In addition, we show how the learning capacity of an algorithm yields important qualitative results, such as the relation between generalization and algorithmic stability, information leakage, and data processing. Finally, we conclude by listing some open problems and suggesting future directions of research.

1. Introduction

1.1. Generalization Risk

A central goal when learning from data is to strike a balance between underfitting and overfitting. Mathematically, this requirement can be translated into an optimization problem with two competing objectives. First, we would like the learning algorithm to produce a hypothesis (i.e., an answer) that performs well on the empirical sample. This goal can be easily achieved by using a rich hypothesis space that can “explain” any observations. Second, we would like to guarantee that the performance of the hypothesis on the empirical data (a.k.a. training error) is a good approximation of its performance with respect to the unknown underlying distribution (a.k.a. test error). This goal can be achieved by limiting the complexity of the hypothesis space. The first objective mitigates underfitting, while the second mitigates overfitting.

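Formally, suppose we have a learning algorithm $\mathcal{L}$ that receives a sample $\mathbf{S}_m = \{\mathbf{Z}_1, \ldots, \mathbf{Z}_m\}$, which comprises m i.i.d. observations $\mathbf{Z}_i \sim \mathcal{D}$, and uses $\mathbf{S}_m$ to select a hypothesis $\mathbf{H} \in \mathcal{H}$. Let $l(\mathbf{Z}, \mathbf{H})$ be a loss function defined on the product space $\mathcal{Z} \times \mathcal{H}$. For instance, l can be the mean squared error (MSE) in regression or the 0–1 error in classification. Then, the goal of learning from data is to select a hypothesis $\mathbf{H}$ such that its true risk $R(\mathbf{H})$, defined by

$$R(\mathbf{H}) = \mathbb{E}_{\mathbf{Z} \sim \mathcal{D}}\, l(\mathbf{Z}, \mathbf{H}), \qquad (1)$$

is small. However, this optimization problem is often difficult to solve exactly since the underlying distribution of observations is seldom known. Instead, because the true risk can be decomposed into a sum of two terms:

$$R(\mathbf{H}) = R_{\mathrm{emp}}(\mathbf{H}; \mathbf{S}_m) + \big(R(\mathbf{H}) - R_{\mathrm{emp}}(\mathbf{H}; \mathbf{S}_m)\big),$$

where $R_{\mathrm{emp}}(\mathbf{H}; \mathbf{S}_m) = \frac{1}{m}\sum_{i=1}^{m} l(\mathbf{Z}_i, \mathbf{H})$, both terms can be tackled separately. The first term in the equation above corresponds to the empirical risk on the training sample $\mathbf{S}_m$. The second term corresponds to the generalization risk. Hence, by minimizing both terms, one obtains a learning algorithm whose true risk is small.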

Minimizing the empirical risk can be achieved using tractable approximations to the empirical risk minimization (ERM) procedure, such as stochastic convex optimization [1,2]. However, the generalization risk is often difficult to deal with directly because the underlying distribution is often unknown. Instead, it is common practice to bound it analytically. By establishing analytical conditions for generalization, one hopes to design better learning algorithms that both perform well empirically and generalize well to unseen data.

Several methods have been proposed in the past for bounding the generalization risk of learning algorithms. Some examples of popular approaches include uniform convergence, algorithmic stability, Rademacher and Gaussian complexities, and the PAC–Bayesian framework [3,4,5,6,7].

The proliferation of such bounds can be understood upon noting that the generalization risk of a learning algorithm is influenced by multiple factors, such as the domain $\mathcal{Z}$, the hypothesis space $\mathcal{H}$, and the mapping from $\mathcal{Z}^m$ to $\mathcal{H}$. Hence, one may derive new generalization bounds by imposing conditions on any of these components. For example, the Vapnik–Chervonenkis (VC) theory derives generalization bounds by assuming constraints on $\mathcal{H}$, whereas stability bounds, e.g., [6,8,9], are derived by assuming constraints on the mapping from $\mathcal{Z}^m$ to $\mathcal{H}$.

Rather than showing that certain conditions are sufficient for generalization, we will establish in this paper conditions that are both necessary and sufficient. More precisely, we will show that the “uniform” generalization risk of a learning algorithm admits an exact information-theoretic characterization. In particular, it is equal to the total variation distance between the joint distribution of the hypothesis $\mathbf{H}$ and a single random training example $\hat{\mathbf{Z}}$, on one hand, and the product of their marginal distributions, on the other hand. Hence, it is analogous to the mutual information between $\mathbf{H}$ and $\hat{\mathbf{Z}}$. Since uniform generalization is an information-theoretic quantity, information-theoretic tools, such as the data-processing inequality and the chain rules of entropy [10], can be used to analyze the performance of machine learning algorithms. For example, we present a simple proof of the classical generalization bound in the finite hypothesis space setting that uses only information-theoretic inequalities, without any reference to the union bound.

1.2. Types of Generalization

Generalization bounds can be stated either in expectation or in probability. Let $l: \mathcal{Z} \times \mathcal{H} \to [0, 1]$ be some loss function with a bounded range. Then, we have the following definitions:

Definition 1

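(Generalization in Expectation). The expected generalization risk of a learning algorithm $\mathcal{L}$ with respect to a loss $l$ is defined by:

$$R_{\mathrm{gen}}(\mathcal{L}) = \mathbb{E}_{\mathbf{S}_m, \mathbf{H}}\big[R(\mathbf{H}) - R_{\mathrm{emp}}(\mathbf{H}; \mathbf{S}_m)\big], \qquad (2)$$

where $R(\mathbf{H})$ is defined in Equation (1), and the expectation is taken over the random choice of $\mathbf{S}_m$ and the internal randomness of $\mathcal{L}$. A learning algorithm $\mathcal{L}$ generalizes in expectation if $R_{\mathrm{gen}}(\mathcal{L}) \to 0$ as $m \to \infty$ for all distributions $\mathcal{D}$.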

Definition 2

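(Generalization in Probability). A learning algorithm $\mathcal{L}$ generalizes in probability if for any $\epsilon > 0$, we have:

$$\lim_{m \to \infty} \Pr\big\{|R_{\mathrm{emp}}(\mathbf{H}; \mathbf{S}_m) - R(\mathbf{H})| > \epsilon\big\} = 0, \qquad (3)$$

where the probability is evaluated over the randomness of $\mathbf{S}_m$ and the internal randomness of the learning algorithm.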
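To make Definitions 1 and 2 concrete, the following sketch estimates both quantities by simulation. The learner is a toy of our own choosing, not an algorithm from this paper: observations are Bernoulli(φ), the hypothesis is the empirical mean $h = \frac{1}{m}\sum_i z_i$, and the loss is $l(z, h) = (z - h)^2 \in [0, 1]$. The function names are hypothetical.

```python
import random


def generalization_gap(m, phi, rng):
    """One draw of R(H) - R_emp(H; S_m) for a toy empirical-mean learner.

    Assumed setup (illustration only): Z ~ Bernoulli(phi), H = mean(S_m),
    and loss l(z, h) = (z - h) ** 2, which lies in [0, 1].
    """
    sample = [1 if rng.random() < phi else 0 for _ in range(m)]
    h = sum(sample) / m
    # True risk E_{Z ~ Bernoulli(phi)} (Z - h)^2, computed exactly.
    true_risk = phi * (1 - h) ** 2 + (1 - phi) * h ** 2
    # Empirical risk on the same sample that was used to select h.
    emp_risk = sum((z - h) ** 2 for z in sample) / m
    return true_risk - emp_risk


def estimate(m, phi=0.3, eps=0.05, trials=20_000, seed=0):
    """Monte Carlo estimates of the quantities in Definitions 1 and 2."""
    rng = random.Random(seed)
    gaps = [generalization_gap(m, phi, rng) for _ in range(trials)]
    expected_gap = sum(gaps) / trials                     # Definition 1
    tail_prob = sum(abs(g) > eps for g in gaps) / trials  # Definition 2
    return expected_gap, tail_prob


for m in (20, 100, 500):
    exp_gap, tail = estimate(m, trials=2_000)
    print(f"m={m:4d}  E[gap] ~ {exp_gap:.4f}  P(|gap| > 0.05) ~ {tail:.3f}")
```

For this particular loss, a direct calculation gives $\mathbb{E}[R(\mathbf{H}) - R_{\mathrm{emp}}(\mathbf{H}; \mathbf{S}_m)] = 2\phi(1-\phi)/m$. The first estimate should therefore shrink like 1/m, and the tail probability should also decay as m grows.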

In general, both types of generalization have been used to analyze machine learning algorithms. For instance, generalization in probability is used in the VC theory to analyze algorithms with finite VC dimensions, such as linear classifiers [3]. Generalization in expectation, on the other hand, has been used to analyze learning algorithms such as stochastic gradient descent (SGD), differentially private algorithms, and ridge regression [11,12,13,14]. Generalization in expectation is often simpler to analyze, but it provides a weaker performance guarantee.

1.3. Paper Outline

In this paper, a third notion of generalization is introduced, which is called uniform generalization. Uniform generalization also provides generalization bounds in expectation, but it is stronger than the traditional form of generalization in expectation in Definition 1 because it requires that the generalization risk vanish uniformly in expectation across all bounded parametric loss functions (hence the name). In this paper, a loss function $l(\cdot, \mathbf{H})$ is called “parametric” if it is conditionally independent of the original training sample $\mathbf{S}_m$ given the learned hypothesis $\mathbf{H}$.

As mentioned earlier, the uniform generalization risk is equal to an information-theoretic quantity and it yields classical results in statistical learning theory. Perhaps more importantly, and unlike traditional in-expectation guarantees that do not imply concentration, we will show that uniform generalization in expectation implies generalization in probability. Hence, all of the uniform generalization bounds derived in this paper hold both in expectation and with a high probability.

The theory of uniform generalization bridges the gap between information theory and statistical learning theory. For example, we will establish an equivalence between the VC dimension, on one hand, and another quantity that is quite analogous to the Shannon channel capacity, on the other hand. The VC dimension and the Shannon channel capacity are arguably among the most central concepts in statistical learning theory and information theory, respectively. This connection between the two concepts is obtained via the notion of the “learning capacity” that we introduce in this paper, which is the supremum of the uniform generalization risk across all input distributions. We will compute the learning capacities of many machine learning algorithms and show that they match known bounds on the generalization risk up to logarithmic factors.

In general, the main aim of this work is to bring to light a new information-theoretic approach for analyzing machine learning algorithms. Although “uniform generalization” might appear to be a strong condition at first sight, one of the central themes emphasized throughout this paper is that uniform generalization is, in fact, a natural condition that arises commonly in practice. It is not a condition that machine learning practitioners need to require or enforce. We believe this holds because any learning algorithm is a channel from the space of training samples to the hypothesis space, so its risk of overfitting can be analyzed by studying the properties of this mapping itself. Such an approach yields the uniform generalization bounds that are derived in this paper.

While we strive to introduce foundational results in this work, there are many important questions that remain unanswered. We conclude this paper by listing some of those open problems and suggesting future directions of research.

2. Notation

The notation used in this paper is fairly standard. Important exceptions are listed here. If $\mathbf{X}$ is a random variable that takes its values from a finite set $\mathcal{S}$ uniformly at random, we write $\mathbf{X} \sim \mathcal{S}$ to denote such a distribution. If $A$ is a boolean random variable (i.e., a predicate), then $\mathbb{I}\{A\} = 1$ if and only if $A$ is true; otherwise, $\mathbb{I}\{A\} = 0$. In general, random variables are denoted with boldface letters $\mathbf{X}$, instances of random variables are denoted with small letters x, matrices are denoted with capital letters X, and alphabets (i.e., fixed sets) are denoted with calligraphic typeface $\mathcal{X}$ (except that $\mathcal{L}$ is reserved for the learning algorithm and $\mathcal{D}$ is reserved for the input distribution, as is customary in the literature).

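Throughout this paper, we will always write $\mathcal{Z}$ to denote the space of observations (a.k.a. domain) and write $\mathcal{H}$ to denote the hypothesis space (a.k.a. range). A learning algorithm $\mathcal{L}: \bigcup_{m=1}^{\infty} \mathcal{Z}^m \to \mathcal{H}$ is formally treated as a stochastic map, where the hypothesis $\mathbf{H} \in \mathcal{H}$ can be a deterministic or a randomized function of the training sample $\mathbf{S}_m \in \mathcal{Z}^m$. Given a 0–1 loss function $l: \mathcal{Z} \times \mathcal{H} \to \{0, 1\}$, we will abuse terminology slightly by speaking about the “VC dimension of $\mathcal{H}$” when we actually mean the VC dimension of the loss class $\{l(\cdot, h) : h \in \mathcal{H}\}$.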

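In addition, given two probability measures p and q defined on the same space, we will write $\langle p, q \rangle$ to denote the overlapping coefficient between p and q. That is, $\langle p, q \rangle = \int \min\{p(x), q(x)\}\, dx = 1 - \|p - q\|_{\mathcal{T}}$, where $\|p - q\|_{\mathcal{T}} = \frac{1}{2}\int |p(x) - q(x)|\, dx$ is the total variation distance.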
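For distributions with finite support, the identity $\langle p, q \rangle = 1 - \|p - q\|_{\mathcal{T}}$ can be checked directly, with integrals replaced by sums. The sketch below assumes probability mass functions stored as Python dictionaries and uses exact rational arithmetic. The helper names are our own.

```python
from fractions import Fraction


def total_variation(p, q):
    """||p - q||_T = (1/2) * sum_x |p(x) - q(x)| for pmfs given as dicts."""
    support = set(p) | set(q)
    return sum(abs(p.get(x, 0) - q.get(x, 0)) for x in support) / 2


def overlap(p, q):
    """Overlapping coefficient <p, q> = sum_x min{p(x), q(x)}."""
    support = set(p) | set(q)
    return sum(min(p.get(x, 0), q.get(x, 0)) for x in support)


p = {"a": Fraction(1, 2), "b": Fraction(1, 3), "c": Fraction(1, 6)}
q = {"a": Fraction(1, 4), "b": Fraction(1, 4), "d": Fraction(1, 2)}

print(total_variation(p, q), overlap(p, q))  # 1/2 1/2
```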

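Moreover, we will use the order in probability notation for real-valued random variables. Here, we adopt the notation used by [15] and [16]. In particular, let $\mathbf{X}_\gamma$ be a real-valued random variable that depends on some parameter $\gamma$. Then, we will write $\mathbf{X}_\gamma = \mathcal{O}_p(f(\gamma))$ if for any $\delta > 0$, there exist constants C and $\gamma_0$ (which may depend on $\delta$) such that for any fixed $\gamma \ge \gamma_0$, the inequality $|\mathbf{X}_\gamma| \le C\, |f(\gamma)|$ holds with a probability of at least $1 - \delta$. In other words, the ratio $\mathbf{X}_\gamma / f(\gamma)$ is stochastically bounded [15]. Similarly, we write $\mathbf{X}_\gamma = o_p(f(\gamma))$ if $\mathbf{X}_\gamma / f(\gamma)$ converges to zero in probability. As an example, if $\mathbf{X} \sim \mathcal{N}(\mathbf{0}, I_d)$ is a standard multivariate Gaussian vector, then $\|\mathbf{X}\|_2 = \mathcal{O}_p(\sqrt{d})$ even though $\|\mathbf{X}\|_2$ can be arbitrarily large. Intuitively, the probability of the event $\|\mathbf{X}\|_2 \ge d^{\frac{1}{2} + \epsilon}$ when $\epsilon > 0$ goes to zero as $d \to \infty$, so $\|\mathbf{X}\|_2$ is effectively of the order $\mathcal{O}(\sqrt{d})$.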
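The Gaussian example can be checked numerically. The sketch below uses only the standard library, and its names are our own. It draws $\mathbf{X} \sim \mathcal{N}(\mathbf{0}, I_d)$ and reports $\|\mathbf{X}\|_2 / \sqrt{d}$. The ratio stays near 1 for every d, which is what $\|\mathbf{X}\|_2 = \mathcal{O}_p(\sqrt{d})$ asserts. Its spread also shrinks as d grows, which is a stronger property than stochastic boundedness alone.

```python
import math
import random


def norm_ratio(d, rng):
    """Return ||X||_2 / sqrt(d) for a single draw X ~ N(0, I_d)."""
    squared_norm = sum(rng.gauss(0.0, 1.0) ** 2 for _ in range(d))
    return math.sqrt(squared_norm / d)


rng = random.Random(1)
ratios = {d: [norm_ratio(d, rng) for _ in range(200)] for d in (10, 100, 1000)}
for d, r in ratios.items():
    print(f"d={d:5d}  min={min(r):.3f}  max={max(r):.3f}")
```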

3. Related Work

A learning algorithm is called consistent if the true risk of its hypothesis converges to the optimal true risk in $\mathcal{H}$, i.e., $\inf_{h \in \mathcal{H}} R(h)$, as $m \to \infty$ in a distribution-agnostic manner. A learning problem, which is a tuple $(\mathcal{Z}, \mathcal{H}, l)$ with l being a loss function defined on the product space $\mathcal{Z} \times \mathcal{H}$, is called learnable if it admits a consistent learning algorithm. It can be shown that learnability is equivalent to uniform convergence for supervised classification and regression, even though uniform convergence is not necessary in the general setting [17].

Unlike learnability, the subject of generalization looks into how representative the empirical risk is of the true risk, as discussed earlier. It can rightfully be considered an extension of the law of large numbers, which is one of the earliest and most important results in probability theory and statistics. However, unlike the law of large numbers, which assumes that observations are independent and identically distributed, the subject of generalization in machine learning addresses the case where the losses $l(\mathbf{Z}_i, \mathbf{H})$ are no longer i.i.d. because $\mathbf{H}$ is selected according to the training sample $\mathbf{S}_m$ and $\mathbf{Z}_i \in \mathbf{S}_m$.

Similar to learnability, uniform convergence is, by definition, sufficient for generalization, but it is not necessary because the learning algorithm might restrict its search space to a smaller subset of $\mathcal{H}$. So, in addition to uniform convergence bounds, several other methods have been introduced for bounding the generalization risk, such as algorithmic stability, Rademacher and Gaussian complexities, generic chaining bounds, the PAC-Bayesian framework, and robustness-based analysis [5,6,7,18,19,20]. Classical concentration-of-measure inequalities, often combined with the union bound, form the building blocks of such rich theories.

In this work, we address the subject of generalization in machine learning from an information-theoretic point of view. We will show that if the hypothesis $\mathbf{H}$ conveys “little” information about a single random training example $\hat{\mathbf{Z}} \sim \mathbf{S}_m$, then the difference between $R_{\mathrm{emp}}(\mathbf{H}; \mathbf{S}_m)$ and $R(\mathbf{H})$ will be small with a high probability. The measure of information we use here is given by the notion of variational information $\mathcal{J}(\hat{\mathbf{Z}}; \mathbf{H})$ between the hypothesis $\mathbf{H}$ and a single random training example $\hat{\mathbf{Z}}$. Variational information, also sometimes called T-information [14], is an instance of the class of informativity measures using f-divergences, which can be motivated axiomatically [21,22]. Unlike traditional methods, we will prove that $\mathcal{J}(\hat{\mathbf{Z}}; \mathbf{H})$ is equal to the “uniform” generalization risk; it is not just an upper bound.

Information-theoretic approaches to analyzing the generalization risk of learning algorithms, such as the one proposed in this paper, have found applications in adaptive data analysis. This includes the work of [12] using the max-information, the work of [23] and [24] using the mutual information, and the work of [14] using the leave-one-out information. One key contribution of our work is to show that one should examine the relationship between the hypothesis and a single random training example, instead of the relationship between the hypothesis and the full training sample, as is customary in the literature. The gap between these two approaches is strict. For example, Theorem 8 in Section 5.5 presents a learning algorithm that has a vanishing uniform generalization risk even though the mutual information between the learned hypothesis and the training sample can be made arbitrarily large.

4. Uniform Generalization

4.1. Preliminary Definitions

In this paper, we consider the general setting of learning introduced by Vapnik [3]. To reiterate, we have an observation space (a.k.a. domain) $\mathcal{Z}$ and a hypothesis space $\mathcal{H}$. Our learning algorithm $\mathcal{L}$ receives a set of m observations $\mathbf{S}_m = \{\mathbf{Z}_1, \ldots, \mathbf{Z}_m\} \in \mathcal{Z}^m$ generated i.i.d. from some fixed unknown distribution $\mathcal{D}$, and picks a hypothesis $\mathbf{H} \in \mathcal{H}$ according to some probability distribution $p(\mathbf{H} \mid \mathbf{S}_m)$. In other words, $\mathcal{L}$ is a channel from $\mathcal{Z}^m$ to $\mathcal{H}$. In this paper, we allow the hypothesis $\mathbf{H}$ to be any summary statistic of the training set. It can be an answer to a query, a measure of central tendency, or a mapping from the input space to the output space. In fact, we even allow $\mathbf{H}$ to be a subset of the training set itself. In formal terms, $\mathcal{L}$ is a stochastic map between the two random variables $\mathbf{S}_m \in \mathcal{Z}^m$ and $\mathbf{H} \in \mathcal{H}$, where the exact interpretation of those random variables is irrelevant. Moreover, we assume that there exists a non-negative bounded loss function $l: \mathcal{Z} \times \mathcal{H} \to [0, 1]$ that is used to measure the fitness of the hypothesis $\mathbf{H} \in \mathcal{H}$ on the observation $\mathbf{Z} \in \mathcal{Z}$.

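For any fixed hypothesis $h \in \mathcal{H}$, we define its true risk $R(h)$ by Equation (1) and denote its empirical risk on the training sample by $R_{\mathrm{emp}}(h; \mathbf{S}_m) = \frac{1}{m}\sum_{i=1}^{m} l(\mathbf{Z}_i, h)$. We also define the true and empirical risks of the learning algorithm $\mathcal{L}$ by the expected corresponding risk of its hypothesis:

$$R(\mathcal{L}) = \mathbb{E}_{\mathbf{S}_m}\, \mathbb{E}_{\mathbf{H} \mid \mathbf{S}_m}\, R(\mathbf{H}), \qquad R_{\mathrm{emp}}(\mathcal{L}) = \mathbb{E}_{\mathbf{S}_m}\, \mathbb{E}_{\mathbf{H} \mid \mathbf{S}_m}\, R_{\mathrm{emp}}(\mathbf{H}; \mathbf{S}_m). \qquad (4)$$

Finally, the generalization risk of the learning algorithm is defined by:

$$R_{\mathrm{gen}}(\mathcal{L}) = R(\mathcal{L}) - R_{\mathrm{emp}}(\mathcal{L}). \qquad (5)$$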

Next, we define uniform generalization:

Definition 3

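(Parametric Loss). A loss function $l(\cdot, \mathbf{H}): \mathcal{Z} \to [0, 1]$ is called parametric if it is conditionally independent of the training sample $\mathbf{S}_m$ given the hypothesis $\mathbf{H}$. That is, it satisfies the Markov chain $\mathbf{S}_m \to \mathbf{H} \to l(\cdot, \mathbf{H})$.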

Definition 4

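(Uniform Generalization). A learning algorithm $\mathcal{L}$ generalizes uniformly with rate $\epsilon \ge 0$ if for all bounded parametric losses $l: \mathcal{Z} \times \mathcal{H} \to [0, 1]$, we have $|R_{\mathrm{gen}}(\mathcal{L})| \le \epsilon$, where $R_{\mathrm{gen}}(\mathcal{L})$ is given in Equation (5).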

Informally, Definition 4 states that once a hypothesis $\mathbf{H}$ is selected by a learning algorithm that achieves uniform generalization, no “adversary” can post-process the hypothesis in a manner that causes overfitting to occur. Equivalently, uniform generalization implies that the empirical performance of $\mathbf{H}$ on the sample $\mathbf{S}_m$ will remain close to its performance with respect to the underlying distribution $\mathcal{D}$, regardless of how that performance is measured. For example, the loss function in Equation (5) can be the misclassification error rate as in the traditional classification setting, a cost-sensitive error rate as in fraud detection and medical diagnosis [25], or the Brier score as in probabilistic predictions [26]. The generalization guarantee would hold in any case.

4.2. Variational Information

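Given two random variables $\mathbf{X}$ and $\mathbf{Y}$, the variational information between them is defined to be the total variation distance between the joint distribution $p(\mathbf{X}, \mathbf{Y})$ and the product of marginals $p(\mathbf{X})\, p(\mathbf{Y})$. We will denote this by $\mathcal{J}(\mathbf{X}; \mathbf{Y})$. By definition:

$$\mathcal{J}(\mathbf{X}; \mathbf{Y}) = \big\|p(\mathbf{X}, \mathbf{Y}) - p(\mathbf{X})\, p(\mathbf{Y})\big\|_{\mathcal{T}} = \mathbb{E}_{\mathbf{X}}\, \big\|p(\mathbf{Y}) - p(\mathbf{Y} \mid \mathbf{X})\big\|_{\mathcal{T}} = \mathbb{E}_{\mathbf{Y}}\, \big\|p(\mathbf{X}) - p(\mathbf{X} \mid \mathbf{Y})\big\|_{\mathcal{T}}.$$

Note that $\mathcal{J}(\mathbf{X}; \mathbf{Y}) = \mathcal{J}(\mathbf{Y}; \mathbf{X})$, and that $\mathcal{J}(\mathbf{X}; \mathbf{Y}) = 0$ if and only if $\mathbf{X}$ and $\mathbf{Y}$ are independent. We describe some of the important properties of variational information in this section. The reader may consult the appendices for detailed proofs.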
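The three equivalent expressions for $\mathcal{J}(\mathbf{X}; \mathbf{Y})$ can be checked exactly on small discrete joint distributions. The sketch below assumes a joint pmf stored as a dictionary keyed by $(x, y)$ pairs. The helper names are our own.

```python
from collections import defaultdict
from fractions import Fraction


def marginals(joint):
    """Marginal pmfs p(X) and p(Y) of a joint pmf {(x, y): prob}."""
    px, py = defaultdict(Fraction), defaultdict(Fraction)
    for (x, y), prob in joint.items():
        px[x] += prob
        py[y] += prob
    return dict(px), dict(py)


def variational_information(joint):
    """J(X;Y) = ||p(X,Y) - p(X)p(Y)||_T."""
    px, py = marginals(joint)
    return sum(abs(joint.get((x, y), 0) - px[x] * py[y]) for x in px for y in py) / 2


def expected_conditional_tv(joint):
    """E_X ||p(Y) - p(Y | X)||_T."""
    px, py = marginals(joint)
    total = Fraction(0)
    for x, p_x in px.items():
        if p_x == 0:
            continue
        tv = sum(abs(py[y] - joint.get((x, y), 0) / p_x) for y in py) / 2
        total += p_x * tv
    return total


def transpose(joint):
    """Swap the roles of X and Y, so the same helper computes E_Y ||p(X) - p(X | Y)||_T."""
    return {(y, x): prob for (x, y), prob in joint.items()}


# X is a fair bit; Y copies X with probability 3/4 (a binary symmetric channel).
bsc = {(0, 0): Fraction(3, 8), (0, 1): Fraction(1, 8),
       (1, 0): Fraction(1, 8), (1, 1): Fraction(3, 8)}
print(variational_information(bsc),
      expected_conditional_tv(bsc),
      expected_conditional_tv(transpose(bsc)))  # 1/4 1/4 1/4
```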

Lemma 1

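(Data Processing Inequality). If $\mathbf{X} \to \mathbf{Y} \to \mathbf{Z}$ is a Markov chain, then:

$$\mathcal{J}(\mathbf{X}; \mathbf{Z}) \le \min\big\{\mathcal{J}(\mathbf{X}; \mathbf{Y}),\ \mathcal{J}(\mathbf{Y}; \mathbf{Z})\big\}.$$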

This data processing inequality holds, in general, for all informativity measures using f-divergences [21,22].
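As a numerical sanity check (not a proof), the sketch below draws random Markov chains $\mathbf{X} \to \mathbf{Y} \to \mathbf{Z}$ over small finite alphabets and confirms that Lemma 1 holds on each one. The alphabet sizes and helper names are arbitrary choices for illustration.

```python
import random


def random_pmf(n, rng):
    weights = [rng.random() + 1e-9 for _ in range(n)]
    total = sum(weights)
    return [w / total for w in weights]


def variational_information(joint):
    """J for a joint pmf stored as a nested list joint[i][j]."""
    px = [sum(row) for row in joint]
    py = [sum(col) for col in zip(*joint)]
    return 0.5 * sum(abs(joint[i][j] - px[i] * py[j])
                     for i in range(len(px)) for j in range(len(py)))


def dpi_terms(rng, nx=3, ny=4, nz=3):
    """Return J(X;Y), J(Y;Z), J(X;Z) for a random Markov chain X -> Y -> Z."""
    p_x = random_pmf(nx, rng)
    p_y_given_x = [random_pmf(ny, rng) for _ in range(nx)]
    p_z_given_y = [random_pmf(nz, rng) for _ in range(ny)]
    j_xy = [[p_x[i] * p_y_given_x[i][j] for j in range(ny)] for i in range(nx)]
    p_y = [sum(j_xy[i][j] for i in range(nx)) for j in range(ny)]
    j_yz = [[p_y[j] * p_z_given_y[j][k] for k in range(nz)] for j in range(ny)]
    # Markov property: p(x, z) = sum_y p(x) p(y | x) p(z | y).
    j_xz = [[sum(j_xy[i][j] * p_z_given_y[j][k] for j in range(ny)) for k in range(nz)]
            for i in range(nx)]
    return (variational_information(j_xy),
            variational_information(j_yz),
            variational_information(j_xz))


rng = random.Random(0)
for _ in range(1000):
    j_xy, j_yz, j_xz = dpi_terms(rng)
    assert j_xz <= min(j_xy, j_yz) + 1e-12
print("data processing inequality held on 1000 random chains")
```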

Lemma 2

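(Information Cannot Hurt). For any random variables $\mathbf{X}$, $\mathbf{Y}$, and $\mathbf{Z}$, we have:

$$\mathcal{J}(\mathbf{X}; \mathbf{Y}) \le \mathcal{J}(\mathbf{X}; (\mathbf{Y}, \mathbf{Z})).$$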

Proof.

The proof is in Appendix A. □

Finally, we derive a chain rule for the variational information.

Definition 5

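(Conditional Variational Information). The conditional variational information between the two random variables $\mathbf{X}$ and $\mathbf{Y}$ given $\mathbf{Z}$ is defined by:

$$\mathcal{J}(\mathbf{X}; \mathbf{Y} \mid \mathbf{Z}) = \mathbb{E}_{\mathbf{Z}}\, \big\|p(\mathbf{X}, \mathbf{Y} \mid \mathbf{Z}) - p(\mathbf{X} \mid \mathbf{Z})\, p(\mathbf{Y} \mid \mathbf{Z})\big\|_{\mathcal{T}},$$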

which is analogous to the conditional mutual information in information theory [10].

Theorem 1

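(Chain Rule). Let $(\mathbf{H}_1, \ldots, \mathbf{H}_k)$ be a sequence of random variables. Then, for any random variable $\mathbf{Z}$, we have:

$$\mathcal{J}(\mathbf{Z}; (\mathbf{H}_1, \ldots, \mathbf{H}_k)) \le \sum_{t=1}^{k} \mathcal{J}(\mathbf{Z}; \mathbf{H}_t \mid (\mathbf{H}_1, \ldots, \mathbf{H}_{t-1})),$$

where the $t = 1$ term is the unconditional $\mathcal{J}(\mathbf{Z}; \mathbf{H}_1)$.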

Proof.

The proof is in Appendix B. □
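For $k = 2$, the chain rule can be checked numerically on random joint distributions of $(\mathbf{Z}, \mathbf{H}_1, \mathbf{H}_2)$ over small finite alphabets. The sketch below is an illustration only, and its names are our own. It also confirms the lower bound $\mathcal{J}(\mathbf{Z}; \mathbf{H}_1) \le \mathcal{J}(\mathbf{Z}; (\mathbf{H}_1, \mathbf{H}_2))$ from Lemma 2.

```python
import itertools
import random


def random_joint(nz, na, nb, rng):
    """Random strictly positive joint pmf p[z][a][b] of (Z, H1, H2)."""
    w = [[[rng.random() + 1e-9 for _ in range(nb)] for _ in range(na)] for _ in range(nz)]
    total = sum(v for plane in w for row in plane for v in row)
    return [[[v / total for v in row] for row in plane] for plane in w]


def chain_rule_terms(p):
    """Return J(Z;(H1,H2)), J(Z;H1) and J(Z;H2|H1) for a joint pmf p[z][a][b]."""
    nz, na, nb = len(p), len(p[0]), len(p[0][0])
    cells = list(itertools.product(range(nz), range(na), range(nb)))
    pz = [sum(p[z][a][b] for a in range(na) for b in range(nb)) for z in range(nz)]
    pa = [sum(p[z][a][b] for z in range(nz) for b in range(nb)) for a in range(na)]
    pza = [[sum(p[z][a]) for a in range(na)] for z in range(nz)]
    pab = [[sum(p[z][a][b] for z in range(nz)) for b in range(nb)] for a in range(na)]
    joint_pair = 0.5 * sum(abs(p[z][a][b] - pz[z] * pab[a][b]) for z, a, b in cells)
    first = 0.5 * sum(abs(pza[z][a] - pz[z] * pa[a]) for z in range(nz) for a in range(na))
    # E_{H1} ||p(Z, H2 | H1) - p(Z | H1) p(H2 | H1)||_T, written with joint probabilities.
    second = 0.5 * sum(abs(p[z][a][b] - pza[z][a] * pab[a][b] / pa[a]) for z, a, b in cells)
    return joint_pair, first, second


rng = random.Random(0)
for _ in range(1000):
    lhs, j1, j2_given_1 = chain_rule_terms(random_joint(3, 2, 4, rng))
    assert j1 - 1e-12 <= lhs <= j1 + j2_given_1 + 1e-12
print("chain rule (and Lemma 2) held on 1000 random joint distributions")
```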

Although the chain rule above provides an upper bound, the upper bound is tight in the following sense:

Proposition 1.

For any random variables, and, we haveand.

Proof.

The proof is in Appendix C. □

In other words, the inequality in the chain rule becomes an equality if:

The chain rule provides a recipe for bounding the bias of a composition of hypotheses $(\mathbf{H}_1, \ldots, \mathbf{H}_k)$. Recently, [23] proposed an information budget framework for controlling the bias of estimators by controlling the mutual information between $\mathbf{H}$ and the training sample $\mathbf{S}_m$. The proposed framework rests on the chain rule of mutual information. Here, we note that the argument for the information budget framework also holds when using the variational information, due to the chain rule above.

4.3. Equivalence Result

Our first main theorem states that the uniform generalization risk has a precise information-theoretic characterization.

Theorem 2.

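Given a fixed constant $0 \le \epsilon \le 1$ and a learning algorithm $\mathcal{L}$ that selects a hypothesis $\mathbf{H} \in \mathcal{H}$ according to a training sample $\mathbf{S}_m = \{\mathbf{Z}_1, \ldots, \mathbf{Z}_m\}$, where $\mathbf{Z}_i \sim \mathcal{D}$ are i.i.d., $\mathcal{L}$ generalizes uniformly with rate $\epsilon$ if and only if $\mathcal{J}(\hat{\mathbf{Z}}; \mathbf{H}) \le \epsilon$, where $\hat{\mathbf{Z}} \sim \mathbf{S}_m$ is a single random training example.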

Proof.

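Let $\mathcal{L}$ be a learning algorithm that receives a finite set of training examples $\mathbf{S}_m = \{\mathbf{Z}_1, \ldots, \mathbf{Z}_m\}$ drawn i.i.d. from a fixed unknown distribution $\mathcal{D}$. Let $\mathbf{H}$ be the hypothesis chosen by $\mathcal{L}$ (which can be deterministic or randomized), and write $\hat{\mathbf{Z}} \sim \mathbf{S}_m$ to denote a random variable that selects its value uniformly at random from the training sample $\mathbf{S}_m$. Clearly, $\hat{\mathbf{Z}}$ and $\mathbf{H}$ are not independent in general. To simplify notation, we will write $\mathbf{L} = l(\cdot, \mathbf{H})$ to denote the loss function. Note that $\mathbf{L}$ is itself a random variable that satisfies the Markov chain $\mathbf{S}_m \to \mathbf{H} \to \mathbf{L}$. The claim is that $\mathcal{L}$ generalizes uniformly with rate $\epsilon$ across all parametric loss functions if and only if $\mathcal{J}(\hat{\mathbf{Z}}; \mathbf{H}) \le \epsilon$.

By the Markov property, we have $p(\mathbf{L} \mid \mathbf{S}_m, \mathbf{H}) = p(\mathbf{L} \mid \mathbf{H})$. By definition, the true and empirical risks of $\mathcal{L}$ are given by:

$$R(\mathcal{L}) = \mathbb{E}_{\mathbf{L}}\, \mathbb{E}_{\mathbf{Z} \sim \mathcal{D}}\, \mathbf{L}(\mathbf{Z}), \qquad (6)$$

$$R_{\mathrm{emp}}(\mathcal{L}) = \mathbb{E}_{\mathbf{S}_m}\, \mathbb{E}_{\mathbf{L} \mid \mathbf{S}_m}\, \mathbb{E}_{\hat{\mathbf{Z}} \sim \mathbf{S}_m}\, \mathbf{L}(\hat{\mathbf{Z}}). \qquad (7)$$

Because $\hat{\mathbf{Z}}$ is a random variable whose value is chosen uniformly at random from the training set $\mathbf{S}_m$, its marginal distribution is $\mathcal{D}$. Its conditional distribution given $\mathbf{L}$ can be different, however, because both $\hat{\mathbf{Z}}$ and $\mathbf{L}$ depend on the training set $\mathbf{S}_m$. Nevertheless, they are conditionally independent of each other given $\mathbf{S}_m$. By marginalization, we have:

$$p(\hat{\mathbf{Z}} \mid \mathbf{L}) = \mathbb{E}_{\mathbf{S}_m \mid \mathbf{L}}\, p(\hat{\mathbf{Z}} \mid \mathbf{S}_m).$$

Combining this with Equations (6) and (7) yields $R(\mathcal{L}) = \mathbb{E}_{\mathbf{L}}\, \mathbb{E}_{\hat{\mathbf{Z}}}\, \mathbf{L}(\hat{\mathbf{Z}})$ and $R_{\mathrm{emp}}(\mathcal{L}) = \mathbb{E}_{\mathbf{L}}\, \mathbb{E}_{\hat{\mathbf{Z}} \mid \mathbf{L}}\, \mathbf{L}(\hat{\mathbf{Z}})$. Both equations imply that:

$$|R_{\mathrm{gen}}(\mathcal{L})| = \Big|\mathbb{E}_{\mathbf{L}}\big[\mathbb{E}_{\hat{\mathbf{Z}}}\, \mathbf{L}(\hat{\mathbf{Z}}) - \mathbb{E}_{\hat{\mathbf{Z}} \mid \mathbf{L}}\, \mathbf{L}(\hat{\mathbf{Z}})\big]\Big|.$$

Now, we would like to sandwich the right-hand side between upper and lower bounds. To do this, we note that if $p_1$ and $p_2$ are two distributions defined on the same domain $\mathcal{Z}$ and $f: \mathcal{Z} \to [0, 1]$, then:

$$\big|\mathbb{E}_{\mathbf{Z} \sim p_1} f(\mathbf{Z}) - \mathbb{E}_{\mathbf{Z} \sim p_2} f(\mathbf{Z})\big| \le \|p_1 - p_2\|_{\mathcal{T}},$$

where $\|p_1 - p_2\|_{\mathcal{T}}$ is the total variation distance. This result can be immediately proven by considering the two regions $\{z: p_1(z) > p_2(z)\}$ and $\{z: p_1(z) \le p_2(z)\}$ separately. In addition, it is tight because the inequality holds with equality for the loss function $f(z) = \mathbb{I}\{p_1(z) > p_2(z)\}$. Consequently:

$$|R_{\mathrm{gen}}(\mathcal{L})| \le \mathbb{E}_{\mathbf{L}}\, \big\|p(\hat{\mathbf{Z}}) - p(\hat{\mathbf{Z}} \mid \mathbf{L})\big\|_{\mathcal{T}} = \mathcal{J}(\hat{\mathbf{Z}}; \mathbf{L}).$$

Finally, from the Markov chain $\hat{\mathbf{Z}} \to \mathbf{S}_m \to \mathbf{H} \to \mathbf{L}$ and the data processing inequality, we have $\mathcal{J}(\hat{\mathbf{Z}}; \mathbf{L}) \le \mathcal{J}(\hat{\mathbf{Z}}; \mathbf{H})$. Plugging this into the earlier inequality yields the bound:

$$|R_{\mathrm{gen}}(\mathcal{L})| \le \mathcal{J}(\hat{\mathbf{Z}}; \mathbf{H}).$$

To prove the converse, define:

$$l^\star(z, \mathbf{H}) = \mathbb{I}\big\{p(\hat{\mathbf{Z}} = z \mid \mathbf{H}) > p(\hat{\mathbf{Z}} = z)\big\}.$$

The loss $l^\star$ is independent of the training sample $\mathbf{S}_m$ given $\mathbf{H}$ because $p(\hat{\mathbf{Z}} \mid \mathbf{H})$ is evaluated by taking the expectation over all training samples conditioned on $\mathbf{H}$. Hence, $l^\star$ is a 0–1 loss defined on the product space $\mathcal{Z} \times \mathcal{H}$ and satisfies the Markov chain $\mathbf{S}_m \to \mathbf{H} \to l^\star(\cdot, \mathbf{H})$. For this choice of loss, we have:

$$|R_{\mathrm{gen}}(\mathcal{L})| = \mathbb{E}_{\mathbf{H}}\, \big\|p(\hat{\mathbf{Z}}) - p(\hat{\mathbf{Z}} \mid \mathbf{H})\big\|_{\mathcal{T}} = \mathcal{J}(\hat{\mathbf{Z}}; \mathbf{H}).$$

Hence, the variational information is not only an upper bound on the uniform generalization risk but also a lower bound on it. Therefore, $\mathcal{J}(\hat{\mathbf{Z}}; \mathbf{H})$ is equal to the uniform generalization risk. □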
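Both directions of Theorem 2 can be checked exactly on a small discrete learner. The sketch below is an illustration under our own assumptions. It uses the empirical-average learner that also appears in Example 1 below, with Bernoulli(φ) data, m = 8, and φ = 0.3, and it enumerates all $2^m$ samples to compute $\mathcal{J}(\hat{\mathbf{Z}}; \mathbf{H})$. It then confirms two things. First, randomly drawn losses in [0, 1] never exceed this value in absolute generalization risk; such losses depend only on $(z, \mathbf{H})$, so they are parametric. Second, the loss $l^\star$ from the converse attains the bound. All function names are hypothetical.

```python
import itertools
import random
from collections import defaultdict


def enumerate_learner(m, phi):
    """Yield (sample, probability, k), where the hypothesis H = k/m is the sample mean."""
    for sample in itertools.product((0, 1), repeat=m):
        k = sum(sample)
        yield sample, phi ** k * (1 - phi) ** (m - k), k


def variational_information(m, phi):
    """Exact J(Z_hat; H), plus the tables needed to build the loss l*."""
    joint = defaultdict(float)   # p(Z_hat = z, H = k)
    p_h = defaultdict(float)     # p(H = k)
    for sample, prob, k in enumerate_learner(m, phi):
        p_h[k] += prob
        for z in sample:         # Z_hat is a uniformly random training example
            joint[(z, k)] += prob / m
    p_z = {0: 1 - phi, 1: phi}   # the marginal of Z_hat equals the data distribution
    j = 0.5 * sum(abs(joint[(z, k)] - p_z[z] * p_h[k]) for z in (0, 1) for k in list(p_h))
    return j, joint, p_h, p_z


def generalization_risk(loss, m, phi):
    """R(L) - R_emp(L) from Equations (4) and (5), computed by exact enumeration."""
    p_z = {0: 1 - phi, 1: phi}
    true_risk = emp_risk = 0.0
    for sample, prob, k in enumerate_learner(m, phi):
        true_risk += prob * sum(p_z[z] * loss(z, k) for z in (0, 1))
        emp_risk += prob * sum(loss(z, k) for z in sample) / m
    return true_risk - emp_risk


m, phi = 8, 0.3
j, joint, p_h, p_z = variational_information(m, phi)

rng = random.Random(0)
for _ in range(50):
    table = {(z, k): rng.random() for z in (0, 1) for k in range(m + 1)}
    gap = generalization_risk(lambda z, k: table[(z, k)], m, phi)
    assert abs(gap) <= j + 1e-9   # any parametric loss in [0, 1] obeys |R_gen| <= J


def loss_star(z, k):
    """The 0-1 loss l*(z, H) = I{p(Z_hat = z | H) > p(Z_hat = z)} from the converse."""
    return 1.0 if joint[(z, k)] / p_h[k] > p_z[z] else 0.0


print(f"J = {j:.6f}, |R_gen| under l* = {abs(generalization_risk(loss_star, m, phi)):.6f}")
```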

Remark 1.

One important observation about Theorem 2 is that the variational information is measured between the hypothesis $\mathbf{H}$ and a single training example $\hat{\mathbf{Z}}$, which is quite different from previous works that looked into the mutual information with the entire training sample $\mathbf{S}_m$. By considering $\hat{\mathbf{Z}}$ rather than $\mathbf{S}_m$, we quantify the uniform generalization risk with equality, and the resulting bound is not vacuous even if the learning algorithm is deterministic. By contrast, the mutual information $I(\mathbf{S}_m; \mathbf{H})$ may yield vacuous bounds when $\mathcal{L}$ is deterministic and both $\mathcal{Z}$ and $\mathcal{H}$ are uncountable.

For concreteness, we illustrate how to compute the uniform generalization risk (or, equivalently, the variational information) on two simple examples. Here, $\mathrm{Binom}(m, \phi)$ denotes the binomial distribution with m trials and success probability $\phi$. The first example is a special case of a more general theorem that will be presented later in Section 5.2.

Example 1.

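Suppose that observations $\mathbf{Z}_i \in \{0, 1\}$ are i.i.d. Bernoulli trials with $p(\mathbf{Z}_i = 1) = \phi$, and that the hypothesis produced by $\mathcal{L}$ is the empirical average $\mathbf{H} = \frac{1}{m}\sum_{i=1}^{m} \mathbf{Z}_i$. Because $p(\mathbf{H} = k/m) = \binom{m}{k}\phi^k (1 - \phi)^{m-k}$ and $p(\hat{\mathbf{Z}} = 1 \mid \mathbf{H} = k/m) = k/m$, it can be shown that the uniform generalization risk of this learning algorithm is given by the following quantity, assuming that $m\phi$ is an integer:

$$\mathcal{J}(\hat{\mathbf{Z}}; \mathbf{H}) = 2\binom{m-1}{m\phi}\, \phi^{m\phi + 1} (1 - \phi)^{m(1-\phi)}.$$

Among the values of $\phi$ for which $m\phi$ is an integer, this is maximized when $\phi = 1/2$, in which case the uniform generalization risk can be bounded using the Stirling approximation [27] by $1/\sqrt{2\pi m}$ up to a first-order term.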

Proof.

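First, the probability that we obtain a hypothesis $\mathbf{H} = k/m$, where $k \in \{0, 1, \ldots, m\}$, given m Bernoulli trials has a binomial distribution:

$$p(\mathbf{H} = k/m) = \binom{m}{k} \phi^k (1 - \phi)^{m-k}.$$

We use the identity:

$$\mathcal{J}(\hat{\mathbf{Z}}; \mathbf{H}) = \mathbb{E}_{\mathbf{H}}\, \big\|p(\hat{\mathbf{Z}}) - p(\hat{\mathbf{Z}} \mid \mathbf{H})\big\|_{\mathcal{T}}.$$

Here, $\hat{\mathbf{Z}}$ is Bernoulli with probability of success $\phi$, while $\hat{\mathbf{Z}}$ given $\mathbf{H} = k/m$ is Bernoulli with probability of success $k/m$. The total variation distance between the two Bernoulli distributions is $|\phi - k/m|$. So, we obtain:

$$\mathcal{J}(\hat{\mathbf{Z}}; \mathbf{H}) = \sum_{k=0}^{m} \binom{m}{k} \phi^k (1 - \phi)^{m-k}\, \Big|\phi - \frac{k}{m}\Big| = \frac{1}{m}\, \mathbb{E}\, |\mathbf{K} - m\phi|,$$

where $\mathbf{K}$ is a binomial random variable with m trials and success probability $\phi$. This is the mean absolute deviation of $\mathbf{K}$, divided by m. Assuming $m\phi$ is an integer, the mean deviation of the binomial random variable is given by de Moivre’s formula:

$$\mathbb{E}\, |\mathbf{K} - m\phi| = 2m \binom{m-1}{m\phi}\, \phi^{m\phi + 1} (1 - \phi)^{m(1-\phi)}.$$

Among such values of $\phi$, the mean deviation is maximized when $\phi = 1/2$. This gives us:

$$\mathcal{J}(\hat{\mathbf{Z}}; \mathbf{H}) = \frac{1}{2^m} \binom{m-1}{m/2} = \frac{1}{2^{m+1}} \binom{m}{m/2} \approx \frac{1}{\sqrt{2\pi m}},$$

where in the last step we expanded the binomial coefficient and used Stirling’s approximation [27]. □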
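The closed forms in Example 1 can be checked against the defining sum. The sketch below compares three things: the sum $\sum_k \binom{m}{k}\phi^k(1-\phi)^{m-k}|\phi - k/m|$, de Moivre’s closed form when $m\phi$ is an integer, and, at $\phi = 1/2$, the Stirling estimate $1/\sqrt{2\pi m}$. The function names are our own.

```python
from math import comb, pi, sqrt


def vi_sum(m, phi):
    """J(Z_hat; H) = sum_k C(m, k) phi^k (1 - phi)^(m - k) |phi - k/m|."""
    return sum(comb(m, k) * phi ** k * (1 - phi) ** (m - k) * abs(phi - k / m)
               for k in range(m + 1))


def vi_de_moivre(m, phi):
    """Closed form via de Moivre's formula; valid only when m * phi is an integer."""
    mu = round(m * phi)
    return 2 * comb(m - 1, mu) * phi ** (mu + 1) * (1 - phi) ** (m - mu)


for m in (10, 100, 1000):
    exact = comb(m, m // 2) / 2 ** (m + 1)   # value at phi = 1/2 (m even)
    print(m, exact, 1 / sqrt(2 * pi * m), exact * sqrt(2 * pi * m))
```

The last column approaches 1 from below, consistent with $\frac{1}{2^{m+1}}\binom{m}{m/2} \approx 1/\sqrt{2\pi m}$.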

Example 2.

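Suppose that the domain is $\mathcal{Z} = \{1, 2, \ldots, K\}$ for some $K \ge 2$, where $p(\mathbf{Z} = k) = 1/K$ for all $k \in \mathcal{Z}$. Let the hypothesis space be $\mathcal{H} = \mathcal{Z}$, where $p(\mathbf{H} = k \mid \mathbf{S}_m)$ is equal to the fraction of times the value k is observed in the training sample. For example, if $\mathbf{S}_4 = \{1, 1, 1, 2\}$, the hypothesis $\mathbf{H}$ is chosen among the set $\{1, 2\}$ with the respective probabilities $\{3/4, 1/4\}$. Then, the variational information is given by:

$$\mathcal{J}(\hat{\mathbf{Z}}; \mathbf{H}) = \frac{1}{m}\Big(1 - \frac{1}{K}\Big).$$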

Proof.

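We have $p(\mathbf{H} = h) = 1/K$ by symmetry for all $h \in \mathcal{Z}$. Let $z, h \in \mathcal{Z}$. By Bayes rule, and because $p(\hat{\mathbf{Z}} = z) = 1/K$ as well, we have:

$$p(\hat{\mathbf{Z}} = z \mid \mathbf{H} = h) = \frac{p(\mathbf{H} = h \mid \hat{\mathbf{Z}} = z)\, p(\hat{\mathbf{Z}} = z)}{p(\mathbf{H} = h)} = p(\mathbf{H} = h \mid \hat{\mathbf{Z}} = z).$$

However, given one observation $\hat{\mathbf{Z}} = z$, the probability of selecting a hypothesis $\mathbf{H} = h$ depends on two cases:

$$p(\mathbf{H} = h \mid \hat{\mathbf{Z}} = z) = \begin{cases} q, & h = z, \\ r, & h \neq z, \end{cases}$$

for some values q and r such that $q + (K - 1)\, r = 1$. To find q, we use the definition of $\mathcal{L}$:

$$q = \frac{1}{m} + \Big(1 - \frac{1}{m}\Big)\frac{1}{K}.$$

This holds because $\mathcal{L}$ is equivalent to an algorithm that selects a single observation in the set $\mathbf{S}_m$ uniformly at random. With probability 1/m, it selects the same training example as $\hat{\mathbf{Z}}$; otherwise, it selects an independent observation drawn from $\mathcal{D}$. So, to satisfy the condition $q + (K - 1)\, r = 1$, we have:

$$r = \frac{1 - q}{K - 1} = \Big(1 - \frac{1}{m}\Big)\frac{1}{K}.$$

Now, we are ready to find the desired expression:

$$\mathcal{J}(\hat{\mathbf{Z}}; \mathbf{H}) = \mathbb{E}_{\mathbf{H}}\, \big\|p(\hat{\mathbf{Z}}) - p(\hat{\mathbf{Z}} \mid \mathbf{H})\big\|_{\mathcal{T}} = \frac{1}{2}\Big[\Big(q - \frac{1}{K}\Big) + (K - 1)\Big(\frac{1}{K} - r\Big)\Big] = q - \frac{1}{K} = \frac{1}{m}\Big(1 - \frac{1}{K}\Big).$$

□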
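The formula in Example 2 can be confirmed by brute force for small m and K. The sketch below enumerates every sample in $\{1, \ldots, K\}^m$. For each sample, it treats $\mathbf{H}$ and $\hat{\mathbf{Z}}$ as two independent uniform draws from the sample, and it accumulates the exact joint pmf with rational arithmetic. The function name is our own.

```python
import itertools
from fractions import Fraction


def vi_example2(m, K):
    """Exact J(Z_hat; H) for Example 2, by enumerating every sample in {1..K}^m.

    H is drawn uniformly from the training sample, and Z_hat is an independent
    uniform draw from the same sample, so each index pair has probability 1/m^2.
    """
    p_sample = Fraction(1, K ** m)
    joint = {}
    for sample in itertools.product(range(1, K + 1), repeat=m):
        for z in sample:          # value of Z_hat
            for h in sample:      # value of H
                joint[(z, h)] = joint.get((z, h), 0) + p_sample / (m * m)
    marginal = Fraction(1, K)     # both Z_hat and H are uniform on {1..K}
    return sum(abs(joint.get((z, h), 0) - marginal * marginal)
               for z in range(1, K + 1) for h in range(1, K + 1)) / 2


for m, K in [(1, 2), (2, 3), (3, 2), (4, 3)]:
    print(m, K, vi_example2(m, K), Fraction(K - 1, K * m))
```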

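Note that the variational information in Example 2 is $\mathcal{O}(1/m)$, which is smaller than the variational information in Example 1. This is not a coincidence. The difference between the two examples is related to data processing. Specifically, suppose that $K = 2$ in Example 2 (after relabeling the domain as $\{0, 1\}$) and let $\mathbf{H}_2$ be its hypothesis. Let $\mathbf{H}_1$ be the hypothesis in Example 1 with $\phi = 1/2$. Then, we have the Markov chain $\hat{\mathbf{Z}} \to \mathbf{H}_1 \to \mathbf{H}_2$ because, given $\mathbf{H}_1$, $\mathbf{H}_2$ is Bernoulli with parameter $\mathbf{H}_1$. Hence, $\mathcal{J}(\hat{\mathbf{Z}}; \mathbf{H}_2) \le \mathcal{J}(\hat{\mathbf{Z}}; \mathbf{H}_1)$ by the data processing inequality.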

4.4. Learning Capacity

The variational information $\mathcal{J}(\hat{\mathbf{Z}}; \mathbf{H})$ depends on the distribution of observations $\mathcal{D}$, which is seldom known in practice. To construct a distribution-free bound on the uniform generalization risk, we introduce the following quantity:

Definition 6

(Learning Capacity). The learning capacity of an algorithm $\mathcal{L}$ is defined by:

$$C(\mathcal{L}) = \sup_{\mathcal{D}}\, \mathcal{J}(\hat{\mathbf{Z}}; \mathbf{H}),$$

where $\hat{\mathbf{Z}}$ and $\mathbf{H}$ are as defined in Theorem 2.

The above quantity is analogous to the Shannon channel capacity, except that it is measured in total variation distance. It quantifies the capacity for overfitting of the given learning algorithm. For example, the learning capacity of the algorithm in Example 1 is $1/\sqrt{2\pi m}$ up to a first-order term, as shown earlier, so its capacity for overfitting is larger than that of the learning algorithm in Example 2.
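For the learner in Example 1, the input distribution is determined by $\phi$, so the supremum in Definition 6 can be approximated by a grid search over $\phi \in [0, 1]$. The sketch below is an approximation under that assumption: the grid resolution is arbitrary, and the function names are our own.

```python
from math import comb, pi, sqrt


def vi_example1(m, phi):
    """J(Z_hat; H) for the empirical-average learner of Example 1 (any phi in [0, 1])."""
    return sum(comb(m, k) * phi ** k * (1 - phi) ** (m - k) * abs(phi - k / m)
               for k in range(m + 1))


def capacity_on_grid(m, n_grid=200):
    """Approximate C(L) = sup over Bernoulli(phi) inputs by a grid search over phi."""
    return max((vi_example1(m, i / n_grid), i / n_grid) for i in range(n_grid + 1))


for m in (10, 100, 400):
    cap, phi_star = capacity_on_grid(m)
    print(f"m={m}: grid sup ~ {cap:.5f} at phi={phi_star:.3f}; "
          f"1/sqrt(2 pi m) = {1 / sqrt(2 * pi * m):.5f}")
```

When $m\phi$ is not an integer, the maximizer need not sit exactly at $\phi = 1/2$. For m = 2, for instance, $\phi = 0.4$ gives 0.288, which exceeds the value 0.25 at $\phi = 1/2$. This is why the learning capacity matches $1/\sqrt{2\pi m}$ only up to a first-order term.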

Theorem 2 reveals that $C(\mathcal{L})$ has at least three equivalent interpretations:

- Statistical: The learning capacity is equal to the supremum of the expected generalization risk across all input distributions and all bounded parametric losses. This holds by Theorem 2 and Definition 6.

- Information-Theoretic: The learning capacity is equal to the amount of information, measured by the variational information, that the hypothesis contains about a single training example. This holds because $C(\mathcal{L}) = \sup_{\mathcal{D}} \mathcal{J}(\hat{\mathbf{Z}}; \mathbf{H})$.

- Algorithmic: The learning capacity measures the influence of a single training example $\hat{\mathbf{Z}}$ on the distribution of the final hypothesis $\mathbf{H}$. As such, a learning algorithm has a small learning capacity if and only if it is algorithmically stable in this sense. This follows from the fact that $\mathcal{J}(\hat{\mathbf{Z}}; \mathbf{H}) = \mathbb{E}_{\hat{\mathbf{Z}}}\, \|p(\mathbf{H}) - p(\mathbf{H} \mid \hat{\mathbf{Z}})\|_{\mathcal{T}}$.

Throughout the sequel, we analyze the properties of $C(\mathcal{L})$ and derive upper bounds for it under various conditions, such as in the finite hypothesis space setting and under differential privacy.

4.5. The Definition of Hypothesis

In the proof of Theorem 2, the Markov chain $\mathbf{S}_m \to \mathbf{H} \to \mathbf{L}$ is used. Essentially, this states that the loss function $\mathbf{L} = l(\cdot, \mathbf{H})$, which is itself a random variable, must be parameterized entirely by the hypothesis $\mathbf{H}$, as stated in Definition 3. We list, next, a few examples that highlight this point.

Example 3

(Input Normalization). If the data is normalized prior to training, such as using min-max or z-score normalization, then the normalization parameters must be included in the definition of the hypothesis $\mathbf{H}$.

Example 4

(Feature Selection). If the observations $\mathbf{Z} \in \mathbb{R}^d$ comprise d features and feature selection is implemented prior to training a model (such as in classification or clustering), then the hypothesis is the composition $\mathbf{H} = (\mathbf{F}, \mathbf{G})$, where $\mathbf{F}$ encodes the set of features that have been selected by the feature selection algorithm and $\mathbf{G}$ is the model trained on those features.

Example 5

(Cross Validation). Hyper-parameter tuning is a common practice in machine learning. This includes choosing the tradeoff parameter C in support vector machines (SVM) [28] or the bandwidth γ in radial basis function (RBF) networks [29]. However, not all hyper-parameters are encoded in the hypothesis $\mathbf{H}$. For instance, the tradeoff constant C is never used during prediction, so it is omitted from the definition of $\mathbf{H}$, but the bandwidth parameter γ is included if it is selected based on the training sample.

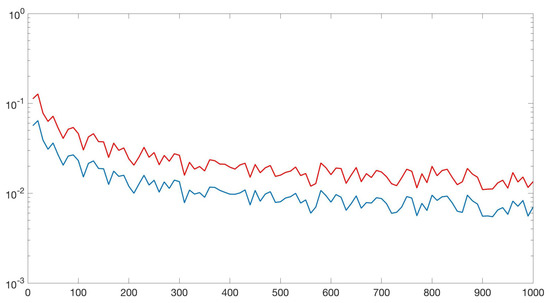

In order to illustrate why the Markov chain $\mathbf{S}_m \to \mathbf{H} \to \mathbf{L}$ is important, consider the following simple scenario. Suppose we have a mixture of two Gaussians in $\mathbb{R}^d$, one corresponding to the positive class and one corresponding to the negative class. If z-score normalization is applied before training a linear classifier, then the generalization risk might increase with normalization because the final hypothesis now includes more information about the training sample (see Lemma 2). Figure 1 shows this effect when $d = 1$. As illustrated in the figure, normalization is often important in order to assign equal weights to all features, but it can increase the generalization risk as well.

Figure 1.

This figure corresponds to a classification problem in one dimension in which a classifier is a threshold between positive and negative examples. In this figure, the x-axis is the number of training examples, while the y-axis is the generalization risk. The red curve (top) corresponds to the difference between training and test accuracy when z-score normalization is applied before learning a classifier. The blue curve (bottom) corresponds to the difference between training and test accuracy when the data is not normalized.

4.6. Concentration

The notion of uniform generalization in Definition 4 provides in-expectation guarantees. In this section, we show that whereas traditional generalization in expectation does not imply concentration, uniform generalization in expectation does. Specifically, we will use the chain rule in Theorem 1 to derive a Markov-type inequality. After that, we show that the bound is tight.

We begin by showing why non-uniform generalization in expectation does not imply concentration.

Proposition 2.

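There exists a learning algorithm $\mathcal{L}$ and a parametric loss $l$ such that the expected generalization risk is $R_{\mathrm{gen}}(\mathcal{L}) = 0$ even though $\Pr\big\{|R_{\mathrm{emp}}(\mathbf{H}; \mathbf{S}_m) - R(\mathbf{H})| = \frac{1}{2}\big\} = 1$, where the probability is evaluated over the randomness of $\mathbf{S}_m$ and the internal randomness of $\mathcal{L}$.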

Proof.

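Let $\mathcal{X}$ be an instance space with a continuous marginal density $p(x)$ and let $\mathcal{Y} = \{-1, +1\}$ be the target set. Let $h^\star: \mathcal{X} \to \mathcal{Y}$ be some fixed predictor such that $p\{h^\star(\mathbf{X}) = +1\} = \frac{1}{2}$, where the probability is evaluated over the random choice of $\mathbf{X} \in \mathcal{X}$. In other words, the marginal distribution of the labels predicted by $h^\star$ is uniform over the set $\mathcal{Y}$. These assumptions are satisfied, for example, if $\mathbf{X}$ is uniform in $[-1, 1]$ and $h^\star(x) = \mathrm{sign}(x)$.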

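Next, let the hypothesis space $\mathcal{H}$ be the set of predictors from $\mathcal{X}$ to $\mathcal{Y}$ that output a label in $\mathcal{Y}$ uniformly at random everywhere in $\mathcal{X}$ except at a finite number of points. Define the parametric loss by $l(\mathbf{X}, \mathbf{H}) = \mathbb{I}\{\mathbf{H}(\mathbf{X}) \neq h^\star(\mathbf{X})\}$.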

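Next, we construct a learning algorithm $\mathcal{L}$ that generalizes perfectly in expectation but does not generalize in probability. The learning algorithm simply picks $\mathbf{H} \in \{h_{+}, h_{-}\}$ at random with equal probability. The two hypotheses are:

$$h_{+}(x) = \begin{cases} h^\star(x), & x \in \mathbf{S}_m, \\ \text{uniform in } \mathcal{Y}, & x \notin \mathbf{S}_m, \end{cases} \qquad h_{-}(x) = \begin{cases} -h^\star(x), & x \in \mathbf{S}_m, \\ \text{uniform in } \mathcal{Y}, & x \notin \mathbf{S}_m. \end{cases}$$

Because $p(x)$ is a continuous density, the probability of seeing the same observation twice is zero, so $R(\mathbf{H}) = \frac{1}{2}$ for this learning algorithm. Thus:

$$R_{\mathrm{gen}}(\mathcal{L}) = \mathbb{E}\big[R(\mathbf{H}) - R_{\mathrm{emp}}(\mathbf{H}; \mathbf{S}_m)\big] = \frac{1}{2} - \Big(\frac{1}{2}\cdot 0 + \frac{1}{2}\cdot 1\Big) = 0.$$

However, the empirical risk for any $\mathbf{S}_m$ satisfies $R_{\mathrm{emp}}(\mathbf{H}; \mathbf{S}_m) \in \{0, 1\}$, while the true risk always satisfies $R(\mathbf{H}) = \frac{1}{2}$, as mentioned earlier. Hence, the statement of the proposition follows. □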
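The construction in Proposition 2 can be simulated directly. The sketch below uses the example setting from the proof, $\mathbf{X}$ uniform in $[-1, 1]$ and $h^\star(x) = \mathrm{sign}(x)$, and it estimates $R(\mathbf{H})$ by Monte Carlo on fresh points. The sample size, the number of runs, and the function names are illustrative assumptions. The signed gaps average out to roughly zero, which is the in-expectation guarantee, yet every individual run has a gap of about 1/2 in absolute value.

```python
import random


def h_star(x):
    """Fixed predictor from the proof: sign(x), with labels in {-1, +1}."""
    return 1 if x >= 0 else -1


def run_once(m, rng, n_test=3_000):
    """Return R(H) - R_emp(H; S_m) for one run, with R(H) estimated on fresh points."""
    sample = [rng.uniform(-1.0, 1.0) for _ in range(m)]
    k = rng.choice((+1, -1))                       # selects h_+ (k = +1) or h_- (k = -1)
    memorized = {x: k * h_star(x) for x in sample}

    def hypothesis(x):
        # Memorized label on training points, a fresh uniformly random label elsewhere.
        return memorized[x] if x in memorized else rng.choice((-1, +1))

    emp_risk = sum(hypothesis(x) != h_star(x) for x in sample) / m
    fresh = [rng.uniform(-1.0, 1.0) for _ in range(n_test)]
    true_risk = sum(hypothesis(x) != h_star(x) for x in fresh) / n_test
    return true_risk - emp_risk


rng = random.Random(0)
gaps = [run_once(20, rng) for _ in range(300)]
print("average gap:", sum(gaps) / len(gaps))            # near 0 (generalization in expectation)
print("smallest |gap|:", min(abs(g) for g in gaps))     # near 1/2 in every run
```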

There are many ways of seeing why the algorithm in Proposition 2 does not generalize uniformly in expectation. The simplest way is to use the equivalence between uniform generalization and variational information stated in Theorem 2. Given the hypothesis $\mathbf{H}$ that is learned by the algorithm constructed in the proposition, the conditional distribution of an individual training example $\hat{\mathbf{Z}}$ is uniform over the sample $\mathbf{S}_m$. This follows from the fact that the hypothesis $\mathbf{H}$ has to encode the entire sample $\mathbf{S}_m$. However, the marginal distribution $p(\hat{\mathbf{Z}})$ has a continuous density (by construction), so it assigns zero probability to the finite set $\mathbf{S}_m$. Hence, $\|p(\hat{\mathbf{Z}}) - p(\hat{\mathbf{Z}} \mid \mathbf{H})\|_{\mathcal{T}} = 1$. This shows that $\mathcal{J}(\hat{\mathbf{Z}}; \mathbf{H}) = 1$.

The example in Proposition 2 reveals an interesting property of non-uniform generalization. Namely, non-uniform generalization can be sensitive to every bit of information provided by the hypothesis. In the example above, the hypothesis is encoded by the pair , where determines which of the two hypotheses is selected. The discrepancy between generalization in expectation and generalization in probability happens because is added into the hypothesis.

Next, we use the chain rule in Theorem 1 to prove that uniform generalization, on the other hand, is a robust property of learning algorithms. More precisely, if has a finite domain, then a hypothesis generalizes uniformly in expectation if and only if the pair generalizes uniformly in expectation. Hence, adding any finite amount of information (in bits) to a hypothesis cannot alter its uniform generalization property in a significant way.

Theorem 3.

Letbe a learning algorithm whose hypothesis is. Letbe a different hypothesis that is obtained from the same sample. If, then:

Proof.

The proof is in Appendix D. □

Next, we use Theorem 3 to prove that uniform generalization in expectation implies generalization in probability. The proof is by contradiction. Suppose that a hypothesis generalizes uniformly in expectation but that some parametric loss does not generalize in probability. We append a small amount of information to the hypothesis: whether the empirical risk w.r.t. that loss is greater than, approximately equal to, or less than the true risk w.r.t. the same loss. This information can be described using at most two bits, so, by Theorem 3, the augmented hypothesis still generalizes uniformly in expectation. However, knowing this additional information, we can define a new parametric loss that does not generalize in expectation, which contradicts the definition of uniform generalization.

Theorem 4.

Letbe a learning algorithm, whose risk is evaluated using a parametric loss. Then:

where the probability is evaluated over the random choice ofand the internal randomness of.

Proof.

Let be a parametric loss function and write:

Consider the new pair of hypotheses , where:

Then, by Theorem 3, the uniform generalization risk in expectation for the composition of hypotheses is bounded by . This holds uniformly across all parametric loss functions that satisfy the Markov chain . Next, consider the parametric loss:

Note that is parametric with respect to the composition of hypotheses . Using Equation (12), the generalization risk w.r.t in expectation is, at least, as large as . Therefore, by Theorems 2 and 3, we have , which is the statement of the theorem (Note: The proof assumes that the loss function has access to the underlying distribution. This assumption is valid because the underlying distribution is fixed and does not depend on any random outcomes, such as or ). □

Theorem 4 reveals that uniform generalization is sufficient for concentration to hold. Importantly, the generalization bound depends on the learning algorithm only via its variational information. Hence, by controlling the uniform generalization risk, one controls the generalization risk both in expectation and with high probability.

The same proof technique used in Theorem 4 also implies the following concentration bound, which is useful when where is the Shannon mutual information. The following bound is similar to the bound derived by [23] using properties of sub-Gaussian loss functions.

Proposition 3.

Letbe a learning algorithm, whose risk is evaluated using a parametric loss function. Then:

Proof.

The proof is in Appendix E. □

Note that having a vanishing mutual information, i.e., , which is the setting recently considered in the work of [23], is a strictly stronger condition than uniform generalization. For instance, we will later construct deterministic learning algorithms that generalize uniformly in expectation even though is unbounded (see Theorem 8). By contrast, is sufficient for to hold.

Finally, we note that the concentration bound depends linearly on the variational information. Typically, the variational information decays at the rate $O(1/\sqrt{m})$. By contrast, the VC bound provides an exponential decay in m [3,17]. Can the concentration bound in Theorem 4 be improved? The following proposition answers this question in the negative.

Proposition 4.

For any rational, there exists a learning algorithm, a distribution, and a parametric losssuch that:

where the probability is evaluated over the random choice ofand the internal randomness of.

Proof.

The proof is in Appendix F. □

Proposition 4 shows that, without making any additional assumptions beyond that of uniform generalization, the concentration bound in Theorem 4 is tight up to constant factors. Essentially, the only difference between the upper and the lower bounds is a vanishing term that is independent of .

5. Properties of the Learning Capacity

In this section, we derive bounds on the learning capacity under various settings. We also describe some of its important properties.

5.1. Data Processing

The relationship between learning capacity and data processing is presented in Lemma 1. Given the random variables $x$, $y$, and $z$ and the Markov chain $x \to y \to z$, we always have $\mathcal{J}(x; z) \le \mathcal{J}(x; y)$. Hence, we have a partial order on learning algorithms. This presents us with an important qualitative insight into the design of machine learning algorithms.

Suppose we have two different hypotheses $h_1$ and $h_2$. We will say that $h_2$ contains less information than $h_1$ if the Markov chain $\mathbf{S}_m \to h_1 \to h_2$ holds. For example, if the observations are Bernoulli trials, then $h_1$ can be the empirical average as given in Example 1 while $h_2$ can be the label that occurs most often in the training set. Because $h_2$ is a function of $h_1$, the hypothesis $h_2$ contains strictly less information about the original training set than $h_1$. Formally, the variational information of $h_2$ is no larger than that of $h_1$. In this case, $h_2$ enjoys a better uniform generalization bound because of data processing. Intuitively, we know that such a result should hold because $h_2$ is less dependent on the original training set than $h_1$. Hence, one can improve the uniform generalization bound (or equivalently the learning capacity) of a learning algorithm by post-processing its hypothesis in a manner that is conditionally independent of the original training set given $h$.
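To make the Bernoulli example concrete, the following Python sketch computes, exactly, the variational information between a single training example and each of the two hypotheses, i.e., the expected total variation distance between $p(z)$ and $p(z \mid h)$. It assumes Bernoulli($\phi$) observations and breaks ties in the majority vote towards label 0; all function names are hypothetical. Because both hypotheses depend on the sample only through the number of ones k, the computation can be carried out over the binomial distribution of k.

```python
from math import comb


def binomial_pmf(m, phi):
    """P(k ones among m i.i.d. Bernoulli(phi) observations) for k = 0, ..., m."""
    return [comb(m, k) * phi**k * (1 - phi) ** (m - k) for k in range(m + 1)]


def variational_information(m, phi, hypothesis):
    """Exact E_h |P(z_trn = 1 | h) - phi| for Bernoulli(phi) observations.

    z_trn is a training example drawn uniformly from the sample, so
    P(z_trn = 1 | h) = E[k/m | h]. For two-point distributions the total
    variation distance reduces to an absolute difference. `hypothesis(k, m)`
    sees the sample only through the count k of ones.
    """
    mass, ones = {}, {}
    for k, pk in enumerate(binomial_pmf(m, phi)):
        h = hypothesis(k, m)
        mass[h] = mass.get(h, 0.0) + pk           # P(h)
        ones[h] = ones.get(h, 0.0) + pk * k / m   # P(h) * E[k/m | h]
    return sum(mass[h] * abs(ones[h] / mass[h] - phi) for h in mass)


def empirical_average(k, m):
    return k / m


def majority_label(k, m):
    return int(2 * k > m)  # ties (possible only for even m) go to label 0


for m in (5, 11, 51):
    j_avg = variational_information(m, 0.3, empirical_average)
    j_maj = variational_information(m, 0.3, majority_label)
    print(f"m={m}: empirical average {j_avg:.4f}, majority label {j_maj:.4f}")
```

In every row, the majority label should have a variational information no larger than that of the empirical average, as the data processing inequality predicts.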

Example 6.

Post-processing hypotheses is a common technique in machine learning. This includes sparsifying the coefficient vector $w$ in linear methods, where a coefficient $w_j$ is set to zero if it has a small absolute magnitude. It also includes methods that have been proposed to reduce the number of support vectors in SVM by exploiting linear dependence [30], and some methods for decision tree pruning. By the data processing inequality, such techniques can only reduce (never increase) the learning capacity and, as a consequence, mitigate the risk of overfitting.

Needless to say, better generalization does not immediately translate into a smaller true risk. This is because the empirical risk itself may increase when the hypothesis is post-processed independently of the original training sample.
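A minimal sketch of the sparsification step mentioned in Example 6, assuming hard thresholding with a threshold that is fixed before seeing the data (the function name and the threshold value are illustrative). Because the output depends on the training sample only through $w$, it is a post-processing step of the kind covered by the data processing inequality.

```python
def sparsify(w, threshold):
    """Hard-threshold a coefficient vector: entries with |w_j| < threshold become 0.

    `threshold` is fixed in advance, so the output depends on the training
    sample only through w (a post-processing step S_m -> w -> sparsify(w)).
    """
    return [0.0 if abs(wj) < threshold else wj for wj in w]


print(sparsify([0.8, -0.02, 0.3, 0.001], threshold=0.05))  # [0.8, 0.0, 0.3, 0.0]
```

If the threshold were instead tuned on the same training sample, the Markov chain above would no longer hold, and the data processing argument would not apply directly.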

5.2. Effective Domain Size

Next, we look into how the size of the domain limits the learning capacity. First, we start with the following definition:

Definition 7

(Lazy Learning). A learning algorithm $\mathcal{L}$ is called lazy if the training sample $\mathbf{S}_m$ can be reconstructed perfectly from the hypothesis $h$. In other words, $H(\mathbf{S}_m \mid h) = 0$, where H is the Shannon entropy. Equivalently, the mapping from $\mathbf{S}_m$ to $h$ is injective.

One common example of a lazy learner is instance-based learning when $h = \mathbf{S}_m$. Despite their simple nature, lazy learners are useful in practice, and they are useful theoretical tools as well. In particular, because $H(\mathbf{S}_m \mid h) = 0$ and because of the data processing inequality, the learning capacity of a lazy learner provides an upper bound on the learning capacity of any possible learning algorithm. Therefore, we can relate the learning capacity to the size of the domain by determining the learning capacity of lazy learners. Because the size of $\mathcal{Z}$ is usually infinite, we introduce the following definition of effective set size.

Definition 8.

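In a countable space $\mathcal{Z}$ endowed with a probability mass function $p(z)$, the effective size of $\mathcal{Z}$ w.r.t. $p(z)$ is defined by: $\mathrm{Ess}[\mathcal{Z}] = 1 + \big(\sum_{z \in \mathcal{Z}} \sqrt{p(z)(1 - p(z))}\big)^2$.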

At one extreme, if $p(z)$ is uniform over a finite alphabet $\mathcal{Z}$, then $\mathrm{Ess}[\mathcal{Z}] = |\mathcal{Z}|$. At the other extreme, if $p(z)$ is a Kronecker delta distribution, then $\mathrm{Ess}[\mathcal{Z}] = 1$. As proved next, this notion of effective set size determines the rate of convergence of an empirical probability mass function to its true distribution when the distance is measured in the total variation sense. As a result, it allows us to relate the learning capacity to a property of the domain $\mathcal{Z}$.
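The following Python sketch evaluates the effective size for a finite probability mass function. It assumes that Definition 8 reads $\mathrm{Ess}[\mathcal{Z}] = 1 + \big(\sum_{z} \sqrt{p(z)(1 - p(z))}\big)^2$, a form that reproduces both extremes described above; the function name is hypothetical.

```python
import math


def effective_size(pmf):
    """Effective size 1 + (sum_z sqrt(p(z) (1 - p(z))))^2 of a finite pmf."""
    s = sum(math.sqrt(p * (1.0 - p)) for p in pmf)
    return 1.0 + s * s


print(effective_size([1 / 8] * 8))      # uniform over 8 symbols: approximately 8
print(effective_size([1.0, 0.0, 0.0]))  # Kronecker delta: 1.0
print(effective_size([0.3, 0.7]))       # Bernoulli(0.3): approximately 1 + 4 * 0.3 * 0.7 = 1.84
```

Any non-uniform distribution on a finite alphabet gives a value strictly between these extremes, e.g., $[0.5, 0.25, 0.125, 0.125]$ yields roughly 3.54 rather than 4.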

Theorem 5.

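Let $\mathcal{Z}$ be a countable space endowed with a probability mass function $p(z)$. Let $\mathbf{S}_m$ be a set of m i.i.d. observations $z_i \sim p(z)$. Define $p_{\mathbf{S}_m}(z)$ to be the empirical probability mass function that results from drawing observations uniformly at random from $\mathbf{S}_m$. Then, asymptotically as $m \to \infty$:

$$\mathbb{E}_{\mathbf{S}_m} \big\| p(z) - p_{\mathbf{S}_m}(z) \big\|_{TV} \sim \sqrt{\frac{\mathrm{Ess}[\mathcal{Z}] - 1}{2\pi m}},$$

where $\mathrm{Ess}[\mathcal{Z}]$ is the effective size of $\mathcal{Z}$ (see Definition 8).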

Proof.

The proof is in Appendix G. □

A special case of Theorem 5 was proved by de Moivre in the 1730s, who showed that the empirical mean of i.i.d. Bernoulli trials with a probability of success $\phi$ converges to the true mean at the rate $\sqrt{2\phi(1-\phi)/(\pi m)}$. This is believed to be the first appearance of the square-root law in statistical inference [31]. Because the effective domain size of the Bernoulli distribution, according to Definition 8, is given by $1 + 4\phi(1-\phi)$, Theorem 5 agrees with, and in fact generalizes, de Moivre’s result.
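The asymptotic statement of Theorem 5 can be checked by simulation. The sketch below draws m observations from a fixed, arbitrarily chosen probability mass function, averages the total variation distance between the empirical and the true mass functions over many trials, and compares the result with $\sqrt{(\mathrm{Ess}[\mathcal{Z}] - 1)/(2\pi m)}$. It assumes the effective size $1 + \big(\sum_z \sqrt{p(z)(1 - p(z))}\big)^2$; the distribution, the sample size, and the function names are illustrative.

```python
import math
import random


def effective_size(pmf):
    s = sum(math.sqrt(p * (1.0 - p)) for p in pmf)
    return 1.0 + s * s


def mean_tv_to_empirical(pmf, m, trials=1000, seed=0):
    """Monte Carlo estimate of E ||p - p_S||_TV, where p_S is the empirical
    pmf of m i.i.d. draws from pmf."""
    rng = random.Random(seed)
    symbols = range(len(pmf))
    total = 0.0
    for _ in range(trials):
        counts = [0] * len(pmf)
        for z in rng.choices(symbols, weights=pmf, k=m):
            counts[z] += 1
        total += 0.5 * sum(abs(c / m - p) for c, p in zip(counts, pmf))
    return total / trials


pmf = [0.5, 0.3, 0.15, 0.05]  # arbitrary example distribution
m = 500
simulated = mean_tv_to_empirical(pmf, m)
predicted = math.sqrt((effective_size(pmf) - 1) / (2 * math.pi * m))
print(f"simulated {simulated:.4f}, Theorem 5 prediction {predicted:.4f}")
```

For this choice of distribution and sample size, the two numbers should agree closely; since Theorem 5 is asymptotic, the agreement is expected to improve as m grows.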

Corollary 1.

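Let $\mathcal{L}$ be a learning algorithm whose hypothesis is $h$. Then, the variational information between a single training example and $h$ is asymptotically at most $\sqrt{(\mathrm{Ess}[\mathcal{Z}] - 1)/(2\pi m)}$. Moreover, the bound is achieved by lazy learners.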

Proof.

Let be the hypothesis produced by a lazy learner. The simplest example is if is equal to the training sample itself. Then, we always have the Markov chain for any hypothesis . Therefore, by the data processing inequality, we have . By Theorem 5, we have:

Hence, the statement of the corollary follows. □

Corollary 2.

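For any learning algorithm $\mathcal{L}$ on a finite domain $\mathcal{Z}$, the variational information between a single training example and $h$ is asymptotically at most $\sqrt{(|\mathcal{Z}| - 1)/(2\pi m)}$.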

Proof.

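The function $\sum_{z \in \mathcal{Z}} \sqrt{p(z)(1 - p(z))}$ is both concave over the probability simplex and permutation-invariant, and $\mathrm{Ess}[\mathcal{Z}]$ is an increasing function of it. Hence, by symmetry, the maximum effective domain size is achieved at the uniform distribution $p(z) = 1/|\mathcal{Z}|$, in which case $\mathrm{Ess}[\mathcal{Z}] = |\mathcal{Z}|$. □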

5.3. Finite Hypothesis Space

Next, we look into the role of the size of the hypothesis space. This is formalized by the following theorem.

Theorem 6.

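Let $h$ be the hypothesis produced by a learning algorithm $\mathcal{L}$ from a training sample of size m. Then:

$$\mathcal{J}(\hat z; h) \le \sqrt{\frac{H(h)}{2m}},$$

and, in particular, $\mathcal{J}(\hat z; h) \le \sqrt{\log|\mathcal{H}|/(2m)}$ when $\mathcal{H}$ is finite. Here, $\mathcal{J}(\hat z; h)$ is the variational information between a single training example $\hat z$ and $h$, and H is the Shannon entropy measured in nats.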

Proof.

If we let $I(\cdot\,;\cdot)$ be the mutual information between two r.v.’s and let $\mathbf{S}_m = (z_1, \ldots, z_m)$ be the training set, we have:

Because conditioning reduces entropy, i.e., $H(A \mid B) \le H(A)$ for any r.v.’s $A$ and $B$, we have:

Therefore:

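Next, we use Pinsker’s inequality [10], which states that for any probability measures p and q: $\|p - q\|_{TV} \le \sqrt{D(p \,\|\, q)/2}$, where $\|p - q\|_{TV}$ is the total variation distance and $D(p \,\|\, q)$ is the Kullback-Leibler divergence measured in nats. If we recall that $\mathcal{J}(\hat z; h) = \mathbb{E}_h \|p(\hat z) - p(\hat z \mid h)\|_{TV}$ while the mutual information is $I(\hat z; h) = \mathbb{E}_h D(p(\hat z \mid h) \,\|\, p(\hat z))$, we deduce from Pinsker’s inequality and Equation (13):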

□

Theorem 6 re-establishes the classical PAC result in the finite hypothesis space setting. However, unlike its typical proofs, the proof presented here is purely information-theoretic and does not rely on the union bound.
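The two ingredients of the proof can be checked numerically. The sketch below (i) tests Pinsker’s inequality on random pairs of distributions over five symbols and (ii) compares, for the majority-vote hypothesis on m Bernoulli($\phi$) observations (a finite hypothesis space with two elements), the exact variational information with $\sqrt{H(h)/(2m)}$, which is the bound produced by the Pinsker step in the proof above. The example and the function names are illustrative assumptions, not taken from the text.

```python
import math
import random
from math import comb


def normalize(weights):
    total = sum(weights)
    return [w / total for w in weights]


def tv(p, q):
    return 0.5 * sum(abs(a - b) for a, b in zip(p, q))


def kl_nats(p, q):
    return sum(a * math.log(a / b) for a, b in zip(p, q) if a > 0)


def pinsker_holds(trials=1000, size=5, seed=1):
    """Check ||p - q||_TV <= sqrt(D(p || q) / 2) on random pairs of distributions."""
    rng = random.Random(seed)
    for _ in range(trials):
        p = normalize([rng.random() + 1e-6 for _ in range(size)])
        q = normalize([rng.random() + 1e-6 for _ in range(size)])
        if tv(p, q) > math.sqrt(kl_nats(p, q) / 2) + 1e-12:
            return False
    return True


def majority_vote_check(m, phi):
    """Return (J, sqrt(H(h) / (2m))) for h = majority label of m Bernoulli(phi) draws."""
    p_one = weighted_ones = 0.0  # P(h = 1) and P(h = 1) * E[k/m | h = 1]
    for k in range(m + 1):
        pk = comb(m, k) * phi**k * (1 - phi) ** (m - k)
        if 2 * k > m:
            p_one += pk
            weighted_ones += pk * k / m
    p_zero = 1.0 - p_one
    q_one = weighted_ones / p_one             # P(z_trn = 1 | h = 1)
    q_zero = (phi - weighted_ones) / p_zero   # P(z_trn = 1 | h = 0), since E[k/m] = phi
    J = p_one * abs(q_one - phi) + p_zero * abs(q_zero - phi)
    entropy = -sum(x * math.log(x) for x in (p_one, p_zero) if x > 0)
    return J, math.sqrt(entropy / (2 * m))


print(pinsker_holds())
for m in (5, 11, 51):
    J, bound = majority_vote_check(m, 0.3)
    print(f"m={m}: J={J:.4f}, bound={bound:.4f}")
```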

5.4. Differential Privacy

Randomization reduces the risk of overfitting. One common randomization technique in machine learning is differential privacy [32,33], which addresses the goal of obtaining useful information about the sample as a whole without revealing a lot of information about any individual observation. Here, we show that differentially private learning algorithms have small learning capacities.

Definition 9

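([33]). A randomized learning algorithm $\mathcal{L}$ is $(\epsilon, \delta)$ differentially private if for any $\mathcal{O} \subseteq \mathcal{H}$ and any two samples $\mathbf{S}$ and $\mathbf{S}'$ that differ in one observation only, we have:

$$p\big(\mathcal{L}(\mathbf{S}) \in \mathcal{O}\big) \le e^{\epsilon}\, p\big(\mathcal{L}(\mathbf{S}') \in \mathcal{O}\big) + \delta.$$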

Proposition 5.

If a learning algorithmisdifferentially private, then:.

Proof.

The proof is in Appendix H. □

Not surprisingly, the differential privacy parameters $\epsilon$ and $\delta$ control the uniform generalization risk: small values of $\epsilon$ and $\delta$ lead to a reduced risk of overfitting.
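As a concrete instance of Definition 9, the sketch below checks the $(\epsilon, \delta)$ condition by brute force for randomized response on a single private bit, which reports the true bit with probability $e^{\epsilon}/(1 + e^{\epsilon})$ and flips it otherwise. It assumes a one-record sample, so any two inputs differ in at most one observation, and a finite output space, so all output sets $\mathcal{O}$ can be enumerated. Randomized response is a standard textbook mechanism, not one analyzed in this paper, and the function names are hypothetical.

```python
import math
from itertools import combinations


def randomized_response(epsilon):
    """mechanism[x][o] = P(output o | private bit x): the true bit is reported
    with probability e^eps / (1 + e^eps) and flipped otherwise."""
    keep = math.exp(epsilon) / (1.0 + math.exp(epsilon))
    return {0: {0: keep, 1: 1.0 - keep}, 1: {0: 1.0 - keep, 1: keep}}


def satisfies_dp(mechanism, epsilon, delta, tol=1e-12):
    """Brute-force check of P(M(x) in O) <= e^eps * P(M(x') in O) + delta for
    every pair of inputs x, x' and every subset O of the finite output space."""
    outputs = sorted(next(iter(mechanism.values())))
    for px in mechanism.values():
        for px_prime in mechanism.values():
            for r in range(len(outputs) + 1):
                for subset in combinations(outputs, r):
                    lhs = sum(px[o] for o in subset)
                    rhs = math.exp(epsilon) * sum(px_prime[o] for o in subset) + delta
                    if lhs > rhs + tol:
                        return False
    return True


rr = randomized_response(1.0)
print(satisfies_dp(rr, epsilon=1.0, delta=0.0))  # True
print(satisfies_dp(rr, epsilon=0.5, delta=0.0))  # False
```

The second check fails because the ratio of output probabilities equals $e^{1}$, which exceeds $e^{0.5}$; allowing a slack $\delta$ (e.g., 0.3) restores the condition for $\epsilon = 0.5$.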

5.5. Empirical Risk Minimization of 0–1 Loss Classes

Empirical risk minimization (ERM) of a stochastic loss is a popular approach for learning from data. It is often regarded as the default strategy to use, due to its simplicity, generality, and statistical efficiency [1,3,13,34]. Given a fixed hypothesis space $\mathcal{H}$, a domain $\mathcal{Z}$, and a loss function $l(z; h)$, the ERM learning rule selects the hypothesis $\hat h$ that minimizes the empirical risk:

$$\hat h = \arg\min_{h \in \mathcal{H}} \frac{1}{m} \sum_{i=1}^{m} l(z_i; h).$$

By contrast, the true risk minimizer is:

$$h^\star = \arg\min_{h \in \mathcal{H}} \mathbb{E}_{z \sim p(z)}\, l(z; h).$$

Hence, learning via ERM is justified if , for some . If such a condition holds and as the sample size m increases, the ERM learning rule is called consistent.

Uniform generalization is a sufficient condition for the consistency of empirical risk minimization (ERM). To see this, we have by definition:

From this, we conclude that:

where is the learning capacity of the empirical risk minimization rule. The last inequality follows from Theorem 2. In addition, because , we have by the Markov inequality:

Hence, the ERM learning rule is consistent if as . Next, we describe when such a condition on holds for 0–1 loss classes. To do that, we begin with two familiar definitions from statistical learning theory.

Definition 10

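(Shattered Set). Given a domain $\mathcal{Z}$, a hypothesis space $\mathcal{H}$, and a 0–1 loss function $l: \mathcal{Z} \times \mathcal{H} \to \{0, 1\}$, a set $\{z_1, \ldots, z_d\} \subseteq \mathcal{Z}$ is said to be shattered by $\mathcal{H}$ with respect to the function l if for any labeling $I \in \{0, 1\}^d$, there exists a hypothesis $h_I \in \mathcal{H}$ such that $\big(l(z_1; h_I), \ldots, l(z_d; h_I)\big) = I$.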

Example 7.

Letand let the loss function be. Then, any singleton setis shattered bysince we always have the two hypothesesand. However, no set of two points incan be shattered by. By contrast, if the hypothesis is a pairand the loss function is, then any set of two distinct examplesis shattered by the hypothesis space.

Definition 11

(VC Dimension). The VC dimension of a hypothesis space with respect to a domain and a 0–1 loss is the maximum cardinality of a set of points in that can be shattered by with respect to l.
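Definitions 10 and 11 can be checked by brute force when both the candidate points and the hypotheses are finite. In the sketch below, the threshold class $l(x; h) = \mathbb{I}\{x \ge h\}$ and the interval class $l(x; (a, b)) = \mathbb{I}\{a \le x \le b\}$ are illustrative choices, not taken from the text. Restricting the domain and the hypotheses to finite grids means the function returns the VC dimension of the restricted problem only.

```python
from itertools import combinations, product


def is_shattered(points, hypotheses, loss):
    """Definition 10: every labeling in {0,1}^d of `points` is realized by some hypothesis."""
    realized = {tuple(loss(z, h) for z in points) for h in hypotheses}
    return all(labels in realized for labels in product((0, 1), repeat=len(points)))


def vc_dimension(domain, hypotheses, loss):
    """Definition 11 restricted to a finite candidate domain and hypothesis list."""
    d = 0
    for size in range(1, len(domain) + 1):
        if any(is_shattered(s, hypotheses, loss) for s in combinations(domain, size)):
            d = size
        else:
            break  # subsets of shattered sets are shattered, so no larger set works
    return d


def threshold_loss(x, h):
    return int(x >= h)


def interval_loss(x, h):
    a, b = h
    return int(a <= x <= b)


domain = list(range(10))             # candidate points 0, 1, ..., 9
cuts = [c - 0.5 for c in range(11)]  # -0.5, 0.5, ..., 9.5
thresholds = cuts
intervals = [(a, b) for a in cuts for b in cuts if a <= b]

print(vc_dimension(domain, thresholds, threshold_loss))  # 1
print(vc_dimension(domain, intervals, interval_loss))    # 2
```

Thresholds cannot realize the labeling (1, 0) on two ordered points, and intervals cannot realize (1, 0, 1) on three, which is why the search stops at 1 and 2, respectively.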

The VC dimension is arguably the most fundamental concept in statistical learning theory because it provides a crisp characterization of learnability for 0–1 loss classes. Next, we show that the VC dimension has, in fact, an equivalence characterization with the learning capacity . Specifically, under the Axiom of Choice, an ERM learning rule exists that has a vanishing learning capacity if and only if the 0–1 loss class has a finite VC dimension.

Before we establish this important result, we describe why ERM by itself is not sufficient for uniform generalization to hold even when the hypothesis space has a finite VC dimension.

Proposition 6.

For any sample sizeand a positive constant, there exists a hypothesis space, a domain, and a 0–1 losssuch that: (1)has a VC dimension, and (2) a learning algorithmexists that outputs an empirical risk minimizerwith.

Proof.

Let , where and and let the loss be . In other words, the goal is to learn a threshold in the unit interval that separates the positive from the negative examples. Let be uniformly distributed in and let be an error-free separator. Then, for any training sample , the set of all empirical risk minimizers is:

In particular, is an interval, which has the power of the continuum, so it can be used to encode the entire training sample.

Fix in advance, which can be made arbitrarily small. Then, the probability over the random choice of the sample that can be made arbitrarily small for a sufficiently small , where is the length of the interval.

Let be a hypothesis that lies at the middle of , i.e.,:

Let . Then, holds with a high probability (which can be made arbitrarily close to 1 for a sufficiently small ). Let be a hypothesis whose binary expansion agrees with in its first bits and encodes the entire training sample in the rest of the bits.

Finally, the output of the learning algorithm is , which is given by the following rule:

- If is an empirical risk minimizer, then set

- Otherwise, set .

Now, define the following different parametric loss to be a function that first uses to decode the training sample based on the coding method constructed above and, then, assigns 1 if and only if . To reiterate, this decoding succeeds with a probability that can be made arbitrarily high for a sufficiently small . Clearly, is a loss defined on the product space and has a bounded range. However, the generalization risk w.r.t. is, at least, equal to the probability that , which can be made arbitrarily close to 1. Hence, the statement of the proposition holds. □

Proposition 6 shows that one cannot obtain a non-trivial bound on the uniform generalization risk of an ERM learning rule in terms of the VC dimension d and the sample size m without making some additional assumptions. Next, we prove that an ERM learning rule exists that satisfies the uniform generalization property if the hypothesis space has a finite VC dimension. We begin by recalling a fundamental result in modern set theory. A non-empty set $\mathcal{Q}$ is said to be well-ordered if $\mathcal{Q}$ is endowed with a total order ⪯ such that every non-empty subset of $\mathcal{Q}$ contains a least element. The following result, which was published in 1904, is due to Ernst Zermelo [35].

Theorem 7

(Well-Ordering Theorem). Under the Axiom of Choice, every non-empty set can be well-ordered.

Theorem 8.

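Given a hypothesis space $\mathcal{H}$, a domain $\mathcal{Z}$, and a 0–1 loss $l$, let ⪯ be a well-ordering on $\mathcal{H}$ and let $\mathcal{L}$ be the learning rule that outputs the “least” empirical risk minimizer to the training sample according to ⪯. Then, the learning capacity of $\mathcal{L}$ vanishes as $m \to \infty$ if $\mathcal{H}$ has a finite VC dimension. In particular: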

where d is the VC dimension of, provided that.

Proof.

The proof is in Appendix I. □

Next, we prove a converse statement. Before we do this, we present a learning problem that shows why a converse to Theorem 8 is not generally possible without making some additional assumptions. Hence, we will establish our converse for the binary classification setting only.

Example 8

(Subset Learning Problem). Let be a finite set of positive integers. Let and define the 0–1 loss of a hypothesis to be . Then, the VC dimension is d. However, the learning rule that outputs is always an ERM learning rule that generalizes uniformly with rate regardless of the sample size and the distribution of observations.

The previous example shows that a converse to Theorem 8 is not generally possible without making some additional assumptions. In particular, in the Subset Learning Problem, the VC dimension is not an accurate measure of the complexity of the hypothesis space because many hypotheses dominate others (i.e., perform better across all distributions of observations). For example, the hypothesis dominates because there is no distribution on observations in which outperforms . In fact, the hypothesis dominates all other hypotheses.

Consequently, in order to prove a lower bound for all ERM rules, we focus on the standard binary classification setting.

Theorem 9.

In any fixed domain, let the hypothesis spacebe a concept class onand letbe the misclassification error. Then, any ERM learning rulew.r.t. l has a learning capacitythat is bounded from below by, where m is the training sample size and d is the VC dimension of.

Proof.

The proof is in Appendix J. □

Using both Theorems 8 and 9, we arrive at the following equivalence characterization of the VC dimension of a concept class with the learning capacity.

Theorem 10.

Given a fixed domain, let the hypothesis spacebe a concept class onand letbe the misclassification error. Let m be the sample size. Then, the following statements are equivalent under the Axiom of Choice:

- admits an ERM learning rulewhose learning capacitysatisfiesas.

- has a finite VC dimension.

Proof.

The lower bound in Theorem 9 holds for all ERM learning rules. Hence, an ERM learning rule that generalizes uniformly with a vanishing rate across all distributions exists only if $\mathcal{H}$ has a finite VC dimension. Conversely, under the Axiom of Choice, $\mathcal{H}$ can always be well-ordered by Theorem 7, so, by Theorem 8, a finite VC dimension is also sufficient to guarantee the existence of an ERM learning rule that generalizes uniformly. □

Theorem 10 presents a characterization of the VC dimension in terms of information theory. According to the theorem, an ERM learning rule can be constructed that does not encode the training sample if and only if the hypothesis space has a finite VC dimension.

Remark 2.

One method of constructing a well-ordering on a hypothesis space $\mathcal{H}$ is to use the fact that computers operate with finite precision. Hence, in practice, every hypothesis space is enumerable, and the natural ordering of the non-negative integers induces a valid well-ordering on $\mathcal{H}$.
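A minimal sketch of a well-ordered ERM rule in the spirit of Theorem 8 and Remark 2: the hypotheses are enumerated in a fixed order, and the rule returns the first empirical risk minimizer in that order. The threshold grid, the toy sample, and the loss are hypothetical. The point is that ties among empirical risk minimizers are broken by the enumeration alone, so the output depends on the sample only through the set of minimizers.

```python
def least_erm(sample, hypotheses, loss):
    """Return the first empirical risk minimizer in the enumeration order of
    `hypotheses`, together with its empirical risk. The enumeration order plays
    the role of the well-ordering on the hypothesis space."""
    best, best_risk = None, float("inf")
    for h in hypotheses:
        risk = sum(loss(z, h) for z in sample) / len(sample)
        if risk < best_risk:  # strict '<' keeps the earliest minimizer on ties
            best, best_risk = h, risk
    return best, best_risk


def threshold_loss(z, h):
    """0-1 loss of the threshold classifier x -> 1[x >= h] on z = (x, y)."""
    x, y = z
    return int(y != int(x >= h))


grid = [i / 10 for i in range(11)]                  # hypothetical finite-precision grid
sample = [(0.05, 0), (0.2, 0), (0.7, 1), (0.9, 1)]  # hypothetical training sample
print(least_erm(sample, grid, threshold_loss))        # (0.3, 0.0)
print(least_erm(sample, grid[::-1], threshold_loss))  # (0.7, 0.0)
```

Every threshold from 0.3 to 0.7 attains zero empirical risk on this sample; reversing the enumeration changes which of these tied minimizers is returned, which illustrates that the ordering, not the data, selects among equally good hypotheses.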

6. Concluding Remarks

In this paper, we introduced the notion of “learning capacity” for algorithms that learn from data, which is analogous to the Shannon capacity of communication channels. Learning capacity is an information-theoretic quantity that measures the contribution of a single training example to the final hypothesis. It has three equivalent interpretations: (1) as a tight upper bound on the uniform generalization risk, (2) as a measure of information leakage, and (3) as a measure of algorithmic stability. Furthermore, by establishing a chain rule for learning capacity, concentration bounds were derived, which revealed that the learning capacity controlled both the expectation of the generalization risk and its variance. Moreover, the relationship between algorithmic stability and data processing revealed that algorithmic stability can be improved by post-processing the learned hypothesis.

Throughout this paper, we provided several bounds on the learning capacity under various settings. For instance, we established a relationship between algorithmic stability and the effective size of the domain of observations, which can be interpreted as a formal justification for dimensionality reduction methods. Moreover, we showed how learning capacity recovered classical bounds, such as in the finite hypothesis space setting, and derived new bounds for other settings as well, such as differential privacy. We also established that, under the Axiom of Choice, the existence of an empirical risk minimization (ERM) rule for 0–1 loss classes that had a vanishing learning capacity was equivalent to the assertion that the hypothesis space had a finite Vapnik–Chervonenkis (VC) dimension, thus establishing an equivalence relation between two of the most fundamental concepts in statistical learning theory and information theory.

More generally, the intent of this work is to bring to light a new information-theoretic approach for analyzing machine learning algorithms. Despite the fact that “uniform generalization” might appear to be a strong condition at first sight, one of the central claims of this paper is that uniform generalization is, in fact, a natural condition that arises commonly in practice. It is not a condition to require or enforce! We believe this holds because any learning algorithm is a channel from the space of training samples to the hypothesis space. Because learning is a mapping between two spaces, its risk for overfitting should be determined from the mapping itself (i.e., independently of the choice of the loss function). Such an approach yields the uniform generalization bounds that are derived in this paper.

It is worth highlighting that uniform generalization bounds can be established for many other settings that have not been discussed in this paper, and that the notion has found some promising applications. Using sample compression schemes, one can show that any learnable hypothesis space is also learnable by an algorithm that achieves uniform generalization [36]. Also, generalization bounds for stochastic convex optimization yield information criteria for model selection that can outperform the popular Akaike’s information criterion (AIC) and Schwarz’s Bayesian information criterion (BIC) [37]. More recently, uniform generalization has inspired the development of new approaches for structured regression as well [38].

7. Further Research Directions

Before we conclude, we suggest future directions of research and list some open problems.

7.1. Induced VC Dimension

The variational information provides an upper bound on the generalization risk of the learning algorithm across all parametric loss classes. This upper bound is attained by the generalization risk of the binary reconstruction loss:

$$l(z; h) = \mathbb{I}\{p(z \mid h) > p(z)\}, \tag{16}$$

which assigns the value one to observations that are more likely to have been present in the training sample upon knowing $h$, and assigns zero otherwise. In expectation, the generalization risk of this parametric loss is the worst generalization risk across all parametric loss classes.
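The claim that the binary reconstruction loss attains the variational information can be verified on a finite toy example. The sketch below draws an arbitrary joint distribution of a training example and a hypothesis (hypothetical numbers), computes $\mathbb{E}_h \|p(z) - p(z \mid h)\|_{TV}$, and compares it with the expected gap between the loss averaged over $p(z \mid h)$ and over $p(z)$. The two agree because $\sum_{z:\, q(z) > p(z)} \big(q(z) - p(z)\big) = \|p - q\|_{TV}$ for any two distributions $p$ and $q$.

```python
import random


def reconstruction_gap(joint):
    """joint[h][z] = P(h, z_trn = z) on finite spaces.

    Returns (J, gap), where J = E_h ||p(z) - p(z | h)||_TV and gap is the
    expected difference between the binary reconstruction loss
    l(z; h) = 1[p(z | h) > p(z)] averaged over p(z | h) and over p(z).
    """
    n_z = len(joint[0])
    p_z = [sum(row[z] for row in joint) for z in range(n_z)]
    J = gap = 0.0
    for row in joint:
        p_h = sum(row)
        cond = [x / p_h for x in row]  # p(z | h)
        J += p_h * 0.5 * sum(abs(c - p) for c, p in zip(cond, p_z))
        loss = [int(c > p) for c, p in zip(cond, p_z)]
        gap += p_h * sum(lz * (c - p) for lz, c, p in zip(loss, cond, p_z))
    return J, gap


rng = random.Random(0)
weights = [[rng.random() for _ in range(6)] for _ in range(4)]  # 4 hypotheses, 6 observations
total = sum(map(sum, weights))
joint = [[w / total for w in row] for row in weights]
print(reconstruction_gap(joint))  # the two numbers coincide up to rounding
```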

Let both $p(z)$ and $p(z \mid h)$ be fixed; the first is the distribution of observations while the second is entirely determined by the learning algorithm $\mathcal{L}$. Then, because the loss in Equation (16) is binary, it has a VC dimension, which we will call the induced VC dimension of the learning algorithm [39]. Note that this induced VC dimension is defined for all learning problems, including regression and clustering, but it is distribution-dependent, which is quite unlike the traditional VC dimension of hypothesis spaces.

Many open questions remain regarding the induced VC dimension of learning algorithms. For instance, while a finite VC dimension implies a small variational information, when does the converse also hold? Can we obtain a non-trivial bound on the induced VC dimension of a learning algorithm upon knowing its uniform generalization risk? Along similar lines, suppose that $\mathcal{L}$ is an empirical risk minimization (ERM) algorithm of a 0–1 loss class that may or may not use an appropriate tie-breaking rule (in light of what was discussed in Section 5.5). Is there a non-trivial relation between the VC dimension of the 0–1 loss that is being minimized and the induced VC dimension of the ERM learning algorithm?

7.2. Unsupervised Model Selection

Information criteria (such as AIC and BIC) are sometimes used in the unsupervised learning setting for model selection, such as when determining the value of k in the popular k-means algorithm [40]. Given that the notion of uniform generalization is developed in the general setting of learning, should the learning capacity serve as a model selection criterion in the unsupervised setting? Why or why not?

7.3. Effective Domain Size

The effective size of the domain of a random variable in Definition 8 satisfies some intuitive properties and violates others. For instance, it reduces to the size of the domain when the distribution is uniform. Moreover, if is Bernoulli, the effective domain size is determined by the variance of the Bernoulli distribution. Importantly, this notion is well-motivated because it determines the rate of convergence of an empirical probability mass function to its true distribution when the distance is measured in the total variation sense. As a result, it allowed us to relate the learning capacity to a property of the domain .

However, such a notion of effective domain size has some surprising properties. For instance, the effective size of the domain of two independent random variables is not equal to the product of the effective sizes of the individual domains! A similar phenomenon is observed in rate distortion theory. Reference [10] explains this observation by stating that “rectangular grid points (arising from independent descriptions) do not fill up the space efficiently.” Can the effective domain size in Definition 8 be motivated using rate distortion theory?

Funding

This research received no external funding.

Conflicts of Interest

The author declares no conflict of interest.

Appendix A. Proof of Lemma 2

Without loss of generality, let us assume that all domains are enumerable. We have:

However, the minimum of the sums is always at least as large as the sum of the minimums. That is:

Using marginalization and the above inequality, we obtain:

Appendix B. Proof of Theorem 1

We will first prove the inequality when . First, we write by definition:

Using the fact that the total variation distance is related to the $\ell_1$ distance by $\|p - q\|_{TV} = \frac{1}{2}\|p - q\|_1$, we have:

Using the triangle inequality:

The above inequality is interpreted by expanding the distance into a sum of absolute values of terms in the product space , where . Next, we bound each term on the right-hand side separately. For the first term, we note that:

The equality holds by expanding the distance and using the fact that .

Appendix C. Proof of Proposition 1

By the triangle inequality:

Therefore:

Combining this with the following chain rule of Theorem 1:

yields:

Or equivalently:

To prove the other inequality, we use Lemma 2. We have:

where the first inequality follows from Lemma 2 and the second inequality follows from the chain rule. Thus, we obtain the desired bound:

Both Equations (A4) and (A5) imply that the chain rule is tight. More precisely, the inequality can be made arbitrarily close to an equality when one of the two terms in the upper bound is chosen to be arbitrarily close to zero.

Appendix D. Proof of Theorem 3

We will use the following fact:

Fact 1.

Letbe a function with a bounded range in the interval. Letandbe two different probability measures defined on the same space. Then:

First Setting: We first consider the following scenario. Suppose a learning algorithm produces a hypothesis from some marginal distribution independently of the training sample . Afterwards, produces a second hypothesis according to . In other words, depends on both and but the latter two random variables are independent of each other. Under this scenario, we have:

where the equality follows from the chain rule in Theorem 1, the statement of Proposition 1, and the fact that .

The conditional variational information is written as:

where we used the fact that . By marginalization:

Similarly:

Therefore:

Next, we note that since is independent of the sample , the variational information between and can be bounded using Theorem 6. This follows because is selected independently of the sample , and, hence, the i.i.d. property of the observations continues to hold. Therefore, we obtain:

Because is arbitrary in our derivation, the above bound holds for any distribution of observations , any distribution , and any family of conditional distributions .

Original Setting: Next, we return to the original setting where both and are chosen according to the training sample . We have:

In the last line, we used the triangle inequality.

Next, we would like to bound the first term. Using the fact that the total variation distance is related to the $\ell_1$ distance by $\|p - q\|_{TV} = \frac{1}{2}\|p - q\|_1$, we have:

Here, the inequality follows from Fact 1.

Appendix E. Proof of Proposition 3

Let denote the mutual information between and and let denote the Shannon entropy of the random variable measured in nats (i.e., using natural logarithms). As before, we write . We have:

The second line is the chain rule for entropy and the third line follows from the fact that conditioning reduces entropy. We obtain:

By Pinsker’s inequality:

Using the chain rule for mutual information:

The desired bound follows by applying the same proof technique of Theorem 4 on the last uniform generalization bound, and using the fact that .

Appendix F. Proof of Proposition 4

Before we prove the statement of the proposition, we begin with the following lemma:

Lemma A1.

Let the observation spacebe the interval, whereis continuous in. Letbe a set of k examples picked at random without replacement from the training sample. Then.

Proof.

First, we note that is a mixture of two distributions: one that is uniform in with probability , and the original distribution with probability . By Jensen’s inequality, we have . Second, let the parametric loss be . Then, . By Theorem 2, we have . Both bounds imply the statement of the lemma. □

Now, we prove Proposition 4. Consider the setting where and suppose that the observations have a continuous marginal distribution. Because t is a rational number, let the sample size m be chosen such that is an integer.

Let be the training set, and let the hypothesis be given by with some probability and otherwise. Here, the k instances are picked uniformly at random without replacement from the sample . To determine the variational information between and , we consider the two cases:

- If , then as proved in Lemma A1. This happens with probability by design.

- Otherwise, . Thus: .

So, by combining the two cases above, we deduce that:

Therefore, generalizes uniformly with the rate . Next, let the parametric loss be given by . With this loss:

which is the statement of the proposition.

Appendix G. Proof of Theorem 5

Because is countable, we will assume without loss of generality that , and we will write to denote the marginal distribution of observations. Since all lazy learners are equivalent, we will look into the lazy learner whose hypothesis is equal to the training sample itself up to a permutation. Let denote the number of times was observed in the training sample. Note that , and so .

We have:

Using the relation for any two probability distributions p and q, we obtain:

For the inner summation, we write:

Using the multinomial series, we simplify the right-hand side into:

Now, we use De Moivre’s formula for the mean deviation of the binomial random variable (see the proof of Example 1). This gives us:

Using Stirling’s approximation to the factorial [17], we obtain the simple asymptotic expression:

Plugging this into the earlier expression for yields:

Due to the tightness of the Stirling approximation, the asymptotic expression for the variational information is tight. Because , we deduce that:

which provides the asymptotic rate of convergence of an empirical probability mass function to the true distribution.
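The two analytic steps in this proof can be checked numerically. The first is de Moivre's closed form for the mean absolute deviation of a binomial variable. In its classical form, which we state from the standard literature because the displayed expression is not reproduced here, E|X − np| = 2ν C(n, ν) p^ν q^(n−ν+1) with ν = ⌊np⌋ + 1. The second is Stirling's approximation E|X − np| ≈ √(2npq/π). The sketch assumes that the variational information of the lazy learner equals E‖p − p̂‖_T, where p̂ is the empirical pmf with p̂_k = n_k/m. Under that assumption, linearity gives Σ_k E|n_k − m p_k| / (2m), and the leading-order term is Σ_k √(p_k(1 − p_k)) / √(2πm). The three-symbol pmf is hypothetical.

```python
from fractions import Fraction
from math import comb, floor, pi, sqrt

def binomial_mean_abs_deviation(n, p):
    """De Moivre's closed form for E|X - np|, X ~ Binomial(n, p):
    2 * nu * C(n, nu) * p**nu * q**(n - nu + 1), with nu = floor(np) + 1."""
    q = 1 - p
    nu = floor(n * p) + 1
    return 2 * nu * comb(n, nu) * p**nu * q**(n - nu + 1)

def binomial_mean_abs_deviation_direct(n, p):
    """The same quantity by brute-force summation over the binomial pmf."""
    q = 1 - p
    return sum(comb(n, k) * p**k * q**(n - k) * abs(k - n * p) for k in range(n + 1))

def expected_tv_lazy_learner(m, pmf):
    """E ||p - p_hat||_T with p_hat[k] = n_k / m and n_k ~ Binomial(m, p_k);
    by linearity of expectation this is sum_k E|n_k - m p_k| / (2m)."""
    return sum(binomial_mean_abs_deviation(m, pk) for pk in pmf) / (2 * m)

def stirling_asymptotic(m, pmf):
    """Leading-order term from E|n_k - m p_k| ~ sqrt(2 m p_k (1 - p_k) / pi)."""
    return sum(sqrt(pk * (1 - pk)) for pk in pmf) / sqrt(2 * pi * m)

pmf = [Fraction(1, 2), Fraction(3, 10), Fraction(1, 5)]  # hypothetical distribution
for m in (10, 100, 1000):
    exact = float(expected_tv_lazy_learner(m, pmf))
    approx = stirling_asymptotic(m, [float(pk) for pk in pmf])
    print(m, exact, approx, exact / approx)
```

Using `Fraction` keeps de Moivre's identity exact, so it can be compared with the brute-force sum without rounding error. The ratio in the last column should approach 1 as m grows, consistent with the claimed tightness of the asymptotic expression.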

Appendix H. Proof of Proposition 5

First, we note that for any two adjacent samples and and any , we have in the differential privacy setting:

Similarly, we have:

Both results imply that:

We write:

The last inequality follows by convexity. Next, let be a sample that contains observations drawn i.i.d. from . Then:

where are two adjacent samples. Finally, we use Equation (A11) to arrive at the statement of the proposition.
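The proof relies only on the differential privacy inequality for adjacent samples. To make that inequality concrete, the sketch below uses a mechanism of our own choosing, not one from the paper: the two-sided geometric mechanism applied to the count of ones in a binary sample, which is ε-differentially private (δ = 0). For each output, it checks that the ratio of output probabilities under two adjacent samples never exceeds e^ε. The samples, ε, and the finite output window are illustrative assumptions.

```python
import math

def geometric_mechanism_pmf(true_count, epsilon, support):
    """Two-sided geometric mechanism (illustrative choice): P(y) = c * alpha**|y - true_count|,
    with alpha = exp(-epsilon) and c = (1 - alpha) / (1 + alpha) normalizing over all integers."""
    alpha = math.exp(-epsilon)
    c = (1 - alpha) / (1 + alpha)
    return {y: c * alpha ** abs(y - true_count) for y in support}

def max_privacy_ratio(sample, neighbor, epsilon, support):
    """Largest ratio P(y | sample) / P(y | neighbor) over outputs y when the mechanism
    releases a noisy count of ones; epsilon-DP requires this to be at most exp(epsilon)."""
    p = geometric_mechanism_pmf(sum(sample), epsilon, support)
    q = geometric_mechanism_pmf(sum(neighbor), epsilon, support)
    return max(p[y] / q[y] for y in support)

eps = 0.5
S = [1, 0, 1, 1, 0]
S_adj = [1, 0, 1, 1, 1]   # adjacent: differs from S in exactly one observation
support = range(-20, 26)  # finite window of outputs; the bound holds pointwise on it
print(max_privacy_ratio(S, S_adj, eps, support), math.exp(eps))
```

Because the bound holds for every single output, summing over any event O gives P(h ∈ O | S) ≤ e^ε P(h ∈ O | S′), which is the event-level form used in the proof.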

Appendix I. Proof of Theorem 8

The proof is similar to the classical VC argument. Given a fixed hypothesis space , a fixed domain , and a 0–1 loss function , let be a training sample that comprises m i.i.d. observations. Define the restriction of to by:

In other words, is the set of all possible realizations of the 0–1 loss for the elements in by hypotheses in . We can introduce an equivalence relation between the elements of w.r.t. the sample . Specifically, we say that for , we have if and only if:

It is trivial to see that this defines an equivalence relation; i.e., it is reflexive, symmetric, and transitive. Let the set of equivalence classes w.r.t. be denoted . Note that we have a one-to-one correspondence between the members of and the members of . Moreover, is a partitioning of .
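The equivalence relation groups hypotheses that realize the same 0–1 loss pattern on the sample. The sketch below builds these classes for a hypothetical finite set of threshold classifiers h_t(x) = 1[x ≥ t] on three labelled points. The order of the threshold list stands in for the well-ordering ⪯. The thresholds, the sample, and the loss are illustrative assumptions.

```python
def restriction_classes(hypotheses, sample, loss):
    """Group hypotheses by their 0-1 loss pattern on `sample`.
    Returns {pattern: [hypotheses with that pattern, in the given order]}."""
    classes = {}
    for h in hypotheses:
        pattern = tuple(loss(z, h) for z in sample)
        classes.setdefault(pattern, []).append(h)
    return classes

def zero_one_loss(z, t):
    """Loss of the threshold classifier 1[x >= t] on the labelled point z = (x, y)."""
    x, y = z
    return int((x >= t) != y)

thresholds = [0.0, 0.15, 0.25, 0.35, 0.5, 0.65, 0.8, 1.1]  # list order plays the role of the well-ordering
sample = [(0.2, 0), (0.4, 1), (0.7, 1)]

classes = restriction_classes(thresholds, sample, zero_one_loss)
least = {pattern: members[0] for pattern, members in classes.items()}  # least element of each class
for pattern, members in sorted(classes.items()):
    print(pattern, members, "least:", least[pattern])

# min() returns the first minimizer it meets, i.e., the least empirical risk minimizer under the list order
erm = min(thresholds, key=lambda t: sum(zero_one_loss(z, t) for z in sample))
print("least ERM:", erm)
```

The classes partition the hypothesis list, and their number (four here) cannot exceed the number of distinct loss patterns that the class can realize on the sample.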

We use the standard twin-sample trick where we have and learns based on only. For any fixed , let be an arbitrary loss function, which can be different from the loss l that is optimized during the training. A Hoeffding bound for sampling without replacement [41] states that:

Hence:

This bound holds for a hypothesis that is fixed independently of the random split of into training and ghost samples. When h is selected according to the random split of , we need to employ the union bound.
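For a hypothesis fixed independently of the split, the concentration step can be illustrated by simulation. The sketch below assumes a hypothetical vector of 0–1 losses on the 2m pooled observations and draws random equal splits into a training half and a ghost half. It then compares the observed frequency of a large train/ghost gap with the bound implied by Hoeffding's classical inequality for sampling without replacement, P(|mean(train) − mean(pooled)| ≥ ε) ≤ 2 exp(−2mε²). The constants in [41] and in the displayed bound may differ.

```python
import math
import random

def twin_sample_gap_frequency(losses, gap, trials, seed=0):
    """Split the 2m pooled loss values uniformly at random into a training half and a
    ghost half; return the fraction of splits with |mean(train) - mean(ghost)| >= gap."""
    rng = random.Random(seed)
    m = len(losses) // 2
    hits = 0
    for _ in range(trials):
        shuffled = rng.sample(losses, len(losses))  # random permutation = random split
        train, ghost = shuffled[:m], shuffled[m:]
        if abs(sum(train) / m - sum(ghost) / m) >= gap:
            hits += 1
    return hits / trials

def hoeffding_twin_bound(m, gap):
    """mean(train) - mean(ghost) = 2 * (mean(train) - mean(pooled)), so sampling m of 2m values
    in [0, 1] without replacement gives P(gap) <= 2 * exp(-2 m (gap / 2)**2)."""
    return 2 * math.exp(-2 * m * (gap / 2) ** 2)

m = 50
losses = [1] * 60 + [0] * 40  # hypothetical 0-1 losses of one fixed hypothesis on the 2m points
gap = 0.3
freq = twin_sample_gap_frequency(losses, gap, trials=20000)
print(freq, hoeffding_twin_bound(m, gap))
```

The simulated frequency is typically far below the bound. The bound is distribution-free, and that property is what allows the union bound over the fixed candidate set in the next step.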

For any subset , let be the least element in H according to ⪯. Let be as defined previously and write . Then, it is easy to observe that the ERM learning rule of Theorem 2 must select one of the hypotheses in regardless of the split . This holds because is a coarser partitioning of than . In other words, every member of is a union of some finite number of members of . By the well-ordering property, the “ least” element among the empirical risk minimizers must be in .

Hence, there are at most possible hypotheses given , where is the growth function (sometimes referred to as the shattering coefficient), and these hypotheses can be fixed independently of the random splitting of into a training sample and a ghost sample .
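The union bound is taken over a number of candidates controlled by the growth function. When the VC dimension d is finite, the Sauer–Shelah lemma bounds the growth function on n points by Σ_{i≤d} C(n, i) ≤ (en/d)^d. The sketch below checks this numerically. For one-dimensional thresholds (VC dimension 1), it also confirms by enumeration that the growth function equals n + 1, which matches the Sauer–Shelah bound exactly. The choice of thresholds as the test class is an illustrative assumption. The exact form in which the proof combines the growth function with the Hoeffding term is not reproduced here.

```python
from math import comb, e

def sauer_bound(n, d):
    """Sauer-Shelah bound on the growth function: sum_{i=0}^{d} C(n, i)."""
    return sum(comb(n, i) for i in range(d + 1))

def threshold_growth_function(n):
    """Number of distinct labelings of n distinct points on the line realized by
    thresholds 1[x >= t] (a class of VC dimension 1), by direct enumeration."""
    points = list(range(n))
    thresholds = [x - 0.5 for x in points] + [n - 0.5]  # one threshold per distinct labeling
    labelings = {tuple(int(x >= t) for x in points) for t in thresholds}
    return len(labelings)

d = 3
for n in (5, 10, 50):
    print(n, sauer_bound(n, d), (e * n / d) ** d)
print([threshold_growth_function(n) for n in range(1, 6)])
```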

Consequently, we have by the union bound:

where d is the VC dimension of . Finally, to bound the generalization risk in expectation, we use Lemma A.4 in [13], which implies that if :

Writing for the least empirical risk minimizer w.r.t. the training sample :

Because this bound in expectation holds for any single loss , it holds for the following loss function:

which is a deterministic 0–1 loss function of h that assigns to the value 1 if and only if our knowledge of h increases the probability that z belongs to the training sample. However, the generalization risk in expectation for the loss is equal to the variational information as shown in the proof of Theorem 2. Hence, we have the bound stated in the theorem:

Because this is a distribution-free bound, we have:

Appendix J. Proof of Theorem 9

Let be a set of d points in that are shattered by hypotheses in . By definition, this implies that for any possible 0–1 labeling , there exists a hypothesis such that .

Given an ERM learning rule whose hypothesis is denoted , let be the uniform distribution of instances over and define:

In other words, is the least probable class that is assigned by to the instance when is unseen in the training sample. Let with denote the uniform distribution of instances over with given by the labeling rule above.

Suppose that a training sample of m i.i.d. observations is drawn from . Our first task is to bound the expected number of distinct values in that are not observed in the training sample. Let:

Then, the expected number of distinct values in that are not observed in the training sample is:

Here, we used the linearity of expectation, which holds even when the random variables are not independent. This shows that the expected fraction of instances in that are not seen in the sample is .

Next, because is shattered by , any ERM learning rule attains zero training error. However, for any learning rule , the expected error rate on the unseen examples is at least by construction. Therefore, there exists a distribution in which the generalization risk is at least .

By Theorem 2, the learning capacity is an upper bound on the maximum generalization risk across all distributions of observations and all parametric loss functions. Consequently:

which is the statement of the theorem.
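The counting step of this proof can be verified exactly on small cases. Each of the d shattered points is missed by m uniform draws with probability (1 − 1/d)^m, so linearity of expectation gives d(1 − 1/d)^m unseen points on average. The sketch below compares that formula with brute-force enumeration of all d^m samples. It also evaluates ½(1 − 1/d)^m. This expression is our reading of the resulting lower bound: it assumes binary labels, so that the least probable class is predicted with probability at most ½ on an unseen point. The displayed statement of the theorem is not reproduced here.

```python
from fractions import Fraction
from itertools import product

def expected_unseen_exact(d, m):
    """Average number of the d points that never appear in a sample of m i.i.d. uniform
    draws, computed by enumerating all d**m equally likely samples."""
    total = sum(d - len(set(sample)) for sample in product(range(d), repeat=m))
    return Fraction(total, d**m)

def expected_unseen_formula(d, m):
    """Linearity of expectation: each point is unseen with probability (1 - 1/d)**m."""
    return d * (1 - Fraction(1, d)) ** m

def erm_lower_bound(d, m):
    """Assumed form of the bound: error at least 1/2 on each unseen point (binary labels),
    times the expected unseen fraction (1 - 1/d)**m."""
    return Fraction(1, 2) * (1 - Fraction(1, d)) ** m

for d, m in [(3, 2), (4, 3), (10, 5)]:
    print(d, m, expected_unseen_formula(d, m), erm_lower_bound(d, m))
```

When m is small relative to d, the bound stays close to ½. In that regime, an ERM rule on a shattered set of d points can generalize poorly even though its training error is zero.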

References

- Shalev-Shwartz, S.; Shamir, O.; Srebro, N.; Sridharan, K. Stochastic Convex Optimization. In Proceedings of the Annual Conference on Learning Theory, Montreal, QC, Canada, 18–21 June 2009.

- Bartlett, P.L.; Jordan, M.I.; McAuliffe, J.D. Convexity, classification, and risk bounds. J. Am. Stat. Assoc. 2006, 101, 138–156.

- Vapnik, V.N. An overview of statistical learning theory. IEEE Trans. Neural Netw. 1999, 10, 988–999.

- Blumer, A.; Ehrenfeucht, A.; Haussler, D.; Warmuth, M.K. Learnability and the Vapnik-Chervonenkis dimension. JACM 1989, 36, 929–965.

- McAllester, D. PAC-Bayesian stochastic model selection. Mach. Learn. 2003, 51, 5–21.

- Bousquet, O.; Elisseeff, A. Stability and generalization. JMLR 2002, 2, 499–526.

- Bartlett, P.L.; Mendelson, S. Rademacher and Gaussian complexities: Risk bounds and structural results. JMLR 2002, 3, 463–482.

- Kutin, S.; Niyogi, P. Almost-everywhere algorithmic stability and generalization error. In Proceedings of the Eighteenth conference on Uncertainty in Artificial Intelligence (UAI), Edmonton, AB, Canada, 1–4 August 2002.

- Poggio, T.; Rifkin, R.; Mukherjee, S.; Niyogi, P. General conditions for predictivity in learning theory. Nature 2004, 428, 419–422.

- Cover, T.M.; Thomas, J.A. Elements of Information Theory; Wiley & Sons: New York, NY, USA, 1991.

- Hardt, M.; Recht, B.; Singer, Y. Train faster, generalize better: Stability of stochastic gradient descent. arXiv 2015, arXiv:1509.01240.

- Dwork, C.; Feldman, V.; Hardt, M.; Pitassi, T.; Reingold, O.; Roth, A. Preserving Statistical Validity in Adaptive Data Analysis. In Proceedings of the Forty-Seventh Annual ACM on Symposium on Theory of Computing (STOC), Portland, OR, USA, 14–17 June 2015; pp. 117–126.

- Shalev-Shwartz, S.; Ben-David, S. Understanding Machine Learning: From Theory to Algorithms; Cambridge University Press: New York, NY, USA, 2014.

- Raginsky, M.; Rakhlin, A.; Tsao, M.; Wu, Y.; Xu, A. Information-theoretic analysis of stability and bias of learning algorithms. In Proceedings of the 2016 IEEE Information Theory Workshop (ITW), Cambridge, UK, 11–14 September 2016; pp. 26–30.

- Janson, S. Probability asymptotics: Notes on notation. arXiv 2011, arXiv:1108.3924.

- Tao, T. Topics in Random Matrix Theory; American Mathematical Society: Providence, RI, USA, 2012.

- Shalev-Shwartz, S.; Shamir, O.; Srebro, N.; Sridharan, K. Learnability, stability and uniform convergence. JMLR 2010, 11, 2635–2670.

- Talagrand, M. Majorizing measures: The generic chaining. Ann. Probab. 1996, 24, 1049–1103.

- Audibert, J.Y.; Bousquet, O. Combining PAC-Bayesian and generic chaining bounds. JMLR 2007, 8, 863–889.

- Xu, H.; Mannor, S. Robustness and generalization. Mach. Learn. 2012, 86, 391–423.

- Csiszár, I. A Class of Measures of Informativity of Observation Channels. Period. Math. Hung. 1972, 2, 191–213.

- Csiszár, I. Axiomatic Characterizations of Information Measures. Entropy 2008, 10, 261–273.

- Russo, D.; Zou, J. Controlling Bias in Adaptive Data Analysis Using Information Theory. In Proceedings of the 19th International Conference on Artificial Intelligence and Statistics (AISTATS), Cadiz, Spain, 9–11 May 2016.

- Bassily, R.; Moran, S.; Nachum, I.; Shafer, J.; Yehudayoff, A. Learners that Use Little Information. PMLR 2018, 83, 25–55.

- Elkan, C. The foundations of cost-sensitive learning. In Proceedings of the IJCAI, Seattle, WA, USA, 4–10 August 2001.

- Kull, M.; Flach, P. Novel decompositions of proper scoring rules for classification: Score adjustment as precursor to calibration. In Joint European Conference on Machine Learning and Knowledge Discovery in Databases; Springer: Cham, Switzerland, 2015; pp. 68–85.

- Robbins, H. A remark on Stirling’s formula. Am. Math. Mon. 1955, 62, 26–29.

- Cortes, C.; Vapnik, V. Support-vector networks. Mach. Learn. 1995, 20, 273–297.

- Wang, J.; Chen, Q.; Chen, Y. RBF kernel based support vector machine with universal approximation and its application. ISNN 2004, 3173, 512–517.

- Downs, T.; Gates, K.E.; Masters, A. Exact simplification of support vector solutions. JMLR 2002, 2, 293–297.

- Stigler, S.M. The History of Statistics: The Measurement of Uncertainty before 1900; Harvard University Press: Cambridge, MA, USA, 1986.

- Dwork, C.; McSherry, F.; Nissim, K.; Smith, A. Calibrating noise to sensitivity in private data analysis. In Proceedings of the Third Theory of Cryptography Conference (TCC 2006), New York, NY, USA, 4–7 March 2006; pp. 265–284.

- Dwork, C.; Roth, A. The algorithmic foundations of differential privacy. Theor. Comput. Sci. 2013, 9, 211–407.

- Koren, T.; Levy, K. Fast rates for exp-concave empirical risk minimization. In Proceedings of the NIPS 2015, Montreal, QC, Canada, 7–12 December 2015; pp. 1477–1485.

- Kolmogorov, A.N.; Fomin, S.V. Introductory Real Analysis; Dover Publication, Inc.: New York, NY, USA, 1970.

- Alabdulmohsin, I.M. An information theoretic route from generalization in expectation to generalization in probability. In Proceedings of the 20th International Conference on Artificial Intelligence and Statistics (AISTATS 2017), Fort Lauderdale, FL, USA, 20–22 April 2017.

- Alabdulmohsin, I. Information Theoretic Guarantees for Empirical Risk Minimization with Applications to Model Selection and Large-Scale Optimization. In Proceedings of the International Conference on Machine Learning (ICML 2018), Stockholm, Sweden, 10–15 July 2018; pp. 149–158.

- Pavlovski, M.; Zhou, F.; Arsov, N.; Kocarev, L.; Obradovic, Z. Generalization-Aware Structured Regression towards Balancing Bias and Variance. In Proceedings of the Twenty-Seventh International Joint Conference on Artificial Intelligence (IJCAI-18), Stockholm, Sweden, 13–19 July 2018; pp. 2616–2622.

- Alabdulmohsin, I.M. Algorithmic Stability and Uniform Generalization. In Proceedings of the NIPS 2015, Montreal, QC, Canada, 7–12 December 2015; pp. 19–27.

- Pelleg, D.; Moore, A.W. X-means: Extending k-means with efficient estimation of the number of clusters. In Proceedings of the Seventeenth International Conference on Machine Learning, Stanford, CA, USA, 29 June–2 July 2000; pp. 727–734.

- Bardenet, R.; Maillard, O.A. Concentration inequalities for sampling without replacement. Bernoulli 2015, 21, 1361–1385.