1. Introduction

The dynamics of proteins in solution can be modeled by a complex physical system [

1], yielding many interesting effects such as the competition and cooperation between elasticity and phenomena caused by swelling. Biopolymers, such as proteins, consist of monomers (amino acids) that are connected linearly. Thus, a chain seems to be the most natural model of such polymers. However, depending on the properties of the polymer molecule, modeling it as a chain may give more or less accurate outcomes [

2]. The most straightforward approach to the polymer is the freely jointed chain, where each monomer moves independently. The excluded volume chain effect, which prevents a polymer’s segments from overlapping, is one of the reasons why the real polymer chain differs from an ideal chain [

3]. The diffusive dynamics of biopolymers are discussed further in [

4] and the references therein, and the subdiffusive dynamics are discussed in [

5,

6]. For a given combination of polymer and solvent, the excluded volume varies with the temperature and can be zero at a specific temperature, named the Flory temperature [

7]. In other words, at the Flory temperature, the polymer chain becomes nearly ideal [

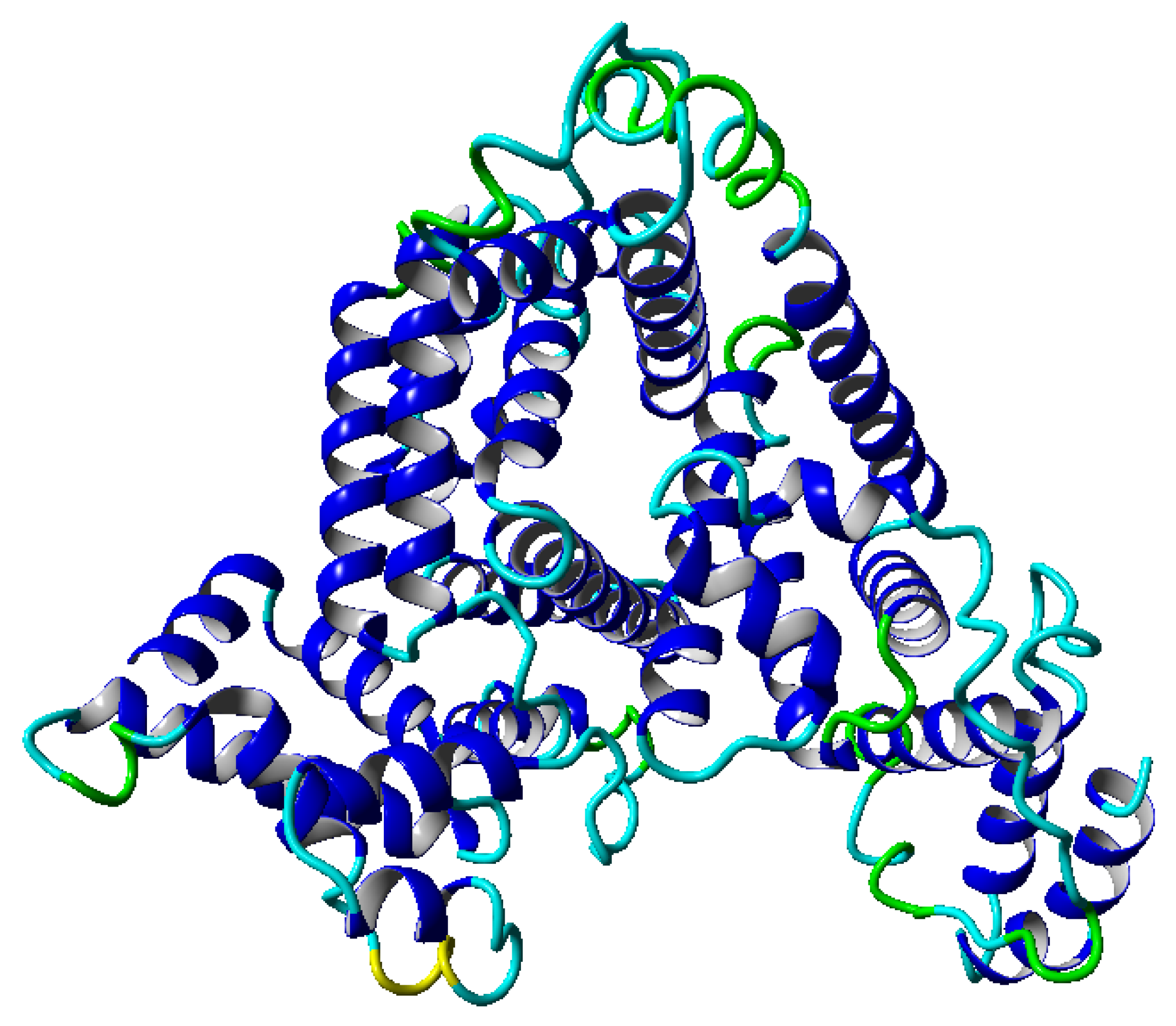

8]. However, this parameter is global, and a more detailed description of the dynamics is required to perform additional detailed analyses. Our work concentrates on an analysis of albumin (shown in

Figure 1) [

9]. Albumin plays several crucial roles as the main blood plasma protein (60% of all proteins): controlling pH, inducing oncotic pressure, and transporting fluid. This protein (of nonlinear viscoelastic, with characteristics of rheopexy [

10]) plays an essential role in the process of articular cartilage lubrication [

11] by synovial fluid (SF). SF is a complex fluid of crucial relevance for lowering the coefficients of friction in articulating joints, thus facilitating their lubrication [

12]. From a biological point of view, this fluid is a mixture of water, proteins, lipids, and other biopolymers [

13]. As an active component of lubricating mechanisms, albumin is affected by friction-induced increases of temperature in synovial fluid. The temperature inside the articular cartilage is in the range of

–

[

14], and changes occurring inside the fluid can affect the outcome of albumin’s impact on lubrication [

10]. Albumin can help to regulate temperature during fever. Therefore, a similar mechanism could take place during joint friction, increasing SF lubricating properties as an efficient heat removal. We analyze this range of temperature: from

–

.

Let us now turn to the technical description of albumin dynamics. Consider a chain formed by $N$ monomers whose positions are designated by

. We denote by $\mathbf{r}_i$ the vector connecting two neighboring monomers. For albumin, we have

amino acids. For the length $l$ of the bond vector $\mathbf{r}_i$, we take the distance between the α-carbons of two consecutive amino acids; hence, we have

[Å] [15]. Using this notation, we can define the end-to-end vector $\mathbf{R}$ by the following formula:

$$\mathbf{R} = \sum_{i=1}^{N} \mathbf{r}_i. \tag{1}$$

In the case of an ideal chain, the bond vectors are completely independent of each other. This property is expressed by the lack of correlation between any two different bonds, that is [16]:

$$\langle \mathbf{r}_i \cdot \mathbf{r}_j \rangle = l^2\,\delta_{ij}, \tag{2}$$

where $l$ is the bond length and $\delta_{ij}$ is the Kronecker delta. Equations (1) and (2) imply that the mean self-product of the vector $\mathbf{R}$ reads:

$$R^2 \equiv \langle \mathbf{R} \cdot \mathbf{R} \rangle = \sum_{i=1}^{N}\sum_{j=1}^{N} \langle \mathbf{r}_i \cdot \mathbf{r}_j \rangle = N l^2, \tag{3}$$

where $R$ is the (root mean square) length of the vector $\mathbf{R}$. The value of $R$ is obtained by calculating the root mean square end-to-end distance of the polymer. $R$ is assumed to depend on the polymer’s length $N$ in the following manner [17]:

$$R \sim N^{\nu}, \tag{4}$$

where $\nu$ is called the size exponent. For a freely jointed chain, Equation (3) gives $\nu = 1/2$. Relation (4) suggests that we consider the dynamics of the albumin protein over a range of temperatures and look for a dependence of the exponent $\nu$ on temperature. Writing Relation (4) as $R \approx l N^{\nu}$ and applying the natural logarithm yields:

$$\nu = \frac{\ln (R/l)}{\ln N}. \tag{5}$$

In Relation (4), we use the ∼ symbol to emphasize that the bond length $l$ is also needed to obtain $R$. In the case discussed here, both parameters $l$ and $N$ (the number of amino acids) are constant. Importantly, molecular dynamics simulations make it possible to obtain the root mean square end-to-end distance at various temperatures. The chain picture also suggests a description in terms of backbone angles and dihedral angles. Backbone angles are the angles between three consecutive α-carbons and are denoted by

. The second angle, designated by

, is a dihedral angle [18]. Using this parametrization, we can obtain empirical probability distributions of these angles. Such distributions may display time-dependent changes.
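A minimal sketch of how Equations (1)–(5) fit together, assuming the form $R \approx l N^{\nu}$ behind Equation (5). The hypothetical helper `flory_exponent` inverts Equation (5), and `rms_end_to_end_fjc` simulates ideal freely jointed chains as a sanity check, for which the estimate should come out close to $\nu = 1/2$. The bond length of 3.8 Å (a typical Cα–Cα distance), the chain length, and the number of chains are illustrative assumptions, not the values used in the simulations.

```python
import math
import random


def flory_exponent(r_rms: float, bond_length: float, n_bonds: int) -> float:
    """Size exponent from Equation (5), assuming R = l * N**nu."""
    return math.log(r_rms / bond_length) / math.log(n_bonds)


def random_unit_vector(rng: random.Random) -> tuple[float, float, float]:
    """Isotropic unit vector obtained by normalizing a 3D standard-normal draw."""
    while True:
        x, y, z = rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)
        norm = math.sqrt(x * x + y * y + z * z)
        if norm > 1e-12:  # reject the (practically impossible) zero vector
            return x / norm, y / norm, z / norm


def rms_end_to_end_fjc(n_bonds: int, bond_length: float, n_chains: int, seed: int = 0) -> float:
    """Root mean square end-to-end distance of freely jointed chains.

    Each chain is the sum of n_bonds independent bond vectors of fixed length
    (Equation (1)); Equation (3) predicts R = bond_length * sqrt(n_bonds).
    """
    rng = random.Random(seed)
    total_sq = 0.0
    for _ in range(n_chains):
        rx = ry = rz = 0.0
        for _ in range(n_bonds):
            ux, uy, uz = random_unit_vector(rng)
            rx += bond_length * ux
            ry += bond_length * uy
            rz += bond_length * uz
        total_sq += rx * rx + ry * ry + rz * rz
    return math.sqrt(total_sq / n_chains)


# Illustrative values (assumptions): 3.8 Å bonds, 200 bonds, 1000 chains.
bond = 3.8
n = 200
r_rms = rms_end_to_end_fjc(n, bond, n_chains=1000, seed=1)
print(f"R = {r_rms:.1f} Å (ideal {bond * math.sqrt(n):.1f} Å), nu = {flory_exponent(r_rms, bond, n):.3f}")
```

For a real protein trajectory, `r_rms` would instead be the root mean square end-to-end distance taken from the simulation, with $l$ and $N$ fixed.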

These time-dependent changes may be entirely random, but sometimes periodic behavior can be seen. This property can be analyzed using Fisher’s test for periodicity [19]. The test assumes that the time series is evenly sampled and treats it as a realization of a stochastic process of the form:

$$y_t = A\cos(\omega t + \phi) + \varepsilon_t, \tag{6}$$

where $A \ge 0$, $\omega \in (0, \pi)$, $t = 1, \dots, n$, $\phi$ is a phase factor uniformly distributed in $(-\pi, \pi]$, and $\varepsilon_t$ is an independent, identically distributed random noise sequence. The null hypothesis is that the amplitude $A$ is equal to zero ($H_0: A = 0$), against the alternative hypothesis $H_1: A > 0$.

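The periodogram of a time series $(y_t)$ is used to test this hypothesis; it is defined by the following formula:

$$I(\omega) = \frac{1}{n}\left|\sum_{t=1}^{n} y_t\, e^{-i\omega t}\right|^2, \tag{7}$$

where, in general, $\omega \in [0, \pi]$ and $i$ is the imaginary unit. For a discrete time series, discrete values $\omega_k$ from this interval are used:

$$\omega_k = \frac{2\pi k}{n}, \qquad k = 0, 1, \dots, \lfloor n/2 \rfloor, \tag{8}$$

which are known as the Fourier frequencies.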

The periodogram of a single time series can contain a peak, and the statistical test should quantify the significance of this peak by means of a p-value. The test statistic has the form:

$$g = \frac{\max_{1 \le k \le q} I(\omega_k)}{\sum_{k=1}^{q} I(\omega_k)}, \tag{9}$$

where $I(\omega_k)$ is the spectral estimate (the periodogram) evaluated at the Fourier frequencies and $q = \lfloor n/2 \rfloor$ is the integer part of $n/2$. The p-value can be calculated according to the formula [20]:

$$P(g > x) = \sum_{j=1}^{p} (-1)^{j-1} \binom{q}{j} (1 - jx)^{q-1}, \tag{10}$$

where p is the largest integer less than $1/x$. In our analysis, we use this framework to evaluate a p-value for a given time series of entropy: if the calculation of g yields the value $x$, then Equation (10) gives the probability, under the null hypothesis, that the statistic g is larger than $x$.
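The periodicity test of Equations (7)–(10) can be sketched in plain Python as follows. This is an illustrative implementation under stated assumptions, not the code used for the results: the helper names are hypothetical, the periodogram is evaluated at $k = 1, \dots, q$ with $q = \lfloor n/2 \rfloor$, and the example series is a synthetic sinusoid plus weak noise standing in for an entropy time series.

```python
import cmath
import math
import random


def periodogram(y: list[float]) -> list[float]:
    """Equation (7) at the Fourier frequencies omega_k = 2*pi*k/n, k = 1..q, q = n // 2."""
    n = len(y)
    spec = []
    for k in range(1, n // 2 + 1):
        omega = 2.0 * math.pi * k / n
        s = sum(y_t * cmath.exp(-1j * omega * t) for t, y_t in enumerate(y, start=1))
        spec.append(abs(s) ** 2 / n)
    return spec


def fisher_g(spec: list[float]) -> float:
    """Fisher's g statistic, Equation (9): largest periodogram ordinate over their sum."""
    return max(spec) / sum(spec)


def fisher_p_value(x: float, q: int) -> float:
    """Exact P(g > x) under H0 (Equation (10)); p is the largest integer below 1/x."""
    if x <= 0.0:
        return 1.0
    p = min(math.ceil(1.0 / x) - 1, q)  # binomial terms with j > q vanish
    total = sum((-1) ** (j - 1) * math.comb(q, j) * (1.0 - j * x) ** (q - 1) for j in range(1, p + 1))
    return min(max(total, 0.0), 1.0)  # clip tiny floating-point excursions outside [0, 1]


# Synthetic example: a sinusoid at Fourier frequency k = 5 plus weak white noise.
rng = random.Random(0)
n = 64
y = [math.sin(2.0 * math.pi * 5 * t / n) + rng.gauss(0.0, 0.01) for t in range(1, n + 1)]
spec = periodogram(y)
g = fisher_g(spec)
print(f"g = {g:.4f}, p-value = {fisher_p_value(g, len(spec)):.3e}")
```

A strong periodic component drives g toward 1 and the p-value toward 0, while for a small x (below $1/q$) Equation (10) returns 1, because g can never fall below $1/q$.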

Proteins can be characterized by various parameters that can be obtained from simulations, such as the earlier mentioned

or

. Another meaningful parameter is the RMSD (root mean square deviation), which can be calculated at every moment of the simulation and is defined as:

$$\mathrm{RMSD}(t) = \sqrt{\frac{1}{M}\sum_{i=1}^{M} \left\| \mathbf{x}_i(t) - \mathbf{x}_i^{\mathrm{ref}} \right\|^2}, \tag{11}$$

where $\mathbf{x}_i(t)$ is the position of the i-th atom at time t, $\mathbf{x}_i^{\mathrm{ref}}$ is its position in the reference structure, and M is the number of atoms. In this way, one obtains this parameter as a function of time for further processing. The initial conditions are drawn at the beginning of each simulation from a set of initial values, and the initial albumin configuration is simulated at a given temperature.
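Equation (11) for a single frame, and its time series, might look like the following sketch. It assumes that the coordinates are already superimposed on the reference structure (structural alignment, for example by the Kabsch algorithm, is usually done first and is omitted here) and that the first frame serves as the reference; `rmsd` and `rmsd_series` are hypothetical helper names.

```python
import math

Vec3 = tuple[float, float, float]


def rmsd(current: list[Vec3], reference: list[Vec3]) -> float:
    """Equation (11) for one frame: square root of the mean squared atomic displacement.

    Assumes both conformations list the same atoms in the same order and are
    already superimposed; no alignment is performed here.
    """
    if len(current) != len(reference) or not current:
        raise ValueError("conformations must contain the same, nonzero number of atoms")
    total = 0.0
    for (x, y, z), (x0, y0, z0) in zip(current, reference):
        total += (x - x0) ** 2 + (y - y0) ** 2 + (z - z0) ** 2
    return math.sqrt(total / len(current))


def rmsd_series(trajectory: list[list[Vec3]]) -> list[float]:
    """RMSD of every frame relative to the first frame (assumed reference)."""
    reference = trajectory[0]
    return [rmsd(frame, reference) for frame in trajectory]


# Two-atom toy trajectory: in frame 1 the atoms move by 3 Å and 4 Å.
traj = [
    [(0.0, 0.0, 0.0), (0.0, 0.0, 0.0)],
    [(3.0, 0.0, 0.0), (0.0, 4.0, 0.0)],
]
print(rmsd_series(traj))  # second value is sqrt((9 + 16) / 2), about 3.536
```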

We can then group the molecules according to the parameters mentioned above (

,

, RMSD) and obtain five sets for

,

,

,

, and

, and compare the values of these parameters. We use the Kruskal–Wallis test because it does not assume that the data are normally distributed. This nonparametric test indicates the statistical significance of differences in the medians between sets. The test statistic has the form [21]:

$$H = \frac{12}{N(N+1)} \sum_{i=1}^{k} \frac{R_i^2}{n_i} - 3(N+1), \tag{12}$$

where $R_i$ is the sum of the ranks in the i-th sample, $n_i$ is the size of that sample, N is the total number of data points in all samples, and k is the number of groups (sets corresponding to temperatures). We compare the value of H to the critical value of the $\chi^2$ distribution with $k-1$ degrees of freedom. If the statistic exceeds this critical value, we use a nonparametric multiple-comparison test, the Conover–Iman test. The formula we employ has the form [22]:

$$\left| \frac{R_i}{n_i} - \frac{R_j}{n_j} \right| > t_{1-\alpha/2,\,N-k} \left[ \frac{S - C}{N-1} \cdot \frac{N-1-H}{N-k} \left( \frac{1}{n_i} + \frac{1}{n_j} \right) \right]^{1/2}, \tag{13}$$

where S is the sum of the squares of all ranks, $t_{1-\alpha/2,\,N-k}$ is a quantile of the Student t distribution with $N-k$ degrees of freedom, and C has the value:

$$C = \frac{N(N+1)^2}{4}. \tag{14}$$

The multiple-comparison test makes it possible to cluster the sets of molecules into those between which there is a statistically significant difference and those between which there is no such difference.
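The following sketch computes the Kruskal–Wallis statistic of Equation (12) and both sides of the Conover–Iman criterion of Equations (13) and (14). Assumptions: ties receive average ranks, H is used without a tie correction (as written in Equation (12)), and the Student t quantile is passed in by the caller, since the Python standard library has no t distribution (`scipy.stats.t.ppf(1 - alpha / 2, N - k)` is one way to obtain it). The helper names are hypothetical and the toy groups are not simulation data.

```python
import math


def midranks(values: list[float]) -> list[float]:
    """Ranks 1..N of the pooled data; tied values receive their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for m in range(i, j + 1):
            ranks[order[m]] = (i + j) / 2.0 + 1.0  # mean of 1-based positions i+1..j+1
        i = j + 1
    return ranks


def kruskal_wallis(groups: list[list[float]]) -> tuple[float, list[float], list[float]]:
    """H from Equation (12) (no tie correction), the rank sums R_i, and all pooled ranks."""
    pooled = [v for g in groups for v in g]
    ranks = midranks(pooled)
    n_total = len(pooled)
    rank_sums, start = [], 0
    for g in groups:
        rank_sums.append(sum(ranks[start:start + len(g)]))
        start += len(g)
    h = 12.0 / (n_total * (n_total + 1)) * sum(
        r * r / len(g) for r, g in zip(rank_sums, groups)
    ) - 3.0 * (n_total + 1)
    return h, rank_sums, ranks


def conover_iman(groups: list[list[float]], t_quantile: float) -> dict[tuple[int, int], tuple[float, float]]:
    """Left and right sides of Equation (13) for each pair of groups (i, j), i < j."""
    h, rank_sums, ranks = kruskal_wallis(groups)
    n_total, k = len(ranks), len(groups)
    s = sum(r * r for r in ranks)              # sum of all squared ranks
    c = n_total * (n_total + 1) ** 2 / 4.0     # Equation (14)
    s2 = (s - c) / (n_total - 1)
    pairs = {}
    for i in range(k):
        for j in range(i + 1, k):
            ni, nj = len(groups[i]), len(groups[j])
            left = abs(rank_sums[i] / ni - rank_sums[j] / nj)
            right = t_quantile * math.sqrt(
                s2 * (n_total - 1 - h) / (n_total - k) * (1.0 / ni + 1.0 / nj)
            )
            pairs[(i, j)] = (left, right)
    return pairs


# Toy data (not simulation results): three well-separated groups.
groups = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0], [7.0, 8.0, 9.0]]
h, rank_sums, _ = kruskal_wallis(groups)
chi2_crit = -2.0 * math.log(0.05)  # closed form of the 5% chi-square quantile, valid only for 2 dof (k = 3)
print(h, chi2_crit, h > chi2_crit)
for pair, (left, right) in conover_iman(groups, t_quantile=1.0).items():
    print(pair, left, right)  # right side shown per unit t quantile
```

Passing the t quantile as a parameter mirrors the approach in the Results, where only the left side of Equation (13) is tabulated and the threshold depends on the chosen significance level.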

These statistical tests describe differences between the medians of the considered sets of molecules; however, they are not informative about differences between the probability distributions. To compare two distributions, we use the Kullback–Leibler divergence from information theory. The Kullback–Leibler divergence of a distribution p from a reference distribution q is defined as:

$$D_{\mathrm{KL}}(p \,\|\, q) = \sum_{i} p_i \ln \frac{p_i}{q_i}. \tag{15}$$

The Kullback–Leibler divergence is also called the relative entropy of p with respect to q. The divergence defined by (15) is not a symmetric measure; therefore, one sometimes uses a symmetrized form, which gives the symmetric distance measure [23]:

$$D_{\mathrm{S}}(p, q) = D_{\mathrm{KL}}(p \,\|\, q) + D_{\mathrm{KL}}(q \,\|\, p). \tag{16}$$

Relative entropy is widely used, especially for classification purposes. In our work, we use it to quantify how much a given probability distribution differs from a reference distribution. This method can be viewed as a complement to the Kruskal–Wallis test: the Kruskal–Wallis test provides information about differences between medians, whereas the Kullback–Leibler divergence provides information about differences between whole distributions.
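Equations (15) and (16) translate directly into code for distributions defined on a common set of bins. In this sketch (the helper names are hypothetical), terms with $p_i = 0$ contribute nothing, and a bin with $p_i > 0$ but $q_i = 0$ makes the divergence infinite; in practice, such empty reference bins need special handling, for example a small pseudo-count. The natural logarithm gives values in nats.

```python
import math


def kl_divergence(p: list[float], q: list[float]) -> float:
    """Equation (15): D_KL(p || q) in nats for distributions on the same bins.

    Bins with p_i = 0 contribute nothing; a bin with p_i > 0 but q_i = 0 makes
    the divergence infinite.
    """
    total = 0.0
    for p_i, q_i in zip(p, q):
        if p_i == 0.0:
            continue
        if q_i == 0.0:
            return math.inf
        total += p_i * math.log(p_i / q_i)
    return total


def kl_symmetric(p: list[float], q: list[float]) -> float:
    """Equation (16): D_KL(p || q) + D_KL(q || p), symmetric in p and q."""
    return kl_divergence(p, q) + kl_divergence(q, p)


p = [0.5, 0.5]
q = [0.25, 0.75]
print(kl_divergence(p, q), kl_divergence(q, p), kl_symmetric(p, q))
```

The two printed divergences differ, which illustrates why Equation (15) is not symmetric, whereas Equation (16) gives the same value for either argument order.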

2. Results

We begin the analysis by examining the global dynamics of the molecules at different temperatures using the Flory theory briefly described in the Introduction. To estimate the exponent $\nu$ from its definition in Formula (5), we collect the outputs of molecular dynamics simulations in the temperature range of

–

. We use a temperature step of

. This range was chosen because it lies within the physiological range of temperatures. During the simulations, we can follow the structural properties of the molecules over the considered temperature range. In the albumin structure, we can distinguish 22 α-helices, which are shown in Figure 1. Within the given range of parameters, the molecules display roughly similar structures; therefore, we chose to study their dynamics at several temperatures. One important property that can be followed during the simulations is the polymer’s end-to-end vector as a function of time. We perform the analysis in two time windows: the first between 0 and 30 ns and the second between 70 and 100 ns. For each time window, we obtained the root mean square end-to-end distance, whose values are presented in Table 1.

The root mean square length of the end-to-end vector of albumin varies with temperature. To assess this variation statistically, we perform the Kruskal–Wallis test. In our work, the values of the test statistics are treated as continuous parameters, and we do not specify a statistical significance threshold. The second column of Table 2 lists the values of the statistic calculated according to Formula (12). The values of H are small, so the results are consistent with the hypothesis of equal medians.

The Kruskal–Wallis test only gives information about differences between the medians of the sets, where each set represents one temperature and its elements are root mean square end-to-end distances computed over time. For each temperature, each element of the set corresponds to a single realization of the simulation, and from this set we calculated a normalized histogram. The values of the Kullback–Leibler divergence for the mean end-to-end signals in the time window 0–30 ns are presented in Table 3, where the first column gives the reference distribution. The largest value is obtained for the distribution at temperature

with the reference distribution

.
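The histogram-based comparison described above can be sketched as follows: pool the per-realization values of all temperatures, bin them on common edges, and evaluate Equation (15) for every ordered pair, with one temperature acting as the reference. The number of bins, the floor `eps` used for empty bins, and the labels `T1`–`T3` are assumptions for illustration, and the synthetic Gaussian samples merely stand in for the root mean square end-to-end distances of individual realizations.

```python
import bisect
import math
import random


def normalized_histogram(samples: list[float], edges: list[float]) -> list[float]:
    """Fraction of samples per bin; values equal to the last edge go into the last bin."""
    n_bins = len(edges) - 1
    counts = [0] * n_bins
    for x in samples:
        b = min(bisect.bisect_right(edges, x) - 1, n_bins - 1)
        counts[b] += 1
    return [c / len(samples) for c in counts]


def kl_divergence(p: list[float], q: list[float], eps: float = 1e-10) -> float:
    """D_KL(p || q) after flooring empty bins at eps and renormalizing (assumed regularization)."""
    p = [max(v, eps) for v in p]
    q = [max(v, eps) for v in q]
    sp, sq = sum(p), sum(q)
    return sum((a / sp) * math.log((a / sp) / (b / sq)) for a, b in zip(p, q))


def kl_matrix(groups: dict[str, list[float]], n_bins: int = 10) -> dict[tuple[str, str], float]:
    """Entry (ref, other) holds D_KL(other || ref) on common, equally spaced bins."""
    lo = min(min(v) for v in groups.values())
    hi = max(max(v) for v in groups.values())
    edges = [lo + (hi - lo) * b / n_bins for b in range(n_bins)] + [hi]
    hists = {name: normalized_histogram(v, edges) for name, v in groups.items()}
    return {(ref, other): kl_divergence(hists[other], hists[ref]) for ref in hists for other in hists}


# Synthetic values standing in for per-realization RMS end-to-end distances (Å);
# the labels T1-T3 are placeholders, not the simulated temperatures.
rng = random.Random(42)
groups = {
    "T1": [rng.gauss(50.0, 2.0) for _ in range(200)],
    "T2": [rng.gauss(50.5, 2.0) for _ in range(200)],
    "T3": [rng.gauss(53.0, 2.0) for _ in range(200)],
}
matrix = kl_matrix(groups)
for (ref, other), value in sorted(matrix.items()):
    print(f"reference {ref}, distribution {other}: {value:.3f}")
```

The diagonal entries are exactly zero, as in the tables, because each histogram is compared with itself; the absolute size of the off-diagonal entries depends noticeably on the bin count and on how empty bins are treated.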

We calculate the Kullback–Leibler distance according to Formula (16); the results are presented in Table 4. This measure indicates that the largest difference is between the distribution for

and the distribution for

.

We performed the same analysis for the window 70–100 ns. The obtained values of the Kullback–Leibler divergence are presented in Table 5. The largest difference is between the distribution for

and the distribution for

. The location of the maximum has changed, and its value is larger than the maximum for the 0–30 ns window.

For the symmetric case, the results are presented in Table 6. The largest value of the symmetric measure is also found between the distribution for

and the distribution for

.

Tables 7, 8, 9, and 10 present the results of a different statistical approach, without averaging over time. Here, we take all end-to-end signals for one temperature and, at each moment of time, calculate the average over all simulated molecules only. In the next step, we calculate normalized histograms. Having obtained the numerical distributions, we calculate the Kullback–Leibler divergence between the distributions of the mean end-to-end signals. The results for the time window 0–30 ns are presented in Table 7.
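The two averaging schemes differ only in the axis over which the signals are averaged. In the minimal sketch below (hypothetical helper names, synthetic numbers), `rms_over_time` reduces each molecule’s end-to-end signal to one value, as for the sets behind Tables 3–6, whereas `ensemble_mean_signal` averages over molecules at each time step, as for Tables 7–10, and `time_window` selects a window such as 0–30 ns. All signals are assumed to share a common time grid; the resulting values can then be histogrammed and compared with Equation (15).

```python
import math


def rms_over_time(signal: list[float]) -> float:
    """One value per molecule: root mean square of its end-to-end signal over the window."""
    return math.sqrt(sum(v * v for v in signal) / len(signal))


def ensemble_mean_signal(signals: list[list[float]]) -> list[float]:
    """One value per time step: average over molecules (signals share a time grid)."""
    n_steps = len(signals[0])
    if any(len(s) != n_steps for s in signals):
        raise ValueError("all signals must have the same number of time steps")
    return [sum(s[t] for s in signals) / len(signals) for t in range(n_steps)]


def time_window(times: list[float], values: list[float], t_start: float, t_end: float) -> list[float]:
    """Values whose time stamps lie in [t_start, t_end], e.g. 0-30 ns."""
    return [v for t, v in zip(times, values) if t_start <= t <= t_end]


# Toy data (hypothetical): end-to-end distances of two molecules at four time stamps (ns).
times = [0.0, 10.0, 20.0, 40.0]
signals = [[3.0, 4.0, 3.0, 4.0], [5.0, 6.0, 5.0, 6.0]]
per_molecule = [rms_over_time(time_window(times, s, 0.0, 30.0)) for s in signals]
per_time = time_window(times, ensemble_mean_signal(signals), 0.0, 30.0)
print(per_molecule, per_time)
```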

A large value of the Kullback–Leibler divergence appears for the distribution at temperature

, where the distribution for temperature

is the reference distribution. The Kullback–Leibler distances for the mean end-to-end signals in the time window 0–30 ns are presented in Table 8; the results agree with those of the Kullback–Leibler divergence.

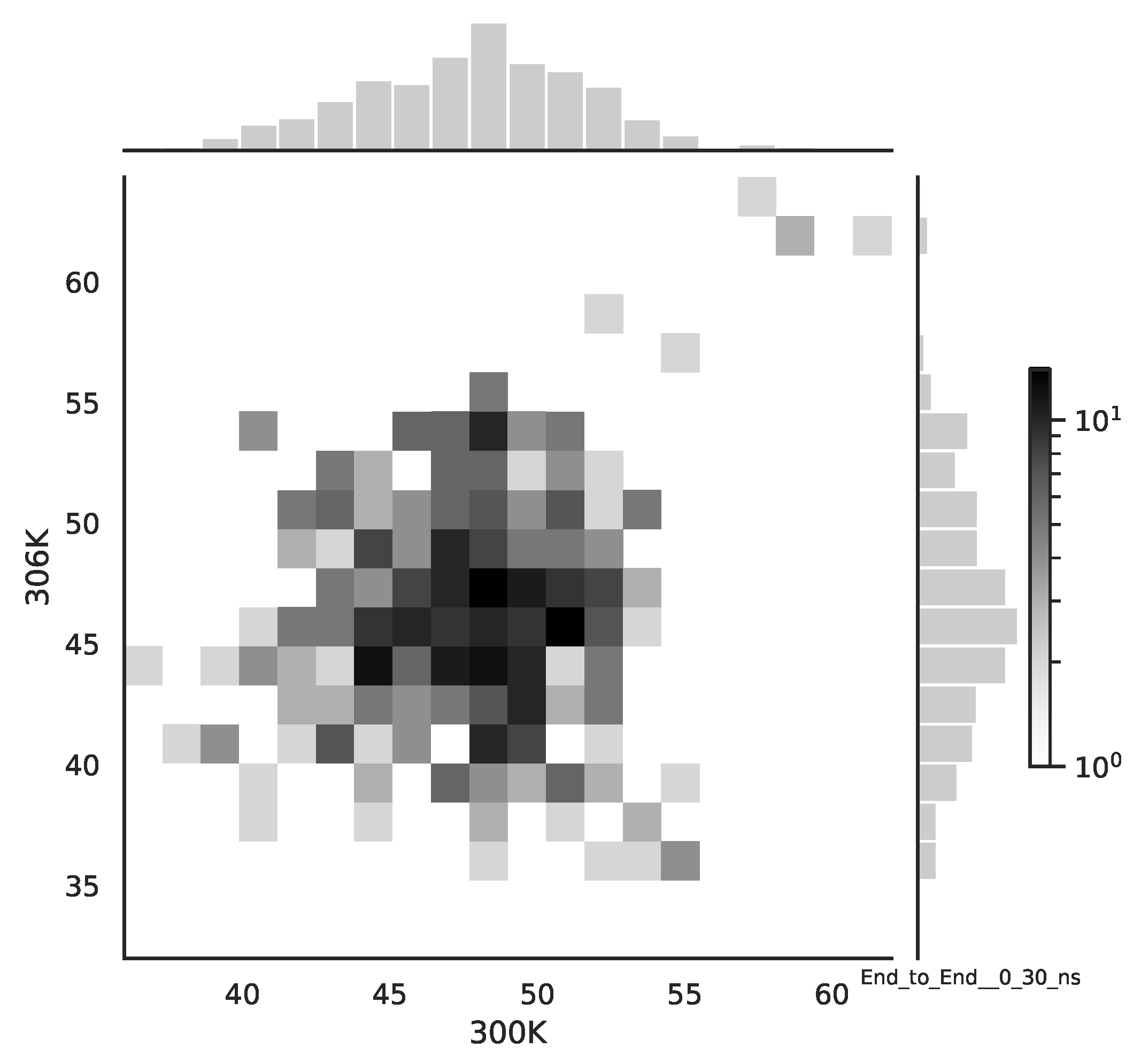

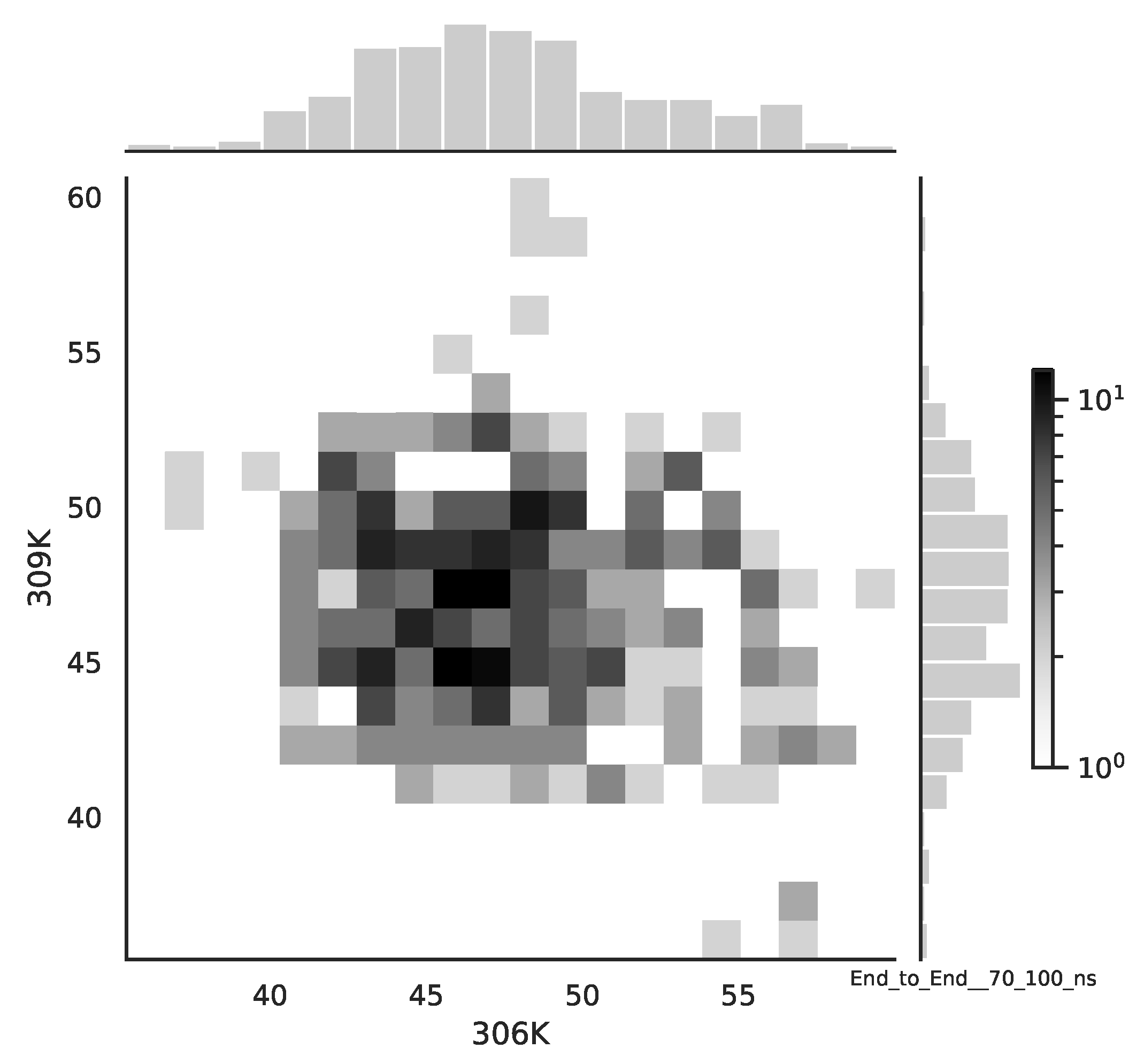

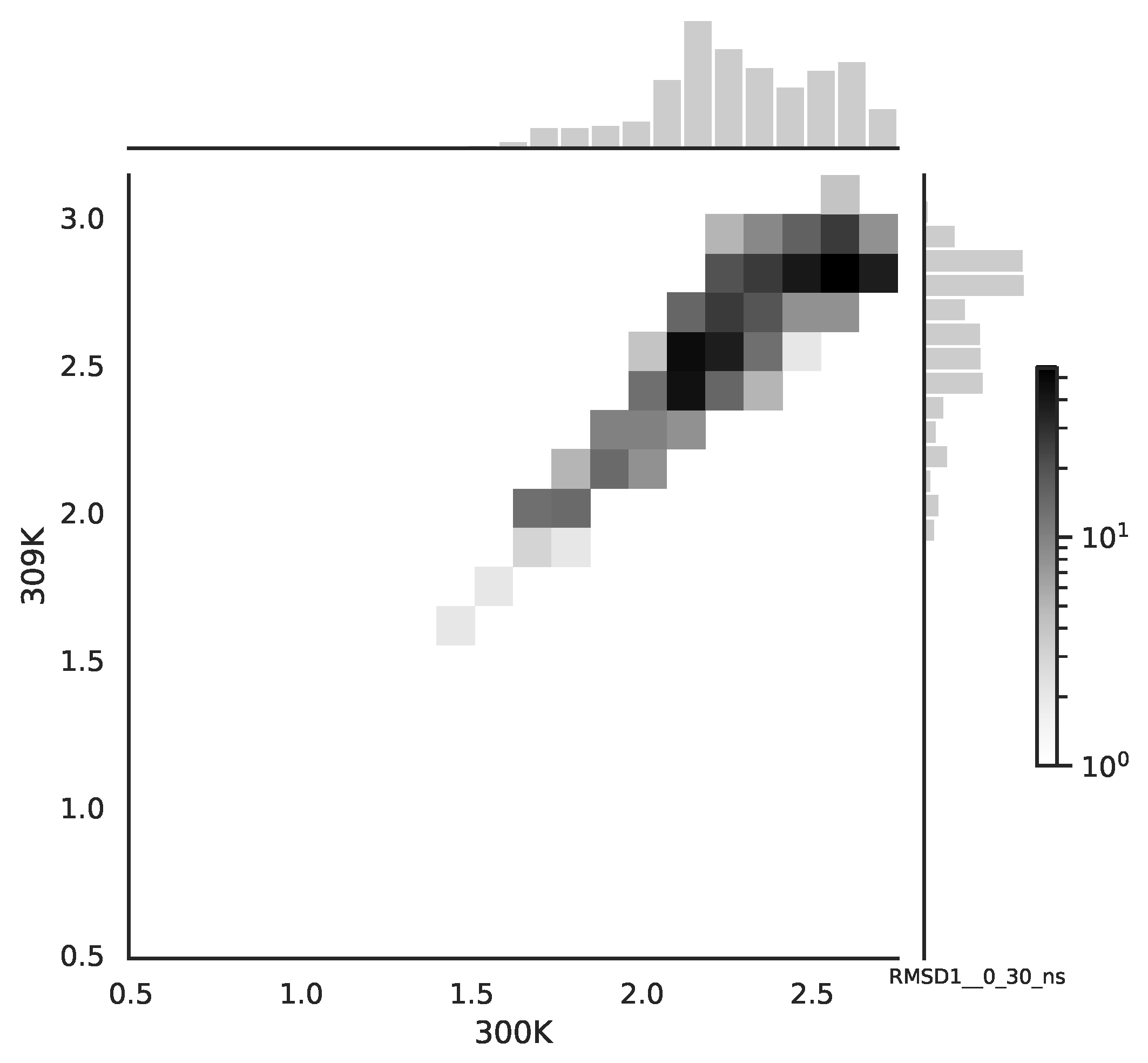

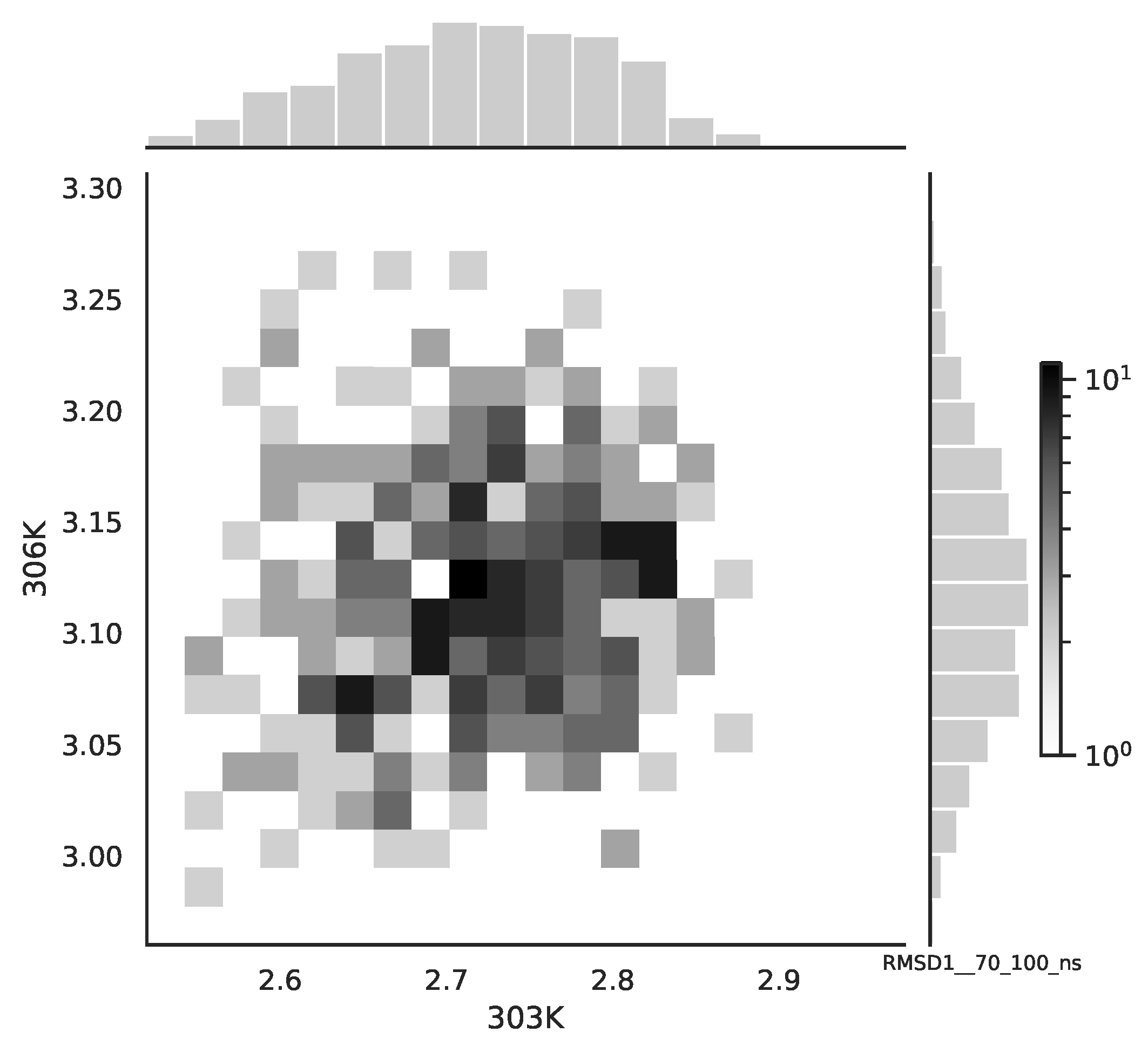

Following the copula approach presented in [24], we computed bivariate histograms of selected pairs of signals to analyze the type of cross-correlation between them. For a selected example of the mean end-to-end signals, see Figure 2 for the 0–30 ns time window and Figure 3 for the 70–100 ns time window. In the 0–30 ns case, we observe simultaneous “high” events, which indicate upper tail dependence. In the later time window, this dependence is diminished. A copula with upper tail dependence, such as the Gumbel copula, may therefore provide an appropriate model of the mean end-to-end signals in the initial simulation time window, whereas for the later window one should look for a copula without tail dependence, such as the Frank or Gaussian copula.
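As a quantitative complement to the visual inspection of bivariate histograms (a swapped-in technique, not the procedure of [24]), the tail dependence at a finite level $u$ can be estimated from normalized ranks as $\hat\lambda_U(u) = P(U > u, V > u)/(1-u)$ and $\hat\lambda_L(u) = P(U \le 1-u, V \le 1-u)/(1-u)$. Values near 1 suggest strong tail dependence (Gumbel-like in the upper tail, Clayton-like in the lower tail), while under independence both estimates are close to $1-u$. The level `0.9`, the tie handling, and the helper names are assumptions.

```python
def pseudo_observations(x: list[float]) -> list[float]:
    """Normalized ranks r / (n + 1) in (0, 1); ties are broken by position (assumption)."""
    order = sorted(range(len(x)), key=lambda i: x[i])
    u = [0.0] * len(x)
    for rank, i in enumerate(order, start=1):
        u[i] = rank / (len(x) + 1)
    return u


def empirical_tail_dependence(x: list[float], y: list[float], level: float = 0.9) -> tuple[float, float]:
    """Finite-level estimates (upper, lower) of the tail dependence coefficients.

    upper = P(U > level, V > level) / (1 - level)
    lower = P(U <= 1 - level, V <= 1 - level) / (1 - level)
    Under independence both are close to 1 - level; values near 1 indicate strong tail dependence.
    """
    u, v = pseudo_observations(x), pseudo_observations(y)
    n, tail = len(u), 1.0 - level
    upper = sum(1 for a, b in zip(u, v) if a > level and b > level) / (n * tail)
    lower = sum(1 for a, b in zip(u, v) if a <= tail and b <= tail) / (n * tail)
    return upper, lower


# Toy check with synthetic signals: identical signals are fully tail dependent,
# reversed signals have no joint extremes.
x = [float(i) for i in range(100)]
print(empirical_tail_dependence(x, x))
print(empirical_tail_dependence(x, [-v for v in x]))
```

Such finite-level estimates are noisy for short signals, so they support rather than replace the choice of a copula family.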

A similar calculation was performed for the later time window 70–100 ns. Table 9 collects the Kullback–Leibler divergences for the mean end-to-end signals in this time window.

Its maximal value is between the distribution for and the distribution for as a reference distribution.

Table 10 shows that the largest value of the Kullback–Leibler distance appears between the distributions for

and

. Comparing this result with the previous one, we see that the location of the maximal Kullback–Leibler distance has changed relative to the 0–30 ns window.

In Tables 7, 8, 9, and 10, the diagonal terms are equal to 0, since they compare a distribution of the end-to-end data with itself (same time window and temperature). Table 11 presents selected Kullback–Leibler distances between the distributions of the raw end-to-end signals in the time window 70–100 ns at the

temperature.

Since the two statistical approaches cannot unambiguously distinguish between the temperatures (or subsets of them), we conclude that the dynamics of the system are roughly similar within this temperature range. This conclusion is supported by the analysis of the Flory–De Gennes exponent.

Using Equation (5), we estimate the values of the Flory–De Gennes exponent for the albumin chain. The results are presented in Table 12. The values of the Flory–De Gennes exponent do not differ much within the examined temperature range, and they all seem to attain a value of ca.

. The power-law exponents for oligomers span a narrow range of 0.38–0.41, which is close to the value of

obtained for monomers [25,26,27].

Detailed values for the two time windows are presented in Table 13. From a global point of view, and in terms of Flory theory, the situation is quite uniform for all initial conditions within the considered temperature range. Thus, in our modeling, we see an effect of elasticity versus swelling, and this competition is preserved over the examined temperature range.

Here, too, the H values of the Kruskal–Wallis test are small, so the results are consistent with the hypothesis of equal medians.

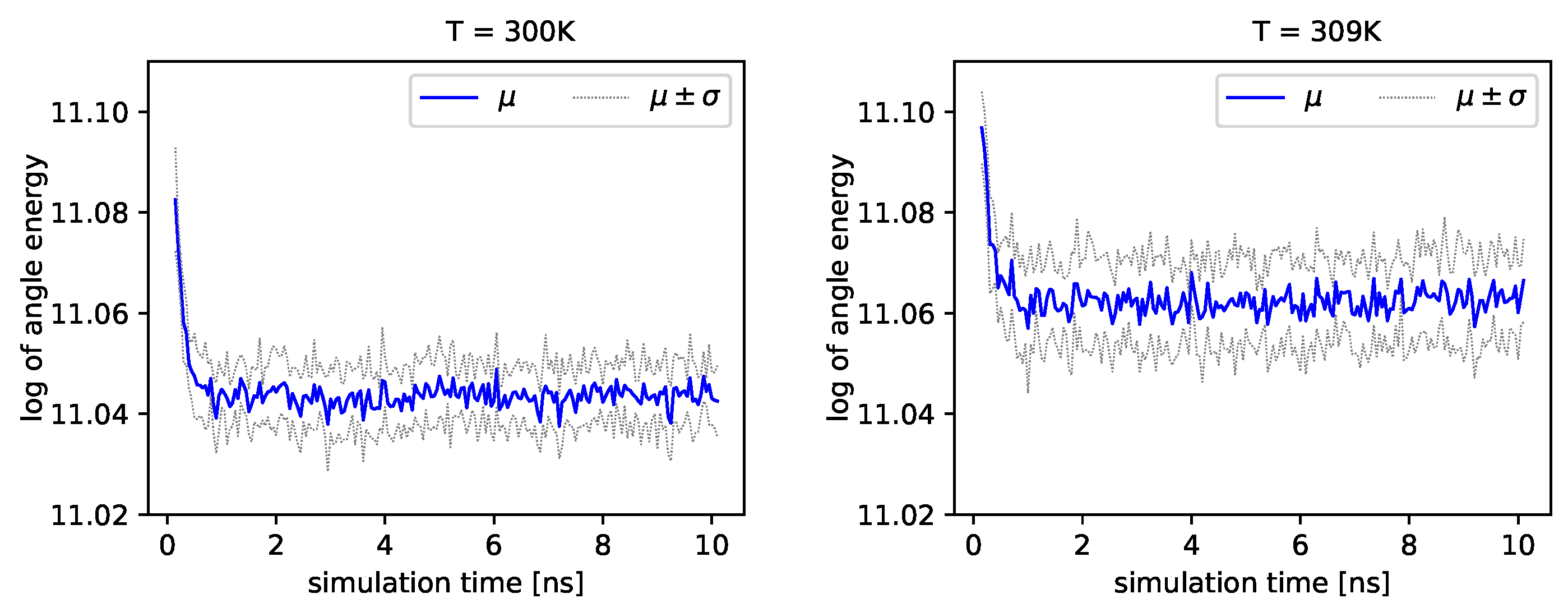

The distribution of the backbone and dihedral angles carries information about the molecule’s dynamics, as these angles are tightly connected to its elasticity. Using simulations, we can determine the angle

and the angle

(see Introduction). Next, we can determine energies that depend on these angles by employing Formula (

17). In

Figure 4 and

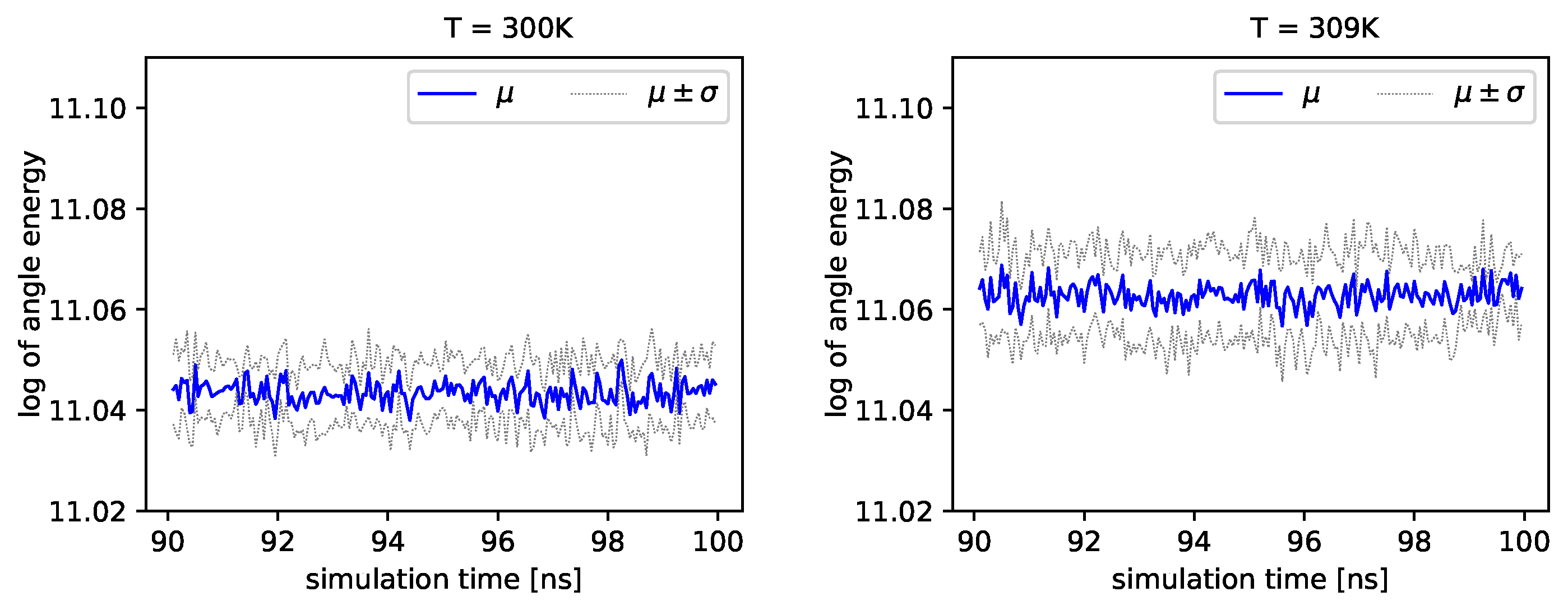

Figure 5, we present the logarithm of the angle energy component for two temperatures.

Figure 4 presents the values for the period 0–10 ns, and Figure 5 for the period 90–100 ns. The values fluctuate around their mean. Such dynamics are similar for all temperatures, but the mean energy rises slightly with increasing temperature. In Figure 5, the blue line shows the logarithm of the mean angle energy and the gray line shows the standard deviation; here, the changes in energy are much more evident.

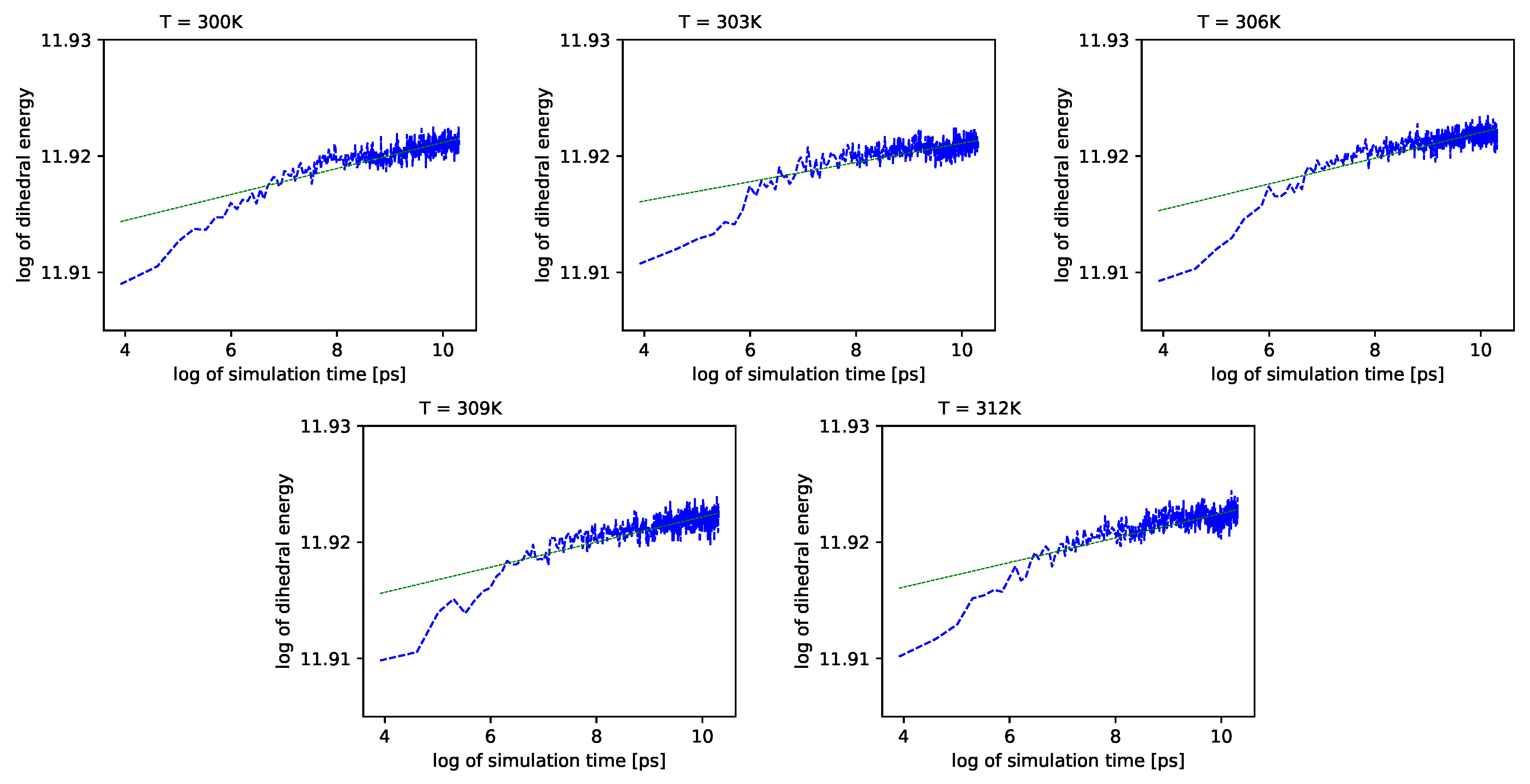

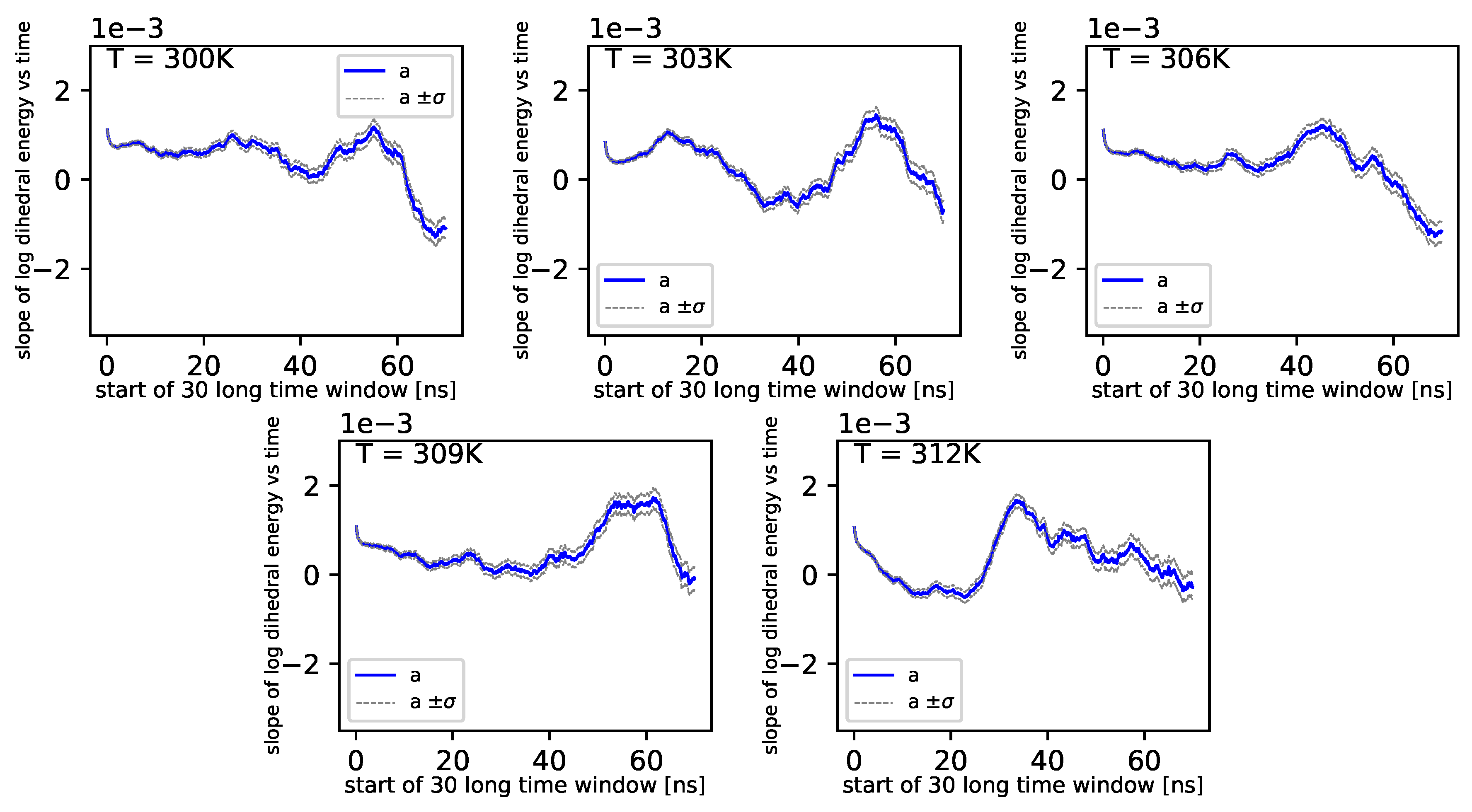

A different picture emerges from the analysis of the energy component associated with the dihedral angles

. Figure 6 shows this component (blue lines) on a double logarithmic scale, together with linear regression lines, for the period from 1 ns to 10 ns. The simulations also show that, at later times, these values exhibit almost no change with time on the log–log scale.
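The regression lines in a double logarithmic plot correspond to a least-squares fit of $\ln E = a + b \ln t$, where the slope $b$ is the power-law exponent. A minimal sketch, with a hypothetical function name, assuming strictly positive times and energies and using synthetic data rather than simulation output:

```python
import math


def loglog_fit(times: list[float], energies: list[float]) -> tuple[float, float]:
    """Ordinary least squares for ln E = a + b ln t; returns (slope b, intercept a).

    Requires strictly positive times and energies.
    """
    xs = [math.log(t) for t in times]
    ys = [math.log(e) for e in energies]
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, my - slope * mx


# Synthetic power law E = 2 t^0.1 sampled from 1 ns to 10 ns (not simulation data).
t = [float(i) for i in range(1, 11)]
e = [2.0 * ti ** 0.1 for ti in t]
slope, intercept = loglog_fit(t, e)
print(f"exponent = {slope:.3f}, prefactor = {math.exp(intercept):.3f}")
```

A small fitted slope corresponds to the weak time dependence described above.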

The dynamics of proteins are governed mainly by non-covalent interactions [28]. Therefore, it is useful to study other components of the energy, such as the Coulomb and van der Waals components (see Equation (17) and the discussion following it). For every temperature, the Coulomb component oscillates around an almost constant trend; however, the mean value of these oscillations increases with temperature. The opposite holds for the van der Waals component: like the Coulomb component, it follows an almost constant trend at each selected temperature, but the mean value of its oscillations decreases as the temperature increases. Since the Coulomb and van der Waals components obey such simple dynamics, we focus on the more interesting dihedral and angular parts of the energy.

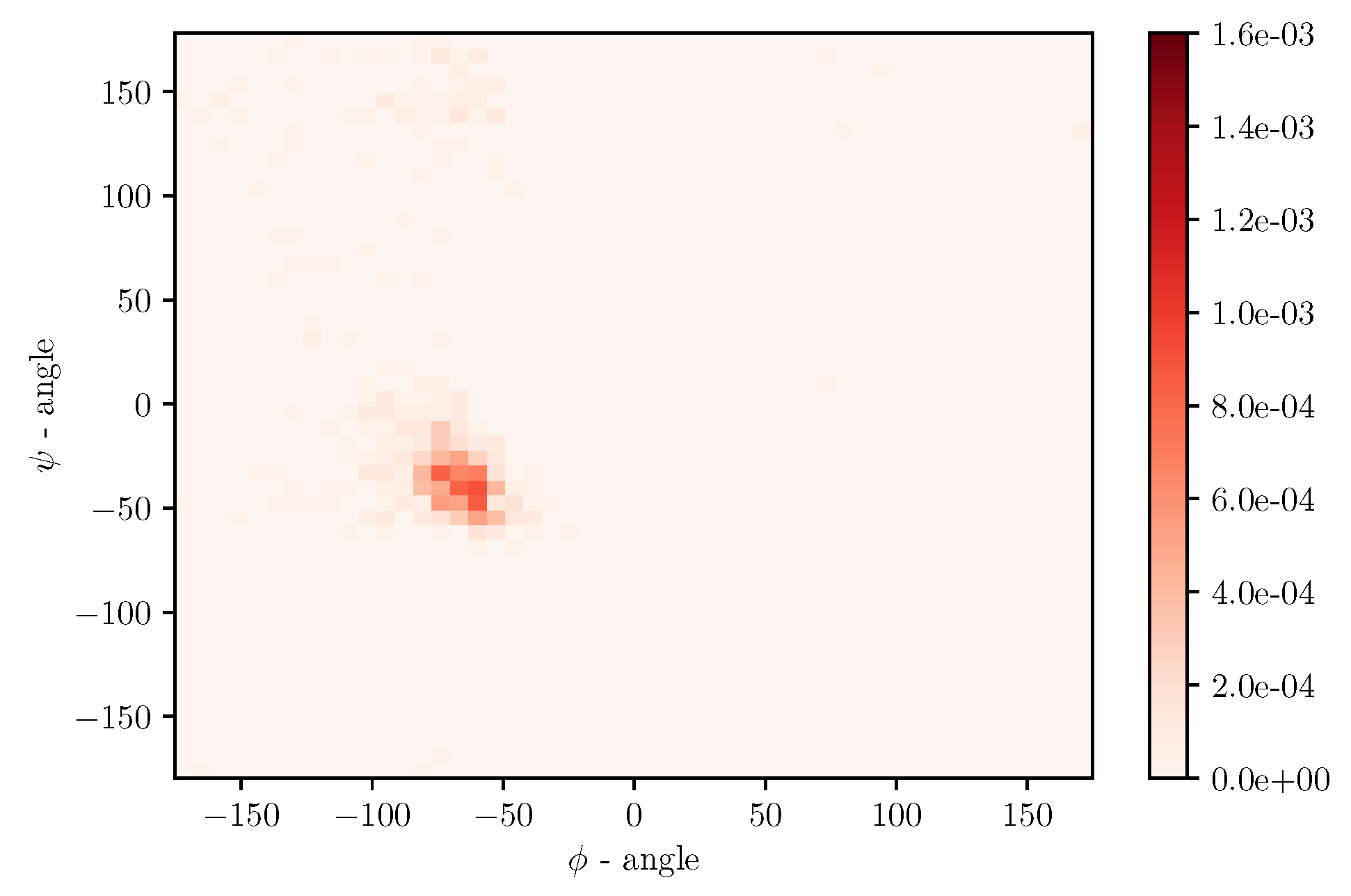

Simulations of albumin dynamics can be used to obtain frequency distributions of angles

and

. An exemplary 2D histogram of such a distribution is presented in Figure 8. Most of the data are concentrated in a small region of the angle space, and similar behavior is observed for other temperatures and simulation times. This appears to be because the structure of the investigated protein is quite rigid, allowing only small angular movements. Nevertheless, we can observe variations in the conformational entropy estimated from such histograms (see Figure 9).

According to formulas connecting the probability distribution of angles and entropy [

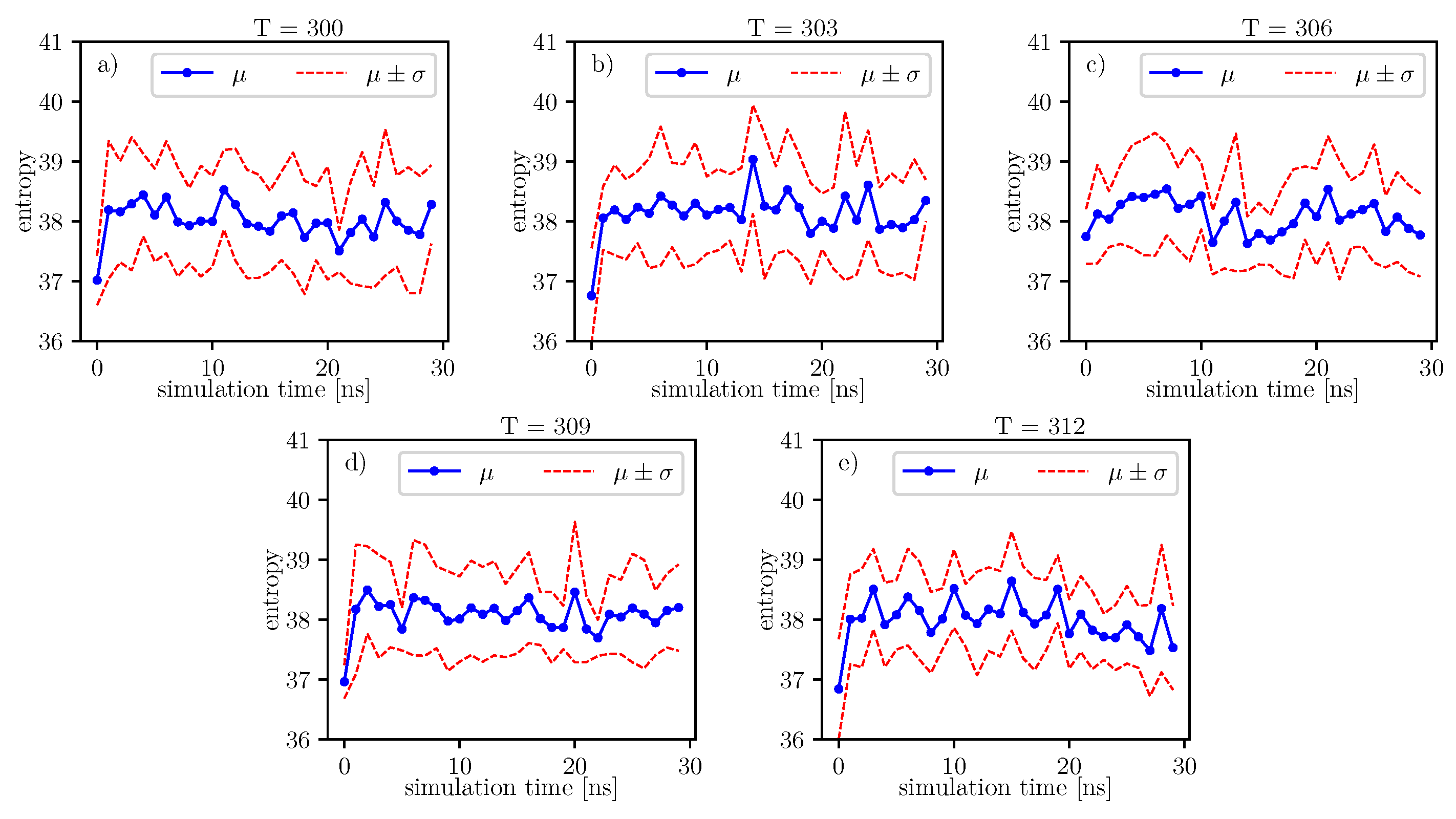

17], we can follow changes of entropy in time and temperature. Values of conformational entropy for various temperatures and with simulation time 0–30 ns are presented in

Figure 9, where the blue line represents the course of the mean entropy value over time. The calculations take into account nine simulations, and one dot presents one arithmetic mean of entropy. Here, we can see that during simulations, some oscillations can appear. Therefore, for each temperature, we perform Fisher’s test. Results of this test are presented in

Table 14.
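A minimal sketch of an entropy estimate from the 2D angle histogram, assuming the Shannon (Gibbs) form $S = -\sum_b p_b \ln p_b$ over occupied bins, in units of $k_B$. The exact formulas of [17], the bin count, and the angle ranges (backbone angle in [0°, 180°], dihedral angle wrapped to [−180°, 180°)) are assumptions here, and `angle_histogram_entropy` is a hypothetical name.

```python
import math


def angle_histogram_entropy(theta_deg: list[float], phi_deg: list[float], n_bins: int = 36) -> float:
    """Shannon entropy S = -sum p ln p (in units of k_B) of a 2D angle histogram.

    Assumed ranges: backbone angle theta in [0, 180] degrees, dihedral phi wrapped to [-180, 180).
    Only occupied bins contribute, since p ln p -> 0 as p -> 0.
    """
    width_theta, width_phi = 180.0 / n_bins, 360.0 / n_bins
    counts: dict[tuple[int, int], int] = {}
    for th, ph in zip(theta_deg, phi_deg):
        i = min(max(int(th // width_theta), 0), n_bins - 1)
        j = min(int(((ph + 180.0) % 360.0) // width_phi), n_bins - 1)
        counts[(i, j)] = counts.get((i, j), 0) + 1
    n = sum(counts.values())
    return -sum((c / n) * math.log(c / n) for c in counts.values())


# Four synthetic angle pairs falling into four different bins give S = ln 4.
theta = [10.0, 10.0, 100.0, 100.0]
phi = [-170.0, 10.0, -170.0, 10.0]
print(angle_histogram_entropy(theta, phi), math.log(4))
```

Evaluating this for each frame (or short block of frames) gives an entropy time series of the kind that is then passed to Fisher’s test.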

A simple comparison of the p-values in Table 14 shows that the lowest value is located around

degrees. According to Formula (6), the mean entropy is treated as a stochastic process, i.e., partially deterministic and partially random. In our work, we treat the p-value as a parameter that indicates the tendency toward the null hypothesis $H_0: A = 0$: the lower the p-value compared with another case, the stronger the tendency in favor of the alternative hypothesis $H_1: A > 0$.

More detailed calculations of the p-values for this case are presented in Table 15. At

, there is a local minimum of the mean p-value. This indicates that at

, the tendency toward the alternative hypothesis $H_1: A > 0$ is stronger than at

and

. However, for

, the mean p-value is the smallest. The mean p-value is almost the same for

and

. This means that both temperatures exhibit nearly the same tendency for the hypothesis:

. Such regularities can be seen in

Figure 9.

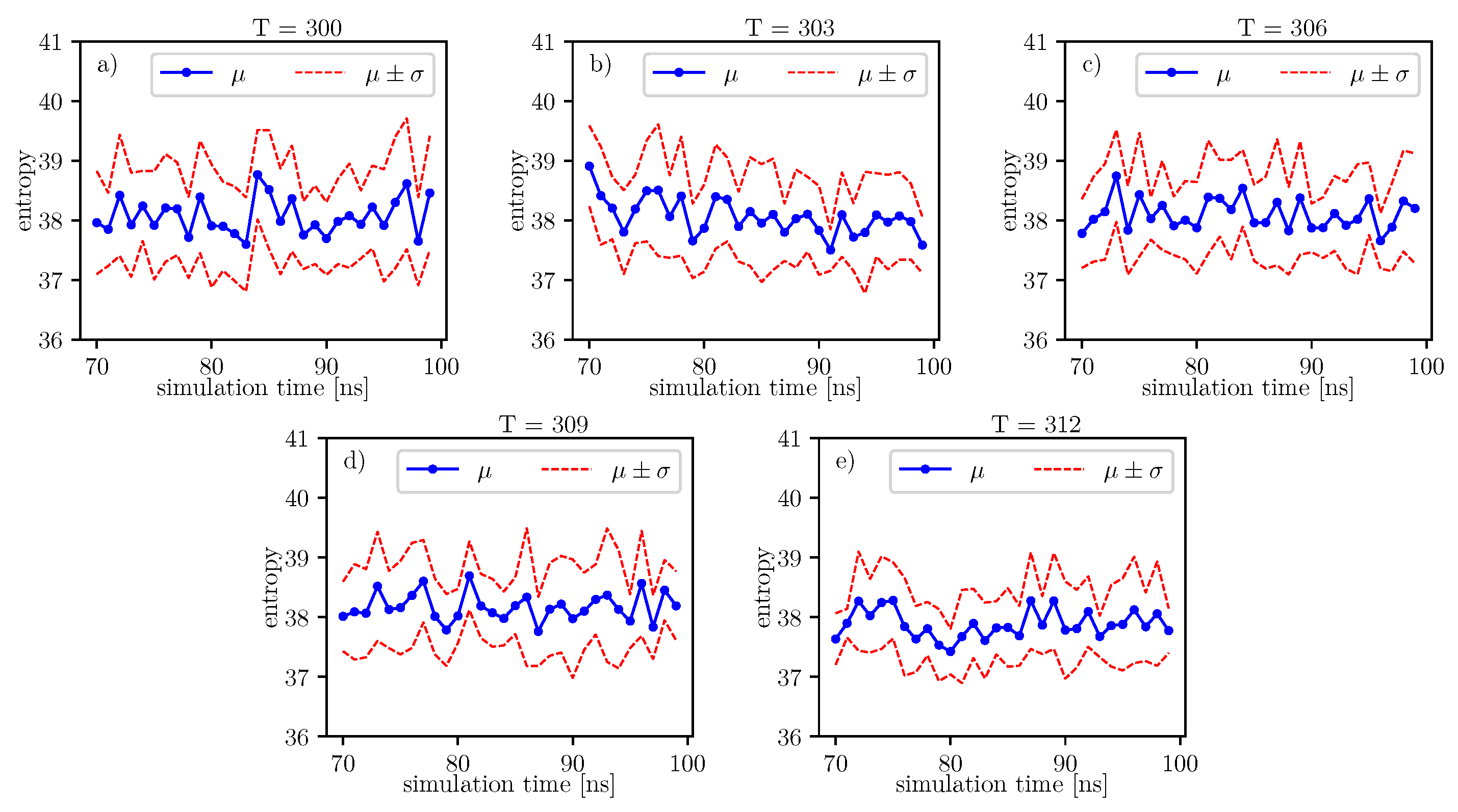


The same analysis can be performed on the data presented in Figure 10. The results of this analysis for the individual time series of entropy are presented in Table 16. For these data, we also observe a local minimum of the p-value for

. The maximum occurs at

. Thus, the tendency toward the null hypothesis $H_0: A = 0$ is strongest here compared with the other analyzed temperatures. This maximum of the p-value for

is also present for the previous window.

Formula (11) can be evaluated at each moment of the simulation, which yields a time series. To obtain one value per molecule, we calculate the time average of this series for each molecule. In the next step, we perform the same statistical analysis to derive the values of the H statistic. For the time window 0–30 ns, the Kruskal–Wallis statistic is

; however, for the time window 70–100 ns, the value of this statistic is

. Because we treat the statistic as an ordinary parameter, we do not state whether the results are significantly different. In our approach, we conclude that the tendency toward the alternative hypothesis of unequal medians is much stronger for the time window 70–100 ns. Since this tendency is stronger in the second case than in the first, we decided to perform a multiple-comparison test, namely the Conover–Iman test. We treat the t quantile as a parameter that depends on the assumed significance level; therefore, in Table 17, we give only the left side of Equation (13).

Here, we compare the medians of the different sets. Using the values from Table 17 and calculating the right side of Equation (13) for

, we find that some sets differ from another cluster of sets: the cluster of sets for temperatures

,

, and

differs from the set for temperature

. Unfortunately, the set for temperature

is between these two.

We can compare the above statistical results with other methods, such as the Kullback–Leibler measures presented in the Introduction. Table 18 lists the values of the Kullback–Leibler divergence; the largest value is between the distribution for

and the distribution for

as a reference distribution.

The calculation of the Kullback–Leibler distance is presented in

Table 19. We can conclude that the

case differs most from all other cases; thus, the dynamics of the system in the temperature range of 306 K–309 K appear to differ most from the dynamics in the other temperature ranges.

The Kullback–Leibler divergence and distance for the time window 70–100 ns are presented in Table 20 and Table 21. The largest value is between the distributions for

and

.

In a further analysis, we compare the distributions of the RMSD signals, obtained in the same way as those of the mean root square end-to-end signals. The results for the Kullback–Leibler distance are presented in Table 22 and Table 23. For the time window 0–30 ns, the largest difference is between the distribution for

and the distribution for

. For the time window 70–100 ns, the largest value is for

and

.

All tables of the Kullback–Leibler measures contain zero values, which correspond to comparisons of data from the same time window at the same temperature. The results for the raw data at

are presented in Table 24. The Kullback–Leibler distances are widely dispersed, and a similar dispersion is observed at other temperatures.

Figure 11 shows simultaneous small-valued events in the 0–30 ns simulation time window, whereas there are no such events in the 70–100 ns window (see Figure 12). Here, a copula with lower tail dependence, such as the Clayton copula, may provide an appropriate model of the RMSD for the 0–30 ns simulation time window.

3. Discussion

Albumin plays an essential role in many biological processes, such as the lubrication of articular cartilage. Hence, knowledge of its dynamics under various physiological conditions can help us better understand its role in reducing friction. From a general point of view, albumin behaves stably regardless of temperature. The angle energy of the protein fluctuates similarly at all temperatures (see Figure 4 and Figure 5), although its mean value rises monotonically with temperature. On the other hand, the size exponent $\nu$ fluctuates with temperature. The Kruskal–Wallis test shows that these fluctuations yield small values of the H statistic and are therefore consistent with the hypothesis of equal medians.

Further, Figure 6 and Figure 7 show that the dihedral energy seems to obey a power-law-like relation (at least over some range of simulation times), although the scaling exponent is relatively small. In Figure 7, this relation can be seen in the temperature range from 306 K to 309 K. The exponent does not change much over time t, which suggests stable behavior. We also consider the conformational entropy of the system; for plots of the entropy versus simulation time, see Figure 9 and Figure 10. Each graph shows a pattern that appears to be oscillatory. Fisher’s test quantifies this property through the p-value. If the p-value for one entropy time series is relatively small compared with the p-values for other time series, then the evidence against the null hypothesis $H_0: A = 0$ is stronger in that case. In Table 14, we observe a local minimum of the p-values in the temperature range from 303 K to 309 K. A similar effect can be observed in Table 15 when the mean p-value is calculated for each column. We can also observe a local minimum at around

. A smaller mean p-value is seen for

and

. This means that both temperatures exhibit a similar tendency to favor the hypothesis

. This can also be seen in Figure 9, where for temperatures

,

, and

, the regularity of the periodicity increases more than at other temperatures. The other tests, namely the Kruskal–Wallis test followed by the Conover–Iman multiple-comparison test, the Kullback–Leibler distance, and the Kullback–Leibler divergence, give consistent results for the mean RMSD of the protein of interest.

This globular protein is soluble in water and can bind both cations and anions. Analysis of Figure 1 and Figure 8 shows that albumin’s secondary structure consists mainly of standard α-helices. Charged amino acids (AA), especially aspartic and glutamic acid (25% of all AA), make up a large share of albumin’s structure, as do the hydrophobic leucine and isoleucine (16%). This composition enables the protein to preserve its conformation through intramolecular interactions as well as interactions with the solvent. Two main factors are at play here, namely hydrophobic contacts and hydrogen bonds (which also form between the protein and water). The large number of charged AA results in a considerable number of inter- and intramolecular hydrogen bonds, whereas the hydrophobic AA have a more substantial impact through the hydrophobic effect, which stabilizes the protein’s core. As shown by Rezaei-Tavirani et al. [29], an increase in temperature within the physiological range results in conformational changes in albumin that expose more positively charged residues. This, in turn, results in a lower concentration of cations near the molecule. Albumin is known to contribute largely to the osmotic pressure of plasma, a property strongly influenced by the presence of ions. An increase in temperature reduces the osmotic pressure of blood, which in feverish conditions can lead to a higher concentration of urine. Because water is the main component of blood and has a high heat capacity, the increase in urine concentration results in better removal of heat from the body [29]. The differences in dynamics between temperatures shown in the present study could indicate changes in albumin’s binding affinity to other SF components and thus a shift of its role in biolubrication toward an anti-inflammatory one. However, owing to the high complexity of the fluid, more research is needed.

These chemical properties result in a competition between elasticity and swelling for albumin chains immersed in water. If the elasticity of the chain fully dominated over the swelling-induced counterpart (interactions of the polymer beads with water, accompanied by network/bond creation), the exponent would have a value of

. If, in turn, the reverse effect applied, the exponent would approach the value of

[30] (see the first two equations therein). As a consequence, and, to our knowledge, not reported in any other study, the exponent obtained from the simulations in the examined temperature range appears to be close to the arithmetic mean of the two exponents mentioned, namely:

, thus pointing to

. The difference of about

very likely comes from the fact that the elastic effect on albumin prevails over the swelling-assisted counterterm(s); cf. the Hamiltonian used for the simulations. The exponent

is itself called the De Gennes exponent, and it is reminiscent of the De Gennes dimension-dependent gelation exponent

, see [30] (Equation (15) therein;

-case). It suggests that albumin’s elastic effect, centering at about

, is fairly well supported by the internal network and thus favors the creation of bonds, as revealed by the present study. The overall framework is well substantiated in terms of the scaling concept [3,7]. Regular oscillations of the entropy suggest that the system oscillates around an equilibrium state. Furthermore, the Flory–De Gennes exponent appears to be unchanged in both observation windows. Therefore, we expect the system to be near equilibrium, and we expect its dynamics at longer simulation times to be similar to those presented here. The AMBER force field has been reported to overstabilize helices in proteins; therefore, our future work will examine the effect of the force field and water model used.