Abstract

We propose a method to derive the stationary size distributions of a system, and the degree distributions of networks, using maximisation of the Gibbs-Shannon entropy. We apply this to a preferential attachment-type algorithm for systems of constant size, which contains exit of balls and urns (or nodes and edges for the network case). Knowing mean size (degree) and turnover rate, the power law exponent and exponential cutoff can be derived. Our results are confirmed by simulations and by computation of exact probabilities. We also apply this entropy method to reproduce existing results like the Maxwell-Boltzmann distribution for the velocity of gas particles, the Barabasi-Albert model and multiplicative noise systems.

Keywords:

complex networks; growth process; fluctuation scaling; Gibbs-Shannon entropy; scalefree distribution

PACS:

02.50.Fz; 05.10.Gg; 05.40.-a; 05.65.+b

1. Introduction

The famous model by Yule [1,2] and its analogue for networks, the Barabasi and Albert (BA) model for scalefree networks [3], have been widely used to describe phenomena and processes that involve scalefree distributions. The latter are a ubiquitous phenomenon found, for example, in word frequency in language [4] and web databases [5], city and company sizes [6] and high-energy physics, and they have been modeled with different approaches, for example, in References [7,8]. When occurring in the degree distribution of networks, power laws affect in particular the dynamics on a network, for example, of protein interaction networks [9], brain functional networks [10], email networks [11], and various social networks [12] such as respiratory contact networks [13]. An advantage of the Yule model and the BA-model is that their interpretation of the ‘preferential attachment’ process (in which nodes preferentially attach to existing nodes with high degree) is simple and plausible, and that they generate a scalefree degree distribution, whose exponent can be calculated analytically given the rate of introduction of nodes. Therefore simple preferential attachment continues to be widely used to simulate networks for spreading processes. In addition, it has been extended [14,15,16,17,18] and the process has been generalized [19]. The exponent of the degree distribution in the BA-model can be derived starting from a master equation [20]. This ansatz is solvable for constantly growing systems, but becomes too complicated when a system can also lose nodes and edges. However, the assumption of continuous growth is often not fulfilled in real world examples, especially for social systems, because people also exit the system or network.

Here, we present a method to predict the scaling exponent and the exponential cutoff of a size/degree distribution by maximisation of the Gibbs-Shannon entropy, building on the work in Reference [21]. This method is applicable to a variety of models that do not require the hypothesis of continuous growth. We introduce it using the example of a micro-founded model for the size distribution of urns (filled with balls), which preserves a stationary size distribution by deletion of balls, and/or by deletion of urns. Like the Yule process, this algorithm can be extended to networks, where links and nodes are entering and exiting the network.

Our example model also explains another scaling phenomenon, a ‘tent-shaped’ probability density for the aggregate growth rate, which often occurs in combination with a scalefree distribution in many real-world examples [22,23,24,25,26,27,28,29,30,31,32,33]. Tent-shaped growth rate probabilities are also generated by other preferential-attachment models like BA, but they are not produced by other families of models for scalefree distributions.

2. A Preferential-Attachment Algorithm for A Stable Size Process

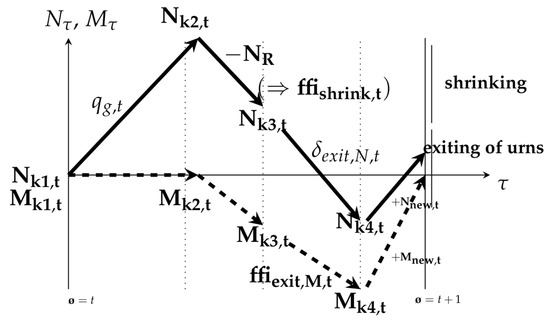

We consider a system of urns and balls, and extend it to nodes and edges in Section 4.3. Each of the M urns is filled with balls, and their sizes sum to a variable number . The dynamics are framed in terms of urns receiving and losing balls, in discrete time steps k. The key features are that M is constant; the average of N is conserved over time; the expected value of size change, for individual urns is 0 (though is not stationary), and that every ball has the same chance of attracting another ball and of vanishing. We give now the succession of events in one iteration (where we refer to as the number of balls at the beginning of an iteration). A scheme with more details is given in Figure A2 in Appendix B.

- Growth of urns: every ball has probability of attracting another ball from a reservoir. Let be the number of new balls in urn i in iteration t; is binomial with mean , such that the urn grows on average to . To ensure that after a full iteration of steps 1–4 is stationary (but not fixed), after the first time step, is adjusted to , such that the expected size after growth is always .

- Shrinking of urns: every ball has probability of disappearing, which is adjusted to as a result of the growth step 1, such that the expectancy after shrinking equals the initial size . Let be the number of disappearances of urn i; is a random variable with a binomial distribution with mean . The system shrinks in the number of balls, and some urns might be left with 0 balls (which can be interpreted as exiting urns).

- Exit of urns (and balls): every urn has probability of exiting, that is, being set to size 0, so the system loses balls.

- Entry of urns (and balls): Urns that have lost all their balls due to steps (2) or (3) are replaced by urns that contain 1 ball, so that M is strictly conserved after one iteration of steps 1–4, while the number of balls fluctuates around its conserved average.

Even if step 3 is omitted, some urns will exit, as urns can vanish by losing all their balls. Steps 3 and 4 do not affect the number of urns M, but may leave the system with a net loss or gain of balls, compared to the beginning of step 1. To conserve the average sum of balls in the system after growth, , the probability to attract a new ball from the reservoir is adjusted for the next iteration. A scheme of how the number of urns and balls change in steps 1–4 is given in Figure A2 in Appendix B.
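The four steps above can be sketched as a short simulation. This is only an illustration under stated assumptions, not the authors' implementation: the names `iterate`, `simulate`, `q0`, `exit_prob` and `n_target` are hypothetical, the shrink probability is taken as s = q0/(1 + q0) so that growth followed by shrinking leaves the expected size unchanged, and the growth probability is assumed to be re-adjusted each iteration so that the expected total after growth stays at `n_target * (1 + q0)`.

```python
import numpy as np

def iterate(sizes, q0, n_target, exit_prob, rng):
    """One iteration of steps 1-4 on an integer array of urn sizes (all >= 1)."""
    n_now = sizes.sum()
    # Assumed adjustment rule: choose q_t so that the expected total after
    # growth is n_target * (1 + q0), whatever the current total is.
    q_t = min(max(n_target * (1.0 + q0) / n_now - 1.0, 0.0), 1.0)
    # Step 1, growth: every ball attracts one new ball with probability q_t.
    sizes = sizes + rng.binomial(sizes, q_t)
    # Step 2, shrinking: every ball vanishes with probability s, chosen so
    # that (1 + q0) * (1 - s) = 1 and the expected total returns to n_target.
    s = q0 / (1.0 + q0)
    sizes = sizes - rng.binomial(sizes, s)
    # Step 3, exit: every urn is emptied with probability exit_prob.
    sizes = np.where(rng.random(sizes.size) < exit_prob, 0, sizes)
    # Step 4, entry: empty urns are replaced by urns holding one ball.
    sizes = np.where(sizes == 0, 1, sizes)
    return sizes

def simulate(m_urns=2000, mean_size=10, q0=0.1, exit_prob=0.01, steps=200, seed=0):
    """Run the process from equal-sized urns and return the final sizes."""
    rng = np.random.default_rng(seed)
    sizes = np.full(m_urns, mean_size, dtype=np.int64)
    n_target = sizes.sum()
    for _ in range(steps):
        sizes = iterate(sizes, q0, n_target, exit_prob, rng)
    return sizes

final_sizes = simulate()
```

Setting `exit_prob=0` omits step 3; a histogram of `final_sizes` on double logarithmic axes can then be compared with the size distributions discussed in Section 4.1.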

Possible Cases

This general process can be reduced to two limiting scenarios with the same growth but different shrinking mechanisms, and their combination. These are: (I) No deletion of non-empty urns. (II) Urns can only grow and do not shrink, but exit (with their balls) at a fixed rate and get replaced by urns of size 1. (III) A combination of both.

- (I) Urns do not exit (step 3 is omitted), that is, the exit probability of urns is zero. For an urn i, the probability distribution of its size after a growth-and-shrink cycle can be written as a discrete Gaussian centered around its current size, with a standard deviation (Equation (1)) that scales with the urn size with exponent 0.5 (see Equations (A1)–(A3) in Appendix A).

- (II) Urns do not shrink (step 2 is omitted). At each step a fraction of urns is deleted and replaced by urns of size 1, which means that the number of exiting balls varies more strongly. With the exit probability, the urn size in the next time step is 1 (if one thinks of the replacing urn as being the same urn); otherwise, the urn grows by a binomially distributed number of balls, whose standard deviation (Equation (2)) again scales with the urn size with exponent 0.5. The most probable outcome is the maximum of the binomial distribution (unless its probability is lower than the exit probability), but that is not the expected size in the next time step, which is again precisely the current size (see Figure 2 left column with examples).

- (III) Mixed case. Steps 2 and 3 can be combined such that some balls disappear from the system due to shrinking of urns, and some because urns exit together with their balls. The exiting urns have on average the median size of all urns in the system, so on average a fraction of balls exits with them. The turnover rate can then be defined as the number of balls that get removed through exit of urns, normalized by the total number of balls that get removed in one time step.
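The square-root scaling of the standard deviation stated for case (I) can be checked numerically. The following is a Monte Carlo sketch under the assumption that every ball attracts another ball with probability q and that every ball then vanishes with probability s = q/(1 + q); the function names `next_size_std` and `fitted_exponent` are hypothetical.

```python
import numpy as np

def next_size_std(n, q, samples, rng):
    """Sample standard deviation of an urn's size after one grow-and-shrink cycle."""
    s = q / (1.0 + q)  # keeps the expected size unchanged: (1 + q) * (1 - s) = 1
    grown = n + rng.binomial(n, q, size=samples)   # growth step
    final = grown - rng.binomial(grown, s)         # shrink step
    return final.std()

def fitted_exponent(q=0.1, samples=100_000, seed=1):
    """Slope of log(std) against log(size), estimated over several urn sizes."""
    rng = np.random.default_rng(seed)
    sizes = np.array([10, 30, 100, 300, 1000])
    stds = np.array([next_size_std(n, q, samples, rng) for n in sizes])
    slope, _ = np.polyfit(np.log(sizes), np.log(stds), 1)
    return slope

beta_hat = fitted_exponent()
```

Because the next size is a sum of independent contributions of the individual balls, the fitted slope `beta_hat` should lie close to 0.5 for any q.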

3. Maximum Entropy Method

To derive the stationary size distribution, we use the Maximum Entropy Principle (MEP) [21,34], which can be defined as:

Suppose that probabilities are to be assigned to the set of k mutually exclusive possible outcomes of an experiment. The principle says that these probability values are to be chosen such that the (Shannon) entropy of the distribution , that is, the expression attains its maximum value under the condition that P agrees with the given information [35].
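As a minimal illustration of the quantity being maximized, the sketch below evaluates the Shannon entropy of two candidate distributions over four outcomes. The helper name `shannon_entropy` and the example distributions are hypothetical; with no information beyond normalization, the uniform distribution attains the maximum value ln 4.

```python
import math

def shannon_entropy(p):
    """Entropy -sum p ln p (in nats) of a discrete distribution; 0 ln 0 counts as 0."""
    return -sum(x * math.log(x) for x in p if x > 0)

uniform = [0.25, 0.25, 0.25, 0.25]
skewed = [0.7, 0.1, 0.1, 0.1]

h_uniform = shannon_entropy(uniform)  # equals ln 4
h_skewed = shannon_entropy(skewed)    # strictly smaller than ln 4
```

Additional information, such as a fixed mean, restricts the set of admissible distributions, and the maximum is then taken within that set.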

We use as given information the probabilities of every urn i to change size. For urns that do not exit, the probability is either Gaussian (case I) or binomially distributed (case II), and their associated entropies are approximated by . This term becomes for case (I) using (1), or for case (II) using (2), which both have a standard deviation that scales as . The individual Gaussian or binomial probability distributions are themselves maximum entropy distributions [36], under the constraint that the expectation of change is zero. If the are maximal at stationary state, then needs to be stationary. are generated by the same updating rules as , and according to the Maximum Entropy Principle, needs to agree with the given information about . (Should not be relevant then it would cancel out in the entropy maximisation step, as it is the case for a system shown in Section 4.4). Formulated differently, the size distribution maximizes entropy under the constraint . Subtracting the constant from , we can use as sum of entropies

A second constraint is the conservation of the expectation of individual urn size , or summed over all urns i, which can be written as . (The precise number fluctuates around , in analogy to the total energy of a gas described in the canonical ensemble.) The Lagrangian function for maximizing entropy of the urn size distribution is

To determine the distribution that maximizes S, we calculate and set it to 0, leading to

with . This equation can be solved using , and , which gives and (with the upper incomplete Gamma function). For the constant in Equation (5) becomes , if urn sizes n can take values in . Knowing K, the exponent can be determined from the condition . In continuous approximation this yields . This result is independent of q and for simplifies to

Without exponential cutoff, the exponent depends only on C, which is the logarithm of the geometric mean of urn sizes.
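The dependence of the exponent on C alone can be illustrated with a standard result for a continuous power law, which may correspond to the simplified expression above. Assuming a density proportional to x^(-a) on [1, ∞) with no cutoff, the mean of ln x equals 1/(a − 1), so that a = 1 + 1/C. The sketch checks this by inverse-transform sampling; the symbol a and the function names are hypothetical, and discrete urn sizes deviate from this continuous approximation.

```python
import math
import random

def exponent_from_mean_log(c):
    """Exponent of a continuous power law on [1, inf) whose mean log-size is c."""
    return 1.0 + 1.0 / c

def sample_power_law(alpha, size, rng):
    """Inverse-transform samples from the density (alpha - 1) * x**(-alpha), x >= 1."""
    return [(1.0 - rng.random()) ** (-1.0 / (alpha - 1.0)) for _ in range(size)]

rng = random.Random(0)
xs = sample_power_law(2.5, 200_000, rng)
c_hat = sum(math.log(x) for x in xs) / len(xs)  # log of the geometric mean
alpha_hat = exponent_from_mean_log(c_hat)       # should be close to 2.5
```

Under this assumption a value of C above 1 gives an exponent below 2, for which the mean size would diverge without a cutoff.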

4. Results

4.1. Size Distribution

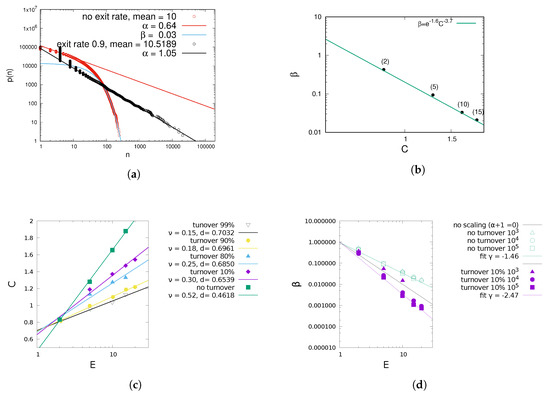

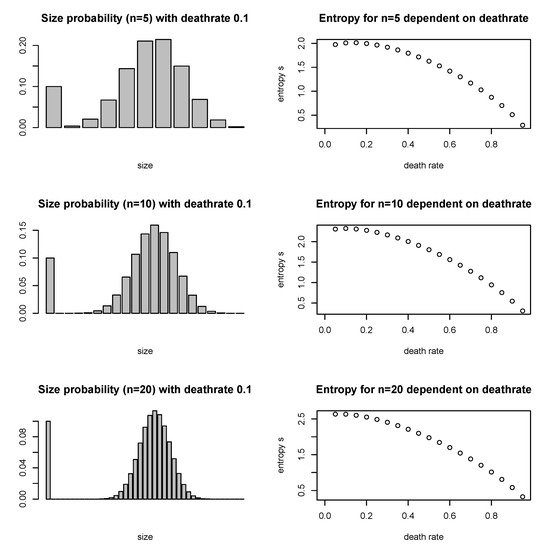

The maximum entropy size distribution of the stable size process (5) is confirmed by numerical results (see Figure 1a). The method holds for all three cases. The sum C is smaller when urns have a probability to be replaced with an urn of size 1, which has a theoretical explanation. C is the logarithm of the geometric mean of the urn sizes. Reference [37] has shown that the geometric mean decreases when subject to mean-preserving spread, that is, when all numbers in a set become proportionately farther from their fixed arithmetic mean (of course increasing their standard deviation). The statement applies to C as well, since the logarithm is a monotonically increasing function. Spread increases with turnover, which increases the fraction of urns of size 1, and consequently the other urns need to be larger for a given arithmetic mean E. Another effect is the direct change of the individual entropies with higher turnover, as shown in Figure 2.

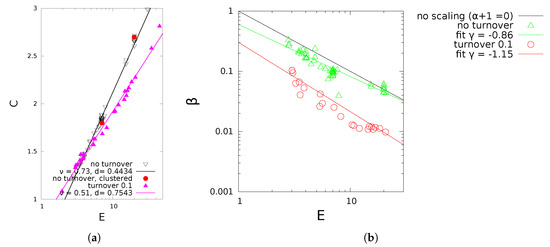

Figure 1.

Simulation results for different turnover rates. For small system sizes, for high turnover rates and E the cutoff is no longer a clear exponential, which is why in subfigure (d) for N = 10^3 some values are lacking. (a) Example of size distributions for different exit rates, in double logarithmic scale. (b) Exponent vs. C for no turnover rate and different mean sizes in parentheses. (c) Numerical C vs. mean E. (d) Exponential cutoff vs. E.

Figure 2.

Three examples for the expected size distribution, and its entropy, for the mixed case (III) with deathrate 0.1, for urns of size 5, 10 and 20. The high probability for size n = 1 comes from the re-introduction of urns of size 1. Dependent on the deathrate, this probability increases and the sum of the other outcomes decreases. In the right column, the entropy is calculated as a function of the deathrate. The higher the deathrate, the lower s, which contributes to a lower C and a higher exponent, both shown in Figure 1.

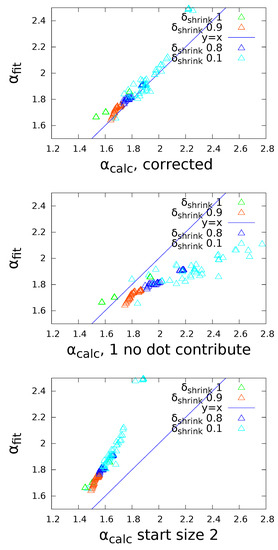

To compare the exponent fit of to the calculated one from the numerical entropy sum C, an adjustment to the computation of C in (3) is necessary, since the approximation of the entropy of a binomial holds for large n, but yields . Especially for cases (II) and (III) with turnover, urns of size 1 make up a large fraction of urns, and their contribution to the total entropy cannot be neglected. To correct for this we calculate the exact entropies and from the definition , and then multiply their fraction by from the large-n-approximation: with for a wide range of q. We use as corrected C

The correction is only significant for high turnover rates where a large fraction of urns has size 1, and with it, the theoretical is confirmed by simulations (see Figure 3). Furthermore, if the average size E and turnover rate are known, the power law exponent (via the constant C) and the exponential decay can be determined numerically (see Figure 1c,d). In case (I) where urns shrink, C is so big and consequently so low, that has a strong exponential cutoff in order to keep the system at the same mean urn size, in agreement with (5). Although (6) holds only for , it only slightly overestimates for , since the exponential cutoff affects only a small fraction of urns. In the presence of , C can be greater than 1, resulting in , which would diverge without exponential cutoff.
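The need for a correction at small urn sizes can be seen by comparing the exact entropy of a binomial distribution with its large-n Gaussian approximation. The sketch below is illustrative only: the function names are hypothetical and q = 0.1 is an arbitrary choice.

```python
import math

def binomial_entropy(n, q):
    """Exact Shannon entropy (nats) of Binomial(n, q), summed from the pmf."""
    h = 0.0
    for k in range(n + 1):
        log_p = (math.lgamma(n + 1) - math.lgamma(k + 1) - math.lgamma(n - k + 1)
                 + k * math.log(q) + (n - k) * math.log(1.0 - q))
        h -= math.exp(log_p) * log_p
    return h

def gaussian_entropy(n, q):
    """Large-n approximation 0.5 * ln(2 pi e n q (1 - q))."""
    return 0.5 * math.log(2.0 * math.pi * math.e * n * q * (1.0 - q))

gap_large = abs(binomial_entropy(1000, 0.1) - gaussian_entropy(1000, 0.1))
gap_small = abs(binomial_entropy(1, 0.1) - gaussian_entropy(1, 0.1))
```

For n = 1000 the two values nearly coincide, whereas for n = 1 the gap is of the same order as the entropy itself, which matters when urns of size 1 make up a large fraction of the system.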

Figure 3.

Fitted vs. calculated exponent, for three different ways of accounting for urns of size 1.

The larger and the mean urn size E, the larger the fluctuations in number of removed balls in step 3, and the more the urn size distribution fluctuates. Both and are independent of system size (except if the system size is too low for convergence, in which case increases), see Figure 1d. Simulation results are independent of the urns’ probability to attract balls in one time step, q, in agreement with our theoretical result in (6).

In addition to simulations, we derived the same size distributions for cases (I) and (II) with another method using the exact probabilities of the next size for every individual urn, which we calculated with a recursion equation (see Appendix A and Figure A1a). This method also reproduces all of the results of the maximum entropy method which we presented above and in Section 4.4 (see for example Figure A1b).

4.2. Aggregate Growth Rate Distribution of the Stable Size Process

It follows from the binomially (or normally) distributed (where ) that an individual urn’s growth rate, defined as , is also normally distributed

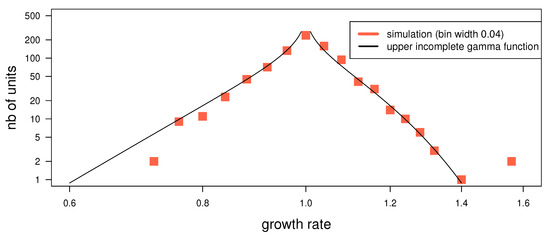

with scaling . The aggregate growth rate distribution (aggregated over all urns in one timestep, dropping the index t) is , or in the continuous limit . This can be evaluated using (8) and for the expression (5). For and , this yields an upper incomplete Gamma function shown in Figure 4 and References [38,39,40]: .
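The way a fat-tailed aggregate distribution arises from Gaussian individual growth rates can be sketched numerically. The example assumes urn sizes drawn from a pure power law (NumPy's Zipf sampler, without exponential cutoff) and individual growth rates that are Gaussian with a standard deviation proportional to n^(-1/2); the parameters `alpha` and `sigma0` and the function names are hypothetical.

```python
import numpy as np

def aggregate_growth_rates(alpha=2.0, sigma0=0.5, n_urns=200_000, seed=2):
    """Growth rates pooled over urns whose sizes follow a pure power law."""
    rng = np.random.default_rng(seed)
    sizes = rng.zipf(alpha, size=n_urns)   # urn sizes n >= 1 with P(n) ~ n**(-alpha)
    sigma = sigma0 / np.sqrt(sizes)        # individual std shrinks as n**(-1/2)
    return rng.normal(0.0, sigma)          # one Gaussian growth rate per urn

def kurtosis(x):
    """Fourth standardized moment; equals 3 for a single Gaussian."""
    centered = x - x.mean()
    return np.mean(centered**4) / np.mean(centered**2) ** 2

rates = aggregate_growth_rates()
k = kurtosis(rates)
```

A kurtosis above 3 indicates heavier tails than those of any single Gaussian, which is the qualitative signature of the tent shape on a logarithmic scale.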

Figure 4.

Aggregate growth rate distribution, simulation and fit (for , )

Such ‘tent-shaped’ aggregate growth rate distributions are often observed for quantities that themselves follow a power-law [22,25,27,29,41]. The tent shape is the sample average, but not the expectation for a given urn, as other models for it presume [28,42]. This result adds credibility to the stable size process as a model for some real system, in particular since a tent-shaped aggregate growth rate distribution does not automatically result from other models for scalefree distributions. An example is a multiplicative noise term in the linear Langevin equation [7,43] (where is additive noise and is the size of the process at time t). Such models produce a scalefree distribution for n above some value , but the growth rate can be any i.i.d. random variable [44,45,46] independent of an urn’s size n, and no distinction between individual growth rate and aggregate growth rate can be made. Therefore it does not additionally generate a tent shape for the aggregate growth rate distribution (unless a tent shape is assumed as individual growth rate distribution ).

4.3. Extension to Networks of the Stable Size Process

The algorithm can be adapted to derive the degree distribution for networks, where M nodes are connected with N undirected and unweighted links. The substeps become: (1. and 2.) A random link is broken, and one of its neighbors i is chosen to receive an additional link (i.e., every node is picked with probability proportional to its degree ). Its new neighbor j is also picked with probability . (3.) Nodes are removed at random at rate ; their links are broken. (4.) Nodes are re-introduced and linked to an existing node; the probability of selecting a node i as neighbor is . New links are added to keep N conserved; each node has a probability of receiving a link .
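Selecting a node with probability proportional to its degree, as used in the substeps above, can be done without computing degrees explicitly: pick a random link and then one of its two ends. The sketch below assumes the network is stored as a list of undirected edges; the function name `pick_by_degree` and the star-graph example are hypothetical.

```python
import random
from collections import Counter

def pick_by_degree(edges, rng):
    """Return a node chosen with probability degree / (2 * number of edges)."""
    u, v = rng.choice(edges)               # every link is equally likely
    return u if rng.random() < 0.5 else v  # either end with probability 1/2

# Star graph: hub 0 has degree 10, each of the 10 leaves has degree 1.
star = [(0, leaf) for leaf in range(1, 11)]
rng = random.Random(3)
counts = Counter(pick_by_degree(star, rng) for _ in range(20_000))
hub_share = counts[0] / 20_000
```

In the star graph the hub holds half of all link ends, so `hub_share` should be close to 0.5 and every leaf should be chosen in roughly 5% of the draws.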

In contrast to an urn/ball system, the exponential cutoff always exists in the network case, for the following reason. Case (II) of the Possible Cases section, where the only shrinking mechanism is the exit of nodes, cannot be reached. If a node exits the network, all its links are broken, so non-exiting nodes will necessarily lose the same number of edges. The maximal turnover rate is therefore 0.5. Numerical results confirm that a scalefree network without cutoff is not produced by this algorithm.

In previous work [47,48], we have added further features to make the model more plausible, for example, for epidemiology, such as clustering (that a link is preferably formed between neighbours of second or third degree), or different exit rules, for example, removal of a node after a given time span instead of exit at a constant rate. The latter additionally strengthens the exponential cutoff, because it prevents nodes from remaining long enough to attract many links. In that case the exponent and the exponential cutoff in (5) can still be inferred numerically from E, the turnover rate and the additional features (see Figure 5).

Figure 5.

Simulated network and simulation results for different turnover rates, N = 10^3. (a) Numerical C vs. mean degree E. (b) Exponential cutoff vs. E.

4.4. Maximum Entropy Argument of Other Systems

The method of using the sum of entropies of the evolution of individual urns as a constraint on the entropy of the system can be applied to many urn-ball systems in discrete time steps. It will affect the maximum entropy size distribution whenever depend on .

4.4.1. Maxwell-Boltzmann Distribution

A well-known example for a maximum entropy distribution is the velocity distribution of gas particles (Maxwell-Boltzmann distribution, here in one dimension). The only assumption about the process generating the velocity distribution P is that the mean is conserved in time. Particles can change their velocity through collisions with other particles. In a given timespan, the sum of received shocks of particle i (in one dimension) follows a Gaussian distribution, which has entropy , but all particles are hit by shocks of the same distribution, that is, , since does not depend on a particle’s own velocity . The focus is usually not on the distribution of individual change of , only on the stationary distribution of v. In one dimension, the Lagrangian function becomes with at the extremum where , and results in ). The constraint on entropies vanishes, as do not depend on , and the established Maxwell-Boltzmann distribution is found.
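That the Gaussian is the maximum entropy distribution for a fixed second moment can be illustrated by comparing closed-form differential entropies of three zero-mean distributions with the same variance. The sketch assumes the conserved quantity is the mean of the squared velocity; the function names and the choice of Laplace and uniform distributions as comparisons are hypothetical.

```python
import math

def normal_entropy(sigma):
    """Differential entropy of a Gaussian with standard deviation sigma."""
    return 0.5 * math.log(2.0 * math.pi * math.e * sigma**2)

def laplace_entropy(sigma):
    """Differential entropy of a Laplace distribution with the same variance."""
    b = sigma / math.sqrt(2.0)       # Laplace scale: variance 2 b^2 = sigma^2
    return 1.0 + math.log(2.0 * b)

def uniform_entropy(sigma):
    """Differential entropy of a uniform distribution with the same variance."""
    width = sigma * math.sqrt(12.0)  # uniform width: variance width^2 / 12
    return math.log(width)

entropies = {s: (normal_entropy(s), laplace_entropy(s), uniform_entropy(s))
             for s in (0.5, 1.0, 3.0)}
```

For every variance the Gaussian value is the largest of the three, in line with the result that the maximization yields a Gaussian velocity distribution.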

4.4.2. Yule Process (or Barabasi-Albert for Networks)

We simulated the Yule process in discrete time steps of adding a number of balls before adding an urn. If we consider larger time steps where several urns and many balls are added, the growth of an urn is approximately binomial with . Following our argument in Section 3, the binomial are themselves maximum entropy distributions [36] and therefore the sum of their entropies is stationary. The system has the constraint that the sum of individual entropies in one time step is stationary. The Lagrangian function becomes , which is maximal for .

4.4.3. Multiplicative Noise

An example are systems described by a multiplicative noise term in the linear Langevin equation [7,43] . They can be written like where the noise term appears now as an additive term. This (e.g., Gaussian) noise term has then , that is, . In this case, a large number of urns will attain size zero, since does not decrease for larger urns, due to . For this reason many empty urns need to be refilled and have size 1. We assume again that are therefore are stationary. Since the system is at constant size, we also assume . The Lagrangian function is , which is maximal for . Examples in the literature often have sufficiently large to not need a cutoff for the system to be at a given mean urn size [43]. An additional exit rate of urns can be added, in which case the power law exponent grows with exit rate, like in Figure 1.

5. Conclusions

We have introduced a method to derive stationary distributions, by looking at them as the maximum entropy distribution of the outcomes in one iteration, for a process in discrete time. The method provides an intuitive explanation for a size or degree distribution. It has been applied to a novel preferential attachment process for systems of constant size. The model has been analyzed for three different methods to keep the system at constant size. Each provides a realistic model for real-world applications. Results are confirmed by simulations and by summing over exact probabilities. We have also applied the method to derive the Maxwell-Boltzmann distribution for the velocity of gas particles, to the Yule process, and to multiplicative noise systems, where in each case established results are reproduced. The constraint that allowed these derivations is that the sum of entropies of the individual urns is also maximal when the system’s entropy is maximal.

Author Contributions

Methodology, C.M.; formal analysis, C.M.; writing—original draft preparation, C.M.; writing—review and editing, C.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research received funding from EPSRC (https://www.epsrc.ac.uk/), grant numbers EP/K026003/1 and EP/L019981/1. The support of Climate-KIC/European Institute of Innovation and Technology (ARISE project) is gratefully acknowledged.

Acknowledgments

We thank Fernando Rosas, Gunnar Pruessner, Tim Evans and Chris Moore for discussion, and the two anonymous referees for their helpful comments.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A. Size Distribution from Exact Probabilities

Appendix A.1.

In one growth and shrink cycle, an urn of size 1 can reach 3 possible states, 0, 1 and 2. Their probabilities can be calculated by the probability to grow by one in the growth step, and for the following shrink step when the system has grown to , the probability to shrink by one s . From this follows that

The process can thus be rephrased as a growth process that follows p given in Equations (A1)–(A3), using the shorter notation and , irrespective of the interpretation of the microfoundations. This probability mass function has mean since , and variance . For an urn of size n, , and and thus the standard deviation of an urn’s next size scales as

with its size n. This scaling holds whenever growth is the sum of independent growth of balls.

- (i) From (A1)–(A3), the probabilities , can be calculated, similar to Pascal’s triangle for binomial coefficients. The lowest possible j for an urn of size is always 0 (all balls leave), the largest is always (all balls attract another ball). Every probability is itself a sum of terms (Equation (A5)). We calculated the coefficients recursively from coefficients of the corresponding addends in the 3 terms , and with the corresponding powers x and y (Equation (A6)): if exists, given . The with is calculated first and no can be used in two addends for the same . With (A6) the coefficients and probabilities have been computed (until ). Care has been taken in the implementation since (A5) and (A6) sum over terms of very different orders of magnitude.

- (ii) With the transition probabilities, the most probable time evolution of an urn that started at size 1 can be calculated recursively. The probability of size 0 grows with t and approaches 1, since the probability of having died out increases over time.

- (iii) Assuming that equilibrium has been obtained by continuously replacing urns of size 0 by urns of size 1, the equilibrium distribution can be computed from these probabilities. It is shown in Figure A1.

The obtained size distribution can again be fitted by a power law with exponential cutoff (see Figure A1). The method applies to other processes if can be known. We used it also for multiplicative noise systems where Zipf’s law is recovered as result (Figure A1b).
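The transition probabilities of step (i) can also be obtained by repeated convolution instead of the coefficient recursion described above. This is a swapped-in technique for illustration, not the authors' implementation. It assumes that each ball attracts another ball with probability q and that each ball then leaves with probability s = q/(1 + q), which keeps the mean size unchanged; all function names are hypothetical.

```python
import numpy as np

def single_ball_pmf(q):
    """Probabilities that one ball leaves 0, 1 or 2 balls after a grow-and-shrink cycle."""
    s = q / (1.0 + q)  # assumed shrink probability that keeps the mean at 1
    p2 = q * (1.0 - s) ** 2
    p1 = (1.0 - q) * (1.0 - s) + 2.0 * q * s * (1.0 - s)
    p0 = (1.0 - q) * s + q * s ** 2
    return np.array([p0, p1, p2])

def urn_pmf(n, q):
    """pmf over the next sizes 0..2n of an urn of size n, as an n-fold convolution."""
    base = single_ball_pmf(q)
    pmf = np.array([1.0])
    for _ in range(n):
        pmf = np.convolve(pmf, base)
    return pmf

def mean_and_std(pmf):
    """Mean and standard deviation of a pmf supported on 0, 1, 2, ..."""
    j = np.arange(pmf.size)
    mean = (j * pmf).sum()
    return mean, np.sqrt(((j - mean) ** 2 * pmf).sum())

mean_50, std_50 = mean_and_std(urn_pmf(50, 0.1))
mean_200, std_200 = mean_and_std(urn_pmf(200, 0.1))
```

Under these assumptions the mean equals the current size and quadrupling the size doubles the standard deviation, in agreement with the square-root scaling stated in this appendix.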

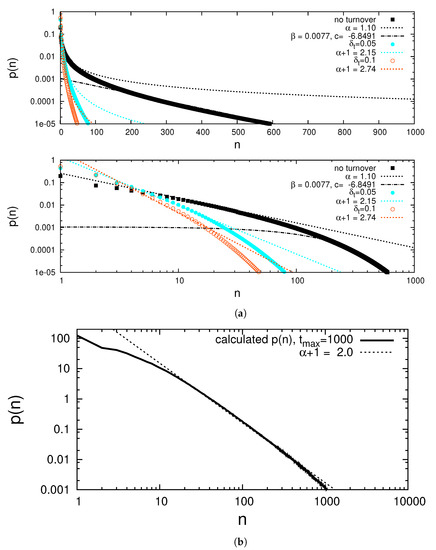

Figure A1.

(a) Numerical normalized probability density with Gaussian pi with without and with turnover, both in log-linear and double logarithmic scale (b) Numerical probability density with Gaussian pi with generates Zipf’s law .

Appendix B. Scheme of the Process

Figure A2.

Scheme of system size evolution, in urns M and balls N at the different steps 1–4 within one iteration. The exit rate for balls is not chosen but follows from the chosen exit rate for urns. The number of balls at the end of an iteration does not equal the number at its beginning, since it depends on the number of balls that have exited, and on the number of balls that have been introduced when empty urns have been refilled. As a consequence, the growth probability is adjusted such that the average number of balls is conserved.

References

1. Simon, H.A. On a class of skew distribution functions. Biometrika 1955, 42, 425–440.

2. Yule, G.U. II. A mathematical theory of evolution, based on the conclusions of Dr. J.C. Willis, F.R.S. Proc. R. Soc. Lond. 1925, 213, 21–87.

3. Barabási, A.L.; Albert, R. Emergence of scaling in random networks. Science 1999, 286, 509–512.

4. Mandelbrot, B. An informational theory of the statistical structure of language. Commun. Theory 1953, 84, 486–502.

5. Babbar, R.; Metzig, C.; Partalas, I.; Gaussier, E.; Amini, M.R. On power law distributions in large-scale taxonomies. SIGKDD Explor. 2014, 16, 47–56.

6. Axtell, R.L. Zipf distribution of US firm sizes. Science 2001, 293, 1818–1820.

7. Biró, T.S.; Jakovác, A. Power-law tails from multiplicative noise. Phys. Rev. Lett. 2005, 94, 132302.

8. Marsili, M.; Zhang, Y.C. Interacting individuals leading to zipf’s law. Phys. Rev. Lett. 1998, 80, 2741.

9. Albert, R. Scale-free networks in cell biology. J. Cell Sci. 2005, 118, 4947–4957.

10. Eguiluz, V.M.; Chialvo, D.R.; Cecchi, G.A.; Baliki, M.; Apkarian, A.V. Scale-free brain functional networks. Phys. Rev. Lett. 2005, 94, 018102.

11. Ebel, H.; Mielsch, L.I.; Bornholdt, S. Scale-free topology of e-mail networks. Phys. Rev. E 2002, 66, 035103.

12. Barabási, A.L.; Albert, R.; Jeong, H. Scale-free characteristics of random networks: The topology of the world-wide web. Physica A 2000, 281, 69–77.

13. Eubank, S.; Guclu, H.; Kumar, V.A.; Marathe, M.V.; Srinivasan, A.; Toroczkai, Z.; Wang, N. Modelling disease outbreaks in realistic urban social networks. Nature 2004, 429, 180.

14. Bertotti, M.L.; Modanese, G. The bass diffusion model on finite barabasi-albert networks. Complexity 2019, 2019, 6352657.

15. Bertotti, M.L.; Modanese, G. The configuration model for barabasi-albert networks. Appl. Netw. Sci. 2019, 4, 32.

16. Bhaumik, H. Conserved manna model on barabasi–albert scale-free network. Eur. Phys. J. B 2018, 91, 21.

17. Glos, A. Spectral similarity for barabási–albert and chung–lu models. Physica A 2019, 516, 571–578.

18. Jaiswal, S.K.; Pal, M.; Sahu, M.; Sahu, P.; Dev, A. Evocut: A new generalization of albert-barabasi model for evolution of complex networks. In Proceedings of the 22nd Conference of Open Innovations Association (FRUCT), Petrozavodsk, Russia, 9–13 April 2018; pp. 67–72.

19. Courtney, O.T.; Bianconi, G. Dense power-law networks and simplicial complexes. Phys. Rev. E 2018, 97, 052303.

20. Newman, M.E. Power laws, pareto distributions and zipf’s law. Contemp. Phys. 2005, 46, 323–351.

21. Jaynes, E.T. Information theory and statistical mechanics. Phys. Rev. 1957, 106, 620.

22. Alfarano, S.; Milaković, M.; Irle, A.; Kauschke, J. A statistical equilibrium model of competitive firms. J. Econ. Dyn. Control 2012, 36, 136–149.

23. Alves, L.G.; Ribeiro, H.V.; Mendes, R.S. Scaling laws in the dynamics of crime growth rate. Physica A 2013, 392, 2672–2679.

24. Bottazzi, G.; Cefis, E.; Dosi, G. Corporate growth and industrial structures: Some evidence from the italian manufacturing industry. Ind. Corp. Chang. 2002, 11, 705–723.

25. Bottazzi, G.; Secchi, A. Explaining the distribution of firm growth rates. RAND J. Econ. 2006, 37, 235–256.

26. Halvarsson, D. Asymmetric Double Pareto Distributions: Maximum Likelihood Estimation with Application to the Growth Rate Distribution of Firms; Technical Report; The Ratio Institute: Stockholm, Sweden, 2019.

27. Picoli, S., Jr.; Mendes, R.S.; Malacarne, L.C.; Lenzi, E.K. Scaling behavior in the dynamics of citations to scientific journals. EPL 2006, 75, 673.

28. Schwarzkopf, Y.; Axtell, R.; Farmer, J.D. An Explanation of Universality in Growth Fluctuations. 2010. Available online: https://papers.ssrn.com/sol3/papers.cfm?abstract_id=1597504 (accessed on 20 February 2019).

29. Stanley, M.H.; Amaral, L.A.; Buldyrev, S.V.; Havlin, S.; Leschhorn, H.; Maass, P.; Salinger, M.A.; Stanley, H.E. Scaling behaviour in the growth of companies. Nature 1996, 379, 804.

30. Takayasu, M.; Watanabe, H.; Takayasu, H. Generalised central limit theorems for growth rate distribution of complex systems. J. Stat. Phys. 2014, 155, 47–71.

31. Thornhill, S.; Amit, R. Learning about failure: Bankruptcy, firm age, and the resource-based view. Organ. Sci. 2003, 14, 497–509.

32. Amaral, L.A.N.; Buldyrev, S.V.; Havlin, S.; Maass, P.; Salinger, M.A.; Stanley, M.H. Scaling behavior in economics: The problem of quantifying company growth. Phys. A 1997, 244, 1–24.

33. Coad, A. Firm Growth: A Survey. 2007. Available online: http://hdl.handle.net/10419/31823 (accessed on 10 March 2020).

34. Rosenkrantz, R.D. Where do we stand on maximum entropy? (1978). In ET Jaynes: Papers on Probability, Statistics and Statistical Physics; Springer: Berlin/Heidelberg, Germany, 1989; pp. 210–314.

35. Uffink, J. The constraint rule of the maximum entropy principle. Stud. Hist. Philos. Sci. B 1996, 27, 47–79.

36. Harremoës, P. Binomial and poisson distributions as maximum entropy distributions. IEEE Trans. Inf. Theory 2001, 47, 2039–2041.

37. Mitchell, D.W. 88.27 more on spreads and non-arithmetic means. Math. Gaz. 2004, 88, 142–144.

38. Metzig, C. A Model for a Complex Economic System. Ph.D. Thesis, Universite de Grenoble, Saint-Martin-d’Heres, France, July 2013.

39. Metzig, C.; Gordon, M. Heterogeneous enterprises in a macroeconomic agent-based model. arXiv 2012, arXiv:1211.5575. Available online: https://arxiv.org/abs/1211.5575 (accessed on 20 February 2019).

40. Metzig, C.; Gordon, M.B. A model for scaling in firms’ size and growth rate distribution. Physica A 2014, 398, 264–279.

41. Mondani, H.; Holme, P.; Liljeros, F. Fat-tailed fluctuations in the size of organizations: The role of social influence. PLoS ONE 2014, 9, e100527.

42. Fu, D.; Pammolli, F.; Buldyrev, S.V.; Riccaboni, M.; Matia, K.; Yamasaki, K.; Stanley, H.E. The growth of business firms: Theoretical framework and empirical evidence. Proc. Natl. Acad. Sci. USA 2005, 102, 18801–18806.

43. Takayasu, H.; Sato, A.H.; Takayasu, M. Stable infinite variance fluctuations in randomly amplified langevin systems. Phys. Rev. Lett. 1997, 79, 966.

44. Dorogovtsev, S.N.; Mendes, J.F.F. Scaling behaviour of developing and decaying networks. EPL 2000, 52, 33.

45. Moore, C.; Ghoshal, G.; Newman, M.E. Exact solutions for models of evolving networks with addition and deletion of nodes. Phys. Rev. E 2006, 74, 036121.

46. Sarshar, N.; Roychowdhury, V. Scale-free and stable structures in complex ad hoc networks. Phys. Rev. E 2004, 69, 026101.

47. Metzig, C.; Ratmann, O.; Bezemer, D.; Colijn, C. Phylogenies from dynamic networks. PLoS Comput. Biol. 2019, 15, e1006761.

48. Metzig, C.; Surey, J.; Francis, M.; Conneely, J.; Abubakar, I.; White, P.J. Impact of hepatitis c treatment as prevention for people who inject drugs is sensitive to contact network structure. Sci. Rep. 2017, 7, 1833.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).