Abstract

Genetic regulatory networks have evolved by complexifying their control systems with numerous effectors (inhibitors and activators). This is the case, for example, for the double inhibition by microRNAs and circular RNAs, which introduces a ubiquitous double-brake control that in general reduces the number of attractors of complex genetic networks (e.g., by destroying positive regulation circuits), whose complexity indices are the number of nodes, their connectivity, the number of strongly connected components and the size of their interaction graph. The stability and robustness of the networks correspond to their ability to recover, after dynamical and structural disturbances respectively, the same asymptotic trajectories, and hence the same number and nature of attractors. The complexity of the dynamics is quantified here using the notion of attractor entropy, which describes the way the invariant measure of the dynamics is spread over the state space. The stability (respectively robustness) is characterized by the rate at which the system returns to its equilibrium trajectories (respectively invariant measure) after a dynamical (respectively structural) perturbation. The mathematical relationships between the indices of complexity, stability and robustness are presented in the case of Markov chains related to threshold Boolean random regulatory networks updated with a Hopfield-like rule. The entropy of the invariant measure of a network, as well as the Kolmogorov-Sinaï entropy of the Markov transition matrix ruling its random dynamics, can be considered as complexity, stability and robustness indices, and it is possible to exploit the links between these notions to characterize the resilience of a biological system with respect to endogenous or exogenous perturbations.
The example of the genetic network controlling the kinin-kallikrein system, involved in a pathology called angioedema, shows the practical interest of the present approach to complexity and robustness in two cases: its normal (physiological) and abnormal (pathological) dynamical behaviors.

1. Introduction

The relationships between the notions of complexity, stability and robustness in genetic regulatory networks, as described in [1,2,3], are based on works from the eighties about Boolean automata [4,5,6,7,8,9] and on the mathematical characterization of stability in random dynamical systems. These studies about stability are based on theories of stochastic attractors (called confiners in [10,11]) and large deviations [12,13,14], and on an index of complexity, the evolutionary or dynamic entropy, introduced by L. Demetrius [15,16,17,18,19,20] and complementary to other complexity indices used in biological networks, such as the number of nodes, the connectivity (i.e., the ratio between edge and node numbers) [21,22,23], the number of strongly connected components of their interaction graph [9], the entropy of transformations with invariant measure [24,25], etc. Stability is quantified by the rate at which the system returns to its steady state after exogenous and/or endogenous perturbations, and robustness by the threshold distance below which the system remains close to its former dynamics in terms of number and nature of attractors.

If we restrict the study to discrete dynamical systems, many authors since Rochlin [24,25] have used the notion of entropy for quantifying the stability and robustness of dynamical processes, such as inference in Bayesian networks [26]; symbolic extension of a smooth interval map [27]; symbolic complexity of substitution dynamics on a set of sequences in a finite alphabet [28,29]; algorithmic complexity (also called Kolmogorov-Chaitin complexity) of binary strings involved in mutation dynamics [30]; and finally, reconstruction and stability of Boolean dynamics of genetic regulatory networks following René Thomas' logic rules [31,32,33,34].

In the present paper, we focus on the study of the robustness of genetic regulatory networks driven by Hopfield's stochastic rule [35], by using the Kolmogorov-Sinaï entropy of the Markov process underlying the state transition dynamics [36,37,38,39,40,41,42,43,44,45,46,47,48,49,50]. In Section 2, we define the concepts underlying the relationships between complexity, stability and robustness in the Markov framework of genetic threshold Boolean random regulatory networks. We give in Section 3 some rigorous results concerning their dynamics; in Section 4, we calculate the evolutionary entropy for a particular network controlling the kinin-kallikrein system in physiological and pathological conditions; and we give in Section 5 some perspectives of the present work.

2. Materials and Methods

2.1. Definitions

The evolutionary (or dynamic) entropy is related to the network dynamics, and can be identified with the Kolmogorov-Sinaï entropy in Markovian dynamics. Based on the configuration of its attractor (in the deterministic case) or confiner (in the random case) landscape in the network state space, it is related to the richness of this landscape [11]. The literature on network entropies is abundant [19,20,21,22,51,52,53,54,55,56,57,58,59,60,61,62] and concerns both discrete and continuous dynamical systems, which share common mathematical concepts, such as attractor, attraction basin, Jacobian interaction graph, stability and robustness. We take as the definition of an attractor that given in [63,64], valid for both continuous and discrete cases. More specific definitions of the concept of attractor adapted to network dynamics are given in [65,66,67,68].

In the state space E ⊂ ℝ^n provided with a dynamic flow φ and a distance d, {φ(a,t)}t∊T denotes the trajectory starting at state a and being in state φ(a,t) at time t, where φ is a flow on E×T. L(a), the limit set of the trajectory starting in a, is made of the accumulation points of the trajectory {φ(a,t)}t∊T, when the time t tends to infinity in the time set T (either discrete, equal to ℕ, or continuous, equal to ℝ+):

$$L(a) = \{y \in E;\ \forall \varepsilon > 0,\ \forall t \in T,\ \exists s(\varepsilon,t) > t:\ d(\varphi(a, s(\varepsilon,t)), y) < \varepsilon\}$$

where s(ε,t) is a time (> t) at which the trajectory is in a ball of radius ε centered in y.

Considering a subset A of E, L(A) is the union of all limit sets L(a), for a belonging to A:

$$L(A) = \bigcup_{a \in A} L(a)$$

Conversely, B(A) is the set of all initial conditions (outside A), whose limit set L(a) is included in A (Figure 1). B(A) = {y∊E\A; L(y)⊂A} is called the attraction basin of A.

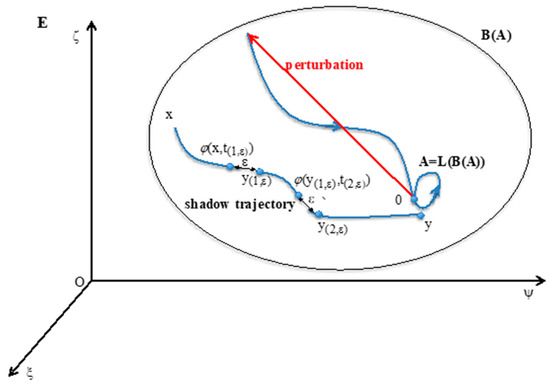

Figure 1.

The attractor A is invariant for the operator L∘B in the state space E, whose general state is x = (ξ,ψ,ς). A shadow trajectory links x in B(A) and y near A. The point 0 on A returns to A after a perturbation in its attraction basin B(A).

An attractor A satisfies the three following conditions (Figure 1 Top left):

- (i) A = L∘B(A).

- (ii) There is no set A’ strictly containing A and shadow-connected to A.

- (iii) There is no set A” strictly contained in A verifying (i) and (ii).

The definition of the “shadow-connectivity” between subsets F1 and F2 of E relies on the existence of a “shadow-trajectory” between F1 and F2 (cf. Figure 1, where F1 = {x} and F2 = {y}). The notion of “shadow-trajectory” was first defined in [69]: for any ε > 0, there is an integer n(ε), times t(1,ε),…, t(n(ε),ε) and states y(1,ε),…, y(n(ε),ε) = y, such that all distances between successive states of the shadow-trajectory are less than ε:

For all known dynamical systems, the above definition of attractor remains applicable, and is meaningful for any discrete or continuous time set T, when the dynamical system is autonomous (with respect to time), contrary to the other definitions proposed since the seventies, such as in [70,71,72], which are all less general.
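In the special case of a finite Boolean state space with a deterministic map, the limit set of a trajectory is simply the fixed point or cycle that it eventually enters, so attractors and basin sizes can be enumerated exhaustively. The following Python sketch illustrates this under that assumption; the names `attractors_and_basins` and `swap` are illustrative and do not come from the cited references, and basin sizes are counted here as card(A ∪ B(A)).

```python
from itertools import product

def attractors_and_basins(step, n):
    """Map each attractor (frozenset of states) to the size of A ∪ B(A).

    `step` is a deterministic map on tuples in {0,1}^n (hypothetical helper).
    """
    basins = {}
    cache = {}  # state -> attractor reached from that state
    for state in product((0, 1), repeat=n):
        path, seen, s = [], {}, state
        # Follow the trajectory until it closes on itself or meets a known state.
        while s not in seen and s not in cache:
            seen[s] = len(path)
            path.append(s)
            s = step(s)
        if s in cache:
            attractor = cache[s]
        else:
            attractor = frozenset(path[seen[s]:])  # the cycle just closed
        for p in path:
            cache[p] = attractor
        basins[attractor] = basins.get(attractor, 0) + len(path)
    return basins

def swap(x):
    """Two genes activating each other, updated in parallel: x1' = x2, x2' = x1."""
    return (x[1], x[0])

result = attractors_and_basins(swap, 2)
for attractor, size in result.items():
    print(sorted(attractor), size)
```

For this toy map, the enumeration finds two fixed points and one limit cycle of period 2, which together partition the four states.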

2.2. Hopfield Dynamics

Let us now consider discrete Hopfield dynamics [35], defined in a genetic regulatory network N having n genes, where xi(t) = 1 if the gene i is expressing its protein at time t, and xi(t) = 0 otherwise. The probability that xi(t + 1) = 1, knowing the states at time t in a neighborhood Vi of i, is given by:

$$P\big(x_i(t+1) = 1 \mid x_j(t),\ j \in V_i\big) = \frac{\exp\big[\big(\sum_{j \in V_i} w_{ij} x_j(t) - \theta_i\big)/T\big]}{1 + \exp\big[\big(\sum_{j \in V_i} w_{ij} x_j(t) - \theta_i\big)/T\big]} \qquad (1)$$

where wij is a parameter representing the influence or weight (inhibitory if wij < 0, activating if wij > 0 and null if wij = 0) that the gene j from the neighborhood Vi = {j; wij ≠ 0} exerts on the gene i, and θi is a threshold, which corresponds to the notion of external field in statistical mechanics and here to the minimal value of activation for the interaction potential Σj∊Vi wij xj(t).

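The thermodynamic parameter, temperature T, allows for introducing a certain degree of randomness in the transition rule. In particular, if T = 0, the rule is practically deterministic:

$$P\big(x_i(t+1) = 1 \mid x_j(t),\ j \in V_i\big) = 1 \ \text{ if } \sum_{j \in V_i} w_{ij} x_j(t) > \theta_i, \qquad = 0 \ \text{ if } \sum_{j \in V_i} w_{ij} x_j(t) < \theta_i$$

and

$$x_i(t+1) = H\Big(\sum_{j \in V_i} w_{ij} x_j(t) - \theta_i\Big)$$

where H is the Heaviside function.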
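The stochastic threshold rule described above is commonly written as a logistic function of the net input Σj wij xj(t) − θi divided by T. The Python sketch below assumes that form; the function name `p_on` and the convention H(0) = 0 used in the T = 0 branch are assumptions made for illustration.

```python
import math

def p_on(w_row, x, theta_i, T):
    """Probability that gene i is expressed at t+1, given the states x at time t.

    w_row   : weights w_ij exerted on gene i by every gene j
    theta_i : activation threshold of gene i
    T       : temperature; T == 0 gives the deterministic Heaviside limit
    """
    h = sum(w * xj for w, xj in zip(w_row, x)) - theta_i
    if T == 0:
        # Assumed convention: the gene switches on only for a strictly positive net input.
        return 1.0 if h > 0 else 0.0
    # Logistic function exp(h/T) / (1 + exp(h/T)), written with tanh to avoid overflow.
    return 0.5 * (1.0 + math.tanh(h / (2.0 * T)))

w_row = [1, -1]                            # gene 0 activates, gene 1 inhibits
print(p_on(w_row, [1, 0], 0.0, 1.0))       # noisy regime
print(p_on(w_row, [1, 0], 0.0, 0.01))      # almost deterministic
print(p_on(w_row, [1, 0], 0.0, 0))         # deterministic limit
```

Lowering T sharpens the response around the threshold, which is how the temperature controls the degree of randomness of the transition rule.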

Once this rule is defined, the incidence matrices of three important graphs characterizing the network dynamics can be defined:

- Interaction matrix W: Its coefficient wij corresponds to the action of the gene j on the gene i. W is analogous to a discrete Jacobian matrix. The signed Jacobian matrix A is defined as follows: αij = 1, if wij > 0; αij = −1, if wij < 0; and αij = 0, if wij = 0. Its associated directed graph is the interaction graph G;

- Updating matrix S: sij = 1, if j is updated before or with i, else sij = 0;

- Flow matrix Φ: ϕbc = 1, where b and c belong to E, if and only if b = φ(c,1); else ϕbc = 0.

The matrices involved in the network dynamics are constant or depend on time t [41,42]:

- Concerning W, the dependence is called the Hebbian dynamics: if the two vectors {xi(s)}s<t and {xj(s)}s<t have a correlation coefficient ρij(t) ≠ 0, then the dependence is expressed through the equation wij(t + 1) = wij(t) + hρij(t), with h > 0, corresponding to a reinforcement of the absolute value of the interactions wij(t) that have succeeded in increasing the xi(s)’s, if wij(t) is positive, and conversely in decreasing the xi(s)’s, if wij(t) is negative.

- Concerning S, the updating can be state dependent or not. If all sij equal one, the updating schedule is called parallel; if there exists a sequence of indices of the n nodes of the network, i1,…,in, such that s_{ik, ik+1} = 1, the other sij’s being equal to 0, the updating is called sequential. Between these two extreme schedules, the updating modes are called block-sequential, i.e., they are parallel inside a block, the blocks being updated sequentially. A more realistic schedule in genetic and metabolic networks is called block-parallel [50]: these networks are composed of blocks made of genes sequentially updated, these blocks being updated in parallel (Figure 2 Top middle). Some interactions between these blocks can exist (i.e., there are wij ≠ 0, with i and j belonging to two different blocks), but because the block sizes are different, the time at which the first attractor state is reached in a block is not necessarily synchronized with the corresponding times in other blocks. The synchronization expected as asymptotic behavior of the dynamics depends on the intra-block as well as on the inter-block interactions, which explains why the states of genes in a block serving as the clock for the network are highly dependent on the states of genes in other blocks connected to them (e.g., acting as transcription factors of the clock genes).

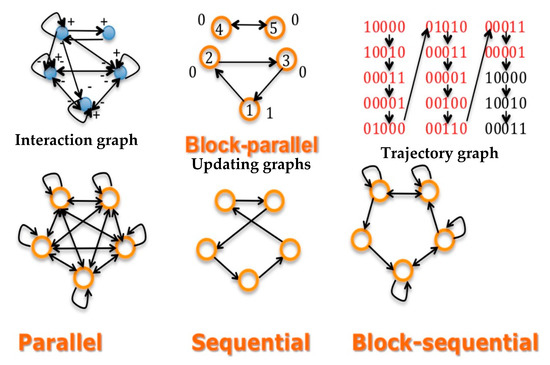

Figure 2. Top left: interaction graph G of a network made of a 3-switch linked to a regulon representing a genetic clock. Top middle: the updating graph corresponding to block-parallel dynamics ruling the network. Top right: a part of the trajectory graph of the dynamics exhibiting a limit-cycle of period 12 having internally a cycle of period 4 for the clock. Bottom: updating graphs corresponding successively (from the left to the right) to the parallel, sequential and block-sequential dynamics.


- Concerning Φ, the transition operator is Markov, and hence autonomous in time.

Figure 2 Top shows the three important graphs of the network dynamics; i.e., the graphs having as incidence matrices, respectively, the interaction W (left), updating S (middle) and trajectory Φ (right) matrices. If the dynamics are ruled by a deterministic Hopfield network, with temperature equal to 0 and interaction weights wij equal to 1, −1 or 0, the attractor is the limit-cycle given in Figure 2 (Top right), showing two intricate rhythms, one of period 4 corresponding to the inner clock dynamics, embedded in a rhythm of period 12, the rhythm of the whole network. Figure 2 (Bottom) shows other updating graphs, less realistic than the block-parallel one for representing the clock action.
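The effect of the updating schedule can be illustrated on a toy deterministic threshold network. The Python sketch below covers only the parallel, sequential and block-sequential modes (not the block-parallel one); the function names, the two-gene network and the convention H(0) = 0 are assumptions chosen for illustration.

```python
def heaviside(h):
    """Heaviside step, with the assumed convention H(h) = 1 for h > 0 and 0 otherwise."""
    return 1 if h > 0 else 0

def local_update(W, theta, x, i):
    """Deterministic (T = 0) threshold update of gene i from the configuration x."""
    return heaviside(sum(W[i][j] * x[j] for j in range(len(x))) - theta[i])

def block_sequential_step(W, theta, x, blocks):
    """Update the blocks one after another; genes inside a block are updated in parallel."""
    x = list(x)
    for block in blocks:
        snapshot = tuple(x)  # configuration seen by every gene of the current block
        for i in block:
            x[i] = local_update(W, theta, snapshot, i)
    return tuple(x)

def parallel_step(W, theta, x):
    """Parallel mode: a single block containing all genes."""
    return block_sequential_step(W, theta, x, [list(range(len(x)))])

def sequential_step(W, theta, x, order):
    """Sequential mode: one gene per block, in the given order."""
    return block_sequential_step(W, theta, x, [[i] for i in order])

# Two genes activating each other (positive circuit of length 2).
W = [[0, 1], [1, 0]]
theta = [0.5, 0.5]
print(parallel_step(W, theta, (0, 1)))             # (1, 0): the two states are exchanged
print(sequential_step(W, theta, (0, 1), [0, 1]))   # (1, 1): a fixed point is reached
```

On this example, the period-2 cycle observed in parallel mode disappears in sequential mode, showing that the attractor landscape depends on the updating matrix S as well as on W.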

3. Results

3.1. Attractor Entropy and Isochronal Entropy, Indices of Complexity and Synchronizability

The attractor entropy Eattractor is a measure of heterogeneity of the attractor landscape on the state space E of a dynamical system. It is defined by the quantity:

$$E_{attractor} = -\sum_{k=1}^{m} ABRS(A_k) \log_2 ABRS(A_k)$$

where ABRS(Ak) is the attraction basin relative size of the kth attractor Ak among the m attractors of the network dynamics, i.e., the size of its attraction basin divided by 2^n, n being the number of genes of the network.

Let us consider the case of a continuous network, called the Wilson-Cowan oscillator, close to the Hopfield system if τx = τy = 1/ε, wxx = wxy = wyx = wyy = λ/ε, 0 < ε << 1 [73], which describes the activities x and y of two populations of interacting genes as follows:

The variables x and y represent the proteinogenic activities of two populations of genes, whose interaction is described through a quasi-sigmoid function parametrized by λ, controlling its stiffness and accounting for the response to a signal coming from the other gene (whose expressed protein acts as a transcription factor, inhibitor or activator, on the first gene). This response is highly non-linear when λ is large, becoming similar to a Hopfield-like response.

The parameters τx and τy refer to the influence power of the populations of genes x and y. If θ = 0 and τx = τy = τ, the Wilson-Cowan oscillator presents a Hopf bifurcation when λτ crosses the value 1 [74], moving from dynamics with only one stable fixed point to dynamics with a repulsor at the origin of the state space E, surrounded by an attractor limit cycle C of period T. Let us now consider the point φ(O,h) of C reached after a time (or phase) h on C from a point chosen as origin O = φ(O,0) (Figure 3): there is a bijective map b between C and the phase interval [0, T]. The isochron Ih of phase h is the set of points x of B(C) such that d(φ(x,t), φ(h,t)) tends to 0, when t tends to infinity. Ih extends the map b to all the points of B(C). Let us denote by φh the flow φ restricted to Ih × Td, where Td = {kT}k∈ℕ, such that:

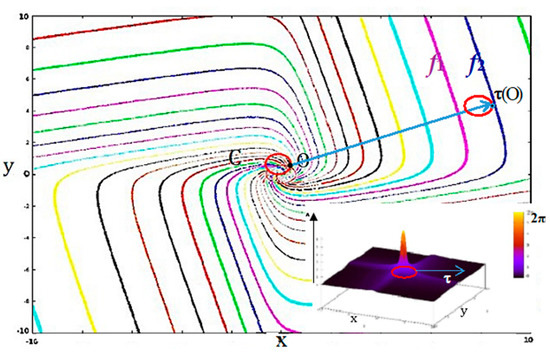

Figure 3.

Isochrons and maximum phase shift of the Wilson-Cowan oscillator. In the phase space (xOy) are 30 isochrons and the limit cycle C (in red) of a Wilson-Cowan oscillator (with τx = τy = τ = 1, and λ = 1.1). The oscillation period is 2π in the vicinity of its Hopf bifurcation; the limit cycle C is close to the unit circle; and isochrons are in the form of spirals. Thumbnail Bottom Right: representation of the maximum phase shift along the z axis with a color code (between 0 and 2π). The profile of maximum phase shift indicates how a population of Wilson-Cowan oscillators synchronizes following an instantaneous translation τ, and the degree of synchronization (inverse of this maximum phase shift observed after perturbation) is proportional to the intensity of the translation.

The point h is an attractor for φh, whose basin is Ih\{h}. Let us now decompose the time interval [0,T] into m equidistant sub-intervals [kT/m, (k+1)T/m[, for k = 0,…,m−1. Let us denote by φk the flow φ restricted to (Ak∪Bk) × Td, with Ak = F([kT/m, (k+1)T/m]) as attractor, having as attraction basin the set Bk = ∪h∊F([kT/m, (k+1)T/m[) Ih, with B(C) = ∪k=0,m−1Bk. The isochronal entropy of degree m is related to the m subsets Ak of C = ∪k=0,m−1Ak, each Ak being the attractor for the dynamics φk:

$$E^{m}_{attractor} = -\sum_{k=0}^{m-1} ABRS(A_k) \log_2 ABRS(A_k)$$

If the isochron basins all have the same size, then Emattractor = log2 m. If not, Emattractor reflects the spatial heterogeneity of the asymptotic behavior of the network dynamics: the basins Bk can be large (when the flow φ is rapid inside) or small (when the flow φ is slow inside). In the example of the Wilson-Cowan oscillator with τx = τy = 1, if λ grows from 0 to infinity, then Emattractor increases from 0 to log2 m [75].
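Both the attractor entropy and the isochronal entropy are Shannon entropies of the distribution of basin relative sizes. A minimal Python sketch, assuming the basin sizes are already known (the function name `attractor_entropy` and the sample sizes are illustrative):

```python
import math

def attractor_entropy(basin_sizes):
    """Shannon entropy (in bits) of the attraction basin relative sizes (ABRS).

    basin_sizes : sizes of the basins (attractor included) partitioning the state space
    """
    total = sum(basin_sizes)
    return -sum((b / total) * math.log2(b / total) for b in basin_sizes if b > 0)

print(attractor_entropy([4, 4, 4, 4]))   # four equal basins: log2(4) = 2 bits
print(attractor_entropy([1, 1, 2]))      # heterogeneous basins: 1.5 bits
print(attractor_entropy([13, 1, 1, 1]))  # one dominant basin: lower entropy
```

The entropy reaches its maximum log2 m when the m basins have equal sizes and decreases as one basin comes to dominate the state space.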

Let us now fix the values of the parameters: τx = τy = τ = 1 and λ = 1.1, close to the bifurcation in the parameter space (λτ ≈ 1), and consider the isochron landscape of the Wilson-Cowan oscillator (Figure 3). The maximum phase shift observed after an instantaneous translation of the limit cycle of the Wilson-Cowan oscillator is inversely proportional to the intensity of this perturbation (Figure 3 Thumbnail), which means that a population of oscillators must be perturbed by a sufficiently intense stimulus to be synchronized. Indeed, isochrons form spirals, which diverge from one another far from the limit cycle, and synchronization is observed if the translation of the limit cycle falls between two isochrons having close phases f1 and f2. These isochrons can be reached by translating the limit cycle C sufficiently far from its initial position [75,76,77]: this is possible if attraction basins are sufficiently wide, and hence, if the isochronal entropy Emattractor is sufficiently large. Hence, if Eattractor can serve as an index of complexity of the attractor landscape, Emattractor can serve more precisely as an index of synchronizability, which is particularly interesting in the study of biological clocks and the entrainment of biological rhythms.
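The Hopf bifurcation at λτ = 1 can be checked numerically on one commonly used form of the Wilson-Cowan oscillator, dx/dt = −x/τ + tanh(λx) − tanh(λy), dy/dt = −y/τ + tanh(λx) + tanh(λy). This specific system is an assumption made for the sketch below (it is consistent with a bifurcation at λτ = 1 and a period close to 2π for τ = 1), as are the function names and the integration settings.

```python
import cmath
import math

def jacobian_eigenvalues_at_origin(lam, tau):
    """Eigenvalues of the Jacobian J = [[a, -b], [b, a]] of the assumed system at (0, 0)."""
    a = lam - 1.0 / tau   # diagonal entries
    b = lam               # off-diagonal magnitude
    trace, det = 2 * a, a * a + b * b
    root = cmath.sqrt(trace * trace - 4 * det)
    return (trace + root) / 2, (trace - root) / 2

def field(x, y, lam, tau):
    """Right-hand side of the assumed Wilson-Cowan equations (threshold equal to 0)."""
    return (-x / tau + math.tanh(lam * x) - math.tanh(lam * y),
            -y / tau + math.tanh(lam * x) + math.tanh(lam * y))

def final_radius(lam, tau, x=0.1, y=0.0, dt=0.05, steps=4000):
    """Distance to the origin after integrating with a classical RK4 scheme."""
    for _ in range(steps):
        k1 = field(x, y, lam, tau)
        k2 = field(x + 0.5 * dt * k1[0], y + 0.5 * dt * k1[1], lam, tau)
        k3 = field(x + 0.5 * dt * k2[0], y + 0.5 * dt * k2[1], lam, tau)
        k4 = field(x + dt * k3[0], y + dt * k3[1], lam, tau)
        x += dt * (k1[0] + 2 * k2[0] + 2 * k3[0] + k4[0]) / 6
        y += dt * (k1[1] + 2 * k2[1] + 2 * k3[1] + k4[1]) / 6
    return math.hypot(x, y)

for lam in (0.9, 1.1):
    ev = jacobian_eigenvalues_at_origin(lam, 1.0)
    print(lam, ev[0], final_radius(lam, 1.0))
```

Below the bifurcation (λ = 0.9) the real part of the eigenvalues is negative and the trajectory spirals into the origin; above it (λ = 1.1) the origin is repulsive and the trajectory settles at a finite distance from it, as expected for a limit cycle.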

3.2. Energy, Frustration and Dynamic Entropy

We first define the energy U and frustration F functions for a genetic network N with n genes in interaction [1,2,15,16,17,18,19,20,21,22,23,36,37,38,39,40,41,42,43,44,78]:

$$\forall x \in E,\quad U(x) = \sum_{i,j=1,n} \alpha_{ij} x_i x_j = Q_+(N) - F(x)$$

where x is a configuration of gene expression (xi = 1, if the gene i is expressed, and xi = 0, if not), E denotes the set of all configurations of gene expression, that is, for a Boolean network, the hypercube {0,1}^n, and αij = sign(wij) is the sign of the interaction weight wij, which quantifies the influence of the gene j on the gene i: αij = −1 (respectively +1), if j is an inhibitor (respectively activator) of the expression of i, and αij = 0, if j exerts no influence on i. Q+(N) is equal to the number of positive edges of the interaction graph G of the network N having n genes, whose incidence matrix is A = (αij)i,j=1,n. F(x) denotes the global frustration of x; i.e., the number of pairs of genes (i,j) for which the state values xi and xj are contradictory with the sign αij of the influence of j on i:

$$F(x) = \sum_{i,j=1,n} F_{ij}(x)$$

where Fij is the local frustration of the pair (i,j) defined by:

Fij(x) = 1, if {αij = 1 ∧ {{xj = 1 ∧ xi = 0} ∨ {xj = 0 ∧ xi = 1}}} ∨ {αij = −1 ∧ {{xj = 1 ∧ xi = 1} ∨ {xj = 0 ∧ xi = 0}}}, else Fij(x) = 0.
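The local frustration rule translates directly into code: an activation (αij = 1) is frustrated when the two states differ, and an inhibition (αij = −1) when they coincide. A minimal Python sketch, with illustrative function names and toy circuits:

```python
def local_frustration(alpha_ij, xi, xj):
    """F_ij(x): 1 if the states of i and j contradict the sign of the action of j on i."""
    if alpha_ij == 1:
        return int(xi != xj)   # activation is frustrated when the states differ
    if alpha_ij == -1:
        return int(xi == xj)   # inhibition is frustrated when the states coincide
    return 0                   # no interaction, no frustration

def global_frustration(alpha, x):
    """F(x): sum of the local frustrations over all ordered pairs (i, j)."""
    n = len(x)
    return sum(local_frustration(alpha[i][j], x[i], x[j])
               for i in range(n) for j in range(n))

positive_circuit = [[0, 1], [1, 0]]    # two genes activating each other
negative_circuit = [[0, -1], [1, 0]]   # gene 1 inhibits gene 0, gene 0 activates gene 1

print(global_frustration(positive_circuit, (1, 1)))  # 0: both activations satisfied
print(global_frustration(positive_circuit, (1, 0)))  # 2: both ordered pairs frustrated
print([global_frustration(negative_circuit, x)
       for x in [(0, 0), (0, 1), (1, 0), (1, 1)]])   # [1, 1, 1, 1]
```

In the positive circuit of length two, a single frustrated pair of genes contributes 2 to F because both ordered pairs (i,j) and (j,i) are counted, whereas the negative circuit cannot be fully satisfied by any configuration.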

We choose for the dynamics of the network the Hopfield rule (1). It corresponds to a Markov operator defined on the state space E provided with a sequential updating schedule for the expression of the genes in a predefined order [1,2]. Let M = (Mxy) denote its Markov matrix, giving the transition probability (defined by the rule (1) and this sequential updating mode) to go from the configuration x to the configuration y in E, and μ = {µx = μ({x})}x∊E denote its stationary distribution on E. The dynamic entropy E is then defined as the Kolmogorov-Sinaï entropy of M by the classical formula:

$$E = -\sum_{x,y \in E} \mu_x M_{xy} \log_2 M_{xy}$$

In the sequential updating mode defined by the order of the nodes equal to the integer order on N = {1,…,n}, by denoting I = {1,…,i − 1}, N\I = {i,…,n} and X (respectively Y) the set of the indices i such that xi = 1 (respectively yi = 1), we have for the general coefficient of the transition matrix M:

and for the invariant measure, the classical Gibbs measure µ [1,2]:

When T = 0, the invariant measure μ is concentrated on the m (≤ 2^n) deterministic attractors Ak of size ak of the network dynamics (e.g., two fixed points and a limit cycle in Figure 4 Top) and we have:

$$E = 0$$

because each line of M is reduced to one coefficient equal to one, the others being equal to 0.

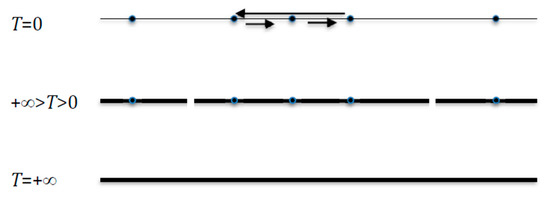

Figure 4.

The invariant measure μ is scattered uniformly. Top: if T = 0, over deterministic attractors (two fixed points and a limit cycle). Middle: if +∞ > T > 0, over attraction basins of these attractors, considered as final classes of the Markov transition matrix. Bottom: if T = +∞, over the whole state space E.

When +∞ > T > 0, if μ becomes scattered uniformly on the attraction basins of the m deterministic attractors supposed to be fixed points of the network dynamics (cf. Figure 4 Middle), they are transiently the final classes of the Markov transition matrix related to the dynamics (9), and are denoted Ak of size card(Ak) = ak. Then we have, when this scattering is observed, the approximate formula:

which can be written as:

where ABRS(A) = Σx∊B(A)∪A μx = μ(B(A)∪A).

When T tends to +∞, μ tends to be scattered uniformly over E (cf. Figure 4 Bottom) and E = log2 2^n = n. More generally, we have:

$$E = E_v - E_\mu \qquad (13)$$

where Ev and Eµ denote, respectively, the entropy of the joint measure v (vxy = µxMxy) on E² and the entropy of the invariant measure µ, which can be estimated by Eattractor if the attractors are fixed points, and by Emattractor if the attractor is a limit-cycle.

When T = +∞, v and μ are scattered uniformly on E² and E, respectively, and we have: E = log2((2^n)²) − log2 2^n = 2n − n = n.

When T = 0, if v is concentrated on a set of states in E² and µ is concentrated on the projection of this set of states on E, then they have the same entropy and E = 0. In between (when +∞ > T > 0), Equation (12) constitutes an estimator of E, if T is large enough.
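The two limiting values of the dynamic entropy, and its decomposition as the joint entropy minus the entropy of the invariant measure, can be checked numerically on small Markov matrices. The Python sketch below is illustrative only: the function names and the example matrices are assumptions, and the stationary distribution is obtained by a lazy power iteration started from the uniform measure, which for a reducible chain selects one invariant measure among several.

```python
import math

def stationary_distribution(M, iters=2000):
    """Invariant measure of M by lazy power iteration mu <- (mu + mu M) / 2."""
    n = len(M)
    mu = [1.0 / n] * n
    for _ in range(iters):
        pushed = [sum(mu[x] * M[x][y] for x in range(n)) for y in range(n)]
        mu = [0.5 * (a + b) for a, b in zip(mu, pushed)]
    return mu

def shannon(p):
    """Shannon entropy in bits of a probability vector."""
    return -sum(q * math.log2(q) for q in p if q > 0)

def ks_entropy(M, mu):
    """Kolmogorov-Sinai entropy -sum_x,y mu_x M_xy log2 M_xy of a Markov chain."""
    n = len(M)
    return -sum(mu[x] * M[x][y] * math.log2(M[x][y])
                for x in range(n) for y in range(n) if M[x][y] > 0)

def joint_entropy(M, mu):
    """Entropy of the joint measure v_xy = mu_x M_xy."""
    n = len(M)
    return shannon([mu[x] * M[x][y] for x in range(n) for y in range(n)])

M = [[0.9, 0.1], [0.5, 0.5]]
mu = stationary_distribution(M)
E = ks_entropy(M, mu)
print(mu, E, joint_entropy(M, mu) - shannon(mu))

uniform = [[0.25] * 4 for _ in range(4)]                          # T = +infinity, n = 2 genes
cycle = [[0, 1, 0, 0], [0, 0, 1, 0], [0, 0, 0, 1], [1, 0, 0, 0]]  # T = 0, deterministic
print(ks_entropy(uniform, stationary_distribution(uniform)))
print(ks_entropy(cycle, stationary_distribution(cycle)))
```

The uniform matrix on the four states of a two-gene network gives an entropy equal to n = 2, and the deterministic cycle gives 0, in agreement with the two limiting cases discussed above.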

3.3. Dynamic Entropy, Index of Robustness

The problem of robustness of a biological network controlling important functions, such as morphogenesis, memory, cell division, etc., has often been considered over the past 80 years [79,80,81] under different names (structural stability, resilience, bifurcation, etc.), but is still pertinent [82,83].

The dynamic entropy E serves as an index of robustness, being related (as Kolmogorov-Sinaï entropy) to the ability a system has to return to its equilibrium after exogenous or endogenous perturbations [23,43].

By considering a Hopfield rule with all non-zero interaction weights wij having the same absolute value c, we will study the robustness of the network in response to the variations of c, by proving the following Propositions [39,42]:

Proposition 1.

Let us consider a deterministic Hopfield Boolean network, which is a circuit sequentially or synchronously updated with constant absolute value c for its non-zero interaction weights. Then, its dynamics are conservative, keeping constant on the trajectories the Hamiltonian function L defined by:

where H denotes the classical Heaviside function. L(x(t)) is the total discrete kinetic energy, equal to half of the global dynamic frustration F(x(t)) = ∑i=1,n Fi,(i−1)modn(x(t)), where Fi,(i−1)modn is the local dynamic frustration defined between nodes (i−1) and i as follows:

Fi,(i−1)(x(t)) = 1, if {sign(wi(i−1)) = 1 ∧xi(t) ≠ xi−1(t − 1)} ∨ {sign(wi(i−1)) = −1 ∧ xi(t) = xi−1(t − 1)}, else Fi,(i−1)(x(t)) = 0.

Proposition 1 still holds if the network is a circuit whose transition functions are Boolean identity or negation, a circuit on which it is easy to calculate the global frustration F and to show that it characterizes attractors by remaining constant along them and decreasing inside their attraction basin, as in discrete gradient dynamics (Figure 5 Left) [84].

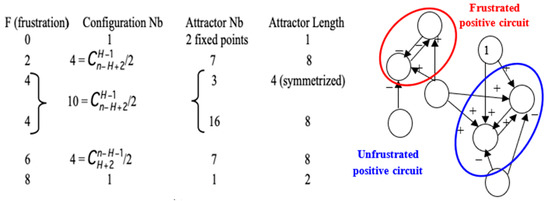

Figure 5.

Left: Description of the attractors of circuits of length eight for which Boolean local transition functions are either identity or negation. Right: Frustrated pair of nodes belonging to a positive circuit of length two in the genetic network controlling the flowering of Arabidopsis thaliana. The network evolves by diminishing the global frustration until the attractor on which the global frustration remains constant.

Let us now consider the quantity ∂E/∂c, which denotes the derivative of the dynamic entropy E with respect to the common absolute value c. ∂E/∂c can be considered as the capacity of the robustness parameter E to resist environmental variations of the network weights, and we have from formula (13): ∂E/∂c = ∂Ev/∂c − ∂Eµ/∂c. Then we can prove the following result [29,42]:

Proposition 2.

If the updating mode of the Hopfield network is sequential, we have:

∂Eµ/∂c = −cVarµ(F),

where the variance Varµ is taken for the invariant Gibbs measure µ defined by:

μx = exp((Σi,j=1,n cαijxixj − θ)/T)/Z, with Z = Σy∊E exp((Σi,j=1,n cαijyiyj − θ)/T).

Proof:

We have:

Eµ = −Σx∊E μxlog2µx, then ∂Eµ/∂c = −Σx∊E ∂μx/∂c log2µx − Σx∊E μx ∂log2µx/∂c,

where ∂μx/∂c = ∂[exp((Σi,j=1,n cαijxixj − θ)/T)/Z]/∂c; Ni is the neighborhood of i made of the nodes j such that αij ≠ 0, Z = Σy∊E exp((Σi,j=1,n cαijyiyj − θ)/T) and ∂Z/∂c = Σy∊E (Σi,j=1,n αijyiyj/T)Zμy. Then:

∂μx/∂c = [∂exp((Σi,j=1,n cαijxixj − θ)/T)/∂c]/Z − exp((Σi,j=1,n cαijxixj − θ)/T)(∂Z/∂c)/Z²

= (Σi,j=1,n αijxixj/T)μx − Σy∊E (Σi,j=1,n αijyiyj/T)μyμx,

which implies:

∂μx/∂c log2µx = [c(Σi,j=1,n αijxixj/T)²μx − Σy∊E c(Σi,j=1,n αijyiyj/T)(Σi,j=1,n αijxixj/T)μyμx]/Log2 − ∂μx/∂c log2Z

and

μx ∂log2µx/∂c = (∂μx/∂c)/Log2.

Hence, we have:

∂Eµ/∂c = −Σx∊E ∂μx/∂c log2µx − Σx∊E μx ∂log2µx/∂c

= −c[Eµ(U²) − (Eµ(U))²]/Log2 + Σx∊E [∂μx/∂c log2Z − ∂µx/∂c]/Log2,

but Σx∊E ∂μx/∂c = ∂(Σx∊E μx)/∂c = 0, therefore ∂Eµ/∂c = −cVarµ(U) = −cVarµ(F) □

Let us now define the local cross-frustration: Gij(x,y) = 1 if αij = 1 and xiyj = 0, or if αij = −1 and xiyj = 1, and Gij(x,y) = 0 otherwise; the global cross-frustration is G(x,y) = Σi,j=1,n Gij(x,y). We can remark that G(x,x) = F(x). The local V(x,y) and conditional V(x) cross-energy functions are respectively defined as:

V(x,y) = Σi,j=1,nαijxiyj/T = Q+(N) – G(x,y) and V(x) = Σy∊EV(x,y).

By considering the conditional entropy Ex = −Σy∊E Mxy log2Mxy, where, in the parallel updating mode:

and the invariant measure µx equals:

μx = Σy∊E exp((Σi,j=1,n cαijxiyj − θ)/T)/Z, with Z = Σx,y∊E exp((Σi,j=1,n cαijxiyj − θ)/T)

Then, the following Propositions hold:

Proposition 3.

In parallel updating mode, we have: ∂Ex/∂c = −cVarxG, where the variance Varx is taken for the conditional measure {Mxy}y∊E.

Proof:

It is the same proof as for Proposition 2 by replacing F with G. □

Let us now remark that the dynamic entropy E is related to the conditional entropy Ex as follows:

Proposition 4.

In parallel updating mode, we have: ∂E/∂c = −cVarvG + Covv(J,G), where Varv and Covv are respectively the variance and the covariance taken for the random variables G(x,y) and J(x,y) = log2Mxy and for the joint measure v = μxMxy.

Proof:

We have: ∂E/∂c = Σx∊E (μx ∂Ex/∂c + Ex ∂μx/∂c). Hence, from Proposition 3, we get:

∂E/∂c = −cVarvG + Σx∊E Ex ∂μx/∂c,

with ∂μx/∂c = [Σy∊E ∂exp((Σi,j=1,n cαijxiyj − θ)/T)/∂c]/Z − [Σy∊E exp((Σi,j=1,n cαijxiyj − θ)/T)](∂Z/∂c)/Z², where Z = Σx,y∊E exp((Σi,j=1,n cαijxiyj − θ)/T) and ∂Z/∂c = Σx,y∊E (Σi,j=1,n αijxiyj/T)exp((Σi,j=1,n cαijxiyj − θ)/T).

Then, we have:

∂μx/∂c = Σy∊E; i,j=1,n αijxiyj exp((Σi,j=1,n cαijxiyj − θ)/T)/ZT − μxΣx,y∊E; i,j=1,n αijxiyj exp((Σi,j=1,n cαijxiyj − θ)/T)/ZT

= μx (Ex(G) − Ev(G))

and Σx∊E Ex ∂μx/∂c = Σx∊E μx Ex Ex(G) − E Eµ(G) = Ev(JG) − Ev(J)Ev(G).

Finally, we get: ∂E/∂c = −cVarvG + Covv(J,G) □

We have shown in the Propositions above a direct link between the sensitivity to c of entropies Eµ, Ex and E, and the variability of frustrations of the network; e.g., if the network is sequentially updated, Eµ decreases when the variance of the global frustration F increases, because of Equation (15): ∂Eµ/∂c = −cVarµF [39].

Remarks:

- (1) Proposition 2 still holds when we replace c, the absolute value of the weights wij, by the temperature T, and we have: ∂Eµ/∂T = Varµ(F)/T³.

- (2) Proposition 2 shows that there is a close relationship between the robustness (or structural stability) with respect to variations of the parameter c and the global dynamic frustration F. This global dynamic frustration F is in general easy to calculate. For the configuration x of the genetic network controlling the flowering of Arabidopsis thaliana [37], there is only one frustrated pair; hence, F(x) = 2 (Figure 5 Right). More generally, all the thermodynamic functions introduced here are calculable in theory and could serve to quantify the trajectory (Lyapunov) stability and the structural stability. If the number of states of a discrete state space E is too large, or if integrals on a continuous state space E are too difficult to calculate, it is possible to use Monte Carlo procedures to obtain the values of the variance of the global frustration and of the dynamic entropy.
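For a small network, the Gibbs measure, its entropy and the variance of the energy can be obtained by exact enumeration instead of Monte Carlo sampling, which allows a numerical check of the sign and size of ∂Eµ/∂c. The Python sketch below is an illustration under stated assumptions: the function name, the three-gene circuit and the finite-difference step are hypothetical, the measure is taken proportional to exp((cU(x) − θ)/T), and the factors 1/T² and 1/ln 2 are kept explicit, whereas the statement of Proposition 2 absorbs such constants.

```python
from itertools import product
import math

def gibbs_statistics(alpha, c, T=1.0, theta=0.0):
    """Entropy (bits) of the Gibbs measure and variance of U, by exact enumeration."""
    n = len(alpha)
    states = list(product((0, 1), repeat=n))
    # Energy U(x) = sum_ij alpha_ij x_i x_j for every configuration x.
    U = [sum(alpha[i][j] * x[i] * x[j] for i in range(n) for j in range(n))
         for x in states]
    weights = [math.exp((c * u - theta) / T) for u in U]
    Z = sum(weights)
    mu = [w / Z for w in weights]
    entropy = -sum(p * math.log2(p) for p in mu if p > 0)
    mean_U = sum(p * u for p, u in zip(mu, U))
    var_U = sum(p * (u - mean_U) ** 2 for p, u in zip(mu, U))
    return entropy, var_U

# Hypothetical 3-gene circuit with one inhibition (a negative circuit).
alpha = [[0, 1, 0], [0, 0, -1], [1, 0, 0]]

entropy_0, _ = gibbs_statistics(alpha, 0.0)
entropy_1, var_1 = gibbs_statistics(alpha, 1.0)
h = 1e-5  # step of the central finite difference
slope = (gibbs_statistics(alpha, 1.0 + h)[0] - gibbs_statistics(alpha, 1.0 - h)[0]) / (2 * h)
print(entropy_0, entropy_1, slope, -1.0 * var_1 / math.log(2))
```

At c = 0 the measure is uniform and its entropy equals n = 3 bits; for c = 1 and T = 1 the finite-difference slope of the entropy agrees with −c Varµ(U)/ln 2, so the entropy decreases as the common weight c grows.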

3.4. Entropy Centrality of Nodes

There are four classical types of centrality in an interaction graph G (Figure 2 Top). The betweenness centrality [85] is the first type and is defined for a node k as follows:

where σij(k) is the number of shortest paths going from j to i through k, and σk = Σi≠j∊G σij(k).

The degree centrality is the second type of classical centrality. It is defined from the notions of in-, out- or total-degree of a node i, which correspond to the number of arrows of the interaction graph G having i as end, as start, or as either, respectively. For example, the in-degree centrality is defined by:

where aij denotes the general coefficient of the signed incidence matrix A of the graph G.

The closeness centrality is the third type of classical centrality. Closeness, as the inverse of the farness, is defined by considering the inverse of an average distance between i and j, over all nodes j of the interaction graph G:

where the distance chosen here is l(i,j), the length of the shortest path between i and j.

The eigen-centrality or spectral centrality is the last classical centrality. It takes into account the fact that neighbors of a node i are possibly highly connected to the interaction graph, considering that connections to (possibly few) highly connected nodes contribute to the centrality of i as much as connections to (possibly numerous) weakly connected nodes. Hence, the eigenvector centrality Cieigen of the gene i measures more the global influence of i on the whole network and verifies [86]:

where λ is the greatest eigenvalue of the incidence matrix of the interaction graph G.

The four centralities above can be very different (Figure 6), but each has a specific interest: (i) betweenness centrality relates to the global connectivity with all nodes of the network, (ii) degree centralities correspond to the local connectivity with only nearest nodes, (iii) closeness centrality measures the proximity with other nodes using a distance on the interaction graph and (iv) eigen-centrality quantifies the ability of a node to be connected to nodes, possibly only a few, but with a high connectivity; hence, identifying important hub-relays controlling a wide sub-network.
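Two of the classical centralities can be computed directly from the incidence matrix. The Python sketch below uses standard textbook definitions as assumptions: the in-degree of i is the number of non-zero entries aij on row i, and the eigenvector centrality is obtained by power iteration on |A| + I, a shift that keeps the eigenvectors of |A| while avoiding oscillations on bipartite graphs. Taking absolute values of the signed matrix, and the function names, are choices made for this illustration.

```python
import math

def in_degree(A):
    """In-degree of each node i: number of arrows j -> i, i.e., sum over j of |a_ij|."""
    return [sum(abs(a) for a in row) for row in A]

def eigen_centrality(A, iters=200):
    """Leading eigenvector of |A| by power iteration on |A| + I, scaled to maximum 1."""
    n = len(A)
    v = [1.0] * n
    for _ in range(iters):
        w = [v[i] + sum(abs(A[i][j]) * v[j] for j in range(n)) for i in range(n)]
        top = max(w)
        v = [wi / top for wi in w]
    return v

# Undirected path graph 0 - 1 - 2, encoded as a symmetric matrix.
A = [[0, 1, 0], [1, 0, 1], [0, 1, 0]]
print(in_degree(A))          # [1, 2, 1]
print(eigen_centrality(A))   # the middle node has the largest centrality
```

On this path graph, the two measures agree on the ranking of the nodes but not on the values: the end nodes have half the degree of the middle node, and 1/√2 of its eigenvector centrality.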

Figure 6.

Comparison between classical types of centrality in an interaction graph, where the diameter of the point representing a node of the network is proportional to its centrality value. Left: eigen-centrality in an interaction graph corresponding to friendship relations in a high school. Middle: total-degree centrality (same interaction graph as on the left). Right: entropy centrality in a quasi-symmetric graph of friendship.

As a complement to the classical centralities, we now introduce a new notion of centrality, called entropy centrality, which takes into account the heterogeneity of the distribution of the states of the neighbors of a node i, and not only its connectivity in the graph, and which highlights the symmetric sub-graphs having equi-distributed states in the neighborhoods of their nodes (Figure 6):

$$C_i^{entropy} = -\sum_{k=1}^{s} v_k \log_2 v_k,$$

where $v_k$ denotes the kth frequency among s (s = 2 in the Boolean case) in the histogram of the state values observed in the neighborhood $V_i$ of the node i, that is, the set of the nodes out- or in-linked to the node i.
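A minimal sketch of how the entropy centrality of a node could be computed from the states of its neighbors is given below. The data structures (a dictionary of neighborhoods and a dictionary of states), the function name `entropy_centrality` and the toy values are assumptions made for illustration; a node without neighbors is arbitrarily given a centrality of 0.

```python
from collections import Counter
from math import log2


def entropy_centrality(node, neighbors, state):
    """Shannon entropy (base 2) of the states observed around `node`.

    neighbors: dict mapping each node to the nodes out- or in-linked to it.
    state: dict mapping each node to its current state (0 or 1 if Boolean).
    """
    values = [state[j] for j in neighbors[node]]
    if not values:
        return 0.0  # convention chosen here for isolated nodes
    total = len(values)
    # Frequencies v_k of each observed state value in the neighborhood.
    frequencies = [count / total for count in Counter(values).values()]
    return -sum(v * log2(v) for v in frequencies)


# Hypothetical Boolean example: node "a" has four neighbors.
neighbors = {"a": ["b", "c", "d", "e"]}
balanced = {"b": 1, "c": 0, "d": 1, "e": 0}   # equi-distributed states
uniform = {"b": 1, "c": 1, "d": 1, "e": 1}    # all neighbors in the same state
h_balanced = entropy_centrality("a", neighbors, balanced)
h_uniform = entropy_centrality("a", neighbors, uniform)
```

In the Boolean case the value ranges from 0 (all neighbors in the same state) to 1 (states equally distributed), so a high value signals a heterogeneous neighborhood.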

4. Application to a Real Genetic Network

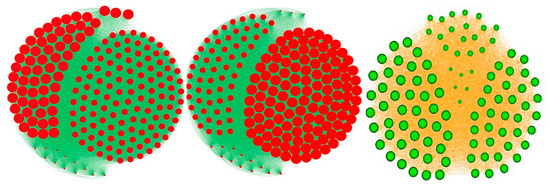

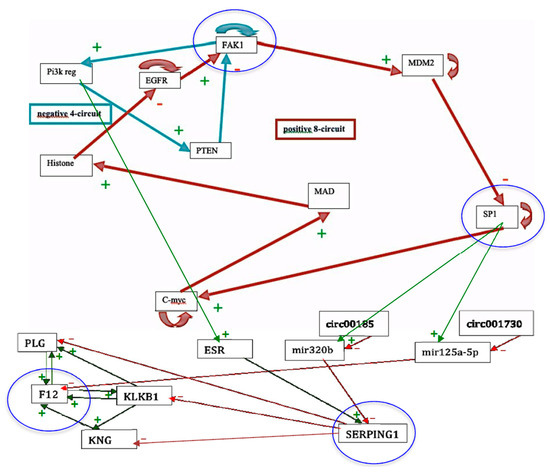

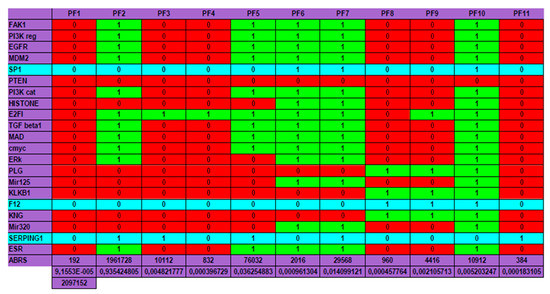

The genetic regulatory network controlling kinins and kallikrein, described in Figure 7, corresponds to genes involved in a familial disease, angioedema, for which there are normal and pathologic profiles of genetic expression, particularly in the hereditary form of the disease. By studying the connectivity of the nodes of the interaction graph of the network, we can select four critical genes having both a high total degree (≥5) and a high entropy centrality: FAK1, SP1, F12 and SERPING1. Surprisingly, the last three are the most involved in the etiology of angioedema, being susceptible to mutations and/or hormonal interactions that favor the appearance of the disease. The four genes are circled in blue in the network of Figure 7, and three of them appear on a blue line in Figure 8.

Figure 7.

The genetic regulatory network ruling the expression of the genes involved in the angioedema disease. Genes circled in blue are those having a total-degree centrality more than five.

Figure 8.

The genetic regulatory network ruling the expression of the genes involved in the angioedema disease.

$E_{attractor}$ can be evaluated by the quantity $-\sum_{k=1}^{m} \mathrm{ABRS}(A_k)\log_2[\mathrm{ABRS}(A_k)]$, where $\mathrm{ABRS}(A_k)$ is equal to the size of the attraction basin of the kth attractor $A_k$ among the m attractors of the network dynamics, divided by $2^n$, where n is the number of genes of the studied network N (equal to 21 in the present case, supposing that circular RNAs are absent). In the present example, we have $E_{attractor} \approx 0.4520$. Hence, $E \approx \log_2 2^n - E_{attractor} \approx 21 - 0.4520 \approx 20.55$, which corresponds to a high value of the dynamic entropy, and consequently to a high structural stability of the genetic network N controlling the kinin-kallikrein system.
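The arithmetic of this paragraph can be checked with a few lines of code. The sketch below assumes that the attraction basins partition the 2^n states; the function names and the three-gene basin sizes are hypothetical, and only the values n = 21 and 0.4520 come from the text.

```python
from math import log2


def attractor_entropy(basin_sizes, n_genes):
    """Entropy of the relative basin sizes ABRS(A_k) = |basin_k| / 2**n."""
    total_states = 2 ** n_genes
    abrs = [size / total_states for size in basin_sizes]
    return -sum(p * log2(p) for p in abrs if p > 0)


def dynamic_entropy(n_genes, e_attractor):
    """E = log2(2**n) - E_attractor = n - E_attractor."""
    return n_genes - e_attractor


# Hypothetical 3-gene network: 8 states split into basins of 4, 2 and 2 states.
e_toy = attractor_entropy([4, 2, 2], n_genes=3)

# Values quoted in the text for the 21-gene network.
e_network = dynamic_entropy(21, 0.4520)
```

With the values quoted above, `dynamic_entropy(21, 0.4520)` gives 20.548, which rounds to the 20.55 reported in the text.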

We can notice in Figure 8 (top) that the physiologic attractor is a fixed point (PF3) whose attraction basin contains 93.43% of the possible states of the network. The pathologic attractor corresponding to the hereditary form of angioedema is the fixed point PF10, at which the gene SERPING1 is absent and the genes KLKB1, coding for kallikrein B1, and KNG, coding for kininogen, are present. The attraction basin of PF10 has a relative size of about 5‰ of the possible states of the network, while the observed prevalence of hereditary angioedema in the general population is reported to range from 1:10,000 to 1:150,000, without major sex or ethnic differences [87,88].

A last remark concerns the number of attractors (11 fixed points and no periodic attractor in Figure 8). A previous work [40] gives an algebraic formula for calculating the number of attractors of a Boolean network whose Jacobian interaction graph contains two tangential circuits (Table 1): one positive circuit of length eight (involved in the richness in attractors, as predicted by [32,33,34]) and one negative circuit of length four (responsible for the trajectory stability, as predicted by [36,89]). This predicted number (11) is the same as that calculated from the simulation of all trajectories from all possible initial conditions, summarized in Figure 8. More results, both theoretical and applied to real Boolean networks, can be found in more recent literature [46,47,48,49,90], showing a large spectrum of possible applications in genetic, metabolic or social Boolean networks.
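The simulation of all trajectories from all possible initial conditions can be sketched as follows for a small network. This is only an illustration under stated assumptions: a deterministic threshold rule with parallel updating, the convention that a gene is set to 1 only when its net input strictly exceeds its threshold, and a hypothetical two-gene positive circuit; it is not the 21-gene network of Figure 7, and the function names are ours.

```python
from itertools import product


def step(x, w, theta):
    """Parallel update: gene i becomes 1 if sum_j w[i][j]*x[j] - theta[i] > 0."""
    n = len(x)
    return tuple(
        1 if sum(w[i][j] * x[j] for j in range(n)) - theta[i] > 0 else 0
        for i in range(n)
    )


def attractors_and_basins(w, theta):
    """Follow every initial state until it enters a cycle.

    Returns a dict mapping each attractor (a tuple of states, rotated so
    that it starts at its smallest state) to the size of its basin.
    """
    n = len(w)
    basins = {}
    for x0 in product((0, 1), repeat=n):
        position = {}
        trajectory = []
        x = x0
        while x not in position:
            position[x] = len(trajectory)
            trajectory.append(x)
            x = step(x, w, theta)
        cycle = trajectory[position[x]:]
        start = cycle.index(min(cycle))
        key = tuple(cycle[start:] + cycle[:start])
        basins[key] = basins.get(key, 0) + 1
    return basins


# Hypothetical positive circuit of length 2: each gene activates the other.
w = [[0, 1], [1, 0]]
theta = [0.5, 0.5]
basins = attractors_and_basins(w, theta)
```

For this toy circuit the enumeration finds two fixed points, (0, 0) and (1, 1), and one cycle of length 2 specific to parallel updating. The cost grows as 2^n, so for larger networks caching the attractor already reached by each visited state would avoid recomputing shared trajectories.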

Table 1.

Total number of attractors (here 11 in red) of two tangential circuits at the intersection of a line fixing the length L of a negative circuit (here 4) and a column fixing the length R of a positive circuit (here 8) tangential to the negative one.

5. Conclusions and Perspectives

The notion of dynamic entropy is common to both discrete and continuous dynamical systems and can be applied to numerous situations in biological modeling in order to interpret the dynamical behavior of complex systems having several interacting elements, whose many observed trajectories lead to their ultimate asymptotic behavior, the attractors. Biological regulatory networks are currently widely used to model the mechanisms of these interactions at different scales, from genes and cells [68,91,92] to individuals [46,93]. Between these extreme molecular and population levels, identical functions can be studied in different species, from paramecia and plants such as Bidens pilosus to humans, for example the memory function [49,94] or motion control [95,96,97]. Because the corresponding regulatory systems are often random, future work will be done in the framework of general random systems [98], in which the notions of entropy, stochastic attractor (confiner) and invariant measure can help to interpret and classify the observed data in many regulatory networks (neural, genetic, metabolic, social, etc.), and in biomedical fields for practical applications (see, for example, [61,99]).

Author Contributions

The four authors contributed to the research described in the article and have read and approved the final manuscript.

Funding

This research received no external funding.

Acknowledgments

We are indebted to the Project PHC Maghreb SCIM (Complex Systems and Medical Engineering) for providing administrative support.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Demongeot, J.; Jezequel, C.; Sené, S. Asymptotic behavior and phase transition in regulatory networks. I. Theoretical results. Neural Netw. 2008, 21, 962–970.

- Demongeot, J.; Sené, S. Asymptotic behavior and phase transition in regulatory networks. II. Simulations. Neural Netw. 2008, 21, 971–979.

- Demongeot, J.; Elena, A.; Sené, S. Robustness in neural and genetic networks. Acta Biotheor. 2008, 56, 27–49.

- Goles, E.; Olivos, J. Comportement itératif des fonctions à multiseuil. Inf. Control 1980, 45, 300–313.

- Goles, E. Sequential iteration of threshold functions. Springer Ser. Synerg. 1981, 9, 64–70.

- Fogelman-Soulié, F.; Goles, E.; Weisbuch, G. Specific roles of the different Boolean mappings in random networks. Bull. Math. Biol. 1982, 44, 715–730.

- Goles, E. Dynamics of positive automata networks. Theor. Comp. Sci. 1985, 41, 19–32.

- Goles, E.; Fogelman-Soulie, F.; Pellegrin, D. Decreasing energy functions as a tool for studying threshold networks. Discrete Appl. Math. 1985, 12, 261–277.

- Cosnard, M.; Goles, E.; Moumida, D. Bifurcation structure of a discrete neuronal equation. Discret. Appl. Math. 1988, 21, 21–34.

- Demongeot, J.; Jacob, C. Confineurs: Une approche stochastique. Comptes Rendus Acad. Sci. Série I 1989, 309, 699–702.

- Demongeot, J.; Benaouda, D.; Jezequel, C. “Dynamical confinement” in neural networks and cell cycle. Chaos Interdiscip. J. Nonlinear Sci. 1995, 5, 167–173.

- Wentzell, A.D.; Freidlin, M.I. On small random perturbations of dynamical systems. Russ. Math. Surv. 1970, 25, 1–55.

- Donsker, M.D.; Varadhan, S.R.S. Asymptotic evaluation of certain Markov process expectations for large time. Comm. Pure Appl. Math. 1975, 28, 1–47.

- Freidlin, M.I.; Wentzell, A.D. Random Perturbations of Dynamical Systems; Springer: Berlin/Heidelberg, Germany, 1984.

- Demetrius, L. Statistical mechanics and population biology. J. Stat. Phys. 1983, 30, 709–753.

- Demetrius, L.; Demongeot, J. A thermodynamic approach in the modeling of the cellular cycle. Biometrics 1984, 40, 259–260.

- Demongeot, J.; Demetrius, L. La dérive démographique et la sélection naturelle: Etude empirique de la France (1850–1965). Population 1989, 2, 231–248.

- Demetrius, L. Directionality principles in thermodynamics and evolution. Proc. Natl. Acad. Sci. USA 1997, 94, 3491–3498.

- Demetrius, L. Thermodynamics and evolution. J. Theor. Biol. 2000, 206, 1–16.

- Demetrius, L.; Gundlach, M.; Ochs, G. Complexity and demographic stability in population models. Theor. Popul. Biol. 2004, 65, 211–225.

- Demetrius, L.; Manke, T. Robustness and network evolution-an entropic principle. Phys. A Stat. Mech. Its Appl. 2005, 346, 682–696.

- Manke, T.; Demetrius, L.; Vingron, M. An entropic characterization of protein interaction networks and cellular robustness. J. R. Soc. Interface 2006, 3, 843–850.

- Demetrius, L.; Ziehe, M. Darwinian fitness. Theor. Popul. Biol. 2007, 72, 323–345.

- Rochlin, V.A. Exact endomorphisms of Lebesgue spaces. Am. Math. Soc. Transl. 1964, 39, 1–36.

- Rochlin, V.A. Lectures on the theory of entropy of transformations with invariant measure. Russ. Math. Surv. 1967, 22, 1–52.

- Wichert, A.; Moreira, C.; Peter Bruza, P. Balanced Quantum-Like Bayesian Networks. Entropy 2020, 22, 170.

- Downarowicz, T. Symbolic extensions of smooth interval maps. Probab. Surv. 2010, 7, 84–104.

- Ferenczi, S. Complexity of sequences and dynamical systems. Discret. Math. 1999, 206, 145–154.

- Ferenczi, S. Substitution dynamical systems on infinite alphabets. Ann. l’Institut Fourier 2006, 56, 2315–2343.

- Hernández-Orozco, S.; Kiani, N.A.; Zenil, H. Algorithmically probable mutations reproduce aspects of evolution, such as convergence rate, genetic memory and modularity. R. Soc. Open Sci. 2018, 5, 180399.

- Zenil, H.; Kiani, N.A.; Marabita, F.; Deng, Y.; Elias, S.; Schmidt, A.; Ball, G.; Tegnér, J. An Algorithmic Information Calculus for Causal Discovery and Reprogramming Systems. iScience 2019, 19, 1160–1172.

- Delbrück, M. Discussions, In: Unités biologiques douées de continuité génétique. Colloques Int. CNRS 1949, 8, 33–35.

- Thomas, R. Boolean formalization of genetic control circuits. J. Theor. Biol. 1973, 42, 563–585.

- Thomas, R. On the relation between the logical structure of systems and their ability to generate multiple steady states or sustained oscillations. Springer Ser. Synerg. 1981, 9, 180–193.

- Hopfield, J.J. Neural networks and physical systems with emergent collective computational abilities. Proc. Natl. Acad. Sci. USA 1982, 79, 2554–2558.

- Demongeot, J.; Ben Amor, H.; Gillois, P.; Noual, M.; Sené, S. Robustness of regulatory networks. A generic approach with applications at different levels: Physiologic, metabolic and genetic. Int. J. Mol. Sci. 2009, 10, 4437–4473.

- Demongeot, J.; Goles, E.; Morvan, M.; Noual, M.; Sené, S. Attraction basins as gauges of environmental robustness against boundary conditions in biological complex systems. PLoS ONE 2010, 5, e11793.2.

- Demongeot, J.; Elena, A.; Noual, M.; Sené, S.; Thuderoz, F. Immunetworks, intersecting circuits and dynamics. J. Theor. Biol. 2011, 280, 19–33.

- Demongeot, J.; Waku, J. Robustness in biological regulatory networks. I–IV. Comptes Rendus Mathématique 2012, 350, 221–228, 289–298.

- Demongeot, J.; Noual, M.; Sené, S. Combinatorics of Boolean automata circuits dynamics. Discret. Appl. Math. 2012, 160, 398–415.

- Demongeot, J.; Cohen, O.; Henrion-Caude, A. MicroRNAs and Robustness in Biological Regulatory Networks. A Generic Approach with Applications at Different Levels: Physiologic, Metabolic, and Genetic. Springer Ser. Biophys. 2013, 16, 63–114.

- Demongeot, J.; Ben Amor, H.; Hazgui, H.; Waku, J. Stability, complexity and robustness in population dynamics. Acta Biotheor. 2014, 62, 243–284.

- Demongeot, J.; Demetrius, L. Complexity and Stability in Biological Systems. Int. J. Bifurc. Chaos 2015, 25, 40013.

- Demongeot, J.; Hazgui, H.; Henrion Caude, A. Genetic regulatory networks: Focus on attractors of their dynamics. In Computational Biology, Bioinformatics & Systems Biology; Tran, Q.N., Arabnia, H.R., Eds.; Elsevier: New York, NY, USA, 2015; pp. 135–165.

- Demongeot, J.; Elena, A.; Taramasco, C. Social Networks and Obesity. Application to an interactive system for patient-centred therapeutic education. In Metabolic Syndrome: A Comprehensive Textbook; Ahima, R.S., Ed.; Springer: Berlin/Heidelberg, Germany, 2015; pp. 287–307.

- Demongeot, J.; Jelassi, M.; Taramasco, C. From Susceptibility to Frailty in social networks: The case of obesity. Math. Pop. Studies 2017, 24, 219–245.

- Demongeot, J.; Jelassi, M.; Hazgui, H.; Ben Miled, S.; Bellamine Ben Saoud, N.; Taramasco, C. Biological Networks Entropies: Examples in Neural Memory Networks, Genetic Regulation Networks and Social Epidemic Networks. Entropy 2018, 20, 36.

- Demongeot, J.; Sené, S. Phase transitions in stochastic non-linear threshold Boolean automata networks on ℤ2: The boundary impact. Adv. Appl. Math. 2018, 98, 77–99.

- Demongeot, J.; Hazgui, H.; Thellier, M. Memory in plants: Boolean modelling of the learning and store/recall mnesic functions in response to environmental stimuli. J. Theor. Biol. 2019, 467, 123–133.

- Demongeot, J.; Sené, S. About block-parallel Boolean networks: A position paper. Nat. Comput. 2020, 19.

- Erdös, P.; Rényi, A. On random graphs. Pub. Math. 1959, 6, 290–297.

- Barabasi, A.L.; Albert, R. Emergence of scaling in random networks. Science 1999, 286, 509–512.

- Albert, R.; Jeong, H.; Barabasi, A.L. Error and attack tolerance of complex networks. Nature 2000, 406, 378–382.

- Banerji, C.R.S.; Miranda-Saavedra, D.; Severini, S.; Widschwendter, M.; Enver, T.; Zhou, J.X.; Teschendorff, A.E. Cellular network entropy as the energy potential in Waddington’s differentiation landscape. Sci. Rep. 2013, 3, 3039.

- Jeong, H.; Mason, S.P.; Barabasi, A.L.; Oltvai, Z.N. Lethality and centrality in protein networks. Nature 2001, 411, 41–42.

- Gómez-Gardeñes, J.; Latora, V. Entropy rate of diffusion processes on complex networks. Phys. Rev. E 2008, 78, 065102.

- Li, J.; Wang, B.H.; Wang, W.X.; Zhou, T. Network Entropy Based on Topology Configuration and Its Computation to Random Networks. Chin. Phys. Lett. 2008, 25, 4177–4180.

- Teschendorff, A.E.; Severini, S. Increased entropy of signal transduction in the cancer metastasis phenotype. BMC Syst. Biol. 2010, 4, 104.

- Teschendorff, A.E.; Banerji, C.R.S.; Severini, S.; Kuehn, R.; Sollich, P. Increased signaling entropy in cancer requires the scale-free property of protein interaction networks. Sci. Rep. 2015, 5, 9646.

- Teschendorff, A.E.; Sollich, P.; Kuehn, R. Signalling entropy: A novel network theoretical framework for systems analysis and interpretation of functional omic data. Methods 2014, 67, 282–293.

- West, J.; Bianconi, G.; Severini, S.; Teschendorff, A. Differential network entropy reveals cancer hallmarks. Sci. Rep. 2012, 2, 802.

- Chen, B.S.; Wong, S.W.; Li, C.W. On the Calculation of System Entropy in Nonlinear Stochastic Biological Networks. Entropy 2015, 17, 6801–6833.

- Cosnard, M.; Demongeot, J. Attracteurs: Une approche déterministe. Comptes Rendus Acad. Sci. Maths. Série I 1985, 300, 551–556.

- Cosnard, M.; Demongeot, J. On the definitions of attractors. Lect. Notes Maths. 1985, 1163, 23–31.

- Szabó, K.G.; Tél, T. Thermodynamics of attractor enlargement. Phys. Rev. E 1994, 50, 1070–1082.

- Wang, J.; Xu, L.; Wang, E. Potential landscape and flux framework of nonequilibrium networks: Robustness, dissipation, and coherence of biochemical oscillations. Proc. Natl. Acad. Sci. USA 2008, 105, 12271–12276.

- Menck, P.J.; Heitzig, J.; Marwan, N.; Kurths, J. How basin stability complements the linear-stability paradigm. Nat. Phys. 2013, 9, 89–92.

- Kurz, F.T.; Kembro, J.M.; Flesia, A.G.; Armoundas, A.A.; Cortassa, S.; Aon, M.A.; Lloyd, D. Network dynamics: Quantitative analysis of complex behavior in metabolism, organelles, and cells, from experiments to models and back. Wiley Interdiscipl. Rev. Syst. Biol. Med. 2017, 9, e1352.

- Bowen, R. ω-limit sets for Axiom A diffeomorphisms. J. Diff. Equ. 1975, 18, 333–339.

- Williams, R.F. Expanding attractors. Publ. Math. l’IHÉS 1974, 43, 169–203.

- Ruelle, D. Small random perturbations and the definition of attractors. Comm. Math. Phys. 1981, 82, 137–151.

- Haraux, A. Attractors of asymptotically compact processes and applications to nonlinear partial differential equations. Commun. Partial Differ. Equ. 1988, 13, 1383–1414.

- Glade, N.; Forest, L.; Demongeot, J. Liénard systems and potential-Hamiltonian decomposition. III Applications in biology. Comptes Rendus Mathématique 2007, 344, 253–258.

- Tonnelier, A.; Meignen, S.; Bosch, H.; Demongeot, J. Synchronization and desynchronization of neural oscillators: Comparison of two models. Neural Netw. 1999, 12, 1213–1228.

- Robert, F. Discrete Iterations: A Metric Study, Springer Series in Computational Mathematics; Springer: Berlin/Heidelberg, Germany, 1986.

- Ben Amor, H.; Glade, N.; Lobos, C.; Demongeot, J. The isochronal fibration: Characterization and implication in biology. Acta Biotheor. 2010, 58, 121–142.

- Demongeot, J.; Kaufmann, M.; Thomas, R. Positive feedback circuits and memory. Comptes Rendus Acad. Sci. Paris Life Sci. 2000, 323, 69–79.

- Toulouse, G. Theory of the frustration effect in spin glasses: I. Commun. Phys. 1977, 2, 115–119.

- Waddington, C.H. Organizers & Genes; Cambridge University Press: Cambridge, UK, 1940.

- Gardner, M.R.; Ashby, W.R. Connectance of large dynamic systems: Critical values for stability. Nature 1970, 228, 784.

- Thom, R. Structural Stability and Morphogenesis; CRC Press: Boca Raton, FL, USA, 1972.

- Wagner, A. Robustness and Evolvability in Living Systems; Princeton University Press: Princeton, NJ, USA, 2005.

- Gunawardena, S. The robustness of a biochemical network can be inferred mathematically from its architecture. Biol. Syst. Theory 2010, 328, 581–582.

- Demongeot, J.; Elena, A.; Weil, G. Potential-Hamiltonian decomposition of cellular automata. Application to degeneracy of genetic code and cyclic codes III. Comptes Rendus Biol. 2006, 329, 953–962.

- Barthélémy, M. Betweenness centrality in large complex networks. Eur. Phys. J. B 2004, 38, 163–168.

- Negre, C.F.A.; Morzan, U.N.; Hendrickson, H.P.; Pal, R.; Lisi, G.P.J.; Loria, P.; Rivalta, I.; Ho, J.; Batista, V.S. Eigenvector centrality for characterization of protein allosteric pathways. Proc. Natl. Acad. Sci. USA 2018, 115, 12201–12208.

- Ghazi, A.; Grant, J.A. Hereditary angioedema: Epidemiology, management, and role of icatibant. Biol. Targets Ther. 2013, 7, 103–113.

- Charignon, D.; Ponard, D.; de Gennes, C.; Drouet, C.; Ghannam, A. SERPING1 and F12 combined variants in a hereditary angioedema family. Ann. Allergy Asthma Immunol. 2018, 121, 500–502.

- Cinquin, O.; Demongeot, J. Positive and negative feedback: Striking a balance between necessary antagonists. J. Theor. Biol. 2002, 216, 229–241.

- Fatès, N. A tutorial on elementary cellular automata with fully asynchronous updating. Nat. Comput. 2020, 19.

- Mason, O.; Verwoerd, M. Graph theory and networks in Biology. IET Syst. Biol. 2007, 1, 89–119.

- Gosak, M.; Markovi, R.; Dolenšek, J.; Rupnik, M.S.; Marhl, M.; Stožer, A.; Perc, M. Network science of biological systems at different scales: A review. Phys. Life Rev. 2017, 1571, 0645.

- Christakis, N.A.; Fowler, J.H. The Spread of Obesity in a Large Social Network over 32 Years. N. Engl. J. Med. 2007, 357, 370–379.

- Federer, C.; Zylberberg, J. A self-organizing memory network. BioRxiv 2017.

- Grillner, S. Biological Pattern Generation: The Cellular and Computational Logic of Networks in Motion. Neuron 2006, 52, 751–766.

- Naitoh, Y.; Sugino, K. Ciliary Movement and Its Control in Paramecium. J. Eukaryot. Microbiol. 2007, 31, 31–40.

- Guerra, S.; Peressotti, A.; Peressotti, F.; Bulgheroni, F.M.; Baccinelli, W.; D’Amico, E.; Gómez, A.; Massaccesi, S.; Ceccarini, F.; Castiello, U. Flexible control of movement in plants. Sci. Rep. 2019, 9, 16570.

- Young, L.S. Some large deviation results for dynamical systems. Trans. Amer. Math. Soc. 1990, 318, 525–535.

- Weaver, D.C.; Workman, C.T.; Stormo, G.D. Modeling regulatory networks with weight matrices. Pac. Symp. Biocomput. 1999, 4, 112–123.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).