4.1. Maximum Entropy and Georgescu-Roegen

The principle of maximum entropy serves as the foundation of the theory of inference [45], providing the statistical-mechanical tools to reconstruct probabilistic information from incomplete data. Physical systems evolve spontaneously and possess stability characteristics at equilibrium, which is characterized by the maximum value of the entropy. The key to applying the principle (see [45]) is to associate with a probability density function (pdf) an entropy function that measures the dispersion or uncertainty with which the occurrence of possible events is expected. This allows us to introduce constraints, based on our knowledge of the system, that can be treated with the formalism of Lagrange multipliers (see Section 4.2 for more details).

Generally, entropy may be interpreted as: (i) a measure of disorder in a system, (ii) a measure of our ignorance of a system, and (iii) an indicator of the irreversible changes in a system [46].

The Austrian school posits the economy as a complex system that is the outcome of uncoordinated individual behavior [47,48]. In such systems, equilibria do not always refer to a “stationary state” but are instead related to the concept of attractors. An attractor is a deterministic sequence of states that the system visits cyclically. As a result, macro processes cannot be fully understood by examining individual behavior alone. Although representatives of the Austrian school were skeptical of the use of mathematical tools in economics [49], their ideas can be expressed through the lens of statistical thermodynamics or information theory (see also [50]).

In Chapter VI of his 1971 book [22], Georgescu-Roegen describes the introduction of statistical mechanics and highlights the connection of economic processes with the second law of thermodynamics, i.e., the entropy law.

We draw the connections here between statistics, economic productivity, and physics of complex systems. As is well known, the correlation between two variables can be influenced by other confounding factors, and does not imply a direct causal effect of one variable on another. On the other hand, interactions or interdependencies refer to strict relationships between variables, allowing us to describe the impact of the variation of one variable on another. Economic productivity models can be used to analyze these interdependencies in a production process, as well as the interconnections between different economic sectors. Physics investigates the interactions between particles in order to analyze direct and reciprocal effects.

Finally, we note that in information theory the maximum entropy problem can be reformulated as a minimum coding cost problem, where the cost is a function defined as the negative of the entropy [51].

We aim to derive the level and the structure of interactions between disciplinary research productivities. We can think of this as an inverse problem, since the underlying network is inferred from observational data [25]. Importantly, Georgescu-Roegen [22] provides the theoretical support for the unknown model parameters and a justification of the assumptions underlying our statistical model.

4.2. Maximum Entropy Estimates

We define our variables as vectors. Here and in the following, bold style marks a vector quantity. Subscripts, e.g., i, indicate disciplines, whereas the superscript index refers to a given country. The observable depends on the observation time t, so a set of data can be defined in which each element identifies a given realization of the generic set of variables, or configuration. To simplify the notation, the index t will be omitted where unnecessary. In our application to knowledge production (see Section 5.1), each element of our variable is related to the productivity of a given country in disciplinary subject category i at time t. We also introduce the world-country average of productivity in subject i. We can use this formulation to account for the recent trend of increasing worldwide scientific productivity by considering deviations from the world-country average productivity in place of the productivity itself. While the average scientific productivity increases over time, the distribution of the deviations around the means does not; see the evidence reported in [52].

Shannon’s theorem [51] states that the entropy S, as defined in statistical mechanics, is a measure of the ‘amount of uncertainty’ related to a given discrete probability distribution p. Accordingly, S is given by

$$S = -K \sum_{\boldsymbol{\sigma}} p(\boldsymbol{\sigma}) \ln p(\boldsymbol{\sigma}), \qquad (4)$$

where K is a positive constant and $p(\boldsymbol{\sigma})$ is the pdf (probability density function) of the configuration $\boldsymbol{\sigma}$. This quantity is positive, additive for independent sources of uncertainty, and agrees with the intuitive notion that a uniform (or broad) distribution represents more uncertainty than a sharply peaked one. The latter observation can be verified immediately in the one-dimensional case by considering Equation (4) together with the normalization property of a discrete probability distribution, $\sum_{\boldsymbol{\sigma}} p(\boldsymbol{\sigma}) = 1$.
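The claim that a broad distribution carries more uncertainty than a sharply peaked one can be checked numerically. The following is a minimal sketch, assuming K = 1 and three hypothetical four-outcome distributions chosen only for illustration.

```python
import math

def shannon_entropy(p, K=1.0):
    """S = -K * sum_k p_k ln p_k, with the convention 0 * ln 0 = 0."""
    if abs(sum(p) - 1.0) > 1e-12:
        raise ValueError("probabilities must sum to 1")
    return -K * sum(pk * math.log(pk) for pk in p if pk > 0.0)

# Hypothetical distributions over four outcomes (illustrative values only).
uniform = [0.25, 0.25, 0.25, 0.25]
peaked = [0.97, 0.01, 0.01, 0.01]
certain = [1.0, 0.0, 0.0, 0.0]

S_uniform = shannon_entropy(uniform)   # equals ln 4, the maximum for 4 outcomes
S_peaked = shannon_entropy(peaked)     # much smaller than ln 4
S_certain = shannon_entropy(certain)   # zero: no uncertainty at all
```

The uniform case attains the upper bound ln 4, while the entropy shrinks toward zero as the probability mass concentrates on a single outcome.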

In making inferences on the basis of incomplete information, we must use the probability distribution that maximizes the ‘amount of uncertainty’, or entropy, subject to whatever is known [45]. This yields an unbiased assignment, avoiding the arbitrary assumption of information that by hypothesis we do not have [45]. For a set of variables, the so-called empirical expected value of a given function of the variables is defined as the average of the function over the observed realizations (the sample mean), which in the present case is the average over time. Since some empirical expectation values can be measured, the pdf can formally be found as the solution of a constrained optimization problem, i.e., by maximizing the entropy of the distribution subject to conditions that force the expected values to coincide with the empirical ones. We will refer to the quantities whose averages are constrained as ‘features’ of the system. For simplicity, we choose to observe the lowest-order statistics of the data that carry information about the underlying network of interactions between variables, i.e., pairwise correlations. The features of the system we consider are thus the two-variable combinations for pairs of disciplines i and j. The optimization problem reduces to maximizing the entropy (Equation (5)) subject to the constraints in Equation (6), where ‘⟨…⟩’ is the true average over the distribution p and the symbol ‘·’ denotes the Hadamard product of two vectors. The first constraint accounts for the correct normalization of the pdf, whereas the second arises from the required equality between the true and empirical averages of the above-defined features. Generally, if certain interdependencies are known to exist between the elements of the matrix, constraints can be imposed to account for them. However, rather than imposing interdependency constraints a priori, we chose to infer them from the data. Solving Equation (5) with the constraints (6) leads to Equation (7).

The chosen parameters are symmetric under exchange of the indices i and j. (There is a link between the assumption of equilibrium underlying a Boltzmann-Gibbs distribution and the symmetry of the pairwise interactions. Symmetric couplings lead to a steady state described by the Boltzmann-Gibbs distribution, while asymmetric couplings lead to a non-equilibrium state [53]. We can assign to the system a particular dynamics, which leads it to a given steady-state distribution. Recent developments on the dynamical inverse Ising model [54,55] could represent an interesting extension of the present work, which is left for future research.) The constant Z can be determined by exploiting the normalization constraint, which gives Z as the sum of the unnormalized weights over all configurations. The parameters are determined by requiring the second constraint to be fulfilled. Asymmetric parameters can always be reduced to symmetric ones that give rise to the same values of the pdf, provided the latter is the Gibbs distribution in Equation (7).
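The step from the entropy maximization in Equation (5) with the constraints in Equation (6) to the Gibbs form in Equation (7) can be sketched with Lagrange multipliers. The notation below is an assumption made for this sketch and is not taken from the original equations: $\sigma_i$ for the variables, $\lambda_0$ and $J_{ij}$ for the multipliers, an overbar for the empirical (time) average, and a sum over pairs $i<j$. Signs and sum conventions in the original may differ.

```latex
\begin{aligned}
\mathcal{L}[p] &= -K \sum_{\boldsymbol{\sigma}} p(\boldsymbol{\sigma}) \ln p(\boldsymbol{\sigma})
  + \lambda_0 \Big( \sum_{\boldsymbol{\sigma}} p(\boldsymbol{\sigma}) - 1 \Big)
  + K \sum_{i<j} J_{ij} \Big( \sum_{\boldsymbol{\sigma}} p(\boldsymbol{\sigma})\, \sigma_i \sigma_j
  - \overline{\sigma_i \sigma_j} \Big), \\
0 = \frac{\partial \mathcal{L}}{\partial p(\boldsymbol{\sigma})}
  &= -K \big( \ln p(\boldsymbol{\sigma}) + 1 \big) + \lambda_0
  + K \sum_{i<j} J_{ij}\, \sigma_i \sigma_j, \\
\Longrightarrow \quad p(\boldsymbol{\sigma})
  &= \frac{1}{Z} \exp\Big( \sum_{i<j} J_{ij}\, \sigma_i \sigma_j \Big),
  \qquad
  Z = \sum_{\boldsymbol{\sigma}} \exp\Big( \sum_{i<j} J_{ij}\, \sigma_i \sigma_j \Big).
\end{aligned}
```

In this sketch the multiplier $\lambda_0$ is absorbed into $Z = \exp(1 - \lambda_0 / K)$ through the normalization constraint, while the multipliers $J_{ij}$ remain to be fixed by matching the pairwise averages.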

We observe that the pdf defined in Equation (7) coincides with the Maxwell-Boltzmann probability distribution function at a given fixed temperature (Equation (8)), related to an Ising model with spin variables, pairwise interaction parameters, and zero magnetic field, described by the Hamiltonian in Equation (9). The quantity Z in Equations (7) and (8), which is constant with respect to the configuration but depends on the set of parameters, is called the partition function in this context.
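For a handful of variables, the Boltzmann pdf and its partition function can be evaluated by brute-force enumeration. The sketch below assumes spins taking the values ±1, unit temperature, and an energy written as a sum over ordered pairs with a factor 1/2; these conventions and the numerical couplings are illustrative assumptions. It also illustrates that an asymmetric coupling matrix and its symmetrized version yield the same pdf.

```python
import itertools
import math

def boltzmann_pdf(J):
    """Return ({configuration: probability}, Z) for p = exp(-H) / Z."""
    n = len(J)
    weights = {}
    for s in itertools.product((-1, 1), repeat=n):
        # Zero-field energy: H(s) = -(1/2) * sum_{i != j} J[i][j] * s_i * s_j.
        H = -0.5 * sum(J[i][j] * s[i] * s[j]
                       for i in range(n) for j in range(n) if i != j)
        weights[s] = math.exp(-H)
    Z = sum(weights.values())  # partition function: depends on J only
    return {s: w / Z for s, w in weights.items()}, Z

# Hypothetical asymmetric couplings and their symmetrized counterpart.
J_asym = [[0.0, 0.8, 0.0],
          [0.2, 0.0, -0.6],
          [0.4, 0.0, 0.0]]
J_sym = [[0.5 * (J_asym[i][j] + J_asym[j][i]) for j in range(3)]
         for i in range(3)]

p_asym, Z_asym = boltzmann_pdf(J_asym)
p_sym, Z_sym = boltzmann_pdf(J_sym)
```

The enumeration visits 2^n configurations, which is exactly why the partition function becomes intractable for larger systems.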

The connection between maximum entropy and maximum likelihood is well known (see, e.g., [56]). The pdf in Equation (7), whose parameters satisfy the constraint in Equation (6), can equivalently be derived by searching for the maximum of the so-called likelihood function within the class of Boltzmann distributions related to an Ising model with zero magnetic field. The likelihood function is defined in the context of Bayesian inference (see, e.g., [26]). Assuming that (i) each realization of the set of variables is drawn independently and (ii) the data have been generated by a (known) model that depends on the set of (unknown) pairwise parameters, one aims to find the optimal values of the parameters, i.e., the ones that maximize the conditional probability of the parameters given the data. From Bayes’ theorem [26], this conditional probability equals the likelihood times the prior, divided by the evidence (Equation (10)). Here the posterior is the probability of the parameters given the data, the prior is the probability of the parameters before the data are observed, the evidence is the probability of the data, and the likelihood is the probability of the data given the parameters. If the prior is the uniform distribution, as we assume here, the most probable a posteriori set of parameters is, as a consequence of Equation (10), the one that maximizes the likelihood function. Under the further assumption that the likelihood function belongs to the class of Boltzmann distribution functions, the so-called log-likelihood function can be defined as in Equation (11).

If one assumes that the system can be described by an Ising-like, pairwise interacting model (see Appendix A for an introduction and additional details) with zero external field, the Hamiltonian H is the one defined in Equation (9). We observe that the definition of the log-likelihood function in Equation (11) exploits the hypothesis that the realizations of the configuration at different times are independent; see point (i) above. Thus, the optimization problem reduces to choosing the set of parameters that maximizes the function in Equation (11). A quick calculation of the first and second derivatives of Equation (11) with respect to the parameters shows that the set of parameters that maximizes Equation (11) also solves the maximization problem in Equation (5) under the constraints in Equation (6).
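The equivalence between likelihood maximization and the moment-matching constraints can be illustrated on a tiny system. For a pairwise Boltzmann model with ±1 spins, the derivative of the average log-likelihood with respect to a coupling is the empirical pairwise average minus the model pairwise average, so the gradient vanishes exactly when the constraints hold. The sketch below assumes this standard form, uses hypothetical empirical averages for three variables, and fits the couplings by plain gradient ascent with exact enumeration; it is not the optimization procedure of the paper.

```python
import itertools
import math

def model_correlations(J, n):
    """Exact <s_i s_j> under p ∝ exp(sum_{i<j} J[(i, j)] s_i s_j)."""
    pairs = list(J)
    Z = 0.0
    acc = {pair: 0.0 for pair in pairs}
    for s in itertools.product((-1, 1), repeat=n):
        w = math.exp(sum(J[(i, j)] * s[i] * s[j] for (i, j) in pairs))
        Z += w
        for (i, j) in pairs:
            acc[(i, j)] += w * s[i] * s[j]
    return {pair: acc[pair] / Z for pair in pairs}

# Hypothetical empirical pairwise averages for n = 3 variables.
n = 3
empirical = {(0, 1): 0.40, (0, 2): 0.10, (1, 2): -0.30}
J = {pair: 0.0 for pair in empirical}

# Gradient ascent: d(log L)/dJ_ij = empirical <s_i s_j> - model <s_i s_j>.
for _ in range(2000):
    model = model_correlations(J, n)
    for pair in J:
        J[pair] += 0.5 * (empirical[pair] - model[pair])

fitted = model_correlations(J, n)  # matches `empirical` at the maximum
```

Because the log-likelihood of this exponential family is concave in the couplings, the stationary point reached by the ascent is its maximum.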

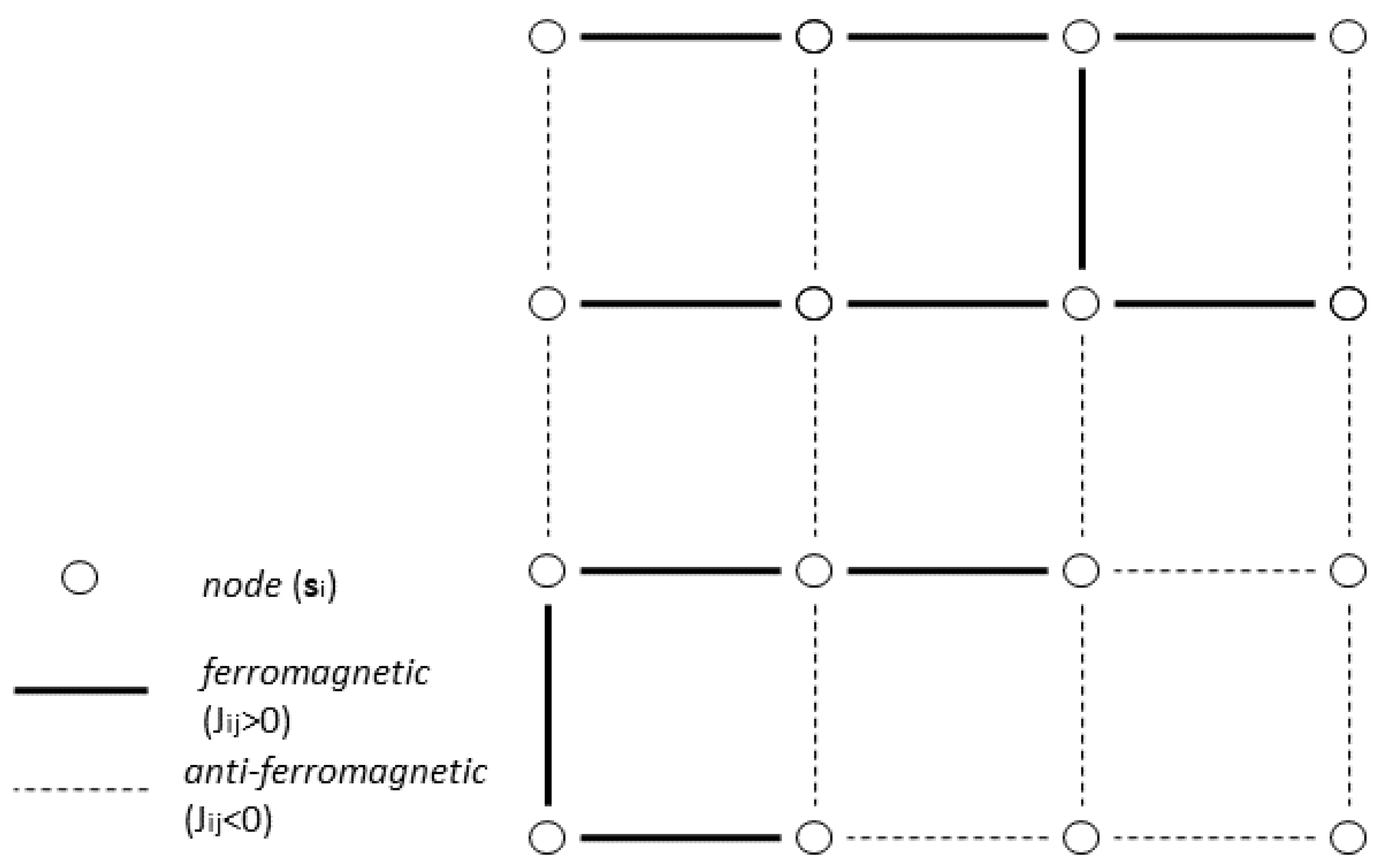

The Ising model has been widely applied in different fields, such as modelling the behaviour of magnets in statistical physics [57], image processing and spatial statistics [58,59,60], and the modelling of neural networks [61] and social networks [62]. It is, however, worth noting that, by virtue of Shannon’s theorem, the Ising model does not arise here from specific hypotheses about the underlying network but is instead the least-structured model consistent with the measured pairwise correlations. In Appendix A, we outline how the Ising spin glass model is introduced in the physics of complex systems.

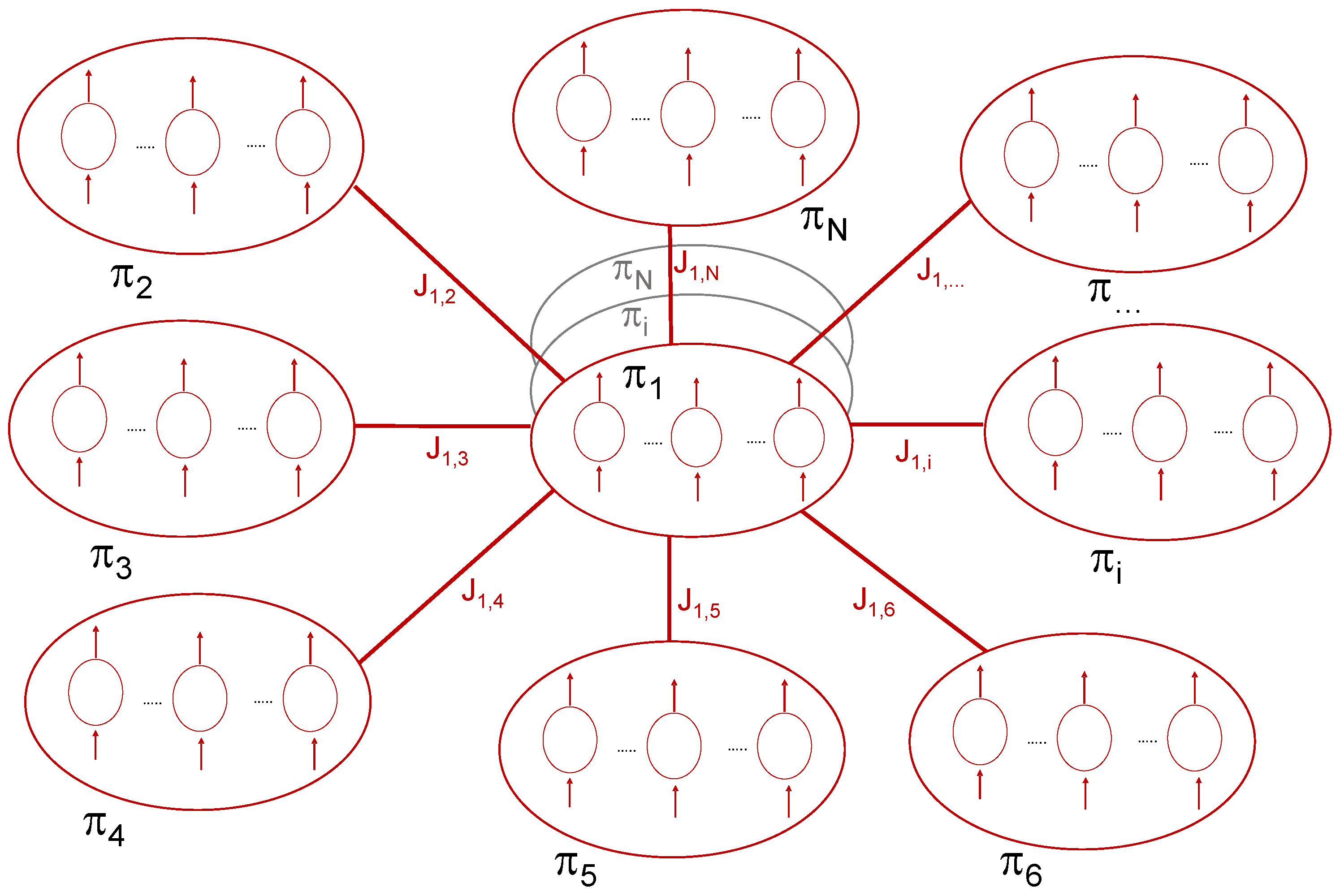

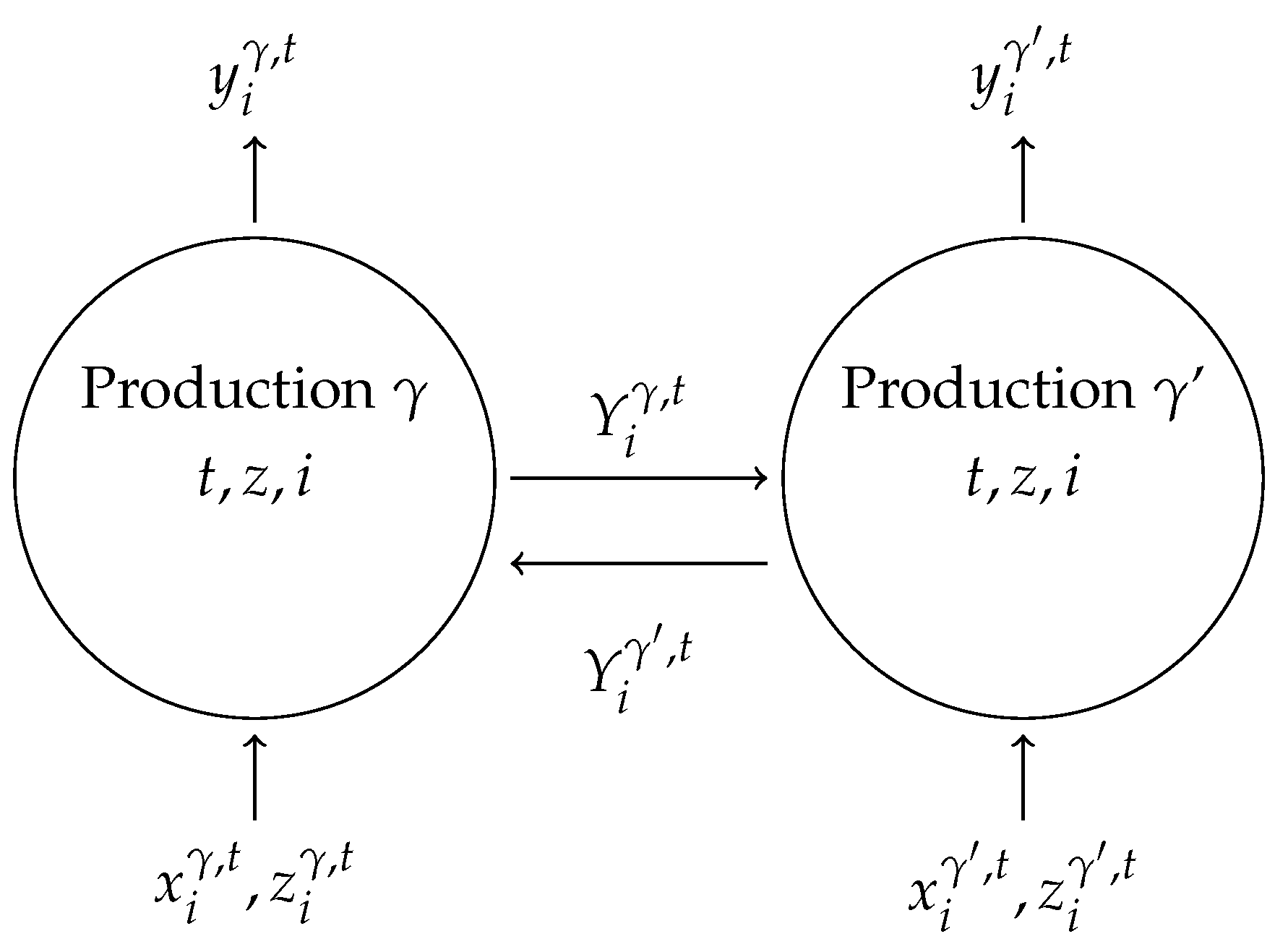

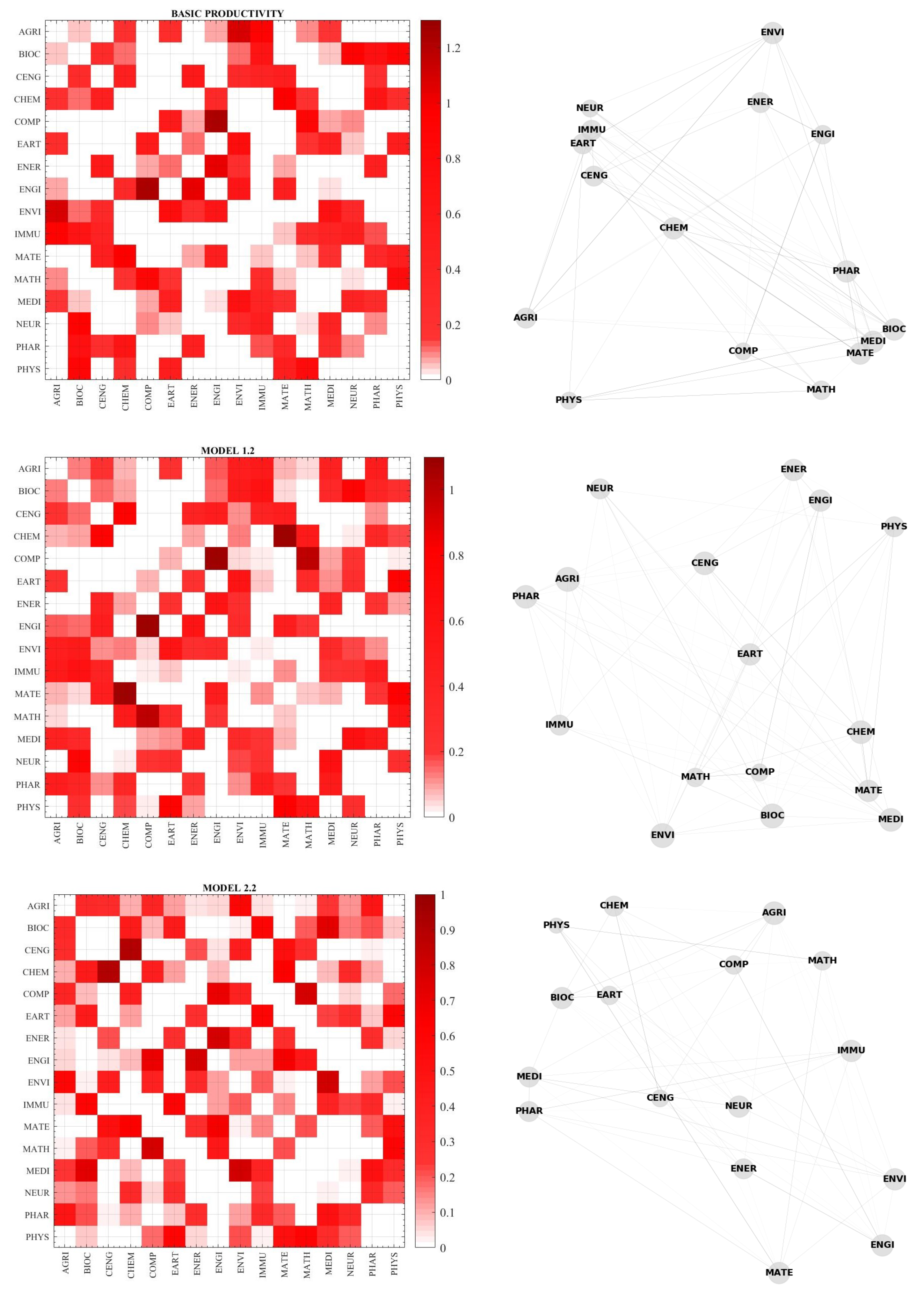

Table 1 describes the main components of our model and the correspondence between the Ising spin model from statistical physics and economic productivity analysis.

In physics, the coupling parameters measure a direct and reciprocal (mutual) effect (the interaction) of one entity on another (and vice versa). The concept of interaction in physics finds its correspondence in the interdependency of Input-Output economic analysis, which means the existence of a mutual influence between sectors (disciplines).

The coupling parameters generate the configurations of the system, which may be characterized by the correlations between the spin variables, the so-called overlap measures (see also [63]), computed from the spin variables observed over time. As is well known, a correlation measures the association between two variables. It shows the tendency of one variable to change with some regularity when the other changes, but this tendency may be moderated (influenced) by other factors and depends on the whole configuration, including indirect effects. Correlation does not imply a direct effect or relation. On the other hand, interactions or interdependencies (the coupling parameters) refer to strict relationships between variables, which allow us to describe the impact of the variation of one variable on another. Using this model for inference allows us to look beyond correlations to the interdependencies among the units of analysis. As we will see in the application (see Section 5), the productivities of two disciplines may be correlated because they tend to vary together, yet the two disciplines may not interact. Here we impose

without loss of generality. The coupling parameters represent the interaction strength between disciplines i and j: the higher the value of the parameter, the stronger the interaction between i and j.
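The difference between correlation and interaction can be made concrete with three variables arranged in a chain. In the sketch below, which assumes ±1 spins, a zero-field pairwise Boltzmann pdf, and hypothetical coupling values, variables 0 and 2 have no direct coupling, yet they are clearly correlated through variable 1.

```python
import itertools
import math

def pair_correlation(J, n, a, b):
    """Exact <s_a s_b> under p ∝ exp(sum over coupled pairs of J_ij s_i s_j)."""
    Z = 0.0
    acc = 0.0
    for s in itertools.product((-1, 1), repeat=n):
        w = math.exp(sum(Jij * s[i] * s[j] for (i, j), Jij in J.items()))
        Z += w
        acc += w * s[a] * s[b]
    return acc / Z

# Hypothetical chain 0 - 1 - 2: the pair (0, 2) has zero direct coupling.
J = {(0, 1): 0.8, (1, 2): 0.8}
c01 = pair_correlation(J, 3, 0, 1)
c02 = pair_correlation(J, 3, 0, 2)  # nonzero, induced only through variable 1
```

For an open chain in zero field the indirect correlation equals the product of tanh(J) along the path, here tanh(0.8)², which is why an inferred coupling matrix can be much sparser than the correlation matrix.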

4.3. Maximum-Likelihood and Pseudo-Likelihood Estimates

Although the definition of the likelihood function has deep roots in information theory, Bayesian inference, and statistical mechanics, as discussed in the section above, building an optimization algorithm able to find the optimal parameters is hindered by the general intractability of computing the partition function and its gradient [18,26]. Hence, in place of maximizing the likelihood function, we may define and maximize a different objective function, the so-called pseudo-likelihood. It is possible to show [18,64] that the parameter estimates obtained by pseudo-likelihood maximization are consistent with those obtained by maximizing the likelihood function, that is, the two functions are maximized by the same set of parameters. This statement becomes exact in the limit of infinite sampling [18]. We do not discuss in detail here how these results are achieved; we only sketch the main ideas and refer the reader elsewhere for details (see, e.g., [65] and the references cited in this subsection). By construction, the pseudo-likelihood function allows the optimization problem to be solved while avoiding the difficulties related to the computation of the partition function. Indeed, it has the advantage that it can be maximized in polynomial time. The pseudo-likelihood function is based on the so-called local conditional likelihood functions, one for each node i of the network, each giving the probability of the variable at node i conditional on the set of variables at all other nodes j ≠ i. The local conditional probability (single-variable pseudo-likelihood) at the i-th node is built from a local Hamiltonian and a local partition function. The log-pseudo-likelihood function is then built from the logarithms of the local conditional likelihoods, accumulated over all nodes and all observed configurations.

The gradient of the log-pseudo-likelihood function with respect to a given coupling parameter can be easily calculated; it involves an ensemble average, denoted by ‘⟨…⟩’, calculated with the local conditional pdf for the given parameter set. It can be rephrased as in Equation (15), in terms of an ensemble average calculated with the pdf in Equation (7) or Equation (8) for the same set of parameters. The gradient of the log-likelihood function is given in Equation (16). By comparing Equations (15) and (16), it is possible to infer that, in the limit of large T: (i) both gradients go to zero if the elements of the parameter set are the ‘true’ parameters defining the pdf that generated the data; (ii)

.
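A minimal sketch of the log-pseudo-likelihood and its gradient is given below. It assumes ±1 spins, a symmetric coupling matrix with zero diagonal, and the standard local conditional probability p(s_i | rest) = exp(s_i h_i) / (2 cosh h_i), where h_i is the local field; the function names, the normalization by the number of samples, and the toy data are illustrative assumptions and may differ from the conventions of the original equations.

```python
import math

def local_field(J, s, i):
    """h_i = sum_{j != i} J[i][j] * s_j for a symmetric coupling matrix J."""
    return sum(J[i][j] * s[j] for j in range(len(s)) if j != i)

def log_pseudo_likelihood(J, data):
    """Average over samples of sum_i ln p(s_i | all other s_j)."""
    total = 0.0
    for s in data:
        for i in range(len(s)):
            h = local_field(J, s, i)
            # ln p(s_i | rest) = s_i * h_i - ln(2 cosh h_i); no global Z needed.
            total += s[i] * h - math.log(2.0 * math.cosh(h))
    return total / len(data)

def gradient(J, data, i, j):
    """Derivative with respect to the symmetric pair J[i][j] = J[j][i]."""
    g = 0.0
    for s in data:
        hi, hj = local_field(J, s, i), local_field(J, s, j)
        g += 2 * s[i] * s[j] - s[j] * math.tanh(hi) - s[i] * math.tanh(hj)
    return g / len(data)

# Hypothetical toy data (four observed configurations of three spins).
data = [(1, 1, -1), (1, -1, -1), (-1, -1, 1), (1, 1, 1)]
J = [[0.0, 0.3, -0.2], [0.3, 0.0, 0.5], [-0.2, 0.5, 0.0]]
g01 = gradient(J, data, 0, 1)
```

Each evaluation costs a number of operations polynomial in the number of nodes and samples, because only the local normalizations 2 cosh h_i appear instead of a sum over all configurations.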

Specifics on the computation of the log-pseudo-likelihood function and its gradient with respect to the parameters, as well as details on the optimization algorithm, are reported in Appendix B.

The methods employed and the code written to implement the related algorithms have been tested on Ising models with known coupling coefficients on random graphs, verifying the correct convergence of the inference procedure and the proper reconstruction of the interaction network.
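A test of this kind can be sketched on a toy scale. The example below is not the authors' code: it assumes ±1 spins, a small hand-picked sparse set of "true" couplings instead of a random graph, exact sampling by enumeration, and plain gradient ascent on the log-pseudo-likelihood with a fixed step size.

```python
import itertools
import math
import random

random.seed(0)
n = 4
# Hypothetical "true" couplings on a sparse graph (absent pairs are zero).
true_J = {(0, 1): 0.6, (1, 2): -0.5, (2, 3): 0.4}
pairs = [(i, j) for i in range(n) for j in range(i + 1, n)]

# Exact sampling from p ∝ exp(sum_{i<j} J_ij s_i s_j) over all 2^n states.
states = list(itertools.product((-1, 1), repeat=n))
weights = [math.exp(sum(Jij * s[i] * s[j] for (i, j), Jij in true_J.items()))
           for s in states]
T = 20000
counts = {}
for s in random.choices(states, weights=weights, k=T):
    counts[s] = counts.get(s, 0) + 1

def field(J, s, i):
    """Local field h_i = sum_{j != i} J_ij s_j."""
    return sum(J[(min(i, j), max(i, j))] * s[j] for j in range(n) if j != i)

# Gradient ascent on the concave log-pseudo-likelihood, starting from zero.
J = {pair: 0.0 for pair in pairs}
for _ in range(500):
    grad = {pair: 0.0 for pair in pairs}
    for s, c in counts.items():
        tanh_h = [math.tanh(field(J, s, i)) for i in range(n)]
        for (i, j) in pairs:
            grad[(i, j)] += c * (2 * s[i] * s[j]
                                 - s[j] * tanh_h[i] - s[i] * tanh_h[j])
    for pair in pairs:
        J[pair] += 0.1 * grad[pair] / T

# Largest deviation between inferred and true couplings (zero for absent pairs).
max_error = max(abs(J[p] - true_J.get(p, 0.0)) for p in pairs)
```

With this many samples the inferred couplings should lie close to the true ones, including values near zero for the pairs that do not interact; the remaining deviation reflects finite sampling.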