Baseline Methods for Bayesian Inference in Gumbel Distribution

Abstract

1. Introduction

2. Domains of Attraction of Gumbel Distribution

2.1. Gumbel Baseline Distribution

2.2. Exponential Baseline Distribution

2.3. Normal Baseline Distribution

2.4. Other Baseline Distributions

3. Bayesian Estimation Methods

3.1. Classical Bayesian Estimation for the Gumbel Distribution

- Draw a starting sample from the starting distributions given by Equation (23).

- For each iteration, given the current state of the chain,

- Sample candidates for the next state from a proposal distribution.

- Calculate the acceptance ratios.

- Accept each candidate with probability equal to the minimum of 1 and its ratio; otherwise, keep the current value.

- Iterate the procedure above.
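The steps listed above can be sketched in Python as a component-wise random-walk Metropolis sampler for the Gumbel location and scale. This is a minimal sketch under stated assumptions, not the authors' implementation: the priors (a wide Normal on the location, an Exponential on the scale), the symmetric Gaussian proposals, the data-based starting values, and all names (`metropolis_gumbel`, `step_mu`, `step_sigma`) are hypothetical choices made for illustration.

```python
import numpy as np

def gumbel_loglik(x, mu, sigma):
    """Log-likelihood of a Gumbel(mu, sigma) model for maxima."""
    if sigma <= 0:
        return -np.inf
    z = (x - mu) / sigma
    return np.sum(-np.log(sigma) - z - np.exp(-z))

def log_post(x, mu, sigma):
    """Unnormalised log-posterior.
    ASSUMPTION: mu ~ Normal(0, 10^2) and sigma ~ Exponential(rate 0.1);
    these priors are illustrative and are not taken from the paper."""
    if sigma <= 0:
        return -np.inf
    log_prior = -0.5 * (mu / 10.0) ** 2 - 0.1 * sigma
    return gumbel_loglik(x, mu, sigma) + log_prior

def metropolis_gumbel(x, n_iter=5000, step_mu=0.3, step_sigma=0.3, seed=0):
    """Component-wise random-walk Metropolis; returns an (n_iter, 2) chain."""
    rng = np.random.default_rng(seed)
    x = np.asarray(x, dtype=float)
    # ASSUMPTION: data-based starting values instead of draws from
    # the starting distributions of the original method.
    mu, sigma = x.mean(), x.std() + 1e-6
    lp = log_post(x, mu, sigma)
    chain = np.empty((n_iter, 2))
    for t in range(n_iter):
        # Candidate for mu; the proposal is symmetric, so the ratio
        # reduces to the posterior ratio.
        mu_c = mu + step_mu * rng.normal()
        lp_c = log_post(x, mu_c, sigma)
        if np.log(rng.uniform()) < lp_c - lp:
            mu, lp = mu_c, lp_c
        # Candidate for sigma; non-positive candidates get log-posterior
        # -inf and are always rejected.
        sigma_c = sigma + step_sigma * rng.normal()
        lp_c = log_post(x, mu, sigma_c)
        if np.log(rng.uniform()) < lp_c - lp:
            sigma, lp = sigma_c, lp_c
        chain[t] = mu, sigma
    return chain
```

Working on the log scale avoids numerical underflow in the likelihood for larger samples; the step sizes would normally be tuned to the data at hand.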

3.2. Baseline Distribution Method

3.2.1. Gumbel Baseline Distribution

3.2.2. Exponential Baseline Distribution

3.2.3. Normal Baseline Distribution

3.3. Improved Baseline Distribution Method

4. Simulation Study

- is the number of block maxima, ; and

- is the block size,

- Burn-in period: Eliminate the first generated values.

- Take different initial values and select them for each sample.

- Apply thinning to reduce autocorrelation in the retained draws.

- To choose an estimator for the parameters, we compared mean- and median-based estimates. They were reasonably similar, owing to the high symmetry of the posterior distributions. Therefore, we used the posterior mean as the estimate of each parameter.
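The post-processing steps in the list above (burn-in, thinning, and comparing the posterior mean with the median) can be illustrated with a short Python sketch. The burn-in length, the thinning interval, and the autocorrelated series standing in for MCMC output are all hypothetical values chosen for illustration; none of them comes from the study.

```python
import numpy as np

def postprocess(chain, burn_in, thin):
    """Drop the first `burn_in` draws, then keep every `thin`-th draw."""
    return np.asarray(chain)[burn_in::thin]

def lag1_autocorr(x):
    """Sample lag-1 autocorrelation of a one-dimensional series."""
    x = np.asarray(x, dtype=float) - np.mean(x)
    return float(np.dot(x[:-1], x[1:]) / np.dot(x, x))

# Stand-in for MCMC output (ASSUMPTION): an AR(1) series with
# coefficient 0.9, which is strongly autocorrelated like a raw chain.
rng = np.random.default_rng(0)
raw = np.empty(20000)
raw[0] = 5.0
for t in range(1, raw.size):
    raw[t] = 0.9 * raw[t - 1] + rng.normal()

# Hypothetical settings: discard 2000 draws, keep every 20th afterwards.
kept = postprocess(raw, burn_in=2000, thin=20)

print("lag-1 autocorrelation, raw chain:    ", lag1_autocorr(raw))
print("lag-1 autocorrelation, thinned chain:", lag1_autocorr(kept))
print("posterior mean vs median:", kept.mean(), np.median(kept))
```

Comparing the lag-1 autocorrelation before and after thinning is one simple way to check that the chosen interval is large enough.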

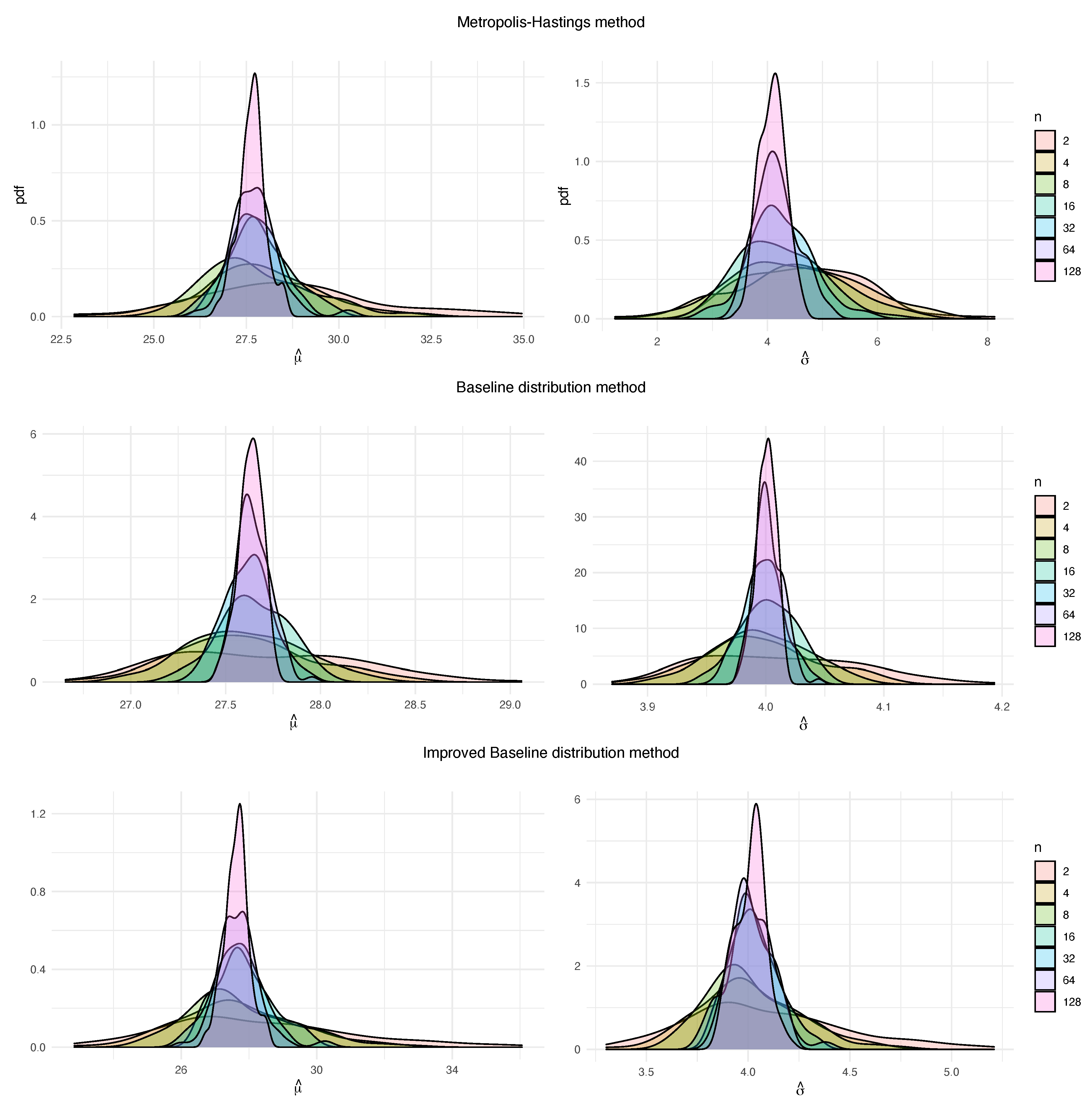

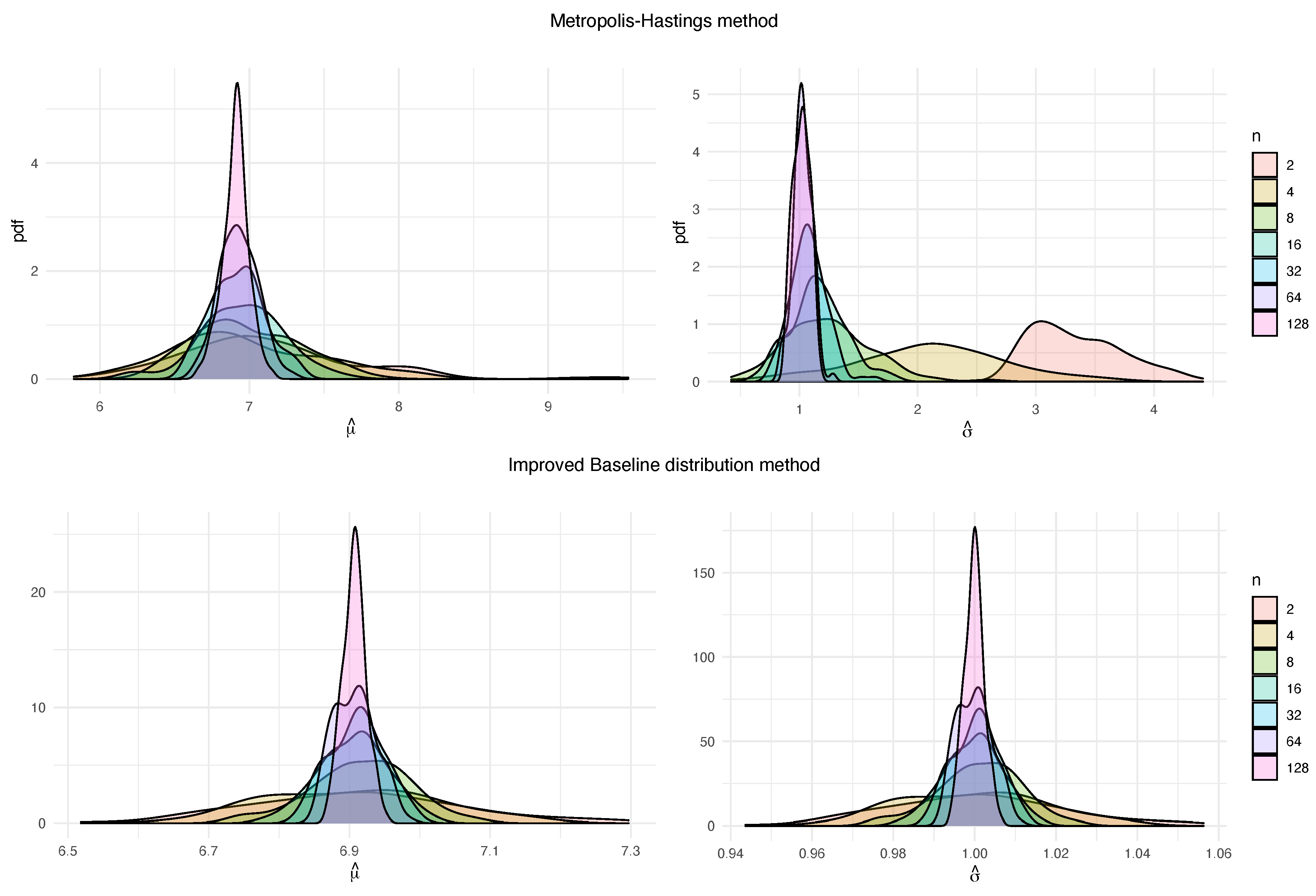

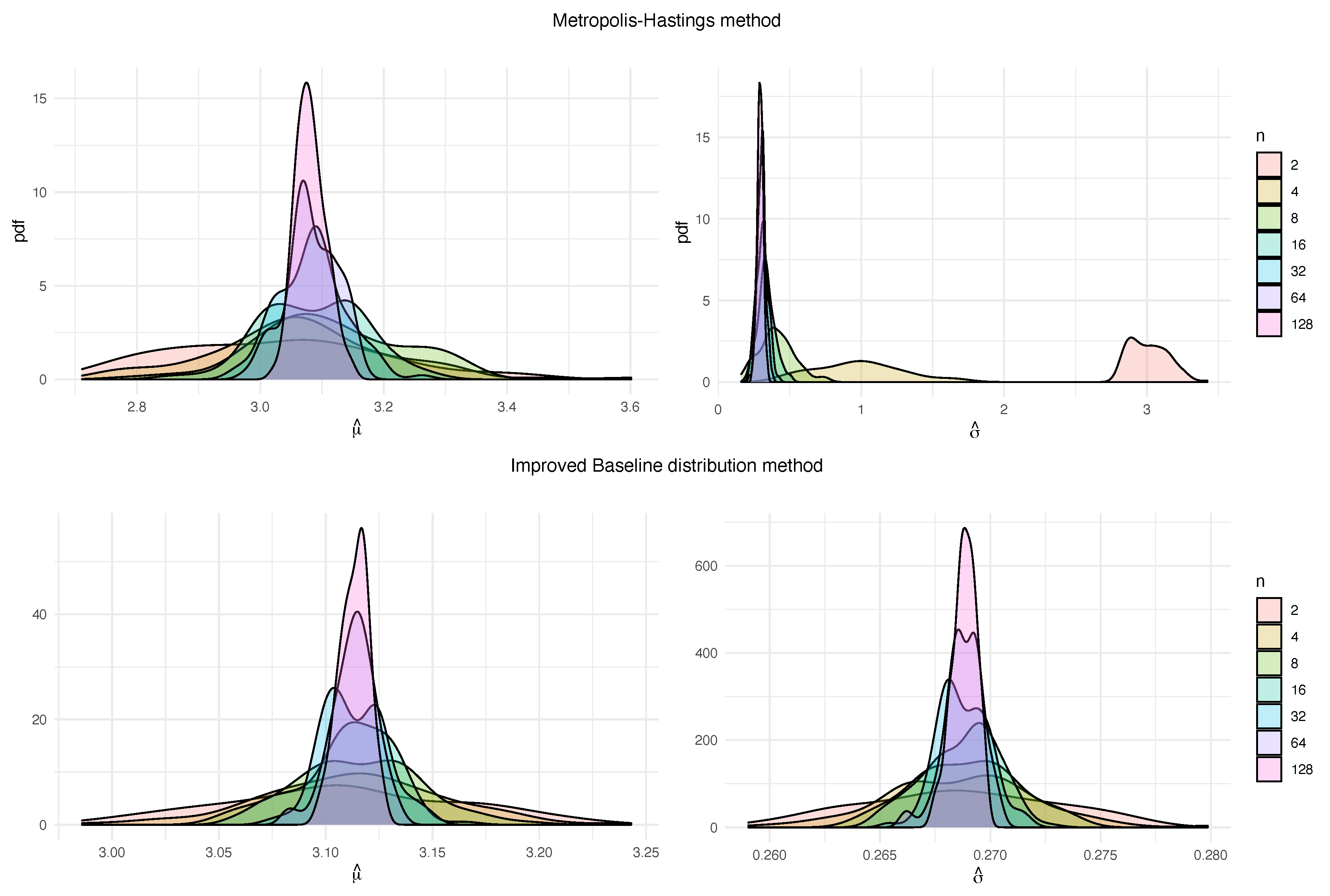

- MHM usually provides highly skewed estimates of the posterior distributions. BDM shows the least skewness.

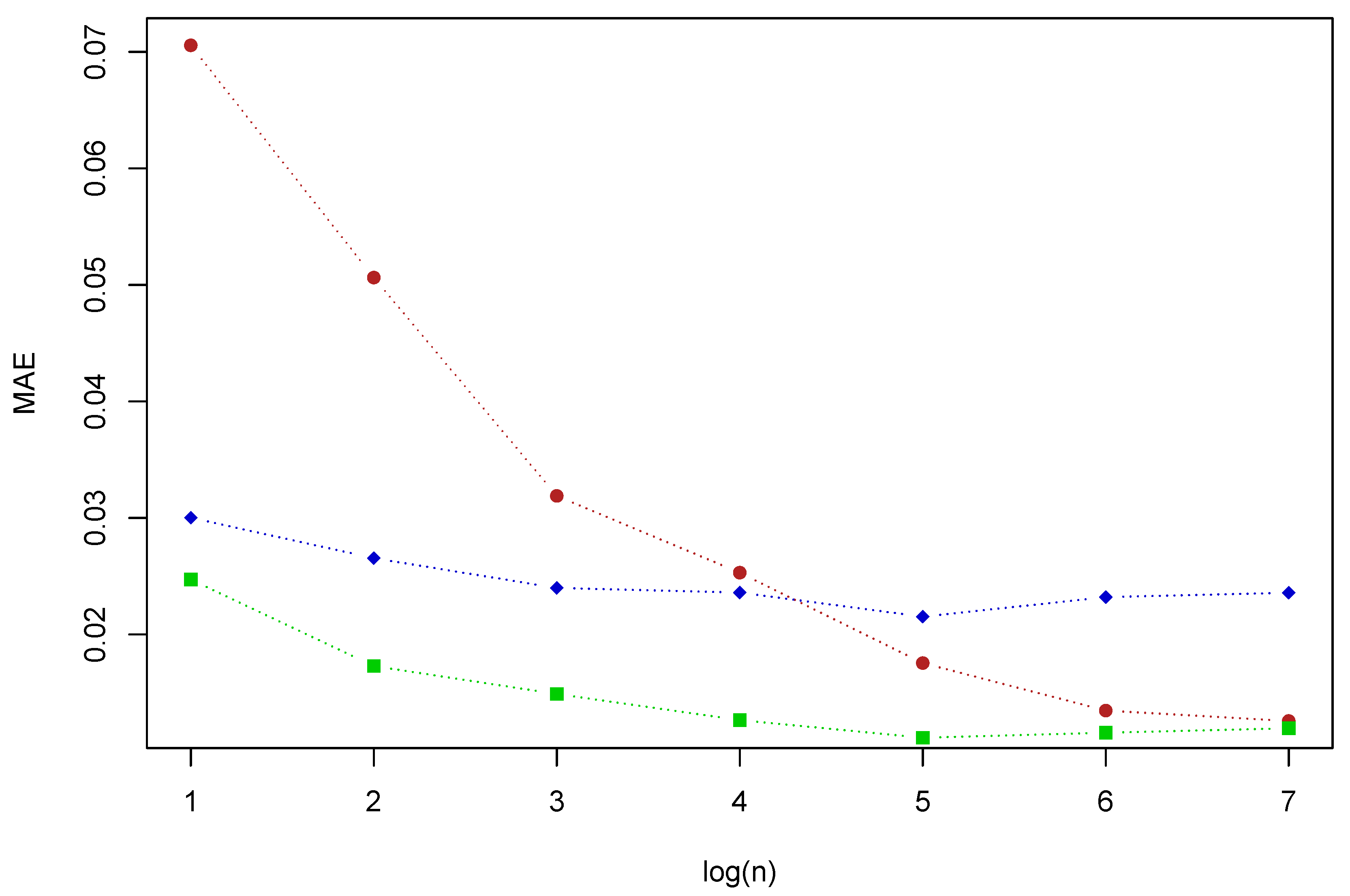

- BDM yields posterior distribution estimates with the least variability. IBDM shows higher variability, but this method stresses the importance of extreme values, so more variability than with BDM is to be expected. MHM shows the highest variability.

- The choice of the most suitable method also depends on the characteristics of the problem. When the block maxima data are very similar to the baseline distribution, BDM provides the best estimates and the lowest error measures. In contrast, when the extreme data differ from the baseline data, IBDM offers the lowest errors. IBDM is the most stable method: regardless of the differences between extreme data and baseline data, it provides reasonably good error measures.

4.1. Gumbel Baseline Distribution

- Mean error:

- Root mean square error:

- Mean absolute error:
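The three error measures named above can be computed as in the following sketch, which assumes their usual definitions over a set of replicated estimates of a single parameter: the mean of the errors, the square root of the mean squared error, and the mean of the absolute errors. The function name `error_measures` and the example values are hypothetical.

```python
import numpy as np

def error_measures(estimates, true_value):
    """Return ME, RMSE and MAE of `estimates` around `true_value`.
    ASSUMPTION: the standard definitions of the three measures."""
    err = np.asarray(estimates, dtype=float) - true_value
    return {
        "ME": float(err.mean()),                    # signed bias
        "RMSE": float(np.sqrt(np.mean(err ** 2))),  # penalises large errors
        "MAE": float(np.mean(np.abs(err))),         # typical error size
    }

# Hypothetical estimates from three simulation replicates.
print(error_measures([1.0, 2.0, 3.0], true_value=2.0))
```

The mean error keeps the sign and so reveals systematic over- or underestimation, while RMSE and MAE summarise the overall size of the errors.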

4.2. Exponential Baseline Distribution

- Mean error:

- Root mean square error:

- Mean absolute error:

4.3. Normal Baseline Distribution

5. Conclusions

- One of the most common problems in EVT is estimating the parameters of the distribution, because the data are usually scarce. In this work, we considered the case in which the block maxima follow a Gumbel distribution, and we developed two Bayesian methods, BDM and IBDM, to estimate the posterior distribution using all the available data from the baseline distribution, not only the block maxima.

- The methods were proposed for three baseline distributions, namely Gumbel, Exponential and Normal, but the new strategy can easily be applied to some other baseline distributions, following the relations shown in Table 1.

- We performed a broad simulation study to compare the BDM and IBDM methods with the classical Metropolis–Hastings method (MHM). The results are based on numerical studies; theoretical support still needs to be provided.

- We found that the posterior distributions of BDM and IBDM are more concentrated and less skewed than those of MHM.

- In general, the results show that BDM and IBDM offer the lowest error measures, as they leverage all the data. The classical method, MHM, shows the worst results, especially when extreme data are scarce.

- IBDM is the most stable method: regardless of the differences between extreme data and baseline data, it provides reasonably good error measures. When the extreme data are scarce, both new methods, BDM and IBDM, improve meaningfully on MHM.

Author Contributions

Funding

Conflicts of Interest


| Baseline Distribution F | | |
|---|---|---|
| Exponential | | |
| Gamma | | |
| Gumbel | | |
| Log-Normal | | |
| Normal | | |
| Rayleigh | | |
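As a numerical illustration of the kind of relation between a baseline distribution and the limiting Gumbel distribution, the sketch below uses the standard result that the maximum of k independent Exponential(λ) variables is approximately Gumbel with location ln(k)/λ and scale 1/λ. Whether this matches the parametrisation used in the table above is an assumption, and the values of `n`, `k`, and `lam` are hypothetical.

```python
import numpy as np

def exponential_block_maxima(n, k, lam, rng):
    """Simulate n block maxima, each over k Exponential(rate=lam) draws."""
    return rng.exponential(scale=1.0 / lam, size=(n, k)).max(axis=1)

def gumbel_params_from_exponential(k, lam):
    """Approximate Gumbel (location, scale) of the block maximum.
    Standard result: location = ln(k) / lam, scale = 1 / lam."""
    return np.log(k) / lam, 1.0 / lam

rng = np.random.default_rng(0)
n, k, lam = 2000, 1000, 2.0  # hypothetical settings
maxima = exponential_block_maxima(n, k, lam, rng)

mu, sigma = gumbel_params_from_exponential(k, lam)
gumbel_mean = mu + np.euler_gamma * sigma  # mean of a Gumbel(mu, sigma)
gumbel_std = sigma * np.pi / np.sqrt(6.0)  # standard deviation

print("sample mean of maxima:", maxima.mean(), " Gumbel mean:", gumbel_mean)
print("sample std of maxima: ", maxima.std(), " Gumbel std: ", gumbel_std)
```

The agreement between the simulated moments and the Gumbel moments improves as the block size k grows.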

| | k = 10 | | | k = 100 | | | k = 1000 | | | |
|---|---|---|---|---|---|---|---|---|---|---|
| | MHM | BDM | IBDM | MHM | BDM | IBDM | MHM | BDM | IBDM | |
| 2 | −0.0879 (0.2290) | 0.0752 (0.1594) | −0.0038 (0.1848) | −0.1056 (0.2136) | 0.0044 (0.0635) | −0.0450 (0.1658) | −0.0495 (0.1659) | 0.0061 (0.0343) | 0.0159 (0.1782) | 1/4 |
| | 0.3128 (0.9906) | 0.3376 (0.6637) | 0.3350 (0.7905) | 0.3326 (0.8535) | 0.1017 (0.3046) | 0.2866 (0.6906) | 0.1578 (0.6573) | 0.0354 (0.1464) | 0.1137 (0.6850) | 1 |
| | 1.6813 (4.6198) | 1.0156 (2.2176) | 1.3856 (2.6874) | 0.2687 (3.1694) | −0.0001 (1.1367) | 0.3466 (2.0640) | 1.0252 (2.6346) | 0.0747 (0.4899) | 0.6297 (2.8024) | 4 |
| 16 | 0.0040 (0.0677) | 0.0036 (0.0477) | −0.0026 (0.0680) | −0.0018 (0.0645) | −0.0050 (0.0260) | −0.0083 (0.0636) | 0.0118 (0.0678) | −0.0002 (0.0119) | 0.0014 (0.0636) | 1/4 |
| | 0.0712 (0.2556) | 0.0505 (0.1966) | 0.0528 (0.2470) | 0.0454 (0.2821) | −0.0033 (0.1019) | 0.0218 (0.2657) | 0.0499 (0.2536) | 0.0006 (0.0441) | 0.0219 (0.2446) | 1 |
| | 0.2096 (1.3466) | 0.1711 (0.8447) | 0.2450 (1.1989) | 0.0625 (1.0148) | −0.0010 (0.3901) | 0.0861 (1.0165) | 0.2545 (0.8414) | 0.0178 (0.1779) | 0.2324 (0.8607) | 4 |
| 128 | 0.0018 (0.0225) | 0.0023 (0.0170) | 0.0012 (0.0224) | 0.0012 (0.0233) | 0.0001 (0.0088) | 0.0006 (0.0230) | −0.0038 (0.0208) | −0.0005 (0.0039) | −0.0045 (0.0206) | 1/4 |
| | 0.0258 (0.0992) | 0.0155 (0.0650) | 0.0233 (0.0982) | 0.0070 (0.0958) | 0.0006 (0.0355) | 0.0041 (0.0953) | −0.0037 (0.0944) | 0.0004 (0.0170) | −0.0067 (0.0948) | 1 |
| | −0.0077 (0.3712) | 0.0098 (0.2236) | 0.0002 (0.3623) | 0.0070 (0.3897) | 0.0007 (0.1425) | 0.0052 (0.3879) | 0.0215 (0.3650) | 0.0001 (0.0636) | 0.0097 (0.3632) | 4 |

| | k = 10 | | | k = 100 | | | k = 1000 | | | |
|---|---|---|---|---|---|---|---|---|---|---|
| | MHM | BDM | IBDM | MHM | BDM | IBDM | MHM | BDM | IBDM | |
| 2 | 2.4739 (2.6201) | 0.0309 (0.0584) | 0.0258 (0.0560) | 2.6628 (2.6967) | 0.0013 (0.0119) | 0.0048 (0.0216) | 2.7177 (2.7371) | 0.0007 (0.0045) | 0.0024 (0.0155) | 1/4 |
| | 2.3399 (2.4169) | 0.1121 (0.2259) | 0.1324 (0.2591) | 2.3384 (2.3760) | 0.0206 (0.0596) | 0.0445 (0.1085) | 2.4139 (2.4441) | 0.0043 (0.0204) | 0.0130 (0.0774) | 1 |
| | 0.8238 (1.6431) | 0.3104 (0.7600) | 0.4727 (0.9749) | 0.6607 (1.1945) | 0.0056 (0.2174) | 0.0790 (0.4192) | 0.7284 (1.2739) | 0.0117 (0.0680) | 0.0905 (0.3978) | 4 |
| 16 | 0.0292 (0.0577) | 0.0006 (0.0165) | 0.0031 (0.0279) | 0.0229 (0.0560) | −0.0008 (0.0051) | −0.0037 (0.0264) | 0.0462 (0.0788) | 0.0000 (0.0016) | 0.0070 (0.0293) | 1/4 |
| | 0.1520 (0.2835) | 0.0152 (0.0604) | 0.0367 (0.1234) | 0.1339 (0.2740) | −0.0003 (0.0198) | 0.0032 (0.0959) | 0.1495 (0.2712) | 0.0001 (0.0059) | 0.0058 (0.0605) | 1 |
| | 0.3237 (0.9959) | 0.0742 (0.2826) | 0.1261 (0.4765) | 0.3159 (0.8719) | −0.0010 (0.0755) | 0.0176 (0.2238) | 0.2071 (0.7576) | 0.0023 (0.0237) | 0.0294 (0.1211) | 4 |
| 128 | 0.0074 (0.0181) | 0.0009 (0.0058) | 0.0055 (0.0162) | 0.0036 (0.0203) | 0.0001 (0.0017) | 0.0018 (0.0184) | 0.0027 (0.0179) | −0.0001 (0.0005) | 0.0009 (0.0161) | 1/4 |
| | 0.0300 (0.0778) | 0.0041 (0.0221) | 0.0220 (0.0693) | 0.0313 (0.0756) | 0.0003 (0.0069) | 0.0203 (0.0616) | 0.0138 (0.0695) | 0.0000 (0.0023) | 0.0037 (0.0518) | 1 |
| | 0.0557 (0.2423) | 0.0062 (0.0759) | 0.0287 (0.1843) | 0.0415 (0.2805) | −0.0020 (0.0274) | 0.0024 (0.1286) | 0.0884 (0.2522) | −0.0001 (0.0086) | 0.0123 (0.0753) | 4 |

| | k = 10 | | | k = 100 | | | k = 1000 | | | |
|---|---|---|---|---|---|---|---|---|---|---|
| | MHM | BDM | IBDM | MHM | BDM | IBDM | MHM | BDM | IBDM | |
| 2 | −0.0645 (0.1569) | 0.0287 (0.1297) | 0.0207 (0.1278) | −0.0563 (0.1485) | 0.0098 (0.0880) | 0.0061 (0.0878) | −0.0652 (0.1471) | −0.0004 (0.0339) | −0.0011 (0.0339) | 1/2 |
| | −0.0818 (0.2022) | 0.0157 (0.1409) | 0.0084 (0.1382) | −0.0915 (0.2032) | −0.0078 (0.0780) | −0.0115 (0.0782) | −0.0945 (0.2012) | 0.0002 (0.0330) | −0.0006 (0.0330) | 1 |
| | −0.0909 (0.2442) | 0.0299 (0.1400) | 0.0221 (0.1377) | −0.1004 (0.2448) | −0.0033 (0.0673) | −0.0070 (0.0674) | −0.0954 (0.2437) | 0.0066 (0.0377) | 0.0058 (0.0376) | 2 |
| 16 | −0.0232 (0.0725) | −0.0075 (0.0476) | 0.0029 (0.0490) | −0.0263 (0.0743) | −0.0041 (0.0255) | −0.0035 (0.0254) | −0.0224 (0.0705) | −0.0030 (0.0128) | −0.0029 (0.0128) | 1/2 |
| | −0.0156 (0.0680) | −0.0006 (0.0440) | 0.0096 (0.0452) | −0.0221 (0.0832) | −0.0008 (0.0302) | −0.0002 (0.0302) | −0.0159 (0.0750) | 0.0008 (0.0110) | 0.0008 (0.0110) | 1 |
| | −0.0109 (0.0741) | 0.0019 (0.0429) | 0.0120 (0.0455) | −0.0230 (0.0750) | −0.0027 (0.0294) | −0.0021 (0.0293) | −0.0220 (0.0743) | 0.0001 (0.0132) | 0.0122 (0.0132) | 2 |
| 128 | −0.0053 (0.0282) | −0.0050 (0.0197) | 0.0076 (0.0229) | −0.0003 (0.0249) | −0.0007 (0.0097) | 0.0005 (0.0097) | −0.0093 (0.0251) | −0.0012 (0.0044) | −0.0010 (0.0044) | 1/2 |
| | −0.0039 (0.0244) | −0.0057 (0.0183) | 0.0069 (0.0218) | −0.0020 (0.0243) | 0.0002 (0.0100) | 0.0013 (0.0101) | −0.0007 (0.0227) | 0.0002 (0.0036) | 0.0003 (0.0036) | 1 |
| | −0.0010 (0.0243) | −0.0022 (0.0153) | 0.0103 (0.0216) | −0.0002 (0.0267) | 0.0012 (0.0113) | 0.0024 (0.0115) | −0.0004 (0.0269) | 0.0012 (0.0044) | 0.0013 (0.0044) | 2 |

| | k = 10 | | | k = 100 | | | k = 1000 | | | |
|---|---|---|---|---|---|---|---|---|---|---|
| | MHM | BDM | IBDM | MHM | BDM | IBDM | MHM | BDM | IBDM | |
| 2 | −0.1097 (0.2923) | −0.2932 (0.3274) | −0.1942 (0.2210) | −0.1120 (0.2975) | −0.1183 (0.1356) | −0.1204 (0.1481) | −0.1101 (0.2991) | −0.0132 (0.0443) | −0.0319 (0.0559) | 1/4 |
| | −0.1023 (0.2481) | −0.0049 (0.1139) | −0.0222 (0.1404) | −0.1009 (0.2609) | 0.0078 (0.0718) | −0.0077 (0.0876) | −0.1053 (0.2704) | 0.0047 (0.0394) | −0.0150 (0.0482) | 1 |
| | −0.0822 (0.1643) | 0.0252 (0.1197) | −0.0079 (0.1374) | −0.0803 (0.1790) | 0.0079 (0.0892) | −0.0103 (0.1073) | −0.0824 (0.1876) | −0.0045 (0.0393) | −0.0227 (0.0497) | 4 |
| 16 | −0.0089 (0.0736) | −0.0650 (0.0765) | −0.0435 (0.0644) | −0.0131 (0.0785) | −0.0166 (0.0326) | −0.0278 (0.0500) | −0.0046 (0.0638) | −0.0006 (0.0134) | −0.0198 (0.0283) | 1/4 |
| | −0.0085 (0.0750) | 0.0024 (0.0546) | −0.0030 (0.0645) | −0.0092 (0.0658) | −0.0042 (0.0282) | −0.0164 (0.0423) | −0.0109 (0.0724) | 0.0012 (0.0144) | −0.0184 (0.0292) | 1 |
| | −0.0060 (0.0679) | 0.0033 (0.0427) | −0.0049 (0.0574) | −0.0171 (0.0719) | −0.0022 (0.0338) | −0.0185 (0.0472) | −0.0028 (0.0675) | −0.0006 (0.0142) | −0.0194 (0.0281) | 4 |
| 128 | 0.0054 (0.0323) | −0.0101 (0.0188) | −0.0116 (0.0299) | 0.0041 (0.0282) | −0.0011 (0.0116) | −0.0154 (0.0272) | 0.0021 (0.0235) | −0.0008 (0.0044) | −0.0207 (0.0260) | 1/4 |
| | 0.0090 (0.0336) | −0.0002 (0.0172) | −0.0038 (0.0296) | 0.0058 (0.0301) | 0.0014 (0.0116) | −0.0126 (0.0263) | 0.0016 (0.0249) | −0.0002 (0.0050) | −0.0204 (0.0258) | 1 |
| | 0.0052 (0.0357) | −0.0020 (0.0188) | −0.0063 (0.0320) | 0.0052 (0.0288) | −0.0006 (0.0116) | −0.0147 (0.0272) | 0.0020 (0.0277) | 0.0001 (0.0048) | −0.0200 (0.0255) | 4 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Martín, J.; Parra, M.I.; Pizarro, M.M.; Sanjuán, E.L. Baseline Methods for Bayesian Inference in Gumbel Distribution. Entropy 2020, 22, 1267. https://doi.org/10.3390/e22111267
