Abstract

To date, testing for Granger non-causality using kernel density-based nonparametric estimates of the transfer entropy has been hindered by the intractability of the asymptotic distribution of the estimators. We overcome this by shifting from the transfer entropy to its first-order Taylor expansion near the null hypothesis, which is also non-negative and zero if and only if Granger causality is absent. The estimated Taylor expansion can be expressed in terms of a U-statistic, from which asymptotic normality follows. After studying its size and power properties numerically, we illustrate the resulting test empirically with applications to stock indices and exchange rates.

Keywords:

Granger causality; nonparametric test; U-statistic; financial time series; high frequency data

JEL Classification:

C12; C14; C58; G10

1. Introduction

Characterizing causal interactions between time series remained challenging until Granger, in his pioneering work, introduced the concept later known as Granger causality [1]. Since then, testing for causal effects has attracted attention not only in economics and econometrics, but also in neuroscience [2,3], biology [4] and physics [5], among other fields.

The vector autoregressive (VAR) model-based test has become a popular methodology over the last decades, accompanied by repeated debates on its validity. As we see it, there are at least two critical problems with parametric causality tests. First, being based on a classical linear VAR model, traditional Granger causality tests may overlook significant nonlinear dynamical relationships between variables. As Granger [6] put it, nonlinear models represent the proper way to model the real world, which is ‘almost certainly nonlinear’. Second, parametric approaches to causality testing bear the risk of model misspecification. A wrong regression model could lead to a lack of power or, worse, to unjustified conclusions. For example, Baek and Brock construct an example where nonlinear causal relations cannot be detected by a traditional linear causality test [7].

In a series of studies, authors have tried to relax parametric model assumptions and provide nonparametric versions of Granger causality tests, which essentially are tests for conditional independence. Hiemstra and Jones were among the first to propose a formal nonparametric approach [8]. By modifying Baek and Brock’s nonparametric method [7] and developing the associated asymptotic theory, Hiemstra and Jones obtained a nonparametric test for Granger causality. However, as this test suffers from a fundamental inconsistency problem, Diks and Panchenko [9] proposed a modified, consistent version of the test based on kernel density estimators, hereafter referred to as the DP test. Alternative semiparametric and nonparametric tests for conditional independence have been proposed based on, among others, additive models [10], the Hellinger distance measure [11], copulas [12], generalized empirical distribution functions [13], empirical likelihood ratios [14] and characteristic functions [15].

The scope of this paper is to provide a novel test for Granger causality, based on the information-theoretical notion of transfer entropy (hereafter TE), coined by Schreiber [16]. The transfer entropy was initially used to measure asymmetric information exchange in a bivariate system. By using appropriate conditional densities, the transfer entropy is able to measure information transfer from one variable to another. This property makes it attractive for detecting conditional dependence in dynamical settings in a general (distributional) sense. We refer to [17,18] for detailed reviews of the relation between Granger causality and directed information theory.

Despite the attractive properties of the transfer entropy and related information-theoretical notions, such as the mutual information, the application of concepts from information theory to time series analysis has proved difficult due to the lack of asymptotic theory for nonparametric estimators of these measures. For example, Granger and Lin [19] use entropy to detect serial dependence, with critical values obtained by simulation. Hong and White [20] prove asymptotic normality for an entropy-based statistic, but the asymptotics only hold for a specific kernel function. Barnett [21] established an asymptotic distribution for transfer entropy estimators in parametric settings. Establishing asymptotic distribution theory for a fully nonparametric transfer entropy measure is challenging, if not impossible. Diks and Fang [22] provide numerical comparisons to gain some insight into the statistical behavior of nonparametric transfer entropy-based tests.

In this paper, we propose a test statistic based on a first order Taylor expansion of the transfer entropy, which is shown to be asymptotically normally distributed. Instead of directly deriving the limiting distribution of the transfer entropy, which is hard to obtain, we bypass the problem by focusing on a quantity that is locally (near the null hypothesis) similar to, but globally different from, the transfer entropy, while still sharing its global positive definiteness property. Furthermore, we show that this new test statistic is closely related to the DP test (Section 2.2), and follow a similar approach to derive the asymptotic normal distribution of the estimator of the Taylor expansion.

This paper is organized as follows. Section 2 provides a short introduction to the nonparametric DP test and its lack of power against certain alternatives. Subsequently, the transfer entropy and a nonparametric test based on its first order Taylor expansion near the null hypothesis are introduced. The close link between this novel test statistic and the DP test is shown, and asymptotic normality is proved using an asymptotically equivalent U-statistic representation of the estimator. Section 2 also discusses the optimal bandwidth selection rule for specific cases. Section 3 deals with Monte Carlo simulations; three different data generating processes are considered, enabling a direct comparison of size and power between the modified DP test and the DP test. Section 4 considers two financial applications. In the first, we apply the new test to stock volume and return data to make a direct comparison with the DP test; in the second, high frequency exchange rates of major currencies are tested. Finally, Section 5 summarizes.

2. A Transfer Entropy-Based Test Statistic for Granger Non-Causality

2.1. Nonparametric Granger Non-Causality Tests

This subsection provides some basic concepts and definitions for Granger causality, and the idea of nonparametrically testing for conditional independence. We restrict ourselves to the bivariate setting as it is the most common implementation, although generalization to multivariate densities is possible.

Intuitively, for a strictly stationary bivariate process $\{(X_t, Y_t)\}$, $t \in \mathbb{Z}$, $\{X_t\}$ is said to Granger cause $\{Y_t\}$ if current and past values of $X_t$ contain additional information, beyond that in current and past values of $Y_t$, about future values of $Y_t$. A linear Granger causality test based on a parametric VAR model can be seen as a special case where testing for conditional independence is equivalent to testing a restriction in the conditional mean specification.

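In a more general setting, the null hypothesis of Granger non-causality can be rephrased in terms of conditional dependence between two time series: $\{X_t\}$ is a Granger cause of $\{Y_t\}$ if the distribution of $Y_t$ conditional on its own history is not the same as that conditional on the histories of both $X_t$ and $Y_t$. If we denote the information sets of $X_t$ and $Y_t$ until time $t$ by $\mathcal{F}_{X,t}$ and $\mathcal{F}_{Y,t}$, respectively, and use ‘∼’ to denote equivalence in distribution, we may give a formal and general definition of Granger causality. For a strictly stationary bivariate process $\{(X_t, Y_t)\}$, $t \in \mathbb{Z}$, $\{X_t\}$ is a Granger cause of $\{Y_t\}$ if, for some $k \ge 1$,

$$(Y_{t+1}, \ldots, Y_{t+k}) \mid (\mathcal{F}_{X,t}, \mathcal{F}_{Y,t}) \nsim (Y_{t+1}, \ldots, Y_{t+k}) \mid \mathcal{F}_{Y,t}.$$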

In the absence of Granger causality, i.e., if for all $k \ge 1$

$$(Y_{t+1}, \ldots, Y_{t+k}) \mid (\mathcal{F}_{X,t}, \mathcal{F}_{Y,t}) \sim (Y_{t+1}, \ldots, Y_{t+k}) \mid \mathcal{F}_{Y,t},$$

$\{X_t\}$ has no influence on the distribution of future $Y_t$. This is also referred to as Granger non-causality and is often expressed as conditional independence between $Y_{t+1}$ and $\mathcal{F}_{X,t}$ given $\mathcal{F}_{Y,t}$, as

$$Y_{t+1} \perp \mathcal{F}_{X,t} \mid \mathcal{F}_{Y,t}. \qquad (1)$$

Granger non-causality, as expressed in Equation (1), lays the first stone for a nonparametric test without imposing any parametric assumptions, apart from strict stationarity and weak dependence, about the data generating process or the underlying distributions of $\{X_t\}$ and $\{Y_t\}$. The orthogonality here concerns not only the conditional mean, but also higher conditional moments. We make two assumptions here. First, $\{(X_t, Y_t)\}$ is a strictly stationary bivariate process. Second, since it is infeasible in nonparametric settings to condition on the entire past of $X_t$ and $Y_t$, we implicitly consider the process to be of finite Markov orders $\ell_X$ and $\ell_Y$ in the past of $X_t$ and $Y_t$, respectively.

The null hypothesis of Granger non-causality is $H_0$: $\{X_t\}$ is not a Granger cause of $\{Y_t\}$. To keep the focus on the main contribution of this paper, the Taylor expansion of the transfer entropy and its asymptotic distribution, we limit ourselves to the bivariate case with single lags in the past such that $\ell_X = \ell_Y = 1$, which so far has been the case considered most in the literature on nonparametric Granger non-causality. Extensions to higher lags and/or higher-variate processes are feasible, but require reduction of the bias order by data sharpening or higher-order density estimation kernels (see e.g., Diks and Wolski, 2016). We define the three-variate vector $W_t = (X_t, Y_t, Z_t)$, where $Z_t = Y_{t+1}$; and $W = (X, Y, Z)$ indicates a random variable with distribution equal to the invariant distribution of $W_t$. Within the bivariate setting, $W$ is a three-dimensional vector. In terms of density functions (which are assumed to exist), and given $\ell_X = \ell_Y = 1$, Equation (1) can be phrased as

$$\frac{f_{X,Y,Z}(x,y,z)}{f_Y(y)} = \frac{f_{X,Y}(x,y)}{f_Y(y)}\,\frac{f_{Y,Z}(y,z)}{f_Y(y)} \qquad (2)$$

for all $(x,y,z)$ in the support of $W$, or equivalently as

$$\frac{f_{X,Y,Z}(x,y,z)}{f_{X,Y}(x,y)} = \frac{f_{Y,Z}(y,z)}{f_Y(y)} \qquad (3)$$

for all $(x,y,z)$ in the support of $W$. A nonparametric test for Granger non-causality seeks to find statistical evidence of violation of Equation (2) or Equation (3). There are many nonparametric measures available for this purpose, some of which are mentioned above. However, as far as we know, the DP test, to be described below, currently is the only fully nonparametric test that is known to have correct asymptotic size under the null hypothesis of Granger non-causality.

2.2. The DP Test

Hiemstra and Jones [8] proposed to test the condition expressed by Equation (2) by calculating correlation integrals for each density and measuring the discrepancy between the two sides of the equation. However, their test is known to suffer from severe size distortion, because the quantity on which the test is based is inconsistent with Equation (2). To overcome this problem, DP suggest using a conditional dependence measure that incorporates a local weight function $g(x,y,z)$, formulating Equation (3) as

$$q_g = E\left[\left(\frac{f_{X,Y,Z}(X,Y,Z)}{f_Y(Y)} - \frac{f_{X,Y}(X,Y)}{f_Y(Y)}\,\frac{f_{Y,Z}(Y,Z)}{f_Y(Y)}\right) g(X,Y,Z)\right] = 0. \qquad (4)$$

Under the null hypothesis of no Granger causality, the term within the large round brackets vanishes, and hence so does the expectation. As noted in [23], Equation (4) can be treated as an infinite number of moment restrictions. Although testing Equation (4) for a specific function $g$ instead of testing Equation (2) or Equation (3) may lead to a loss of power against some specific alternatives, doing so also has an advantage. For example, in the DP test the weight function is taken to be $g(x,y,z) = f_Y^2(y)$, as this leads to a U-statistic representation of the corresponding estimator, which enables the analytical derivation of the asymptotic normality of the test statistic. In principle, other choices for $g$ will also do, as long as the test has satisfactory power against alternatives of interest. Since in the DP test $g(x,y,z) = f_Y^2(y)$, it tests the implication

$$H_0':\quad q \equiv E\left[f_{X,Y,Z}(X,Y,Z)\,f_Y(Y) - f_{X,Y}(X,Y)\,f_{Y,Z}(Y,Z)\right] = 0 \qquad (5)$$

of $H_0$, rather than $H_0$ itself.

Given a local density estimator of a $d_W$-variate random vector $W$ at $W_i$ as

$$\hat f_W(W_i) = \frac{h^{-d_W}}{n-1}\sum_{j,\, j \neq i} K\!\left(\frac{W_i - W_j}{h}\right), \qquad (6)$$

where $K$ is a zero-mean kernel density function with finite variance (e.g., the standard normal density function) and $h$ is the bandwidth, the DP test uses a third order U-statistic estimator for the functional $q$, given by

$$T_n(h) = \frac{n-1}{n(n-2)}\sum_{i}\left(\hat f_{X,Y,Z}(X_i,Y_i,Z_i)\,\hat f_Y(Y_i) - \hat f_{X,Y}(X_i,Y_i)\,\hat f_{Y,Z}(Y_i,Z_i)\right), \qquad (7)$$

where the normalization factor $(n-1)/(n(n-2))$ is inherited from the U-statistic representation of $T_n(h)$. It is worth mentioning that DP adopt a second order square (or rectangular) kernel. However, a square kernel has two main drawbacks. First, it yields a discontinuous density estimate $\hat f_W$, which is unattractive from a practical perspective. Second, it weighs all neighboring points equally, ignoring their relative distance to the estimation point $W_i$. Therefore, a smooth kernel function, the Gaussian kernel, is used here, namely the product kernel $K(W) = \prod_{s=1}^{d_W} \kappa(W^s)$, where $W^s$ is the $s$-th element of $W$. Using the standard univariate Gaussian kernel $\kappa(u) = (2\pi)^{-1/2} e^{-u^2/2}$, $K$ is the standard multivariate Gaussian kernel as described in [24,25].
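As an illustration of Equation (6) with the Gaussian product kernel, the following Python sketch computes the leave-one-out density estimates $\hat f_W(W_i)$ at all sample points. It is a minimal sketch under stated assumptions, not the authors' implementation: NumPy is assumed, the function name `loo_kde` is hypothetical, and the $O(n^2)$ pairwise computation is only intended for moderate sample sizes.

```python
import numpy as np


def loo_kde(data, h):
    """Leave-one-out kernel density estimates, Equation (6), at every sample point.

    data: array of shape (n,) or (n, d_W); h: bandwidth.
    Hypothetical helper using a product of standard normal kernels.
    Memory is O(n^2 * d_W), so keep n moderate.
    """
    w = np.asarray(data, dtype=float)
    if w.ndim == 1:
        w = w[:, None]
    n, d = w.shape
    # Scaled pairwise differences (W_i - W_j) / h, shape (n, n, d).
    u = (w[:, None, :] - w[None, :, :]) / h
    # Product of univariate N(0,1) densities = exp(-|u|^2 / 2) / (2*pi)^(d/2).
    k = np.exp(-0.5 * np.sum(u**2, axis=2)) / (2.0 * np.pi) ** (d / 2.0)
    np.fill_diagonal(k, 0.0)  # drop the j == i term
    return k.sum(axis=1) / ((n - 1) * h**d)


rng = np.random.default_rng(0)
sample = rng.standard_normal((500, 3))
f_hat = loo_kde(sample, h=0.5)
print(f_hat.shape)  # one estimate per observation
```

The smooth Gaussian kernel gives every neighbor a distance-dependent weight, which is the property motivating the switch away from the square kernel above.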

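For $\ell_X = \ell_Y = 1$, DP prove the asymptotic normality of $T_n(h)$. Namely, if the bandwidth depends on the sample size as $h = Cn^{-\beta}$ for constants $C > 0$ and $\beta \in (1/4, 1/3)$, then the test statistic in Equation (7) satisfies

$$\sqrt{n}\,\frac{T_n(h) - q}{S_n} \xrightarrow{d} N(0,1), \qquad (8)$$

where $S_n^2$ is a consistent estimator of the asymptotic variance of $\sqrt{n}\,T_n(h)$. DP suggest implementing a one-sided version of the test, rejecting $H_0$: $q = 0$ against the alternative $H_1$: $q > 0$ if $T_n(h)$ is too large. That is, given the asymptotic critical value $z_{1-\alpha}$, the null hypothesis is rejected at significance level $\alpha$ if $\sqrt{n}\,T_n(h)/S_n > z_{1-\alpha}$.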
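The statistic in Equation (7), $T_n(h) = \frac{n-1}{n(n-2)}\sum_i \left(\hat f_{X,Y,Z}\hat f_Y - \hat f_{X,Y}\hat f_{Y,Z}\right)$ evaluated at the sample points, can be sketched as follows for single lags, with $Z_i = Y_{i+1}$. This is an illustrative sketch, not DP's code: it assumes NumPy, uses the Gaussian product kernel adopted in this paper rather than DP's square kernel, and the names `loo_kde` and `dp_statistic` are hypothetical. It does not compute the variance estimator $S_n$ needed for the standardized statistic.

```python
import numpy as np


def loo_kde(data, h):
    """Leave-one-out Gaussian product-kernel density estimates (Equation (6))."""
    w = np.asarray(data, dtype=float)
    if w.ndim == 1:
        w = w[:, None]
    n, d = w.shape
    u = (w[:, None, :] - w[None, :, :]) / h
    k = np.exp(-0.5 * np.sum(u**2, axis=2)) / (2.0 * np.pi) ** (d / 2.0)
    np.fill_diagonal(k, 0.0)
    return k.sum(axis=1) / ((n - 1) * h**d)


def dp_statistic(x, y, h):
    """T_n(h) of Equation (7) with W_i = (X_i, Y_i, Z_i) and Z_i = Y_{i+1}."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    xs, ys, zs = x[:-1], y[:-1], y[1:]  # lag-1 embedding; n = len(y) - 1
    n = len(zs)
    f_xyz = loo_kde(np.column_stack([xs, ys, zs]), h)
    f_xy = loo_kde(np.column_stack([xs, ys]), h)
    f_yz = loo_kde(np.column_stack([ys, zs]), h)
    f_y = loo_kde(ys, h)
    return (n - 1) / (n * (n - 2)) * np.sum(f_xyz * f_y - f_xy * f_yz)


rng = np.random.default_rng(1)
x = rng.standard_normal(300)
y = rng.standard_normal(300)
print(dp_statistic(x, y, h=0.8))
```

A constant $X$ series is a useful sanity check: its kernel factor cancels between the two products, so the statistic is zero up to rounding.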

2.3. Inconsistency of the DP Test

The drawback of the DP test arises from the fact that the hypothesis $H_0'$: $q = 0$ in Equation (5), obtained for the specific weight function $g(x,y,z) = f_Y^2(y)$, need not be equivalent to $H_0$ in Equations (2) and (3); it merely is an implication of $H_0$. For consistently testing $H_0$, an analogue of $q$ is desirable that satisfies the positive definiteness property stated next, which $q$ does not satisfy.

Definition 1.

A functional $s$ of the distribution of $W$ is positive definite if $s \ge 0$, with $s = 0$ if and only if $X$ and $Z$ are conditionally independent given $Y$.

From the previous reasoning, it is obvious that Equation (5) is implied by Equation (3). A positive definite functional in the sense of Definition 1, however, would additionally be strictly positive whenever $H_0$ is violated. In other words, the null hypothesis of Granger non-causality requires that $X$ and $Z$ are independent conditionally on $Y$, which is a sufficient, but not a necessary, condition for $q = 0$. If $q$ were positive definite in the sense of Definition 1, $H_0'$ would coincide with $H_0$, and a test based on a consistent estimator of $q$, i.e., $T_n(h)$ as suggested by DP, would have unit asymptotic power. If this property is not satisfied, a test for $H_0'$ can behave differently from a test for $H_0$. Although [23] identified specific sub-classes of processes for which $q$ is positive definite, we can easily construct a counterexample where the DP test has no power even if $\{X_t\}$ strongly Granger causes $\{Y_t\}$. For completeness, such a counterexample is given next.

Inspired by the example in [26], where a closely related test for unconditional independence is proposed, we consider a conditional counterpart to illustrate that $q$ is not positive definite. The one-sided DP test will be seen to suffer from a lack of power for this example process. As we show below, for the parameter value at which $q = 0$, this drawback cannot be overcome even with a two-sided DP test.

Consider the process where, as before, . We assume that the i.i.d. continuous variable , with probability of being positive, where . Further, there is no dependence between and , and does not depend on but on in such a way that the conditional density of is given by

for .

Given Equation (9), the marginal densities of , and can be calculated to be all equal, with and , while the conditional probability of being larger than zero given is given by for , and for . Hence, for , is a Granger cause of since has an impact on the distribution of , given . For this example, we can explicitly calculate q defined in Equation (5), which is found to be . For , q has a negative value. In this situation, the one-sided DP test, which rejects for large q, is not a consistent test for Granger non-causality. One may argue that this is not a problem if we use a two-sided test at the price of losing some power. However, the inconsistency of the DP test—which tests rather than —then would still be illustrated by the example if , for which exactly, while is clearly a Granger cause of ; the DP test will only have trivial power against this alternative.

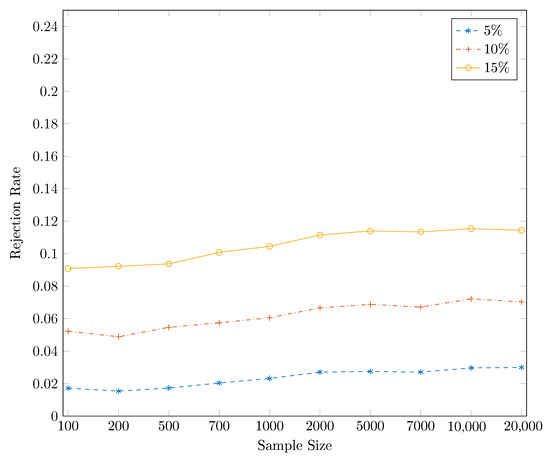

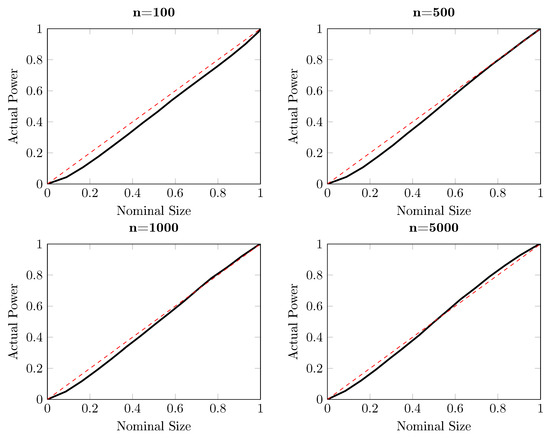

Figure 1 reports the power of the one-sided DP test as a function of the sample size for different significance levels, based on independent simulations. Three nominal sizes are illustrated here: 5%, 10% and 15%, and the sample size ranges from 100 to 5000. It is striking from Figure 1 that the DP test hardly has power against this alternative, for which $q = 0$. The same conclusion can be drawn from Figure 2, where the size-power plots [27] are given. In almost all sub-panels, corresponding to different sample sizes, the power of the DP test lies around the diagonal line for this particular example, which indicates that the DP test has only trivial power to detect Granger causality from $\{X_t\}$ to $\{Y_t\}$.

Figure 1.

Power of the one-sided DP test for the artificial process, with the parameter value for which $q = 0$, at nominal sizes (from bottom to top) 5%, 10% and 15%, based on independent simulations.

Figure 2.

Size-power plots for the one-sided DP test for the artificial process, with the parameter value for which $q = 0$, based on independent simulations. Each subplot shows the actual power against the nominal size for a different sample size, ranging from 100 to 5000. The solid curve represents the actual power and the red dashed line indicates the diagonal, corresponding to the nominal size of a test.

The lack of power of the one-sided DP test in this example is hardly alleviated by its two-sided counterpart, because $q = 0$ is not equivalent to conditional independence. The gap between $H_0$ and its implication $H_0'$ ($q = 0$) causes the lack of power of the DP test, as the estimated quantity $q$ is not positive definite. In the next subsection, a new test statistic based on the information-theoretical concept of transfer entropy is introduced and shown to be positive definite, which overcomes this inherent drawback of the DP test. In fact, the new test statistic shares many similarities with the DP test statistic, but also has an information-theoretical interpretation for its non-negativity.

2.4. Information-Theoretical Interpretation

In a very different context from testing for conditional independence, the problem of information feedback and impact has also drawn much attention since 1950. Information theory, as a branch of applied probability theory and statistics, studies the transmission of information over a noisy channel. Entropy, also referred to as Shannon entropy, is a key measure in the field of information theory brought forward in [28,29]. The entropy measures the uncertainty and randomness associated with a random variable. Suppose that $S$ is a random vector with density $f_S$; then its Shannon entropy is defined as

$$H(S) = -\int f_S(s)\,\log f_S(s)\,ds.$$

There is a long history of applying information measures in econometrics. For example, ref. [30] uses the Kullback–Leibler information criterion (KLIC) [31] to construct a one-sided test for serial independence. Since then, nonparametric tests for independence between two time series based on entropy measures have become prevalent. Granger and Lin [19] use an entropy measure to identify the lags in a nonlinear bivariate model. Granger et al. [32] study dependence with a transformed metric entropy, which turns out to be a proper measure of distance and has the additional advantage of allowing multiple comparisons of distances. Hong and White [20] provide a new entropy-based test for serial dependence, and show that the test statistic is asymptotically normal.

Although inspiring, those results cannot be applied directly to measure conditional dependence. We consider the transfer entropy (TE) introduced in [16] a suitable measure for this purpose. The TE quantifies the amount of information about one series k steps ahead that is explained by the state of another series, given the information contained in its own past. We briefly introduce the TE and the KLIC before further discussing their relation with the modified DP test.

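Suppose that we have a bivariate process $\{(X_t, Y_t)\}$, and for brevity we put $X = X_t$, $Y = Y_t$ and $Z = Y_{t+1}$. Again, we limit ourselves to lags $\ell_X = \ell_Y = 1$ for simplicity, and consider the three-dimensional vector $W = (X, Y, Z)$ as before. The transfer entropy $\mathrm{TE}_{X\to Y}$ is a nonlinear and nonparametric measure for the amount of information contained in $X$ about $Z$, in addition to the information about $Z$ that is already contained in $Y$. Although the TE defined in [16] applies to discrete variables, it is easily generalized to continuous variables. Conditional on $Y$, $\mathrm{TE}_{X\to Y}$ is defined as

$$\mathrm{TE}_{X\to Y} = E\left[\log\frac{f_{X,Y,Z}(X,Y,Z)\,f_Y(Y)}{f_{X,Y}(X,Y)\,f_{Y,Z}(Y,Z)}\right] = E\left[\log\frac{f_{Z\mid X,Y}(Z\mid X,Y)}{f_{Z\mid Y}(Z\mid Y)}\right]. \qquad (10)$$

Using the conditional mutual information $I(Z;X\mid Y)$, the TE can be equivalently formulated in terms of four Shannon entropies as

$$\mathrm{TE}_{X\to Y} = I(Z;X\mid Y) = H(X,Y) + H(Y,Z) - H(Y) - H(X,Y,Z).$$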
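For jointly Gaussian $(X, Y, Z)$ all four entropies have the closed form $H = \tfrac12\log\left((2\pi e)^d \det\Sigma\right)$, so the entropy representation of the TE can be checked numerically. The sketch below is only an illustration under this Gaussian assumption, not the nonparametric estimator used in this paper, and the function names are hypothetical. For $Z = aY + bX + \varepsilon$ with independent standard normal $X$, $Y$ and $\varepsilon \sim N(0, s^2)$, the TE equals $\tfrac12\log\left((b^2 + s^2)/s^2\right)$, which is zero exactly when $b = 0$.

```python
import numpy as np


def gaussian_entropy(cov):
    """Differential entropy (in nats) of a multivariate normal with covariance cov."""
    cov = np.atleast_2d(np.asarray(cov, dtype=float))
    d = cov.shape[0]
    _, logdet = np.linalg.slogdet(cov)
    return 0.5 * (d * np.log(2.0 * np.pi * np.e) + logdet)


def gaussian_te(cov_xyz):
    """TE_{X->Y} = H(X,Y) + H(Y,Z) - H(Y) - H(X,Y,Z); cov_xyz ordered as (X, Y, Z)."""
    c = np.asarray(cov_xyz, dtype=float)

    def sub(idx):
        # Covariance sub-matrix of the selected components.
        return c[np.ix_(idx, idx)]

    return (gaussian_entropy(sub([0, 1])) + gaussian_entropy(sub([1, 2]))
            - gaussian_entropy(sub([1])) - gaussian_entropy(c))


def cov_example(a, b, s):
    """Covariance of (X, Y, Z) for Z = a*Y + b*X + eps, X, Y ~ N(0,1), eps ~ N(0, s^2)."""
    return np.array([[1.0, 0.0, b],
                     [0.0, 1.0, a],
                     [b, a, a**2 + b**2 + s**2]])


print(gaussian_te(cov_example(a=0.5, b=0.0, s=1.0)))  # X adds nothing: zero up to rounding
print(gaussian_te(cov_example(a=0.5, b=1.0, s=1.0)))  # approximately 0.5 * log(2) = 0.3466
```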

In order to construct a test for Granger causality based on the TE, it remains to be shown that the TE is a proper basis for testing the null hypothesis. The following theorem, as a direct result of the properties of the KLIC, lays the quantitative foundation for testing based on the TE.

Theorem 1.

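The transfer entropy $\mathrm{TE}_{X\to Y}$, as a functional of the joint density of $(X, Y, Z)$, is positive definite; that is, $\mathrm{TE}_{X\to Y} \ge 0$, with equality if and only if $f_{X,Y,Z}(x,y,z)/f_{X,Y}(x,y) = f_{Y,Z}(y,z)/f_Y(y)$ for all $(x,y,z)$ in the support of $W$.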

Proof.

Theorem 1 follows from generalizing Theorem 3.1 in Chapter 2 of [33], where the divergence between two different densities is considered. An alternative proof, using Jensen’s inequality and the concavity of the log function, is given in Appendix A.1. □

It is not difficult to verify that the condition for $\mathrm{TE}_{X\to Y} = 0$ coincides with Equations (2) and (3), i.e., with Granger non-causality under the null hypothesis. This positive definiteness makes $\mathrm{TE}_{X\to Y}$ a desirable measure for constructing a one-sided test of Granger causality; any deviation from zero is a sign of conditional dependence of $Z$ on $X$ given $Y$. To estimate $\mathrm{TE}_{X\to Y}$, one may follow the recipe in [34] based on k-nearest neighbor distances. A more natural method, applied in this paper, is to use the plug-in kernel estimates given in Equation (6), and to replace unknown expectations by sample averages.
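A minimal sketch of this plug-in estimator, assuming NumPy and single lags ($Z_i = Y_{i+1}$), replaces each density in the log ratio of Equation (10) by the leave-one-out Gaussian kernel estimate of Equation (6), and the expectation by a sample average. The function names `loo_kde` and `plugin_te` are hypothetical. As discussed next, no asymptotic distribution is available for this quantity, so the sketch yields a point estimate only.

```python
import numpy as np


def loo_kde(data, h):
    """Leave-one-out Gaussian product-kernel density estimates (Equation (6))."""
    w = np.asarray(data, dtype=float)
    if w.ndim == 1:
        w = w[:, None]
    n, d = w.shape
    u = (w[:, None, :] - w[None, :, :]) / h
    k = np.exp(-0.5 * np.sum(u**2, axis=2)) / (2.0 * np.pi) ** (d / 2.0)
    np.fill_diagonal(k, 0.0)
    return k.sum(axis=1) / ((n - 1) * h**d)


def plugin_te(x, y, h):
    """Plug-in kernel estimate of TE_{X->Y} (Equation (10)) with Z_i = Y_{i+1}."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    xs, ys, zs = x[:-1], y[:-1], y[1:]
    f_xyz = loo_kde(np.column_stack([xs, ys, zs]), h)
    f_xy = loo_kde(np.column_stack([xs, ys]), h)
    f_yz = loo_kde(np.column_stack([ys, zs]), h)
    f_y = loo_kde(ys, h)
    # The sample average replaces the expectation of the log density ratio.
    return np.mean(np.log(f_xyz * f_y / (f_xy * f_yz)))


rng = np.random.default_rng(3)
x = rng.standard_normal(400)
y = np.empty(400)
y[0] = rng.standard_normal()
y[1:] = 0.8 * x[:-1] + 0.6 * rng.standard_normal(399)  # X drives next-period Y
print(plugin_te(x, y, h=0.5), plugin_te(y, x, h=0.5))
```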

However, the direct use of the TE to test Granger non-causality is not easy due to the lack of asymptotic theory for the test statistic. It has been shown [19] that the asymptotic distribution of entropy-based estimators usually depends on strict assumptions regarding the data. Over the years, several breakthroughs have been made in applying entropy to testing for serial independence; e.g., [30] obtains an asymptotic distribution for an entropy measure by a sample-splitting technique, and [20] derives asymptotic normality under bounded-support data and quartic kernel assumptions. However, the limiting distribution of the natural nonparametric TE estimator is still unknown under more general conditions.

One may argue in favor of using simulation techniques to overcome the lack of asymptotic theory. However, as suggested in [11], TE statistics for nonparametric dependence measures suffer from estimation biases under the smoothed bootstrap procedure. Even with parametric test statistics, it has been noticed [21] that the TE-based estimator is generally biased. Surrogate data have also been widely applied to detect information transfer, for instance in [35,36]. We therefore consider the direct use of the TE for nonparametric tests for Granger non-causality difficult, if not impossible.

Below, we show that a first order Taylor expansion of the TE provides a way to construct the asymptotic distribution of this meaningful information measure. In the next subsection, we show that the first order Taylor expansion of the TE can form the basis of a modified DP test for conditional independence. This not only circumvents the problem of the asymptotic distribution of entropy-based statistics, but also endows the modified DP test with positive definiteness.

In the remaining part of this section we introduce the first order Taylor expansion of the TE, and establish the positive definiteness of the resulting measure afterwards. Starting with Equation (10), we perform a first order Taylor expansion of the logarithm locally around the null hypothesis, where the density ratio $f_{X,Y,Z}f_Y/(f_{X,Y}f_{Y,Z})$ equals one, which gives

$$\mathrm{TE}_{X\to Y} = E\left[\frac{f_{X,Y,Z}(X,Y,Z)\,f_Y(Y)}{f_{X,Y}(X,Y)\,f_{Y,Z}(Y,Z)} - 1\right] + \text{h.o.t.}, \qquad (11)$$

where ‘h.o.t.’ stands for ‘higher order terms’ in the deviation of this density ratio from one, which is small close to the null hypothesis. Ignoring the higher order terms in the transfer entropy makes the distribution of the test statistic tractable, without (up to leading order) affecting the dependence measure close to the null hypothesis, which is precisely where a powerful test is needed.

By ignoring the higher order terms, we define the first order expansion

$$\vartheta = E\left[\frac{f_{X,Y,Z}(X,Y,Z)\,f_Y(Y)}{f_{X,Y}(X,Y)\,f_{Y,Z}(Y,Z)} - 1\right]$$

as a measure for conditional dependence. The following theorem states that $\vartheta$, which with slight abuse of language we still refer to as a transfer entropy, inherits the positive definiteness of the TE.
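To make the relation between the TE and its first order expansion concrete, write $r = f_{X,Y,Z}f_Y/(f_{X,Y}f_{Y,Z})$, so that $\mathrm{TE}_{X\to Y} = E[\log r]$ and $\vartheta = E[r - 1]$. The sketch below evaluates both for a discrete joint distribution, replacing densities by probability mass functions; this discrete analogue is an illustrative assumption, not the setting of the paper, and the name `te_and_theta` is hypothetical. Since $\log r \le r - 1$, it also illustrates that $\vartheta \ge \mathrm{TE}_{X\to Y}$, with both vanishing when $Z$ and $X$ are conditionally independent given $Y$.

```python
import numpy as np


def te_and_theta(p):
    """Discrete analogue of TE (E[log r]) and of its first order expansion (E[r - 1]).

    p: array with p[x, y, z] = P(X = x, Y = y, Z = z). Returns (te, theta) in nats.
    """
    p = np.asarray(p, dtype=float)
    p_xy = p.sum(axis=2, keepdims=True)      # P(X = x, Y = y)
    p_yz = p.sum(axis=0, keepdims=True)      # P(Y = y, Z = z)
    p_y = p.sum(axis=(0, 2), keepdims=True)  # P(Y = y)
    support = p > 0
    with np.errstate(divide="ignore", invalid="ignore"):
        ratio = p * p_y / (p_xy * p_yz)
    te = np.sum(p[support] * np.log(ratio[support]))
    theta = np.sum(p[support] * (ratio[support] - 1.0))
    return te, theta


# Conditionally independent case: p(x, y, z) = p(y) p(x | y) p(z | y).
marg_y = np.array([0.4, 0.6])
x_given_y = np.array([[0.3, 0.7], [0.8, 0.2]])  # rows indexed by y
z_given_y = np.array([[0.5, 0.5], [0.1, 0.9]])  # rows indexed by y
p_ci = np.einsum("y,yx,yz->xyz", marg_y, x_given_y, z_given_y)
print(te_and_theta(p_ci))  # both zero up to rounding

# Z copies X and Y is independent noise: TE = log 2 and theta = 1.
p_dep = np.zeros((2, 2, 2))
for xv in range(2):
    for yv in range(2):
        p_dep[xv, yv, xv] = 0.25
print(te_and_theta(p_dep))
```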

Theorem 2.

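The transfer entropy $\vartheta$, as a functional of the joint density of $(X, Y, Z)$, is positive definite; that is, $\vartheta \ge 0$, with equality if and only if $f_{X,Y,Z}(x,y,z)/f_{X,Y}(x,y) = f_{Y,Z}(y,z)/f_Y(y)$ for all $(x,y,z)$ in the support of $W$.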

Proof.

See Appendix A.2. □

Theorem 2 indicates that the divergence measure $\vartheta$ has the desirable property of positive definiteness, which the measure $q$ used in the DP test lacks. However, direct estimation of Equation (11) does not lead to a practically useful test statistic without its asymptotic distribution. In the next subsection we show that the nonparametric estimator of $\vartheta$ is asymptotically normal. The key to this result is that the DP statistic and the newly proposed statistic only differ in terms of the weight function in Equation (4), and that the proof of asymptotic normality of the DP test can be easily adjusted to accommodate this new weight function.

2.5. A Modified DP Test

In comparing Equations (3) and (4), it can be seen that the discrepancy between $H_0$ and its implication $H_0'$ ($q = 0$) arises from incorporating the weight function $g$ into the null hypothesis. In principle other positive functions can be used, such as those discussed by DP. As long as the corresponding estimator of the divergence measure has a U-statistic representation, asymptotic normality follows from the theory of U-statistics. In particular, we propose to modify the DP test by dividing all terms in the expectation of Equation (5) by the function $f_{X,Y}(X,Y)\,f_{Y,Z}(Y,Z)$, since then, by Theorem 2,

$$E\left[\frac{f_{X,Y,Z}(X,Y,Z)\,f_Y(Y) - f_{X,Y}(X,Y)\,f_{Y,Z}(Y,Z)}{f_{X,Y}(X,Y)\,f_{Y,Z}(Y,Z)}\right] = 0 \qquad (12)$$

is not just implied by $H_0$, but equivalent to it.

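One can also think of Equation (12) as the result of plugging a different weight function into Equation (4). With the choice $g(x,y,z) = f_Y^2(y)/\left(f_{X,Y}(x,y)\,f_{Y,Z}(y,z)\right)$ instead of $g(x,y,z) = f_Y^2(y)$, which was used by DP, Equation (12) simplifies to

$$E\left[\frac{f_{X,Y,Z}(X,Y,Z)\,f_Y(Y)}{f_{X,Y}(X,Y)\,f_{Y,Z}(Y,Z)} - 1\right] = 0, \qquad (13)$$

which states that the first order Taylor expansion $\vartheta$ in Equation (11) vanishes, and is hence equivalent to $H_0$ by Theorem 2. To estimate $\vartheta$, we propose to use the following statistic, with the density estimator $\hat f$ defined in Equation (6):

$$T_n^{\mathrm{TE}}(h) = \frac{n-1}{n(n-2)}\sum_{i}\frac{\hat f_{X,Y,Z}(X_i,Y_i,Z_i)\,\hat f_Y(Y_i) - \hat f_{X,Y}(X_i,Y_i)\,\hat f_{Y,Z}(Y_i,Z_i)}{\hat f_{X,Y}(X_i,Y_i)\,\hat f_{Y,Z}(Y_i,Z_i)}. \qquad (14)$$

The reason for estimating $\vartheta$ in this form is that, with the sample statistic $T_n^{\mathrm{TE}}(h)$, we can obtain a third order U-statistic representation, similar to that for the DP test statistic, from which asymptotic normality follows.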
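The modified statistic differs from the DP sketch only in dividing each summand by $\hat f_{X,Y}(X_i,Y_i)\,\hat f_{Y,Z}(Y_i,Z_i)$, i.e., it is the normalized sum over $i$ of $(\hat f_{X,Y,Z}\hat f_Y - \hat f_{X,Y}\hat f_{Y,Z})/(\hat f_{X,Y}\hat f_{Y,Z})$. A minimal sketch for single lags follows, assuming NumPy and the Gaussian product kernel; the names `loo_kde` and `te_statistic` are hypothetical, and the HAC variance estimator needed for the standardized test is not included.

```python
import numpy as np


def loo_kde(data, h):
    """Leave-one-out Gaussian product-kernel density estimates (Equation (6))."""
    w = np.asarray(data, dtype=float)
    if w.ndim == 1:
        w = w[:, None]
    n, d = w.shape
    u = (w[:, None, :] - w[None, :, :]) / h
    k = np.exp(-0.5 * np.sum(u**2, axis=2)) / (2.0 * np.pi) ** (d / 2.0)
    np.fill_diagonal(k, 0.0)
    return k.sum(axis=1) / ((n - 1) * h**d)


def te_statistic(x, y, h):
    """Sketch of the modified DP statistic with Z_i = Y_{i+1} (single lags)."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    xs, ys, zs = x[:-1], y[:-1], y[1:]
    n = len(zs)
    f_xyz = loo_kde(np.column_stack([xs, ys, zs]), h)
    f_xy = loo_kde(np.column_stack([xs, ys]), h)
    f_yz = loo_kde(np.column_stack([ys, zs]), h)
    f_y = loo_kde(ys, h)
    denom = f_xy * f_yz  # estimated weight denominator; never zero for a Gaussian kernel
    return (n - 1) / (n * (n - 2)) * np.sum((f_xyz * f_y - denom) / denom)


rng = np.random.default_rng(4)
x = rng.standard_normal(300)
y = rng.standard_normal(300)
print(te_statistic(x, y, h=0.8))
```

Because each summand is a ratio minus one, bounded marginals (e.g., after the probability integral transform) help keep the denominators away from numerical underflow.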

The asymptotic normality of $T_n^{\mathrm{TE}}(h)$ is stated in Theorem 3 below, which relies on the following two lemmas concerning the uniform consistency of density estimators.

Lemma 1.

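(Uniform consistency of $\hat f$) Let $\{W_t\}$ be a stationary sequence of 3-variate random variables with a continuous and bounded Lebesgue density $f$, satisfying the strong mixing conditions in Assumption 2 of [37]. If, for the estimation of $f$ based on the first $n$ values $W_1, \ldots, W_n$, the kernel density estimator $\hat f$ of Equation (6) is used with kernel function $K$ and $n$-dependent bandwidth $h = Cn^{-\beta}$, $C > 0$, where $\beta$ satisfies the bandwidth rate condition of Theorem 7 in [37], and $K$ is bounded and integrable, then

$$\sup_{w}\left|\hat f(w) - f(w)\right| \to 0 \quad \text{almost surely as } n \to \infty.$$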

Proof.

Lemma 1 is a special case of Theorem 7 in [37], which more generally concerns the uniform consistency of kernel estimators of $f$ and its derivatives. □

Lemma 1 provides uniform consistency with probability one for a class of kernel estimators of multivariate density functions. This generalizes the consistency result for univariate density estimation of [38,39] to the multivariate case with dependent observations. Note that to serve our purpose here we need uniform convergence, which is stronger than pointwise convergence. We refer to [40] for a detailed discussion of the differences between types of convergence.

We next consider $\tilde T_n(h)$, which differs from $T_n^{\mathrm{TE}}(h)$ in Equation (14) only in having $\hat f_{X,Y}(X_i,Y_i)\,\hat f_{Y,Z}(Y_i,Z_i)$ in the denominator replaced by the true unknown function $f_{X,Y}(X_i,Y_i)\,f_{Y,Z}(Y_i,Z_i)$.

Lemma 2.

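Under the conditions of Lemma 1, and subject to an additional condition, $\sqrt{n}\,T_n^{\mathrm{TE}}(h)$ and $\sqrt{n}\,\tilde T_n(h)$ have the same limiting distribution. More formally stated,

$$\sqrt{n}\left(T_n^{\mathrm{TE}}(h) - \tilde T_n(h)\right) \xrightarrow{p} 0.$$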

Proof.

See Equation (A.3). □

Theorem 3.

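If the bivariate time series $\{(X_t, Y_t)\}$ is strictly stationary and satisfies at least one of the mixing conditions (a), (b) or (c) in Theorem 1 of [41], the corresponding random vector $W$ satisfies the conditions of Lemmas 1 and 2, and the density estimation kernel has bandwidth $h = Cn^{-\beta}$ with $C > 0$ and $\beta \in (1/4, 1/3)$, then $T_n^{\mathrm{TE}}(h)$ is asymptotically normally distributed. In particular,

$$\sqrt{n}\,\frac{T_n^{\mathrm{TE}}(h) - \vartheta}{S_n^{\mathrm{TE}}} \xrightarrow{d} N(0,1),$$

where $(S_n^{\mathrm{TE}})^2$ is an HAC estimator of the long-run variance of $\sqrt{n}\,T_n^{\mathrm{TE}}(h)$.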

Proof.

See Appendix A.4. □

When implementing the test based on Equation (14), some comments regarding the treatment of the marginals are in order. Note that $\vartheta$ is invariant under invertible smooth transformations of the marginals, due to the form of Equation (13), assuming that $X$ and $Y$ are continuous (the ratio of densities of the same variables is invariant under marginal transforms). Therefore, the dependence structure between $X$ and $Y$ remains intact under invertible marginal transforms. Although our testing framework does not depend crucially on the restrictive assumption of uniformly distributed time series as in [20,42], we recommend applying the probability integral transformation (PIT) to each of the marginals, as suggested by DP, since this usually improves the performance of statistical dependence tests. The reason is that, in contrast with computing the test statistics directly on the original data, the bounded support obtained after transforming the marginals to a uniform distribution avoids non-existing moments in the bias and variance evaluation, which helps to stabilize the test statistic. There are alternative ways to transform the marginal variables to a bounded support, for example by using a logistic function as in [20]. Here, we decided to simply apply the PIT, as it does not require any user-specified parameters and always leads to identical (uniform) marginals. The procedure is to replace each observation of the original series $X_t$ ($Y_t$) by the value of the empirical CDF of $X_t$ ($Y_t$) at that observation, so that the empirical distribution of the transformed series is uniform.
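For continuous data without ties, the empirical CDF evaluated at each observation is simply its rank divided by the sample size, so the PIT step can be sketched in a few lines. This is a minimal sketch assuming NumPy; the function name `pit` is hypothetical, and ties (which have probability zero for continuous data) are broken by order of appearance.

```python
import numpy as np


def pit(series):
    """Empirical probability integral transform: each value -> empirical CDF at that value.

    Assumes continuous data (no ties), so the result is rank / n with ranks 1..n.
    """
    s = np.asarray(series, dtype=float)
    ranks = np.argsort(np.argsort(s, kind="stable"), kind="stable") + 1
    return ranks / len(s)


print(pit([3.0, 1.0, 2.0]))  # ranks 3, 1, 2 divided by n = 3
```

Because only ranks are used, the transformed series is unchanged by any strictly increasing transformation of the original data, in line with the invariance of $\vartheta$ noted above.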

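Since the transfer entropy-based measure $\vartheta$ is non-negative, tests based on the statistic $T_n^{\mathrm{TE}}(h)$ are implemented as one-sided tests, rejecting the null hypothesis if $\sqrt{n}\,T_n^{\mathrm{TE}}(h)/S_n^{\mathrm{TE}} > z_{1-\alpha}$, where $z_{1-\alpha}$ is the $(1-\alpha)$th quantile of the standard normal distribution for a given significance level $\alpha$.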
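The one-sided decision rule can be sketched with the Python standard library alone. The sketch assumes that the statistic and its standard error $S_n$ have already been computed (the HAC estimator is not shown); the function name `one_sided_test` is hypothetical. It also reports the asymptotic p-value $1 - \Phi(z)$.

```python
from statistics import NormalDist


def one_sided_test(t_n, s_n, n, alpha=0.05):
    """Reject H0 if sqrt(n) * T_n / S_n exceeds the standard normal (1 - alpha) quantile.

    t_n: value of the test statistic; s_n: standard error S_n (assumed given).
    Returns (reject, z, p_value).
    """
    z = (n ** 0.5) * t_n / s_n
    critical = NormalDist().inv_cdf(1.0 - alpha)  # z_{1-alpha}
    p_value = 1.0 - NormalDist().cdf(z)
    return z > critical, z, p_value


print(one_sided_test(t_n=0.1, s_n=1.0, n=400))  # z = 2.0 exceeds z_0.95 of about 1.645
```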

2.6. Bandwidth Selection

In nonparametric settings, there typically is no uniformly most powerful test against all alternatives. Hence, it is unlikely that a uniformly optimal bandwidth exists. As long as the bandwidth tends to zero at an appropriate rate as $n \to \infty$, our test has unit asymptotic power. Yet, we may define the optimal bandwidth in the sense of asymptotically minimal mean squared error (MSE). When balancing the first and fourth leading terms in Equation (A5) to minimize the sum of squared bias and variance, for a second order kernel, it is easy to show that the optimal bandwidth for the DP test is given by

with and the series expansion for the second moment of kernel function and expectation of bias, respectively. Since the convergence rate of the MSE, derived in Equation (A.4), is not affected by the way we construct the new test statistic, the derivation of Equation (15) remains intact and we calibrated the optimal bandwidth for our new test, finding

where the scale factor involved is a result of bias and variance adjustment for replacing the square kernel by the Gaussian kernel (the variance of the uniform DP kernel was , which we rounded off to ). Intuitively, the and terms are different from those for the DP test; more details can be found in Equation (A.5).
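The $n^{-2/7}$ rate underlying this kind of MSE-optimal bandwidth can be illustrated with a short derivation. It assumes, for illustration only, that the two balanced terms scale as $c_1 h^4$ (squared bias of a second order kernel) and $c_2 n^{-2} h^{-3}$ (variance contribution for $d_W = 3$), where $c_1, c_2 > 0$ are generic placeholder constants rather than the expressions derived in the Appendix:

$$\mathrm{MSE}(h) \approx c_1 h^4 + c_2 n^{-2} h^{-3}, \qquad \frac{d\,\mathrm{MSE}(h)}{dh} = 4c_1 h^3 - 3c_2 n^{-2} h^{-4} = 0 \;\Longrightarrow\; h^* = \left(\frac{3c_2}{4c_1}\right)^{1/7} n^{-2/7}.$$

Under these assumptions the exponent $2/7 \approx 0.286$ lies inside the interval $(1/4, 1/3)$ used by DP, so the MSE-optimal rate is compatible with the bandwidth conditions for asymptotic normality.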

The optimal value for C is process-dependent and difficult to track analytically. For example, for a (G)ARCH process the optimal bandwidth is approximately given by where (see DP). Applying Equation (16), we proceed with for (G)ARCH processes. To gain some insights into the bandwidth, we illustrate the test size and power with a 2-variate ARCH process, given by

We let and run 5000 Monte Carlo simulations for time series lengths varying from 200 to 5000. The size is assessed by testing for Granger non-causality from to , and for the power we use the same process but test for Granger non-causality from to . The results are presented in Table 1, which shows that the modified DP test is conservative, in the sense that its empirical size is below the nominal size of 0.05 in all cases, while the power increases with a and with the sample size.

Table 1.

Observed size and power of the test for the bivariate ARCH process in Equation (17).

3. Size/Power Simulations

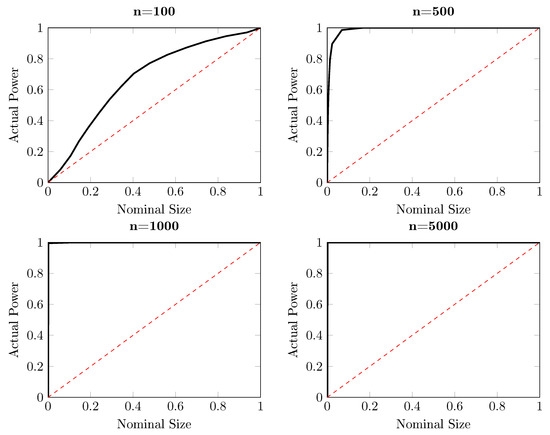

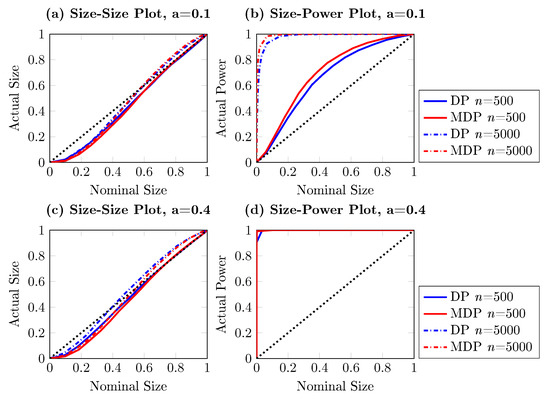

This section investigates the performance of the modified DP test. Before proceeding with new data generating processes, we first revisit the example in Equation (9), for which the DP test fails to detect the Granger causal relation. The modified DP test is performed with replications, with the same bandwidth. The counterpart of the size–power plots for the DP test in Figure 2 is shown in Figure 3. In contrast with the lack of power of the DP test, for time series length and larger, the modified DP test already has very high power in this artificial experiment, as expected.

Figure 3.

Size–power plots for the one-sided modified DP test for the artificial process for , for which , based on independent replications. Each subplot shows the observed power against the nominal size for different sample sizes, ranging from 100 to 5000. The solid curve represents the observed power and the red dashed line corresponds to the diagonal, indicating the nominal size of the test.

Next, we use numerical simulations to study the behavior of the modified DP test and compare it directly with the DP test. Three processes are considered. In the first experiment, we consider a simple bivariate VAR process, given by

The second process is designed as a nonlinear VAR process in Equation (19). Again, the size and power are investigated by testing for Granger non-causality in two different directions.

The last process is the same as the example we used for illustrating the performance of the bandwidth selection rule, which is a bivariate ARCH process also given in Equation (17),

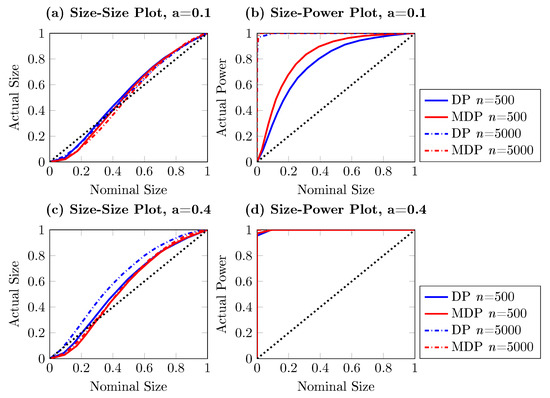

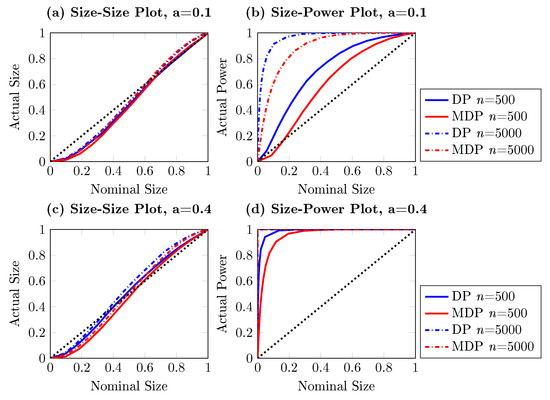

The results, which are shown in Figure 4, Figure 5 and Figure 6, are obtained with 5000 simulations for each process. We present empirical size–size and size–power plots for both the DP test and the modified DP test for the three processes in Equations (18)–(20), for sample sizes and , respectively. The control parameter a takes the values and . As before, the empirical size is obtained by testing for Granger non-causality from to , and the empirical power is the observed rejection rate of testing for Granger non-causality from to .
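The size–size and size–power plots report observed rejection frequencies against a grid of nominal sizes, in the spirit of the graphical methods of [27]. A minimal sketch of that computation follows, assuming upper-tail standard normal p-values consistent with the one-sided test; the function names are hypothetical, and the N(0, 1) draws merely stand in for simulated test statistics under the null.

```python
import random
from statistics import NormalDist


def one_sided_p_values(t_stats):
    """Upper-tail standard normal p-values for one-sided tests."""
    std_normal = NormalDist()
    return [1.0 - std_normal.cdf(t) for t in t_stats]


def rejection_rates(p_values, nominal_sizes):
    """Observed rejection frequency at each nominal size: share of p-values <= alpha."""
    n = len(p_values)
    return [sum(p <= alpha for p in p_values) / n for alpha in nominal_sizes]


# Illustration only: N(0, 1) statistics mimic a correctly sized test under H0,
# so the size-size curve should lie close to the diagonal.
rng = random.Random(1)
null_stats = [rng.gauss(0.0, 1.0) for _ in range(5000)]
grid = [0.01, 0.05, 0.10]
sizes = rejection_rates(one_sided_p_values(null_stats), grid)
```

Applying `rejection_rates` to p-values simulated under the null gives the size–size curve; applying it to p-values simulated under the alternative gives the size–power curve.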

Figure 4.

Size–size and size–power plots of Granger non-causality tests, based on 5000 simulations. The DGP is bivariate nonlinear vector autoregressive (VAR) as in Equation (19), with Granger causing . The left (right) column shows observed rejection rates under the null (alternative) hypothesis; the blue lines stand for the DP test, while the red lines indicate the modified DP test. The solid line and dashed line present results for sample size and , respectively.

Figure 5.

Size–size and size–power plots of Granger non-causality tests, based on 5000 simulations. The DGP is the bivariate linear VAR of Equation (18), with Granger causing . The left (right) column shows observed rejection rates under the null (alternative) hypothesis; the blue lines stand for the DP test, while the red lines indicate the modified DP test. The solid line and dashed line present results for sample size and , respectively.

Figure 6.

Size–size and size–power plots of Granger non-causality tests, based on 5000 simulations. The DGP is bivariate ARCH as in Equation (20), with Granger causing . The left (right) column shows observed rejection rates under the null (alternative) hypothesis; the blue lines stand for the DP test, while the red lines indicate the modified DP test. The solid line and dashed line present results for sample size and , respectively.

It can be seen from Figure 4, Figure 5 and Figure 6 that the modified DP test is slightly more conservative than the DP test under the null hypothesis. However, the size distortion decreases as the sample size increases. The modified DP test is more powerful than the DP test in the linear and nonlinear VAR settings given in Equations (18) and (19). Overall, the larger the sample size and the stronger the causal effect, the better the modified DP test performs.

4. Empirical Illustration

4.1. Stock Volume–Return Relation

In this section, we first revisit the stock return–volume relation considered in [8] and DP. This topic has a long research history. Early empirical work mainly focused on the positive correlation between volume and stock price changes; see [43]. Later literature uncovered directional relations; for example, [44] found that large price movements are followed by high volume. The authors of [45] observed a high-volume return premium; namely, periods of extremely high (low) volume tend to be followed by positive (negative) excess returns. More recently, [46] investigated the power-law cross-correlations between price changes and volume changes of the S&P 500 Index over a long period.

We use daily volume and returns data for the three most-followed indices in US stock markets, the Standard and Poor’s 500 (S&P), the NASDAQ Composite (NASDAQ) and the Dow Jones Industrial Average (DJIA), between January 1985 and October 2016. The daily volume and adjusted daily closing prices were obtained from Yahoo Finance. The time series were converted into log returns, multiplied by 100. In order to adjust for day-of-the-week and month-of-the-year seasonal effects in both the mean and variance of stock returns and volumes, we performed a two-stage adjustment process, similar to the procedure applied in [8]. We replace Akaike’s information criterion used by [8] with the Schwarz information criterion [47], which is more stringent in selecting variables; we have no intention of provoking a debate over the two criteria, but simply prefer a more parsimonious linear model to avoid potential overfitting. We apply our test not only to the raw data, but also to VAR-filtered residuals and EGARCH(1,1,1)-filtered residuals. We have tried different error distributions, such as the normal, Student’s t, GED and Hansen’s skewed t [48]. The differences caused by different distributional assumptions are small; due to space considerations, we only report the results based on the Student’s t distribution. The VAR and EGARCH filters are intended to remove linear dependence and the effect of heteroskedasticity, respectively, so as to isolate the nonlinear and higher-moment relationships among the series.
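Two of the preprocessing steps mentioned above can be sketched as follows: log returns multiplied by 100, and choosing the VAR lag order by the Schwarz criterion on a common estimation sample so that all candidate orders are compared on the same observations. The two-stage seasonal adjustment of [8] and the EGARCH filtering are not shown; the function names, the maximum lag and the OLS-with-intercept specification are our assumptions, not the authors' code.

```python
import numpy as np


def log_returns_pct(prices):
    """Log returns multiplied by 100."""
    prices = np.asarray(prices, dtype=float)
    return 100.0 * np.diff(np.log(prices))


def var_sic(data, p, max_p):
    """Schwarz criterion of a VAR(p) with intercept, fitted by OLS on rows max_p, ..., T-1."""
    T, k = data.shape
    Y = data[max_p:]
    lags = [data[max_p - j:T - j] for j in range(1, p + 1)]  # lag-j regressors
    X = np.hstack([np.ones((T - max_p, 1))] + lags)
    coef, *_ = np.linalg.lstsq(X, Y, rcond=None)
    resid = Y - X @ coef
    n_obs = T - max_p
    sigma = resid.T @ resid / n_obs
    n_params = k * (k * p + 1)
    return np.log(np.linalg.det(sigma)) + n_params * np.log(n_obs) / n_obs


def select_var_order(data, max_p=8):
    """Lag order in 1..max_p minimising the Schwarz criterion."""
    data = np.asarray(data, dtype=float)
    return min(range(1, max_p + 1), key=lambda p: var_sic(data, p, max_p))
```

The heavier log(n) penalty of the Schwarz criterion, compared with the constant penalty of Akaike's criterion, is what makes it favor the more parsimonious VAR mentioned in the text.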

Table 2, Table 3 and Table 4 report the resulting t-statistics for both the DP test and our modified DP test in both directions. The linear Granger F-values based on the optimal VAR models are also given. Two bandwidth values are used (after standardization): the smaller value roughly corresponds to the derived optimal bandwidth, and the larger one, also used in DP, is added as a robustness check.

Table 2.

Test Statistics for the Standard and Poor’s 500 (S&P 500) returns and volume data. ‘MDP’ stands for ‘modified DP’.

Table 3.

Test Statistics for the NASDAQ returns and volume data.

Table 4.

Test Statistics for the Dow Jones Industrial Average (DJIA) returns and volume data. ‘MDP’ stands for ‘modified DP’.

Generally speaking, the results indicate that the effect from returns to volume is stronger than the reverse effect. For the raw data, the F-tests based on the linear VAR model and both nonparametric tests provide evidence of returns affecting volume for all three indices. In the other direction, from volume to returns, the linear Granger test finds no evidence of a causal impact, while the nonparametric tests indicate a strong causal effect, except for the DJIA, where only the modified DP test finds a causal link from volume to returns. As argued above, the results of the linear test should be interpreted with caution, since it only examines linear causal effects in the conditional mean; information transfer through higher moments is completely ignored.

A direct comparison between the DP test and the modified DP test shows that the new test is more powerful overall. For the unfiltered data, both tests find a strong causal effect in both directions for the S&P and NASDAQ, but for the DJIA, the t-statistics of the DP test are smaller than those of the modified DP test. The bi-directional causality between returns and volume remains after linear VAR filtering, although the DP test again shows weaker evidence. This result also suggests that the causality is strictly nonlinear; the linear F-test is unable to detect these nonlinear linkages.

Further, in the direction from volume to returns, these nonlinear causalities tend to vanish after EGARCH filtering. Thus, the bi-directional linkage is reduced to a one-directional relation from returns to volume. The modified DP statistics, however, are generally larger than the DP t-values and indicate more causal relations. In contrast with the DP test, our test suggests that the observed nonlinear causality cannot be attributed entirely to second-moment effects. Modeling heteroskedasticity may reduce this nonlinear feature to some extent, but its impact is not as strong as the DP test would suggest.

4.2. Application to Intraday Exchange Rates

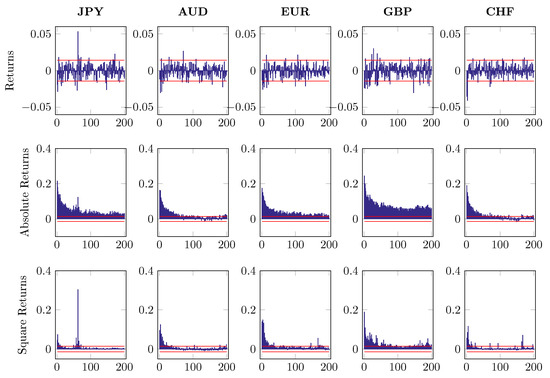

In the second application, we apply the modified DP test to intraday exchange rates. We consider five major currencies, JPY, AUD, GBP, EUR and CHF, all against the USD. The data, obtained from the Dukascopy Historical Data Feed, contain 5-min bid and ask quotes for the third quarter of 2016, from July 1 to September 30, covering 92 days and a total of 26,496 high-frequency observations. We use 5-min data, corresponding to a sampling frequency of 288 quotes per day, which is high enough to limit measurement errors (see [49]) but also low enough for market microstructure effects not to be a major concern.

Although the foreign exchange market is one of the most active financial markets in the world, with trading taking place 24 h per day, trading is thin during some periods. We therefore delete the thin-trading weekend period, from Friday 21:00 GMT until Sunday 20:55 GMT, which also keeps the intraday periodicity intact. We calculate the exchange rate returns as in [50]. First, the average of the log bid and log ask prices is calculated; then the differences between the log prices at consecutive times are obtained. Next, following [51], we remove the conditional mean dynamics by fitting an MA(1) model and using its residuals as our return series. Finally, following [50], intraday seasonal effects are filtered out using estimated time-of-day dummies, i.e.,

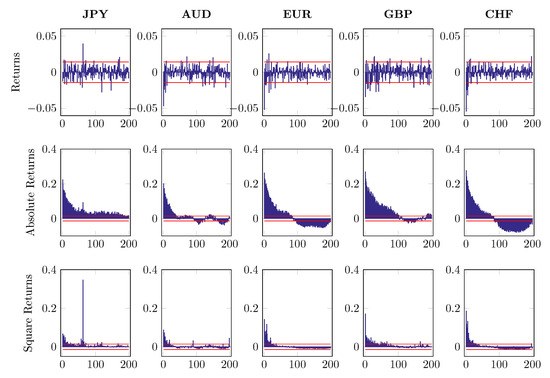

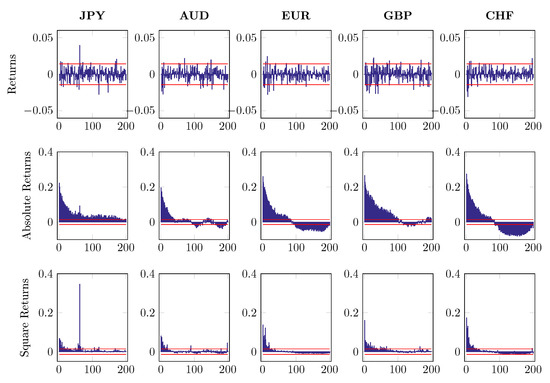

where denotes intraday log returns after MA(1) filtering. The subscript indicates five different currencies and stands for time t on day n. The first component of the return series is a deterministic intraday seasonal component, while is the nonseasonal return component, which is assumed to be independent of . To distinguish from , we fit the time-of-day dummies to and use the estimated to standardize the return with the restriction . Figure 7, Figure 8 and Figure 9 report the first 200 autocorrelations of returns, absolute returns and squared returns for the raw series, the MA(1) residuals and the EGARCH residuals, respectively.
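The return construction and the time-of-day standardization can be sketched as below. This is an illustration under stated assumptions, not the authors' procedure: the MA(1) filtering step is omitted, and the exact dummy regression used in the text is not recoverable from this version. We assume (hypothetically) that the squared seasonal factor for each 5-min slot is the mean squared return in that slot, which is what an OLS regression of squared returns on a full set of time-of-day dummies without intercept produces, normalized so that the squared factors average to one. Because each deleted weekend block spans exactly 48 hours (576 slots), the slot index modulo 288 stays aligned after deletion.

```python
import numpy as np


def log_mid_returns(bid, ask):
    """5-min returns: average of log bid and log ask, then first differences."""
    log_mid = 0.5 * (np.log(np.asarray(bid, dtype=float)) + np.log(np.asarray(ask, dtype=float)))
    return np.diff(log_mid)


def deseasonalize(returns, slots_per_day=288):
    """Standardize returns by time-of-day scale factors (hypothetical normalization).

    Assumes returns[0] falls in intraday slot 0 and every slot is observed at least once.
    s_k**2 is the mean squared return in slot k, rescaled so that mean(s**2) == 1.
    """
    r = np.asarray(returns, dtype=float)
    slot = np.arange(len(r)) % slots_per_day
    counts = np.bincount(slot, minlength=slots_per_day)
    mean_sq = np.bincount(slot, weights=r ** 2, minlength=slots_per_day) / counts
    s = np.sqrt(mean_sq / mean_sq.mean())
    return r / s[slot], s
```

After this rescaling every intraday slot has the same average squared return, so the deterministic intraday pattern no longer shows up as spurious periodic autocorrelation in absolute or squared returns.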

Figure 7.

Autocorrelations of returns, absolute returns and squared returns, up to 200 lags.

Figure 8.

Autocorrelations of returns, absolute returns and squared returns after the MA(1) component is removed, up to 200 lags.

Figure 9.

Autocorrelations of returns, absolute returns and squared returns after MA(1) and EGARCH filtering, up to 200 lags.

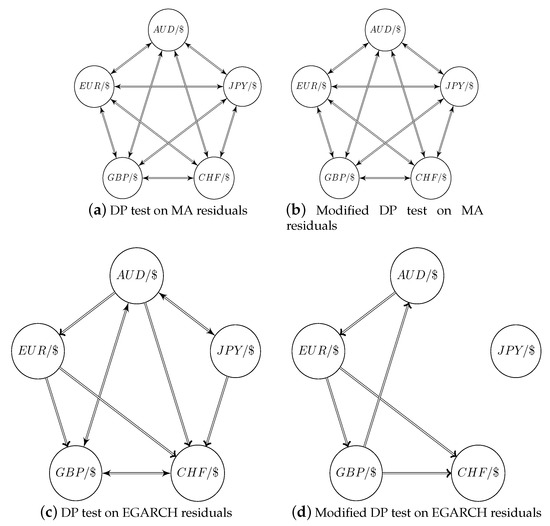

We perform pairwise nonparametric Granger causality tests on the MA(1) filtered and seasonally adjusted data, as well as on the standardized residuals after EGARCH(1,1,1) filtering. We use the skewed t distribution introduced in [48] to model the innovation terms. We choose a bandwidth of 0.2768, according to Equation (16).

The test results are shown in Table 5 for both the MA(1) de-meaned and de-seasonalized data and the EGARCH-filtered data. Although not reported here, there is statistical evidence of strong bi-directional causality among all currency pairs in the raw return data at the 5-min lag. These bi-directional causalities do not disappear after removing the MA(1) and seasonal components. However, the observed information spillover is considerably weaker after EGARCH filtering. When testing based on the EGARCH standardized residuals, only a few pairs still show signs of a strong causal relation. In particular, the directional relation of is the only one detected by both the DP test and the modified DP test at the 1% level of significance. A graphical representation is provided in Figure 10, where one can clearly see that most causal links are gone after EGARCH filtering. The modified DP test exposes five uni-directional linkages among the EGARCH-filtered returns at the 5% level. The and are the most important driving currencies. The DP test also points to the importance of and particularly , and it shows bi-directional causality between and .

Table 5.

Test statistics for pairwise Granger causality on the filtered exchange rate returns (MA(1) and seasonally filtered, and EGARCH filtered).

Figure 10.

Graphical representation of pairwise causalities on MA and seasonally filtered residuals, as well as EGARCH filtered residuals. The arrows in the graph indicate a directional causality at the 5% level of significance.

To sum up, we find evidence of strong causal links among exchange rate returns at an intraday high-frequency timescale. Each currency has predictive power for the other currencies, implying strong co-movement in the international foreign exchange market. Although these directional linkages are not affected by the de-meaning procedure, most of them disappear once the volatility dynamics are taken into account. After filtering out heteroskedasticity by EGARCH estimation, only a few pairs still exhibit spillover effects.

5. Summary and Conclusions

Borrowing the concept of transfer entropy from information theory, this paper develops a novel nonparametric test statistic for Granger non-causality. The asymptotic normality of the test statistic is derived by taking advantage of a U-statistic representation, similar to that applied in the DP test. The modified DP statistic, however, improves on the DP statistic in at least two respects: firstly, the positive definiteness of the quantity on which the test statistic is based paves the way for properly testing for differences between conditional densities; secondly, the weight function in our test is motivated from an information-theoretical point of view, while the weight function in the DP test was selected in an ad hoc manner.

The simulation study confirms that the modified DP test has good size and power properties for the data generating processes considered. In the first application, a direct comparison with the DP test suggests that the DP test may suffer from a lack of power for specific processes, while the second application, to high-frequency exchange rate returns, helps us better understand whether the spillover channel in exchange rate markets arises from the conditional mean, the conditional variance or higher conditional moments. Obvious extensions for future work include the incorporation of additional lags of the variables and a generalization to higher-dimensional settings, allowing for conditioning on additional, possibly confounding, variables.

Author Contributions

C.D. and H.F. contributed equally to this work. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Acknowledgments

The authors thank seminar participants at the University of Amsterdam and the Tinbergen Institute, as well as participants of the 10th International Conference on Computational and Financial Econometrics (Seville, December 09-11, 2016), the 25th Annual Symposium of the Society for Nonlinear Dynamics and Econometrics (Paris, Mar 30-31, 2017) and the 2017 International Association for Applied Econometrics Conference (Sapporo, Jun 26-30, 2017). The authors wish to extend particular gratitude to Simon Broda and Chen Zhou for their comments on an earlier version of this paper; and to SURFsara for providing the LISA cluster. Hao Fang is grateful to SNDE and IAAE for their conference support.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

Appendix A.1. Proof of Positive-Definiteness of the Transfer Entropy

According to the definition of TE in Equation (14), TE is the expectation of the logarithm of a density ratio. Defining the reciprocal of this density ratio as a random variable R, we can rewrite the TE as $\mathrm{TE} = -E[\log R]$. Since $\log R$ is a concave function of R, Jensen’s inequality gives

Next, note that the random variable R is non-negative, since it is defined as a ratio of densities. For any realization $r$ of $R$, $\log r \le r - 1$. This is because the concave function $\log$ is bounded from above by its tangent line at the point (1,0), which is given by $r - 1$. It follows that

On combining Equations (A1) and (A2), we have $\mathrm{TE} \ge 1 - E[R] = 0$, where the last equality holds because $E[R]$ is the integral of a probability density over its full support, which equals 1. A similar argument can be found in [52]. Thus, we have proved that $\mathrm{TE} \ge 0$. The equality holds if and only if $R = 1$ almost surely, which is equivalent to the null hypothesis of Granger non-causality. This completes the proof of Equation (3).
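For reference, the chain of inequalities can be written out explicitly. The notation is our assumption (DP-style, with $Z$ the one-step-ahead value of the target series, expectations taken under $f_{X,Y,Z}$, and all densities positive on a common support); the first inequality is Jensen’s inequality and the second applies $\log u \le u - 1$ at $u = E[R]$.

```latex
\begin{align*}
R &= \frac{f_{X,Y}(X,Y)\,f_{Y,Z}(Y,Z)}{f_{X,Y,Z}(X,Y,Z)\,f_Y(Y)},
  \qquad \mathrm{TE} = -E[\log R], \\
\mathrm{TE} &= -E[\log R] \;\ge\; -\log E[R] \;\ge\; 1 - E[R], \\
E[R] &= \int \frac{f_{X,Y}(x,y)\,f_{Y,Z}(y,z)}{f_Y(y)}\,dx\,dy\,dz
      = \int f_{X\mid Y}(x\mid y)\,f_{Y,Z}(y,z)\,dx\,dy\,dz = 1 .
\end{align*}
```

Hence $\mathrm{TE} \ge 0$, with equality if and only if $f_{X,Y,Z}/f_{X,Y} = f_{Y,Z}/f_Y$, i.e. the conditional density of $Z$ given $(X,Y)$ does not depend on $X$.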

Appendix A.2. Proof of Positive-Definiteness of the First-Order TE Statistic

Starting from Equation (11) and the definition of t,

with equality if and only if $t = 0$ at every point in the support of the joint density. In the fourth step, we completed the square using the fact that the integral of a pdf over its whole support is 1. Finally, the non-negativity follows from the integrand being non-negative.
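The completing-the-square step can be made explicit under an assumed notation (ours, in the DP style of the previous sketch): write $g = f_{X,Y} f_{Y,Z}/f_Y$ and assume $t = f_{X,Y,Z}/g - 1$, so that the first-order expansion $\log(1+t) \approx t$ replaces the TE by $E[t]$. Using $\int f_{X,Y,Z} = \int g = 1$ to write $-1 = -2 + 1$:

```latex
\begin{align*}
E[t] &= \int \Big(\frac{f_{X,Y,Z}}{g} - 1\Big) f_{X,Y,Z}
      = \int \frac{f_{X,Y,Z}^2}{g} - 2\int f_{X,Y,Z} + \int g \\
     &= \int \frac{\big(f_{X,Y,Z} - g\big)^2}{g} \;\ge\; 0 .
\end{align*}
```

The integrand vanishes everywhere exactly when $f_{X,Y,Z} = g$, which is the Granger non-causality null.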

Appendix A.3. Proof of Lemma 2

For a vector , let

and

By Equation (1), uniformly, as , but also and uniformly by the continuous mapping theorem.

Write and for and its estimator based on , and likewise

and

One may write and . We then find that, up to higher-order terms in resulting from the factor in Equation (14),

The squared difference satisfies

by the uniform convergence of . It follows that

if .

Appendix A.4. Asymptotic Distribution of the Modified DP Statistic

Applying Lemma 2, it remains to be proven that

According to the definition of , Equation (14) is a re-scaled DP statistic with the scaling factor . In a manner similar to Theorem 1 in DP, we can obtain the asymptotic behavior of by making use of the optimal mean squared error (MSE) bandwidth developed in [53] for this point estimator. For the moment, we consider the case where the vectors are independent and identically distributed. We later allow for weak dependence in the time series context, as described at the end of this section.
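Since the argument builds on DP’s construction, a direct implementation of the original DP statistic may help fix ideas. The sketch assumes single lags, the square kernel with the sup norm as in DP, and series standardized beforehand; it computes DP’s $T_n(\varepsilon) = \frac{n-1}{n(n-2)}\sum_i \big(\hat f_{X,Y,Z}\hat f_Y - \hat f_{X,Y}\hat f_{Y,Z}\big)$, not the modified statistic of Equation (14), whose extra scaling we do not reproduce. The function name is hypothetical, and the pairwise-distance array needs O(n²) memory, so this is only practical for a few thousand observations.

```python
import numpy as np


def dp_statistic(x, y, eps):
    """DP statistic T_n(eps) for H0: x does not Granger-cause y, with one lag of each series.

    Local densities use the square kernel I(||w_i - w_j||_sup < eps), leaving out j == i.
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    X, Y, Z = x[:-1], y[:-1], y[1:]  # W_i = (X_i, Y_i, Z_i) with Z_i = Y_{i+1}
    n = len(Z)

    def local_density(*cols):
        # f_hat(W_i) = (2 eps)^(-d) / (n - 1) * #{j != i : ||W_i - W_j||_sup < eps}
        pts = np.column_stack(cols)
        dist = np.max(np.abs(pts[:, None, :] - pts[None, :, :]), axis=2)
        close = (dist < eps).astype(float)
        np.fill_diagonal(close, 0.0)  # exclude j == i
        return close.sum(axis=1) / ((n - 1) * (2.0 * eps) ** pts.shape[1])

    f_xyz = local_density(X, Y, Z)
    f_xy = local_density(X, Y)
    f_yz = local_density(Y, Z)
    f_y = local_density(Y)
    return (n - 1) / (n * (n - 2)) * np.sum(f_xyz * f_y - f_xy * f_yz)
```

Writing each local density as an average over j and multiplying two of them per i is what makes the statistic a (symmetrizable) U-statistic of degree three, as used below.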

The test statistic can be expressed as a U-statistic of degree three by symmetrization with respect to the indices . Further, defining two kernel functions as and , we adopt three mild conditions, adapted from [53], which control the rate of convergence of the point-wise bias as well as the series expansions of the kernel functions, namely

where all remainder terms are of higher order, i.e., , and and the convergence rate is controlled by the parameters , and . The conditions in Equation (A4) are satisfied if is set as the order of the kernel function , which is 2 for the Gaussian kernel, and , depend on the dimensions of the variables under consideration via and . Defining , one can show that the mean squared error of the DP statistic, as a function of the sample-size-dependent bandwidth, is given by


The scaling factor in the modified test statistic enters the MSE in Equation (A5) mainly by changing the bandwidth-independent variance term. For the other, bandwidth-dependent, terms, it merely rescales the coefficients without affecting the convergence rates. Thus, we may still allow for all the h-dependent terms to be to ensure that the -term asymptotically dominates (in which case asymptotic normality of the test statistic obtains). Therefore, adopting a sample-size-dependent bandwidth , with C, , one finds

where is a consistent estimator of the asymptotic variance . In the bivariate case with a single lag of each variable, this requires a bandwidth of the form $C n^{-\beta}$ with $\beta \in (1/4, 1/3)$. In the time series setting, under the assumption that the processes are stationary and satisfy at least one of the mixing conditions (a), (b) or (c) in Theorem 1 in [41], the long-run variance of is given by , where . The variance can then be estimated using an HAC estimator for the long-run variance of [41,54].
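For the HAC step, a minimal Newey–West (Bartlett-weighted) long-run variance estimator is sketched below. It would be applied to the series whose long-run variance is required (the symbol was lost in this version of the text; in DP it is, up to a constant, an estimate of the first-order projection of the U-statistic kernel at each observation). The truncation lag, for instance $K = \lfloor n^{1/4} \rfloor$, is a user choice and an assumption here, and the exact weights used in [41,54] may differ.

```python
def long_run_variance(series, max_lag):
    """Bartlett-weighted (Newey-West) estimate: gamma_0 + 2 * sum_k (1 - k / (K + 1)) * gamma_k."""
    n = len(series)
    mean = sum(series) / n
    dev = [value - mean for value in series]

    def autocov(k):
        # Sample autocovariance at lag k, normalised by n (keeps the estimate non-negative)
        return sum(dev[t] * dev[t + k] for t in range(n - k)) / n

    lrv = autocov(0)
    for k in range(1, max_lag + 1):
        weight = 1.0 - k / (max_lag + 1.0)
        lrv += 2.0 * weight * autocov(k)
    return lrv
```

With `max_lag=0` the estimator reduces to the ordinary sample variance, which corresponds to the independent case treated first in this section.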

Appendix A.5. Optimal Bandwidth for the Modified DP Test

The optimal bandwidth should balance the squared bias and variance of the test statistic, given in Equation (A5). In particular, the first and fourth terms are leading, and all remainders are of higher order. The optimal bandwidth should therefore have a form similar to that for the DP test in Equation (15).

In fact, the two bandwidths differ only by a scalar factor, as a result of replacing the square kernel by a Gaussian one. Assuming the product kernel in Equation (6), the bias and variance of the density estimator are described by the following [24,55]:

where is the th moment of a kernel function, with the corresponding order of the kernel. For the Gaussian kernel , . The function is the so-called roughness function of the kernel. For a k-dimensional vector, the multivariate density estimation is carried out with a bandwidth vector . It is not difficult to see that and defined in Equation (A4) depend on the kernel function used through the functions and .
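The scalar relation between square-kernel and Gaussian-kernel bandwidths can be illustrated with the standard univariate canonical-bandwidth rescaling described in [24], under which two second-order kernels smooth equivalently when bandwidths are scaled by $\delta(K) = (R(K)/\mu_2(K)^2)^{1/5}$. This is a univariate illustration and our own assumption; the multivariate scale factor entering Equation (16) need not coincide with it. The moments are computed numerically by the midpoint rule so that they can be checked against the closed forms $\mu_2 = 1/3$, $R = 1/2$ (square kernel on $[-1,1]$) and $\mu_2 = 1$, $R = 1/(2\sqrt{\pi})$ (Gaussian kernel).

```python
import math


def gaussian_kernel(u):
    return math.exp(-0.5 * u * u) / math.sqrt(2.0 * math.pi)


def square_kernel(u):
    # Uniform density on [-1, 1]; DP's indicator kernel corresponds to this with bandwidth eps
    return 0.5 if abs(u) < 1.0 else 0.0


def moments_midpoint(kernel, lo=-10.0, hi=10.0, steps=100_000):
    """Second moment mu_2(K) = int u^2 K(u) du and roughness R(K) = int K(u)^2 du (midpoint rule)."""
    h = (hi - lo) / steps
    mu2 = rough = 0.0
    for i in range(steps):
        u = lo + (i + 0.5) * h
        k = kernel(u)
        mu2 += u * u * k * h
        rough += k * k * h
    return mu2, rough


def canonical_bandwidth(mu2, rough):
    """Univariate canonical bandwidth delta(K) = (R(K) / mu_2(K)^2)^(1/5)."""
    return (rough / mu2 ** 2) ** 0.2


def convert_bandwidth(h, kernel_from, kernel_to):
    """Rescale h so that kernel_to smooths like kernel_from (univariate, second-order kernels)."""
    d_from = canonical_bandwidth(*moments_midpoint(kernel_from))
    d_to = canonical_bandwidth(*moments_midpoint(kernel_to))
    return h * d_to / d_from
```

In this univariate setting a square-kernel bandwidth translates into a Gaussian bandwidth roughly 0.575 times as large, because the Gaussian kernel has a much larger second moment per unit of bandwidth.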

Using the superscripts ‘G’ and ‘SQ’ to denote the Gaussian and square kernels respectively, [55] shows

References

- Granger, C.W. Investigating causal relations by econometric models and cross-spectral methods. Econometrica 1969, 37, 424–438.

- Bressler, S.L.; Seth, A.K. Wiener–Granger causality: A well established methodology. Neuroimage 2011, 58, 323–329.

- Ding, M.; Chen, Y.; Bressler, S.L. Granger causality: Basic theory and application to neuroscience. In Handbook of Time Series Analysis: Recent Theoretical Developments and Applications; John Wiley & Sons: New York, NY, USA, 2006; pp. 437–460.

- Guo, S.; Ladroue, C.; Feng, J. Granger causality: Theory and applications. In Frontiers in Computational and Systems Biology; Springer: Berlin, Germany, 2010; pp. 83–111.

- Barrett, A.B.; Barnett, L.; Seth, A.K. Multivariate Granger causality and generalized variance. Phys. Rev. E 2010, 81, 1–14.

- Granger, C.W.J. Forecasting in Business and Economics; Academic Press: New York, NY, USA, 1989.

- Baek, E.G.; Brock, W.A. A General Test for Nonlinear Granger Causality: Bivariate Model; Working Paper; Iowa State University and University of Wisconsin: Madison, WI, USA, 1992.

- Hiemstra, C.; Jones, J.D. Testing for linear and nonlinear Granger causality in the stock price-volume relation. J. Financ. 1994, 49, 1639–1664.

- Diks, C.; Panchenko, V. A new statistic and practical guidelines for nonparametric Granger causality testing. J. Econ. Dyn. Control. 2006, 30, 1647–1669.

- Bell, D.; Kay, J.; Malley, J. A non-parametric approach to non-linear causality testing. Econ. Lett. 1996, 51, 7–18.

- Su, L.; White, H. A nonparametric Hellinger metric test for conditional independence. Econom. Theory 2008, 24, 829–864.

- Bouezmarni, T.; Rombouts, J.V.; Taamouti, A. Nonparametric copula-based test for conditional independence with applications to Granger causality. J. Bus. Econ. Stat. 2012, 30, 275–287.

- Linton, O.; Gozalo, P. Testing conditional independence restrictions. Econom. Rev. 2014, 33, 523–552.

- Su, L.; White, H. Testing conditional independence via empirical likelihood. J. Econom. 2014, 182, 27–44.

- Wang, X.; Hong, Y. Characteristic function based testing for conditional independence: A nonparametric regression approach. Econom. Theory 2018, 34, 815–849.

- Schreiber, T. Measuring information transfer. Phys. Rev. Lett. 2000, 85, 461–464.

- Hlaváčková-Schindler, K.; Paluš, M.; Vejmelka, M.; Bhattacharya, J. Causality detection based on information-theoretic approaches in time series analysis. Phys. Rep. 2007, 441, 1–46.

- Amblard, P.O.; Michel, O.J. The relation between Granger causality and directed information theory: A review. Entropy 2012, 15, 113–143.

- Granger, C.; Lin, J.L. Using the mutual information coefficient to identify lags in nonlinear models. J. Time Ser. Anal. 1994, 15, 371–384.

- Hong, Y.; White, H. Asymptotic distribution theory for nonparametric entropy measures of serial dependence. Econometrica 2005, 73, 837–901.

- Barnett, L.; Bossomaier, T. Transfer entropy as a log-likelihood ratio. Phys. Rev. Lett. 2012, 109, 138105.

- Diks, C.; Fang, H. Transfer Entropy for Nonparametric Granger Causality Detection: An Evaluation of Different Resampling Methods. Entropy 2017, 19, 372.

- Diks, C.; Wolski, M. Nonlinear Granger causality: Guidelines for multivariate analysis. J. Appl. Econom. 2016, 31, 1333–1351.

- Wand, M.P.; Jones, M.C. Kernel Smoothing; Chapman & Hall/CRC: New York, NY, USA, 1994.

- Silverman, B.W. Density Estimation for Statistics and Data Analysis; CRC Press: New York, NY, USA, 1986; Volume 26.

- Skaug, H.J.; Tjøstheim, D. Nonparametric tests of serial independence. In Developments in Time Series Analysis; Rao, T.S., Ed.; Chapman & Hall: London, UK, 1993; pp. 207–209.

- Davidson, R.; MacKinnon, J.G. Graphical methods for investigating the size and power of hypothesis tests. Manch. Sch. 1998, 66, 2–23.

- Shannon, C.E. A mathematical theory of communication. Bell Syst. Tech. J. 1948, 27, 379–423, 623–656.

- Shannon, C.E. Prediction and entropy of printed English. Bell Syst. Tech. J. 1951, 30, 50–64.

- Robinson, P.M. Consistent nonparametric entropy-based testing. Rev. Econ. Stud. 1991, 58, 437–453.

- Kullback, S.; Leibler, R.A. On information and sufficiency. Ann. Math. Stat. 1951, 22, 79–86.

- Granger, C.; Maasoumi, E.; Racine, J. A dependence metric for possibly nonlinear processes. J. Time Ser. Anal. 2004, 25, 649–669.

- Kullback, S. Information Theory and Statistics; Courier Corporation: New York, NY, USA, 1968.

- Kraskov, A.; Stögbauer, H.; Grassberger, P. Estimating mutual information. Phys. Rev. E 2004, 69, 066138.

- Wibral, M.; Pampu, N.; Priesemann, V.; Siebenhühner, F.; Seiwert, H.; Lindner, M.; Lizier, J.T.; Vicente, R. Measuring information-transfer delays. PLoS ONE 2013, 8, e55809.

- Papana, A.; Kyrtsou, C.; Kugiumtzis, D.; Diks, C. Detecting Causality in Non-stationary Time Series Using Partial Symbolic Transfer Entropy: Evidence in Financial Data. Comput. Econ. 2016, 47, 341–365.

- Hansen, B. Uniform convergence rates for kernel estimation with dependent data. Econom. Theory 2008, 24, 726–748.

- Nadaraya, E. On non-parametric estimates of density functions and regression curves. Theory Probab. Appl. 1965, 10, 186–190.

- Schuster, E.F. Estimation of a probability density function and its derivatives. Ann. Math. Stat. 1969, 40, 1187–1195.

- Wied, D.; Weißbach, R. Consistency of the kernel density estimator: A survey. Stat. Pap. 2012, 53, 1–21.

- Denker, M.; Keller, G. On U-statistics and v. Mises’ statistics for weakly dependent processes. Zeitschrift für Wahrscheinlichkeitstheorie und Verwandte Gebiete 1983, 64, 505–522.

- Pompe, B. Measuring statistical dependences in a time series. J. Stat. Phys. 1993, 73, 587–610.

- Karpoff, J.M. The relation between price changes and trading volume: A survey. J. Financ. Quant. Anal. 1987, 22, 109–126.

- Gallant, A.R.; Rossi, P.E.; Tauchen, G. Stock prices and volume. Rev. Financ. Stud. 1992, 5, 199–242.

- Gervais, S.; Kaniel, R.; Mingelgrin, D.H. The high-volume return premium. J. Financ. 2001, 56, 877–919.

- Podobnik, B.; Horvatic, D.; Petersen, A.M.; Stanley, H.E. Cross-correlations between volume change and price change. Proc. Natl. Acad. Sci. USA 2009, 106, 22079–22084.

- Schwarz, G. Estimating the dimension of a model. Ann. Stat. 1978, 6, 461–464.

- Hansen, B.E. Autoregressive conditional density estimation. Int. Econ. Rev. 1994, 35, 705–730.

- Andersen, T.G.; Bollerslev, T. Answering the skeptics: Yes, standard volatility models do provide accurate forecasts. Int. Econ. Rev. 1998, 39, 885–905.

- Diebold, F.X.; Hahn, J.; Tay, A.S. Multivariate density forecast evaluation and calibration in financial risk management: High-frequency returns on foreign exchange. Rev. Econ. Stat. 1999, 81, 661–673.

- Bollerslev, T.; Domowitz, I. Trading patterns and prices in the interbank foreign exchange market. J. Financ. 1993, 48, 1421–1443.

- Diks, C. Nonparametric tests for independence. In Encyclopedia of Complexity and Systems Science; Springer: Berlin, Germany, 2009; pp. 6252–6271.

- Powell, J.L.; Stoker, T.M. Optimal bandwidth choice for density-weighted averages. J. Econom. 1996, 75, 291–316.

- Denker, M.; Keller, G. Rigorous statistical procedures for data from dynamical systems. J. Stat. Phys. 1986, 44, 67–93.

- Hansen, B.E. Lecture notes on nonparametrics. In Lecture Notes; University of Wisconsin: Madison, WI, USA, 2009.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).