Abstract

In 2000, Kennedy and O’Hagan proposed a model for uncertainty quantification that combines data of several levels of sophistication, fidelity, quality, or accuracy, e.g., a coarse and a fine mesh in finite-element simulations. They assumed each level to be describable by a Gaussian process and used low-fidelity simulations to improve inference on costly high-fidelity simulations. Departing from there, we move away from the common non-Bayesian practice of optimization and marginalize the parameters instead. Thus, we avoid the awkward logical dilemma of having to choose parameters and of neglecting that choice’s uncertainty. We propagate the parameter uncertainties by averaging the predictions and the prediction uncertainties over all possible parameters. This is done analytically for all but the nonlinear or inseparable kernel function parameters. What is left is a low-dimensional and feasible numerical integral that depends on the choice of kernels, thus allowing for a fully Bayesian treatment. By also quantifying the uncertainties of the parameters themselves, we show that “learning” or optimising those parameters has little meaning when data are scarce, which justifies our mathematical efforts. The recent hype about machine learning has long spilled over to computational engineering but fails to acknowledge that machine learning is a big data problem whereas, in computational engineering, we usually face a little data problem. We devise the fully Bayesian uncertainty quantification method in a notation following the tradition of E.T. Jaynes and find that generalization to an arbitrary number of levels of fidelity, as well as parallelisation, becomes rather easy. We scrutinize the method with mock data and demonstrate its advantages in its natural application, where high-fidelity data are scarce but low-fidelity data are not. We then apply the method to quantify the uncertainties in finite element simulations of impedance cardiography of aortic dissection.
Aortic dissection is a cardiovascular disease that frequently requires immediate surgical treatment and, thus, a fast diagnosis beforehand. While traditional medical imaging techniques such as computed tomography, magnetic resonance tomography, or echocardiography certainly do the job, impedance cardiography is also a standard clinical tool and promises to allow earlier diagnoses as well as to detect patients who would otherwise go undetected for too long.

1. Introduction

While Uncertainty Quantification (UQ) has become a term in its own right in the computational engineering community, Bayesian Probability Theory is not yet widespread there. A comprehensive collection of reviews on the various methods and aspects of UQ from the point of view of the computational engineering and applied mathematics community can be found in Reference [1]. In References [2,3,4], a statistician’s perspective is discussed. The computational effort for performing UQ by brute force is typically prohibitive; thus, surrogate models such as Polynomial Chaos Expansion (PCE) [5,6,7,8] or Gaussian Process (GP) regression [9,10,11,12] are used, the latter of which has recently experienced a renaissance within the machine learning community.

This work is inspired by the article of Kennedy and O’Hagan from 2000 [13]. They performed UQ by making use of a computer simulation with different levels of “fidelity”, “sophistication”, “accuracy”, or “quality”. In other words, a cheap, simplified simulation serves as a surrogate. We will refer to this approach as the Multi-Fidelity scheme (MuFi). The idea of MuFi [14], and of MuFi with GPs specifically [15], has recently attracted renewed attention. In contrast to previously reported MuFi-GPs, we do not learn the parameters and subsequently neglect their uncertainties but explicitly incorporate them in a rigorous manner. We find that this is analytically tractable for all parameters except those that occur nonlinearly or inseparably in the GP covariance.

While UQ in general has fully arrived in the biomedical engineering community [16], the Bayesian approach has not. Biehler et al. [17] were, to the best of the authors’ knowledge, the first to apply a Bayesian MuFi scheme in the context of computational biomechanical UQ. We apply our method to quantify the uncertainties in finite element simulations [18] of Impedance Cardiography (ICG) [19] of Aortic Dissection (AD) [20]. The aorta is the largest blood vessel in the human body. In aortic dissection, the blood flow forces open a tear in a weakened aortic wall, dilates it, and fills the wall itself with blood. This deforms the geometry of the aorta and, obviously, affects blood circulation unfavourably (p. 459, [21]). Aortic dissection is highly dangerous and likely lethal if untreated. Thus, a fast response and, hence, a fast diagnosis are key to the treatment of patients. For diagnosis, physicians use a variety of imaging techniques such as Magnetic Resonance Tomography (MRT), Computed Tomography (CT), and Echocardiography [22]. Echocardiography performed by a trained cardiologist is comparably cheap and fast, yet sound wave propagation might be hindered, e.g., by the rib cage or body fat. In CT and MRT, the radiation fully penetrates the body. Still, they require a trained radiologist and long measurement times, incur high costs, and, in the case of CT, pose radiation risks. Most importantly, these examinations are not performed without a specific reason.

Alternatively, impedance cardiography is rather cheap and simple and, more importantly, available in any clinic and many medical practices. In ICG, one places a pair of electrodes on the thorax (upper body), injects a defined low-amplitude alternating electric current into the body, and measures the voltage drop. The specific resistance of blood is much lower than that of muscle, fat, or bone [23]. Since electric current seeks the path of least resistance, the current predominantly propagates through the aorta rather than through, e.g., the spine. Thus, if the local blood volume changes due to aortic dissection, the impedance signal changes as well. Impedance cardiography could therefore complement existing clinical procedures and detect aortic dissection when medical imaging is not performed, be it due to the absence of suspicion or to the unavailability of the device itself. There are a number of parameters that are well defined but usually neither known precisely nor accessible in the clinical setting, e.g., the size of the aortic dissection. Since a clinical trial is extremely difficult, we resort to a theoretical investigation instead, in which we account for these uncertainties as well.

In Section 2, we develop a Bayesian uncertainty quantification model based on Gaussian processes using multi-fidelity data. We scrutinize the method with mock data in Section 3 and show that learning regression parameters has little meaning when data are scarce. In Section 4, we apply our method to finite element simulations of impedance cardiography of aortic dissection and show that low-fidelity data can indeed decrease high-fidelity uncertainties.

2. Bayesian Multi-Fidelity Scheme

2.1. Statistical Model

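Let $\mathcal{I}$ be the conditional complex. Let $t = 1, \dots, T$ denote the ranked levels of fidelity of a simulation, with level $T$ being the highest fidelity. $\mathbf{z}_t(\mathbf{x})$ is a vector of simulation results of fidelity level $t$ given input vector $\mathbf{x}$. We assume that $\mathbf{z}_t$ is a realisation of a Gaussian Process (GP) with a Markov property of order 1, meaning that level $t$ depends on level $t-1$ only via the following recursive relationship:

$$\mathbf{z}_t(\mathbf{x}) = \rho_{t-1}\,\mathbf{z}_{t-1}(\mathbf{x}) + \boldsymbol{\delta}_t(\mathbf{x}), \qquad (1)$$

with a “difference-GP” $\boldsymbol{\delta}_t$ and a proportionality constant $\rho_{t-1}$. Further, we assume that all information about a level is contained in the data corresponding to the same pivot point at that level and at its previous level. Formally, that is $\operatorname{cov}\{z_t(x), z_{t-1}(x') \mid z_{t-1}(x)\} = 0$ for all $x' \neq x$. The difference-GP shall be defined by the covariance matrix $\sigma_t^2\mathbf{K}_t$ and the mean function $\mathbf{H}_t\boldsymbol{\beta}_t$. $\mathbf{H}_t$ is a matrix of regression functions evaluated at $\mathbf{x}_t$ with size $n_t \times q_t$, where $n_t$ is the length of input vector $\mathbf{x}_t$ and $q_t$ is the expansion power, i.e., the number of regression functions, at level $t$. $\boldsymbol{\beta}_t$ are the coefficients of level $t$’s regression functions, and $\boldsymbol{\theta}_t$ is the set of hyperparameters parametrizing the kernel function $k_t$ that builds $\mathbf{K}_t$. Formally, this is

$$\boldsymbol{\delta}_t(\mathbf{x}_t) \sim \mathcal{N}\bigl(\mathbf{H}_t\boldsymbol{\beta}_t,\ \sigma_t^2\,\mathbf{K}_t\bigr), \qquad (\mathbf{K}_t)_{ij} = k_t(x_i, x_j; \boldsymbol{\theta}_t). \qquad (2)$$

At this point, we have chosen neither the basis functions building the mean function nor the kernel functions building the covariance matrices. Let us subsume the parameters of all levels as $\boldsymbol{\beta} = \{\boldsymbol{\beta}_t\}$, $\boldsymbol{\rho} = \{\rho_{t-1}\}$, $\boldsymbol{\sigma} = \{\sigma_t\}$, and $\boldsymbol{\theta} = \{\boldsymbol{\theta}_t\}$, and the difference-GPs as $\boldsymbol{\delta} = \{\boldsymbol{\delta}_t\}$, with $t = 1, \dots, T$ (and $\rho_{t-1}$ only for $t > 1$). The data shall be $D = \{D_t\}$ with $D_t = \{\mathbf{x}_t, \mathbf{z}_t(\mathbf{x}_t), \mathbf{z}_{t-1}(\mathbf{x}_t)\}$, which comprises the input vector at level $t$, namely $\mathbf{x}_t$, and its corresponding computer code outputs at level $t$, namely $\mathbf{z}_t(\mathbf{x}_t)$, and at the previous level $t-1$, namely $\mathbf{z}_{t-1}(\mathbf{x}_t)$. Further, we require a nested design of input vectors, i.e., $\mathbf{x}_T \subseteq \mathbf{x}_{T-1} \subseteq \dots \subseteq \mathbf{x}_1$. We want to draw conclusions from the predictive posterior probability $p(\mathbf{z}_T(\mathbf{x}) \mid D, \mathcal{I})$ at a set of points $\mathbf{x}$.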

This expression is unwieldy. We will instead deal only with its moments, namely the posterior mean $\langle \mathbf{z}_T(\mathbf{x}) \rangle$ and the posterior covariance $\operatorname{cov}\{\mathbf{z}_T(\mathbf{x})\}$, where the square root of the diagonal of the posterior covariance gives the uncertainty band of the prediction. The moments of $\mathbf{z}_T$ follow from

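We have used the fact that $p(\mathbf{z}_T \mid \boldsymbol{\delta}, \boldsymbol{\rho}, \mathcal{I})$ reduces to Dirac’s delta distribution, since $\mathbf{z}_T$ is uniquely determined by Equation (1) and the knowledge of all difference-GPs, $\boldsymbol{\delta}$. Thus, integration with respect to $\mathbf{z}_T$ merely replaces $\mathbf{z}_T$ by the right-hand side of Equation (1), applied recursively. By construction, we can factor $p(\boldsymbol{\delta} \mid \boldsymbol{\beta}, \boldsymbol{\rho}, \boldsymbol{\sigma}, \boldsymbol{\theta}, D, \mathcal{I}) = \prod_t p(\boldsymbol{\delta}_t \mid \boldsymbol{\beta}_t, \rho_{t-1}, \sigma_t, \boldsymbol{\theta}_t, D_t, \mathcal{I})$. Since $\boldsymbol{\delta}_t$ is assumed to obey a GP, the prior probability of $\boldsymbol{\delta}_t$ is multivariate normal. If the likelihood is Gaussian, then the posterior probability of $\boldsymbol{\delta}_t$, $p(\boldsymbol{\delta}_t \mid \boldsymbol{\beta}_t, \rho_{t-1}, \sigma_t, \boldsymbol{\theta}_t, D_t, \mathcal{I})$, is multivariate normal as well. Integration with respect to $\boldsymbol{\delta}_t$ thus yields the standard result for the posterior mean value (see, e.g., Reference [10]) and amounts to replacing the GP with its posterior mean.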
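Unrolling the recursion makes explicit why $\mathbf{z}_T$ is fixed once all difference-GPs and proportionality constants are known. Writing Equation (1) as $\mathbf{z}_t = \rho_{t-1}\mathbf{z}_{t-1} + \boldsymbol{\delta}_t$ with $\mathbf{z}_1 = \boldsymbol{\delta}_1$, and using the convention that an empty product equals one, a short derivation reads:

```latex
\begin{aligned}
\mathbf{z}_T(\mathbf{x})
  &= \rho_{T-1}\,\mathbf{z}_{T-1}(\mathbf{x}) + \boldsymbol{\delta}_T(\mathbf{x}) \\
  &= \rho_{T-1}\rho_{T-2}\,\mathbf{z}_{T-2}(\mathbf{x})
     + \rho_{T-1}\,\boldsymbol{\delta}_{T-1}(\mathbf{x}) + \boldsymbol{\delta}_T(\mathbf{x}) \\
  &= \sum_{t=1}^{T} \Bigl(\prod_{j=t}^{T-1} \rho_j\Bigr)\, \boldsymbol{\delta}_t(\mathbf{x}),
  \qquad \mathbf{z}_1 \equiv \boldsymbol{\delta}_1 .
\end{aligned}
```

Each level thus enters the highest-fidelity prediction only through its own difference-GP, weighted by the product of the proportionality constants of the levels above it.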

2.2. Prediction and Its Uncertainty

To compute posterior mean and covariance, we are thus left with integration with respect to the hyperparameters. This is done analytically for all parameters ($\boldsymbol{\beta}_t$, $\rho_{t-1}$, $\sigma_t$) except the parameters of the kernel function, $\boldsymbol{\theta}_t$, since those usually occur nonlinearly in the kernel function. We are then left with numerical integration of expectations and covariances, both conditioned on $\boldsymbol{\theta}$. We assume flat priors for $\boldsymbol{\beta}_t$ and $\rho_{t-1}$ and Jeffreys’ prior for $\sigma_t$, i.e., $p(\sigma_t \mid \mathcal{I}) \propto 1/\sigma_t$. The prior of $\boldsymbol{\theta}_t$ can be chosen only after the covariance kernel has been chosen. Let $\langle \cdot \rangle_{\boldsymbol{\theta}}$ denote the expectation value conditioned on $\boldsymbol{\theta}$; for ease of notation, we will write $\langle \cdot \rangle$ only. The technicalities are detailed in Appendix A, and the result is as follows:

where is determined from the data and Equation (2), is the complete Gamma-function, is the matrix determinant, and the conditional expectations of the hyperparameters are

where for and 0 otherwise and the abbreviations are

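$n_t$ is the number of pivot points in input vector $\mathbf{x}_t$, and $q_t$ is the expansion order, i.e., the number of regression functions, of level $t$’s mean function. For $t = 1$, which has no previous level, the corresponding level-0 quantities need to be defined separately. Quite importantly, we find the following requirement: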

since otherwise the second moments of $\sigma_t$ are not defined. The numerical evaluation of this result merely involves a couple of matrix operations. The only inputs are the data, the regression functions, and the covariance kernels. No parameters need to be tuned.
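To make the analytic part concrete, the following is a minimal sketch for a single level without a lower level (no $\rho$), assuming $\mathbf{d} \sim \mathcal{N}(\mathbf{H}\boldsymbol{\beta}, \sigma^2\mathbf{K})$, a flat prior on $\boldsymbol{\beta}$, and Jeffreys’ prior on $\sigma$. It is our own illustration, not the authors’ Matlab code, and the function name `marginalize_linear` is hypothetical. For fixed kernel parameters, it returns the generalized-least-squares estimate $\hat{\boldsymbol{\beta}}$, the residual quadratic form $S$, the posterior mean $\langle\sigma^2\rangle = S/(n-q-2)$, and the logarithm of the marginal likelihood of $\boldsymbol{\theta}$ up to a $\boldsymbol{\theta}$-independent constant, i.e., the quantity that remains to be integrated numerically.

```python
import math
import numpy as np

def marginalize_linear(d, H, K):
    """Analytically integrate out beta (flat prior) and sigma (Jeffreys' prior).

    Sketch for one level without a lower level (no rho), assuming
    d ~ N(H beta, sigma^2 K) with K fixed by the kernel parameters theta.
    d: (n,) data, H: (n, q) regression matrix, K: (n, n) kernel matrix.
    Returns beta_hat, S, <sigma^2>, and log p(d | theta) up to a constant
    that does not depend on theta.
    """
    n, q = H.shape
    nu = n - q  # exponent of sigma left after integrating out beta
    if nu <= 2:
        raise ValueError("need n - q > 2 for <sigma^2> to exist")
    L = np.linalg.cholesky(K)
    # Whiten data and regressors with the Cholesky factor: L^{-1} d, L^{-1} H
    dw = np.linalg.solve(L, d)
    Hw = np.linalg.solve(L, H)
    A = Hw.T @ Hw                              # H^T K^{-1} H
    beta_hat = np.linalg.solve(A, Hw.T @ dw)   # GLS estimate = posterior mean of beta
    r = dw - Hw @ beta_hat
    S = float(r @ r)                           # residual quadratic form
    sigma2_mean = S / (nu - 2)                 # <sigma^2> from the Gamma integral
    logdet_K = 2.0 * np.sum(np.log(np.diag(L)))
    _, logdet_A = np.linalg.slogdet(A)
    log_marg = (-0.5 * logdet_K - 0.5 * logdet_A
                + math.lgamma(nu / 2) - 0.5 * nu * math.log(S))
    return beta_hat, S, sigma2_mean, log_marg
```

Evaluating `log_marg` on a grid of kernel parameters and adding a log prior gives the unnormalized $\boldsymbol{\theta}$ posterior. For $t > 1$, the additional integration over $\rho_{t-1}$ lowers `nu` by one (not shown here).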

3. Algorithm and Mock Data Scrutiny

We test the method for the special case of two levels ($T = 2$). Then, the posterior mean and covariance are simply

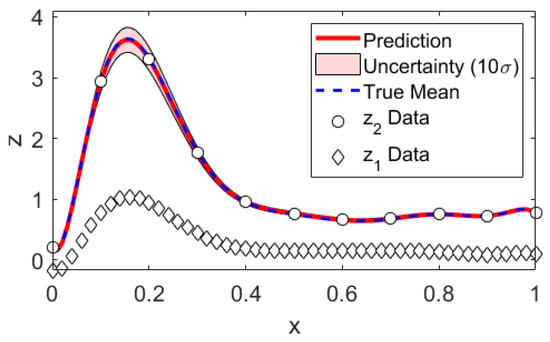

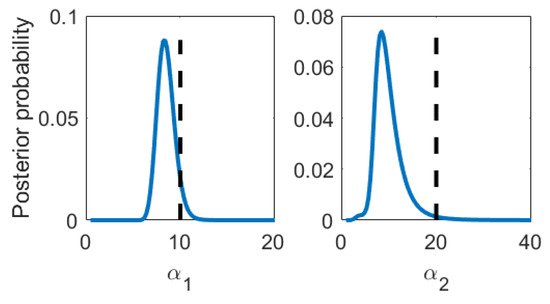

We generate mock data according to Equations (1) and (2) and compare the data analysis results to the underlying truth. As mean function bases, we have chosen the Legendre polynomials up to orders 10 and 4 for Levels 1 and 2, respectively. This is convenient since this basis is both orthogonal and normalized on $[-1, 1]$ already, and the map onto the desired domain is a trivial affine transformation. The covariance kernel was chosen to be the squared exponential kernel, whose parameters $\theta_1$ and $\theta_2$ were defined as the inverse of the correlation length squared. This choice was inspired by the typical form of signals encountered in impedance cardiography, which we discuss further in Section 4. The data set consisted of one sample drawn per level; see Figure 2. We chose Jeffreys’ prior for $\theta_t$. The integration bounds can be read from Figure 1, and an integration grid of 100 × 100 equally sized volumes turned out to be well converged. As an intermediate result, we compare the multi-fidelity estimates to the true parameter values in Table 1. The (conditional) posterior probabilities of the linear parameters are Gaussian. Since those of $\theta_1$ and $\theta_2$ are not and thus cannot be reasonably well described by mean and variance only, we additionally show them in Figure 1. The predictions and prediction uncertainties are compared to the true mean in Figure 2. We find that both the parameter estimates and the predictions statistically match the truth within their uncertainties. The mean function parameter uncertainties ($\boldsymbol{\beta}_t$ in Table 1) clearly illustrate that learning parameters by optimization has little meaning when data are scarce. As the data set grows, the posterior will contract to the maximum likelihood solution. Still, and luckily, the prediction uncertainties remain low because the mean function parameter uncertainties do not appear in the prediction uncertainties directly. We emphasize that our proposed method is naturally suited to little data problems.
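A minimal sketch of how such two-level mock data can be drawn from Equations (1) and (2), under our own assumptions: the squared exponential kernel is taken as $k(x, x') = \exp(-\theta (x - x')^2)$ with $\theta$ the inverse squared correlation length, the domain is $[-1, 1]$, and all parameter values below are placeholders rather than the values of Table 1. The nested design is realized by using every second level-1 input as a level-2 input, which gives 11 level-2 points. The helper names `se_kernel` and `draw_level` are hypothetical.

```python
import numpy as np
from numpy.polynomial import legendre

def se_kernel(x1, x2, theta):
    # Squared exponential kernel, theta = 1 / (correlation length)^2 (assumed form)
    return np.exp(-theta * (x1[:, None] - x2[None, :]) ** 2)

def draw_level(x, beta, sigma, theta, rng, jitter=1e-8):
    """Draw delta_t(x) ~ N(H beta, sigma^2 K) with a Legendre mean function."""
    H = legendre.legvander(x, len(beta) - 1)   # (n, q) Legendre basis on [-1, 1]
    K = se_kernel(x, x, theta) + jitter * np.eye(len(x))
    return H @ beta + sigma * np.linalg.cholesky(K) @ rng.standard_normal(len(x))

rng = np.random.default_rng(0)
x1 = np.linspace(-1.0, 1.0, 21)   # level-1 (low-fidelity) inputs
x2 = x1[::2]                      # nested design: x2 is a subset of x1 (11 points)

# Placeholder parameters: Legendre orders 10 (level 1) and 4 (level 2)
beta1, beta2 = rng.standard_normal(11), rng.standard_normal(5)
rho1 = 0.8

z1 = draw_level(x1, beta1, sigma=0.5, theta=20.0, rng=rng)    # z_1 = delta_1
delta2 = draw_level(x2, beta2, sigma=0.1, theta=5.0, rng=rng)
z2 = rho1 * z1[::2] + delta2                                   # Equation (1)
```

Because the truth (`beta1`, `beta2`, `rho1`, and the kernel parameters) is known, the analysis of `z1` and `z2` can be compared against it directly.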

Figure 2.

Mock data analysis: Prediction. Note that the uncertainties have been multiplied by a factor of 10 for illustrative purposes.

Figure 1.

Mock data analysis: Posterior probability density functions of the nonlinear kernel parameters $\theta_1$ and $\theta_2$. Black dashed line: true value.

Table 1.

Mock data analysis: Comparison of the hyperparameter estimates with their true values.

Since we only have 11 data points on level 2, the expansion order of the mean function is limited to a maximum of 7 according to Equation (4d). Unsurprisingly, the solution rapidly worsens as we approach this constraint and breaks down entirely as we reach it, because the posteriors become inconclusive. This is exactly where the strength of our multi-fidelity approach lies. We can choose a high-order mean function on a level where data are abundant and a low-order mean function on the level we are actually interested in but where data are scarce. The key is thus that the difference between the levels can be modeled by a low-order mean function.
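The bound can be traced with a short power count in $\sigma_t$. As a sketch under our own assumptions (Gaussian likelihood with covariance $\sigma_t^2\mathbf{K}_t$, flat priors on the $q_t$ components of $\boldsymbol{\beta}_t$ and, for $t > 1$, on $\rho_{t-1}$, Jeffreys’ prior on $\sigma_t$, and $S_t$ the residual quadratic form left after these integrations), each flat-prior Gaussian integral contributes one power of $\sigma_t$, and $[t>1]$ equals 1 for $t > 1$ and 0 otherwise. This is our reconstruction and need not match the exact form of Equation (4d):

```latex
\begin{aligned}
p(\sigma_t \mid \boldsymbol{\theta}_t, D_t, \mathcal{I})
  &\propto \underbrace{\sigma_t^{-n_t}}_{\text{likelihood}}\,
           \underbrace{\sigma_t^{\,q_t}}_{\boldsymbol{\beta}_t}\,
           \underbrace{\sigma_t^{\,[t>1]}}_{\rho_{t-1}}\,
           \underbrace{\sigma_t^{-1}}_{\text{Jeffreys}}
           \exp\!\Bigl(-\frac{S_t}{2\sigma_t^2}\Bigr)
   = \sigma_t^{-(\nu_t+1)} \exp\!\Bigl(-\frac{S_t}{2\sigma_t^2}\Bigr),
   \qquad \nu_t = n_t - q_t - [t>1], \\[4pt]
\langle \sigma_t^2 \rangle
  &= \frac{S_t}{2}\,
     \frac{\Gamma\bigl(\tfrac{\nu_t}{2} - 1\bigr)}{\Gamma\bigl(\tfrac{\nu_t}{2}\bigr)}
   = \frac{S_t}{\nu_t - 2},
   \qquad \text{finite only if } \nu_t > 2 .
\end{aligned}
```

With $n_2 = 11$ and $t = 2$, the condition $\nu_2 = 11 - q_2 - 1 > 2$ gives $q_2 \le 7$, in line with the maximum of 7 regression functions quoted above.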

For the sake of completeness, we report the converged log-evidence to be . In real-life applications of the method, one should and could compare different choices of mean function expansions and covariance kernel functions by Bayesian model comparison [24], i.e., by computing each choice’s evidence. Let $m_t$ be the number of hyperparameters in kernel function $k_t$. Since $p(D \mid \mathcal{I})$ factorizes over the levels, the integrals are $m_t$-dimensional each rather than one single integral of dimension $\sum_t m_t$, which makes the computation of the evidence relatively easy. In our case, numerical Riemann integration was good enough. When choosing more sophisticated kernel functions with more hyperparameters, one might need to use statistical integration methods such as nested sampling [25], which conveniently and automatically yields the evidence as well.
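Because the evidence factorizes over levels, each level only requires an integral over its own kernel parameters. A minimal sketch of the Riemann-sum evaluation in log space, assuming one kernel parameter per level, a prior normalized on the grid range, and a user-supplied callable `log_marginal(theta)` returning $\ln p(D_t \mid \theta_t, \mathcal{I})$ (a hypothetical name; for instance, the analytic expression left after integrating out $\boldsymbol{\beta}_t$, $\rho_{t-1}$, and $\sigma_t$):

```python
import numpy as np

def log_evidence_1d(log_marginal, theta_grid, log_prior):
    """Riemann-sum approximation of log of the integral of p(D_t|theta) p(theta).

    log_marginal: callable theta -> log p(D_t | theta, I)
    theta_grid:   equally spaced grid covering the posterior support
    log_prior:    callable theta -> log p(theta | I), normalized on the grid range
    """
    dtheta = theta_grid[1] - theta_grid[0]
    log_terms = np.array([log_marginal(th) + log_prior(th) for th in theta_grid])
    # Log-sum-exp avoids underflow of the (typically tiny) integrand values
    c = log_terms.max()
    return c + np.log(np.sum(np.exp(log_terms - c)) * dtheta)

def total_log_evidence(per_level_log_evidences):
    # p(D | I) factorizes over the levels, so the log-evidences simply add up
    return float(np.sum(per_level_log_evidences))
```

Working with logarithms throughout matters in practice, since marginal likelihoods of even moderately sized data sets easily underflow double precision.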

For the sake of numerical stability, it is advisable to rescale the data. Further, one might want to improve the condition numbers of the prior covariance matrices, $\mathbf{K}_t$, by adding a small term proportional to the identity matrix, where the proportionality constant should be several orders of magnitude smaller than the diagonal entries of $\mathbf{K}_t$.
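A small numerical illustration of this advice, assuming a squared exponential kernel matrix with unit diagonal on an equally spaced grid; the grid, the kernel parameter, and the jitter value are arbitrary choices for the example:

```python
import numpy as np

x = np.linspace(0.0, 1.0, 50)
theta = 50.0   # inverse squared correlation length (illustrative)
K = np.exp(-theta * (x[:, None] - x[None, :]) ** 2)   # unit diagonal

eps = 1e-8     # several orders of magnitude below the diagonal entries (= 1)
K_jit = K + eps * np.eye(len(x))

print(f"cond(K)         = {np.linalg.cond(K):.2e}")
print(f"cond(K + eps*I) = {np.linalg.cond(K_jit):.2e}")
np.linalg.cholesky(K_jit)   # succeeds; the Cholesky factorization of K itself may fail
```

The jitter bounds the smallest eigenvalue from below by `eps`, so the condition number cannot exceed roughly the largest eigenvalue divided by `eps`, while the covariance itself is changed only negligibly.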

Finally, we would like to point out that Equation (4) suggests a trivial parallelisation over the code levels. This is not easily recognizable in the presentation of Kennedy and O’Hagan [13] but had already been found by Le Gratiet and Garnier [26].

The algorithm, implemented in Matlab (R2019a), is available at https://github.com/Sranf/Bayesian-MuFi-GP.git.

4. Application to Finite Element Simulations of Impedance Cardiography of Aortic Dissection

In this section, we quantify the uncertainties of real simulation data. We compare the uncertainties of our Bayesian MuFi-GP with those of a standard Bayesian GP that neglects the additional LoFi data, that is, the special case of a single level ($T = 1$) in Equation (4). We have described the physical and physiological model in our previous work [20,27] but restate a brief summary here for the reader’s convenience.

We solve Laplace’s equation:

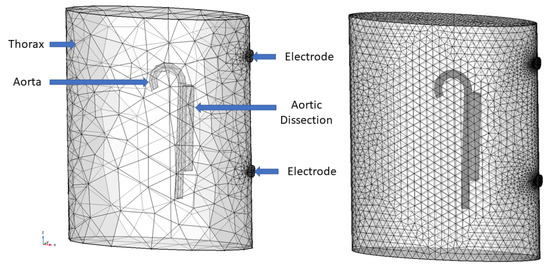

with finite elements on the geometry depicted in Figure 3, where $V$ is the electric potential, $\sigma$ is the electrical conductivity (not to be confused with the regression parameter in the GP kernel), $\omega$ is the angular current frequency, $\varepsilon$ is the permittivity, and $i$ is the imaginary unit. We imposed Neumann boundary conditions, prescribing the inflowing current on the top electrode and the outflowing current on the bottom electrode, where the surface integral of the current was held constant at 4 mA; air was assumed to be perfectly insulating. The current had a frequency of 100 kHz. We considered one cardiac cycle spanning one second. The dynamics were modelled via a time-dependent radius of the aorta and its dissection, which arises from pressure waves in a pulsatile flow. Further, the blood conductivity is parametrized in time via its dependence on flow velocity. In the dissected aorta, we assume the flow to be stagnant [20,28]. The voltage drop is then measured from just below the top electrode to just above the bottom electrode. The impedance is the ratio of voltage to current, and the admittance is the inverse of the impedance. We used Comsol Multiphysics for the modelling [29].
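The last two definitions amount to simple complex (phasor) arithmetic. A minimal sketch using the 4 mA excitation stated above; the voltage phasor is a hypothetical placeholder, not a simulation result:

```python
import cmath

I = 4e-3                              # injected current amplitude in A (4 mA)
U = 0.12 * cmath.exp(-1j * 0.05)      # hypothetical voltage phasor in V

Z = U / I                             # impedance in Ohm
Y = 1 / Z                             # admittance in 1/Ohm
print(f"|Z| = {abs(Z):.2f} Ohm, |Y| = {abs(Y):.5f} 1/Ohm")
```

Since the current amplitude is held fixed, the admittance magnitude is simply inversely proportional to the measured voltage amplitude.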

Figure 3.

Right: mesh-converged HiFi model with 100,000–550,000 degrees of freedom. Left: LoFi model with 9000–15,000 degrees of freedom, with labels of the geometrical objects. Adapted from Reference [27].

For the uncertainty quantification, we chose to expand the mean functions in Legendre polynomials up to orders 8 and 2 for HiFi and LoFi, respectively. We chose the squared exponential kernel for both covariance matrices. In principle, one should compute the evidence for a number of plausible choices and choose the one with the most evidence. For the mean function, that would most simply be different expansion orders, while the covariance kernel could be tailored to the PDE at hand to enforce physical behaviour, as suggested in References [30,31].

For uncertain parameters, we consider the radius of the dissected aorta and perform a number of simulations with sensible values within the physiological and physical range, i.e., – mm. The LoFi data set consisted of 24 time series, each with 21 pivot points in time. The HiFi data set consisted of 3 time series (5 mm, 11 mm, and 18 mm), each with 11 pivot points in time.

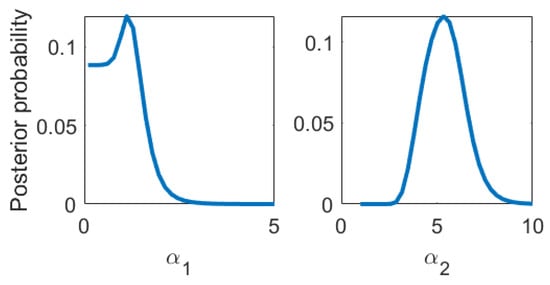

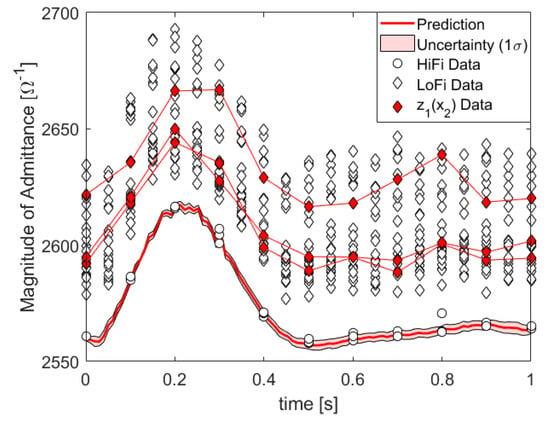

In Figure 4, we show the posterior of the kernel parameters, which turns out to be quite conclusive. In Figure 5, we plot the HiFi predictions and uncertainties enhanced by LoFi data and compare them to the HiFi predictions and uncertainties without LoFi data, as well as to the test data set.

Figure 4.

Posterior probability of the nonlinear kernel parameters.

Figure 5.

Data, prediction, and prediction uncertainty of the absolute value of the admittance, i.e., the inverse impedance in units of inverse Ohm: denotes LoFi data at the same pivot points as HiFi data.

5. Conclusions

We devised a fully Bayesian multi-level Gaussian process model to improve uncertainty quantification of scarce and expensive high-fidelity simulation data by augmenting the data set with low-fidelity simulations. Our proposed method is rigorous and logically consistent, no ad hoc assumptions have been made, and the user is spared the embarrassment of having to tune any parameters. The method was scrutinized with mock data and shown to work with so little data that simple Bayesian Gaussian process regression is not conclusive at all. We applied the method to finite element simulations of impedance cardiography of aortic dissection and quantified the uncertainty due to the unknown size of the aortic dissection. By using meshes of both high fidelity (defined by mesh convergence) and low fidelity, we reduced the uncertainty significantly. We have thus further shown that uncertainties due to geometrical parameters can be described with Gaussian processes on each level of fidelity. With a coarsened mesh, the result is qualitatively but not quantitatively similar. Usually, that is not good enough, and the low-fidelity data are entirely useless to the engineer. Here, we show that this is not necessarily true in the context of uncertainty quantification. Ultimately, we want to diagnose aortic dissection from impedance cardiography signals, i.e., in the parlance of probability theory, we need to compare the evidences of healthy and diseased aortae. An unambiguous judgement will most likely, if at all, be possible only with several electrodes at once.

Author Contributions

Conceptualization, S.R.; methodology, S.R. and W.v.d.L.; software, S.R., W.v.d.L., G.M.M., V.B., and A.R.-K.; formal analysis, S.R.; investigation, S.R.; resources, S.R. and G.M.M.; data curation, S.R., G.M.M., and V.B.; writing—original draft preparation, S.R.; writing—review and editing, W.v.d.L.; visualization, S.R.; supervision, W.v.d.L., K.E., and A.R.-K.; project administration, S.R.; funding acquisition, W.v.d.L. and K.E. All authors contributed to the discussions. All authors have read and agreed to the published version of the manuscript.

Funding

This work was funded by the Lead project “Mechanics, Modeling, and Simulation of Aortic Dissection” of the TU Graz (biomechaorta.tugraz.at).

Acknowledgments

The authors would like to acknowledge the use of HPC resources provided by the ZID of Graz University of Technology.

Conflicts of Interest

The authors declare no conflict of interest.

Sample Availability

The two-level code implemented in Matlab is available on https://github.com/Sranf/Bayesian-MuFi-GP.git. The ICG data is available from the authors upon reasonable request.

Appendix A. Mathematical Proofs

We start from Equation (3) and derive Equation (4) from it. We reintroduce the notation of conditional expectations, where $\langle Q \rangle_P$ is the expectation of $Q$ given some $P$, i.e., the integration is done with respect to all parameters but $P$.

Thus, in the moments of $\mathbf{z}_T$, integration with respect to $\boldsymbol{\delta}_t$ allows us to replace $\boldsymbol{\delta}_t$ by its posterior mean:

Appendix A.1. Parameter Posterior and Parameter Estimates

We need the posterior of the parameters:

The exponent of this Gaussian is a quadratic form in $\boldsymbol{\beta}_t$, and we rewrite it as

From this form, we can read off the (conditional) expectation and covariance of $\boldsymbol{\beta}_t$. We can now integrate with respect to $\boldsymbol{\beta}_t$, assuming a flat prior for $\boldsymbol{\beta}_t$:

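with $n_t$ as the number of pivot points in input vector $\mathbf{x}_t$ and $q_t$ as the number of basis functions of level $t$. Evidently, the expectation of $\boldsymbol{\beta}_t$ is independent of $\sigma_t$, and the covariance of $\boldsymbol{\beta}_t$ is independent of $\rho_{t-1}$. We next turn to integrating with respect to $\rho_{t-1}$. We find $\rho_{t-1}$ only in the residual $\mathbf{z}_t(\mathbf{x}_t) - \rho_{t-1}\mathbf{z}_{t-1}(\mathbf{x}_t)$ and thus, again, as a quadratic form: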

We can now integrate with respect to $\rho_{t-1}$, again assuming a flat prior:

The last random variable we can treat analytically is $\sigma_t$. With Jeffreys’ prior $p(\sigma_t \mid \mathcal{I}) \propto 1/\sigma_t$, we have

With a substitution of the form $u \propto 1/\sigma_t^2$, we find this to be a $\Gamma$-integral:

The moments of $\sigma_t$ are $\Gamma$-integrals as well, and we find

For the above $\Gamma$-integral and the moments of $\sigma_t$ to exist, we require the condition stated in Equation (4d). Note that, in the case of $t = 1$, we have no integration with respect to $\rho$ and thus find one power of $\sigma_1$ less in the above $\Gamma$-integral, i.e., the corresponding exponent must be reduced by one. The constraint is accordingly weakened for $t = 1$, which is due to the absence of the single parameter $\rho$. For most choices of the covariance kernel, we cannot proceed further analytically.

Now, we have everything we need to marginalize $\rho_{t-1}$ and $\sigma_t$ in the conditional expectations of $\boldsymbol{\beta}_t$.

which is now relatively easy.

The expected mean function parameters are simply

The variance, which we read off the quadratic form in $\boldsymbol{\beta}_t$, is as follows:

with as the kth diagonal element of .
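For reference, assuming a Gaussian likelihood with mean $\mathbf{H}_t\boldsymbol{\beta}_t$ and covariance $\sigma_t^2\mathbf{K}_t$ and a flat prior on $\boldsymbol{\beta}_t$, the conditional moments of $\boldsymbol{\beta}_t$ take the familiar generalized-least-squares form. Writing $\mathbf{d}_t = \mathbf{z}_t(\mathbf{x}_t) - \rho_{t-1}\mathbf{z}_{t-1}(\mathbf{x}_t)$ (our notation), a sketch reads:

```latex
\begin{aligned}
\langle \boldsymbol{\beta}_t \rangle_{\rho_{t-1},\,\sigma_t,\,\boldsymbol{\theta}_t}
  &= \bigl(\mathbf{H}_t^{\mathsf T}\mathbf{K}_t^{-1}\mathbf{H}_t\bigr)^{-1}
     \mathbf{H}_t^{\mathsf T}\mathbf{K}_t^{-1}\,\mathbf{d}_t ,
  \qquad \mathbf{d}_t = \mathbf{z}_t(\mathbf{x}_t) - \rho_{t-1}\,\mathbf{z}_{t-1}(\mathbf{x}_t), \\
\operatorname{cov}(\boldsymbol{\beta}_t)_{\rho_{t-1},\,\sigma_t,\,\boldsymbol{\theta}_t}
  &= \sigma_t^2\,\bigl(\mathbf{H}_t^{\mathsf T}\mathbf{K}_t^{-1}\mathbf{H}_t\bigr)^{-1} .
\end{aligned}
```

The mean contains no $\sigma_t$ and the covariance no $\rho_{t-1}$. Since the mean is linear in $\rho_{t-1}$, the residual left after integrating out $\boldsymbol{\beta}_t$ is quadratic in $\rho_{t-1}$, which is why the subsequent $\rho_{t-1}$ integral is Gaussian as well; the variance of the $k$th coefficient is the $k$th diagonal element of the covariance matrix.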

Appendix A.2. Predictive Mean

We rewrite such that

The first term, , assumes a rather simple form and only depends on and . Since we found previously, and each occurs linearly. We find

The second term is rather trivial.

We have thus shown the predictive mean in Equation (4).

Appendix A.3. Predictive Covariance

We easily find

Due to the assumption of independence of the levels, or rather of each level’s parameters, the cross-terms mixing different levels vanish in the expectation value, and it suffices to reduce the double sum to a single sum. Further, we can factor the expectation of the product of the proportionality constants and the expectation of the sum.

The first factor on the right-hand side can be expressed via the GP’s posterior covariance and mean:

where

is the GPs’ posterior covariance, and the predictive mean, which we already know, is

We have thus shown everything we claimed.

References

1. Ghanem, R.G.; Owhadi, H.; Higdon, D. Handbook of Uncertainty Quantification; Springer: Basel, Switzerland, 2017.
2. Haylock, R. Bayesian Inference about Outputs of Computationally Expensive Algorithms with Uncertainty on the Inputs. Ph.D. Thesis, University of Nottingham, Nottingham, UK, May 1997.
3. O’Hagan, A.; Kennedy, M.C.; Oakley, J.E. Uncertainty Analysis and Other Inference Tools for Complex Computer Codes. 1999. Available online: https://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.51.446&rep=rep1&type=pdf (accessed on 26 December 2019).
4. O’Hagan, A. Bayesian analysis of computer code outputs: A tutorial. Reliab. Eng. Syst. Saf. 2006, 91, 1290–1300.
5. Wiener, N. The Homogeneous Chaos. Am. J. Math. 1938, 60, 897–936.
6. Ghanem, R.G.; Spanos, P.D. Stochastic Finite Elements: A Spectral Approach; Springer: Basel, Switzerland, 1991.
7. Xiu, D.; Karniadakis, G.E. The Wiener-Askey polynomial chaos for stochastic differential equations. SIAM J. Sci. Comput. 2005, 27, 1118–1139.
8. O’Hagan, A. Polynomial Chaos: A Tutorial and Critique from a Statistician’s Perspective. Available online: http://tonyohagan.co.uk/academic/pdf/Polynomial-chaos.pdf (accessed on 25 June 2019).
9. O’Hagan, A. Curve Fitting and Optimal Design for Prediction. J. R. Stat. Soc. Ser. B Methodol. 1978, 40, 1–42.
10. Rasmussen, C.E.; Williams, C.K. Gaussian Processes for Machine Learning; The MIT Press: Cambridge, MA, USA, 2006.
11. Bishop, C. Neural Networks for Pattern Recognition; Oxford University Press: Oxford, UK, 1996.
12. MacKay, D.J. Information Theory, Inference, and Learning Algorithms; Cambridge University Press: Cambridge, UK, 2003.
13. Kennedy, M.C.; O’Hagan, A. Predicting the output from a complex computer code when fast approximations are available. Biometrika 2000, 87, 1–13.
14. Koutsourelakis, P.S. Accurate Uncertainty Quantification using inaccurate Computational Models. SIAM J. Sci. Comput. 2009, 31, 3274–3300.
15. Perdikaris, P.; Raissi, M.; Damianou, A.; Lawrence, N.D.; Karniadakis, G.E. Nonlinear information fusion algorithms for data-efficient multi-fidelity modelling. Proc. R. Soc. A Math. Phys. Eng. Sci. 2017, 473, 20160751.
16. Eck, V.G.; Donders, W.P.; Sturdy, J.; Feinberg, J.; Delhaas, T.; Hellevik, L.R.; Huberts, W. A guide to uncertainty quantification and sensitivity analysis for cardiovascular applications. Int. J. Numer. Methods Biomed. Eng. 2016, 32, e02755.
17. Biehler, J.; Gee, M.W.; Wall, W.A. Towards efficient uncertainty quantification in complex and large-scale biomechanical problems based on a Bayesian multi-fidelity scheme. Biomech. Model. Mechanobiol. 2015, 14, 489–513.
18. Zienkiewicz, O.C.; Taylor, R.L.; Zhu, J.Z. The Finite Element Method: Its Basis and Fundamentals; Elsevier: Amsterdam, The Netherlands, 1967.
19. Miller, J.C.; Horvath, S.M. Impedance Cardiography. Psychophysiology 1978, 15, 80–91.
20. Reinbacher-Köstinger, A.; Badeli, V.; Biro, O.; Magele, C. Numerical Simulation of Conductivity Changes in the Human Thorax Caused by Aortic Dissection. IEEE Trans. Magn. 2019, 55, 1–4.
21. Humphrey, J.D. Cardiovascular Solid Mechanics; Springer: Basel, Switzerland, 2002; p. 758.
22. Khan, I.A.; Nair, C.K. Clinical, diagnostic, and management perspectives of aortic dissection. Chest 2002, 122, 311–328.
23. Gabriel, C.; Gabriel, S.; Corthout, E.C. The dielectric properties of biological tissues: I. Literature survey. Phys. Med. Biol. 1996, 41, 2231–2249.
24. Sivia, D.; Skilling, J. Data Analysis: A Bayesian Tutorial; Oxford University Press: Oxford, UK, 2006.
25. Skilling, J. Nested sampling for general Bayesian computation. Bayesian Anal. 2006, 1, 833–859.
26. Le Gratiet, L.; Garnier, J. Recursive Co-Kriging Model for Design of Computer Experiments With Multiple Levels of Fidelity. Int. J. Uncertain. Quantif. 2014, 4, 365–386.
27. Ranftl, S.; Melito, G.M.; Badeli, V.; Reinbacher-Köstinger, A.; Ellermann, K.; von der Linden, W. On the Diagnosis of Aortic Dissection with Impedance Cardiography: A Bayesian Feasibility Study Framework with Multi-Fidelity Simulation Data. Proceedings 2019, 33, 24.
28. Alastruey, J.; Xiao, N.; Fok, H.; Schaeffter, T.; Figueroa, C.A. On the impact of modelling assumptions in multi-scale, subject-specific models of aortic haemodynamics. J. R. Soc. Interface 2016, 13.
29. COMSOL AB, Stockholm, Sweden. COMSOL Multiphysics Version 5.4. Available online: http://www.comsol.com (accessed on 4 June 2019).
30. Raissi, M.; Perdikaris, P.; Karniadakis, G.E. Inferring solutions of differential equations using noisy multi-fidelity data. J. Comput. Phys. 2017, 335, 736–746.
31. Albert, C.G. Gaussian processes for data fulfilling linear differential equations. Proceedings 2019, 33, 5.

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).