Abstract

Computer-mediated-communication (CMC) research suggests that unembodied media can surpass in-person communication due to their utility to bypass the nonverbal components of verbal communication such as physical presence and facial expressions. However, recent results on communicative humanoids suggest the importance of the physical embodiment of conversational partners. These contradictory findings are strengthened by the fact that almost all of these results are based on the subjective assessments of the behavioural impacts of these systems. To investigate these opposing views of the potential role of the embodiment during communication, we compare the effect of a physically embodied medium that is remotely controlled by a human operator with such unembodied media as telephones and video-chat systems on the frontal brain activity of human subjects, given the pivotal role of this region in social cognition and verbal comprehension. Our results provide evidence that communicating through a physically embodied medium affects the frontal brain activity of humans whose patterns potentially resemble those of in-person communication. These findings argue for the significance of embodiment in naturalistic scenarios of social interaction, such as storytelling and verbal comprehension, and the potential application of brain information as a promising sensory gateway in the characterization of behavioural responses in human-robot interaction.

1. Introduction

Verbal communication is an integral element of mankind’s societal evolution whose intention is signified through such nonverbal means as facial expressions, motions, and the physical presence of communicating partners. Research suggests that a proper communication has a direct positive influence on physical and mental health [1] as well as the quality of learning [2,3]. On the other hand, Internet-based technologies [4] present easy-to-use alternatives for exchanging verbal information without nonverbal signatures. In their seminal work, Reference [5] demonstrated the tendency of individuals to treat computers and people alike in their responses which covered a wide range of social [6] and behavioural domains [7]. In fact, computer-mediated-communication (CMC) research [8] argues that the utility of unembodied media might surpass in-person communication due to its ability to bypass the nonverbal components that are inherent in face-to-face communication [9]. For instance, Joinson [10] noted that the CMC effect increases self-disclosure in individuals in contrast with in-person communication. Along the same direction, Zimmerman [11] concluded that CMC elicits emotionally richer, more relationship-oriented verbal interaction among emotionally disturbed adolescents than in-person communication. A common theme that links these findings is the elimination of the physical presence of participants (e.g., absence of visual communication) which is a significant advantage of CMC. This viewpoint finds further evidence in the growing adaptation of voice assistant agents (e.g., Siri, Cortana, Google Now, Amazon Alexa, etc.), implying individuals tend to undermine the significant influence of the physical embodiment for representing non-verbal information during verbal communication.

However, recent findings in communicative humanoids emphasize the importance of the physical embodiment of conversational partners in human-robot interaction [12]. For instance, Mann et al. [13] suggested that people are more responsive to robots than computer-based healthcare systems. Tele-operated robots (i.e., remotely controlled robotic media) seem to increase the feelings of being together in remote communication. For example, Sakamoto and colleagues [14] found that an anthropomorphic tele-operated robot improved the effect of social tele-presence more than audio- and video-conferencing. Tanaka and colleagues [15] compared the effect of a robotic medium with audio-, video-, and avatar-based media for communication and found that it enhanced the sense of a human presence at the same level as the partner’s live video stream. In addition, applications of robotic media in elderly care and intervention support their physical embodiment as a crucial factor in their effectiveness [16,17]. Broadbent [18] presented a comprehensive review of interactive robotic media.

Although these results imply the potentially positive effect of physical embodiment, almost all of them are based on the subjective assessments of the behavioural impacts of physically embodied media. This severely limits the possibility of drawing a reliable conclusion. In fact, there is a paucity of research on the neurophysiological effect of physical embodiment during verbal communication.

Previous research identified the critical role of the prefrontal cortex (PFC) in social and embodied cognition [19], given its anatomical organization that allows for the integration of information from all five senses [20]. The pivotal role of the medial (m)PFC [21] is emphasized in mediating social interaction as part of the brain’s mentalizing network [22,23], which starts from infancy [24]. In this respect, Takahashi et al. [25] showed that the mPFC modulates responses to the anthropomorphic impressions of interacting agents. Krach et al. [26] concluded that an agent’s human-likeness significantly influences mPFC activity and its corresponding mentalizing network. In fact, Chaminade et al. [27] identified the occurrence of this modulating process in response to both human-human as well as human-robot interactions. These findings argue that PFC responses are rather integrated toward physical embodiment, regardless of the nature (i.e., human, synthetic, or otherwise) of the communicating agent. However, it is crucial to note that some of the findings [25,26,27] are based on the modulation of both, the embodiment (i.e., physically situated medium) and the intelligence (human and synthetic) of their adapted media. Moreover, they mainly focused on the impact of these media in the context of interactive games. As a result, their findings fail to adequately answer more subtle questions about whose appearance is more suitable for such media, given the same level of intelligence.

Research on working memory (WM) [28] also identified PFC activation in a variety of tasks, from mental tasks with high cognitive loads [29] to such social cognition as emotional responses [30] and story comprehension [31,32]. An important implication of these results is the activation of some similar regions in PFC, regardless of the nature of the potential effect under investigation. Therefore, considering the involvement of PFC in verbal communication, as well as social and embodied cognition along with the activation of similar PFC areas in response to both auditory and embodied stimuli, it is plausible to address the utility of these neuroscientific findings to investigate other potential influences of physical embodiments on our brains during verbal communication.

We utilize these findings to study the neurophysiological effect of physical embodiment through analysis of the near-infrared spectroscopy (NIRS) [33] time series of the frontal brain activity of human subjects. We chose NIRS due to its non-invasive operational setup, portability and relative immunity to body movement, compared with other imaging techniques [34]. These advantages provide a tremendous opportunity for the adaptation of NIRS devices in naturalistic experiments such as gaming and human-robot interaction [35,36]. Although NIRS has a weaker signal-to-noise ratio (SNR) than functional magentic resonance imaging (fMRI) [37], research found that the hemodynamic responses captured as blood oxy/deoxy-genation hemoglobin highly correlate with the Blood Oxygen Level Dependent (BOLD) in fMRI [38]. This correlation is unaffected by the nature of cognitive tasks (ibid).

The human brain, like any other healthy physiological system, is an inherently complex system whose dynamics strongly correlate with the productivity of its cognitive functions such as attention and language [39]. Interestingly, an increase in complexity reflects the information content of the dynamics of physiological systems which, in turn, have a direct correspondence to the variational information in their activities [40]. Given these observations, in conjunction with PFC’s pivotal role in processing auditory and embodiment stimuli, we investigate the effect of physical embodiment on inducing potential changes in the variational information of frontal brain activity; that is, its information content or dynamics.

Hypothesis 1.

In comparison with unembodied media, a physically embodied medium induces an increase in the information content of the frontal brain activity of human subjects in response to communicated contents.

We tested the validity of this hypothesis in a storytelling experiment in which senior citizens and adults in their 20s and 30s listened to three-minute stories from Greek mythology about constellations using three different forms of media: an audio speaker, a video-chat system, and a teleoperated robot. face-to-face was our control setting in which we communicated these stories with the participants in-person. These settings enabled us to primarily investigate the effect of physical embodiment while maintaining the same level of conveyed intelligence (i.e., human intelligence). The inclusion of video-chat also allowed us to compare any potential difference between the effect of physical embodiment as opposed to the presence [41]. It is also crucial to note that the aim of the present study was not to analyze the effect of embodiment on the human-medium interaction [42,43,44] and instead focused on verifying whether the embodiment of a medium bore a significant effect on the brain activation in response to verbally communicated content. To this end, we prevented any physical contact between the participants and the media in this study (see Section 5.2, for details).

We used near-infrared spectroscopy (NIRS) to record the frontal brain activity of the participants while they listened to the stories. They answered questionnaires about the difficulty of the narrated stories, their interest in the topics of these stories, and the degree of fatigue caused by listening to them, as well as their perceived feelings of the storyteller’s presence after each of the three types of media at the end of each storytelling session. We used self-assessed responses for three reasons. First, we used the responses to the feelings of the human presence to verify whether the potential influence of the physical embodiment is also reflected in a more immediate cognitive state of the participants. Second, we utilized the other self-assessed responses to justify whether such potential changes are associated with embodiment or whether other physiological factors are also involved, for example, fatigue, lack/loss of interest, and so forth. Last, these self-assessed responses allowed us to compare the viability of the information content of the frontal brain activity of the human subjects as a unique biomarker for the identification of the effect of physical embodiment with such behavioural signals as eye movement.

Matarić [45] adequately notes that today’s robotics impact is not merely on assisting humans perform their physical tasks but facilitating their social interaction in such a broad range of areas as child education [3,12] and elderly care [46,47]. In this regard, Yang et al. [48] consider the social interaction as one of the grand challenges of the robotics for twenty first century with modeling social dynamics, learning social and moral norms, and building a robotic theory of mind (ToM) [49] as three most significant challenges among all. It is apparent that interactive media that can comprehend their human companions’ intentions to respond effectively is the minimum requirement if such interactions and relationships are to last long. We believe that the use of neurophysiological responses such as PFC activity offers a significant complementary information for modeling the human behaviour to take the human-robot interaction research a step closer to achieving some of the missing components of truly interactive intelligent media. For instance, patterns of brain activation can form highly informative neurophysiological feedback that is otherwise hidden, even from the individuals themselves. This presents a substantial contribution to such paradigms as interactive learning in multimodal modeling of the human behaviour [50]. Furthermore, inclusion of the brain can form a stepping stone for rich and usable models of human mental state [48] and eventually a new venue to complement the behavioural- and observational-based study and analysis of a robotic ToM [49] through critical investigation of its implications in human’s neurological responses while interacting with their synthetic companions. Our results contribute to the field of human-robot interaction via providing further insights on the effect of the physical embodiment on human’s brain activation pattern, thereby taking our understandings a step closer to achieving rich and usable models of human mental state [48] and eventually a foundation for study and analysis of a robotic theory of mind [49].

2. Results

2.1. Variational Information of Frontal Brain Activity

2.1.1. Left-Hemispheric PFC

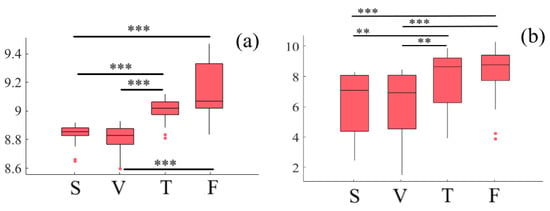

Considering the effect of media and age factors, a two-way mixed-design ANOVA indicates a significant difference with respect to media (p < 0.001, F(1.75, 50.87) = 42.03, = 0.42). However, this difference is non-significant in case of age (p = 0.97, F(1, 29) = 0.002, = 0.0) and the interaction between these factors (p = 0.70, F(1.75, 50.87) = 1.01, = 0.02). Post hoc comparison with modified sequentially rejective Bonferroni correction implies significant differences between speaker and Telenoid (p < 0.001, t(29) = 7.61, M = 8.78, SD = 0.17, M = 9.01, SD = 0.08), speaker and face-to-face (p < 0.001, t(29) = 12.71, M = 9.15, SD = 0.18), video-chat and Telenoid (p < 0.001, t(29) = 8.08, M = 8.76, SD = 0.17), as well as video-chat and face-to-face (p < 0.001, t(29) = 12.28). However, it also suggests non-significant differences between speaker and video-chat (p = 0.26, t(29) = 1.15) as well as Telenoid and face-to-face (p = 0.16, t(29) = 1.42) (Figure 1a).

Figure 1.

Descriptive statistics of post hoc comparison on DE of (a) left-hemispheric and (b) right-hemispheric frontal brain activity of participants in different media settings. Asterisks mark media with significant differences (*: p < 0.05, **: p < 0.01, ***: p < 0.001).

2.1.2. Right-Hemispheric PFC

Considering the effect of media and age factors, a two-way mixed-design ANOVA indicates a significant difference with respect to media (p < 0.001, F(3, 29) = 9.05, = 0.35). However, this difference is non-significant in case of age (p = 0.14, F(1, 29) = 2.32, = 0.02) and the interaction between these factors (p = 0.12, F(3, 87) = 1.99, = 0.002). Post hoc comparison with modified sequentially rejective Bonferroni correction implies significant differences between speaker and Telenoid (p < 0.01, t(29) = 2.60, M = 6.32, SD = 2.05, M = 7.78, SD = 1.90), speaker and face-to-face (p < 0.001, t(29) = 5.02, M = 8.33, SD = 1.61), video-chat and Telenoid (p < 0.01, t(29) = 2.66, M = 6.28, SD = 1.20), as well as video-chat and face-to-face (p < 0.001, t(29) = 4.99). However, it also suggests non-significant differences between speaker and video-chat (p = 0.96, t(29) = 0.06) as well as Telenoid and face-to-face (p = 0.16, t(29) = 1.44) (Figure 1b).

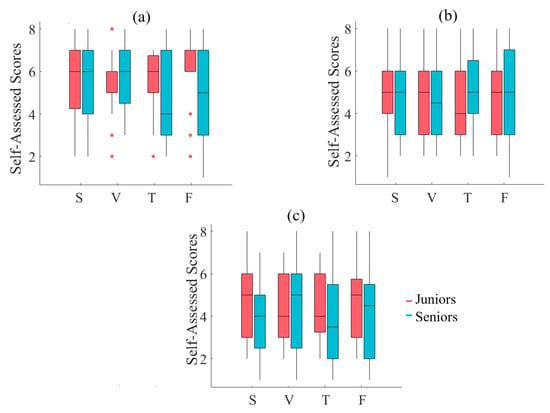

2.2. Self-Assessed Responses to Feeling of Human Presence

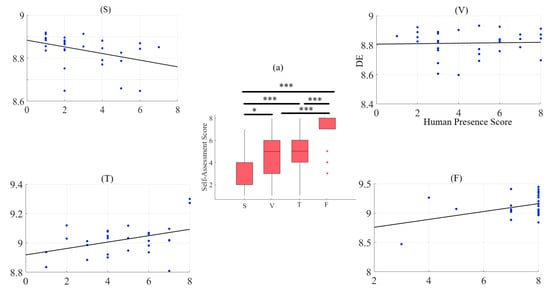

A two-way mixed-design ANOVA with media and age factors implies a significant effect of media (p < 0.001, F(3, 87) = 43.03, = 0.41), with non-significant effects of age (p = 0.42, F(1, 29) = 0.68, = 0.01), and the interaction between these factors (p = 0.18, F(3, 87) = 1.65, = 0.03). Post hoc comparison with modified sequentially rejective Bonferroni correction implies significant differences between speaker and Telenoid (p < 0.001, t(29) = 6.32, M = 1.75, SD = 0.07, M = 4.77, SD = 2.06), speaker and face-to-face (p < 0.001, t(29) = 11.14, M = 7.17, SD = 1.68), speaker and video-chat (p < 0.001, t(29) = 4.74), video-chat and face-to-face (p < 0.001, t(29) = 6.60), and face-to-face and Telenoid (p < 0.001, t(29) = 5.91). However, it suggests a non-significant difference between Telenoid and video-chat (p = 0.60, t(29) = 0.49) (Figure 2a).

Figure 2.

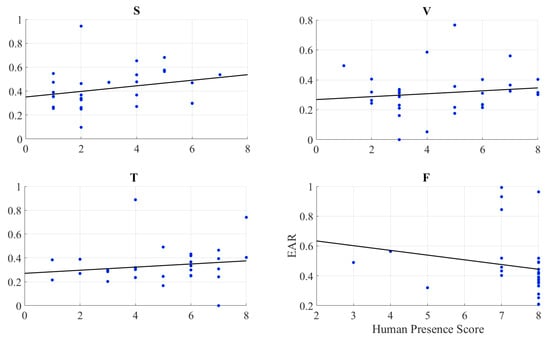

(a) Descriptive statistics of post hoc comparison with Shaffer’s modified sequentially rejective Bonferroni correction of self-assessment of participants to induced sense of human presence in different media settings. Asterisks mark media with significant differences (*: p < 0.05, **: p < 0.01, ***: p < 0.001). Subplots (S), (V), (T), and (F): Pearson correlation between variational information of left-hemispheric frontal brain activity of human subjects and their self-assessed responses to induced feelings of human presence by speaker (S), video-chat (V), Telenoid (T), and face-to-face (F).

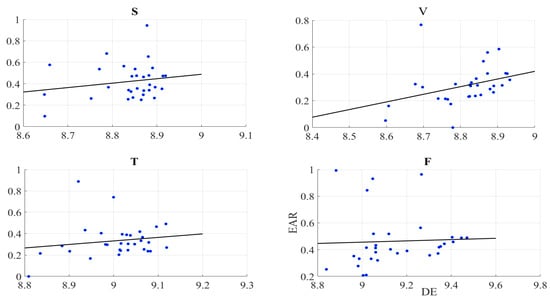

2.2.1. Left-Hemispheric PFC

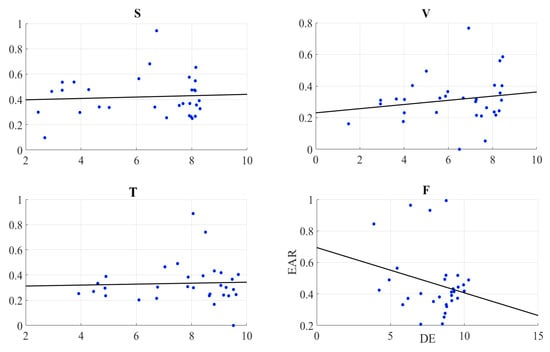

We found no correlation between the information content of the frontal brain activity (i.e., DE) of the participants and the EAR of their gazing behaviour in the speaker (r = 0.19, p = 0.32, M = 0.42, SD = 0.16), video-chat (r = 0.34, p = 0.06, M = 0.31, SD = 0.15), Telenoid (r = 0.17, p = 0.40, M = 0.33, SD = 0.16), and face-to-face (r = 0.04, p = 0.82, M = 0.46, SD = 0.20) (Figure 3).

Figure 3.

Pearson correlation between left-hemispheric frontal brain activity (i.e., DE) of participants and eye aversion ratio (EAR) of their gazing data: speaker (S), video-chat (V), Telenoid (T), and face-to-face (F).

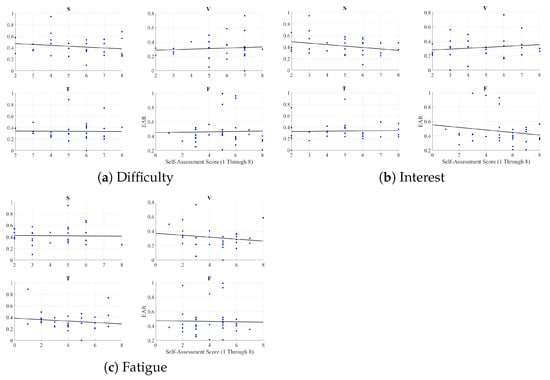

Considering the responses of the participants about the difficulty of the narrated stories, their interest in them, and the fatigue caused by listening to them, a two-way mixed-design ANOVA with media and age factors is non-significant with respect to media, age, and interaction among these factors (all three self-assessments). Furthermore, the Pearson correlation results among these responses and the variational information of the frontal brain activity of the participants are non-significant (SM 2 through SM 6 for analysis details).

2.2.2. Feeling of Human Presence and Left-Hemispheric Information Content

We observed a significant moderate correlation between the variational information of the frontal brain activity of the participants with their self-assessed responses to the feelings of the presence of the remote storyteller in face-to-face (r = 0.40, p < 0.03) and Telenoid (r = 0.40, p < 0.03). Interestingly, we found a significant weak anti-correlation for the speaker medium (r = −0.38, p < 0.05). On the other hand, it is non-significant in the case of video-chat (r = 0.04, p = 0.85) (Figure 2).

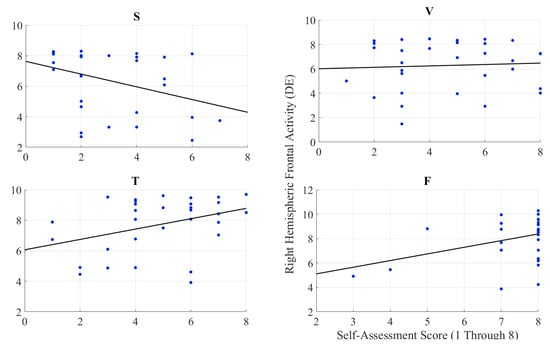

2.2.3. Feeling of Human Presence and Right-Hemispheric Information Content

We observed a significant moderate correlation between the variational information of the right-hemispheric frontal brain activity of the participants with their self-assessed responses to the feelings of the presence of the remote storyteller in face-to-face (r = 0.38, p < 0.05) and Telenoid (r = 0.37, p < 0.05) (Figure 4). Interestingly, we found a significant weak anti-correlation for the speaker medium (r = −0.37, p < 0.05). On the other hand, it is non-significant in the case of video-chat (r = 0.06, p = 0.74).

Figure 4.

Pearson correlation between variational information of right-hemispheric frontal brain activity of human subjects and their self-assessed responses to induced feelings of human presence by speaker (S), video-chat (V), Telenoid (T), and face-to-face (F).

2.3. Gaze Data

We found no correlation between the self-assessed responses of the participants to their feelings of the presence and EAR in speaker (r = 0.26, p = 0.16), video-chat (r = 0.19, p = 0.46), Telenoid (r = 0.16, p = 0.40), as well as face-to-face (r = −0.19, p = 0.30) (Figure 5).

Figure 5.

Pearson correlation between self-assessed responses of participants to feelings of presence and eye aversion ratio (EAR) of their gazing data: speaker (S), video-chat (V), Telenoid (T), and face-to-face (F).

Right-Hemispheric PFC

We found no correlation between the information content of the frontal brain activity (i.e., DE) of the participants and the EAR in speaker (r = 0.07, p = 0.72, M = 0.42, SD = 0.16), video-chat (r = 0.18, p = 0.34, M = 0.31, SD = 0.15), Telenoid (r = 0.04, p = 0.82, M = 0.33, SD = 0.16), and face-to-face (r = −0.25, p = 0.18, M = 0.46, SD = 0.20) (Figure 6).

Figure 6.

Pearson correlation between information content (i.e., DE) of the right-hemispheric frontal brain activity of participants and their eye aversion ratio (EAR) in speaker (S), video-chat (V), Telenoid (T), and face-to-face (F).

3. Discussion

We studied the effect of physical embodiment on the information content of the frontal brain activity of human subjects in a storytelling experiment through comparative analyses of the effect of a physically embodied medium between video-chat and speaker. The face-to-face setting was our control setting. Our motivation for studying the frontal brain activity of the human subjects was due to neuroscientific findings on the association of this region with verbal communication that requires social cognition [19] as well as story comprehension [31,32]. Below, we discuss the implications of different aspects of our results in support of our hypothesis.

3.1. PFC Activation and Physical Embodiment

We observed a significant increase in the variational information of the frontal brain activity by physical embodiment. We also observed that this increase was non-significant in comparison with the in-person setting. On the other hand, the physically embodied medium induced an increase in the variational information of the frontal brain activity of the participants that differed significantly from video-chat and speaker. Additionally, the results of the ANOVA analysis implied a non-significant difference in the magnitude of this increase for both hemispheres. These results implied that face-to-face and the physically embodied medium induced a similar activation effect on the PFC of the participants.

Although the cognitive load on the working memory has a substantial bearing on PFC activation [29], it is unlikely that such an effect had a significant impact on the increased information content of the frontal brain activity of the participants. This is due to the observation that our results indicated no correlations between the variational information of the frontal activity of the participants and their responses to such feelings as difficulty of the stories and fatigue caused by listening to them. On the other hand, findings from social cognition express PFC’s pivotal role in processing the social as well as the anthropomorphic characteristics of the embodiment [19,23]. For instance, these results imply its involvement in modulating responses to the anthropomorphic impressions of interacting agents [25,26], regardless of their nature (i.e., human-human or human-robot interaction) [27]. An important observation about these findings is the modulation of both the embodiment (i.e., appearance of agents) and the intelligence (human and synthetic intelligence) of the partners during communication/interaction. This complicates the possibility of drawing a conclusion about the extent of the embodiment’s impact on the frontal activity of human subjects. Our results complemented these findings by maintaining the same level of conveyed intelligence (i.e., identical stories by the same storyteller) to solely analyze the media’s physical aspects. In such a setting, it is plausible to expect PFC to respond identically, if the media’s influence were negligible. Contrary to this expectation, our results suggest the potential utility of physical embodiment for enhancing PFC responses to physical embodiment.

Our results on the bilateral increase in the information content of the frontal brain activity in participants that are in line with recent findings on the effect of natural speech (at least within the context of storytelling) [32,51,52] point at the contribution of physical embodiment to processes related to social cognition in PFC that respond to physical embodiment and stories [23]. As for PFC roles, Forbes et al. [19] recognized three primary categories within the context of social cognition: social perceptual (processing and identification of the features of others), social categorization (effect of normative and stereotypes on perception and information processing of an individual), and attributional (understanding/interpreting the actions and the behaviours of others) processes. In this respect, the observed increase in PFC’s information content can be plausibly attributed to the perception of such social features as other’s face [19]. Although this might suggest that the human-like facial characteristic of a medium is sufficient for activation of this process, this interpretation is refuted by the lower PFC activation in the video-chat setting even though the participants were looking at the storyteller on the display. This identified the central role of physical embodiment (as opposed to mere presence) in activation of the PFC’s social perceptual process within the context of verbal communication.

In our results, the substantial effect of the situational cues of a medium on the PFC’s social categorization process is reflected in non-significant difference of the induced increase in the information content of the frontal brain activity of the participants for the physically embodied medium and in-person communication. More specifically, it implied the ability of the physical embodiment to persuade the participants to interpret the actual presence of the storyteller and her presence through the physically embodied medium under the same social categorical norm/stereotype. This observation finds further evidence in the significant correlation between the increased information content of the frontal brain activity of the participants and their feelings of the human presence in the case of the face-to-face and the physically embodied medium. On the other hand, the lower PFC’s variational information in the speaker and video-chat settings might indicate their categorization as audio-player and TV by this process.

It is apparent that the perception of the stimuli under different social categorial norms has an immediate impact on their attributed trait characteristics by the human subjects [53,54]. Forbes et al. [19] argued that the involvement of attributional processes in modulating information is pertinent to the mentalization process. Considering the controlled level of intelligence in our study, it is plausible to expect that this process contribute identically to our results. On the other hand, a previous fMRI study [25] identified the change in an individual’s impression of the embodied media with differing appearances which, in turn, indicated the different degree of the contribution of the social perceptual and the social categorization processes to PFC’s attributional process. Therefore, it is plausible to consider the observed increase in the PFC’s information content in response to the physically embodied medium and in-person as an indication of the establishment of a tentative mental association between the physically embodied medium (i.e., the other) and the participants. This, in turn, complements the results of such association processes with respect to the autonomy of the media [25,26,27].

3.2. PFC Activation and Feeling of Human Presence

Further evidence for the potential effect of physical embodiment on the neurophysiological responses of human subjects came from the correlational analysis of the variational information of the frontal brain activity of the participants and their self-assessed responses to their feelings of the presence of the remote storyteller. Although these responses were positively correlated for the in-person and the physically embodied medium, they implied an anti-correlation with respect to the speaker. Interestingly, the self-assessed responses of the participants showed a similar level of correlation in the physically embodied medium and in-person settings. Moreover, the negative correlation in the case of the speaker medium is an interesting observation, considering the total absence of a physical presence in this setting. Similarly, the non-significant correlation of the video-chat emphasized the crucial role of physical embodiment in producing such an effect in brain neurological responses, stressing the physical (e.g., actual presence in face-to-face and physically embodied medium for humanoids) over the visual [55] property of a medium as the primary source for the manifestation of such neurophysiological responses.

This observation accumulated further support in the non-significant responses of our participants to questionnaires regarding their emotional states with respect to the task (i.e., listening to stories), such as the difficulty of the story topic, their interest in them and the fatigue induced by listening to them (SM 2 through SM 6 for details). These results imply that the brain activity of the participants was not affected by their impressions of the conversation and/or the communicated stories.

3.3. PFC Activation and Gazing Patterns

Our results indicated that the gazing behaviour of the participants had no substantial contribution in the observed changes in the variational information of their brain frontal activity. This suggestion reflects that we found no correlation between the eye-movement/aversion behaviour of the participants and the information content of their frontal brain activity. Similarly, our results also indicated that the eye-movement/aversion of the participants and their self-assessed responses to the feelings of the human presence were not correlated. The latter observation suggests that the observed increase in the information content of the frontal brain activity of the participants was uniquely associated with the effect of physical embodiment and that this effect was absent in their behavioural responses.

3.4. PFC Activation and Aging

We observed a bilateral increase in the information content of PFC. Furthermore, our analyses indicated a non-significant effect of the age factor for both hemispheres, implying that PFC activation during physically embodied verbal communication deviated from the dedifferentiation hypothesis [56] that implies the reorganization of the PFC pattern of activation from lateralized to bilateral activation with aging. Our results suggest that PFC activation during the physically embodied verbal communication was potentially more distributed between the two hemispheres, contrary to the left-lateralized activation in mere WM tasks [57]. These results indicate that the influence of physical embodiment on the information content of the frontal brain activity of the participants was not affected by their age. They also provide a quantitative answer to the divided stances on the effect of age on the responses of individuals to physical embodiment based on their behavioural and psychological assessments [58,59]. Seider et al. [60] argued that increasing the level of cognitive activity reduces the risk of such cognitive consequences of aging as dementia. In fact, research on aging and cognition identified a substantial correlation between the increased variational information of brain activity and the dynamics of healthy cognitive functioning [39]. In this respect, our results contribute to a general theory of diminished cognitive performance with aging by focusing on the potential benefit of the adaptation of physically embodied media as a complementary cognitive training strategy to delay and/or prevent such age-related diseases as Alzheimer’s and dementia by inducing an increase in the variational information of the frontal brain activity of senior citizens [17].

4. Concluding Remarks

Although our results indicated the significant role of the physical embodiment during verbal communication, a larger human sample is necessary for an informed conclusion on the utility of these results. It is also necessary to investigate the effect of physical embodiment in a more continuum age group (e.g., kids, adolescents, as well as younger adults between the two age groups included in the present study) and in a more culturally diverse setting.

One crucial difference between our findings and many other previous studies in neuroscience is the choice of summary statistics for the quantification of PFC activation. Whereas previous results are based on averaging-based strategies, the observed bilateral pattern of PFC activation suggests the utility of the variational information of brain activity [61,62,63,64] to address the shortcoming of averaging-based strategies [65,66]. However, further investigation is crucial of the effect of the choice of different summary statistics on the quantitative analyses of the brain activity of humans in basic naturalistic scenarios (e.g., listening to a story narrated by another human) to reevaluate the extent of the underlying emotional and mental reflexes that resulted in such a spread in the frontal brain activity of the individuals in our study. Moreover, such comparative analysis allows a more informed conclusion to be drawn on the observed differences in the frontal activation than with such neuroscientific frameworks as the dedifferentiation hypothesis and its implications on aging brains.

Our results emphasized the potential of physical embodiment for increasing the information content of the frontal brain activity of senior citizens. This observation supports the utility of physically embodied media as a complementary cognitive training strategy for addressing diminished cognitive performance with aging and age-related diseases such as Alzheimer’s and dementia. These observations suggest that the observed effects are potentially inherent to PFC and its responses to social cognition [19]. However, future research with a larger sample of seniors must determine whether the observed effect of the physical embodiment on the information content of their frontal brain activity is sustainable. In addition, we must also verify whether this increase in PFC’s information content improves their cognitive performance.

It is also crucial to compare the effect of physical embodiment with more realistic humanoids as well as other mechanical-looking robots to explore the depths of the potential of the minimalist strategy of the uncanny valley effect. Saygin et al. [67] argued that an android induces a larger suppression effect with a greater predictive coding than humans and humanoid robots. However, their results focused on body movements not conversation or story comprehension scenarios. In this regard, our results indicated the effectiveness of DE for quantification of the PFC responses to minimal anthropomorphic features of a physically embodied medium that induced the feeling of the human presence. An important implication of these results is the potential application of brain information as a promising sensory gateway in the characterization of behavioural responses in human-robot interaction. Therefore, integration of such technologies as NIRS in conjunction with the use of DE in comparative analysis of the effect of different robotic media on PFC activation can determine the utility of DE for evaluation of their design strategy.

In addition, correspondence must be achieved between these responses and communication topics. In particular, future research must determine whether such responses are context-independent or whether their occurrence as well as their degree of variability are affected by the choice of topics. In this respect, our results indicated that DE quantifies the PFC responses of the participants to the story topic in terms of an increase and/or a decrease in information content of this region. Therefore, analysis of the PFC activation in response to the broader topics can determine the ability of DE to quantify their effect on PFC activation which, in turn, allows for modulation of the communicated content through such physically embodied media.

Our study primarily focused on verifying whether the embodiment of a medium bore a significant effect on the brain activation in response to verbally communicated content. Therefore our results, at their present state, are insufficient to allow for drawing informed correspondence between them and the effect of embodiment within the context of social interaction in a broader sense [68]. This limits the utility of our findings for drawing an informed conclusion on different aspects of social interaction (e.g., turn taking (pp. 7–55) [69], gestures and facial expressions [68], effect of the tune and prosody during verbal communication [70], etc.) that might play a relevant and/or more pivotal role in the observed effect of embodiment. For instance, some studies [71] suggested a substantial difference between face-to-face versus mediated contexts due to the effect of the variation in action formation. In the same vein, Rauchbauer et al. [42] compared a human-human interaction (HHI) to human-robot interaction (HRI) and reported that neural markers of mentalizing and social motivation were only present in HHI and not HRI. Therefore, it is important to extend our results to the case of socially interactive and naturalistic scenarios to include fine-grained characterization of verbal and non-verbal behaviours recorded during the interaction to investigate their neural correlates. Such comprehensive analyses are of utmost importance, considering the recent neuroscientific findings that emphasize the fundamental difference between social interaction and social observation [72].

Our results identified the role of physical embodiment in enhancing the PFC activation in response to verbal communication that requires social cognition [19] as well as story comprehension [31]. Moreover, they complemented the findings on its involvement in modulating responses to the anthropomorphic impressions of interacting agents [25,26] by maintaining the same level of conveyed intelligence (i.e., identical stories by the same storyteller). An important factor that demands further scrutiny is the extent of the effect of the varying intelligence in conjunction with the physical embodiment on the dynamics of the observed increase in PFC activation and its information content. As a result, investigation of the effect of these media on the brain activity of human subjects in an interactive setting (e.g., conversations other than listening to a story) is yet another arena for further exploration of the impact of these media and their physical embodiment on our daily lives and well-being.

5. Materials and Methods

5.1. Participants

Our participants consisted of two groups of individuals: seniors (ten females and ten males, 62–80 years old, MEAN = 70.70, SD = 4.62) and juniors (eleven females and four males, 20–33 years old, MEAN = 22.39, SD = 2.82) groups. Such age separation allowed us to investigate the potential effect of age on the neurological and behavioural responses of the participants to different media. Although the age of the participants is crucial, its significance has been overlooked in highly varying conclusions in previous studies on human-robot interaction [58,59].

All participants were free of neurological or psychiatric disorders and had no history of hearing impairment. Since we failed to collect the NIRS time series of the brain activity of four elderly adults due to recording complications, they were excluded from our analyses. We used an employment service centre for older people and a job-offering site for university students to recruit our participants.

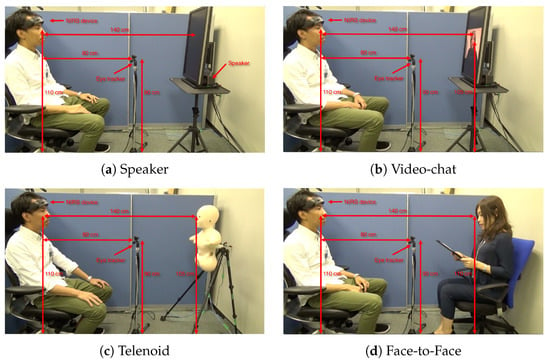

5.2. Communication Media

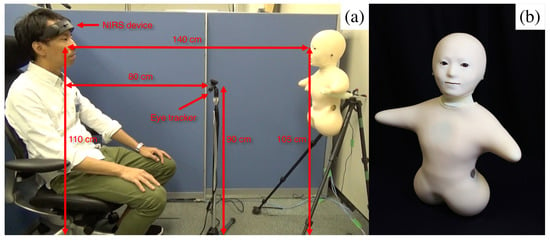

We used an audio speaker, a video-chat system, a humanoid robotic medium and a human (i.e., in-person control condition) to analyze the extent of their effect on the variational information of the participants’ frontal brain activity during the storytelling. Our choice of media reflected the dominant modes of communication in society, including telephone (audio speaker) and video-conferencing (video-chat). We chose a minimalist teleoperated android called Telenoid R4™ (Telenoid hereafter, Figure 7b) [73] to investigate the effect of physical embodiment. Telenoid is approximately 50.0 cm long and weighs about 3.0 kg. It comes with nine degrees-of-freedom (3 for its eyes, 1 for its mouth, 3 for its neck, and 2 for its arms) and is equipped with an audio speaker on its chest. It is primarily designed to investigate the basic and essential elements of embodiment for the efficient representation and transfer of a human-like presence. Therefore, its design follows a minimalist anthropomorphic principle to convey a gender-and-age-neutral look-and-feel. In our present study, we chose a minimalist anthropomorphic embodiment to eliminate the projection of such physical traits as gender and age onto our robotic medium, thereby allowing us to exclusively focus on the utility of minimal anthropomorphic features and their potential effects on the brain activity of human subjects.

Figure 7.

(a) Experimenter demonstrates experimental setup. (b) Telenoid. Figures for other settings (i.e., speaker, video-chat, and face-to-face) are provided in Appendix A.

Telenoid conveyed the vocal information of its teleoperator through its speaker. Its motion was generated based on the operator’s voice, using an online speech-driven head motion system [74]. However, its eyes and arms were motionless in this study. We placed Telenoid on a stand approximately 1.40 m (Figure 7a) from the participant’s chair to prevent any confounding effect due to tactile interaction (e.g., holding, hugging, etc.). We adjusted this stand to resemble an eye-contact setting between Telenoid and the participant. We maintained the same distance in the case of the other media as well as for the in-person setting. In in-person condition, we adjusted the storyteller’s seat to maintain eye-contact with the participant. For the video-chat, we adjusted its placeholder in such a way that the storyteller’s appearance on the screen resembled an eye-contact setting. In the speaker setting, we placed the video-chat screen in front of the participant (like in the video-chat condition) and placed the speaker behind its screen (Appendix A and Figure A1a–d). We used the same audio device in the speaker and video-chat settings to prevent any confounding effect due to audio quality. We used the same recorded voice of a woman, who was naive to the purpose of this study, in speaker, video-chat, and Telenoid. In the face-to-face setting, the same woman read the stories to the participants.

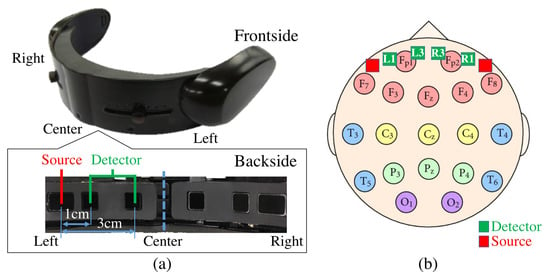

5.3. Sensor Devices

We used near infrared spectroscopy (NIRS) to collect the frontal brain activity of the participants and acquired their NIRS time series data using a wearable optical topography system called “HOT-1000”, developed by Hitachi High-Technologies Corp (Tokyo, Japan). (Figure 8). Participants wore this device on their forehead to record their frontal brain activity through detection of the total blood flow by emitting a wavelength laser light (810 nm) at a 10.0 Hz sampling rate. Data acquisition was carried out through four channels (, , , and , Figure 8). Postfix numerical values that are assigned to these channels specify their respective source-detector distances. In other words, and have a 1.0 cm source-detector distance and and have a 3.0 cm source-detector distance. Note that whereas a short-detector distance of 1.0 cm is inadequate for the data acquisition of cortical brain activity using NIRS (e.g., 0.5 cm [75], 1.0 cm [76], 1.5 cm [77], and 2.0 cm [78]), 3.0 cm is suitable [75,77].

Figure 8.

(a) Near infrared spectroscopy (NIRS) device in present study. Bottom subplot on left shows arrangement of source-detector of four channels of this device. Distances between short (i.e., 1.0 cm) and long (i.e., 3.0 cm) source and detector of left and right channels are shown. (b) Arrangement of 10–20 International Standard System: In this figure, relative locations of channels of NIRS device in our study (i.e., L1, L3, R1, and R3) are depicted in red (i.e., sources) and green (i.e., detectors) squares. L1, R1, L3, and R3 are channels with short (i.e., 1.0 cm) and long (i.e., 3.0 cm) source-detector distances.

To investigate whether the potential changes in the frontal brain activity of the participants were affected by their eye-movement, we also collected their gaze data throughout the storytelling sessions using Tobii Eye Tracker 4C controller. We recorded the eye-movements using pupil centre corneal reflection (PCCR) remote eye-tracking technology at a sampling rate of 90.0 Hz. Prior to gaze data acquisition, we calibrated our device and placed it approximately 80.0 cm from the participant’s seat and 30.0 cm above the ground between the stand of the media and chair of the participants (Figure 7a).

To ensure synchronized data acquisition across sensors, we collected data streams for the video, NIRS, and the gazes through “labstreaminglayer” [79] system.

5.4. Paradigm

We investigated the effect of three different communication media on the prefrontal cortex activity of the human subjects. Our experimental paradigm consisted of three-minute storytelling sessions in which a woman narrated three-minute stories from Greek mythology through three kinds of communication media: an audio speaker, a video-chat system, and Telenoid. In addition, we considered face-to-face to be a control setting through which we communicated these stories with participants in-person. This resulted in four separate sessions per participant. To prevent any confounding effect of visual distraction and to control the field of view of the participants within the same spatial limit, we placed their seat in a cubicle throughout the experimental sessions (height = 130.0 cm, width = 173.0 cm, depth = 210.0 cm). This cubicle’s side wall is visible in blue in Figure 7a.

Every participant first gave written informed consent in the waiting room next to the experimental room. Then, a male experimenter explained the experiment’s full procedure to the participants. This included the total number of session (four sessions), the duration of the narrated story in each session (i.e., three minutes), instructions about the one-minute rest period prior to the actual session (i.e., sitting still with eyes closed), and the content of the stories. The experimenter also asked the participant to focus on listening to the stories that were either narrated by a woman through a medium or in-person. Next, he led the participants to the experimental room and helped them to get seated in an armchair with proper head support in a sound-attenuated testing chamber. Then the experimenter calibrated the eye tracker device and instructed the participants to fully relax and keep their eyes closed. Last, the experimenter verified the proper adjustment of the medium (or helped the storyteller got comfortable in the proper position for the in-person condition) and began the experimental session. In every session, we first acquired one-minute rest data. Next, the experimenter asked the participants to open their eyes and prepare to listen to the story, followed by a story session during which we recorded the NIRS time series of the frontal brain activity of the participants. During the face-to-face setting, we asked the storyteller to maintain as much eye-contacts with the participants as possible. Figure 7a shows the experimental setup in the Telenoid setting.

At the end of each session, all participants completed questionnaires on their present emotional states. Questions included their perceived level (on an 8-point scale with 1 = not at all and 8 = very much) of the difficulty of the narrated story, their degree of interest in its content, and the induced feeling of fatigue due to listening to it. We also asked about their perceived feeling (on an 8-point scale with 1 = strongly disagree and 8 = strongly agree) of the human presence of the adapted medium in that session. We provided our participants a one-minute rest period prior to the commencement of each of the storytelling sessions and asked them to keep their eyes closed. In this period, we prepared the setting for the next storytelling session. We video-recorded all the activities throughout the experiment.

Every subject participated in all four sessions. We kept the content of the stories intact in these sessions and randomized the order of the media among the participants without changing the order of the narrated stories. The entire experiment lasted about 90 min per participant.

5.5. Data Processing

5.5.1. NIRS

First, we baseline-normalized the data by subtracting the mean of the one-minute rest periods. This step that is customary in brain imaging research was to remove the brain activity that was present prior to the start of the task (reflected in the resting period’s recording) and hence did not pertain to the task period’s brain activation. Next and in order to attenuate the effect of systemic physiological artefacts [80] (e.g., cardiac pulsations, respiration, etc.), we applied a one-degree polynomial Butterworth filter with 0.01 and 0.6 Hz for low and high bandpass which was then followed by performing the linear detrending on the data. Detrending of the signal that was adapted from signal processing and time series analysis and forecasting was a necessary step to ensure that the assumptions of stationarity and homoscedasticity (as reflected in wide spread application of linear models in analysis of fNIRS/fMRI time series) were not strongly violated (e.g., due to seasonality and/or repetitive increasing/decreasing patterns). Last, we attenuated the effect of skin blood flow (SBF) using an eigen decomposition technique [81]. This approach considers the first three principal components of the NIRS time series data of the frontal brain activity of the participants during their rest period to represent the SBF. Subsequently, it eliminates the SBF effect by removing these three components from the NIRS time series data of the participants during the task periods. We followed the same approach and removed the first three principal components of the respective rest period of the participants from the NIRS time series of their frontal brain activity that was recorded during the task period (i.e., listening to the stories). Similar to our NIRS recording, [81] also used 3.0 cm source-detector distance channels. Cooper et al. [82] show that this filter also attenuates the motion artefact (e.g., head motion).

A growing body of evidence [61] supports the central role of signal variability in the quantification of the brain’s neurological responses to stimuli and advocates the possibility of the presence of significant information. Miller [62] argued that direct correspondence exists between the amount of information and variance since anything that increases the variance also increases the amount of information. Similarly, Cohen et al. [63] considered the ability to identify meaningful variation in data pertinent to brain activation as an indicator of an effective analysis approach. These results collectively imply that variability in the time series of brain activity efficiently summarizes its responses to a wide range of stimuli. Therefore, given the effect of story encoding and comprehension on frontal brain activity [31,83,84] and considering the involvement of this region in mentalizing physical embodiment [26,27,85], in our experiment we chose to address signal variability to investigate the potential effect of physical embodiment during verbal communication.

The aforementioned findings are in line with the concept of entropy in information theory [86]. These results support the utility of entropy to the solution concept of the decoding of the information content of physiological and neurophysiological signals [87,88]. Extending these empirical results, Keshmiri et al. [64] proved that differential entropy (DE, i.e., entropy of a continuous random variable) quantifies the information content of the frontal brain activity in terms of a shift (e.g., increase and/or decrease) in its variational information. Given these observations, we adapted DE to summarize the variational information of the brain activity of the participants in our study.

We computed the DE, , of a three-minute time series of the frontal brain activity of the ith participant as [86]:

where is the variance of the NIRS time series of the frontal brain activity of the ith participant and gives the average variational information of the frontal brain activity of the ith participant.

5.5.2. Gaze Data

We first acquired the portion of these data per medium and per participant that fell within the pre-determined elliptical area that surrounded the spatial location of the storyteller’s face. We chose this elliptical area based on the storyteller’s chair in a face-to-face location or her image’s location on the screen for the video-chat and the speaker (Appendix A, Figure A1b–d). We placed this elliptical area around Telenoid’s face (Figure 7a). Given its location, we considered a 14.0 and 16.0 cm elliptical area as its short and long diameters around its face location. Next, we formulated a gazing metric (eye aversion ratio (EAR)) to determine the relative deviation of the visual attention of the participants from the media during the storytelling session:

where is the number of gazing data points that fell within the pre-determined elliptical area (described above) per medium and per participant. which is a hypothetical maximum number of gaze data points of the participants in the absence of any eye-aversion is calculated as . EAR resembles an explicit eye-movement measurement that quantifies (0 ≤ EAR ≤ 1) the deviation of the visual attention of the participants from media or the human speaker. as , implying the least aversive behaviour and/or deviation of the eye-field-of-view from the media or the human speaker. It is also worth noting that EAR restricts the legitimate gaze of the participants to data points that fall within the face area in media/in-person, suggesting that the remainder of the gaze data of the participants indicates eye-movement/aversion. Therefore, a strongly conservative metric ensures the eye-movements of the participants without underestimating the potential effect on PFC activation.

5.6. Statistical Analyses

We analyzed the effect of the ages of the participants by the variational information of their frontal brain activity with a two-way mixed-design ANOVA with age (i.e., elderly and younger participants) and media factors (i.e., speaker (S), video-chat (V), Telenoid (T), and face-to-face (F)). We used the Mendoza multisample sphericity test for this purpose. When sphericity was violated, we adjusted the degrees of freedom with Greenhouse-Geisser’s epsilon and conducted post hoc comparisons with Shaffer’s modified sequentially rejective Bonferroni correction.

For the analysis of the self-assessed responses of the participants to their perceived feelings of the human presence, we used a two-way mixed-design ANOVA (age and media factors) with the Mendoza multisample sphericity test. When sphericity was violated, we adjusted the degrees of freedom with Greenhouse-Geisser’s epsilon and conducted post hoc comparisons with Shaffer’s modified sequentially rejective Bonferroni correction. We used Gramm [89] for data visualization (SM 2, SM 3, and SM 4 for these results with respect to other self-assessed responses). Prior to the two-way mixed-design ANOVA analyses, we checked our data for normality using a one-sample Kolmogorov-Smirnov test. All media passed this test, except for DE and the self-assessed feelings of the human presence by elderly adults in face-to-face setting.

We analyzed the potential correspondence between the self-assessed responses of the participants to their feelings of the human presence and the induced variational information in their frontal brain activity (i.e., DE) by adapted media using Pearson correlations (for the Pearson correlation results with respect to other self-assessed responses as well as analyses of the self-assessed responses, see Appendix B, Appendix C, Appendix D, Appendix E, Appendix F, Appendix G).

For the gaze data, we utilized EAR, as formulated in Equation (2), to determine any potential confounding effect of the eye-movements of the participants on the information content of their frontal brain activity (i.e., DE). We calculated the Pearson correlations between the EAR of the participants and the information content of their frontal brain activity. Additionally, we computed the Pearson correlation between EAR and the self-assessed responses of the participants to their feelings of the human presence (SM 7 for the Pearson correlation results with respect to other self-assessed responses) to determine whether their gazing patterns revealed any potential preference toward embodiment and/or anthropomorphic impressions (e.g., view of the storyteller in video-chat or her actual presence face-to-face). The existence of a potentially significant correlation between EAR and the self-assessed responses of the participants might indicate the sufficiency and reliability of their behavioural responses for the evaluation of the effect of physical embodiment, discarding the necessity of the analysis of frontal brain activity as a unique neurophysiological marker of such an effect.

We used Python 2.7 for data acquisition and processing. We carried out a shift function, a difference asymmetry function, and Pearson correlation analyses in Matlab R2016a and used R 3.3.2 for parametric and non-parametric mixed-design analyses.

Author Contributions

Conceptualization, H.S. and R.Y.; formal analysis, S.K.; resources, H.I.; data curation, R.Y.; Writing–Original draft preparation, S.K.; Writing–Review and editing, S.K. and H.S.; project administration, H.I.; funding acquisition, S.K. and H.S.

Funding

This research was funded by JSPS KAKENHI, Grant No. JP19K20746, JST CREST Grant Number JPMJCR18A1, JST ImPACT Grant No.: 2014-PM11-07-01, and JSPS KAKENHI, Grant No. 16K16480.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| PFC | Prefrontal cortex |

| MSE | Differential entropy |

| NIRS | Near-infrared spectroscopy |

| EAR | Eye aversion ratio |

| S | Speaker setting |

| V | Video-chat setting |

| T | Telenoid setting |

| F | Face-to-face (in-person) setting |

| M | Mean of the variable “a” |

| SD | Standard deviation of the variable “a” |

Appendix A. Experimental Setup

We investigated the effect of three different communication media on prefrontal cortex activity of human subjects. Our experimental paradigm comprised of three-minute-long storytelling sessions in which we narrated one of the asterism-based Greek mythological stories (in Japanese). We communicated these stories through three communication media, namely, speaker, video-chat system, and a humanoid robot (referred to as Telenoid hereafter). In addition, we considered face-to-face as a control setting in which we communicated these stories with participants in-person. This resulted in four separate sessions, per participant. These settings are shown in Figure A1, subplots (a) through (d). In order to prevent the confounding effect of visual distraction and to control the field of the participants, we placed the seat of the participants within the confine of a cubicle throughout the experimental sessions (Height = 130.0 cm, Width = 173.0 cm, Depth = 210.0 cm). The side wall of this cubicle is visible in blue in Figure A1, subplots (a) through (d).

Figure A1.

Experimental setup. (a) Speaker. (b) Video-chat. (c) Telenoid. (d) Face-to-Face.

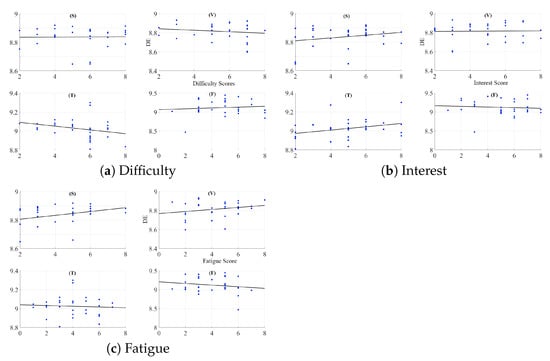

Appendix B. Difficulty of Narrated Stories

A two-way mixed-design ANOVA with media (i.e., speaker (S), video-chat system (V), Telenoid (T), and face-to-face (F)) and age (i.e., Senior and Junior groups) factors indicates non-significant effect of media (p = 0.46, F(2.65, 76.83) = 0.84, = 0.01), age (p = 0.27, F(1, 29) = 1.29, = 0.03), and the interaction between these factors (p = 0.25, F(2.65, 76.83) = 1.39, = 0.02) (Figure A2a).

Figure A2.

Self-assessed responses of participants: (a) difficulty of the stories. (b) their interest in them. (c) feeling of fatigue due to listening to them.

Appendix C. Interest in Content of Stories

A two-way mixed-design ANOVA with media and age factors indicates non-significant effect of media (p = 0.60, F(2.80, 81.08) = 0.61, = 0.006), age (p = 0.98, F(1, 29) = 0.001, = 0.0), and the interaction between these factors (p = 0.82, F(2.80, 81.08) = 0.29, = 0.003) (Figure A2b).

Appendix D. Induced Fatigue Due to Listening to Stories

A two-way mixed-design ANOVA with media and age factors indicates non-significant effect of media (p = 0.80, F(2.36, 68.40) = 0.28, = 0.003), age (p = 0.28, F(1, 29) = 1.19, = 0.03), and the interaction between these factors (p = 0.74, F(2.36, 68.40) = 0.36, = 0.004) (Figure A2c).

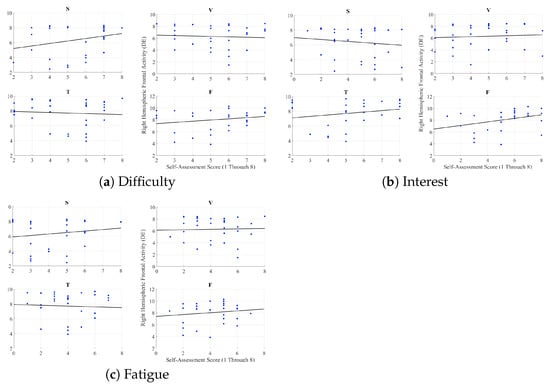

Appendix E. Left Hemispheric Frontal Brain Activity and Self-Assessed Responses of the Participants

We observed no correlation between the variational information of the left frontal brain activity of the participants and their responses to difficulty of the narrated stories in all media settings (speaker: r = 0.02, p = 0.94, video-chat: r = −0.14, p = 0.44, face-to-face: r = 0.10, p = 0.59, Telenoid: r = −0.27, p = 0.14) (Figure A3a). Similarly, We found no correlation between the variational information of the left frontal brain activity of the participants and their interest in these stories: speaker (r = 0.24, p = 0.20), video-chat (r = 0.02, p = 0.91), face-to-face (r = −0.08, p = 0.66), and Telenoid (r = 0.31, p = 0.09) (Figure A3b). Last, we observed no correlations between the variational information of the left frontal brain activity of the participants and their feelings of fatigue due to listening to these stories: speaker: r = 0.32, p = 0.08, video-chat: r = 0.22, p = 0.23, face-to-face: r = −0.16, p = 0.38, Telenoid: r = −0.06, p = 0.73 (Figure A3c).

Figure A3.

Pearson correlations between left-hemispheric frontal brain activity and self-assessed responses of participants to difficulty of stories, their interest in them, and feeling of fatigue due to listening to them. (S) speaker (V) video-chat (T) Telenoid (F) face-to-face.

Appendix F. Right Hemispheric Frontal Brain Activity and Self-Assessed Responses of the Participants

We observed no correlations between the right-hemispheric frontal brain activity of the participants and their self-assessed responses to difficulty of the stories (speaker: r = 0.26, p = 0.16, video-chat: r = −0.06, p = 0.75, Telenoid: r = −0.07, p = 0.71, face-to-face: r = 0.23, p = 0.22) (Figure A4a), their interest in them (speaker: r = −0.12, p = 0.53, video-chat: r = 0.07, p = 0.71, Telenoid: r = r = 0.21, p = 0.25, face-to-face: r = 0.35, p = 0.06) (Figure A4b), and the fatigue caused by listening to them (speaker: r = 0.17, p = 0.37, video-chat: r = 0.03, p = 0.86, Telenoid: r = −0.05, p = 0.78, face-to-face: r = 0.14, p = 0.46) (Figure A4c).

Figure A4.

Pearson correlations between right-hemispheric frontal brain activity and self-assessed responses of participants to difficulty of stories, their interest in them, and feeling of fatigue due to listening to them. (S) speaker (V) video-chat (T) Telenoid (F) face-to-face.

Appendix G. Pearson Correlation Between Eye Aversion Ratio (EAR) and Self-Assessed Responses of the Participants

We found no correlation between the responses of the participants about the difficulty of the narrated stories and EAR: speaker (r = −0.17, p = 0.36), video-chat (r = 0.08, p = 0.65), Telenoid (r = −0.01, p = 0.95), and face-to-face (r = 0.02, p = 0.90) (Figure A5a).

Similarly, we found no correlation between their interest in these stories and EAR: speaker (r = −0.27, p = 0.14), video-chat (r = 0.15, p = 0.42), Telenoid (r = 0.04, p = 0.85), and face-to-face (r = −0.17, p = 0.36) (Figure A5b).

Last, we observed no correlation between the feelings of fatigue due to listening to these stories and EAR: speaker (r = −0.02, p = 0.92), video-chat (r = −0.16, p = 0.38), Telenoid (r = −0.13, p = 0.47), and face-to-face (r = −0.02, p = 0.92) (Figure A5c).

Figure A5.

Pearson correlation between EAR and self-assessed responses of participants to difficulty of stories, their interest in them, and feeling of fatigue due to listening to them in speaker (S), video-chat (V), Telenoid (T), and face-to-face (F).

References

- Holt-Lunstad, J.; Smith, T.B.; Layton, J.B. Social relationships and mortality risk: A meta-analytic review. PLoS Med. 2013, 7, 369–379. [Google Scholar] [CrossRef]

- Nakanishi, J.; Sumioka, H.; Ishiguro, H. Impact of mediated intimate interaction on education: A huggable communication medium that encourages listening. Front. Psychol. 2016, 7, 510. [Google Scholar] [CrossRef] [PubMed]

- Michaelis, J.E.; Mutlu, B. Reading socially: Transforming the in-home reading experience with a learning-companion robot. Sci. Robot. 2018, 3, eaat5999. [Google Scholar] [CrossRef]

- Carroll, J.M. Human-computer interaction: Psychology as a science of design. Annu. Rev. Psychol. 1997, 48, 61–83. [Google Scholar] [CrossRef] [PubMed]

- Reeves, B.; Nass, C. The Media Equation: How People Treat Computers, Television, and New Media Like Real People and Places, 1st ed.; CSLI Publications: Los Angeles, CA, USA, 2002. [Google Scholar]

- Nass, C.; Moon, Y.; Green, N. Are machines gender neutral? Gender-stereotypic responses to computers with voices. J. Appl. Soc. Psychol. 1997, 27, 864–876. [Google Scholar] [CrossRef]

- Nass, C.; Moon, Y.; Carney, P. Are respondents polite to computers? Social desirability and direct responses to computers. J. Appl. Soc. Psychol. 1999, 29, 1093–1110. [Google Scholar] [CrossRef]

- Holt-Lunstad, J.; Smith, T.B.; Layton, J.B. Computer-mediated communication: Impersonal, Interpersonal, and hyperpersonal integration. Commun. Res. 1996, 23, 3–43. [Google Scholar] [CrossRef]

- Finn, K.E.; Abigail, J.S.; Sylvia, B.W. Video-Mediated Communication, 2nd ed.; Lawrence Erlbaum Associates: Mahwah, NJ, USA, 1997. [Google Scholar]

- Joinson, A.N. Self-disclosure in computer-mediated communication: The role of self-awareness and visual anonymity. Eur. J. Soc. Psychol. 2001, 31, 177–192. [Google Scholar] [CrossRef]

- Joinson, A.N. Effects of computer conferencing on the language use of emotionally disturbed adolescents. Behav. Res. Methods Instrum. Comput. 1987, 19, 224–230. [Google Scholar] [CrossRef]

- Cicourel, F.T.A.; Movellan, J.R. Socialization between toddlers and robots at an early childhood education center. Proc. Natl. Acad. Sci. USA 2007, 104, 17954–17958. [Google Scholar] [CrossRef]

- Mann, J.A.; MacDonald, B.A.; Kuo, I.H.; Li, X.; Broadbent, E. People respond better to robots than computer tablets delivering healthcare instructions. Comput. Hum. Behav. 2015, 43, 112–117. [Google Scholar] [CrossRef]

- Sakamoto, D.; Kanda, T.; Ono, T.; Ishiguro, H.; Hagita, N. Android as a telecommunication medium with a human-like presence. In Proceedings of the Human Robot Interaction (HRI), Arlington, VA, USA, 9–11 March 2007; ACM: Washington, DC, USA, 2007; pp. 193–200. [Google Scholar]

- Kanda, T.; Nakanishi, H.; Ishiguro, H. Physical embodiment can produce robot operator’s pseudo presence. Front. ICT 2015, 2, 112–117. [Google Scholar] [CrossRef][Green Version]

- Tanaka, M.; Ishii, A.; Yamano, E.; Ogikubo, H.; Okazaki, M.; Kamimura, K.; Konishi, Y.; Emoto, S.; Watanabe, Y. Effect of a human-type communication robot on cognitive function in elderly women living alone. Med. Sci. Monit. 2012, 18, CR550–CR557. [Google Scholar] [CrossRef] [PubMed]

- Pu, L.; Moyle, W.; Jones, C.; Todorovic, M. The effectiveness of social robots for older adults: A systematic review and meta-analysis of randomized controlled studies. Gerontologist 2018, 59, e37–e51. [Google Scholar] [CrossRef] [PubMed]

- Broadbent, E. Interactions with robots: The truths we reveal about ourselves. Annu. Rev. Psychol. 2017, 68, 627–652. [Google Scholar] [CrossRef] [PubMed]

- Forbes, C.E.; Grafman, J. The role of the human prefrontal cortex in social cognition and moral judgment. Annu. Rev. Neurosci. 2010, 33, 299–324. [Google Scholar] [CrossRef] [PubMed]

- Mayer, R.F. The Prefrontal Cortex: Anatomy, Physiology, and Neuropsychology of the Frontal Lobe; Raven: New York, NY, USA, 1997. [Google Scholar]

- Mitchell, J.P.; Macrae, C.N.; Banaji, M.R. Dissociable medial prefrontal contributions to judgments of similar and dissimilar others. Neuron 2006, 50, 655–663. [Google Scholar] [CrossRef] [PubMed]

- Amodio, D.M.; Frith, C.D. Meeting of minds: The medial frontal cortex and social cognition. Nat. Rev. Neurosci. 2006, 7, 268–534. [Google Scholar] [CrossRef]

- Molenberghs, P.; Johnson, H.; Henry, J.D.; Mattingley, J.B. Understanding the minds of others: A neuroimaging meta-analysis. Neurosci. Biobehav. Rev. 2016, 65, 276–291. [Google Scholar] [CrossRef]

- Grossmann, T. The role of medial prefrontal cortex in early social cognition. Front. Hum. Neurosci. 2013, 7, 340. [Google Scholar] [CrossRef]

- Takahashi, H.; Terada, K.; Morita, T.; Suzuki, S.; Haji, T.; Kozima, H.; Yoshikawah, M.; Matsumotoi, Y.; Omorib, T.; Asadaa, M.; et al. Different impressions of other agents obtained through social interaction uniquely modulate dorsal and ventral pathway activities in the social human brain. Cortex 2014, 58, 289–300. [Google Scholar] [CrossRef] [PubMed]

- Krach, S.; Hegel, F.; Wrede, B.; Sagerer, G.; Binkofski, F.; Kircher, T. Can machines think? Interaction and perspective taking with robots investigated via fMRI. PLoS ONE 2008, 3, e2597. [Google Scholar] [CrossRef] [PubMed]

- Chaminade, T.; Rosset, D.; Fonseca, D.D.; Nazarian, B.; Lutscher, E.; Cheng, G.; Deruelle, C. How do we think machines think? An fMRI study of alleged competition with an artificial intelligence. Front. Hum. Neurosci. 2012, 6, 103. [Google Scholar] [CrossRef] [PubMed]

- Baddeley, A. Working memory: Theories, models, and controversies. Annu. Rev. Psychol. 2012, 63, 1–29. [Google Scholar] [CrossRef] [PubMed]

- Owen, A.M.; McMillan, K.M.; Laird, A.R.; Bullmore, E. N-Back working memory paradigm: A meta-analysis of normative functional neuroimaging studies. Hum. Brain Mapp. 2005, 25, 46–59. [Google Scholar] [CrossRef]

- Wolf, I.; Dziobek, I.; Heekeren, H.R. Neural correlates of social cognition in naturalistic settings: A model-free analysis approach. NeuroImage 2010, 49, 894–904. [Google Scholar] [CrossRef]

- Mar, R.A. The Neural Bases of social cognition and story comprehension. Ann. Rev. Psychol. 2011, 62, 103–134. [Google Scholar] [CrossRef]

- Mar, R.A. The neuropsychology of narrative: Story comprehension, story production and their interrelation. Neuropsychologia 2004, 42, 1414–1434. [Google Scholar] [CrossRef]

- Ferrari, M.; Quaresima, V. A brief review on the history of human functional near-infrared spectroscopy (fNIRS) development and fields of application. NeuroImage 2012, 63, 921–935. [Google Scholar] [CrossRef]

- Ferrari, M.; Quaresima, V. Functional near-infrared spectroscopy for the assessment of speech related tasks. Brain Lang. 2012, 121, 90–109. [Google Scholar] [CrossRef]

- Naseer, N.; Hong, K.S. fNIRS-based brain-computer interfaces: A review. Front. Hum. Neurosci. 2015, 9, 3. [Google Scholar] [CrossRef] [PubMed]

- Canning, C.; Scheutz, M. Functional near-infrared spectroscopy in human-robot interaction. J. Hum. Robot Interact. 2013, 2, 62–84. [Google Scholar] [CrossRef]

- Gagnon, L.; Yücel, M.A.; Dehaes, M.; Cooper, R.J.; Perdue, K.L.; Selb, J.; Huppert, T.J.; Hoge, R.D.; Boas, D.A. Quantification of the cortical contribution to the NIRS signal over the motor cortex using concurrent NIRS-fMRI measurements. NeuroImage 2011, 59, 3933–3940. [Google Scholar] [CrossRef] [PubMed]

- Cui, X.; Bray, S.; Bryant, D.M.; Glover, G.H.; Reiss, A.L. A quantitative comparison of NIRS and fMRI across multiple cognitive tasks. NeuroImage 2011, 54, 2808–2821. [Google Scholar] [CrossRef] [PubMed]

- Goldberger, A.L.; Amaral, L.A.; Hausdorff, J.M.; Ivanov, P.C.; Peng, C.K.; Stanley, H.E. Fractal dynamics in physiology: Alterations with disease and aging. Proc. Natl. Acad. Sci. USA 2002, 99, 2466–2472. [Google Scholar] [CrossRef] [PubMed]

- Zhang, Y.C. Complexity and noise. A phase space approach. J. Phys. I 1991, 1, 971–977. [Google Scholar]

- Schultze, U. Embodiment and presence in virtual worlds: A review. J. Inf. Technol. 2010, 25, 434–449. [Google Scholar] [CrossRef]

- Rauchbauer, B.; Nazarian, B.; Bourhis, M.; Ochs, M.; Prévot, L.; Chaminade, T. Brain activity during reciprocal social interaction investigated using conversational robots as control condition. Philos. Trans. R. Soc. B 2019, 374, 20180033. [Google Scholar] [CrossRef]

- Keshmiri, S.; Sumioka, H.; Yamazaki, R.; Ishiguro, H. Older People Prefrontal Cortex Activation Estimates Their Perceived Difficulty of a Humanoid-Mediated Conversation. IEEE Robot. Autom. Lett. 2019, 4, 4108–4115. [Google Scholar] [CrossRef]

- Keshmiri, S.; Sumioka, H.; Okubo, M.; Yamazaki, R.; Nakae, A.; Ishiguro, H. Potential Health Benefit of Physical Embodiment in Elderly Counselling: A Longitudinal Case Study. In Proceedings of the International Conference on Systems, Man, and Cybernetics (SMC2018), Miyazaki, Japan, 7–10 October 2018. [Google Scholar] [CrossRef]

- Matarić, M.J. Socially assistive robotics: Human augmentation versus automation. Sci. Robot. 2017, 2, eaam5410. [Google Scholar] [CrossRef]

- Robinson, H.; MacDonald, B.; Kerse, N.; Broadbent, E. The psychosocial effects of a companion robot: A randomized controlled trial. J. Am. Med. Dir. Assoc. (JAMA) 2013, 14, 661–667. [Google Scholar] [CrossRef]

- Soler, M.V.; Agüera-Ortiz, L.; Rodrìguez, J.O.; Rebolledo, C.M.; Muñoz, P.A.; Pérez, I.R.; Ruiz, E.O.; Sánchez, A.B.; Cano, V.H.; Chillón, L.C.; et al. Social robots in advanced dementia. Front. Aging Neurosci. 2015, 14, 133. [Google Scholar] [CrossRef]

- Yang, G.Z.; Bellingham, J.; Dupont, P.E.; Fischer, P.; Floridi, L.; Full, R.; Jacobstein, N.; Kumar, V.; McNutt, M.; Merrifield, R.; et al. The grand challenges of Science Robotics. Sci. Robot. 2018, 3, eaar7650. [Google Scholar] [CrossRef]

- Scassellati, B. Theory of mind for a humanoid robot. Auton. Robots 2002, 12, 13–24. [Google Scholar] [CrossRef]

- Clabaugh, C.; Matarić, M. Robots for the people, by the people: Personalizing human-machine interaction. Sci. Robot. 2018, 3, eaat7451. [Google Scholar] [CrossRef]

- Lerner, Y.; Honey, C.J.; Silbert, L.J.; Hasson, U. Topographic mapping of a hierarchy of temporal receptive windows using a narrated story. J. Neurosci. 2011, 31, 2906–2915. [Google Scholar] [CrossRef]

- Huth, A.G.; de Heer, W.A.; Griffiths, T.L.; Theunissen, E.F.; Gallant, J.L. Natural speech reveals the semantic maps that tile human cerebral cortex. Nature 2016, 532, 453–458. [Google Scholar] [CrossRef] [PubMed]

- Gilbert, D.T.; Malone, P.S. The correspondence bias. Psychol. Bull. 1995, 117, 21. [Google Scholar] [CrossRef]

- Storms, M.D. Videotape and the attribution process: Reversing actors’ and observers’ points of view. J. Pers. Soc. Psychol. 1973, 27, 165. [Google Scholar] [CrossRef]

- Chaminade, T.; Zecca, M.; Blakemore, S.J.; Takanishi, A.; Frith, C.D.; Micera, S.; Dario, P.; Rizzolatti, G.; Gallese, V.; Umiltà, M.A. Brain response to humanoid robot in areas implicated in the perception of human emotional gesture. PLoS ONE 2010, 5, e11577. [Google Scholar] [CrossRef]

- Sleimen-Malkoun, R.; Temprado, J.J.; Hong, S.L. Aging induced loss of complexity and dedifferentiation: Consequences for coordination dynamics within and between brain, muscular and behavioral levels. Front. Aging Neurosci. 2014, 6, 140. [Google Scholar] [CrossRef]

- Li, T.; Luo, Q.; Gong, H. Gender-specific hemodynamics in prefrontal cortex during a verbal working memory task by near-infrared spectroscopy. Behav. Brain Res. 2010, 209, 148–153. [Google Scholar] [CrossRef]

- Nomura, T.; Kanda, T.; Suzuki, T.; Kato, K. Prediction of human behavior in human robot interaction using psychological scales for anxiety and negative attitudes toward robots. IEEE Trans. Robot. 2008, 24, 442–451. [Google Scholar] [CrossRef]

- Ezer, N.; Fisk, A.D.; Rogers, W.A. More than a servant: Self-reported willingness of younger and older adults to having a robot perform interactive and critical tasks in the home. In Proceedings of the Human Factors and Ergonomics Society Annual Meeting; SAGE Publications: Los Angeles, CA, USA, 2009; Volume 53, pp. 136–140. [Google Scholar] [CrossRef]

- Seider, T.R.; Fieo, R.A.; O’Shea, A.; Porges, E.C.; Woods, A.J.; Cohen, R.A. Cognitively Engaging Activity Is Associated with Greater Cortical and Subcortical Volumes. Front. Aging Neurosci. 2016, 8, 94. [Google Scholar] [CrossRef]

- Garrett, D.D.; Samanez-Larkin, G.R.; MacDonald, S.W.; Lindenberger, U.; McIntosh, A.R.; Grady, C.L. Moment-to-moment brain signal variability: A next frontier in human brain mapping? Neurosci. Biobehav. Rev. 2013, 37, 610–624. [Google Scholar] [CrossRef]

- Miller, G.A. The magical number seven, plus or minus two: Some limits on our capacity for processing information. Physiol. Rev. 2001, 10, 343–352. [Google Scholar] [CrossRef]

- Cohen, J.D.; Daw, N.; Engelhardt, B.; Hasson, U.; Li, K.; Niv, Y.; Norman, K.A.; Pillow, J.; Ramadge, P.J.; Turk-Browne, N.B.; et al. Computational approaches to fMRI analysis. Nat. Neurosci. 2017, 20, 304–313. [Google Scholar] [CrossRef]

- Keshmiri, S.; Sumioka, H.; Yamazaki, R.; Ishiguro, H. Differential Entropy Preserves Variational Information of Near-Infrared Spectroscopy Time Series Associated with Working Memory. Front. Neuroinform. 2018, 12, 33. [Google Scholar] [CrossRef]

- Ben-Yakov, A.; Honey, C.J.; Lerner, Y.; Hasson, U. Loss of reliable temporal structure in event-related averaging of naturalistic stimuli. NeuroImage 2012, 63, 501–506. [Google Scholar] [CrossRef]

- Haynes, J.D.; Rees, G. Decoding mental states from brain activity in humans. Nat. Rev. Neurosci. 2007, 7, 523–534. [Google Scholar] [CrossRef]