Does Classifier Fusion Improve the Overall Performance? Numerical Analysis of Data and Fusion Method Characteristics Influencing Classifier Fusion Performance

Abstract

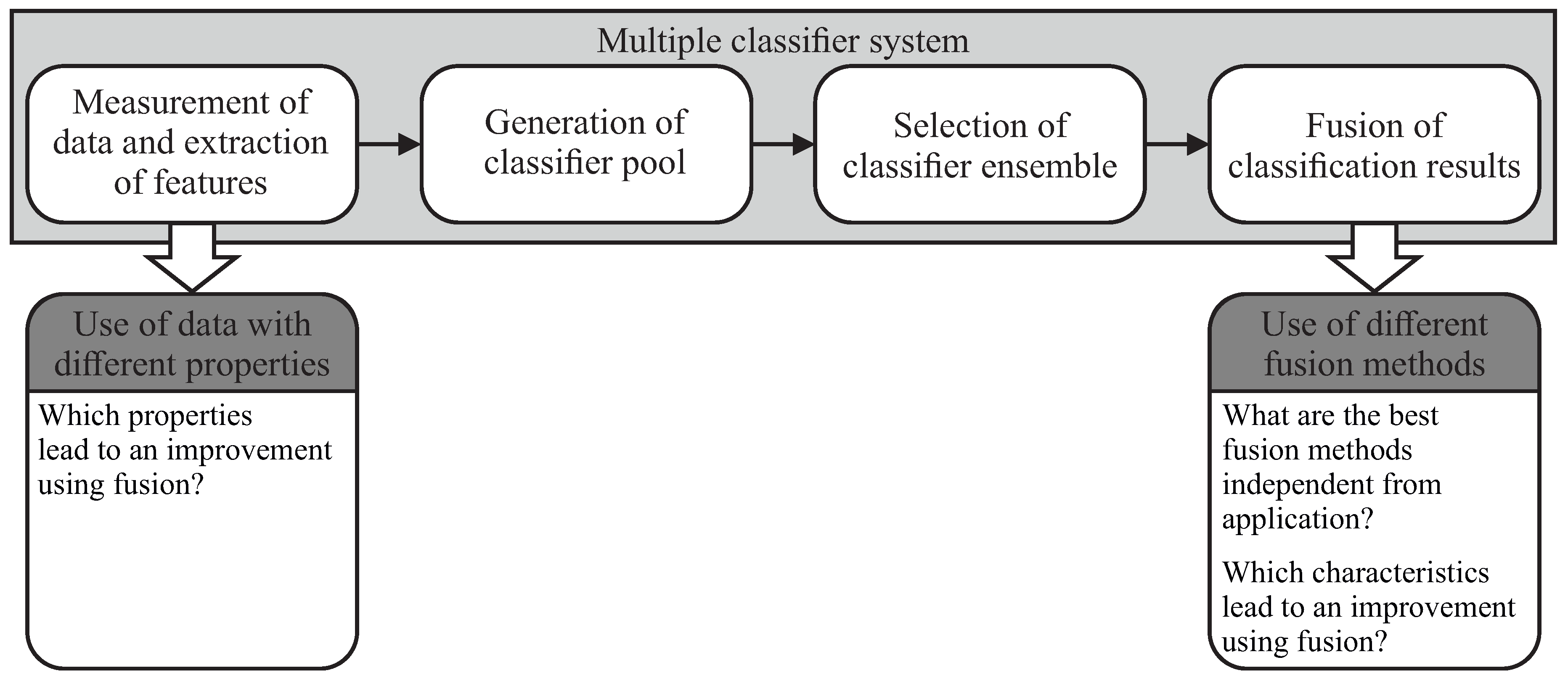

1. Introduction

2. Considered Properties

2.1. Attributes and Requirements of Fusion Methods

2.1.1. Type of Classifier Output Levels

2.1.2. Use of Classifier Outputs

2.1.3. Training of Fusion Method Parameters

2.2. Data Characteristics

2.2.1. Simple Measures

2.2.2. Information Theoretic Measures

3. Application Using Benchmark Data

3.1. Fusion Algorithms

3.2. Classification Methods

3.3. Data Sets

3.4. Experimental Procedure

3.4.1. Nested Cross Validation

3.4.2. Performance Measure

4. Experimental Results

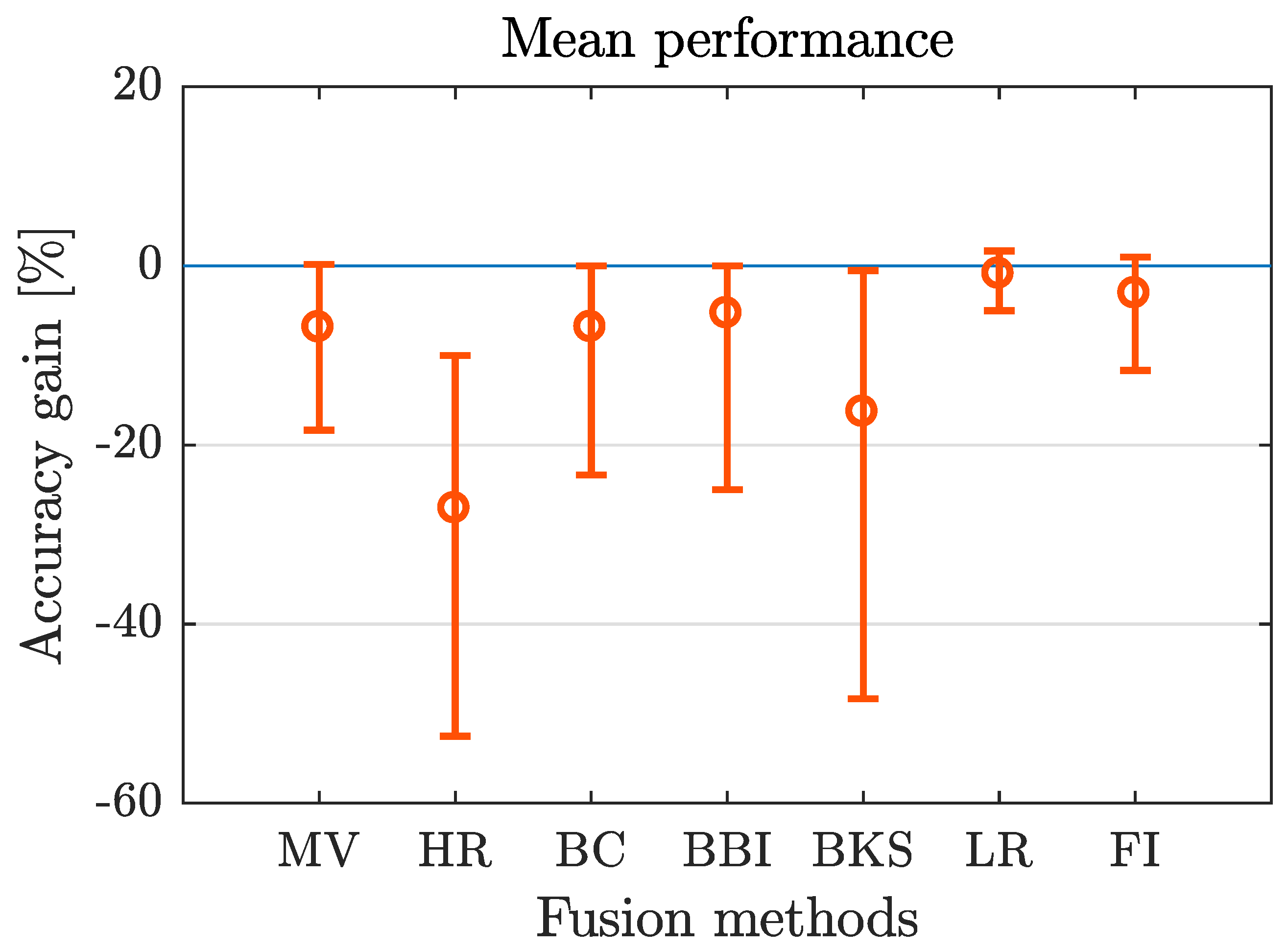

4.1. Mean Performance of Fusion Methods

Lessons Learned

4.2. Performance Related to Fusion Method Characteristics

Lessons Learned

4.3. Performance Related to Data Characteristics

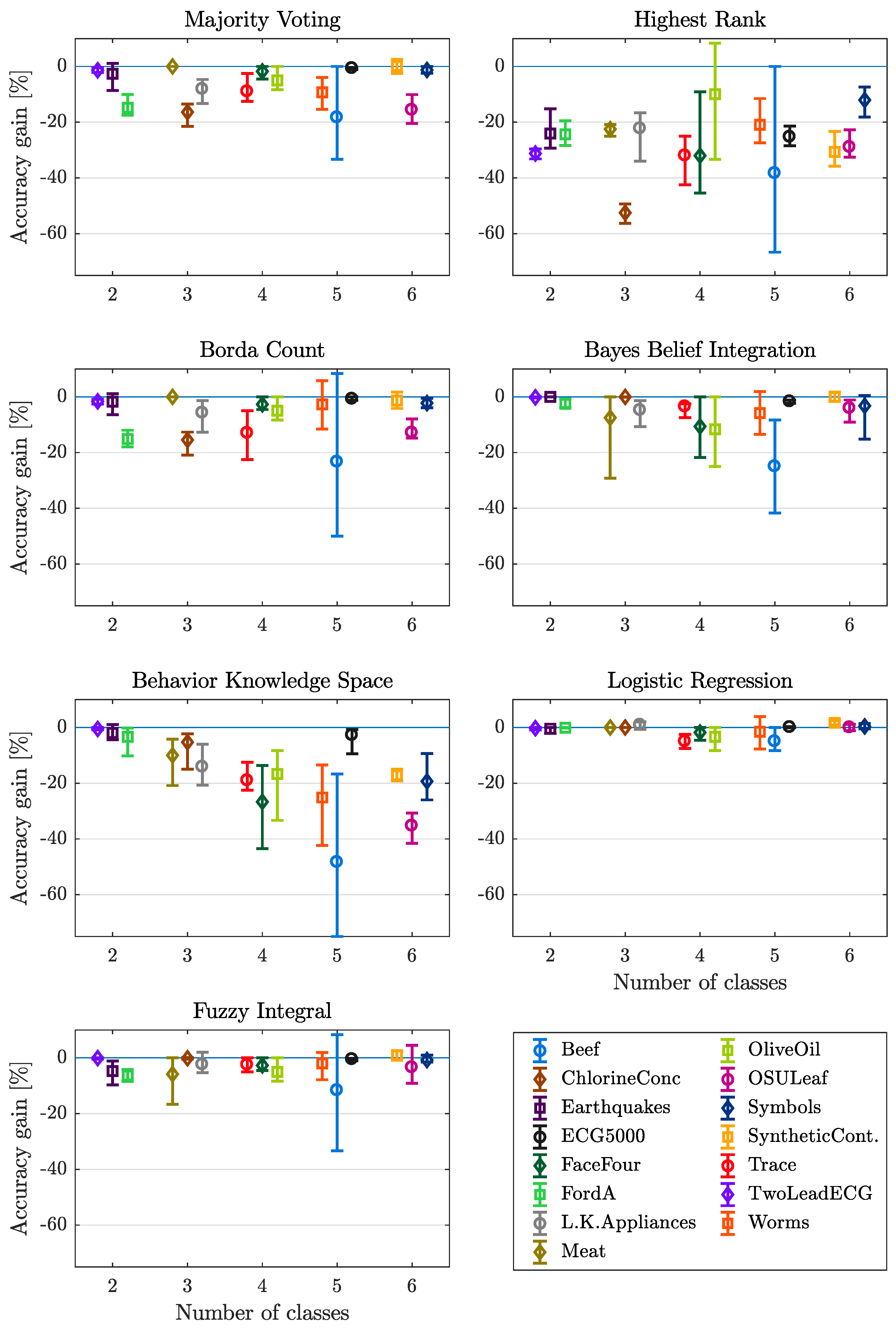

4.3.1. Number of Classes

Lessons Learned

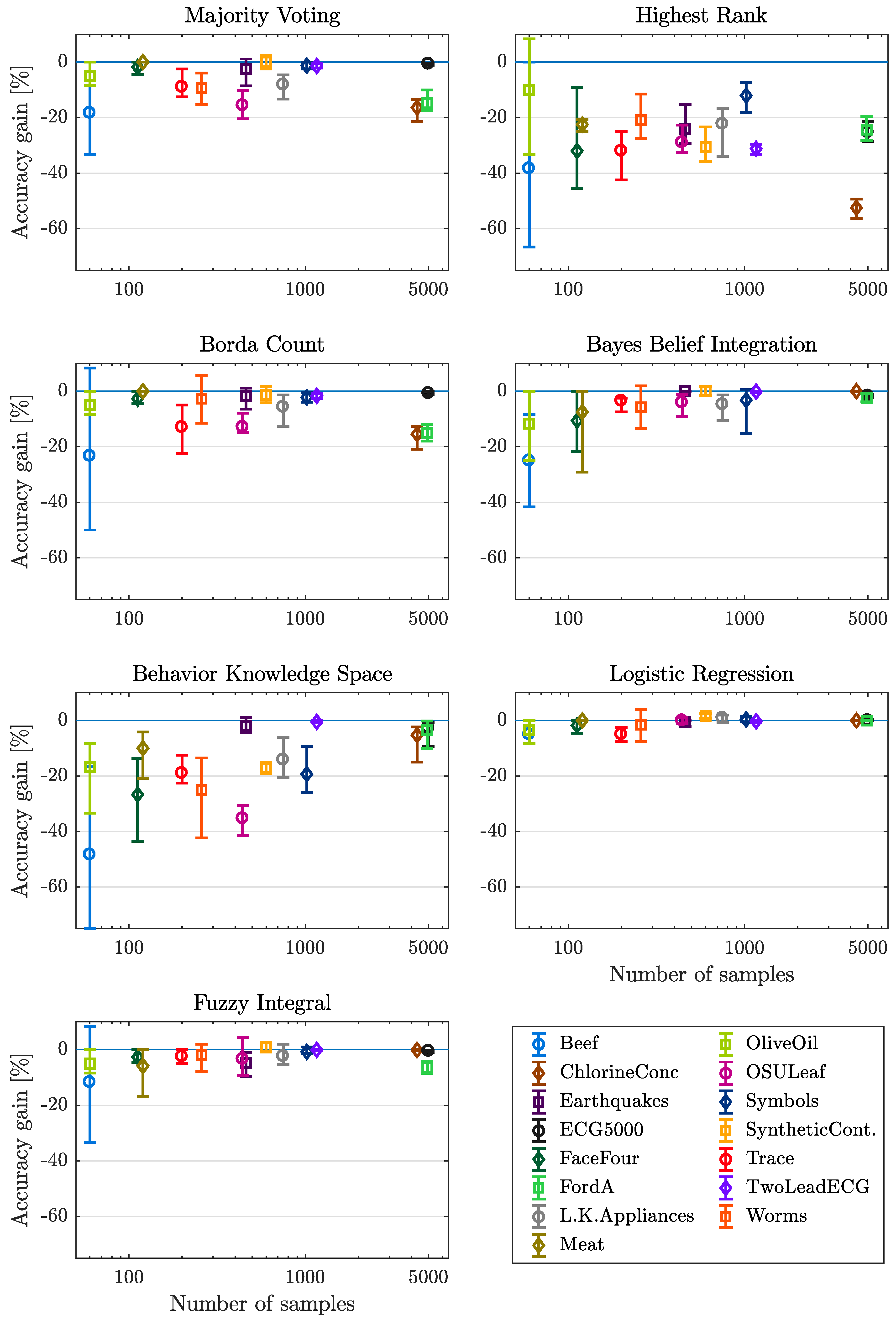

4.3.2. Number of Samples

Lessons Learned

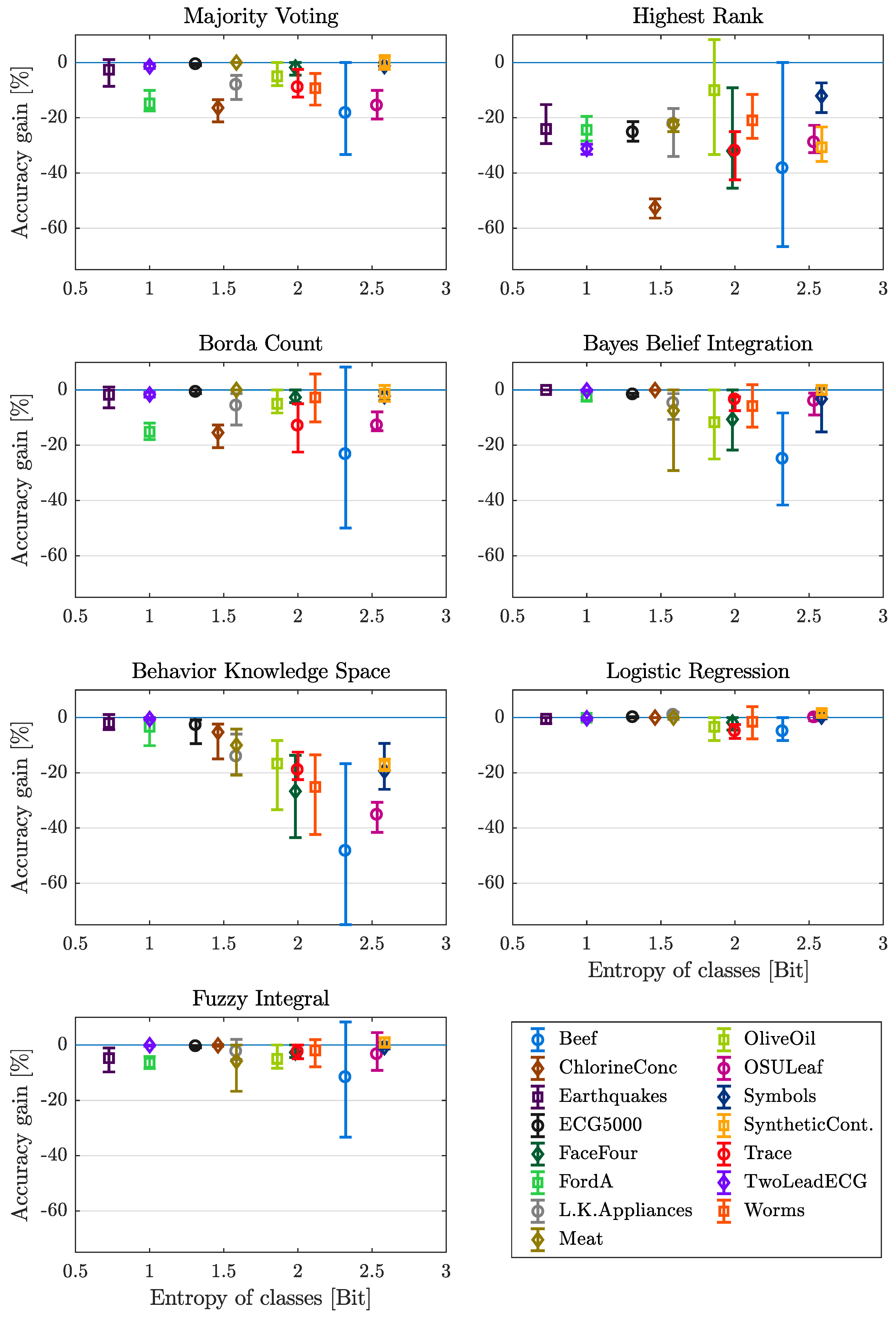

4.3.3. Entropy of Classes

Lessons Learned

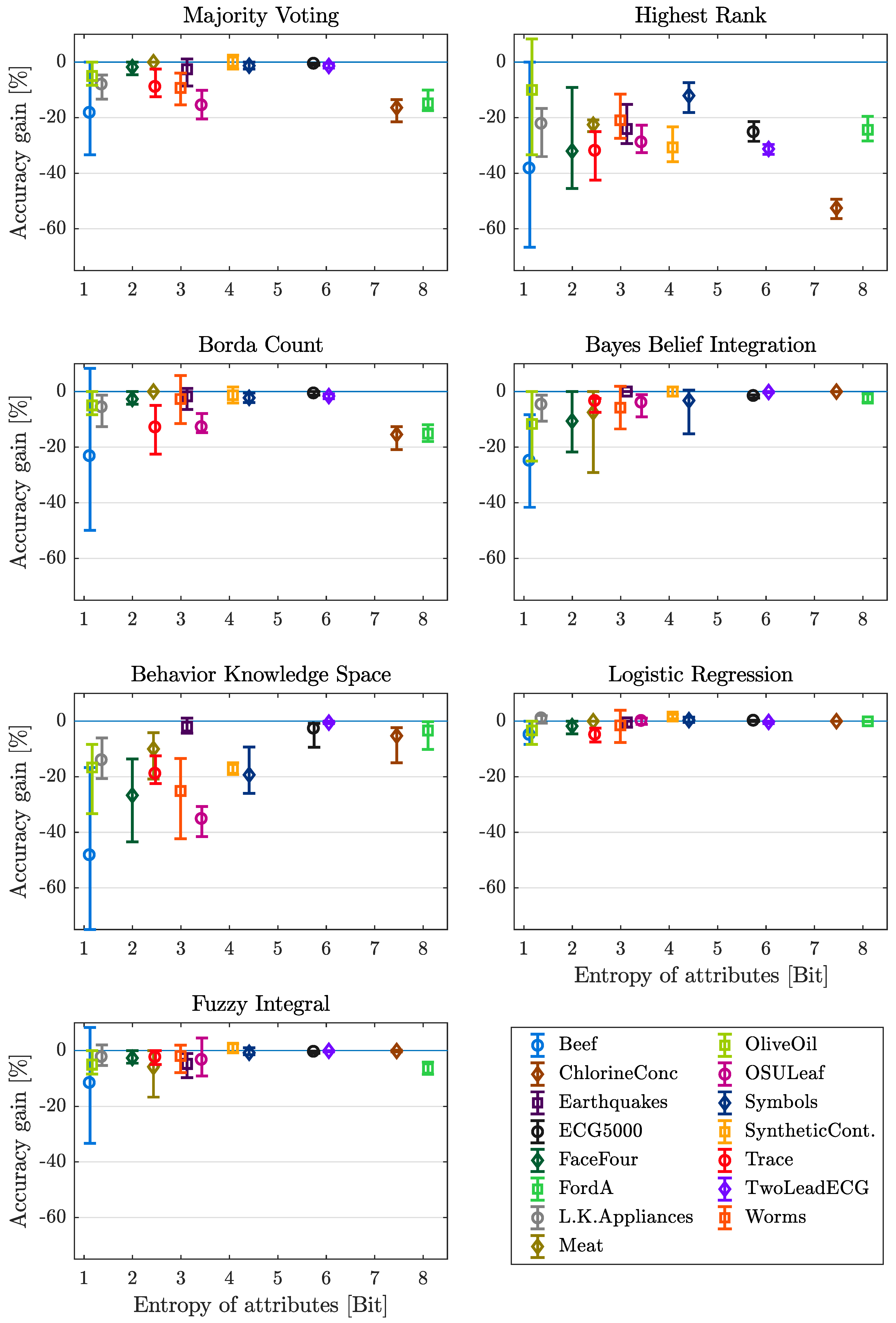

4.3.4. Entropy of Attributes

Lessons Learned

4.4. Are Fusion Methods Improving the Overall Performance? Conclusions from the Numerical Analysis

5. Summary and Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Output Level | Non-Trainable Methods | Trainable Methods |
|---|---|---|
| Abstract | Majority Voting | Bayes Belief Integration, Behavior Knowledge Space |
| Soft | Highest Rank, Borda Count | Logistic Regression, Fuzzy Integral |
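
The two simplest non-trainable entries in the table can be illustrated with a minimal sketch. It uses the common textbook definitions of majority voting and Borda count, which may differ in detail (for example in tie handling) from the implementation used in the study. The function names and the toy labels are hypothetical.

```python
from collections import Counter


def majority_vote(labels):
    """Return the label chosen by most classifiers.

    Ties are resolved in favour of the label seen first (an assumption).
    """
    return Counter(labels).most_common(1)[0][0]


def borda_count(rankings):
    """Fuse per-classifier rankings (best class first) by summing Borda points.

    With n classes, the top-ranked class receives n - 1 points, the next
    n - 2, and so on; the class with the highest total wins.
    """
    scores = {}
    for ranking in rankings:
        n = len(ranking)
        for position, label in enumerate(ranking):
            scores[label] = scores.get(label, 0) + (n - 1 - position)
    return max(scores, key=scores.get)


# Toy example: three classifiers, three classes (labels are made up).
top_choices = ["a", "b", "a"]
rankings = [["a", "b", "c"], ["b", "a", "c"], ["b", "c", "a"]]

fused_label = majority_vote(top_choices)
fused_rank = borda_count(rankings)
```

Neither function has parameters to fit, which is what places both methods in the non-trainable column.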

| Name of Dataset | Samples | Classes | Data Origin |
|---|---|---|---|
| Beef | 60 | 5 | Spectrograph |
| ChlorineConcentration | 4307 | 3 | Simulated |
| Earthquakes | 461 | 2 | Vibrations |
| ECG5000 | 5000 | 5 | ECG measurement |
| FaceFour | 112 | 4 | Images |
| FordA | 4921 | 2 | Engine noise |
| LargeKitchenAppliances | 750 | 3 | Energy consumption |
| Meat | 120 | 3 | Spectrograph |
| OliveOil | 60 | 4 | Spectrograph |
| OSULeaf | 442 | 6 | Images |
| Symbols | 1020 | 6 | Images |
| SyntheticControl | 600 | 6 | Simulated |
| Trace | 200 | 4 | Simulated |
| TwoLeadECG | 1162 | 2 | ECG measurement |
| Worms | 258 | 5 | Motion |

| Data Set/Fusion Method | MV | HR | BC | BBI | BKS | LR | FI |
|---|---|---|---|---|---|---|---|
| Beef | −18.33 | −38.33 | −23.33 | −25.00 | −48.33 | −5.00 | −11.67 |
| ChlorineConcentration | −16.39 | −52.52 | −15.46 | −0.02 | −5.27 | 0.00 | −0.14 |
| Earthquakes | −2.59 | −24.07 | −1.73 | 0.00 | −1.95 | −0.43 | −4.76 |
| ECG5000 | −0.66 | −25.30 | −0.76 | −1.68 | −2.78 | 0.08 | −0.52 |
| FaceFour | −1.78 | −32.02 | −2.69 | −10.63 | −26.68 | −1.78 | −2.69 |
| FordA | −14.83 | −24.36 | −15.14 | −2.48 | −3.35 | −0.06 | −6.38 |
| LargeKitchenAppliances | −8.13 | −22.27 | −5.73 | −4.80 | −14.13 | 0.93 | −2.40 |
| Meat | 0.00 | −22.50 | 0.00 | −7.50 | −10.00 | 0.00 | −5.83 |
| OliveOil | −5.00 | −10.00 | −5.00 | −11.67 | −16.67 | −3.33 | −5.00 |
| OSULeaf | −15.63 | −28.95 | −12.89 | −4.08 | −35.28 | 0.00 | −3.42 |
| Symbols | −1.27 | −12.06 | −2.25 | −3.24 | −19.31 | 0.39 | −0.78 |
| SyntheticControl | 0.17 | −30.67 | −1.33 | 0.00 | −17.17 | 1.67 | 1.00 |
| Trace | −9.00 | −32.00 | −13.00 | −3.50 | −19.00 | −5.00 | −2.50 |
| TwoLeadECG | −1.38 | −31.24 | −1.63 | −0.17 | −0.52 | −0.26 | −0.09 |
| Worms | −9.28 | −20.94 | −2.71 | −5.81 | −25.13 | −1.52 | −1.95 |
| Mean percentage | −6.94 | −27.15 | −6.91 | −5.37 | −16.37 | −0.95 | −3.14 |
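
The "Mean percentage" row can be checked by hand. The sketch below recomputes it for the MV and LR columns, using the per-data-set values copied from the table, and also counts how often each method shows a positive entry. Reading the entries as accuracy differences relative to the best individual classifier is an assumption based on the "Overall performance" statement in the lessons-learned table; the helper name `column_summary` is hypothetical.

```python
from statistics import mean

# Values copied from the MV and LR columns of the table, in row order
# (Beef ... Worms). The "Mean percentage" row is deliberately excluded.
differences = {
    "MV": [-18.33, -16.39, -2.59, -0.66, -1.78, -14.83, -8.13, 0.00,
           -5.00, -15.63, -1.27, 0.17, -9.00, -1.38, -9.28],
    "LR": [-5.00, 0.00, -0.43, 0.08, -1.78, -0.06, 0.93, 0.00,
           -3.33, 0.00, 0.39, 1.67, -5.00, -0.26, -1.52],
}


def column_summary(values):
    """Return (mean rounded to two decimals, number of positive entries)."""
    return round(mean(values), 2), sum(v > 0 for v in values)


summary = {method: column_summary(vals) for method, vals in differences.items()}
# summary["MV"] -> (-6.94, 1), summary["LR"] -> (-0.95, 4)
```

Both recomputed means agree with the table's last row. Under the stated reading, MV has a positive entry on only one of the 15 data sets and LR on four.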

| Characteristic | Lessons Learned |
|---|---|
| Overall performance | In most cases, fusion leads to a deterioration in accuracy compared to the best individual classifier. Among the considered data sets and fusion methods, LR yields the best results and HR the worst. |
| Classifier output level | The level of classifier output has no significant influence on the fusion performance. |
| Use of classifier output | Class-indifferent methods perform slightly better than class-conscious methods. |
| Necessity of training | Trainable methods perform slightly better than non-trainable methods. |
| Number of classes | For most fusion methods, an increasing number of classes (up to 5 classes) leads to decreasing performance. For a small number of classes, BBI, BKS, LR, and FI are suitable; for a higher number of classes, only LR and FI are recommended. |
| Number of samples | All trainable methods show increasing performance with an increasing number of samples. If a large amount of data is available, trainable methods should be preferred; if only a small number of samples can be used, LR or FI should be applied. |
| Entropy of classes | An increasing entropy of classes leads to decreased performance for most fusion methods, although the information content is higher and the classes are more evenly distributed when the entropy of classes is high. For a small entropy of classes, only LR and FI show good performance; for data with higher entropy of classes, HR is the only method that is not recommended. |
| Entropy of attributes | Performance increases with increasing entropy of attributes, because more information can be extracted from data sets with a high entropy of attributes. For data with low entropy of attributes, only LR should be used as the fusion method. For data with higher entropy of attributes, LR as well as BBI, BKS, and FI can be recommended. |
| Sensitivity to all data characteristics | The results of the LR fusion method are the least sensitive to changes in the data characteristics, while BKS is the most sensitive. |
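
The "Entropy of classes" row states that a high entropy of classes corresponds to more evenly distributed classes. A minimal sketch of that relationship follows, assuming the measure is the Shannon entropy of the class frequencies with a base-2 logarithm. The function name and the class counts are hypothetical and are not taken from the benchmark data sets.

```python
import math


def class_entropy(class_counts):
    """Shannon entropy (in bits) of a class distribution given as counts.

    Assumes H = -sum(p_i * log2(p_i)) over the relative class frequencies
    p_i; empty classes contribute nothing.
    """
    total = sum(class_counts)
    probabilities = [count / total for count in class_counts if count > 0]
    return -sum(p * math.log2(p) for p in probabilities)


# Made-up class counts for illustration only.
balanced_two = class_entropy([50, 50])           # evenly split, 2 classes
balanced_four = class_entropy([25, 25, 25, 25])  # evenly split, 4 classes
skewed_two = class_entropy([90, 10])             # one dominant class
```

Under this assumption, the evenly split two-class case reaches the maximum of 1 bit, the evenly split four-class case reaches 2 bits, and the skewed case falls well below 1 bit. The measure therefore grows both with class balance and with the number of classes.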

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Rothe, S.; Kudszus, B.; Söffker, D. Does Classifier Fusion Improve the Overall Performance? Numerical Analysis of Data and Fusion Method Characteristics Influencing Classifier Fusion Performance. Entropy 2019, 21, 866. https://doi.org/10.3390/e21090866
