Abstract

Beimel et al. in CCC ’12 put forward a paradigm for constructing Private Information Retrieval (PIR) schemes, capturing several previous constructions for servers. A key component in the paradigm, applicable to three-server PIR, is a share conversion scheme from corresponding linear three-party secret sharing schemes with respect to a certain type of “modified universal” relation. In a useful particular instantiation of the paradigm, they used a share conversion from -CNF over to three-additive sharing over for primes where and . The share conversion is with respect to the modified universal relation . They reduced the question of whether a suitable share conversion exists for a triple to the (in)solvability of a certain linear system over . Assuming a solution exists, they also provided an efficient (in ) construction of such a sharing scheme. They proved a suitable conversion exists for several triples of small numbers using a computer program; in particular, yielded the three-server PIR with the best communication complexity at the time. This approach quickly becomes infeasible as the resulting matrix is of size . In this work, we prove that the solvability condition holds for an infinite family of ’s, answering an open question of Beimel et al. Concretely, we prove that if and , then a conversion of the required form exists. We leave the full characterization of such triples, with potential applications to PIR complexity, to future work. Although larger (particularly with ) triples do not yield improved three-server PIR communication complexity via BIKO’s construction, a richer family of PIR protocols we obtain by plugging in our share conversions might have useful properties for other applications. Moreover, we hope that the analytic techniques we developed for understanding the relevant matrices will help to determine whether a share conversion as above exists for , where m is a product of more than two (say three) distinct primes.
The general BIKO paradigm generalizes to work for such ’s. Furthermore, the linear condition in Beimel et al. generalizes to m’s that are products of more than two primes, so our hope is somewhat justified. If such a conversion does exist, plugging it into BIKO’s construction would lead to a major improvement to the state of the art of three-server PIR communication complexity (reducing Communication Complexity (CC) in correspondence with certain matching vector families).

1. Introduction

A Private Information Retrieval (PIR) protocol [1] is a protocol that allows a client to retrieve the i-th bit of a database, which is held by two or more servers, each holding a copy of the database, without exposing information about i to any single server (assuming the servers do not collaborate). In the protocol specification, the servers do not communicate with each other. The main complexity measure to optimize in this setting is the communication complexity between the client and the servers. In the single-server setting, the Communication Complexity (CC) is provably very high: the whole database needs to be communicated. In the computational setting [2], communication-efficient single-server PIR is largely solved, with essentially optimal CC [3]. In this work, we focus on information theoretic (in fact perfect) PIR protocols. See [4] and the references within for additional motivation for the study of information theoretic PIR.

All known PIR protocols only use one round of communication (although it is not part of the definition of PIR), so we will only consider this setting. In this setting, the client sends a query to each server and receives an answer in return. PIR is a special case of secure Multi-Party Computation (MPC), which is a very general and useful cryptographic primitive, allowing a number of parties to compute a function f over their inputs while keeping that input private from an adversary that may corrupt certain subsets of the parties (to the extent allowed by knowing the output of f) [5,6]. The PIR setting is useful in its own right, as a minimalistic useful client-server setting of MPC, where the goal is to minimize communication complexity, potentially minimizing the overhead on the client, which may be much weaker than the server.
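To make the one-round query/answer structure concrete, the following is a minimal sketch of the classic linear two-server PIR in which each query is an n-bit vector. It is only an illustration of the setting: it is not the protocol studied in this paper, its communication is linear in the database size, and the function names are our own.

```python
import secrets

def make_queries(n, i):
    """Client: two n-bit query vectors whose XOR is the unit vector e_i."""
    q1 = [secrets.randbelow(2) for _ in range(n)]  # uniformly random subset
    q2 = list(q1)
    q2[i] ^= 1                                     # differs from q1 only at position i
    return q1, q2

def answer(db, q):
    """Server: XOR of the database bits selected by the query vector."""
    a = 0
    for bit, sel in zip(db, q):
        a ^= bit & sel
    return a

def reconstruct(a1, a2):
    """Client: the two answers differ exactly by the i-th database bit."""
    return a1 ^ a2
```

Each query on its own is a uniformly random bit vector, so a single server learns nothing about i, while the two one-bit answers suffice for reconstruction.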

In [4], the authors proposed the following approach to constructing PIR protocols, which captures some of the previous protocols for three servers or more, and put forward a new three-server PIR protocol, with the best known asymptotic communication complexity to date.

Let us describe their general framework, for a k-server PIR.

1.1. BIKO’s Framework for k-Server PIR

The framework uses two key building blocks. One is a pair of k-party linear secret sharing schemes over abelian rings , respectively. The pair is also equipped with a share conversion scheme from with respect to some “useful” relation . That is, for a value s shared according to , the scheme allows locally (performing a computation on each share separately) computing a sharing of a value o according to , such that holds (note that this is generally non-trivial, as the conversion is performed locally on each share, without knowing anything about the other shares or the randomness used to generate the sharing).

In [4], used small (constant) finite rings. The notion of share conversion employed in [4] generalized [7]’s work on locally converting from the arbitrary linear secret-sharing schemes without changing the secret, by allowing an arbitrary relation between the secrets and by allowing to be linear over different rings. The second building block is an encoding of the inputs as a longer element . The PIR protocol has the following structure:

- The client “encodes” the input via for a quite large, but not very large from a certain code .

- Every bit is shared via . The client sends each share to server .

- The servers are able to evaluate locally any shallow circuit from a certain set roughly as follows. At the bottom level, we use the linearity of to evaluate a sequence of gates taking linear combinations of the ’s over . Let y denote the resulting shared values vector. Apply the share conversion to the output of each of the ’s to obtain a converted vector of values over . Finally, locally evaluate some linear combination of the elements , using the linearity of . Each server sends its resulting share to the client. The particular circuit to evaluate is that of an “encoding” of their database, in turn viewed as a function .

- The client reconstructs the output value from the obtained shares, using ’s reconstruction procedure. It then decodes from (the decoding procedure takes only as input; it does not use x or the client’s randomness).

Note that the “privacy” property of takes care of keeping the client’s input private from any single server. It is not known how, and likely impossible, to devise a share conversion scheme for a relation that is sufficiently useful to compute locally all functions following the framework suggested above, in a way that encodes every . Therefore, to encode all functions , BIKO encodes the inputs x and function using a larger parameter . To gain in communication complexity, the code is carefully chosen so that the resulting family implemented by the above protocol is as large as possible (relative to l). More precisely, its VC dimension is at least , allowing one to implement any Boolean function on . On the other hand, we must be able to match it with a relation R for which a share conversion exists. (Recall that the VC dimension captures the ability of a set of functions to “encode” any function by restriction to a subset of size of values. Here, D is typically larger than . By Sauer’s lemma, the VC dimension is very closely related to the size of the family .) There is a tradeoff between the share conversions we are able to devise and the complexity of . The larger in the above scheme is, the smaller l that can be used for the encoding, and the lower the communication complexity of the resulting PIR protocol. Note that the framework yields constructions where the communication complexity of bits is dominated by the client’s message length and only constant-length server replies. On the other hand, for more complex , one would need share conversion schemes for “trickier” relations, which are harder to come by.

Only a few families of instantiations of the framework are known, with [4]’s particular instantiation for three-server PIR as one example. Other instantiations are implicit in some previous PIR protocols; see Section 2, or [4] for additional examples and more intuition.

1.1.1. BIKO’s New Family of Three-Server PIR

In their new three-server PIR, the work in [4] instantiated the components of the above framework using a certain (family of) rings , for which suitable encodings and share conversion exist. Details follow. The rings are parameterized by three numbers , where are distinct primes. Let us denote . The various components are instantiated as follows:

- is the (2,3)-CNF secret sharing scheme for the ring , for certain , where are distinct primes. is the three-additive secret sharing over the ring , for some .

- Let . The input is encoded as an element u of an S-Matching Vector (MV) family of size at least over [8]. Briefly, for , such an S-MV family is a sequence of vectors , where for all i, , and for all , . In particular, [8] demonstrates that an S-MV family of any VC dimension exists for all where are distinct primes for S as small as , namely the set of all elements in that are zero or one modulo each of the ’s, except for zero. In particular, the vector’s length for an instance corresponding to VC dimension is .

- A share conversion between and for a relation is obtained for certain triples . Informally, this relation maps to zero and zero to some non-zero value in . This type of share conversion scheme is another novel technical contribution of [4] (and the focus of our work).
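The matching-vector condition used in the list above can be checked mechanically. The sketch below assumes the standard convention from the MV-family literature (the inner product of a matched pair is zero modulo m, and the inner product of every unmatched pair lies in S); the helper names and the tiny example family are ours and are purely illustrative.

```python
def inner(u, v, m):
    """Inner product of two integer vectors modulo m."""
    return sum(a * b for a, b in zip(u, v)) % m

def canonical_set(m, primes):
    """Nonzero elements of Z_m that are 0 or 1 modulo each given prime factor."""
    return {x for x in range(1, m) if all(x % p in (0, 1) for p in primes)}

def is_matching_vector_family(us, vs, m, S):
    """Assumed convention: <u_i, v_i> = 0 and <u_i, v_j> in S for all i != j."""
    if len(us) != len(vs):
        return False
    for i, u in enumerate(us):
        for j, v in enumerate(vs):
            ip = inner(u, v, m)
            if i == j and ip != 0:
                return False
            if i != j and ip not in S:
                return False
    return True
```

For instance, with m = 6 the helper `canonical_set(6, [2, 3])` yields the three-element set {1, 3, 4}, which matches the description of the small set S above.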

The complete BIKO construction is roughly as follows:

- The client sends an encoding of its input , which belongs to the MV-family (here, can be achieved). The servers locally evaluate the following circuit: using the following “noisy” procedure:

- Generate the vector of values where . This evaluation uses the linearity of CNF and produces a local -CNF over the share of each value .

- Apply share conversion from to as specified above on each , obtaining a vector of ’s, shared according to .

- Locally evaluate , and then, send () shares to the client. The client outputs zero if this value is zero, and one otherwise.

It is not hard to see that the above construction always produces the correct value. In particular, by the definition of , only (the conversion of) contributes a non-zero value to .

Theorem 1

([4], informal). Assume there exists a share conversion scheme from -CNF over and three-additive over for some β, where is as above. Then, there exists a three-server PIR with communication complexity , where . The best asymptotic communication complexity is obtained by setting , using [8]’s -MV family over over with and a share conversion with respect to for . In particular, the servers’ messages are of length .

Intuitively, having S-MV families for a “small” S as above facilitates the construction of share conversion schemes as we needed for , since a small S imposes fewer constraints on the pairs in the relation.

To conclude, it follows from Theorem 1 that to obtain three-server PIR with sub-polynomial (in database size) CC, it suffices to design a share conversion for from to over groups corresponding to any triple of parameters as described above. Another useful contribution of [4] is reducing the question of whether such a conversion exists (for some ) to a system of equations and inequalities over ; see the following sections for more details on the usefulness of this characterization (a solution to the system corresponds to the share conversion scheme).

1.1.2. On the Choice of Input and Output Schemes

In retrospect, trying to convert from CNF to additive is a natural choice. For one, the CNF scheme is known as the most redundant secret sharing scheme and additive is the least redundant one [7]. More specifically, CNF sharing is convertible to any other linear scheme over the same field for the same access structure, or one contained in it, with respect to the identity relation. This implies that for any relation, if share conversion between two linear schemes exists for that relation, it must exist for CNF to additive. Here the more standard linearity over fields is considered, and the same field is used for both schemes. Still, for the more general notion of linearity over rings, and allowing different rings for the two schemes, this provides quite strong evidence that starting with CNF and trying to convert to additive is a good starting point. As to the choice of the structure of the particular over which the conversion is defined, the following are some of [4]’s considerations for their choice. One observation is that trying to convert from, say, to would require finding a solution to a system of inequalities over for a fixed p. This problem is generally NP-complete, which is likely to make the problem hard to analyze, even in this special case. Thus, the authors extend the search to allow conversion to three-additive over some extension field . As is constant, it does not have much effect on the share complexity of the resulting PIR protocol.

Using composite sizes for both and would lead to a greatly improved VC dimension of , with respect to the canonical relation , where . In fact, the that can be locally evaluated over is universal, resulting in near-optimal communication complexity. However, [4] have proven this type of conversion does not exist, indicating that for smaller S for such could also be hard to find.

1.2. Our Contribution

As described above, the contribution of [4] was two-fold. First, they put forward a useful framework for designing PIR protocols, capturing some of the best-known three-server (or more) PIR protocols. Second, they put forward an instantiation of the framework, which reduces the question of the existence of a three-server PIR protocol to the existence of a share conversion for certain parameters and certain linear sharing schemes over abelian rings determined by the parameters. They further reduced the question of the existence of a share conversion as above for parameters to the question of whether a corresponding system of linear equations is solvable over . This system in turn is not solvable over some extension field iff a certain system of both equalities and inequalities over is solvable over some field .

While the solvability of a system can be verified efficiently for a concrete instance, it does not provide a simple condition for characterizing triples for which solutions exist. More concretely, even the question of whether an infinite set of such triples exists remains open. We resolve this question in the affirmative, proving the following.

Theorem 2.

Let where are primes. Then, a share conversion from -CNF over to three-additive over exists for some .

In a nutshell, our goal is to study the solvability of the particular system from [4] corresponding to a given . Our main tool is a clever form of elimination operations on the (particular) matrix , so that at each step, the intermediate system is solvable iff is. The special type of elimination we develop is useful here as it allows keeping our particular system relatively simple at every step. This is as opposed to using standard Gaussian elimination, for instance, which would have made the resulting system quite messy. The operations are largely oblivious of the particular value of until a very late point in the game. The proof consists of a small number of our elimination steps. At a high level, we first perform a few such steps, after which the matrix becomes quite complicated (at the end of Section 4.2). Then, we perform a change of basis (Section 4.5) to facilitate another convenient application of the step. At this point, we are ready to complete the proof by directly proving that the system is not solvable (and thus, a share conversion exists).

1.2.1. On the Potential Usefulness of Our Result for PIR

In this work, we identified a certain infinite set of parameters for which a share conversion as required in Theorem 1 exists. This result itself does not appear to yield improved three-server PIR protocols by instantiating the share conversion with the newly-found parameters via Theorem 1. Indeed, increasing beyond the minimal possible (which was already known) does not seem to help. However, Theorem 1 generalizes to m’s, which are products of a larger number of primes. If a share conversion for derived from such exists, it can be paired with an -MV family (which is also known to exist from [8]) with significantly improved (over m a product of two primes) VC dimension, to obtain an improved three-server PIR seamlessly. Specifically for , an MV family with VC dimension in for exists.

Therefore, it remains to design a corresponding share conversion scheme (or prove it impossible to rule out this direction). Furthermore, the linear algebraic characterization of the existence of such sharing schemes remains the same. Therefore, hopefully, the analytic techniques we developed here for the case where m is a product of two primes could help understand the case of a larger number of primes.

1.2.2. Road Map

In Section 2, we refer to some related work on PIR. In Section 3, we include some of the terminology and preliminary results from [4], which is our starting point. In Section 4, we present our main result, which is broken into subsections as follows. As explained above, we start with the reduction of the problem by [4] to verify whether a certain matrix-vector pair , spans b. We perform a series of elimination steps on the matrix to bring it to a simpler form so that spans iff spans b. All elimination steps are of the same general form. Section 4.1 formalizes this form as a certain lemma. Section 4.2 outlines an initial sequence of applications of that lemma. In Section 4.5, we perform a change of basis to help facilitate further use of the lemma. In Section 4.6, we indeed apply the lemma once again. Finally, in Section 4.7, we obtain a simple enough matrix for which we are able to prove spans directly for the proper choice of parameters, proving our positive result.

2. Related Work

2.1. Schemes with Polynomial CC

As stated in the Introduction, our work is a followup on a particular approach to constructing PIR protocols [1], focusing on servers (almost the hardest case). We will survey some of the most relevant prior works on PIR (omitting numerous others). Already in [1], the authors suggested a non-trivial solution to the hardest two-server case, with communication complexity , and more generally, for servers. Building on a series of prior improvements, the work in [9] put forward protocols with CC for large k. Their result also improved upon the state-of-the-art at the time for small values of k, in particular achieving CC of for , improving upon the best previously-known CC of .

Work Falling in the Framework of BIKO

In [9], they restated some of the previous results in a more arithmetic language, in terms of polynomials. Furthermore, they considered a certain encoding of the inputs and element-wise secret sharing the encoding, which is somewhat close to the BIKO framework.

Particularly interesting for our purposes is their presentation of a “toy” protocol achieving CC of from earlier literature reformulated in [9], which constituted one of the building blocks of the construction of [9]. That protocol was almost an instance of the BIKO framework as sketched in the Introduction, and it is instructive to consider the differences (the full-fledged result of [9] used additional ideas, and we will not go over it here for the sake of simplicity).

- The client “encodes” its input via where by mapping the input to a vector with exactly two ones and zeroes elsewhere.

- Every bit is shared via , which is -CNF over .

- The servers represent their database as a degree-two polynomial in (where all monomials are of degree exactly two). To evaluate a polynomial: proceed as follows:

- (a) At the bottom level, perform a two-to-one share conversion for evaluating each monomial, where the output for gate is a three-additive sharing of the result . In some more detail, let denote the additive shares produced in Step 1 of the CNF-sharing. The computation is made possible since , which needs to be computed; for every share-monomial , at least one of the servers does not miss any shares in it. Each server outputs the sum of the monomials it knows as its new share.

- (b) Using the linearity of the three-additive scheme, the servers compute a three-additive sharing , based on the shares of the individual monomial evaluations .

Each server sends its resulting share to the client.

- The client reconstructs the output value from the obtained shares, as in BIKO.
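The two-to-one conversion in step (a) above can be sketched as follows. This is an illustrative toy, assuming sharing over the integers modulo a parameter `m`, parties indexed 0 to 2, and a hypothetical rule (`owner`) that assigns each share-monomial to the lowest-indexed server holding both of its factors; none of these choices is taken from [9].

```python
import secrets

def additive_share(s, m):
    """Three-additive sharing of s over Z_m."""
    r0, r1 = secrets.randbelow(m), secrets.randbelow(m)
    return [r0, r1, (s - r0 - r1) % m]

def cnf_share(s, m):
    """(2,3)-CNF: party k receives every additive part except part k."""
    parts = additive_share(s, m)
    return [{i: parts[i] for i in range(3) if i != k} for k in range(3)]

def owner(i, j):
    """Lowest-indexed party that holds both part i and part j (assumed rule)."""
    return min(k for k in range(3) if k != i and k != j)

def local_product_share(k, x_share, y_share, m):
    """Party k sums the share-monomials x_i * y_j that are assigned to it."""
    total = 0
    for i, xi in x_share.items():
        for j, yj in y_share.items():
            if owner(i, j) == k:
                total += xi * yj
    return total % m
```

Since every pair (i, j) has exactly one owner, and that owner holds both factors, the three local outputs form a three-additive sharing of the product x·y.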

This framework differs from BIKO in a few ways. First, the structure of the circuit locally evaluated is different. In the above example, “many-to-one” rather than “one-to-one” secret sharing is used at the bottom level. Then, the linearity of is employed on the upper level. In BIKO, first, the linearity of is used to evaluate a vector of linear combinations, then a one-to-one share conversion from is used at the middle level, then again, the linearity of is used at the top gate. Besides the different structure of the circuit, using many-to-one share conversion is the main conceptual change. It employs the extra property of -CNF, which allows it to evaluate degree-two polynomials, rather than just linear functions. In the evaluation process, the output is already converted to the less redundant three-additive sharing. The relation the share conversion respects is just the evaluation of the monomial: iff . Conceptually, local evaluation of linear functions as used in BIKO is in fact already a many-to-one share conversion from a linear scheme to itself, evaluating a relation , which is satisfied iff for a certain linear function ℓ.

2.2. Schemes with Sub-Polynomial CC

The first three-server PIR protocol with (unconditionally) sub-polynomial (in ) CC was put forward by [10]. It falls precisely into BIKO’s framework, using a share conversion from the so-called -modified Shamir secret over for where are odd primes to three-additive over the field where p is some prime, such that m divides .

To get to three, rather than four servers, some additional properties of were in fact required. The share conversion is with respect to the relation (actually, a slightly stricter relation, even). Concretely, the work in [10] found an example of such groups as above obtained from , , having an additional useful property, which allowed going down from four to three servers via computer-aided search. We note that a share conversion from -CNF to three-additive over that pair of groups also exists since -CNF can be converted to any linear scheme for an access structure containing a two-threshold (including modified -Shamir) [7].

The encoding used is via a -MV family over , as in [4]. The evaluation points in the Shamir scheme used are tailored to in the corresponding MV family. It achieves a CC of , later improved to by BIKO’s construction.

Qualitatively, the result of [10], preceded by [11] in terms of using a similar idea, and most later constructions greatly improved the CC relative to earlier constructions such as [9] by using MV-codes rather than low-degree polynomial codes. The former codes had a surprisingly large rate. Indeed, looking at MV-families from the perspective of [12], these are also polynomial codes, over a basis of monomials corresponding to an MV family, rather than low-degree monomials.

All the above examples generalize to larger k, with somewhat improved complexity; we focused on the (hardest) here for simplicity.

Two-Server PIR

In a breakthrough result, the work in [13] matched the CC of two-server PIR with the CC of the best-known three-server PIR, improving from the best previously-known to . Their work cleverly combined and extended several non-trivial ideas, both new and ones that appeared in previous work in some form. In a nutshell, one idea is to encode inputs via MV codes, where the operations on the vectors are done “in the exponent”, resulting in a variant of MV codes as polynomials, as defined in [12] and implicitly used in [10]. Another idea is to output both evaluations of these “exponentiated” polynomials and the vector of their partial derivatives, to yield more information to facilitate reconstruction given only two servers.

As for casting their result into the BIKO framework (we believe it is a meaningful generalization thereof, as we describe below):

- The client encodes its input via a vector in an -MV family (with l about as explained above).

- Every bit is shared via , which is the -modified Shamir scheme over . The evaluation points are zero and one. We note that the choice of m is not as constrained as in the construction of [10], and many other pairs would work as well. This holds as the purpose of the polynomial involved is somewhat different.

- Let denote the ring . Let , which has multiplicative degree six in . The servers are able to evaluate locally the encoded database: roughly as follows:

- (a) At the bottom level, use the linearity of to evaluate locally each .

- (b) Convert each of the ’s into a -sharing scheme over a ring , obtaining a vector of ’s. The conversion is a many-to-one conversion with respect to a relation R over the entire input vector, the current and , such that it outputs if is in , and is otherwise undefined.

- (c) Use the “almost linearity” of over our particular subset of values to evaluate . Here, “almost linearity” means that to evaluate a sum of shared secrets, we add some of the coordinates and copy other coordinates.

- The client reconstructs the output value from the obtained shares, according to (the reconstruction function is of degree-two.) In a nutshell, the coefficient is the only one determining the free coefficient of a degree-six univariate polynomial obtained from restricting F to the line , where z is a random vector. The reconstruction procedure uses the shared evaluations as derivatives to compute this free coefficient, which is non-zero iff is one. In the original protocol description of [13], the client used the input for reconstructing the output, which is not consistent with share conversion, where the input is not required for reconstruction. We restate the above protocol by letting the servers “play back” the original shares to the client to stay consistent with the BIKO share conversion framework.

To summarize, the construction falls into an extended BIKO framework, where the middle-layer share conversions are many-to-one rather than one-to-one. Furthermore, the scheme is non-linear and is defined over a non-constant domain. It is important to observe that the relations used for the conversions are independent of the database function F itself, but only depend on its size. The locally-evaluated relations are as small as , similarly to BIKO. One potential difficulty with using such an extended design framework is in verifying whether a many-to-one share conversion exists, or even one-to-one for a large share domain of , as we have here.

One question that remains open in the two-server setting is to make the server’s output size constant as in [4,10] for three servers.

3. Preliminaries

3.1. Secret Sharing Schemes

For a set and sequence of shares , we denote by the sequence of shares . A secret sharing scheme for n parties implements an access structure specified by a monotone function specifying the so-called qualified sets of parties that recover the secret (preimages of one), while other sets learn nothing about the secret (in this paper, we consider the standard perfect setting). We refer to such a scheme as an n-party secret sharing scheme. More formally, an n-party secret sharing scheme is a randomized mapping:

where S is a finite domain of secrets and R is a randomness set, while are finite share domains such that the following holds:

- Correctness: For all with , referred to as qualified sets, and all , we have: We use sets and their characteristic vectors interchangeably.

- Privacy: For all with and all , the following distributions are equal.

Access structures where the set of qualified subsets is exactly those of size some t or more, are called threshold access structures.

We say that a secret sharing scheme is linear over some ring G, generalizing the standard notion of linearity over a field, if for some for some finite Abelian ring G. Each share consists of one or more linear functions of the form (Observe that unlike linear schemes over a field, such a scheme does not always have perfect correctness for all secrets , but this is not required in our context. We will require perfect privacy though.) A useful property of such schemes is that they allow locally evaluating linear functions of the shares. That is, for a pair of sharings of some , respectively, (performing addition coordinate-wise on each group element in the share vector) is a sharing of . Similarly, for , is a sharing of .

See [14] for a survey on secret sharing.

Let us recall the well-known schemes -CNF and 3-additive for completeness. The schemes are more general, -CNF is a special case of -CNF and 3-additive is a special case of -DNF with . Here we explicitly recall the definitions only of the special cases we need.

A 3-additive secret sharing scheme over a ring G is a randomized mapping , where , such that . It is not hard to see that indeed implements an access structure where only the set is qualified.

A -CNF over a ring G is defined by a randomized mapping for , . It is defined as follows

- Calculate a three-additive sharing .

- Output shares where equals the two elements of the tuple from Step 1 that are not at its index. For example, .

It is not hard to see that -CNF is indeed a 2-threshold scheme. It is also not hard to see that the above schemes are linear over their respective rings.
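The two schemes just recalled, together with the local linearity property, can be sketched in a few lines. The sketch assumes the ring is the integers modulo a parameter `m` and indexes parties 0 to 2; the function names are ours.

```python
import secrets

def additive_share(s, m):
    """Three-additive sharing: three ring elements that sum to s modulo m."""
    r0, r1 = secrets.randbelow(m), secrets.randbelow(m)
    return [r0, r1, (s - r0 - r1) % m]

def cnf_share(s, m):
    """(2,3)-CNF: party k gets the two additive parts not at index k."""
    parts = additive_share(s, m)
    return [{i: parts[i] for i in range(3) if i != k} for k in range(3)]

def cnf_reconstruct(share_a, share_b, m):
    """Any two CNF shares jointly contain all three additive parts."""
    merged = {**share_a, **share_b}
    return sum(merged.values()) % m

def cnf_add(sharing1, sharing2, m):
    """Linearity: each party adds its parts index-wise, without interaction."""
    return [{i: (p[i] + q[i]) % m for i in p} for p, q in zip(sharing1, sharing2)]
```

A single CNF share misses one uniformly distributed additive part, which is why one party alone learns nothing about the secret, while any two parties recover it.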

A -modified Shamir scheme over a ring G is defined by a randomized mapping that outputs shares , where is a distinct constant ‘evaluation point’.


3.2. Share Conversion

We recall the definition of (generalized) share conversion schemes as considered in our paper. Our definition is exactly the definition in [4], in turn adopted from previous work.

Definition 1

([4]). Let and be two n-party secret-sharing schemes over the domains of secrets and , respectively, and let be a relation such that, for every , there exists at least one such that . A share conversion scheme from to with respect to relation C is specified by (deterministic) local conversion functions such that: If is a valid sharing for some secret s in , then is a valid sharing for some secret in such that .

(In [4], they referred to such share conversion schemes as “local” share conversion. As this is the only type we consider here, we will refer to it as simply “share conversion”.)

For a pair of Abelian groups (when are rings, we consider as groups with respect to the operation of the rings), we define the relation as in [4] ( will be clear from the context, so we exclude the groups from the definition of to simplify notation).

Definition 2

(The relation CS [4]). Let and be finite Abelian groups, and let (when are rings, we will by default refer to the additive groups of the rings in this context). The relation converts to any nonzero and every to . There is no requirement when . Formally,

Given , where are primes and p is a prime, we consider pairs of rings . We denote .
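As an illustration of Definition 2, the following Python sketch tests whether a pair belongs to the relation, following the verbal description in the definition. It assumes that elements of both groups are represented by integers with 0 as the identity; the function name `in_relation` is hypothetical.

```python
def in_relation(s, s_prime, S):
    """Return True iff the pair (s, s_prime) satisfies the relation of Definition 2.

    S is a set of nonzero secrets of the source group.
    """
    if s == 0:
        # The zero secret must be converted to a nonzero value.
        return s_prime != 0
    if s in S:
        # Every secret in S must be converted to zero.
        return s_prime == 0
    # No requirement for the remaining secrets.
    return True
```

Note that every source secret has at least one admissible target value, as required of the relation in Definition 1.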

3.3. Our Starting Point: The Modeling of [4]

In this work, we study the existence of share conversions for three parties with respect to the canonical relation as above, from -CNF to three-additive for various parameters .

Our starting point is the characterization from [4] of triples for which a share conversion with respect to as above exists via a linear-algebraic constraint.

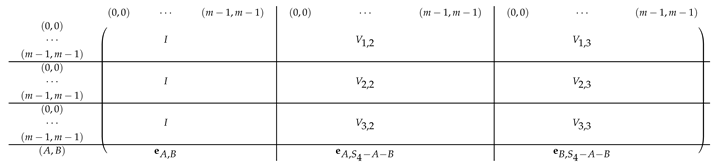

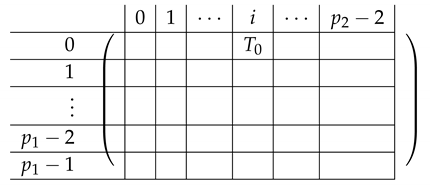

In some more detail, consider as above. A share conversion from -CNF to three-additive exists for iff a certain condition is satisfied by the following matrix over .

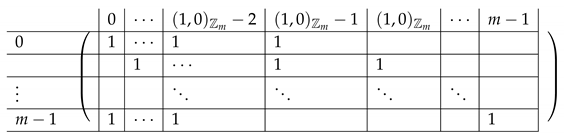

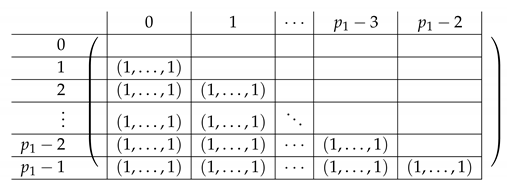

In the matrix , the rows are indexed by triples , corresponding to -CNF sharings of some . Namely, are the (additive) shares generated by in Step 1. The rows corresponding to form . The rows corresponding to form . The columns of are indexed by values in . Intuitively, an index of a column corresponds to share of the -CNF scheme being equal to . Row has ones at three locations: , and zeros elsewhere. That is, there are ones at the columns corresponding to the shares output by corresponding to produced in Step 1 of ’s execution.

We are searching for a vector u whose product with every one of the “equality rows” of the matrix is zero, and whose product with one of the inequality rows is nonzero.

The solution vector u (and thus, the columns of the matrix) is indexed by . The index of an entry corresponds to a CNF-share that equals , and the value at this index is the value in to which share is converted.

Indeed, it is not hard to see that a share conversion scheme exists iff a solution to the system:

exists.

Some basic linear-algebraic observations imply that the above is equivalent to the fact that the rows of do not span some row of over (this simplification matters, as it does not require us to know in advance, if it exists).
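For small parameters, the condition can be checked mechanically. The following Python sketch builds the 0/1 rows described above and tests span membership over a prime field by Gaussian elimination. It is a minimal sketch under assumptions that are not fixed by the text here: parties are numbered 0, 1, 2, the column of a share (x, y) of a given party is located by the hypothetical helper `column_index`, the equality rows are taken to be the sharings of secrets in S and the inequality rows the sharings of 0, and the set of secrets is passed in as a parameter rather than derived.

```python
from itertools import product

def column_index(party, x, y, m):
    """Column of the CNF-share (x, y) held by `party` (0, 1 or 2)."""
    return party * m * m + x * m + y

def sharing_row(a, b, c, m):
    """0/1 row with ones at the three CNF-shares induced by additive shares (a, b, c)."""
    row = [0] * (3 * m * m)
    row[column_index(0, b, c, m)] = 1  # first party holds (b, c)
    row[column_index(1, a, c, m)] = 1  # second party holds (a, c)
    row[column_index(2, a, b, m)] = 1  # third party holds (a, b)
    return row

def rows_for_secrets(secret_set, m):
    """All rows whose additive shares sum (mod m) to a secret in `secret_set`."""
    return [sharing_row(a, b, (s - a - b) % m, m)
            for s in sorted(secret_set)
            for a, b in product(range(m), repeat=2)]

def rank_mod_p(rows, p):
    """Rank over F_p (p prime) by Gaussian elimination."""
    rows = [[x % p for x in r] for r in rows]
    rank = 0
    ncols = len(rows[0]) if rows else 0
    for col in range(ncols):
        pivot = next((i for i in range(rank, len(rows)) if rows[i][col]), None)
        if pivot is None:
            continue
        rows[rank], rows[pivot] = rows[pivot], rows[rank]
        inv = pow(rows[rank][col], -1, p)  # modular inverse (Python 3.8+)
        rows[rank] = [(x * inv) % p for x in rows[rank]]
        for i in range(len(rows)):
            if i != rank and rows[i][col]:
                f = rows[i][col]
                rows[i] = [(x - f * y) % p for x, y in zip(rows[i], rows[rank])]
        rank += 1
    return rank

def spans(rows, v, p):
    """True iff v lies in the F_p-row span of `rows`."""
    return rank_mod_p(rows, p) == rank_mod_p(rows + [v], p)
```

Under these assumptions, the condition for a given triple would be tested by calling `spans(rows_for_secrets(S, m), v, p)` for a row `v` of `rows_for_secrets({0}, m)`; the conversion exists when the answer is negative.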

Furthermore, the work in [4] provided a quantitative lower bound on , depending on the degree difference between and (the latter is not significant towards our goal of just understanding feasibility). Their characterization is summarized in the following theorem.

Theorem 3

(Theorem 4.5 [4]). Let . Then, we have:

- If , then there is no conversion from -CNF sharing over to additive sharing over with respect to , for every .

- If , then there is a conversion from -CNF sharing over to additive sharing over with respect to . Furthermore, in this case, every row v of is not spanned by the rows of .

Moreover, it was proven in [4] that the above is in fact equivalent to having the rows of not span any row of . The latter simplification is a result of certain symmetry existing for the particular relation and secret sharing schemes in question.

Corollary 1.

For every row v of , v is not spanned by the rows of iff there exists some for which a conversion from -CNF sharing over to additive sharing over with respect to exists.

Theorem 3 provides a full characterization via a condition that given can be verified in polynomial time in . More precisely, the size of our matrix is (in fact slightly smaller, at , if working with Corollary 1, but this is insignificant), so verifying the condition amounts to solving a set of linear equations, which naively takes about time, or slightly less using improved algorithms for matrix multiplication; in any case, the running time cannot be better than using generic matrix multiplication algorithms. Thus, the complexity of verification grows very fast with m, becoming essentially infeasible for m circa 50.

In any case, direct verification of the condition on concrete inputs does not answer the following fundamental question.

Question 1 (Informal).

Do there exist infinitely many triples for which a share conversion scheme from -CNF to three-additive secret sharing (with parameters as discussed above) exists?

It was conjectured in [4] that all tuples where and are all odd allow for such a sharing scheme. We answer this question in the affirmative. While it is not clear that our result may be directly useful towards constructing better PIR schemes, our work is a first step towards possibly improving PIR complexity using this direction, in terms of the tools developed. See the discussion in Section 5 for more details.

To resolve this question, we develop an analytic understanding of the condition for the particular type of matrices at hand. Our goal is to simplify the matrices into a more human-understandable form, so we are able to verify directly the linear-algebraic condition for infinitely many parameter triples.

3.4. Some Notation

In this paper, we will consider matrices over some field , typically over a finite field . For matrices with the same number of columns, denotes the matrix comprised by concatenating B below A. For matrices with the same number of rows, we denote by the matrix obtained by concatenating B to the right of A. For entry of a matrix A, we use the standard notation of . More generally, for a matrix , for subsets of rows and of columns, denotes the sub-matrix with rows restricted to R and columns restricted to C (ordered in the original order of rows and columns in A). As special cases, using a single index i instead of R (C) refers to a single row (column). A “·” instead of R (C) stands for ().

We often consider imposing a block structure upon a matrix A. The block structure is specified by a grid defined by a partition of the columns into non-empty sets of consecutive columns and a partition of the rows into non-empty sets of consecutive rows . The matrix A viewed as a block matrix is a matrix where entry is the sub-matrix . We denote the block matrix obtained from A by V (for instance, a matrix named is replaced by ). In a block matrix V, we typically index the matrix by subscripts: denotes (the matrix at) entry of V. For instance, denotes entry in (sub)matrix .

Typically, the ’s and the ’s are of the same size, and start at zero. Sometimes, the sets will not be of the same size (typically, the first or last set will be of a different size than the rest). Furthermore, most generally, may start elsewhere. Additionally, the indices may be consecutive modulo u (v), so one of the sets () may not consist of truly consecutive indices.

Most of the time, index arithmetic will be done modulo the matrices’ number of rows and columns (we will however state this explicitly).
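The notation of this subsection can be mirrored by a few helper functions. The Python sketch below is only illustrative: matrices are represented as lists of rows, indices are 0-based (unlike parts of the text), and the names `stack`, `beside`, `submatrix` and `blocks` are hypothetical.

```python
def stack(A, B):
    """Concatenate B below A (both must have the same number of columns)."""
    assert len(A[0]) == len(B[0])
    return A + B

def beside(A, B):
    """Concatenate B to the right of A (both must have the same number of rows)."""
    assert len(A) == len(B)
    return [ra + rb for ra, rb in zip(A, B)]

def submatrix(A, R, C):
    """Rows R and columns C of A, kept in the original order of A."""
    return [[A[i][j] for j in sorted(C)] for i in sorted(R)]

def blocks(A, row_parts, col_parts):
    """View A as a block matrix: entry (i, j) is the sub-matrix
    on rows row_parts[i] and columns col_parts[j]."""
    return [[submatrix(A, R, C) for C in col_parts] for R in row_parts]
```

Since `submatrix` sorts its index sets, this sketch does not cover partitions whose sets are consecutive only modulo the number of rows or columns.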

4. Our Result

4.1. Starting Point and Main Technical Tool

Starting off with Corollary 1, it suffices to prove the following theorem.

Theorem 4.

Assume , , and . Then, there exists a row v in such that does not span v.

As a corollary from Corollary 1 and Theorem 4, we immediately obtain:

Corollary 2.

Assume , , and . Then, there exists some for which a conversion from -CNF sharing over to additive sharing over with respect to exists.

To prove Theorem 4, we choose any vector v in the , outside of , and prove that does not span v. The particular choice of v we will make is a somewhat convenient choice, but any v will do, so we will fix it later. To this end, we apply carefully-chosen row operations, but in a specific way, so we (as humans) can understand the matrices that result.

Our main technical tool is the following simple lemma.

Lemma 1.

Let A denote a matrix in , and let . Let denote non-empty sets of rows and columns, respectively. obtained from A by a sequence of row operations on A, so that is a basis of , and the rest of the rows in are zero. Then, span iff span .

The proof of the Lemma follows by the fact that any solution to must have zero at indices corresponding to , to obtain zero at the coordinates corresponding to (and the fact that row operations on do not change the solvability of a system ).

We start with and need to resolve the question of whether span v. On a high level, we proceed by applying Lemma 1 several times, thereby reducing the problem to considering a certain submatrix of the original matrix.

4.2. A Few Initial Elimination Sequences

4.2.1. Elimination Step 0

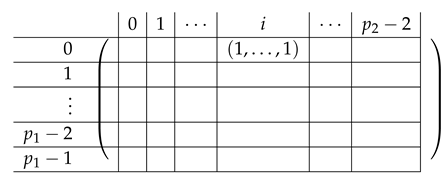

We introduce some more notation we will use along the way. We think of the matrix as divided into blocks of . We denote constants by capital letters (e.g., a secret value , or index ) and running indices by small letters, typically . Row 1 of the block matrix V, is indexed inside by , going over all that constitute an additive sharing of . Here, denotes a single element of , corresponding to its residues modulo and , respectively. We omit the subscript when it is clear from the context whether a pair is in or in . Similarly, rows correspond to , respectively. The rows inside rows in the block matrix are internally indexed by (c is determined by as ), where the ’s are ordered lexicographically (we stress that the particular order is not important for analyzing the rank of the matrix, but it will play some role in creating a matrix that “looks simple” and is easier to understand). The last block is indexed by some to be chosen later; as follows from Corollary 1, any can be chosen.

Column in the block matrix is internally indexed by the CNF-share received by . Column is indexed by (share received by ), and is indexed by (share received by ). As in the case of rows, the values internally indexing a column of V are ordered lexicographically.

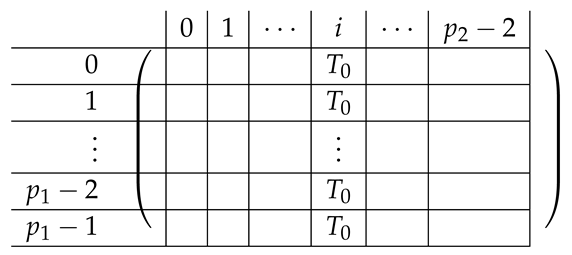

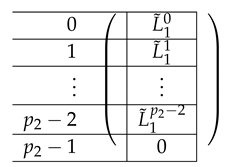

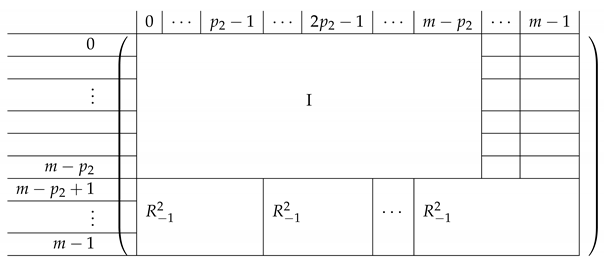

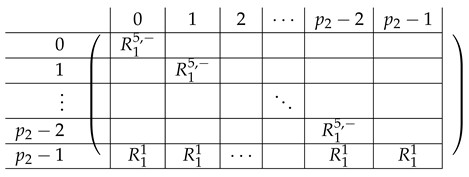

Pictorially, the matrix , has the following general form.

In the above, I denotes the identity matrix, and each indeed equals I. The other ’s for are permutation matrices, and denotes a row vector with one at index , and zeroes elsewhere.

For a fixed , row equals for Block Columns respectively.

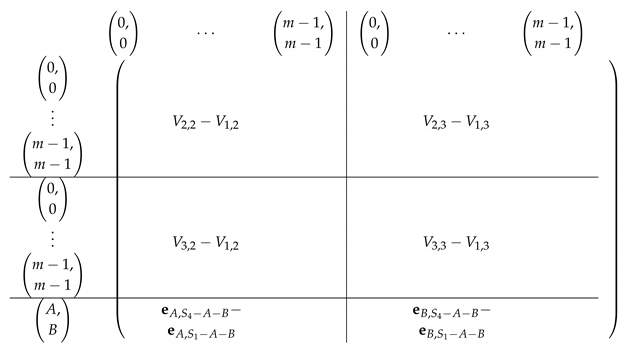

Next, we apply Lemma 1 to with , and ( corresponds to the first column in the block). The sequence of operations to b-zero the columns in is carried out by subtracting from each row (indexed by ) in Blocks the corresponding row (indexed by ) in Block 1. The resulting matrix (of size ) is described in the following.
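The effect of this subtraction can be checked directly on small parameters. The Python sketch below assumes that the first block column is the one indexed by the pair (a, b), which is consistent with the blocks in that column being identity matrices; the secrets used for the pivot block and the other block, as well as the function names, are hypothetical.

```python
def sharing_row(a, b, s, m):
    """Row for additive shares (a, b, c), c = s - a - b (mod m),
    split into three column blocks of length m * m each."""
    c = (s - a - b) % m
    blocks = [[0] * (m * m) for _ in range(3)]
    blocks[0][a * m + b] = 1  # column block indexed by the share (a, b)
    blocks[1][a * m + c] = 1  # column block indexed by the share (a, c)
    blocks[2][b * m + c] = 1  # column block indexed by the share (b, c)
    return blocks

def eliminate_first_block(m, s_pivot, s_other, p):
    """Subtract, over F_p, the (a, b)-row of the pivot row block from
    the (a, b)-row of another row block, for every pair (a, b)."""
    out = {}
    for a in range(m):
        for b in range(m):
            r0 = sharing_row(a, b, s_pivot, m)
            r1 = sharing_row(a, b, s_other, m)
            out[(a, b)] = [[(x - y) % p for x, y in zip(blk1, blk0)]
                           for blk1, blk0 in zip(r1, r0)]
    return out
```

After the subtraction, the first column block of every processed row is zero, and each of the two remaining blocks carries one entry equal to 1 and one equal to -1, in agreement with the difference-of-unit-vectors form that the rows take in the next step.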

4.2.2. Elimination Step 1

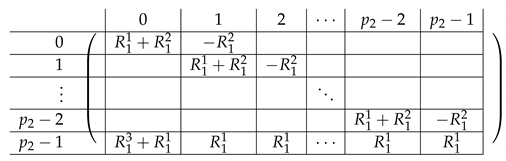

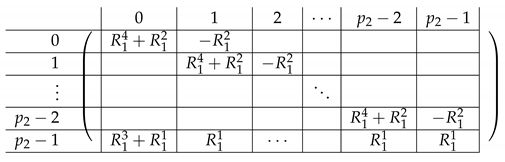

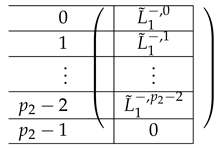

We view the matrix as a block matrix with the same subdivision into blocks as in (with one less block in both rows and columns, with Row 1 and Column 1 removed). Let denote the corresponding block matrix.

Consider the row indexed by in for (corresponds to Block Rows respectively in ). We have:

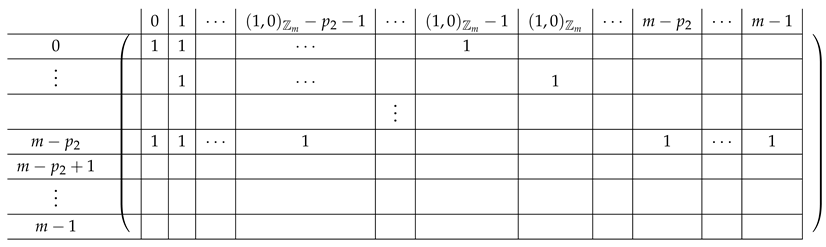

Observation 1.

The row indexed by - equals:

Here, for .

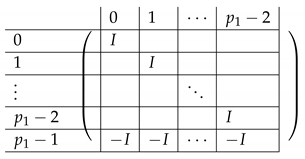

The resulting matrix is depicted next.

Next, we find a basis for and apply Lemma 1 again, “getting rid” of the first block column in . For , let .

We will need another simple observation.

Observation 2.

The rows of for are a permutation of the sequence of all vectors of the form . Additionally, , and therefore, generates .

We are now ready to demonstrate that the set of rows of , according to the coordinate of one in their row: constitutes the basis of for , as we seek. Therefore, we will again be able to apply Lemma 1. The other rows are spanned by this set, in one of two ways. We classify them into two types according to these ways. We refer to them as Type 1 and Type 2.

Type 1 consists of rows in indexed by with , for which the location of the ‘1’ in is for c as above. We observe that every such row is spanned by , as for every , we have:

Here, the transition from Equation (2) to Equation (3) is by Observation 1 and the observation that for a fixed a, is a permutation over , so the change of coordinates we perform is valid. We b-zero rows of of Type 1 by adding all other rows of the form to each of them.

Type 2: all rows in for are of this type. Consider a row indexed by in (in particular, the row in block is of that type). It is spanned by rows in as follows:

Here, the transition to Equation (8) is by observing that a telescoping sum is formed, where all but the first and last ’s in the sum cancel out. Note that the number in the upper limit of the sum indeed exists, as generates , and so, in particular, for some integer .

Rearranging the equality above, we get:

That is, we have identified the linear combinations of rows in that add up to .

4.3. Elimination Step 2

After applying Lemma 1 to each row of Type 1 and Type 2 and making it zero, we turn to considering the remaining matrix . It is a block matrix of size , with blocks corresponding to in , with , and one row, respectively. Let us calculate the form of rows of both types, when restricted to (following elimination step 1 from Section 4.2.2).

Type 1 in : From Equation (2), we conclude that for a fixed a, for the (single) b such that , we have:

Type 2 in : This type consists of row blocks in . By construction, we have:

4.4. Permuting the Columns

Next, we permute the columns of , to gain insight into its form. Note that this does not affect the question of whether (last line) is spanned by the rest of the rows in , . We refer to the new matrix as and the resulting block matrix as .
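The invariance claimed here is easy to confirm on a toy example. The following Python sketch applies the same column permutation to a set of rows and to a target vector and compares span membership before and after; the rank routine is a generic Gaussian elimination over a prime field, and all names and the sample data are hypothetical.

```python
def rank_mod_p(rows, p):
    """Rank over F_p (p prime) via Gaussian elimination; `rows` must be non-empty."""
    rows = [[x % p for x in r] for r in rows]
    rank = 0
    for col in range(len(rows[0])):
        pivot = next((i for i in range(rank, len(rows)) if rows[i][col]), None)
        if pivot is None:
            continue
        rows[rank], rows[pivot] = rows[pivot], rows[rank]
        inv = pow(rows[rank][col], -1, p)  # modular inverse (Python 3.8+)
        rows[rank] = [x * inv % p for x in rows[rank]]
        for i in range(len(rows)):
            if i != rank and rows[i][col]:
                f = rows[i][col]
                rows[i] = [(x - f * y) % p for x, y in zip(rows[i], rows[rank])]
        rank += 1
    return rank

def is_spanned(rows, v, p):
    """True iff v is in the F_p-row span of `rows`."""
    return rank_mod_p(rows, p) == rank_mod_p(rows + [v], p)

def permute_columns(rows, perm):
    """Move the content of column j to column perm[j] in every row."""
    out = []
    for r in rows:
        new = [0] * len(r)
        for j, x in enumerate(r):
            new[perm[j]] = x
        out.append(new)
    return out
```

Permuting columns only renames coordinates, so a linear combination of rows equals the target vector before the permutation exactly when the same combination equals the permuted target afterwards.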

The permutation is as follows: the content of a column indexed by moves to in the new matrix. We therefore obtain the following matrix M for Types 1 and 2, respectively (from Equations (12) and (16)).

4.4.1. Type 1

Here, the last transition is due to the fact that dividing by is a permutation over (as is invertible).

4.4.2. Type 2

For , we have:

4.4.3. Summary

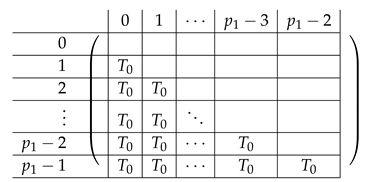

We have obtained a matrix with rows of the following form.

- Type 1 (contributed by Row Block 1 in ) yields all vectors of the form: for .

- Type 2 (contributed by Row Block 2 in ) yields all vectors of the form: for all pairs .

- The last line of is subsequently referred to as Type 3. Similarly to Type 2, it follows from Equation (23) that the line in the last block () has the form: Here, is some pair of constants (corresponding to to be chosen above) to be fixed later.

In the above, in the sum limit corresponds to “lifting” the element of into and viewing as an integer in . Note that no “wrap around” occurs as in , so we get the correct number in the sum limit.

4.5. A Change of Basis

Occasionally, we will refer to rows as matrices, with rows indexed by b and columns by c.

As we have witnessed, so far, we have performed applications of Lemma 1 on the original matrix A, which was a simple block matrix of size blocks, and the upper three row blocks had three cells of size m m each and another “block” row with three cells of size 1 × m each. By performing two applications of the lemma, we obtained a much smaller matrix . Namely, we obtained a block matrix with 3 × 1 blocks, with cells of size m × m, m m, and 1 × m, respectively. The price we have paid for the reduction in size is that the structure of the matrix has become more complex. In particular, it is no longer clear how to identify additional row sets to continue applying the lemma conveniently.

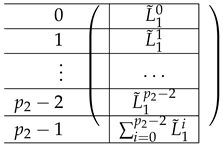

To create a matrix of more manageable form, we perform a change of basis. We suggest the following basis for the row space of . . Here:

and:

In all indices here and elsewhere, the arithmetic is over .

Indeed is a basis of . To prove this, we first note that it is an independent set. Roughly, this follows by separately considering the vectors in B with nonzero values in each row separately and the fact that divides m.

Next, we show that the rows of are indeed spanned by the above set of vectors. Furthermore, the matrix re-written in this basis will have a nice block structure that we will be able to exploit for the purpose of using Lemma 1.

We denote by a vector in and by a vector in .

To rewrite our matrix , we will specify an ordering of the vectors in B, from left to right:

- The columns in come first, in increasing order of c, starting from zero.

- The columns of the form in are ordered on several levels:

- (a) On the highest level, order ’s according to the index i above, starting from zero up to . There are blocks of this form on the highest level.

- (b) For a fixed i, order ’s according to increasing order of j starting from zero, up to .

- (c) For fixed , order in increasing order of , starting from zero up to .

We order the rows of the matrix as follows:

- The rows of Type 2 appear first, then Type 1, then Type 3 (the distinguished row to span via the others).

- Within Type 1, we denote .

- Within Type 2, denote by the vector . Consider one such vector, . We order these vectors according to i, then j, then b, where . Similarly, for Type 3, denote .

Let us now study the form of the resulting matrix, divided into blocks, as follows from the representation of the various vectors in in basis B.

Let us represent the matrix as a block matrix, then we further break down each block into lower level blocks as follows. Let us denote the new matrix by .

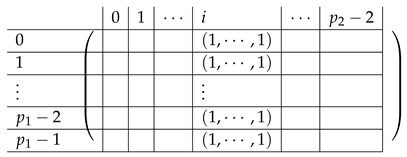

The Type 2 set of rows in has structure as depicted in the following matrix. . Let us describe each of the matrices below.

4.5.1. The Matrix

Here, the “right side” is a block matrix. Its contents are as follows.

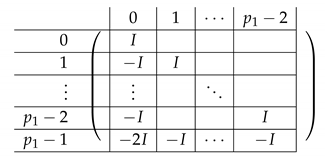

The left-side matrix is a block matrix of size (where indeed the number of rows in each of its blocks is consistent with that of ). It has the following structure.

We refer to this partition into blocks of as the “Level-1” partition of .

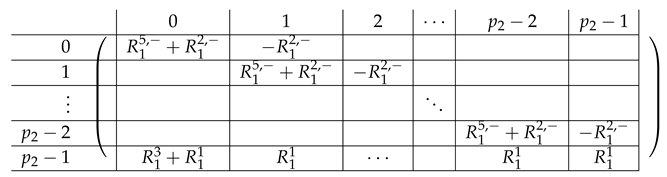

We continue next with describing the “Level-0” detail of . The matrix for is a block matrix of the following form:

for a matrix to be specified later. Note that by the structure of , the last matrix is a block matrix of size , where each block equals .

The matrix is a matrix of the following form.

Here, I is the identity matrix. The matrix is a matrix of the following form.

Here, is a matrix, as above. Finally, the matrix is a block matrix of the following form.

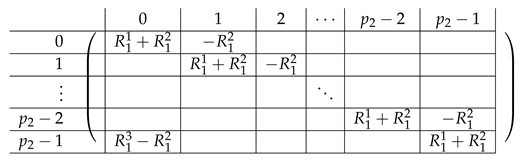

It remains to specify . It is a circulant matrix of the following form.

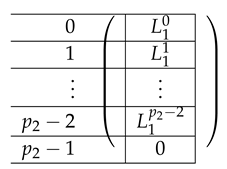

4.5.2. The Matrix

We choose . Then, the line is of the form:

4.5.3. The Matrix

The matrix is of the form . To describe the left and right parts, we apply a certain transformation to and , respectively. First, view each as a block matrix comprised of blocks of size ( has blocks, and has ). Now, is obtained from by applying a linear mapping satisfying to each block X, replacing X by (it is not important how exactly we define it on other inputs). The matrix is obtained from by replacing each block by a linear transformation that maps I to the zero vector and to . That is, the resulting equals:

where equals:

The resulting matrix equals:

where the resulting is of the form:

4.6. Another Elimination Sequence

From now on, assume that and that . We leave the full analysis of other cases for future work. We are now able to apply Lemma 1. We perform this step for corresponding to the L-part blocks of and proceed in several steps. We perform the row operations starting at a coarser resolution and then proceed to a finer resolution.

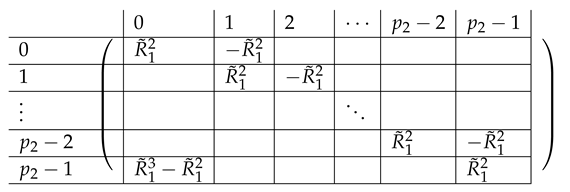

4.6.1. Step 1: Working at the Resolution of Level-1 Blocks

View as a block matrix of Level-1 as described above. Let denote the corresponding block matrix. Replace the last row of , by . We thus obtain a new matrix of the following form. has the same block structure as on all levels, so we do not repeat that, but rather only review its content.

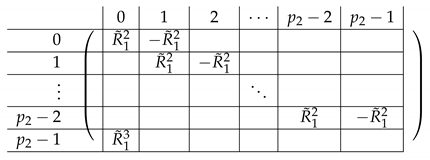

The resulting right side is as follows.

The resulting left-side matrix is:

We perform a similar transformation on , resulting in:

where equals:

and equals

4.6.2. Step 2: Working at the Resolution of Level-0 Blocks

Here, we view the matrix as a block matrix over Level-0 blocks. That is, denote by the row block corresponding to the Level-0 block inside the Level-1 block of . We transform into a matrix as follows.

For each , replace each row in with . The resulting matrix is of the form .

The right side is as follows.

where equals:

The resulting left-side matrix is:

where is of the form:

Finally, we apply a similar transformation to resulting in that equals:

where is of the form:

The resulting right-hand side is (there is no change, since the first block-row in is zero).

4.6.3. Step 3: Working within Level-0 Blocks

Here, we move to working with individual rows and complete the task of leaving a basis of the original ’s rows as the set of non-zero rows of the matrix obtained by a series of row operations. To this end, our goal is to understand the set of remaining rows in . In the part, each Level-0 block-column (with blocks of size ) has only (here, one appears m times) as non-zero rows, and in each row, there are non-zero entries in only one of the blocks. Thus, it suffices to find a basis for the set G of vectors.

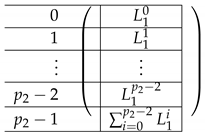

Lemma 2.

Assume . Then, the index set satisfies that is a basis for G. In particular, we have . Here, x is computed as follows: first calculate y as modulo (that is, in ). Then, we “lift” y back into () and then set x to be y modulo p – that is, x is an element of (note that all non-zero coefficients in the linear combination that results in indeed belong to I).

Another observation that will be useful to us identifies the dual of .

Lemma 3.

Assume . Then, the set of vectors:

is a basis of , where denotes the left kernel of the matrix M.

The observations are rather simple to prove by basic techniques; see Appendix A. Note that the general theory of circulant matrices is not useful here, as it holds over infinite or large (larger than matrix size) fields, so we proceed by ad-hoc analysis of the (particularly simple) matrices at hand.

We handle the part first. We conclude from Lemma 2 that for every block specified by where , in , the rows indexed by (as in Lemma 2) span all rows in that block. Furthermore, for the purpose of Lemma 1, we b-zero the rest of the rows, by a sequence of row operations as specified by in Lemma 3, starting from row and moving forward up to . That is, for b-zeroing row (where ) in as above, we store the combination:

in row of .

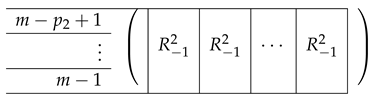

Overall, the resulting is as follows:

is identical to , except for replacing with .

That is, in the last block row all cells are , and there are such cells.

Here, is of the form:

Next, we handle the part. Here, we b-zero the remaining rows in by adding the right combination of rows in . The combination is determined by the “in particular” part of Lemma 2.

The resulting matrix is identical to , except for in each being replaced by . Here, has the form:

Here, becomes zero, which was our goal. Note that as opposed to previous transformations, the transformation performed on does not “mirror” the transformation performed on and in fact involves rows from both and . is identical to , except that in each Level-1 block for , the first row of (the content of this block) is replaced by:

It remains to b-zero the L-part of . For simplicity, we focus on (which is the only non-zero block in ) and then use the resulting linear dependence to produce the new row .

This results in:

4.6.4. A Reduced Matrix We will Analyze Directly

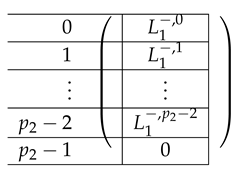

Taking to be the set of rows in that correspond to non-zero rows in and corresponding to L, we obtain the following matrix . On Level-1, it has a block structure similar to that of (where the number of rows changes in some of the matrices). More concretely, has the form:

Here, is identical to except that the top rows in it are removed. That is, it is identical to , except that the (0,0)th Level-0 block in replaces I by C, which are equal.

Similarly, is obtained from in the same manner. In this case, only zero rows are removed. is precisely (no rows were eliminated from there, as all corresponding rows on the left side became zero). Similarly, .

4.7. Completing the Proof - Analysis of

We are now ready to make our conclusion, assuming and . We stress that further analysis of the matrix is needed for identifying all p’s for which a share conversion exists. In fact, some of the detailed calculations of the resulting matrix structure are not needed for our conclusion, and we could instead identify only the properties that we need of various sub-matrices. However, some of the details may be useful for future analysis, so we made all the calculations.

Our last step is to reduce the matrix “modulo” the set G: for every row r in and every Level-0 block in this row, we reduce the contents of that row “modulo” . That is, we complement the basis of specified in Lemma 2 into a basis of , where is one of the added vectors and define a linear mapping L taking elements of to zero and other elements of the basis onto themselves (it is inconsequential what the other base elements are). Indeed, observe that is not in , as it is not in , as implied by Lemma 2. To verify this, observe for instance that:

We apply a linear mapping L taking to and other base elements to themselves. Recall that Level-0 blocks indeed have m columns each. We make the following observations. We let denote the resulting matrix.

Observation 3.

The rows of are zero.

Observation 4.

maps to .

Observation 3 follows easily from the form of the matrix and Lemmas 2 and 3, which implies that is exactly the kernel of S from Lemma 2 (this is the reason we need Lemma 2: it is easier to verify that a given vector is not in , rather than verifying it is not in ).

Observation 4 follows by the structure of and definition of L.

Now, if is spanned by the rest of the rows in , then it must be the case that the same dependence exists in . Thus, it suffices to prove that the latter does not hold. Assume for the sake of contradiction that:

for some vector v. In the following, we use for viewing v as a block vector with Level-0 blocks. Note that, unusually for this type of matrix, the blocks in the first row have rows, while in the other block rows, the cells have m rows, as usual. Similarly, we use to impose Level-1 structure onto v.

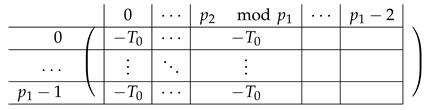

By the structure of , we conclude that is of the form:

Observe that in , non-zero values exist only in Level-1 blocks . As there are such blocks and , by our assumption, we conclude that the last row contributes zero to , as in the last block, the output needs to be zero, and it equals , which is the same contribution for all (Level-1) blocks.

To agree with at block i, we must then have . Viewing as a block matrix of Level-0, because of the zeroes at all blocks but Block 0, the contribution of all block-rows but the first one to is:

In the above, the last equality is due to the fact that . Thus, we must have . However, we observe that is a subset of , where S is specified in Lemma 3 and thus cannot equal (which is not in ).

This concludes the proof of Theorem 4.

5. Future Directions

Our work leaves several interesting problems open.

- For what other parameters is a share conversion from -CNF to three-additive possible? For instance, surprisingly, is possible for as follows from [10]. Our analysis does not explain this phenomenon, as we did not complete the full analysis of the resulting matrix. We believe that given the work we have done, this is a very realistic goal.

- We need to understand share conversion for different sets S. One direction is by considering m’s, which are a product of more than two primes. As discussed in the Introduction, already using three primes, a conversion from (2,3)-CNF over would improve over the best known constructions for three-server PIR via the BIKO paradigm. One advantage of such schemes following the BIKO framework is the constant answer size achieved. Here, we initially worked with two primes, rather than with three, to develop the tools and intuition for a slightly technically simpler setting.

- As discussed in the Introduction, some of the previous results not falling in the BIKO framework can be viewed as instances of an extended BIKO framework using a “many-to-one” share conversion. Viewing PIR protocols as based on share conversions between secret sharing schemes is apparently “the right” way to look at it. As some evidence, using the more redundant CNF instead of Shamir as in [10] was a useful insight from secret sharing allowing us to further improve CC. In particular, in the case that there are no share conversions for for m’s that are a product of three or more primes, perhaps a suitable many-to-one conversion may still exist. Further extending this view could lead to new insights on PIR design. In particular, in many of the existing schemes, a shallow circuit is evaluated essentially by performing share conversions. In particular, local evaluation of linear functions over the inputs is a special case of such a many-to-one conversion (from a linear scheme to itself). In all schemes we have surveyed here, PIR for a large family of functions (of size ) was implemented via share conversion for a small set of relations. For instance, BIKO’s 3-PIR was based on linear functions (implementing inner products with vectors in the MV family) and a share conversion for , thus only relations. The situation in two-server PIR is similar. One concrete direction may be proving lower bounds on PIR protocols based on “circuits” containing only certain share conversion “gates”. Perhaps analogies to circuit complexity could be made, borrowing techniques from circuit lower bounds. Insights for such limited classes of schemes could hopefully advance our understanding of lower bounds for PIR CC; currently, the best-known lower bound even for two-server PIR is , only slightly above trivial [15].

Author Contributions

A.P.-C. and L.S. contributed equally to this paper.

Funding

This research received no external funding.

Acknowledgments

We thank Roi Benita for greatly simplifying the transition from to at an earlier step of working on this project.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A. Deferred Proofs

Appendix A.1. Proof of Lemma 2

We start with a few Claims to be used by the proof.

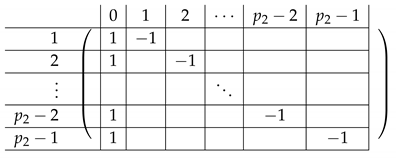

Lemma A1.

Let p be a prime and an integer. Let denote a matrix in where the row is:

Then .

Proof of Lemma A1.

In the following, all index arithmetic in vectors and matrices is done over . First, observe that . In particular:

Let us figure out the left kernel of , K. In particular, for all , all coordinates of are equal.

We note that since for all :

then by the structure of the columns, we have . Since p is prime and , the sequence goes over all entries in z. We thus conclude that for some . By a simple calculation, we have . As as an element of , then to obtain , we must have . That is, there is no non-zero linear combination of leading to zero, so are independent, as required. □

Observation 5.

There exist at least two ’s that belong to distinct orbits that are non-zero.

Proof of Observation 5.

For any single orbit (), one may view the matrix as consisting of blocks, with the first block in each row starting at entry i (and one of the blocks possibly wrapping around). The matrix (with rows permuted for convenience) is thus a block-matrix such that every block initially consists of entries of the form for in each block. Consider a linear mapping L applied to the resulting block matrix , replacing each block with a single element of that maps to one. Then, clearly, is a matrix over satisfying the conditions of Lemma A1. This is the case since , and each row has mod non-zero blocks. By Lemma A1, the rows of are therefore linearly independent. We conclude that so are the rows of (or else the rows of would also be dependent via the same linear combination). Therefore, the rows of the are also linearly independent. □

Proof of Lemma 2.

We start with the “in particular” part. To prove this, recall that in each row in , there are exactly consecutive ones. That is, for , we have . That is, fits into mod (lifted back to ) times. Indeed, mod exists, as and is a prime, so it does not equal zero mod .

We therefore get:

over . This holds, since at every point, we add exactly x vectors that contribute one to that point. Here, the integer x is viewed as an element of . As , x is non-zero in .

It is not hard to see that every other vector in is spanned by : Let us define an orbit as a subset of vectors for . It is easy to see that we have for any . Therefore, for any for , we have:

Indeed, in the above equation, all summands but are in .

To complete the proof, it remains to prove that is an independent set. Assume for contradiction that a non-trivial linear combination of leads to zero. Let:

where not all ’s are zero. Splitting the sum into orbits, we get:

Consider all orbits in which non-zero coefficients in the above combination exist. By Observation 5, . In particular, starting from , not all ’s corresponding to are non-zero (because only for and equals otherwise).

Thus, we have . Note that the vector on the left-hand side has the property that every block of consecutive elements starting at some index is of the form . On the right-hand side, as orbit has non-zero ’s, but not all of them non-zero, the sum:

is non-constant. The latter holds since all the rows indexed by this orbit are independent by Lemma A1, and lead to constant . We thus conclude that only such z’s lead to constant . As at least one in this orbit is zero and at least one is not, z cannot be of this form, and thus the is not constant. On the other hand, by the structure of , every block of size starting at is of the form . In particular, there must exist two consecutive blocks starting at respectively with different values respectively. In this pair of blocks, each intersects the block starting at from orbit . Thus, on the right-hand side, this block has the same value at indices (a non-empty set, as ) and a different value at indices . Going over the following ’s, a similar situation results. Spelling it out, (if it exists) will perhaps create one or more additional “imbalanced” blocks and may affect the original block we singled out, affected by . In the latter case, it will not fix the original imbalance. To see this, let us consider the sequence of orbits affecting the block in the way described above. As we add the contribution of the ’s from left to right, we consider the sequence going from the end of the block to the left, up to and including entry . It is not hard to show by induction on j that this sequence consists of the same value. When adding the contribution of , this sequence (of length at least two at this point, as ) is broken into two, where the first entry in the sequence now differs from the last. The difference between these entries is , where are the constants corresponding to , as explained for above. We conclude that the right-hand side is not a multiple of (as the block is not constant) and in particular may not equal zero. □

Appendix A.2. Proof of Lemma 3

Proof of Lemma 3.

We prove the claim in two steps. First, we observe that S is the right kernel of . Then, by recalling , the claim follows. To prove the first observation, we note that S is indeed a subset of the right kernel of , by calculating the products for each , essentially following from the observation that the sum over all rows indexed by any single orbit (as defined in the previous section) is the same. Next, it is easy to see that the set S is independent; for instance, focus on the first block of columns, and observe that the rank of is by itself . Finally, defined in Lemma 2 is . As span , then by the rank–nullity theorem, the dimension of the right kernel of is . We conclude that S is a basis of the (right) kernel of , which concludes the proof. □
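The dimension count used at the end of this proof (the dimension of the right kernel equals the number of columns minus the rank) can be illustrated on a toy matrix over a prime field. The sketch below is an assumption-laden illustration only: the helper names `rref_mod` and `right_kernel_basis`, the 7 × 7 cyclic 0/1 test matrix `M`, and the field order 5 are all hypothetical choices, not objects from the paper.

```python
def rref_mod(matrix, q):
    """Reduced row echelon form over the prime field of order q, plus pivot columns."""
    rows = [[x % q for x in row] for row in matrix]
    pivots = []
    r = 0
    for col in range(len(rows[0])):
        pivot = next((i for i in range(r, len(rows)) if rows[i][col]), None)
        if pivot is None:
            continue
        rows[r], rows[pivot] = rows[pivot], rows[r]
        inv = pow(rows[r][col], q - 2, q)  # inverse via Fermat (q assumed prime)
        rows[r] = [(x * inv) % q for x in rows[r]]
        for i in range(len(rows)):
            if i != r and rows[i][col]:
                f = rows[i][col]
                rows[i] = [(a - f * b) % q for a, b in zip(rows[i], rows[r])]
        pivots.append(col)
        r += 1
    return rows, pivots


def right_kernel_basis(matrix, q):
    """Basis of {v : matrix * v = 0 over F_q}, one vector per free column; also the rank."""
    rows, pivots = rref_mod(matrix, q)
    n_cols = len(matrix[0])
    basis = []
    for free in (c for c in range(n_cols) if c not in pivots):
        v = [0] * n_cols
        v[free] = 1
        # Each pivot variable is determined by the free variable's column.
        for r, pc in enumerate(pivots):
            v[pc] = (-rows[r][free]) % q
        basis.append(v)
    return basis, len(pivots)


# Toy matrix: row i has 5 consecutive ones starting at column i (mod 7).
M = [[1 if (j - i) % 7 < 5 else 0 for j in range(7)] for i in range(7)]
basis, rank = right_kernel_basis(M, 5)
print(rank, len(basis))  # 6 1
print(basis)             # [[1, 1, 1, 1, 1, 1, 1]]
```

Here the rank is 6 and the kernel is one-dimensional, spanned by the all-ones vector, so the two numbers add up to the 7 columns of `M`.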

References

- Chor, B.; Kushilevitz, E.; Goldreich, O.; Sudan, M. Private Information Retrieval. J. ACM 1998, 45, 965–981.

- Kushilevitz, E.; Ostrovsky, R. Replication is NOT Needed: SINGLE Database, Computationally-Private Information Retrieval. In Proceedings of the 38th Annual Symposium on Foundations of Computer Science, FOCS ’97, Miami Beach, FL, USA, 19–22 October 1997; pp. 364–373.

- Gentry, C.; Ramzan, Z. Single-Database Private Information Retrieval with Constant Communication Rate. In Proceedings of the Automata, Languages and Programming, 32nd International Colloquium, ICALP 2005, Lisbon, Portugal, 11–15 July 2005; pp. 803–815.

- Beimel, A.; Ishai, Y.; Kushilevitz, E.; Orlov, I. Share Conversion and Private Information Retrieval. In Proceedings of the 27th Conference on Computational Complexity, CCC 2012, Porto, Portugal, 26–29 June 2012; pp. 258–268.

- Ben-Or, M.; Goldwasser, S.; Wigderson, A. Completeness Theorems for Non-Cryptographic Fault-Tolerant Distributed Computation (Extended Abstract). In Proceedings of the 20th Annual ACM Symposium on Theory of Computing, Chicago, IL, USA, 2–4 May 1988; pp. 1–10.

- Goldreich, O.; Micali, S.; Wigderson, A. How to Play any Mental Game or A Completeness Theorem for Protocols with Honest Majority. In Proceedings of the 19th Annual ACM Symposium on Theory of Computing, New York, NY, USA, 25–27 May 1987; pp. 218–229.

- Cramer, R.; Damgård, I.; Ishai, Y. Share Conversion, Pseudorandom Secret-Sharing and Applications to Secure Computation. In Theory of Cryptography Conference; Springer: Berlin/Heidelberg, Germany, 2005; pp. 342–362.

- Grolmusz, V. Constructing set systems with prescribed intersection sizes. J. Algorithms 2002, 44, 321–337.

- Beimel, A.; Ishai, Y.; Kushilevitz, E.; Raymond, J. Breaking the O(n^{1/(2k−1)}) Barrier for Information-Theoretic Private Information Retrieval. In Proceedings of the 43rd Symposium on Foundations of Computer Science (FOCS 2002), Vancouver, BC, Canada, 16–19 November 2002; pp. 261–270.

- Efremenko, K. 3-Query Locally Decodable Codes of Subexponential Length. SIAM J. Comput. 2012, 41, 1694–1703.

- Yekhanin, S. Towards 3-query locally decodable codes of subexponential length. J. ACM 2008, 55.

- Dvir, Z.; Gopalan, P.; Yekhanin, S. Matching Vector Codes. SIAM J. Comput. 2011, 40, 1154–1178.

- Dvir, Z.; Gopi, S. 2-Server PIR with Subpolynomial Communication. J. ACM 2016, 63, 39.

- Beimel, A. Secret-Sharing Schemes: A Survey. In International Conference on Coding and Cryptology; Springer: Berlin/Heidelberg, Germany, 2011; pp. 11–46.

- Wehner, S.; de Wolf, R. Improved Lower Bounds for Locally Decodable Codes and Private Information Retrieval. In International Colloquium on Automata, Languages, and Programming; Springer: Berlin/Heidelberg, Germany, 2005.

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).