1. Introduction

In image processing, the first operation performed on a specific plot in most cases is level quantization. The number of levels depending on the objectives of the study may be different. The second operation is to sampling of the quantized plot using Cartesian (or polar) coordinates. The problems that arise here are typical for the sampling-recovery algorithms (SRA) of realizations of random fields; it is necessary to determine based on a priori information about the image and selected criteria, the optimal sampling-recovery procedure and then evaluate the quality of restoration.

Gaussian models of random fields are the simplest, because for multidimensional distributions of random variables, the analytical expressions representing a priori information about the field are known. The situation with non-Gaussian field models is fundamentally different, since the analytical description becomes very complicated and the level quantization operation only aggravates the situation. This circumstance explains the fact that the number of real images being analyzed is constantly growing, see [

1,

2,

3] etc.

Various models of the random fields, the realizations of which are quantized by level and sampled on a plane, are known in the literature (see, for example, the fundamental books [

4,

5,

6,

7,

8], as well as the journal articles [

9,

10,

11,

12,

13,

14]. However, the authors of the present article are not aware of any works discussing the statistical models of random non-Gaussian fields with a finite number of states (i.e., analytical field models after a quantization). In addition, there are no publications devoted to the SRA description of realizations of such fields. The proposed work is intended to remedy the aforementioned shortcomings.

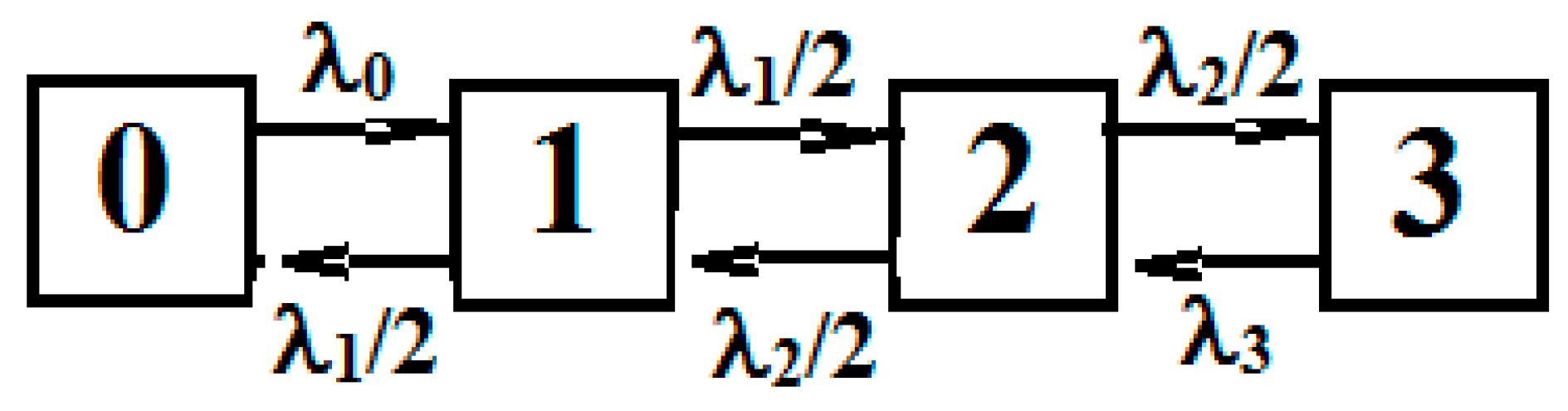

The first part of this work deals with a generalization of a random field model with jumps (or piecewise constant states). The first publication devoted to the analytical description of non-Gaussian fields with jumps in brightness is [

15], which is a “chessboard with random rectangles”. This model was further generalized in [

16,

17]. In [

17] the number of levels was increased to four. Realizations of such random fields with piecewise constant states are produced by the summation of realizations of binary Markov processes given along the coordinate axes. In the present work we generalize the model [

17] by forming the field realizations as a sum of realizations of piecewise constant Markov processes with an arbitrary number of states (such processes are called markovian chains with continuous time). Obviously, field realizations also have an arbitrary number of states. Using realizations of Markov processes as generators of field realizations makes it possible to analytically describe a random field model with piecewise constant states.

The second part of the work is devoted to the study of the sampling-recovery algorithm (SRA) of the realizations of the proposed field model. The SRA for random continuous processes and fields is widely known. However, the studies of SRA of realizations of discontinuous processes and fields are scarce. We begin with a brief discussion of SRA of random processes with piecewise constant states. SRA for the realizations of such processes have pronounced differences from features SRA for realizations of continuous random processes. Namely, in case of SRA of continuous processes, it is necessary to determine the recovery function in the interpolation and/or extrapolation modes, as well as assess the quality of the recovery. In that case the recovery function and the recovery error are the functions of time. SRA for realizations of piecewise continuous processes are completely different, since here it becomes necessary to determine the procedure for estimating the instants of transitions from one state to another, as well as assess the estimation´s the quality. In other words, it comes down to estimating a set of random variables. Naturally, in both cases SRA are based on a priori information about random processes, which are significantly different.

Realizations of random processes and fields in SRA are investigated by applying the conditional mean rule (CMR). The optimal structures of the reducing agents of realizations are determined for an arbitrary number and location of the samples. The conditional mean recovery algorithm automatically provides the minimum mean square recovery error. Numerous examples of the application of the discussed technique can be found in [

18,

19,

20,

21]. In particular, on the basis of this method were analyzed the realizations of the SRA binary Markov process [

22] and the realizations of the SRA of a piecewise continuous Markov process with an arbitrary number of states [

23]. The results of [

22] served as the basis for the study of SRA for random piecewise constant fields [

16,

17] with the number of states equal to 2, 3, 4. In this work we study using the approach [

23] the SRA for the field realizations with an arbitrary number of states. It is clear that an increase in the number of levels of field values increases the possibilities of describing random field models quantized by levels. We emphasize that the optimal SRA entails the non-periodic sampling of realizations. This nontrivial feature is overcome by the CMR method used.

The proposed model is flexible, since a specific type of realizations of a field model with the desired features can be formed by an expedient choice of probabilistic characteristics of the generating Markov processes.

The article consists of five sections. The first describes the proposed random field model with piecewise constant states, the second describes the sampling-recovery algorithm of field realizations and discusses the conditions under which realizations of generating processes can be restored using a known field realization. The third one justifies the choice of sampling intervals for realizations of processes for a given probability of state missing. In the fourth section, the instants of transition from one state to another are estimated from discrete samples. The fifth gives two examples that illustrate the proposed algorithms.

2. Description of the Model of a Random Field with Piecewise Constant States

We form a random piecewise-constant field

by summing two independent homogeneous Markov processes

and

The processes

and

have continuous arguments and discrete sets of states (let it be the sets

and

which are defined on the axes

and

. Hereinafter, it is convenient to perceive

and

as time axes.

are the numbers of states of the corresponding processes. We do not introduce special notation for realizations of random functions that are different

and

, therefore Equation (1) will also be understood as the sum of realizations. The Markov property of formative processes allows an analytic description of the structure of such a field. It might be possible to abandon the Markov properties of the generating processes, but this significantly complicates the analytical description of the SRA (for comparison, see, for example, [

24]).

It is assumed that the characteristics of the processes

and

(the output density and the transition probability) are known. Denote the output density (or output intensity) of the states: for

, for

the meaning of which is tat:

We also assume that for and there are given graphs or transition probability matrices .

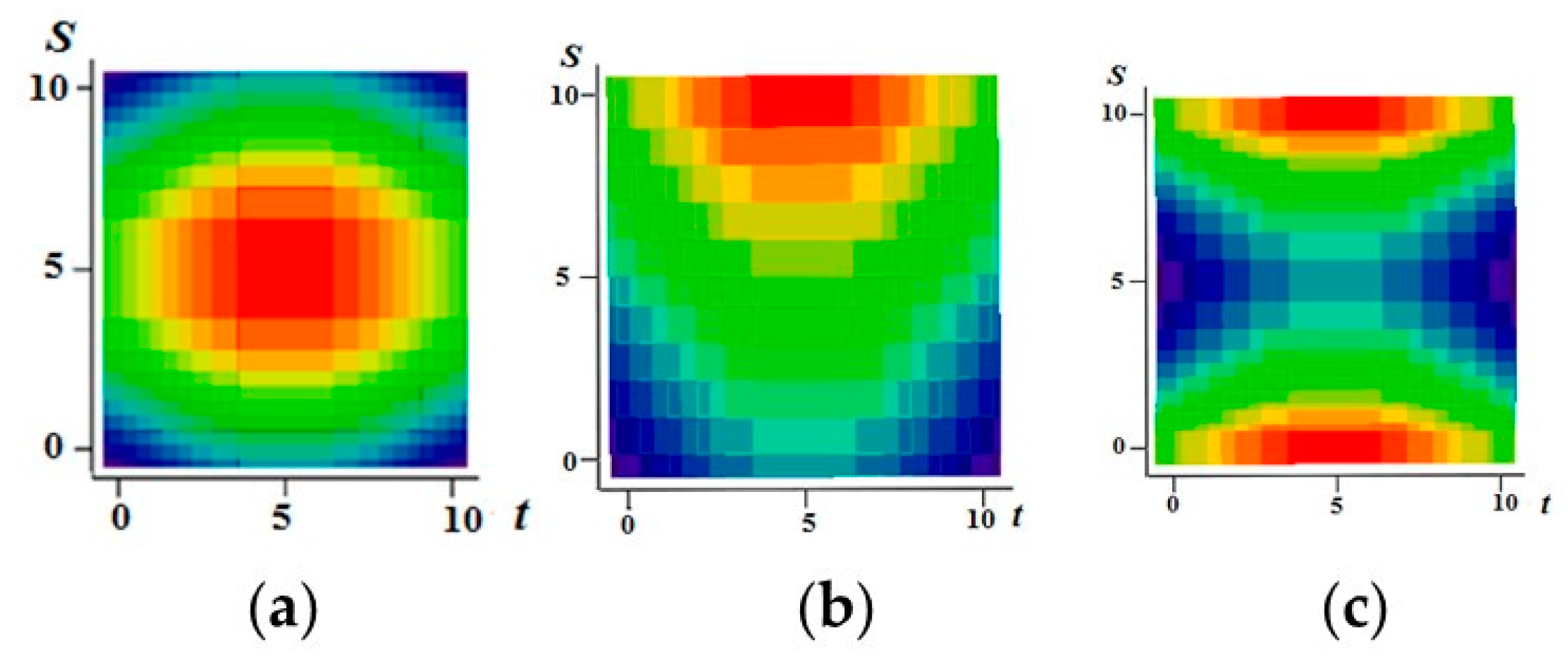

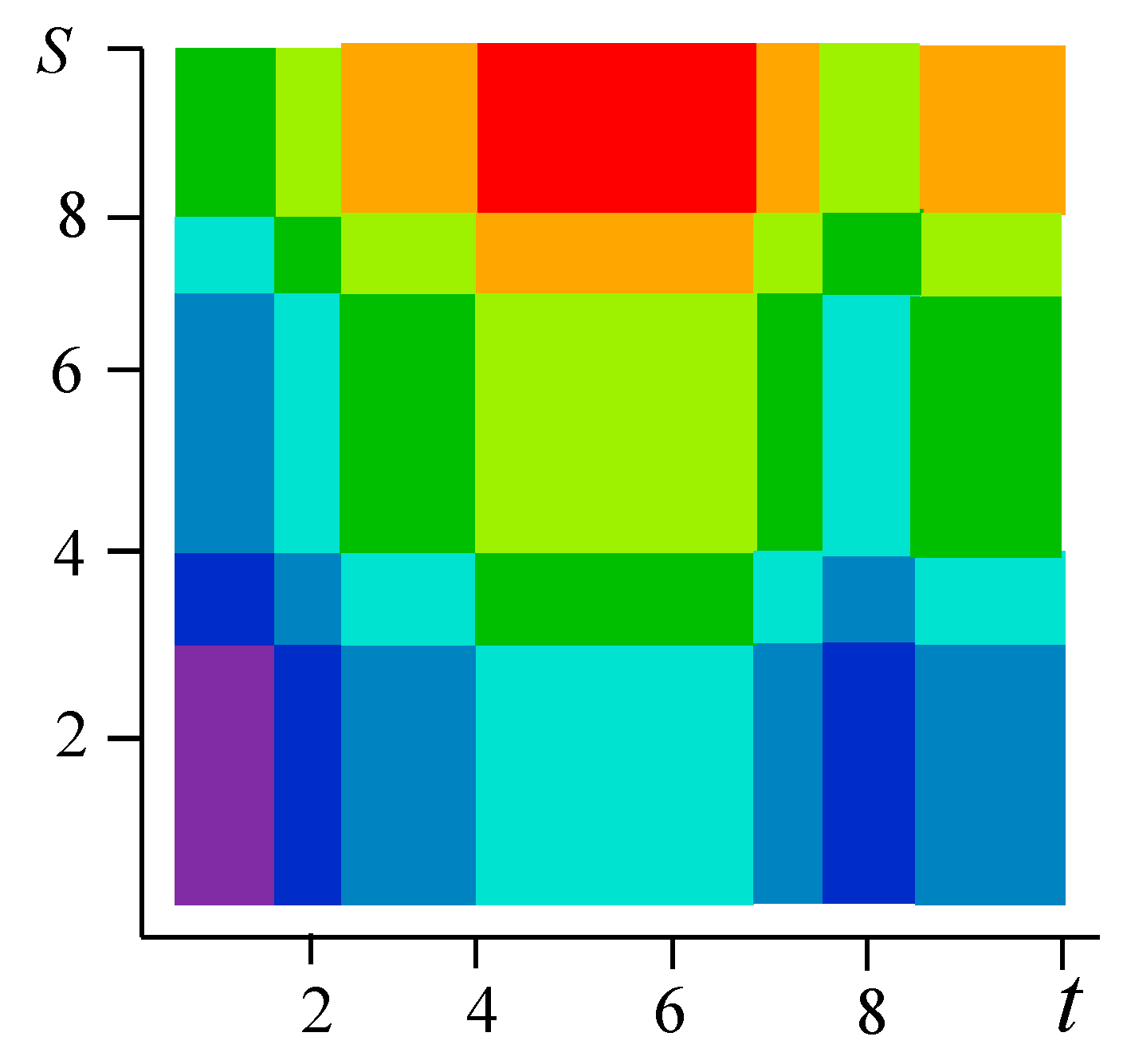

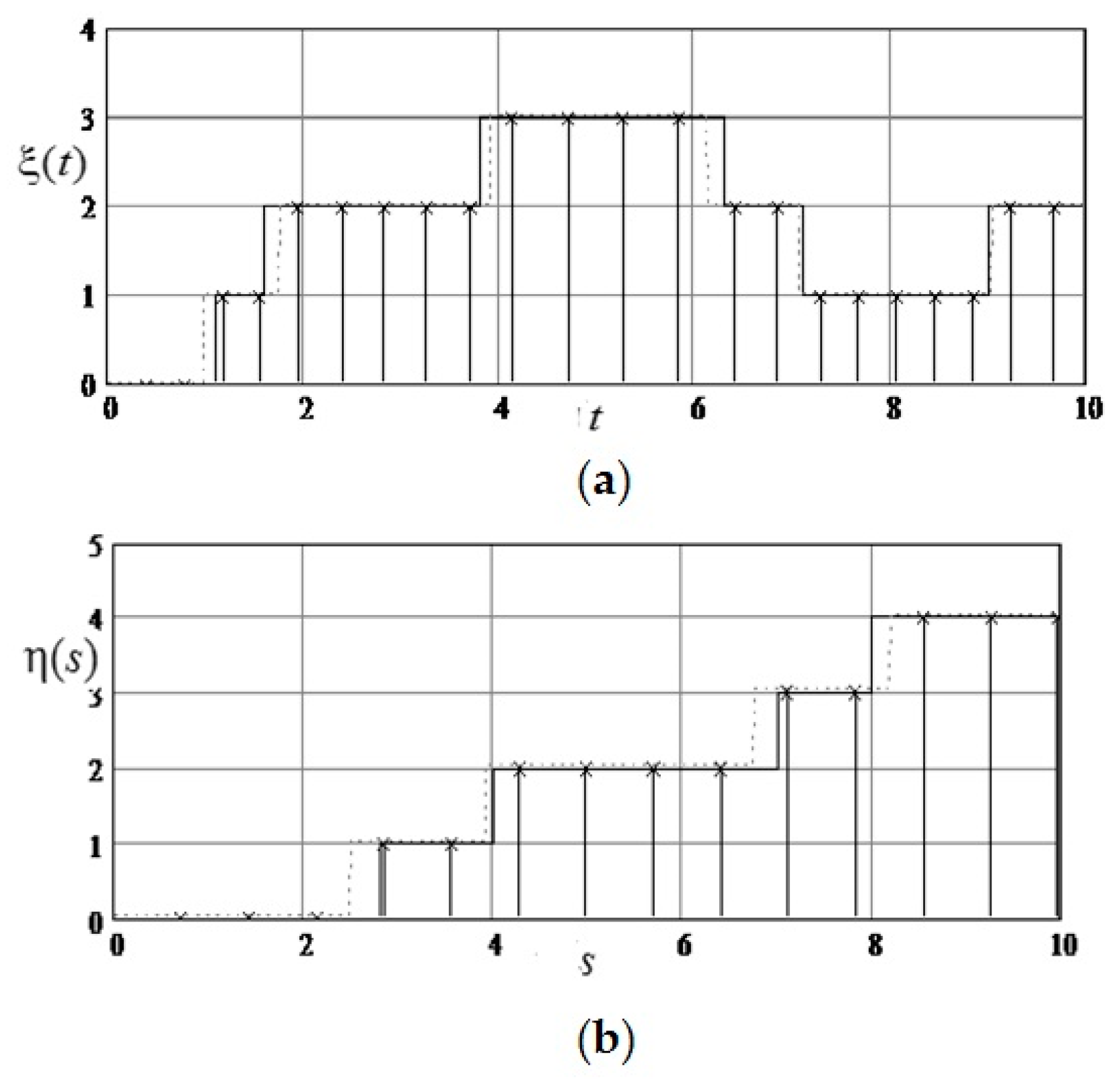

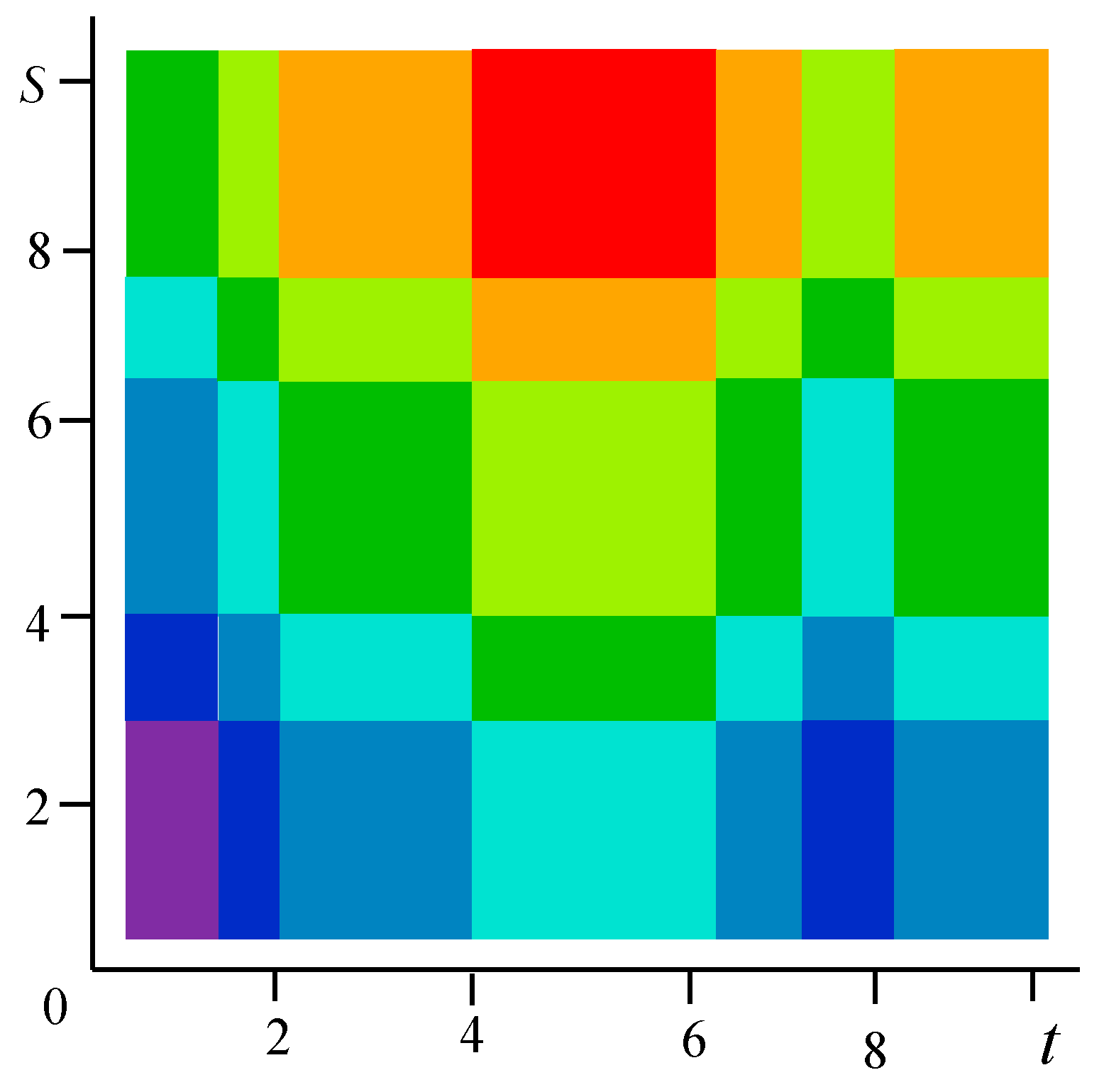

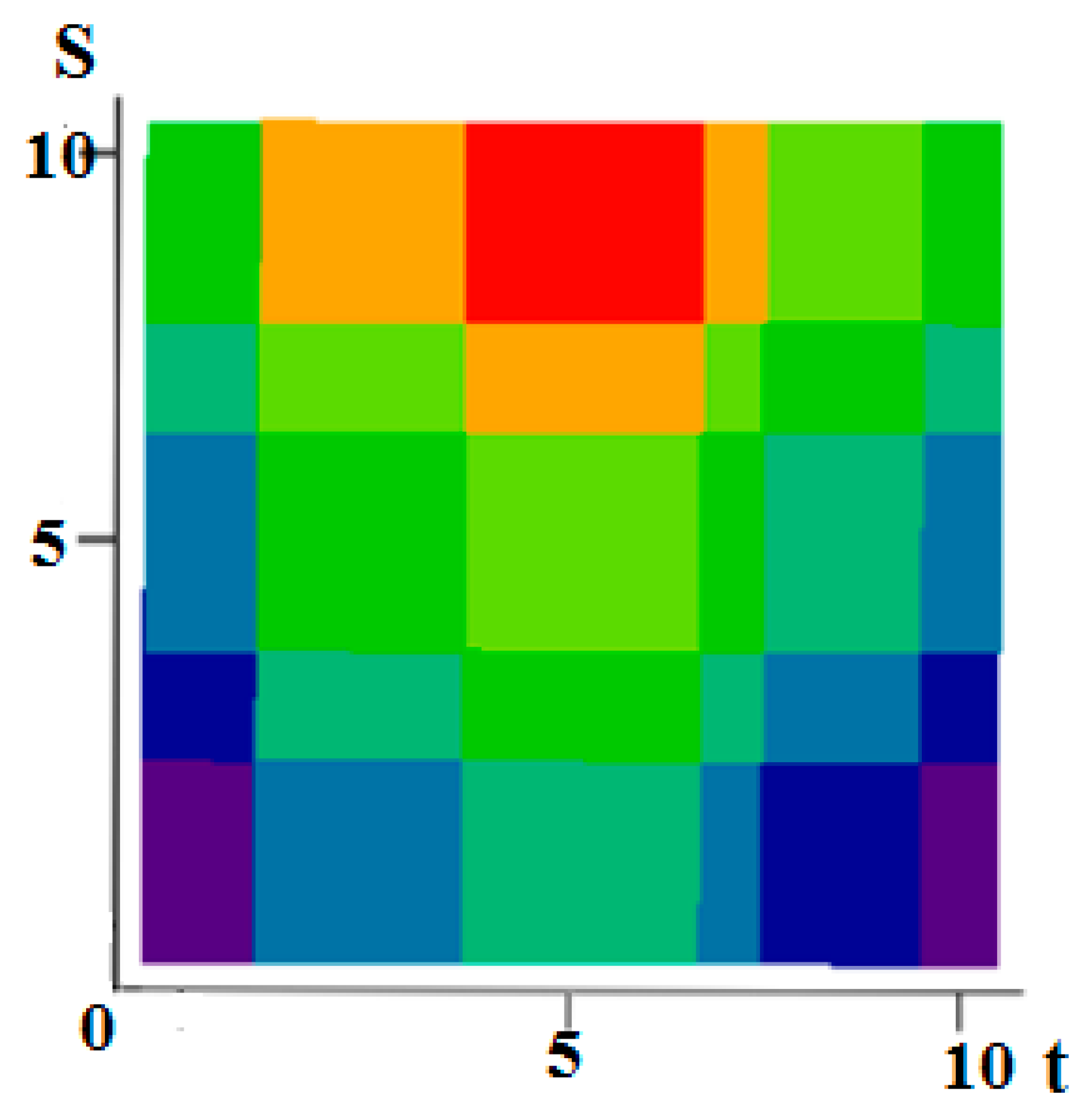

Figure 1 shows examples of realizations of fields formed by the sum Equation (1). The number of levels according to the values of each function in

Figure 1a–c is 10 (a total of 19 field levels). High intensity of red color means the maximum value of the field, and high intensity of blue color minimum.

Figure 1a–c illustrate the flexibility of the proposed model. It is possible to form various realizations of the random field by selecting the parameters of the generating realizations. Specifically, it is possible to create field realizations with a fairly large area where there are no transitions. Such areas can be in the center (

Figure 1a) or shifted in any direction (

Figure 1b shows an upward). It is also possible to simulate two maxima (

Figure 1c). Small areas with different sizes can be created around such areas, and so on. The

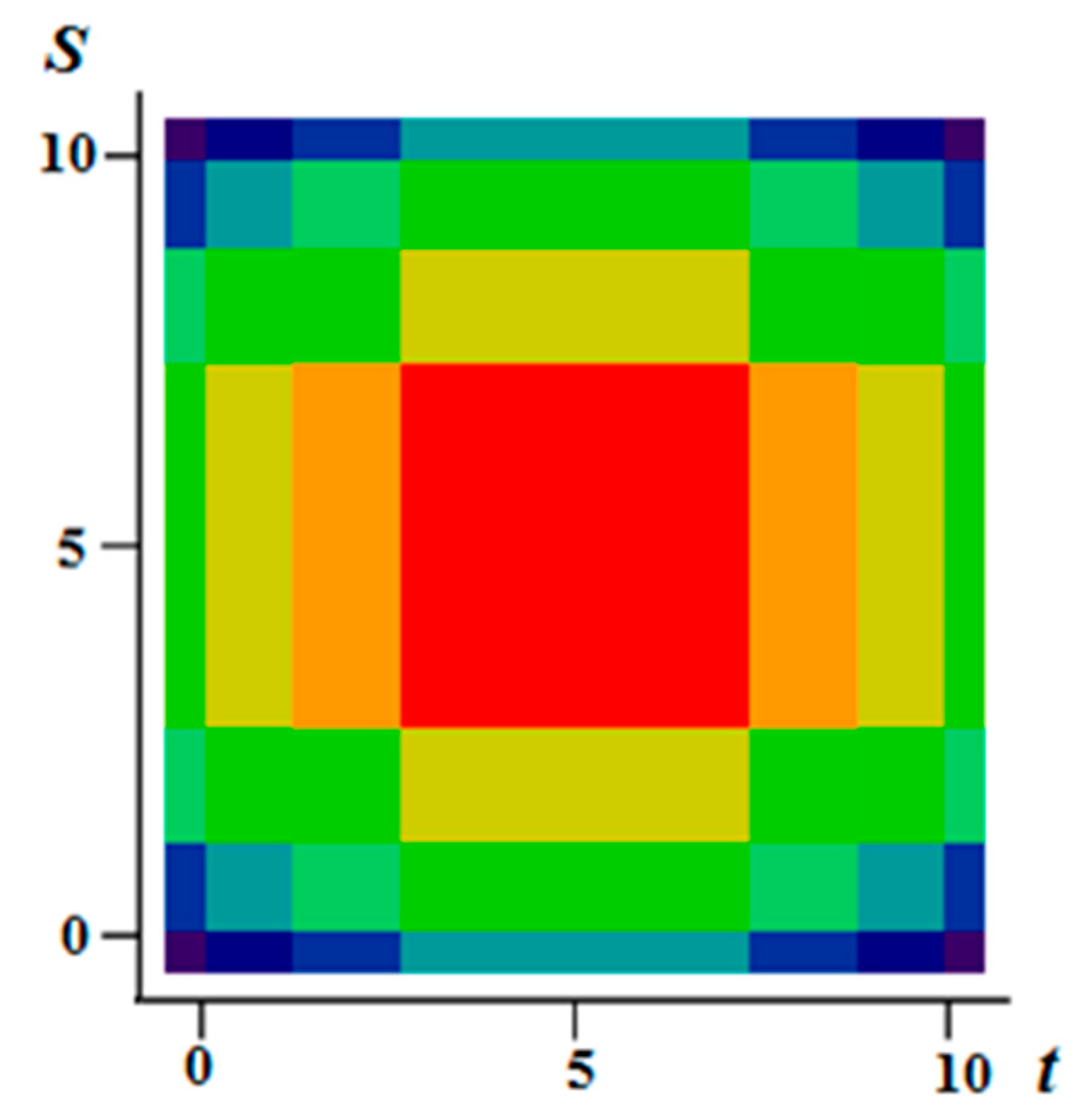

Figure 2 shows a field with a maximum at the center (similar to

Figure 1a) with a coarser quantification 8 levels for field values.

3. Description of the Sample-Recovery Algorithm of the Realization of the Field Model

Unrecoverable errors associated with the omission of states may occur in the sampling of realizations of processes with piecewise constant states. Such errors occur in the case when the duration of the process in the state is less than the sampling interval. The SRA of realization of the processes under consideration should take into account this phenomenon. Namely, it is necessary to determine the SRA so that when recovering, the probability of skipping a state is no more than a given level

[

22,

23]:

This post expresses the idea of the condition: “it is advisable not to skip states”. A similar requirement must be met when analyzing the SRA of a random field

. Below, in

Section 4, we detail the condition Equation (2) is detailed.

The description of the SRA realizations of the field is associated with the analysis of the SRA realizations of the generating processes and . At the same time, knowing the samples of the field realization, one must not only estimate the position of the switching points of the process realizations, but also determine the states of the processes themselves. The latter circumstance is important because the residence time of the process in a given state and the sampling interval depend on the state itself. We emphasize this circumstance, since sampling turns out to be fundamentally non-periodic.

To determine the realizations of

and

from known values of the field

, it is enough to know its value at one point of one of the formative realizations (

or

). For example, if at some point

the value

is known, then by Equation (1) we have

Then at an arbitrary point

of the

axis one can write:

Finally, the application Equation (1) yields

The possibility of unambiguous recovery depends on the sets of states of the formative processes and on the specific states used in a given field realization. There may be situations where recovery is trivial. For example, let the process have states: ( is the measurement discreteness; we take ) and the process has states: .

Table 1 shows the values of the function

for arbitrary

and

.

In particular, for

, it turns out

field levels. The values of this function are given in

Table 2.

In the examples, all values of the function are different, and therefore each field value corresponds to a single pair , so restoring the realizations of the processes and is trivial.

The situation when the process state grids have the same step is quite important. For example, let the processes

and

have

integer states:

. The values of the sum with

are shown in

Table 3. It is clear that if the field has the values 1, 2, 3 or 5, then the value of the pair

is not uniquely determined. If the value of the field is 0 or 6, then the value of the pair

is uniquely determined, and therefore the realizations

and

are determined in accordance with Equations (3) and (4).

For this important case (the case of sets with the same grid step), the necessary and sufficient condition for the uniqueness of the recovery of generating realizations is the following statement: among the values of the realization of the field

there is either the minimum possible value

or the maximum possible value

where

,

are the minimum and maximum values of the sets

and

.

The sufficiency is obvious, since each of these values corresponds to a single pair: for , and for , and then by Equations (3) and (4) the realizations and are restored.

The necessity: from the uniqueness follows the fulfillment of condition Equations (5) and (6). Suppose that none of the conditions Equations (5) and (6) is satisfied, i.e., among the values

there is neither a point

, nor a point

. Or else, this condition is written differently: for any value

,

and show that recovery is not unique. Indeed, the available values of

fields are obtained by summing some values of

and some values of

, and therefore the system of algebraic equations

relatively unknown terms

is solvable by construction (the question is whether the solution is unique). Let be

that generate the available values of

field.

Condition Equation (8) (the absence of two “necessary” points

and

) is satisfied only in two cases:

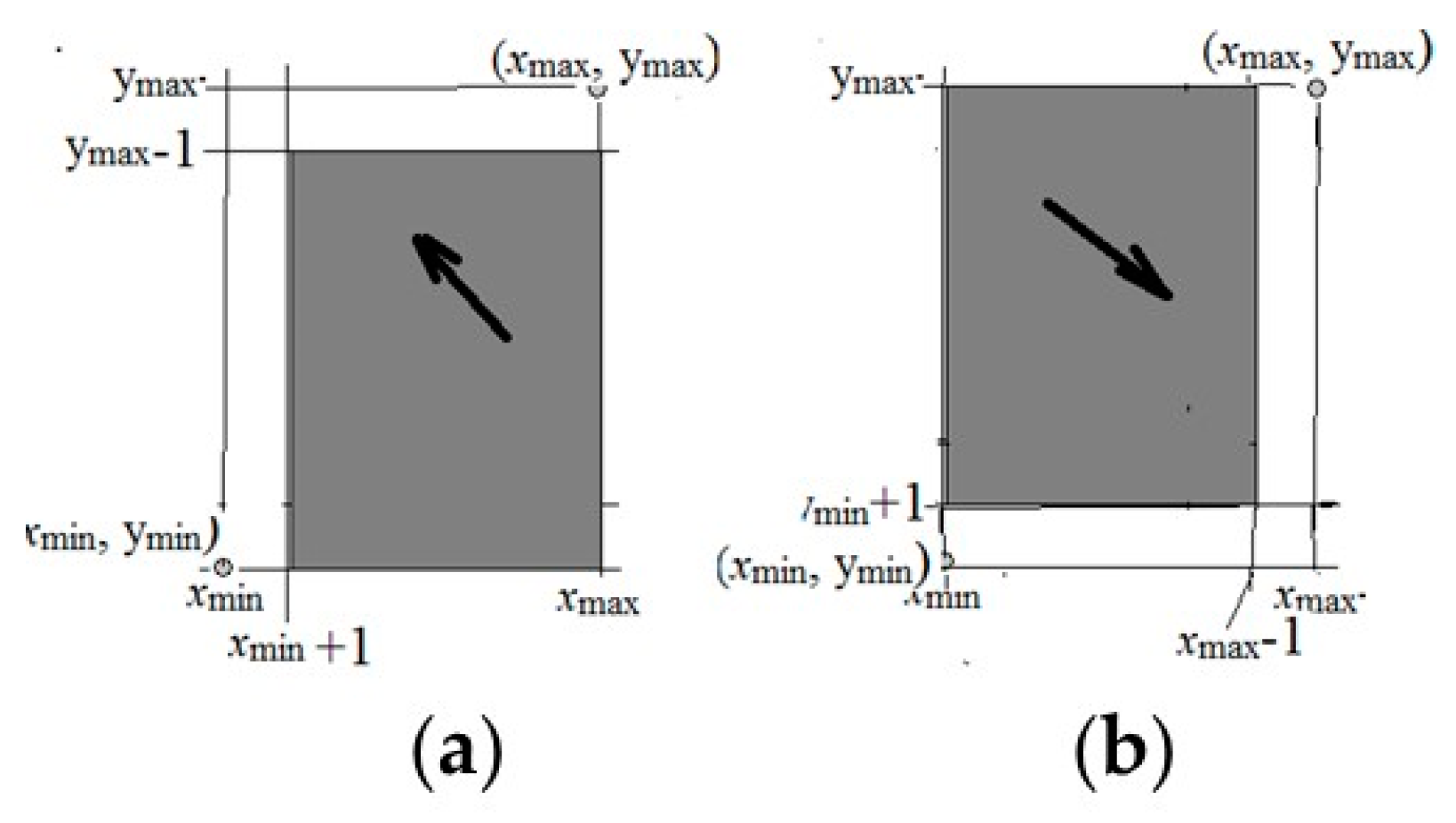

These two situations are illustrated in

Figure 3a,b.

We form a new solution by marking the values with strokes. If Equation (9) is true, then from each

we subtract 1, and to each

we add 1:

It is clear that

also satisfies system Equation (8), and in the

Figure 3, the range of values a) goes to region b).

If true Equation (10), then we add to each 1 and subtract 1 from each

These values also satisfy system Equation (8), while in

Figure 3 the domain of (b) goes into region (a). Thus, if condition Equations (5) and (6) is not satisfied, then the solution is not unique. The necessity is shown.

Note that if the uniqueness condition is not satisfied, then the restoration is possible up to constants, and this is quite acceptable in many practical situations.

So, the necessary and sufficient condition for the uniqueness of the recovery of formative realizations

and

is the existence (among the values of a given field realization) of an least one extreme value

of a field that can be represented by the sum in the only way:

where

and

are the sets of the states of the processes, respectively,

and

.

The sampling procedure consists of the following:

- (1)

Determining the values among the given field values together with the corresponding values of the arguments and .

- (2)

The definition of formative realizations and by Equations (3) and (4).

- (3)

Calculation of sampling intervals and along the and axes and sampling points: .

- (4)

The sampling results are sets of values

The field recovery procedure consists of the following actions:

- (1)

Restoration of realizations and by the values of and

- (2)

Determining the recovered values of the

field in accordance with Equation (1):

4. Determination of Sampling Intervals

SRA for realization of Markov processes with a finite number of states are described in [

22]. Let a continuous time Markov process have states

with the corresponding exit from states intensities

and the matrix of conditional probabilities

(on the diagonal

). Let

be the duration of being in state

, and

be the duration of being in the next state

. By the property of a Markov process, the random variables

and

are independent and distributed according to the exponential law with the parameters

and

with density

and with the corresponding distribution function

.

At a sampling instant , it is necessary to determine the next instant , i.e., determine the sampling interval , which is selected from the condition Equation (2) of a given small probability of a gap following the state unknown state .

Next, we will use the property of the exponential distribution: the conditional distribution of the remainder of the transition does not depend on the elapsed waiting time and coincides with the distribution of the time in the state. The event “skip of state” (mentioned in Equation (2)) means that we have a condition:

for any

, for which

, i.e., the maximum of the probability in

must be equal to

:

where

is the distribution function of the sum

. Obviously, the greater

is, the less is

. Equation (13) reaches its maximum in that state

, at which

is maximal:

The distribution function of the sum

is equal to

. Note that

and

enter this expression symmetrically, which allows us to move to a one-parameter family of functions. Denote

It is easy to show, the probability density of a random variable

is determined by formula:

Then for a random variable

, the distribution function, depending on the parameter

, as is easily shown [

23], is equal top

Condition Equation (13) takes the form:

From Equations (16) and (17), one can find the value of the sampling interval:

where

is the function inverse to

with respect to the first variable (the monotonic increase in

of the function

is taken into account). Note that the dependence of the interval

on

appears through

and

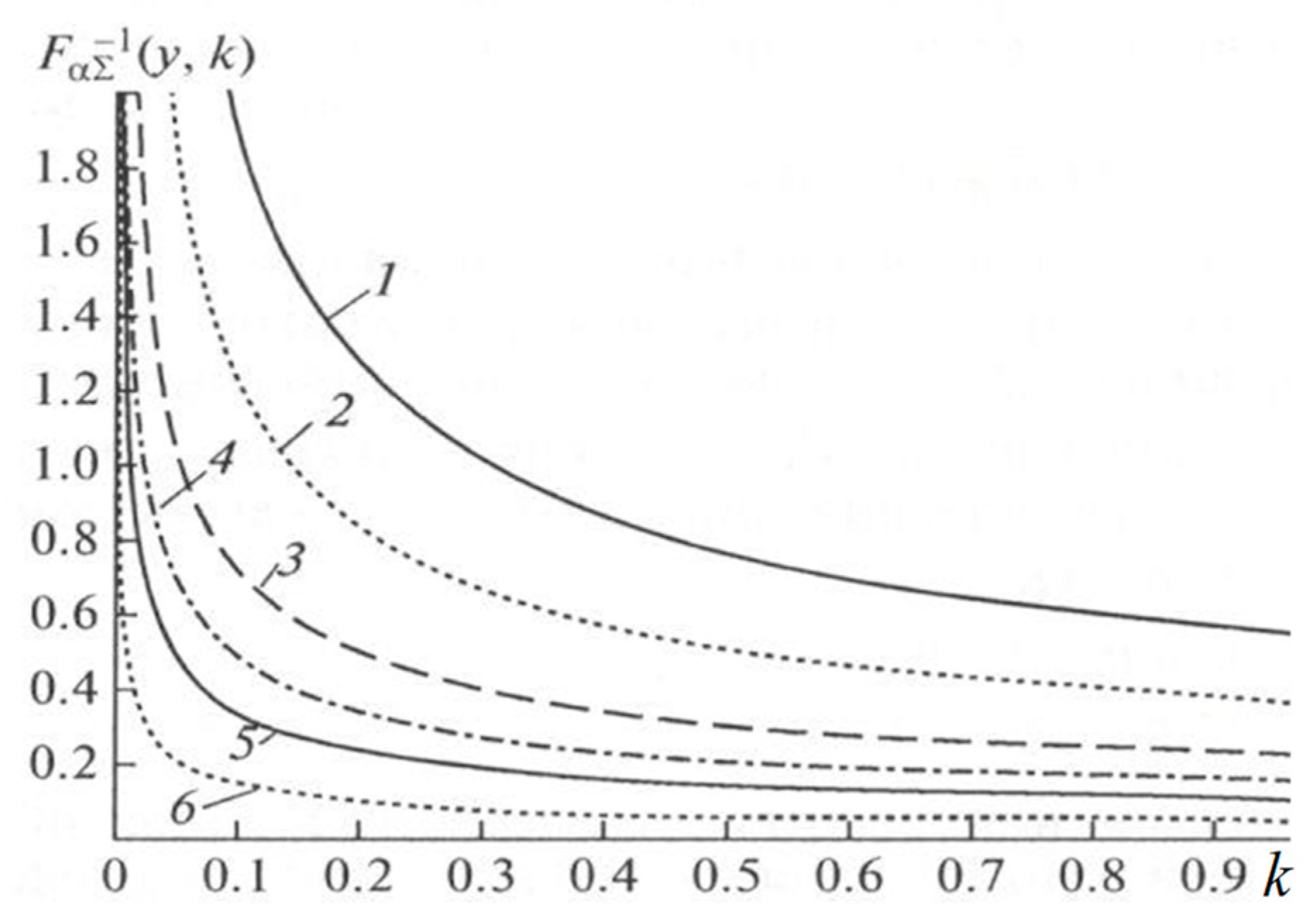

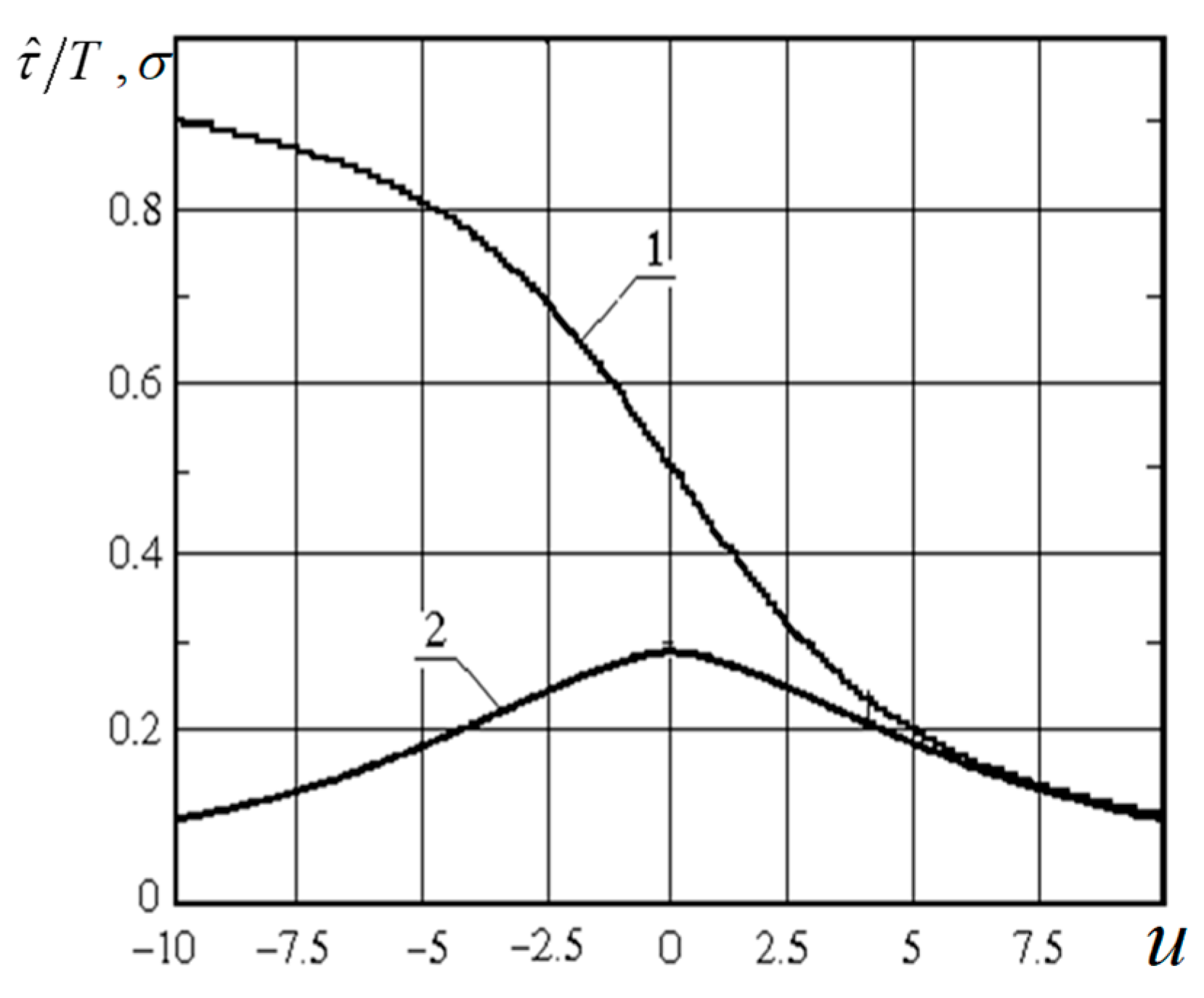

. The graphs of functions

[

23] depending on the parameter

for different

are shown in

Figure 4. There is also an approximate solution of Equation (16) for

obtained by expanding in powers of small

[

23]:

The quality of the approximation Equation (19) with respect to the exact solution is no worse than 10% at

, [

23].

Let at the sampling instant

the sample to have the value

The least favorable next state, according to Equation (14), is the state with the number

. We calculate the quantities

and

by the relation Equation (17), and the value

is selected by the Equation (18). Then the next sampling time is determined in an obvious way

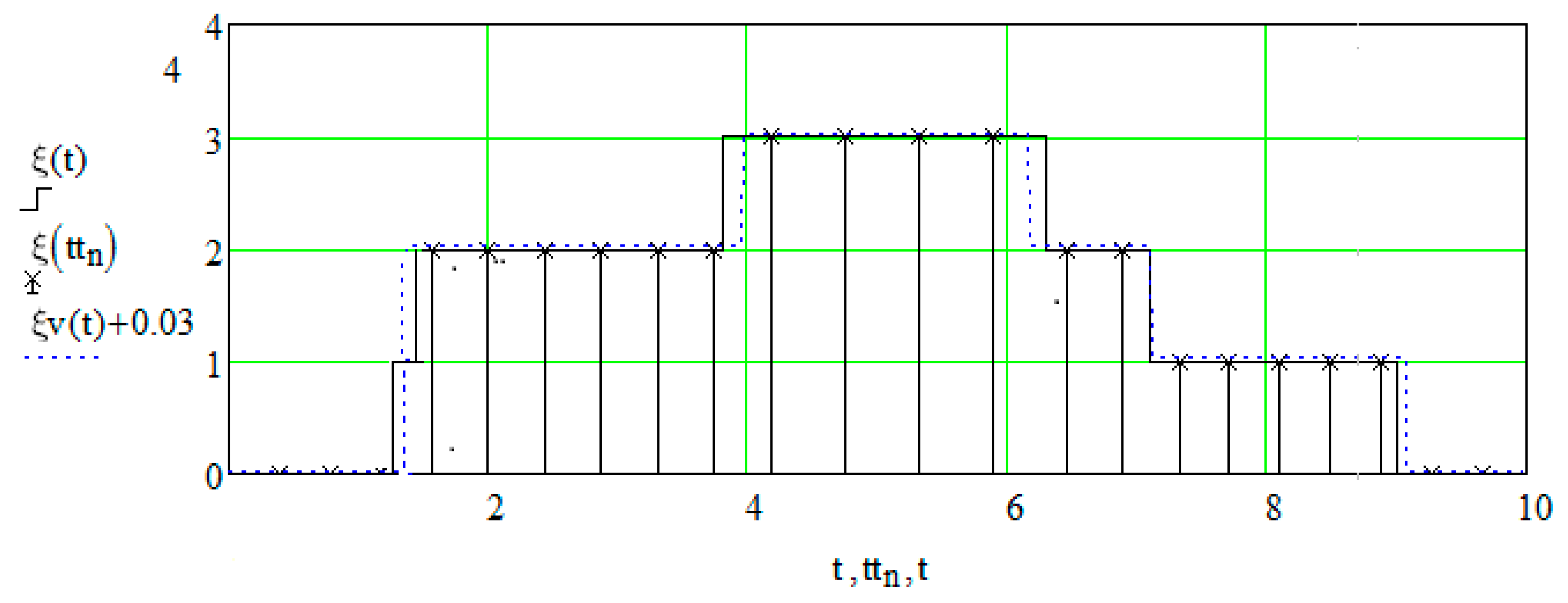

Note that the functions and are calculated in advance. The sampling of is performed similarly. Sampling results are sequences of pairs and similarly

5. Results Recovery of Formative Realizations from Discrete Samples

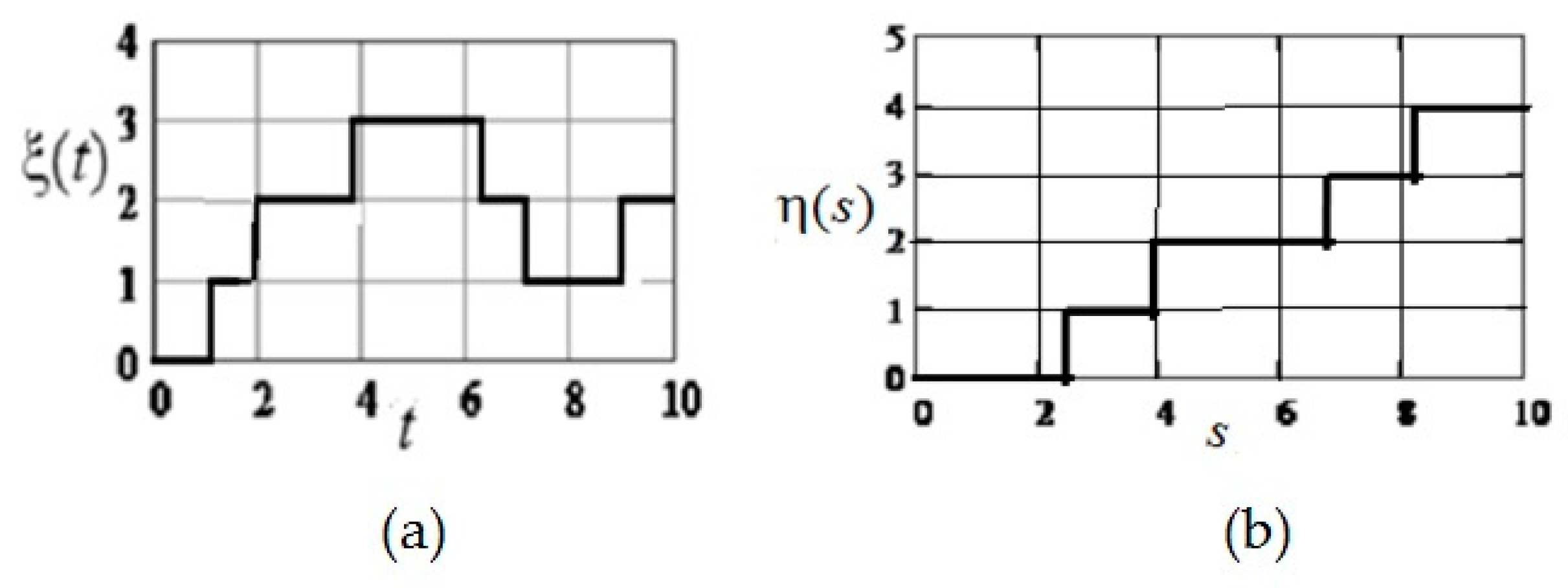

Consider the realization of the process on the axis and denote on it the sampling points that need to be determined: . Suppose that on the sampling interval there is a transition from state to state : (here ).

It is required to estimate the unobservable value

-the random istant of transition from

to

. The best estimate (in terms of minimal error variance) is the expectation of conditional distribution

, provided that

. Due to the homogeneity of the process, we assume that

. The density of the conditional distribution of the point

on the interval

provided that there is only one transition in the interval, is determined by the following relation [

23]:

where

. Equation (20) describes a truncated exponential distribution, which in the case

degenerates into a uniform one. By density Equation (20), the conditional expectation is determined, which is an estimate

with the minimum variance for the transition instant

:

where indicated:

,

We can verify that is an odd function and .

Specifically,

the expression for the variance of the estimate is

Graphs of the relative values of the estimate

and the standard deviation

, as functions of the argument

are shown in

Figure 5.

Using Equation (22), we obtain the estimated values for the process switching points. The restoration is performed according to the values measured in accordance with Equation (12).

For the processes

: if the interval

is the switching interval, i.e.,

, then we determine the value of the estimate of the transition point

using the Equation (22):

where

is the sequence number of the switching interval on the

axis,

The value of the recovered realization

at the point

of the next interval is determined by the relation:

if

.

For the process

: if the interval

is the next transition interval, i.e.,

, then we determine the value of the transition point

estimate using Equation (22):

in Equation (22), instead

we use

,

; instead

we use

;

is the sequence number of the transition interval on the

axis,

The values of the reconstructed realization at points

are as follows:

For the recovered realizations of the processes

and

we determine the reconstructed value of the field realization by Equation (1):