Abstract

Non-negative tensor factorization (NTF) is a widely used multi-way analysis approach that factorizes a high-order non-negative data tensor into several non-negative factor matrices. In NTF, the non-negative rank has to be predetermined to specify the model and it greatly influences the factorized matrices. However, its value is conventionally determined by specialists’ insights or trial and error. This paper proposes a novel rank selection criterion for NTF on the basis of the minimum description length (MDL) principle. Our methodology is unique in that (1) we apply the MDL principle on tensor slices to overcome a problem caused by the imbalance between the number of elements in a data tensor and that in factor matrices, and (2) we employ the normalized maximum likelihood (NML) code-length for histogram densities. We employ synthetic and real data to empirically demonstrate that our method outperforms other criteria in terms of accuracies for estimating true ranks and for completing missing values. We further show that our method can produce ranks suitable for knowledge discovery.

1. Introduction

1.1. Motivation

In recent years, as the variety of data has grown rapidly, the data often contains more than two attributes and there are only non-negative values. For example, purchase data can be constructed as trading amounts of customers × commodities × shops × times. Another example is that web access data can be organized as access times of hosts × users × months. Such data can be conveniently organized as a non-negative high dimensional data tensor and analyzed by means of Non-negative Tensor Factorization (We focus on non-negative CANDECOMP/PARAFAC (CP) decomposition [1] in this paper) (NTF, [2]), which can be thought of as a generalization of well-known methodology of Non-negative Matrix Factorization (NMF, [3]).

For a third-order non-negative data tensor and a non-negative rank , NTF factorizes into three factor matrices , , and . Denoting , , and as the element of and V respectively, the element-wise approximate factorization of the NTF model can be formulated as follows:

Here, represents an element in the approximation error tensor . For simplicity, we denote the non-negative rank as rank in the rest of this paper following the terminology in [4]. Note that this is not the same notion as rank in matrices.

In contrast to NMF, NTF can not only extract the patterns hidden in the data tensor, but also simultaneously analyze multiple factors. For example, in the web access log analysis, three factorized matrices can represent influences of hosts, users and months, in which NTF can analyze not only users’ web behaviors but also its change according to time. As a method of multi-way analysis, NTF has been widely applied to various fields such as signal representations [5], sound source analysis [6] and computer vision [4].

There is a problem that we have to determine the value of R before performing NTF on a data tensor, which will greatly influence the factorized result. In fact, a successful decision of rank R can lead to an effective factorization which extracts vital features as well as eliminates the noise in the data. On the contrary, if the selected rank is too small, NTF will fail to reel off some key features, and if it is too large, we may obtain a result that is much influenced by irregularities of data.

Even though the selection of rank is very important, in most studies on NTF the value of R is simply determined by trial and error or specialists’ insights, and there does not exist a good way to determine R automatically.

1.2. Contribution

The contribution of this paper is summarized as follows:

(A) Proposal of a novel criterion for rank estimation for NTF. In this paper, we propose a method to select an appropriate value of rank for NTF based on the minimum description length (MDL) principle [7]. The MDL principle asserts that the best model must have the minimum code-length to encode the data as well as the model. The reasons why we choose the MDL principle among a number of existing statistical model selection criteria (e.g., AIC [8], BIC [9], cross-validation (CV), etc.) are: (1) it has consistency [10], and (2) it is guaranteed rapid convergence in the framework of probably approximately correct (PAC) learning [11].

Our application of the MDL is not straightforward. It is unique in the following two aspects:

- (A-a)

- Tensor slice based approach: It is not appropriate to employ the MDL principle directly to NTF because the number of elements in factor matrices is too much smaller than that in a data tensor, hence the model complexity might be ignored inappropriately. We call this problem the imbalance problem. Our key idea to resolve this problem is that we first slice the data tensor into multiple tensor slices, namely matrices, then select the rank for each tensor slice, and finally select the largest rank of tensor slices as the best rank of the data tensor. We call this methodology the tensor slice based approach.

- (A-b)

- NML-based code-length calculation: When the goodness of a model is measured in terms of its code-length, the selection of a coding method is important. We specifically utilize the normalized maximum likelihood (NML) code-lengths [12] to encode the results of factorization. This is due to the facts (1) the NML code-length has optimality under the Shtarkov’s minimax regret [13], and (2) it achieves Rissanen’s lower bound on code-length [10].

(B) Empirical demonstration of effectiveness of the proposed criterion. We employ synthetic and real data sets to empirically demonstrate the effectiveness of our proposed method in terms of:

- (B-a)

- Accuracy for estimating true rank: When the true rank that generates the data is known, we measure the goodness of any rank estimation method in terms of its estimation accuracy.

- (B-b)

- Accuracy for completing missing values: When the true rank is unknown, we measure the goodness of any rank estimation method in terms of accuracy of competing missing values.

We compare the proposed method with other criteria such as AIC, BIC, CV in terms of both measures (B-a) and (B-b). We further show using real data sets of web access data and app usage data that our method actually produces suitable ranks so that knowledge discovery can be conducted by looking at the bases in combination with external knowledge.

The next section introduces some related work on rank selection criteria for NTF and NMF. Then, we propose our MDL-based method in Section 3, and presents a comparison method named MDL2stage in Section 4. In Section 5, we show experimental results of our proposed method and other criteria on both synthetic and real data sets to demonstrate the effectiveness of our method. Finally, we summarize the conclusion of this paper in Section 6.

2. Related Work

In most of practical studies on NTF, the value of rank is either decided by trial and error or by specialists’ insights (see e.g., [6,14]). In addition, AIC and BIC have also been used for model selection in NTF [15].

Unlike NTF, there are a number of rank selection methods for NMF. As a special case of NTF, NMF aims to factorize a non-negative data matrix into factor matrices and as , where E is the approximation error matrix. In addition to general methods such as AIC, BIC and cross-validation [16], more involved criterion have been developed to select the rank for NMF, such as the MDL criterion with latent variable completion [17] and the non-parametric Bayes method [18]. The MDL code-length under the assumption of model regularity is studied in [19].

Squires et al. [20] proposed two rank selection methods for NMF based on the MDL principle. In this study, data matrix X is considered as the message from a transmitter to a receiver and it is sent in such a way that a transmitter send W, H and E and a receiver reconstruct X from them. When rank R is low, encoding of W and H requires shorter code-lengths while that of E does longer one. On the contrary, when R is high, encoding of W and H requires longer code-lengths while that of E does shorter one. The MDL principle is used to find the best solution of the trade-off between the accuracy and the complexity. Squires et al. proposed two methods for calculating code-lengths with Shannon information: where the probability p is known in advance. Note that the NML code-length we use can be thought of as an extension of Shannon information into the situation where the parameter of probability distribution is unknown in advance [10].

3. Proposed Method

This section proposes an MDL-based rank estimation method for NTF. To do this, we extend the study on NMF by [20] into NTF in a non-trivial way. As noted in Section 1.2, in the case of tensors, we may suffer from the imbalance problem, that is, the number of elements in factor matrices is too much smaller than that of elements in a data tensor. For instance, in case of and rank , error tensor has = 125,000 elements, while the total number of elements in three factor matrices is only . In such cases, compared with the code-length for the error tensor, those of factor matrices are too small to have an influence on the total result. Therefore, the NTF-based rank selection methods tend to choose the model that best fits the data and ignore the complexity of model in some way. As a consequence, the trade-off between complexity and errors cannot be well formalized.

Our key idea is to take the tensor slice based approach. The overall flow of our method is summarized as follows: We first produce a number of tensor slices from a non-negative data tensor, then consider those slices as non-negative data matrices and employ NMF to factorize them. Next, we select a rank for each tensor slice so that the total code-length is minimized, and finally select the largest one among the selected ranks for slices as the rank of the original tensor. Note that we calculate the code-length with the NML code-length, rather than Shannon information used in [20].

First of all, for a third-order non-negative tensor , the three kinds of its two-dimensional slices are: Horizontal slices , lateral slices , and frontal slices . Each tensor slice can be treated as a matrix to be factorized as follows:

where represents a tensor slice of the non-negative data tensor . and are the two non-negative factor matrices, both of which consist of R factors. denotes the error matrix. When the data tensor has a true rank R, any has the rank no more than R. Therefore, we could select appropriate ranks for all s and select the maximum value among them as the rank of the tensor.

Next, we select a rank of so that the total code-length is minimum. The total code-length of with rank R is given by:

where is the code-length required for encoding x under the prefix condition. In order to calculate the code-lengths for elements in and , we need to discretize them with an appropriate precision , since they are real-valued numbers with unlimited precision, which cannot be encoded. For a given precision , the elements in each matrix are first vectorized to build a vector, then discretized into bins with precision to create a histogram. Letting the minimum and maximum of elements be and , respectively, the histogram has bins:

where is the number of bins.

Then we employ the NML code-length in order to compute the code-length of , each of which denotes an element assigned to a bin. The NML code-length is a theoretically reasonable coding method when the parameter value is unknown. Let be the probability distribution with parameter under the model . According to [12], given a data sequence , the NML code-length for under the model is given by:

where denotes the maximum likelihood estimator of from . The second term in Equation (3) is generally difficult to calculate. Rissanen [12] derived its asymptotic approximation formula as follows:

where k is the number of parameters in model , denotes the determinant of the Fisher information matrix, and satisfies uniformly over .

For and , the zero terms in factor matrices are generally much more than the non-zero terms. Thus we separately compute the code-length of zero terms, namely the first bin in the histogram, and non-zero terms as follows:

where is either or . represents the zero terms in , and denotes the non-zero terms in .

For the zero terms or the first bin in the histogram, by applying Equations (3) and (4) into the Bernoulli model, their NML code-length is calculated as follows:

where n is the total number of elements in or , and denotes the number of zero values in this matrix.

For the binned data in , and , by applying Equations (3) and (4) into histogram densities with s bins, we can compute their NML code-lengths as:

where is the number of elements in the i-th bin, and is the gamma function. is the code-length of an integer [10], which can be computed as:

where the summation is taken over all the positive iterates. Using Equations (5) and (6), the total description length of can be calculated as follows:

After we apply the MDL principle to select the rank with the shortest total code-length for each tensor slice, we select the largest one from all of slices’ ranks as the rank of the tensor. This estimate can be seen as an lower bound of the rank of the tensor from the fact that the ranks of slices is smaller than that of the data tensor, that is, if the rank of tensor X is R and the decomposition is represented as , each slice can be represented as with rank R matrices and . For example, each element of can be represented as , where and .

We show the entire procedure in Algorithm 1.

| Algorithm 1 Rank Selection with Tensor Slices |

|

4. Comparison Method: MDL2stage

We further developed a novel algorithm, MDL2stage, as a comparison method. MDL2stage is based on tensor slice similarly with the proposed method, but it encodes the factorized results of NMF via the two-stage code-length. All of its calculation is exactly the same as our proposed method except the way of encoding the error matrix and the non-zero terms in the factor matrices.

In MDL2stage, we fit a parametric probability distribution to the histogram to estimate the probability density of each bin. Generally, we assume that elements in the histogram generated by the error matrix follow the normal distribution, and assume that the non-zero elements in the histograms of two factor matrices and are gamma-distributed. Then we use two-stage code-length to calculate the description length of and the non-zero terms in and as follows:

where is or non-zero terms in or , denotes the estimated probability of an element to be in the i-th bin.

The total code-length for a tensor slice with rank R is:

Again, we apply the MDL principle to choose the rank with the shortest total code-length for each slice, and select the greatest rank from all of slices’ ranks as the rank of tensor.

As for the computational complexity for our proposed method and MDL2stage, the cost of factorizing a tensor slice with size is for each iteration. Since there are K such tensor slices, the total computational complexity of the two methods based on tensor slice is per iteration. By similar arguments, performing NMF on all tensor slices costs , where denotes the number of iterations in NMF.

Although theoretically we have to perform NMF on slices, numerical experiments have proven that actually we only need to factorize K tensor slices which have the biggest size , where we assume that K is the smallest value among I, J and K. This is because that smaller tensor slices usually give comparatively low ranks. Therefore, when we choose the largest rank over all ranks of slices, whether or not to use tensor slices with smaller sizes does not influence the final result.

5. Experiments

5.1. Comparison Methods

In addition to our proposed method and MDL2stage, we employ other five criteria for comparison. They are categorized into two classes: One is the based on tensor slice and the other is based on tensor.

For tensor slice based criteria, we first slice the data tensor into tensor slices , then perform NMF on the sliced matrices to obtain factor matrices and , and the error matrix . There are four comparison methods based on tensor slice. Two of them are derived from AIC (Akaike’s information criteria) [8], which we denote as AIC1 and AIC2. In order to apply AIC, we consider two probabilistic models where is generated by , and elements in follow the histogram model in our proposed method and MDL2stage, respectively. Therefore, the leading terms in AIC1 and AIC2 are the same as those in Equations (6) and (9) for , but the remaining penalty terms are both . The other two tensor slice based criteria are derived from BIC (Bayesian information criteria) [9], which we denote as BIC1 and BIC2. The leading terms in them are the same as those in AIC1 and AIC2, but the remaining penalty terms are replaced with , where n is the number of elements in . We select the rank which minimizes those criteria as the tensor slice’s rank, and choose the greatest one from all of the slice’s ranks as the rank of the tensor.

The only tensor-based method is cross-validation, it leaves out some elements from the tensor and then performs NTF to factorize the tensor with missing cells. It then calculates the prediction error between the predicted values of the left out elements and their original values. Therefore, the rank with the lowest prediction error is chosen as the rank of the tensor.

We made the implementation of our proposed method and all of the comparison methods except cross validation available (https://github.com/fuyunhui/Model-Selection-for-NTF).

5.2. Experiments on Synthetic Data

We first evaluate our method in terms of accuracy for estimating true ranks using synthetic data. In this experiment, we employed SimTensor [21] to generate synthetic data tensors. To create a third-order non-negative artificial tensor , we used the “stochastic” module to generate the random factor matrices , , and with a given true rank , then the synthetic tensor was obtained by computing the sum of multi-way outer products. We created several non-negative artificial tensors with different true rank and the same size . All of the synthetic tensors were applied sparse white noise, and the true rank was set from 15 to 40, which were comparatively low because we want to evaluate the performance of each rank selection method accurately.

As we knew the true value of rank for synthetic data tensors, following studies in [17], the performance of any rank selection method can be evaluated by its benefit value. Benefit measures how close the predicted rank is to the true rank. The definition of benefit is given as follows:

where T denotes the number of trials, which was set to be 10 in this experiment, and represents the estimated rank in the t-th trial. If , then the benefit becomes zero. We set and precision .

The benefits for seven criteria versus six different values of true rank are shown in Table 1. Bold numbers indicate the best result for each true rank. Obviously, when the true rank was very small compared with the size of the tensor, cross-validation gave a good result, but as increased, the rank selected by our proposed method was closest to .

Table 1.

Benefits for seven criteria versus six different values of true rank. Boldfaces describe best performances.

The reason that our method performed low benefits in case of small true rank can be considered as follows. When the true rank is extremely small and the noise is relatively large compared with the Frobenius norm of the data tensor , the code-length of error tensor becomes to dominate the entire code-length even when the true rank is given. In such cases, methods based on the MDL principle such as our methods and MDL2stage leads to overestimate the true rank.

In order to demonstrate argumentation above, we performed additional experiments with smaller noises. Using 0.5% sparse white noise instead of 1% sparse noise, we evaluated the benefit of the proposed method and MDL2stage in case of . The proposed method exhibited 0.82 and 0.89 of benefit for and 20, whereas MDL2stage exhibited 0.69 and 0.62. Therefore, we conclude that the reason that the proposed method underperformed when the true rank is extremely small is because the noise is relatively large to the size of data tensor. Moreover, we can see MDL2stage tends to underestimate the true rank and these effects have canceled out in this experiments of extremely small true rank with large noise.

Note that the computational complexity of cross-validation is where t is the number of repeating leaving out missing cells and summing the prediction error. Thus cross-validation will take too much time for large tensors and high candidate ranks. Moreover, AIC1 and AIC2 tended to choose more than ten larger rank than , so their benefits were always 0. Thus, we have demonstrated that our proposed method is able to choose a suitable rank for large synthetic data tensors with a comparatively small but not extremely small true rank. We also experimentally confirmed that, in spite of the fact that our estimate is based on the lower bound of the tensor rank, our proposed method does not suffer terribly from underestimation.

5.3. Experiments on Real Data

5.3.1. On-Line Retail Data Set

The on-line retail data set [22] has been published by UCI machine learning repository [23]. It consists of transnational logs for transactions occurring between 12/2010 and 12/2011 for an on-line retail. We constructed a third-order non-negative data tensor containing 911 commodities, 671 customers and 12 months. So an element indicated the monthly trading amount of the i-th commodity bought by the j-th customer in the k-th month.

Using this data set, we evaluated the performance of our method. The evaluation metric was the accuracy of completing missing values since the true rank was unknown in this case. This accuracy was measured as follows: We first estimated the rank of tensor with different rank selection criteria, then left out some missing cells from the data tensor and conducted cross-validation to compute the prediction error of missing values for the selected ranks. We repeated this process ten times by randomly selecting 5% missing cells to calculate the average prediction errors for all the methods. We compared our method with other methods, except cross-validation. This is because the computation cost of cross-validation is too high especially for tensors with large size and a high rank. The precision was set to be 0.001.

Table 2 shows ranks estimated by the six criteria and their prediction errors. AVE R and STDDEV mean the average value of estimated ranks and its standard deviation through ten trials, respectively. Prediction error for the selected rank is denoted as Error. We see that our proposed method obtained the lowest prediction error. The value of prediction errors seems to be large. Note, however, that the squared Frobenius norm of the data tensor is . So we could say that the prediction error of our proposed method was a suitable value. In addition, the number of patterns chosen by our method was 37 in average, which might be a sensible choice for 911 commodities and 671 customers, especially compared with 7 for BIC-based criteria and 500 for MDL2stage and the AIC-based criteria.

Table 2.

Ranks estimated by six criteria and their prediction errors. Boldfaces describe best performances.

5.3.2. Web Access Data Set

We also evaluate the performance of our method in terms of knowledge discovery. We employed the data set containing web access logs during 6 months provided by VALUES, Inc. (https://www.valuesccg.com/). Those web access logs were organized into a third-order data tensor . The three axes of were user, host and time period. So an element represented the count of user i accessed host j during time period k, and the size of was . Here, we split a week into weekdays and weekends, and split a day into 12 time periods, so there were 24 time periods in total.

We make the following process for knowledge discovery.

Step 1:Conduct NTF to extract bases. We conduct NTF with rank R estimated by a criteria. The factor matrix corresponding to the axis of user can be considered as R patterns of users.

Step 2:Specify a pattern of profiles and time periods. We are given external knowledge about users’ profiles (e.g., age, gender, occupation) in addition to the data tensor. Thus we specify a pattern of combinations of profile and time period such as “female & daytime” that we like to investigate.

Step 3:Give matching scores to each base. For each base obtained in Step 1, we give it a score to indicate how well the user and period in this base match the pattern specified in Step 2. The score is calculated as follows: First compute a matching score of a user belonging to a base, then multiply the score by the user’s weight in the base, finally sum all the matching scores up to get the total score. The matching score is calculated as +1 or more if the attribute matches, or less otherwise.

Step 4:Knowledge discovery from the base with the largest score. Once scores are calculated for all the bases, the base that best matches the pattern specified in Step 2 can be found. We can characterize the pattern by analyzing such a base.

In addition, we generated random vectors and specified patterns for user and time period as baselines. We set precision , and we repeated each experiment 10 times.

Table 3 shows ranks estimated by six criteria and their scores for four specified patterns. The bottom 2 rows show the baselines, and bold numbers indicate results having the largest scores. We observed that our proposed method achieved best scores for all the specified patterns except “male at day”. Therefore we consider our proposed method selected most suitable ranks whereas AIC-based methods and MDL2stage preferred larger ranks, and BIC-based methods inclined to smaller ranks. For the “male at day” pattern, we checked the highest scored base with rank and found that its time period weights had a non-zero value only at one point, which led to a high score although it is not reasonable as a meaningful web access pattern.

Table 3.

Rank estimated by 6 criteria and their scores for web data. Boldfaces describe best performances.

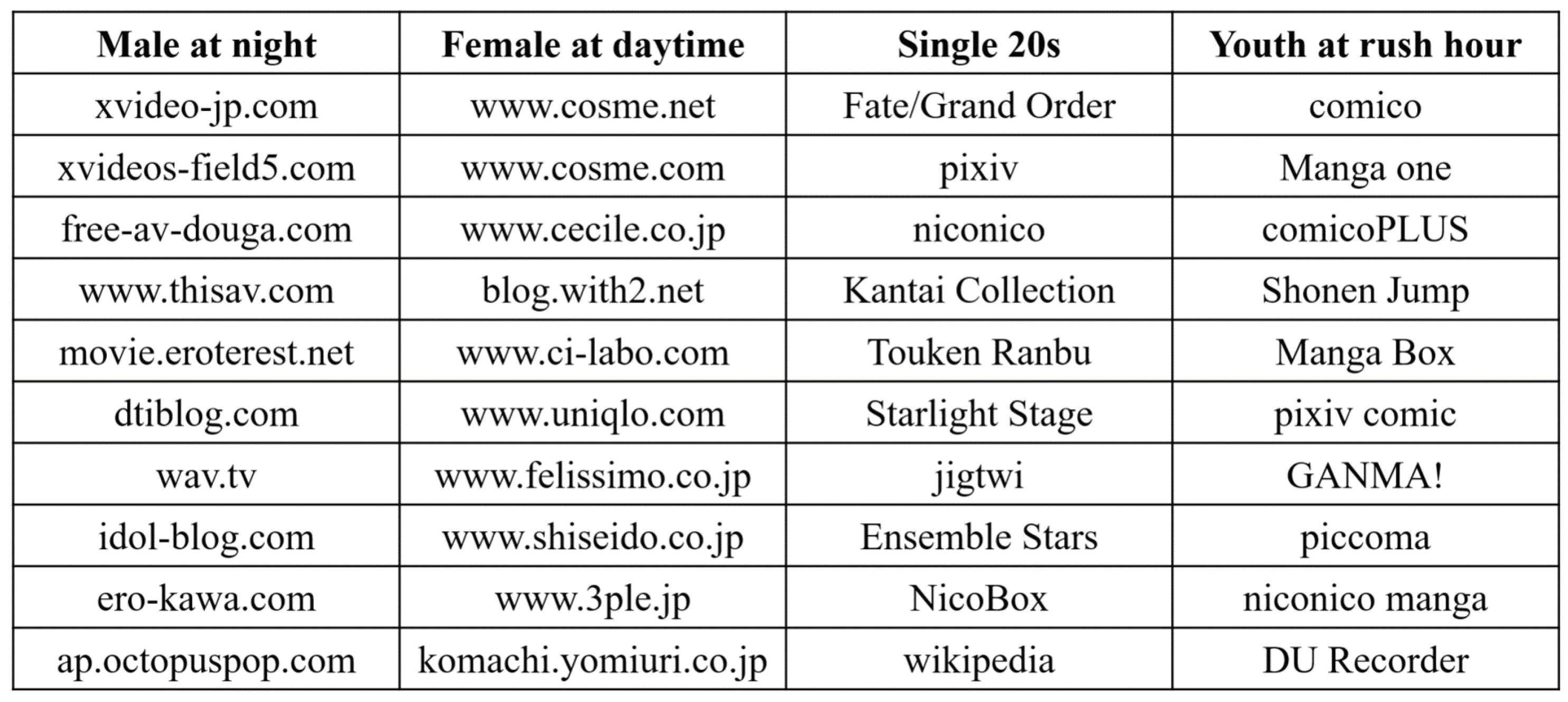

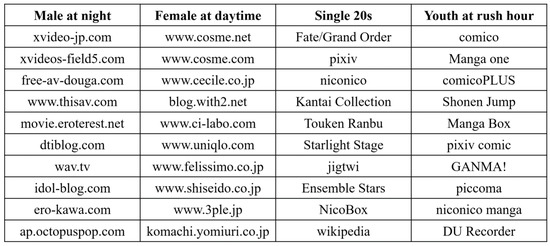

The left two columns in Figure 1 show the top 10 highest valued web pages in bases with the highest scores for “male at night” pattern and “female at day” pattern, respectively. It is clearly observed that all of the top 10 valued websites in the first pattern were related to adult video, while those in the second pattern were mainly related to cosmetic and fashion. Actually, most of the top 100 highest valued users in the “male at night” pattern were men who lived in Kanto area in Japan with ages from 35 to 54 years old, and the access time was distributed only at midnight. In addiction, it turned out that most of the top 100 highest valued users in “female at day” pattern were middle-aged women, and the access time was distributed from 8 a.m. to 8 p.m. For simplicity and the limitation of paper length, we do not show the top 10 highest valued web pages for “male at day” pattern and “female at night” pattern. Thus, we observe that our proposed method produced a reasonable rank while extracting bases better matching the specified patterns.

Figure 1.

The top 10 valued web pages and apps in 4 winner bases.

5.3.3. App Usage Data Set

We employed the data set consisting of app usage logs during 1 year provided by VALUES, Inc. and applied the same analysis as the web access data. We constructed a third-order data tensor containing 2593 users, 2118 apps and 12 time periods of each day. Then an element denotes the count of user i used app j during time period k. We set precision , and repeated each trial 10 times to calculate the average rank and its standard deviation.

Table 4 shows ranks estimated by six criteria and their scores.This time, our proposed method found best bases for both two specified patterns. The right two columns in Figure 1 show the top ten highest valued apps in bases with the highest scores. For the “single 20s” pattern, most of the top ten highest valued apps are popular smart phone games that target the crowd who love animation and comics. For the “Youth at rush hour” pattern, all of the top ten highest valued apps except the last one belong to the comic category in Google Play because in Japan, we can find office worker using their smart phone to watch comics at rush hour everywhere. Thus, we observe that our proposed method selected more suitable rank than other criteria for the app usage data set as well.

Table 4.

Rank estimated by 6 criteria and their scores for app data. Boldfaces describe best performances.

6. Conclusions

This paper has proposed a novel rank selection method for NTF on the basis of the MDL principle. Our method is unique in the tensor slice approach and the NML-based code-length calculation. Using synthetic and real data sets, we have demonstrated the effectiveness of our proposed method in terms of accuracies for estimating true rank and completing missing values. The proposed method has turned out to perform well, especially when the size of the data tensor is large and the true rank is not extremely small. We have also shown that our method produces suitable ranks for knowledge discovery. Future work can include the development of more computationally efficient variants of our method. Recently the decomposed normalized maximum likelihood (DNML) codelength criterion [24] has been developed for latent variable modeling on the basis of the MDL principle. It has been verified that DNML is widely applicable and has good theoretical properties. It remains for future study how we can apply DNML to the problem of tensor rank estimation.

Author Contributions

Conceptualization, S.M. and K.Y.; methodology, Y.F., S.M. and K.Y.; software, Y.F. and S.M.; validation, Y.F., S.M. and K.Y.; formal analysis, Y.F., S.M. and K.Y.; investigation, Y.F.; resources, K.Y.; data curation, Y.F. and S.M.; writing—original draft preparation, Y.F.; writing—review and editing, Y.F., S.M. and K.Y.; visualization, Y.F.; supervision, S.M. and K.Y.; project administration, K.Y.; funding acquisition, K.Y.

Funding

This research was funded by JST-CREST grant number JPMJCR1304 and JST AIP grant number JPMJCR19U4.

Conflicts of Interest

The authors declare no conflict of interest.The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript, or in the decision to publish the results.

Abbreviations

The following abbreviations are used in this manuscript:

| NTF | Non-negative Tensor Factorization |

| MDL | Minimum Description Length |

| NML | Normalized Maximum Likelihood |

| NMF | Non-negative Matrix Factorization |

| AIC | Akaike Information Criterion |

| BIC | Bayesian Information Criterion |

| CV | Cross Validation |

References

- Kiers, H.A.L. Towards a standardized notation and terminology in multiway analysis. J. Chemom. 2000, 14, 105–122. [Google Scholar] [CrossRef]

- Welling, M.; Weber, M. Positive tensor factorization. Pattern Recognit. Lett. 2001, 22, 1255–1261. [Google Scholar] [CrossRef]

- Lee, D.D.; Seung, H.S. Learning the parts of objects by nonnegative matrix factorization. Nature 1999, 401, 788–791. [Google Scholar] [CrossRef] [PubMed]

- Shashua, A.; Hazan, T. Non-negative tensor factorization with applications to statistics and computer vision. In Proceedings of the 22nd International Conference on Machine Learning (ICML ’05), Bonn, Germany, 7–11 August 2005; pp. 792–799. [Google Scholar] [CrossRef]

- Cichocki, A.; Zdunek, R.; Choi, S.; Plemmons, R.; Amari, S.-i. Non-Negative Tensor Factorization using Alpha and Beta Divergences. In Proceedings of the 2007 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP ’07), Honolulu, HI, USA, 15–20 April 2007; Volmue 3, pp. 1393–1396. [Google Scholar]

- Barker, T.; Virtanen, T. Semi-supervised non-negative tensor factorisation of modulation spectrograms for monaural speech separation. In Proceedings of the 2014 International Joint Conference on Neural Networks (IJCNN), Beijing, China, 6–11 July 2014; pp. 3556–3561. [Google Scholar]

- Rissanen, J. Modeling By Shortest Data Description. Automatica 1978, 14, 465–471. [Google Scholar] [CrossRef]

- Akaike, H. A new look at the statistical model identification. IEEE Trans. Automat. Control 1974, 19, 716–723. [Google Scholar] [CrossRef]

- Schwarz, G. Estimating the Dimension of a Model. Ann. Stat. 1978, 6, 461–464. [Google Scholar] [CrossRef]

- Rissanen, J. Optimal Estimation of Parameters; Cambridge University Press: London, UK, 2012. [Google Scholar]

- Yamanishi, K. A learning criterion for stochastic rules. Mach. Learn. 1992, 9, 165–203. [Google Scholar] [CrossRef]

- Rissanen, J. Fisher information and stochastic complexity. IEEE Trans. Inf. Theor. 1996, 42, 40–47. [Google Scholar] [CrossRef]

- Shtarkov, Y. Universal sequential coding of single messages. Problemy Peredachi Informatsii 1987, 23, 3–17. [Google Scholar]

- Kirbiz, S.; Günsel, B. A multiresolution non-negative tensor factorization approach for single channel sound source separation. Signal Process. 2014, 105, 56–69. [Google Scholar] [CrossRef]

- Goutte, C.; Amini, M.R. Probabilistic tensor factorization and model selection. In Proceedings of the Advances in Neural Information Processing Systems 23 (NIPS 2010), Hyatt Regency, BC, Canada, 6–11 December 2010; pp. 1–4. [Google Scholar]

- Owen, A.B.; Perry, P.O. Bi-cross-validation of the SVD and the nonnegative matrix factorization. Ann. Appl. Stat. 2009, 3, 564–594. [Google Scholar] [CrossRef]

- Ito, Y.; Oeda, S.; Yamanishi, K. Rank Selection for Non-negative Matrix Factorization with Normalized Maximum Likelihood Coding. In Proceedings of the 2016 SIAM International Conference on Data Mining (SDM 2016), Miami, FL, USA, 5–7 May 2016; pp. 720–728. [Google Scholar]

- Hoffman, M.D.; Blei, D.M.; Cook, P.R. Bayesian Nonparametric Matrix Factorization for Recorded Music. In Proceedings of the 27th International Conference on Machine Learning (ICML-10), Haifa, Israel, 21–24 June 2010; pp. 439–446. [Google Scholar]

- Tanaka, S.; Kawamura, Y.; Kawakita, M.; Murata, N.; Takeuchi, J. MDL Criterion for NMF with Application to Botnet Detection. In Proceedings of the International Conference on Neural Information Processing, Kyoto, Japan, 16–21 October; Springer: Berlin, Germany, 2016; pp. 570–578. [Google Scholar]

- Squires, S.; Prügel-Bennett, A.; Niranjan, M. Rank Selection in Nonnegative Matrix Factorization using Minimum Description Length. Neural Comput. 2017, 29, 2164–2176. [Google Scholar] [CrossRef] [PubMed]

- Fanaee-T, H.; Gama, J. SimTensor: A synthetic tensor data generator. arXiv 2016, arXiv:1612.03772. [Google Scholar]

- Chen, D.; Sain, S.L.; Guo, K. Data mining for the online retail industry: A case study of RFM model-based customer segmentation using data mining. J. Database Market. Custom. Strateg. Manag. 2012, 19, 197–208. [Google Scholar] [CrossRef]

- Dua, D.; Graff, C. UCI Machine Learning Repository, University of California, Irvine, School of Information and Computer Sciences. 2019. Available online: http://archive.ics.uci.edu/ml (accessed on 27 June 2019).

- Yamanishi, K.; Wu, T.; Sugawara, S.; Okada, M. The decomposed normalized maximum likelihood code-length criterion for selecting hierarchical latent variable models. Data Min. Knowl. Discov. 2019, 33, 1017–1058. [Google Scholar] [CrossRef]

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).