Abstract

We establish a universal property of logarithmic loss in the successive refinement problem. If the first decoder operates under logarithmic loss, we show that any discrete memoryless source is successively refinable under an arbitrary distortion criterion for the second decoder. Based on this result, we propose a low-complexity lossy compression algorithm for any discrete memoryless source.

1. Introduction

In the lossy compression problem, logarithmic loss is a criterion allowing a "soft" reconstruction of the source, a departure from the classical setting of a deterministic reconstruction. In this setting, the reconstruction alphabet is the set of probability distributions over the source alphabet. More precisely, let $x$ be the source symbol from the source alphabet $\mathcal{X}$, and let $q$ be the reconstruction symbol, which is a probability measure on $\mathcal{X}$. Then the logarithmic loss is given by

$$\ell(x, q) = \log\frac{1}{q(x)}.$$

Clearly, if the reconstruction $q$ assigns a small probability to the true source symbol $x$, the loss will be large.

Although logarithmic loss plays a crucial role in the theory of learning and prediction, relatively little work has been done in the context of lossy compression, apart from the two-encoder multi-terminal source coding problem under logarithmic loss [1,2] and recent work on the single-shot approach to lossy source coding under logarithmic loss [3]. Note that lossy compression under logarithmic loss is closely related to the information bottleneck method [4,5,6]. In this paper, we focus on universal properties of logarithmic loss in the context of successive refinement.

Successive refinement is a network lossy compression problem where one encoder wishes to describe the source to two decoders [7,8]. Instead of having two separate coding schemes, the successive refinement encoder designs a code for the decoder with a weaker link, and sends extra information to the second decoder on top of the message of the first decoder. In general, successive refinement coding cannot do as well as two separate encoding schemes optimized for the respective decoders. However, if we can achieve the point-to-point optimum rates using successive refinement coding, we say the source is successively refinable.

Although necessary and sufficient conditions for successive refinability are known [7,8], proving (or disproving) successive refinability of a source is not a simple task. Equitz and Cover [7] found a discrete source that is not successively refinable using a problem due to Gerrish [9]. Chow and Berger found a continuous source that is not successively refinable, namely a Gaussian mixture [10]. Lastras and Berger showed that all sources are nearly successively refinable [11]. However, only a few sources are known to be successively refinable. In this paper, we show that any discrete memoryless source is successively refinable as long as the weaker link employs logarithmic loss, regardless of the distortion criterion used for the stronger link.

In the second part of the paper, we show that this result can be used to design a lossy compression algorithm with low complexity. Recently, the idea of successive refinement has been applied to reduce the complexity of point-to-point lossy compression algorithms. Venkataramanan et al. proposed a lossy compression scheme for Gaussian sources where the codewords are linear combinations of sub-codewords [12]. No and Weissman also proposed a low-complexity lossy compression algorithm for Gaussian sources using extreme value theory [13]. Both algorithms describe the source successively and achieve low complexity. Roughly speaking, a successive refinement algorithm requires a smaller codebook. For example, the naive random coding scheme has a codebook of size $e^{nR}$ when the blocklength is $n$ and the rate is $R$. On the other hand, if we can design a successive refinement scheme with half the rate in the weaker link, then each of the two sub-codebooks has size $e^{nR/2}$. Thus, the overall codebook size is $2e^{nR/2}$. The above idea can be generalized to successive refinement schemes with $L$ decoders [12,14].
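The codebook sizes in this example can be compared directly. The following Python sketch is only an illustration (the helper name `log_codebook_size` is hypothetical); it assumes every stage uses an equal share of the total rate, so that splitting into $L$ stages gives $L e^{nR/L}$ codewords, and it works with natural logarithms (nats) so that $e^{nR}$ is never formed explicitly.

```python
import math


def log_codebook_size(n: int, rate: float, stages: int = 1) -> float:
    """Natural log of the total number of codewords when a code of blocklength n
    and rate `rate` (nats/symbol) is split into `stages` sub-codebooks of equal
    rate rate/stages, each containing e^{n * rate / stages} codewords."""
    if n <= 0 or rate < 0 or stages < 1:
        raise ValueError("need n > 0, rate >= 0, stages >= 1")
    # stages * e^{n * rate / stages}  ->  log(stages) + n * rate / stages
    return math.log(stages) + n * rate / stages


n, R = 100, 0.5
print(log_codebook_size(n, R))     # naive scheme: log e^{nR} = nR
print(log_codebook_size(n, R, 2))  # two stages: log(2 e^{nR/2})
```

For $n = 100$ and $R = 0.5$ nats, the naive codebook has $e^{50}$ codewords while the two-stage split has $2e^{25}$; comparing in the log domain stays valid even for blocklengths where $e^{nR}$ would overflow a floating-point number.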

The universal property of logarithmic loss in successive refinement implies that, for any point-to-point lossy compression of a discrete memoryless source, we can insert a virtual intermediate decoder (weaker link) under logarithmic loss without any rate loss at the actual decoder (stronger link). As discussed above, this property allows us to design a low-complexity lossy compression algorithm for any discrete source and distortion pair. Note that previous works focused only on specific source and distortion pairs, such as a binary source with Hamming distortion.

The remainder of the paper is organized as follows. In Section 2, we revisit some of the known results pertaining to logarithmic loss. Section 3 is dedicated to successive refinement under logarithmic loss in the weaker link. In Section 4, we propose a low complexity compression scheme that can be applied to any discrete lossy compression problem. Finally, we conclude in Section 5.

Notation: $X^n$ denotes an $n$-dimensional random vector $(X_1, X_2, \ldots, X_n)$, while $x^n$ denotes a specific possible realization of the random vector $X^n$. $\mathrm{supp}(X)$ denotes the support of a random variable $X$. Also, $Q$ denotes a random probability mass function, while $q$ denotes a specific probability mass function. We use the natural logarithm and measure information in nats rather than bits.

2. Preliminaries

2.1. Successive Refinability

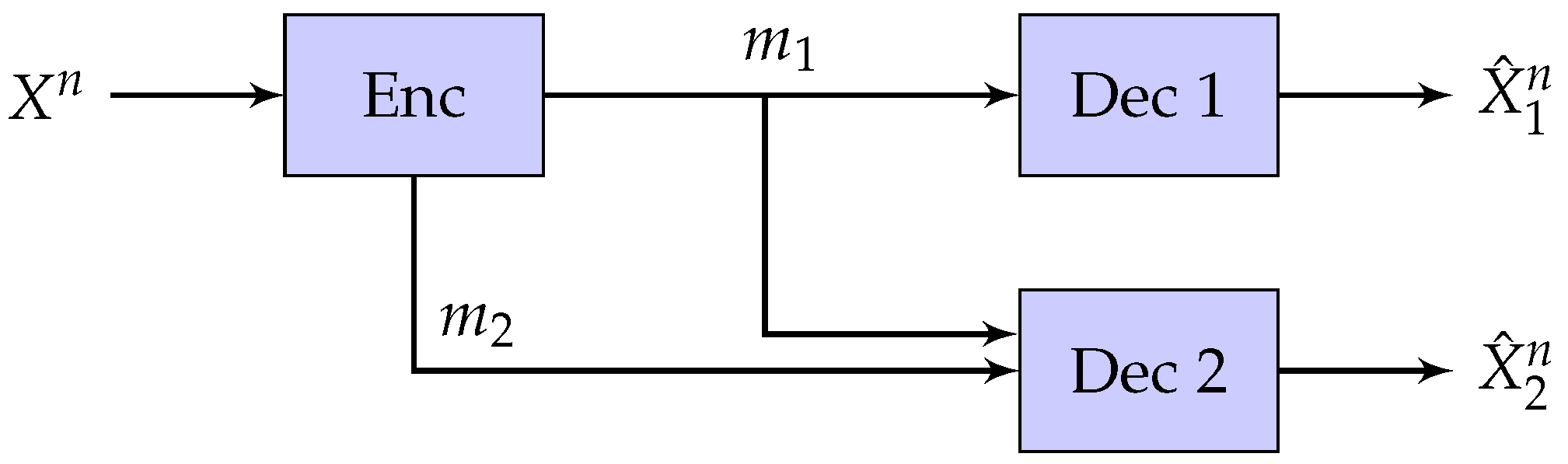

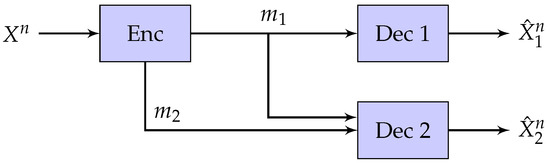

In this section, we review the successive refinement problem with two decoders. Let the source $X^n$ be an i.i.d. random vector with distribution $p_X$. The encoder wants to describe $X^n$ to two decoders by sending a pair of messages $(m_1, m_2)$, where $1 \le m_i \le M_i$ for $i \in \{1, 2\}$. The first decoder reconstructs $\hat{X}_1^n(m_1) \in \hat{\mathcal{X}}_1^n$ based only on the first message $m_1$. The second decoder reconstructs $\hat{X}_2^n(m_1, m_2) \in \hat{\mathcal{X}}_2^n$ based on both $m_1$ and $m_2$. The setting is described in Figure 1.

Figure 1. Successive refinement.

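Let $d_i : \mathcal{X} \times \hat{\mathcal{X}}_i \to [0, \infty)$ be a distortion measure for the $i$-th decoder. The rates $(R_1, R_2)$ of the code are simply defined as

$$R_1 = \frac{1}{n}\log M_1, \qquad R_2 = \frac{1}{n}\log M_1 M_2.$$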

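An $(n, R_1, R_2, D_1, D_2, \epsilon)$-successive refinement code is a coding scheme with block length $n$ and excess distortion probability $\epsilon$, where the rates are $(R_1, R_2)$ and the target distortions are $(D_1, D_2)$. Since we have two decoders, the excess distortion probability is defined by $\Pr\left[d_1(X^n, \hat{X}_1^n) > D_1 \text{ or } d_2(X^n, \hat{X}_2^n) > D_2\right]$.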

Definition 1.

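A rate-distortion tuple $(R_1, R_2, D_1, D_2)$ is said to be achievable if there is a family of $(n, R_1^{(n)}, R_2^{(n)}, D_1, D_2, \epsilon^{(n)})$-successive refinement codes such that

$$\lim_{n \to \infty} R_i^{(n)} = R_i \quad \text{for } i \in \{1, 2\}, \qquad \lim_{n \to \infty} \epsilon^{(n)} = 0.$$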

For some special cases, both decoders can achieve the point-to-point optimum rates simultaneously.

Definition 2.

Let $R_i(D)$ denote the rate-distortion function of the $i$-th decoder for $i \in \{1, 2\}$. If the rate-distortion tuple $(R_1(D_1), R_2(D_2), D_1, D_2)$ is achievable, then we say the source is successively refinable at $(D_1, D_2)$. If the source is successively refinable at $(D_1, D_2)$ for all $D_1, D_2$, then we say the source is successively refinable.

The following theorem provides a necessary and sufficient condition for successive refinability.

Theorem 1 ([7,8]).

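A source is successively refinable at $(D_1, D_2)$ if and only if there exists a conditional distribution $p_{\hat{X}_1, \hat{X}_2|X}$ such that $X - \hat{X}_2 - \hat{X}_1$ forms a Markov chain and

$$R_i(D_i) = I(X; \hat{X}_i), \qquad \mathbb{E}[d_i(X, \hat{X}_i)] \le D_i$$

for $i \in \{1, 2\}$.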

Note that the above results of successive refinability can easily be generalized to the case of k decoders.

2.2. Logarithmic Loss

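Let $\mathcal{X}$ be a set of discrete source symbols ($|\mathcal{X}| < \infty$), and let $\mathcal{M}(\mathcal{X})$ be the set of probability measures on $\mathcal{X}$. Logarithmic loss $\ell : \mathcal{X} \times \mathcal{M}(\mathcal{X}) \to [0, \infty]$ is defined by

$$\ell(x, q) = \log\frac{1}{q(x)}$$

for $x \in \mathcal{X}$ and $q \in \mathcal{M}(\mathcal{X})$. Logarithmic loss between $n$-tuples is defined by

$$\ell_n(x^n, q^n) = \frac{1}{n}\sum_{i=1}^{n} \log\frac{1}{q_i(x_i)},$$

i.e., the symbol-by-symbol extension of the single-letter loss.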
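As a concrete illustration of this definition, the following Python sketch evaluates $\ell(x, q)$ and its $n$-tuple extension for pmfs stored as dictionaries. The function names and the dictionary representation are illustrative assumptions; a symbol absent from `q` is treated as having probability zero, which gives infinite loss, consistent with $\log(1/0) = \infty$.

```python
import math
from typing import Dict, Hashable, Sequence

Pmf = Dict[Hashable, float]


def log_loss(x: Hashable, q: Pmf) -> float:
    """Single-letter logarithmic loss l(x, q) = log(1 / q(x)) in nats.
    Symbols missing from q are treated as having probability 0 (loss = inf)."""
    px = q.get(x, 0.0)
    return math.inf if px <= 0.0 else -math.log(px)


def log_loss_n(xs: Sequence[Hashable], qs: Sequence[Pmf]) -> float:
    """Symbol-by-symbol extension: (1/n) * sum_i log(1 / q_i(x_i))."""
    if len(xs) != len(qs) or not xs:
        raise ValueError("need two non-empty sequences of equal length")
    return sum(log_loss(x, q) for x, q in zip(xs, qs)) / len(xs)


q = {"a": 0.9, "b": 0.1}
print(log_loss("a", q), log_loss("b", q))  # small loss vs. large loss
```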

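Let $X$ be a discrete memoryless source with distribution $p_X$. Consider the lossy compression problem under logarithmic loss where the reconstruction alphabet is $\mathcal{M}(\mathcal{X})$. The rate-distortion function is given by

$$R(D) = \min_{p_{Q|X}:\ \mathbb{E}[\ell(X, Q)] \le D} I(X; Q) = \left(H(X) - D\right)^+.$$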

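The following lemma provides a property of the rate-distortion achieving conditional distribution.

Lemma 1.

Let $0 \le D < H(X)$, and let $p_{Q|X}$ be a conditional distribution that achieves $R(D)$. Then, for all $q \in \mathrm{supp}(Q)$,

$$p_{X|Q}(x|q) = q(x) \quad \text{for all } x \in \mathcal{X}. \tag{1}$$

Proof.

The key idea is that we can replace $Q$ by $Q' = p_{X|Q}(\cdot|Q)$ and obtain a rate and a distortion that are no larger, i.e.,

$$I(X; Q') \le I(X; Q), \qquad \mathbb{E}[\ell(X, Q')] = H(X|Q) \le \mathbb{E}[\ell(X, Q)].$$

The first inequality is the data processing inequality, since $Q'$ is a function of $Q$; the second is Gibbs' inequality, with equality if and only if $Q' = Q$ almost surely. Since $R(D) = H(X) - D$ is strictly decreasing on $[0, H(X))$, a strictly smaller distortion at no larger rate would contradict the optimality of $p_{Q|X}$, which directly implies (1). □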
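The inequality $H(X|Q) \le \mathbb{E}[\ell(X, Q)]$ used in the proof can be checked numerically. The sketch below (a hypothetical helper, assuming NumPy and strictly positive reconstruction pmfs) draws a random joint pmf of $X$ and four candidate reconstructions and compares the expected logarithmic loss of $Q$ with that of $Q' = p_{X|Q}(\cdot|Q)$.

```python
import numpy as np


def lemma1_losses(p_xq: np.ndarray, recon: np.ndarray):
    """p_xq[x, j] is the joint pmf of X and the event Q = recon[j], where
    recon[j] is a strictly positive pmf on the source alphabet.
    Returns (E[l(X, Q)], E[l(X, Q')]) with Q' = p_{X|Q}(.|Q)."""
    p_q = p_xq.sum(axis=0)                           # marginal pmf of Q
    post = p_xq / p_q                                # column j: p_{X|Q}(.|recon[j])
    loss_q = float(np.sum(p_xq * -np.log(recon.T)))  # E[log 1/Q(X)]
    loss_post = float(np.sum(p_xq * -np.log(post)))  # equals H(X|Q)
    return loss_q, loss_post


rng = np.random.default_rng(0)
p_xq = rng.dirichlet(np.ones(12)).reshape(3, 4)  # |X| = 3, four reconstructions
recon = rng.dirichlet(np.ones(3), size=4)        # arbitrary pmfs q_1, ..., q_4
print(lemma1_losses(p_xq, recon))
```

Replacing each reconstruction by its posterior never increases the loss, and the two losses coincide exactly when every reconstruction already equals its posterior, which is condition (1).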

Interestingly, since the rate-distortion function in this case is a straight line, a simple time-sharing scheme achieves the optimal rate-distortion trade-off. More precisely, the encoder losslessly compresses only the first $\frac{H(X) - D}{H(X)}$ fraction of the source sequence components. Then, the decoder perfectly recovers those losslessly compressed components and uses $p_X$ as its reconstruction for the remaining part. Since each of the remaining components incurs expected loss $\mathbb{E}[\log\frac{1}{p_X(X)}] = H(X)$, the resulting scheme achieves distortion $D$ with rate $H(X) - D$.

Furthermore, this simple scheme directly implies successive refinability of the source. For $D_1 > D_2$, suppose the encoder losslessly compresses the first $\frac{H(X) - D_2}{H(X)}$ fraction of the source. Then, the first decoder can perfectly reconstruct a $\frac{H(X) - D_1}{H(X)}$ fraction of the source from a message of rate $H(X) - D_1$ and achieve distortion $D_1$, while the second decoder can achieve distortion $D_2$ with rate $H(X) - D_2$. Since both decoders achieve their optimal rate-distortion pairs, it follows that any discrete memoryless source under logarithmic loss is successively refinable.
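The arithmetic of the time-sharing scheme can be written out in a few lines. In this Python sketch (illustrative names; asymptotic rates only, with a fraction $\alpha$ of the symbols assumed to be described at the lossless rate $H(X)$ per symbol), the first decoder's fraction is contained in the second decoder's, and both rates equal $H(X) - D_i$.

```python
import math
from typing import Sequence, Tuple


def entropy(p: Sequence[float]) -> float:
    """Shannon entropy in nats."""
    return -sum(pi * math.log(pi) for pi in p if pi > 0)


def time_sharing(p_x: Sequence[float], D: float) -> Tuple[float, float, float]:
    """Losslessly describe a fraction alpha = (H - D)/H of the symbols and
    output p_X for the rest. Returns (alpha, rate, expected log loss)."""
    H = entropy(p_x)
    if H <= 0 or not 0 <= D <= H:
        raise ValueError("need H(X) > 0 and 0 <= D <= H(X)")
    alpha = (H - D) / H
    rate = alpha * H              # asymptotic lossless rate of the described part
    distortion = (1 - alpha) * H  # each undescribed symbol costs E[log 1/p_X(X)] = H(X)
    return alpha, rate, distortion


p_x = [0.5, 0.25, 0.25]
H = entropy(p_x)
(a1, r1, d1), (a2, r2, d2) = time_sharing(p_x, 0.8), time_sharing(p_x, 0.3)
# D1 = 0.8 > D2 = 0.3: the first decoder's fraction is a prefix of the second's
print(a1 <= a2, r1, H - 0.8, r2, H - 0.3)
```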

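We can also formally prove successive refinability of a discrete memoryless source under logarithmic loss using Theorem 1, i.e., by finding random probability mass functions $Q_1, Q_2 \in \mathcal{M}(\mathcal{X})$ that satisfy

$$\mathbb{E}[\ell(X, Q_i)] = D_i, \qquad I(X; Q_i) = H(X) - D_i, \qquad i \in \{1, 2\},$$

where $X - Q_2 - Q_1$ forms a Markov chain.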

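Let $e_x \in \mathcal{M}(\mathcal{X})$ be a deterministic probability mass function (pmf) that has a unit mass at $x$. In other words,

$$e_x(\tilde{x}) = \begin{cases} 1 & \text{if } \tilde{x} = x, \\ 0 & \text{otherwise.} \end{cases}$$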

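Then, consider random pmfs $Q_1, Q_2 \in \{e_x : x \in \mathcal{X}\} \cup \{p_X\}$. Since the supports of $Q_1$ and $Q_2$ are finite, we can define the following conditional pmfs for $0 \le D_2 \le D_1 \le H(X)$ with $D_2 < H(X)$:

$$p_{Q_2|X}(q_2|x) = \begin{cases} 1 - \frac{D_2}{H(X)} & \text{if } q_2 = e_x, \\ \frac{D_2}{H(X)} & \text{if } q_2 = p_X, \end{cases}$$

$$p_{Q_1|Q_2}(q_1|q_2) = \begin{cases} \frac{H(X) - D_1}{H(X) - D_2} & \text{if } q_1 = q_2 = e_x, \\ \frac{D_1 - D_2}{H(X) - D_2} & \text{if } q_2 = e_x,\ q_1 = p_X, \\ 1 & \text{if } q_1 = q_2 = p_X. \end{cases}$$

Then $\Pr[Q_i = p_X \mid X = x] = D_i / H(X)$ for every $x$, and $Q_i = e_X$ otherwise, so that $\mathbb{E}[\ell(X, Q_i)] = H(X|Q_i) = D_i$ and $I(X; Q_i) = H(X) - D_i$ for $i \in \{1, 2\}$, as required.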
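These conditional pmfs can be checked numerically. The Python sketch below assumes the construction in which $Q_2 = p_X$ with probability $D_2/H(X)$ independently of $X$ (and $Q_2 = e_X$ otherwise), and $Q_1$ is obtained from $Q_2$ by switching $e_X$ to $p_X$ with probability $(D_1 - D_2)/(H(X) - D_2)$. The function name and the label encoding (labels $0, \ldots, k-1$ for $e_x$ and label $k$ for $p_X$) are illustrative choices.

```python
import numpy as np


def entropy(p) -> float:
    p = np.asarray(p, dtype=float).ravel()
    p = p[p > 0]
    return float(-(p * np.log(p)).sum())


def refinement_check(p_x, D1, D2):
    """Builds (X, Q2, Q1) and returns H(X) and
    [(E[l(X,Q1)], I(X;Q1)), (E[l(X,Q2)], I(X;Q2))]."""
    p_x = np.asarray(p_x, dtype=float)
    H, k = entropy(p_x), len(p_x)
    if not (0 <= D2 <= D1 <= H and D2 < H):
        raise ValueError("need 0 <= D2 <= D1 <= H(X) and D2 < H(X)")
    recon = np.vstack([np.eye(k), p_x])      # row j = pmf represented by label j
    p_q2_x = np.zeros((k, k + 1))            # p_{Q2|X}
    p_q2_x[np.arange(k), np.arange(k)] = 1 - D2 / H
    p_q2_x[:, k] = D2 / H
    p_q1_q2 = np.zeros((k + 1, k + 1))       # p_{Q1|Q2}
    p_q1_q2[np.arange(k), np.arange(k)] = (H - D1) / (H - D2)
    p_q1_q2[np.arange(k), k] = (D1 - D2) / (H - D2)
    p_q1_q2[k, k] = 1.0
    joint2 = p_x[:, None] * p_q2_x           # p(x, q2)
    joint1 = joint2 @ p_q1_q2                # p(x, q1) via the chain X - Q2 - Q1
    with np.errstate(divide="ignore"):
        loss = -np.log(recon.T)              # loss[x, j] = log 1/recon_j(x)
    out = []
    for joint in (joint1, joint2):
        mask = joint > 0                     # skip zero-probability pairs
        exp_loss = float((joint[mask] * loss[mask]).sum())
        mi = entropy(p_x) + entropy(joint.sum(axis=0)) - entropy(joint)
        out.append((exp_loss, mi))
    return H, out


H, [(l1, i1), (l2, i2)] = refinement_check([0.5, 0.3, 0.2], D1=0.6, D2=0.2)
print(l1, i1, H - 0.6)
print(l2, i2, H - 0.2)
```

With $p_X = (0.5, 0.3, 0.2)$, $D_1 = 0.6$ and $D_2 = 0.2$, both expected losses should match $D_i$ and both mutual informations should match $H(X) - D_i$ up to floating-point error.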

3. Successive Refinability

Main Results

Consider the successive refinement problem with a discrete memoryless source as described in Section 2.1. Specifically, we are interested in the case where the first decoder is under logarithmic loss and the second decoder is under some arbitrary distortion measure $d : \mathcal{X} \times \hat{\mathcal{X}} \to [0, \infty)$. We make only the following benign assumption: if $d(x, \hat{x}_1) = d(x, \hat{x}_2)$ for all $x$, then $\hat{x}_1 = \hat{x}_2$. This is not a hard restriction, since if $\hat{x}_1$ and $\hat{x}_2$ have the same distortion values for all $x$, then there is no reason to keep both reconstruction symbols.

The following theorem shows that any discrete memoryless source is successively refinable as long as the weaker link is under logarithmic loss. This implies a universal property of logarithmic loss in the context of successive refinement.

Theorem 2.

Let the source be an arbitrary discrete memoryless source. Suppose the distortion criterion of the first decoder is logarithmic loss, while that of the second decoder is an arbitrary distortion criterion $d : \mathcal{X} \times \hat{\mathcal{X}} \to [0, \infty)$. Then the source is successively refinable.

Proof.

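By Theorem 1, the source is successively refinable at $(D_1, D_2)$ if and only if there exists a $p_{\hat{X}, Q|X}$ such that $X - \hat{X} - Q$ forms a Markov chain and

$$\mathbb{E}[\ell(X, Q)] \le D_1, \quad I(X; Q) = R_1(D_1), \quad \mathbb{E}[d(X, \hat{X})] \le D_2, \quad I(X; \hat{X}) = R_2(D_2).$$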

Let $p_{\hat{X}|X}$ be the conditional distribution for the second decoder that achieves the informational rate-distortion function $R_2(D_2)$, i.e.,

$$I(X; \hat{X}) = R_2(D_2), \qquad \mathbb{E}[d(X, \hat{X})] \le D_2.$$

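Since the weaker link is under logarithmic loss, we have $R_1(D_1) = H(X) - D_1$ for $0 \le D_1 \le H(X)$. Since the rates are cumulative, an achievable tuple must satisfy $R_1(D_1) \le R_2(D_2) = I(X; \hat{X}) = H(X) - H(X|\hat{X})$, which implies $H(X|\hat{X}) \le D_1$; moreover, if $D_1 > H(X)$, the first decoder can simply output $p_X$ at zero rate. Thus, we can assume $H(X|\hat{X}) \le D_1 \le H(X)$ throughout the proof. For simplicity, we further make the benign assumption that there is no $\hat{x}$ such that $p_{X|\hat{X}}(x|\hat{x}) = p_X(x)$ for all $x$. (See Remark 1 for the case where such an $\hat{x}$ exists.)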

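Without loss of generality, suppose $\mathrm{supp}(\hat{X}) = \{1, 2, \ldots, s\}$. Consider a random variable $Y \in \{0, 1, \ldots, s\}$ with the following pmf for some $0 \le \epsilon \le 1$:

$$p_Y(y) = \begin{cases} \epsilon & \text{if } y = 0, \\ (1 - \epsilon)\, p_{\hat{X}}(y) & \text{if } y \in \{1, \ldots, s\}. \end{cases}$$

The conditional distribution $p_{\hat{X}|Y}$ is given by

$$p_{\hat{X}|Y}(\hat{x}|y) = \begin{cases} p_{\hat{X}}(\hat{x}) & \text{if } y = 0, \\ \mathbb{1}\{\hat{x} = y\} & \text{if } y \in \{1, \ldots, s\}. \end{cases}$$

The joint distribution of $(X, \hat{X}, Y)$ is given by

$$p_{X, \hat{X}, Y}(x, \hat{x}, y) = p_Y(y)\, p_{\hat{X}|Y}(\hat{x}|y)\, p_{X|\hat{X}}(x|\hat{x}).$$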

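It is clear that $H(X|Y) = H(X|\hat{X})$ if $\epsilon = 0$ and $H(X|Y) = H(X)$ if $\epsilon = 1$. Since $H(X|Y)$ is continuous in $\epsilon$ and $H(X|\hat{X}) \le D_1 \le H(X)$, there exists an $\epsilon$ such that $H(X|Y) = D_1$.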
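Assuming the construction in which $Y = \hat{X}$ with probability $1 - \epsilon$ and $Y$ equals an erasure symbol $0$ with probability $\epsilon$, independently of $(X, \hat{X})$ (so that $p_{X|Y}(\cdot|0) = p_X$), the existence of $\epsilon$ can be made explicit, because $H(X|Y)$ is affine in $\epsilon$:

```latex
\begin{aligned}
H(X|Y) &= \epsilon\, H(X|Y=0) + \sum_{\hat{x}} (1-\epsilon)\, p_{\hat{X}}(\hat{x})\, H(X|\hat{X}=\hat{x}) \\
       &= \epsilon\, H(X) + (1-\epsilon)\, H(X|\hat{X}), \\
\epsilon^\star &= \frac{D_1 - H(X|\hat{X})}{H(X) - H(X|\hat{X})} \in [0, 1]
  \quad \text{whenever } H(X|\hat{X}) \le D_1 \le H(X) \text{ and } H(X|\hat{X}) < H(X).
\end{aligned}
```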

We are now ready to define the Markov chain. Let $Q = q_Y$, where $q_y = p_{X|Y}(\cdot|y)$ for all $y$. The following lemma implies that there is a one-to-one mapping between $q$ and $y$.

Lemma 2.

If $p_{X|Y}(x|y_1) = p_{X|Y}(x|y_2)$ for all $x \in \mathcal{X}$, then $y_1 = y_2$.

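The proof of the lemma is given in Appendix A. Since $y \mapsto q_y$ is a one-to-one mapping, we have

$$I(X; Q) = I(X; Y) = H(X) - H(X|Y) = H(X) - D_1 = R_1(D_1).$$

Also, we have

$$\mathbb{E}[\ell(X, Q)] = \mathbb{E}\left[\log\frac{1}{p_{X|Y}(X|Y)}\right] = H(X|Y) = D_1.$$

Furthermore, $X - \hat{X} - Q$ forms a Markov chain since $X - \hat{X} - Y$ forms a Markov chain. This concludes the proof. □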
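A small numerical check of this construction may help. The Python sketch below assumes the erasure-style $Y$ described in the proof (an erasure symbol with probability $\epsilon$, otherwise $Y$ reveals $\hat{X}$) and, purely as an example, a uniform binary source with the Hamming test channel $p_{\hat{X}|X}(\hat{x}|x) = 0.9$ if $\hat{x} = x$ (i.e., $D_2 = 0.1$). It solves for $\epsilon$, then confirms $H(X|Y) = \mathbb{E}[\ell(X, Q)] = D_1$, $I(X; Q) = H(X) - D_1$, and that the posteriors $q_y$ are distinct, as Lemma 2 requires. The function name is hypothetical.

```python
import numpy as np


def entropy(p) -> float:
    p = np.asarray(p, dtype=float).ravel()
    p = p[p > 0]
    return float(-(p * np.log(p)).sum())


def erasure_construction(p_x, p_xhat_given_x, D1):
    """Y = 0 ('erasure') w.p. eps, independently of (X, Xhat); otherwise Y = Xhat + 1.
    eps is chosen so that H(X|Y) = D1. Returns eps, H(X|Y), I(X;Y), E[l(X,Q)]
    for Q = p_{X|Y}(.|Y), and the posteriors q_y as columns."""
    p_x = np.asarray(p_x, dtype=float)
    joint_xxh = p_x[:, None] * np.asarray(p_xhat_given_x, dtype=float)
    h_x = entropy(p_x)
    h_x_xh = entropy(joint_xxh) - entropy(joint_xxh.sum(axis=0))  # H(X|Xhat)
    if not (h_x_xh <= D1 <= h_x and h_x_xh < h_x):
        raise ValueError("need H(X|Xhat) <= D1 <= H(X) and H(X|Xhat) < H(X)")
    eps = (D1 - h_x_xh) / (h_x - h_x_xh)
    joint_xy = np.hstack([eps * p_x[:, None], (1 - eps) * joint_xxh])
    p_y = joint_xy.sum(axis=0)
    keep = p_y > 0                                # drop unused values of Y
    joint_xy, p_y = joint_xy[:, keep], p_y[keep]
    post = joint_xy / p_y                         # column y: q_y = p_{X|Y}(.|y)
    h_x_y = entropy(joint_xy) - entropy(p_y)
    mask = joint_xy > 0
    exp_loss = float(-(joint_xy[mask] * np.log(post[mask])).sum())
    # I(X;Q) = I(X;Y) holds when the posteriors are distinct (checked by the caller)
    return eps, h_x_y, h_x - h_x_y, exp_loss, post


eps, h, mi, loss, post = erasure_construction(
    [0.5, 0.5], [[0.9, 0.1], [0.1, 0.9]], D1=0.5)
print(eps, h, mi, loss)
print(post)
```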

The key idea of the theorem is that (1) is the only, and rather loose, condition required of the rate-distortion achieving conditional distribution. Thus, for any distortion criterion in the second stage, we are able to choose an appropriate distribution $p_{Q|\hat{X}}$ that satisfies both (1) and the conditions for successive refinability.

Remark 1.

The assumption that $p_{X|\hat{X}}(\cdot|\hat{x}) \ne p_X$ for all $\hat{x}$ is not necessary. Appendix B presents another joint distribution that satisfies the conditions for successive refinability when this assumption does not hold.

The distribution $p_{Q|\hat{X}}$ in the above proof is one simple example with a single parameter $\epsilon$, but other distributions can also satisfy the conditions for successive refinability. In the next section, we propose a completely different distribution that yields a Markov chain $X - \hat{X} - Q$ satisfying the same conditions. This implies that the above proof does not rely on this assumption.

Remark 2.

In the proof, we used a random variable $Y$ to define $Q$. Conversely, if the joint distribution $p_{X, \hat{X}, Q}$ satisfies the conditions for successive refinability, there exists a random variable $Y$ such that $X - \hat{X} - Y$ forms a Markov chain and $Q = p_{X|Y}(\cdot|Y)$. This is simply because we can set $Y = Q$, which implies $p_{X|Y}(\cdot|Y) = p_{X|Q}(\cdot|Q) = Q$ by (1).

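Theorem 2 can be generalized to the successive refinement problem with $K$ intermediate decoders. Consider random variables $Y_k$ for $k \in \{1, \ldots, K\}$ such that $X - \hat{X} - Y_K - \cdots - Y_1$ forms a Markov chain and the joint distribution of $(\hat{X}, Y_1, \ldots, Y_K)$ is given by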

where . Similar to the proof of Theorem 2, we can show that $Q_k = p_{X|Y_k}(\cdot|Y_k)$ for all $k$ satisfy the conditions for successive refinability (where the posterior distributions $p_{X|Y_k}(\cdot|y_k)$ should be distinct for all $y_k$ to guarantee a one-to-one correspondence). Thus, we can conclude that any discrete memoryless source with $K$ intermediate decoders is successively refinable as long as all the intermediate decoders are under logarithmic loss.

4. Toward Lossy Compression with Low Complexity

As mentioned in Remark 1, the choice of joint distribution in the proof of Theorem 2 is not unique. In this section, we propose another joint distribution that satisfies the conditions for successive refinability. It naturally suggests a new lossy compression algorithm, which we discuss in Section 4.3.

4.1. Rate-Distortion Achieving Joint Distribution: Small $D_1$

Recall that . We first consider the case where is not too large so that is close to . We will clarify this later. For simplicity, we further assume that . Consider a random variable with the following pmf for some

If it is clear from context, we simply use for the sake of notation. We further define a random variable $Y$ that is independent of $Z$ such that $\hat{X} = Y \oplus_s Z$, where $\oplus_s$ denotes a sum modulo $s$. This can be achieved by the following pmf and conditional pmf.

If , we have . Also, it is clear that increases as increases. Since we assume that $D_1$ is not too large, there exists such that . The case of general $D_1$ is discussed in Section 4.2. The joint distribution of is given by

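We are now ready to define the Markov chain. Let $Q = q_Y$, where $q_y = p_{X|Y}(\cdot|y)$ for all $y$. For simplicity, we assume that $q_y$ and $q_{y'}$ are not the same for all $y \ne y'$. Since $y \mapsto q_y$ is a one-to-one mapping, we have

$$I(X; Q) = I(X; Y) = H(X) - H(X|Y) = H(X) - D_1 = R_1(D_1).$$

Also, we have

$$\mathbb{E}[\ell(X, Q)] = \mathbb{E}\left[\log\frac{1}{p_{X|Y}(X|Y)}\right] = H(X|Y) = D_1.$$

Furthermore, $X - \hat{X} - Q$ forms a Markov chain since $X - \hat{X} - Y$ forms a Markov chain. Thus, the above construction of the joint distribution satisfies the conditions for successive refinability.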
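The modulo-$s$ relation between $\hat{X}$, $Y$ and $Z$ determines the pmf of $\hat{X}$ as a circular convolution, $p_{\hat{X}}(\hat{x}) = \sum_z p_Z(z)\, p_Y((\hat{x} - z) \bmod s)$. The Python sketch below computes it, assuming $\hat{X} = Y \oplus_s Z$ with $Y$ and $Z$ independent and all alphabets relabeled as $\{0, \ldots, s-1\}$; the specific pmfs of this section are not reproduced here, so the numbers in the example are arbitrary and the helper name `mod_sum_pmf` is hypothetical.

```python
import numpy as np


def mod_sum_pmf(p_y, p_z):
    """pmf of (Y + Z) mod s for independent Y on {0, ..., s-1} and
    Z on {0, ..., len(p_z)-1} with len(p_z) <= s (circular convolution)."""
    p_y = np.asarray(p_y, dtype=float)
    p_z = np.asarray(p_z, dtype=float)
    if len(p_z) > len(p_y):
        raise ValueError("Z must take values in {0, ..., s-1}")
    out = np.zeros_like(p_y)
    for z, pz in enumerate(p_z):
        out += pz * np.roll(p_y, z)  # np.roll(p_y, z)[x] == p_y[(x - z) % s]
    return out


print(mod_sum_pmf([0.5, 0.3, 0.2], [0.8, 0.2]))  # binary Z, s = 3
```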

4.2. Rate-Distortion Achieving Joint Distribution: General $D_1$

The joint distribution in the previous section only works for small $D_1$. This is because has a natural upper bound from (5), which is . In this section, we generalize the construction of the previous section to general $D_1$. The key observation is that if we pick the maximum , then . This implies that we can focus on a smaller reconstruction alphabet .

Let , and define random variables recursively. More precisely, we define the random variable based on for .

where

Similarly to the definition of $Y$, we assume that and are independent, and $\oplus_k$ denotes the modulo-$k$ sum. At each step, the alphabet size of decreases by one. Thus, we have , and therefore with probability 1. Furthermore, we have

For , there exists k such that . Thus, there exists Y that satisfies and for some . This implies that

Similar to the previous section, we assume that if . Then, we can set which satisfies the conditions for successive refinability.

4.3. Iterative Lossy Compression Algorithm

The joint distribution from the previous section naturally suggests a simple successive refinement scheme. Consider the lossy compression problem where the source is i.i.d. and the distortion measure is . Let D be the target distortion, and be the rate of the scheme where is the rate-distortion function. Let be the rate-distortion achieving distribution.

For block length n, we propose a new lossy compression scheme that mimics successive refinement with decoders. Similar to the previous section, let and

We further let for that satisfy . Now, we are ready to describe our coding scheme. Generate a sub-codebook where each sequence is generated i.i.d. according to for all m. Similarly, generate sub-codebooks for where each sequence is generated i.i.d. according to for all m.

Upon observing , the encoder finds that minimizes where the distortion measure is defined as follows.

Note that is simply the logarithmic loss between x and .

Similarly, for , the encoder iteratively finds that minimizes where

Upon receiving , the decoder reconstructs

Suppose , and . As in [12,14], this scheme has two main advantages compared to the naive random coding scheme. First, the number of codewords in the proposed scheme is , while the naive scheme requires codewords. Second, in each iteration, the encoder finds the best codeword among sub-codewords. Thus, the overall complexity is as well. On the other hand, the naive scheme requires complexity.
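The iterative search can be sketched generically. In the Python code below, the stage distortions of the proposed scheme are not reproduced; `stage_loss` is a hypothetical user-supplied function, and the toy usage (a scalar source, additive refinements, squared error) only illustrates the control flow. The returned evaluation count is the sum of the sub-codebook sizes, which is where the complexity reduction over a joint search of all combinations comes from.

```python
from typing import Callable, List, Sequence, Tuple, TypeVar

C = TypeVar("C")  # type of a sub-codeword


def iterative_encode(
    x,
    subcodebooks: Sequence[Sequence[C]],
    stage_loss: Callable[[int, object, List[C]], float],
) -> Tuple[List[int], int]:
    """Greedy stage-by-stage search: at stage k, choose the sub-codeword that
    minimizes stage_loss(k, x, chosen_codewords + [candidate]).
    Returns the chosen indices and the number of loss evaluations."""
    indices: List[int] = []
    prefix: List[C] = []
    evaluations = 0
    for k, codebook in enumerate(subcodebooks):
        if not codebook:
            raise ValueError(f"sub-codebook {k} is empty")
        costs = [stage_loss(k, x, prefix + [c]) for c in codebook]
        evaluations += len(costs)
        best = min(range(len(costs)), key=costs.__getitem__)
        indices.append(best)
        prefix.append(codebook[best])
    return indices, evaluations


# Toy usage (hypothetical): scalar "source", additive refinements, squared error.
def sq_err(k, x, chosen):
    return (x - sum(chosen)) ** 2


books = [[0.0, 0.5], [0.0, 0.25], [0.0, 0.125]]
print(iterative_encode(0.73, books, sq_err))
```

Here three binary sub-codebooks need $2 + 2 + 2 = 6$ loss evaluations, whereas an exhaustive search over all combinations would need $2^3 = 8$; the gap grows exponentially with the number of stages.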

Remark 3.

The proposed scheme constructs from binary sequences. The reconstruction after each stage can be viewed as

where . Thus, the decoder starts from a binary sequence , and the alphabet size increases by one at each iteration. After the -th iteration, it reaches the final reconstruction, whose alphabet size is s.

5. Conclusions

To conclude our discussion, we summarize our main contributions. In the context of the successive refinement problem, we showed another universal property of logarithmic loss: any discrete memoryless source is successively refinable as long as the intermediate decoders operate under logarithmic loss. We applied this result to the point-to-point lossy compression problem and proposed a lossy compression scheme with lower complexity.

Funding

This work was supported by 2017 Hongik University Research Fund.

Conflicts of Interest

The author declares no conflict of interest.

Appendix A. Proof of Lemma 2

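For $y \in \{1, \ldots, s\}$,

$$p_{X|Y}(x|y) = p_{X|\hat{X}}(x|y).$$

On the other hand, for $y = 0$,

$$p_{X|Y}(x|0) = p_X(x).$$

Let $\jmath_X(x, D_2)$ denote the d-tilted information [15] at distortion level $D_2$:

$$\jmath_X(x, D_2) = \log\frac{1}{\mathbb{E}\left[\exp\left(\lambda^\star D_2 - \lambda^\star d(x, \hat{X})\right)\right]},$$

where $\lambda^\star = -R_2'(D_2)$ and the expectation is with respect to the marginal distribution $p_{\hat{X}}$. Csiszár [16] showed that for $p_{\hat{X}}$-almost every $\hat{x}$,

$$\jmath_X(x, D_2) = \log\frac{p_{X|\hat{X}}(x|\hat{x})}{p_X(x)} + \lambda^\star d(x, \hat{x}) - \lambda^\star D_2.$$

If $p_{X|\hat{X}}(x|\hat{x}_1) = p_{X|\hat{X}}(x|\hat{x}_2)$ for all $x$, it implies that $d(x, \hat{x}_1) = d(x, \hat{x}_2)$ for all $x$, which contradicts our assumption. On the other hand, if $p_{X|\hat{X}}(x|\hat{x}) = p_X(x)$ for all $x$, it also contradicts our assumption. Thus, the posteriors $p_{X|Y}(\cdot|y)$ are different from each other for all $y$.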
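Csiszár's identity is what ties posteriors to distortions: its left-hand side depends only on $x$, so (for $\lambda^\star > 0$) two reconstruction symbols with the same posterior must have the same distortion profile. The Python sketch below evaluates the right-hand side on a standard example assumed here for illustration, a uniform binary source with Hamming distortion, where the optimal test channel has $p_{X|\hat{X}}(x|\hat{x}) = 1 - D$ if $x = \hat{x}$ and $\lambda^\star = \log\frac{1-D}{D}$; all four values coincide with $R(D) = \log 2 - h(D)$. The function names are hypothetical.

```python
import math


def binary_entropy(D: float) -> float:
    return -D * math.log(D) - (1 - D) * math.log(1 - D)


def csiszar_identity_values(D: float):
    """Uniform binary source, Hamming distortion, 0 < D < 1/2, optimal test channel
    p_{X|Xhat}(x|xhat) = 1 - D if x == xhat else D. Returns
    log(p(x|xhat)/p(x)) + lam*d(x, xhat) - lam*D for all (x, xhat),
    with lam = -R'(D) = log((1 - D)/D)."""
    if not 0 < D < 0.5:
        raise ValueError("need 0 < D < 1/2")
    lam = math.log((1 - D) / D)
    values = {}
    for x in (0, 1):
        for xh in (0, 1):
            dist = int(x != xh)                # Hamming distortion
            post = 1 - D if dist == 0 else D   # p_{X|Xhat}(x|xhat)
            values[(x, xh)] = math.log(post / 0.5) + lam * dist - lam * D
    return values


vals = csiszar_identity_values(0.1)
print(vals, math.log(2) - binary_entropy(0.1))  # all four equal R(D) = log 2 - h(D)
```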

Appendix B. Proof of the Special Case of Theorem 2

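Similar to the main proof of Theorem 2, we assume $H(X|\hat{X}) \le D_1 \le H(X)$. Suppose there exists $\hat{x}$ such that $p_{X|\hat{X}}(x|\hat{x}) = p_X(x)$ for all $x$. Without loss of generality, let $\hat{x}_0$ denote this symbol, i.e., $p_{X|\hat{X}}(x|\hat{x}_0) = p_X(x)$ for all $x$.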

Consider a random variable with the following conditional pmf for some :

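It is clear that $H(X|Y) = H(X|\hat{X})$ if $\epsilon = 0$ and $H(X|Y) = H(X)$ if $\epsilon = 1$. Since $H(X|\hat{X}) \le D_1 \le H(X)$, there exists an $\epsilon$ such that $H(X|Y) = D_1$. We also have and for all . The following lemma implies a one-to-one mapping between $q$ and $y$.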

Lemma A1.

If $p_{X|Y}(x|y_1) = p_{X|Y}(x|y_2)$ for all $x \in \mathcal{X}$, then $y_1 = y_2$.

Proof.

If , the conditional distribution is given by

Then,

where the last equality is because for all x. In other words, .

On the other hand, if , the conditional distribution is given by

Then,

As we have seen in Appendix A, cannot be equal to if . Since for all x, we can say that for all x implies . □

The remaining part of the proof is exactly the same as the main proof.

References

- Courtade, T.A.; Wesel, R.D. Multiterminal source coding with an entropy-based distortion measure. In Proceedings of the 2011 IEEE International Symposium on Information Theory Proceedings, St. Petersburg, Russia, 31 July–5 August 2011; pp. 2040–2044.

- Courtade, T.; Weissman, T. Multiterminal Source Coding Under Logarithmic Loss. IEEE Trans. Inf. Theory 2014, 60, 740–761.

- Shkel, Y.Y.; Verdú, S. A single-shot approach to lossy source coding under logarithmic loss. IEEE Trans. Inf. Theory 2018, 64, 129–147.

- Tishby, N.; Pereira, F.; Bialek, W. The information bottleneck method. In Proceedings of the 37th Annual Allerton Conference on Communication, Control, and Computing, Monticello, IL, USA, 22–24 September 1999; pp. 368–377.

- Harremoës, P.; Tishby, N. The information bottleneck revisited or how to choose a good distortion measure. In Proceedings of the 2007 IEEE International Symposium on Information Theory, Nice, France, 24–29 June 2007; pp. 566–570.

- Gilad-Bachrach, R.; Navot, A.; Tishby, N. An information theoretic tradeoff between complexity and accuracy. In Learning Theory and Kernel Machines; Springer: Berlin/Heidelberg, Germany, 2003; pp. 595–609.

- Equitz, W.H.; Cover, T.M. Successive refinement of information. IEEE Trans. Inf. Theory 1991, 37, 269–275.

- Koshelev, V. Hierarchical coding of discrete sources. Probl. Peredachi Inf. 1980, 16, 31–49.

- Gerrish, A.M. Estimation of Information Rates. Ph.D. Thesis, Yale University, New Haven, CT, USA, 1963.

- Chow, J.; Berger, T. Failure of successive refinement for symmetric Gaussian mixtures. IEEE Trans. Inf. Theory 1997, 43, 350–352.

- Lastras, L.; Berger, T. All sources are nearly successively refinable. IEEE Trans. Inf. Theory 2001, 47, 918–926.

- Venkataramanan, R.; Sarkar, T.; Tatikonda, S. Lossy Compression via Sparse Linear Regression: Computationally Efficient Encoding and Decoding. IEEE Trans. Inf. Theory 2014, 60, 3265–3278.

- No, A.; Weissman, T. Rateless lossy compression via the extremes. IEEE Trans. Inf. Theory 2016, 62, 5484–5495.

- No, A.; Ingber, A.; Weissman, T. Strong successive refinability and rate-distortion-complexity tradeoff. IEEE Trans. Inf. Theory 2016, 62, 3618–3635.

- Kostina, V.; Verdú, S. Fixed-length lossy compression in the finite blocklength regime. IEEE Trans. Inf. Theory 2012, 58, 3309–3338.

- Csiszár, I. On an extremum problem of information theory. Stud. Sci. Math. Hung. 1974, 9, 57–71.

© 2019 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).