Abstract

For continuous numerical data sets, neighborhood rough sets-based attribute reduction is an important step for improving classification performance. However, most traditional reduction algorithms can only handle finite sets, and they yield low accuracy and reducts of high cardinality. In this paper, a novel attribute reduction method using Lebesgue and entropy measures in neighborhood rough sets is proposed. It can deal with continuous numerical data while maintaining the original classification information. First, the Fisher score method is employed to eliminate irrelevant attributes, which significantly reduces the computational complexity for high-dimensional data sets. Then, the Lebesgue measure is introduced into neighborhood rough sets to investigate uncertainty measures. To analyze the uncertainty and noise of neighborhood decision systems, some neighborhood entropy-based uncertainty measures are presented based on Lebesgue and entropy measures. By combining the algebra view with the information view in neighborhood rough sets, a neighborhood roughness joint entropy is developed for neighborhood decision systems. Moreover, some of their properties are derived and the relationships among them are established, which help to understand the essence of knowledge and the uncertainty of neighborhood decision systems. Finally, a heuristic attribute reduction algorithm is designed to improve the classification performance on large-scale complex data. Experimental results on an illustrative example and several public data sets show that the proposed method is effective in selecting the most relevant attributes with high classification accuracy.

1. Introduction

Over the past few decades, data classification has become an important aspect of data mining, machine learning, pattern recognition, and related fields. Attribute reduction in information systems is an important application of rough set models to a variety of practical problems, and it has attracted wide attention from researchers [1,2]. It is a fundamental research theme in the field of granular computing [3]. Gathered data may include a large number of redundant and noisy attributes, so the main objective of attribute reduction based on rough sets is to eliminate redundant attributes, classify data, and extract useful information [4].

Attribute reduction in rough set theory has been recognized as an important feature selection method [2]. Depending on whether the evaluation criterion involves a classification model, existing feature selection methods can be broadly divided into three categories [5]: filter, wrapper, and embedded methods. Filter methods use the intrinsic properties of the data set to select a feature subset as a preprocessing step, independently of the learning algorithm [6]. Lyu et al. [7] investigated a filter method based on the maximal information coefficient, which eliminates redundant information without requiring additional processes. Wrapper methods use a classifier to find the most discriminative feature subset by minimizing a prediction error function [8]. Jadhav et al. [9] designed a wrapper feature selection method that performs feature ranking with an information gain-directed genetic algorithm. Unfortunately, wrapper methods are sensitive to the choice of classifier and also tend to consume a lot of runtime [10], so few works in the field employ them. Embedded methods integrate feature selection into the training process to reduce the total time required for reclassifying different subsets [11]. Imani et al. [12] introduced an embedded algorithm based on a Chi-square feature selector, but it is not as accurate as wrapper methods in classification problems. Based on the above analysis, our attribute reduction method follows the filter approach. It uses a heuristic search algorithm to find an optimal attribute reduction subset for complex data sets with the neighborhood rough set model.
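
The abstract states that a Fisher score filter is applied first to remove irrelevant attributes. The following is a minimal sketch of that filter idea, not the authors' code. It assumes NumPy, a numeric attribute matrix X and a label vector y, and the common formulation F_j = Σ_k n_k(μ_kj − μ_j)² / Σ_k n_k σ²_kj. The function names `fisher_scores` and `select_top_k` are hypothetical.

```python
import numpy as np


def fisher_scores(X: np.ndarray, y: np.ndarray) -> np.ndarray:
    """Fisher score of every column of X with respect to class labels y.

    F_j = sum_k n_k * (mean_kj - mean_j)**2 / sum_k n_k * var_kj
    """
    overall_mean = X.mean(axis=0)
    between = np.zeros(X.shape[1])
    within = np.zeros(X.shape[1])
    for label in np.unique(y):
        Xc = X[y == label]
        n_c = Xc.shape[0]
        between += n_c * (Xc.mean(axis=0) - overall_mean) ** 2
        within += n_c * Xc.var(axis=0)
    # Small floor avoids division by zero for attributes constant within every class.
    return between / np.maximum(within, 1e-12)


def select_top_k(X: np.ndarray, y: np.ndarray, k: int) -> np.ndarray:
    """Filter step: indices of the k attributes with the largest Fisher scores."""
    return np.argsort(fisher_scores(X, y))[::-1][:k]


X = np.array([[0.1, 0.5], [0.2, 0.4], [0.9, 0.5], [0.8, 0.4]])
y = np.array([0, 0, 1, 1])
print(fisher_scores(X, y))    # column 0 separates the classes, column 1 does not
print(select_top_k(X, y, 1))  # [0]
```

The score ignores the classifier entirely, which is what makes it a filter in the sense described above.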

Rough set theory has become an efficient mathematical tool for attribute reduction, because it can discover data dependencies and reduce the redundant attributes contained in data sets [13,14]. Gu et al. [15] proposed a kernelized fuzzy rough set, but the result depends critically on the setting of control parameters and the design of the objective function. Raza and Qamar [16] presented a parallel rough sets-based dependency calculation method for feature selection, which can directly find the objects in the positive region without calculating the positive region itself. However, rough sets can only deal with attributes of a specific type in information systems using a binary relation [17]. In addition, the traditional rough set model needs to discretize continuous data. This process ignores the differences among data values and affects the information expressed by the original attribute set to a certain degree [18]. Moreover, the original properties of continuous-valued data change after discretization, and some useful information is lost [8]. To overcome this drawback, scholars have developed many extensions of the traditional rough set model [19,20]. One such extension is the neighborhood rough set model, introduced to handle continuous numerical data, which classical rough sets cannot. Most data in attribute reduction are numerical, and using neighborhood rough sets avoids the discretization of continuous data [21]. Hu et al. [22] developed a neighborhood rough set model via the δ-neighborhood set to deal with discrete and continuous data sets. Chen et al. [13] proposed a neighborhood rough sets-based feature reduction fish swarm algorithm to deal with numerical data sets. Sun et al. [23] studied a gene selection algorithm based on the Fisher linear discriminant and neighborhood rough sets, which is of great practical significance for cancer clinical diagnosis. Mu et al. [24] investigated a gene selection method for numerical data sets using the Fisher transformation based on neighborhood rough sets. Li et al. [14] developed a feature reduction method based on neighborhood rough sets and the discernibility matrix, but it assumes that all feature values are available. Nonetheless, these methods use a global neighborhood to deal with decision systems, that is, each sample uses the same neighborhood value for different combinations of conditional attributes. Consequently, they have high time complexity and do not yield the optimal δ value [24]. Wang et al. [1] constructed local neighborhood rough sets to deal with labeled data. It is well known that neighborhood rough sets can deal with information systems containing heterogeneous attributes, including categorical and numerical attributes [22]. However, a large number of existing attribute reduction algorithms based on the rough set model and its variations only analyze finite sets, which limits their application to some extent. Halmos [25] used the Lebesgue measure from measure theory to achieve uncertainty measures. Song and Li [26] stated the Lebesgue theorem in non-additive measure theory. Xu et al. [27] introduced the Lebesgue integral over an infinite interval and presented a kernel function-based computation method for uncertainty measures. Halcinová et al. [28] investigated the weighted Lebesgue integral via the Lebesgue differentiation theorem and used the Lebesgue measure to develop standard weighted Lp-based sizes. Park et al. [29] expressed a measurable map through Lebesgue integration to define the Cumulative Distribution Transform of density functions. Recently, several studies [30,31,32] introduced the Lebesgue measure as a promising additional tool for resolving problems in data analysis.
The Lebesgue measure can evaluate infinite sets efficiently. Inspired by this, we employ it to study uncertainty measures and efficient reduction algorithms for infinite sets in neighborhood decision systems.

Uncertainty measures play an important role in the uncertainty analysis of granular computing models [33]. Ge et al. [34] studied a positive region-based reduction algorithm from the perspective of relative discernibility in rough set theory. Sun and Xu [35] proposed a positive region-based granular space for feature selection based on rough sets. Nevertheless, the positive region in these models only considers consistent samples, whose similarity classes are completely contained in some decision class [36]. Meng et al. [37] presented an intersection neighborhood for numerical data and designed a gene selection algorithm using the positive region and gene ranking based on neighborhood rough sets. Liu et al. [38] designed a hash-based algorithm to calculate the positive region of neighborhood rough sets for attribute reduction. Li et al. [39] investigated positive region-based attribute reduction in the neighborhood-based decision-theoretic rough set model. In summary, these studies on attribute reduction are all based on rough sets or neighborhood rough sets from the algebra view. Some of the above algorithms can achieve optimal reducts that preserve the evaluation criterion, but in a sense their reducts still contain redundant attributes that can be further deleted [2]. Moreover, many reduction algorithms still incur high time costs on high-volume and high-dimensional data sets.

Attribute reduction models based on information theory have been studied extensively. Information entropy, introduced by Shannon [40], is a very useful tool for representing information content in various domains [41]. Liang et al. [42] proposed information entropy, rough entropy, and knowledge granulation for classifications in incomplete information systems. Sun et al. [43] presented rough entropy-based uncertainty measures to improve the computational efficiency of a heuristic feature selection algorithm. Gao et al. [2] introduced an attribute reduction algorithm based on the maximum decision entropy in the decision-theoretic rough set model. Nevertheless, most traditional rough sets-based attribute reduction methods in the information view are not suitable for measuring the neighborhood classes of real-valued data sets [20]. Liu et al. [44] combined information entropy with neighborhood rough sets to develop neighborhood mutual information. Chen et al. [20] constructed a gene selection method based on neighborhood rough sets and neighborhood entropy gain measures. Wang et al. [45] presented a feature selection method based on discrimination measures using a conditional discrimination index in neighborhood rough sets. However, the monotonicity of the above uncertainty measures does not always hold. In general, the attribute significance measures constructed in these algorithms are applicable to numerical data sets, and these attribute reduction studies are based on rough sets or neighborhood rough sets from the information view.

As seen above, many existing attribute reduction methods in neighborhood rough sets start from either the algebra view or the information view alone. Attribute significance defined in the algebra view only describes the effect of attributes on the subsets of the universe that are certainly classified [46]. Attribute significance defined in the information view only describes the influence of attributes on the uncertainly classified subsets of the universe, and it is suitable for small-scale data sets [47]. Thus, each view has limitations in real-world applications. To overcome this shortcoming by combining the two views, Wang et al. [46] studied rough reduction in both the algebra view and the information view, and illustrated the definitions of reduct and relative reduct in both views. Qian et al. [48] enhanced attribute reduction algorithms under the algebra and information viewpoints in rough set theory by filtering out redundant objects. Although these methods have their own advantages, they are still inefficient and unsuitable for reducing large-scale high-dimensional data, and the enhanced algorithms only decrease the computation time to a certain extent [47]. Inspired by this, we study neighborhood rough sets from both views to obtain effective uncertainty measures for neighborhood decision systems. The algebra view and the information view are combined to develop an attribute reduction algorithm for infinite sets in continuous-valued data sets. Specifically, the Lebesgue measure [25] is introduced into neighborhood entropy to investigate uncertainty measures in neighborhood decision systems, and an attribute reduction method using Lebesgue and entropy measures is presented. A heuristic search algorithm is then designed to analyze the uncertainty and noise of continuous and complex data sets.

The rest of this paper is structured as follows. Section 2 briefly reviews some related concepts. Section 3 describes Lebesgue measure-based neighborhood uncertainty measures and neighborhood entropy-based uncertainty measures, gives a comparative analysis with two representative reducts, and then presents an attribute reduction algorithm with complexity analysis. In Section 4, classification experiments are conducted on five public UCI data sets and four gene expression data sets. Finally, Section 5 summarizes this study.

2. Previous Knowledge

In this section, we introduce some basic concepts and properties of decision systems. Detailed descriptions can be found in [22,41,49,50].

2.1. Rough Sets

A decision system is given as DS = <U, C, D, V, f>, usually written more simply as DS = <U, C, D>. Here U = {x1, x2, ⋯, xl} is a sample set called the universe; C = {a1, a2, ⋯, as} is a set of conditional attributes that describe the samples; D is a set of decision attributes; V = ∪ Va, where Va is the value set of attribute a; and f: U × (C ∪ D) → V is a map function, where f(a, x) represents the value of x on attribute a ∈ C ∪ D.

Given a decision system DS = <U, C, D> with B ⊆ C, for any two samples x, y ∈ U, the equivalence relation [49] is described as:

$$IND(B) = \{(x, y) \in U \times U \mid f(a, x) = f(a, y),\ \forall a \in B\} \tag{1}$$

Then, for any sample x ∈ U, $[x]_B = \{y \mid y \in U, (x, y) \in IND(B)\}$ is the equivalence class of x, and U/IND(B) (U/B for short) is the partition of U composed of these equivalence classes.

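The equivalence classes define two classical sets, called the upper and lower approximation sets, as elementary units [49]. Given a decision system DS = <U, C, D> with B ⊆ C and X ⊆ U, the upper approximation set and the lower approximation set of X with respect to B can be described, respectively, as:

$$\overline{B}(X) = \{x \in U \mid [x]_B \cap X \neq \varnothing\} \tag{2}$$

$$\underline{B}(X) = \{x \in U \mid [x]_B \subseteq X\} \tag{3}$$
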
The approximation precision is the ratio of the cardinality of the lower approximation set to that of the upper approximation set, and it measures the imprecision of a rough set. The approximation roughness is obtained by subtracting the precision from 1, as follows.

Given a decision system DS = <U, C, D> with B ⊆ C and X ⊆ U, the approximation precision of X with respect to B is described as:

$$\alpha_B(X) = \frac{|\underline{B}(X)|}{|\overline{B}(X)|} \tag{4}$$

Given a decision system DS = <U, C, D> with B ⊆ C and X ⊆ U, the approximation roughness of X with respect to B is described as:

$$\rho_B(X) = 1 - \alpha_B(X) \tag{5}$$

The approximation precision and the approximation roughness are used to measure the uncertainty and evaluate a rough set of information systems [41].
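
The equivalence classes, approximation sets, precision, and roughness reviewed above can be computed directly for a small discrete table. The sketch below makes several illustrative assumptions:

- A table is a list of tuples indexed by attribute position.
- X is a set of object indices.
- The precision of an empty upper approximation is set to 1. The definition leaves that case open, so this is a convention chosen here.

The helper names are illustrative.

```python
from collections import defaultdict


def partition(table, attrs):
    """U/B: group object indices by their values on the attributes in attrs."""
    blocks = defaultdict(set)
    for i, row in enumerate(table):
        blocks[tuple(row[a] for a in attrs)].add(i)
    return list(blocks.values())


def approximations(table, attrs, X):
    """Lower and upper approximations of the object set X with respect to attrs."""
    lower, upper = set(), set()
    for block in partition(table, attrs):
        if block <= X:   # equivalence class contained in X
            lower |= block
        if block & X:    # equivalence class intersects X
            upper |= block
    return lower, upper


def precision_and_roughness(table, attrs, X):
    lower, upper = approximations(table, attrs, X)
    precision = len(lower) / len(upper) if upper else 1.0
    return precision, 1.0 - precision


table = [(1, 0), (1, 0), (2, 1), (3, 1)]  # four objects, two attributes
X = {0, 2}
print(approximations(table, [0], X))           # ({2}, {0, 1, 2})
print(precision_and_roughness(table, [0], X))  # precision 1/3, roughness 2/3
```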

2.2. Information Entropy Measures

Given a decision system DS = <U, C, D> with B ⊆ C and U/B = {X1, X2, ⋯, Xn}, the information entropy [50] of B is described as:

$$H(B) = -\sum_{i=1}^{n} p(X_i) \log p(X_i) \tag{6}$$

where $p(X_i) = |X_i|/|U|$ is the probability of Xi ∈ U/B, and |Xi| denotes the cardinality of the equivalence class Xi.

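Given a decision system DS = <U, C, D> with B1, B2 ⊆ C, U/B1 = {X1, X2, ⋯, Xn}, and U/B2 = {Y1, Y2, ⋯, Ym}, the joint entropy [50] of B1 and B2 is defined as:

$$H(B_1 \cup B_2) = -\sum_{i=1}^{n} \sum_{j=1}^{m} p(X_i \cap Y_j) \log p(X_i \cap Y_j) \tag{7}$$

where $p(X_i \cap Y_j) = |X_i \cap Y_j|/|U|$, i = 1, 2, ⋯, n, and j = 1, 2, ⋯, m.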

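Given a decision system DS = <U, C, D> with B1, B2 ⊆ C, U/B1 = {X1, X2, ⋯, Xn}, and U/B2 = {Y1, Y2, ⋯, Ym}, the conditional information entropy [50] of B2 with respect to B1 is defined as:

$$H(B_2 \mid B_1) = -\sum_{i=1}^{n} \sum_{j=1}^{m} p(X_i \cap Y_j) \log p(Y_j \mid X_i) \tag{8}$$

where $p(X_i \cap Y_j) = |X_i \cap Y_j|/|U|$, $p(Y_j \mid X_i) = |X_i \cap Y_j|/|X_i|$, i = 1, 2, ⋯, n, and j = 1, 2, ⋯, m.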
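
The three entropies above depend only on block cardinalities, so they can be computed from per-object block labels. A minimal sketch follows. It assumes base-2 logarithms, since the base is not fixed in the text. It represents a partition U/B by giving each object a hashable label, such as its tuple of attribute values. The conditional entropy uses the identity H(B2 | B1) = H(B1 ∪ B2) − H(B1), which follows from the joint and conditional definitions.

```python
import math
from collections import Counter


def entropy(labels):
    """H(B) for the partition induced by per-object labels (base-2 logarithm)."""
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())


def joint_entropy(labels1, labels2):
    """H(B1 ∪ B2): the blocks of the joint partition are the non-empty X_i ∩ Y_j."""
    return entropy(list(zip(labels1, labels2)))


def conditional_entropy(target, given):
    """H(target | given) = H(given ∪ target) - H(given)."""
    return joint_entropy(given, target) - entropy(given)


b1 = [0, 0, 1, 1]  # U/B1 = {{x1, x2}, {x3, x4}}
b2 = [0, 1, 0, 1]  # U/B2 = {{x1, x3}, {x2, x4}}
print(entropy(b1), joint_entropy(b1, b2), conditional_entropy(b2, b1))  # 1.0 2.0 1.0
```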

2.3. Neighborhood Rough Sets

A real-valued data set is formalized as a neighborhood decision system NDS = <U, C, D, V, f, ∆, δ>. Here U = {x1, x2, ⋯, xl} is a sample set called the universe; C = {a1, a2, ⋯, as} is the set of all conditional attributes; D is a set of decision attributes; V = ∪ Va, where Va is the value set of attribute a; f: U × (C ∪ D) → V is a map function; ∆: U × U → [0, ∞) is a distance function; and δ is a neighborhood parameter with 0 ≤ δ ≤ 1. In the following, NDS = <U, C, D, V, f, ∆, δ> is simply written as NDS = <U, C ∪ D, δ>.

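Given a neighborhood decision system NDS = <U, C ∪ D, δ> with B ⊆ C, a distance function ∆, and a neighborhood parameter δ ∈ [0, 1], the neighborhood relation [22] is described as:

$$NR_\delta(B) = \{(x, y) \in U \times U \mid \Delta_B(x, y) \le \delta\} \tag{9}$$

According to the definition of the neighborhood relation, for any x ∈ U, the neighborhood class of x with respect to B ⊆ C is expressed as:

$$\delta_B(x) = \{y \in U \mid (x, y) \in NR_\delta(B)\} = \{y \in U \mid \Delta_B(x, y) \le \delta\} \tag{10}$$
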
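The Euclidean distance function effectively reflects the basic information of unknown data [22], so it is adopted in this paper. Its formula is expressed as:

$$\Delta_B(x, y) = \left(\sum_{i=1}^{N} \big(f(a_i, x) - f(a_i, y)\big)^2\right)^{1/2} \tag{11}$$

where ai ranges over the attributes in B and N = |B| is the cardinality of B.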

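Given a neighborhood decision system NDS = <U, C ∪ D, δ> with B ⊆ C and X ⊆ U, the neighborhood upper approximation set and the neighborhood lower approximation set of X with respect to B are denoted, respectively, as:

$$\overline{B}(X)_\delta = \{x \in U \mid \delta_B(x) \cap X \neq \varnothing\} \tag{12}$$

$$\underline{B}(X)_\delta = \{x \in U \mid \delta_B(x) \subseteq X\} \tag{13}$$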
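
For a finite sample, the neighborhood classes and the neighborhood approximation sets above can be sketched with NumPy. The sketch assumes the following:

- Attribute values are already normalized to [0, 1], so that δ ∈ [0, 1] is meaningful.
- Samples are the rows of `data`.
- The set to approximate is given as a set of row indices.

The function names are hypothetical.

```python
import numpy as np


def neighborhood_classes(data, attrs, delta):
    """delta_B(x_i) for every row i, using the Euclidean distance on the columns in attrs."""
    sub = data[:, np.asarray(attrs, dtype=int)]
    # dist[i, j] = Delta_B(x_i, x_j)
    dist = np.sqrt(((sub[:, None, :] - sub[None, :, :]) ** 2).sum(axis=-1))
    return [{int(j) for j in np.flatnonzero(row <= delta)} for row in dist]


def neighborhood_approximations(data, attrs, delta, target):
    """Neighborhood lower and upper approximations of a set of row indices."""
    lower, upper = set(), set()
    for i, nb in enumerate(neighborhood_classes(data, attrs, delta)):
        if nb <= target:   # delta_B(x_i) is contained in the target set
            lower.add(i)
        if nb & target:    # delta_B(x_i) meets the target set
            upper.add(i)
    return lower, upper


data = np.array([[0.10], [0.15], [0.50], [0.55], [0.90]])
print(neighborhood_classes(data, [0], 0.1))
# [{0, 1}, {0, 1}, {2, 3}, {2, 3}, {4}]
print(neighborhood_approximations(data, [0], 0.1, {0, 1, 2}))
# ({0, 1}, {0, 1, 2, 3})
```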

3. Attribute Reduction Using Lebesgue and Entropy Measures in Neighborhood Decision Systems

3.1. Lebesgue Measure-Based Neighborhood Uncertainty Measures

Existing neighborhood rough set models cannot handle infinite sets. To address this problem, we propose a neighborhood rough set model combined with the Lebesgue measure, built on the neighborhood rough set model and measure theory. First, the concept of the Lebesgue measure is introduced to extend neighborhood rough sets to infinite sets.

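For any M-dimensional Euclidean space $\mathbb{R}^M$, let E be a point set in $\mathbb{R}^M$. Consider any countable collection of open intervals {Ii} that covers E, i.e., $E \subseteq \bigcup_i I_i$. The sum of their volumes is $\mu = \sum_i |I_i|$, and all such μ form a set of numbers that is bounded below. Its infimum is called the Lebesgue outer measure of E, denoted as m*(E), i.e.,

$$m^*(E) = \inf\Big\{\sum_{i} |I_i| \;\Big|\; E \subseteq \bigcup_{i} I_i\Big\} \tag{14}$$
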
For a bounded set E contained in an interval I, the Lebesgue inner measure can be described as $m_*(E) = |I| - m^*(I - E)$. If $m_*(E) = m^*(E)$, then E is said to be measurable, and the common value is the Lebesgue measure m(E). When the Lebesgue measure of U is 0, as for a finite sample set, m(U) is represented by the cardinality of U, i.e., |U|. In this paper, m(X) is used uniformly to denote the Lebesgue measure of a set X.
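
Any countable point set, and hence any finite sample set, has Lebesgue measure 0. This is why the cardinality |U| stands in for m(U) when U is finite. The standard covering argument is shown below for $\mathbb{R}$ (M = 1) as an illustration:

```latex
E = \{q_1, q_2, \dots\} \subset \mathbb{R}, \qquad
I_i = \Big(q_i - \frac{\varepsilon}{2^{i+1}},\; q_i + \frac{\varepsilon}{2^{i+1}}\Big), \qquad
|I_i| = \frac{\varepsilon}{2^{i}},

E \subseteq \bigcup_{i=1}^{\infty} I_i
\;\Longrightarrow\;
m^*(E) \le \sum_{i=1}^{\infty} \frac{\varepsilon}{2^{i}} = \varepsilon
\quad \text{for every } \varepsilon > 0
\;\Longrightarrow\;
m^*(E) = 0 .
```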

Definition 1.

Given a neighborhood decision system NDS = <U, C ∪ D, δ> with non-empty infinite set U and any B ⊆ C, let ∆B(x, y) be a distance function between two objects and let 0 ≤ δ ≤ 1 be a neighborhood parameter. For any x, y ∈ U, the Lebesgue measure of the neighborhood class with respect to B is defined as

$$m(\delta_B(x)) = m(\{y \in U \mid \Delta_B(x, y) \le \delta\}) \tag{15}$$

Proposition 1.

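Given a neighborhood decision system NDS = <U, C ∪ D, δ> with non-empty infinite set U, and P, Q ⊆ C, for any x ∈ U, the following properties hold:

(1) m(U) = |U|.

(2) If Q ⊆ P, then $m(\delta_P(x)) \le m(\delta_Q(x))$.

(3) If 0 ≤ α ≤ δ ≤ 1, then $m(\alpha_P(x)) \le m(\delta_P(x))$, where $\alpha_P(x)$ denotes the neighborhood class of x with respect to P under the neighborhood parameter α.

(4) For any q ∈ Q, $m(\delta_Q(x)) \le m(\delta_{\{q\}}(x))$.

(5) $m(\delta_P(x)) \neq 0$ and $m(\bigcup_{x \in U} \delta_P(x)) = m(U)$.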

Proof.

(1) This proof is straightforward.

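(2) For any Q ⊆ P ⊆ C, let R = P − Q, so that P = Q ∪ R. From Equation (10), one has that $\delta_Q(x) = \{y \mid x, y \in U, \Delta_Q(x, y) \le \delta\}$ and $\delta_P(x) = \{y \mid x, y \in U, \Delta_{Q \cup R}(x, y) \le \delta\}$. Then, it can be obtained from Proposition 1 in [20] that $\Delta_Q(x, y) \le \Delta_{Q \cup R}(x, y)$, i.e., $\Delta_Q(x, y) \le \Delta_P(x, y)$. It follows from Equation (10) that $\delta_P(x) \subseteq \delta_Q(x)$. Therefore, by Equation (15), $m(\delta_P(x)) \le m(\delta_Q(x))$ holds.

(3) For any 0 ≤ α ≤ δ ≤ 1, it follows immediately from Proposition 1 in [41] that $\alpha_P(x) \subseteq \delta_P(x)$ holds. Hence, one has $m(\alpha_P(x)) \le m(\delta_P(x))$.

(4) For any q ∈ Q ⊆ C, it follows from Proposition 1 in [41] that $\delta_Q(x) \subseteq \delta_{\{q\}}(x)$. Then, one has that $m(\delta_Q(x)) \le m(\delta_{\{q\}}(x))$.

(5) For any P ⊆ C, it can be obtained from Proposition 1 in [41] that $\delta_P(x) \neq \varnothing$ and $\bigcup_{x \in U} \delta_P(x) = U$. Hence, both $m(\delta_P(x)) \neq 0$ and $m(\bigcup_{x \in U} \delta_P(x)) = m(U)$ hold. □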
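
Properties (2) to (5) of Proposition 1 can be checked numerically on a finite sample, where m reduces to the counting measure (m(U) = |U| by property (1)). The sketch below assumes NumPy, randomly generated data in [0, 1], and arbitrarily chosen attribute subsets and radii. It is a sanity check of the inclusions used in the proof, not part of the authors' method.

```python
import numpy as np


def neighborhood_sizes(data, attrs, delta):
    """m(delta_B(x)) for every row x, with m taken as the counting measure."""
    sub = data[:, np.asarray(attrs, dtype=int)]
    dist = np.sqrt(((sub[:, None, :] - sub[None, :, :]) ** 2).sum(axis=-1))
    return (dist <= delta).sum(axis=1)


rng = np.random.default_rng(0)
data = rng.random((50, 4))
Q, P = [0, 1], [0, 1, 2]  # Q is a subset of P
size_Q = neighborhood_sizes(data, Q, 0.3)
size_P = neighborhood_sizes(data, P, 0.3)

assert np.all(size_P <= size_Q)                              # (2) more attributes, smaller classes
assert np.all(neighborhood_sizes(data, P, 0.2) <= size_P)    # (3) alpha = 0.2 <= delta = 0.3
assert np.all(size_Q <= neighborhood_sizes(data, [0], 0.3))  # (4) q = 0 belongs to Q
assert np.all(size_P >= 1)                                   # (5) x lies in its own class
print("Proposition 1 (2)-(5) hold on this sample")
```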

Definition 2.

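Given a neighborhood decision system NDS = <U, C ∪ D, δ> with non-empty infinite set U, B ⊆ C and X ⊆ U, an upper approximation and a lower approximation of X with respect to B based on Lebesgue measure are defined, respectively, as:

$$m(\overline{B}(X)_\delta) = m(\{x \in U \mid \delta_B(x) \cap X \neq \varnothing\}) \tag{16}$$

$$m(\underline{B}(X)_\delta) = m(\{x \in U \mid \delta_B(x) \subseteq X\}) \tag{17}$$
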
Proposition 2.

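Given a neighborhood decision system NDS = <U, C ∪ D, δ> with non-empty infinite set U, B ⊆ C and X, Y ⊆ U, the following properties hold:

(1) $m(\underline{B}(X)_\delta) \le m(\overline{B}(X)_\delta)$.

(2) $m(\overline{B}(X \cup Y)_\delta) = m(\overline{B}(X)_\delta \cup \overline{B}(Y)_\delta) \le m(\overline{B}(X)_\delta) + m(\overline{B}(Y)_\delta)$.

(3) $m(\underline{B}(X \cup Y)_\delta) \ge m(\underline{B}(X)_\delta \cup \underline{B}(Y)_\delta)$; in particular, if X ∩ Y = Ø, then $m(\underline{B}(X \cup Y)_\delta) \ge m(\underline{B}(X)_\delta) + m(\underline{B}(Y)_\delta)$.

(4) If X ⊆ Y, then $m(\underline{B}(X)_\delta) \le m(\underline{B}(Y)_\delta)$.

(5) If X ⊆ Y, then $m(\overline{B}(X)_\delta) \le m(\overline{B}(Y)_\delta)$.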

Proof.

(1) This proof is straightforward.

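(2) For any $x \in \overline{B}(X \cup Y)_\delta$, it follows from Equation (12) that $\delta_B(x) \cap (X \cup Y) \neq \varnothing$, and then $(\delta_B(x) \cap X) \cup (\delta_B(x) \cap Y) \neq \varnothing$. Hence, $\delta_B(x) \cap X \neq \varnothing$ or $\delta_B(x) \cap Y \neq \varnothing$. From Equation (12), one has that $x \in \overline{B}(X)_\delta$ or $x \in \overline{B}(Y)_\delta$, and then $x \in \overline{B}(X)_\delta \cup \overline{B}(Y)_\delta$. Each step can be reversed, so $\overline{B}(X \cup Y)_\delta = \overline{B}(X)_\delta \cup \overline{B}(Y)_\delta$. Therefore, $m(\overline{B}(X \cup Y)_\delta) = m(\overline{B}(X)_\delta \cup \overline{B}(Y)_\delta)$. By the subadditivity of the measure, $m(\overline{B}(X \cup Y)_\delta) \le m(\overline{B}(X)_\delta) + m(\overline{B}(Y)_\delta)$.

(3) Since X ⊆ X ∪ Y ⊆ U and Y ⊆ X ∪ Y ⊆ U, it follows from Equation (5) in [49] that $\underline{B}(X)_\delta \subseteq \underline{B}(X \cup Y)_\delta$ and $\underline{B}(Y)_\delta \subseteq \underline{B}(X \cup Y)_\delta$. This yields $\underline{B}(X)_\delta \cup \underline{B}(Y)_\delta \subseteq \underline{B}(X \cup Y)_\delta$. Hence, $m(\underline{B}(X \cup Y)_\delta) \ge m(\underline{B}(X)_\delta \cup \underline{B}(Y)_\delta)$. Because $x \in \delta_B(x)$, one has $\underline{B}(X)_\delta \subseteq X$ and $\underline{B}(Y)_\delta \subseteq Y$. So if X ∩ Y = Ø, these two lower approximations are disjoint, and $m(\underline{B}(X \cup Y)_\delta) \ge m(\underline{B}(X)_\delta) + m(\underline{B}(Y)_\delta)$.

(4) Suppose that X ⊆ Y. Then X ∩ Y = X, so $\underline{B}(X \cap Y)_\delta = \underline{B}(X)_\delta$. Similar to Equation (5) in [49], one obtains $\underline{B}(X \cap Y)_\delta = \underline{B}(X)_\delta \cap \underline{B}(Y)_\delta$. Hence, $\underline{B}(X)_\delta = \underline{B}(X)_\delta \cap \underline{B}(Y)_\delta$, i.e., $\underline{B}(X)_\delta \subseteq \underline{B}(Y)_\delta$. Therefore, $m(\underline{B}(X)_\delta) \le m(\underline{B}(Y)_\delta)$ holds.

(5) For any X ⊆ Y, X ∪ Y = Y, so $\overline{B}(X \cup Y)_\delta = \overline{B}(Y)_\delta$. From the proof of (2), $\overline{B}(X \cup Y)_\delta = \overline{B}(X)_\delta \cup \overline{B}(Y)_\delta$. Obviously, $\overline{B}(X)_\delta \subseteq \overline{B}(Y)_\delta$. Thus, $m(\overline{B}(X)_\delta) \le m(\overline{B}(Y)_\delta)$ holds. □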

Definition 3.

Given a neighborhood decision system NDS = <U, C ∪ D, δ> with non-empty infinite set U, B ⊆ C and X ⊆ U, a new neighborhood approximation precision of X with respect to B based on Lebesgue measure is defined as:

$$\alpha_B^\delta(X) = \frac{m(\underline{B}(X)_\delta)}{m(\overline{B}(X)_\delta)} \tag{18}$$

In a neighborhood decision system, the neighborhood approximation precision measures the proportion of possibly correct decisions when classifying objects. It increases monotonically as conditional attributes are added.

Property 1.

Given a neighborhood decision system NDS = <U, C ∪ D, δ> with non-empty infinite set U, B ⊆ C and X ⊆ U, then $0 \le \alpha_B^\delta(X) \le 1$.

Proof.

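For B ⊆ C and X ⊆ U, it follows from Proposition 2 that $m(\underline{B}(X)_\delta) \le m(\overline{B}(X)_\delta)$. Since both measures are nonnegative, $0 \le m(\underline{B}(X)_\delta)/m(\overline{B}(X)_\delta) \le 1$, and from Equation (18), one has that $0 \le \alpha_B^\delta(X) \le 1$. □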

The neighborhood approximation precision reflects the degree to which knowledge about set X is acquired. When $\alpha_B^\delta(X) = 1$, the B-boundary of X is empty, and X is precisely definable on B. When $\alpha_B^\delta(X) < 1$, X has a non-empty B-boundary region and is not definable on B. Other metrics could also be used to quantify the imprecision of X.

Definition 4.

Given a neighborhood decision system NDS = <U, C ∪ D, δ> with non-empty infinite set U, B ⊆ C and X ⊆ U, a new neighborhood roughness of X with respect to B based on Lebesgue measure is defined as:

$$\rho_B^\delta(X) = 1 - \alpha_B^\delta(X) \tag{19}$$

The neighborhood roughness of X with respect to B is complementary to the neighborhood approximation precision. It represents the degree of incompleteness of the knowledge about set X.

Property 2.

Given a neighborhood decision system NDS = <U, C ∪ D, δ> with non-empty infinite set U, B ⊆ C and X ⊆ U, then $0 \le \rho_B^\delta(X) \le 1$.

Proof.

The result follows immediately from Property 1 and Definition 4. □

Proposition 3.

Given a neighborhood decision system NDS = <U, C ∪ D, δ> with non-empty infinite set U, Q ⊆ P ⊆ C and X ⊆ U, then $\alpha_Q^\delta(X) \le \alpha_P^\delta(X)$ and $\rho_Q^\delta(X) \ge \rho_P^\delta(X)$.

Proof.

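For any Q ⊆ P ⊆ C and x ∈ U, it follows from the proof of Proposition 1 that $\delta_P(x) \subseteq \delta_Q(x)$. By Equation (12), $\delta_P(x) \cap X \neq \varnothing$ implies $\delta_Q(x) \cap X \neq \varnothing$, so $\overline{P}(X)_\delta \subseteq \overline{Q}(X)_\delta$. Similarly, from Equation (13), $\delta_Q(x) \subseteq X$ implies $\delta_P(x) \subseteq X$, so $\underline{Q}(X)_\delta \subseteq \underline{P}(X)_\delta$. It follows that $m(\overline{P}(X)_\delta) \le m(\overline{Q}(X)_\delta)$ and $m(\underline{Q}(X)_\delta) \le m(\underline{P}(X)_\delta)$. Then, it is obvious that $\frac{m(\underline{Q}(X)_\delta)}{m(\overline{Q}(X)_\delta)} \le \frac{m(\underline{P}(X)_\delta)}{m(\overline{P}(X)_\delta)}$, so one has $1 - \alpha_Q^\delta(X) \ge 1 - \alpha_P^\delta(X)$. Therefore, both $\alpha_Q^\delta(X) \le \alpha_P^\delta(X)$ and $\rho_Q^\delta(X) \ge \rho_P^\delta(X)$ hold. □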
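
Proposition 3 can also be illustrated on a small finite sample, taking m as the counting measure. The sketch below assumes NumPy, a hand-made two-attribute data set, and a target set of row indices. Adding the second attribute splits the neighborhoods, so the precision rises and the roughness falls, as the proposition predicts. The names are illustrative only.

```python
import numpy as np


def precision_roughness(data, attrs, delta, target):
    """Neighborhood approximation precision and roughness of target (counting measure)."""
    sub = data[:, np.asarray(attrs, dtype=int)]
    dist = np.sqrt(((sub[:, None, :] - sub[None, :, :]) ** 2).sum(axis=-1))
    lower = upper = 0
    for row in dist:
        nb = {int(j) for j in np.flatnonzero(row <= delta)}
        lower += int(nb <= target)        # x belongs to the lower approximation
        upper += int(bool(nb & target))   # x belongs to the upper approximation
    precision = lower / upper if upper else 1.0
    return precision, 1.0 - precision


data = np.array([[0.10, 0.2], [0.15, 0.8], [0.50, 0.2], [0.55, 0.8], [0.90, 0.5]])
target = {0, 1, 2}
print(precision_roughness(data, [0], 0.1, target))     # (0.5, 0.5)
print(precision_roughness(data, [0, 1], 0.1, target))  # (1.0, 0.0)
```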

Classical measures are used to estimate how a set of data is classified in a knowledge system [3]. By Proposition 3, the neighborhood approximation precision and the neighborhood roughness vary monotonically with the attribute set, so they can be used to measure the uncertainty of rough classification in neighborhood decision systems.

3.2. Neighborhood Entropy-Based Uncertainty Measures

In rough set theory, information entropy is used to assess the equivalence classes in a discrete decision system [50]. However, it is not appropriate for measuring the neighborhood classes in real-valued data sets [18]. To solve this problem, the concept of neighborhood has been introduced into information entropy to extend Shannon entropy [51]. However, most neighborhood entropy-based measures and their variations only analyze finite sets, which limits the practical application of neighborhood rough sets to a certain degree. The Lebesgue measure can measure the uncertainty of infinite sets [25], so it is introduced here to study the uncertainty measures of infinite sets in neighborhood decision systems.

Given a neighborhood decision system NDS = <U, C ∪ D, δ> with B ⊆ C, let $\delta_B(x_i)$ be the neighborhood class of xi ∈ U. Hu et al. [21] described the neighborhood entropy of xi with respect to B as follows:

$$NH_\delta^{x_i}(B) = -\log \frac{|\delta_B(x_i)|}{|U|} \tag{20}$$

Definition 5.

Given a neighborhood decision system NDS = <U, C ∪ D, δ> with non-empty infinite set U, B ⊆ C and xi ∈ U, a new neighborhood entropy of B based on Lebesgue measure is defined as:

$$H_\delta^{x_i}(B) = -\log \frac{m(\delta_B(x_i))}{m(U)} \tag{21}$$

Definition 6.

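Given a neighborhood decision system NDS = <U, C ∪ D, δ> with non-empty infinite set U and B ⊆ C, an average neighborhood entropy of the universe U based on Lebesgue measure is defined as:

$$H_\delta(B) = \frac{1}{m(U)} \sum_{x_i \in U} H_\delta^{x_i}(B) = -\frac{1}{m(U)} \sum_{x_i \in U} \log \frac{m(\delta_B(x_i))}{m(U)} \tag{22}$$
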
Proposition 4.

Given a neighborhood decision system NDS = <U, C ∪ D, δ> with non-empty infinite set U and P, Q ⊆ C, if $\delta_P(x_i) = \delta_Q(x_i)$ for any xi ∈ U, then $H_\delta(P) = H_\delta(Q)$.

Proof.

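Suppose that $\delta_P(x_i) = \delta_Q(x_i)$ for any xi ∈ U. It follows from Equation (15) that $m(\delta_P(x_i)) = m(\delta_Q(x_i))$. Then, $\log \frac{m(\delta_P(x_i))}{m(U)} = \log \frac{m(\delta_Q(x_i))}{m(U)}$ for every xi ∈ U. Hence, by Equation (22), one has $H_\delta(P) = H_\delta(Q)$. □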
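
For a finite sample with m taken as the counting measure, the average neighborhood entropy is assumed here to reduce to H_δ(B) = (1/|U|) Σ_i log(|U| / |δ_B(x_i)|), i.e., the form of Hu et al. The sketch below assumes NumPy and base-2 logarithms; the base is not fixed in the text. It also illustrates Proposition 4: two different attributes that induce identical neighborhood classes yield the same entropy.

```python
import numpy as np


def avg_neighborhood_entropy(data, attrs, delta):
    """(1/|U|) * sum_i log2(|U| / |delta_B(x_i)|), with m taken as the counting measure."""
    sub = data[:, np.asarray(attrs, dtype=int)]
    dist = np.sqrt(((sub[:, None, :] - sub[None, :, :]) ** 2).sum(axis=-1))
    sizes = (dist <= delta).sum(axis=1)
    return float(np.mean(np.log2(data.shape[0] / sizes)))


# Column 1 is column 0 shifted by 0.3, so both attributes give the same neighborhood classes.
data = np.array([[0.10, 0.40], [0.15, 0.45], [0.50, 0.80], [0.55, 0.85]])
print(avg_neighborhood_entropy(data, [0], 0.1))  # 1.0
print(avg_neighborhood_entropy(data, [1], 0.1))  # 1.0
print(avg_neighborhood_entropy(data, [0], 1.0))  # 0.0 (every class is U)
```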

Definition 7.

Given a neighborhood decision system NDS = <U, C ∪ D, δ> with non-empty infinite set U and B ⊆ C, let $\delta_B(x_i)$ be the neighborhood class of xi in the neighborhood relation, and let $[x_i]_d$ be the equivalence class formed by the decision attribute d of xi in the equivalence relation. Then, a joint entropy of subsets B and d based on Lebesgue measure is defined as:

$$H_\delta(Bd) = -\frac{1}{m(U)} \sum_{x_i \in U} \log \frac{m(\delta_B(x_i) \cap [x_i]_d)}{m(U)} \tag{23}$$

Proposition 5.

Given a neighborhood decision system NDS = <U, C ∪ {d}, δ> with non-empty infinite set U and Q ⊆ P ⊆ C, then $H_\delta(Qd) \le H_\delta(Pd)$.

Proof.

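For any Q ⊆ P ⊆ C, it can be obtained from Proposition 1 and its proof that $\delta_P(x_i) \subseteq \delta_Q(x_i)$ and $m(\delta_P(x_i)) \le m(\delta_Q(x_i))$. Let $[x_i]_d$ be the equivalence class formed by d of xi in the equivalence relation. Then, $\delta_P(x_i) \cap [x_i]_d \subseteq \delta_Q(x_i) \cap [x_i]_d$ holds, and obviously $m(\delta_P(x_i) \cap [x_i]_d) \le m(\delta_Q(x_i) \cap [x_i]_d)$. It follows that $\log \frac{m(\delta_P(x_i) \cap [x_i]_d)}{m(U)} \le \log \frac{m(\delta_Q(x_i) \cap [x_i]_d)}{m(U)}$. Summing over all xi ∈ U gives $-\frac{1}{m(U)} \sum_{x_i \in U} \log \frac{m(\delta_Q(x_i) \cap [x_i]_d)}{m(U)} \le -\frac{1}{m(U)} \sum_{x_i \in U} \log \frac{m(\delta_P(x_i) \cap [x_i]_d)}{m(U)}$. Therefore, by Equation (23), $H_\delta(Qd) \le H_\delta(Pd)$ holds. □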
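
Proposition 5 can be checked on random data under a counting-measure reading of the joint entropy, i.e., H_δ(Bd) = (1/|U|) Σ_i log(|U| / |δ_B(x_i) ∩ [x_i]_d|). That reading is an assumption of this sketch. The sketch also assumes NumPy, base-2 logarithms, and randomly generated attributes and labels. It only illustrates the monotonicity and is not the authors' implementation.

```python
import numpy as np


def joint_neighborhood_entropy(data, labels, attrs, delta):
    """(1/|U|) * sum_i log2(|U| / |delta_B(x_i) ∩ [x_i]_d|), counting measure assumed."""
    sub = data[:, np.asarray(attrs, dtype=int)]
    dist = np.sqrt(((sub[:, None, :] - sub[None, :, :]) ** 2).sum(axis=-1))
    same_class = labels[:, None] == labels[None, :]
    # Each intersection contains x_i itself, so every size is at least 1.
    sizes = ((dist <= delta) & same_class).sum(axis=1)
    return float(np.mean(np.log2(data.shape[0] / sizes)))


rng = np.random.default_rng(1)
data = rng.random((60, 5))
labels = rng.integers(0, 3, size=60)
h_Q = joint_neighborhood_entropy(data, labels, [0, 1], 0.25)
h_P = joint_neighborhood_entropy(data, labels, [0, 1, 2], 0.25)
print(h_Q <= h_P)  # True: Q ⊆ P implies H_δ(Qd) ≤ H_δ(Pd)
```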

Definition 8.

Given a neighborhood decision system NDS = <U, C ∪ {d}, δ> with non-empty infinite set U and B ⊆ C, for any object x ∈ U, let $\delta_B(x)$ be the neighborhood class of x generated by the neighborhood relation NRδ(B). Let $[x_i]_d$ be the equivalence class of xi generated by the equivalence relation IND(d), and let U/{d} = {d1, d2, …, dt, …}. Then, the neighborhood roughness joint entropy based on Lebesgue measure of d with respect to B is defined as:

Wang et al. [46] stated that all concepts and computations in rough set theory based on the upper and lower approximation sets constitute the algebra view of rough set theory. Information entropy and its extensions constitute the information view. In Equation (24), $\rho_B^\delta(d_j)$ is the neighborhood roughness of dj with respect to B in the algebra view, and it represents the degree of incompleteness of the knowledge about set dj. The entropy term of Equation (24) corresponds to the joint entropy in the information view. Hence, Definition 8 can analyze and measure the uncertainty of neighborhood decision systems based on Lebesgue and entropy measures from both the algebra view and the information view.

Property 3.

Given a neighborhood decision system NDS = <U, C ∪ {d}, δ> with non-empty infinite set U, B ⊆ C, and U/{d} = {d1, d2, …, dt, …}, then

$$NRH(d, B) \ge 0$$

Proof.

For any B ⊆ C, it follows from Proposition 1 that m(U) = |U|. From Equation (23), since $m(\delta_B(x_i) \cap [x_i]_d) \le m(U)$, it is obvious that $\log \frac{m(\delta_B(x_i) \cap [x_i]_d)}{m(U)} \le 0$, and by Property 2, $\rho_B^\delta(d_j) \ge 0$. Therefore, NRH(d, B) ≥ 0 holds. □

Proposition 6.

Given a neighborhood decision system NDS = <U, C ∪ D, δ> with non-empty infinite set U, Q ⊆ P ⊆ C, and U/{d} = {d1, d2, …, dt, …}, then NRH(D, Q) ≤ NRH(D, P).

Proof.

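For any Q ⊆ P ⊆ C and X ⊆ U, it follows from Proposition 3 that $\rho_Q^\delta(X) \ge \rho_P^\delta(X)$ and $\alpha_Q^\delta(X) \le \alpha_P^\delta(X)$. From Proposition 5, one has that $H_\delta(Qd) \le H_\delta(Pd)$. Combining these results with Equation (24), one obtains NRH(D, Q) ≤ NRH(D, P). In particular, when $\delta_Q(x) = \delta_P(x)$ for any x ∈ U and $\rho_Q^\delta(d_j) = \rho_P^\delta(d_j)$ for 1 ≤ j ≤ t, one has NRH(D, Q) = NRH(D, P). Therefore, NRH(D, Q) ≤ NRH(D, P) holds. □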

Monotonicity is one of the most important properties of an effective uncertainty measure for attribute reduction. By Proposition 6, the neighborhood roughness joint entropy is monotonically non-decreasing as conditional attributes are added, which validates the monotonicity of the proposed uncertainty measure. Furthermore, this monotonicity supports the use of a greedy search strategy for attribute reduction.

Definition 9.

Given a neighborhood decision system NDS = <U, C ∪ D, δ> with non-empty infinite set U and B ⊆ C, for any a ∈ B, the internal attribute significance of a in B relative to D is defined as:

$$Sig_{inner}(a, B, D) = NRH(D, B) - NRH(D, B - \{a\}) \tag{25}$$

Definition 10.

Given a neighborhood decision system NDS = <U, C ∪ D, δ> with non-empty infinite set U and B ⊆ C, for any a ∈ C − B, the external attribute significance of a relative to D is defined as:

$$Sig_{outer}(a, B, D) = NRH(D, B \cup \{a\}) - NRH(D, B) \tag{26}$$

Definition 11.

Given a neighborhood decision system NDS = <U, C ∪ D, δ> with non-empty infinite set U and any a ∈ C, if NRH(D, C) > NRH(D, C − {a}), that is, $Sig_{inner}(a, C, D) > 0$, then the attribute a is a core attribute of C relative to D.

Definition 12.

Given a neighborhood decision system NDS = <U, C ∪ D, δ> with non-empty infinite set U and B ⊆ C, an attribute a ∈ B is necessary in B if and only if $Sig_{inner}(a, B, D) > 0$; otherwise, a is unnecessary. If every a ∈ B is necessary, B is said to be independent; otherwise, B is dependent.

Definition 13.

Given a neighborhood decision system NDS = <U, C ∪ D, δ> with non-empty infinite set U and B ⊆ C, if NRH(D, B) = NRH(D, C) and NRH(D, B) > NRH(D, B − {a}) for any a ∈ B, then B is said to be a reduct of C relative to D.
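
Definitions 9 to 13 suggest a greedy forward search. Starting from the empty set, repeatedly add the attribute with the largest external significance until the measure of the whole attribute set is reached. Then drop attributes whose internal significance is zero. The sketch below takes the measure as a parameter. Since NRH itself is not implemented here, it uses the neighborhood dependency |POS_B(D)|/|U| as a stand-in, which is also monotone in B. The sketch assumes NumPy, normalized data, and hypothetical function names. It is not the authors' algorithm.

```python
import numpy as np


def dependency(data, labels, attrs, delta):
    """Stand-in measure |POS_B(D)| / |U| for the neighborhood positive region."""
    sub = data[:, np.asarray(attrs, dtype=int)]
    dist = np.sqrt(((sub[:, None, :] - sub[None, :, :]) ** 2).sum(axis=-1))
    # x is in POS_B(D) when every neighbor of x shares the decision label of x.
    consistent = np.all((dist > delta) | (labels[:, None] == labels[None, :]), axis=1)
    return float(consistent.mean())


def greedy_reduct(data, labels, delta, measure=dependency, tol=1e-12):
    """Forward selection by external significance, then removal of unnecessary attributes."""
    remaining = list(range(data.shape[1]))
    target = measure(data, labels, remaining, delta)
    reduct = []
    while remaining and measure(data, labels, reduct, delta) < target - tol:
        base = measure(data, labels, reduct, delta)
        # Sig_outer(a, B, D) = M(B ∪ {a}) - M(B); keep the attribute with the largest value.
        best = max(remaining, key=lambda a: measure(data, labels, reduct + [a], delta) - base)
        reduct.append(best)
        remaining.remove(best)
    # Backward pass: an attribute whose removal keeps the target level is unnecessary.
    for a in list(reduct):
        trial = [b for b in reduct if b != a]
        if measure(data, labels, trial, delta) >= target - tol:
            reduct = trial
    return reduct


data = np.array([[0.1, 0.3, 0.5], [0.2, 0.9, 0.5], [0.8, 0.3, 0.5], [0.9, 0.9, 0.5]])
labels = np.array([0, 0, 1, 1])
print(greedy_reduct(data, labels, 0.15))  # [0]
```

Swapping in an implementation of NRH only requires passing a different `measure` with the same signature.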

3.3. Comparative Analysis with Two Representative Reducts

In a general information system, the definition of a reduct in the algebra view is usually equivalent to its definition in the information view. Moreover, the relative reduct of a decision system in the information view includes that in the algebra view. Thus, Wang et al. [46] declared that any relative reduct of a decision system in the information view must be a relative reduct in the algebra view, and heuristic algorithms can be designed on the basis of this conclusion. Following the classification ideas in [46], reducts based on the neighborhood roughness joint entropy should be defined from both the algebra view and the information view of neighborhood rough set theory. For convenience, the reduct in Definition 13 is called the neighborhood entropy reduct. Liu et al. [38] presented a positive region-based reduct for neighborhood decision systems, similar to that of the classical rough set model. This representative positive region-based relative reduct belongs to the algebra view of neighborhood rough set theory. Chen et al. [20] defined an information quantity similar to information entropy to evaluate neighborhood classes, and used the joint entropy gain to evaluate the significance of a candidate attribute. They proposed a representative joint entropy gain-based reduction algorithm, whose reduct belongs to the information view of neighborhood rough sets.

Given a neighborhood decision system NDS = <U, C ∪ D, δ> with non-empty infinite set U, B ⊆ C and D = {d}, a positive region reduct of the neighborhood decision system is presented in [38] as follows. If $|POS_B(D)| = |POS_C(D)|$ and $|POS_{B-\{a\}}(D)| < |POS_B(D)|$ for any a ∈ B, then B is a relative reduct of the neighborhood decision system. Here $POS_B(D) = \bigcup_{d_j \in U/D} \underline{B}(d_j)_\delta$ is the positive region of D with respect to B.
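
The positive region reduct condition of [38] can be tested directly on a finite sample. The sketch below assumes NumPy and the counting measure; its function names are hypothetical. Here POS_B(D) collects the samples whose neighborhood class lies inside their own decision class. Because every sample belongs to its own neighborhood, this equals the union of the neighborhood lower approximations of the decision classes.

```python
import numpy as np


def positive_region(data, labels, attrs, delta):
    """POS_B(D): rows whose neighborhood delta_B(x) lies inside the decision class of x."""
    sub = data[:, np.asarray(attrs, dtype=int)]
    dist = np.sqrt(((sub[:, None, :] - sub[None, :, :]) ** 2).sum(axis=-1))
    inside = np.all((dist > delta) | (labels[:, None] == labels[None, :]), axis=1)
    return {int(i) for i in np.flatnonzero(inside)}


def is_positive_region_reduct(data, labels, B, delta):
    """|POS_B(D)| = |POS_C(D)| and |POS_{B-{a}}(D)| < |POS_B(D)| for every a in B."""
    pos_C = positive_region(data, labels, range(data.shape[1]), delta)
    pos_B = positive_region(data, labels, B, delta)
    if len(pos_B) != len(pos_C):
        return False
    return all(
        len(positive_region(data, labels, [b for b in B if b != a], delta)) < len(pos_B)
        for a in B
    )


data = np.array([[0.1, 0.3, 0.5], [0.2, 0.9, 0.5], [0.8, 0.3, 0.5], [0.9, 0.9, 0.5]])
labels = np.array([0, 0, 1, 1])
print(is_positive_region_reduct(data, labels, [0], 0.15))     # True
print(is_positive_region_reduct(data, labels, [0, 1], 0.15))  # False: attribute 1 is unnecessary
```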

Proposition 7.

Given a neighborhood decision system NDS = <U, C ∪ D, δ> with non-empty infinite set U and B ⊆ C, if B is a neighborhood entropy reduct of the neighborhood decision system, then B is a positive region reduct of the neighborhood decision system.

Proof.

Let U = {x1, x2, …, xn, …} and U/D = {d1, d2, …, dt, …}. Suppose that B ⊆ C. By Definition 13, if NRH(D, B) = NRH(D, C) and NRH(D, B) > NRH(D, B − {a}) for any a ∈ B, then B is a neighborhood entropy reduct of C relative to D. When NRH(D, B) = NRH(D, C), it follows from Proposition 6 that , and hold, where x ∈ U and 1 ≤ j. By Equation (13), one has that . Hence $POS_B(D) = POS_C(D)$, i.e., $|POS_B(D)| = |POS_C(D)|$. For any a ∈ B, B − {a} ⊂ B, and by Theorem 1 in [38], one has that , so that $POS_{B-\{a\}}(D) \subseteq POS_B(D)$. Because NRH(D, B) > NRH(D, B − {a}) for any a ∈ B, thus holds. It follows that $POS_{B-\{a\}}(D) \subset POS_B(D)$. Thus, $|POS_{B-\{a\}}(D)| < |POS_B(D)|$ for any a ∈ B. Therefore, B is a positive region reduct of the neighborhood decision system. □

Notably, the converse of this proposition does not hold in general. Proposition 7 thus shows that the definition of the neighborhood entropy reduct includes that of the positive region reduct in the algebra view.

Given a neighborhood decision system NDS = <U, C ∪ D, δ> with a non-empty infinite set U, B ⊆ C and D = {d}, a reduct named the entropy gain reduct is proposed in [20] as follows: if H(Bd) = H(Cd) and, for any a ∈ B, H({B − {a}}d) < H(Bd), where H(Bd) denotes the joint entropy of B and d, then B is an entropy gain reduct of the neighborhood decision system.

Proposition 8.

Given a neighborhood decision system NDS = <U, C ∪ D, δ> with a non-empty infinite set U and B ⊆ C, B is a neighborhood entropy reduct of the neighborhood decision system if and only if B is an entropy gain reduct of the neighborhood decision system.

Proof.

Let U = {x1, x2, …, xn, …} and U/D = {d1, d2, …, dt, …}. Suppose that B ⊆ C. By Definition 13, if NRH(D, B) = NRH(D, C) and NRH(D, B) > NRH(D, B − {a}) for any a ∈ B, then B is a neighborhood entropy reduct of C relative to D. Similar to the proof of Proposition 7, when NRH(D, B) = NRH(D, C), Proposition 6 gives , where x ∈ U and 1 ≤ j. Hence H(Bd) = H(Cd). Since B − {a} ⊂ B, Proposition 2 in [20] gives H({B − {a}}d) ≤ H(Bd). Because NRH(D, B) > NRH(D, B − {a}) for any a ∈ B, H({B − {a}}d) < H(Bd) holds. Hence, B is an entropy gain reduct of the neighborhood decision system.

Conversely, suppose that B ⊆ C. If H(Bd) = H(Cd) and H({B − {a}}d) < H(Bd) for any a ∈ B, then B is an entropy gain reduct of C relative to D. Similar to the proof of Proposition 6, when H(Bd) = H(Cd), Equations (16), (17) and (19) give , and then it is obvious that , where x ∈ U and 1 ≤ j. Thus, it follows from Equation (24) that NRH(D, B) = NRH(D, C). Because B − {a} ⊂ B, Proposition 6 gives NRH(D, B − {a}) ≤ NRH(D, B). Since H({B − {a}}d) < H(Bd) for any a ∈ B, one has NRH(D, B − {a}) < NRH(D, B). Therefore, B is a neighborhood entropy reduct of the neighborhood decision system. □

Proposition 8 shows that, in a neighborhood decision system, the neighborhood entropy reduct is equivalent to the entropy gain reduct in the information view. By Propositions 7 and 8, the definition of the neighborhood entropy reduct includes the two representative reducts proposed in the algebra view and the information view. Therefore, the neighborhood entropy reduct provides a quantitative measure for evaluating the knowledge uncertainty of different attribute sets in neighborhood decision systems.

3.4. Description of the Attribute Reduction Algorithm

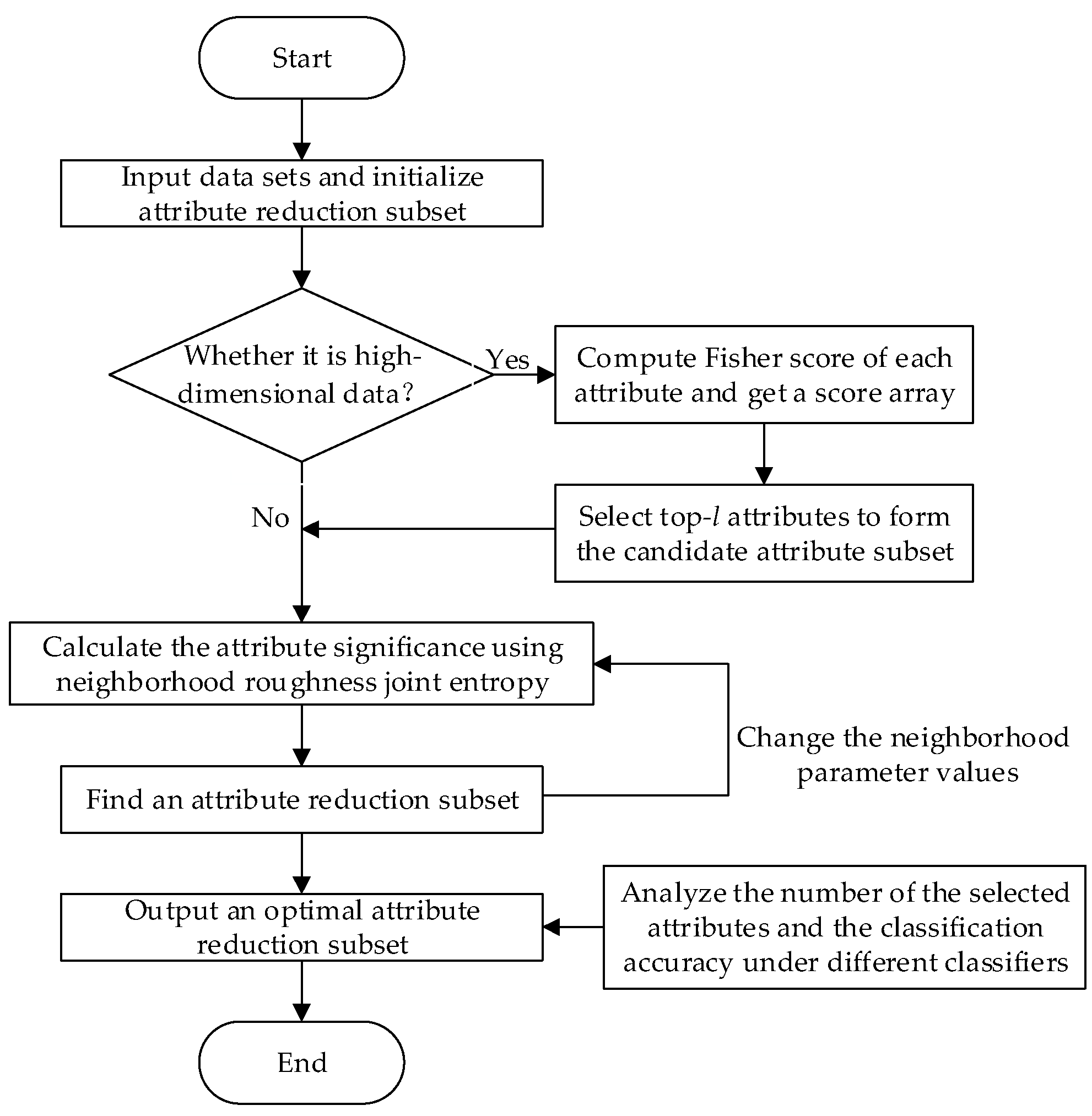

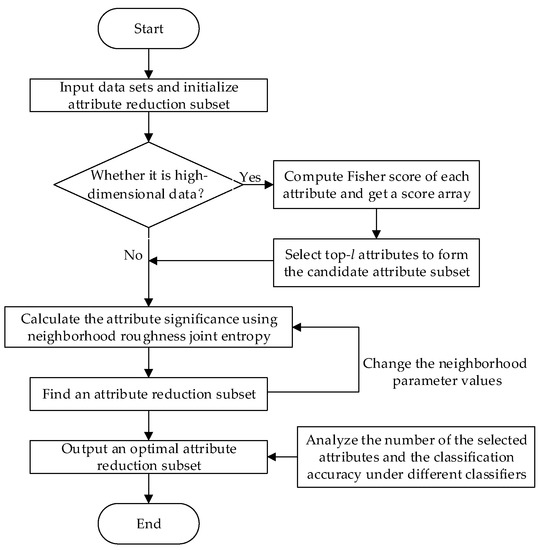

To make the attribute reduction method easier to follow, the overall process of the attribute reduction algorithm for data classification is illustrated in Figure 1.

Figure 1.

Flowchart of the attribute reduction algorithm for data classification.

To support efficient attribute reduction, an attribute reduction algorithm based on neighborhood roughness joint entropy (ARNRJE) is constructed and described as Algorithm 1.

| Algorithm 1. ARNRJE |
|---|
| Input: A neighborhood decision system NDS = <U, C ∪ D, δ> and neighborhood parameter δ. |
| Output: An optimal reduction set B. |
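The following Python sketch shows a reduction procedure in the spirit of Algorithm 1, mirroring the steps walked through in the illustrative example of Section 3.6: keep attributes with positive inner significance, add attributes until the measure of B reaches that of C, then check that no attribute can be dropped. It is a hedged sketch under stated assumptions, not the authors' exact procedure: the name `greedy_reduct` is hypothetical, the uncertainty measure (NRH(D, ·) in the paper) is passed in as a monotone callable, and the inner significance is assumed to be measure(C) − measure(C − {a}).

```python
from typing import Callable, FrozenSet, Iterable, Set

# Hypothetical stand-in for NRH(D, .): maps an attribute subset to a number
Measure = Callable[[FrozenSet[str]], float]


def greedy_reduct(C: Iterable[str], measure: Measure, tol: float = 1e-9) -> Set[str]:
    full = frozenset(C)
    target = measure(full)
    # Inner significance of a, assumed here to be measure(C) - measure(C - {a});
    # attributes with positive significance form the starting set.
    B = {a for a in full if target - measure(full - {a}) > tol}
    # Forward step: while B has not reached measure(C), add the attribute that
    # raises the measure most (ties broken by attribute name).
    while target - measure(frozenset(B)) > tol:
        best = max(sorted(full - B), key=lambda a: measure(frozenset(B | {a})))
        B.add(best)
    # Backward check: drop any attribute whose removal does not lower the measure.
    for a in sorted(B):
        if measure(frozenset(B - {a})) >= measure(frozenset(B)) - tol:
            B.discard(a)
    return B
```

For a toy coverage measure where `a` covers {1, 2}, `b` covers {2} and `c` covers {3}, the sketch returns {a, c}, matching the shape of the result in Section 3.6 (b has zero inner significance and is never added).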

3.5. Complexity Analysis of ARNRJE

In the ARNRJE algorithm, suppose that there are m attributes and n samples. Neighborhood classes are computed frequently in neighborhood decision systems, and the way they are derived strongly affects the time complexity of attribute selection. The main computation of ARNRJE therefore consists of two parts: obtaining neighborhood classes and computing the neighborhood roughness joint entropy. The bucket sorting algorithm [38] is introduced to reduce the cost of computing neighborhood classes, so that the time complexity of this step is $O(mn)$. Meanwhile, the time complexity of calculating the neighborhood roughness joint entropy from given neighborhood classes is $O(n)$. Since $O(n) < O(mn)$, the overall complexity of computing the neighborhood roughness joint entropy, including its neighborhood classes, is $O(mn)$. Because ARNRJE contains two loops, at steps 3 and 8, its time complexity in the worst case is $O(m^3 n)$. In practice, only a small number of attributes is usually selected in an attribute reduction task. Suppose that the number of selected attributes is $m_R$; when computing neighborhood classes, only the candidate attributes need to be considered rather than the whole attribute set, so the complexity of computing neighborhood classes decreases to $O(m_R n)$. In ARNRJE, the outer loop runs m times and the inner loop runs $m - m_R$ times, so the time complexity of ARNRJE is $O(m_R n (m - m_R) m)$. Clearly, $m_R \ll m$ in most cases. Therefore, the time complexity of the ARNRJE algorithm is approximately $O(mn)$. Hence, ARNRJE appears to be more efficient than some existing attribute reduction algorithms for neighborhood decision systems [18,36,45,52,53]. Furthermore, its space complexity is $O(mn)$.
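The bucket idea can be sketched as follows, under the assumption (our reading, not necessarily the exact procedure of [38]) that samples are bucketed by their distance to a fixed reference point in steps of δ. By the triangle inequality, two samples within distance δ of each other land in the same or adjacent buckets, so only three buckets are scanned per sample. This cuts the number of distance computations on well-spread data but does not change the worst case. The function names are hypothetical; a brute-force version is included for checking.

```python
import math
from collections import defaultdict
from typing import Dict, List, Sequence, Set


def euclid(u: Sequence[float], v: Sequence[float]) -> float:
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def neighborhoods_bucketed(X: Sequence[Sequence[float]], delta: float) -> List[Set[int]]:
    # Fixed reference point: the per-attribute minimum (any fixed point works)
    ref = [min(col) for col in zip(*X)]
    key = [int(euclid(x, ref) // delta) for x in X]
    buckets: Dict[int, List[int]] = defaultdict(list)
    for i, k in enumerate(key):
        buckets[k].append(i)
    result = []
    for i, x in enumerate(X):
        # |d(x, ref) - d(z, ref)| <= d(x, z) <= delta, so every neighbor sits
        # in bucket key[i] - 1, key[i] or key[i] + 1
        cands = [j for k in (key[i] - 1, key[i], key[i] + 1)
                 for j in buckets.get(k, [])]
        result.append({j for j in cands if euclid(x, X[j]) <= delta})
    return result


def neighborhoods_brute(X: Sequence[Sequence[float]], delta: float) -> List[Set[int]]:
    """Reference O(n^2) version used to check the bucketed one."""
    return [{j for j, z in enumerate(X) if euclid(x, z) <= delta} for x in X]
```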

3.6. An Illustrative Example

In the following, the operation of the ARNRJE algorithm is illustrated with an example from [20]. A neighborhood decision system NDS = <U, C ∪ D, δ> is employed, where U = {x1, x2, x3, x4}, C = {a, b, c}, and D = {d} with the values {Y, N}. The neighborhood decision system is shown in Table 1.

Table 1.

A neighborhood decision system.

For Table 1, Algorithm 1 is applied with δ = 0.3. The attribute reduction steps are as follows:

(1) Initialize B = Ø.

(2) Let B = C = {a, b, c}. The Euclidean distance function is used to calculate the distance between any two objects:

∆B(x1, x2) = 0.54, ∆B(x1, x3) = 0.35, ∆B(x1, x4) = 0.68, ∆B(x2, x3) = 0.16, ∆B(x2, x4) = 0.41, and ∆B(x3, x4) = 0.302.

Then, we can get the following neighborhood classes:

δB(x1) = {x1}, δB(x2) = {x2, x3}, δB(x3) = {x2, x3}, and δB(x4) = {x4}.

Under the equivalence relation induced by d, U/d = {d1, d2} = {{x1, x2}, {x3, x4}}. With the Euclidean distance function ∆B, the upper and lower approximations of d1 and d2 with respect to the attribute subset B are, respectively,

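$\overline{N_B}(d_1) = \{x_1, x_2, x_3\}$, $\underline{N_B}(d_1) = \{x_1\}$; $\overline{N_B}(d_2) = \{x_2, x_3, x_4\}$, and $\underline{N_B}(d_2) = \{x_4\}$.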

Thus, one has that , , , and .

It follows that NRH(D, C) = 0.83.

(3) The inner significance of each attribute in C is

$sig_{inner}(a, C, D) = 0.5081$, $sig_{inner}(b, C, D) = 0$, and $sig_{inner}(c, C, D) = 0.2075$.

Since $sig_{inner}(a, C, D) > 0$ and $sig_{inner}(c, C, D) > 0$, whereas $sig_{inner}(b, C, D) = 0$, one has B = {a, c}.

(4) Since B = {a, c} ≠ Ø, NRH(D, B) is computed, giving NRH(D, B) = 0.83.

(5) Because NRH(D, B) = NRH(D, C), the candidate reduction set is B = {a, c}.

(6) One computes NRH(D, B – {a}) = NRH(D, {c}) = 0.3219 and NRH(D, B – {c}) = NRH(D, {a}) = 0.2925. Since NRH(D, B – {a}) < NRH(D, B) and NRH(D, B – {c}) < NRH(D, B), neither a nor c can be removed, and B = {a, c} is retained.

(7) Return the reduction attribute subset B = {a, c}.
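The neighborhood classes and approximations in step (2) can be checked mechanically. The Python sketch below uses only the pairwise distances listed in step (2) with δ = 0.3 (Table 1 itself is not needed); object indices 1 to 4 stand for x1 to x4, and all variable names are illustrative. It does not compute NRH, whose definition is given earlier in the paper.

```python
# Distances Delta_B(x_i, x_j) for B = C = {a, b, c}, copied from step (2)
dist = {
    (1, 2): 0.54, (1, 3): 0.35, (1, 4): 0.68,
    (2, 3): 0.16, (2, 4): 0.41, (3, 4): 0.302,
}
U = [1, 2, 3, 4]
delta = 0.3


def d(i: int, j: int) -> float:
    """Symmetric lookup; the distance of an object to itself is 0."""
    return 0.0 if i == j else dist[(min(i, j), max(i, j))]


# delta-neighborhood class of each object
nbr = {i: {j for j in U if d(i, j) <= delta} for i in U}

# Decision classes U/d
decision = {"d1": {1, 2}, "d2": {3, 4}}

# Lower approximation: neighborhood contained in the class;
# upper approximation: neighborhood intersects the class.
lower = {k: {i for i in U if nbr[i] <= cls} for k, cls in decision.items()}
upper = {k: {i for i in U if nbr[i] & cls} for k, cls in decision.items()}

# Positive region = union of the lower approximations
pos = set().union(*lower.values())
```

Running it reproduces the neighborhood classes and approximations listed above and gives the positive region {x1, x4}. Note that ∆B(x3, x4) = 0.302 only just exceeds δ, which is why x4 is not a neighbor of x3.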

4. Experimental Results and Analysis

4.1. Experiment Preparation

The objective of an attribute reduction method usually has two aspects: selecting a small number of attributes and maintaining high classification accuracy. To verify the classification performance of the attribute reduction method described in Subsection 3.4, all compared algorithms are evaluated on nine public data sets (five UCI data sets and four gene expression data sets). The five low-dimensional UCI data sets are Wine, Sonar, Segmentation, Wdbc, and Wpbc [54]. The four high-dimensional gene expression data sets are Prostate, DLBCL, Leukemia and Tumors [55]. All the data sets are summarized in Table 2.

Table 2.

Description of the nine public data sets.

The experiments were performed on a personal computer running Windows 10 with an Intel(R) Core(TM) i5-6500 CPU at 3.20 GHz and 4.0 GB of memory. All simulation experiments were implemented in MATLAB 2016a, and two classifiers in WEKA (KNN and LibSVM) were used to evaluate classification accuracy, with k = 3 for KNN and a linear kernel for LibSVM. All of the following classification comparisons on the selected attributes use 10-fold cross-validation on every test data set: each data set is first randomly divided into ten subsets of equal size; one subset is used as the test set and the remaining nine as the training set, so that each of the ten subsets is used exactly once as the test set; the cross-validation is then repeated ten times, and the average of the ten test results is taken as the classification accuracy [45].
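The evaluation protocol above (ten folds, repeated ten times) can be sketched in Python as an index generator. This is only an illustration: the experiments themselves used WEKA, and the function name, the seed, and the choice of unstratified folds are assumptions. When the number of samples is not divisible by ten, fold sizes differ by at most one.

```python
import random
from typing import Iterator, List, Tuple


def repeated_kfold(n: int, k: int = 10, repeats: int = 10, seed: int = 0
                   ) -> Iterator[Tuple[int, List[int], List[int]]]:
    """Yield (repeat, train_indices, test_indices) for repeated k-fold CV."""
    rng = random.Random(seed)
    for r in range(repeats):
        idx = list(range(n))
        rng.shuffle(idx)  # a new random partition for every repetition
        # k folds whose sizes differ by at most one when k does not divide n
        folds = [idx[f::k] for f in range(k)]
        for f in range(k):
            # every fold serves exactly once as the test set
            train = [i for g in range(k) if g != f for i in folds[g]]
            yield r, train, folds[f]
```

A classifier is trained and scored on each of the 100 (train, test) pairs, and the accuracies are averaged.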

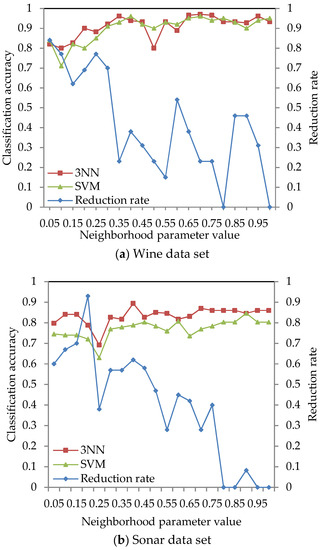

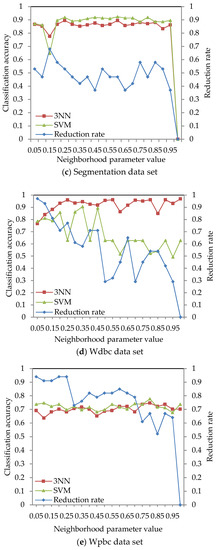

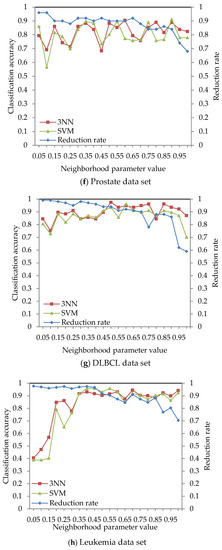

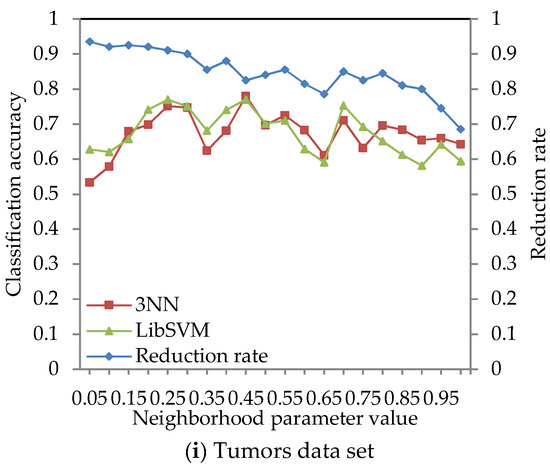

4.2. Effect of Different Neighborhood Parameter Values

The following experiments examine the reduction rate and the classification accuracy under different neighborhood parameter values in order to obtain a suitable neighborhood parameter value and attribute subset for each data set. Chen et al. [20] adopted a reduction rate to evaluate the attribute redundancy degree of attribute reduction algorithms, which is defined as:

$$\text{Reduction rate} = \frac{|C| - |R|}{|C|}$$

where |C| is the number of conditional attributes in the data set, and |R| is the number of attributes selected under a given neighborhood parameter value.
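Assuming the reduction rate is $(|C| - |R|)/|C|$, as in [20], it can be computed with a one-line helper; the function name and the validation are illustrative additions.

```python
def reduction_rate(num_conditional: int, num_selected: int) -> float:
    """(|C| - |R|) / |C|: the fraction of conditional attributes removed."""
    if num_conditional <= 0 or not 0 <= num_selected <= num_conditional:
        raise ValueError("need |C| > 0 and 0 <= |R| <= |C|")
    return (num_conditional - num_selected) / num_conditional
```

For instance, keeping 5 of 60 hypothetical attributes gives a reduction rate of about 0.917; a larger rate means a more compact subset.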

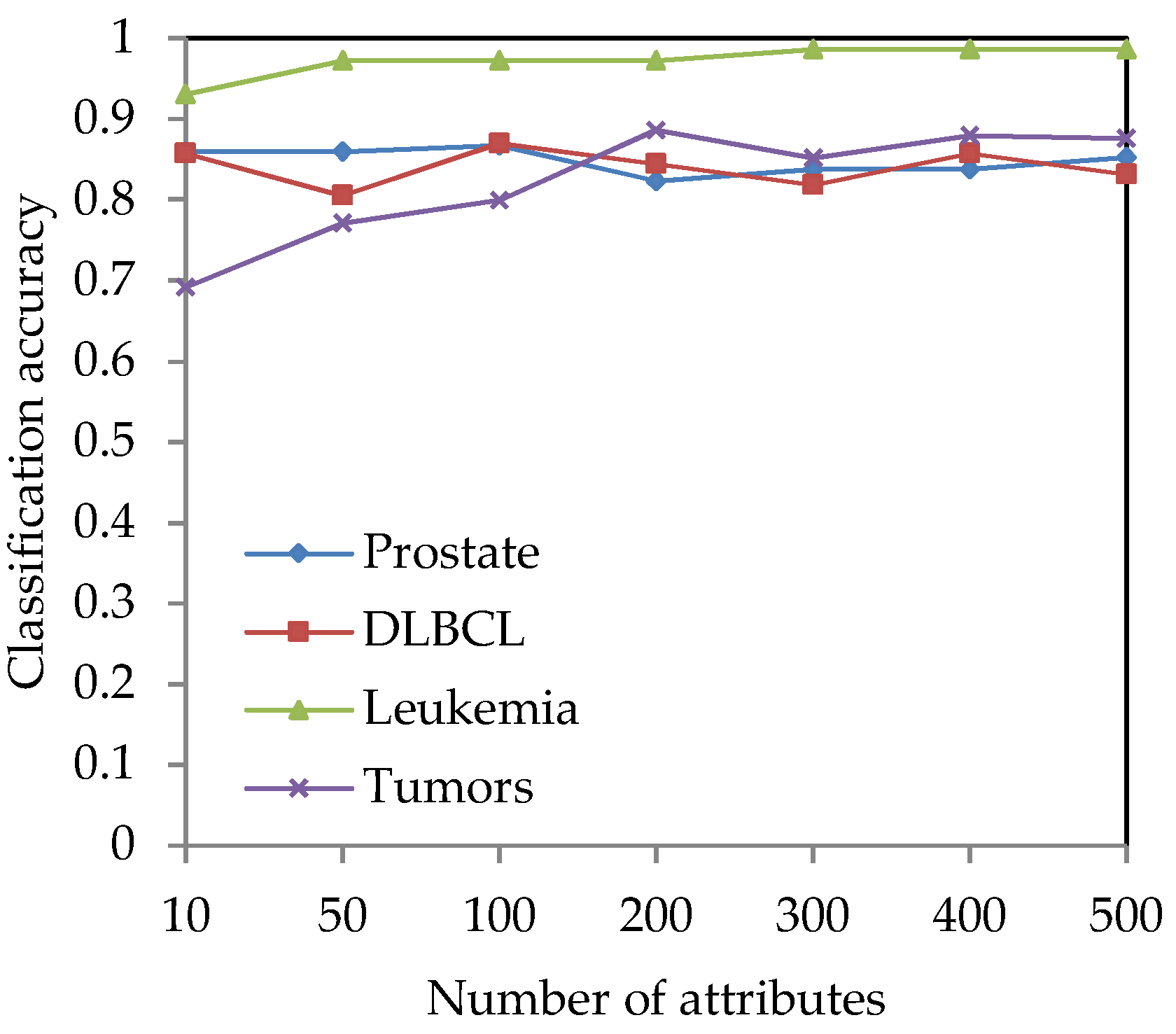

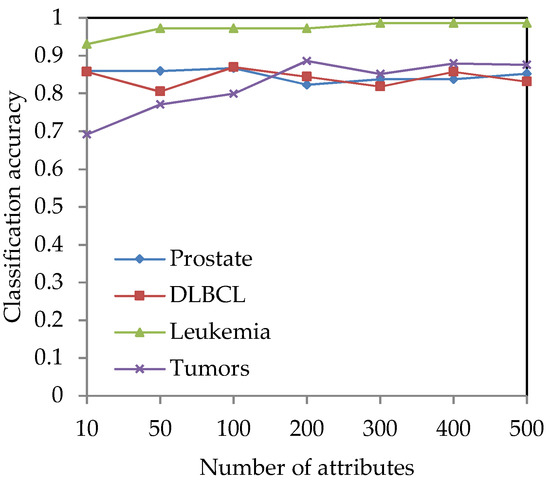

For the four high-dimensional gene expression data sets (Prostate, DLBCL, Leukemia and Tumors), the Fisher score method [8] is used for preliminary dimension reduction. For each gene expression data set, the Fisher score of every gene is calculated, the genes are ranked by score, and the top l genes are selected to form a candidate gene subset. The classification accuracy under different dimensions is then obtained in WEKA so that an appropriate dimension can be chosen for the attribute reduction algorithm. Figure 2 shows how the classification accuracy changes with the number of genes on the four gene expression data sets.
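The ranking step can be sketched in Python using one common form of the Fisher score, $F_j = \sum_c n_c(\mu_{c,j} - \mu_j)^2 / \sum_c n_c \sigma_{c,j}^2$, where $n_c$ is the class size and $\mu_{c,j}$, $\sigma_{c,j}^2$ are the class mean and population variance of gene j. The exact variant used in [8] may differ, and the function names are hypothetical.

```python
from collections import defaultdict
from typing import Hashable, List, Sequence


def fisher_scores(X: Sequence[Sequence[float]], y: Sequence[Hashable]) -> List[float]:
    """Between-class scatter over within-class scatter for each attribute."""
    n, m = len(X), len(X[0])
    groups = defaultdict(list)
    for row, label in zip(X, y):
        groups[label].append(row)
    scores = []
    for j in range(m):
        mu = sum(row[j] for row in X) / n
        between = within = 0.0
        for rows in groups.values():
            n_c = len(rows)
            mu_c = sum(r[j] for r in rows) / n_c
            between += n_c * (mu_c - mu) ** 2
            within += sum((r[j] - mu_c) ** 2 for r in rows)  # n_c * population variance
        if within > 0:
            scores.append(between / within)
        else:
            # Constant inside every class: perfectly separating if class means differ
            scores.append(float("inf") if between > 0 else 0.0)
    return scores


def top_l_genes(X, y, l: int) -> List[int]:
    """Indices of the l highest-scoring genes (stable order for ties)."""
    scores = fisher_scores(X, y)
    return sorted(range(len(scores)), key=lambda j: scores[j], reverse=True)[:l]
```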

Figure 2.

The classification accuracy versus the number of genes on the four gene expression data sets.

From Figure 2, it can be seen that the classification accuracy varies as the number of genes increases. Since both the number of selected genes and their classification accuracy matter, these serve as the two indices for evaluating the classification performance of attribute reduction methods, and appropriate values of l are selected from Figure 2 accordingly. Hence, l is set to 50 and 100 for the Prostate and DLBCL data sets, respectively, and to 300 and 200 for the Leukemia and Tumors data sets, respectively.

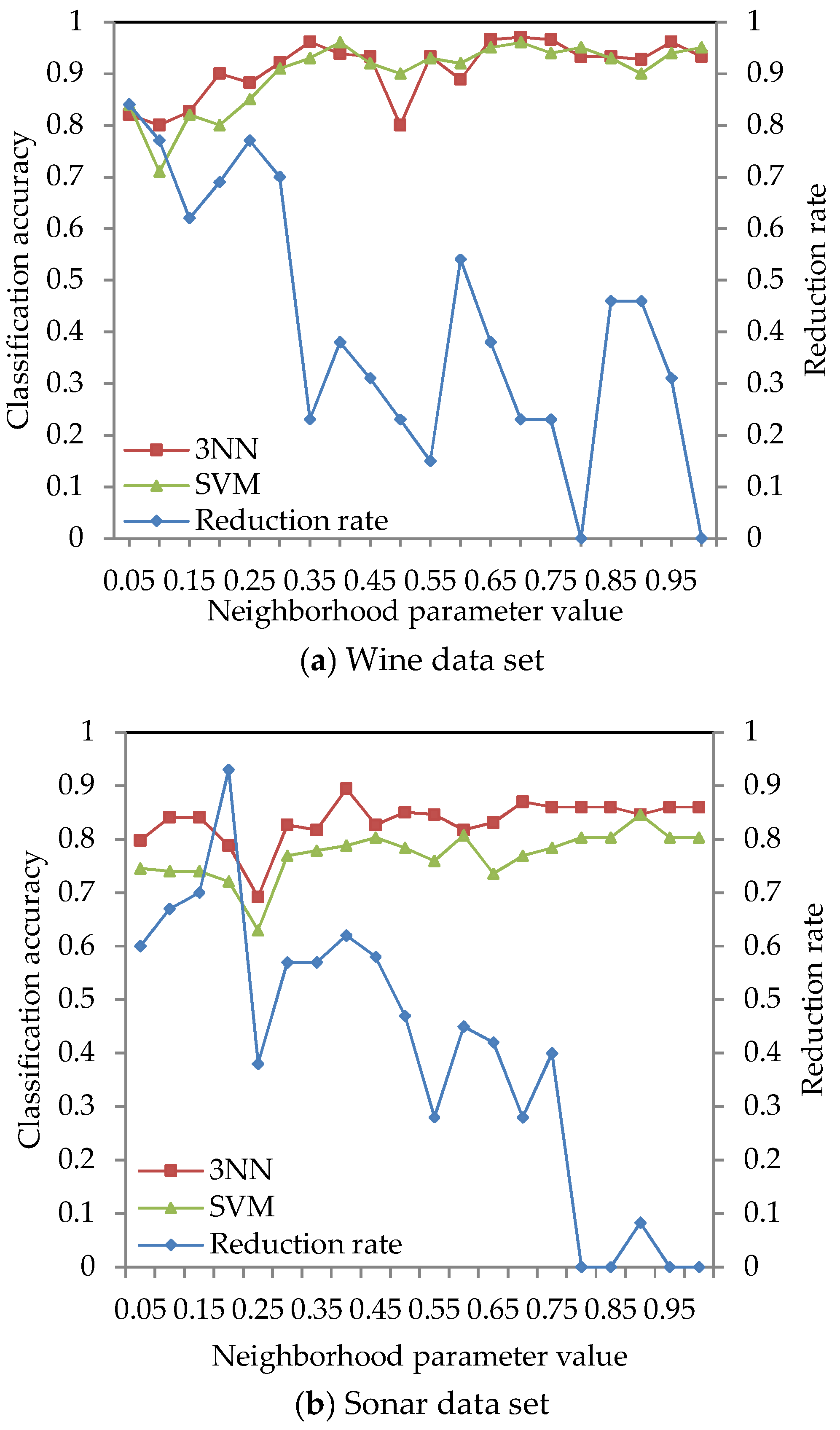

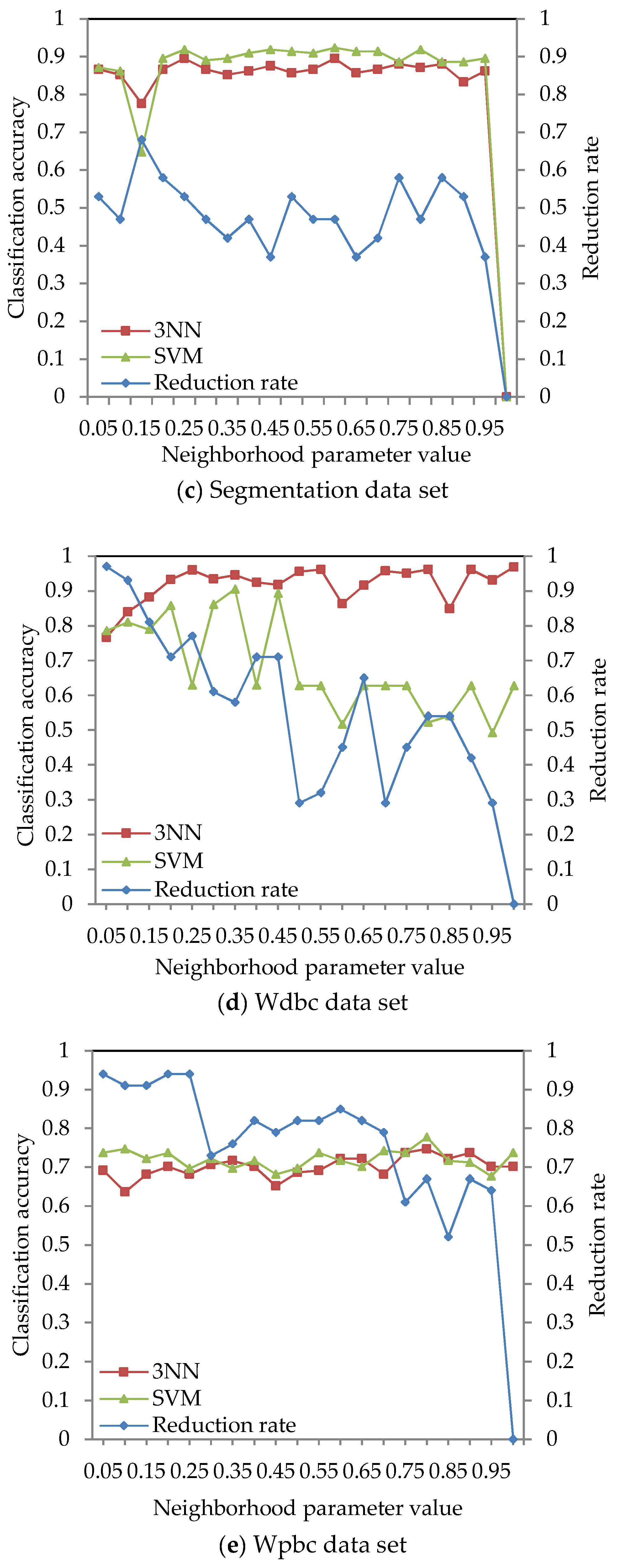

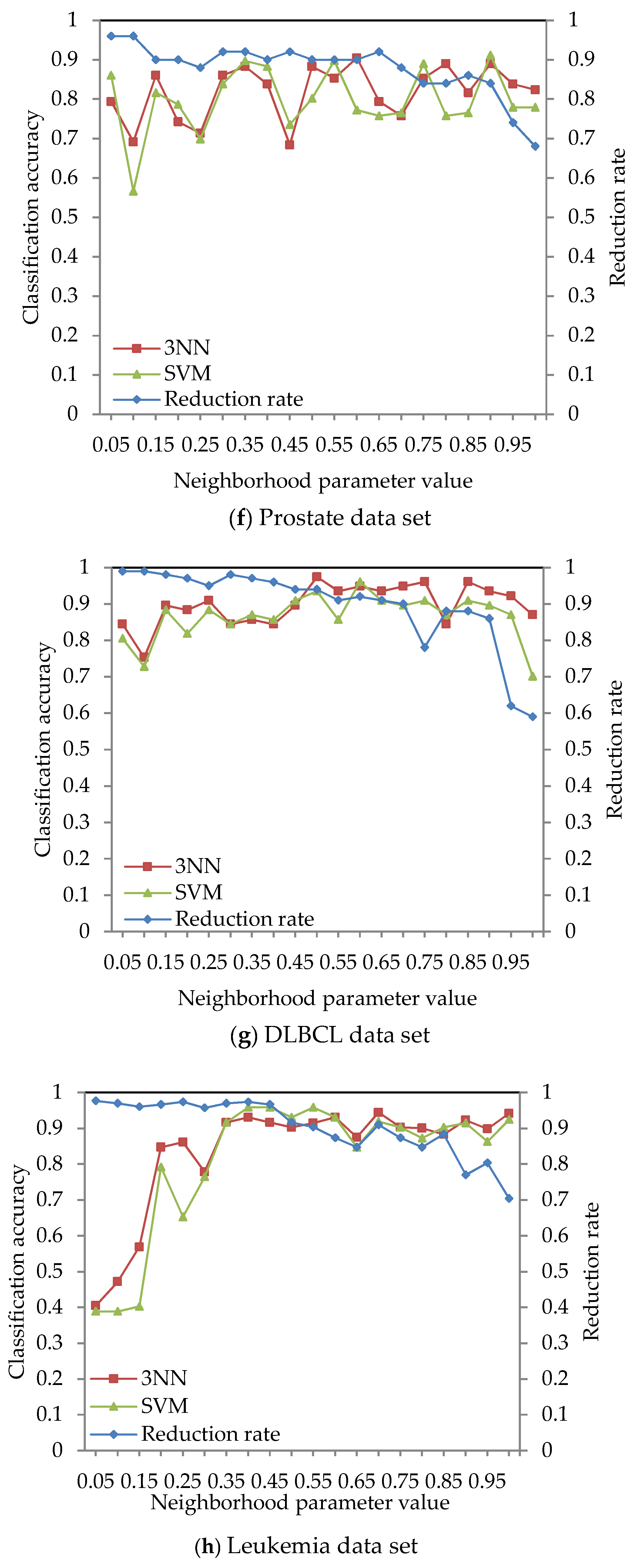

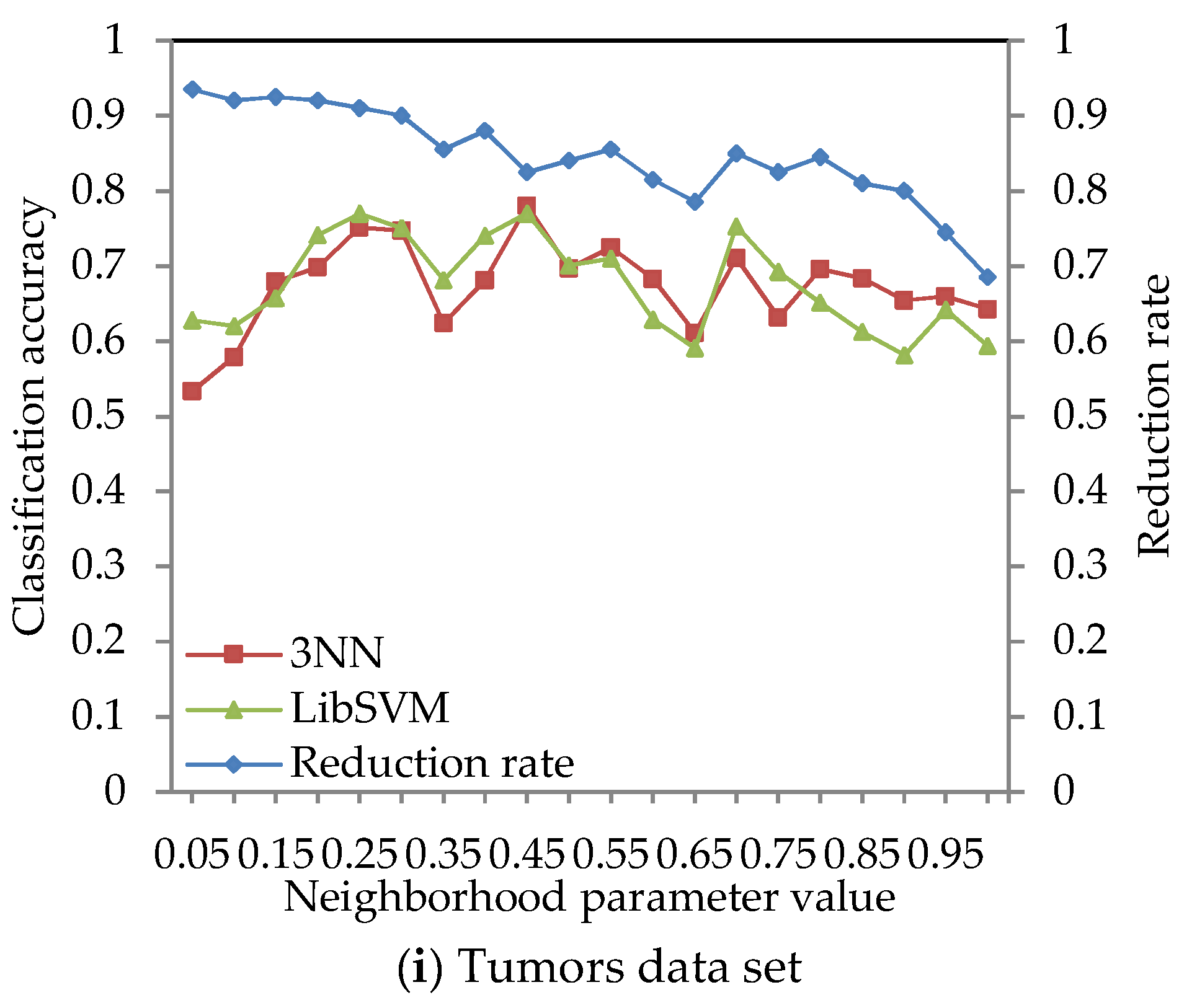

The classification accuracy on the data sets in Table 2 was obtained by running the ARNRJE algorithm with different neighborhood parameter values. After attribute reduction with each parameter value, WEKA was used to obtain the classification accuracy under the 3NN and LibSVM classifiers. The results are shown in Figure 3, where the horizontal axis denotes the neighborhood parameter δ ∈ [0.05, 1] at intervals of 0.05, and the left and right vertical axes represent the classification accuracy and the reduction rate, respectively.

Figure 3.

Reduction rate and classification accuracy for nine data sets with neighborhood parameter values.

Figure 3 shows that, in most cases, the classification accuracy of the attributes selected by ARNRJE increases and the reduction rate decreases as the neighborhood parameter value rises from 0.05 to 1. The neighborhood parameter value clearly has a great influence on the classification performance of ARNRJE. This illustrates that smaller neighborhood parameter values produce finer granules with smaller roughness, and that the reduction rate increases as the roughness of the granules decreases. Thus, an appropriate neighborhood parameter value can be selected for each data set. Figure 3a shows the classification accuracy of the Wine data set under different neighborhood parameter values, and the neighborhood parameter can be set to 0.65. Figure 3b shows the results for the Sonar data set: the reduction rate decreases as the neighborhood parameter value increases, and the classification accuracy of the selected attributes reaches its relative maximum when the neighborhood parameter is 0.4, so the neighborhood parameter of the Sonar data set is set to 0.4. From Figure 3c, the classification accuracy on the Segmentation data set reaches its relative best when the parameter equals 0.75. Figure 3d displays the classification accuracy of the Wdbc data set under different neighborhood parameters, and its neighborhood parameter can be set to 0.35. Similar to Figure 3b, Figure 3e shows that the classification accuracy of the selected attributes on the Wpbc data set reaches a relative maximum when the parameter is 0.55. For the Prostate and DLBCL data sets in Figure 3f,g, the neighborhood parameter can be set to 0.9 and 0.5, respectively.
Figure 3h,i shows that the reduction rate decreases as the parameter increases in most situations, and the neighborhood parameters of the Leukemia and Tumors data sets can be set to 0.4 and 0.25, respectively.
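The parameter values above were read off the curves in Figure 3. As a hedged illustration only, the Python sketch below builds the δ grid from 0.05 to 1 in steps of 0.05 and applies one simple, hypothetical selection rule: highest accuracy first, then higher reduction rate, then smaller δ. The function names and the rule are assumptions, not the procedure used in the experiments.

```python
from typing import Dict, List, Tuple


def delta_grid(start: float = 0.05, stop: float = 1.0, step: float = 0.05) -> List[float]:
    count = int(round((stop - start) / step)) + 1
    # round() removes floating-point drift such as 0.15000000000000002
    return [round(start + i * step, 10) for i in range(count)]


def pick_delta(results: Dict[float, Tuple[float, float]]) -> float:
    """results maps delta -> (accuracy, reduction_rate)."""
    return max(results, key=lambda d: (results[d][0], results[d][1], -d))
```

For example, if δ = 0.4 and δ = 0.5 tie on accuracy, the rule prefers whichever removes more attributes.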

4.3. Comparison of Reduction Results with Three Related Reduction Algorithms

This part of our experiments evaluates the attribute subsets selected by the proposed ARNRJE algorithm. ARNRJE is compared with two reduction algorithms: (1) the fuzzy information entropy-based feature selection algorithm (FINEN) [52,58], and (2) the neighborhood entropy-based feature selection algorithm (NEIEN) [18]. Using the neighborhood parameters selected in Subsection 4.2, the reduction results and the numbers of selected attributes on the nine data sets in Table 2 are shown in Table 3.

Table 3.

The reduction results and the number of selected attributes with the three reduction algorithms.

Table 3 lists the selected attribute subsets. The best attribute subsets selected by FINEN, NEIEN, and ARNRJE coincide in some cases, and ARNRJE selects fewer attributes than FINEN and NEIEN in most cases. For the Wine, Sonar, and Segmentation data sets, the three algorithms select different numbers of attributes, and ARNRJE performs best. These slight differences may arise because the selected attribute subsets are obtained by reducing the whole data set. For the Wdbc, Wpbc, Prostate, DLBCL, Leukemia and Tumors data sets, the selected attribute subsets generally differ, but the numbers of attributes selected by the three algorithms are very close. Therefore, the proposed ARNRJE algorithm is effective for dimension reduction on both low-dimensional and high-dimensional data sets.

4.4. Comparison of Classification Results with Six Reduction Methods on Two Different Classifiers

To further demonstrate the classification performance of the proposed method, six methods are compared by the classification accuracy of their selected attributes. ARNRJE is compared with five related methods: (1) the original data processing method (ODP), (2) the neighborhood rough set algorithm (NRS) [22], (3) the fuzzy rough dependency constructed by intersection operations of fuzzy similarity relations algorithm (FRSINT) [53], (4) the FINEN algorithm [52,58], and (5) the NEIEN algorithm [18]. The two classifiers (3NN and LibSVM) in WEKA are used to test classification accuracy. Table 4 lists the average sizes of the attribute subsets selected by the six methods under 10-fold cross-validation, and Tables 5 and 6 show the corresponding classification accuracy under the 3NN and LibSVM classifiers with 10-fold cross-validation, respectively.

Table 4.

Average sizes of attribute subsets selected by the six methods using 10-fold cross validation.

Table 5.

Classification accuracy of the six methods under the 3NN classifier.

Table 6.

Classification accuracy of the six methods under the LibSVM classifier.

From Table 4, in terms of the average sizes of the attribute subsets selected under 10-fold cross-validation, FRSINT, NEIEN, and ARNRJE are clearly superior to NRS and FINEN, although ARNRJE is slightly inferior to FRSINT and NEIEN. Tables 5 and 6 clearly show the differences among the six methods. The classification accuracy of ARNRJE exceeds that of the other five methods on most of the nine data sets, except for the Segmentation, Wdbc, and Tumors data sets under the 3NN classifier and the Sonar, Segmentation, Wdbc, and Tumors data sets under the LibSVM classifier. Furthermore, ARNRJE achieves the highest average classification accuracy under the LibSVM classifier, with a considerable improvement, whereas under the 3NN classifier its average accuracy is 0.2% lower than that of FINEN. From Tables 4 and 5 under the 3NN classifier, although ARNRJE selects slightly larger attribute subsets on average than FRSINT and NEIEN, its classification accuracy is about 2%–6% higher than that of FRSINT and about 1%–6% higher than that of NEIEN, except for the Segmentation data set. In addition, the classification performance of ARNRJE is better than that of NRS and FINEN overall. Although the numbers of attributes selected by ARNRJE, NRS and FINEN differ somewhat, the accuracy of ARNRJE is higher than that of NRS and FINEN, except for the Wdbc, Segmentation, and Tumors data sets. This is because some informative attributes of the Wdbc and Segmentation data sets are lost during reduction by ARNRJE.
Similarly, from Tables 4 and 6 under the LibSVM classifier, the classification accuracy of ARNRJE is 1%–6% higher than that of FRSINT, 2%–6% higher than that of NEIEN, and 0.5%–5% higher than that of FINEN, except for the Sonar, Segmentation, Wdbc, and Tumors data sets. For the Wdbc data set, the classification accuracy of ARNRJE is 4% lower than that of NRS, but ARNRJE selects fewer attributes than NRS and achieves better classification accuracy than NRS on the other six data sets. In terms of average classification accuracy, ARNRJE is highly stable on both the 3NN and LibSVM classifiers, whereas the accuracy of ODP, NRS, and FINEN is slightly less stable. The results in Table 4 also indicate that, for the Sonar, Segmentation, Wdbc, and Tumors data sets, ARNRJE removes some important attributes during reduction, which lowers the classification accuracy of the reduced sets with fewer attributes. These results show that no algorithm is consistently better than the others across different learning tasks and classifiers. Overall, the proposed approach clearly reduces redundant data and outperforms the other related attribute reduction methods, and the experimental results show that it is an efficient reduction method for redundant data sets that improves classification accuracy on most of the data sets.

In the above experiments, the five methods are roughly ordered by time complexity as O(FINEN) = O(FRSINT) > O(NRS) > O(NEIEN) > O(ARNRJE), where O(A) represents the time complexity of algorithm A. The time complexity of the NEIEN algorithm is $O(n^2)$ [18]. For the low-dimensional UCI data sets, the NEIEN algorithm has the lower time complexity. On the UCI data sets, the number of samples is usually much greater than the number of attributes, whereas for the gene expression data sets the number of genes is much larger than the number of samples. Since the time complexity of ARNRJE is $O(mn)$, it is lower than that of NEIEN for large-scale and high-dimensional data sets. Although NEIEN has a lower time complexity than ARNRJE on the UCI data sets, ARNRJE achieves better classification performance than NEIEN in most instances. The time complexity of the NRS algorithm is $O(m^2 n \log n)$ [22]. The FRSINT algorithm spends most of its time computing the fuzzy-rough membership of each sample for the different decision classes, so it runs slowly, with time complexity $O(m^2 n^2)$ [45,53]. The time complexity of FINEN is also $O(m^2 n^2)$ [52], because FINEN is based on a similarity relation, and computing the similarity relation of attributes is time-consuming [38]. Therefore, ARNRJE has a lower time complexity, effectively reduces redundancy, improves classification accuracy, and streamlines the classification of large-scale complex data.

4.5. Comparison of Recall Rate with Three Reduction Methods on Two Different Classifiers

The final part of our experiments uses the recall rate to evaluate the classification performance of the three reduction methods on the two classifiers. The recall rate [59] is defined as:

$$\text{Recall} = \frac{TP}{TP + FN}$$

where True Positive (TP) denotes the number of positive instances classified correctly, and False Negative (FN) denotes the number of positive instances classified as negative.
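Recall as TP/(TP + FN) can be computed per class from true and predicted labels. For multi-class data sets, the sketch also averages per-class recall (macro-averaging); that averaging scheme is an assumption for illustration, since the averaging behind Tables 7 and 8 is not specified here. Function names are hypothetical.

```python
from typing import Hashable, Sequence


def recall(y_true: Sequence[Hashable], y_pred: Sequence[Hashable],
           positive: Hashable) -> float:
    """TP / (TP + FN) for the given positive class."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p == positive)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p != positive)
    return tp / (tp + fn) if tp + fn else 0.0


def macro_recall(y_true: Sequence[Hashable], y_pred: Sequence[Hashable]) -> float:
    """Unweighted mean of per-class recall (assumed averaging scheme)."""
    classes = sorted(set(y_true))
    return sum(recall(y_true, y_pred, c) for c in classes) / len(classes)
```

For true labels [1, 1, 1, 0, 0] and predictions [1, 0, 1, 0, 1], the recall of class 1 is 2/3 and the macro recall is (2/3 + 1/2)/2.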

Tables 7 and 8 show the recall rates of FINEN, NEIEN, and ARNRJE on the nine data sets under the 3NN and LibSVM classifiers, respectively. ARNRJE achieves the highest average recall rate under both classifiers and outperforms FINEN and NEIEN on most of the nine data sets. Table 7 shows that, under the 3NN classifier, the recall rate of ARNRJE is about 4% lower than that of FINEN on Prostate and 3% lower on Tumors. Table 8 shows that, under the LibSVM classifier, ARNRJE is slightly inferior to FINEN on Wdbc and to NEIEN on Tumors. The reason is that some informative attributes of the Prostate, DLBCL, Wdbc and Tumors data sets are lost during preliminary dimensionality reduction or during reduction by ARNRJE, which causes misclassification and leads to a slightly lower recall rate. These results again show that no algorithm is consistently superior to the others across different learning tasks and classifiers. In general, the proposed ARNRJE algorithm shows relatively good classification performance in terms of recall.

Table 7.

The recall rate with the three reduction algorithms under 3NN.

Table 8.

The recall rate with the three reduction algorithms under LibSVM.

5. Conclusions

Attribute reduction, one of the important steps in classification learning, can improve classification performance in most cases and decrease the cost of classification. Uncertainty measures that quantify the discriminating ability of attribute subsets play an important role in attribute reduction. The neighborhood rough set model can effectively handle the reduction problem of numerical and continuous-valued information systems. In this paper, a neighborhood rough sets-based attribute reduction method using Lebesgue and entropy measures is proposed to improve classification performance on continuous data sets. Based on Lebesgue and entropy measures, several neighborhood entropy-based uncertainty measures in neighborhood decision systems are investigated. Then, the neighborhood roughness joint entropy, which combines the algebra view and the information view of neighborhood rough sets, is presented to handle the uncertainty and noise of neighborhood decision systems. Moreover, the corresponding properties and relationships are discussed. Finally, a heuristic search algorithm is constructed to improve the computational efficiency of attribute selection in neighborhood decision systems. The experimental results show that the proposed algorithm can obtain a small, effective attribute subset with good classification performance.

Author Contributions

L.S. and J.X. conceived the methodology and designed the experiments; L.W. implemented the algorithms and performed the experiments; S.Z. analyzed the results; and L.W. drafted the manuscript. All authors read and revised the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China (Grants 61772176, 61402153 and 61370169), the China Postdoctoral Science Foundation (Grant 2016M602247), the Plan for Scientific Innovation Talent of Henan Province (Grant 184100510003), the Key Scientific and Technological Project of Henan Province (Grant 182102210362), the Young Scholar Program of Henan Province (Grant 2017GGJS041), the Natural Science Foundation of Henan Province (Grants 182300410130, 182300410368 and 182300410306), and the Ph.D. Research Foundation of Henan Normal University (Grant qd15132).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Wang, Q.; Qian, Y.H.; Liang, X.Y.; Guo, Q.; Liang, J.Y. Local neighborhood rough set. Knowl.-Based Syst. 2018, 135, 53–64. [Google Scholar] [CrossRef]

- Gao, C.; Lai, Z.H.; Zhou, J.; Zhao, C.R.; Miao, D.Q. Maximum decision entropy-based attribute reduction in decision-theoretic rough set model. Knowl.-Based Syst. 2018, 143, 179–191. [Google Scholar] [CrossRef]

- Zhang, X.Y.; Miao, D.Q. Quantitative/qualitative region-change uncertainty/certainty in attribute reduction: Comparative region-change analyses based on granular computing. Inf. Sci. 2016, 334, 174–204. [Google Scholar] [CrossRef]

- Dong, H.B.; Li, T.; Ding, R.; Sun, J. A novel hybrid genetic algorithm with granular information for feature selection and optimization. Appl. Soft Comput. 2018, 65, 33–46. [Google Scholar] [CrossRef]

- Hu, L.; Gao, W.F.; Zhao, K.; Zhang, P.; Wang, F. Feature selection considering two types of feature relevancy and feature interdependency. Expert Syst. Appl. 2018, 93, 423–434. [Google Scholar] [CrossRef]

- Liu, Y.; Xie, H.; Tan, K.Z.; Chen, Y.H.; Xu, Z.; Wang, L.G. Hyperspectral band selection based on consistency-measure of neighborhood rough set theory. Meas. Sci. Technol. 2016, 27, 055501. [Google Scholar] [CrossRef]

- Lyu, H.Q.; Wan, M.X.; Han, J.Q.; Liu, R.L.; Wang, C. A filter feature selection method based on the Maximal Information Coefficient and Gram-Schmidt Orthogonalization for biomedical data mining. Comput. Biol. Med. 2017, 89, 264–274. [Google Scholar] [CrossRef] [PubMed]

- Sun, L.; Zhang, X.Y.; Qian, Y.H.; Xu, J.C.; Zhang, S.G.; Tian, Y. Joint neighborhood entropy-based gene selection method with fisher score for tumor classification. Appl. Intell. 2018. [Google Scholar] [CrossRef]

- Jadhav, S.; He, H.M.; Jenkins, K. Information gain directed genetic algorithm wrapper feature selection for credit rating. Appl. Soft Comput. 2018, 69, 541–553. [Google Scholar] [CrossRef]

- Mariello, A.; Battiti, R. Feature selection based on the neighborhood entropy. IEEE Trans. Neural Netw. Learn. Syst. 2018, 99, 1–10. [Google Scholar] [CrossRef]

- Das, A.K.; Sengupta, S.; Bhattacharyya, S. A group incremental feature selection for classification using rough set theory based genetic algorithm. Appl. Soft Comput. 2018, 65, 400–411. [Google Scholar] [CrossRef]

- Imani, M.B.; Keyvanpour, M.R.; Azmi, R. A novel embedded feature selection method: A comparative study in the application of text categorization. Appl. Artif. Intell. 2013, 27, 408–427. [Google Scholar] [CrossRef]

- Chen, Y.M.; Zeng, Z.Q.; Lu, J.W. Neighborhood rough set reduction with fish swarm algorithm. Soft Comput. 2017, 21, 6907–6918. [Google Scholar] [CrossRef]

- Li, B.Y.; Xiao, J.M.; Wang, X.H. Feature reduction for power system transient stability assessment based on neighborhood rough set and discernibility matrix. Energies 2018, 11, 185. [Google Scholar] [CrossRef]

- Gu, X.P.; Li, Y.; Jia, J.H. Feature selection for transient stability assessment based on kernelized fuzzy rough sets and memetic algorithm. Int. J. Electr. Power Energy Syst. 2015, 64, 664–670. [Google Scholar] [CrossRef]

- Raza, M.S.; Qamar, U. A parallel rough set based dependency calculation method for efficient feature selection. Appl. Soft Comput. 2018, 71, 1020–1034. [Google Scholar] [CrossRef]

- Luan, X.Y.; Li, Z.P.; Liu, T.Z. A novel attribute reduction algorithm based on rough set and improved artificial fish swarm algorithm. Neurocomputing 2015, 174, 522–529. [Google Scholar] [CrossRef]

- Hu, Q.H.; Zhang, L.; Zhang, D.; Pan, W.; An, S.; Pedrycz, W. Measuring relevance between discrete and continuous features based on neighborhood mutual information. Expert Syst. Appl. 2011, 38, 10737–10750. [Google Scholar] [CrossRef]

- Chakraborty, D.B.; Pal, S.K. Neighborhood rough filter and intuitionistic entropy in unsupervised tracking. IEEE Trans. Fuzzy Syst. 2018, 26, 2188–2200. [Google Scholar] [CrossRef]

- Chen, Y.M.; Zhang, Z.J.; Zheng, J.Z.; Ma, Y.; Xue, Y. Gene selection for tumor classification using neighborhood rough sets and entropy measures. J. Biomed. Inf. 2017, 67, 59–68. [Google Scholar] [CrossRef]

- Hu, Q.H.; Pan, W.; An, S.; Ma, P.J.; Wei, J.M. An efficient gene selection technique for cancer recognition based on neighborhood mutual information. Int. J. Mach. Learn. Cybern. 2010, 1, 63–74. [Google Scholar] [CrossRef]

- Hu, Q.H.; Yu, D.; Liu, J.F.; Wu, C.X. Neighborhood rough set based heterogeneous feature subset selection. Inf. Sci. 2008, 178, 3577–3594.

- Sun, L.; Zhang, X.Y.; Xu, J.C.; Wang, W.; Liu, R.N. A gene selection approach based on the Fisher linear discriminant and the neighborhood rough set. Bioengineered 2018, 9, 144–151.

- Mu, H.Y.; Xu, J.C.; Wang, Y.; Sun, L. Feature genes selection using Fisher transformation method. J. Intell. Fuzzy Syst. 2018, 34, 4291–4300.

- Halmos, P.R. Measure Theory; Litton Educational Publishing, Inc. and Springer-Verlag New York Inc.: New York, NY, USA, 1970; pp. 100–152.

- Song, J.J.; Li, J. Lebesgue theorems in non-additive measure theory. Fuzzy Sets Syst. 2005, 149, 543–548.

- Xu, X.; Lu, Z.Z.; Luo, X.P. A kernel estimate method for characteristic function-based uncertainty importance measure. Appl. Math. Model. 2017, 42, 58–70.

- Halčinová, L.; Hutník, O.; Kiseľák, J.; Šupina, J. Beyond the scope of super level measures. Fuzzy Sets Syst. 2018.

- Park, S.R.; Kolouri, S.; Kundu, S.; Rohde, G.K. The cumulative distribution transform and linear pattern classification. Appl. Comput. Harmon. Anal. 2018, 45, 616–641.

- Marzio, M.D.; Fensore, S.; Panzera, A.; Taylor, C.C. Local binary regression with spherical predictors. Stat. Probab. Lett. 2019, 144, 30–36.

- Khanjani Shiraz, R.K.; Fukuyama, H.; Tavana, M.; Di Caprio, D. An integrated data envelopment analysis and free disposal hull framework for cost-efficiency measurement using rough sets. Appl. Soft Comput. 2016, 46, 204–219.

- Zhang, Z.F.; David, J. An entropy-based approach for assessing the operation of production logistics. Expert Syst. Appl. 2019, 119, 118–127.

- Wang, C.Z.; He, Q.; Shao, M.W.; Xu, Y.Y.; Hu, Q.H. A unified information measure for general binary relations. Knowl.-Based Syst. 2017, 135, 18–28.

- Ge, H.; Yang, C.J.; Li, L.S. Positive region reduct based on relative discernibility and acceleration strategy. Int. J. Uncertain. Fuzziness Knowl.-Based Syst. 2018, 26, 521–551.

- Sun, L.; Xu, J.C. A granular computing approach to gene selection. Bio-Med. Mater. Eng. 2014, 24, 1307–1314.

- Fan, X.D.; Zhao, W.D.; Wang, C.Z.; Huang, Y. Attribute reduction based on max-decision neighborhood rough set model. Knowl.-Based Syst. 2018, 151, 16–23.

- Meng, J.; Zhang, J.; Li, R.; Luan, Y.S. Gene selection using rough set based on neighborhood for the analysis of plant stress response. Appl. Soft Comput. 2014, 25, 51–63.

- Liu, Y.; Huang, W.L.; Jiang, Y.L.; Zeng, Z.Y. Quick attribute reduct algorithm for neighborhood rough set model. Inf. Sci. 2014, 271, 65–81.

- Li, W.W.; Huang, Z.Q.; Jia, X.Y.; Cai, X.Y. Neighborhood based decision-theoretic rough set models. Int. J. Approx. Reason. 2016, 69, 1–17.

- Shannon, C.E. A mathematical theory of communication. Bell Syst. Tech. J. 1948, 27, 379–423.

- Chen, Y.M.; Xue, Y.; Ma, Y.; Xu, F.F. Measures of uncertainty for neighborhood rough sets. Knowl.-Based Syst. 2017, 120, 226–235.

- Liang, J.Y.; Shi, Z.Z.; Li, D.Y.; Wierman, M.J. Information entropy, rough entropy and knowledge granulation in incomplete information systems. Int. J. Gen. Syst. 2006, 35, 641–654.

- Sun, L.; Xu, J.C.; Tian, Y. Feature selection using rough entropy-based uncertainty measures in incomplete decision systems. Knowl.-Based Syst. 2012, 36, 206–216.

- Liu, Y.; Xie, H.; Chen, Y.H.; Tan, K.Z.; Wu, X. Neighborhood mutual information and its application on hyperspectral band selection for classification. Chemom. Intell. Lab. Syst. 2016, 157, 140–151.

- Wang, C.Z.; Hu, Q.H.; Wang, X.Z.; Chen, D.G.; Qian, Y.H.; Dong, Z. Feature selection based on neighborhood discrimination index. IEEE Trans. Neural Netw. Learn. Syst. 2018, 29, 2986–2999.

- Wang, G.Y. Rough reduction in algebra view and information view. Int. J. Intell. Syst. 2003, 18, 679–688.

- Teng, S.H.; Lu, M.; Yang, A.F.; Zhang, J.; Nian, Y.J.; He, M. Efficient attribute reduction from the viewpoint of discernibility. Inf. Sci. 2016, 326, 297–314.

- Qian, Y.H.; Liang, J.Y.; Pedrycz, W.; Dang, C.Y. Positive approximation: An accelerator for attribute reduction in rough set theory. Artif. Intell. 2010, 174, 597–618.

- Pawlak, Z.; Skowron, A. Rudiments of rough sets. Inf. Sci. 2007, 177, 3–27.

- Sun, L.; Xu, J.C. Information entropy and mutual information-based uncertainty measures in rough set theory. Appl. Math. Inf. Sci. 2014, 8, 1973–1985.

- Chen, Y.M.; Wu, K.S.; Chen, X.H.; Tang, C.H.; Zhu, Q.X. An entropy-based uncertainty measurement approach in neighborhood systems. Inf. Sci. 2014, 279, 239–250.

- Hu, Q.H.; Yu, D.; Xie, Z.X.; Liu, J.F. Fuzzy probabilistic approximation spaces and their information measures. IEEE Trans. Fuzzy Syst. 2006, 14, 191–201.

- Jensen, R.; Shen, Q. Semantics-preserving dimensionality reduction: Rough and fuzzy-rough-based approaches. IEEE Trans. Knowl. Data Eng. 2004, 16, 1457–1471.

- UCI Machine Learning Repository. Available online: http://archive.ics.uci.edu/ml/index.php (accessed on 15 December 2018).

- BROAD INSTITUTE, Cancer Program Legacy Publication Resources. Available online: http://portals.broadinstitute.org/cgi-bin/cancer/datasets.cgi (accessed on 15 December 2018).

- Faris, H.; Mafarja, M.M.; Heidari, A.A.; Aljarah, I.; Zoubi, A.M.A.; Mirjalili, S.; Fujita, H. An efficient binary salp swarm algorithm with crossover scheme for feature selection problems. Knowl.-Based Syst. 2018, 154, 43–67.

- Wang, T.H.; Li, W. Kernel learning and optimization with Hilbert–Schmidt independence criterion. Int. J. Mach. Learn. Cybern. 2018, 9, 1707–1717.

- Yager, R.R. Entropy measures under similarity relations. Int. J. Gen. Syst. 1992, 20, 341–358.

- Sun, L.; Liu, R.N.; Xu, J.C.; Zhang, S.G.; Tian, Y. An affinity propagation clustering method using hybrid kernel function with LLE. IEEE Access 2018, 6, 68892–68909.

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).