1. Introduction

Consider an isolated ideal gas of $N$ particles that initially occupies only part of a box. After a long enough diffusion time, the gas spreads uniformly over the whole volume (Figure 1). From standard macroscopic thermodynamics it is simple to show that the gas entropy increases by $\Delta S = N k_B \ln(V/V_0)$, where $V$ ($V_0$) is the final (initial) occupied volume [1, 2].

This is a typical example of entropy increase in macroscopic thermodynamics. However, an isolated quantum system always follows unitary evolution: its density matrix obeys the von Neumann equation $\partial_t \hat{\rho} = i[\hat{\rho}, \hat{H}]$ (with $\hbar = 1$), which guarantees that the von Neumann entropy $S_V[\hat{\rho}] = -\mathrm{tr}[\hat{\rho}\ln\hat{\rho}]$ does not change with time. In principle, this result should also apply to many-body systems, which seems inconsistent with the entropy increase in standard macroscopic thermodynamics described above.

This problem is not unique to quantum physics; classical physics faces the same situation. For an isolated classical system, the ensemble evolution follows the Liouville equation [1, 3, 4],

$$\partial_t \rho = -\{\rho, H\}, \tag{1}$$

which is derived from the Hamiltonian dynamics. Here $\{\cdot,\cdot\}$ is the Poisson bracket, and $\rho(\vec{P}, \vec{Q}, t)$ is the probability density around the microstate $(\vec{P}, \vec{Q})$ at time $t$. As a result, the Gibbs entropy of the whole system stays constant and never changes with time,

$$\frac{d}{dt} S_G[\rho] = -\frac{d}{dt}\int d\Gamma\, \rho \ln \rho = 0, \tag{2}$$

where $d\Gamma$ is the phase-space volume element. Therefore, this constant-entropy result exists in both quantum and classical physics.

This is rather confusing when compared with our intuition of the “irreversibility” happening in the macroscopic world [4, 5, 6, 7, 8, 9, 10, 11]. Moreover, even if the particles have complicated nonlinear interactions, so that the system dynamics could be highly chaotic and unpredictable, the (classical) Liouville or (quantum) unitary dynamics still guarantees that the entropy of an isolated system does not change with time.

Here we need to clarify the word “irreversibility”. In thermodynamics, a “reversible (irreversible)” process means that the system is (not) in a thermal equilibrium state at every moment. Throughout this paper, we adopt the meaning used in dynamics: if some function (distribution, state, etc.) always approaches the same steady state for any initial condition, then such behavior is regarded as “irreversible”.

On the other hand, if there is no inter-particle interaction, the microstate evolution is fully predictable, yet the macroscopic diffusion process still proceeds irreversibly until the gas reaches the new uniform distribution over the whole volume. In this sense, the contradiction between the constant entropy and the appearance of macroscopic irreversibility does not depend on whether complicated interactions exist. Thus we need to ask: how can macroscopic irreversibility and entropy increase arise from the underlying microscopic dynamics, which is reversible and has time-reversal symmetry [6, 12]?

Recently, it has been noticed that the irreversible entropy production in open systems is deeply related to the correlation between the open system and its environment [13, 14, 15, 16]. In an open system, the entropy of the system itself can either increase or decrease, depending on whether it absorbs heat from or emits heat to its environment. After subtracting the thermal entropy due to this heat exchange, the remaining part of the system entropy change is called the irreversible entropy production [17, 18, 19, 20, 21], which increases monotonically with time until thermal equilibrium is reached.

Under proper approximations, we can prove that the thermal entropy change due to the heat exchange corresponds exactly to the entropy change of the environment state [16, 22, 23], and that the irreversible entropy production is equivalent to the correlation generated between the open system and its environment, as measured by the relative entropy [13, 14, 24, 25, 26] or by their mutual information [16, 23]. At the same time, the system and its environment together maintain constant entropy during the evolution. In this sense, the constant global entropy and the increase of the system-environment correlation are consistent with each other. Moreover, when the baths are in non-thermal states, which lie beyond the scope of standard macroscopic thermodynamics, such correlation production still applies (see Section 2.4).

That is, because of practical restrictions on measurements, some correlation information hidden in the global state is difficult to detect, and this results in the appearance of macroscopic irreversibility as well as the entropy increase. In principle, this correlation picture could also apply to isolated systems. Indeed, in the diffusion example above, our observation that “the gas spreads uniformly over the whole volume” implicitly focuses on the spatial distribution only, rather than on the total ensemble state.

For the classical ideal gas with no inter-particle interactions, the Liouville equation for the ensemble evolution can be solved exactly [4, 9, 27]. Notice that in practice it is the spatial and momentum distributions that are directly measured, not the full ensemble state. We can prove that the spatial distribution, as a marginal distribution of the whole ensemble, always approaches the new uniform one as its steady state. Moreover, by examining the correlation between the spatial and momentum distributions, we can see that their correlation increases monotonically and reproduces the entropy increase of standard thermodynamics. At the same time, the total ensemble state keeps constant entropy during the diffusion process (see Section 3).

For the non-ideal gas with weak particle interactions, the dynamics of the single-particle probability distribution function (PDF) can be described by the Boltzmann equation [1, 8]. According to the Boltzmann H-theorem, the single-particle PDF always approaches the Maxwell-Boltzmann (MB) distribution as its steady state, and its entropy increases monotonically. Notice that the single-particle PDF is a marginal distribution of the full ensemble state, obtained by averaging out all the other particles. Thus it does not contain the particle correlations, and the increase of its entropy implicitly reflects the increase of the inter-particle correlations, which exactly reproduces the entropy increase result of standard macroscopic thermodynamics. At the same time, the total ensemble still follows the Liouville equation with constant entropy.

The correlation production between the particles could also help us understand the Loschmidt paradox: when we consider the “backward” evolution, significant particle correlations have already been established [28], so the molecular-disorder assumption, which is the most crucial approximation in deriving the Boltzmann equation, does not hold. Therefore, the Boltzmann equation, as well as the H-theorem of entropy increase, does not apply to the “backward” evolution (see Section 4).

In sum, the global state keeps constant entropy, but in practice it is usually partial information (e.g., marginal distributions, single-particle observable expectations) that is directly accessible to observation, and this gives rise to the appearance of macroscopic irreversibility [9, 13, 14, 15, 16, 23, 24, 25, 27, 29, 30, 31, 32, 33, 34]. The entropy increase in standard macroscopic thermodynamics reflects the correlation increase between different degrees of freedom (DoF) in the many-body system. In this sense, the reversibility of microscopic dynamics (for the global state) and the macroscopic irreversibility (for the partial information) are consistent with each other. More importantly, this correlation understanding applies to both quantum and classical systems, and to both open and isolated systems; moreover, it does not depend on whether complicated particle interactions exist, and it can also be used to describe time-dependent non-equilibrium systems.

2. The Correlation Production in Open Systems

In this section, we first discuss the thermodynamics of an open system, which is surrounded by an environment and exchanges energy with it. The open system can absorb heat from or emit heat to the environment; as a result, the entropy of the open system itself can either increase or decrease. Thus the thermodynamic irreversibility is not simply related to the entropy change of the open system alone, but should be described by the “irreversible entropy”, which increases monotonically with time.

Here we first give a brief review of the formalism of irreversible entropy production, which is an equivalent statement of the second law. Then we will show that this irreversible entropy production in open systems is equivalent to the correlation increase between the system and its environment [13, 16, 29], which is measured by their mutual information. Moreover, if the baths in contact with the system are not canonical thermal ones, the temperatures are no longer well defined, and this situation is not within the applicable scope of the second law in standard thermodynamics, but we will see that the correlation production still applies in this case.

2.1. The Irreversible Entropy Production Rate

We first briefly review the formalism of entropy production [17, 18, 19, 20, 21]. The entropy change $dS$ of an open system can be regarded as coming from two origins, i.e.,

$$dS = dS_e + dS_i, \tag{3}$$

where $dS_e$ comes from the heat exchange with external baths, and $dS_i$ is regarded as the irreversible entropy change. The exchange part $dS_e$ can be either positive or negative, indicating that the system absorbs or emits heat. But the irreversible entropy change $dS_i$, as stated by the second law, can never be negative.

If the system is in contact with a thermal bath in the equilibrium state with temperature $T$, the entropy change due to the heat exchange can be written as $dS_e = \text{đ}Q/T$ (hereafter we refer to it as the thermal entropy), where $\text{đ}Q$ is the heat absorbed by the system. Then the second law can be expressed as

$$dS_i = dS - \frac{\text{đ}Q}{T} \ge 0, \tag{4}$$

where the equality holds only for reversible processes. This is just the Clausius inequality for an infinitesimal process [1, 18, 21].

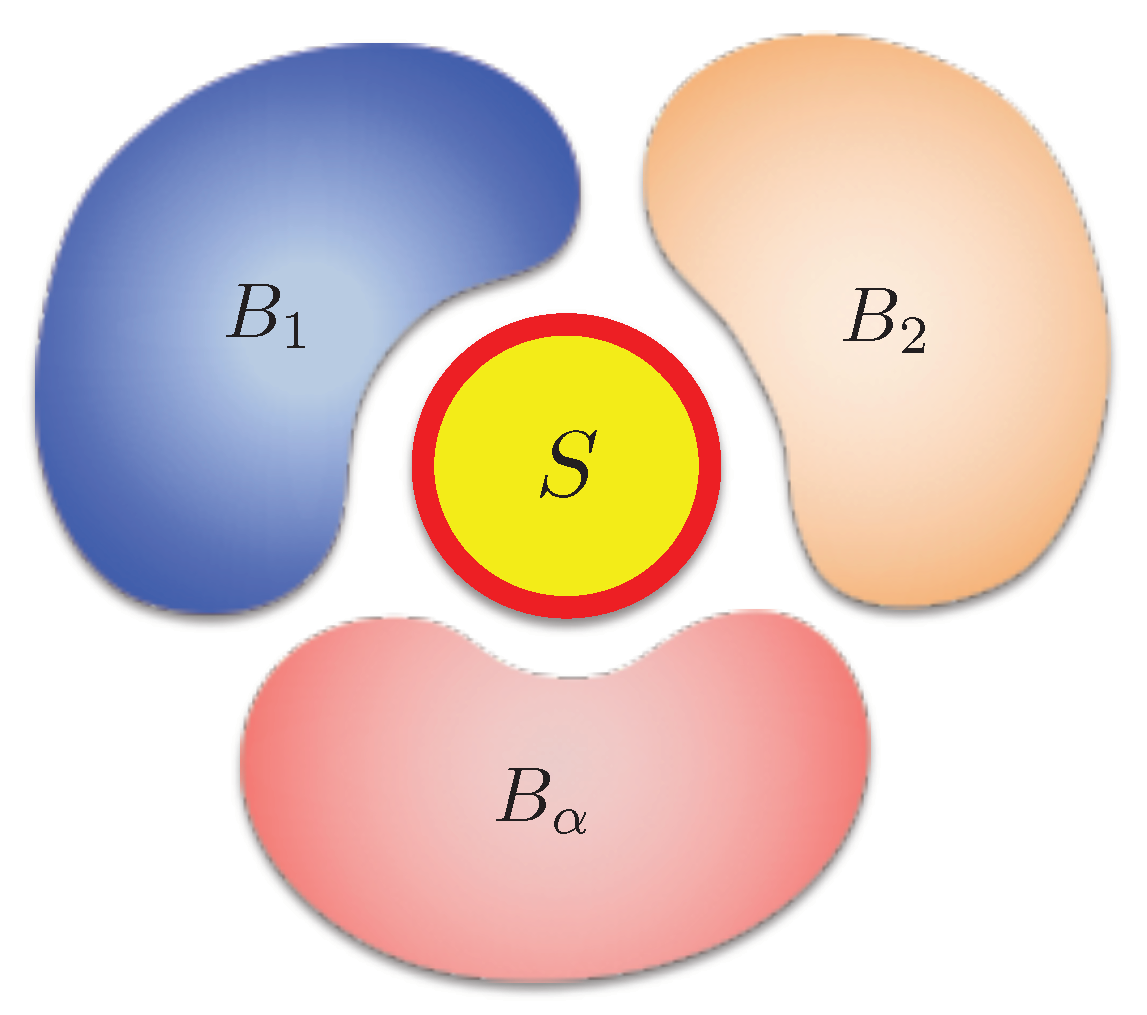

More generally, if the system is in contact with multiple independent thermal baths with different temperatures $T_\alpha$ at the same time (Figure 2), the irreversible entropy change should be generalized as [17]

$$dS_i = dS - \sum_\alpha \frac{\text{đ}Q_\alpha}{T_\alpha}, \tag{5}$$

where $\text{đ}Q_\alpha$ is the heat absorbed from bath-$\alpha$ [18, 21]. For example, for a system in contact with two thermal baths with temperatures $T_{1,2}$, in the steady state we have $dS = 0$ and $\text{đ}Q_1 = -\text{đ}Q_2$, thus the above equation gives [21]

$$dS_i = \Big(\frac{1}{T_2} - \frac{1}{T_1}\Big)\,\text{đ}Q_1 \ge 0. \tag{6}$$

It is easy to verify that $\text{đ}Q_1 > 0$ always comes together with $T_1 > T_2$, and vice versa. That is, heat always flows from the high temperature area to the low temperature area, which is just the Clausius statement of the second law.
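As a quick numerical check of the two-bath example, the following minimal sketch evaluates the steady-state irreversible entropy production rate for a given heat current. The function name, the sample temperatures, and the choice of units with $k_B = 1$ are illustrative assumptions and do not come from the original text.

```python
def steady_state_epr(heat_rate_from_bath1: float, T1: float, T2: float) -> float:
    """Irreversible entropy production rate for a system between two thermal baths.

    Assumes a steady state: the system entropy is constant and the heat rate
    absorbed from bath 2 is minus the heat rate absorbed from bath 1 (k_B = 1).
    """
    if T1 <= 0 or T2 <= 0:
        raise ValueError("temperatures must be positive")
    return (1.0 / T2 - 1.0 / T1) * heat_rate_from_bath1


# Heat flows from the hot bath (T1 = 400) through the system into the cold bath (T2 = 300).
print(steady_state_epr(2.0, T1=400.0, T2=300.0))   # positive rate
# The reversed heat flow would give a negative rate, which the second law forbids.
print(steady_state_epr(-2.0, T1=400.0, T2=300.0))
```

With equal temperatures the rate vanishes for any heat current, which is the equilibrium case.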

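Therefore, for an open system, the second law can be equivalently expressed as the simple inequality $dS_i \ge 0$, which means the irreversible entropy increases monotonically. This can also be expressed by the entropy production rate (EPr), which is defined as

$$R_{\mathrm{ep}} := \frac{dS_i}{dt} = \frac{dS}{dt} - \sum_\alpha \frac{\dot{Q}_\alpha}{T_\alpha}, \qquad \dot{Q}_\alpha := \frac{\text{đ}Q_\alpha}{dt}, \tag{7}$$

and $R_{\mathrm{ep}} \ge 0$ is equivalent to saying that the irreversible entropy keeps increasing.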

Besides its equivalence with the standard second law statements, the entropy production formalism also provides a proper way to study non-equilibrium thermodynamics quantitatively. If there is only one thermal bath, the system reaches thermal equilibrium with the bath in the steady state. At that point, the system state no longer changes, and there is no net heat exchange between the system and the bath, thus the EPr $R_{\mathrm{ep}} \to 0$ when $t \to \infty$.

In contrast, if the system is in contact with multiple thermal baths with different temperatures, then in the steady state, although the system state no longer changes with time, there still exists a net heat flux between the system and the baths. Therefore, unlike thermal equilibrium, such a steady state is a stationary non-equilibrium state [17]. Notice that in this case the EPr remains a finite positive value $R_{\mathrm{ep}} > 0$ (see the example of Equation (6)) when $t \to \infty$, which indicates there is still ongoing production of irreversible entropy. Therefore, $R_{\mathrm{ep}} = 0$ (or $R_{\mathrm{ep}} > 0$) in the steady state indicates whether (or not) the system is in the thermal equilibrium state.

The above discussions about the entropy production apply to both classical and quantum systems, as long as quantities such as the system entropy change and the heat absorbed from each bath are calculated from the classical ensemble or the quantum state correspondingly.

2.2. The Production Rate of the System-Bath Correlation

We now show that the above EPr is equivalent to the production rate of the correlation between the open system and its environment. The literature usually focuses on the dynamics of the open system alone. The baths, due to their large size, are usually considered to be unaffected by the system and to provide only a fluctuating background. But the system certainly influences its environment [16, 23, 35]. For example, when the system emits energy, this energy is added to the environment. To study the correlation between the system and its environment, we therefore also need to know the dynamics of the whole environment.

2.2.1. Quantum Case

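We first consider a quantum system in contact with several independent thermal baths with temperatures $T_\alpha$. Initially, each bath-$\alpha$ stays in the canonical thermal state

$$\hat{\rho}_\alpha(0) = \frac{1}{Z_\alpha}\exp\Big[-\frac{\hat{H}_\alpha}{T_\alpha}\Big], \tag{8}$$

with $Z_\alpha$ as the normalization factor. The exact changing rate of the information entropy of bath-$\alpha$ is given by $\dot{S}[\hat{\rho}_\alpha(t)] = -\mathrm{tr}[\dot{\hat{\rho}}_\alpha(t) \ln \hat{\rho}_\alpha(t)]$. To proceed, we assume the bath state $\hat{\rho}_\alpha(t)$ does not change too much from the initial state, thus $\ln \hat{\rho}_\alpha(t) \approx \ln \hat{\rho}_\alpha(0)$, and then the bath entropy change $\dot{S}_\alpha := \dot{S}[\hat{\rho}_\alpha(t)]$ becomes [16, 22, 23, 26]

$$\dot{S}_\alpha \approx -\mathrm{tr}\Big[\dot{\hat{\rho}}_\alpha(t)\, \ln \frac{e^{-\hat{H}_\alpha/T_\alpha}}{Z_\alpha}\Big] = \frac{1}{T_\alpha}\frac{d}{dt}\langle \hat{H}_\alpha \rangle. \tag{9}$$

Notice that here $\frac{d}{dt}\langle \hat{H}_\alpha \rangle$ is the energy increase of bath-$\alpha$, thus it is equal to the energy loss of the system to bath-$\alpha$ (i.e., $\frac{d}{dt}\langle \hat{H}_\alpha \rangle = -\dot{Q}_\alpha$) when the system-bath interaction strength is negligibly small. Therefore, the above EPr $R_{\mathrm{ep}}$ (Equation (7)) can be rewritten as $R_{\mathrm{ep}} = \dot{S}_s + \sum_\alpha \dot{S}_\alpha$, where $S_s$ denotes the entropy of the open system.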
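The approximation step for the bath entropy can be spelled out line by line. The following is a sketch of the derivation, not a quotation of the original equations; it assumes the notation $\hat{\rho}_\alpha$, $\hat{H}_\alpha$, $Z_\alpha$, $T_\alpha$ for the state, Hamiltonian, partition function, and temperature of bath-$\alpha$, a time-independent $\hat{H}_\alpha$, and units with $k_B = 1$.

```latex
\begin{aligned}
\dot{S}[\hat\rho_\alpha]
  &= -\frac{d}{dt}\,\mathrm{tr}\big[\hat\rho_\alpha \ln \hat\rho_\alpha\big]
   = -\mathrm{tr}\big[\dot{\hat\rho}_\alpha \ln \hat\rho_\alpha(t)\big]
     - \mathrm{tr}\big[\dot{\hat\rho}_\alpha\big] \\
  &\approx -\mathrm{tr}\big[\dot{\hat\rho}_\alpha \ln \hat\rho_\alpha(0)\big]
   \qquad \big(\mathrm{tr}[\dot{\hat\rho}_\alpha] = 0,\;
          \ln\hat\rho_\alpha(t) \approx \ln\hat\rho_\alpha(0)\big) \\
  &= \frac{1}{T_\alpha}\,\mathrm{tr}\big[\dot{\hat\rho}_\alpha \hat{H}_\alpha\big]
     + \ln Z_\alpha\,\mathrm{tr}\big[\dot{\hat\rho}_\alpha\big] \\
  &= \frac{1}{T_\alpha}\,\frac{d}{dt}\langle \hat{H}_\alpha \rangle .
\end{aligned}
```

The first line is exact (trace preservation removes the second term); only the replacement of $\ln\hat\rho_\alpha(t)$ by $\ln\hat\rho_\alpha(0)$ is an approximation, which is why the validity of the result depends on how weakly the bath state is perturbed.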

Since the different baths are initially independent of each other and do not interact with each other directly, we assume they cannot generate significant correlations during the evolution, thus the entropy of the whole environment is simply the sum of the entropies of the single baths, namely, $S_b = \sum_\alpha S_\alpha$. Therefore, the above EPr $R_{\mathrm{ep}} = \dot{S}_s + \sum_\alpha \dot{S}_\alpha$ can be further rewritten as

$$R_{\mathrm{ep}} = \frac{d}{dt}\big(S_s + S_b - S_{sb}\big) = \frac{d}{dt} I_{sb}. \tag{10}$$

Here $S_{sb}$ is the von Neumann entropy of the whole s + b state, which does not change with time ($\dot{S}_{sb} = 0$), since the whole s + b system is an isolated system and follows the unitary evolution [36].

Therefore, the production rate of the irreversible entropy $R_{\mathrm{ep}}$ is equivalent to the production rate of the mutual information between the system and its environment, $I_{sb} := S_s + S_b - S_{sb}$, which measures their correlation [36]. That is, the second law statement that the irreversible entropy keeps increasing ($R_{\mathrm{ep}} \ge 0$) can be equivalently understood as follows: the correlation between the system and its environment, as measured by the mutual information, keeps increasing until they reach equilibrium.

2.2.2. Classical Case

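The above discussions about quantum open systems also apply to classical ones. For classical systems, the initial state of bath-$\alpha$ should be represented by the canonical ensemble distribution

$$\rho_\alpha(\vec{p}_\alpha, \vec{q}_\alpha, 0) = \frac{1}{Z_\alpha}\exp\Big[-\frac{H_\alpha(\vec{p}_\alpha, \vec{q}_\alpha)}{T_\alpha}\Big], \tag{11}$$

where $(\vec{p}_\alpha, \vec{q}_\alpha)$ denotes the momenta and positions of the DoF in bath-$\alpha$. Then we consider the changing rate of the Gibbs entropy of bath-$\alpha$, which is

$$\dot{S}_\alpha = -\frac{d}{dt}\int d\Gamma_\alpha\, \rho_\alpha \ln \rho_\alpha \approx -\int d\Gamma_\alpha\, \dot{\rho}_\alpha(t) \ln \rho_\alpha(0) = \frac{1}{T_\alpha}\frac{d}{dt}\langle H_\alpha \rangle. \tag{12}$$

Here we adopted the approximation $\ln \rho_\alpha(t) \approx \ln \rho_\alpha(0)$, similar to the quantum case above, and this result is simply the classical counterpart of Equation (9).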

Therefore, for classical open systems, the EPr $R_{\mathrm{ep}}$ in Equation (7) can also be rewritten as $R_{\mathrm{ep}} = \dot{S}_s + \sum_\alpha \dot{S}_\alpha$. Furthermore, since the whole s + b system is an isolated system, its dynamics follows the Liouville equation, thus the Gibbs entropy of the whole s + b system does not change with time, i.e., $\dot{S}_{sb} = 0$. Therefore, the equivalence between the irreversible entropy production and the system-bath correlation (Equation (10)) also holds for classical systems. That is, for both classical and quantum open systems in contact with thermal baths, the second law can be equivalently stated as follows: the correlation between the system and its environment, as measured by their mutual information, keeps increasing.

2.3. Master Equation Representation

Besides the above general discussions, the time-dependent dynamics of the open system, either classical or quantum, can be described quantitatively by a master equation. With the help of master equations, the above EPr can be written in a more detailed form. Here we show this for both the classical and quantum cases.

2.3.1. Classical Case

For a classical open system, the interaction with the baths leads to probability transitions between its different states, and this dynamics is usually described by the Pauli master equation [37]

$$\dot{p}_n = \sum_\alpha \sum_m \big( w^{(\alpha)}_{nm}\, p_m - w^{(\alpha)}_{mn}\, p_n \big), \tag{13}$$

which is a Markovian process. Here $p_n$ is the probability to find the system in state-$n$ (whose energy is $E_n$), and $w^{(\alpha)}_{nm}$ is the probability transition rate from state-$m$ to state-$n$ due to the interaction with the thermal bath-$\alpha$. The back and forth transition rates between states-$m$, $n$ should satisfy the following ratio [17, 38]

$$\frac{w^{(\alpha)}_{nm}}{w^{(\alpha)}_{mn}} = \exp\Big[-\frac{E_n - E_m}{T_\alpha}\Big], \tag{14}$$

which means the “downward” transition to the low energy state is faster than the “upward” one by a Boltzmann factor. In the case of only one thermal bath, with this relation, the detailed balance $w_{nm}\, p_m = w_{mn}\, p_n$ simply leads to the Boltzmann distribution $p_n \propto \exp[-E_n/T]$ in the steady state.

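If there are multiple thermal baths, the energy average $\langle E \rangle = \sum_n E_n p_n$ gives an energy-flow conservation relation

$$\frac{d}{dt}\langle E \rangle = \sum_\alpha \sum_{m,n} E_n \big( w^{(\alpha)}_{nm}\, p_m - w^{(\alpha)}_{mn}\, p_n \big) := \sum_\alpha \dot{Q}_\alpha, \tag{15}$$

thus $\dot{Q}_\alpha$ is the heat current flowing into the system from bath-$\alpha$ ($\dot{Q}_\alpha = \text{đ}Q_\alpha/dt$). We can put these relations, as well as the Gibbs entropy of the system $S = -\sum_n p_n \ln p_n$, into the above EPr (7), obtaining [39]

$$\begin{aligned} R_{\mathrm{ep}} &= \frac{dS}{dt} - \sum_\alpha \frac{\dot{Q}_\alpha}{T_\alpha} \\ &= \frac{1}{2} \sum_\alpha \sum_{m,n} \big( w^{(\alpha)}_{nm}\, p_m - w^{(\alpha)}_{mn}\, p_n \big) \ln \frac{w^{(\alpha)}_{nm}\, p_m}{w^{(\alpha)}_{mn}\, p_n}. \end{aligned} \tag{16}$$

Notice that each summation term must be non-negative, thus we always have $R_{\mathrm{ep}} \ge 0$, which is consistent with the above second law statement that the irreversible entropy keeps increasing. $R_{\mathrm{ep}} = 0$ holds only when $w^{(\alpha)}_{nm}\, p_m = w^{(\alpha)}_{mn}\, p_n$ for any $m$, $n$, and $\alpha$, and this is possible only when all the baths have the same temperature, which means thermal equilibrium. Otherwise, in the steady state, although it is time-independent, there still exists a non-equilibrium flux flowing across the system, and that is indicated by $R_{\mathrm{ep}} > 0$.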
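The behavior of the classical EPr can be checked numerically on a small example. The sketch below is illustrative only: the three-level spectrum, the rate parametrization (downward rate `gamma`, upward rate suppressed by a Boltzmann factor), the symbol `w[n][m]` for the rate from state `m` to state `n`, the Euler integration, and the units with $k_B = 1$ are all assumptions introduced here.

```python
import math


def rates(energies, T, gamma=1.0):
    """Transition rates w[n][m] (from state m to state n) induced by one thermal bath.

    Assumed parametrization: w[n][m] / w[m][n] = exp(-(E_n - E_m) / T).
    """
    N = len(energies)
    w = [[0.0] * N for _ in range(N)]
    for n in range(N):
        for m in range(N):
            if n != m:
                dE = energies[n] - energies[m]
                w[n][m] = gamma * (math.exp(-dE / T) if dE > 0 else 1.0)
    return w


def entropy_production_rate(p, baths):
    """Sum over baths and state pairs of (forward - backward) * ln(forward / backward)."""
    total = 0.0
    for w in baths:
        for n in range(len(p)):
            for m in range(n):
                forward = w[n][m] * p[m]
                backward = w[m][n] * p[n]
                total += (forward - backward) * math.log(forward / backward)
    return total


def relax(p, baths, dt=0.01, steps=5000):
    """Integrate the Pauli master equation with explicit Euler steps."""
    N = len(p)
    for _ in range(steps):
        dp = [0.0] * N
        for w in baths:
            for n in range(N):
                for m in range(N):
                    if n != m:
                        dp[n] += w[n][m] * p[m] - w[m][n] * p[n]
        p = [p[n] + dt * dp[n] for n in range(N)]
    return p


energies = [0.0, 1.0, 2.0]
p0 = [0.2, 0.3, 0.5]
one_bath = [rates(energies, T=1.0)]
two_baths = [rates(energies, T=1.0), rates(energies, T=3.0)]

# One bath: the steady state is thermal equilibrium and the rate vanishes.
print(entropy_production_rate(relax(p0, one_bath), one_bath))
# Two baths at different temperatures: the rate stays positive in the steady state.
print(entropy_production_rate(relax(p0, two_baths), two_baths))
```

Each term of the sum has the form $(x - y)\ln(x/y)$, which is non-negative for positive $x$ and $y$, so the rate is non-negative for any probability vector with positive entries, not only along the trajectory.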

2.3.2. Quantum Case

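For a quantum system weakly coupled with multiple thermal baths, its dynamics can usually be described by the GKSL (Lindblad) equation [40, 41],

$$\partial_t \hat{\rho} = i[\hat{\rho}, \hat{H}_s] + \sum_\alpha \mathcal{L}_\alpha[\hat{\rho}], \tag{17}$$

where $\hat{\rho}$ is the system state and $\mathcal{L}_\alpha[\hat{\rho}]$ describes the dissipation due to bath-$\alpha$. Using the von Neumann entropy $S[\hat{\rho}] = -\mathrm{tr}[\hat{\rho} \ln \hat{\rho}]$ and the heat current $\dot{Q}_\alpha = \mathrm{tr}\{\hat{H}_s\, \mathcal{L}_\alpha[\hat{\rho}]\}$, the EPr (7) can be rewritten as the following Spohn formula [39, 42, 43, 44, 45, 46, 47]

$$\begin{aligned} R_{\mathrm{ep}} &= \frac{dS}{dt} - \sum_\alpha \frac{\dot{Q}_\alpha}{T_\alpha} \\ &= \sum_\alpha \mathrm{tr}\big\{ \big(\ln \hat{\rho}^{(\alpha)}_{ss} - \ln \hat{\rho}\big)\, \mathcal{L}_\alpha[\hat{\rho}] \big\}. \end{aligned} \tag{18}$$

Here $\hat{\rho}^{(\alpha)}_{ss}$ satisfies $\mathcal{L}_\alpha[\hat{\rho}^{(\alpha)}_{ss}] = 0$, and we call it the partial steady state associated with bath-$\alpha$. If the system only interacts with bath-$\alpha$, then $\hat{\rho}^{(\alpha)}_{ss}$ should be its steady state when $t \to \infty$. Clearly, $\hat{\rho}^{(\alpha)}_{ss}$ should be the thermal state ($\hat{\rho}^{(\alpha)}_{ss} \propto \exp[-\hat{H}_s/T_\alpha]$) when bath-$\alpha$ is the canonical thermal one with temperature $T_\alpha$, and the term $\mathrm{tr}\{\mathcal{L}_\alpha[\hat{\rho}] \ln \hat{\rho}^{(\alpha)}_{ss}\} = -\dot{Q}_\alpha/T_\alpha$ is the corresponding exchange of thermal entropy.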

The positivity of $R_{\mathrm{ep}}$ is not as obvious as in the classical case (16), but we can still prove $R_{\mathrm{ep}} \ge 0$ if the master Equation (17) has the standard GKSL form (see the proof in the Appendix of Reference [16] or References [42, 43]). The GKSL form of the master Equation (17) indicates that it describes a Markovian process [40, 41, 48], similar to the above classical case. Again this is consistent with the above discussions about the second law statement.

2.3.3. Remark

In the above discussions, we focused on the case where the baths are canonical thermal ones. As a result, in the above master equations, the transition rate ratios (14) appear as Boltzmann factors, and the partial steady states $\hat{\rho}^{(\alpha)}_{ss}$ of the system are canonical thermal states. Strictly speaking, only for canonical thermal baths is the temperature $T$ well defined, so that the thermal entropy $\text{đ}Q/T$ can be applied, together with the above EPr (7), which is the starting point for deriving the master equation representations (Equations (16) and (18)).

If the baths are in non-thermal states, there is no well-defined temperature, thus the above EPr of standard thermodynamics in Section 2.1, especially the thermal entropy $\text{đ}Q/T$, does not apply. But master equations can still be used to study the dynamics of such systems. Due to the interaction with non-thermal baths, the transition rate ratios (14) need not be Boltzmann factors, but we can verify that the last line of Equation (16) still remains non-negative. Thus Equation (16) can be regarded as a generalized EPr beyond standard thermodynamics; however, its physical meaning, as well as its relation with the non-thermal bath, is then unclear.

The quantum case is similar. If the master equation (17) comes from non-thermal baths, the partial steady state $\hat{\rho}^{(\alpha)}_{ss}$ would not be the thermal state with the temperature of bath-$\alpha$, but the quantity $\sum_\alpha \mathrm{tr}\{(\ln \hat{\rho}^{(\alpha)}_{ss} - \ln \hat{\rho})\, \mathcal{L}_\alpha[\hat{\rho}]\}$ (last line of Equation (18)) could still remain non-negative [16, 42, 43]. However, in this case the physical meaning of the Spohn formula (18) is not clear.

In the following example of an open quantum system interacting with non-thermal baths, we will show that, although it is beyond the applicable scope of standard thermodynamics, the Spohn formula (18) is still equal to the production rate of the system-bath correlation, as in the thermal bath case of Section 2.2, and the term $\mathrm{tr}\{\mathcal{L}_\alpha[\hat{\rho}] \ln \hat{\rho}^{(\alpha)}_{ss}\}$ is equal to the informational entropy change of bath-$\alpha$.

2.4. Contacting with Squeezed Thermal Baths

When the heat baths in contact with the system are not canonical thermal ones, it is possible to construct a heat engine that “seemingly” works beyond the Carnot bound. For example, in an optical cavity, a collection of atoms with non-vanishing quantum coherence can be used to generate light force to do mechanical work by pushing the cavity wall [49]; a squeezed light field can be used as the reservoir for a harmonic oscillator which expands and compresses as a heat engine [50]. In these studies, it seems that the efficiency of the heat engine could be higher than the Carnot bound $\eta_{\mathrm{C}} = 1 - T_c/T_h$. However, since the baths are not canonical thermal ones, the parameter $T$ can no longer be regarded as a well-defined temperature. As we have emphasized, such systems are not within the applicable scope of standard thermodynamics, and therefore they do not need to obey the second law inequalities that are based on canonical thermal baths [51].

In this non-thermal bath case, the thermal entropy

does not apply, but the information entropy is still well defined. Now we study the system-bath mutual information when the baths are non-thermal states [

16]. We consider an example of a single mode boson (

) which is linearly coupled with multiple squeezed thermal baths (

and

), and they interact through

. The initial states of the baths are squeezed thermal ones,

where

is the squeezing operator for bath-

. Below we will use the master equation to calculate the Spohn formula (

18), and compare it with the result by directly calculating the bath entropy change. We will see, in this non-thermal case, the Spohn formula (

18) is still equal to the increasing rate of the correlation between the system and the squeezed baths.

2.4.1. Master Equation

We first look at the dynamics of the open system alone. The total

s + b system follows the von Neumann equation

. Based on it, after the Born-Markovian approximation [

38,

52], we can derive a master equation

for the open system

(interaction picture), where (see the detailed derivation in Reference [

16])

Here

,

. The parameters

and

take values from

in Equation (

19) when

, and

is the Planck function. The decay factor

is defined from the coupling spectrums

and

. In addition, we have omitted the phase of

, thus

. From this master equation, we obtain

Here

is in the interaction picture,

, and

is in the Schrödinger picture.

For this master equation, the partial steady state

associated with bath-

, which satisfies

, is a squeezed thermal one,

where

is the squeezing operator. Now we can put this result into the Spohn formula (

18), then the term

gives

When there is no squeezing (

), this equation exactly returns to the thermal bath result

(see Equation (

21)), which is the exchange of the thermal entropy. However, due to the quantum squeezing in the bath, clearly this

term is no longer the thermal entropy, and now it looks too complicated to tell its physical meaning. Below, we are going to show that here this

term is just the informational entropy changing of bath-

.

2.4.2. Bath Entropy Dynamics

Now we calculate the entropy change

of bath-

directly by adopting the similar approximation as Equation (

9), and that gives

Unlike the thermal baths case in Equation (

9), here it is not easy to see how the bath entropy dynamics

is related to the system dynamics. However, notice that

is simply determined by the time derivative of the bath operator expectations like

and

, which can be further calculated by Heisenberg equations. After certain Markovian approximation, in the weak coupling limit (

), we can prove the following relation (see the detailed proof in Reference [

16]),

where

and

are the summation weights associated with the bath mode

.

These two relations connect the dynamics of bath-$\alpha$ (left sides) with that of the open system (right sides). For example, let

, then the above relation becomes

, which is just the heat emission-absorption relation

, and we have utilized it in the discussion below Equation (

9). To calculate the above entropy change Equation (

24) for the squeezed thermal bath, let

,

, then we obtain

This result is exactly equal to the term

in the above Spohn formula (see Equation (

23)). Therefore, in this non-thermal bath case, the changing rate of the system-bath mutual information is just equal to the Spohn formula (

18),

thus its positivity is still guaranteed [

16].

That is, although the non-thermal baths are beyond the applicable scope of standard thermodynamics, namely, $dS_e = \text{đ}Q/T$ does not apply, the system-bath correlation $I_{sb}$ still keeps increasing monotonically as in the thermal bath case (Section 2.2). Therefore, this system-bath correlation production may be a generalization of the irreversible entropy production which also applies to non-thermal cases. Tracing back to the original consideration of the irreversible entropy change (Equation (3)), it turns out that the term $-dS_e$ can also be regarded as the informational entropy change of the bath, and it gives the relation $dS_e = \text{đ}Q/T$ in the special case of a canonical thermal bath.

2.5. Discussions

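Historically, the Spohn formula was first introduced by considering the distance between the system state $\hat{\rho}(t)$ and its final steady state $\hat{\rho}_{ss}$, which is measured by their relative entropy $S[\hat{\rho}(t) \| \hat{\rho}_{ss}] := \mathrm{tr}[\hat{\rho} \ln \hat{\rho} - \hat{\rho} \ln \hat{\rho}_{ss}]$ (Spohn [42]). When $t \to \infty$, $\hat{\rho}(t) \to \hat{\rho}_{ss}$, and this distance decreases to zero. Thus, for a Markovian master equation $\dot{\hat{\rho}} = \mathcal{L}[\hat{\rho}]$, the EPr is defined from the time derivative of this distance, i.e.,

$$\sigma := -\frac{d}{dt} S[\hat{\rho}(t) \| \hat{\rho}_{ss}] = \mathrm{tr}\big\{ \big(\ln \hat{\rho}_{ss} - \ln \hat{\rho}\big)\, \mathcal{L}[\hat{\rho}] \big\}. \tag{28}$$

Therefore, this EPr-$\sigma$ serves as a Lyapunov index for the master equation. It was proved that $\sigma \ge 0$, and $\sigma = 0$ when $\hat{\rho} = \hat{\rho}_{ss}$. When there is only one thermal bath, this EPr-$\sigma$ returns to the thermodynamics result, $\sigma = \dot{S} - \dot{Q}/T$.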
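The Lyapunov-like role of the relative entropy can be illustrated with a classical analogue. The sketch below is not Spohn's quantum construction: it uses a hypothetical three-state rate matrix `W` (with `W[n][m]` the rate from state `m` to state `n`), explicit Euler steps of a classical Markovian master equation, and the classical relative entropy $D(p \| p_{ss}) = \sum_n p_n \ln(p_n / p_{ss,n})$ as the distance to the steady state.

```python
import math


def euler_step(p, W, dt):
    """One Euler step of dp_n/dt = sum_m (W[n][m] p_m - W[m][n] p_n)."""
    N = len(p)
    return [
        p[n] + dt * sum(W[n][m] * p[m] - W[m][n] * p[n] for m in range(N) if m != n)
        for n in range(N)
    ]


def relative_entropy(p, q):
    """Classical relative entropy D(p || q) = sum_n p_n ln(p_n / q_n)."""
    return sum(pn * math.log(pn / qn) for pn, qn in zip(p, q) if pn > 0.0)


# Hypothetical rate matrix (no detailed balance is assumed).
W = [[0.0, 1.0, 0.5],
     [0.3, 0.0, 1.0],
     [0.1, 0.4, 0.0]]
dt = 0.01

# Approximate the steady state by relaxing an arbitrary distribution for a long time.
p_ss = [1 / 3, 1 / 3, 1 / 3]
for _ in range(20000):
    p_ss = euler_step(p_ss, W, dt)

# Track the distance to the steady state along a trajectory.
p = [0.05, 0.05, 0.9]
distances = []
for _ in range(500):
    distances.append(relative_entropy(p, p_ss))
    p = euler_step(p, W, dt)

print(distances[0], distances[-1])
print(all(later <= earlier + 1e-14 for earlier, later in zip(distances, distances[1:])))
```

For a small enough time step each Euler step is a stochastic map that leaves the steady state fixed, so the relative entropy cannot increase; this mirrors the statement that the time derivative of the distance has a definite sign for Markovian dynamics.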

However, when the open system is in contact with multiple heat baths as described by the master Equation (17), denoting the full generator of Equation (17) by $\mathcal{L}$, the above relative-entropy EPr-$\sigma$ still goes to zero when $t \to \infty$. Thus it cannot distinguish between reaching the equilibrium state and reaching a stationary non-equilibrium state (see the example of Equation (6)). Later (Spohn, Lebowitz [43]), this EPr-$\sigma$ was generalized to the form of Equations (7) and (18), and its positivity can be proved by a procedure similar to that given in the previous study (Spohn [42]).

In standard thermodynamics, the second law requires a monotonic increase of the irreversible entropy at all times, not merely an increase compared with the initial state. Notice that in the proof of the positivity of the EPr, Markovianity is necessary in both the classical and quantum cases. If the master equation of the open system is non-Markovian, there may exist certain periods where the EPr is negative, $R_{\mathrm{ep}} < 0$, which means a decrease of the irreversible entropy (or the system-bath correlation). When comparing the EPr with standard thermodynamics, a coarse-grained time scale is more appropriate (which usually means a Markovian process), thus $R_{\mathrm{ep}} < 0$ may be acceptable if it appears only on short time scales.

In the above discussions, the most important part is clearly how to calculate the bath entropy dynamics directly. This is usually quite difficult since the bath contains an infinite number of DoF. The above calculation is possible mainly thanks to the approximation that the logarithm of the bath state stays close to its initial value, $\ln \hat{\rho}_\alpha(t) \approx \ln \hat{\rho}_\alpha(0)$. The results derived from it are consistent with the previous conclusions in thermodynamics, but the validity of this approximation still needs more examination.

There are a few exactly solvable open-system models that allow this examination. In Reference [23], the bath entropy dynamics was calculated for a two-level system (TLS) dispersively coupled with a squeezed thermal bath. In this problem, the density matrix evolution of each bath mode can be solved exactly. The state of each bath mode is a probabilistic mixture of two displaced Gaussian states, which keep separating and recombining periodically in phase space. Thus the exact entropy dynamics can be calculated and compared with the result based on the above approximation.

It turns out that the above approximation fits the exact result quite well in the high temperature area; in the low temperature area, the approximate result diverges as $T \to 0$, whereas the exact result remains finite. This is because, in the high temperature area, the state of each bath mode is well approximated by a single Gaussian state when the separation of the two displaced Gaussian components is small; in the low temperature area, the uncertainty of the bath-mode state comes mainly from the mixing probabilities rather than from the entropy of the Gaussian components. Namely, in the low temperature area, the influence of the system on the bath is bigger, especially for nonlinear systems like the TLS. If the bath states cannot be well treated as Gaussian ones, the above approximation is questionable, and how to calculate the bath entropy in this case remains an open problem.

3. The Entropy in the Ideal Gas Diffusion

In the above discussions about the correlation production between the open system and its environment, we used an important condition, i.e., the whole s + b system is an isolated system, thus its entropy does not change with time. In the quantum case, the whole s + b system follows the von Neumann equation $\partial_t \hat{\rho}_{sb} = i[\hat{\rho}_{sb}, \hat{H}_{sb}]$, thus the von Neumann entropy does not change during the unitary evolution. Likewise, in the classical case, the whole system follows the Liouville equation $\partial_t \rho_{sb} = -\{\rho_{sb}, H_{sb}\}$, thus the Gibbs entropy stays constant.

However, this is still quite counter-intuitive compared with our experience of macroscopic irreversibility. For example, in the diffusion of an ideal gas mentioned at the very beginning (Figure 1), although there are no particle interactions and the dynamics of the whole system is fully predictable, we still see that the diffusion proceeds irreversibly and that the gas finally occupies the whole volume uniformly.

In this section, we will show that this puzzle can also be understood in terms of correlation production. In open systems, we have seen that it is the system-bath correlation that increases, while the total s + b entropy does not change. In an isolated system, there is no partition into “system” and “bath”, but we will see that it is the correlation between different DoF, e.g., position-momentum and particle-particle, that increases monotonically, while the total entropy does not change [4, 27].

3.1. Liouville Dynamics of the Ideal Gas Diffusion

Here we make a full calculation of the phase-space evolution of the above ideal gas diffusion process in classical physics, so as to examine the dynamical behavior of the microstate, as well as its entropy.

Since there is no interaction between particles, the dynamics of the $3N$ DoF are independent of each other. Assuming there is no initial correlation between different DoF, the total $N$-particle microstate PDF can always be written in a product form, i.e., $\rho(\vec{P}, \vec{Q}, t) = \prod_i \varrho(p_i, x_i, t)$ for $i = 1, \dots, 3N$, thus this problem can be reduced to studying the PDF of a single DoF $\varrho(p, x, t)$. Correspondingly, the Liouville equation is

$$\partial_t \varrho = -\{\varrho, H\} = -\frac{p}{m}\, \partial_x \varrho, \tag{29}$$

where $H = p^2/2m$ is the single DoF Hamiltonian.

This equation is exactly solvable, and the general solution is

. The detailed form of the function

should be further determined by the initial and boundary conditions. We assume initially the system starts from an equilibrium state confined in the area

, namely

Here

is the MB distribution, with

as the average kinetic energy, and

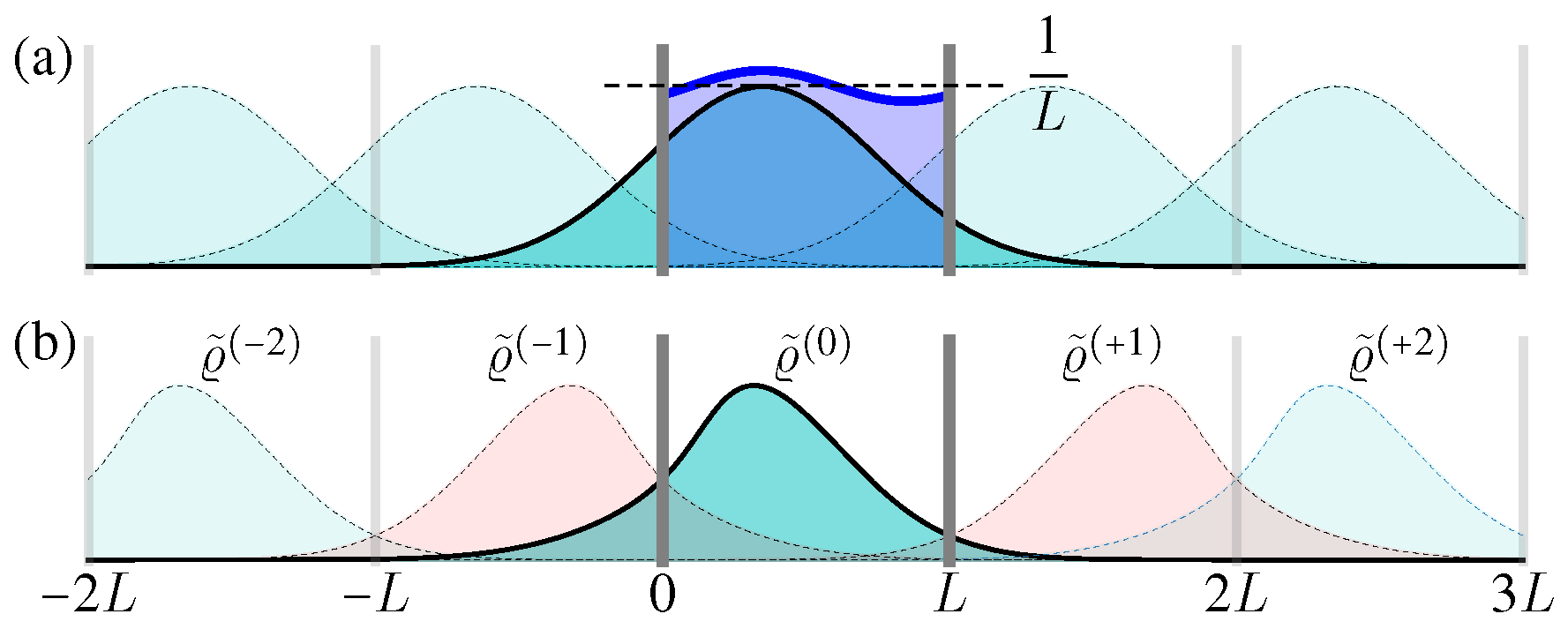

is a normalization factor.

(x) is the initial spatial distribution (

Figure 3a)

Such a product form of

indicates the spatial and momentum distributions have no correlations a priori.

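For diffusion in free space, $x \in (-\infty, \infty)$, the time-dependent solution is

$$\varrho(x, p, t) = \Pi\!\left(x - \frac{p}{m}t\right)\Lambda(p), \tag{32}$$

where $\Pi(x)$ and $\Lambda(p)$ are the initial spatial and momentum distributions. This solution satisfies both the Liouville Equation (29) and the initial condition (30).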

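For a confined area of length $L$ with the periodic boundary condition $\varrho(x + L, p, t) = \varrho(x, p, t)$, the solution can be constructed with the help of the above free-space one, i.e.,

$$\varrho(x, p, t) = \sum_{n=-\infty}^{\infty} \varrho^{(n)}(x, p, t), \qquad \varrho^{(n)}(x, p, t) := \varrho_{\mathrm{free}}(x + nL,\, p,\, t), \tag{33}$$

where $\varrho_{\mathrm{free}}$ denotes the free-space solution (32). Here $\varrho^{(n)}$ can be regarded as the periodic “image” solution shifted by $n$ box lengths (Figure 4a) [35]. Clearly, Equation (33) satisfies the periodic boundary condition as well as the initial condition (30), and it is simple to verify that each summation term satisfies the Liouville Equation (29); thus Equation (33) describes the full microstate PDF evolution in the confined area with periodic boundary condition.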
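As a minimal numerical sketch of this image-sum construction, the Python snippet below evaluates the periodic solution on a box. It assumes, purely for illustration, a box $[0, L)$, a uniform initial profile on $[a, b)$ and unit mass and thermal momentum; the function names are hypothetical and not taken from the paper. Because the initial profile is supported inside a single period, at most one image contributes at each point, so the sum over images reduces to wrapping the back-propagated position with a modulo.

```python
import numpy as np

def box_profile(x, a, b):
    """Uniform initial spatial distribution on [a, b), zero elsewhere (assumed form)."""
    return np.where((x >= a) & (x < b), 1.0 / (b - a), 0.0)

def maxwell(p, p_T):
    """Gaussian (MB) momentum distribution with thermal momentum p_T."""
    return np.exp(-p**2 / (2 * p_T**2)) / (np.sqrt(2 * np.pi) * p_T)

def rho_periodic(x, p, t, L=1.0, a=0.0, b=0.25, m=1.0, p_T=1.0):
    """Periodic solution: free streaming x -> x - p t / m, wrapped into [0, L).

    The wrap is equivalent to summing the free-space solution over all
    images shifted by n * L, since only one image is nonzero at each point.
    """
    x_back = np.mod(x - p * t / m, L)
    return box_profile(x_back, a, b) * maxwell(p, p_T)

# Integrating over x at fixed p should return the MB weight at any time,
# i.e. the momentum marginal is unchanged by the periodic dynamics.
xs = (np.arange(4000) + 0.5) / 4000
for t in (0.0, 0.3, 2.0, 15.0):
    print(t, rho_periodic(xs, 0.7, t).mean(), maxwell(0.7, 1.0))
```

The printed marginal stays equal to the MB weight (up to grid error), while the density itself becomes a striped function of $(x, p)$.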

From these exact solutions (32) and (33), it is clear that the microstate PDF $\varrho(x, p, t)$ can no longer keep a separable form like $P_x(x, t) \times P_p(p, t)$ once the diffusion starts. Thus it is not evolving towards any equilibrium state, since an equilibrium state must have a separable form similar to the initial condition (30) (see also Figure 3a).

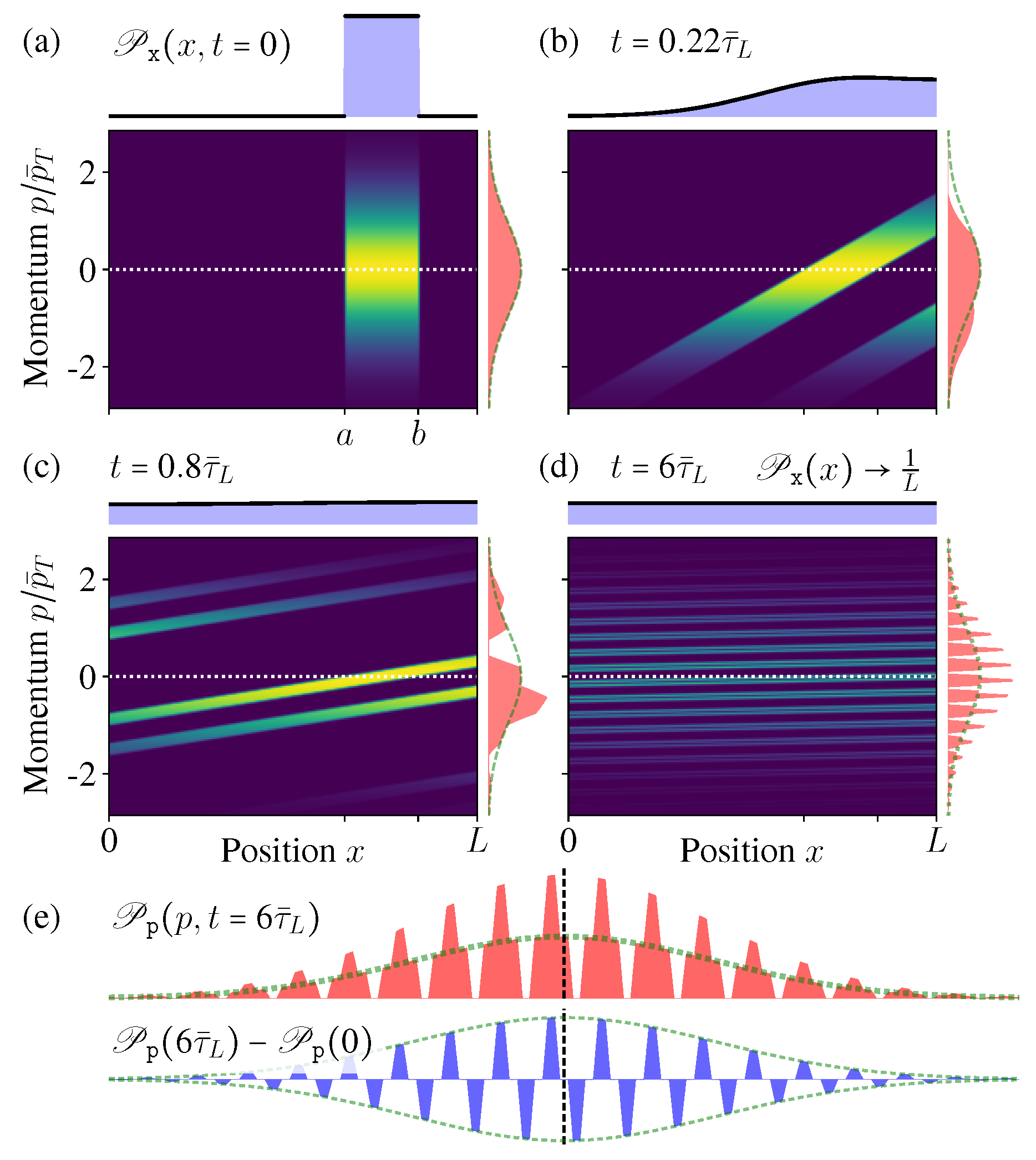

In

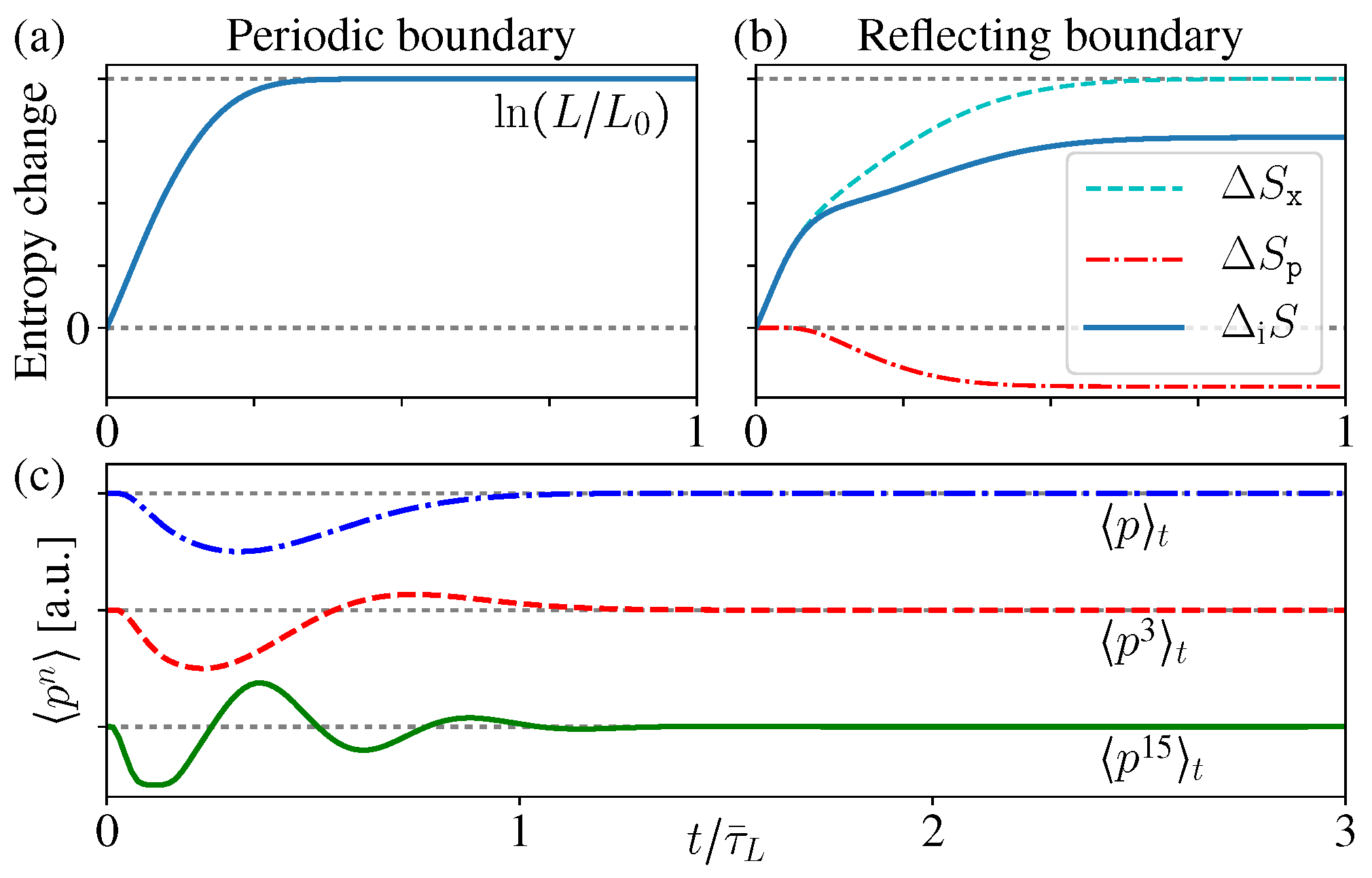

Figure 3 we show the microstate PDF

at different times. As the time increases, the “stripe” in

Figure 3a becomes more and more inclined; once exceeding the boundary, it winds back from the other side due to the periodic boundary condition and generates a new “stripe” (

Figure 3c). After very long time, more and more stripes appear, much denser and thinner, but they would never occupy the whole phase space continuously (

Figure 3d,e).

Figure 3e shows the conditional PDF of the momentum at a fixed position (the vertical dashed line in Figure 3d). In the limit $t \to \infty$, it becomes an exotic function that is discontinuous everywhere, not the MB distribution. All these features indicate that, during this diffusion process of the isolated ideal gas, the ensemble is not evolving towards the new equilibrium state as expected from macroscopic intuition. Even after long-time relaxation, the microstate PDF $\varrho(x, p, t)$ does not approach the equilibrium state.

3.2. Spatial and Momentum Distributions

Even after long-time relaxation, the ideal gas does not reach the new equilibrium state. This result looks counter-intuitive, since we can clearly see the particles spread uniformly all over the box after a long enough relaxation time. However, we must notice that “spreading all over the box uniformly” implicitly refers to the position distribution $P_x(x, t)$ alone, not to the whole ensemble state $\varrho(x, p, t)$. As a marginal distribution of $\varrho(x, p, t)$, the spatial distribution $P_x(x, t) = \int dp\, \varrho(x, p, t)$ does approach the new uniform one as its steady state (Figure 3d), and we now show that this is indeed true for any initial state $P_x(x, 0)$ [4,27].

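We first consider the case where the initial spatial distribution is a $\delta$-function concentrated at $x_0$, $P_x(x, 0) = \delta(x - x_0)$. Since we have obtained the analytical results (32) and (33) for the ensemble evolution $\varrho(x, p, t)$, the spatial distribution $P_x(x, t)$ emerges as its marginal distribution by averaging over the momentum:

$$P_x(x, t) = \int_{-\infty}^{\infty} dp\, \varrho(x, p, t) = \sum_{n=-\infty}^{\infty} \frac{1}{\sqrt{2\pi}\,\sigma_t} \exp\!\left[-\frac{(x - x_0 + nL)^2}{2\sigma_t^2}\right],$$

where $\sigma_t = \bar p_T\, t/m$, with $\bar p_T = \sqrt{m k_B T}$ the thermal momentum and $L$ the box length. As time $t$ increases, these Gaussian terms become wider and lower (Figure 4a). Therefore, when $t \to \infty$, the spatial distribution $P_x(x, t)$ always approaches the uniform distribution in the box.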
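The flattening of the Gaussian image sum can be checked numerically. The following Python sketch assumes a box $[0, L)$ with $L = 1$, unit mass and thermal momentum, and a truncated image sum; all names and parameter values are illustrative choices rather than the paper's. It prints the largest deviation of the spatial density from the uniform value $1/L$ at a few times.

```python
import numpy as np

def spatial_density(x, t, x0=0.3, L=1.0, m=1.0, p_T=1.0, n_images=200):
    """Wrapped-Gaussian spatial density for a delta-function start at x0."""
    sigma = p_T * t / m                       # width grows linearly in time
    n = np.arange(-n_images, n_images + 1)    # truncated sum over images
    shifted = x[:, None] - x0 + n[None, :] * L
    gauss = np.exp(-shifted**2 / (2 * sigma**2)) / (np.sqrt(2 * np.pi) * sigma)
    return gauss.sum(axis=1)

x = np.linspace(0.0, 1.0, 200, endpoint=False)
times = (0.05, 0.2, 1.0)
deviation = [np.max(np.abs(spatial_density(x, t) - 1.0)) for t in times]
print(deviation)  # shrinks towards 0 as the density approaches 1/L
```

The deviation drops very quickly once $\sigma_t$ becomes comparable to the box length, which is the sense in which the marginal spatial distribution relaxes even though the full phase-space density does not.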

Any initial spatial distribution can be regarded as a combination of $\delta$-functions, i.e., $P_x(x, 0) = \int dx_0\, P_x(x_0, 0)\, \delta(x - x_0)$. Therefore, for any initial $P_x(x, 0)$, the spatial distribution always approaches the uniform one as its steady state. In this sense, although the underlying Liouville dynamics obeys time-reversal symmetry, the “irreversible” diffusion comes into our sight.

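On the other hand, the momentum distribution $P_p(p, t)$ never changes with time and always maintains its initial form, which can be proved by simply changing the integral variable (the sum over images turns the integral over the box into an integral over the whole line):

$$P_p(p, t) = \int_{\mathrm{box}} dx\, \varrho(x, p, t) = \int_{-\infty}^{\infty} dx\, \varrho\!\left(x - \frac{p}{m}t,\, p,\, 0\right) = \int_{-\infty}^{\infty} dx'\, \varrho(x', p, 0) = P_p(p, 0).$$

This is because, under the periodic boundary condition, the particles always move freely. If the particles are reflected at the boundaries, the momentum distribution also changes with time.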

3.3. Reflecting Boundary Condition

Now we consider the reflecting boundary condition. In this case, when the particles hit the boundaries of the box, their positions do not change, but their momentum suddenly changes from $p$ to $-p$. Correspondingly, the analytical result for the ensemble evolution can be obtained by summing up the “reflection images” (Figure 4b), i.e.,

Here

, and

means making a mirror reflection to the function

along the axis

. Clearly, each summation term

can be regarded as shifted from

or its mirror reflection, thus they all satisfy the differential relation in the Liouville Equation (

29).

To verify the boundary condition, consider a diffusing distribution in

, initially described by

. As the time increases, it diffuses wider and even exceeds the box range

. The exceeded part should be reflected at the boundaries

as the next order

, and added back to the total result

. This procedure should be done iteratively, namely, when the term

exceeds the boundaries, it generates the reflected term

as the next summation order (

Figure 4b).

Therefore, this result is quite similar to the periodic case above, except that a reflection must be applied to certain summation terms. For the same reason as above, each summation term becomes flatter and flatter during the diffusion, so the spatial distribution $P_x(x, t)$ always approaches the new uniform one as its steady state [4].
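The reflection-image construction has a simple particle-level counterpart: let each particle stream freely on an unfolded line and then fold the position back with period $2L$, flipping the momentum on the mirrored half. The Python sketch below is a Monte Carlo illustration under assumed conventions (walls at $x = 0$ and $x = L$, an initial uniform distribution on $[0, L/4]$, unit mass and thermal momentum); the function name `reflect_fold` is hypothetical.

```python
import numpy as np

def reflect_fold(x0, p0, t, L=1.0, m=1.0):
    """Free flight with specular reflection at x = 0 and x = L.

    The trajectory is unfolded onto the real line, then folded back with
    period 2L; points on the mirrored half carry the reversed momentum.
    """
    y = np.mod(x0 + p0 * t / m, 2 * L)
    flipped = y > L
    x = np.where(flipped, 2 * L - y, y)
    p = np.where(flipped, -p0, p0)
    return x, p

rng = np.random.default_rng(0)
x0 = rng.uniform(0.0, 0.25, 200_000)      # initially confined to a quarter of the box
p0 = rng.normal(0.0, 1.0, 200_000)        # MB momenta with unit thermal momentum
x, p = reflect_fold(x0, p0, t=50.0)

hist, _ = np.histogram(x, bins=10, range=(0.0, 1.0))
print(hist / x.size)                       # each bin holds close to 10% of the particles
print(np.mean(p0**2), np.mean(p**2))       # even moments are untouched by reflections
```

Since reflections only flip the sign of $p$, every even moment is preserved exactly for each particle, while the sign pattern of $p$ is what makes the momentum distribution time dependent.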

The ensemble evolution is shown in Figure 5a–d, which is quite similar to the periodic case above. Again, $\varrho(x, p, t)$ is not evolving towards the equilibrium state. A significant difference is that the momentum distribution $P_p(p, t)$ now varies with time. This is because collisions at the boundaries change the momentum direction, so $p$ is no longer conserved, although the kinetic energy $p^2/2m$ (and hence the momentum magnitude) does not change.

Notice that the reflection “moves” the probability of momentum $p$ to the area of $-p$; therefore, some “areas” of $P_p(p, t)$ are “cut” off from the initial MB distribution and “added” to their mirror positions about $p = 0$ (especially Figure 5c,d). Thus $P_p(p, t)$ differs from the initial MB distribution.

Since the reflection transfers the probability of $p$ to its mirror position $-p$, the difference $P_p(p, t) - P_p(p, 0)$ is always an odd function of $p$ (lower blue in Figure 5e). As a result, the even moments $\langle p^{2n} \rangle$ of $P_p(p, t)$ are the same as those of the MB distribution, but the odd ones $\langle p^{2n+1} \rangle$ are changed.

As time increases, more and more “stripes” appear in $\varrho(x, p, t)$, much thinner and denser. As a result, when calculating the odd moments $\langle p^{2n+1} \rangle$, the positive and negative contributions from neighboring stripes in the difference $P_p(p, t) - P_p(p, 0)$ (which have similar $p$ values) tend to cancel each other (lower blue in Figure 5e). Therefore, in the limit $t \to \infty$, the odd moments $\langle p^{2n+1} \rangle$ also approach the values of the original MB distribution (zero) (Figure 6c).

Therefore, in the long-time limit, $P_p(p, t)$ approaches an exotic function that is discontinuous everywhere and differs from the initial MB distribution, but all of its moments $\langle p^n \rangle$ have the same values as those of the initial MB distribution [53,54]. In usual experiments, it is practically difficult to tell these two distributions apart [9].

3.4. Correlation Entropy

From the above exact results for the ensemble evolution, we have seen that the macroscopic appearance of the new uniform distribution concerns only the spatial distribution, which reflects just the marginal information of the whole state $\varrho(x, p, t)$. Thus this macroscopic appearance is not enough to conclude whether $\varrho(x, p, t)$ is approaching the new equilibrium state with an entropy increase.

However, in practical experiments, the full joint distribution $\varrho(x, p, t)$ is difficult to measure directly. Usually it is the spatial and momentum distributions $P_x(x, t)$ and $P_p(p, t)$ that are directly accessible to measurement, e.g., by measuring the gas density and pressure. Therefore, based on these two marginal distributions, we may “infer” the microstate PDF as [7,25,55]

$$\tilde\varrho_{\mathrm{inf}}(x, p, t) := P_x(x, t) \times P_p(p, t),$$

which neglects the correlation between these two marginal distributions. As a result, in the long-time limit, $P_x(x, t)$ approaches the new uniform distribution, while $P_p(p, t)$ “behaves” similarly to the initial MB distribution, so this inferred state $\tilde\varrho_{\mathrm{inf}}(x, p, t)$ just looks like a new “equilibrium state”.

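The entropy change of this inferred state is

$$\Delta_i S := S[\tilde\varrho_{\mathrm{inf}}(t)] - S[\tilde\varrho_{\mathrm{inf}}(0)] = \big[S_x(t) + S_p(t) - S_{xp}(t)\big] - \big[S_x(0) + S_p(0) - S_{xp}(0)\big], \tag{38}$$

where $S_{xp}(t) = S_{xp}(0)$ is guaranteed by the Liouville dynamics, and

$$S_x(t) = -\int dx\, P_x \ln P_x, \qquad S_p(t) = -\int dp\, P_p \ln P_p, \qquad S_{xp}(t) = -\int dx\, dp\, \varrho \ln \varrho.$$

Notice that the term $S_x + S_p - S_{xp}$ in Equation (38) is just the mutual information between the marginal distributions $P_x(x, t)$ and $P_p(p, t)$, which is the measure of their correlation [36,56] (see the discussion about the entropy for continuous PDF in Appendix A).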

Therefore, here $\Delta_i S$ just describes the correlation increase between the spatial and momentum distributions. During the diffusion process, this correlation entropy $\Delta_i S$ increases monotonically for both the periodic and reflecting boundary cases (Figure 6a,b). Notice that this is quite similar to the above discussion of open systems: the total entropy does not change, while the correlation entropy increases “irreversibly” [13,14,15,16,23,24,25,29,30,31].
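To illustrate how the position-momentum mutual information grows while the dynamics stays reversible, the Python sketch below estimates it from a two-dimensional histogram of sampled particles in the periodic case. Everything here is an assumption made for the example: the box $[0, 1)$, the initial interval $[0, 0.25)$, unit mass and thermal momentum, the bin counts, and the simple plug-in estimator. Because the histogram has finite bins, the estimate is only meaningful at early times, while the stripes are still wider than a bin.

```python
import numpy as np

def mutual_information(x, p, L=1.0, p_max=4.0, bins=(64, 128)):
    """Plug-in (histogram) estimate of the x-p mutual information in nats."""
    joint, _, _ = np.histogram2d(x, p, bins=bins,
                                 range=[[0.0, L], [-p_max, p_max]])
    joint /= joint.sum()
    px = joint.sum(axis=1, keepdims=True)   # spatial marginal
    pp = joint.sum(axis=0, keepdims=True)   # momentum marginal
    mask = joint > 0
    return float(np.sum(joint[mask] * np.log(joint[mask] / (px @ pp)[mask])))

rng = np.random.default_rng(1)
n = 400_000
x0 = rng.uniform(0.0, 0.25, n)              # uncorrelated product state at t = 0
p0 = rng.normal(0.0, 1.0, n)

times = [0.0, 0.1, 0.2, 0.4]
mi = [mutual_information(np.mod(x0 + p0 * t, 1.0), p0) for t in times]
print(mi, np.log(4.0))                      # estimates rise towards ln(L / L0) = ln 4
```

At much later times the stripes become thinner than the bins and the histogram estimate falls again, which is a numerical counterpart of resolution-induced coarse-graining rather than a property of the exact mutual information.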

For the periodic boundary case, $P_p(p, t)$ does not change, and $P_x(x, t)$ approaches the uniform distribution after a long time, so the above entropy increase (38) gives $\Delta_i S = \ln(L/L_0)$, where $L$ is the box length and $L_0$ is the length of the initially occupied area. When considering the full N-particle state of the ideal gas, the corresponding inferred state is the product of the single-DoF inferred states over all DoF, so the entropy increase is $\Delta_i S = N \ln(V/V_0)$ (in units of $k_B$). Notice that this result exactly reproduces the thermodynamic entropy increase mentioned at the beginning (Figure 6a). These conclusions still hold in the thermodynamic limit, since the system sizes always appear in ratios, e.g., $V/V_0$.

For the reflecting boundary case, the spatial distribution still approaches the uniform one, which gives $\Delta S_x = \ln(L/L_0)$ (with $L$ the box length and $L_0$ the initially occupied length), but now the momentum entropy $S_p$ varies with time. Moreover, it is worth noticing that $S_p$ decreases with time (Figure 6b), which looks a little counter-intuitive. The reason is, as mentioned before, that the boundary reflections change the momentum directions and hence the distribution $P_p(p, t)$, while the average energy $\langle p^2 \rangle / 2m$ does not change; for a fixed average energy, the thermal distribution (which is the initial distribution) has the maximum entropy [20,55]. Therefore, during the evolution, the deviation of $P_p(p, t)$ from the initial MB distribution leads to a decrease of its entropy $S_p$. However, this is clearly quite difficult to detect in practice, and the total correlation entropy change $\Delta_i S$ still increases monotonically.

3.5. Resolution Induced Coarse-Graining

Historically, the problem of the constant entropy from the Liouville dynamics was first studied by Gibbs [3]. To understand why the entropy increases in standard thermodynamics, he noticed that the result depends on the order of limits taken when calculating the “ensemble volume” (the entropy). Usually, at a certain time $t$, the phase space is divided into many small cells of finite volume, the summation is taken over all these cells, and then the cell size is sent to zero. That gives the constant entropy. However, if we keep the cell size finite and let the time $t \to \infty$ first, and only then send the cell size to zero, the result is an increasing entropy.

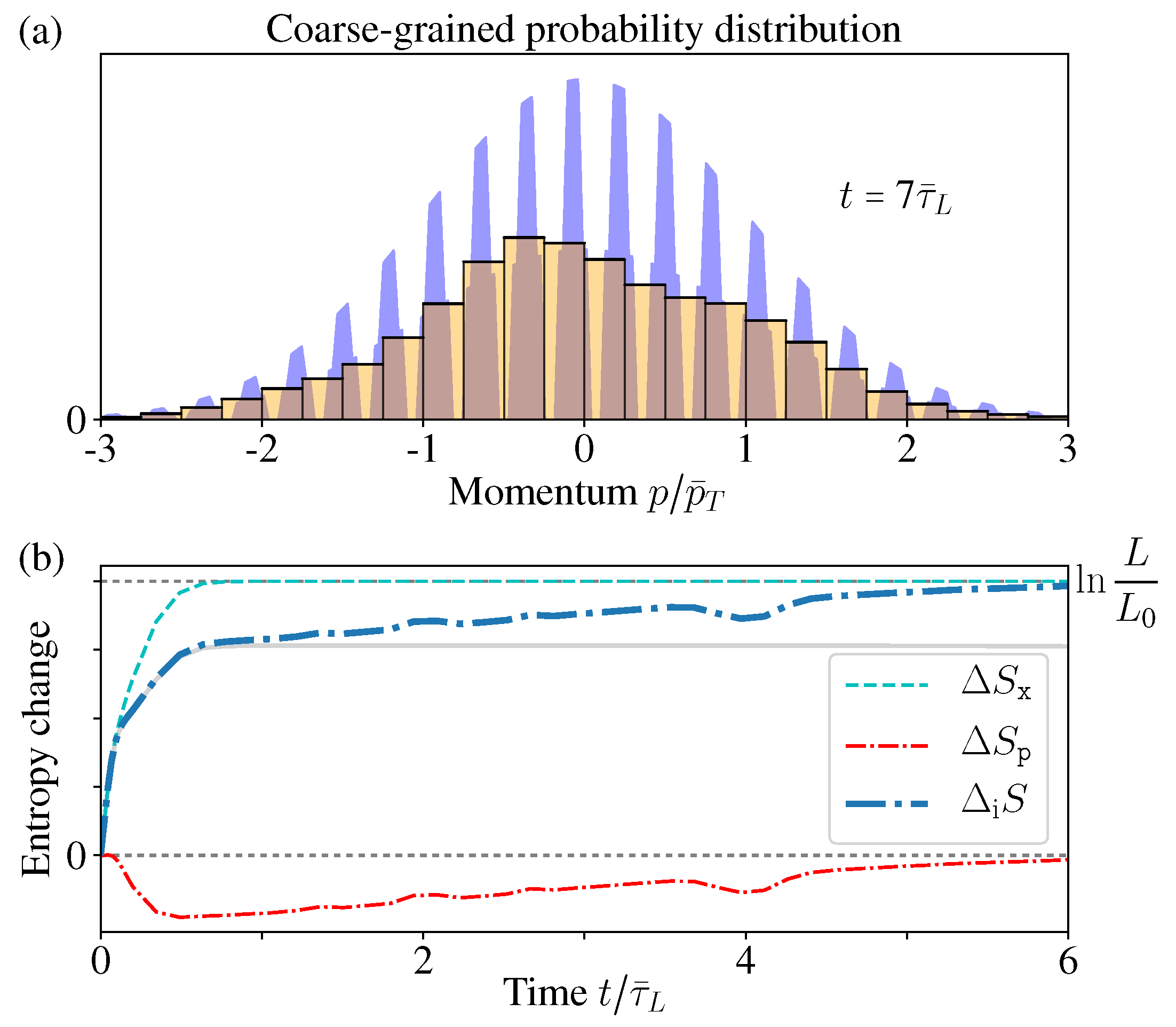

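This idea is now more specifically described as “coarse-graining” [3,4,8]. The “coarse-grained” ensemble state $\tilde\varrho_{\mathrm{c.g.}}(x, p)$ is obtained by taking the phase-space average of the exact one $\varrho(x, p)$ over a small volume around each point $(x, p)$, namely,

$$\tilde\varrho_{\mathrm{c.g.}}(x, p) = \frac{1}{|\Omega_{(x,p)}|} \int_{\Omega_{(x,p)}} dx'\, dp'\, \varrho(x', p').$$

Here $\Omega_{(x,p)}$ is the small volume around the point $(x, p)$ in the phase space. From Figure 3d and Figure 5d, we can see that after long-time relaxation, the coarse-grained $\tilde\varrho_{\mathrm{c.g.}}$ could approach the equilibrium state.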

In practical measurements, there always exists a finite resolution limit, which can be regarded as the physical origin of coarse-graining. When measuring a continuous PDF $P(x)$, we should first divide the continuous range of $x$ into $N$ intervals, and then measure the probability that appears in the interval between $x_n$ and $x_n + \Delta x$, which is denoted as $P_n$. In the limit $\Delta x \to 0$, the histogram $P_n / \Delta x$ becomes the continuous probability density (see also Appendix A).

Here $\Delta x$ is determined by the measurement resolution, but practical measurements always have a finite resolution limit, so $\Delta x$ cannot approach 0 arbitrarily. Therefore, the fine structure of the continuous PDF within the minimum resolution interval cannot be sensed in practice. However, $P(x)$ is usually assumed to be a smooth function within this small interval, so it is effectively coarse-grained by its average value in this small region, and the resolution limit practically determines the coarse-graining size.

Remember that in the reflecting boundary case, the momentum distribution $P_p(p, t)$ approaches an exotic function with a dense comb structure that is discontinuous everywhere. Such an exotic structure within the resolution limit cannot be observed in practice. For a fixed measurement resolution $\delta_p$, there always exists a certain time $t_\delta$ such that, after $t_\delta$, the “comb teeth” in Figure 7a are finer than the resolution $\delta_p$. In addition, when $t \gg t_\delta$, the coarse-grained distribution $\tilde P_p(p, t)$ (with coarse-graining size $\delta_p$) approaches the thermal distribution well (Figure 7b).
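The effect of a finite momentum resolution can be sketched with a small Monte Carlo experiment in Python. The setup is an illustrative assumption rather than the paper's exact parameters: reflecting walls at $x = 0$ and $x = L = 1$, an initial uniform distribution on $[0, 0.25]$, unit mass and thermal momentum, an evolution time $t = 200$, and two histogram bin widths standing in for a fine and a coarse resolution.

```python
import numpy as np

rng = np.random.default_rng(2)
n, L, t = 1_000_000, 1.0, 200.0
x0 = rng.uniform(0.0, 0.25, n)
p0 = rng.normal(0.0, 1.0, n)

# Reflecting walls: fold the free trajectory with period 2L; the momentum
# is reversed whenever the folded point lands on the mirrored half.
y = np.mod(x0 + p0 * t, 2 * L)
p = np.where(y > L, -p0, p0)

def density(samples, width, p_max=3.0):
    """Histogram estimate of the momentum density with a given bin width."""
    edges = np.arange(-p_max, p_max + width / 2, width)
    hist, edges = np.histogram(samples, bins=edges)
    centers = 0.5 * (edges[:-1] + edges[1:])
    return centers, hist / (len(samples) * width)

def maxwell(q):
    return np.exp(-q**2 / 2) / np.sqrt(2 * np.pi)

c_fine, f_fine = density(p, 0.002)      # resolves the comb (tooth period 2L/t = 0.01)
c_coarse, f_coarse = density(p, 0.2)    # averages over about 20 teeth per bin
err_fine = np.max(np.abs(f_fine - maxwell(c_fine)))
err_coarse = np.max(np.abs(f_coarse - maxwell(c_coarse)))
print(err_fine, err_coarse)
```

With the fine bins the estimated density deviates strongly from the MB curve, while with the coarse bins it is close to it, in line with the statement that the comb structure is invisible below the resolution limit.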

In Figure 6c, we have shown that all the moments $\langle p^n \rangle$ of $P_p(p, t)$ are indistinguishable from those of the initial MB distribution. Now, due to the finite resolution limit, we again have no way to tell the difference between the exotic function $P_p(p, t)$ and its coarse-grained version $\tilde P_p(p, t)$, which goes back to the initial MB distribution (Figure 7a). In this sense, the momentum distribution $P_p(p, t)$ has no “practical” difference from the thermal equilibrium distribution.

3.6. Entropy “Decreasing” Process

We have now seen that the correlation entropy, rather than the total entropy, corresponds more closely to the irreversible entropy increase in macroscopic thermodynamics. Here we show that it is possible, although not quite feasible in practice, to construct an “entropy decrease” process.

To achieve this, we first let the ideal gas experience the above diffusion process with “entropy increase” for a certain time $t_0$ (Figure 3 and Figure 5). From the state $\varrho(x, p, t_0)$ at this moment (e.g., Figure 5d), we construct a new “initial state” by reversing its momentum, $\varrho'(x, p, 0) := \varrho(x, -p, t_0)$. Since the Liouville equation obeys time-reversal symmetry, this new “initial state” evolves into $\varrho'(x, p, t_0) = \varrho(x, -p, 0)$ after time $t_0$, which is just the original equilibrium state confined in the initially occupied area (Figure 5a). That means the ideal gas exhibits a process of “reversed diffusion”.

During this time-reversal process, the total Gibbs entropy, which contains the full information, still remains constant. However, the correlation entropy change $\Delta_i S$ (whether coarse-grained or not) exactly follows the reversed, “backward” evolution of Figure 6, which is an “entropy decreasing” process.
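The momentum-reversal construction can be demonstrated at the level of individual trajectories. The Python sketch below assumes reflecting walls at $x = 0$ and $x = L = 1$, unit mass, and an initial distribution on $[0.05, 0.30]$ (chosen only to stay away from the walls); the helper `evolve` is a hypothetical name. It evolves the particles forward for a time `t0`, reverses all momenta, and evolves for `t0` again.

```python
import numpy as np

def evolve(x0, p0, t, L=1.0, m=1.0):
    """Free flight with specular reflection at x = 0 and x = L (folding trick)."""
    y = np.mod(x0 + p0 * t / m, 2 * L)
    flipped = y > L
    return np.where(flipped, 2 * L - y, y), np.where(flipped, -p0, p0)

rng = np.random.default_rng(4)
x0 = rng.uniform(0.05, 0.30, 100_000)
p0 = rng.normal(0.0, 1.0, 100_000)

t0 = 20.0
x1, p1 = evolve(x0, p0, t0)       # forward "diffusion": the gas fills the box
x2, p2 = evolve(x1, -p1, t0)      # reverse every momentum and evolve again

print(x0.std(), x1.std(), x2.std())   # spread grows, then returns to its initial value
print(np.max(np.abs(x2 - x0)))        # every particle is back at its starting point
```

The reversed run only works because the state at `t0` carries the exact position-momentum correlations built up during the forward run; resampling the momenta independently at `t0` would destroy them and the gas would simply keep filling the box.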

This is just the idea of the Loschmidt paradox [8,57,58,59,60], except for two subtle differences: (1) here we are talking about the ideal gas with no particle collisions, whereas the original Loschmidt paradox was about the Boltzmann transport equation in the presence of particle collisions; (2) here we are talking about the correlation entropy between the spatial and momentum distributions, while the Boltzmann equation is about the entropy of the single-particle PDF.

We must notice that such a momentum-reversed initial state is NOT an equilibrium state, but contains very delicate correlations between the spatial and momentum distributions [5]. To see such a time-reversal process, the initial state must be precisely prepared to contain these specific correlations between the marginal distributions (Figure 5d), which is definitely quite difficult in practice. Therefore, such an “entropy decrease” process is rarely seen in practice (except in some special cases like the Hahn echo [61] and back-propagating waves [62,63]).

4. The Correlation in the Boltzmann Equation

In the above section, we focused on the diffusion of the ideal gas with no inter-particle interactions, and the initial momentum distribution has been assumed to be the MB distribution a priori. If the initial momentum distribution is not the MB one, the particles could still exhibit the irreversible diffusion filling the whole volume, but would never become the thermal equilibrium distribution.

If there exist weak interactions between the particles, as shown by the Boltzmann H-theorem, the single-particle PDF always approaches the MB distribution as its steady state, together with the irreversible entropy increase. In addition, in this case, the above “coarse-graining” is not needed for the single-particle PDF to approach the thermal equilibrium distribution.

Notice that, in the Boltzmann H-theorem, only the single-particle PDF $f(\mathbf r, \mathbf p, t)$ is concerned. The total ensemble $\rho(\vec P, \vec Q, t)$ in the $6N$-dimensional phase space should still follow the Liouville equation, so its entropy does not change with time. Therefore, the H-theorem conclusion can also be understood as the statement that the inter-particle correlations increase irreversibly [1,5,32]. Again, this is quite similar to the correlation understanding in the last two sections, and here the inter-particle correlation entropy reproduces the entropy increase of standard macroscopic thermodynamics.

The debates about the Boltzmann H-theorem have continued ever since its birth. The most important one must be the Loschmidt paradox raised in 1876: due to the time-reversal symmetry of the microscopic dynamics of the particles, once their momenta are reversed at the same time, the particles should follow their incoming paths “backward”, which is surely a possible evolution for the microstate; however, if the entropy of the single-particle PDF must increase (according to the H-theorem), then the corresponding “backward” evolution must give an entropy decreasing process, which contradicts the H-theorem conclusion.

In this section, we will see the slowly increasing inter-particle correlations could be helpful in understanding this paradox. Namely, due to the significant inter-particle correlation established during the “forward” process, indeed the “backward” process no longer satisfies the molecular-disorder assumption, which is a crucial approximation in deriving the Boltzmann equation, thus it is not suitable to be described by the Boltzmann equation, and the H-theorem does not apply in this case either.

4.1. Derivation of the Boltzmann Equation

We first briefly review the derivation of the Boltzmann transport equation [1,64,65]. When there is no external force, the evolution equation of the single-particle microstate PDF $f(\mathbf r, \mathbf p, t)$ is

$$\partial_t f + \frac{\mathbf p}{m} \cdot \nabla_{\mathbf r} f = \partial_t f \big|_{\mathrm{col}}.$$

The left side is just the above Liouville Equation (29) of the ideal gas, and the right side is the probability change due to particle collisions (assuming only bipartite collisions exist).

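This collision term, rewritten as $\partial_t f|_{\mathrm{col}} = \Delta^{(+)} - \Delta^{(-)}$, contains two contributions: $\Delta^{(-)}$ means that a collision between two particles kicks particle-1 out of its original region around $(\mathbf r, \mathbf p_1)$, so $f(\mathbf r, \mathbf p_1, t)$ decreases; likewise, $\Delta^{(+)}$ means that a collision kicks particle-1 into the region around $(\mathbf r, \mathbf p_1)$, which increases $f(\mathbf r, \mathbf p_1, t)$.

These two collision contributions can be written as (denoting $f_i := f(\mathbf r, \mathbf p_i, t)$)

$$\Delta^{(-)} = \int d^3p_2\, d^3p_1'\, d^3p_2'\; \Gamma(12 \to 1'2')\, F(\mathbf r; \mathbf p_1, \mathbf p_2, t), \qquad \Delta^{(+)} = \int d^3p_2\, d^3p_1'\, d^3p_2'\; \Gamma(1'2' \to 12)\, F(\mathbf r; \mathbf p_1', \mathbf p_2', t), \tag{41}$$

where $F(\mathbf r; \mathbf p_1, \mathbf p_2, t)$ is the two-particle joint probability, and $\Gamma(12 \to 1'2')$ denotes the transition ratio (or scattering matrix) from the initial state $(\mathbf p_1, \mathbf p_2)$ scattered into the final state $(\mathbf p_1', \mathbf p_2')$. Due to the time-reversal and inversion symmetry of the microscopic scattering process, the transition ratios $\Gamma(12 \to 1'2')$ and $\Gamma(1'2' \to 12)$ are equal to each other (see Figure 8). Therefore, the above Equation (41) is further written as [denoting $F_{12} := F(\mathbf r; \mathbf p_1, \mathbf p_2, t)$]

$$\partial_t f_1 + \frac{\mathbf p_1}{m} \cdot \nabla_{\mathbf r} f_1 = \int d^3p_2\, d^3p_1'\, d^3p_2'\; \Gamma(12 \to 1'2')\, \big(F_{1'2'} - F_{12}\big). \tag{43}$$

Now we adopt the “molecular-disorder assumption”, i.e., the two-particle joint PDF can be approximately written as the product of the two single-particle PDFs,

$$F(\mathbf r; \mathbf p_1, \mathbf p_2, t) \simeq f(\mathbf r, \mathbf p_1, t)\, f(\mathbf r, \mathbf p_2, t).$$

This assumption is usually known as the Stosszahlansatz, or the molecular chaos hypothesis. The word “Stosszahlansatz” was introduced by Ehrenfest in 1912, and its original meaning is “the assumption of collision number” [8,60,66]. Here we adopt the wording from Boltzmann’s paper in 1896 [64,65]. Essentially, this requires that the correlation between the two particles is negligible. Then the Boltzmann transport equation is obtained as

$$\partial_t f_1 + \frac{\mathbf p_1}{m} \cdot \nabla_{\mathbf r} f_1 = \int d^3p_2\, d^3p_1'\, d^3p_2'\; \Gamma(12 \to 1'2')\, \big(f_{1'} f_{2'} - f_1 f_2\big). \tag{45}$$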

4.2. H-theorem and the Steady State

Now we further review how to prove the H-theorem from the above Boltzmann equation, and find its steady state. Defining the Boltzmann H-function as $H(t) := \int d^3r\, d^3p\; f(\mathbf r, \mathbf p, t) \ln f(\mathbf r, \mathbf p, t)$, the Boltzmann Equation (45) guarantees that $H(t)$ decreases monotonically ($dH/dt \le 0$), and this is the H-theorem.

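To prove this theorem, we put the Boltzmann equation into the time derivative $\frac{d}{dt} H = \int d^3r\, d^3p_1\, (\partial_t f_1)(\ln f_1 + 1)$. The Liouville diffusion term gives (denoting $f_1 := f(\mathbf r, \mathbf p_1, t)$)

$$-\int d^3r\, d^3p_1\, \frac{\mathbf p_1}{m} \cdot \nabla_{\mathbf r} f_1\, (\ln f_1 + 1) = -\int d^3p_1\, \frac{\mathbf p_1}{m} \cdot \int d^3r\, \nabla_{\mathbf r} (f_1 \ln f_1),$$

which can be turned into a surface integral and vanishes. In addition, the collision term gives (denoting $d\varsigma := d^3r\, d^3p_1\, d^3p_2\, d^3p_1'\, d^3p_2'$ and $\Gamma := \Gamma(12 \to 1'2')$)

$$\begin{aligned} \frac{dH}{dt} &= \int d\varsigma\; \Gamma\, \big(f_{1'} f_{2'} - f_1 f_2\big)\,(\ln f_1 + 1) \\ &= \frac{1}{2} \int d\varsigma\; \Gamma\, \big(f_{1'} f_{2'} - f_1 f_2\big)\,\big(\ln f_1 f_2 + 2\big), \end{aligned}$$

where the second line holds because exchanging the integral variables $(\mathbf p_1, \mathbf p_1') \leftrightarrow (\mathbf p_2, \mathbf p_2')$ gives the same value. We can apply a similar trick by exchanging the integral variables $(\mathbf p_1, \mathbf p_2) \leftrightarrow (\mathbf p_1', \mathbf p_2')$, which gives

$$\frac{dH}{dt} = -\frac{1}{4} \int d\varsigma\; \Gamma\, \big(f_{1'} f_{2'} - f_1 f_2\big)\,\big(\ln f_{1'} f_{2'} - \ln f_1 f_2\big).$$

Here the transition ratio $\Gamma$ is non-negative, and notice that $(a - b)(\ln a - \ln b) \ge 0$ always holds for any positive $a$ and $b$, hence for any PDF $f$. Therefore, we obtain $dH/dt \le 0$, which means the function $H(t)$ decreases monotonically, and this completes the proof.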

In the above inequality, the equality holds if and only if $f_{1'} f_{2'} = f_1 f_2$, which means the collision-induced increase and decrease of $f_1$ must balance each other everywhere; thus it is also known as the detailed balance condition.

The time-independent steady state of $f(\mathbf r, \mathbf p, t)$ can be obtained from this detailed balance equation $f_1 f_2 = f_{1'} f_{2'}$. Taking the logarithm of the two sides gives

$$\ln f_1 + \ln f_2 = \ln f_{1'} + \ln f_{2'}.$$

Notice that the two sides of the above equation depend on different variables, and the equation has the form of a conservation law. Therefore, $\ln f$ must be a combination of conserved quantities. During the collision $(12 \to 1'2')$, the particles collide at the same position, and the total momentum and energy are conserved, so $\ln f$ must be a combination of them, namely, $\ln f = C_0 + \mathbf C_1 \cdot \mathbf p + C_2\, \mathbf p^2$, where $C_0$, $\mathbf C_1$, $C_2$ are constants. Therefore, $f$ must be a Gaussian distribution of $\mathbf p$ at any position $\mathbf r$.

Furthermore, the diffusion term in Equation (45) requires $\mathbf p \cdot \nabla_{\mathbf r} f = 0$ in the steady state, so $f$ must be homogeneous over all positions $\mathbf r$. The average momentum $\langle \mathbf p \rangle$ should be 0 for a stationary gas. Therefore, the steady state of the Boltzmann Equation (45) is a Gaussian distribution $f \propto \exp(C_2\, \mathbf p^2)$ with $C_2 < 0$, independent of the position $\mathbf r$, which is the MB distribution.
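The relaxation towards a Gaussian velocity distribution can be illustrated with a much simpler stochastic caricature than the Boltzmann equation itself. The Python sketch below uses a Kac-type toy model, which is a swapped-in technique and not the derivation above: velocities are one-dimensional, random pairs "collide" by a random rotation in the $(v_i, v_j)$ plane, which conserves the pair's kinetic energy but, unlike real collisions, not its momentum. The kurtosis (equal to 3 for a Gaussian, 1.8 for the uniform start) is used as a cheap proxy for how close the single-particle distribution is to the MB form; all parameter values are illustrative.

```python
import numpy as np

rng = np.random.default_rng(3)
n = 20_000
v = rng.uniform(-1.0, 1.0, n)        # deliberately non-Maxwellian initial velocities

def kurtosis(u):
    """Fourth standardized moment; equals 3 for a Gaussian distribution."""
    u = u - u.mean()
    return np.mean(u**4) / np.mean(u**2) ** 2

energy0 = np.sum(v**2)
k0 = kurtosis(v)

for _ in range(20):                   # 20 sweeps, one collision per particle per sweep
    perm = rng.permutation(n)
    i, j = perm[: n // 2], perm[n // 2:]
    theta = rng.uniform(0.0, 2 * np.pi, n // 2)
    vi, vj = v[i], v[j]               # fancy indexing returns copies
    v[i] = vi * np.cos(theta) + vj * np.sin(theta)
    v[j] = -vi * np.sin(theta) + vj * np.cos(theta)

print(k0, kurtosis(v))                # moves from about 1.8 towards 3
print(energy0, np.sum(v**2))          # total kinetic energy is conserved
```

The single-particle statistics become Gaussian, while the full N-particle velocity vector merely rotates on a fixed energy sphere, which mirrors the distinction between the single-particle PDF and the full ensemble drawn in the text.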

4.3. Molecular-Disorder Assumption and Loschmidt Paradox

In the above two sections, we demonstrated all the critical steps in deriving the Boltzmann equation. Notice that there is no special requirement on the interaction form of the collisions, as long as it is short-ranged so that only bipartite collisions exist. The contribution of the collision interaction is implicitly contained in the transition rate $\Gamma(12 \to 1'2')$, and the only properties we used are (1) $\Gamma(12 \to 1'2') = \Gamma(1'2' \to 12)$ and (2) $\Gamma(12 \to 1'2') \ge 0$. Thus it does not matter whether the interaction is nonlinear.

Without doubt, the molecular-disorder assumption ($F(\mathbf r; \mathbf p_1, \mathbf p_2, t) \simeq f(\mathbf r, \mathbf p_1, t)\, f(\mathbf r, \mathbf p_2, t)$) is the most important basis of the above derivations. As mentioned by Boltzmann (p. 29 in Reference [65]), “…The only assumption made here is that the velocity distribution is molecular-disordered (namely, $F_{12} \simeq f_1 f_2$ in our notation) at the beginning, and remains so. With this assumption, one can prove that H can only decrease, and also that the velocity distribution must approach that of Maxwell.” Before this approximation, Equation (43) is still formally exact. Clearly, the validity of this assumption, which is imposed on the particle correlations, determines whether the Boltzmann Equation (45) holds. Now we re-examine this assumption as well as the Loschmidt paradox.

Once two particles collide with each other, they become correlated. In a dilute gas, collisions do not happen very frequently, and once two particles have collided, they hardly ever meet each other again. Therefore, if initially there are no correlations between particles, we can expect that, on average, the collision-induced bipartite correlations are negligibly small, and thus the molecular-disorder assumption holds well.

Now we look at the situation in the Loschmidt paradox. First, the particles experience a “forward” diffusion process for a certain time. According to the H-theorem, the entropy increases in this process. Then suppose all the particle momenta are suddenly reversed at this moment. From this initial state, the particles are supposed to evolve “backward” exactly along their incoming trajectories, and thus exhibit an entropy decreasing process, which contradicts the H-theorem conclusion; this is the Loschmidt paradox.

However, we should notice that the first “forward” evolution has established significant (although very small in quantity) bipartite correlations in this new “initial state” [28]. This is quite similar to the above discussion of the momentum-position correlation in Section 3.6. Thus, the above molecular-disorder assumption, i.e., that the two-particle joint PDF factorizes into a product of single-particle PDFs, does not apply in this case. As a result, the subsequent “backward” evolution is not suitably described by the Boltzmann Equation (45). Therefore, the conclusion of the H-theorem ($dH/dt \le 0$, i.e., increasing entropy) does not need to hold for this “backward” process. Moreover, the preparation of such a specific initial state is definitely infeasible in practice, so the “backward” entropy decreasing process is rarely seen.

We emphasize that the Boltzmann Equation (45) is about the single-particle PDF $f(\mathbf r, \mathbf p, t)$, which is obtained by averaging over all the other $N - 1$ particles from the full ensemble $\rho(\vec P, \vec Q, t)$. Clearly, $f(\mathbf r, \mathbf p, t)$ omits much information in $\rho(\vec P, \vec Q, t)$, but it is enough to give most macroscopic thermodynamic quantities. For example, the average kinetic energy of each single molecule $\langle \mathbf p^2 \rangle / 2m$

determines the gas temperature

T, and the gas pressure on the wall is given by

[

1].

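In contrast, the inter-particle correlations ignored by the single-particle PDF $f(\mathbf r, \mathbf p, t)$ are quite difficult to detect in practice. Therefore, the N-particle ensemble may be “inferred” as $\tilde\rho_{\mathrm{inf}}(\vec P, \vec Q, t) = \prod_{i=1}^{N} f(\mathbf r_i, \mathbf p_i, t)$, which clearly omits the inter-particle correlations in the exact $\rho(\vec P, \vec Q, t)$. Similar to the discussion in Section 3.4, based on this inferred ensemble $\tilde\rho_{\mathrm{inf}}$, the entropy change is $\Delta_i S = S[\tilde\rho_{\mathrm{inf}}(t)] - S[\tilde\rho_{\mathrm{inf}}(0)] = -N\,[H(t) - H(0)] \ge 0$ (with $f$ normalized to unity and $H(t)$ the Boltzmann H-function), which exactly reproduces the result in standard thermodynamics. Thus $\Delta_i S$ indeed characterizes the increase of the inter-particle correlations.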

In sum, even in the presence of particle collisions, the full N-particle ensemble still follows the Liouville equation exactly, so its Gibbs entropy does not change. On the other hand, the single-particle PDF follows the Boltzmann equation, so its entropy keeps increasing until the steady state is reached. This increase stems from our ignorance of the inter-particle correlations in the full ensemble.

5. Summary

In this paper, we study the correlation production in open and isolated thermodynamic systems. In a many-body system, the microscopic dynamics of the whole system obeys the time-reversal symmetry, which guarantees the entropy of the global state does not change with time. Based on the microscopic dynamics, indeed the full ensemble state is not evolving towards the new equilibrium state as expected from the macroscopic intuition. However, the correlation between different local DoF, as measured by their mutual information, generally increases monotonically, and its amount could well reproduce the entropy increase result in the standard macroscopic thermodynamics.

In open systems, as described by the second law in standard thermodynamics, the irreversible entropy production increases monotonically. It turns out that this irreversible entropy production is just equal to the correlation production between the system and its environment. Thus, the second law can be equivalently understood as the system-bath correlation is increasing monotonically, while at the same time, the s + b system as a whole still keeps constant entropy.

In isolated systems, there is no specific partition for “system” and “environment”, but we could see the momentum and spatial distributions, as the marginal distributions of the total ensemble, exhibit the macroscopic irreversibility, and their correlation increases monotonically, which reproduces the entropy increase result in standard thermodynamics. In the presence of particle collisions, different particles are also establishing correlations between each other. As a result, the single-particle distribution exhibits the macroscopic irreversibility as well as the entropy increase in standard thermodynamics, which is just the result of the Boltzmann H-theorem. At the same time, the full ensemble of the many-body system still follows the Liouville equation, which guarantees its entropy does not change with time.

It is worth noticing that, in practice, it is usually the partial information (e.g., marginal distributions, few-body observable expectations) that is directly accessible to our observation. Indeed, most macroscopic thermodynamic quantities are obtained only from such partial information, like the one-body distribution, and that is why they exhibit irreversible behaviors. In contrast, due to the practical restrictions of measurements, the dynamics of the full ensemble state, such as its constant entropy, is quite difficult to detect in practice.

In sum, the global state keeps a constant entropy, while the partial information exhibits the irreversible entropy increase, and in practice it is the partial information that is directly observed. In this sense, the macroscopic irreversible entropy increase does not contradict the microscopic reversibility. Clearly, this correlation production understanding applies to both quantum and classical systems, whether or not there are complicated particle interactions, and it can also be used for time-dependent non-equilibrium states. Moreover, it is worth noting that, if the bath of an open system is in a non-thermal state, the situation is beyond the scope of standard thermodynamics, but the correlation production understanding still applies. We notice that such correlations can be found in many recent studies of thermodynamics, and it is also quite interesting that a similar idea can be used to understand the paradox of black hole information loss, where the mutual information of the radiation particles is carefully considered [67,68,69,70].