Abstract

In the world of generalized entropies—which, for example, play a role in physical systems with sub- and super-exponential phase space growth per degree of freedom—there are two ways for implementing constraints in the maximum entropy principle: linear and escort constraints. Both appear naturally in different contexts. Linear constraints appear, e.g., in physical systems, when additional information about the system is available through higher moments. Escort distributions appear naturally in the context of multifractals and information geometry. It was shown recently that there exists a fundamental duality that relates both approaches on the basis of the corresponding deformed logarithms (deformed-log duality). Here, we show that there exists another duality that arises in the context of information geometry, relating the Fisher information of -deformed exponential families that correspond to linear constraints (as studied by J.Naudts) to those that are based on escort constraints (as studied by S.-I. Amari). We explicitly demonstrate this information geometric duality for the case of -entropy, which covers all situations that are compatible with the first three Shannon–Khinchin axioms and that include Shannon, Tsallis, Anteneodo–Plastino entropy, and many more as special cases. Finally, we discuss the relation between the deformed-log duality and the information geometric duality and mention that the escort distributions arising in these two dualities are generally different and only coincide for the case of the Tsallis deformation.

1. Introduction

Entropy is one word for several distinct concepts [1]. It was originally introduced in thermodynamics, then in statistical physics, information theory, and last in the context of statistical inference. One important application of entropy in statistical physics, and in statistical inference in general, is the maximum entropy principle, which allows us to estimate probability distribution functions from limited information sources, i.e., from data [2,3]. The formal concept of entropy was generalized to also account for power laws that occur frequently in complex systems [4]. Literally dozens of generalized entropies have been proposed in various contexts, such as relativity [5], multifractals [6], or black holes [7]; see [8] for an overview. All these generalized entropies, whenever they fulfil the first three Shannon–Khinchin axioms (and violate the composition axiom) are special cases of the -entropy asymptotically [9]. Generalized entropies play a role in non-multinomial, sub-additive systems (whose phase space volume grows sub-exponentially with the degrees of freedom) [10,11] and in systems, whose phase space grows super-exponentially [12]. All generalized entropies, for sub-, and super-exponential systems, can be treated within a single, unifying framework [13].

With the advent of generalized entropies, depending on context, two types of constraint are used in the maximum entropy principle: traditional linear constraints (typically moments), , motivated by physical measurements, and the so-called escort constraints, , where u is some nonlinear function. Originally, the latter were introduced with multifractals in mind [4]. Different types of constraint arise from different applications of relative entropy. While for physics-related contexts (such as thermodynamics), linear constraints are normally used, in other applications, such as non-linear dynamical systems or information geometry, it might be more natural to consider escort constraints. The question about their correct use and the appropriate form of constraints has caused a heated debate in the past decade [14,15,16,17,18,19]. To introduce escort distributions in the maximum entropy principle in a consistent way, two approaches have been discussed. The first [20] appears in the context of deformed entropies that are motivated by superstatistics [21]. It was later observed in [22] that this approach is linked to other deformed entropies with linear constraints through a fundamental duality (deformed-log duality), such that both entropies lead to the same functional form of MaxEnt distributions. The second way to obtain escort distributions was studied by Amari et al. and is motivated by information geometry and the theory of statistical estimation [23,24]. There, escort distributions represent natural coordinates on a statistical manifold [24,25].

In this paper, we show that there exists another duality relation between this information geometric approach with escort distributions and an approach that uses linear constraints. The relation can be given a precise information geometric meaning on the basis of the Fisher information. We show this within the framework of -deformations [26,27,28]. We establish the duality relation for both cases in the relevant information geometric quantities. As an example, we explicitly show the duality relation for the class of -exponentials, introduced in [9,10]. Finally, we discuss the relation between the deformed-log duality and the information geometric duality and show that these have fundamental differences. Each type of duality is suitable for different applications. We hope that this paper helps to avoid confusion in the use of escort distributions in the various contexts.

Let us start with reviewing central concepts of (non-deformed) information geometry, in particular relative entropy and its relation to the exponential family of distributions through the maximum entropy principle. Let us consider a probability simplex, , with n independent probabilities, , and probability . Its value is not independent, but determined by the normalization condition, . Further, consider a parametric family of distributions with parameter vector , where is a parametric space. In this paper, we focus on probabilities over discrete sample spaces, only. For the continuous case see the mathematical formulation of Pistone and Sempi [29]. For the sake of simplicity, we sometimes do not explicitly write the parameter vector and consider as the independent parameters of the distribution. It is easy to show that the choice of determines the choice of the parameters .

Relative entropy, or Kullback–Leibler divergence, is defined as:

For the uniform distribution , i.e., , we have:

where is the Shannon entropy, . Let us consider a set of linear constraints, , and denote the configuration vector as . Shannon entropy is maximized under this set of linear constraints and the normalization condition, by functions belonging to the exponential family of probability distributions, which can be written as:

guarantees normalization. Fisher information defines the metric on the parametric manifold by taking two infinitesimally-separated points, and , and by expanding ,

For the exponential family of distributions, it is a well-known fact that Fisher information is equal to the inverse of the probability in Equation (3):

2. Deformed Exponential Family

We briefly recall the definition of -deformed logarithms and exponentials as introduced by Naudts [26]. The deformed logarithm is defined as:

for some positive, strictly-increasing function, , defined on . Then, is an increasing, concave function with . is negative on and positive on . Naturally, the derivative of is . The inverse function of exists; we denote it by . Finally, the -exponential family of probability distributions is defined as a generalization of Equation (3):

We can express in the form:

which allows us to introduce dual coordinates to . This is nothing but the Legendre transform of , which is defined as:

where:

Because:

holds, and using , we obtain that:

where is the so-called escort distribution. With , the elements of are given by:

where we define . The Legendre transform provides a connection between the exponential family and the escort family of probability distributions, where the coordinates are obtained in the form of escort distributions. This generalizes the results for the ordinary exponential family of distributions, where the dual coordinates form a mixture family, which can be obtained as the superposition of the original distribution. The importance of dual coordinates in information geometry comes from the existence of a dually-flat geometry for the pair of coordinates. This means that there exist two affine connections with vanishing coefficients (Christoffel symbols). For the exponential family of distributions, the connection determined by the exponential distribution is called e-connection, and the dual connection leading to a mixture family is called m-connection [25]. For more details, see, e.g., [24]. We next look at generalizations of the Kullback–Leibler divergence and the Fisher information for the case of -deformations.

3. Deformed Divergences, Entropies, and Metrics

For the -deformed exponential family of distributions, we have to define the proper generalizations of the relevant quantities, such as the entropy, divergence, and metric. A natural approach is to start with the deformed Kullback–Leibler divergence, denoted by . -entropy can then be defined as:

where ∼ means that the relation holds up to a multiplicative constant depending only on n. Similarly, the -deformed Fisher information is:

There is now more than one way to generalize the ordinary Kullback–Leibler divergence. The first is Csiszár’s divergence: [30]

where f is a convex function. For , we obtain the Kullback–Leibler divergence. Note, however, that the related information geometry based on the generalized Fisher information is trivial, because we have:

i.e., the rescaled Fisher information metric; see [27]. The second possibility is to use the divergence of Bregman type, usually defined as:

where the symbol “·” denotes the scalar product. This type of divergence can be understood as the first-order Taylor expansion of f around , evaluated at . Let us next discuss the two possible types of the Bregman divergence, which naturally correspond to the -deformed family of distributions. For both, the -exponential family of distributions is obtained from the maximum entropy principle of the corresponding -entropy, however, under different constraints. Note that the maximum entropy principle is just a special version of the more general minimal relative entropy principle, which minimizes the divergence functional w.r.t. , for some given prior distribution .

3.1. Linear Constraints: Divergence a là Naudts

One generalization of the Kullback–Leibler divergence was introduced by Naudts [26] by considering , which leads to:

The corresponding entropy can be expressed as:

is maximized by the -exponential family of distributions under linear constraints. The Lagrange functional is:

which leads to:

and we get:

which is just Equation (8), averaged over the distribution . Note that Equation (23) provides the connection to thermodynamics, because is a so-called Massieu function. For a canonical ensemble, i.e., one constraint on the average energy , parameter plays the role of an inverse temperature, and can be related to the free energy, . Thus, the term can be interpreted as the thermodynamic entropy, which is determined from Equation (23). This is a consequence of the Legendre structure of thermodynamics.

The corresponding MaxEnt distribution can be written as:

Finally, the Fisher information metric can be obtained in the following form:

3.2. Escort Constraints: Divergence a là Amari

Amari et al. [23,24] used a different divergence introduced in [31], which is based on the choice of . This choice is motivated by the fact that the corresponding entropy is just the dual function of , i.e., . This is easy to show, because:

Thus, the divergence becomes:

and the corresponding entropy can be expressed from Equation (26) as:

so it is a dual function of . For this reason, the entropy is called “canonical”, because it is obtained by the Legendre transform from the Massieu function . Interestingly, the entropy is maximized by the -exponential family of distributions under escort constraints. The Lagrange function is:

After a straightforward calculation, we get:

and the corresponding MaxEnt distribution can be expressed as:

where:

Here, denotes the average under the escort probability measure, . Interestingly, in the escort constraints scenario, the “MaxEnt” entropy is the same as the “thermodynamic” entropy in the case of linear constraints. We call this entropy, , the dual entropy. Finally, one obtains the corresponding metric:

Note that the metric can be obtained from as: , which is the consequence of the Legendre structure of escort coordinates [24]. For a summary of the -deformed divergence, entropy, and metric, see Table 1.

Table 1.

-deformation of divergence, entropy, and Fisher information corresponding to the -exponential family under linear and escort constraints. For the ordinary logarithm, , the two entropies become the Shannon entropy, and the divergence is Kullback–Leibler.

3.3. Cramér–Rao Bound of Naudts Type

One of the important applications of the Fisher metric is the so-called Cramér–Rao bound, which is the lower bound for the variance of an unbiased estimator. The generalization of the Cramér–Rao bound for two families of distributions was given in [26,27]. Assume these two families of distributions to be denoted by and , with their corresponding expectation values, and . Let denote the estimator of the family that fulfills , for some function f, and let us consider a mild regularity condition . Then:

where:

If is the -exponential family of distributions, in Equation (34), equality holds for the escort distribution , [28]. It is easy to see that for this case, i.e., for the -exponential family and the corresponding escort distribution, the following is true:

This provides a connection between the Cramér–Rao bound and the -deformed Fisher metric. In the next section, we show that the Cramér–Rao bound can be also estimated for the case of the Fisher metric of the “Amari type”.

4. The Information Geometric “Amari–Naudts” Duality

In the previous section, we have seen that there are at least two natural ways to generalize divergence, such that the -exponential family of distributions maximizes the associated entropy functional, however under different constraint types. These two ways result in two different geometries on the parameter manifold. The relation between the metric and can be expressed by the operator, T:

where

with the normalization factor, . Note that the operator acts locally on the elements of the metric. In order to establish the connection to the Cramér–Rao bound, let us focus on the transformation of .

4.1. Cramér–Rao Bound of the Amari Type

The metric of the “Amari case” can be seen as a conformal transformation [32] of the metric that is obtained in the “Naudts case”, for a different deformation of the logarithm. Two metric tensors are connected by a conformal transformation if they have the same form, except for the global conformal factor, , which depends only on the point p. Our aim is to connect the Amari metric with the Cramér–Rao bound and obtain another type of bound for the estimates that are based on escort distributions. To this end, let us consider a general metric of the Naudts type, corresponding to -deformation, and a metric of the Amari type, corresponding to -deformation. They are connected through the conformal transformation, which acts globally on the whole metric. The relation can be expressed as:

By using previous results in this relation, we obtain:

from which we see that and , i.e.,

Note that might not be concave because:

Concavity exists, if . To now make the connection with the Cramér–Rao bound, let us take , so , and:

As a consequence, there exist two types of Cramér–Rao bound for a given escort distribution, which might be used to estimate the lower bound of the variance of an unbiased estimator, obtained from two types of Fisher information.

4.2. Example: Duality of -Entropy

We demonstrate the “Amari–Naudts” duality on the general class of -entropies [9,10], which include all deformations associated with statistical systems that fulfil the first three Shannon–Khinchin axioms. These include most of the popular deformations, including Tsallis q-exponentials [4] and stretched exponentials studied in connection with the entropies by Anteneodo and Plastino [33]. The generalized -logarithm is defined as:

where c and d are the scaling exponents [8,9] and r is a free scale parameter (that does not influence the asymptotic behavior). The associated -deformation is:

The inverse function of , the deformed -exponential, can be expressed in terms of the Lambert W-function, which is the solution of equation, . The deformed -exponential is:

where . The corresponding entropy that is maximized by -exponentials (see [8] for their properties) is the -entropy:

where . This is an entropy of “Naudts type”, since it is maximized with -exponentials under linear constraints. We can immediately write the metric as:

The corresponding entropy of “Amari type”, i.e., maximized with -exponentials under escort constraints:

is:

and its metric is:

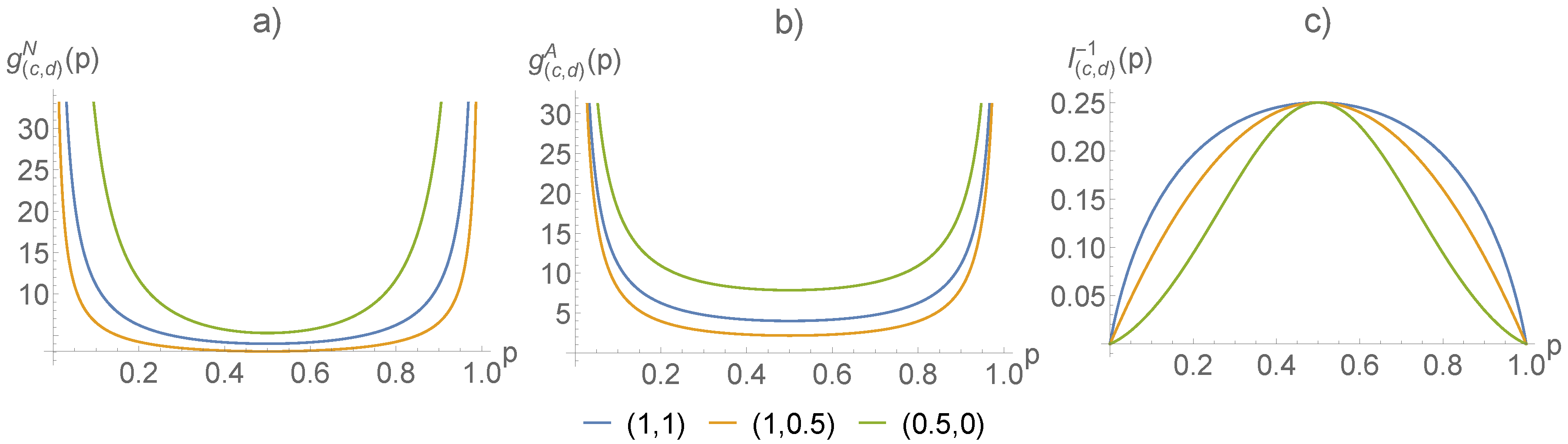

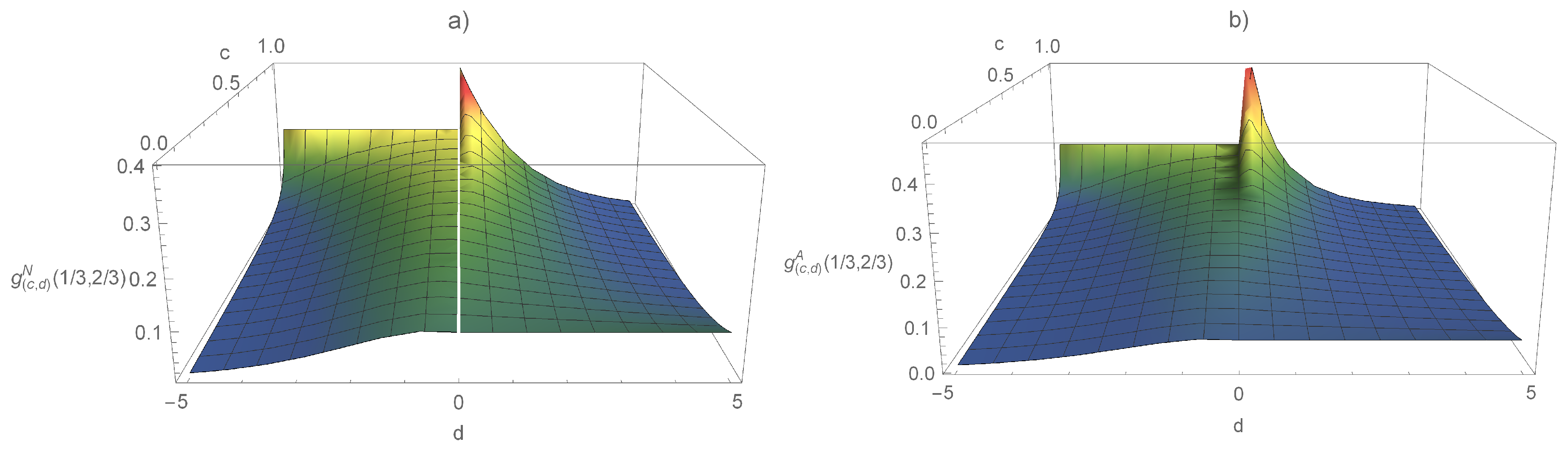

The metric of the Amari type of the -entropy was already discussed in [34] based on -logarithms. However, as demonstrated above, the metric can be found without using the inverse -deformed logarithms, which in the case of -logarithms lead to Lambert W-functions. The Fisher metric of Naudts and Amari type and the corresponding Cramér–Rao bound are shown in Figure 1. The scaling parameter is set (following [9]) to , for , and , for . The Fisher metric of both types is displayed in Figure 2 as a function of the parameters c and d for a given point, . We see that both types of metric have a singularity for . This point corresponds to distributions with compact support. For one-dimensional distributions, the singularity corresponds to the transition between distributions with support on the real line and distributions with support on a finite interval.

Figure 1.

Fisher metric for corresponding to various -deformations (, , ) for (a) the Naudts type, (b) the Amari type, and (c) the Cramér–Rao bound corresponding to the metric.

Figure 2.

Fisher metric for -deformations as a function of c and d of Naudts type (a) and Amari type (b). The metric is evaluated at a point .

Interestingly, for , the metric simplifies to:

which corresponds to the Tsallis q-exponential family of distributions. Therefore, is just a conformal transformation of the Fisher information metric for the exponential family of distributions, as shown in [24]. Note, that only for Tsallis q-exponentials, the relation between and can be expressed as (see also Table 2):

where and . This is nothing but the well-known additive duality of Tsallis entropy [11]. Interestingly, q-escort distributions form a group with and , where is the multiplicative duality [35].

Table 2.

Two important special cases of -deformations and related quantities: Power laws (Tsallis) and stretched exponentials.

This is not the case for more general deformations, because typically, the inverse does not belong to the class of escort distributions. Popular deformations belonging to the -family, as the Tsallis q-exponential family or the stretched exponential family, are summarized in Table 2.

5. Connection to the Deformed-Log Duality

A different duality of entropies and their associated logarithms under linear and escort averages has been discussed in [22]. There, two approaches were discussed. The first uses the generalized entropy of trace form under linear constraints. It was denoted by:

It corresponds to the Naudts case, . The second approach, originally introduced by Tsallis and Souza [20], uses the trace form entropy:

Note that in [20], the notion of the deformed logarithm is not used (as in Equation (55)). However, it is again an entropy of the Naudts type with the deformed logarithm . Equation (55) is maximized under the escort constraints:

where . The linear constraints are recovered for . This form is dictated by the Shannon–Khinchin axioms, as discussed in the next section. Let us assume that the maximization of both approaches—Equation (54) under linear, and Equation (55) under escort constraints—leads to the same MaxEnt distribution. One can then show that there exists the following duality (deformed-log duality) between and (x):

Let us focus on specific -deformations, so that . Then, is also a -deformation, with:

It is straightforward to calculate the metric corresponding to the entropy :

where:

Thus, the Tsallis–Souza approach results in yet another information matrix. We may also start from the other direction and look at the situation when the escort distribution for the information geometric approach is the same as the escort distribution for the Tsallis–Souza approach. In this case, we get:

We find that the entropy must be expressed as:

Note that for and , we obtain the Tsallis entropy:

which corresponds to for , which is nothing but the mentioned Tsallis additive duality. It turns out that Tsallis entropy is the only case where the deformed-log duality and the information geometric duality result in the same class of functionals. In general, the two dualities have different escort distributions.

6. Discussion

We discussed the information geometric duality of entropies that are maximized by -exponential distributions under two types of constraint: linear constraints, which are known from contexts such as thermodynamics, and escort constraints, which appear naturally in the theory of statistical estimation and information geometry. This duality implies two different entropy functionals: and . For , they both boil down to Shannon entropy. The connection between the entropy of Naudts type and the one of Amari type can be established through the corresponding Fisher information through the Cramér–Rao bound. Contrary to the deformed-log duality introduced in [22], the information theoretic duality introduced here cannot be established within the framework of -deformations, since is not a trace form entropy. We demonstrated the duality between the Naudts approach with linear constraints and the Amari approach with escort constraints, within the example of -entropies, which include a wide class of popular deformations, including Tsallis and Anteneodo–Plastino entropy as special cases. Finally, we compared the information geometric duality to the deformed-log duality and showed that they are fundamentally different and result in other types of Fisher information.

Let us now discuss the role of information geometric duality and possible applications in information theory and thermodynamics. Recall that the Shannon entropic functional is determined by the four Shannon–Khinchin (SK) axioms. In many contexts, at least three of the axioms should hold:

- (SK1) Entropy is a continuous function of the probabilities only and should not explicitly depend on any other parameters.

- (SK2) Entropy is maximal for the equi-distribution .

- (SK3) Adding a state to a system with does not change the entropy of the system.

Originally, the Shannon–Khinchin axioms contain four axioms. The fourth describes the “composition rule” for entropy of a joint system ). The only entropy satisfying all four SK axioms is Shannon entropy. However, Shannon entropy is not sufficient to describe the statistics of complex systems [10] and can lead to paradoxes in applications in thermodynamics [12]. Therefore, instead of imposing the fourth axiom in situations where it does not apply, it is convenient to consider a weaker requirement, such as generic scaling relations of entropy in the thermodynamic limit [9,13]. It is possible to show that the only type of duality satisfying the first three Shannon–Khinchin axioms is the deformed-log duality of [22]. Moreover, entropies that are neither trace-form, nor sum-form (sum-form entropies are in the form ) might be problematic from the view of information theory and coding. For example, it is then not possible to introduce a conditional entropy consistently [36] because the corresponding conditional entropy cannot be properly defined. This is related to the fact that the Kolmogorov definition of conditional probability is not generally valid for escort distributions [37]. Additional issues arise from the theory of statistical estimation, since only entropies of the form , i.e., sum-form entropies, can fulfil the consistency axioms [38]. From this point of view, the deformed-log duality using the class of Tsallis–Souza escort distributions can play a role in thermodynamical applications [39], because the corresponding entropy fulfills the SK axioms. On the other hand, the importance of escort distributions considered by Amari and others is in the realm of information geometry (e.g., dually-flat geometry or generalized Cramér–Rao bound), and their applications in thermodynamics might be limited. Finally, for the case of the Tsallis q-deformation, both dualities, the information geometric and the deformed-log duality, reduce to the well-known additive duality .

Author Contributions

All authors contributed to the conceptualization of the work, the discussion of the results, and their interpretation. J.K. took the lead in technical computations. J.K. and S.T. wrote the manuscript.

Funding

This work was supported by the Austrian Science Fund FWFunder I 3073.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Thurner, S.; Corominas-Murtra, B.; Hanel, R. Three faces of entropy for complex systems: Information, thermodynamics, and the maximum entropy principle. Phys. Rev. E 2017, 96, 032124. [Google Scholar] [CrossRef] [PubMed]

- Jaynes, E.T. Information theory and statistical mechanics. Phys. Rev. 1957, 106, 620. [Google Scholar] [CrossRef]

- Harremoës, P.; Topsøe, F. Maximum entropy fundamentals. Entropy 2001, 3, 191–226. [Google Scholar] [CrossRef]

- Tsallis, C. Possible generalization of Boltzmann-Gibbs statistics. J. Stat. Phys. 1988, 52, 479–487. [Google Scholar] [CrossRef]

- Kaniadakis, G. Statistical mechanics in the context of special relativity. Phys. Rev. E 2002, 66, 056125. [Google Scholar] [CrossRef] [PubMed]

- Jizba, P.; Arimitsu, T. The world according to Rényi: Thermodynamics of multifractal systems. Ann. Phys. 2004, 312, 17–59. [Google Scholar] [CrossRef]

- Tsallis, C.; Cirto, L.J. Black hole thermodynamical entropy. Eur. Phys. J. C 2013, 73, 2487. [Google Scholar] [CrossRef]

- Thurner, S.; Hanel, R.; Klimek, P. Introduction to the Theory of Complex Systems; Oxford University Press: Oxford, UK, 2018. [Google Scholar]

- Hanel, R.; Thurner, S. A comprehensive classification of complex statistical systems and an axiomatic derivation of their entropy and distribution functions. Europhys. Lett. 2011, 93, 20006. [Google Scholar] [CrossRef]

- Hanel, R.; Thurner, S. When do generalized entropies apply? How phase space volume determines entropy. Europhys. Lett. 2011, 96, 50003. [Google Scholar] [CrossRef]

- Tsallis, C.; Gell-Mann, M.; Sato, Y. Asymptotically scale-invariant occupancy of phase space makes the entropy Sq extensive. Proc. Natl. Acad. Sci. USA 2005, 102, 15377–15382. [Google Scholar] [CrossRef]

- Jensen, H.J.; Pazuki, R.H.; Pruessner, G.; Tempesta, P. Statistical mechanics of exploding phase spaces: Ontic open systems. J. Phys. A 2018, 51, 375002. [Google Scholar] [CrossRef]

- Korbel, J.; Hanel, R.; Thurner, S. Classification of complex systems by their sample-space scaling exponents. New J. Phys. 2018, 20, 093007. [Google Scholar] [CrossRef]

- Tsallis, C.; Mendes, R.S.; Plastino, A.R. The role of constraints within generalized nonextensive statistics. Physica A 1998, 261, 534–554. [Google Scholar] [CrossRef]

- Abe, S. Geometry of escort distributions. Phys. Rev. E 2003, 68, 031101. [Google Scholar] [CrossRef] [PubMed]

- Ohara, A.; Matsuzoe, H.; Amari, S.I. A dually flat structure on the space of escort distributions. J. Phys. Conf. Ser. 2010, 201, 012012. [Google Scholar] [CrossRef]

- Bercher, J.-F. A simple probabilistic construction yielding generalized entropies and divergences, escort distributions and q-Gaussians. Physica A 2012, 391, 4460–4469. [Google Scholar] [CrossRef]

- Hanel, R.; Thurner, S.; Tsallis, C. On the robustness of q-expectation values and Renyi entropy. Europhys. Lett. 2009, 85, 20005. [Google Scholar] [CrossRef]

- Hanel, R.; Thurner, S.; Tsallis, C. Limit distributions of scale-invariant probabilistic models of correlated random variables with the q-Gaussian as an explicit example. Eur. Phys. J. B 2009, 72, 263–268. [Google Scholar] [CrossRef]

- Tsallis, C.; Souza, A.M.C. Constructing a statistical mechanics for Beck-Cohen superstatistics. Phys. Rev. E 2003, 67, 026106. [Google Scholar] [CrossRef]

- Beck, C.; Cohen, E.D.G. Superstatistics. Physica A 2003, 322, 267–275. [Google Scholar] [CrossRef]

- Hanel, R.; Thurner, S.; Gell-Mann, M. Generalized entropies and logarithms and their duality relations. Proc. Natl. Acad. Sci. USA 2012, 109, 19151–19154. [Google Scholar] [CrossRef]

- Amari, S.I.; Cichocki, A. Information geometry of divergence functions. Bull. Pol. Acad. Sci. Tech. Sci. 2010, 58, 183–195. [Google Scholar] [CrossRef]

- Amari, S.I.; Ohara, A.; Matsuzoe, H. Geometry of deformed exponential families: Invariant, dually-flat and conformal geometries. Physica A 2012, 391, 4308–4319. [Google Scholar] [CrossRef]

- Ay, N.; Jost, J.; Le, H.V.; Schwachhöfer, L. Information Geometry; Springer: Berlin, Germany, 2017. [Google Scholar]

- Naudts, J. Deformed exponentials and logarithms in generalized thermostatistics. Physica A 2002, 316, 323–334. [Google Scholar] [CrossRef]

- Naudts, J. Continuity of a class of entropies and relative entropies. Rev. Math. Phys. 2004, 16, 809–822. [Google Scholar] [CrossRef]

- Naudts, J. Generalised Thermostatistics; Springer Science & Business Media: Berlin, Germany, 2011. [Google Scholar]

- Pistone, G.; Sempi, C. An infinite-dimensional geometric structure on the space of all the probability measures equivalent to a given one. Ann. Stat. 1995, 23, 1543–1561. [Google Scholar] [CrossRef]

- Csiszar, I. Why least squares and maximum entropy? An axiomatic approach to inference for linear inverse problems. Ann. Stat. 1991, 19, 2032–2066. [Google Scholar] [CrossRef]

- Vigelis, R.F.; Cavalcante, C.C. On ϕ-Families of probability distributions. J. Theor. Probab. 2013, 26, 870–884. [Google Scholar] [CrossRef]

- Ohara, A. Conformal flattening for deformed information geometries on the probability simplex. Entropy 2018, 20, 186. [Google Scholar] [CrossRef]

- Anteneodo, C.; Plastino, A.R. Maximum entropy approach to stretched exponential probability distributions. J. Phys. A 1999, 32, 1089. [Google Scholar] [CrossRef]

- Ghikas, D.P.K.; Oikonomou, F.D. Towards an information geometric characterization/classification of complex systems. I. Use of generalized entropies. Physica A 2018, 496, 384–398. [Google Scholar] [CrossRef]

- Tsallis, C. Generalization of the possible algebraic basis of q-triplets. Eur. Phys. J. Spec. Top. 2017, 226, 455–466. [Google Scholar] [CrossRef]

- Ilić, V.M.; Stanković, M.S. Generalized Shannon–Khinchin axioms and uniqueness theorem for pseudo-additive entropies. Physica A 2014, 411, 138–145. [Google Scholar] [CrossRef]

- Jizba, P.; Korbel, J. On the uniqueness theorem for pseudo-additive entropies. Entropy 2017, 19, 605. [Google Scholar] [CrossRef]

- Uffink, J. Can the maximum entropy principle be explained as a consistency requirement? Stud. Hist. Philos. Sci. B 1995, 26, 223–261. [Google Scholar] [CrossRef]

- Hanel, R.; Thurner, S.; Gell-Mann, M. Generalized entropies and the transformation group of superstatistics. Proc. Natl. Acad. Sci. USA 2011, 108, 6390–6394. [Google Scholar] [CrossRef]

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).