The Entropy-Based Time Domain Feature Extraction for Online Concept Drift Detection

Abstract

1. Introduction

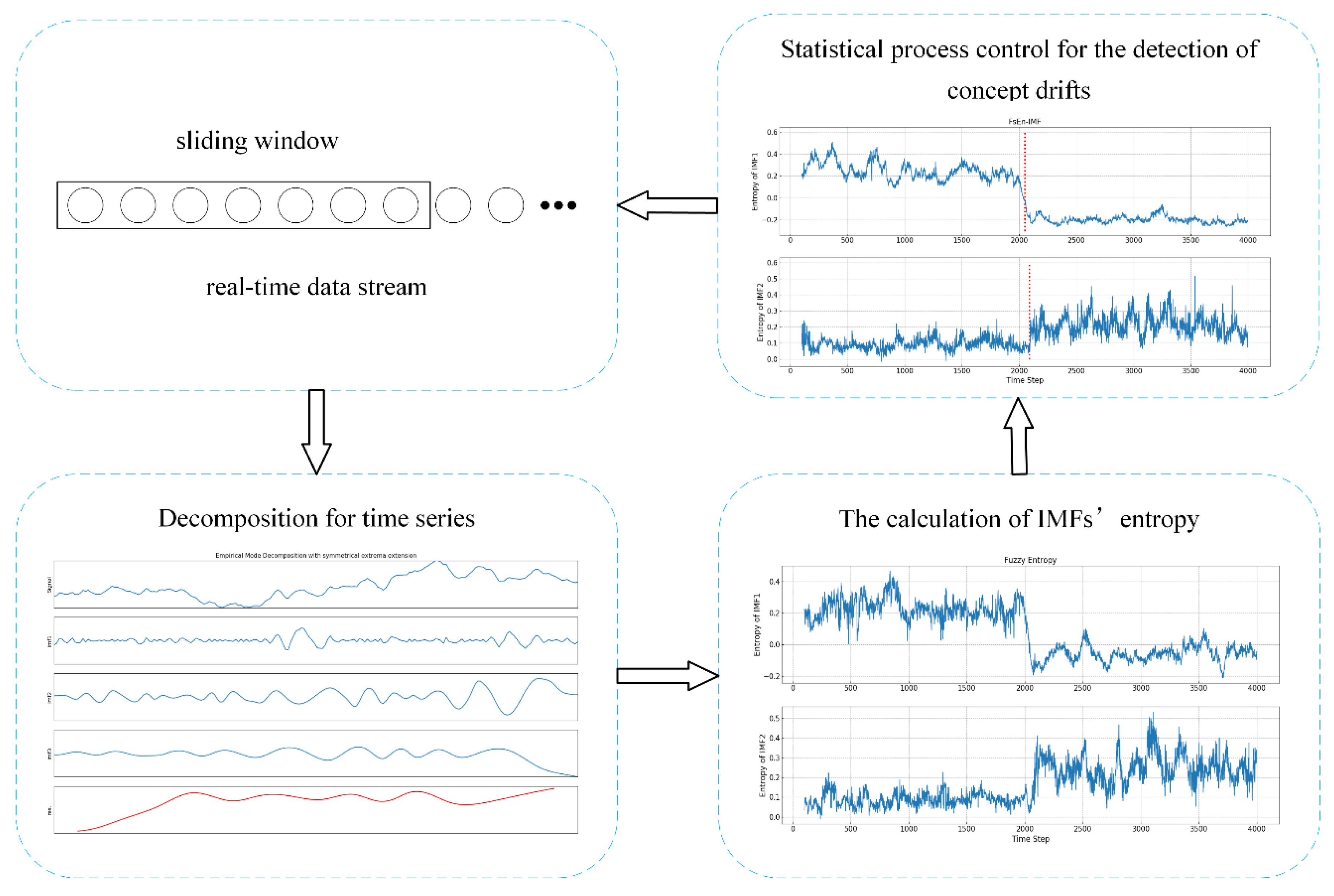

- A novel unsupervised algorithm is proposed for online concept drift detection in time series; it detects the occurrence of concept drift in streaming data by capturing the fine structure of the data at different time scales.

- Entropy methods are used to capture changes in the intrinsic structure of the original sequence in different time domains, and several application scenarios are discussed in detail according to the characteristics of each entropy.

- A statistical process control method based on the generalized likelihood ratio (GLR) is designed to monitor changes in the resulting entropy values, so that concept drift is detected promptly while false alarms are reduced.

2. Related Works

3. Model

3.1. The Decomposition for Time Series

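- Step 1. Let $x(t)$, $t = 1, 2, \dots, T$, denote the time series. Suppose $x(t)$ contains $M$ local maxima and $N$ local minima, whose time indices are denoted $t_{\max}(i)$, $i = 1, \dots, M$, and $t_{\min}(j)$, $j = 1, \dots, N$, respectively. The corresponding local maximum and local minimum values are $x(t_{\max}(i))$ and $x(t_{\min}(j))$.

- Step 2. Start from the left side. When the first extremum is a local maximum, that is, $t_{\max}(1) < t_{\min}(1)$, and the value of the left endpoint is larger than the first local minimum value, that is, $x(1) > x(t_{\min}(1))$, the first local maximum point is used as the center of symmetry to extend $d$ units to the left. The time indices and values of the extension sequence are

$$t = 1 - k, \qquad x(1 - k) = x\big(2t_{\max}(1) - 1 + k\big), \qquad k = 1, \dots, d.$$

- Step 3. When the first extremum is a local minimum, that is, $t_{\min}(1) < t_{\max}(1)$, and the value of the left endpoint is smaller than the first local maximum value, that is, $x(1) < x(t_{\max}(1))$, the first local minimum point is used as the center of symmetry to extend $d$ units to the left. The time indices and values of the extension sequence are

$$t = 1 - k, \qquad x(1 - k) = x\big(2t_{\min}(1) - 1 + k\big), \qquad k = 1, \dots, d.$$

- Step 4. In the remaining cases, that is, $t_{\max}(1) < t_{\min}(1)$ with $x(1) \le x(t_{\min}(1))$, or $t_{\min}(1) < t_{\max}(1)$ with $x(1) \ge x(t_{\max}(1))$, the left endpoint is used as the center of symmetry to extend $d$ units to the left, and the time indices and values of the extension sequence are

$$t = 1 - k, \qquad x(1 - k) = x(1 + k), \qquad k = 1, \dots, d.$$

- Step 5. Extend the right endpoint in the same way, using the last local maximum, the last local minimum, and the right endpoint $x(T)$.

- Step 6. Find all local maximum and local minimum points of the extended sequence, and fit the upper envelope $e_{\max}(t)$ through the maxima and the lower envelope $e_{\min}(t)$ through the minima by cubic spline interpolation, so that the sequence lies between the two envelopes. Then compute the mean of the two envelopes and subtract it from the sequence to obtain a new sequence $h(t)$:

$$m(t) = \frac{e_{\max}(t) + e_{\min}(t)}{2}, \qquad h(t) = x(t) - m(t).$$

- Step 7. Check whether $h(t)$ meets the following conditions:

  - (1) The number of local extrema and the number of zero crossings are equal or differ by at most one.
  - (2) The mean of the upper envelope (through the local maxima) and the lower envelope (through the local minima) is zero.

  If both conditions are satisfied, $h(t)$ is called the $s$-th intrinsic mode function (IMF) and denoted $c_s(t)$, where $s$ counts how many times Steps 6 and 7 have produced an IMF. Otherwise, replace $x(t)$ with $h(t)$ and repeat Step 6 until $h(t)$ meets both criteria.

- Step 8. The residual $r_s(t) = x(t) - c_s(t)$ is the difference between the sequence at the start of the current round of Steps 6–7 (the original series for $s = 1$, the previous residual $r_{s-1}(t)$ otherwise) and the IMF $c_s(t)$ obtained in Step 7; this residual then replaces $x(t)$ to calculate the next IMF. Steps 6–7 are repeated $f$ times, until the $f$-th residual $r_f(t)$ is a monotonic function. In this way, the original time series is represented as

$$x(t) = \sum_{s=1}^{f} c_s(t) + r_f(t).$$

- Step 9. Delete the extension parts and retain only the portions of the IMFs and the residual that correspond to the original time indices $t = 1, \dots, T$.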
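The decomposition procedure can be sketched in Python with NumPy. This is a minimal, non-authoritative sketch under several assumptions: the mirror extension follows the left/right case analysis described above, the envelopes are natural cubic splines, condition (2) is relaxed to a hypothetical tolerance `tol` on the envelope mean, the "monotonic residual" stopping rule is approximated by "fewer than two maxima or minima", and `d`, `tol`, `max_imfs`, and `max_sift` are illustrative parameters rather than values from the paper. All function names are hypothetical.

```python
import numpy as np


def local_extrema(x):
    """Indices of interior local maxima and minima of a 1-D array."""
    d = np.diff(x)
    maxima = np.where((d[:-1] > 0) & (d[1:] <= 0))[0] + 1
    minima = np.where((d[:-1] < 0) & (d[1:] >= 0))[0] + 1
    return maxima, minima


def natural_cubic_spline(xk, yk, xq):
    """Evaluate the natural cubic spline through knots (xk, yk) at points xq."""
    n, h = len(xk), np.diff(xk)
    A, b = np.zeros((n, n)), np.zeros(n)
    A[0, 0] = A[-1, -1] = 1.0  # natural ends: zero second derivative
    for i in range(1, n - 1):
        A[i, i - 1:i + 2] = [h[i - 1], 2 * (h[i - 1] + h[i]), h[i]]
        b[i] = 6 * ((yk[i + 1] - yk[i]) / h[i] - (yk[i] - yk[i - 1]) / h[i - 1])
    M = np.linalg.solve(A, b)  # second derivatives at the knots
    i = np.clip(np.searchsorted(xk, xq) - 1, 0, n - 2)  # segment of each query
    hi, t0, t1 = h[i], xk[i + 1] - xq, xq - xk[i]
    return ((M[i] * t0**3 + M[i + 1] * t1**3) / (6 * hi)
            + (yk[i] / hi - M[i] * hi / 6) * t0
            + (yk[i + 1] / hi - M[i + 1] * hi / 6) * t1)


def extend_left(x, d):
    """d mirror-extension samples for the left end (leftmost first)."""
    maxima, minima = local_extrema(x)
    center = 0  # default: mirror about the left endpoint
    if len(maxima) and len(minima):
        if maxima[0] < minima[0] and x[0] > x[minima[0]]:
            center = maxima[0]  # mirror about the first local maximum
        elif minima[0] < maxima[0] and x[0] < x[maxima[0]]:
            center = minima[0]  # mirror about the first local minimum
    src = 2 * center + np.arange(d, 0, -1)  # 0-based: index -k <- 2*center + k
    return x[np.clip(src, 0, len(x) - 1)]


def envelope_mean(h):
    """Mean of the spline envelopes, or None when there are too few extrema."""
    maxima, minima = local_extrema(h)
    if len(maxima) < 2 or len(minima) < 2:
        return None
    t = np.arange(len(h), dtype=float)
    upper = natural_cubic_spline(maxima.astype(float), h[maxima], t)
    lower = natural_cubic_spline(minima.astype(float), h[minima], t)
    return (upper + lower) / 2


def is_imf(h, m, tol):
    """Condition (1) exactly; condition (2) as |mean envelope| <= tol * std(h)."""
    maxima, minima = local_extrema(h)
    n_extrema = len(maxima) + len(minima)
    n_zero = np.count_nonzero(np.diff(np.sign(h)) != 0)
    return abs(n_extrema - n_zero) <= 1 and np.max(np.abs(m)) <= tol * np.std(h)


def emd(x, d=20, tol=0.05, max_imfs=8, max_sift=30):
    """Return (imfs, residual) for x, with the mirror extension removed."""
    x = np.asarray(x, dtype=float)
    n = len(x)
    ext = np.concatenate([extend_left(x, d), x, extend_left(x[::-1], d)[::-1]])
    residual, imfs = ext.copy(), []
    for _ in range(max_imfs):
        if envelope_mean(residual) is None:  # too few extrema: treat as trend
            break
        h = residual.copy()
        for _ in range(max_sift):  # sifting loop
            m = envelope_mean(h)
            if m is None or is_imf(h, m, tol):
                break
            h = h - m
        imfs.append(h)
        residual = residual - h  # residual feeds the next IMF
    keep = slice(d, d + n)  # drop the extension, keep the original span
    return [imf[keep] for imf in imfs], residual[keep]


t = np.linspace(0, 1, 400)
signal = np.sin(2 * np.pi * 5 * t) + 0.5 * np.sin(2 * np.pi * 40 * t) + t
imfs, res = emd(signal)
```

Because each IMF is subtracted from the running residual, the IMFs plus the final residual reproduce the input up to floating-point error, which is a convenient sanity check on any EMD implementation.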

3.2. The Calculation of IMFs’ Entropy

- Step 1. Given a time series $u(1), u(2), \dots, u(n)$, choose a threshold $r$ for similarity comparison (usually $r = 0.2\sigma$, where $\sigma$ is the standard deviation of the original sequence) and an embedding dimension $m$ (usually 2 or 3) that defines the length of the reconstructed vectors.

- Step 2. Reconstruct the original sequence into $n - m + 1$ vectors $X(1), \dots, X(n - m + 1)$, where $X(i) = [u(i), u(i + 1), \dots, u(i + m - 1)]$.

- Step 3. Calculate the distance between two reconstructed vectors $X(i)$ and $X(j)$ as the maximum absolute difference between their corresponding elements:

$$d[X(i), X(j)] = \max_{k = 0, \dots, m - 1} |u(i + k) - u(j + k)|.$$

- Step 4. For each $i$, count the number of vectors $X(j)$ satisfying $d[X(i), X(j)] \le r$, and divide it by the total number of vectors:

$$C_i^m(r) = \frac{\#\{\, j : d[X(i), X(j)] \le r \,\}}{n - m + 1}.$$

This is called the template matching process of $X(i)$, and $C_i^m(r)$ is the probability that any $X(j)$ matches the template $X(i)$.

- Step 5. Calculate the average similarity rate:

$$\Phi^m(r) = \frac{1}{n - m + 1} \sum_{i=1}^{n - m + 1} \ln C_i^m(r).$$

- Step 6. Repeat Steps 1–5 with the vector length increased from $m$ to $m + 1$ to obtain $\Phi^{m+1}(r)$.

- Step 7. Calculate the approximate entropy:

$$\mathrm{ApEn}(m, r) = \Phi^m(r) - \Phi^{m+1}(r).$$
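A direct NumPy transcription of these steps is sketched below. The function name and the defaults `m=2`, `r_factor=0.2` are illustrative choices within the ranges quoted above; the pairwise distance matrix costs O(n²) memory, which is acceptable for sliding windows of a few hundred samples but not for very long series.

```python
import numpy as np


def approximate_entropy(u, m=2, r_factor=0.2):
    """Approximate entropy ApEn(m, r) with r = r_factor * std(u)."""
    u = np.asarray(u, dtype=float)
    n = len(u)
    r = r_factor * np.std(u)  # similarity threshold

    def phi(length):
        count = n - length + 1
        # overlapping vectors X(i) = [u(i), ..., u(i + length - 1)]
        X = np.array([u[i:i + length] for i in range(count)])
        # maximum-coordinate (Chebyshev) distance between all pairs
        dist = np.max(np.abs(X[:, None, :] - X[None, :, :]), axis=2)
        # matching probability C_i; self-matches are counted, so C_i > 0
        C = np.sum(dist <= r, axis=1) / count
        # average of the log matching probabilities
        return np.mean(np.log(C))

    # repeat with length m + 1 and take the difference
    return phi(m) - phi(m + 1)


periodic = np.sin(np.arange(300) * 2 * np.pi / 20)
noise = np.random.default_rng(1).standard_normal(300)
print(approximate_entropy(periodic), approximate_entropy(noise))
```

A regular signal such as the sine yields a much lower value than white noise, which is the property the method relies on to expose structural changes in the IMFs.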

3.3. Statistical Process Control for the Detection of Concept Drifts

- Once the number of consecutive observations reaches a predefined number, the GLR test statistic $G_t$ is calculated.

- If $G_t \le h$, where $h$ is an appropriate control threshold, there is insufficient evidence of a shift in the mean or variance of the data stream.

- If $G_t > h$, there is evidence of a shift in the mean or variance of the data stream.
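The section does not spell out the form of the test statistic. As a hedged illustration only, the sketch below uses the standard two-segment Gaussian likelihood-ratio statistic for a single change in mean and/or variance; it is not necessarily the exact (possibly corrected) statistic used in the paper, and the threshold `h = 20.0` and `min_seg` are hypothetical values. The maximizing split doubles as an estimate of the change point, which is how a change position such as ϑ can be reported.

```python
import numpy as np


def glr_mean_variance(y, min_seg=5):
    """Return (G, k_hat) for one change in mean and/or variance.

    Under H1 the first k points are N(mu1, s1^2) and the rest N(mu2, s2^2);
    under H0 all points are N(mu0, s0^2). With MLE variances, twice the
    log-likelihood ratio for split k is
        t*ln(s0^2) - k*ln(s1^2) - (t - k)*ln(s2^2),
    and G is its maximum over admissible splits.
    """
    y = np.asarray(y, dtype=float)
    t = len(y)
    if t < 2 * min_seg:
        return 0.0, None  # not enough observations to test yet

    def log_var(seg):
        return np.log(max(np.var(seg), 1e-12))  # MLE variance, guarded against 0

    base = t * log_var(y)
    best_g, best_k = -np.inf, None
    for k in range(min_seg, t - min_seg + 1):
        g = base - k * log_var(y[:k]) - (t - k) * log_var(y[k:])
        if g > best_g:
            best_g, best_k = float(g), k
    return best_g, best_k


rng = np.random.default_rng(0)
no_change = rng.normal(0.0, 1.0, 200)
shifted = np.concatenate([rng.normal(0.0, 1.0, 100), rng.normal(3.0, 1.0, 100)])
h = 20.0  # hypothetical control threshold
for name, y in [("no change", no_change), ("mean shift", shifted)]:
    g, k = glr_mean_variance(y)
    print(name, round(g, 1), k, "drift" if g > h else "no evidence of drift")
```

The double loop is written for clarity; a streaming implementation would keep running sums so that each new observation updates the statistic in linear time.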

3.4. The Overall Approach of Concept Drifts Detection

| Algorithm 1 | The overall algorithm of ETFE |
|---|---|
| Input: | data stream $x_1, x_2, \dots$ |
| Initialization: | initialize the parameters of the specified entropy, the size $w$ of the sliding window $W$, and the threshold $h$ of the control limit |
| 1 | foreach observation $x_i$ in stream do |
| 2 | if the sliding window $W$ holds fewer than $w$ observations then |
| 3 | append $x_i$ to the sliding window $W$ |
| 4 | continue |
| 5 | else |
| 6 | append $x_i$ to the sliding window $W$ |
| 7 | imfs ← EMD($W$) /* apply EMD to the data in the sliding window */ |
| 8 | entropy value $e_i$ ← Entropy(imfs) /* use the specified entropy method to calculate the entropy value of the IMFs */ |
| 9 | update the interim parameters of GLR with $e_i$ |
| 10 | calculate the GLR test statistic $G$ |
| 11 | /* the GLR test is used to find the change point ϑ */ |
| 12 | if $G \le h$ then |
| 13 | there is no evidence that a drift has occurred |
| 14 | else |
| 15 | there is evidence that a drift has occurred |
| 16 | drift detection position ← ϑ |
| 17 | drift detection time ← $i$ |
| 18 | restart from the next observation |
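Algorithm 1 can be sketched as a streaming loop. In this minimal sketch the decomposition and entropy steps are injected as callables so the control flow stands on its own: for a self-contained example, the identity function is a hypothetical stand-in for EMD, a normalized permutation entropy summed over the components stands in for the entropy step (summing per-component entropies is an assumption), and the GLR statistic is assumed to be a two-segment Gaussian likelihood ratio for a mean/variance change, computed with running sums. The window size `w`, threshold `h`, and `min_len` (the predefined number of entropy values before testing) are illustrative values, not the paper's settings.

```python
from collections import deque
from math import factorial

import numpy as np


def permutation_entropy(x, order=3, delay=1):
    """Normalized permutation entropy (Bandt-Pompe), in [0, 1]."""
    n = len(x) - (order - 1) * delay
    emb = np.array([x[i:i + order * delay:delay] for i in range(n)])
    patterns = np.argsort(emb, axis=1, kind="stable")
    _, counts = np.unique(patterns, axis=0, return_counts=True)
    p = counts / counts.sum()
    return float(-(p * np.log(p)).sum() / np.log(factorial(order)))


def glr(y, min_seg=5):
    """Two-segment Gaussian GLR for a mean/variance change: (G, split index)."""
    y = np.asarray(y, dtype=float)
    t = len(y)
    c1, c2 = np.cumsum(y), np.cumsum(y * y)
    k = np.arange(min_seg, t - min_seg + 1)  # size of the first segment

    def mle_var(s1, s2, m):
        return np.maximum(s2 / m - (s1 / m) ** 2, 1e-12)  # guard zero variance

    v0 = mle_var(c1[-1], c2[-1], t)
    v1 = mle_var(c1[k - 1], c2[k - 1], k)
    v2 = mle_var(c1[-1] - c1[k - 1], c2[-1] - c2[k - 1], t - k)
    g = t * np.log(v0) - k * np.log(v1) - (t - k) * np.log(v2)
    j = int(np.argmax(g))
    return float(g[j]), int(k[j])


def etfe_stream(stream, w=100, h=30.0, min_len=20,
                decompose=lambda win: [win], entropy=permutation_entropy):
    """Yield (detection time i, estimated change position) for each alarm."""
    window = deque(maxlen=w)  # sliding window W of size w
    ent, ent_times = [], []   # entropy values and the time index of each
    for i, x_i in enumerate(stream):
        window.append(x_i)
        if len(window) < w:  # window not yet full: keep collecting
            continue
        comps = decompose(np.asarray(window))  # stand-in for EMD
        ent.append(sum(entropy(c) for c in comps))
        ent_times.append(i)
        if len(ent) < min_len:  # wait for enough entropy values
            continue
        g, k = glr(ent)
        if g > h:  # evidence of drift
            yield i, ent_times[k]  # detection time, position of change
            window.clear()
            ent.clear()
            ent_times.clear()  # restart from the next observation


rng = np.random.default_rng(0)
stream = np.concatenate([np.zeros(600), rng.standard_normal(400)])
print(list(etfe_stream(stream)))
```

Swapping in a real EMD routine and one of the entropy measures from the parameter table only changes the two callables; the window, GLR, and restart logic stay the same.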

4. Performance

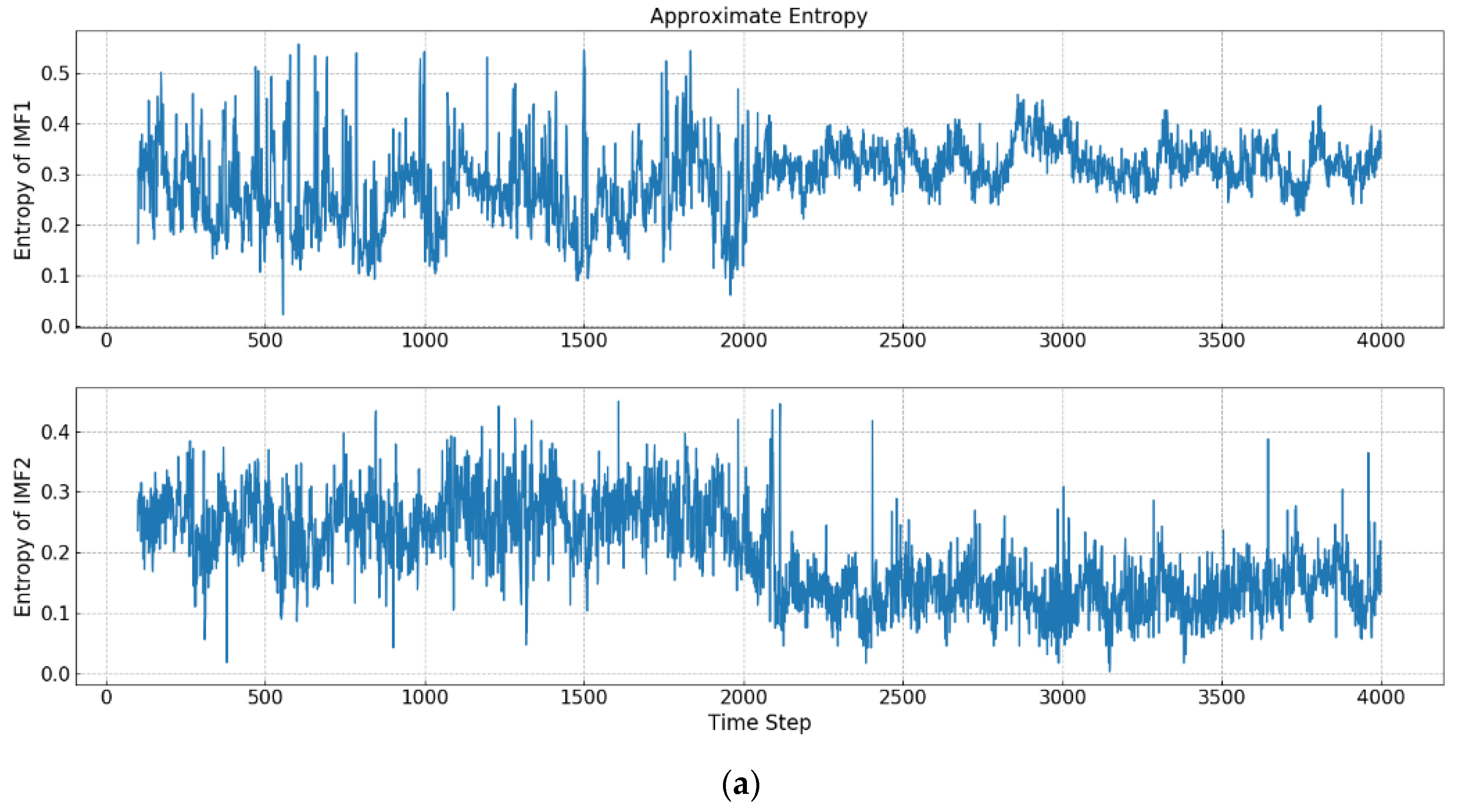

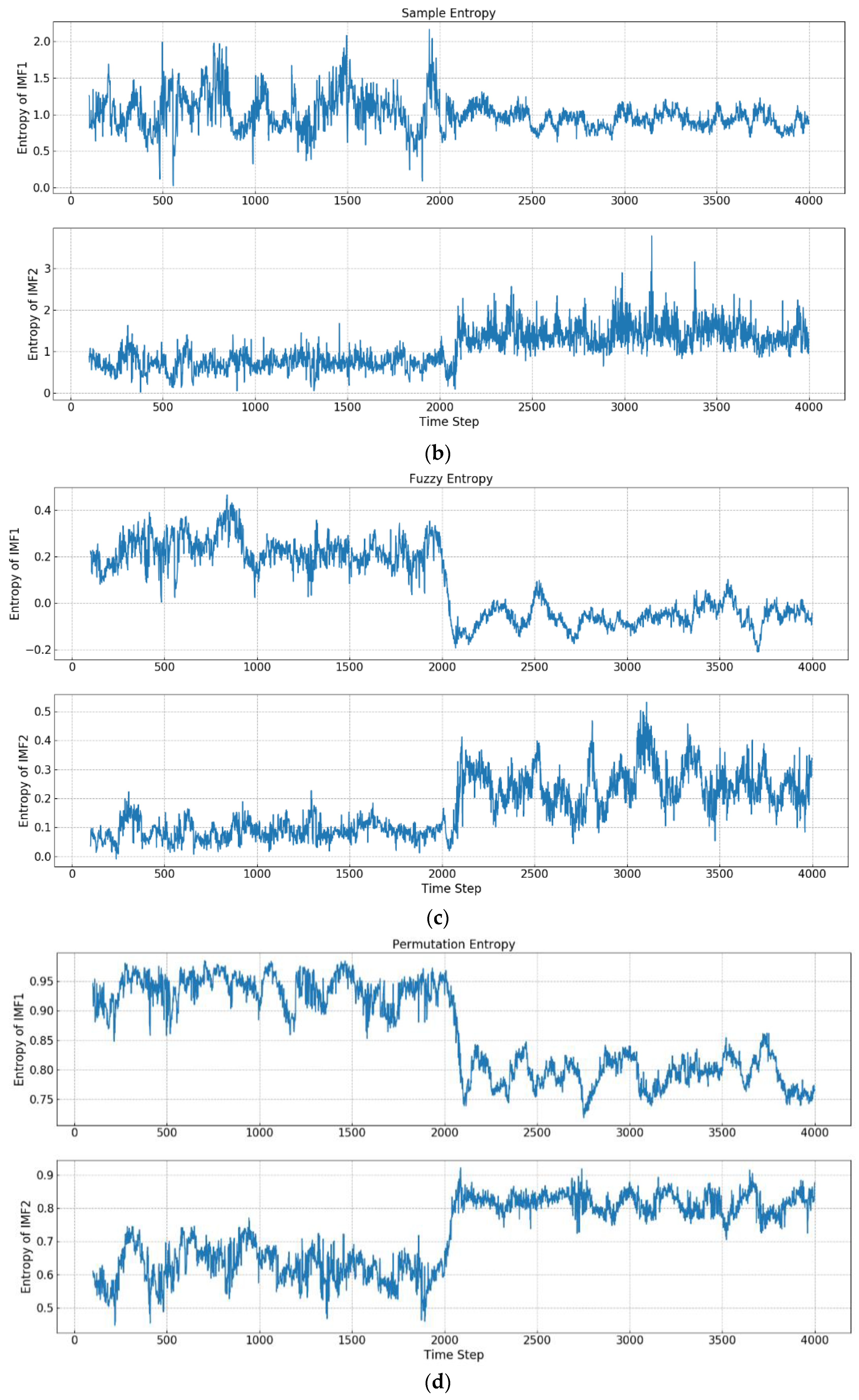

4.1. The Evaluation of Various Entropy Methods

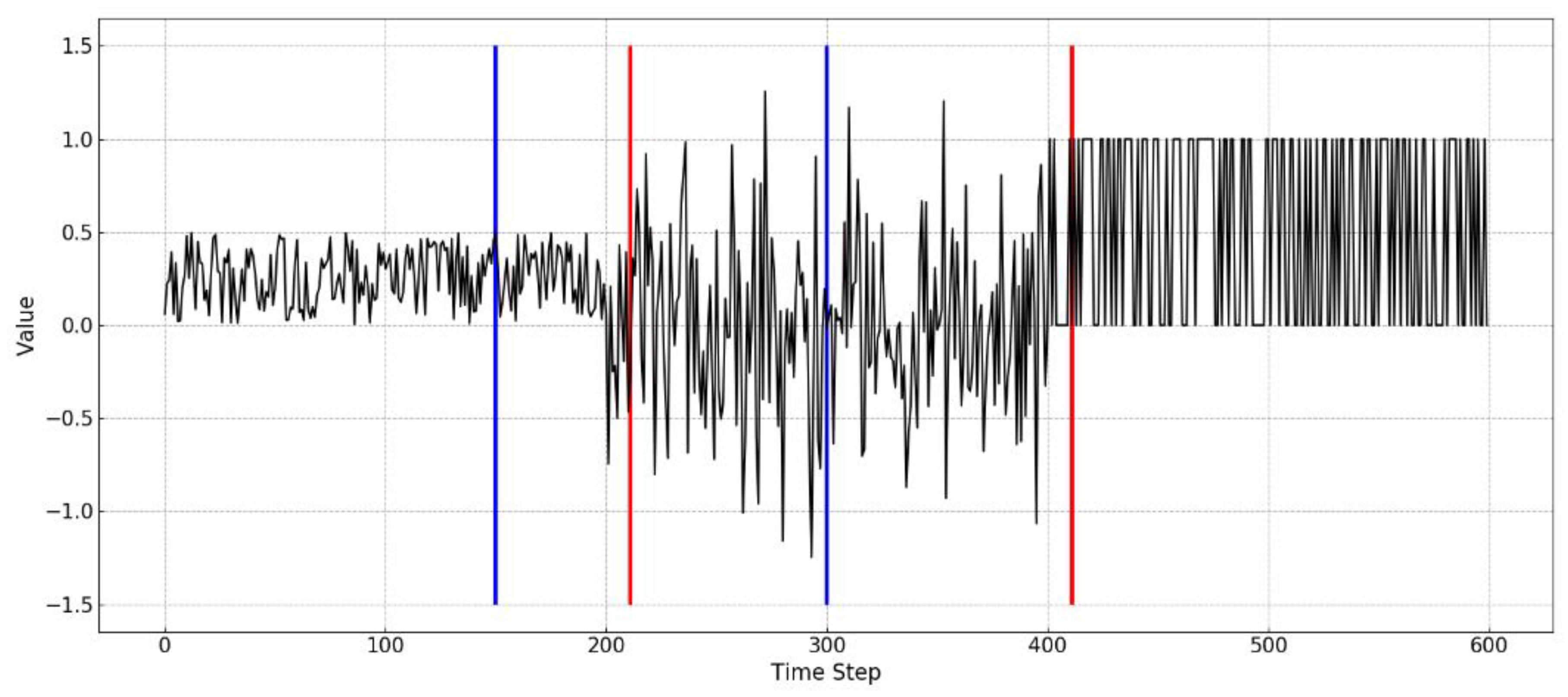

4.2. Experiments in Synthetic Data

4.3. Experiments in Real Data

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Deb, C.; Zhang, F.; Yang, J.; Lee, S.E.; Shah, K.W. A review on time series forecasting techniques for building energy consumption. Renew. Sustain. Energy Rev. 2017, 74, 902–924.

- Luo, C.; Tan, C.; Zheng, Y.J. Long-term prediction of time series based on stepwise linear division algorithm and time-variant zonary fuzzy information granules. Int. J. Approx. Reason. 2019, 108, 38–61.

- Straat, M.; Abadi, F.; Göpfert, C.; Hammer, B.; Biehl, M. Statistical mechanics of on-line learning under concept drift. Entropy 2018, 20, 775.

- Liu, H.; Xu, B.; Lu, D.; Zhang, G. A path planning approach for crowd evacuation in buildings based on improved artificial bee colony algorithm. Appl. Soft Comput. 2018, 68, 360–376.

- Sethi, T.S.; Kantardzic, M. On the reliable detection of concept drift from streaming unlabeled data. Expert Syst. Appl. 2017, 82, 77–99.

- Barros, R.S.M.; Santos, S.G.T.G. A Large-scale Comparison of Concept Drift Detectors. Inf. Sci. 2018, 451–452, 348–370.

- Ji, C.; Zhao, C.; Liu, S.; Yang, C.; Pan, L.; Wu, L.; Meng, X. A fast shapelet selection algorithm for time series classification. Comput. Netw. 2019, 148, 231–240.

- Costa, M.; Goldberger, A. Generalized Multiscale Entropy Analysis: Application to Quantifying the Complex Volatility of Human Heartbeat Time Series. Entropy 2015, 17, 1197–1203.

- Luo, C.; Zhang, N.; Wang, X. Time series prediction based on intuitionistic fuzzy cognitive map. Soft Comput. 2019, 1–16.

- Gama, J.; Medas, P.; Castillo, G.; Rodrigues, P.P. Learning with Drift Detection. In Proceedings of the 17th Brazilian Symposium on Artificial Intelligence, São Luis, Brazil, 29 September–1 October 2004.

- Ross, G.J.; Adams, N.M.; Tasoulis, D.K.; Hand, D.J. Exponentially weighted moving average charts for detecting concept drift. Pattern Recognit. Lett. 2012, 33, 191–198.

- Nishida, K.; Yamauchi, K. Detecting Concept Drift Using Statistical Testing. In Discovery Science: Proceedings of the 10th International Conference, Sendai, Japan, 1–4 October 2007.

- Minku, L.L.; Yao, X. DDD: A New Ensemble Approach for Dealing with Concept Drift. IEEE Trans. Knowl. Data Eng. 2012, 24, 619–633.

- Krawczyk, B.; Minku, L.L.; Gama, J.; Stefanowski, J.; Woźniak, M. Ensemble learning for data stream analysis: A survey. Inf. Fusion 2017, 37, 132–156.

- Ditzler, G.; Polikar, R. Hellinger Distance Based Drift Detection for Nonstationary Environments. In Proceedings of the IEEE Symposium on Computational Intelligence in Dynamic and Uncertain Environments (CIDUE), Paris, France, 11–15 April 2011.

- Liu, A.; Lu, J.; Liu, F.; Zhang, G. Accumulating regional density dissimilarity for concept drift detection in data streams. Pattern Recognit. 2018, 76, 256–272.

- Cavalcante, R.C.; Minku, L.L.; Oliveira, A.L.I. FEDD: Feature Extraction for Explicit Concept Drift Detection in Time Series. In Proceedings of the International Joint Conference on Neural Networks (IJCNN), Vancouver, BC, Canada, 24–29 July 2016.

- Nannan, Z.; Chao, L. Adaptive online time series prediction based on a novel dynamic fuzzy cognitive map. J. Intell. Fuzzy Syst. 2019, 36, 5291–5303.

- Costa, F.G.D.; Mello, R.F.D. A Stable and Online Approach to Detect Concept Drift in Data Streams. In Proceedings of the Brazilian Conference on Intelligent Systems, Sao Paulo, Brazil, 18–22 October 2014.

- Luo, C.; Tan, C.; Wang, X.; Zheng, Y. An evolving recurrent interval type-2 intuitionistic fuzzy neural network for online learning and time series prediction. Appl. Soft Comput. 2019, 78, 150–163.

- Huang, N.E.; Shen, Z.; Long, S.R.; Wu, M.C.; Shih, H.H.; Zheng, Q.; Yen, N.C.; Tung, C.C.; Liu, H.H. The empirical mode decomposition and the Hilbert spectrum for nonlinear and non-stationary time series analysis. Proc. Math. Phys. Eng. Sci. 1998, 454, 903–995.

- Siegmund, D.; Venkatraman, E.S. Using the Generalized Likelihood Ratio Statistic for Sequential Detection of a Change-Point. Ann. Stat. 1995, 23, 255–271.

- Cavalcante, R.C.; Oliveira, A.L.I. An approach to handle concept drift in financial time series based on Extreme Learning Machines and explicit Drift Detection. In Proceedings of the 2015 International Joint Conference on Neural Networks (IJCNN), Killarney, Ireland, 12–17 July 2015; pp. 1–8.

- Huang, G.B.; Zhu, Q.Y.; Siew, C.K. Extreme learning machine: Theory and applications. Neurocomputing 2006, 70, 489–501.

- Guajardo, J.A.; Weber, R.; Miranda, J. A model updating strategy for predicting time series with seasonal patterns. Appl. Soft Comput. 2010, 10, 276–283.

- Ji, C.; Zou, X.; Liu, S.; Pan, L. ADARC: An anomaly detection algorithm based on relative outlier distance and biseries correlation. Softw: Pract Exper. 2019, 1–17.

- Zhu, B.; Chevallier, J. Forecasting Carbon Price with Empirical Mode Decomposition and Least Squares Support Vector Regression. Appl. Energy 2017, 191, 521–530.

- Sharma, R.; Vignolo, L.; Schlotthauer, G.; Colominas, M.A.; Rufiner, H.L.; Prasanna, S.R. Empirical Mode Decomposition for adaptive AM-FM analysis of Speech: A Review. Speech Commun. 2017, 88, 39–64.

- Wu, F.; Qu, L. An improved method for restraining the end effect in empirical mode decomposition and its applications to the fault diagnosis of large rotating machinery. J. Sound Vib. 2008, 314, 586–602.

- Deng, Y.; Wang, W.; Qian, C.; Wang, Z.; Dai, D. Boundary-processing-technique in EMD method and Hilbert transform. Chin. Sci. Bull. 2001, 46, 954.

- Xiong, T.; Bao, Y.; Hu, Z. Does restraining end effect matter in EMD-based modeling framework for time series prediction? Some experimental evidences. Neurocomputing 2014, 123, 174–184.

- Luo, C.; Song, X.; Zheng, Y. A novel forecasting model for the long-term fluctuation of time series based on polar fuzzy information granules. Inf. Sci. 2019, 512, 760–779.

- Pincus, S. Approximate entropy (ApEn) as a complexity measure. Chaos 1995, 5, 110–117.

- Richman, J.S.; Lake, D.E.; Moorman, J.R. Sample Entropy. Methods Enzymol. 2004, 384, 172–184.

- Kosko, B. Fuzzy entropy and conditioning. Inf. Sci. 1986, 40, 165–174.

- Bandt, C.; Pompe, B. Permutation entropy: A natural complexity measure for time series. Phys. Rev. Lett. 2002, 88, 174102.

- Fadlallah, B.; Chen, B.; Keil, A.; Príncipe, J. Weighted-permutation entropy: A complexity measure for time series incorporating amplitude information. Phys. Rev. E 2013, 87, 022911.

- Liu, X.; Wang, X.; Zhou, X.; Jiang, A. Appropriate use of the increment entropy for electrophysiological time series. Comput. Biol. Med. 2018, 95, 13–23.

- Hawkins, D.M.; Zamba, K.D. Statistical Process Control for Shifts in Mean or Variance Using a Changepoint Formulation. Technometrics 2005, 47, 164–173.

- Frías-Blanco, I.; del Campo-Ávila, J.; Ramos-Jimenez, G.; Morales-Bueno, R.; Ortiz-Díaz, A.; Caballero-Mota, Y. Online and Non-Parametric Drift Detection Methods Based on Hoeffding’s Bounds. IEEE Trans. Knowl. Data Eng. 2015, 27, 810–823.

- Bhaduri, M.; Zhan, J.; Chiu, C.; Zhan, F. A Novel Online and Non-Parametric Approach for Drift Detection in Big Data. IEEE Access 2017, 5, 15883–15892.

- Willsky, A.S.; Jones, H.L. A generalized likelihood ratio approach to the detection and estimation of jumps in linear systems. IEEE Trans. Autom. Control 1976, 21, 108–112.

- Ross, G.J. Parametric and Nonparametric Sequential Change Detection in R: The cpm Package. J. Stat. Softw. 2015, 66, 1–20.

- Github. Available online: https://github.com/dingfengqian/ETFE (accessed on 7 November 2019).

- Stevenson, N.; Tapani, K.; Lauronen, L.; Vanhatalo, S. A dataset of neonatal EEG recordings with seizures annotations. Sci. Data 2019, 6, 190039.

- Cohen, J. Weighted kappa: Nominal scale agreement with provision for scaled disagreement or partial credit. Psychol. Bull. 1968, 70, 213–220.

| Time Series Group | Concept | | |
|---|---|---|---|
| Linear 1 | 1 | {0.9, −0.2, 0.8, −0.5} | 0.5 |
| | 2 | {−0.3, 1.4, 0.4, −0.5} | 1.5 |
| | 3 | {1.5, −0.4, −0.3, 0.2} | 2.5 |
| | 4 | {−0.1, 1.4, 0.4, −0.7} | 3.5 |
| Linear 2 | 1 | {1.1, −0.6, 0.8, −0.5, −0.1, 0.3} | 0.5 |
| | 2 | {−0.1, 1.2, 0.4, 0.3, −0.2, −0.6} | 1.5 |
| | 3 | {1.2, −0.4, −0.3, 0.7, −0.6, 0.4} | 2.5 |
| | 4 | {−0.1, 1.1, 0.5, 0.2, −0.2, −0.5} | 3.5 |
| Linear 3 | 1 | {0.5, 0.5} | 0.5 |
| | 2 | {1.5, 0.5} | 1.5 |
| | 3 | {0.9, −0.2, 0.8, −0.5} | 2.5 |
| | 4 | {0.9, 0.8, −0.6, 0.2, −0.5, −0.2, 0.4} | 3.5 |

| Entropy Type | Parameters |
|---|---|
| Approximate Entropy | m = 3, r = 0.2 std |
| Sample Entropy | m = 3, r = 0.2 std |
| Fuzzy Entropy | m = 3, r = 0.2 std |
| Permutation Entropy | m = 4, τ = 1 |
| Weighted Permutation Entropy | m = 4, τ = 1 |
| Increment Entropy | m = 3, φ = 2 |
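Permutation entropy and its weighted variant from the table can be sketched as follows, reading the two listed parameters as embedding order 4 and delay 1. The sketch follows the standard Bandt–Pompe ordinal-pattern construction and Fadlallah et al.'s weighting of each pattern by the variance of its embedding vector; normalizing by ln(m!) so the result lies in [0, 1] is a common convention assumed here, and the function name is hypothetical.

```python
from math import factorial

import numpy as np


def permutation_entropy(x, order=4, delay=1, weighted=False):
    """Normalized permutation entropy, optionally variance-weighted (WPE)."""
    x = np.asarray(x, dtype=float)
    n = len(x) - (order - 1) * delay
    # embedding vectors [x(i), x(i + delay), ..., x(i + (order - 1) * delay)]
    emb = np.array([x[i:i + order * delay:delay] for i in range(n)])
    patterns = np.argsort(emb, axis=1, kind="stable")  # ordinal pattern per vector
    # WPE weights each pattern by the variance of its vector; PE uses weight 1
    weights = emb.var(axis=1) if weighted else np.ones(n)
    if weights.sum() == 0:
        return 0.0  # constant signal: no amplitude information to weight
    _, inverse = np.unique(patterns, axis=0, return_inverse=True)
    p = np.bincount(inverse.ravel(), weights=weights) / weights.sum()
    p = p[p > 0]
    return float(-(p * np.log(p)).sum() / np.log(factorial(order)))


noise = np.random.default_rng(0).standard_normal(5000)
print(permutation_entropy(noise), permutation_entropy(noise, weighted=True))
```

Both measures are close to 1 for white noise and 0 for a monotonic ramp; the weighted variant additionally discounts patterns formed by small-amplitude fluctuations.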

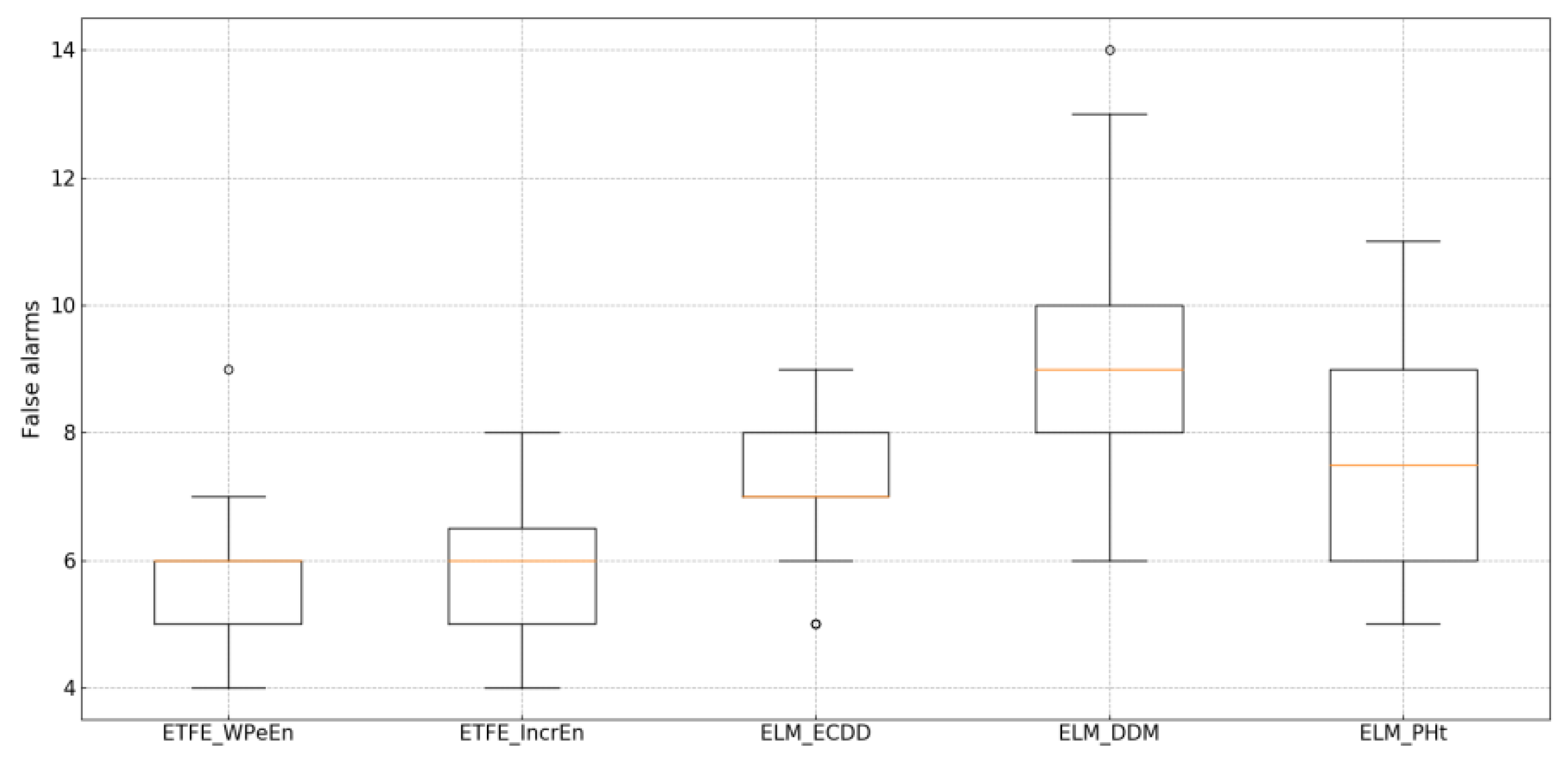

| Data Set | Method | Detection Delay (Instances) | Detection Position Offset (Instances) | False Alarms | Miss Detection Numbers |
|---|---|---|---|---|---|
| Linear 1 | ETFE_ApEn | 222.31 ± 60.91 | 45.19 ± 10.61 | 11.57 ± 2.11 | 0 |
| | ETFE_SampEn | 216.30 ± 71.21 | 59.05 ± 19.52 | 10.88 ± 1.95 | 0.03 ± 0.17 |
| | ETFE_FuzzEn | 264.53 ± 89.27 | 47.33 ± 8.91 | 12.61 ± 1.67 | 0.06 ± 0.24 |
| | ETFE_PeEn | 249.82 ± 77.36 | 31.62 ± 9.26 | 11.38 ± 1.42 | 0.03 ± 0.17 |
| | ETFE_WPeEn | 280.44 ± 81.46 | 63.51 ± 11.61 | 10.93 ± 1.38 | 0 |
| | ETFE_IncrEn | 251.71 ± 89.98 | 34.24 ± 13.56 | 11.55 ± 2.37 | 0.03 ± 0.17 |
| | FEDD_cos | 197.33 ± 56.67 | 197.33 ± 56.67 | 2.47 ± 1.33 | 0 |
| | FEDD_pear | 188.78 ± 43.91 | 188.78 ± 43.91 | 2.52 ± 1.17 | 0.03 ± 0.17 |
| | ELM_ECDD | 419.23 ± 97.34 | 419.23 ± 97.34 | 3.47 ± 2.37 | 0.56 ± 0.61 |
| | ELM_DDM | 306.66 ± 45.65 | 306.66 ± 45.65 | 4.89 ± 1.92 | 0.43 ± 0.49 |
| | ELM_PHt | 487.34 ± 87.40 | 487.34 ± 87.40 | 3.56 ± 1.87 | 0.54 ± 0.51 |
| Linear 2 | ETFE_ApEn | 300.95 ± 90.40 | 32.04 ± 12.63 | 13.61 ± 2.58 | 0 |
| | ETFE_SampEn | 398.20 ± 101.34 | 56.02 ± 1.42 | 12.43 ± 1.53 | 0.03 ± 0.17 |
| | ETFE_FuzzEn | 401.20 ± 121.77 | 67.23 ± 4.76 | 11.58 ± 1.07 | 0.03 ± 0.17 |
| | ETFE_PeEn | 345.94 ± 77.95 | 28.01 ± 1.93 | 10.98 ± 1.53 | 0 |
| | ETFE_WPeEn | 298.35 ± 81.85 | 44.11 ± 9.80 | 12.53 ± 1.57 | 0.03 ± 0.17 |
| | ETFE_IncrEn | 387.32 ± 99.29 | 51.22 ± 1.13 | 10.12 ± 2.10 | 0.06 ± 0.24 |
| | FEDD_cos | 256.93 ± 87.67 | 256.93 ± 87.67 | 2.56 ± 1.11 | 0.03 ± 0.17 |
| | FEDD_pear | 248.34 ± 98.24 | 248.34 ± 98.24 | 2.12 ± 1.23 | 0.03 ± 0.17 |
| | ELM_ECDD | 455.86 ± 104.98 | 455.86 ± 104.98 | 3.64 ± 1.82 | 0.54 ± 0.42 |
| | ELM_DDM | 411.31 ± 94.56 | 411.31 ± 94.56 | 4.78 ± 1.63 | 0.37 ± 0.38 |
| | ELM_PHt | 516.78 ± 132.54 | 516.78 ± 132.54 | 3.36 ± 1.66 | 0.58 ± 0.61 |
| Linear 3 | ETFE_ApEn | 411.52 ± 121.66 | 72.91 ± 1.22 | 10.77 ± 1.41 | 0 |
| | ETFE_SampEn | 512.38 ± 205.30 | 101.22 ± 9.25 | 9.89 ± 1.54 | 0 |
| | ETFE_FuzzEn | 503.06 ± 211.45 | 41.21 ± 9.31 | 11.55 ± 1.34 | 0 |
| | ETFE_PeEn | 385.57 ± 113.48 | 52.32 ± 1.54 | 12.71 ± 1.87 | 0.03 ± 0.17 |
| | ETFE_WPeEn | 431.35 ± 138.35 | 57.61 ± 4.52 | 9.78 ± 1.10 | 0 |
| | ETFE_IncrEn | 392.52 ± 177.43 | 48.31 ± 0.56 | 11.12 ± 1.16 | 0 |
| | FEDD_cos | 289.78 ± 89.63 | 289.78 ± 89.63 | 2.83 ± 1.53 | 0 |
| | FEDD_pear | 304.39 ± 89.10 | 304.39 ± 89.10 | 2.32 ± 1.29 | 0.03 ± 0.17 |
| | ELM_ECDD | 501.89 ± 160.35 | 501.89 ± 160.35 | 3.66 ± 1.86 | 0.60 ± 0.22 |
| | ELM_DDM | 453.80 ± 174.23 | 453.80 ± 174.23 | 4.23 ± 1.43 | 0.41 ± 0.33 |
| | ELM_PHt | 567.32 ± 214.55 | 567.32 ± 214.55 | 3.49 ± 1.44 | 0.53 ± 0.39 |
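The metrics in the table are not defined in this section. One plausible reading, consistent with Algorithm 1 reporting both a detection time and an estimated change position ϑ, is: detection delay = detection time − true drift point; position offset = |ϑ − true drift point|; false alarms = detections not matched to any drift; misses = drifts with no matched detection. Under this reading, a detector that reports no separate change position would show equal delay and offset, as the FEDD and ELM rows do. The sketch below implements that reading only; the matching rule (first unused detection within a hypothetical `max_delay` after each drift and before the next one) is an assumption.

```python
import numpy as np


def evaluate_detections(true_drifts, detections, max_delay=1000):
    """Score (time, position) detections against known drift points."""
    detections = sorted(detections)
    used, delays, offsets, misses = set(), [], [], 0
    next_drifts = list(true_drifts[1:]) + [np.inf]
    for drift, next_drift in zip(true_drifts, next_drifts):
        horizon = min(drift + max_delay, next_drift)
        # first unused detection at or after the drift and before the horizon
        match = next((j for j, (t, _) in enumerate(detections)
                      if j not in used and drift <= t < horizon), None)
        if match is None:
            misses += 1
            continue
        used.add(match)
        t, pos = detections[match]
        delays.append(t - drift)          # detection delay
        offsets.append(abs(pos - drift))  # detection position offset
    return {
        "delay": float(np.mean(delays)) if delays else float("nan"),
        "offset": float(np.mean(offsets)) if offsets else float("nan"),
        "false_alarms": len(detections) - len(used),  # unmatched detections
        "misses": misses,
    }


print(evaluate_detections([1000, 2000], [(400, 380), (1210, 1040), (2150, 2020)]))
# {'delay': 180.0, 'offset': 30.0, 'false_alarms': 1, 'misses': 0}
```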

| Data Set | Method | Detection Delay (Instances) | Detection Position Offset (Instances) | False Alarms | Miss Detection Numbers |
|---|---|---|---|---|---|
| Linear 1 | ETFE_ApEn | 382.91 ± 154.34 | 45.19 ± 11.35 | 9.13 ± 1.24 | 0.03 ± 0.17 |
| | ETFE_SampEn | 489.02 ± 169.55 | 69.05 ± 22.44 | 9.33 ± 1.54 | 0 |
| | ETFE_FuzzEn | 494.17 ± 201.74 | 78.33 ± 31.52 | 11.56 ± 2.11 | 0.06 ± 0.24 |
| | ETFE_PeEn | 329.82 ± 139.45 | 60.13 ± 17.88 | 11.17 ± 1.33 | 0.03 ± 0.17 |
| | ETFE_WPeEn | 430.21 ± 111.43 | 71.56 ± 26.43 | 9.21 ± 1.49 | 0 |
| | ETFE_IncrEn | 442.26 ± 122.43 | 44.24 ± 10.36 | 10.08 ± 1.34 | 0.06 ± 0.24 |
| | FEDD_cos | 197.33 ± 56.67 | 197.33 ± 56.67 | 2.47 ± 1.33 | 0 |
| | FEDD_pear | 188.78 ± 43.91 | 188.78 ± 43.91 | 2.52 ± 1.17 | 0.03 ± 0.17 |
| | ELM_ECDD | 419.23 ± 97.34 | 419.23 ± 97.34 | 3.47 ± 2.37 | 0.56 ± 0.61 |
| | ELM_DDM | 306.66 ± 45.65 | 306.66 ± 45.65 | 4.89 ± 1.92 | 0.43 ± 0.49 |
| | ELM_PHt | 487.34 ± 87.40 | 487.34 ± 87.40 | 3.56 ± 1.87 | 0.54 ± 0.51 |
| Linear 2 | ETFE_ApEn | 467.32 ± 144.77 | 72.30 ± 19.82 | 9.64 ± 1.34 | 0 |
| | ETFE_SampEn | 472.35 ± 156.81 | 83.14 ± 21.43 | 9.89 ± 1.16 | 0.06 ± 0.24 |
| | ETFE_FuzzEn | 367.75 ± 135.65 | 77.27 ± 13.21 | 12.57 ± 1.96 | 0.10 ± 0.3 |
| | ETFE_PeEn | 578.85 ± 189.11 | 48.34 ± 9.87 | 11.34 ± 1.78 | 0 |
| | ETFE_WPeEn | 414.35 ± 131.32 | 53.12 ± 12.21 | 11.56 ± 1.99 | 0.06 ± 0.24 |
| | ETFE_IncrEn | 517.56 ± 176.44 | 67.33 ± 15.43 | 12.33 ± 1.87 | 0.06 ± 0.24 |
| | FEDD_cos | 256.93 ± 87.67 | 256.93 ± 87.67 | 2.56 ± 1.11 | 0.03 ± 0.17 |
| | FEDD_pear | 248.34 ± 98.24 | 248.34 ± 98.24 | 2.12 ± 1.23 | 0.03 ± 0.17 |
| | ELM_ECDD | 455.86 ± 104.98 | 455.86 ± 104.98 | 3.64 ± 1.82 | 0.54 ± 0.42 |
| | ELM_DDM | 411.31 ± 94.56 | 411.31 ± 94.56 | 4.78 ± 1.63 | 0.37 ± 0.38 |
| | ELM_PHt | 516.78 ± 132.54 | 516.78 ± 132.54 | 3.36 ± 1.66 | 0.58 ± 0.61 |
| Linear 3 | ETFE_ApEn | 543.87 ± 189.45 | 89.53 ± 35.43 | 11.21 ± 1.60 | 0.03 ± 0.17 |
| | ETFE_SampEn | 598.45 ± 197.05 | 134.23 ± 62.34 | 9.80 ± 1.42 | 0.06 ± 0.24 |
| | ETFE_FuzzEn | 532.54 ± 156.07 | 88.23 ± 21.33 | 11.77 ± 1.50 | 0 |
| | ETFE_PeEn | 433.33 ± 145.67 | 124.66 ± 65.41 | 11.46 ± 1.23 | 0.06 ± 0.24 |
| | ETFE_WPeEn | 513.45 ± 173.40 | 111.76 ± 54.98 | 9.08 ± 1.09 | 0.03 ± 0.17 |
| | ETFE_IncrEn | 612.24 ± 211.04 | 156.78 ± 71.37 | 8.56 ± 1.76 | 0 |
| | FEDD_cos | 289.78 ± 89.63 | 289.78 ± 89.63 | 2.83 ± 1.53 | 0 |
| | FEDD_pear | 304.39 ± 89.10 | 304.39 ± 89.10 | 2.32 ± 1.29 | 0.03 ± 0.17 |
| | ELM_ECDD | 501.89 ± 160.35 | 501.89 ± 160.35 | 3.66 ± 1.86 | 0.60 ± 0.22 |
| | ELM_DDM | 453.80 ± 174.23 | 453.80 ± 174.23 | 4.23 ± 1.43 | 0.41 ± 0.33 |
| | ELM_PHt | 567.32 ± 214.55 | 567.32 ± 214.55 | 3.49 ± 1.44 | 0.53 ± 0.39 |

| Data | Annotated Period (s), Expert A | Annotated Period (s), Expert B | Annotated Period (s), Expert C | Selected Period (s) |
|---|---|---|---|---|
| EEG1 | [104, 121] | [96, 122] | [96, 121] | [70, 150] |
| EEG1 | [1179, 1209] | [1178, 1206] | [1179, 1194] | [1150, 1220] |
| EEG5 | [975, 1508] | [993, 1449] | [993, 1446] | [500, 2000] |
| EEG7 | [95, 112] | [97, 106] | [98, 110] | [80, 130] |
| EEG14 | [255, 278] | [254, 282] | [256, 279] | [210, 310] |
| EEG14 | [3331, 3342] | [3330, 3343] | [3330, 3342] | [3310, 3360] |
| EEG16 | [5685, 5707] | [5692, 5707] | [5685, 5706] | [5660, 5730] |
| EEG17 | [2957, 3011] | [2904, 3116] | [2901, 2940] | [2800, 3200] |
| EEG20 | [559, 586] | [563, 584] | [565, 585] | [540, 610] |
| EEG20 | [3827, 3885] | [3824, 3899] | [3827, 3886] | [3760, 3960] |
| EEG20 | [3962, 3980] | [3952, 3985] | [3965, 3980] | [3930, 4010] |
| EEG25 | [3449, 3477] | [3414, 3484] | [3451, 3473] | [3400, 3490] |
| EEG25 | [4792, 4814] | [4767, 4829] | [4792, 4811] | [4750, 4860] |
| EEG31 | [1885, 1964] | [1887, 1966] | [1887, 1966] | [1800, 2040] |
| EEG31 | [2423, 2524] | [2423, 2523] | [2423, 2522] | [2320, 2620] |
| EEG38 | [5367, 5460] | [5369, 5438] | [5369, 5438] | [5300, 5490] |
| EEG38 | [5857, 5886] | [5840, 5889] | [5859, 5885] | [5800, 6020] |
| EEG44 | [294, 375] | [297, 375] | [293, 374] | [210, 450] |
| EEG44 | [644, 661] | [647, 663] | [644, 663] | [620, 690] |
| EEG44 | [2504, 2518] | [2508, 2517] | [2504, 2517] | [2480, 2540] |
| EEG47 | [1841, 1898] | [1841, 1896] | [1832, 1898] | [1790, 1960] |
| EEG51 | [4356, 4684] | [4373, 4679] | [4344, 4663] | [4040, 5000] |
| EEG62 | [1344, 1725] | [1346, 1725] | [1336, 1725] | [940, 2125] |
| EEG63 | [2423, 2526] | [2427, 2528] | [2424, 2519] | [2330, 2630] |
| EEG67 | [751, 780] | [753, 788] | [754, 782] | [720, 810] |
| EEG67 | [1366, 1410] | [1367, 1410] | [1348, 1407] | [1300, 1470] |
| EEG73 | [1429, 1454] | [1429, 1454] | [1429, 1479] | [1380, 1500] |
| EEG76 | [391, 436] | [393, 432] | [386, 435] | [350, 475] |
| EEG79 | [565, 620] | [540, 620] | [566, 620] | [460, 700] |
| EEG79 | [2441, 2494] | [2416, 2490] | [2444, 2493] | [2360, 2570] |

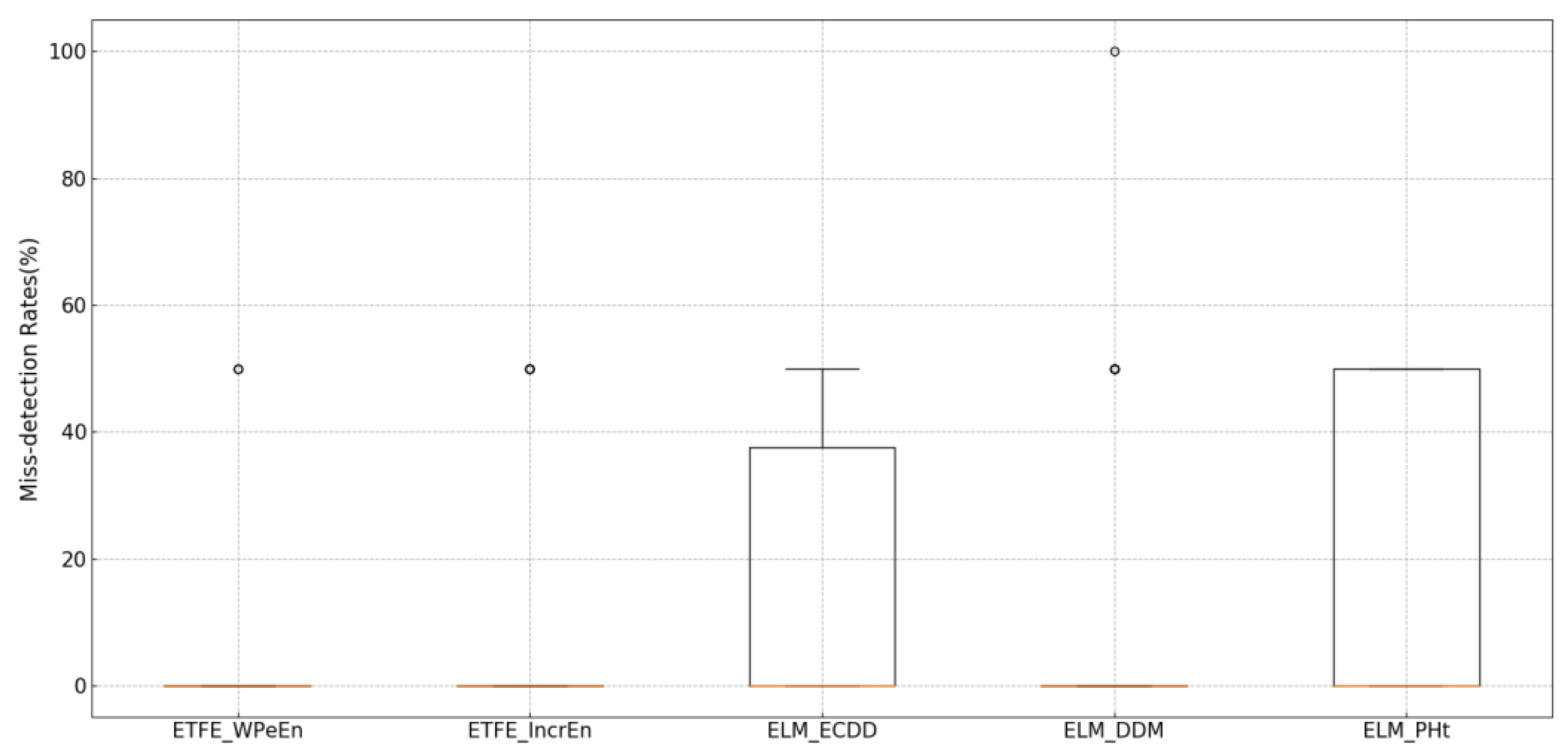

| Methods Comparison | Expert A | Expert B | Expert C |
|---|---|---|---|
| ETFE_WPeEn | 0.824 (0.705–0.901) | 0.802 (0.698–0.895) | 0.833 (0.716–0.871) |
| ETFE_IncrEn | 0.815 (0.731–0.897) | 0.798 (0.717–0.874) | 0.825 (0.729–0.903) |
| ELM_ECDD | 0.655 (0.545–0.713) | 0.637 (0.596–0.744) | 0.678 (0.530–0.796) |
| ELM_DDM | 0.715 (0.601–0.813) | 0.694 (0.612–0.785) | 0.723 (0.578–0.849) |
| ELM_PHt | 0.709 (0.604–0.785) | 0.661 (0.591–0.762) | 0.735 (0.586–0.801) |
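The agreement scores in this table appear to be kappa statistics with interval estimates, and Cohen's weighted kappa is among the references. As a minimal sketch under that assumption, the code below converts seizure intervals (in seconds) into per-second binary labels and computes Cohen's kappa between two annotations; with only two categories, linearly or quadratically weighted kappa coincides with the unweighted statistic. The per-second discretization and the function names are hypothetical, and the intervals shown in the table are not reproduced here.

```python
import numpy as np


def intervals_to_labels(intervals, start, end):
    """Per-second 0/1 labels on [start, end) marking closed [a, b] intervals."""
    t = np.arange(start, end)
    labels = np.zeros(len(t), dtype=int)
    for a, b in intervals:
        labels[(t >= a) & (t <= b)] = 1
    return labels


def cohen_kappa(y1, y2):
    """Cohen's kappa for two binary label sequences of equal length."""
    y1, y2 = np.asarray(y1), np.asarray(y2)
    p_o = np.mean(y1 == y2)                  # observed agreement
    p1, p2 = y1.mean(), y2.mean()            # marginal rates of label 1
    p_e = p1 * p2 + (1 - p1) * (1 - p2)      # agreement expected by chance
    return 1.0 if p_e == 1 else float((p_o - p_e) / (1 - p_e))


# EEG1, first seizure: Expert A vs Expert B over the selected period [70, 150] s
a = intervals_to_labels([(104, 121)], 70, 150)
b = intervals_to_labels([(96, 122)], 70, 150)
print(round(cohen_kappa(a, b), 3))  # 0.726
```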

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Ding, F.; Luo, C. The Entropy-Based Time Domain Feature Extraction for Online Concept Drift Detection. Entropy 2019, 21, 1187. https://doi.org/10.3390/e21121187
