Abstract

In this paper, we will study the key enumeration problem, which is connected to the key recovery problem posed in the cold boot attack setting. In this setting, an attacker with physical access to a computer may obtain noisy data of a cryptographic secret key of a cryptographic scheme from main memory via this data remanence attack. Therefore, the attacker would need a key-recovery algorithm to reconstruct the secret key from its noisy version. We will first describe this attack setting and then pose the problem of key recovery in a general way and establish a connection between the key recovery problem and the key enumeration problem. The latter problem has already been studied in the side-channel attack literature, where, for example, the attacker might procure scoring information for each byte of an Advanced Encryption Standard (AES) key from a side-channel attack and then want to efficiently enumerate and test a large number of complete 16-byte candidates until the correct key is found. After establishing such a connection between the key recovery problem and the key enumeration problem, we will present a comprehensive review of the most outstanding key enumeration algorithms to tackle the latter problem, for example, an optimal key enumeration algorithm (OKEA) and several nonoptimal key enumeration algorithms. Also, we will propose variants to some of them and make a comparison of them, highlighting their strengths and weaknesses.

1. Introduction

A side-channel attack may be defined as any attack by which an attacker obtains private information about a cryptographic algorithm from its implementation rather than by exploiting weaknesses in the implemented algorithm itself. Most of these attacks follow a divide-and-conquer approach in which the attacker obtains ranking information about the chunks of the secret key and then uses that information to construct candidates for the key. The secret key is the concatenation of all the key parts, while a chunk candidate is a possible value of a key part, chosen because the attack suggests a good probability that the value is correct. In this paper, we focus on one particular side-channel attack, known as the cold boot attack. This is a data remanence attack in which the attacker is able to read sensitive data from computer memory after the data have supposedly been deleted. More specifically, by exploiting the data remanence property of dynamic random-access memories (DRAMs), an attacker with physical access to a computer may procure noisy data of a secret key from main memory via this attack vector. Hence, after obtaining such data, the attacker’s main task is to recover the secret key from its noisy version. As the literature reviewed in Section 2 reveals, the research effort after the initial work showing the practicability of cold boot attacks [1] has focused on designing tailor-made algorithms for efficiently recovering keys from noisy versions for a range of different cryptographic schemes whilst exploring the limits of how much noise can be tolerated.

The above discussion raises the following question: can we devise a general approach to key recovery in the cold boot attack setting, i.e., a general algorithmic strategy that can be applied to recovering keys from noisy versions of those keys for a range of different cryptographic schemes? In this research paper, we work toward answering this question by studying the key enumeration problem, which is connected to the key recovery problem in the cold boot attack setting. Therefore, this paper, to the best of our knowledge, is the first to present a comprehensive review of the most outstanding key enumeration algorithms to tackle the key enumeration problem. Explicitly, our major contributions in this research work are the following:

- We present the key recovery problem in a general way and establish a connection between the key recovery problem and the key enumeration problem.

- We describe the most outstanding key enumeration algorithms methodically and in detail and also propose variants of some of them. The algorithms included in this study are an optimal key enumeration algorithm (OKEA); a bounded-space near-optimal key enumeration algorithm; a simple stack-based, depth-first key enumeration algorithm; a score-based key enumeration algorithm; a key enumeration algorithm using histograms; and a quantum key enumeration algorithm. For each studied algorithm, we describe its inner functioning, showing its functional and qualitative features, such as memory consumption, amenability to parallelization, and scalability.

- Finally, we make an experimental comparison of all the implemented algorithms, drawing special attention to their strengths and weaknesses. In our comparison, we benchmark all the implemented algorithms by running them in a common scenario to measure their overall performance.

Note that the goal of this research work is not only to study the key enumeration problem and its connection to the key recovery problem but also to show the gradual development of key enumeration algorithms, i.e., our review also points out the most important design principles to consider when designing such algorithms. Therefore, our review examines the most outstanding key enumeration algorithms methodically by describing their inner functioning, the algorithm-related data structures, and the benefits and drawbacks of using such data structures. In particular, this careful examination shows that, by properly using data structures and by relaxing the restriction on the order in which the key candidates are enumerated, we may devise better key enumeration algorithms in terms of overall performance, scalability, and memory consumption. This observation is substantiated in our experimental comparison.

This paper is organised as follows. In Section 2, we will first describe the cold boot attack setting and the attack model we will use throughout this paper. In Section 3, we will describe the key recovery problem in a general way and establish a connection between the key recovery problem and the key enumeration problem. In Section 4, we will examine several key enumeration algorithms to tackle the key enumeration problem methodically and in detail, e.g., an optimal key enumeration algorithm (OKEA), a bounded-space near-optimal key enumeration algorithm, a quantum key enumeration algorithm, and variants of other key enumeration algorithms. In Section 5, we will compare them, highlighting their strengths and weaknesses. Finally, in Section 6, we will draw some conclusions and outline future lines of research.

2. Cold Boot Attacks

A cold boot attack is a type of data remanence attack by which sensitive data are read from a computer’s main memory after supposedly having been deleted. This attack relies on the data remanence property of DRAMs that allows an attacker to retrieve memory contents that remain readable in the seconds to minutes after power has been removed. Since this attack was first described in the literature by Halderman et al. nearly a decade ago [1], it has received significant attention. More specifically, in this setting, an attacker with physical access to a computer can retrieve content from a running operating system after performing a cold reboot to restart the machine, i.e., without shutting down the operating system in an orderly manner. Since the operating system is shut down improperly, it skips file system synchronization and other activities that would occur on an orderly shutdown. Therefore, following a cold reboot, such an attacker may use a removable disk to boot a lightweight operating system and then copy the data stored in memory to a file. Alternatively, such an attacker may remove the memory modules from the original computer and quickly place them in a compatible computer under the attacker’s control, which is then booted in order to access the memory content. The attacker may then further analyse the data dumped from memory to find sensitive information, such as cryptographic keys contained in it [1]. This task may be performed by making use of various forms of key-finding algorithms [1]. Unfortunately for such an attacker, the bits in memory will degrade once the computer’s power is interrupted. Therefore, if the adversary retrieves any data from the computer’s main memory after the power is cut off, the extracted data will probably have random bit variations. That is, the data will be noisy, i.e., differing from the original data.

The length of time for which memory cell values are maintained while the machine is off depends on the particular memory type and the ambient temperature. In fact, the research paper [1] reported the results of multiple experiments showing that, at normal operating temperatures (25.5 °C to 44.1 °C), there is little corruption within the first few seconds but this phase is then followed by a quick decay. Nevertheless, by employing cooling techniques on the memory chips, the period of mild corruption can be extended. For instance, by spraying compressed air onto the memory chips, the authors ran an experiment at −50 °C and showed that less than 0.1% of bits degrade within the first minute. At temperatures of approximately −196 °C, attained by the use of liquid nitrogen, less than of bits decay within the first hour. Remarkably, once power is switched off, the memory will be divided into regions and each region will have a “ground state”, which is associated with a bit. In a 0 ground state, the 1 bits will eventually decay to 0 bits while the probability of a 0 bit switching to a 1 bit is very small but not vanishing (a common probability is circa [1]). When the ground state is 1, the opposite is true.

From the above discussion, it follows that only a noisy version of the original key may be retrievable from main memory once the attacker discovers the location of the data in it, so the main task of the attacker then is to tackle the mathematical problem of recovering the original key from a noisy version of that key. Therefore, the centre of interest of the research community after the initial work pointing out the feasibility of cold boot attacks [1] has been to develop bespoke algorithms for efficiently recovering keys from noisy versions of those keys for a range of different cryptographic schemes whilst exploring the limits of how much noise can be tolerated.

Heninger and Shacham [2] focused on the case of RSA keys, introducing an efficient algorithm based on Hensel lifting to exploit redundancy in the typical RSA private key format. This work was followed up by Henecka, May, and Meurer [3] and by Paterson, Polychroniadou, and Sibborn [4], with both research papers also paying particular attention to the mathematically highly structured RSA setting. The latter research paper, in particular, indicated the asymmetric nature of the error channel intrinsic to the cold boot setting and presented the problem of key recovery for cold boot attacks in an information theoretic manner.

On the other hand, Lee et al. [5] were the first to discuss cold boot attacks in the discrete logarithm setting. They assumed that an attacker had access to the public key , a noisy version of the private key x, and that such an attacker knew an upper bound for the number of errors in the private key. Since the latter assumption might not be realistic and the attacker did not have access to further redundancy, their proposed algorithm would likely be unable to recover keys in the true cold boot scenario, i.e., only assuming a bit-flipping model. This work was followed up by Poettering and Sibborn [6]. They exploited redundancies present in the in-memory private key encodings from two elliptic curve cryptography (ECC) implementations from two Transport Layer Security (TLS) libraries, OpenSSL and PolarSSL, and introduced cold boot key-recovery algorithms that were applicable to the true cold boot scenario.

Other research papers have explored cold boot attacks in the symmetric key setting, including Albrecht and Cid [7], who centred on the recovery of symmetric encryption keys in the cold boot setting by employing polynomial system solvers, and Kamal and Youssef [8], who applied SAT solvers to the same problem.

Finally, recent research papers have explored cold boot attacks on post-quantum cryptographic schemes. The paper by Albrecht et al. [9] evaluated schemes based on the ring and module variants of the Learning with Errors (LWE) problem. In particular, they looked at two cryptographic schemes: the Kyber key encapsulation mechanism (KEM) and the New Hope KEM. Their analysis focused on two encodings to store LWE keys. The first encoding stores polynomials in coefficient form directly in memory, while the second encoding performs a number theoretic transform (NTT) on the key before storing it. They showed that, at a 1% bit-flip rate, a cold boot attack on Kyber KEM parameters had a cost of operations when the second encoding is used for key storage compared to operations with the first encoding. On the other hand, the paper by Paterson et al. [10] focused on cold boot attacks on NTRU. In particular, its authors were the first to use a combination of key enumeration algorithms to tackle the key recovery problem. Their cold boot key-recovery algorithms were applicable to the true cold boot scenario and exploited redundancies found in the in-memory private key representations from two popular NTRU implementations. This work was followed up by that of Villanueva-Polanco [11], which studied cold boot attacks against the strongSwan implementation of the BLISS signature scheme and presented key-recovery algorithms based on key enumeration algorithms for the in-memory private key encoding used in this implementation.

Cold Boot Attack Model

Our cold boot attack model assumes that the adversary can procure a noisy version of the encoding of a secret key used to store it in memory. We further assume that the corresponding public parameters are known exactly, without noise. We do not take into consideration here the significant problem of how to discover the exact place or position of the appropriate region of memory in which the secret key bits are stored, though this would be a consideration of great significance in practical attacks. Our goal is then to recover the secret key. Note that it is sufficient to obtain a list of key candidates in which the true secret key is located, since we can always test a candidate by executing known algorithms linked to the scheme we are attacking.

We assume throughout that a 0 bit of the original secret key will flip to a 1 with probability $\alpha = P(0 \to 1)$ and that a 1 bit of the original private key will flip to a 0 with probability $\beta = P(1 \to 0)$. We do not assume that $\alpha = \beta$; indeed, in practice, one of these values may be very small and relatively stable over time while the other increases over time. Furthermore, we assume that the attacker knows the values of $\alpha$ and $\beta$ and that they are fixed across the region of memory in which the private key is located. These assumptions are reasonable in practice: one can estimate the error probabilities by looking at a region where the memory stores known values, for example, where the public key is located, and where the regions are typically large.
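The bit-flip model above, and the suggestion of estimating the error probabilities from a region with known contents, can be illustrated with a minimal Python sketch. The names `alpha` and `beta` stand for the 0-to-1 and 1-to-0 flip probabilities, and the function names and the numeric rates used here are illustrative assumptions, not values taken from the paper.

```python
import random

def add_cold_boot_noise(bits, alpha, beta, rng):
    """Flip each 0 bit to 1 with probability alpha and each 1 bit to 0 with probability beta."""
    noisy = []
    for b in bits:
        flip_prob = alpha if b == 0 else beta
        noisy.append(b ^ 1 if rng.random() < flip_prob else b)
    return noisy

def estimate_flip_rates(known, observed):
    """Estimate (alpha, beta) by comparing known bits with their noisy copy."""
    zeros = sum(1 for k in known if k == 0)
    ones = len(known) - zeros
    flips_01 = sum(1 for k, o in zip(known, observed) if k == 0 and o == 1)
    flips_10 = sum(1 for k, o in zip(known, observed) if k == 1 and o == 0)
    alpha_hat = flips_01 / zeros if zeros else 0.0
    beta_hat = flips_10 / ones if ones else 0.0
    return alpha_hat, beta_hat

# Simulate a known region (e.g., where public data is stored) and its decayed copy.
rng = random.Random(1)
known = [rng.randint(0, 1) for _ in range(20000)]
observed = add_cold_boot_noise(known, alpha=0.001, beta=0.1, rng=rng)  # assumed rates
alpha_hat, beta_hat = estimate_flip_rates(known, observed)
```

The larger the known region, the tighter the two estimates, which is why the text stresses that such regions are typically large.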

3. Key Recovery Problem

3.1. Some Definitions

We define an array A as a data structure consisting of a finite sequence of values of a specified type, i.e., . The length of an array, , is established when the array is created. After creation, its length is fixed. Each item in an array is called an element, and each element is accessed by its numerical index, i.e., , with . Let and be two arrays of elements of a specified type. The associative operation ‖ is defined as follows.

Both a list L and a table are defined as a resizable array of elements of a specified type. Given a list , this data structure supports the following methods.

- The method returns the number of elements in this list, i.e., the value .

- The method appends the specified element to the end of this list, i.e., after this method returns.

- The method , with , returns the element at the specified position j in this list, i.e.,

- The method removes all the elements from this list. The list will be empty after this method returns, i.e.,

3.2. Problem Statement

Let us suppose that a noisy version of the encoding of the secret key can be represented as a concatenation of chunks, each on w bits. Let us name the chunks so that . Additionally, we suppose there is a key-recovery algorithm that constructs key candidates for the encoding of the secret key and that these key candidates c can also be represented by concatenations of chunks in the same way.

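The method of maximum likelihood (ML) estimation then suggests picking as c the value that maximizes $P(c \mid r)$. Using Bayes’ theorem, this can be rewritten as $P(c \mid r) = \frac{P(r \mid c)\,P(c)}{P(r)}$. Note that $P(r)$ is a constant and that $P(c)$ is also a constant, independent of $c$. Therefore, the ML estimation suggests picking as c the value that maximizes $P(r \mid c) = (1-\alpha)^{n_{00}}\,\alpha^{n_{01}}\,\beta^{n_{10}}\,(1-\beta)^{n_{11}}$, where $\alpha$ and $\beta$ are the probabilities of a 0-to-1 and a 1-to-0 bit flip, respectively, $n_{00}$ denotes the number of positions where both c and r contain a 0 bit, $n_{01}$ denotes the number of positions where c contains a 0 bit and r contains a 1 bit, etc. Equivalently, we may maximize the log of these probabilities, viz. $\log P(r \mid c) = n_{00}\log(1-\alpha) + n_{01}\log\alpha + n_{10}\log\beta + n_{11}\log(1-\beta)$. Therefore, given a candidate $c$, we can assign it a score, namely $\log P(r \mid c)$.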
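As a minimal sketch of the scoring rule just described, the following Python function counts the four kinds of bit positions and returns the log-likelihood of a noisy string given a candidate. The function name and the flip rates `alpha` (0 to 1) and `beta` (1 to 0) are assumptions chosen for illustration.

```python
import math

def log_likelihood_score(candidate, noisy, alpha, beta):
    """Return log P(noisy | candidate) under independent asymmetric bit flips."""
    if len(candidate) != len(noisy):
        raise ValueError("candidate and noisy string must have the same length")
    # counts[(c, r)] = number of positions where candidate has bit c and noisy has bit r
    counts = {(0, 0): 0, (0, 1): 0, (1, 0): 0, (1, 1): 0}
    for c, r in zip(candidate, noisy):
        counts[(c, r)] += 1
    return (counts[(0, 0)] * math.log(1 - alpha)
            + counts[(0, 1)] * math.log(alpha)
            + counts[(1, 0)] * math.log(beta)
            + counts[(1, 1)] * math.log(1 - beta))

noisy = [0, 1, 1, 0, 0, 0, 1, 0]
# Candidate identical to the noisy string (no flips assumed).
exact = log_likelihood_score(noisy, noisy, alpha=0.001, beta=0.1)
# Candidate assuming one 1 bit decayed to 0 (the likely direction when beta > alpha).
decayed_one = log_likelihood_score([1, 1, 1, 0, 0, 0, 1, 0], noisy, 0.001, 0.1)
# Candidate assuming one 0 bit flipped to 1 (the unlikely direction).
flipped_zero = log_likelihood_score([0, 0, 1, 0, 0, 0, 1, 0], noisy, 0.001, 0.1)
```

With these assumed rates, a candidate that explains a discrepancy by a 1-to-0 decay scores higher than one that explains it by a 0-to-1 flip, which reflects the asymmetry of the channel.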

Assuming that each of the, at most, candidate values for chunk , , can be enumerated, then its own score also can be calculated as where the values count occurrences of bits across the chunks and . Therefore, we have . Hence, we may assume we have access to lists of chunk candidates, where each list contains up to entries. A chunk candidate is defined as a 2-tuple of the form , where the first component is a real number (candidate score) while the second component is an array of w-bit strings (candidate value). The question then becomes can we design efficient algorithms that traverse the lists of chunk candidates to combine chunk candidates , obtaining complete key candidates having high total scores obtained by summation? This question has been previously addressed in the side-channel analysis literature, with a variety of different algorithms being possible to solve this problem and the related problem known as key rank estimation [12,13,14,15,16,17,18,19,20,21,22,23,24,25,26].
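The construction of the lists of chunk candidates can be sketched as follows. This is an illustrative sketch only: the function names are hypothetical, a chunk value is represented as a tuple of bits rather than an array of w-bit strings, and `alpha` and `beta` are assumed names for the 0-to-1 and 1-to-0 flip probabilities. Each list enumerates every possible w-bit value, scores it against the corresponding noisy chunk, and is sorted in decreasing order of score.

```python
import math
from itertools import product

def chunk_score(value, noisy_chunk, alpha, beta):
    """Log-likelihood of noisy_chunk given value, summed bit by bit."""
    log_p = {(0, 0): math.log(1 - alpha), (0, 1): math.log(alpha),
             (1, 0): math.log(beta), (1, 1): math.log(1 - beta)}
    return sum(log_p[(c, r)] for c, r in zip(value, noisy_chunk))

def chunk_candidate_lists(noisy_bits, w, alpha, beta):
    """Return one list of (score, value) pairs per w-bit chunk, best score first."""
    lists = []
    for start in range(0, len(noisy_bits), w):
        noisy_chunk = tuple(noisy_bits[start:start + w])
        candidates = [(chunk_score(v, noisy_chunk, alpha, beta), v)
                      for v in product((0, 1), repeat=len(noisy_chunk))]
        candidates.sort(key=lambda cand: cand[0], reverse=True)
        lists.append(candidates)
    return lists

noisy_bits = [0, 1, 1, 0, 0, 0, 1, 0]
lists = chunk_candidate_lists(noisy_bits, w=4, alpha=0.001, beta=0.1)  # assumed rates
```

Because the score is a sum over bit positions, the score of a full candidate equals the sum of the scores of its chunks, which is what allows the lists to be processed independently.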

Let be the list of chunk candidates for chunk i, . Let be chunk candidates . The function returns a new chunk candidate such that Note that when , will be a full key candidate.
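A minimal sketch of the combining function, assuming that a chunk candidate is a pair (score, list of w-bit strings), that scores add up, and that values are concatenated in order. The Python name `combine` mirrors the function named in the text; the representation of values as Python lists of strings is an assumption made for illustration.

```python
def combine(*chunk_candidates):
    """Merge chunk candidates into one: scores are summed, values are concatenated."""
    total_score = 0.0
    values = []
    for score, value in chunk_candidates:
        total_score += score
        values.extend(value)
    return (total_score, values)

# Two chunk candidates, each holding a single 2-bit string.
first = (-0.5, ["01"])
second = (-1.25, ["11"])
merged = combine(first, second)  # (-1.75, ["01", "11"])
```

Applying `combine` to one chunk candidate from every list yields a full key candidate whose score is the sum of the chunk scores.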

Definition 1.

The key enumeration problem entails traversing the lists of chunk candidates while picking a chunk candidate from each list and combining the picked chunk candidates, via the function combine, to generate full key candidates c. Moreover, we call an algorithm generating full key candidates c a key enumeration algorithm (KEA).

Note that the key enumeration problem has been stated in a general way; however, there are many other variants to this problem. These variants relate to the manner in which the key candidates are generated by a key enumeration algorithm.

A different version of the key enumeration problem is enumerating key candidates c such that their total accumulated scores follow a specific order. For example, for many side-channel scenarios, it is necessary to enumerate key candidates c starting at the one having the highest score, followed by the one having the second highest score, and so on. In these scenarios, we need a key enumeration algorithm to enumerate high-scoring key candidates in decreasing order based on their total accumulated scores. For example, such an algorithm would allow us to find the top M highest scoring candidates in decreasing order, where . Furthermore, such an algorithm is known as an optimal key enumeration algorithm.

Another version of the same problem is enumerating all the key candidates c whose total accumulated scores satisfy a specified property rather than follow a specific order. For example, for some side-channel scenarios, it would be useful to enumerate all key candidates whose total accumulated scores lie in a given interval. In these scenarios, the key enumeration algorithm has to enumerate all key candidates whose total accumulated scores lie in that interval; such an enumeration need not be performed in any specified order, but it does need to ensure that all fitting key candidates will have been generated once it has completed. That is, the algorithm will generate, in any order, all the key candidates whose total accumulated scores satisfy the condition. For example, such an algorithm would allow us to find the top M highest scoring candidates in any order if the interval is well defined. Moreover, such an algorithm is commonly known as a nonoptimal key enumeration algorithm.
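To make the interval-based variant concrete, the following Python sketch enumerates, in no particular order, every full candidate whose total score lies in a closed interval. It is a generic depth-first search with pruning written for illustration and is not claimed to be any of the algorithms reviewed later; the function name and the bounds `low` and `high` are assumptions.

```python
def enumerate_in_interval(lists, low, high):
    """Yield (score, values) for every full candidate with low <= score <= high."""
    n = len(lists)
    # best_rest[i] / worst_rest[i]: largest / smallest score obtainable from lists i..n-1
    best_rest = [0.0] * (n + 1)
    worst_rest = [0.0] * (n + 1)
    for i in range(n - 1, -1, -1):
        best_rest[i] = best_rest[i + 1] + max(s for s, _ in lists[i])
        worst_rest[i] = worst_rest[i + 1] + min(s for s, _ in lists[i])
    stack = [(0, 0.0, ())]  # (next list index, partial score, partial values)
    while stack:
        i, score, values = stack.pop()
        if i == n:
            yield (score, list(values))
            continue
        for s, v in lists[i]:
            new_score = score + s
            # Prune branches that can no longer reach the interval.
            if new_score + best_rest[i + 1] < low:
                continue
            if new_score + worst_rest[i + 1] > high:
                continue
            stack.append((i + 1, new_score, values + (v,)))

lists = [
    [(0.0, "a0"), (-1.0, "a1"), (-3.0, "a2")],
    [(0.0, "b0"), (-2.0, "b1")],
    [(0.0, "c0"), (-0.5, "c1"), (-4.0, "c2")],
]
found = list(enumerate_in_interval(lists, low=-3.5, high=-1.0))
```

The only state kept is the search stack, so memory use stays small, at the cost of giving up any guarantee on the output order.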

We note that the key enumeration problem arises in other contexts. For example, in the area of statistical cryptanalysis. In particular, the problem of merging two lists of subkey candidates was encountered by Junod and Vaudenay [27]. The small cardinality of the lists () was such that the simple approach that consists of merging and sorting the lists of subkeys was tractable. Another related problem is list decoding of convolutional codes by means of the Viterbi algorithm [28]. However, such algorithms are usually designed to output a small number of most likely candidates determined a priori, whilst our aim is at algorithms able to perform long enumerations, i.e., only those key enumeration algorithms designed to be able to perform enumerations of or more key candidates.

4. Key Enumeration Algorithms

In this section, we review several key enumeration algorithms. Since our target is algorithms able to perform long enumerations, our review procedure consisted of examining only those research works presenting key enumeration algorithms designed to be able to perform enumerations of or more key candidates. Basically, we reviewed research proposals mainly from the side-channel literature methodically and in detail, starting from the research paper by Veyrat-Charvillon et al. [18], which was the first to look closely at the conquer part in side-channel analysis with the goal of testing several billions of key candidates. Particularly, its authors noted that none of the key enumeration algorithms proposed in the research literature until then were scalable, requiring novel algorithms to tackle the problem. Hence, they presented an optimal key enumeration algorithm that has inspired other more recent proposals.

Broadly speaking, optimal key enumeration algorithms [18,28] tend to consume more memory and to be less efficient while generating high-scoring key candidates, whereas nonoptimal key enumeration algorithms [12,13,14,15,16,17,26,29] are expected to run faster and to consume less memory. Table 1 shows a preliminary taxonomy of the key enumeration algorithms to be reviewed in this section. Each algorithm will be detailed and analyzed below according to its overall performance, scalability, and memory consumption.

Table 1.

Brief description of reviewed key enumeration algorithms (KEAs).

4.1. An Optimal Key Enumeration Algorithm

We study the optimal key enumeration algorithm (OKEA) that was introduced in the research paper [18]. We will first give the basic idea behind the algorithm by assuming the encoding of the secret key is represented as two chunks; hence, we have access to two lists of chunk candidates.

4.1.1. Setup

Let and be the two lists respectively. Each list is in decreasing order based on the score component of its chunk candidates. Let us define an extended candidate as a 4-tuple of the form and its score as . Additionally, let be a priority queue that will store extended candidates in decreasing order based on their score.

This data structure supports three methods. Firstly, a retrieval method retrieves and removes the head of this queue or returns null if this queue is empty. Secondly, an insertion method inserts the specified element e into the priority queue Q. Thirdly, a clearing method removes all the elements from the queue Q; the queue will be empty after this method returns. By making use of a heap, we can support any priority-queue operation on a set of size n in $O(\log n)$ time.
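A heap-backed priority queue with these three operations can be sketched in Python using the standard `heapq` module. The class name and the method names `add`, `poll`, and `clear` are assumptions chosen for this illustration. Since `heapq` implements a min-heap, scores are negated so that the highest-scoring element is at the head.

```python
import heapq
import itertools

class MaxPriorityQueue:
    """Priority queue returning the highest-scoring item first, backed by a binary heap."""

    def __init__(self):
        self._heap = []
        self._counter = itertools.count()  # tie-breaker so items are never compared

    def add(self, score, item):
        """Insert item with the given score in O(log n) time."""
        heapq.heappush(self._heap, (-score, next(self._counter), item))

    def poll(self):
        """Remove and return (score, item) with the highest score, or None if empty."""
        if not self._heap:
            return None
        neg_score, _, item = heapq.heappop(self._heap)
        return (-neg_score, item)

    def clear(self):
        """Remove all elements from the queue."""
        self._heap.clear()

    def __len__(self):
        return len(self._heap)

queue = MaxPriorityQueue()
for score, name in [(-2.5, "c1"), (-0.5, "c0"), (-4.0, "c2")]:
    queue.add(score, name)
best = queue.poll()  # (-0.5, "c0")
```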

Furthermore, let and be two vectors of bits that grow as needed. These are employed to track an extended candidate in . is in only if both and are set to 1. By default, all bits in a vector initially have the value 0.

4.1.2. Basic Algorithm

At the initial stage, queue Q will be created. Next, the extended candidate will be inserted into the priority queue and both and will be set to 1. In order to generate a new key candidate, the routine nextCandidate, defined in Algorithm 1, should be executed.

Let us assume that . First, the extended candidate will be retrieved and removed from Q, and then, and will be set to 0. The two blocks of instructions will then be executed, meaning that the extended candidates and will be inserted into Q. Moreover, the entries , and will be set to 1, while the other entries of X and Y will remain as 0. The routine nextCandidate will then return , which is the highest-scoring key candidate, since and are in decreasing order. At this point, the two extended candidates and (both in Q) are the only ones that can have the second highest score. Therefore, if Algorithm 1 is called again, the first instruction will retrieve and remove the extended candidate with the second highest score, say , from Q and then the second instruction will set and to 0. The first condition will be evaluated, but this time, it will be false since is set to 1. However, the second condition will be satisfied, and therefore, will be inserted into Q and the entries and will be set to 1. The method will then return , which is the second highest-scoring key candidate.

Algorithm 1 outputs the next highest-scoring key candidate from the two input lists.

At this point, the two extended candidates and (both in Q) are the only ones that can have the third highest score. As for why, we know that the algorithm has generated and so far. Since and are in decreasing order, we have that either or . Also, any other extended candidate yet to be inserted into Q cannot have the third highest score for the same reason. Consider, for example, : this extended candidate will be inserted into Q only if has been retrieved and removed from Q. Therefore, if Algorithm 1 is executed again, it will return the third highest scoring key candidate and have the extended candidate with the fourth highest score placed at the head of Q. In general, the manner in which this algorithm travels through the matrix of key candidates guarantees to output key candidates in a decreasing order based on their total accumulated score, i.e., this algorithm is an optimal key enumeration algorithm.
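The two-list procedure walked through above can be sketched in Python as a generator. This is a minimal sketch under stated assumptions rather than the authors' pseudocode: indices are 0-based, chunk candidates are (score, list of values) pairs, both input lists are sorted by decreasing score, and the names `okea_two_lists`, `row_busy`, and `col_busy` (playing the role of the bit vectors X and Y) are hypothetical.

```python
import heapq

def okea_two_lists(list0, list1):
    """Yield (score, values) for all pairs, in non-increasing order of total score."""
    if not list0 or not list1:
        return
    row_busy = [False] * len(list0)  # row j0 currently has an element in the queue
    col_busy = [False] * len(list1)  # column j1 currently has an element in the queue
    heap = []

    def push(j0, j1):
        score = list0[j0][0] + list1[j1][0]
        heapq.heappush(heap, (-score, j0, j1))  # negate: heapq is a min-heap
        row_busy[j0] = col_busy[j1] = True

    push(0, 0)
    while heap:
        neg_score, j0, j1 = heapq.heappop(heap)
        row_busy[j0] = col_busy[j1] = False
        # Try the neighbour one row down, only if that row is not yet represented.
        if j0 + 1 < len(list0) and not row_busy[j0 + 1]:
            push(j0 + 1, j1)
        # Try the neighbour one column right, only if that column is not yet represented.
        if j1 + 1 < len(list1) and not col_busy[j1 + 1]:
            push(j0, j1 + 1)
        yield (-neg_score, list0[j0][1] + list1[j1][1])

list0 = [(5, ["a0"]), (3, ["a1"]), (0, ["a2"])]
list1 = [(4, ["b0"]), (3, ["b1"]), (1, ["b2"])]
ordered = list(okea_two_lists(list0, list1))
```

Because a row or column is never represented twice in the queue, the queue holds at most as many elements as the shorter list, which is the property that bounds its growth.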

Regarding how fast queue grows, let be the number of extended candidates in Q after the function nextCandidate has been called times. Clearly, we have that = 1, since only contains the extended candidate after initialisation. Also, because, after calls to the function, there will be no more key candidates to be enumerated. Note that, during the execution of the function nextCandidate, an extended candidate will be removed from Q and two new extended candidates might be inserted into Q. Considering the way in which an extended candidate is inserted into the queue, Q may contain at most one element in each row and column at any stage; hence, for .

4.1.3. Complete Algorithm

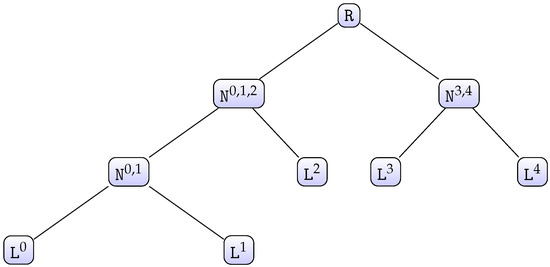

Note that Algorithm 1 works properly if both input lists are in decreasing order. Hence, it may be generalized to a number of lists greater than 2 by employing a divide-and-conquer approach, which works by recursively breaking down the problem into two or more subproblems of the same or related type until these become simple enough to be solved directly. The solutions to the subproblems are then combined to give a solution to the original problem [30]. To explain the complete algorithm, let us consider the case when there are five chunks as an example. We have access to five lists of chunk candidates , each of which has a size of . We first call , as defined in Algorithm 2. This function will build a tree-like structure from the five given lists (see Figure 1).

Figure 1.

Binary tree built from .

Each node is a 6-tuple of the form , where and are the children nodes, is a priority queue, and are bit vectors, and a list of chunk candidates. Additionally, this data structure supports the method , which returns the maximum number of chunk candidates that this node can generate. This method is easily defined in a recursive way: if is a leaf node, then the method will return or else, the method will return . To avoid computing this value each time this method is called, a node will internally store the value once it has been computed for the first time. Hence, the method will only return the stored value from the second call onwards. Furthermore, the function , as defined in Algorithm 3, returns the best chunk candidate (chunk candidate of which its score rank is j) from the node

In order to generate the first N best key candidates from the root node R, with , we simply run , as defined in Algorithm 4, N times. This function internally calls the function with suitable parameters each time it is required. Calling may cause this function to internally invoke to generate ordered key candidates from the inner node on the fly. Therefore, any inner node should keep track of the chunk candidates returned by when called by its parent; otherwise, the j best chunk candidates from would have to be generated each time such a call is done, which is inefficient. To keep track of the returned chunk candidates, each node updates its internal list (see lines 5 to 7 in Algorithm 3).

Algorithm 2 creates and initialises each node of the tree-like structure.

Algorithm 3 outputs the jth best chunk candidate from a given node.

Algorithm 4 outputs the next highest-scoring chunk candidate from a given node.
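The recursive structure described in this subsection can be sketched in Python as follows. This is an illustrative sketch under several assumptions, not a transcription of Algorithms 2 to 4: the class and method names are hypothetical, the lists are split in half at each level (the paper's tree may be shaped differently), Python sets stand in for the bit vectors, ranks are 0-based, and at least two input lists are expected.

```python
import heapq

class Node:
    """Leaf: wraps one sorted chunk list. Inner node: lazily merges its two children."""

    def __init__(self, lists):
        self.stored = []  # candidates produced so far, in decreasing score order
        if len(lists) == 1:
            self.left = self.right = None
            self.stored = list(lists[0])
            self.capacity = len(self.stored)
            return
        mid = len(lists) // 2
        self.left, self.right = Node(lists[:mid]), Node(lists[mid:])
        self.capacity = self.left.capacity * self.right.capacity
        self.heap, self.rows, self.cols = [], set(), set()
        if self.capacity > 0:
            self._push(0, 0)

    def _push(self, j0, j1):
        score = self.left.get_candidate(j0)[0] + self.right.get_candidate(j1)[0]
        heapq.heappush(self.heap, (-score, j0, j1))
        self.rows.add(j0)
        self.cols.add(j1)

    def get_candidate(self, j):
        """Return the candidate of rank j, generating and storing missing ones on the fly."""
        while len(self.stored) <= j:
            self.stored.append(self.next_candidate())
        return self.stored[j]

    def next_candidate(self):
        """Return the next highest-scoring candidate of an inner node."""
        neg_score, j0, j1 = heapq.heappop(self.heap)
        self.rows.discard(j0)
        self.cols.discard(j1)
        if j0 + 1 < self.left.capacity and (j0 + 1) not in self.rows:
            self._push(j0 + 1, j1)
        if j1 + 1 < self.right.capacity and (j1 + 1) not in self.cols:
            self._push(j0, j1 + 1)
        values = self.left.get_candidate(j0)[1] + self.right.get_candidate(j1)[1]
        return (-neg_score, values)

def first_candidates(lists, count):
    """Return the `count` best full key candidates from two or more sorted lists."""
    root = Node(lists)
    return [root.next_candidate() for _ in range(min(count, root.capacity))]

chunk_lists = [
    [(0.0, ["00"]), (-1.0, ["01"])],
    [(0.0, ["10"]), (-2.0, ["11"])],
    [(0.0, ["01"]), (-0.5, ["00"])],
]
top = first_candidates(chunk_lists, 3)
```

Every inner node other than the root keeps the candidates it has already handed to its parent in `stored`, which avoids regenerating them but is also the source of the memory growth analysed in the next subsection.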

4.1.4. Memory Consumption

Let us suppose that the encoding of a secret key is bits in size and that we set ; therefore, . Hence, we have access to lists , , each of which has chunk candidates. Suppose we would like to generate the first N best key candidates. We first invoke (Algorithm 2). This call will create a tree-like structure with levels starting at 0.

- The root node at level 0.

- The inner nodes with , where is the level and is the node identification at level .

- The leaf nodes at level b for .

This tree will have nodes.

Let be the number of bits consumed by chunk candidates stored in memory after calling the function nextCandidate with R as a parameter k times. A chunk candidate at level is of the form with being a real number and being bit strings. Let be the number of bits a chunk candidate at level occupies in memory.

First note that invoking causes each internal node’s list to grow, since

- At creation of nodes (lines 2 to 4), is created by setting ’s internal list to and by setting ’s other components to null.

- At creation of both and nodes , for and , the execution of the function getCandidate (lines 9 to 10) makes their corresponding left child (right child) store a new chunk candidate in their corresponding internal list. That is, for , the ’s internal list has a new element.

Therefore,

Suppose the best key candidate is about to be generated, then will be executed for the first time. This routine will remove the extended candidate out of R’s priority queue. If it enters the first if (lines 4 to 8), it will make the call (line 5), which may cause each node, except for the leaf nodes, of the left sub-tree to store at most a new chunk candidate in its corresponding internal list. Hence, retrieving the chunk candidate may cause at most chunk candidates per level to be stored. Likewise, if it enters the second if (lines 9 to 13), it will call the function (line 10), which may cause each node, except for the leaf nodes, of the right sub-tree to store at most a new chunk candidate in its corresponding internal list. Therefore, retrieving the chunk candidate (line 10) may cause at most chunk candidates per level , to be stored. Therefore, after generating the best key candidate, chunk candidates per level , will be stored in memory; hence, bits are consumed by chunk candidates stored in memory.

Let us assume that key candidates have already been generated; therefore, bits are consumed by chunk candidates in memory, with . Let us now suppose the best key candidate is about to be generated; then, the method will be executed for the time. This routine will remove the best extended candidate out of the R’s priority queue. It will then attempt to insert two new extended candidates into R’s priority queue. As seen previously, retrieving the chunk candidate may cause at most chunk candidates per level to be stored. Likewise, retrieving the chunk candidate may also cause at most chunk candidates per level to be stored. Therefore, after generating the best key candidate, chunk candidates per level , will be stored in memory; hence,

bits are consumed by chunk candidates stored in memory.

It follows that, if N key candidates are generated, then

bits are consumed by chunk candidates stored in memory in addition to the extended candidates stored internally in the priority queue of the nodes R and Therefore, this algorithm may consume a large amount of memory if it is used to generate a large number of key candidates, which may be problematic.

4.2. A Bounded-Space Near-Optimal Key Enumeration Algorithm

We will next describe a key enumeration algorithm introduced in the research paper [13]. This algorithm builds upon OKEA and can enumerate a large number of key candidates without exceeding the available space. The trade-off is that the enumeration order is only near-optimal rather than optimal, as it is in OKEA. We will first give the basic idea behind the algorithm by assuming that the encoding of the secret key is represented as two chunks; hence, we have access to two lists of chunk candidates.

4.3. Basic Algorithm

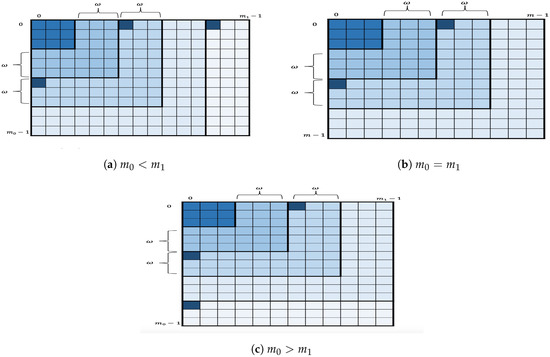

Let and be the two lists, and let be an integer such that and . Each list is in decreasing order based on the score component of its chunk candidates. Let us set and define as

where are positive integers. The key space is divided into layers of width . Figure 2 depicts each layer as a different shade of blue. Formally,

for The remaining layers are defined as follows.

Figure 2. Geometric representation of the key space divided into layers of width .

If ,

for or else

for .

The -layer key enumeration algorithm: Divide the key space into layers of width . Then, go over , one by one, in increasing order. For each , enumerate its key candidates by running OKEA within the layer . More specifically, for each the algorithm inserts the two corners, i.e., the extended candidates , into the data structure . The algorithm then proceeds to extract extended candidates and to insert their successors as usual but limits the algorithm to not exceed the boundaries of the layer when selecting components of candidates. For the remaining layers, if any, the algorithm inserts only one corner, either the extended candidate or the extended candidate , into the data structure and then proceeds as usual while not exceeding the boundaries of the layer. Figure 2 also shows the extended candidates (represented as the smallest squares in a strong shade of blue within a layer) to be inserted into when a certain layer will be enumerated.
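The two-list case can be illustrated with a short Python sketch. It is only a sketch under stated assumptions and is not the implementation of [13]: scores are assumed to be additive, each list holds only scores sorted in decreasing order, layer k is assumed to contain the index pairs (i, j) with k·w ≤ max(i, j) < (k+1)·w, and a plain visited set replaces the bit vectors of OKEA, so the sketch reproduces the enumeration order but not the space bound. The names `enumerate_by_layers` and `in_layer` are hypothetical.

```python
import heapq

def enumerate_by_layers(l1, l2, w):
    """Yield (score, i, j) layer by layer; l1 and l2 hold scores in decreasing order."""
    n1, n2 = len(l1), len(l2)
    k = 0
    while k * w < max(n1, n2):
        lo, hi = k * w, (k + 1) * w

        def in_layer(i, j):
            # Assumed layer definition: lo <= max(i, j) < hi, clipped to the lists.
            return i < n1 and j < n2 and lo <= max(i, j) < hi

        heap, seen = [], set()
        # Insert the (at most two) corners of the layer.
        for corner in ((lo, 0), (0, lo)):
            if in_layer(*corner) and corner not in seen:
                seen.add(corner)
                heapq.heappush(heap, (-(l1[corner[0]] + l2[corner[1]]), corner))
        # Best-first search restricted to the current layer.
        while heap:
            neg, (i, j) = heapq.heappop(heap)
            yield -neg, i, j
            for succ in ((i + 1, j), (i, j + 1)):
                if in_layer(*succ) and succ not in seen:
                    seen.add(succ)
                    heapq.heappush(heap, (-(l1[succ[0]] + l2[succ[1]]), succ))
        k += 1
```

Within a layer the output is in non-increasing score order; across layers the order is only near-optimal, since a candidate of a later layer may score higher than the last candidates of an earlier one.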

4.4. Complete Algorithm

When the number of chunks is greater than 2, the algorithm applies a recursive decomposition of the problem (similar to OKEA). Whenever a new chunk candidate is inserted into the candidate set, its value is obtained by applying the enumeration algorithm to the lower level. We explain an example to give an idea of the general algorithm. Let us suppose the encoding of the secret key is divided into 4 chunks; then, we have access to 4 lists of chunk candidates, each of which is of size with

To generate key candidates, we need to generate the two lists of chunk candidates for the lower level and on the fly as far as required. For this, we maintain a set of next potential candidates, for each dimension, and , so that each next chunk candidate obtained from (or ) is stored in the list (or ). Because the enumeration is performed by layers, the sizes of the data structures and are bounded by . However, this is not the case for the lists and , which grow as the number of candidates enumerated grows, hence becoming problematic as seen in Section 4.1.4.

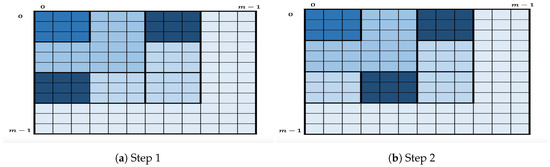

To handle this, each is partitioned into squares of size . The algorithm still enumerates the key candidates in first, then in , and so on, but in each , the enumeration will be square-by-square. Figure 3 depicts the geometric representation of the key enumeration within where a square (strong shade of blue) within a layer represents the square being processed by the enumeration algorithm. More specifically, for given nonnegative integers I and let us define as

Figure 3. Geometric representation of the key enumeration within

Let us set ; hence,

for . The remaining layers, if any, are also partitioned in a similar way.

The in-layer algorithm then proceeds as follows. For each the in-layer algorithm first enumerates the candidates in the two corner squares by applying OKEA on S. At some point, one of the two squares is completely enumerated. Assume this is . At this point, the only square that contains the next key candidates after is the successor . Therefore, when one of the squares is completely enumerated, its successor is inserted in S, as long as S does not contain a square in the same row or column. For the remaining layers, if any, the in-layer algorithm first enumerates the candidates in the square (or ) by applying OKEA on it. Once the square is completely enumerated, its successor is inserted in S, and so on. This in-layer partition into squares reduces the space complexity, since instead of storing the full list of chunk candidates of the lower levels, only the relevant chunk candidates are stored for enumerating the two current squares.

Because this in-layer algorithm enumerates at most two squares at any time in a layer, the tree-like structure is no longer a binary tree. A node is now extended to an 8-tuple of the form , where and for are the children nodes used to enumerate at most two squares in a particular layer, is a priority queue, and are bit vectors, and is a list of chunk candidates. Hence, the function that initialises the tree-like structure is adjusted to create the two additional children for a given node (see Algorithm 5).

Algorithm 5 creates and initialises each node of the tree-like structure.

Algorithm 6 outputs the chunk candidate from the node .

Algorithm 7 outputs the next chunk candidate from the node .

Moreover, the function is also adjusted so that each node’s internal list has at most chunk candidates at any stage of the algorithm (see Algorithm 6). This function internally makes the call to if . The call to causes to restart its enumeration, i.e., after has been invoked, calling will return the first chunk candidate from . Also, the function returns the highest-scoring extended candidate from the square . Note this function is called to get the highest-scoring extended candidate from the successor of . At this point, the content of the internal list of is cleared if . Otherwise, the content of the internal list of is cleared, if . Finally, Algorithm 7 precisely describes the manner in which this enumeration works.

4.4.1. Parallelization

The original authors of the research paper [13] suggest having OKEA run in parallel per square within a layer, but this has a negative effect on the algorithm’s near-optimality property and even on its overall performance since there are squares within a layer that are strongly dependent on others, i.e., for the algorithm to enumerate the successor square, say, within a layer, it requires having information that is obtained during the enumeration of . Hence, this strategy may incur extra computation and is also difficult to implement.

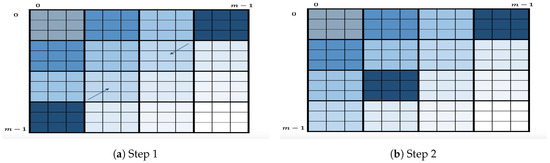

4.4.2. Variant

As a variant of this algorithm, we propose to slightly change the definition of a layer. Here, a layer consists of all the squares within a secondary diagonal, as shown in Figure 4. The variant will follow the same process as the original algorithm, i.e., enumeration layer by layer starting at the first secondary diagonal. Within each layer, it will first enumerate the two corner squares by applying OKEA to them. Once one of the two squares is enumerated, let us say , its successor will be inserted in S as long as such an insertion is possible. The algorithm will continue the enumeration by applying OKEA to the updated S and so on. This variant is motivated by the intuition that enumerating secondary diagonals may improve the quality of the order of the output key candidates, i.e., the order may be closer to optimal. This variant, however, may have a potential disadvantage in the multidimensional case because it strongly depends on having all the previously enumerated chunk candidates of both dimensions x and y stored. To illustrate this, let us suppose that this square is to be inserted. Then, the algorithm needs to insert its highest-scoring extended candidate, , into the queue. Hence, the algorithm needs to somehow have both and readily accessible when needed. This implies the need to store them when they are being enumerated (in previous layers). Comparatively, the original algorithm only requires having the previously generated chunk candidates of both dimensions x and y stored, which is advantageous in terms of memory consumption.

Figure 4. Geometric representation of the key enumeration for the variant.

4.5. A Simple Stack-Based, Depth-First Key Enumeration Algorithm

We next present a memory-efficient, nonoptimal key enumeration algorithm that generates key candidates whose total scores lie within a given interval. It is based on the algorithm introduced by Martin et al. in the research paper [16]. We note that the original algorithm is fairly efficient at generating a new key candidate; however, its overall performance may be negatively affected by its use of memory, since it was originally designed to store each newly generated key candidate and to test the candidates only once the enumeration has completed. Our variant, however, makes use of a stack (a last-in-first-out queue) during the enumeration process, which maintains the state of the algorithm. Each newly generated key candidate may be tested immediately, and there is no need for candidates to be stored for future processing.

Our variant basically performs a depth-first search in an undirected graph G originated from the lists of chunk candidates . This graph G has vertices, each of which represents a chunk candidate. Each vertex is connected to the vertices . At any vertex , the algorithm will check if plus an accumulated score is within the given interval . If so, it will select the chunk candidate for the chunk i and travel forward to the vertex , or else, it will continue exploring and attempt to travel to the vertex . Otherwise, it will travel backwards to a vertex from the previous chunk , when there is no suitable chunk candidate for the current chunk i.

As can be noted, this variant uses a simple backtracking strategy. In order to speed up the pruning process, we will make use of two precomputed tables . The entry holds the global minimum (maximum) value that can be reached from chunk i to chunk . In other words,

with

Additionally, note that when each list of chunk candidates , is in decreasing order based on the score component of its chunk candidates, we can compute the entry by computing

and

Therefore, the basic variant is sped up by computing maxS (minS), which is the maximum (minimum) score that can be obtained from the current chunk candidate, and then by checking if the intersection of the intervals and is not empty.
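As a minimal sketch of this pruning test, the following Python fragment builds the two tables and checks whether a partial candidate can still reach the target interval. It assumes additive scores, lists that hold only scores in decreasing order, and an extra sentinel entry of value 0 at index n; the names `build_bounds` and `can_reach`, and the interval bounds `b1` and `b2`, are hypothetical.

```python
def build_bounds(lists):
    """Return (min_array, max_array); entry i bounds the score reachable from chunk i to the last chunk."""
    n = len(lists)
    min_array = [0] * (n + 1)  # index n is a sentinel for "no chunks left"
    max_array = [0] * (n + 1)
    for i in range(n - 1, -1, -1):
        # Lists are in decreasing order: the first entry is the maximum, the last the minimum.
        max_array[i] = max_array[i + 1] + lists[i][0]
        min_array[i] = min_array[i + 1] + lists[i][-1]
    return min_array, max_array

def can_reach(acc, score, i, min_array, max_array, b1, b2):
    """True if [minS, maxS] intersects [b1, b2] after picking `score` for chunk i."""
    min_s = acc + score + min_array[i + 1]
    max_s = acc + score + max_array[i + 1]
    return max_s >= b1 and min_s <= b2
```

For example, with the lists `[[5, 2], [4, 1], [3, 0]]` and the interval [11, 12], picking the score 5 for chunk 0 is kept (the reachable range is [6, 12]), whereas picking 2 is pruned (the reachable range is [3, 9]).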

4.5.1. Setup

We now introduce a couple of tools that we will use to describe the algorithm, using the following notations. will denote a stack. This data structure supports two basic methods [30]. Firstly, the method removes the element at the top of this stack and returns that element as the value of this function. Secondly, the method pushes e onto the top of this stack. This stack S will store 4-tuples of the form , where is the accumulated score at any stage of the algorithm, i and j are the indices for the chunk candidate , and is an array of positive integers holding the indices of the selected chunk candidates, i.e., the chunk candidate is assigned to chunk k and for each k, .

4.5.2. Complete Algorithm

Firstly, at the initialisation stage, the 4-tuple will be inserted into the stack . The main loop of this algorithm will call the function , defined in Algorithm 8, as long as the stack is not empty. Specifically, the main loop will call this function to obtain a key candidate whose score is in the range . Algorithm 8 will then attempt to find such a candidate and, once it has found one, will return it to the main loop (at this point, may not be empty). The main loop will get the key candidate, process or test it, and continue calling the function as long as S is not empty. Because of the use of the stack S, the state of Algorithm 8 will not be lost; therefore, each time the main loop calls it, it will return a new key candidate whose score lies in the interval . The main loop will terminate once all possible key candidates whose scores are within the interval have been generated, which will happen once the stack is empty.

Algorithm 8 outputs a key candidate in the interval .
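The interplay between the main loop and the stack can be sketched in Python as a generator. This is an illustration under assumptions rather than a transcription of Algorithm 8: scores are additive, each list holds only scores in decreasing order, the stack stores tuples (accumulated score, i, j, selected indices), and the names `enumerate_interval`, `b1` and `b2` are hypothetical.

```python
def enumerate_interval(lists, b1, b2):
    """Yield (score, indices) for every key candidate whose total score lies in [b1, b2]."""
    n = len(lists)
    # Suffix bounds used for pruning (index n is a sentinel).
    min_arr = [0] * (n + 1)
    max_arr = [0] * (n + 1)
    for i in range(n - 1, -1, -1):
        max_arr[i] = max_arr[i + 1] + lists[i][0]
        min_arr[i] = min_arr[i + 1] + lists[i][-1]

    stack = [(0, 0, 0, ())]  # (accumulated score, chunk i, candidate j, chosen indices)
    while stack:
        acc, i, j, chosen = stack.pop()
        # Remember the next candidate of the same chunk so that the state is not lost.
        if j + 1 < len(lists[i]):
            stack.append((acc, i, j + 1, chosen))
        score = lists[i][j]
        lowest = acc + score + min_arr[i + 1]
        highest = acc + score + max_arr[i + 1]
        if highest < b1 or lowest > b2:
            continue  # no completion of this prefix can land in [b1, b2]
        if i == n - 1:
            yield acc + score, chosen + (j,)  # candidate can be tested immediately
        else:
            stack.append((acc + score, i + 1, 0, chosen + (j,)))
```

Each iteration removes one tuple and inserts at most two, so the caller can consume candidates one at a time (for instance with `for score, idx in enumerate_interval(lists, b1, b2): ...`) without storing them.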

4.5.3. Memory Consumption

We claim that, at any stage of the algorithm, there are at most 4-tuples stored in the stack S. Indeed, after the stack is initialised, it only contains the 4-tuple . Note that, during the execution of a while iteration, a 4-tuple is removed out of the stack and two new 4-tuples might be inserted. Hence, after s while iterations have been completed, there will be 4-tuples, where , for .

Suppose now that the algorithm is about to execute the while iteration during which the first valid key candidate will be returned. Therefore, During the execution of the while iteration, a 4-tuple will be removed and only a new 4-tuple will be considered for insertion in the stack. Therefore, we have that , since . Applying a similar reasoning, we have for .

4.5.4. Parallelization

One of the most interesting features of the previous algorithm is that it is parallelizable. The original authors suggested as a parallelization method to run instances of the algorithm over different disjoint intervals [16]. Although this method is effective and has a potential advantage as the different instances will produce nonoverlapping lists of key candidates with the instance searching over the first interval producing the most-likely key candidates, it is not efficient since each instance will inevitably repeat a lot of the work done by the other instances. Here, we propose another parallelization method that partitions the search space to avoid the repetition of work.

Suppose that we want to have t parallel, independent tasks to search over a given interval in parallel. Let be the list of chunk candidates for chunk i, .

We first assume that , where is the size of . In order to construct these tasks, we partition into t disjoint, roughly equal-sized sublists . We set each task to perform its enumeration over the given interval but only consider the lists of chunk candidates .

Note that the previous strategy can be easily generalised for . Indeed, first, find the smallest integer l, with , such that . We then construct the list of chunk candidates as follows. For each -tuple , with , the chunk candidate is constructed by calculating and by setting , and then, is added to . We then partition into t disjoint, roughly equal-sized sublists and finally set each task to perform its enumeration over the given interval but only consider the lists of chunk candidates . Note that the workload assigned to each enumerating task is a consequence of the selected method for partitioning the list .

Additionally, both parallelization methods can be combined by partitioning the given interval into disjoint subintervals and by searching each such subinterval with tasks, hence amounting to enumerating tasks.
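The partitioning step can be sketched as follows. The sketch assumes that a chunk candidate is a pair (score, value) with a string value, that a merged candidate takes the sum of the scores and the concatenation of the values, and that a round-robin split is an acceptable way to obtain roughly equal-sized sublists; `partition` and `build_tasks` are hypothetical names.

```python
from itertools import product

def partition(candidates, t):
    """Split a list into t disjoint sublists whose sizes differ by at most one."""
    return [candidates[r::t] for r in range(t)]

def build_tasks(lists, t):
    """Return one list-of-lists per task; only the first (merged) list differs between tasks."""
    n = len(lists)
    # Find the smallest l >= 1 such that the product of the first l list sizes is at least t.
    l, size = 1, len(lists[0])
    while size < t and l < n:
        size *= len(lists[l])
        l += 1
    # Merge the first l lists into a single list of combined chunk candidates.
    merged = [
        (sum(s for s, _ in combo), "".join(v for _, v in combo))
        for combo in product(*lists[:l])
    ]
    merged.sort(key=lambda c: c[0], reverse=True)  # keep decreasing score order
    return [[sub] + lists[l:] for sub in partition(merged, t) if sub]
```

Because the sublists are disjoint, no two tasks generate the same key candidate. A round-robin split also spreads high-scoring and low-scoring merged candidates across tasks, which is one possible way to even out the workload.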

4.6. Threshold Algorithm

Algorithm 8 shares some similarities with the algorithm Threshold introduced in the research paper [14], since Threshold also makes use of an array (partialSum) similar to the array minArray to speed up the pruning process. However, Threshold works with nonnegative integer values (weights) rather than scores. Threshold restricts the scores to weights such that the smallest weight is the likeliest score by making use of a function that converts scores into weights [14].

Assuming the scores have already been converted to weights, Threshold first sorts each list of chunk candidates in ascending order based on the score/weight component of its chunk candidates. It then computes the entries of partialSum by first setting and then by computing

Threshold then enumerates all the key candidates of which their accumulated total weight lies in a range of the form , where is a parameter. To do so, it performs a similar process to Algorithm 8 by using its precomputed table (partialSum) to avoid useless paths, hence improving the pruning process. This enumeration process performed by Threshold is described in Algorithm 9.

According to its designers, this algorithm may perform a nonoptimal enumeration to a depth of if some adjustments are made in the data structure L used to store the key candidates. However, its primary drawback is that it must always start enumerating from the most likely key. Consequently, whilst the simplicity and relatively strong time complexity of Threshold are desirable, in a parallelized environment, it can only serve as the first enumeration algorithm (or can only be used in the first search task). Threshold, therefore, was not implemented and, hence, is not included in the comparison made in Section 5.

Algorithm 9 enumerates all key candidates in the interval .

4.7. A Weight-Based Key Enumeration Algorithm

In this subsection, we will describe a nonoptimal enumeration algorithm based on the algorithm introduced in the research paper [12]. It differs from the original algorithm in the manner in which it builds a precomputed table (iRange) and uses it during execution to construct key candidates whose total accumulated score is equal to a given accumulated score. This algorithm shares similarities with the stack-based, depth-first key enumeration algorithm described in Section 4.5 because both algorithms essentially perform a depth-first search in the undirected graph G. However, this algorithm controls pruning by the accumulated total score that a key candidate must reach to be accepted. To achieve this, the scores are restricted to positive integer values (weights), which may be derived from a correlation value in a side-channel analysis attack.

This algorithm starts off by generating all key candidates with the largest possible accumulated total weight and then proceeds to generate all key candidates whose accumulated total weight is equal to the second largest possible accumulated total weight , and so forth, until it generates all key candidates with the minimum possible accumulated total weight . To find a key candidate with a weight equal to a certain accumulated weight, this algorithm makes use of a simple backtracking strategy, which is efficient because impossible paths can be pruned early. The pruning is controlled by the accumulated weight that must be reached for the solution to be accepted. To achieve a fast decision process during backtracking, this algorithm precomputes tables for the minimal and maximal accumulated total weights that can be reached by completing a path to the right, like the tables minArray and maxArray introduced in Section 4.5. Additionally, this algorithm precomputes an additional table, iRange.

Given and , the entry points to a list of integers , where each entry represents a distinct index of the list , i.e., for . The algorithm uses these indices to construct a chunk candidate with an accumulated score w from chunk i to chunk .

In order to compute this table, we use the observation that for a given entry of , the list , with , must be defined and be nonempty. So we first set the entry to and then proceed to compute the entries for and . Algorithm 10 describes precisely how this table is precomputed.

Algorithm 10 precomputes the table iRange.
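One possible way to precompute such a table is sketched below in Python. It is an illustration under assumptions and does not follow Algorithm 10 line by line: the table is stored as one dictionary per chunk, a sentinel entry for "no chunks left" maps the weight 0 to the list [0], and only the weights that are actually reachable are visited instead of the whole range between the minimum and maximum. The name `build_irange` and the layout `weights[i][j]` are hypothetical.

```python
def build_irange(weights):
    """weights[i][j] is the integer weight of candidate j of chunk i.

    Returns a list of dicts: irange[i][w] lists the indices j of chunk i that can
    start a path of accumulated weight w from chunk i to the last chunk.
    """
    n = len(weights)
    irange = [dict() for _ in range(n + 1)]
    irange[n][0] = [0]  # sentinel: weight 0 is reachable once all chunks are fixed
    for i in range(n - 1, -1, -1):
        for j, wj in enumerate(weights[i]):
            # Candidate j is usable for weight w exactly when w - wj is reachable from chunk i + 1.
            for rest in irange[i + 1]:
                irange[i].setdefault(wj + rest, []).append(j)
    return irange
```

For the weights `[[3, 1], [2, 2], [1]]`, the entry for chunk 0 is `{6: [0], 4: [1]}`: a total weight of 6 requires candidate 0 of chunk 0, and a total weight of 4 requires candidate 1.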

4.7.1. Complete Algorithm

Algorithm 11 describes the backtracking strategy more precisely, making use of the precomputed tables for pruning impossible paths. The integer array TWeights contains accumulated weights in a selected order, where an entry must satisfy that the list is non-empty, i.e., . This helps in constructing a key candidate with an accumulated score w from chunk 0 to chunk . In particular, TWeights may be set to , i.e., the array containing all possible accumulated scores that can be reached from chunk 0 to chunk .

Furthermore, the order in which the elements in the array TWeights are arranged is important. For this array , for example, the algorithm will first enumerate all key candidates with accumulated weight and then all those with accumulated weight and so on. This guarantees a certain quality, since good key candidates will be enumerated earlier than worse ones. However, key candidates with the same accumulated weight will be generated in no particular order, so a lack of precision in converting scores to weights will lead to some decrease of quality.

Algorithm 11 enumerates key candidates for given weights.

Algorithm 11 makes use of the table with entries, each of which is a 2-tuple of the form with and integers. For a given tuple , the component is an index of some list , with , while is the corresponding value, i.e., . The value of allows the algorithm to control if the list has been traveled completely or not, while the second component allows the algorithm to retrieve the chunk candidate of index of . This is done to avoid recalculating each time it is required during the execution of the algorithm.

We will now analyse Algorithm 11. Suppose that ; hence, . The algorithm will then set to , with being the integer from the entry of index 0 of , and then set to w (lines 3 to 5). We claim that the main while loop (lines 6 to 23) at each iteration will compute for such that the key candidate constructed at line 12 will have an accumulated score w.

Let us set . We know that the list is non-empty; hence, for any entry in the list , the list is non-empty, where

Likewise, for any entry in the list , the list is non-empty, where

Hence, for , we have that, for any given entry in the list , the list is non-empty, where

Note that, when , the list is non-empty and .

Given are already set for some ; the first inner while loop (lines 7 to 11) will set , where holds the entry of index 0 of , for . Therefore, once the while loop ends, and . Hence, the key candidate constructed from the second components will have an accumulated score w. In particular, the first time is set, and so, the first inner while loop will calculate .

Since there may be more than one key candidate with an accumulated score w, the second inner while loop (lines 14 to 19) will backtrack to a chunk , from which a new key candidate with accumulated score w can be constructed. This is done by simply moving backwards (line 15) and updating to until there is an i, , such that .

- If there is such an i, then the instruction at line 21 will update to . This means that the updated value for the second component of will be a valid index in , so will be the new chunk candidate for chunk i. Then, the first inner while loop (lines 7 to 11) will again execute and compute the indices for the remaining chunk candidates in the lists such that the resulting key candidate will have the accumulated score w.

- Otherwise, if , then the main while loop (lines 6 to 23) will end and w will be set to a new value from TWeights, since all key candidates with an accumulated score w have just been enumerated.
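The forward and backtracking phases analysed above can be sketched in Python as follows. The sketch is self-contained and therefore rebuilds the table with a hypothetical helper `build_irange` (a dictionary per chunk, with a sentinel entry for weight 0); the arrays `pos` and `rem` are assumptions that stand in for the table of 2-tuples used by Algorithm 11, and the line numbers cited in the text do not apply to this code.

```python
def build_irange(weights):
    """irange[i][w] lists the indices of chunk i that can start a path of weight w."""
    n = len(weights)
    irange = [dict() for _ in range(n + 1)]
    irange[n][0] = [0]
    for i in range(n - 1, -1, -1):
        for j, wj in enumerate(weights[i]):
            for rest in irange[i + 1]:
                irange[i].setdefault(wj + rest, []).append(j)
    return irange

def enumerate_weight(weights, irange, w):
    """Yield the index tuple of every key candidate whose accumulated weight is exactly w."""
    n = len(weights)
    if w not in irange[0]:
        return
    pos = [0] * n          # position inside the list irange[i][rem[i]]
    rem = [0] * (n + 1)    # weight that must still be reached from chunk i onwards
    rem[0] = w
    i = 0
    while True:
        # Forward phase: fix a candidate for every remaining chunk.
        while i < n:
            j = irange[i][rem[i]][pos[i]]
            rem[i + 1] = rem[i] - weights[i][j]
            i += 1
            if i < n:
                pos[i] = 0
        yield tuple(irange[c][rem[c]][pos[c]] for c in range(n))
        # Backtracking phase: find the last chunk that still has an unused index.
        i = n - 1
        while i >= 0 and pos[i] + 1 >= len(irange[i][rem[i]]):
            i -= 1
        if i < 0:
            return  # all candidates of weight w have been enumerated
        pos[i] += 1
```

Because every list reached through the table is non-empty, the forward phase never hits a dead end, which is the property that the analysis above relies on.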

4.7.2. Parallelization

Suppose we would like to have t tasks executed in parallel to enumerate key candidates of which the accumulated total weights are equal to those in the array . We can split the array into t disjoint sub-arrays and then set each task to run Algorithm 11 through the sub-array . As an example of a partition algorithm to distribute the workload among the tasks, we set the sub-array to contain elements with indices congruent to i mod t from . Additionally, note that, if we have access to the number of candidates to be enumerated for each score in the array beforehand, we may design a partition algorithm for distributing the workload among the tasks almost evenly.
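Both partitioning ideas can be sketched briefly in Python. The first function implements the split by index modulo t described above; the second is only one possible balancing heuristic (a greedy assignment of the largest counts first), which we assume here for illustration and which is not prescribed by the text. The names `split_weights`, `split_balanced` and `counts` are hypothetical.

```python
import heapq

def split_weights(tweights, t):
    """Sub-array r contains the elements whose indices are congruent to r mod t."""
    return [tweights[r::t] for r in range(t)]

def split_balanced(tweights, counts, t):
    """Greedy split when counts[k], the number of candidates for tweights[k], is known."""
    heap = [(0, r) for r in range(t)]  # (current load, task id)
    buckets = [[] for _ in range(t)]
    # Assign the heaviest weights first, always to the least-loaded task.
    for k in sorted(range(len(tweights)), key=lambda k: -counts[k]):
        load, r = heapq.heappop(heap)
        buckets[r].append(k)
        heapq.heappush(heap, (load + counts[k], r))
    # Preserve the original order of TWeights inside each sub-array.
    return [[tweights[k] for k in sorted(b)] for b in buckets]
```

With the modulo split, every task still processes its weights from best to worst, so the most likely candidates are spread across all tasks.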

4.7.3. Run Times

We assume each list of chunk candidates , is in decreasing order based on the score component of its chunk candidates. Regarding the run time for computing the tables maxArray and minArray, note that each entry of the table can be computed as explained in Section 4.5. Therefore, the run time of such an algorithm is .

Regarding the run time for computing iRange, we will analyse Algorithm 10. This algorithm is composed of three For blocks. For each i, , the For loop from line 4 to line 15 will be executed times, where . For each iteration, the innermost For block (lines 6 to 11) will execute simple instructions times. Therefore, once the innermost block has finished, its run time will be , where and are constants. Then, the if block (lines 12 to 14) will be attempted and its run time will be , where is another constant. Therefore, the run time for an iteration of the For loop (lines 4 to 15) will be . Therefore, the run time of Algorithm 10 is . More specifically,

As noted, this run time depends heavily on . Now, the size of the range relies on the scaling technique used to get a positive integer from a real number. The more accurate the scaling technique is, the more different integer scores there will be. Hence, if we use an accurate scaling technique, we will probably get larger .

We will now analyse the run time for Algorithm 11 to generate all key candidates whose total accumulated weight is w. Let us assume there are key candidates whose total accumulated score is equal to w.

First, the run time for instructions at lines 3 to 5 is constant. Therefore, we will only focus on the while loop (lines 6 to 23). In any iteration, the first inner while loop (lines 7 to 11) will execute and compute the indices for the remaining chunk candidates in the lists , with i starting at any number in , such that the resulting key candidate will have the accumulated score w. Therefore, its run time is at most , where C is a constant, i.e., it is . The instruction at line 12 will combine all chunks from 0 to , and hence, its run time is also . The next instruction will test c, and its run time will depend on the scenario in which the algorithm is being run. Let us assume its run time is , where T is a function.

Regarding the second inner while loop (lines 14 to 19), this loop will backtrack to a chunk i with , from which a new key candidate with accumulated score w can be constructed. This is done by simply moving backwards while computing some simple operations. Therefore, the run time for the second inner while loop is at most , where D is a constant, i.e., it is . Therefore, the run time for generating all key candidates of which the total accumulated score is w will be .

4.7.4. Memory Consumption

Besides the precomputed tables, it is easy to see that Algorithm 11 makes use of negligible memory while enumerating key candidates. Indeed, testing key candidates is done on the fly to avoid storing them during enumeration. However, the table iRange may have many entries.

Let be the number of entries of the table iRange. Line 2 of Algorithm 10 will create the entry that points to the list . Hence, after the instruction at line 2 has been executed, . Let us consider the For block from line 4 to line 15. For each i, , let be the set of different values w in the range such that is non-empty. After the iteration for i has been executed, the table iRange will have new entries, each of which will point to a non-empty list, with Therefore, after Algorithm 10 has completed its execution.

Note that may increase if the range is large. The size of this interval relies on the scaling technique used to get a positive integer from a real number. The more accurate the scaling technique is, the more different integer scores there will be. Hence, if we use an accurate scaling technique, we will probably get larger , making it likely for to increase. Therefore, the table iRange may have many entries.

Regarding the number of bits used in memory to store the table iRange, let us suppose that an integer is stored in bits and that a pointer is stored in bits. Once Algorithm 10 has completed its execution, we know that will point to the list , with and Moreover, by definition, we know that the list will be the list , while any other list , and , will have entries, with . Therefore, the number of bits iRange occupies in memory after Algorithm 10 has completed its execution is

Since , we have

4.8. A Key Enumeration Algorithm using Histograms

In this subsection, we will describe a nonoptimal key enumeration algorithm introduced in the research paper [17].

4.8.1. Setup

We now introduce a couple of tools that we will use to describe the sub-algorithms used in the algorithm of the research paper [17], using the following notations: H will denote a histogram, will denote a number of bins, b will denote a bin, and x will denote a bin index.

Linear Histograms

The function creates a standard histogram from the list of chunk candidates with linearly spaced bins.

Given a list of chunk candidates , the function createHist will first calculate both the minimum score and maximum score among all the chunk candidates in . It will then partition the interval into subintervals , where It then will proceed to build the list of size . The entry of will point to a list that contains all chunk candidates from such that their scores lie in . The returned standard histogram is therefore stored as the list of which its entries will point to lists of chunk candidates. For a given bin index x, outputs the list of chunk candidates contained in the bin of index x of Therefore, is the number of chunk candidates in the bin of index x of . The run time for is .
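A minimal Python sketch of such a histogram builder is given below. It assumes that a chunk candidate is a pair (score, value), that the bin of index 0 holds the lowest scores, and that the maximum score is placed in the last bin; the name `create_hist` mirrors createHist, but the signature is an assumption.

```python
def create_hist(candidates, nb):
    """Return a list of nb bins; bin x is the list of chunk candidates whose score falls in it."""
    scores = [s for s, _ in candidates]
    lo, hi = min(scores), max(scores)
    width = (hi - lo) / nb or 1.0  # guard against a zero width when all scores are equal
    bins = [[] for _ in range(nb)]
    for cand in candidates:
        # Clamp so that the maximum score lands in the last bin instead of overflowing.
        x = min(int((cand[0] - lo) / width), nb - 1)
        bins[x].append(cand)
    return bins
```

With this representation, `bins[x]` plays the role of the list of chunk candidates contained in the bin of index x, and `len(bins[x])` is the number of chunk candidates in that bin.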

Convolution

This is the usual convolution algorithm, which computes from two histograms and of sizes and , respectively, where The computation of is done efficiently by using the fast Fourier transform (FFT) for polynomial multiplication. Indeed, the array is seen as the coefficient representation of for In order to get , we multiply the two polynomials of degree-bound in time , with both the input and output representations in coefficient form [30]. The convoluted histogram is therefore stored as a list of integers.
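The result of the convolution can be illustrated with a direct, quadratic-time computation in Python. Note that this sketch deliberately swaps the FFT-based polynomial multiplication for the schoolbook product, which yields the same list of integers but more slowly; the histograms are assumed to be given as lists of bin counts, and the name `convolve` is hypothetical.

```python
def convolve(h1, h2):
    """Return the list c with c[x] = sum of h1[a] * h2[b] over all a + b == x."""
    out = [0] * (len(h1) + len(h2) - 1)
    for a, count_a in enumerate(h1):
        if count_a:  # empty bins contribute nothing
            for b, count_b in enumerate(h2):
                out[a + b] += count_a * count_b
    return out
```

For instance, `convolve([1, 2], [3, 0, 1])` gives `[3, 6, 1, 2]`: the entry of index x counts the pairs of chunk candidates whose bin indices add up to x, and the entries sum to 3 × 4 = 12 pairs in total.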

Getting the Size of a Histogram

The method size() returns the number of bins of a histogram. This method simply returns , where L is the underlying list used to represent the histogram.

Getting Chunk Candidates from a Bin

Given a standard histogram and an index , the method outputs the list of all chunk candidates contained in the bin of index x of , i.e., this method simply returns the list

4.8.2. Complete Algorithm

This key enumeration algorithm uses histograms to represent scores, and the first step of the key enumeration is a convolution of histograms modelling the distribution of the lists of scores. This step is detailed in Algorithm 12.

Algorithm 12 computes standard and convoluted histograms.

Based on this first step, this key enumeration algorithm allows enumerating key candidates that are ranked between two bounds $R_1$ and $R_2$. In order to enumerate all keys ranked between the bounds $R_1$ and $R_2$, the corresponding indices of bins of the final convoluted histogram $H_{0:N-1}$ have to be computed, as described in Algorithm 13. It simply sums the number of key candidates contained in the bins, starting from the bin containing the highest scoring key candidates, until the sum exceeds $R_1$ and $R_2$, and returns the corresponding indices $x_{start}$ and $x_{stop}$.

| Algorithm 13 computes the indices’ bounds. |

|
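The computation of the bin indices can be sketched as follows. This is a minimal Python illustration, not the original Algorithm 13: it assumes that a higher bin index holds higher scoring key candidates, that ranks are counted from 1, and that the function name `index_bounds` and its parameters are hypothetical.

```python
from typing import List, Tuple


def index_bounds(conv_hist: List[int], r1: int, r2: int) -> Tuple[int, int]:
    """Return the bin indices that contain the keys of rank r1 and r2."""
    x = len(conv_hist) - 1
    count = 0
    # Walk down from the best bin until the running total reaches r1.
    while x > 0 and count + conv_hist[x] < r1:
        count += conv_hist[x]
        x -= 1
    x_start = x
    # Keep walking until the running total reaches r2.
    while x > 0 and count + conv_hist[x] < r2:
        count += conv_hist[x]
        x -= 1
    x_stop = x
    return x_start, x_stop


bounds = index_bounds([2, 4, 4, 4, 2], 3, 9)
top_only = index_bounds([2, 4, 4, 4, 2], 1, 2)
```

In this example the two best keys sit in bin 4, so ranks 3 to 9 span bins 3 and 2.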

Given the list of histograms of scores H and the indices of bins of $H_{0:N-1}$ between which we want to enumerate, the enumeration simply consists of performing a backtracking over all the bins between $x_{start}$ and $x_{stop}$. More precisely, during this phase, we recover the bins of the initial histograms (i.e., before convolution) that were used to build a bin of the convoluted histogram $H_{0:N-1}$. For a given bin b with index x of $H_{0:N-1}$, we have to run through all the non-empty bins $b_0, \ldots, b_{N-1}$ of indices $x_0, \ldots, x_{N-1}$ of $H_0, \ldots, H_{N-1}$ such that $x_0 + \cdots + x_{N-1} = x$. Each $b_i$ will then contain at least one and at most $m_i$ chunk candidates of the list $L^i$ that we must enumerate. This leads to storing a table kf of $N$ entries, each of which points to a list of chunk candidates. The list pointed to by the entry kf[i] holds at least one and at most $m_i$ chunk candidates contained in the bin $b_i$ of the histogram $H_i$. Any combination of these lists, i.e., picking an entry from each list, results in a key candidate.

Algorithm 14 describes this bin decomposition process more precisely. This algorithm simply follows a recursive decomposition. That is, in order to enumerate all the key candidates within a bin b of index x of $H_{0:N-1}$, it first finds two non-empty bins of indices $x_{N-1}$ and $x - x_{N-1}$ of $H_{N-1}$ and $H_{0:N-2}$, respectively. All the chunk candidates in the bin of index $x_{N-1}$ of $H_{N-1}$ will be added to the key factorisation, i.e., the entry kf[N-1] will point to the list of chunk candidates returned by $H_{N-1}.\texttt{get}(x_{N-1})$. It then continues the recursion with the bin of index $x - x_{N-1}$ of $H_{0:N-2}$ by finding two non-empty bins of indices $x_{N-2}$ and $x - x_{N-1} - x_{N-2}$ of $H_{N-2}$ and $H_{0:N-3}$, respectively, and by adding all the chunk candidates in the bin of index $x_{N-2}$ of $H_{N-2}$ to the key factorisation, i.e., kf[N-2] will now point to the list of chunk candidates returned by $H_{N-2}.\texttt{get}(x_{N-2})$, and so forth. Eventually, each time a factorisation is completed, Algorithm 14 calls the function processKF, which takes as input the table kf. The function processKF, as defined in Algorithm 15, will compute the key candidates from kf. This algorithm basically generates all the possible combinations from the lists kf[0], ..., kf[N-1]. Note that this algorithm may be seen as a particular case of Algorithm 11. Finally, the main loop of this key enumeration algorithm simply calls Algorithm 14 for all the bins of $H_{0:N-1}$ that lie between the enumeration bounds $x_{start}$ and $x_{stop}$.

| Algorithm 14 performs bin decomposition. |

|
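The recursive decomposition and the expansion of a key factorisation can be sketched together in Python. This is an illustration under assumptions rather than a transcription of Algorithms 14 and 15: the names `decompose_bin`, `process_kf`, `build_convs`, and `conv_counts` are hypothetical, histograms are plain lists of lists of chunk values, and the convolution is computed directly instead of via FFT.

```python
from itertools import product


def conv_counts(h1, h2):
    """Convolve two lists of bin counts (direct method, no FFT)."""
    out = [0] * (len(h1) + len(h2) - 1)
    for i, a in enumerate(h1):
        for j, b in enumerate(h2):
            out[i + j] += a * b
    return out


def build_convs(hists):
    """convs[i] holds the bin counts of the convolution of hists[0..i]."""
    convs = [[len(b) for b in hists[0]]]
    for h in hists[1:]:
        convs.append(conv_counts(convs[-1], [len(b) for b in h]))
    return convs


def process_kf(kf, out):
    """Expand a key factorisation into every key candidate it encodes."""
    for combo in product(*kf):
        out.append(combo)


def decompose_bin(hists, convs, i, x, kf, out):
    """Enumerate the keys in bin x of convs[i] by peeling off chunk i."""
    if i == 0:
        if x < len(hists[0]) and hists[0][x]:
            kf[0] = hists[0][x]
            process_kf(kf, out)
        return
    for xi, bin_i in enumerate(hists[i]):
        rest = x - xi
        if rest < 0:
            break
        # Recurse only when both the chunk bin and the remainder are non-empty.
        if bin_i and rest < len(convs[i - 1]) and convs[i - 1][rest] > 0:
            kf[i] = bin_i
            decompose_bin(hists, convs, i - 1, rest, kf, out)


# Two chunks with two bins each; entries are chunk candidate values.
hists = [[[0], [1, 2]], [[5], [6]]]
convs = build_convs(hists)
keys_in_bin_1 = []
decompose_bin(hists, convs, len(hists) - 1, 1, [None] * len(hists), keys_in_bin_1)
```

Because `process_kf` is called as soon as a factorisation is complete, the table `kf` can be overwritten safely on the next branch of the recursion.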

4.8.3. Parallelization

Suppose we would like to have t tasks executing in parallel to enumerate key candidates that are ranked between two bounds $R_1$ and $R_2$. We can then calculate the indices $x_{start}$ and $x_{stop}$ and then create the array $X$ containing all the bin indices between $x_{start}$ and $x_{stop}$. We then partition the array $X$ into t disjoint sub-arrays $X_0, \ldots, X_{t-1}$ and finally set each task $i$ to call the function decomposeBin for all the bins of $H_{0:N-1}$ with indices in $X_i$.

As has been noted previously, the algorithm employed to partition the array $X$ directly allows efficient parallel key enumeration, where the amount of computation performed by each task may be well balanced. An example of a partition algorithm that could almost evenly distribute the workload among the tasks is as follows:

- Set i to 0.

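- If $X$ is non-empty, pick an index x in $X$ such that the number of key candidates in the bin of index x of $H_{0:N-1}$ is maximal; otherwise, return $X_0, \ldots, X_{t-1}$.

- Remove x from the array $X$, and add it to the array $X_i$.

- Update i to $(i + 1) \bmod t$, and go back to Step 2.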
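The steps above can be sketched in Python as a greedy round-robin assignment. The function name `partition_bins` and its parameters are hypothetical, and bin counts are passed as a plain list indexed by bin index.

```python
from typing import List


def partition_bins(bin_indices: List[int], bin_counts: List[int], t: int) -> List[List[int]]:
    """Hand out bin indices to t tasks, largest bin first, in round-robin order."""
    remaining = list(bin_indices)
    parts: List[List[int]] = [[] for _ in range(t)]
    i = 0
    while remaining:
        # Pick the index whose bin holds the most key candidates.
        x = max(remaining, key=lambda b: bin_counts[b])
        remaining.remove(x)
        parts[i].append(x)
        i = (i + 1) % t
    return parts


counts = [1, 5, 3, 7, 2]
parts = partition_bins([0, 1, 2, 3, 4], counts, 2)
loads = [sum(counts[x] for x in part) for part in parts]
```

This heuristic does not guarantee a perfectly even split, but assigning the largest bins first tends to keep the per-task loads close.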

| Algorithm 15 processes table kf. |

|

4.8.4. Memory Consumption

Besides the precomputed histograms, which are stored as arrays in memory, it is easy to see that this algorithm makes use of negligible memory (only table kf) while enumerating key candidates. Additionally, it is important to note that each time the function processKF is called, it will need to generate all key candidates obtained by picking chunk candidates from the lists pointed to by the entries of kf and to process all of them immediately, since the table kf may have changed. This implies that, if the processing of key candidates is left to be done after the complete enumeration has finished, each version of the table kf would need to be stored, which, again, might be problematic in terms of memory consumption.

Regarding how many bits in memory the precomputed histograms consume, we will analyse Algorithm 12. First, note that, for a given list of chunk candidates $L^i$ and $N_b$, the function createHist will return the standard histogram $H_i$. This standard histogram will be stored as the list $L_{H_i}$ of size $N_b$. An entry x of $L_{H_i}$ will point to a list of chunk candidates. The total number of chunk candidates held by all the lists pointed to by the entries of $L_{H_i}$ is $m_i$. Therefore, the number of bits to store the list $L_{H_i}$ is $N_b B_p + m_i B_c$, where $B_p$ is the number of bits to store a pointer and $B_c$ is the number of bits to store a chunk candidate. The total number of bits to store all lists is $\sum_{i=0}^{N-1} (N_b B_p + m_i B_c)$.

Concerning the convoluted histograms, let us first look at $H_{0:1}$. We know that $H_{0:1}$ is stored as a list of integers and that these entries can be seen as the coefficients of the resulting polynomial from multiplying the polynomial $P_0$ by $P_1$. Therefore, the list of integers used to store $H_{0:1}$ has $2N_b - 1$ entries. Following a similar reasoning to the previous one, we can conclude that the list of integers used to store $H_{0:2}$ has $3N_b - 2$ entries. Therefore, for a given $i$ with $1 \le i \le N-1$, the list of integers used to store $H_{0:i}$ has $(i+1)N_b - i$ entries. The total number of entries of all the convoluted histograms is $\sum_{i=1}^{N-1} ((i+1)N_b - i)$.

As expected, the total number of entries strongly depends on the values $N$ and $N_b$. If an integer is stored in $B_{int}$ bits, then the number of bits for storing all the convoluted histograms is $B_{int} \sum_{i=1}^{N-1} ((i+1)N_b - i)$.

4.8.5. Equivalence with the Path-Counting Approach

The stack-based key enumeration algorithm and the score-based key enumeration algorithm can also be used for rank computation (instead of enumerating each path, the rank version counts each path). Similarly, the histogram algorithm can also be used for rank computation by simply summing the sizes of the corresponding bins in the final convoluted histogram. These two approaches were believed to be distinct from each other. However, Martin et al. [31] showed that both approaches are mathematically equivalent, i.e., they both compute the exact same rank when their discretisation parameters are chosen correspondingly. In particular, the authors showed that the binning process in the histogram algorithm is equivalent to the “map to weight” float-to-integer conversion used prior to their path counting algorithm (Forest) when the algorithms’ discretisation parameters are chosen carefully. Additionally, a performance comparison between their enumeration versions was carried out in [31]. The practical experiments indicated that Histogram performs best for low discretisation and that Forest wins for higher parameters.

4.8.6. Variant

A recent paper by Grosso [26] introduced a variant of the previous algorithm. Basically, the author of [26] makes a small adaptation of Algorithm 14 to take into account the tree-like structure used by their rank estimation algorithm. Also, the author claims this variant has an advantage over the previous one when the memory needed to store histograms is too large.

4.9. A Quantum Key Search Algorithm

For the sake of completeness, in this subsection we will describe a quantum key enumeration algorithm introduced in the research paper [29]. This algorithm is constructed from a nonoptimal key enumeration algorithm, which uses the key rank algorithm given by Martin et al. in the research paper [16] to return a single key candidate (the $r$th) with a weight in a particular range. We will first describe the key rank algorithm. This algorithm restricts the scores to positive integer values (weights) such that the smallest weight is the likeliest score by making use of a function that converts scores into weights [16].

Assuming the scores have already been converted to weights, the rank algorithm first constructs a matrix $\mathbf{b}$ of size $N \times W_2$ for a given range $[W_1, W_2)$ as follows. For $i = N-1$ and $0 \le w < W_2$, the entry $b_{i,w}$ contains the number of chunk candidates such that their total score plus w lies in the given range. Therefore, $b_{N-1,w}$ is given by the number of chunk candidates $c$ in the list $L^{N-1}$ such that $W_1 \le w + \mathrm{weight}(c) < W_2$.

On the other hand, for $0 \le i < N-1$ and $0 \le w < W_2$, the entry $b_{i,w}$ contains the number of chunk candidates that can be constructed from the chunk i to the chunk $N-1$ such that their total score plus w lies in the given range. Therefore, $b_{i,w}$ may be calculated as the sum of the entries $b_{i+1,\, w + \mathrm{weight}(c)}$ over all chunk candidates $c$ in the list $L^i$ for which $w + \mathrm{weight}(c) < W_2$.

Algorithm 16 describes precisely the manner in which the matrix $\mathbf{b}$ is computed. Once the matrix $\mathbf{b}$ is computed, the rank algorithm will calculate the number of key candidates in the given range by simply returning $b_{0,0}$. Note that $b_{0,0}$, by construction, contains the number of chunk candidates, with initial weight 0, that can be constructed from the chunk 0 to the chunk $N-1$ such that their total weight lies in the given range. Algorithm 17 describes the rank algorithm.

| Algorithm 16 creates the matrix $\mathbf{b}$. |

|

| Algorithm 17 returns the number of key candidates in a given range. |

|
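The construction of the counting matrix and the resulting rank computation can be sketched in Python as follows. This is an illustration under assumptions, not a transcription of Algorithms 16 and 17: the range is taken to be half-open, weights are positive integers given as one list per chunk, and the names `build_matrix` and `rank` are hypothetical.

```python
from typing import List


def build_matrix(weights: List[List[int]], w1: int, w2: int) -> List[List[int]]:
    """b[i][w] counts the ways to complete chunks i..N-1, starting from
    accumulated weight w, so that the total weight lies in [w1, w2)."""
    n = len(weights)
    b = [[0] * w2 for _ in range(n)]
    for w in range(w2):
        b[n - 1][w] = sum(1 for cw in weights[n - 1] if w1 <= w + cw < w2)
    for i in range(n - 2, -1, -1):
        for w in range(w2):
            b[i][w] = sum(b[i + 1][w + cw] for cw in weights[i] if w + cw < w2)
    return b


def rank(weights: List[List[int]], w1: int, w2: int) -> int:
    """Number of key candidates whose total weight lies in [w1, w2)."""
    return build_matrix(weights, w1, w2)[0][0]


# Two chunks; the four possible keys have total weights 2, 4, 3 and 5.
weights = [[1, 2], [1, 3]]
in_range = rank(weights, 3, 5)
all_keys = rank(weights, 0, 6)
```

The matrix has $N \cdot W_2$ entries, so the cost of this sketch grows with the upper weight bound rather than with the number of keys in the range.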

With the help of Algorithm 17, an algorithm for requesting particular key candidates is introduced, which is described in Algorithm 18. It returns the $r$th key candidate with weight between $W_1$ and $W_2$. Note that the correctness of the function getKey follows from the correctness of the rank algorithm and that the algorithm is deterministic, i.e., given the same r, it will return the same key candidate k. Also, note that the $r$th key candidate does not have to be the $r$th most likely key candidate in the given range.

Equipped with the getKey algorithm, the authors of [29] introduced a nonoptimal key enumeration algorithm to enumerate and test all key candidates in the given range. This algorithm works by calling the function getKey to obtain a key candidate in the given range until there are no more key candidates in the given range. Each obtained key candidate k is tested with a testing function that returns either 1 or 0. Algorithm 19 precisely describes how this nonoptimal key enumeration algorithm works.
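The two routines just described can be sketched in Python. This is a hedged illustration rather than a transcription of Algorithms 18 and 19: the names `get_key` and `key_search`, the half-open weight range, the 1-based index `r`, and the representation of a key as a list of candidate positions are all assumptions. The caller is expected to pass a valid `r`, i.e., between 1 and the number of keys in the range.

```python
def build_matrix(weights, w1, w2):
    """b[i][w]: completions of chunks i..N-1 from weight w landing in [w1, w2)."""
    n = len(weights)
    b = [[0] * w2 for _ in range(n)]
    for w in range(w2):
        b[n - 1][w] = sum(1 for cw in weights[n - 1] if w1 <= w + cw < w2)
    for i in range(n - 2, -1, -1):
        for w in range(w2):
            b[i][w] = sum(b[i + 1][w + cw] for cw in weights[i] if w + cw < w2)
    return b


def get_key(weights, b, w1, w2, r):
    """Return the r-th key (1-based) in the fixed traversal order of the matrix."""
    n = len(weights)
    key, w = [], 0
    for i in range(n - 1):
        for j, cw in enumerate(weights[i]):
            if w + cw >= w2:
                continue
            cnt = b[i + 1][w + cw]  # keys reachable through candidate j
            if r <= cnt:
                key.append(j)
                w += cw
                break
            r -= cnt
    for j, cw in enumerate(weights[n - 1]):
        if w1 <= w + cw < w2:
            r -= 1
            if r == 0:
                key.append(j)
                break
    return key


def key_search(weights, w1, w2, test):
    """Request keys one by one until the test accepts one or none are left."""
    b = build_matrix(weights, w1, w2)
    for r in range(1, b[0][0] + 1):
        k = get_key(weights, b, w1, w2, r)
        if test(k):
            return k
    return None


weights = [[1, 2], [1, 3]]
b = build_matrix(weights, 3, 5)
ordered = [get_key(weights, b, 3, 5, r) for r in (1, 2)]
found = key_search(weights, 3, 5, lambda k: k == [1, 0])
```

In the example, the first key returned has total weight 4 and the second has weight 3, which illustrates that the order of retrieval need not follow likelihood.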

| Algorithm 18 returns the $r$th key candidate with weight between $W_1$ and $W_2$. |

|

Combining the function keySearch with techniques for searching over partitions independently, the authors of the research paper [29] introduced a key search algorithm, described in Algorithm 20. The function KS works by partitioning the search space into sections whose sizes follow a geometrically increasing sequence governed by a size parameter $a$. This parameter is chosen such that the number of loop iterations is balanced with the number of keys verified per block.

| Algorithm 19 enumerates and tests key candidates with weight between $W_1$ and $W_2$. |

|

| Algorithm 20 searches key candidates in a range of size approximately e. |

|
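The idea of sections whose sizes grow geometrically can be illustrated with the Python sketch below. It is not a transcription of Algorithm 20: the function `geometric_ranges`, the choice of target size $a^{step}$ for the section at a given step, and the half-open weight ranges are assumptions made purely to show how such a partition might be built from a rank function.

```python
def rank(weights, w1, w2):
    """Number of keys whose total weight lies in [w1, w2)."""
    n = len(weights)
    row = [sum(1 for cw in weights[n - 1] if w1 <= w + cw < w2) for w in range(w2)]
    for i in range(n - 2, -1, -1):
        row = [sum(row[w + cw] for cw in weights[i] if w + cw < w2) for w in range(w2)]
    return row[0]


def geometric_ranges(weights, w_min, w_max, a):
    """Split [w_min, w_max) into consecutive weight ranges; the range at a
    given step is widened until it holds about a**step keys (or w_max is hit)."""
    ranges = []
    w1, step = w_min, 0
    while w1 < w_max:
        target = a ** step
        w2 = w1 + 1
        while w2 < w_max and rank(weights, w1, w2) < target:
            w2 += 1
        ranges.append((w1, w2))
        w1 = w2
        step += 1
    return ranges


weights = [[1, 2], [1, 3]]
sections = geometric_ranges(weights, 2, 6, 2)
sizes = [rank(weights, lo, hi) for lo, hi in sections]
```

Each resulting section could then be handed to a search routine independently, which is what makes the partition convenient for both classical and quantum search.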

Having introduced the function KS, the authors of the research paper [29] transformed it into a quantum key search algorithm that heavily relies on Grover’s algorithm [32]. This is a quantum algorithm to solve the following problem: Given a black box function $f$ which returns 1 on a single input x and 0 on all other inputs, find x. Note that, if there are N possible inputs to the black box function, the classical algorithm uses $O(N)$ queries to the black box function since the correct input might be the very last input tested. However, in a quantum setting, a version of Grover’s algorithm solves the problem using $O(\sqrt{N})$ queries, with certainty [32,33]. Algorithm 21 describes the quantum search algorithm, which achieves a quadratic speedup over the classical key search (Algorithm 20) [29]. However, it would require significant quantum memory and a deep quantum circuit, making its practical application in the near future rather unlikely.

| Algorithm 21 performs a quantum search of key candidates in a range of size approximately e. |

|

5. Comparison of Key Enumeration Algorithms

In this section, we will make a comparison of the previously described algorithms. We will show some results regarding their overall performance by computing some measures of interest.

5.1. Implementation

All the algorithms discussed in this paper were implemented in Java. This is because the Java platform provides the Java Collections Framework to handle data structures, which reduces programming effort, increases speed of software development and quality, and is reasonably performant. Furthermore, the Java platform also easily supports concurrent programming, providing high-level concurrency application programming interfaces (APIs).

5.2. Scenario

In order to make a comparison, we will consider a common scenario in which we will run the key enumeration algorithms to measure their performance. Particularly, we generate a random secret key encoded as a bit string of 128 bits, which is represented as a concatenation of 16 chunks, each of 8 bits.

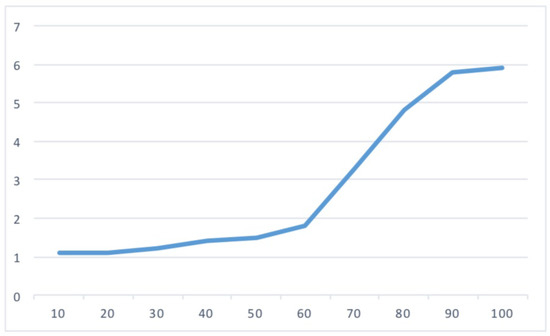

We use a bit-flipping model, as described in Section 3.2. We particularly set and to particular values, namely and , respectively. We then create an original key (AES key) by picking a random value for each chunk i, where . Once this key has been generated, its bits will be flipped according to the values and to obtain a noisy version of it, . We then use the procedure described in Section 3.2 to assign a score to each of the 256 possible candidate values for each chunk i. Therefore, once this algorithm has ended its execution, there will be 16 lists, each having 256 chunk candidates.
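A scenario of this kind can be sketched in Python as follows. This is only an illustration with loudly stated assumptions: the flip probabilities `ALPHA` (a 0 bit read as 1) and `BETA` (a 1 bit read as 0) are placeholder values, not the ones used in the experiments, and the log-likelihood score is one plausible scoring rule rather than a restatement of the procedure in Section 3.2. The names `flip_bits` and `score` are hypothetical.

```python
import math
import random

ALPHA = 0.001  # assumed probability that a 0 bit is read as 1 (placeholder)
BETA = 0.1     # assumed probability that a 1 bit is read as 0 (placeholder)


def flip_bits(key: bytes, alpha: float, beta: float, rng: random.Random) -> bytes:
    """Return a noisy copy of key with each bit flipped independently."""
    noisy = bytearray()
    for byte in key:
        out = 0
        for bit in range(8):
            b = (byte >> bit) & 1
            if rng.random() < (alpha if b == 0 else beta):
                b ^= 1
            out |= b << bit
        noisy.append(out)
    return bytes(noisy)


def score(candidate: int, observed: int, alpha: float, beta: float) -> float:
    """Log-likelihood of observing `observed` if the true chunk is `candidate`."""
    total = 0.0
    for bit in range(8):
        c = (candidate >> bit) & 1
        r = (observed >> bit) & 1
        if c == 0:
            total += math.log(1 - alpha if r == 0 else alpha)
        else:
            total += math.log(1 - beta if r == 1 else beta)
    return total


rng = random.Random(2024)
key = bytes(rng.randrange(256) for _ in range(16))  # 16 chunks of 8 bits
noisy = flip_bits(key, ALPHA, BETA, rng)

# One list of 256 scored chunk candidates per chunk, best score first.
lists = [
    sorted(((score(c, noisy[i], ALPHA, BETA), c) for c in range(256)), reverse=True)
    for i in range(16)
]
```

With both probabilities below one half, the highest scoring candidate of each list is simply the observed noisy chunk, and the enumeration algorithms are then responsible for exploring the less likely alternatives.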