Entropy Churn Metrics for Fault Prediction in Software Systems

Abstract

1. Introduction

2. Related Work

3. Entropy Churn Metrics

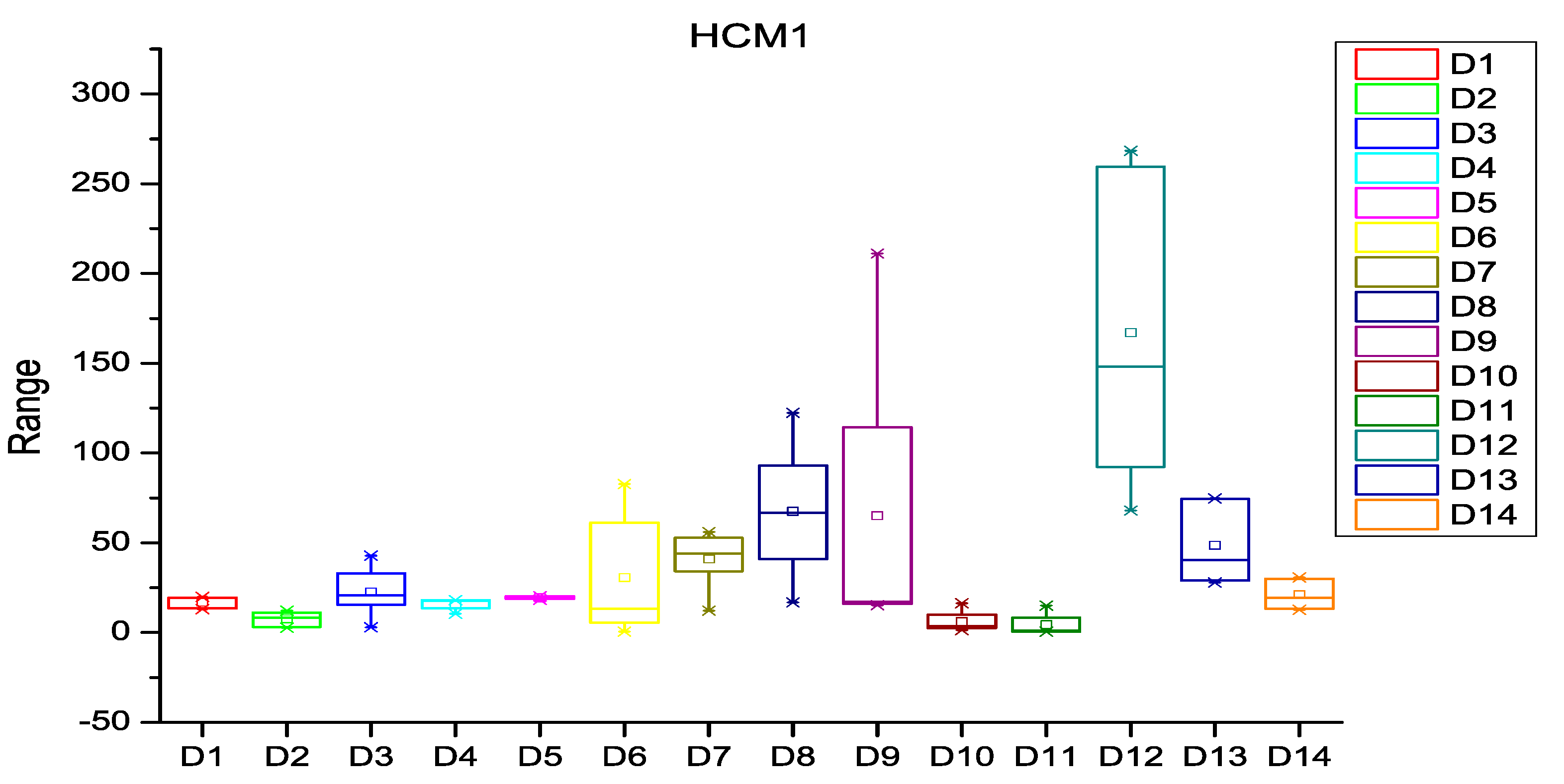

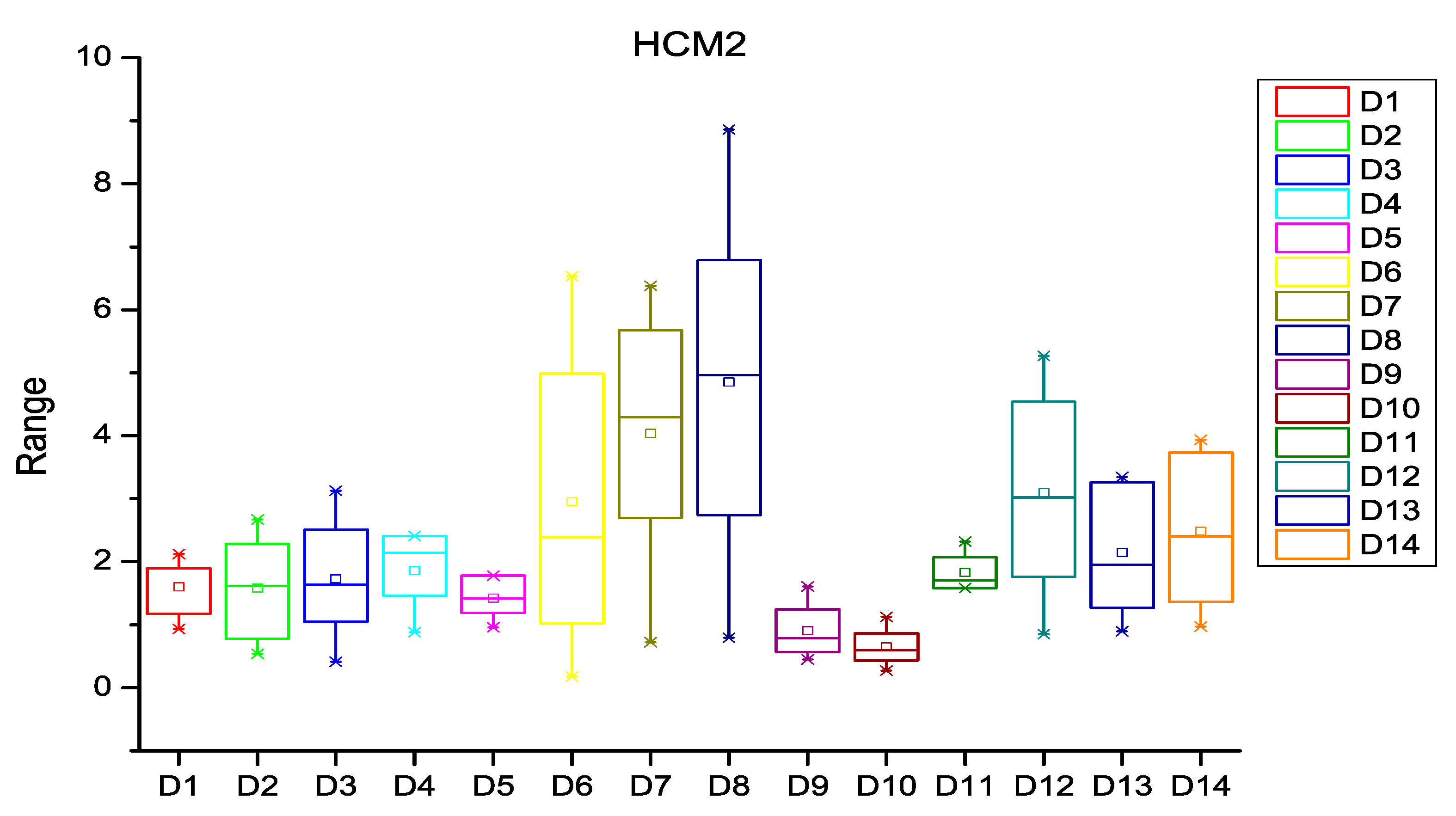

3.1. History Complexity Metrics

- HCM1: Complexitykb = 1, Complexity associated with file b in period k is equal to one and HCPF is equal to the value of Normalized Entropy.

- HCM2: Complexitykb = Pb, Complexity is equal to the probability of changes in file b in period k for files modified in that period. Otherwise, it is equal to zero.

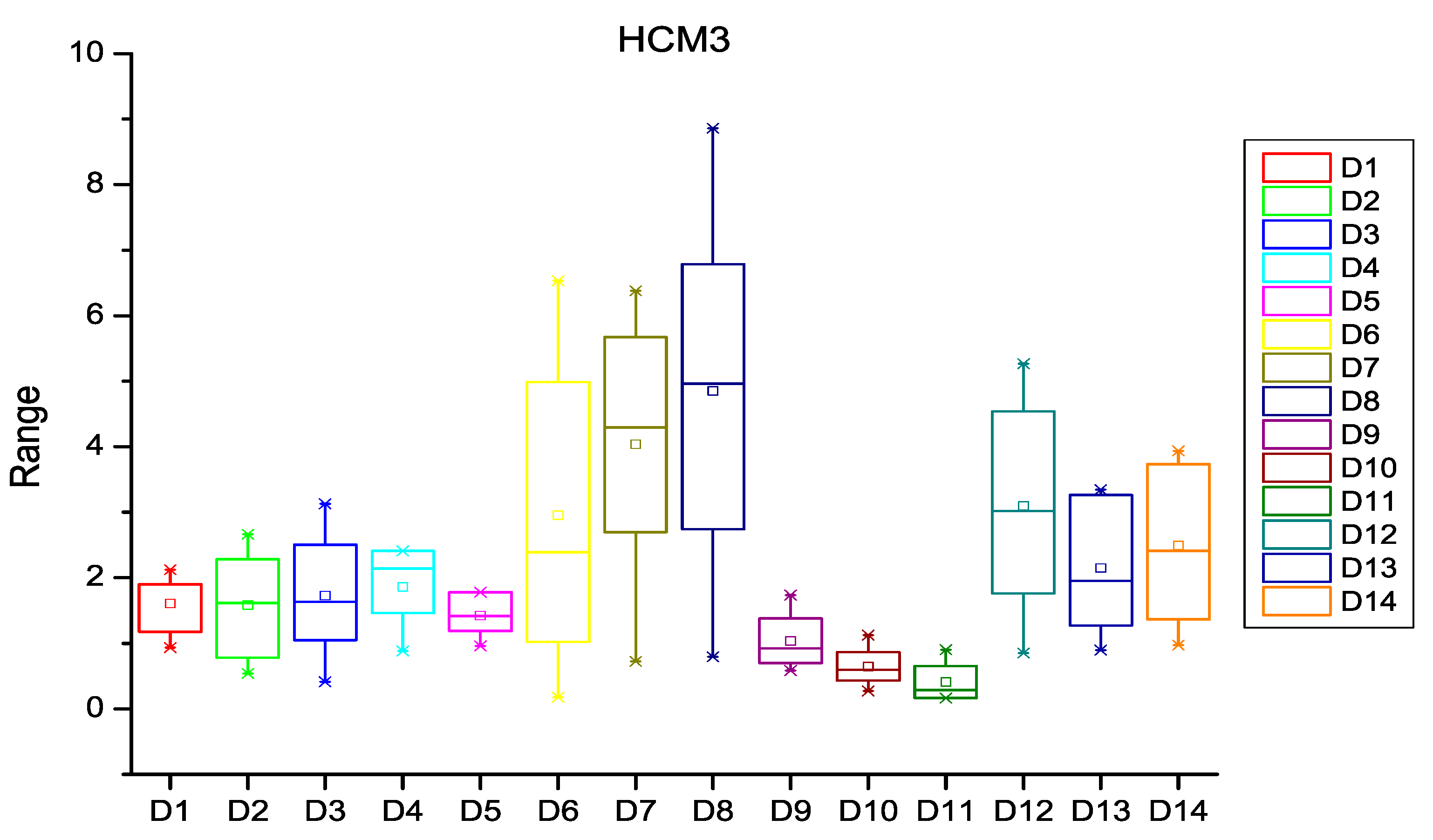

- HCM3: Complexitykb = 1/|Mk|, Complexity is equal to reciprocal of number of files modified in period k for files modified in that period. Otherwise, it is equal to zero.

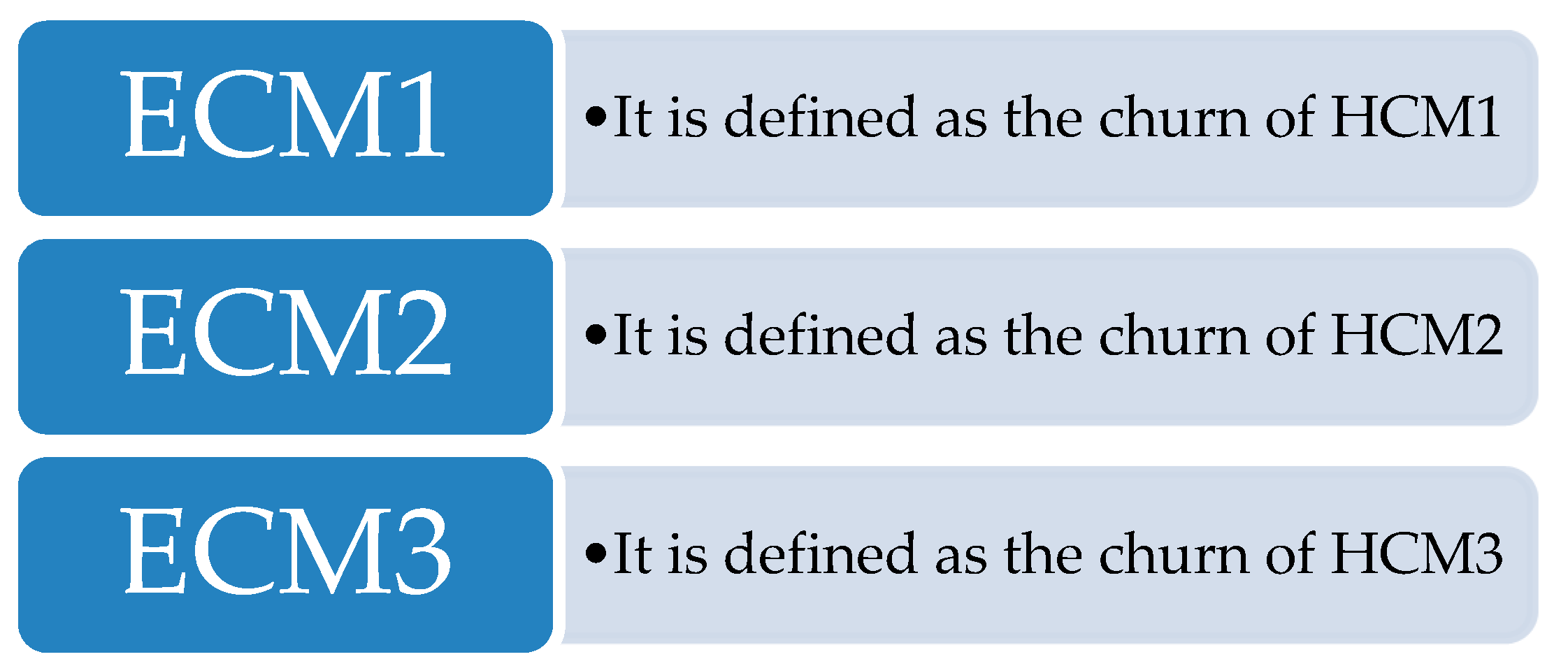
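The three HCM variants differ only in the complexity term that multiplies the normalized entropy of a period. Because the entropy and HCPF formulas appear only as images here, the sketch below rests on stated assumptions: entropy is the Shannon entropy (in bits) of the distribution of changes across files in one period, it is normalized by log2 of the number of files in the subsystem, and, following Hassan's original HCPF definition, files not modified in a period contribute zero. All function names are hypothetical.

```python
import math
from typing import Dict, List


def normalized_entropy(changes: Dict[str, int], n_files: int) -> float:
    """Shannon entropy (bits) of one period's change distribution, divided by log2(n_files).

    `changes` maps file name -> number of changes in the period.
    Assumption: normalization uses the total number of files in the subsystem.
    """
    total = sum(changes.values())
    if total == 0 or n_files < 2:
        return 0.0
    h = -sum((c / total) * math.log2(c / total) for c in changes.values() if c > 0)
    return h / math.log2(n_files)


def complexity(variant: int, changes: Dict[str, int], f: str) -> float:
    """Complexity_kb of file f in one period under HCM1, HCM2 or HCM3."""
    if changes.get(f, 0) == 0:
        return 0.0  # assumption: unmodified files contribute nothing (Hassan-style HCPF)
    if variant == 1:
        return 1.0  # HCM1: constant one
    if variant == 2:
        return changes[f] / sum(changes.values())  # HCM2: P_b
    if variant == 3:
        modified = [g for g, c in changes.items() if c > 0]
        return 1.0 / len(modified)  # HCM3: 1/|M_k|
    raise ValueError("variant must be 1, 2 or 3")


def hcm_per_period(periods: List[Dict[str, int]], n_files: int, variant: int) -> List[float]:
    """For each period, sum HCPF = Complexity_kb * normalized entropy over the files."""
    result = []
    for changes in periods:
        h = normalized_entropy(changes, n_files)
        result.append(sum(complexity(variant, changes, f) * h for f in changes))
    return result
```

For a period in which two of the four files in a subsystem are each changed twice, the normalized entropy is 0.5, so the per-period values are 1.0 for HCM1 and 0.5 for both HCM2 and HCM3. Yearly values computed this way would then serve as predictors of faults per year.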

3.2. Churn of Source Code Metrics

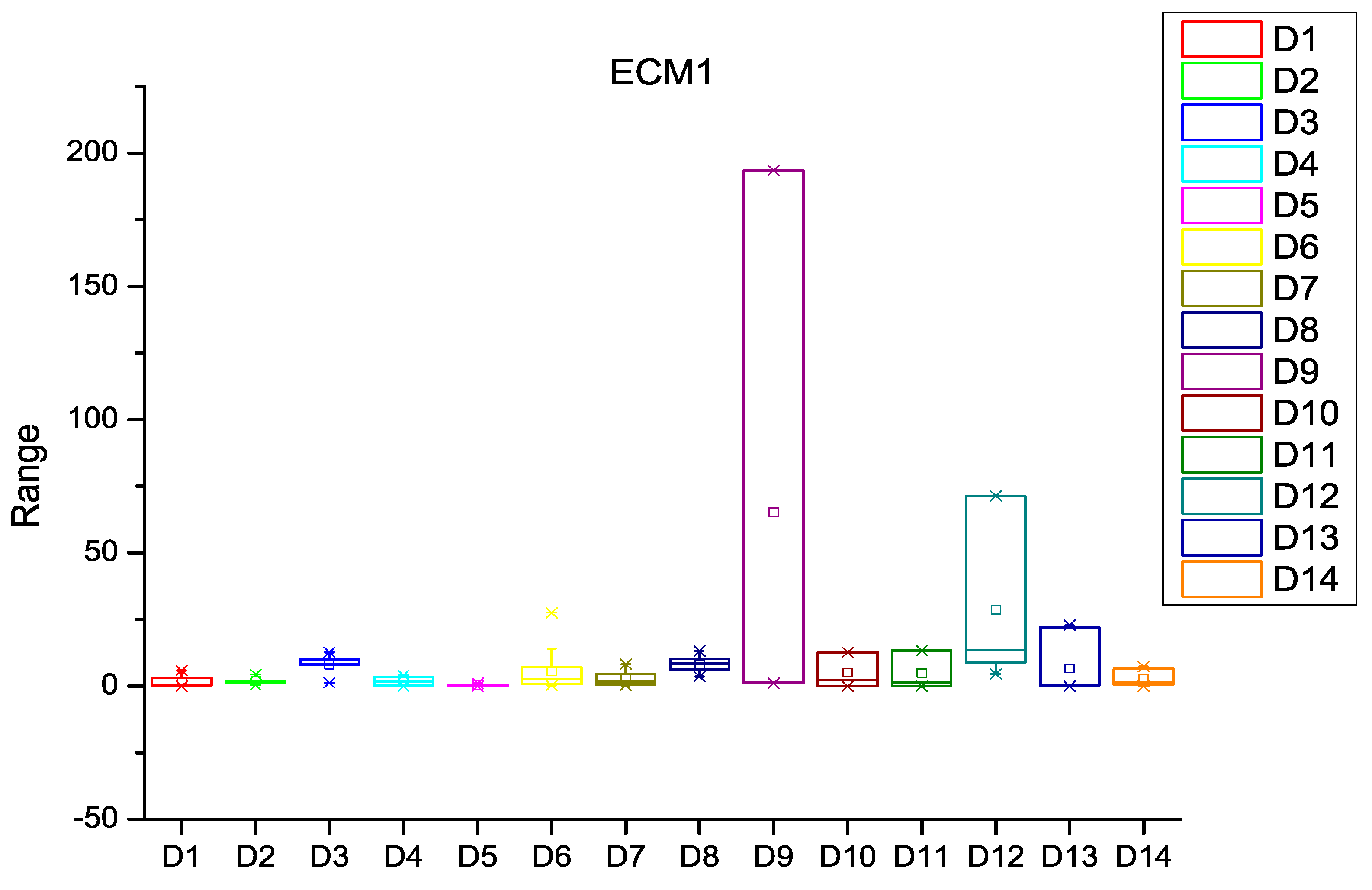

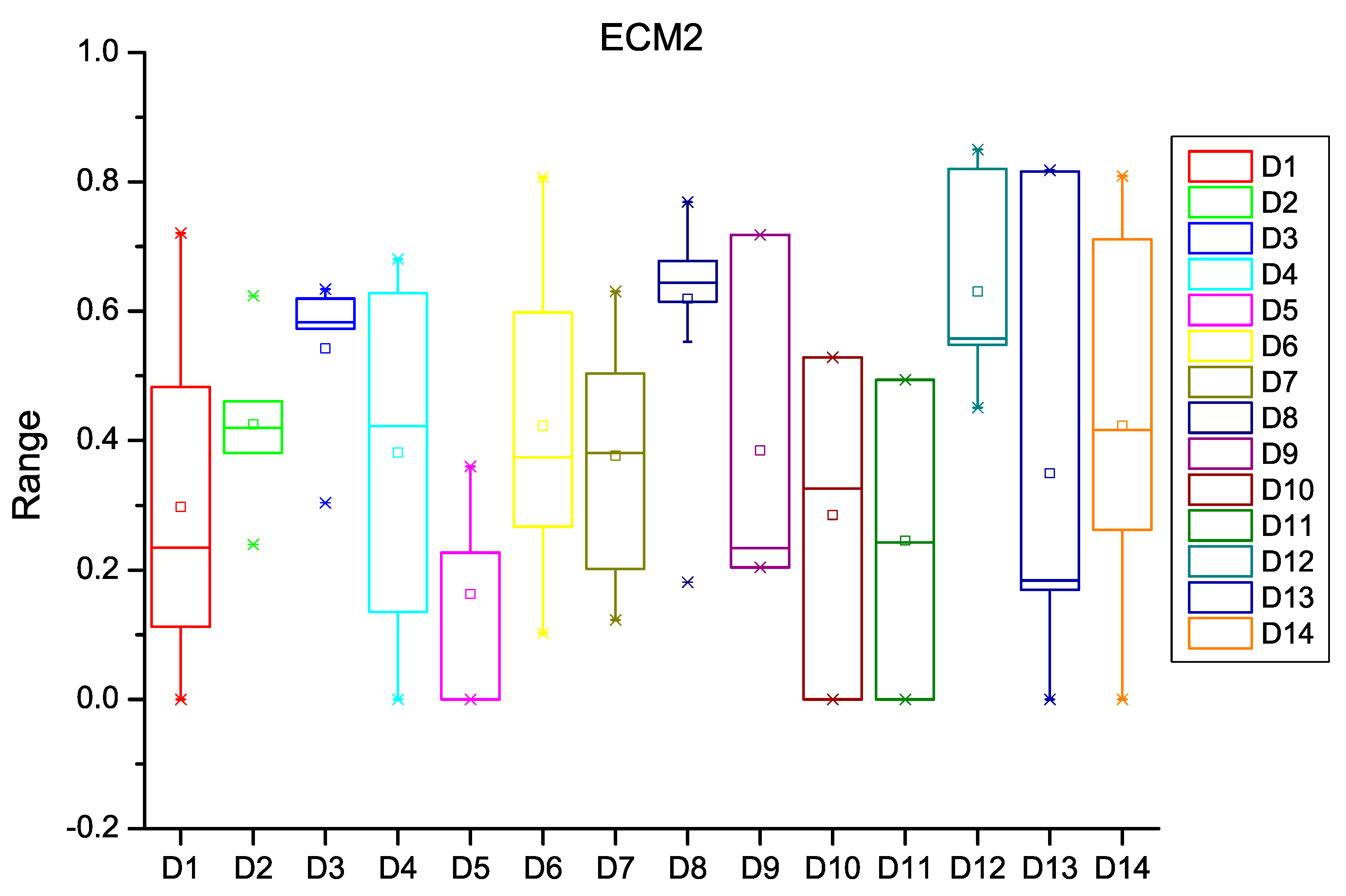

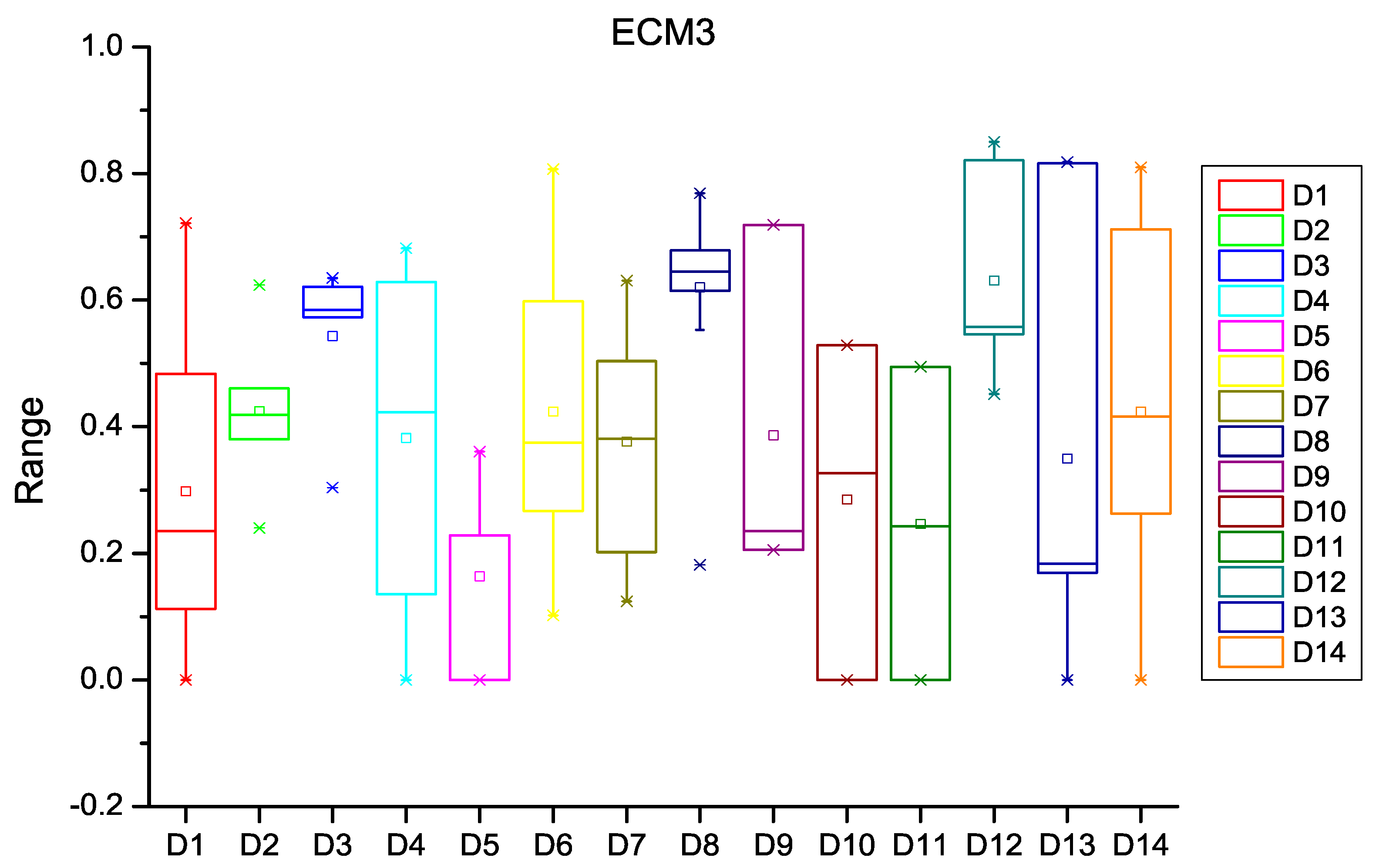

3.3. Entropy Churn Metrics (ECM)

4. Research Methodology

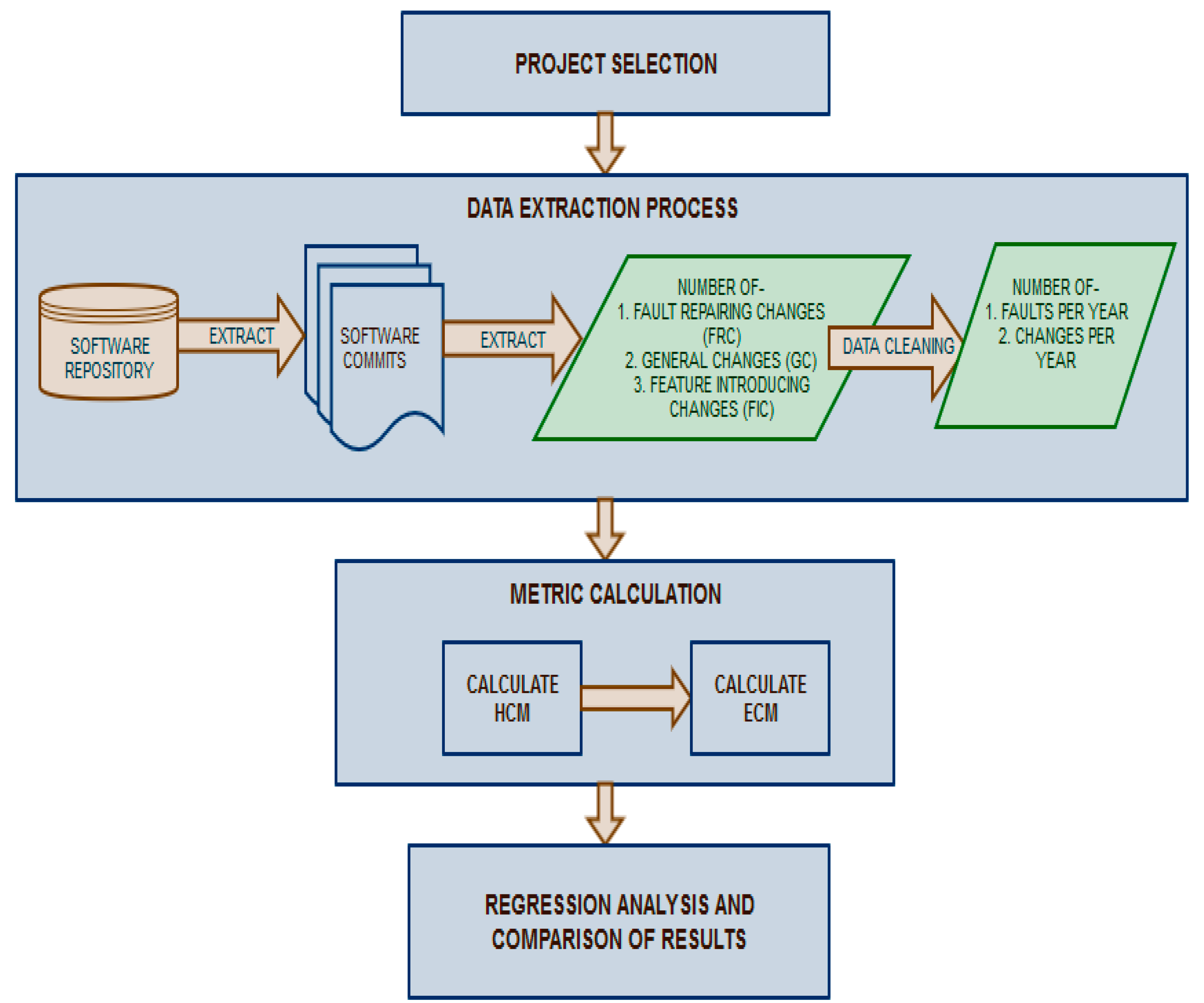

- Project Selection: The first step was to determine the software projects and subsystems to be studied. The details of the software projects considered in this study are given in Section 4.1.

- Data Extraction: The second step was to collect the number of changes per year and the number of faults per year in the selected subsystems. This was done by first extracting the commits from the software repositories and then analyzing each commit to determine the type of change it made. The resulting counts of changes of each type were then cleaned to determine the number of changes and faults per year. The data extraction process, along with metric calculation, is described in detail in Section 4.2.

- Metric Calculation: The third step was metric calculation, which used the data collected in step two for calculating HCM and ECM. The data extraction and metric calculation processes are explained in Section 4.2.

- Regression Analysis and Comparison of Results: In the next step, the two metrics (i.e., ECM and HCM) were compared for fault prediction using regression analysis. Finally, the results were analyzed and the study was concluded. The results are analyzed and discussed in Section 5 and Section 6, respectively.

4.1. Selected Software Projects and Subsystems

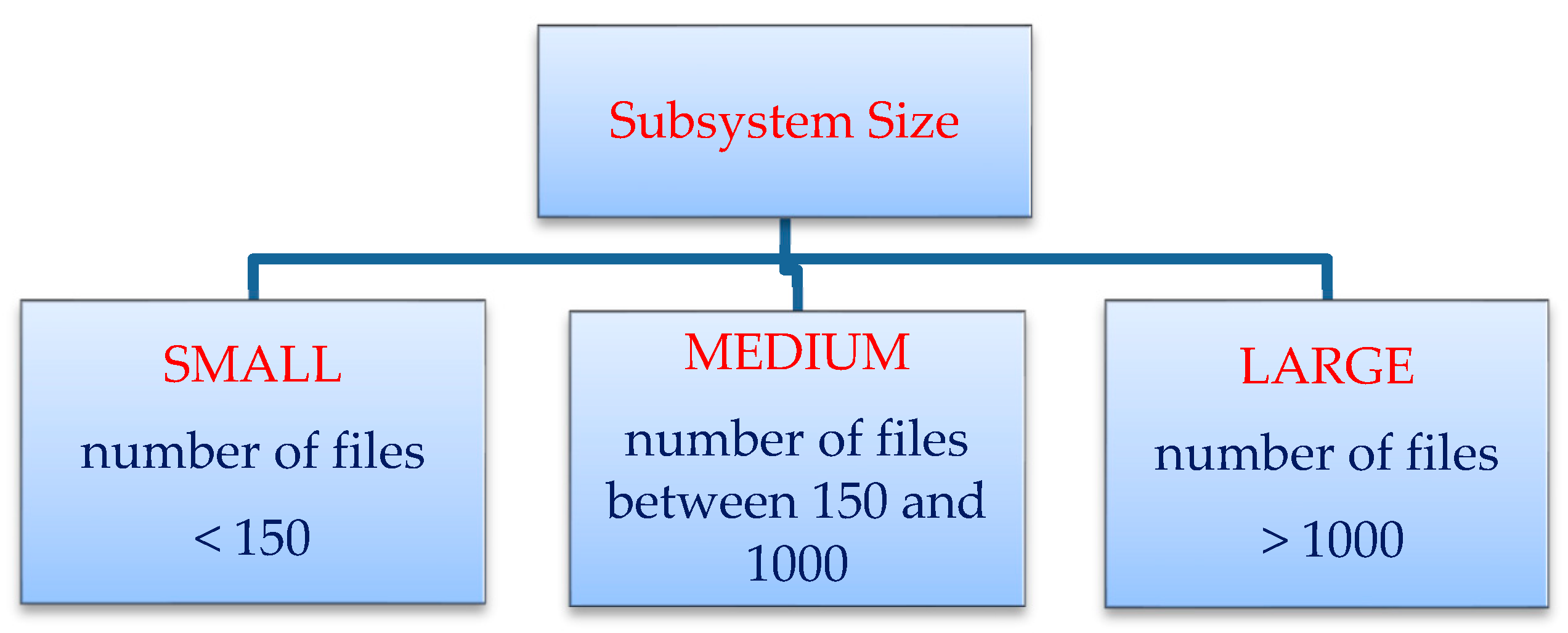

- Size and Lifetime: The selected subsystems included small-, medium-, and large-sized systems, as well as both systems that had been released for several years and new systems released only a few years ago. This criterion enabled us to determine the impact of system size on the prediction power of HCM and ECM.

- Programming Language: The selected subsystems were written in different programming languages, namely Java, C, and C++, so that the impact of a subsystem's programming language on the prediction performance of ECM and HCM could be studied.

- Availability of Data: All data regarding changes in the selected subsystems were extracted from publicly accessible open-source software repositories.

4.2. Data Extraction and Metrics Calculation

- Fault Repairing Changes (FRCs): changes made to the software system to remove a bug or fault. These changes usually represent the fault resolution process rather than the code change process.

- General Changes (GCs): changes made for maintenance purposes that do not reflect any changed feature in the code. Examples include changes to copyright files and reindentation of the code.

- Feature-Introducing Changes (FICs): changes made with the intention of enhancing the features of the software system. These changes truly reflect the code change process.
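Classifying commits into FRCs, GCs, and FICs and then counting them per year can be sketched with keyword matching on commit messages. This is a minimal illustration, not the classifier used in the study: the regular expressions are hypothetical, and counting FICs as changes and FRCs as faults (with GCs discarded) is an assumption drawn from the descriptions above.

```python
import re
from collections import Counter
from typing import Iterable, Tuple

# Hypothetical keyword patterns; real classification rules are likely richer.
FRC_PATTERN = re.compile(r"\b(fix(e[sd])?|bug|fault|defect|patch)\b", re.IGNORECASE)
GC_PATTERN = re.compile(r"\b(copyright|licen[cs]e|(re)?indent(ation|ed)?|whitespace)\b", re.IGNORECASE)


def classify(message: str) -> str:
    """Label a commit message as FRC, GC or FIC (FIC is the default)."""
    if FRC_PATTERN.search(message):
        return "FRC"
    if GC_PATTERN.search(message):
        return "GC"
    return "FIC"


def yearly_counts(commits: Iterable[Tuple[int, str]]) -> Tuple[Counter, Counter]:
    """Return (changes per year, faults per year) from (year, message) pairs.

    Assumption: FICs count as changes, FRCs count as faults, GCs are dropped.
    """
    changes, faults = Counter(), Counter()
    for year, message in commits:
        kind = classify(message)
        if kind == "FIC":
            changes[year] += 1
        elif kind == "FRC":
            faults[year] += 1
    return changes, faults


if __name__ == "__main__":
    log = [
        (2015, "Fix null pointer bug in parser"),
        (2015, "Add export feature"),
        (2016, "Update copyright header"),
        (2016, "Implement caching layer"),
    ]
    print(yearly_counts(log))
```

Checking the FRC pattern first means a message such as "fix indentation bug" is treated as fault repair; a production classifier would need a deliberate precedence rule for such mixed messages.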

5. Results

- Feature selection method: M5 Prime

- Eliminate collinear features: TRUE

- Minimum tolerance: 0.05

- Use bias: TRUE

- Ridge: 1.0 × 10−8

- Distribution of number of faults per year

- No. of files in the subsystem

- Programming language
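The regression settings listed above (a bias term and a ridge of 1.0 × 10−8) can be illustrated with a one-predictor linear model that regresses faults per year on a single metric. This sketch assumes one metric per model and penalizes only the slope, leaving the bias unpenalized; it does not reproduce M5 Prime feature selection or collinearity elimination, which only matter with several predictors. RMSE is included as one common error measure, not necessarily the one behind the reported results.

```python
from typing import List, Tuple


def fit_ridge_1d(x: List[float], y: List[float], ridge: float = 1e-8) -> Tuple[float, float]:
    """Fit y ~ intercept + slope * x with an L2 penalty on the slope only.

    With an unpenalized bias, the optimum is slope = Sxy / (Sxx + ridge)
    and intercept = mean(y) - slope * mean(x).
    """
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need at least two paired observations")
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    slope = sxy / (sxx + ridge)
    return my - slope * mx, slope


def rmse(y_true: List[float], y_pred: List[float]) -> float:
    """Root mean squared error between observed and predicted fault counts."""
    return (sum((a - b) ** 2 for a, b in zip(y_true, y_pred)) / len(y_true)) ** 0.5


if __name__ == "__main__":
    metric = [1.0, 2.0, 3.0, 4.0]   # e.g. yearly HCM or ECM values (illustrative)
    faults = [3.0, 5.0, 7.0, 9.0]   # faults per year (illustrative)
    b, w = fit_ridge_1d(metric, faults)
    print(b, w, rmse(faults, [b + w * m for m in metric]))
```

A ridge this small leaves the fit practically identical to ordinary least squares; its role is mainly to keep the computation numerically stable when the predictor has very little variance.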

5.1. Distribution of Faults

5.2. System Size

5.3. Language

6. Discussion

- For normally distributed faults, ECM performed better on some datasets and HCM on others. For non-normally distributed faults, however, HCM always gave results better than or comparable to those of ECM. Thus, either metric may be used when the distribution of faults is normal, but HCM should be preferred when it is non-normal.

- With respect to system size, HCM gave results better than or comparable to those of ECM for small-sized systems, whereas ECM outperformed HCM for medium- and large-sized systems. Thus, ECM is recommended for medium- and large-sized systems and HCM for small-sized systems.

- Lastly, with respect to programming language, the results obtained using HCM were always better than or comparable to those obtained using ECM when the subsystem was written in C/C++. For Java subsystems, neither metric was consistently better: ECM gave better results in some cases and HCM in others. Hence, HCM should be preferred for systems written in C/C++, while either ECM or HCM may be used for systems written in Java.
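These per-factor observations can be cross-checked against the per-dataset values reported in the results tables of this article. The sketch assumes that the six values per dataset are prediction errors (lower is better), compares the best HCM variant with the best ECM variant, and treats exactly equal values as comparable; C and C++ subsystems are grouped together as in the discussion. The helper names are hypothetical.

```python
from collections import Counter, defaultdict
from typing import Dict, Tuple

# (HCM1, HCM2, HCM3, ECM1, ECM2, ECM3) per dataset; assumed to be errors (lower is better).
ERRORS: Dict[str, Tuple[float, ...]] = {
    "D1": (2.757, 1.396, 1.396, 11.500, 11.500, 11.500),
    "D2": (4.680, 4.261, 4.261, 7.658, 7.658, 7.658),
    "D3": (17.691,) * 6,
    "D4": (1.920,) * 6,
    "D5": (2.059, 2.059, 2.059, 1.359, 2.059, 2.059),
    "D6": (15.756,) * 6,
    "D7": (13.877, 16.990, 16.990, 23.356, 25.748, 25.748),
    "D8": (42.682, 42.270, 42.270, 46.546, 46.546, 46.546),
    "D9": (381.786, 381.786, 381.786, 76.917, 57.880, 57.880),
    "D10": (69.801, 69.801, 69.801, 5.711, 37.700, 37.700),
    "D11": (12.656, 12.656, 12.656, 3.856, 41.272, 41.272),
    "D12": (140.827, 137.312, 137.207, 451.133, 451.133, 451.133),
    "D13": (74.449, 66.326, 66.326, 161.429, 161.429, 161.429),
    "D14": (30.223,) * 6,
}

# (fault distribution, subsystem size, language); C and C++ grouped as "C/C++".
FACTORS: Dict[str, Tuple[str, str, str]] = {
    "D1": ("Normal", "Small", "Java"), "D2": ("Normal", "Small", "Java"),
    "D3": ("Normal", "Small", "Java"), "D4": ("Normal", "Medium", "Java"),
    "D5": ("Normal", "Medium", "Java"), "D6": ("Normal", "Small", "C/C++"),
    "D7": ("Non-normal", "Small", "C/C++"), "D8": ("Non-normal", "Small", "C/C++"),
    "D9": ("Normal", "Large", "Java"), "D10": ("Normal", "Medium", "Java"),
    "D11": ("Normal", "Medium", "Java"), "D12": ("Normal", "Small", "C/C++"),
    "D13": ("Normal", "Small", "C/C++"), "D14": ("Non-normal", "Small", "C/C++"),
}


def winner(errs: Tuple[float, ...]) -> str:
    """Compare the best (lowest) HCM variant with the best ECM variant."""
    best_hcm, best_ecm = min(errs[:3]), min(errs[3:])
    if best_hcm < best_ecm:
        return "HCM"
    if best_ecm < best_hcm:
        return "ECM"
    return "tie"


def tally(factor: int) -> Dict[str, Counter]:
    """Count HCM wins, ECM wins and ties per level of one factor (0, 1 or 2)."""
    counts: Dict[str, Counter] = defaultdict(Counter)
    for name, errs in ERRORS.items():
        counts[FACTORS[name][factor]][winner(errs)] += 1
    return dict(counts)


if __name__ == "__main__":
    for index, label in enumerate(("distribution", "size", "language")):
        print(label, {level: dict(c) for level, c in tally(index).items()})
```

Under these assumptions, small subsystems give six HCM wins and three ties, medium subsystems three ECM wins and one tie, and the single large subsystem an ECM win; C/C++ subsystems show no ECM wins, while Java subsystems are split (two HCM wins, four ECM wins, two ties).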

7. Threats to Validity

7.1. Threats to Internal Validity

7.2. Threats to External Validity

8. Conclusion

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Dataset | HCM1 | HCM2 | HCM3 | ECM1 | ECM2 | ECM3 |
|---|---|---|---|---|---|---|
| D1 | 2.757 | 1.396 | 1.396 | 11.500 | 11.500 | 11.500 |
| D2 | 4.680 | 4.261 | 4.261 | 7.658 | 7.658 | 7.658 |
| D3 | 17.691 | 17.691 | 17.691 | 17.691 | 17.691 | 17.691 |
| D4 | 1.920 | 1.920 | 1.920 | 1.920 | 1.920 | 1.920 |
| D5 | 2.059 | 2.059 | 2.059 | 1.359 | 2.059 | 2.059 |
| D6 | 15.756 | 15.756 | 15.756 | 15.756 | 15.756 | 15.756 |
| D7 | 13.877 | 16.990 | 16.990 | 23.356 | 25.748 | 25.748 |
| D8 | 42.682 | 42.270 | 42.270 | 46.546 | 46.546 | 46.546 |
| D9 | 381.786 | 381.786 | 381.786 | 76.917 | 57.880 | 57.880 |
| D10 | 69.801 | 69.801 | 69.801 | 5.711 | 37.700 | 37.700 |
| D11 | 12.656 | 12.656 | 12.656 | 3.856 | 41.272 | 41.272 |
| D12 | 140.827 | 137.312 | 137.207 | 451.133 | 451.133 | 451.133 |
| D13 | 74.449 | 66.326 | 66.326 | 161.429 | 161.429 | 161.429 |
| D14 | 30.223 | 30.223 | 30.223 | 30.223 | 30.223 | 30.223 |

| Test Statistics | Value |
|---|---|
| N | 14 |
| Chi-Square | 10.190 |
| df | 5 |
| Asymptotic Significance | 0.070 |
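The reported statistics (N = 14, df = 5) are consistent with a Friedman test comparing the six metrics across the 14 datasets. Assuming a Friedman test with the usual correction for tied ranks, the sketch below recomputes the statistic from the per-dataset values in the first table. The helper names are hypothetical, and the p-value is obtained from a series expansion of the regularized lower incomplete gamma function rather than from a statistics library.

```python
import math
from typing import List, Sequence


def average_ranks(row: Sequence[float]) -> List[float]:
    """Rank values in ascending order; tied values share the mean of their ranks."""
    order = sorted(range(len(row)), key=lambda i: row[i])
    ranks = [0.0] * len(row)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and row[order[j + 1]] == row[order[i]]:
            j += 1
        for t in range(i, j + 1):
            ranks[order[t]] = (i + j) / 2 + 1  # 1-based mean rank of the tie group
        i = j + 1
    return ranks


def friedman(rows: List[Sequence[float]]) -> float:
    """Tie-corrected Friedman chi-square; rows are datasets, columns are metrics."""
    n, k = len(rows), len(rows[0])
    rank_sums = [0.0] * k
    tie_term = 0.0
    for row in rows:
        for j, r in enumerate(average_ranks(row)):
            rank_sums[j] += r
        for v in set(row):
            t = row.count(v)  # size of this tie group (t = 1 adds nothing)
            tie_term += t ** 3 - t
    stat = 12.0 / (n * k * (k + 1)) * sum(s * s for s in rank_sums) - 3.0 * n * (k + 1)
    return stat / (1.0 - tie_term / (n * k * (k * k - 1)))


def chi2_sf(x: float, df: int) -> float:
    """Upper-tail chi-square probability: 1 - P(df/2, x/2) via its power series."""
    a, z = df / 2.0, x / 2.0
    term = 1.0 / math.gamma(a + 1)
    total, n = term, 0
    while term > 1e-16 * total:
        n += 1
        term *= z / (a + n)
        total += term
    return 1.0 - z ** a * math.exp(-z) * total


ROWS = [
    (2.757, 1.396, 1.396, 11.500, 11.500, 11.500),
    (4.680, 4.261, 4.261, 7.658, 7.658, 7.658),
    (17.691,) * 6,
    (1.920,) * 6,
    (2.059, 2.059, 2.059, 1.359, 2.059, 2.059),
    (15.756,) * 6,
    (13.877, 16.990, 16.990, 23.356, 25.748, 25.748),
    (42.682, 42.270, 42.270, 46.546, 46.546, 46.546),
    (381.786, 381.786, 381.786, 76.917, 57.880, 57.880),
    (69.801, 69.801, 69.801, 5.711, 37.700, 37.700),
    (12.656, 12.656, 12.656, 3.856, 41.272, 41.272),
    (140.827, 137.312, 137.207, 451.133, 451.133, 451.133),
    (74.449, 66.326, 66.326, 161.429, 161.429, 161.429),
    (30.223,) * 6,
]

if __name__ == "__main__":
    stat = friedman(ROWS)
    # prints: chi-square = 10.190, df = 5, p = 0.070
    print(f"chi-square = {stat:.3f}, df = {len(ROWS[0]) - 1}, p = {chi2_sf(stat, 5):.3f}")
```

The tie correction matters here: several datasets (D3, D4, D6, D14) give identical values for all six metrics, and without the correction the statistic would be only about 6.01.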

| Dataset | Distribution |
|---|---|
| D1 | Normal |
| D2 | Normal |
| D3 | Normal |
| D4 | Normal |
| D5 | Normal |
| D6 | Normal |
| D7 | Non-normal |
| D8 | Non-normal |
| D9 | Normal |
| D10 | Normal |
| D11 | Normal |
| D12 | Normal |
| D13 | Normal |
| D14 | Non-normal |

| Dataset | No. of Files | Subsystem Size |
|---|---|---|
| D1 | 17 | Small |
| D2 | 18 | Small |
| D3 | 80 | Small |
| D4 | 13 | Medium |
| D5 | 21 | Medium |
| D6 | 61 | Small |
| D7 | 38 | Small |
| D8 | 34 | Small |
| D9 | 2063 | Large |
| D10 | 385 | Medium |
| D11 | 745 | Medium |
| D12 | 132 | Small |
| D13 | 43 | Small |
| D14 | 14 | Small |

| Dataset | Programming Language |
|---|---|
| D1 | Java |
| D2 | Java |
| D3 | Java |
| D4 | Java |
| D5 | Java |
| D6 | C |
| D7 | C |
| D8 | C |
| D9 | Java |
| D10 | Java |
| D11 | Java |
| D12 | C++ |
| D13 | C++ |
| D14 | C++ |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Kaur, A.; Chopra, D. Entropy Churn Metrics for Fault Prediction in Software Systems. Entropy 2018, 20, 963. https://doi.org/10.3390/e20120963
