1. Introduction

Physical processes naturally fall into two broad classes: reversible and irreversible. Time reversal of the former yields physically possible processes, whereas for the latter it implies manifestly unphysical outcomes. Mathematical descriptions of irreversible processes are correspondingly not invariant under time reversal: the equations running backward in time differ from those evolving forward in time.

Typical examples of irreversible dynamics include diffusion processes, describing either molecular or turbulent diffusion, as well as heat conduction and chemical reactions. The diffusion equation

$$\frac{\partial p(x,t)}{\partial t} = \frac{\partial^2 p(x,t)}{\partial x^2} \tag{1}$$

is the representative example, where $p(x,t)$ is a probability distribution function (PDF) that describes the probability of finding a particle at position $x$ at time $t$. For the diffusion equation, solutions running backward in time correspond to a process of “undiffusing”: under such a process, diluted ink would, for example, gather itself back into the drops of ink initially added to the mixture.

That is not so for reversible processes. Typical examples of reversible dynamics are Hamiltonian mechanics, quantum mechanics, and classical electrodynamics. These are iconically represented by the wave equation

$$\frac{\partial^2 p(x,t)}{\partial t^2} = \frac{\partial^2 p(x,t)}{\partial x^2}, \tag{2}$$

which is unchanged under time reversal. All that happens is a reversal of the propagation direction, which remains physical: if a light wave encounters a mirror, it simply travels back the way it came. Furthermore, wave phenomena are characterized by propagation, unlike diffusion, which is characterized by dispersion without propagation.

These fundamental differences between the diffusion and the wave equation are also apparent on mathematical grounds and in their solutions. The former relaxes on all spatial scales at once, with disturbances felt instantaneously out to infinity, whereas the latter propagates at finite speed. Mathematically, the diffusion equation is parabolic and has a single family of characteristics, whereas the wave equation is hyperbolic with two families of characteristics. In addition, parabolic and hyperbolic equations call for different numerical treatments.

As a result, these two prototypical equations seem to be clearly separated and unconnected. It was thus a fascinating undertaking to seek a way to join these two worlds nonetheless and to study the consequences. The first way to accomplish such a connection was to use fractional calculus in the form of the time-fractional diffusion equation of order $\gamma$,

$$\frac{\partial^\gamma p(x,t)}{\partial t^\gamma} = \frac{\partial^2 p(x,t)}{\partial x^2}. \tag{3}$$

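The fractional derivative $\partial^\gamma/\partial t^\gamma$ is defined via an integral as

$$\frac{d^\gamma f(t)}{dt^\gamma} = \frac{d^m}{dt^m}\left[\frac{1}{\Gamma(n-\gamma)}\int_0^t (t-\tau)^{n-\gamma-1}\,\frac{d^{n-m} f(\tau)}{d\tau^{n-m}}\,d\tau\right] \tag{4}$$

with $n-1 < \gamma < n$, $n \in \mathbb{N}$, and $m \in \{0, 1, \dots, n\}$. Solving the (fractional) diffusion equation is essentially an initial value problem. Davison and Essex [1] were the first to prove that only the $m = 0$ case works for normal initial value problems. Thus, the fractional derivative is given as

$$\frac{\partial^\gamma p(x,t)}{\partial t^\gamma} = \frac{1}{\Gamma(n-\gamma)}\int_0^t (t-\tau)^{n-\gamma-1}\,\frac{\partial^n p(x,\tau)}{\partial \tau^n}\,d\tau, \tag{5}$$

which is also known as the Caputo derivative [2].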
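Equation (5) can also be evaluated numerically. The Python sketch below is an illustration and not code from [1,2]. It assumes the $n$-th ordinary derivative $f^{(n)}$ is available as a function. It uses product integration: the weakly singular kernel $(t-\tau)^{n-\gamma-1}$ is integrated exactly on each subinterval, and $f^{(n)}$ is sampled at the subinterval midpoint. The name `caputo_derivative` and the default step count are illustrative choices.

```python
import math


def caputo_derivative(f_n, t, gamma, n_steps=2000):
    """Approximate the Caputo derivative of non-integer order gamma at time t > 0.

    f_n returns the n-th ordinary derivative f^(n)(tau), with n = ceil(gamma).
    The kernel (t - tau)^(n - gamma - 1) is integrated exactly on each
    subinterval (product integration); f^(n) is sampled at the midpoint.
    """
    n = math.ceil(gamma)
    if gamma <= 0 or gamma == n:
        raise ValueError("gamma must be positive and non-integer")
    a = n - gamma  # 0 < a < 1
    h = t / n_steps
    total = 0.0
    for k in range(n_steps):
        tau0 = k * h
        tau1 = t if k == n_steps - 1 else (k + 1) * h  # end exactly at t
        # exact integral of (t - tau)^(a - 1) over [tau0, tau1]
        weight = ((t - tau0) ** a - (t - tau1) ** a) / a
        total += weight * f_n(0.5 * (tau0 + tau1))
    return total / math.gamma(a)


# f(t) = t^3, so f''(t) = 6 t; closed form: 6 t^(3 - gamma) / Gamma(4 - gamma)
gamma = 1.5
approx = caputo_derivative(lambda tau: 6.0 * tau, 1.0, gamma)
exact = 6.0 / math.gamma(4.0 - gamma)
print(approx, exact)
```

For $f(t) = t^3$ and $\gamma = 1.5$ the sketch agrees closely with the closed form. Increasing `n_steps` reduces the remaining error, which comes from the kernel singularity near $\tau = t$.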

For the time-fractional diffusion Equation (3), the domain is $0 \le x < \infty$ and $t \ge 0$. Negative $x$ adds nothing, because of spatial symmetry about the origin. The result is an evolution equation that contains both the diffusion equation, $\gamma = 1$, and the wave equation, $\gamma = 2$, as special cases. Equation (3) with $1 \le \gamma \le 2$ thus represents a bridge between two different worlds [3,4,5,6].

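The obvious probe for exploring this extraordinary bridging regime is a quantity that captures how the property of reversibility changes as one moves from diffusion to waves. The obvious first candidates are the classical (Shannon) entropy

$$S(t) = -\int_\Omega p(x,t)\,\ln p(x,t)\,dx, \tag{6}$$

where $\Omega$ is the spatial domain, and particularly the entropy production. The entropy production rate (EPR) [3,7,8] quantifies how irreversible a process is:

$$\frac{dS}{dt} = -\int_\Omega \frac{\partial p}{\partial t}\,\bigl(\ln p + 1\bigr)\,dx = -\int_\Omega \frac{\partial p}{\partial t}\,\ln p\,dx,$$

where the last step uses conservation of probability.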

The reversibility of the wave equation and the irreversibility of the diffusion equation manifest themselves in the entropy production during the time evolution. In the diffusion case, the entropy production is positive, while for wave propagation it is zero. One thus expects that, in the transition from the diffusion case $\gamma = 1$ to the wave case $\gamma = 2$, the EPR will decrease and vanish in the reversible limit. However, this expectation has been shown to be erroneous, and the corresponding phenomenon has been dubbed the entropy production paradox for fractional diffusion [3]. The entropy production paradox exhibits remarkable robustness, but that does not mean it is universal [9,10]. A key question is why it exists at all.
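The diffusive reference case can be checked concretely. The Python sketch below is an illustration rather than code from [3,7,8]. It evaluates the entropy (6) for the $\gamma = 1$ solution on the half-line with a reflecting origin, $p(x,t) = e^{-x^2/4t}/\sqrt{\pi t}$, and differentiates it numerically. It assumes a unit diffusion coefficient. The expected results are $S(t) = \tfrac12\ln(\pi e t)$ and $dS/dt = 1/(2t) > 0$.

```python
import math


def half_line_pdf(x, t):
    """gamma = 1 solution on x >= 0 (reflecting origin), p(x, 0) = delta(x), unit diffusivity."""
    return math.exp(-x * x / (4.0 * t)) / math.sqrt(math.pi * t)


def entropy(t, n_points=20000):
    """S(t) = -int_0^inf p ln p dx by the midpoint rule on [0, 20 sqrt(t)]."""
    dx = 20.0 * math.sqrt(t) / n_points
    s = 0.0
    for i in range(n_points):
        p = half_line_pdf((i + 0.5) * dx, t)
        if p > 0.0:  # guard against log(0) far in the tail
            s -= p * math.log(p) * dx
    return s


def entropy_production_rate(t, dt=1e-4):
    """Central difference of S(t); the exact EPR is 1 / (2 t)."""
    return (entropy(t + dt) - entropy(t - dt)) / (2.0 * dt)


for t in (0.5, 1.0, 2.0):
    print(t, entropy_production_rate(t), 1.0 / (2.0 * t))
```

The rate is positive and decays like $1/t$, which is the irreversible behavior the wave limit is expected to remove.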

In this short note, we review the original results for the entropy production paradox [3,6] in the time-fractional context that arises from the above equations, and we explain why the paradox occurs. We pay particular attention to how the symmetries of the extraordinary differential Equation (3) lead to the unexpected result: the EPR increases as the solution approaches the reversible limit.

Furthermore, we sketch how this scaling symmetry plays out in a formally different extraordinary differential equation with a space-fractional derivative. That equation has completely different solutions and domains but leads to the same remarkable outcome [6,11]: the EPR increases in the reversible limit. We also observe in passing that this paradox persists even for generalizations of entropy available in the literature, such as the Tsallis and Rényi entropies [4,6,12,13,14]. In this context, we note that the entropy production paradox might have implications for applications in finance [15,16], ecology [17], computational neuroscience [18], and physics [19,20,21].

We then widen the scope of the entropy production paradox by leaving the realm of systems that are continuous in space, and we investigate the entropy production for time-fractional diffusion that is discrete in space. We generalize a classical master equation describing diffusion on a chain of points by replacing the time derivative with a time-fractional one, thus opening up the full range up to the wave equation on the chain. We find an EPR rich in features. In particular, the scaling symmetry persists even in this finite discrete scenario governed by a master equation, allowing the paradoxical behavior to reveal itself until finite-size effects dominate. We can thus conclude that this paradoxical behavior is far from unique and extraordinarily robust.

2. The Paradox for Time-Fractional Diffusion

The time-fractional problem (3) is fully specified by the initial conditions

$$p(x,0) = \delta(x), \qquad \left.\frac{\partial p(x,t)}{\partial t}\right|_{t=0} = 0, \tag{9}$$

where $\delta(x)$ represents the delta distribution.

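The solution of Equation (3) is known in terms of the Fox H-function (for details, see [5,22]) as

$$p(x,t) = \frac{1}{t^{\gamma/2}}\,H^{1,0}_{1,1}\!\left[\frac{x}{t^{\gamma/2}}\;\middle|\;\begin{matrix}(1-\gamma/2,\;\gamma/2)\\(0,\;1)\end{matrix}\right]. \tag{10}$$

Note that a different $p(x,t)$ is obtained for each $\gamma$. In accordance with [23], the second initial condition in Equation (9) is set to zero to guarantee continuity at $\gamma = 1$.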

2.1. The Transformation Group

Interesting as the H-function is in its own right, it has little to do with the nature of the regime properties in terms of entropy production. This can be seen by considering the similarity group $\hat{x} = \lambda^{a} x$ and $\hat{t} = \lambda^{b} t$, choosing $a = \gamma b/2$. Equation (3) is invariant under this group, and thus solutions, $u(x,t)$, can be found in terms of a similarity variable,

$$\xi = \frac{x}{t^{\gamma/2}}. \tag{11}$$

We note that $u$ is a solution of an auxiliary equation [3], which is not relevant here.

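The probability distribution $p(x,t)$ is not a similarity solution in its own right. Nevertheless, the similarity variable $\xi$, with its scaling property, is essential to the form of $p(x,t)$, which must have a time-independent integral over the domain, $\Omega = [0,\infty)$, in $x$. With $\Omega_\xi = [0,\infty)$ being the corresponding domain in $\xi$, one finds

$$\int_0^\infty p(x,t)\,dx = \int_0^\infty p\!\left(\xi t^{\gamma/2}, t\right) t^{\gamma/2}\,d\xi = 1.$$

This suggests the primary form for the PDF,

$$p(x,t) = \frac{1}{t^{\gamma/2}}\,f(\xi), \tag{13}$$

leading to

$$\int_0^\infty f(\xi)\,d\xi = 1,$$

which confirms the form (13) for the probability density. A quick observation reveals that this is precisely the form of (10).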

2.2. Entropy Production Directly from the PDF

Inserting Equation (13) into (6) leads to

$$S(t) = \frac{\gamma}{2}\ln t + S_0(\gamma), \qquad S_0(\gamma) = -\int_0^\infty f(\xi)\,\ln f(\xi)\,d\xi,$$

where $S_0(\gamma)$ decreases monotonically with $\gamma$ [5]. It follows that, for $t > 0$,

$$\frac{dS}{dt} = \frac{\gamma}{2t},$$

which shows that entropy production decreases with time but increases with $\gamma$. Increasing $\gamma$ corresponds to the direction of the reversible limit in $\gamma$-space. Moreover, this property clearly has nothing whatever to do with the specific form of the PDF in (10).

This is the primary form of the entropy production paradox. Instead of decreasing while approaching the reversible wave case, i.e., as $\gamma$ increases towards 2, the entropy production increases, up to a final discontinuous jump at $\gamma = 2$ down to zero.

2.3. Entropy as Order Function

Perhaps this paradoxical behaviour is misleading. After all, each $\gamma$ can be considered to represent a separate system with its own intrinsic rates, and one might argue that the EPR does not represent the physically meaningful ordering of states in this domain.

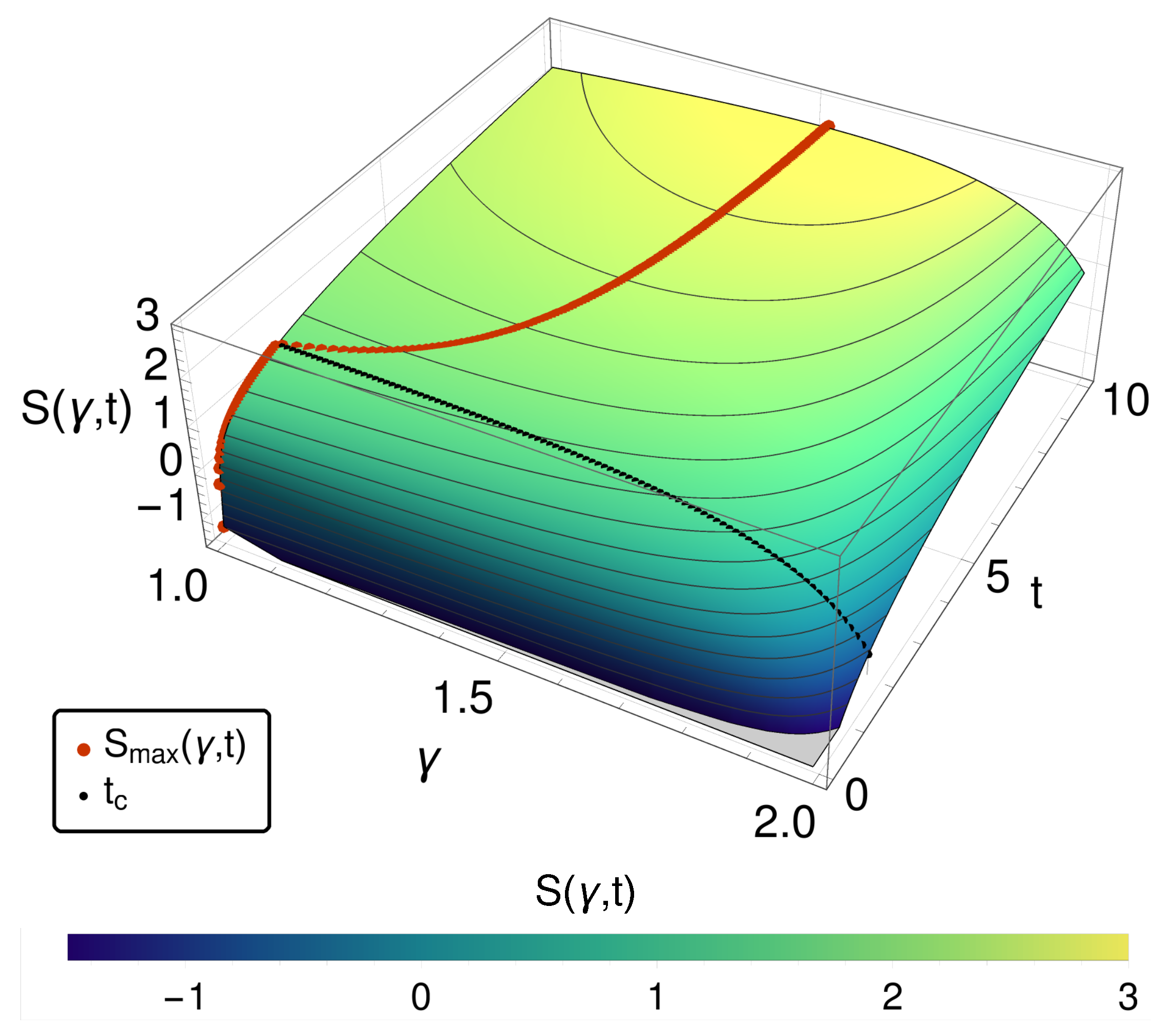

One alternative is the entropy itself. In Figure 1, the entropy $S$ is shown as a function of $\gamma$ and time $t$. For small times, $S$ decreases monotonically with increasing $\gamma$, i.e., the entropy decreases as the reversible case is approached. However, after a critical time $t_c$, a maximum of the entropy appears within $1 < \gamma < 2$. (A detailed discussion can be found in [5].)

Thus, comparing the different probability distributions at constant times, whether via the entropy production or via the entropy $S$, does not reproduce the standard notion that these quantities should decrease towards the reversible case.

This leaves us with an entropy production that changes in a paradoxical manner, or with an entropy that, because of the maximum, does not even order the states between diffusion and waves. However, perhaps the notion of ordering the respective systems along lines of constant time misses an essential aspect of the matter.

Examination of the computed PDFs shows that the solutions between diffusion and waves exhibit properties of both dissipation and propagation simultaneously. The peak of the PDF moves into the half space while it broadens. As $\gamma$ grows, the peak tightens and moves more quickly, until it approaches a delta distribution in the limit $\gamma \to 2$. In this sense, it is a precursor to propagation. We call it pseudo propagation, as it is not strict propagation in a mathematical sense.

As this pseudo propagation is more rapid for larger $\gamma$, the different systems in $\gamma$ appear to operate with different internal clocks: larger $\gamma$ means the process has greater “quickness”.

In this case, the property may be captured by the rate of movement of the mode of the PDF. Although the mode is not known analytically, it exists and can be determined numerically. The mean (first moment), by contrast, does not always exist in such problems, as we shall see in the case below.

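The time-dependent mode of this distribution can be determined from the mode $\xi_m(\gamma)$ of $f(\xi)$, i.e., the mode of $p$ at $t = 1$, via

$$x_m(t) = \xi_m(\gamma)\, t^{\gamma/2}.$$

From this, we can determine a corresponding time, $t_\gamma$, at which the mode reaches a given position $x_m$. This time puts each system at a similar evolutionary position as a function of $\gamma$:

$$t_\gamma(x_m) = \left(\frac{x_m}{\xi_m(\gamma)}\right)^{2/\gamma}.$$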

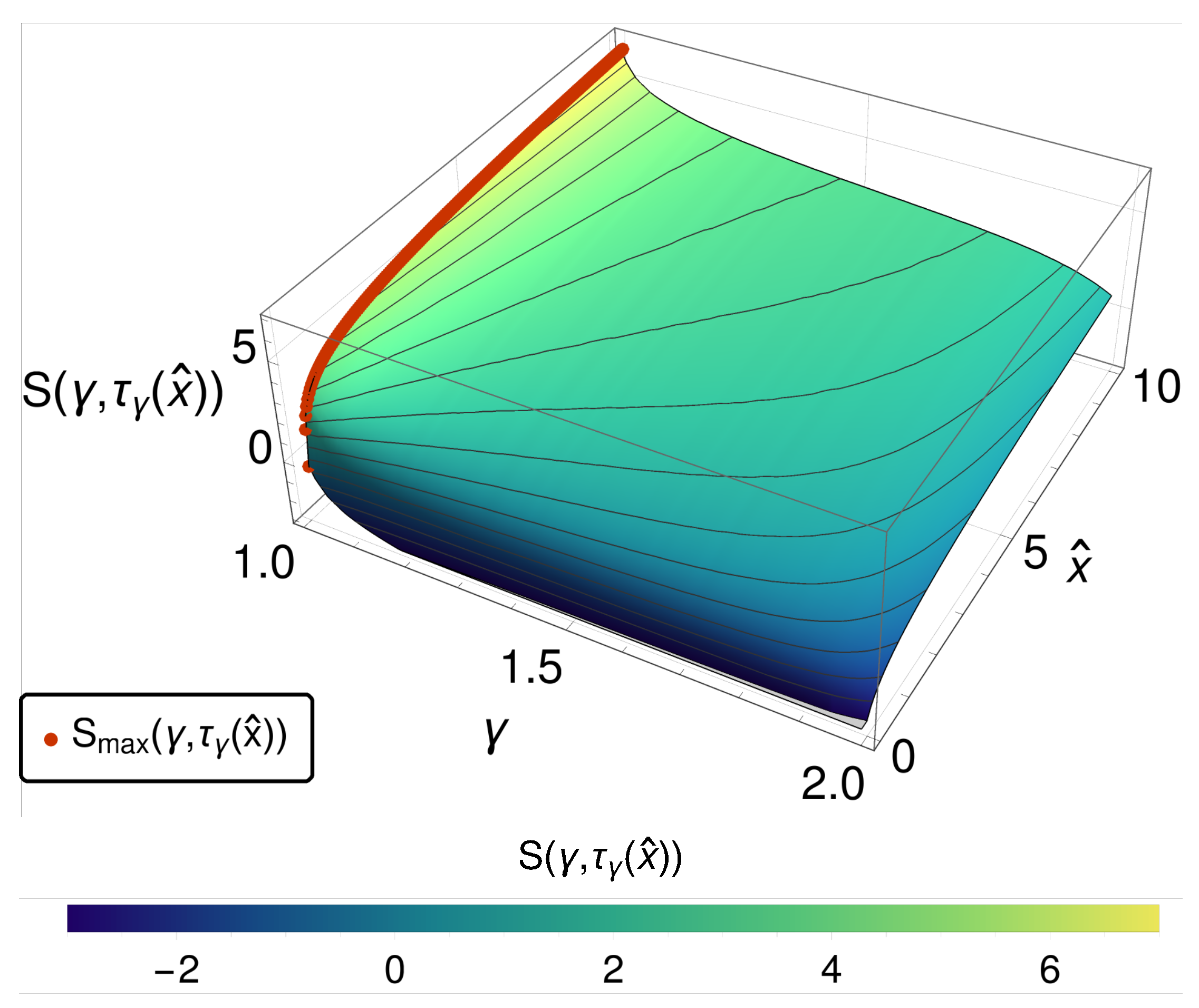

Along lines of constant $x_m$ instead of constant $t$, as shown in Figure 2, the entropy decreases monotonically while crossing from the diffusive to the reversible case. This does not eliminate the primary paradoxical behavior of the EPR. It does, however, allow the entropy itself to act as an order parameter with some intuitive content.
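The mode $\xi_m(\gamma)$ is only available numerically. The Python sketch below assumes the standard identification of the H-function in (10) with the Mainardi (M-Wright) function:

$$H^{1,0}_{1,1}[\,z \mid (1-\nu,\nu);(0,1)\,] = M_\nu(z) = \sum_{n\ge 0} \frac{(-z)^n}{n!\,\Gamma(1-\nu-\nu n)}, \qquad \nu = \gamma/2.$$

The sketch sums this series, locates its maximum by a grid search, and converts between mode position $x_m = \xi_m t^{\gamma/2}$ and time. The helper names, the truncation at 150 terms, and the grid on $[0,3]$ are illustrative assumptions. The plain series is only trustworthy here for $1 \le \gamma \le 1.5$. Closer to $\gamma = 2$ it converges too slowly, and an integral or asymptotic representation would be needed.

```python
import math


def rgamma(x):
    """Reciprocal Gamma function, 0 at the poles x = 0, -1, -2, ..."""
    if x <= 0.0 and x == math.floor(x):
        return 0.0
    return 1.0 / math.gamma(x)


def mainardi_coefficients(nu, terms=150):
    """Coefficients of M_nu(z) = sum_n (-z)^n / (n! Gamma(1 - nu - nu n)), 0 < nu < 1."""
    return [rgamma(1.0 - nu - nu * n) / math.factorial(n) for n in range(terms)]


def mainardi_m(z, coeffs):
    """Evaluate the truncated series; adequate for moderate z only."""
    total, power = 0.0, 1.0
    for c in coeffs:
        total += c * power
        power *= -z
    return total


def similarity_mode(gamma, xi_max=3.0, steps=3000):
    """xi_m(gamma): grid-search maximum of f(xi) = M_{gamma/2}(xi) on [0, xi_max]."""
    if not 1.0 <= gamma <= 1.5:
        raise ValueError("this series sketch is only reliable for 1 <= gamma <= 1.5")
    coeffs = mainardi_coefficients(gamma / 2.0)
    grid = [xi_max * k / steps for k in range(steps + 1)]
    return max(grid, key=lambda xi: mainardi_m(xi, coeffs))


def mode_position(t, gamma, xi_m):
    """x_m(t) = xi_m t^(gamma/2)."""
    return xi_m * t ** (gamma / 2.0)


def clock_time(x_m, gamma, xi_m):
    """t_gamma(x_m) = (x_m / xi_m)^(2/gamma): time at which the mode reaches x_m."""
    if xi_m == 0.0:
        raise ValueError("mode stays at the origin (gamma = 1); no mode-based clock")
    return (x_m / xi_m) ** (2.0 / gamma)


for gamma in (1.1, 1.3, 1.5):
    xi_m = similarity_mode(gamma)
    print(gamma, xi_m, clock_time(1.0, gamma, xi_m))
```

For $\gamma = 1$ the function reduces to $e^{-\xi^2/4}/\sqrt{\pi}$, whose maximum sits at the origin. A mode-based clock in this form is therefore only meaningful for $\gamma > 1$.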

3. The Paradox Is Not Unique

The entropy production paradox for time-fractional diffusion [3] naturally raises the question of whether the paradox is unique. How special and singular is this phenomenon?

It turns out that, while the circumstances that permit this paradox to occur are not universal, they are far from unique. This section provides an alternative circumstance that is as removed from the original paradox as one might get without losing all contact with the context of the problem.

We still need PDFs that bridge the regime between diffusion and waves as a one-parameter family. This can be accomplished by starting from the diffusion equation and letting the order of the space derivative decrease to 1,

$$\frac{\partial p(x,t)}{\partial t} = \frac{\partial^\alpha p(x,t)}{\partial |x|^\alpha}, \tag{19}$$

where $\alpha$ takes the values $1 \le \alpha \le 2$. Here $\alpha = 1$ represents the (half) wave equation, while $\alpha = 2$ represents the diffusion equation.

In this context, Equation (19) could not be more different from (3). Equation (19) induces an infinite domain, while (3) has a semi-infinite one: space does not have an initial point as time does. Time-fractional derivatives imply nonlocality in time, or “memory”, through the time integration in their definition [24,25]. Space-fractional ones, by contrast, retain the classical picture of time found in ordinary differential equations.

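A suitable “memory-less” fractional derivative can be found using Fourier transforms. The resulting fractional derivative $\partial^\alpha/\partial|x|^\alpha$ is defined via the Fourier transform $\mathcal{F}\{f\}(k) = \int_{-\infty}^{\infty} e^{ikx} f(x)\,dx$ as

$$\mathcal{F}\left\{\frac{\partial^\alpha f(x)}{\partial |x|^\alpha}\right\}(k) = -|k|^\alpha\,\mathcal{F}\{f\}(k).$$

This definition is used in [6,11,12,13], but other definitions are also possible [14]. The space-fractional derivative is defined through transforms for historical reasons. This does not mean that the time-fractional derivative could not be defined alternatively through a suitable transform.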

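Utilizing the initial condition

$$p(x,0) = \delta(x),$$

the PDF solutions of Equation (19) turn out to be Lévy stable distributions [6,11],

$$p(x,t) = \frac{1}{2\pi}\int_{-\infty}^{\infty} e^{-ikx - t|k|^\alpha}\,dk, \tag{21}$$

with $1 \le \alpha \le 2$. Note that $p(x,t)$ is defined for $-\infty < x < \infty$ and $t > 0$.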

As in the case of time-fractional diffusion, the solutions (21) show a scaling behavior in space $x$ and time $t$. They can be written as the product of a function of time only and a function of the similarity variable $\xi = x/t^{1/\alpha}$, namely $p(x,t) = t^{-1/\alpha} L_\alpha(\xi)$. Thus, the entropy can be written in terms of the similarity variable and the scaling function $L_\alpha$ as

$$S(t) = \frac{1}{\alpha}\ln t - \int_{-\infty}^{\infty} L_\alpha(\xi)\,\ln L_\alpha(\xi)\,d\xi.$$

The analysis of the resulting EPR proceeds in the same fashion as in the time-fractional case and again leads to the entropy production paradox, i.e.,

$$\frac{dS}{dt} = \frac{1}{\alpha t}.$$

Here $\alpha$ decreases towards the reversible limit, so the EPR again increases.
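The two ends of the range have closed forms that make the $1/(\alpha t)$ law easy to verify. For $\alpha = 2$, (21) is a Gaussian of variance $2t$ with entropy $\tfrac12\ln(4\pi e t)$. For $\alpha = 1$, it is a Cauchy distribution of scale $t$ with entropy $\ln(4\pi t)$. The short Python sketch below, an illustration only, differentiates these closed forms numerically.

```python
import math


def levy_entropy(t, alpha):
    """Shannon entropy of p(x, t) from Eq. (21) for the two closed-form cases."""
    if alpha == 2.0:  # Gaussian with variance 2t
        return 0.5 * math.log(4.0 * math.pi * math.e * t)
    if alpha == 1.0:  # Cauchy distribution with scale parameter t
        return math.log(4.0 * math.pi * t)
    raise ValueError("closed form only for alpha = 1 or alpha = 2")


def levy_epr(t, alpha, dt=1e-6):
    """Central-difference EPR; the scaling argument predicts 1 / (alpha t)."""
    return (levy_entropy(t + dt, alpha) - levy_entropy(t - dt, alpha)) / (2.0 * dt)


print(levy_epr(2.0, 2.0), levy_epr(2.0, 1.0))
```

At $t = 2$ the rates are $1/4$ for diffusion and $1/2$ for $\alpha = 1$, so the EPR is larger at the reversible end.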

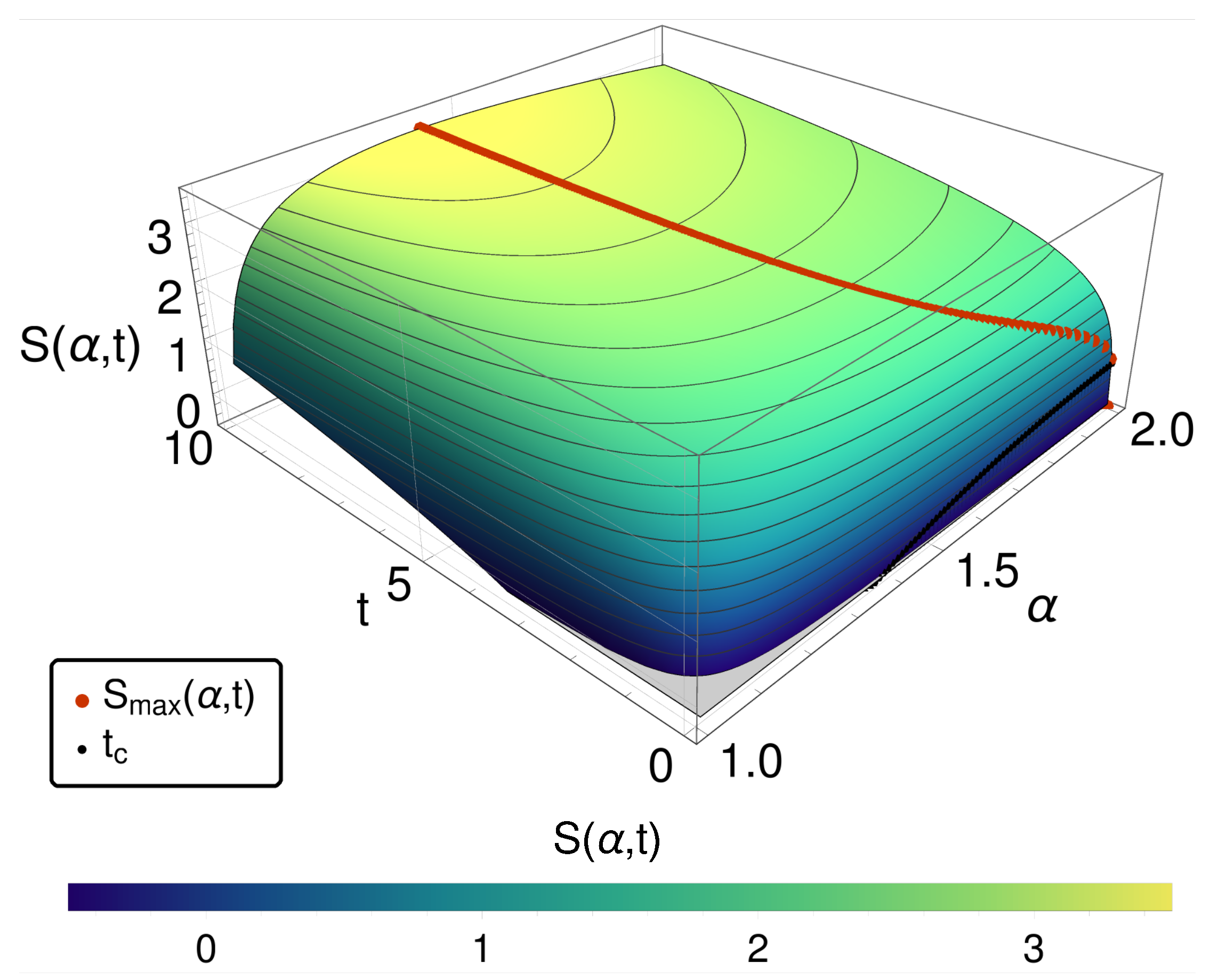

As shown in

Figure 3, again an entropy maximum in the bridging regime (and thus the paradox) occurs, and the internal clock question recurs. As indicated above, this scenario is one of those, where due to the very different nature of the Lévy stable distributions, i.e., having heavy tails and exhibiting no first moments, only the mode can be used to track the internal clocks of the respective system for each

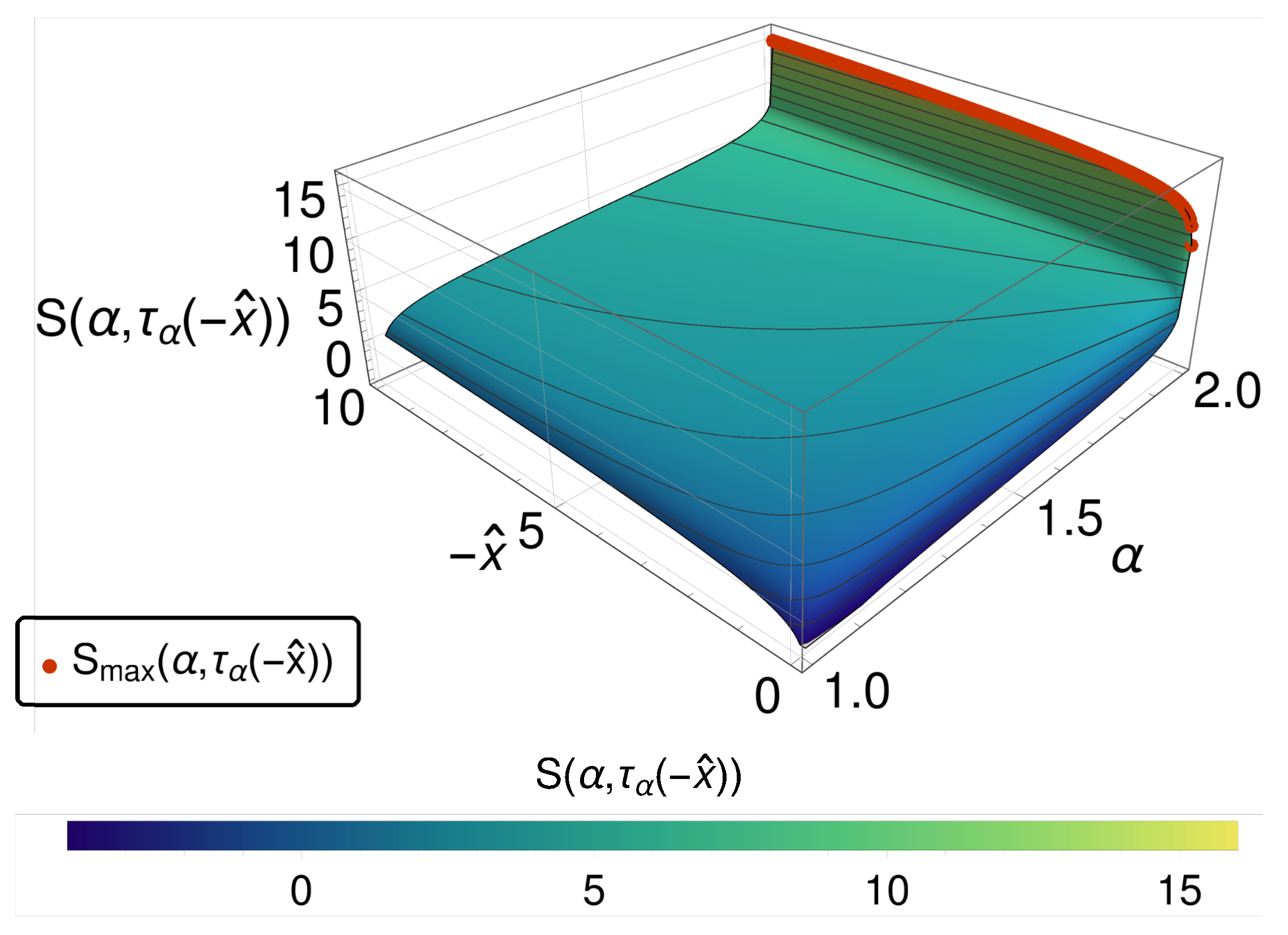

. In

Figure 4, the entropy is given as a function of

and the mode position

of the distribution function. It turns out that here again the mode provides an effective strategy to resolve the ordering problem. However, this successful conclusion distracts from the essential difference between the space-fractional and the time-fractional case.

5. Time-Fractional Diffusion on a Finite Interval

The above discussion has already shown that the entropy production paradox occurs in a variety of systems and for a variety of entropy definitions. In this section, we show that the entropy production paradox occurs not only in systems with continuous space but also for processes in discrete systems (e.g., [29]).

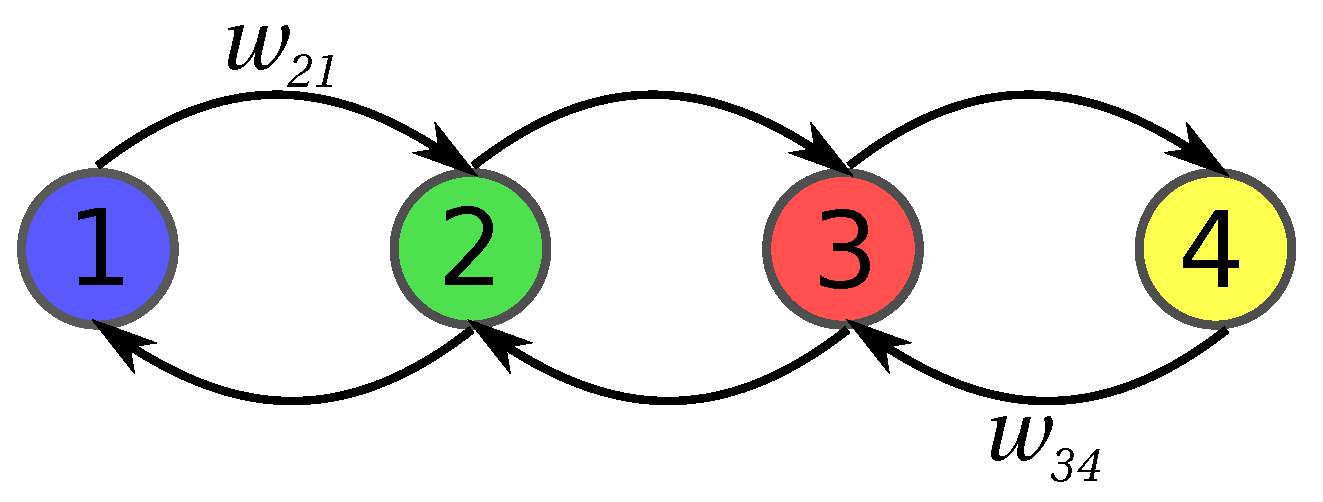

We consider the dynamics on a linear chain of finite length $m$. Figure 5 depicts the setting for a chain with four sites. As the figure shows, we treat the case in which only nearest-neighbor connections are present. We consider a simple diffusion process on this chain. The dynamics of the probability $p_i(t)$ of being in state $i$ at time $t$ is given by the master equation

$$\frac{dp_i(t)}{dt} = \sum_{j \neq i}\left[w_{ij}\,p_j(t) - w_{ji}\,p_i(t)\right], \tag{26}$$

where the $w_{ij}$ denote the transition probabilities from site $j$ to site $i$. They are set to $w_{ij} = w$ for $|i - j| = 1$ and $w_{ij} = 0$ otherwise, with $i, j \in \{1, \dots, m\}$.

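We now generalize this master equation by substituting the first-order time derivative on the left-hand side of (26) with the fractional derivative given in Equation (5). In matrix-vector notation,

$$\frac{d^\gamma \mathbf{p}(t)}{dt^\gamma} = \mathbf{W}\,\mathbf{p}(t), \tag{27}$$

where $\mathbf{p} = (p_1, \dots, p_m)^{T}$. The matrix $\mathbf{W}$ has the off-diagonal elements $w_{ij}$ and the diagonal elements $w_{ii} = -\sum_{j \neq i} w_{ji}$.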

We note that the $w_{ij}$ no longer represent transition probabilities. Instead, they represent a connectivity strength indicating how much the value of $p_j$ (in addition to $p_i$) influences the fractional derivative of $p_i$. Below, we refer to the $w_{ij}$ as generalized transition probabilities.

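Equation (27) represents a set of $m$ coupled linear time-fractional differential equations. They can be decoupled by a transformation to variables based on the eigensystem of the connectivity strength matrix $\mathbf{W}$ with elements $w_{ij}$,

$$\mathbf{W}\,\mathbf{v}_k = \lambda_k\,\mathbf{v}_k,$$

which has $m$ eigenvalues $\lambda_k$, $k = 1, \dots, m$, and corresponding eigenvectors $\mathbf{v}_k$. In detail, for the chain with reflecting ends, we find

$$\lambda_k = -2w\left[1 - \cos\frac{(k-1)\pi}{m}\right], \qquad (\mathbf{v}_k)_i = \cos\frac{(k-1)\left(i - \tfrac{1}{2}\right)\pi}{m}, \qquad i, k = 1, \dots, m.$$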
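For the nearest-neighbor chain with reflecting ends, the eigensystem has a well-known closed form:

$$\lambda_k = -2w\left[1-\cos\frac{(k-1)\pi}{m}\right], \qquad (\mathbf v_k)_i = \cos\frac{(k-1)(i-\tfrac12)\pi}{m}.$$

The Python sketch below checks it numerically. It uses hypothetical helper names, indexes sites from 0 (so $i - \tfrac12$ becomes $i + \tfrac12$ and $k - 1$ becomes $k$), and builds $\mathbf W$ with $w_{ii} = -\sum_{j\neq i} w_{ji}$. It assumes a uniform strength $w$ on all links.

```python
import math


def connectivity_matrix(m, w=1.0):
    """Nearest-neighbour chain: w_ij = w for |i - j| = 1, w_ii = -sum_{j != i} w_ji."""
    W = [[0.0] * m for _ in range(m)]
    for i in range(m - 1):
        W[i][i + 1] = W[i + 1][i] = w
    for i in range(m):
        W[i][i] = -sum(W[j][i] for j in range(m) if j != i)
    return W


def chain_eigenpair(m, k, w=1.0):
    """Closed form (0-based k and i): lambda_k = -2w(1 - cos(pi k / m)),
    (v_k)_i = cos(pi k (i + 1/2) / m)."""
    lam = -2.0 * w * (1.0 - math.cos(math.pi * k / m))
    vec = [math.cos(math.pi * k * (i + 0.5) / m) for i in range(m)]
    return lam, vec


def residual(m, k, w=1.0):
    """max_i |(W v_k)_i - lambda_k (v_k)_i|, expected at round-off level."""
    W = connectivity_matrix(m, w)
    lam, v = chain_eigenpair(m, k, w)
    Wv = [sum(W[i][j] * v[j] for j in range(m)) for i in range(m)]
    return max(abs(a - lam * b) for a, b in zip(Wv, v))


print([round(chain_eigenpair(4, k)[0], 4) for k in range(4)])
```

Because every column of $\mathbf W$ sums to zero, the $k = 1$ (here $k = 0$) eigenvector is uniform with $\lambda = 0$. This mode carries the conserved total probability.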

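The probability distribution can now be expressed as a linear combination of the eigenvectors with coefficients $c_k(t)$,

$$\mathbf{p}(t) = \sum_{k=1}^{m} c_k(t)\,\mathbf{v}_k. \tag{31}$$

Inserting (31) into (27) then leads to a set of $m$ decoupled fractional differential equations,

$$\frac{d^\gamma c_k(t)}{dt^\gamma} = \lambda_k\,c_k(t), \qquad k = 1, \dots, m,$$

which follows from the orthogonality of the eigenvectors.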

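The solution of this fractional differential equation can be determined for known initial values $c_k(0)$ and $\dot{c}_k(0)$ as

$$c_k(t) = c_k(0)\,E_{\gamma}\!\left(\lambda_k t^\gamma\right) + \dot{c}_k(0)\,t\,E_{\gamma,2}\!\left(\lambda_k t^\gamma\right),$$

where the second term is present only for $1 < \gamma \le 2$. Here $E_{\gamma,\beta}(z)$ represents the generalized Mittag–Leffler function. It is defined by its power series

$$E_{\gamma,\beta}(z) = \sum_{n=0}^{\infty} \frac{z^n}{\Gamma(\gamma n + \beta)}$$

and encompasses the Mittag–Leffler function $E_\gamma(z) = E_{\gamma,1}(z)$.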
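The generalized Mittag–Leffler function $E_{\gamma,\beta}(z) = \sum_{n\ge0} z^n/\Gamma(\gamma n + \beta)$ can be summed directly for moderate arguments. The Python sketch below is an illustration, not the implementation behind the figures. It truncates the series and is adequate only for $1 \le \gamma \le 2$ and moderate $|z|$. Large negative arguments suffer from cancellation and call for dedicated algorithms.

```python
import math


def mittag_leffler(z, a, b=1.0, terms=200):
    """Truncated power series E_{a,b}(z) = sum_k z^k / Gamma(a k + b), for 1 <= a <= 2."""
    total = 0.0
    for k in range(terms):
        arg = a * k + b
        if arg > 171.0:  # math.gamma overflows beyond ~171.6; remaining terms negligible here
            break
        total += z ** k / math.gamma(arg)
    return total


print(mittag_leffler(1.0, 1.0), mittag_leffler(-4.0, 2.0))
```

Familiar special cases serve as checks: $E_{1}(z) = e^z$, $E_{2}(-z^2) = \cos z$, and $E_{1,2}(z) = (e^z - 1)/z$.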

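For given $\mathbf{p}(0)$ and $\dot{\mathbf{p}}(0)$, the corresponding $c_k(0)$ and $\dot{c}_k(0)$ are set by

$$c_k(0) = \frac{\mathbf{v}_k \cdot \mathbf{p}(0)}{\mathbf{v}_k \cdot \mathbf{v}_k}, \qquad \dot{c}_k(0) = \frac{\mathbf{v}_k \cdot \dot{\mathbf{p}}(0)}{\mathbf{v}_k \cdot \mathbf{v}_k}.$$

Combining the results, we obtain

$$\mathbf{p}(t) = \sum_{k=1}^{m}\left[c_k(0)\,E_\gamma\!\left(\lambda_k t^\gamma\right) + \dot{c}_k(0)\,t\,E_{\gamma,2}\!\left(\lambda_k t^\gamma\right)\right]\mathbf{v}_k$$

as well as its time derivative

$$\dot{\mathbf{p}}(t) = \sum_{k=1}^{m}\left[c_k(0)\,\lambda_k t^{\gamma-1} E_{\gamma,\gamma}\!\left(\lambda_k t^\gamma\right) + \dot{c}_k(0)\,E_\gamma\!\left(\lambda_k t^\gamma\right)\right]\mathbf{v}_k.$$

Finally, the entropy production rate is determined as

$$\frac{dS}{dt} = -\sum_{i=1}^{m} \dot{p}_i(t)\,\ln p_i(t).$$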
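The procedure described above can be combined into a small numerical sketch: eigenmode expansion, Mittag–Leffler solutions for each mode, projection of the initial data, and the EPR. The Python code below is an illustration under explicit assumptions:

- uniform strength $w = 1$;
- a chain of $m = 5$ sites with a $\delta$-distribution on the central site;
- $\dot{\mathbf p}(0) = 0$ in the example run.

It is not the code behind Figures 6–10, and the truncated Mittag–Leffler series limits it to moderate values of $|\lambda_k| t^\gamma$. Because $E_\gamma(-x)$ can change sign for $1 < \gamma < 2$, positivity of the $p_i$ is not guaranteed a priori, so the EPR function checks it.

```python
import math


def mittag_leffler(z, a, b=1.0, terms=200):
    """Truncated series E_{a,b}(z) = sum_k z^k / Gamma(a k + b); moderate |z| only."""
    total = 0.0
    for k in range(terms):
        arg = a * k + b
        if arg > 171.0:  # Gamma overflows beyond ~171.6; remaining terms negligible here
            break
        total += z ** k / math.gamma(arg)
    return total


def chain_modes(m, w=1.0):
    """Eigenpairs of the reflecting nearest-neighbour chain, 0-based indices."""
    return [
        (-2.0 * w * (1.0 - math.cos(math.pi * k / m)),
         [math.cos(math.pi * k * (i + 0.5) / m) for i in range(m)])
        for k in range(m)
    ]


def project(vec, mode):
    """Coefficient of vec along one eigenvector (the eigenvectors are orthogonal)."""
    return sum(a * b for a, b in zip(vec, mode)) / sum(b * b for b in mode)


def chain_state(t, gamma, p0, dp0, w=1.0):
    """p(t) and dp/dt for d^gamma p / dt^gamma = W p (Caputo), 1 <= gamma <= 2, t > 0."""
    m = len(p0)
    p, dp = [0.0] * m, [0.0] * m
    for lam, v in chain_modes(m, w):
        c0, d0 = project(p0, v), project(dp0, v)
        z = lam * t ** gamma
        c = c0 * mittag_leffler(z, gamma) + d0 * t * mittag_leffler(z, gamma, 2.0)
        dc = (c0 * lam * t ** (gamma - 1.0) * mittag_leffler(z, gamma, gamma)
              + d0 * mittag_leffler(z, gamma))
        for i in range(m):
            p[i] += c * v[i]
            dp[i] += dc * v[i]
    return p, dp


def chain_epr(t, gamma, p0, dp0, w=1.0):
    """EPR dS/dt = -sum_i (dp_i/dt) ln p_i (uses sum_i dp_i/dt = 0)."""
    p, dp = chain_state(t, gamma, p0, dp0, w)
    if min(p) <= 0.0:
        raise ValueError("non-positive probability: entropy undefined at this t")
    return -sum(d * math.log(q) for d, q in zip(dp, p))


p0 = [0.0, 0.0, 1.0, 0.0, 0.0]  # delta on the central site of m = 5
dp0 = [0.0] * 5                 # no initial probability change
for gamma in (1.0, 1.5):
    print(gamma, [round(chain_epr(t, gamma, p0, dp0), 4) for t in (0.1, 0.3)])
```

For $\gamma = 1$ only the first initial condition matters, so `dp0` should be zero there. A nonzero `dp0` must sum to zero to conserve total probability.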

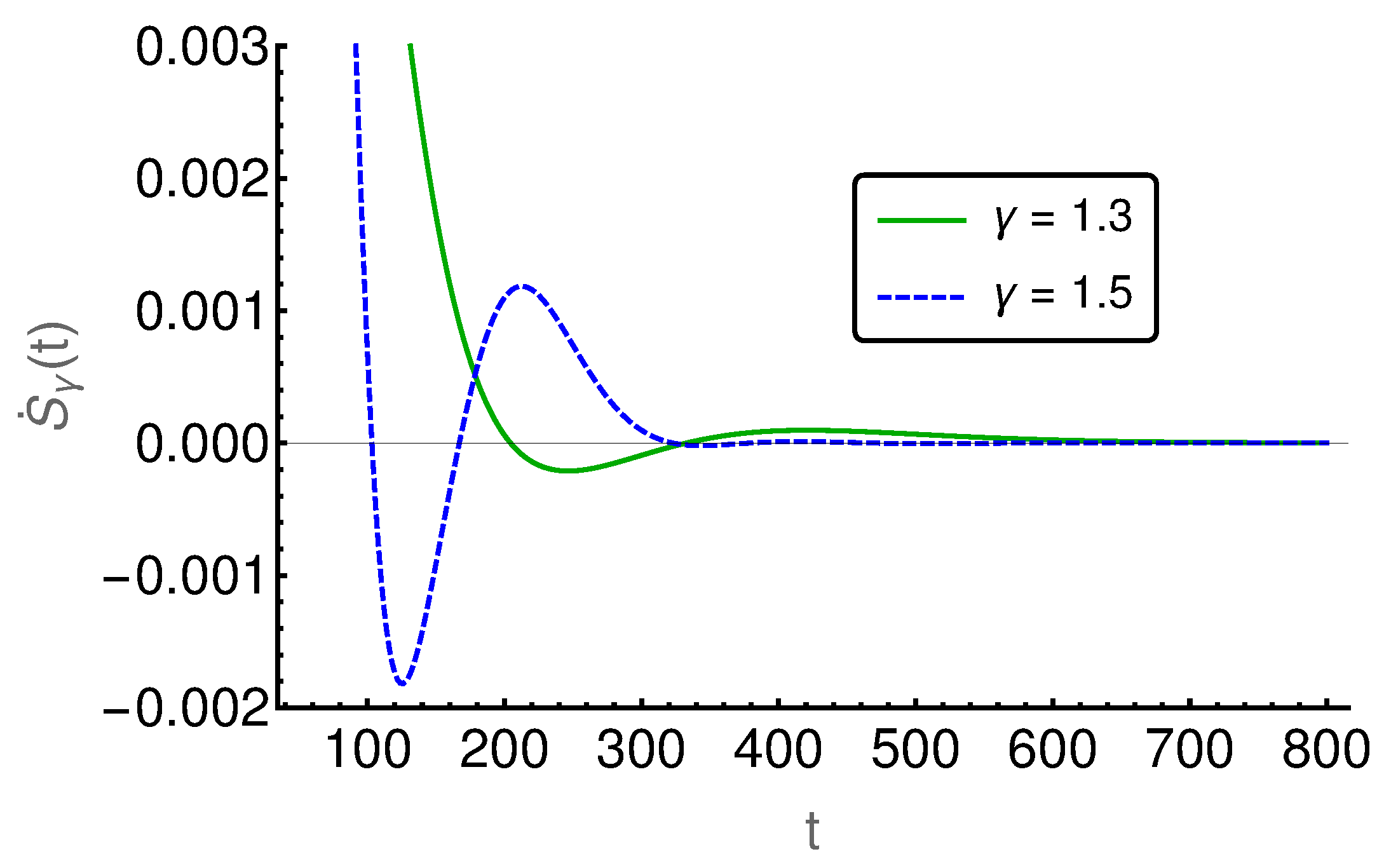

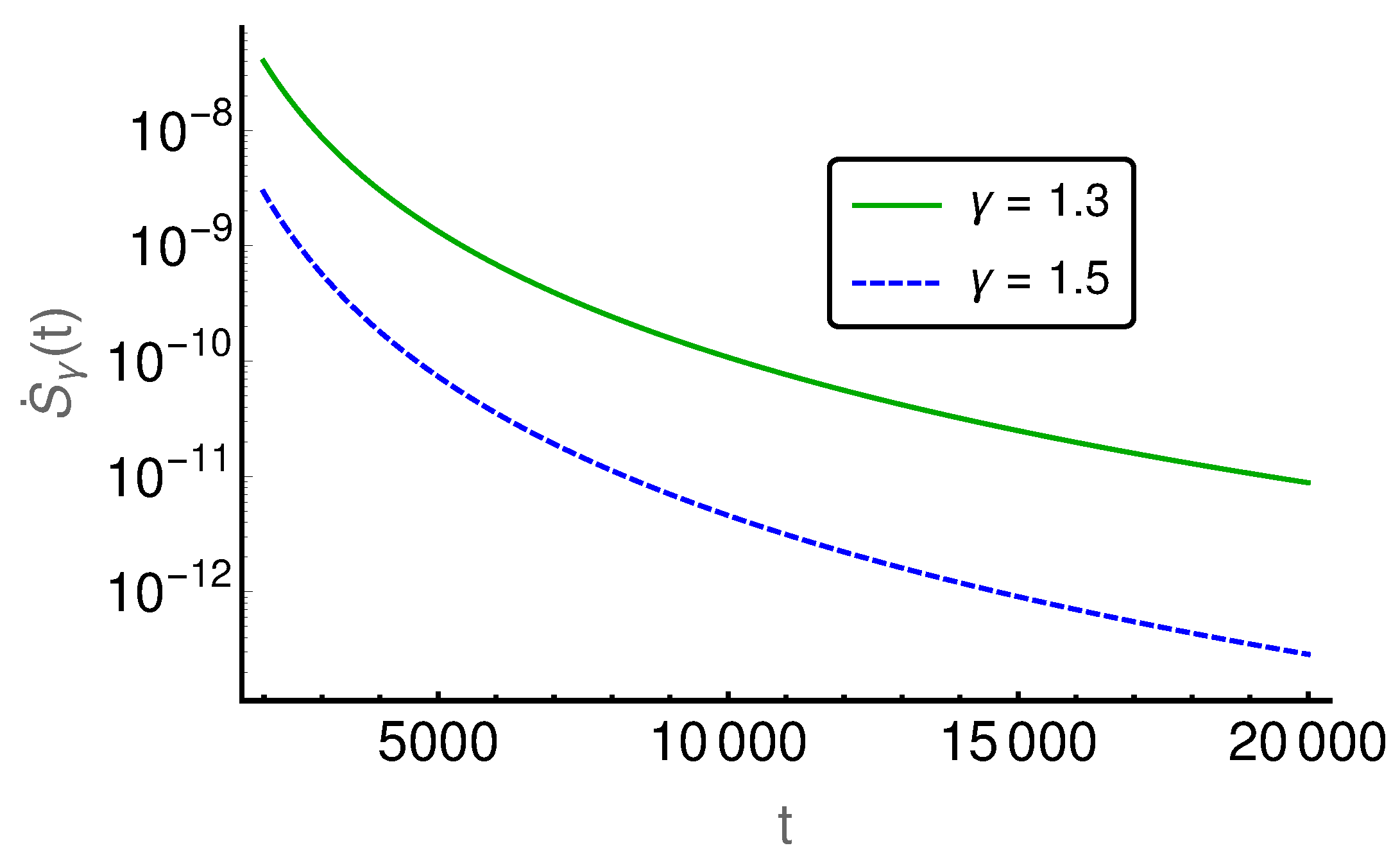

In Figures 6–10, the EPR is shown for different values of $\gamma$ and for different strengths of the initial probability change $\dot{\mathbf{p}}(0)$. In Figures 6–8, the initial probability change is set to zero and the EPR is shown for two different values of $\gamma$. An apparent feature is that the EPRs for different $\gamma$ cross each other several times. This alone shows that the entropy production paradox is present in certain time regimes.

Focusing on the short-time regime shown in Figure 6, we see that the case with the larger $\gamma$ has a larger rate than the case with the smaller $\gamma$, and thus the entropy production paradox is recovered. In the intermediate time regime, between 100 and 800, the two rates cross each other several times (cf. Figure 7). We thus find both the paradox and the intuitive behavior, in which the EPR is larger for smaller values of $\gamma$, indicating greater proximity to the irreversible diffusion process. Finally, for large times, Figure 8 shows that the EPRs exhibit regular ordering in the final relaxation process towards equilibrium.

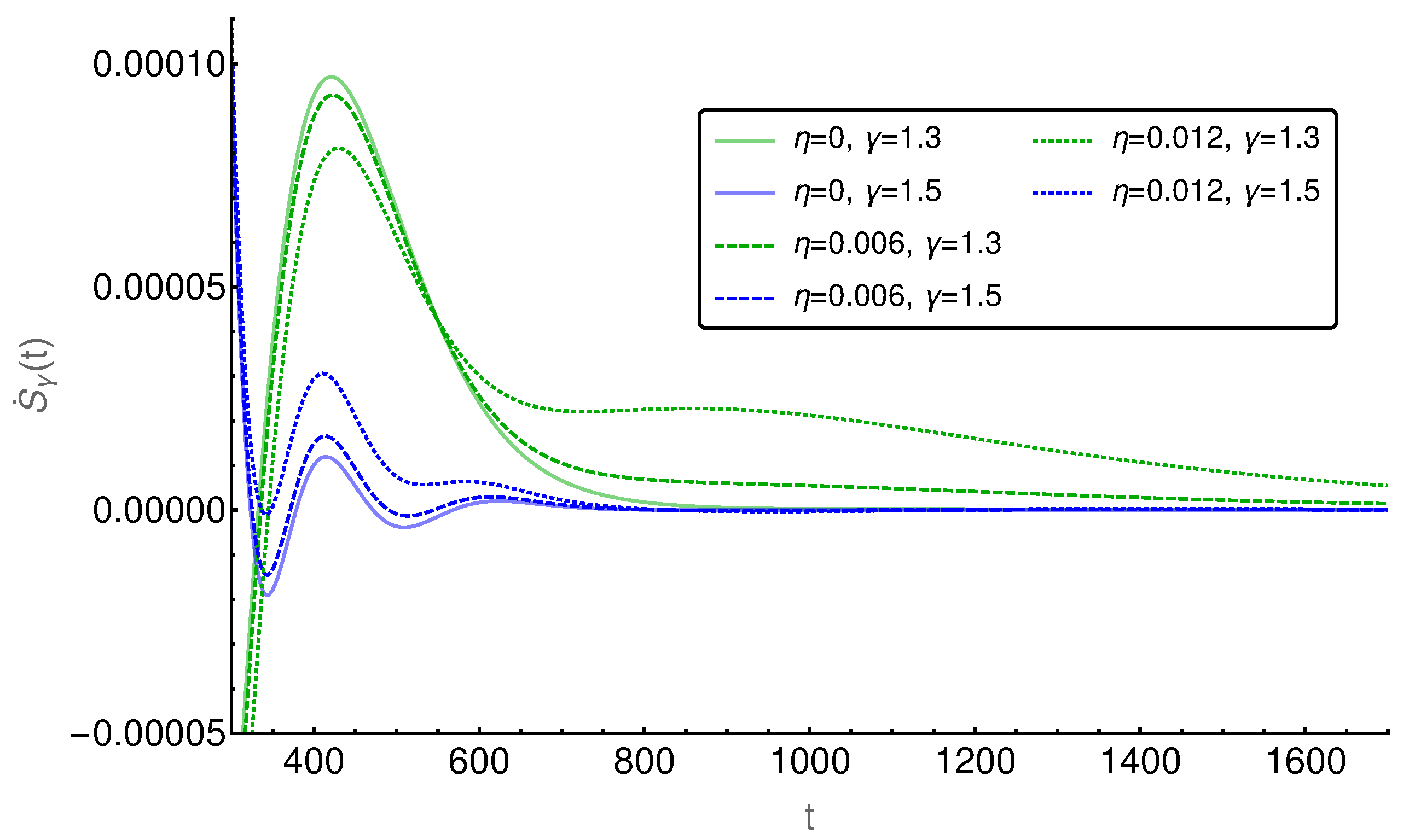

In Figure 9, the EPR is shown for cases with a nonzero initial probability change. Again, the entropy production paradox appears in the short-time regime, while regular behavior is observed for long time periods. Interestingly, the initial probability change has only a small influence for short and intermediate times (see Figure 9a,c): both EPRs behave in practically the same way as for a vanishing initial probability change. For long time periods, as shown in Figure 9b,d, we observe a stronger influence of the initial probability change, as expected. Additionally, for smaller values of $\gamma$, the influence of the initial probability change is stronger than for larger $\gamma$.

A further comparison of the EPRs for different initial probability changes (cf. Figure 10) shows that how long the influence of the initial probability change persists depends on $\gamma$. Furthermore, at least for some of the parameter values shown, the initial probability change does not automatically lead to a larger or a lower EPR: whether the EPR is raised or reduced depends on time. This is an exciting finding, which needs further investigation.

6. Conclusions

The entropy production paradox was first encountered as an increase of the entropy production rate (EPR) as the parameter $\gamma$, the fractional order of the time derivative in the time-fractional diffusion equation, is moved from $\gamma = 1$, the diffusion case, to $\gamma = 2$, the wave case. Subsequently, it was realized that this was not unique. Substantively different cases, such as the space-fractional diffusion equation, were found where the EPR increased as the control parameter approached the reversible limit. This unexpected behavior contradicts the expectation that the EPR ought to decrease as the reversible limit is approached. Furthermore, we have shown that this peculiar behavior is robust, remaining valid even for generalized entropies.

Recently, we extended the analysis to time-fractional diffusion on a finite chain of points. This extension promises new insights, as the scaling features present in fractional diffusion on a half-infinite space no longer exist due to the confinement of the probability to the chain. By a numerical analysis of the EPR, we established the existence of the entropy production paradox for short time periods. These are characterized here by the time span in which the distribution, initially a $\delta$-distribution in the middle of the chain, has not yet “seen” either end of the chain. Thereafter, a complex behavior appears due to the distribution sloshing against the reflecting ends of the chain. The further analysis of this complex behavior is left open for future research. The treatment of these systems in terms of fractional entropies may also be of interest in future work [30,31,32].