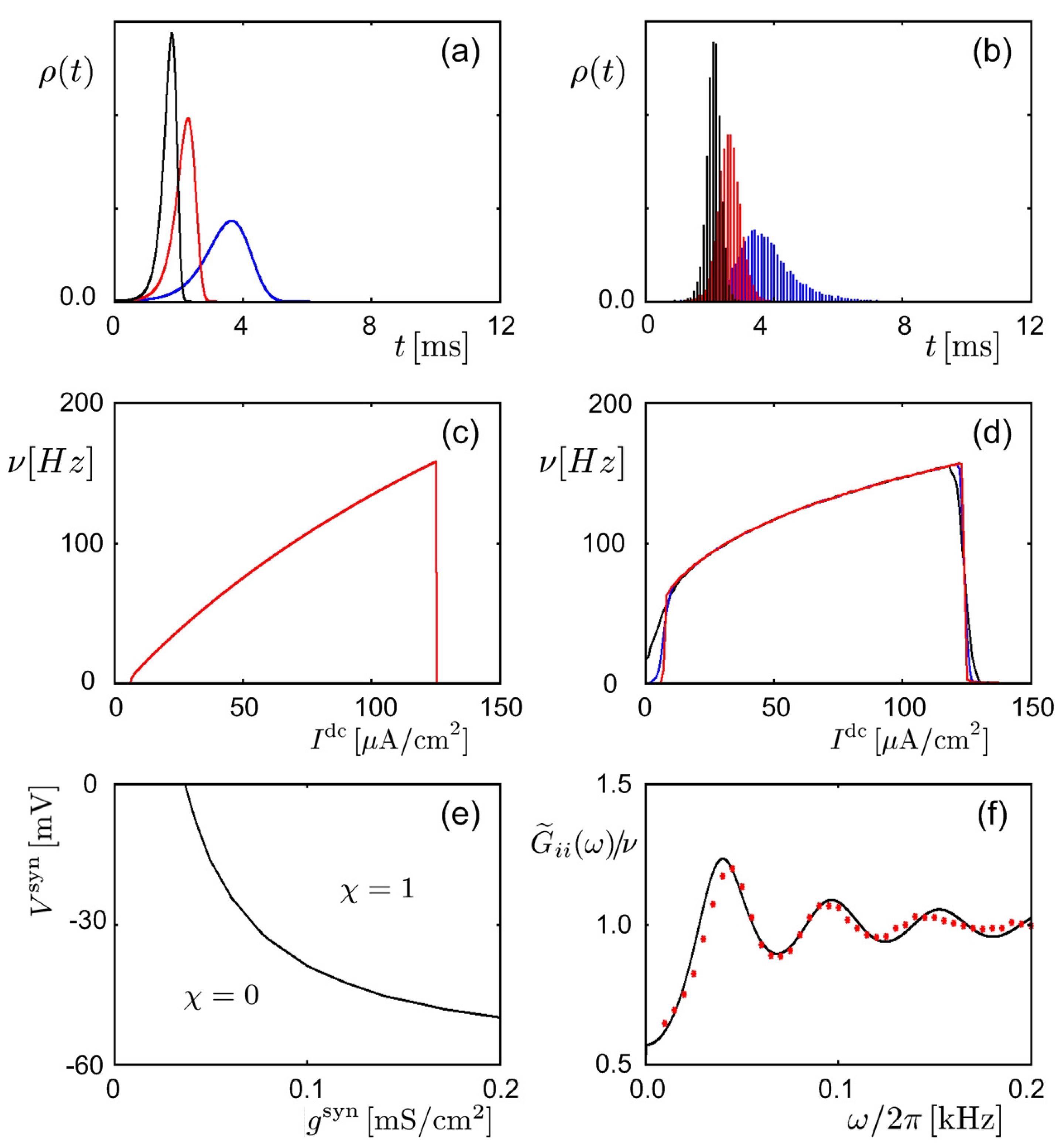

3.1. Hopfield Network

A Hopfield network consists of a group of neurons together with symmetric connections between them. The state of neuron $i$ is represented by the variable $s_i$, which takes values $\pm 1$. The connection strengths between neurons are symmetric ($J_{ij} = J_{ji}$) and have no self-connection ($J_{ii} = 0$). In the learning process, the Hopfield network memorizes a set of $p$ binary patterns $\xi^{\mu}$ ($\mu = 1, \ldots, p$) with $\xi_i^{\mu} = \pm 1$, which is achieved by setting the connections as the sum of outer products:

$$J_{ij} = J \sum_{\mu=1}^{p} \xi_i^{\mu} \xi_j^{\mu} \quad (i \neq j) \tag{7}$$

with the constant $J$ specifying the overall connection strength. In the firing process, the state of each neuron is updated according to the following rule

$$s_i \leftarrow \operatorname{sgn}\Big( \sum_{j} J_{ij} s_j + b_i \Big) \tag{8}$$

where the constant $b_i$ controls the bias of neuron $i$. Namely, the state of neuron $i$ is updated as if the neuron had a threshold activation function with the threshold $-b_i$. The Hopfield network possesses the desired memory patterns $\xi^{\mu}$ as its attractors, so that it usually flows to one of them depending on its initial state.
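The storage and recall steps described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions rather than the authors' implementation: the function names (`train_hopfield`, `update_neuron`, `recall`), the random update order, and the tie-breaking convention (a zero local field maps to +1) are choices made here for illustration only.

```python
import random

def train_hopfield(patterns, strength=1.0):
    """Outer-product (Hebbian) couplings with a zero diagonal.

    `strength` plays the role of the overall connection strength;
    its normalization is an assumption of this sketch.
    """
    n = len(patterns[0])
    J = [[0.0] * n for _ in range(n)]
    for xi in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    J[i][j] += strength * xi[i] * xi[j]
    return J

def update_neuron(J, bias, state, i):
    """Deterministic threshold update of neuron i (ties resolve to +1)."""
    field = sum(J[i][j] * state[j] for j in range(len(state))) + bias[i]
    state[i] = 1 if field >= 0 else -1

def recall(J, bias, state, sweeps=10, seed=0):
    """Asynchronous dynamics: one neuron at a time, in random order."""
    rng = random.Random(seed)
    state = list(state)
    order = list(range(len(state)))
    for _ in range(sweeps):
        rng.shuffle(order)
        for i in order:
            update_neuron(J, bias, state, i)
    return state

pattern = [1, -1, 1, -1, 1, -1, 1, -1]
J = train_hopfield([pattern])
noisy = list(pattern)
noisy[0] = -noisy[0]  # corrupt one bit of the stored pattern
recovered = recall(J, [0.0] * len(pattern), noisy)
```

With a single stored pattern and one corrupted bit, every local field points toward the stored pattern, so `recovered` equals `pattern`.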

It is of interest that the update rule can be understood as the single spin-flip sampling in an Ising model system with the energy function

$$E = -\sum_{i<j} J_{ij} s_i s_j - \sum_i b_i s_i \tag{9}$$

Whereas the deterministic update rule in Equation (8) corresponds to the zero-temperature dynamics, one may generalize it easily to finite temperatures by introducing probabilities. Specifically, adopting the importance sampling Markov process or the Monte Carlo method, we consider the probabilistic update in such a way that the probability of flipping $s_i$ is given by $\min\left[1, e^{-\Delta E_i/T}\right]$, where $\Delta E_i$ is the energy difference caused by flipping $s_i$. The importance sampling process reduces to the deterministic update rule in Equation (8) as the temperature $T$ approaches zero.
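A finite-temperature single spin-flip update can be sketched as follows. The Metropolis acceptance form, the pairwise energy convention (each pair counted once), and all function names are assumptions of this sketch and are not taken from the original equations.

```python
import math
import random

def energy_change(J, bias, state, i):
    """Energy cost of flipping s_i, for E = -sum_{i<j} J_ij s_i s_j - sum_i b_i s_i
    with states s_i = +1 or -1 (assumed convention)."""
    field = sum(J[i][j] * state[j] for j in range(len(state)) if j != i) + bias[i]
    return 2.0 * state[i] * field

def flip_probability(delta_e, temperature):
    """Metropolis acceptance (assumed form); at T = 0 only moves that do not
    raise the energy are accepted."""
    if delta_e <= 0.0:
        return 1.0
    if temperature == 0.0:
        return 0.0
    return math.exp(-delta_e / temperature)

def metropolis_step(J, bias, state, temperature, rng):
    """Pick one neuron at random and flip it with the Metropolis probability."""
    i = rng.randrange(len(state))
    delta_e = energy_change(J, bias, state, i)
    if rng.random() < flip_probability(delta_e, temperature):
        state[i] = -state[i]
    return state
```

At zero temperature an energy-raising flip is never accepted, which recovers the deterministic behavior away from ties.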

It is also of interest to note that only a single neuron is updated at each time step. Such asynchronous dynamics contrasts with the synchronous dynamics where all the neurons are updated simultaneously [11]. Neither dynamics provides a very realistic description of the dynamics in real networks, which presumably lies in between. As an attempt toward a more realistic description, a dynamic model working in continuous time but taking into account relevant time scales was also presented [16].

3.2. Boltzmann Machine

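The Boltzmann machine, which is often referred to as a Monte Carlo version of the Hopfield network [17], has the energy function given by

$$E = -\sum_{i<j} J_{ij} s_i s_j - \sum_i b_i s_i \tag{10}$$

where $s_i$ can only take values of 0 and 1 and $b_i = h_i + b_i^{0}$ with $h_i$ being the external input to neuron $i$ and $b_i^{0}$ the negative of the activation threshold in the system running freely without external inputs. The neurons are divided into “visible” and “hidden” ones. The external input $h_i$ is applicable only if neuron $i$ is a visible one and the system is in the training phase. In the case of the Metropolis importance sampling [18], the state of each neuron flips with the probability

$$P(s_i \to 1 - s_i) = \min\left[1, e^{-\Delta E_i/T}\right] \tag{11}$$

where $\Delta E_i$ is the energy difference in the flipping of $s_i$. The expectation value of $s_i$ in the Monte Carlo process then obtains the form

$$\langle s_i \rangle = \frac{1}{Z} \operatorname{Tr}\, s_i \, e^{-E/T} \tag{12}$$

where $Z = \operatorname{Tr}\, e^{-E/T}$ is the partition function with the trace standing for the summation over all configurations ($\operatorname{Tr} \equiv \prod_i \sum_{s_i = 0, 1}$). The expectation value can also be obtained from the derivative of the partition function with respect to the external source in the following way:

$$\langle s_i \rangle = T \frac{\partial \ln Z}{\partial h_i} = -\frac{\partial F}{\partial h_i} \tag{13}$$

where $F = -T \ln Z$ is the free energy of the system. Similarly, the cross-correlation function between two neurons $i$ and $j$ reads

$$\langle s_i s_j \rangle = T \frac{\partial \ln Z}{\partial J_{ij}} = -\frac{\partial F}{\partial J_{ij}} \tag{14}$$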
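For a network small enough to enumerate, the statement that the expectation value follows from a derivative of the free energy can be checked numerically. The sketch below assumes binary states (0 or 1), an energy with each pair counted once, and differentiation with respect to the total bias `b[i]` (into which an external input would enter additively). The function names and the finite-difference step are illustrative choices.

```python
import itertools
import math

def energy(state, J, b):
    """E = -sum_{i<j} J_ij s_i s_j - sum_i b_i s_i (assumed convention)."""
    n = len(state)
    pair = sum(J[i][j] * state[i] * state[j]
               for i in range(n) for j in range(i + 1, n))
    return -pair - sum(b[i] * state[i] for i in range(n))

def free_energy(J, b, T):
    """F = -T ln Z, with the trace taken over all 2^N binary configurations."""
    z = sum(math.exp(-energy(s, J, b) / T)
            for s in itertools.product((0, 1), repeat=len(b)))
    return -T * math.log(z)

def mean_activity(i, J, b, T):
    """<s_i> computed directly as a Boltzmann-weighted average."""
    states = list(itertools.product((0, 1), repeat=len(b)))
    weights = [math.exp(-energy(s, J, b) / T) for s in states]
    return sum(w * s[i] for w, s in zip(weights, states)) / sum(weights)

def mean_activity_from_free_energy(i, J, b, T, eps=1e-6):
    """<s_i> = -dF/db_i, estimated with a central finite difference."""
    up = list(b)
    up[i] += eps
    down = list(b)
    down[i] -= eps
    return -(free_energy(J, up, T) - free_energy(J, down, T)) / (2 * eps)
```

The two estimates agree up to the finite-difference error, which illustrates why the free energy acts as a generating function for the averages.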

The learning rule for the Boltzmann machine is expressed originally in the form of a gradient descent process

$$\Delta J_{ij} = -\eta \frac{\partial L}{\partial J_{ij}} \tag{15}$$

where $\eta$ is the learning rate and $L$ is the relative entropy or Kullback–Leibler (KL) divergence given by

$$L = \sum_{\alpha} P^{+}(V_\alpha) \ln \frac{P^{+}(V_\alpha)}{P^{-}(V_\alpha)} \tag{16}$$

The KL divergence measures the discrepancy between probability distributions $P^{+}$ and $P^{-}$, vanishing if and only if the two distributions are identical. Here, $P^{+}(V_\alpha)$ is the probability of the state $V_\alpha$ of visible neurons when the system is in the training phase, and $P^{-}(V_\alpha)$ is the corresponding probability for the network running freely with no external input. After some calculation, the change in a connection strength is obtained as

$$\Delta J_{ij} = \frac{\eta}{T} \left( p_{ij}^{+} - p_{ij}^{-} \right) \tag{17}$$

where the correlation function $p_{ij}^{+}$ describes the average probability of two neurons both being in the on state with the environment clamping the states of the visible units, and $p_{ij}^{-}$ is the corresponding probability for the network running freely without external inputs.
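The two ingredients of this learning rule, the KL divergence and the clamped-minus-free correlation update, can be written compactly. The sketch assumes the standard Boltzmann-machine form of the update, with a learning rate and temperature as prefactors. The function names and arguments are hypothetical, and the free-running distribution is assumed to be nonzero wherever the clamped one is.

```python
import math

def kl_divergence(p_clamped, p_free):
    """L = sum_a P+(a) ln[P+(a) / P-(a)].

    Terms with P+(a) = 0 contribute zero; P-(a) > 0 is assumed
    wherever P+(a) > 0.
    """
    return sum(p * math.log(p / q)
               for p, q in zip(p_clamped, p_free) if p > 0.0)

def weight_update(corr_clamped, corr_free, rate, temperature):
    """Change in one connection strength: proportional to the difference
    between clamped and free-running co-activation probabilities."""
    return (rate / temperature) * (corr_clamped - corr_free)
```

For example, `kl_divergence([0.5, 0.5], [0.5, 0.5])` vanishes, while any mismatch between the two distributions gives a positive value. The update is zero exactly when the clamped and free correlations coincide.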

Interestingly, there is a similarity between the learning rules based on the gradient descent in the KL divergence and in the free energy. Applying the density-matrix formalism with the correlation function taken as the density matrix, we express the free energy of the Boltzmann machine as $F = U - TS$, where the internal energy $U$ and the entropy $S$ are written in terms of the correlation function and the firing probability of each neuron $i$. With the stationarity condition satisfied in the extremum state, the gradient descent in the free energy takes the form

Note that the derivative of the entropy with respect to the connection strength does not depend on external inputs.

Further, there is another learning rule for the Boltzmann machine, which is a simplified version of the STDP rule [

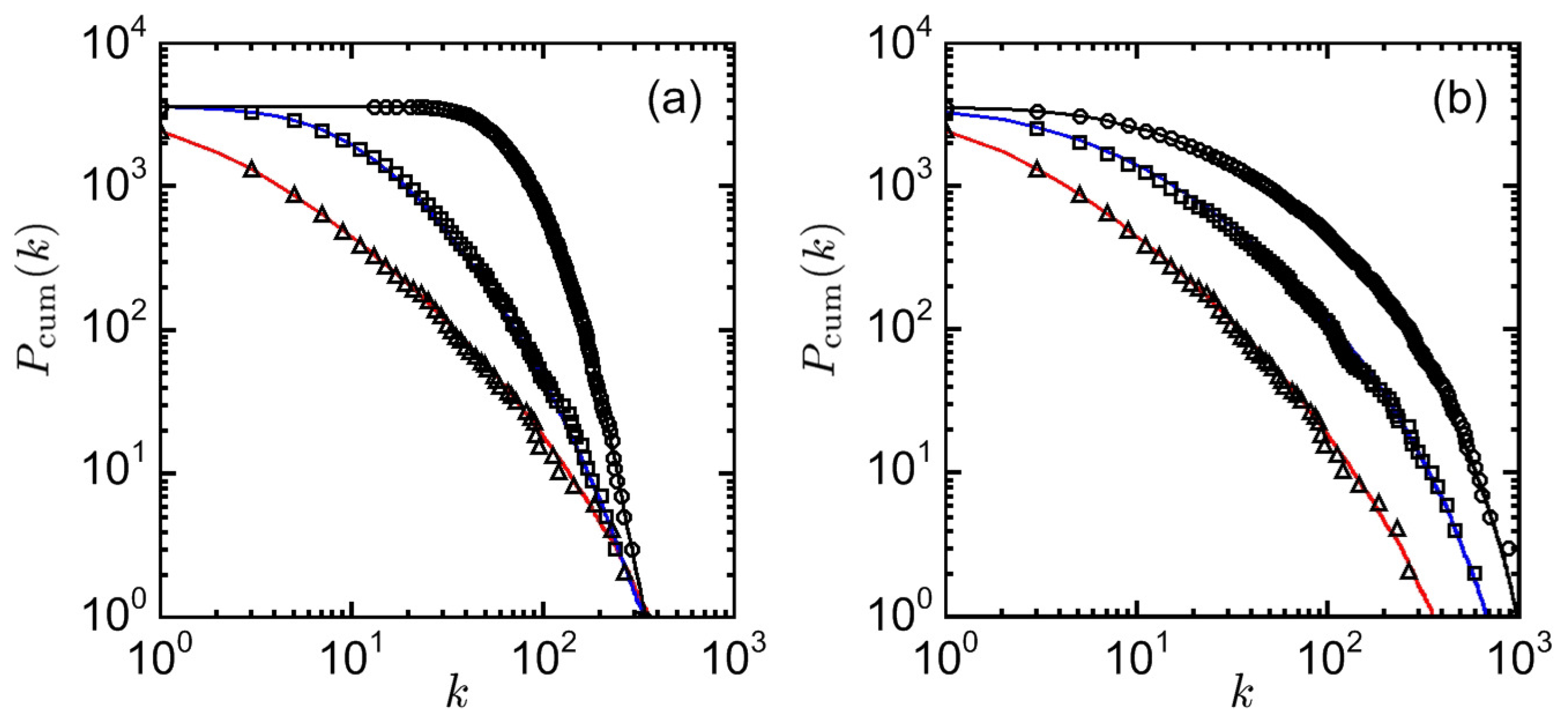

9]. It has been developed to explore the emergent structure in a neural network running freely, motivated by the report that the STDP rule leads to the development of small-world and scale-free graphs in simulations [

19,

20]. As well known, small-world and scale-free properties are ubiquitous in complex networks [

21,

22]. Conventional models explain that a small-world network, characterized by short path lengths and high clustering, emerges as a result of randomly replacing a fraction of links on a lattice by new, randomly chosen links [

23] and that a scale-free network emerges from stochastic growth in which new nodes are added continuously and attach themselves preferentially to existing nodes [

24]. However, there are such empirical networks as the brain network, possessing small-world and/or scale-free properties, to which the conventional models are not applicable. Specifically, a neural network with a static number of neurons acquires the scale-free properties not as a result of the preferential attachment or rich-get-richer process in a growing network but as an equilibrium state of the connecting–disconnecting processes. In the biological learning rule, the connecting and the disconnecting processes are related to the LTP and the LTD parts of the STDP window, respectively.

In the model, neurons flip their states according to the importance sampling rule in Equation (11). Simultaneously, the strength of each connection, which can take values $J$ and 0, changes with the probability

where a constant controls the ratio of time scales in the firing and the learning processes and the energy difference is that associated with flipping the connection strength. The energy in the learning process is given by

where the cross-correlation and the firing probability are measured in a moving time window (of about 100 steps). The competition strength controls the contributions of interactions relative to those of independent activations; the wiring propensity is the opposite of the wiring cost. The value of $J$ is chosen to be sufficiently larger than $T$ and the value of

to be a small number as usual.

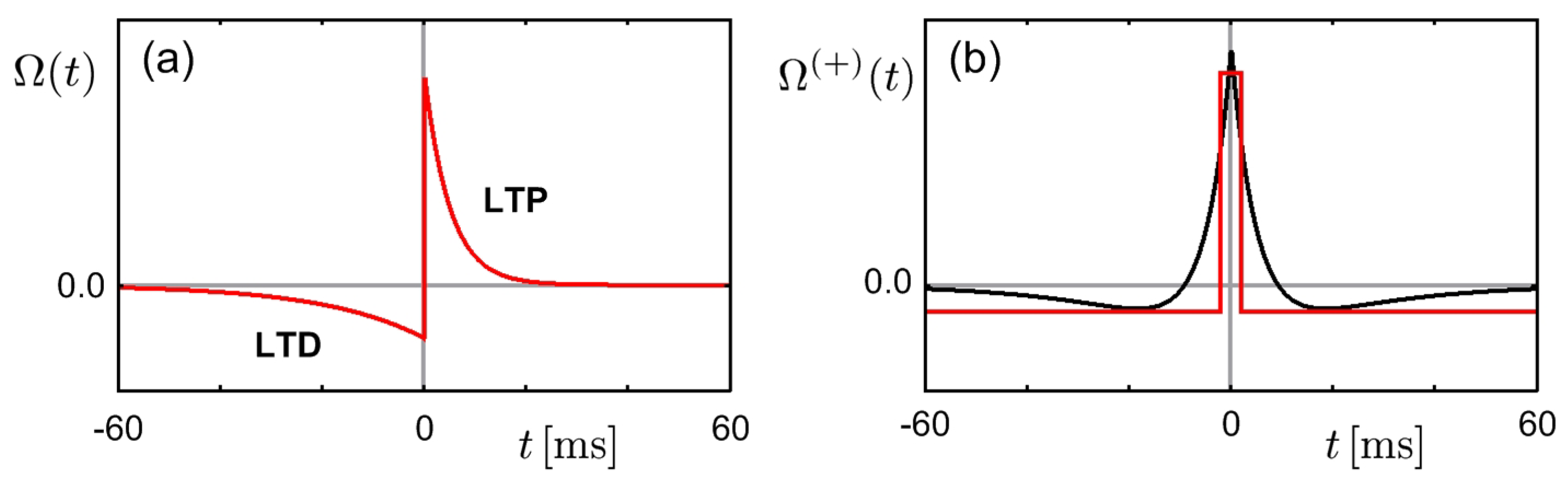

Figure 2 shows the log-log plots of the cumulative degree distribution in neural networks, which exhibit the typical features of scale-free graphs.

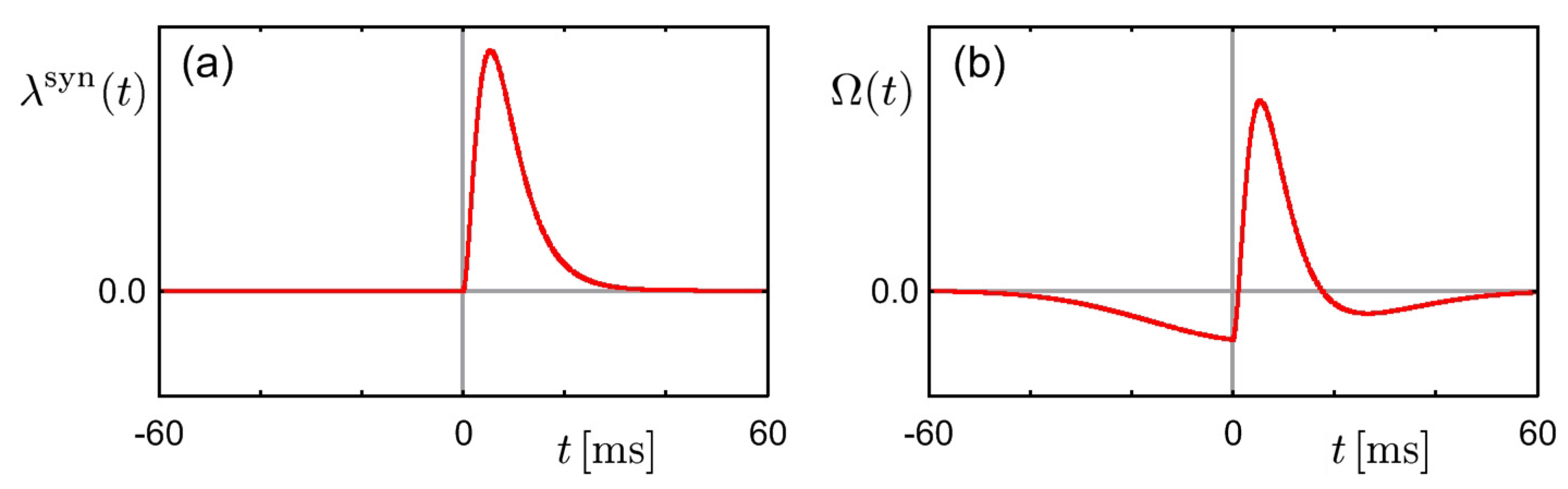

In the above, the connection strength has been taken to be a binary number for convenience in Monte Carlo simulations and in discriminating between connected and disconnected neural pairs. In the case that the connection strength takes continuous values, the learning process could be expressed as the gradient descent in the energy function:

which is similar to the learning process in Equation (17) or Equation (18). The form of this energy function originates from an approximation of the even part of the STDP window (see Figure 1b). Note also that it often serves as a free energy rather than an energy. It is demonstrated later that the biological synaptic plasticity rule can be interpreted as a minimization process for a free energy (see Section 5).

3.3. Informatix Rule

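The informatix rule (better known as the infomax rule) does not employ the definition of the energy or free energy of a neural system. Nevertheless, from the viewpoint of information theory, it provides an important hint as to how the entropy maximization principle admits a stochastic neural network with competitive learning behavior. Consider a neural network composed of an input layer and an output layer, with only feedforward connections from input to output neurons. Suppose that the activity of neuron $i$ is given by $V_i = f(u_i)$ with the scaled membrane potential $u_i$ given by Equation (2), where the feedforward connection $W_{ij}$ is relevant only for $i \in \mathcal{O}$ and $j \in \mathcal{I}$ with $\mathcal{I}$ (or $\mathcal{O}$) denoting the set of input (or output) neurons, and the external input $h_i$ is applicable only for $i \in \mathcal{I}$. According to information theory, the information transfer is measured by the joint entropy

$$H(V) = -\langle \ln P(V) \rangle \tag{22}$$

with $V \equiv \{ V_i \mid i \in \mathcal{O} \}$. The joint entropy can be rewritten as

$$H(V) = \sum_{i \in \mathcal{O}} H(V_i) - I(V) \tag{23}$$

where $H(V_i)$ is the marginal entropy of the output $V_i$ and $I(V)$ the mutual information of the outputs. They obtain the form

$$H(V_i) = -\langle \ln P(V_i) \rangle \tag{24}$$

and

$$I(V) = \left\langle \ln \frac{P(V)}{\prod_{i \in \mathcal{O}} P(V_i)} \right\rangle \tag{25}$$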

In information theory, an ideal learning process is to maximize the joint entropy or the information transfer from input to output neurons. Provided that the input and the output layers have the same number of neurons, the derivative of the joint entropy with respect to the connection strength is given by

$$\frac{\partial H(V)}{\partial W_{ij}} = \frac{\partial}{\partial W_{ij}} \left\langle \ln \left| \det \mathcal{J} \right| \right\rangle \tag{26}$$

where other terms are assumed to be independent of $W$ and $\mathcal{J}_{ij} \equiv \partial V_i / \partial V_j$ is the Jacobian of the information transfer from neuron $j$ to neuron $i$. When there is no lateral connection between output neurons, the Jacobian would become $\mathcal{J}_{ij} = f'(u_i) W_{ij}$. Finally, the learning rule maximizing the joint entropy reads

$$\Delta W_{ij} \propto \frac{\partial \ln \left| \det W \right|}{\partial W_{ij}} + \left\langle \frac{f''(u_i)}{f'(u_i)} V_j \right\rangle \tag{27}$$

where $\partial \ln |\det W| / \partial W_{ij}$ reduces to $[W^{-1}]_{ji}$ with the inverse matrix $W^{-1}$. The learning rule described by Equation (27) corresponds to the competition mechanism in the PSL model or in the Feynman machine, as shown in the following sections. A further manipulation of this equation gives the informatix rule [25], which is in turn related to the independent component analysis (ICA), a popular algorithm for blind source separation [26].
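The text notes that one term of the learning rule reduces to an expression involving the inverse matrix. One standard identity that yields such a reduction is shown below. It assumes that the term in question is the derivative of the log-determinant of a square, invertible $W$. This reading, and the cofactor notation $\operatorname{cof}(W)$, are assumptions of this sketch.

```latex
\frac{\partial \ln \left| \det W \right|}{\partial W_{ij}}
  = \frac{1}{\det W} \, \frac{\partial \det W}{\partial W_{ij}}
  = \frac{\operatorname{cof}(W)_{ij}}{\det W}
  = \left[ W^{-1} \right]_{ji}
  = \left[ (W^{\mathsf T})^{-1} \right]_{ij}
```

The second equality uses the cofactor expansion of the determinant along row $i$, and the third uses $W^{-1} = \operatorname{cof}(W)^{\mathsf T} / \det W$.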

3.4. Pseudo-Stochastic Learning Model

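Consider a neural network composed of input and output layers, where output neurons have feedforward connections $W$ from input neurons and lateral connections $J$ with other output neurons. Neglecting the activation function $f$ or assuming $f(u) = u$, we write the time-dependent firing rate in the form

$$\frac{dV_i}{dt} = -V_i + \sum_j J_{ij} V_j + \sum_j W_{ij} V_j + h_i \tag{28}$$

where the lateral connection $J_{ij}$ is applicable only for $i, j \in \mathcal{O}$, the feedforward connection $W_{ij}$ only for $i \in \mathcal{O}$ and $j \in \mathcal{I}$, and the external input $h_i$ only for $i \in \mathcal{I}$, with $\mathcal{I}$ and $\mathcal{O}$ denoting the sets of input and output neurons, respectively.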

At stationarity ($dV_i/dt = 0$), Equation (28) gives the neural activity in the form

$$V_i = \sum_{j,k} K_{ij} W_{jk} h_k \tag{29}$$

where $K_{ij}$ is the recursive lateral interaction, given by a component of the matrix $K = (I - J)^{-1}$ with $I$ being the identity. Namely, provided that the series converges, we have $K = I + J + J^2 + \cdots$. Adopting the simple Hebbian rule in Equation (3), the feedforward connection changes as

$$\Delta W_{ij} \propto V_i V_j = \sum_{k,l} K_{ik} W_{kl} h_l h_j \tag{30}$$

In consideration of the learning process under varying external inputs, we write Equation (30) in the form

$$\Delta W_{ij} \propto \sum_{k,l} K_{ik} W_{kl} C_{lj} \tag{31}$$

where $C_{ij} \equiv \langle h_i h_j \rangle$ measures the correlations of external inputs with $\langle \cdots \rangle$ denoting the average in a long time period for varying external inputs. This is the correlation-based learning model for feature map formation [27]. A feature map formation model should have a competition mechanism to prevent output neurons from having the same features as neighbors. The correlation-based model achieves the competition mechanism through negative components in $J$ or $K$ originating from inhibitory lateral connections.
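The meaning of a recursive lateral interaction can be illustrated numerically. The sketch below assumes linear rate dynamics in which the stationary output activity satisfies V = J V + W h, so that repeatedly feeding the activity back through the lateral connections sums the series (I + J + J^2 + ...) W h. The function names, the iteration count, and the small example matrices are hypothetical, and convergence requires the lateral connections to be weak enough.

```python
def mat_vec(M, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def stationary_activity(J, W, h, iterations=200):
    """Iterate V <- J V + W h (assumed linear model).

    The fixed point equals (I - J)^{-1} W h when the iteration converges,
    i.e. when the spectral radius of J is below one.
    """
    drive = mat_vec(W, h)  # feedforward input to each output neuron
    V = list(drive)
    for _ in range(iterations):
        lateral = mat_vec(J, V)
        V = [l + d for l, d in zip(lateral, drive)]
    return V

# Two output neurons with mutual excitation 0.5, driven by the first input only.
V = stationary_activity([[0.0, 0.5], [0.5, 0.0]],
                        [[1.0, 0.0], [0.0, 1.0]],
                        [1.0, 0.0])
```

In this example the second output neuron receives no feedforward drive, yet it settles at a nonzero activity through the lateral feedback, which is the effect the recursive interaction is meant to capture.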

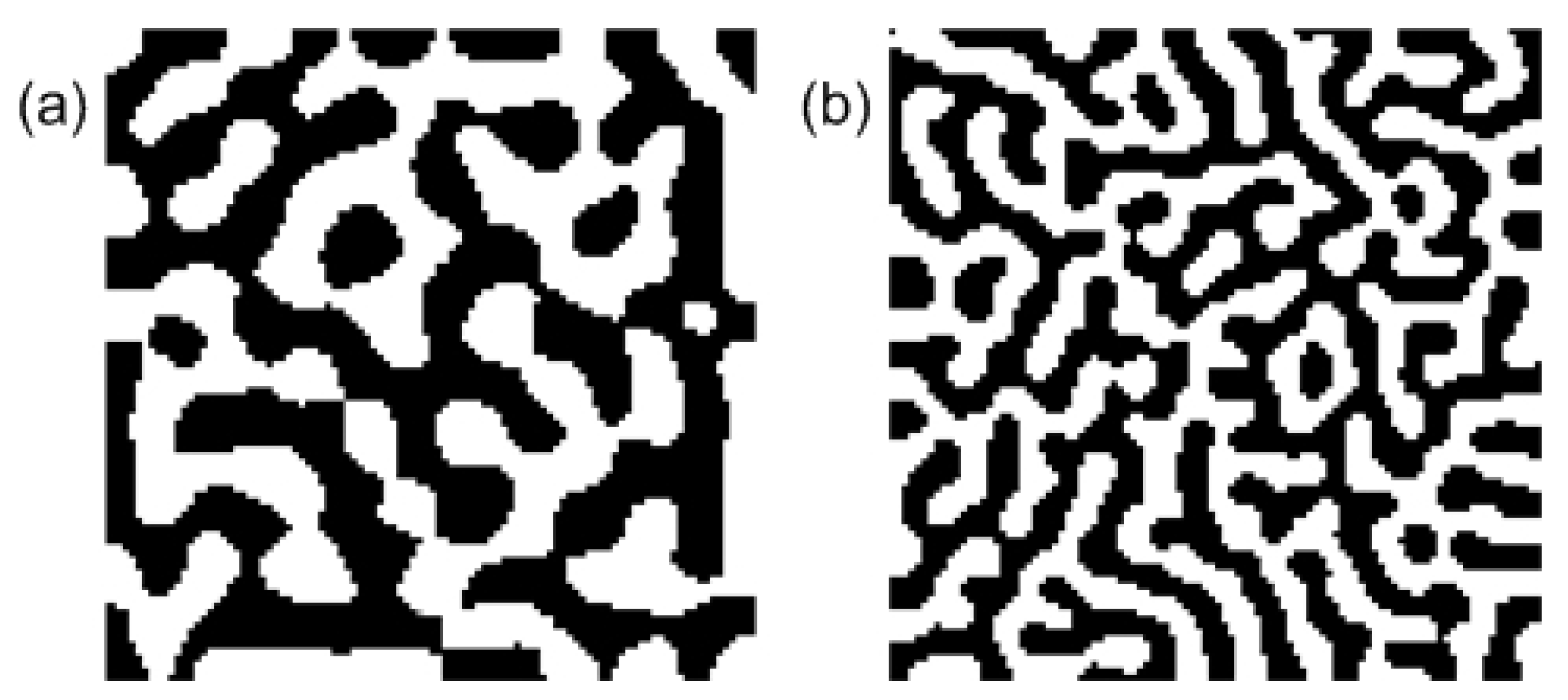

Figure 3 shows the emergent feature map developed by the correlation-based model. It is noteworthy that the feature map displays the same characteristics as the feature map observed in the primary visual cortex (V1) area. In simulations, the recursive lateral interaction $K$ has been modeled as a Mexican-hat-shaped function of distance. The output neurons would have the same features (i.e., ocular dominance) as others if the degree of the competition, controlled by $k$, is sufficiently small [28]. In general, the visual input correlation matrix is diagonalized owing to the symmetry properties of external inputs; the feedforward connections are then represented by low-dimensional feature vectors or spin-like variables. For example, the ocular dominance of visual cortex neurons, based on the difference between feedforward connections from left and right eyes, can be represented by Ising-type spin variables. Consequently, the feature map formation in a visual cortex area can be explained in terms of the energy of a spin-like model [28,29,30,31,32].

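The firing activity and the learning rule in the correlation-based model can be derived through the use of statistical mechanics. Suppose that the activity of output neuron $i$ is determined probabilistically, so that its expectation value is expressed as

$$\langle V_i \rangle = \frac{1}{Z} \int \prod_k dV_k \, V_i \, e^{-E/T} \tag{32}$$

with the partition function $Z = \int \prod_k dV_k \, e^{-E/T}$ and a nonnegative constant $T$. Provided that $J$ is a symmetric matrix, the energy function $E$ is given by

$$E = \frac{1}{2} \sum_{i,j} V_i \left( \delta_{ij} - J_{ij} \right) V_j - \sum_i b_i V_i \tag{33}$$

with $b_i = \sum_j W_{ij} h_j$. The expectation value is obtained from the derivative of the free energy $F = -T \ln Z$ with respect to the external source:

$$\langle V_i \rangle = -\frac{\partial F}{\partial b_i} \tag{34}$$

Performing the Gaussian integral, we obtain the free energy as

$$F = U - TS \tag{35}$$

with the internal energy $U = -\frac{1}{2} \sum_{i,j} b_i K_{ij} b_j$, where $K = (I - J)^{-1}$ is the recursive lateral interaction, and the entropy

$$S = \frac{1}{2} \ln \det \left( 2\pi G \right) \tag{36}$$

where the connected two-point function $G_{ij} \equiv \langle V_i V_j \rangle - \langle V_i \rangle \langle V_j \rangle$ obtains the form $G_{ij} = T K_{ij}$. In accord, the expectation value in Equation (34) reduces to

$$\langle V_i \rangle = \sum_j K_{ij} b_j = \sum_{j,k} K_{ij} W_{jk} h_k \tag{37}$$

which agrees with Equation (29). Further, the learning rule in Equation (30) is obtained from the derivative of the free energy with respect to the connection strength:

$$\Delta W_{ij} \propto -\frac{\partial F}{\partial W_{ij}} = \langle V_i \rangle h_j \tag{38}$$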

Equations (34) and (38) demonstrate that both the firing process and the learning process in a neural network can be derived from the free energy of the system. The derivative of the free energy with respect to the external source yields the neural activity in an extremum state. In comparison with the derivative of the energy function, the derivative of the free energy includes the effects of recursive interactions between neurons. Then, the derivative of the free energy with respect to the connection strength offers a relevant learning rule of the system. Nevertheless, the derivative of the entropy in Equation (36) exerts effects neither on the firing process nor on the learning rule. It is plausible that the entropy, related to autonomous neural firings via thermal fluctuations, exerts no effects on the neural firing process, although the informatix rule suggests that the entropy maximization induces a proper competition mechanism in a neural network without inhibitory connections.

On the other hand, the pseudo-stochastic learning (PSL) model suggests that the entropy maximization principle would exert meaningful effects on the learning rule when the entropy is obtained not from the Boltzmann distribution but from the neural firing correlations in a Langevin equation [33]. We introduce a noise term to Equation (28) and write

$$\frac{dV_i}{dt} = -V_i + \sum_j J_{ij} V_j + \sum_j W_{ij} V_j + h_i + \eta_i \tag{39}$$

where the noise $\eta_i$, which represents endogenous neural firings via thermal fluctuations or external noisy currents, is characterized by a constant proportional to the temperature $T$. The expectation values of the steady-state activities of input and output neurons agree with Equation (29). The endogenous neural firings via thermal fluctuations thus exert no effects on the average over individual neural activities.

Meanwhile, the connected two-point function between output neurons becomes

where the two contributions correspond to the neural correlations originating from the autonomous firings of output and input neurons, respectively. With this two-point function substituted in Equation (36), the gradient flow of the free energy in Equation (38) leads to the learning rule

where $[W W^{\mathsf T}]_{ij} = \sum_k W_{ik} W_{jk}$ is the inner product between incoming connection vectors onto output neurons $i$ and $j$, so that the corresponding term hinders output neurons from having the same feedforward connection pattern as others. Note that the second term in this equation corresponds to the first term in Equation (27) for a square matrix $W$.
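The remark about square matrices can be illustrated by a short identity. The step below assumes that the term in question has the form $(W W^{\mathsf T})^{-1} W$, built from the inner products between incoming connection vectors, and that $W$ is square and invertible. Both are assumptions of this sketch rather than statements recovered from the original equations.

```latex
\begin{aligned}
\left( W W^{\mathsf T} \right)^{-1} W
  &= \left( W^{\mathsf T} \right)^{-1} W^{-1} W \\
  &= \left( W^{\mathsf T} \right)^{-1}
\end{aligned}
```

Under these assumptions, a term of this form coincides with the inverse-transpose matrix that arises from differentiating $\ln |\det W|$ with respect to $W$.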

Figure 4 presents a feature map developed by the PSL model [33]. It has characteristics of a topographic map, such as the well-ordered connection distribution from retina (or LGN) cells to V1 neurons. In simulations, the lateral connections $J$ (or $K$) have no negative values, but the entropy-originating term brings about adequate competition between output neurons.