Abstract

In this work, we investigate a three-user cognitive communication network in which a primary two-user multiple access channel suffers interference from a secondary point-to-point channel sharing the same medium. While the point-to-point channel transmitter (transmitter 3) causes interference at the primary multiple access channel receiver, we assume that the primary channel transmitters (transmitters 1 and 2) do not cause any interference at the point-to-point receiver. It is assumed that one of the multiple access channel transmitters has cognitive capabilities and cribs causally from the other multiple access channel transmitter. Furthermore, we assume that the cognitive transmitter knows the message of transmitter 3 in a non-causal manner, thus introducing the three-user multiple access cognitive Z-interference channel. We obtain inner and outer bounds on the capacity region of this channel for both causal and strictly causal cribbing cognitive encoders. We further investigate different variations and aspects of the channel and relate them to previously studied cases. To better characterize the capacity region, we examine its vertex points, at which each one of the transmitters achieves its maximal rate. Moreover, we establish the capacity region for a class of more-capable multiple access cognitive Z-interference channels. In addition, we study the case of full unidirectional cooperation between the two multiple access channel encoders. Finally, since direct cribbing yields full cognition when the input alphabets are continuous, we study the case of partial cribbing, i.e., when the cribbing is performed via a deterministic function.

1. Introduction

Two of the most fundamental multi-terminal communication channels are the Multiple-Access Channel (MAC) and the Interference Channel (IFC). The MAC, sometimes referred to as the uplink channel, consists of multiple transmitters sending messages to a single receiver (base station). The capacity region of the two-user MAC was determined early on by Ahlswede [1] and Liao [2]. However, the capacity regions of many other fundamental multi-terminal channels are still unknown. One of these channels is the IFC. The two-user IFC consists of two point-to-point transmitter-receiver pairs, where each transmitter has its own intended receiver and acts as interference to the other transmitter-receiver pair. The study of this channel was initiated by C.E. Shannon [3] and extended by R. Ahlswede [4], who gave simple but fundamental inner and outer bounds on the capacity region. The fundamental achievable region of the discrete memoryless two-user IFC is the Han-Kobayashi (HK) region [5], which can be expressed in a simplified form [6]. Much progress has been made toward understanding this channel (see, e.g., [7,8,9,10,11,12,13] and the references therein). Although widely investigated, this problem remains unsolved except for some specific channel configurations that impose various constraints on the channel [14].

A common multi-terminal network scenario combines these two channels. For instance, in Wi-Fi or cellular communication, there are usually several portable devices (e.g., laptops, mobile phones) “talking” to a single end point (e.g., a base station or an access point). Moreover, nearby base stations frequently reuse the same frequencies, causing interference at adjacent receivers. The increasing usage of wireless services and the constant reuse of frequencies make it ever more important to optimize the use of the wireless medium in order to achieve better transmission rates. Cognitive radio technology, which has been receiving a lot of attention [15,16,17], is one of the novel strategies for overcoming inefficient spectrum usage.

Cognition stands for awareness of system parameters, such as operating frequencies, time schedules, spatial directivity, and actual transmissions. The latter refers to the messages of interfering transmitters, which are obtained either by receiving the interfering signals (cribbing) or at the network level (messages available a priori). Examples of signal awareness include Dynamic Spectrum Access (DSA) (see the tutorial [18] and references therein), as well as a variety of techniques for spectrum and activity sensing (see [19] and references therein). The timely relevance of cognitive radios, and of an information-theoretic framework that can assess their potential benefits and limitations, is reflected in recent literature (see [15] and references therein).

In our study, we focus on cognition in terms of the ability of the cognitive user to recognize the primary (licensed) user and to adapt its communication strategy so as to minimize the interference it generates, while maximizing its own Quality of Service (QoS). Furthermore, cognition allows cooperation between transmitters in relaying information to improve network capacity. The information shared with the cognitive transmitter may be obtained through a noisy observation of the channel or via a dedicated link. The cognitive transmitter may apply different strategies, such as decode-and-forward (DF) or amplify-and-forward (AF), for relaying the other transmitter's information.

To study the information-theoretic limits of cognitive radios, the Cognitive Interference Channel (CIFC) was defined in [20]. The CIFC is a two-user IFC in which the cognitive user (secondary user) is cognizant of the message being transmitted by the other user (primary user), either in a non-causal or in a causal manner. The two-user CIFC was further studied in [21,22,23,24,25]. Cognition was applied to the MAC as early as 1985, when Willems and van der Meulen established the capacity region of the MAC with cribbing encoders [26]. Cribbing means that one or both encoders observe the other encoder and learn, in a causal manner, the channel input(s) (to be) emitted by it. Since then, the cognitive MAC has received much attention, and capacity regions have recently been characterized for various extensions [27,28,29,30,31,32]. Advanced processing techniques from the cognitive arena already have practical implications. For example, [33] presents coding techniques for an Orthogonal Frequency-Division Multiple Access (OFDMA)-based secondary service in cognitive networks that outperform traditional coding schemes; see also [34]. Hence, techniques used in cognitive coding schemes, such as binning (dirty-paper coding [35]) and rate splitting [5], have even stronger practical implications.

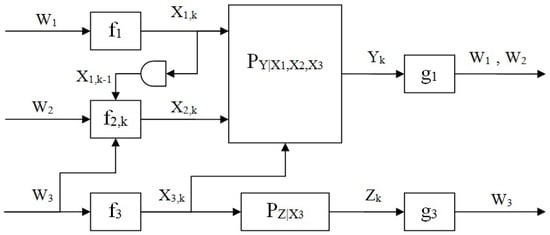

In this paper, we study a common wireless scenario in which a Multiple Access Channel (MAC) suffers interference from a point-to-point (P2P) channel sharing the same medium. The main motivation behind this model is to interweave a MAC on top of a licensed P2P channel. The licensed P2P user must not suffer interference, while the other users may use cognitive radio to improve performance. Adding cognitive capabilities to one of the MAC transmitters, we investigate the case in which it has knowledge of the signals transmitted by another user intended for the same receiver, as well as of the signals transmitted by the P2P user on a separate channel, which interfere at the MAC receiver. We introduce the Multiple Access Cognitive Z-Interference Channel (MA-CZIC), which consists of three transmitters and two receivers: a two-user MAC as the primary network and a point-to-point channel as the secondary channel. The communication system, including the primary and secondary channels (whose outputs are Y and Z, respectively), is depicted in Figure 1. The signal $X_1$ is generated by Encoder 1. Encoder 2 is assumed to be a cognitive cribbing encoder; that is, it has causal knowledge of Encoder 1’s signal, as well as non-causal knowledge of Encoder 3’s signal. We note that while the signal $X_3$ interferes with the other signals to create Y, it is observed interference-free by the second decoder, which observes Z. The cognition of the P2P signal may model the fact that the same user produced a P2P message to another point, and hence it is naturally cognizant of the message $W_3$. This channel model generalizes several previously studied setups: without Encoder 3, the system reduces to a MAC with a cribbing encoder as in [26]. Replacing the signal $X_3$ with a state process and ignoring the structure of $X_3$, we get a MAC with states available at a cribbing encoder as in [24,27].
Removing Encoder 2, the problem reduces to the standard Z-Interference channel, and removing Encoder 1, we get the Cognitive Z-Interference channel, as in [36]. The Z-Gaussian Cognitive Interference channel was further studied in [37]. The cooperative state-dependent MAC considered in [29] is closely related to a special case of the MA-CZIC, obtained by replacing the interfering signal of the MA-CZIC with an i.i.d. state sequence known non-causally to the cognitive transmitter. Some of the results in this paper were presented in part in [38,39].

Figure 1.

Multiple-Access Cognitive Z-Interference Channel (MA-CZIC).

The rest of the paper is organized as follows. In Section 2 we formally define the memoryless MA-CZIC with causal and strictly causal cribbing encoders. In Section 3 we derive inner and outer bounds on the capacity region of the channel with causal and strictly causal cribbing encoders, including a special case of the channel in which the bounds coincide and the capacity region is established. Section 4 is devoted to the case of full unidirectional cooperation from Encoder 1 to Encoder 2 (a common message setup). Section 5 deals with the case of partial cribbing. Finally, concluding remarks are given in Section 6.

2. Channel Model and Preliminaries

Throughout this work, we use uppercase letters (e.g., X) to denote random variables (RVs) and lowercase letters (e.g., x) to denote their realizations. Boldface letters denote n-vectors, e.g., $\mathbf{x} = (x_1, \ldots, x_n)$. For a set of RVs $S$, $A_\epsilon^{*(n)}(S)$ denotes the set of $\epsilon$-strongly, jointly typical n-sequences of S as defined in ([40], Chapter 13). We may omit the index n from $A_\epsilon^{*(n)}(S)$ when it is clear from the context.
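Strong joint typicality can be checked mechanically. The sketch below uses the form of the definition found in Cover and Thomas (every empirical frequency lies within ε divided by the joint-alphabet size of its probability, and zero-probability tuples never occur); we assume this matches the definition in [40]. The function name and interface are illustrative only.

```python
from collections import Counter

def is_strongly_typical(seqs, pmf, eps):
    """Return True if the tuple of sequences is eps-strongly jointly typical.

    seqs: tuple of equal-length sequences, e.g. (x, y).
    pmf:  dict over the FULL joint alphabet (zero-probability tuples included),
          mapping symbol tuples such as (a, b) to their probabilities.
    """
    n = len(seqs[0])
    if any(len(s) != n for s in seqs):
        raise ValueError("all sequences must have the same length")
    counts = Counter(zip(*seqs))          # empirical counts N(a | seqs)
    alphabet_size = len(pmf)              # |X| * |Y| * ... for the joint alphabet
    for a in counts:
        if pmf.get(a, 0.0) == 0.0:        # a zero-probability tuple must never occur
            return False
    for a, p in pmf.items():
        if p > 0 and abs(counts.get(a, 0) / n - p) >= eps / alphabet_size:
            return False
    return True

# Example: joint pmf of (X, Y) with Y = X and X uniform on {0, 1}.
pmf = {(0, 0): 0.5, (0, 1): 0.0, (1, 0): 0.0, (1, 1): 0.5}
print(is_strongly_typical(([0, 0, 1, 1], [0, 0, 1, 1]), pmf, eps=0.1))  # True
print(is_strongly_typical(([0, 0, 1, 1], [0, 1, 1, 1]), pmf, eps=0.1))  # False
```

The second pair fails because the tuple (0, 1), which has probability zero, appears in the sequences.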

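More formally, a discrete memoryless multiple access cognitive Z-interference channel (MA-CZIC) is defined by the input alphabets $\mathcal{X}_1, \mathcal{X}_2, \mathcal{X}_3$, the output alphabets $\mathcal{Y}, \mathcal{Z}$, and the transition probabilities $P(y|x_1,x_2,x_3)$ and $P(z|x_3)$; that is, the channel outputs are generated in the following manner:

$$P(\mathbf{y}, \mathbf{z} \mid \mathbf{x}_1, \mathbf{x}_2, \mathbf{x}_3) = \prod_{i=1}^{n} P(y_i \mid x_{1,i}, x_{2,i}, x_{3,i})\, P(z_i \mid x_{3,i}).$$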

Encoder $i$, $i \in \{1,2,3\}$, sends a message $W_i$, drawn uniformly over the set $\mathcal{W}_i = \{1, \ldots, 2^{nR_i}\}$, to its intended receiver. It is further assumed that Encoder 2 “cribs” causally: before generating its next channel input, it observes the sequence of channel inputs emitted by Encoder 1 during all past transmissions. The model is depicted in Figure 1.
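To make the memoryless structure concrete, the following sketch simulates a toy binary MA-CZIC. The channel laws `p_y` (the XOR of the three inputs passed through a binary symmetric channel) and `p_z` (a noisy copy of the input of Encoder 3) are hypothetical choices for illustration; only the structure mirrors the model: Z depends on the input of Encoder 3 alone, and each output letter depends only on the current input letters.

```python
import random

def sample(pmf, rng):
    """Draw one symbol from a pmf given as {symbol: probability}."""
    r, acc = rng.random(), 0.0
    for symbol, p in pmf.items():
        acc += p
        if r < acc:
            return symbol
    return symbol  # guard against floating-point round-off in acc

def p_y(x1, x2, x3, flip):
    """Hypothetical primary channel: x1 XOR x2 XOR x3 through a BSC(flip)."""
    clean = x1 ^ x2 ^ x3
    return {clean: 1.0 - flip, 1 - clean: flip}

def p_z(x3, flip):
    """Hypothetical secondary channel: only x3 reaches Z, through a BSC(flip)."""
    return {x3: 1.0 - flip, 1 - x3: flip}

def ma_czic(x1, x2, x3, rng, flip_y=0.1, flip_z=0.05):
    """Memoryless channel: letter i of (y, z) depends only on letter i of the inputs."""
    y = [sample(p_y(a, b, c, flip_y), rng) for a, b, c in zip(x1, x2, x3)]
    z = [sample(p_z(c, flip_z), rng) for c in x3]
    return y, z

rng = random.Random(0)
y, z = ma_czic([0, 1, 1], [0, 0, 1], [1, 0, 1], rng, flip_y=0.0, flip_z=0.0)
print(y, z)  # [1, 1, 1] [1, 0, 1]
```

With the noise switched off, Y is the XOR of the three inputs and Z is an exact copy of the input of Encoder 3, which is unaffected by Encoders 1 and 2.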

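An $(n, 2^{nR_1}, 2^{nR_2}, 2^{nR_3})$ code for the MA-CZIC with a strictly causal Encoder 2 consists of:

- Encoder 1, defined by a deterministic mapping $f_1: \mathcal{W}_1 \to \mathcal{X}_1^n$, which maps the message $W_1$ to a channel input codeword $\mathbf{x}_1$.

- Encoder 2, which observes $W_3$ and $X_1^{i-1}$ prior to transmitting $X_{2,i}$, defined by the mappings $f_{2,i}: \mathcal{W}_2 \times \mathcal{W}_3 \times \mathcal{X}_1^{i-1} \to \mathcal{X}_2$, $i = 1, \ldots, n$.

- Encoder 3, defined by a deterministic mapping $f_3: \mathcal{W}_3 \to \mathcal{X}_3^n$.

- The primary (main) decoder, defined by a mapping $g_1: \mathcal{Y}^n \to \mathcal{W}_1 \times \mathcal{W}_2$.

- The secondary decoder, defined by a mapping $g_3: \mathcal{Z}^n \to \mathcal{W}_3$.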

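An $(n, 2^{nR_1}, 2^{nR_2}, 2^{nR_3})$ code for the MA-CZIC with a causal Encoder 2 differs only in that Encoder 2 observes $X_1^{i}$ (including the current symbol, $X_{1,i}$) before transmitting $X_{2,i}$, and is defined by the mappings $f_{2,i}: \mathcal{W}_2 \times \mathcal{W}_3 \times \mathcal{X}_1^{i} \to \mathcal{X}_2$, $i = 1, \ldots, n$.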
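The only difference between the two cribbing models is the window of Encoder 1's inputs that Encoder 2 may use at time i. A minimal sketch, assuming a hypothetical per-letter rule `f2` (indices are 0-based here, whereas the text uses 1-based indices):

```python
def run_encoder2(f2, w2, w3, x1, causal):
    """Produce Encoder 2's codeword under strictly causal or causal cribbing.

    f2(i, w2, w3, crib) -> x2_i is a hypothetical per-letter rule (0-based i).
    Strictly causal: crib = x1[:i]     (inputs before time i)
    Causal:          crib = x1[:i + 1] (also the current input x1[i])
    w3 is available at every time i (non-causal knowledge of Encoder 3's message).
    """
    x2 = []
    for i in range(len(x1)):
        crib = tuple(x1[:i + 1]) if causal else tuple(x1[:i])
        x2.append(f2(i, w2, w3, crib))
    return x2

def repeat_last(i, w2, w3, crib):
    """Hypothetical rule: repeat the most recently cribbed symbol (0 if none yet)."""
    return crib[-1] if crib else 0

x1 = [1, 0, 1, 1]
print(run_encoder2(repeat_last, 0, 0, x1, causal=False))  # [0, 1, 0, 1]
print(run_encoder2(repeat_last, 0, 0, x1, causal=True))   # [1, 0, 1, 1]
```

Under strictly causal cribbing the output lags Encoder 1 by one symbol, whereas under causal cribbing Encoder 2 can react to the current symbol.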

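For a given code, the block average error probability is

$$P_e^{(n)} = \Pr\left\{ g_1(\mathbf{Y}) \neq (W_1, W_2) \ \text{or}\ g_3(\mathbf{Z}) \neq W_3 \right\},$$

where the messages $(W_1, W_2, W_3)$ are independent and uniformly distributed.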

A rate-triple $(R_1, R_2, R_3)$ is said to be achievable for the MA-CZIC if there exists a sequence of $(n, 2^{nR_1}, 2^{nR_2}, 2^{nR_3})$ codes with $P_e^{(n)} \to 0$ as $n \to \infty$. The capacity region of the MA-CZIC with a cribbing encoder is the closure of the set of achievable rate-triples.

3. Main Results

In this section, we provide inner and outer bounds on the capacity region of the discrete memoryless MA-CZIC.

3.1. Inner Bound

We next present achievable regions for the strictly causal and the causal MA-CZICs.

Definition 1.

Let be the region defined by the closure of the convex hull of the set of all rate-triples satisfying

for some probability distribution of the form

Theorem 1.

The region is achievable for the MA-CZIC with a strictly causal cribbing encoder.

The proof appears in Appendix A.

Definition 2.

Theorem 2.

The region is achievable for the MA-CZIC with a causal cribbing encoder.

The outline of the proof appears in Appendix B.

A few comments regarding the achievable region (9a)–(9d) are in order. In the coding scheme, Encoders 1 and 2 use Block–Markov superposition encoding, while the primary decoder uses backward decoding [27]. In this scheme, the RV V represents the “resolution information” [26], i.e., the information of the current block that is used for encoding the next block. Encoder 3 uses rate splitting, where the RV L represents the part of $W_3$ that can be decoded by both the primary and the secondary decoders, as reflected in (9d). The complementary part of $W_3$, while fully decoded by the secondary decoder, acts as a channel state for the primary channel in the form of $X_3$. To reduce interference, the cognitive encoder (Encoder 2) additionally uses Gel’fand–Pinsker binning [41] of U against $X_3$, assuming that V and L have already been decoded successfully at the primary decoder, as can be seen in (9b).
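The decoding order of Block–Markov encoding with backward decoding can be illustrated with an idealized sketch. We assume the standard indexing of [26,27]: block b carries the resolution index $w_{1,b-1}$ together with the fresh messages of block b, and the edges are filled with dummy indices. Actual decoding uses joint typicality; here it is replaced by reading the indices directly, so only the order of operations is shown. The function names are hypothetical.

```python
def block_markov_indices(w1, w2):
    """Indices used in each of the B = len(w1) + 1 blocks (hypothetical indexing).

    Block b carries (v_b, w_{1,b}, w_{2,b}), where v_b = w_{1,b-1} is the resolution
    index learned by cribbing in block b-1. Dummy indices equal to 1 fill the
    edges: w_{1,0} = w_{1,B} = w_{2,B} = 1.
    """
    B = len(w1) + 1
    w1_ext = [1] + list(w1) + [1]
    w2_ext = list(w2) + [1]
    return [(w1_ext[b - 1], w1_ext[b], w2_ext[b - 1]) for b in range(1, B + 1)]

def backward_decode(blocks):
    """Idealized (error-free) backward decoding, showing only the decoding order.

    The decoder starts from block B, where w_{1,B} = 1 is known. In block b it
    already knows w_{1,b} (from block b+1) and recovers v_b = w_{1,b-1} and w_{2,b}.
    """
    B = len(blocks)
    known_w1 = 1
    w1_hat, w2_hat = [None] * (B - 1), [None] * (B - 1)
    for b in range(B, 0, -1):
        v_b, fresh_w1, w2_b = blocks[b - 1]   # stands in for joint-typicality decoding
        assert fresh_w1 == known_w1           # fresh index already known from block b+1
        if b >= 2:
            w1_hat[b - 2] = v_b
        if b <= B - 1:
            w2_hat[b - 1] = w2_b
        known_w1 = v_b
    return w1_hat, w2_hat

blocks = block_markov_indices([5, 7, 2], [3, 4, 9])
print(blocks)                   # [(1, 5, 3), (5, 7, 4), (7, 2, 9), (2, 1, 1)]
print(backward_decode(blocks))  # ([5, 7, 2], [3, 4, 9])
```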

It is important to note that the achievable region is consistent with previously studied special cases: By setting and we can also set . The equations then reduce to

and this results in the region achievable for the cognitive Z-Interference channel, studied in [36], with user 2 and user 3 as the cognitive and non-cognitive users, respectively.

Removing Encoder 2 by setting and we can also set and we get the classical Z-Interference channel with two users, Encoder 1 and Encoder 3. In this case, Y depends on V only through , since , and . We get

An interesting setup arises by setting $R_2 = 0$ without removing Encoder 2. This models a relay channel, in which Encoder 2 is a relay that has no message of its own and learns Encoder 1’s information by cribbing (modeling excellent SNR conditions on this path). This model relates to [42] if the structure of the primary user ($X_3$) is not accounted for, i.e., if i.i.d. state symbols known non-causally at the relay are assumed, as in [42].

Removing Encoder 3 by setting and we can also set , and the expression reduces to

By setting we get the achievable region of the MAC with Encoder 2 as the cribbing encoder [26].

By setting , removing inequality (9d), and replacing $X_3$ by a state S whose probability distribution is given and not optimized, the region reduces to the one in [27].

It is worth noting that in the case of a Gaussian channel, Encoder 2 can become fully cognitive of the message $W_1$ from a single sample of $X_1$, since a single noiseless real-valued sample can carry an arbitrary amount of information. This special case can be made non-trivial by adding a noisy channel or a deterministic function (for instance, a quantizer) between Encoder 1 and Encoder 2.
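To see why noiseless cribbing of a continuous-valued input is degenerate, the sketch below places an entire message in one real-valued sample and recovers it exactly. It is an idealization: Python's `Fraction` stands in for an infinitely precise real number, and no noise affects the cribbing path. The helper names `embed` and `crib` are hypothetical.

```python
from fractions import Fraction

def embed(w, num_msgs):
    """Map message w in {0, ..., num_msgs - 1} to a single real sample in [0, 1).

    Fraction models an exact real number (no noise, infinite precision).
    """
    return Fraction(2 * w + 1, 2 * num_msgs)   # midpoint of the w-th subinterval

def crib(x, num_msgs):
    """A noiseless cribber recovers w from the one sample x."""
    return int(x * num_msgs)                   # floor, since x >= 0

M = 2 ** 64   # e.g., n * R_1 = 64 bits, all carried by one symbol
for w in (0, 1, 123456789, M - 1):
    assert crib(embed(w, M), M) == w
```

Any amount of noise or quantization on the cribbing path breaks this argument, which is what makes the partially cribbed case non-trivial.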

Finally, we examine the case where Encoder 1’s output may be viewed as two parts, of which only the first affects the channel output. In this case, if the second part is rich enough (e.g., it has a continuous alphabet), Encoder 1 is able to transfer an unlimited amount of data to Encoder 2, in particular the entire message $W_1$. This is equivalent to the case of full cooperation from Encoder 1 to Encoder 2, i.e., the case where Encoder 2 has full knowledge of Encoder 1’s message $W_1$. Hence, the cooperative state-dependent MAC where the state is known non-causally at the cognitive encoder [29] may also be considered a special case of the MA-CZIC, when $X_3$ is replaced with an i.i.d. state S.

3.2. Outer Bound

In this section, we present outer bounds on the capacity region of the strictly causal and causal MA-CZIC.

Theorem 3.

Achievable rate-triples for the MA-CZIC with a strictly causal cribbing encoder belong to the closure of the convex hull of all rate triples that satisfy

for some probability distribution of the form

The proof is provided in Appendix C; it is based on Fano’s inequality [40] and the Csiszár–Körner identity ([43], Lemma 7).

The outline of the proof of Theorem 4, the corresponding outer bound for the causal MA-CZIC, is provided in Appendix D.

As for the alphabet cardinalities, a standard application of Carathéodory’s theorem shows that it suffices to consider alphabets whose cardinalities are bounded as follows:

The details are omitted for the sake of brevity.

3.3. Special Cases

For the special case of a more-capable MA-CZIC, we can establish the capacity region of the channel in both the causal and the strictly causal cases.

Definition 3.

We say that the strictly-causal MA-CZIC is more-capable if for all probability distributions of the form .

Theorem 5.

The capacity region of the more-capable strictly-causal MA-CZIC is the closure of the convex hull of the set of all rate-triples satisfying

for some probability distribution of the form

The proof of Theorem 5 is provided in Appendix E.

The proof of Theorem 6, i.e., the causal case, follows the same lines as that of Theorem 5 and is thus omitted.

Unfortunately, requiring the MA-CZIC to be more-capable implies that receiver Y has better reception of Encoder 3’s signal than its intended receiver Z, which is a somewhat optimistic assumption.

We next consider the cases where each one of the transmitters wishes to achieve its maximal possible rate, i.e., the vertex points of the capacity region.

● Maximal rate at Transmitter 1:

Transmitter 2 may help Transmitter 1’s transmission and thereby increase Transmitter 1’s rate. Therefore, we assume that Transmitter 2 dedicates its transmission to helping convey $W_1$. Transmitter 3 should minimize its interference at the Y receiver. Setting $L = X_3$, the entire interference caused by Transmitter 3 at the primary receiver may be removed via successive cancellation decoding. With no interference caused by Transmitter 3, Transmitter 2 may drop the Gel’fand–Pinsker scheme, setting to maximize the rates. Thus, from (9a)–(9d) we get

From the Markov chain we get . Therefore, we can rewrite (28a)–(28c) as

where in the strictly-causal MA-CZIC case, the union is over all probability distributions of the form

● Maximal rate at Transmitter 2:

Neither Transmitter 1 nor Transmitter 3 is cognitive, and neither has knowledge of the message $W_2$; thus they cannot help convey $W_2$ to Y and should only reduce their interference to a minimal level. The choice of the remaining auxiliary variables then follows as in maximizing $R_1$ in (28a)–(29c).

● Maximal rate at Transmitter 3:

Looking at (9d) and (19d), we see that the lower and upper bounds on $R_3$ coincide. Since transmitter 3 is not affected by the transmissions of transmitters 1 and 2, we can treat the pair formed by transmitter 3 and receiver 3 as a single-user channel and thus achieve its Shannon capacity, i.e.,

$$R_3 = \max_{P(x_3)} I(X_3; Z).$$

In general, the maximum rate at transmitter 3 is achieved by setting $L = \emptyset$ (a constant). In this case, the higher rate at transmitter 3 comes at the expense of the other transmitters, since L is no longer available for conveying part of the interference to Y. Thus, (9a)–(9d) become

Examining (9d), we see that the maximum rate at transmitter 3 may also be achieved without affecting $R_1$ and $R_2$. This is true when receiver Y is less noisy than receiver Z in the sense that for all probability distributions of the form (20). In this case (9d) becomes

In fact, for achieving the maximum rate at transmitter 3, it suffices to require that the channel be more-capable, i.e., for all probability distributions of the form (20).
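For a given secondary channel $P(z|x_3)$, the single-user capacity of the link from transmitter 3 to receiver 3, $\max_{P(x_3)} I(X_3; Z)$, can be computed numerically. The sketch below uses the Blahut–Arimoto algorithm, a standard method that is not part of this paper; the transition matrices in the usage lines (a binary symmetric channel and a Z-channel) are illustrative assumptions.

```python
import math

def blahut_arimoto(W, tol=1e-12, max_iter=10_000):
    """Capacity in bits of a DMC with W[x][z] = P(z | x), via Blahut-Arimoto.

    Returns (capacity_estimate, input_pmf). The estimate is the lower bound
    log sum_x p(x) exp(D_x), which meets the upper bound max_x D_x at the optimum.
    """
    nx, nz = len(W), len(W[0])
    p = [1.0 / nx] * nx
    for _ in range(max_iter):
        q = [sum(p[x] * W[x][z] for x in range(nx)) for z in range(nz)]  # output pmf
        # D[x] = D( W(.|x) || q ) in nats
        D = [sum(W[x][z] * math.log(W[x][z] / q[z]) for z in range(nz) if W[x][z] > 0)
             for x in range(nx)]
        lower = math.log(sum(p[x] * math.exp(D[x]) for x in range(nx)))
        upper = max(D)
        if upper - lower < tol:
            break
        weights = [p[x] * math.exp(D[x]) for x in range(nx)]
        total = sum(weights)
        p = [wt / total for wt in weights]
    return lower / math.log(2), p

c_bsc, _ = blahut_arimoto([[0.9, 0.1], [0.1, 0.9]])   # BSC(0.1): 1 - h(0.1) ~ 0.531
c_z, p_z = blahut_arimoto([[1.0, 0.0], [0.5, 0.5]])   # Z-channel: log2(1.25) ~ 0.322
```

The lower and upper bounds computed in each iteration bracket the capacity, so their gap serves as a natural stopping criterion.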

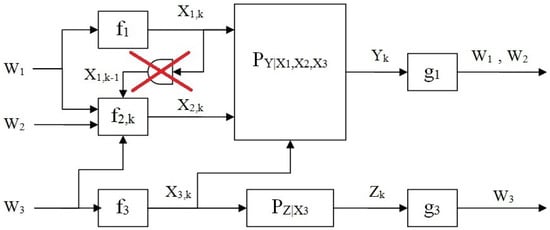

4. Cooperative Encoding

Let us now consider the case of full unidirectional cooperation from Encoder 1 to Encoder 2. In this setup, Encoders 1 and 2 share a common message, and Encoder 2 transmits an additional private message. Thus, we have a cognitive interference channel (cognition in terms of $W_3$) with a common message, as depicted in Figure 2. Hence, Encoder 2 is given by the mapping

$$f_2: \mathcal{W}_1 \times \mathcal{W}_2 \times \mathcal{W}_3 \to \mathcal{X}_2^n.$$

Figure 2.

MA-CZIC with full unidirectional cooperation from Encoder 1 to Encoder 2.

For this channel setup, a simpler outer bound on the capacity region can be derived, which provides some insight into the original problem. A special case of this channel, in which the secondary channel is removed and $X_3$ is replaced with an i.i.d. state S of the main channel, was studied in [29], where a single-letter characterization of the capacity region was established.

The following theorem provides a single-letter expression for an achievable region of the MA-CZIC with full unidirectional cooperation (common message).

Theorem 7.

The closure of the convex hull of the set of all rate-triples satisfying

for some probability distribution of the form

is achievable for the MA-CZIC with full unidirectional cooperation.

The outline of the proof for Theorem 7 appears in Appendix F.

The following theorem provides a single-letter expression for an outer bound on the capacity region of the MA-CZIC with full unidirectional cooperation.

Theorem 8.

Achievable rate-triples for the MA-CZIC with full unidirectional cooperation belong to the closure of the convex hull of rate-regions given by

for some probability distribution of the form

The outline of the proof of Theorem 8 is provided in Appendix G.

Notice that inequalities (38a)–(38c) are identical to (19b)–(19d), and that the probability distribution form (39) is a special case of (20). Thus, the outer bound established for and the sum-rate in the strictly-causal MA-CZIC also holds for the case of full unidirectional cooperation. However, we would expect the outer bounds on and the sum-rate to be smaller for the channel with the cribbing encoder, which implies that the outer bound for the MA-CZIC is generally not tight.

5. Partial Cribbing

Next, we consider the case of partial cribbing, where Encoder 2 views $X_1$ through a deterministic function

instead of observing $X_1$ directly. This cribbing scheme is motivated by the MA-CZIC with continuous input alphabets, where perfect cribbing results in the degenerate case of full cooperation between the encoders and requires an infinite-capacity link.
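The effect of the deterministic cribbing function can be quantified by the entropy of the cribbed signal. For i.i.d. inputs, Encoder 2 learns at most $H(g(X_1))$ bits per cribbed symbol, and this never exceeds the logarithm of the number of values $g$ can take. For a continuous $X_1$ and direct cribbing, the first quantity is unbounded, while a quantizer keeps it finite. In the sketch, `g` denotes the cribbing function; the 8-level input and the 1-bit quantizer are illustrative assumptions.

```python
import math
from collections import defaultdict

def entropy_bits(pmf):
    """Shannon entropy in bits of a pmf given as {symbol: probability}."""
    return -sum(p * math.log2(p) for p in pmf.values() if p > 0)

def cribbed_entropy(p_x1, g):
    """Entropy (bits per symbol) of the cribbed signal g(X1) when X1 ~ p_x1.

    It never exceeds log2 of the number of distinct values of g.
    """
    p_out = defaultdict(float)
    for x, p in p_x1.items():
        p_out[g(x)] += p
    return entropy_bits(p_out)

p_x1 = {x: 1 / 8 for x in range(8)}               # X1 uniform on 8 levels (3 bits)
print(cribbed_entropy(p_x1, lambda x: x))         # 3.0  (direct cribbing)
print(cribbed_entropy(p_x1, lambda x: x // 4))    # 1.0  (1-bit quantizer)
```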

We define the strictly-causal MA-CZIC with partial cribbing as in (2)–(6) with the exception that Encoder 2 is defined by the mapping

where for . The causal MA-CZIC with partial cribbing differs by setting

It is worth noting that the state-dependent MAC with state information known non-causally at one encoder [44] is a special case of the MA-CZIC with partial cribbing. This case is derived by setting and replacing $X_3$ with an i.i.d. state S. The capacity region of this simpler case remains an open problem. Therefore, it is hard to expect that the capacity region of the MA-CZIC with partial cribbing will be established. Next, we establish inner and outer bounds for the MA-CZIC with partial cribbing.

Theorem 9.

The closure of the convex hull of the set of rate-triples satisfying

for some probability distribution of the form

is achievable for the strictly-causal MA-CZIC with partial cribbing.

An outline of the proof of Theorem 9 appears in Appendix H.

The proof of Theorem 10 is similar to that of Theorem 9 and thus is omitted.

Comparing this result to the achievable region of the MA-CZIC (Theorem 1), we see that inequalities (44), (45) and (47) are identical to (9b)–(9d), while inequality (43) differs and inequality (46) was added. Correspondingly, the coding scheme for the MA-CZIC with partial cribbing differs from that of Theorem 1 mainly in Encoder 1. Encoder 1 now needs to transmit data in a lossy manner to both the Y receiver and Encoder 2. To do so, Encoder 1 employs rate splitting: it splits its message into two parts with rates accordingly, such that . The rate represents the rate of transmission to Encoder 2. Combining rate splitting with superposition block Markov encoding (SBME) at Encoder 1 results in a third codebook , in addition to the two codebooks and . The codebooks are generated in an i.i.d. manner as follows: first, codewords are created using . Then, for each codeword , codewords are drawn i.i.d. given . Finally, for each pair , codewords are drawn i.i.d. given . Next, as in the SBME scheme of Theorem 1, the index of the codeword in time i becomes the index of in time . For successful decoding at Encoder 2, we must require . The rate is therefore that of the information jointly transmitted to Y by both encoders. The remaining quantity in (43), that is, represents the rate superimposed by and decoded by Y via successive decoding. One may notice that in inequalities (44)–(45), the pair can replace ; however, since is a deterministic function of , it can be dropped.
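The three-layer codebook construction described above can be sketched as follows, in the spirit of the partial-cribbing construction of [28]. Because the symbols of the scheme are not reproduced here, the names `V`, `S` and `X1` (cloud, cribbed layer and Encoder 1 codewords), the cribbing function `g`, and all probabilities are hypothetical; for simplicity, the top layer here depends only on the middle layer. Each symbol of `x1` is drawn only from the preimage under `g` of the corresponding symbol of `s`, so Encoder 2, which sees `g(x1)`, observes exactly the middle-layer codeword.

```python
import random

def g(x1):
    """Hypothetical cribbing function: X1 in {0,1,2,3}, Encoder 2 sees only its high bit."""
    return x1 // 2

def layered_codebooks(n, num_v, num_s, num_x, rng,
                      p_v1=0.5, p_s1_given_v=(0.3, 0.7), p_low1=0.5):
    """Three-layer superposition codebooks (binary V and S, quaternary X1).

    v ~ prod P(v_i); for each v, s ~ prod P(s_i | v_i); for each (v, s),
    x1 ~ prod P(x1_i | s_i) supported on {x1 : g(x1) = s_i},
    so the cribbed sequence g(x1) always equals the middle-layer codeword s.
    """
    V = [[int(rng.random() < p_v1) for _ in range(n)] for _ in range(num_v)]
    S, X1 = {}, {}
    for a, v in enumerate(V):
        for b in range(num_s):
            s = [int(rng.random() < p_s1_given_v[vi]) for vi in v]
            S[a, b] = s
            for c in range(num_x):
                # high bit fixed by s_i, low bit drawn at random
                X1[a, b, c] = [2 * si + int(rng.random() < p_low1) for si in s]
    return V, S, X1

V, S, X1 = layered_codebooks(n=12, num_v=2, num_s=4, num_x=4, rng=random.Random(7))
print(all([g(x) for x in X1[a, b, c]] == S[a, b] for (a, b, c) in X1))  # True
```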

This result is based on [28], where the capacity region was established for the two-user MAC with cribbing through a deterministic function at both encoders.

Theorem 11.

Achievable rate-triples for the strictly-causal MA-CZIC with partial cribbing belong to a closure of the convex hull of the set of rate-regions given by

for some probability distribution of the form

The proofs of Theorem 11 and of the following Theorem 12 appear in Appendix I.

It is easy to see that setting to be the identity function, and , the region of Theorem 11 degenerates to the outer bound of the MA-CZIC with noiseless cribbing (Theorem 3).

A related problem is the Cognitive State-Dependent MAC with Partial Cribbing. This setup is obtained by removing user 3 and replacing $X_3$ with an i.i.d. state S known non-causally at Encoder 2. Inner and outer bounds for this channel follow immediately from the inner bound (Theorem 9) and the outer bound (Theorem 11) for the MA-CZIC with partial cribbing by setting and . Doing so yields the following inner and outer bounds.

Theorem 13.

The closure of the convex hull of the set of rate-pairs satisfying

for some probability distribution of the form

is achievable for the state-dependent cognitive MAC with partial (strictly-causal) cribbing.

Theorem 14.

Achievable rate-pairs for the state-dependent cognitive MAC with partial (strictly-causal) cribbing belong to the closure of the convex hull of rate-regions given by

for some probability distribution of the form

6. Discussion and Future Work

The use of cognitive radio holds tremendous promise for better exploiting the available spectrum. By sensing its environment, a cognitive radio can use the acquired network side information to improve performance for all users. The cognitive transmitter may use this information to reduce the interference it suffers, to reduce the interference it causes to other users, or to help relay information. However, obtaining this side information is not always practical in actual scenarios. The assumption of a-priori knowledge of the other user’s information applies only to certain situations in which the transmitters share information through a separate channel. The assumption of causally sensing the environment is more realistic in many cases of distinct transmitters. Nevertheless, the cognitive transmitter will most likely acquire only a noisy version of the information, which limits its ability to cooperate. In addition, sensing the environment involves complicated transmitter implementations as well as power consumption, against which the gains of cognition must be weighed. Still, the improved transmission rates achieved via cognitive schemes motivate their integration into various wireless systems, such as Wi-Fi and cellular networks. We note that cribbing requires simultaneous receive/transmit capability (duplex operation), which is useful and usually available, as in 5G systems. Although cognitive networks have received much attention recently [15,16], many of the fundamental problems of cognitive multi-terminal networks remain unsolved.

In this paper we investigated some cognitive aspects of multi-terminal communication networks. We introduced the MA-CZIC as a generalization of a compound cognitive multi-terminal network. The MA-CZIC incorporates various multi-terminal communication channels (MAC and Z-IFC) as well as several aspects of cognition (cooperation and cribbing). For the MA-CZIC we derived inner and outer bounds on the capacity region. In an effort to better characterize the capacity region, we studied the extreme points of the achievable region and established the capacity region for the case in which the channel is more-capable. Furthermore, we investigated variations of the channel regarding the nature of cooperation between the cognitive encoder (Encoder 2) and the non-cognitive encoder sharing its receiver (Encoder 1). We investigated the case in which Encoder 2 has better cognition abilities and obtains full knowledge of Encoder 1’s message, as well as the case in which Encoder 2 has weaker cognition abilities and cribs from Encoder 1 via a deterministic function, such as a quantizer.

As for possible future work, several directions can be considered. First, it would be interesting to identify concrete non-trivial channels for which the MA-CZIC inner and outer bounds coincide, at least in parts of the region. Finding such a channel may provide insight into the capacity region as well as into the gap between the inner and outer bounds. Moreover, the characterization of the capacity region may be further improved by examining different interference regimes. Determining the exact capacity region of the MA-CZIC would yield the capacity region of the cognitive Z-IFC [36] as a special case. We believe that the opposite derivation also applies, i.e., that the capacity region of the MA-CZIC will follow from the capacity region of the cognitive Z-IFC. Our model assumed that the cognitive transmitter (transmitter 2) has full non-causal knowledge of the interference signal $X_3$. While modeling the interference signal as a transmitter is realistic in many scenarios, the assumption of non-causal knowledge of the signal may not hold in practice when the cognitive transmitter has sensing capabilities but no shared information. Therefore, the model in which transmitter 2 cribs from transmitter 3 is highly relevant, and it would be very interesting to determine its capacity region. A possible improvement of the achievable bounds may exploit the fact that $X_3$ is generated by a coding scheme, and hence the interference can be mitigated by partial or full decoding, possibly with the aid of the cognizant transmitter 2.

Acknowledgments

This work was supported by the Heron consortium via the Israel ministry of economy and science.

Author Contributions

All three authors cooperated in the theoretical research leading to the reported results and in composing this submitted paper.

Conflicts of Interest

The funding sponsors had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
| --- | --- |
| MAC | Multiple-Access Channel |
| IFC | Interference Channel |
| OFDMA | Orthogonal Frequency-Division Multiple Access |
| HK | Han-Kobayashi |
| QoS | Quality of Service |
| DF | Decode and Forward |
| AF | Amplify and Forward |
| CIFC | Cognitive Interference Channel |
| MA-CZIC | Multiple-Access Cognitive Z-Interference Channel |
| P2P | point-to-point |
| RV | Random Variable |
| AEP | Asymptotic Equipartition Property |

Appendix A

Proof of Theorem 1.

Below is a description of the random coding scheme we use to prove the achievability of the rate-triples in the region of Definition 1; the analysis of the average probability of error is omitted.

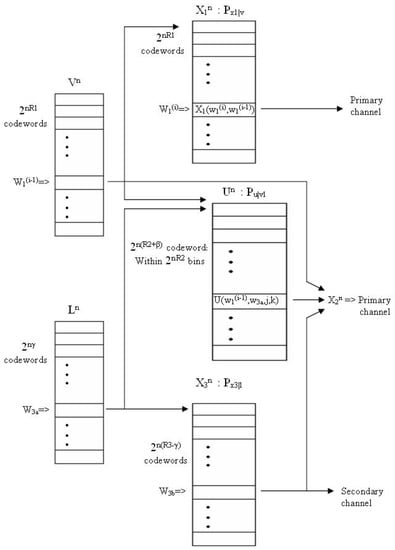

We propose the following coding scheme, which includes Block–Markov superposition coding, backward decoding, rate splitting and Gel’fand–Pinsker coding [41]. The coding scheme combines the coding techniques of [36] with those of [27], which, in turn, are based on the coding techniques of [26,29].

For a fixed distribution of the form (10), the coding schemes are as follows:

Appendix A.1. Encoder 3 and Decoder 3 Coding Scheme

Appendix A.1.1. Encoder 3 Codebook generation

Generate independently codewords , each with probability . These codewords constitute the inner codebook of Transmitter 3. Denote them as where . For each codeword , generate codewords , each with probability Pr. Denote them as . The codewords constitute the outer codebook of Transmitter 3 associated with the codeword .
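The two-layer codebook of Transmitter 3 is an instance of superposition coding: cloud centers are drawn i.i.d., and each satellite codeword is drawn symbol by symbol conditionally on its cloud center. The Python sketch below illustrates this under stated assumptions: binary alphabets, small integers in place of the exponential codebook sizes, and a hypothetical conditional pmf; the function names are ours, not the paper's.

```python
import random

def draw_iid(n, pmf, rng):
    """i.i.d. sequence of length n, each symbol drawn from pmf = {symbol: prob}."""
    symbols = list(pmf)
    return tuple(rng.choices(symbols, weights=[pmf[s] for s in symbols], k=n))

def draw_conditional(cloud_center, cond_pmf, rng):
    """Satellite codeword: symbol i drawn from cond_pmf[cloud_center[i]], independently."""
    out = []
    for center_symbol in cloud_center:
        pmf = cond_pmf[center_symbol]
        symbols = list(pmf)
        out.append(rng.choices(symbols, weights=[pmf[s] for s in symbols])[0])
    return tuple(out)

def gen_superposition_codebook(n, num_inner, num_outer, p_l, p_x_given_l, seed=0):
    """Inner codebook [l^n(j)] and outer codebooks {(j, k): x3^n(j, k)}."""
    rng = random.Random(seed)
    inner = [draw_iid(n, p_l, rng) for _ in range(num_inner)]
    outer = {(j, k): draw_conditional(inner[j], p_x_given_l, rng)
             for j in range(num_inner) for k in range(num_outer)}
    return inner, outer

# Hypothetical toy parameters: n = 8, 4 cloud centers, 3 satellites per cloud.
inner, outer = gen_superposition_codebook(
    8, 4, 3, {0: 0.5, 1: 0.5}, {0: {0: 0.9, 1: 0.1}, 1: {0: 0.2, 1: 0.8}})
```

In this toy, Encoder 3 would send `outer[(j, k)]`, where j and k index the two parts of its message; the inner index j alone determines the cloud center that both receivers can decode.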

Appendix A.1.2. Encoding Scheme of Encoder 3

Encoder 3 splits its message into two independent parts , with rates and respectively. For and it transmits .

Appendix A.1.3. Receiver 3 Decoding

Receiver 3 looks for such that

If no such exists an error is declared, and if there exists more than one that satisfies the condition, the decoder chooses at random among them.
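Receiver 3's decision rule (accept the unique codeword that is jointly typical with the output, declare an error if there is none, and pick at random among ties) can be sketched as follows. This assumes the robust-typicality definition of El Gamal and Kim [13], a hypothetical joint pmf and tolerance `eps`, and treats each codeword as a single sequence without separating the two codebook layers; all names are ours.

```python
import random
from collections import Counter

def is_jointly_typical(xs, ys, joint_pmf, eps):
    """Robust typicality: |pi(a,b) - p(a,b)| <= eps * p(a,b) for every pair (a, b).

    Any pair with p(a,b) = 0 that actually occurs makes the test fail.
    """
    n = len(xs)
    counts = Counter(zip(xs, ys))
    for pair in set(joint_pmf) | set(counts):
        p = joint_pmf.get(pair, 0.0)
        if abs(counts.get(pair, 0) / n - p) > eps * p:
            return False
    return True

def typicality_decode(codebook, ys, joint_pmf, eps, rng):
    """Index of a codeword jointly typical with ys; None if there is none.

    If several codewords pass the test, one of them is picked uniformly at random.
    """
    candidates = [j for j, xs in enumerate(codebook)
                  if is_jointly_typical(xs, ys, joint_pmf, eps)]
    if not candidates:
        return None  # decoding error
    return rng.choice(candidates)
```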

Appendix A.2. Encoder 1, Encoder 2 and Main Decoder Coding Scheme

We consider B blocks, each of n symbols. A sequence of message pairs for , will be transmitted during B transmission blocks. As B → ∞, for a fixed n, the rate pair of the message sequence converges to the nominal rate pair.
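The rate loss of the block-Markov scheme is easy to quantify. Assuming, as in the usual construction, that B − 1 fresh message pairs are carried by the B blocks, the delivered rate is R(B − 1)/B, independent of n. The helper below (a hypothetical name) simply evaluates this ratio.

```python
def effective_rate(rate, num_blocks):
    """Delivered rate when B - 1 messages of n*rate bits occupy B blocks of n symbols.

    The block length n cancels: (B - 1) * n * rate / (B * n).
    """
    if num_blocks < 2:
        raise ValueError("block-Markov coding needs at least two blocks")
    return rate * (num_blocks - 1) / num_blocks

for B in (2, 10, 100, 1000):
    print(B, effective_rate(1.0, B))
```

For example, B = 10 blocks deliver 90% of the nominal rate; the loss 1/B vanishes as B grows, which is the limit invoked above.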

Appendix A.2.1. Encoder 1 Codebook generation

Generate codewords , each with probability . These codewords constitute the inner codebook of Transmitter 1. Denote them as , where . For each codeword generate codewords , each with probability . These codewords, , constitute the outer codebook of Transmitter 1 associated with . Denote them as where is as before, representing the index of the codeword in the inner codebook and the index of the codeword in the associated outer codebook.

Appendix A.2.2. Encoding Scheme of Encoder 1

Given for , we define for .

In block 1 Encoder 1 sends

in block Encoder 1 sends

and in block B Encoder 1 sends
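The block-Markov index schedule of Encoder 1 can be sketched as follows. The sketch assumes the standard convention that the cloud (inner) index in block b is the message of block b − 1 and that a fixed dummy index 1 is used before the first and after the last message. Only the indices are modeled, and `encoder1_schedule` is a hypothetical name.

```python
def encoder1_schedule(messages):
    """(inner, outer) index pair sent by Encoder 1 in each of B = len(messages) + 1 blocks.

    The inner (cloud) index in block b is the message of block b - 1; the dummy
    index 1 pads both ends (assumed convention: m_0 = m_B = 1).
    """
    padded = [1] + list(messages) + [1]
    return [(padded[b - 1], padded[b]) for b in range(1, len(padded))]

print(encoder1_schedule([5, 7, 2]))  # [(1, 5), (5, 7), (7, 2), (2, 1)]
```

Because Encoder 2 learns each message by cribbing during block b, both encoders share the inner index in block b + 1. This shared inner index is what makes cooperation possible.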

Appendix A.2.3. Encoder 2 Codebook Generation

This encoder’s codebook is based on the inner codebooks of both Encoder 1 and Encoder 3. For each pair of codewords and , generate codewords , each with probability . These codewords constitute Encoder 2’s codebook associated with and . Randomly partition each of the codebooks into bins, each consisting of codewords. Now label the codewords by , where the codebook is chosen according to and , defines the bin according to Encoder 2’s message, and is the index within the bin.
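The random partition of Encoder 2's codebook into equal-size bins can be sketched as follows. The bin index plays the role of Encoder 2's message, and the position inside the bin plays the role of the index t. The sizes are small hypothetical integers standing in for the exponential codebook sizes, and `random_binning` is a hypothetical name.

```python
import random

def random_binning(num_codewords, num_bins, seed=0):
    """Randomly partition codeword indices 0..num_codewords-1 into equal-size bins.

    bins[m] lists the codewords of bin m (message m); a codeword's position in
    its bin is its index t, so codeword bins[m][t] carries the label (m, t).
    """
    if num_codewords % num_bins:
        raise ValueError("num_codewords must be a multiple of num_bins")
    per_bin = num_codewords // num_bins
    order = list(range(num_codewords))
    random.Random(seed).shuffle(order)
    return [order[m * per_bin:(m + 1) * per_bin] for m in range(num_bins)]

bins = random_binning(32, 4)   # hypothetical sizes: 32 codewords, 4 bins of 8
```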

Appendix A.2.4. Encoding Scheme of Encoder 2

Given as before and , search for the lowest such that is jointly typical with the triplet , denoting that t as . If such a t is not found or if the triplet is not jointly typical, an error is declared and . Now, create the codeword by drawing its components i.i.d. conditionally on the quadruple , where the conditional law is induced by (10).
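Encoder 2's Gel'fand–Pinsker step scans the bin of its message for the first codeword that is jointly typical with what it already knows. The minimal sketch below makes two simplifications: the known triplet is collapsed into a single composite "state" sequence, and the test is robust typicality with a hypothetical pmf and tolerance. `gp_encode` is a hypothetical name. Returning `None` corresponds to the encoding error declared in the text.

```python
from collections import Counter

def is_jointly_typical(us, ss, joint_pmf, eps):
    """Robust typicality test between a codeword and the known (composite) state."""
    n = len(us)
    counts = Counter(zip(us, ss))
    for pair in set(joint_pmf) | set(counts):
        p = joint_pmf.get(pair, 0.0)
        if abs(counts.get(pair, 0) / n - p) > eps * p:
            return False
    return True

def gp_encode(bin_codewords, state, joint_pmf, eps):
    """Lowest index t in the message bin whose codeword is typical with the state.

    Returns None when no codeword qualifies (the encoding error in the text).
    """
    for t, us in enumerate(bin_codewords):
        if is_jointly_typical(us, state, joint_pmf, eps):
            return t
    return None
```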

In block 1 Encoder 2 sends

As a result of cribbing from Encoder 1, before the beginning of block , Encoder 2 has an estimate for . Then, for , Encoder 2 sends

and in block B Encoder 2 sends

A schematic description of the encoding appears in Figure A1.

Figure A1.

A schematic description of the codebooks hierarchy and encoding procedure at the three encoders.

Appendix A.2.5. Decoding at the Primary Receiver

After receiving all B blocks, the decoder uses backward decoding, starting from block B and moving downward to block 1. In block B, the receiver looks for such that

for some , where .

At block b, assuming that backward decoding has been carried out down to (and including) block b+1, the receiver has already decoded the indices of those blocks. Then, to decode block b, the receiver looks for such that

for some , where .
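Backward decoding processes the blocks in reverse order. The cooperative (inner) index recovered from block b+1 is treated as known when block b is decoded. The sketch below shows only this bookkeeping, under the following assumptions:

- Block b carries the index pair (previous, current), with the dummy index 1 at both ends.
- The per-block typicality test is abstracted into a hypothetical callback `decode_block`.
- The toy rule `toy_decode` assumes a noiseless observation of that pair.

```python
def backward_decode(observations, decode_block, dummy=1):
    """Recover m_1, ..., m_{B-1} by decoding blocks B, B-1, ..., 1 in that order.

    decode_block(obs, known_current) stands in for the per-block typicality test:
    given block b's observation and m_b (found one step earlier, or the dummy for
    b = B), it returns m_{b-1}.
    """
    known = dummy                      # m_B is the dummy index
    found = []
    for b in range(len(observations), 0, -1):
        known = decode_block(observations[b - 1], known)
        found.append(known)            # this is m_{b-1}
    found.reverse()                    # [m_0, m_1, ..., m_{B-1}]
    return found[1:]                   # drop the dummy m_0

def toy_decode(obs, known_current):
    """Hypothetical noiseless rule: block b is observed as the pair (m_{b-1}, m_b)."""
    previous, current = obs
    if current != known_current:
        raise ValueError("inconsistent with the index decoded from the next block")
    return previous

print(backward_decode([(1, 5), (5, 7), (7, 2), (2, 1)], toy_decode))  # [5, 7, 2]
```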

Appendix A.2.6. Decoding at Encoder 2

To obtain cooperation, after block , Encoder 2 chooses such that

where was determined at the end of block and .

At each of the decoders, if a decoding step either finds no index (or index pair) satisfying the decoding rule or finds more than one, an index (or index pair) is chosen at random among the candidates.
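With full cribbing, Encoder 2's decoder reduces to an exact match. Encoder 2 sees Encoder 1's block without noise and already knows the cloud (inner) index. The minimal sketch below uses a hypothetical dictionary layout for the codebook. Ties arise only if two codewords in the same cloud coincide, and they are broken at random as described above. `None` marks a failed match.

```python
import random

def crib_decode(codebook, inner_index, observed, rng):
    """Full cribbing at Encoder 2: match the cribbed block against the outer codewords.

    codebook maps (inner index, outer index) -> codeword tuple (hypothetical layout).
    Only codewords in the cloud of the already-known inner index are searched.
    Returns None if nothing matches; ties (identical codewords) are broken at random.
    """
    target = tuple(observed)
    matches = [m for (j, m), cw in codebook.items()
               if j == inner_index and cw == target]
    if not matches:
        return None
    return rng.choice(matches)
```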

Appendix A.3. Bounding the Probability of Error

We define the error events as follows:

- : Codebook error, the codewords , , , are not jointly typical. That is

- : Error decoding at Encoder 2, that is, there exists such that

- : Encoding error at Encoder 2, no suitable encoding index t. That is, there is no such that

- : Channel error, one or more of the input signals is not jointly typical with the outputs and . That is

- : Codebook error in decoding at either one of the decoders, a false message was detected. That is, there exists such that

- : Codebook error in decoding . There exists such that for some pair , .

- : Codebook error in decoding . There exists a different bin , such that for some .

- : Codebook error in decoding . There exists such that

Notice that when Encoder 2 observes Encoder 1's transmitted signal error-free, as in this setup, the error event (A1) can be replaced by the explicit event that the codebook contains two identical codewords, i.e., there exists such that .
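The collision event above can be bounded directly. Given a typical transmitted codeword, every other independently drawn codeword coincides with it with probability about $2^{-nH(X)}$. A union bound over the $2^{nR}$ codewords therefore vanishes when R is below the entropy. The sketch below evaluates this bound in the log domain. It assumes a hypothetical i.i.d. Bernoulli(p) codebook rather than the codebook law of the paper.

```python
import math

def binary_entropy(p):
    """H(p) in bits for 0 < p < 1."""
    return -p * math.log2(p) - (1 - p) * math.log2(1 - p)

def collision_union_bound(n, rate, p):
    """Union bound on Pr(another codeword equals the transmitted one).

    Codewords are i.i.d. Bernoulli(p) sequences (hypothetical codebook law);
    the transmitted word is taken typical, with k = round(n * p) ones, so any
    other codeword equals it with probability p**k * (1 - p)**(n - k).
    With 2**(n * rate) codewords the bound is 2**(n * rate) * p**k * (1 - p)**(n - k),
    computed in the log domain and capped at 1.
    """
    k = round(n * p)
    log2_bound = n * rate + k * math.log2(p) + (n - k) * math.log2(1 - p)
    return 1.0 if log2_bound >= 0 else 2.0 ** log2_bound
```

The bound decays exponentially in n exactly when `rate < binary_entropy(p)`, and is trivial (equal to 1) otherwise.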

We now define the events as follows:

We upper-bound the probability of error, averaged over all codebooks and all random partitions, as in [27], by

where denotes the complement of the event .

Furthermore, we can upper bound each of the summands in the last component of (A3) by the union bound as

Now, we can separately examine and upper bound each of the summands in (A3):

- By the Asymptotic Equipartition Property (AEP) [40], as .

- In the second summand, , the conditioning on ensures that are jointly typical, and that Encoder 2 has correctly decoded all the previous messages, in particular . Since each codeword is drawn i.i.d. given , from the strong typicality lemma we get where is the event. Assuming, without loss of generality, that , we get by using the union bound. Hence, for we get as .

- Since the codewords are generated in an i.i.d. manner, we have where is the event for a specific index t. Hence, we have. Conditioning on V and L in ([41], Lemma 3), we get for all , where as . Hence, the expression converges to 0 as for

- By the AEP, as .

- If then, from joint typicality decoding, as .

- We state that where stands for the event. By using the strong typicality lemma, we bound each of the summands above. Summing over all codewords, we get. Therefore, if then as .

- Similarly, using the same technique as in the previous step, if then as .

- Finally, if then as .
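Most of the bullets above rest on two standard exponent arguments:

- A union (packing) bound, $2^{nR}\cdot 2^{-n(I-\delta)}$, which vanishes when the rate is below the relevant mutual information.
- A covering bound, $(1-2^{-n(I+\delta)})^{2^{nR}} \le \exp(-2^{n(R-I-\delta)})$, which vanishes when the rate exceeds it, as in the encoding step at Encoder 2.

The helpers below evaluate both bounds for hypothetical values of n, R, I, and δ. They illustrate the thresholds and are not the paper's exact expressions.

```python
import math

def packing_bound(n, rate, mutual_info, delta):
    """Union bound 2^{nR} * 2^{-n(I - delta)} on some wrong codeword passing the test."""
    e = n * (rate - mutual_info + delta)
    return 1.0 if e >= 0 else 2.0 ** e

def covering_failure(n, rate, mutual_info, delta):
    """Bound exp(-2^{n(R - I - delta)}) on no codeword among 2^{nR} being typical."""
    e = n * (rate - mutual_info - delta)
    if e > 60:          # exp(-2**60) is already 0.0 in floating point
        return 0.0
    return math.exp(-(2.0 ** e))
```

With I = 0.5 and δ = 0.01, for instance, a rate of 0.4 makes the packing bound small, while a rate of 0.6 makes the covering failure probability negligible.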

Appendix B

Outline of the Proof of Theorem 2:

The achievability part follows similarly to that of Theorem 1, the only difference being in the way the codeword is generated. Here, the second encoder generates the codeword by drawing its components i.i.d. conditionally on the quintuple , where the conditional law is induced by (11).

Appendix C

Proof of Theorem 3—Strictly Causal MA-CZIC Outer Bound.

Consider an code with average block error probability , and a probability distribution on given by

For , let , and be the random variables defined by

and let U be the random variable defined by

Further, define Q to be an auxiliary (time-sharing) random variable that is distributed uniformly on the set {1, …, n}, and let

We start with an upper bound on

where follows from the encoding relation in (2).
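The time-sharing variable Q turns a sum of per-letter terms into a single-letter expression: with Q uniform on {1, …, n}, $\frac{1}{n}\sum_i I(X_i;Y_i) = I(X_Q;Y_Q|Q)$. The single-input sketch below computes this average and compares it with $I(X_Q;Y_Q)$, using a hypothetical channel and hypothetical input pmfs rather than the MA-CZIC itself. Since the channel does not depend on the time index, Q → X_Q → Y_Q is a Markov chain, so the average can only be smaller.

```python
import math
from collections import defaultdict

def mutual_information(joint):
    """I(X;Y) in bits for joint = {(x, y): prob}."""
    px, py = defaultdict(float), defaultdict(float)
    for (x, y), p in joint.items():
        px[x] += p
        py[y] += p
    return sum(p * math.log2(p / (px[x] * py[y]))
               for (x, y), p in joint.items() if p > 0)

def per_letter_joint(p_x, channel):
    """Joint pmf of (X_i, Y_i) for input pmf p_x sent through channel[x][y]."""
    return {(x, y): p_x[x] * channel[x][y] for x in p_x for y in channel[x]}

def time_sharing_terms(input_pmfs, channel):
    """Return ((1/n) sum_i I(X_i;Y_i), I(X_Q;Y_Q)) for Q uniform on {1, ..., n}."""
    n = len(input_pmfs)
    joints = [per_letter_joint(p, channel) for p in input_pmfs]
    average = sum(mutual_information(j) for j in joints) / n   # = I(X_Q;Y_Q|Q)
    mixture = defaultdict(float)                               # pmf of (X_Q, Y_Q)
    for j in joints:
        for pair, p in j.items():
            mixture[pair] += p / n
    return average, mutual_information(mixture)

bsc = {0: {0: 0.9, 1: 0.1}, 1: {0: 0.1, 1: 0.9}}   # hypothetical BSC(0.1)
print(time_sharing_terms([{0: 0.5, 1: 0.5}, {0: 0.9, 1: 0.1}], bsc))
```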

Next, consider

where follows from the fact that conditioning decreases entropy, follows from the Markov chain and follows since the channel is memoryless.

Using the Csiszár–Körner identity ([43], Lemma 7), we obtain

where the last equality follows from (A18). Substituting (A19) into (A22), we get

Notice that (A23) implies that there exists a number where

where the right inequality of (A25) follows since and , and the left inequality follows since

From (A21), we have

where follows from (A24) and the definitions of random variables in (A19); follows since the channel is memoryless, and follows from the Markov chain .
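For reference, the Csiszár–Körner (Csiszár sum) identity invoked in (A22) states, in its generic unconditioned form, that for any jointly distributed sequences $A^n$ and $B^n$,
$\sum_{i=1}^{n} I(A_{i+1}^n; B_i \mid B^{i-1}) = \sum_{i=1}^{n} I(B^{i-1}; A_i \mid A_{i+1}^n)$.
The sketch below checks this numerically on a random pmf over binary sequences. This is a sanity check of the generic identity, not of the specific conditioned form used in the proof; the helper names are ours.

```python
import itertools
import math
import random

def random_joint_pmf(num_vars, seed=0):
    """A random strictly positive pmf on {0,1}^num_vars."""
    rng = random.Random(seed)
    outcomes = list(itertools.product((0, 1), repeat=num_vars))
    weights = [rng.random() + 1e-3 for _ in outcomes]
    total = sum(weights)
    return {o: w / total for o, w in zip(outcomes, weights)}

def entropy(pmf, idx):
    """Entropy in bits of the marginal on the variable positions listed in idx."""
    marg = {}
    for outcome, p in pmf.items():
        key = tuple(outcome[i] for i in idx)
        marg[key] = marg.get(key, 0.0) + p
    return -sum(p * math.log2(p) for p in marg.values() if p > 0)

def cmi(pmf, x, y, z):
    """I(X;Y|Z) = H(X,Z) + H(Y,Z) - H(X,Y,Z) - H(Z) for index lists x, y, z."""
    return (entropy(pmf, x + z) + entropy(pmf, y + z)
            - entropy(pmf, x + y + z) - entropy(pmf, z))

def csiszar_sum_sides(n, seed=0):
    """Both sides of the identity; positions 0..n-1 hold A_1..A_n, n..2n-1 hold B_1..B_n."""
    pmf = random_joint_pmf(2 * n, seed)
    a = list(range(n))            # a[i-1] is the position of A_i
    b = list(range(n, 2 * n))     # b[i-1] is the position of B_i
    lhs = sum(cmi(pmf, a[i:], [b[i - 1]], b[:i - 1]) for i in range(1, n + 1))
    rhs = sum(cmi(pmf, b[:i - 1], [a[i - 1]], a[i:]) for i in range(1, n + 1))
    return lhs, rhs

print(csiszar_sum_sides(3))
```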

Next, consider

By conditioning (A22) on we get

and hence

where follows from the encoding relation in (2) and follows since and are Markov chains.

In the same manner, conditioning (A22) on ( yields

Substituting (A30) and (A31) into (A28) we get

where follows since is independent of and therefore , follows from the definitions of random variables in (A19), and follows since the channel is memoryless.

Finally, we consider the sum-rate

where follows from (A22) and (A31), follows since is independent of and and therefore , follows from the definitions of random variables in (A19), and follows since the channel is memoryless.

It remains to show that the joint law of the auxiliary random variables satisfies (20); i.e., we wish to show that the RVs and as chosen in (A18) satisfy

Since , summing this joint law over , and all possible sub-sequences we obtain

From the memorylessness of the channel we get

From the memoryless property of the channel we may write

Appendix D

Outline of the proof of Theorem 4—Causal MA-CZIC Outer Bound:

The outer bound for the causal MA-CZIC follows similarly to that of Theorem 3. Consider an code with average block error probability , and a probability distribution on given by

Appendix E

Proof of Theorem 5—More Capable Channel—Strictly Causal Case.

Proof of the Converse Part: For , let be defined as in (A18). Define Q to be an auxiliary random variable that is distributed uniformly on the set {1, …, n}, and let be defined as in (A19).

In the derivation above, (b) follows since conditioning reduces entropy, and (c) follows since is a Markov chain.

Next, consider the sum-rate

where the reasoning for steps (a)–(c) is as in (A48).

Finally, clearly

Since in this case the only auxiliary random variable used is V, defined as in (A19), and (27) is a special case of (10), it follows that V satisfies (27).

Proof of the Direct Part: It is easy to verify that the region in (9a)–(9d) contains the region in (25a)–(25d). To see this, set and . Hence, in (9b) and (9c) we get and , and both inequalities coincide with (25b) and (25c), respectively. The inequality (9a) remains as it is, and (9d) becomes since for in the more-capable case. Hence, since the p.m.f. in (27) is a special case of the probability mass function in (10), the region (25a)–(25d) is achievable, which concludes the proof of Theorem 5. ☐

Appendix F

Outline of the Proof of Theorem 7:

The achievability proof is based on a random coding scheme combined with superposition coding, rate splitting, and Gel’fand–Pinsker binning. However, since no cribbing is involved and Encoder 2 has full knowledge of , there is no need for Block–Markov coding and backward decoding, and the coding scheme is simpler than the one used in the proof of Theorem 1. In what follows, we sketch the main elements of the encoding and decoding procedures and provide an intuitive explanation for the proposed choices.

For a distribution satisfying (37), User 3 uses the same rate-splitting coding technique as in Appendix A. It encodes one part of by an inner codebook represented by L and the second part by an outer codebook represented by , where the inner codebook can be decoded by both decoders. Now, User 1 may transmit at rate . The cognitive user, User 2, relying on the fact that L and were decoded by the main decoder, bins U against , thereby transmitting at rate . The information sent by Encoder 2 at rate may then be split between the private message and the common message in such a manner that

thus establishing (36a)–(36c).
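In the binning step, the rate available to User 2 has the familiar Gel'fand–Pinsker form: mutual information with the output minus mutual information with the interference it bins against. As a hedged sketch, the helper below evaluates the unconditioned expression I(U;Y) − I(U;S) for a given joint pmf, with S standing for whatever Encoder 2 bins against. In Theorem 7 the terms are presumably conditioned on the layers decoded by the main receiver, which this toy omits; the names are ours.

```python
import math
from collections import defaultdict

def marginal(joint, idx):
    """Marginal pmf on the coordinates listed in idx."""
    out = defaultdict(float)
    for outcome, p in joint.items():
        out[tuple(outcome[i] for i in idx)] += p
    return out

def mutual_info(joint, a, b):
    """I(A;B) in bits; a and b are disjoint lists of coordinate indices."""
    pa, pb, pab = marginal(joint, a), marginal(joint, b), marginal(joint, a + b)
    total = 0.0
    for key, p in pab.items():
        if p > 0:
            total += p * math.log2(p / (pa[key[:len(a)]] * pb[key[len(a):]]))
    return total

def gp_rate(joint_usy):
    """Gel'fand-Pinsker binning rate I(U;Y) - I(U;S) for joint = {(u, s, y): prob}."""
    return mutual_info(joint_usy, [0], [2]) - mutual_info(joint_usy, [0], [1])
```

Two sanity cases illustrate the two extremes:

- If U is independent of S and reaches the output noiselessly, no rate is lost to binning.
- If U simply copies S, the whole rate is consumed.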

Appendix G

Outline of the proof of Theorem 8:

Let the RVs be defined as in (A18)–(A19) with the exception of . We start by bounding as in (A27), thus getting

Next, consider

Now, consider the sum-rate

Appendix H

Proof of Theorem 9—Partial Cribbing MA-CZIC Inner Bound.

We introduce the following coding scheme, based on the coding scheme of Appendix A. The difference from Appendix A is that Encoder 1 now uses rate splitting in addition to Block–Markov superposition coding. Encoders 2 and 3 use the same coding scheme as in Appendix A. Since the analysis of the average probability of error is very similar to that of Appendix A, for brevity we omit it, as well as the parts of the scheme that are identical to Appendix A. For a fixed distribution, the coding schemes are as follows:

Encoder 3 and Decoder 3 Coding Scheme: Same as in Appendix A.

Encoder 1 Coding Scheme: We consider B blocks, each of n symbols. A sequence of message pairs for , will be transmitted during B transmission blocks.

Encoder 1 Codebook generation: Encoder 1 splits its message into two independent parts , with rates and respectively. Generate codewords , each with probability . These codewords constitute the inner codebook of Transmitter 1. Denote them as , where . For each codeword generate codewords , each with probability . These codewords, , constitute the outer codebook of Transmitter 1 associated with . Denote them as where is as before, representing the index of the codeword in the inner codebook and the index of the codeword in the associated outer codebook. Finally, for each pair , generate codewords each with probability . Denote them as .

Encoding Scheme of Encoder 1: Given , where , for , we define for .

In block 1 Encoder 1 sends

in block Encoder 1 sends

and in block B Encoder 1 sends

Encoder 2 Coding Scheme: Same as in Appendix A, where the index is now replaced with , and , unknown at Encoder 2, is replaced with .
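With partial cribbing, Encoder 2 sees only a deterministic function of Encoder 1's input, so codewords with the same image are indistinguishable to it. This is consistent with the rate splitting used by Encoder 1 above, where only part of its message is meant to be learned by Encoder 2. The toy sketch below assumes a ternary input and a hypothetical cribbing function `f` that reveals only whether a symbol is nonzero; the names are illustrative.

```python
def f(x):
    """Hypothetical cribbing function: Encoder 2 only sees whether the symbol is nonzero."""
    return 1 if x != 0 else 0

def crib_candidates(codebook, observed_z):
    """Indices m whose codeword x1^n(m) maps symbol-wise under f onto observed_z."""
    target = tuple(observed_z)
    return [m for m, xs in codebook.items() if tuple(f(x) for x in xs) == target]

codebook = {0: (0, 1, 2), 1: (0, 2, 1), 2: (1, 0, 0)}   # hypothetical ternary codebook
print(crib_candidates(codebook, (0, 1, 1)))              # [0, 1]: ambiguous at Encoder 2
```

Here codewords 0 and 1 share the image (0, 1, 1), so Encoder 2 can resolve only the information carried by the image sequence itself.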

Decoding at the primary receiver: After receiving all B blocks, the decoder uses backward decoding, starting from block B and moving downward to block 1. In block B, the receiver looks for such that

for some , where .

In block b, assuming that backward decoding has been carried out down to (and including) block b+1, the receiver has already decoded the indices of those blocks. Then, to decode block b, the receiver looks for such that

for some , where .

Decoding at Encoder 2: To obtain cooperation, after block , Encoder 2 chooses such that

where was determined at the end of block and .

At each of the decoders, if a decoding step either finds no index (or index pair) satisfying the decoding rule or finds more than one, an index (or index pair) is chosen at random among the candidates. ☐

Appendix I

Proof of Theorem 11—Partial Cribbing MA-CZIC Outer Bound.

Let the RVs be defined as in (A18)–(A19). In addition, define and to be

accordingly, define as follows

We start with an upper bound on

where follows from the encoding relation and the fact that is a deterministic function of . Step follows from the identity (A22). Step follows from the fact that conditioning decreases entropy and that is independent of the triplet ). Step follows from the Markov chains and .

Next, we bound as in Appendix C. We get

We continue to bound as in Appendix C. It is easy to see that the bound for the MA-CZIC with full strictly-causal cribbing must also bound the MA-CZIC with partial cribbing. Hence, we get

Finally, we consider the sum-rate . As in Appendix C, the bound for the MA-CZIC with full strictly-causal cribbing must also bound the MA-CZIC with partial cribbing. Hence, we get

A second bound on the sum-rate is obtained as follows

where follows from the encoding relation and the fact that is a deterministic function of . Step follows from the identity (A22). Step follows from the fact that is independent of the triplet ). Step follows from the fact that conditioning reduces entropy and from the Markov chains

Now, similarly to Appendix C, we use (A56)–(A60) and the time-sharing RV Q to derive the outer bound. ☐

References

- Ahlswede, R. Multi-way communication channels. In Proceedings of the Second International Symposium on Information Theory, Tsahkadsor, Armenia, USSR, 2–8 September 1971; pp. 23–52.

- Liao, H. Multiple Access Channels. Ph.D. Thesis, Department of Electrical Engineering, University of Hawaii, Honolulu, HI, USA, 1972.

- Shannon, C.E. Two-way communication channels. In Proceedings of the 4th Berkeley Symposium on Mathematical Statistics and Probability, Statistical Laboratory of the University of California, Berkeley, CA, USA, 20 June–30 July 1960; University of California Press: Berkeley, CA, USA, 1961; Volume 1, pp. 611–644.

- Ahlswede, R. The capacity region of a channel with two senders and two receivers. Ann. Probab. 1974, 2, 805–814.

- Han, T.; Kobayashi, K. A new achievable rate region for the interference channel. IEEE Trans. Inf. Theory 1981, 27, 49–60.

- Chong, H.F.; Motani, M.; Garg, H.; El Gamal, H. On the Han-Kobayashi Region for the Interference Channel. IEEE Trans. Inf. Theory 2008, 54, 3188–3195.

- Carleial, A.B. Interference channels. IEEE Trans. Inf. Theory 1978, IT-24, 60–70.

- Sason, I. On achievable rate regions for the Gaussian interference channel. IEEE Trans. Inf. Theory 2004, 50, 1345–1356.

- Kramer, G. Review of rate regions for interference channels. In Proceedings of the International Zurich Seminar on Communications, Zurich, Switzerland, 2–4 March 2006; pp. 152–165.

- Etkin, R.; Tse, D.; Wang, H. Gaussian Interference Channel Capacity to within One Bit. IEEE Trans. Inf. Theory 2008, 54, 5534–5562.

- Telatar, E.; Tse, D. Bounds on the capacity region of a class of interference channels. In Proceedings of the IEEE International Symposium on Information Theory (ISIT), Nice, France, 24–29 June 2007.

- Maric, I.; Goldsmith, A.; Kramer, G.; Shamai (Shitz), S. On the capacity of interference channels with one cooperating transmitter. Eur. Trans. Telecommun. 2008, 19, 329–495.

- El Gamal, A.; Kim, Y.H. Network Information Theory; Cambridge University Press: Cambridge, UK, 2012; ISBN 1-107-00873-1.

- El Gamal, A.; Costa, M. The capacity region of a class of deterministic interference channels (Corresp.). IEEE Trans. Inf. Theory 1982, 28, 343–346.

- Goldsmith, A.; Jafar, S.A.; Maric, I.; Srinivasa, S. Breaking Spectrum Gridlock with Cognitive Radios: An Information Theoretic Perspective. Proc. IEEE 2009, 97, 894–914.

- Jovicic, A.; Viswanath, P. Cognitive Radio: An Information-Theoretic Perspective. IEEE Trans. Inf. Theory 2009, 55, 3945–3958.

- Wang, B.; Liu, K.J.R. Advances in cognitive radio networks: A survey. IEEE J. Sel. Top. Signal Process. 2011, 5, 5–23.

- Zhao, Q.; Sadler, B.M. A Survey of Dynamic Spectrum Access. IEEE Signal Process. Mag. 2007, 3, 79–89.

- Guzzon, E.; Benedetto, F.; Giunta, G. Performance Improvements of OFDM Signals Spectrum Sensing in Cognitive Radio. In Proceedings of the 2012 IEEE Vehicular Technology Conference (VTC Fall), Quebec City, QC, Canada, 3–6 September 2012; Volume 5, pp. 1–5.

- Devroye, N.; Mitran, P.; Tarokh, V. Achievable rates in cognitive radio channels. IEEE Trans. Inf. Theory 2006, 52, 1813–1827.

- Rini, S.; Kurniawan, E.; Goldsmith, A. Primary Rate-Splitting Achieves Capacity for the Gaussian Cognitive Interference Channel. arXiv, 2012; arXiv:1204.2083.

- Rini, S.; Kurniawan, E.; Goldsmith, A. Combining superposition coding and binning achieves capacity for the Gaussian cognitive interference channel. In Proceedings of the 2012 IEEE Information Theory Workshop (ITW), Lausanne, Switzerland, 3–7 September 2012; pp. 227–231.

- Wu, Z.; Vu, M. Partial Decode-Forward Binning for Full-Duplex Causal Cognitive Interference Channels. In Proceedings of the IEEE International Symposium on Information Theory (ISIT), Cambridge, MA, USA, 1–6 July 2012; pp. 1331–1335.

- Duan, R.; Liang, Y. Bounds and Capacity Theorems for Cognitive Interference Channels with State. IEEE Trans. Inf. Theory 2015, 61, 280–304.

- Rini, S.; Huppert, C. On the Capacity of the Cognitive Interference Channel with a Common Cognitive Message. IEEE Trans. Inf. Theory 2015, 26, 432–447.

- Willems, F.; van der Meulen, E. The discrete memoryless multiple-access channel with cribbing encoders. IEEE Trans. Inf. Theory 1985, IT-31, 313–327.

- Bross, S.; Lapidoth, A. The state-dependent multiple-access channel with states available at a cribbing encoder. In Proceedings of the 2010 IEEE 26th Convention of Electrical and Electronics Engineers in Israel (IEEEI), Eilat, Israel, 17–20 November 2010; pp. 665–669.

- Permuter, H.H.; Asnani, H. Multiple Access Channel with Partial and Controlled Cribbing Encoders. IEEE Trans. Inf. Theory 2013, 59, 2252–2266.

- Somekh-Baruch, A.; Shamai (Shitz), S.; Verdú, S. Cooperative Multiple-Access Encoding With States Available at One Transmitter. IEEE Trans. Inf. Theory 2008, 54, 4448–4469.

- Kopetz, T.; Permuter, H.H.; Shamai (Shitz), S. Multiple Access Channels With Combined Cooperation and Partial Cribbing. IEEE Trans. Inf. Theory 2016, 62, 825–848.

- Kolte, R.; Özgür, A.; Permuter, H. Cooperative Binning for Semideterministic Channels. IEEE Trans. Inf. Theory 2016, 62, 1231–1249.

- Permuter, H.H.; Shamai (Shitz), S.; Somekh-Baruch, A. Message and State Cooperation in Multiple Access Channels. IEEE Trans. Inf. Theory 2011, 57, 6379–6396.

- Mokari, N.; Saeedi, H.; Navaie, K. Channel Coding Increases the Achievable Rate of the Cognitive Networks. IEEE Commun. Lett. 2013, 17, 495–498.

- Passiatore, C.; Camarda, P. A P2P Resource Sharing Algorithm (P2P-RSA) for 802.22b Networks. In Proceedings of the 3rd International Conference on Context-Aware Systems and Applications, Dubai, UAE, 15–16 October 2014.

- Costa, M.H.M. Writing on dirty paper. IEEE Trans. Inf. Theory 1983, 29, 439–441.

- Liu, N.; Maric, I.; Goldsmith, A.; Shamai (Shitz), S. Capacity Bounds and Exact Results for the Cognitive Z-Interference Channel. IEEE Trans. Inf. Theory 2013, 59, 886–893.

- Rini, S.; Tuninetti, D.; Devroye, N. Inner and Outer bounds for the Gaussian cognitive interference channel and new capacity results. IEEE Trans. Inf. Theory 2012, 58, 820–848.

- Shimonovich, J.; Somekh-Baruch, A.; Shamai (Shitz), S. Cognitive cooperative communications on the Multiple Access Channel. In Proceedings of the 2013 IEEE Information Theory Workshop (ITW), Sevilla, Spain, 9–13 September 2013; pp. 1–5.

- Shimonovich, J.; Somekh-Baruch, A.; Shamai (Shitz), S. Cognitive aspects in a Multiple Access Channel. In Proceedings of the 2012 IEEE 27th Convention of Electrical and Electronics Engineers in Israel (IEEEI), Eilat, Israel, 14–17 November 2012; pp. 1–3.

- Cover, T.; Thomas, J. Elements of Information Theory, 1st ed.; Wiley: Hoboken, NJ, USA, 1991.

- Gel’fand, S.I.; Pinsker, M.S. Coding for Channels with Random Parameters. Probl. Contr. Inf. Theory 1980, 9, 19–31.

- Zaidi, A.; Kotagiri, S.; Laneman, J.; Vandendorpe, L. Cooperative Relaying with State Available Noncausally at the Relay. IEEE Trans. Inf. Theory 2010, 56, 2272–2298.

- Csiszár, I.; Körner, J. Broadcast channels with confidential messages. IEEE Trans. Inf. Theory 1978, 24, 339–348.

- Kotagiri, S.; Laneman, J.N. Multiple Access Channels with State Information Known at Some Encoders. EURASIP J. Wireless Commun. Netw. 2008, 2008.

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).