Abstract

The explicit form of the rate-distortion function has rarely been obtained, except for a few cases where the Shannon lower bound coincides with the rate-distortion function for the entire range of the positive rate. From an information geometrical point of view, the evaluation of the rate-distortion function is achieved by a projection to the mixture family defined by the distortion measure. In this paper, we consider the γ-th power distortion measure, and prove that the γ-generalized Gaussian distribution is the only source that can make the Shannon lower bound tight at the minimum distortion level at zero rate. We demonstrate that the tightness of the Shannon lower bound for γ = 1 (Laplacian source) and γ = 2 (Gaussian source) yields upper bounds to the rate-distortion function of power distortion measures with a different power. These bounds evaluate from above the projection of the source distribution to the mixture family of the generalized Gaussian models. Applying similar arguments to ϵ-insensitive distortion measures, we consider the tightness of the Shannon lower bound and derive an upper bound to the distortion-rate function which is accurate at low rates.

1. Introduction

The rate-distortion function, $R(D)$, shows the minimum achievable rate to reproduce source outputs with the expected distortion not exceeding D. The Shannon lower bound (SLB) has been used for evaluating $R(D)$ [1,2]. The tightness of the SLB for the entire range of the positive rate identifies the entire $R(D)$ for pairs of a source and distortion measure such as the Gaussian source with squared distortion [1], the Laplacian source with absolute magnitude distortion [2], and the gamma source with Itakura–Saito distortion [3]. However, such pairs are rare examples. In fact, for a fixed distortion measure, there exists at most one source (up to translation) that makes the SLB tight for all D, as we will prove in Section 2.3. The necessary and sufficient condition for the tightness of the SLB was first obtained for the squared distortion [4], discussed for a general difference distortion measure d [2], and recently described in terms of d-tilted information [5]. While these results consider the tightness of the SLB for each point of $R(D)$ (i.e., for each D), in this paper we discuss the tightness for all D. More specifically, if we focus on the minimum distortion at zero rate (denoted by $D_{\max}$), the tightness of the SLB at $D_{\max}$ characterizes a condition relating the source density and the distortion measure.

If the SLB is not tight, the explicit evaluation of $R(D)$ has been obtained only in limited cases [6,7,8,9]. Little can be inferred about the behavior of $R(D)$ when the distortion measure is varied from a known case, since the explicit form of $R(D)$ does not change continuously even if the distortion measure is continuously modified. Although the SLB is easily obtained for difference distortion measures, it is unknown how accurate it is without an explicit evaluation, an upper bound, or a numerical calculation of the rate-distortion function.

In this paper, we consider the constrained optimization in the definition of $R(D)$ from an information geometrical viewpoint [10]. More specifically, we show that it is equivalent to a projection of the source distribution to the mixture family defined by the distortion measure. If the source is included in the mixture family, the SLB is tight; if it is not tight, the gap between $R(D)$ and its SLB bounds from above the minimum Kullback–Leibler divergence from the source to the mixture family (Lemma 1). Then, using the bounds of the rate-distortion function of the γ-th power difference distortion measure obtained in [11], we evaluate the projections of the source distribution to the mixture families associated with this distortion measure (Theorem 3).

Operational rate-distortion results have been obtained for the uniform scalar quantization of the generalized Gaussian source under the γ-th power distortion measure [12,13]. We prove that only the γ-generalized Gaussian distribution can be the source whose SLB is tight, that is, identical to the rate-distortion function for the entire range of positive rate. This fact sheds light on the tightness of the SLB of an ϵ-insensitive distortion measure, which is obtained by truncating the loss function near zero error [14,15,16]. The above result implies that the SLB is not tight if the source is the β-generalized Gaussian and the distortion has another power γ ≠ β. We demonstrate that even in such a case, a novel upper bound to $R(D)$ can be derived from the condition for the tightness of the SLB. Specifically, the fact that the Laplacian (β = 1) and the Gaussian (β = 2) sources have a tight SLB yields a novel upper bound to $R(D)$ of the γ-th power distortion measure, which has a constant gap from the SLB for all D. By the relationship between the SLB and the projection in information geometry, the gap evaluates the projections of the β-generalized Gaussian source to the mixture families of γ-generalized Gaussian models. Extending the above argument to the ϵ-insensitive loss, we derive an upper bound to the distortion-rate function, which is tight in the limit of zero rate.

2. Rate-Distortion Function and Shannon Lower Bound

2.1. Rate-Distortion Function

Let X and Y be real-valued random variables of a source output and reconstruction, respectively. For the distortion measure $d(x,y)$ between x and y, the rate-distortion function of the source with density $p(x)$ is defined by

$$R(D) = \inf_{q(y|x):\ E[d(X,Y)] \le D} I(q), \tag{1}$$

where

$$I(q) = \iint p(x)\,q(y|x)\log\frac{q(y|x)}{\int p(x')\,q(y|x')\,dx'}\,dx\,dy$$

is the mutual information and E denotes the expectation with respect to $p(x)q(y|x)$. $R(D)$ shows the minimum achievable rate R to reconstruct source outputs with average distortion not exceeding D under the distortion measure d [2,17]. The distortion-rate function, $D(R)$, is the inverse function of the rate-distortion function.

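If the conditional distribution $q_s(y|x)$ achieves the minimum of the following Lagrange function parameterized by $s \ge 0$,

$$I(q) + s\,E[d(X,Y)],$$

then the rate-distortion function is parametrically given by

$$R(D_s) = I(q_s), \qquad D_s = E[d(X,Y)] \ \text{ under } p(x)q_s(y|x).$$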
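The parameter s corresponds to the (negated) slope of the tangent of $R(D)$ at $D = D_s$, and hence is referred to as the slope parameter [2]. Alternatively, the rate-distortion function is given by ([18], Theorem 4.5.1):

$$R(D) = \sup_{s \ge 0}\left\{-sD + \min_{q(y)}\left[-\int p(x)\log\int e^{-s\,d(x,y)}\,q(y)\,dy\,dx\right]\right\}. \tag{2}$$

If the marginal reconstruction density $q_s(y)$ achieves the minimum above, the optimal conditional reconstruction distribution is given by

$$q_s(y|x) = \frac{e^{-s\,d(x,y)}\,q_s(y)}{\int e^{-s\,d(x,y')}\,q_s(y')\,dy'} \tag{3}$$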
(see, for example, [2,19]).
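The fixed-point relations above also suggest a numerical procedure. The sketch below is a Blahut–Arimoto-style iteration (a standard algorithm, not a method of this paper) that alternates between the optimal conditional distribution (3) for a fixed marginal and the marginal it induces, on a discretized grid. The grid, the function name `blahut_arimoto`, and the unit-variance Gaussian test source are illustrative assumptions; rates are in nats.

```python
import math

def blahut_arimoto(p, xs, ys, dist, s, iters=300):
    """Approximate (D_s, R(D_s)) for slope parameter s on finite grids.

    p    : source probabilities on the grid xs (summing to 1)
    ys   : reconstruction grid
    dist : distortion function d(x, y)
    Returns the distortion D_s and the rate in nats.
    """
    A = [[math.exp(-s * dist(x, y)) for y in ys] for x in xs]  # exp(-s d(x, y))
    q = [1.0 / len(ys)] * len(ys)                               # initial marginal q(y)
    for _ in range(iters):
        z = [sum(a * qj for a, qj in zip(row, q)) for row in A]  # normaliser of q_s(.|x)
        w = [pi / zi for pi, zi in zip(p, z)]
        # New marginal: q(y) <- sum_x p(x) q_s(y|x), where q_s(y|x) = A[x][y] q(y) / z(x)
        q = [qj * sum(w[i] * A[i][j] for i in range(len(xs))) for j, qj in enumerate(q)]
    z = [sum(a * qj for a, qj in zip(row, q)) for row in A]
    D = R = 0.0
    for i, x in enumerate(xs):
        for j, y in enumerate(ys):
            cond = A[i][j] * q[j] / z[i]          # q_s(y|x)
            if cond > 0.0:
                D += p[i] * cond * dist(x, y)
                R += p[i] * cond * math.log(cond / q[j])
    return D, R

# Illustration: unit-variance Gaussian source, squared error, slope s = 2.
h = 0.2
xs = [h * k for k in range(-25, 26)]                 # grid on [-5, 5]
weights = [math.exp(-x * x / 2.0) for x in xs]
total = sum(weights)
p = [v / total for v in weights]
D, R = blahut_arimoto(p, xs, xs, lambda x, y: (x - y) ** 2, s=2.0)
print(f"D = {D:.4f}, R = {R:.4f} nats, 0.5*log(1/D) = {0.5 * math.log(1.0 / D):.4f}")
```

Because the SLB is tight for this pair, the computed rate should be close to $\frac12\log(1/D)$ with $D \approx 1/(2s) = 0.25$; a finer or wider grid trades run time for accuracy.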

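From the properties of the rate-distortion function $R(D)$, we know that $R(D) > 0$ for $0 < D < D_{\max}$, where

$$D_{\max} = \min_{y} E[d(X,y)], \tag{4}$$

and $R(D) = 0$ for $D \ge D_{\max}$ [2] (p. 90). Hence, $R(D_{\max}) = 0$.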
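As a small numerical illustration (not taken from the paper), $D_{\max}$ in (4) can be approximated by evaluating $E[d(X,y)]$ on a set of candidate reconstructions y. The sketch assumes a unit-scale Laplacian source $p(x) = \tfrac12 e^{-|x|}$, for which one expects $D_{\max} = 2$ (the variance) under squared error and $D_{\max} = 1$ (the mean absolute value) under absolute error, both attained at y = 0. The helper names and the candidate grid are hypothetical.

```python
import math

def expected_distortion(pdf, d, y, lo=-40.0, hi=40.0, n=8000):
    """Trapezoid-rule estimate of E[d(X, y)] = integral of pdf(x) d(x, y) dx."""
    h = (hi - lo) / n
    total = 0.5 * (pdf(lo) * d(lo, y) + pdf(hi) * d(hi, y))
    for k in range(1, n):
        x = lo + k * h
        total += pdf(x) * d(x, y)
    return total * h

def d_max(pdf, d, candidates):
    """Smallest expected distortion over the candidate reconstructions, with its argmin."""
    return min((expected_distortion(pdf, d, y), y) for y in candidates)

laplace = lambda x: 0.5 * math.exp(-abs(x))      # unit-scale Laplacian density
candidates = [0.05 * k for k in range(-20, 21)]  # y in [-1, 1]
dmax_sq, y_sq = d_max(laplace, lambda x, y: (x - y) ** 2, candidates)
dmax_abs, y_abs = d_max(laplace, lambda x, y: abs(x - y), candidates)
print(dmax_sq, y_sq, dmax_abs, y_abs)
```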

2.2. Shannon Lower Bound

In this paper, we focus on difference distortion measures,

$$d(x,y) = \rho(x-y), \tag{5}$$

for which Shannon derived a general lower bound to the rate-distortion function [1] ([2], Chapter 4).

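Throughout this paper, we assume that the function ρ is nonnegative and satisfies

$$Z(s) = \int e^{-s\rho(z)}\,dz < \infty \tag{6}$$

for all $s > 0$. It follows that

$$\int \rho(z)\,e^{-s\rho(z)}\,dz < \infty$$

for all $s > 0$.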

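Let

$$D(p\|r) = \int p(x)\log\frac{p(x)}{r(x)}\,dx$$

denote the Kullback–Leibler divergence from p to r, which is non-negative and equal to zero if and only if $p = r$ almost everywhere. We define the distribution

$$g_s(z) = \frac{e^{-s\rho(z)}}{\int e^{-s\rho(z')}\,dz'}. \tag{7}$$

Then, the Shannon lower bound (SLB) is defined by

$$R_L(D) = h(p) - h(g_s), \tag{8}$$

where $h(p) = -\int p(x)\log p(x)\,dx$ is the differential entropy of the probability density p, and s is related to D by

$$D = \int \rho(z)\,g_s(z)\,dz. \tag{9}$$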
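For a given ρ, the SLB (8) only requires the normalizer of $g_s$, the distortion (9), and $h(g_s)$, which equals $sD + \log\int e^{-s\rho(z)}\,dz$ because $-\log g_s(z) = s\rho(z) + \log\int e^{-s\rho(z')}\,dz'$. The sketch below evaluates these by the trapezoid rule. The integration range, the helper names, and the unit-scale Laplacian example (entropy $1 + \log 2$ nats) are illustrative assumptions.

```python
import math

def trapezoid(f, lo, hi, n=20000):
    """Composite trapezoid rule on [lo, hi] with n subintervals."""
    h = (hi - lo) / n
    return h * (0.5 * f(lo) + 0.5 * f(hi) + sum(f(lo + k * h) for k in range(1, n)))

def slb_point(h_p, rho, s, lo=-50.0, hi=50.0):
    """Return (D, R_L) in nats for slope s, following (7)-(9).

    h_p : differential entropy of the source (nats); rho : difference distortion.
    """
    Z = trapezoid(lambda z: math.exp(-s * rho(z)), lo, hi)             # normaliser of g_s
    D = trapezoid(lambda z: rho(z) * math.exp(-s * rho(z)), lo, hi) / Z
    h_g = s * D + math.log(Z)                                         # entropy of g_s
    return D, h_p - h_g

h_laplace = 1.0 + math.log(2.0)      # entropy of the density exp(-|x|)/2, in nats
D_sq, RL_sq = slb_point(h_laplace, lambda z: z * z, s=2.0)   # squared error
D_abs, RL_abs = slb_point(h_laplace, abs, s=1.0)             # absolute error
print(D_sq, RL_sq, D_abs, RL_abs)
```

For squared error the Gaussian closed form $h(g_s) = \frac12\log(2\pi e D)$ is recovered; for absolute error at s = 1 the point is $D = D_{\max} = 1$, where the matched Laplacian SLB equals zero.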
The next lemma shows that the SLB is in fact a lower bound to the rate-distortion function and that the difference between them is lower bounded by the Kullback–Leibler divergence.

Lemma 1.

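For a source with probability density function $p(x)$ and the difference distortion measure (5),

$$R(D) - R_L(D) \ge \min_{q} D(p\,\|\,g_s * q) \ge 0, \tag{10}$$

where s and D are related to each other by (9) and

$$(g_s * q)(x) = \int g_s(x-y)\,q(y)\,dy$$

is the convolution between $g_s$ and q.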

Proof.
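A sketch of the argument, written out from the dual expression for $R(D)$ in Section 2.1 and the definitions (7)–(9); it is a reconstruction, and the original proof may be organized differently. Let $Z(s) = \int e^{-s\rho(z)}\,dz$, so that $\int e^{-s\rho(x-y)}q(y)\,dy = Z(s)\,(g_s*q)(x)$, $-\int p(x)\log (g_s*q)(x)\,dx = h(p) + D(p\,\|\,g_s*q)$, and $h(g_s) = sD + \log Z(s)$ when s and D are related by (9). Fixing s instead of taking the supremum over it in the dual expression gives

$$
\begin{aligned}
R(D) &\ge -sD + \min_{q}\left[-\int p(x)\log\big(Z(s)\,(g_s*q)(x)\big)\,dx\right] \\
&= -sD - \log Z(s) + h(p) + \min_{q} D(p\,\|\,g_s*q) \\
&= h(p) - h(g_s) + \min_{q} D(p\,\|\,g_s*q) \\
&= R_L(D) + \min_{q} D(p\,\|\,g_s*q).
\end{aligned}
$$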

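In information geometry, for a family of distributions $\mathcal{M}$ and a given distribution p, the distributions that achieve the minima

$$\min_{r \in \mathcal{M}} D(p\|r) \quad \text{and} \quad \min_{r \in \mathcal{M}} D(r\|p)$$

are called the m-projection and e-projection of p to $\mathcal{M}$, respectively [10]. As a family of distributions, the $(k-1)$-dimensional mixture family spanned by $r_1, \dots, r_k$ is defined by

$$\mathcal{M} = \left\{\sum_{i=1}^{k}\theta_i r_i(x) : \theta_i \ge 0,\ \sum_{i=1}^{k}\theta_i = 1\right\}.$$

Hence, from the information geometrical viewpoint, the above lemma shows that the difference between $R(D)$ and $R_L(D)$ evaluates the m-projection

$$\min_{q} D(p\,\|\,g_s * q)$$

of the source distribution p to

$$\mathcal{M}_s = \left\{ g_s * q : q \text{ is a probability density} \right\},$$

the (infinite-dimensional) mixture family defined by $g_s$.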

It is also easy to see from the lemma that the SLB coincides with $R(D)$ (that is, $R(D) = R_L(D)$) if and only if the source random variable X with density $p(x)$ can be represented as the sum of two independent random variables, one of which is distributed according to the probability density function $g_s$ in (7). This condition is referred to as the “backward channel” condition, and is equivalent to the fact that the integral equation

$$p(x) = \int g_s(x-y)\,q(y)\,dy \tag{11}$$

has a solution q which is a valid density function ([2], Chapter 4). This condition is also equivalent to $\min_{q} D(p\,\|\,g_s * q) = 0$.
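To illustrate the m-projection numerically, the sketch below discretizes the mixture family $\{g_s*q\}$ on a lattice and minimizes $D(p\,\|\,g_s*q)$ over the mixing weights q using the standard EM-type multiplicative update for mixture weights (a Richardson–Lucy-type deconvolution); this solver is our choice, not a method from the paper. It takes ρ(z) = z² with s = 2, so $g_s$ is Gaussian with variance 1/4. A unit-variance Gaussian source lies in the family (it is $g_s$ convolved with a Gaussian of variance 3/4), so its divergence should be essentially zero; a unit-scale Laplacian source does not satisfy (11) for this kernel, and its divergence stays positive. The lattice, iteration count, and function names are assumptions.

```python
import math

def m_projection(p, comps, iters=200):
    """Minimise D(p || sum_j q_j comps[j]) over probability vectors q; returns nats.

    p     : target probabilities on a lattice
    comps : list of component probability vectors on the same lattice
    """
    n = len(p)
    q = [1.0 / len(comps)] * len(comps)
    for _ in range(iters):
        r = [0.0] * n
        for qj, c in zip(q, comps):              # mixture r = sum_j q_j c_j
            for i in range(n):
                r[i] += qj * c[i]
        ratio = [pi / ri for pi, ri in zip(p, r)]
        # Multiplicative (EM) update: keeps q normalised and never increases D(p || r)
        q = [qj * sum(ci * wi for ci, wi in zip(c, ratio)) for qj, c in zip(q, comps)]
    r = [sum(qj * c[i] for qj, c in zip(q, comps)) for i in range(n)]
    return sum(pi * math.log(pi / ri) for pi, ri in zip(p, r) if pi > 0.0)

def normalise(values):
    total = sum(values)
    return [v / total for v in values]

h = 0.2
xs = [h * k for k in range(-30, 31)]                     # lattice on [-6, 6]
# Component j: g_s(x - y_j) with rho(z) = z**2 and s = 2, normalised on the lattice
comps = [normalise([math.exp(-2.0 * (x - y) ** 2) for x in xs]) for y in xs]
kl_gauss = m_projection(normalise([math.exp(-x * x / 2.0) for x in xs]), comps)
kl_laplace = m_projection(normalise([math.exp(-abs(x)) for x in xs]), comps)
print(kl_gauss, kl_laplace)
```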

2.3. Probability Density Achieving Tight SLB for All D

The following theorem claims that, for a difference distortion measure, there is at most one source (up to translation) for which the SLB is tight at $D_{\max}$.

Theorem 1.

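Assume that the source distribution has a finite $D_{\max}$ achieved by a reconstruction μ; that is, $D_{\max} = E[\rho(X-\mu)]$. The rate-distortion function is strictly greater than the SLB at $D_{\max}$, $R(D_{\max}) > R_L(D_{\max})$, unless the following holds for the source density $p(x)$ almost everywhere:

$$p(x) = \frac{e^{-s^*\rho(x-\mu)}}{Z(s^*)}, \tag{12}$$

where $Z(s)$ is defined in (6), and $s^*$ is determined by the relation

$$D_{\max} = \frac{1}{Z(s^*)}\int \rho(z)\,e^{-s^*\rho(z)}\,dz. \tag{13}$$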
Proof.

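Let Z be the random variable such that $\mu + Z$ has the density $p(x)$. As a functional of the source density $p(x)$, the SLB at $D_{\max}$ is expressed as

$$R_L(D_{\max}) = h(p) - h(g_{s^*}) = -D\big(p \,\|\, g_{s^*}(\cdot - \mu)\big),$$

where the second equality uses $h(g_{s^*}) = s^* D_{\max} + \log\int e^{-s^*\rho(z)}\,dz = -\int p(x)\log g_{s^*}(x-\mu)\,dx$. From the non-negativity of the divergence, $R_L(D_{\max})$ is maximized to $0 = R(D_{\max})$ only if $p(x) = g_{s^*}(x-\mu)$ holds almost everywhere. ☐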
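For a mismatched pair the proof gives a concrete number. Take a Laplacian source with a (hypothetical) scale b under squared error: $D_{\max}$ is its variance $2b^2$, attained at μ = 0, and $g_{s^*}$ is the Gaussian density with variance $D_{\max}$. The sketch below checks numerically that $R_L(D_{\max}) = h(p) - h(g_{s^*})$ equals $-D(p\,\|\,g_{s^*})$, which works out to $-\tfrac12\log(\pi/e) < 0$ nats, so the SLB is not tight at $D_{\max}$, in line with Theorem 1.

```python
import math

b = 1.0                                   # hypothetical Laplacian scale
v = 2.0 * b * b                           # D_max = variance, attained at mu = 0

def log_p(x):                             # Laplacian source, density exp(-|x|/b)/(2b)
    return -abs(x) / b - math.log(2.0 * b)

def log_g(x):                             # g_{s*}: Gaussian with variance D_max
    return -x * x / (2.0 * v) - 0.5 * math.log(2.0 * math.pi * v)

def trapezoid(f, lo, hi, n):
    h = (hi - lo) / n
    return h * (0.5 * f(lo) + 0.5 * f(hi) + sum(f(lo + k * h) for k in range(1, n)))

# D(p || g_{s*}) by quadrature; working with log-densities avoids underflow in the tails
kl = trapezoid(lambda x: math.exp(log_p(x)) * (log_p(x) - log_g(x)), -50.0, 50.0, 200000)
h_p = 1.0 + math.log(2.0 * b)                        # entropy of the Laplacian
h_g = 0.5 * math.log(2.0 * math.pi * math.e * v)     # entropy of g_{s*}
slb_at_dmax = h_p - h_g
print(slb_at_dmax, -kl)
```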

The tightness of the SLB for each D characterizes the form of the backward channel, as discussed, for example, in ([5], Theorem 4). The above theorem focuses on $D_{\max}$ and characterizes the relation between the form of the source density and the distortion measure.

The tightness of the SLB at $D_{\max}$ is relevant to the tightness for all $D < D_{\max}$. For some distortion measures (e.g., the squared and absolute distortion measures), the random variable $Z_{s^*}$ with density $g_{s^*}$ is decomposable into the sum of two independent random variables

$$Z_{s^*} = Z_s + N,$$

where $Z_s$ has the density $g_s$ and N is some random variable, for any $s > s^*$. The backward channel condition (11) means that in such a case, the tightness of the SLB at $D_{\max}$ implies the tightness of the SLB for all $D < D_{\max}$. The condition (11) is closely related to the closure property with respect to convolution. If $g_s$ is a kernel function associated with a reproducing kernel Hilbert space, such a closure property has been studied in detail [20].

3. Generalized Gaussian Source and Power Distortion Measure

3.1. γ-th Power Distortion Measure

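We examine the rate-distortion trade-offs under the γ-th power distortion measure

$$d(x,y) = |x-y|^{\gamma}, \tag{14}$$

where γ > 0 is a real exponent. In particular, γ = 2 corresponds to the squared error criterion and γ = 1 to the absolute one. The corresponding noise model given by (7) is

$$g_s(z) = \frac{\gamma\, s^{1/\gamma}}{2\Gamma(1/\gamma)}\, e^{-s|z|^{\gamma}},$$

where s > 0 and Γ is the gamma function. This model is the γ-th-order generalized Gaussian distribution including the Gaussian (γ = 2) and the Laplace (γ = 1) distributions as special cases. Its differential entropy is

$$h(g_s) = \frac{1}{\gamma} - \log\frac{\gamma\, s^{1/\gamma}}{2\Gamma(1/\gamma)}. \tag{15}$$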
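The closed forms for the noise model can be checked by quadrature. The sketch below verifies, for a few (γ, s) pairs chosen only for illustration, that $g_s$ integrates to one, that its expected distortion is $E|Z|^{\gamma} = 1/(\gamma s)$ (which is what (9) gives for ρ(z) = |z|^γ), and that its entropy matches (15). The function names are hypothetical.

```python
import math

def gg_log_norm(gamma, s):
    """log of the normalising constant gamma * s**(1/gamma) / (2 Gamma(1/gamma))."""
    return math.log(gamma * s ** (1.0 / gamma) / (2.0 * math.gamma(1.0 / gamma)))

def gg_pdf(z, gamma, s):
    """Noise model g_s(z) for rho(z) = |z|**gamma."""
    return math.exp(gg_log_norm(gamma, s) - s * abs(z) ** gamma)

def gg_entropy(gamma, s):
    """Closed form (15), in nats."""
    return 1.0 / gamma - gg_log_norm(gamma, s)

def trapezoid(f, lo=-40.0, hi=40.0, n=40000):
    h = (hi - lo) / n
    return h * (0.5 * f(lo) + 0.5 * f(hi) + sum(f(lo + k * h) for k in range(1, n)))

checks = {}
for gamma, s in [(1.0, 0.7), (2.0, 1.5), (3.0, 0.5)]:
    c = gg_log_norm(gamma, s)
    mass = trapezoid(lambda z: gg_pdf(z, gamma, s))
    moment = trapezoid(lambda z: abs(z) ** gamma * gg_pdf(z, gamma, s))
    # -log g_s(z) = s|z|^gamma - log(normaliser)
    entropy = trapezoid(lambda z: gg_pdf(z, gamma, s) * (s * abs(z) ** gamma - c))
    checks[(gamma, s)] = (mass, moment, 1.0 / (gamma * s), entropy, gg_entropy(gamma, s))
print(checks)
```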
For a difference distortion measure, we can assume that the μ in Theorem 1 is zero without loss of generality. Thus, as a source, we assume the generalized Gaussian random variable with the density,

where β > 0. This is a versatile model for symmetric unimodal probability densities. Here the scaling factor is chosen so that

holds.

The SLB for the source (16) with respect to the distortion measure (14) is

which follows from (8), (15), and the relation between the slope parameter s and the average distortion given by (9),

$$D = \frac{1}{\gamma s}.$$

It is well known that when β = γ = 2 (Gaussian source and the squared distortion measure), the SLB is tight; that is, $R(D) = R_L(D)$ for all D [1,2,17]. The optimal reconstruction distribution minimizing (2) for this case is given by

for $0 < D \le D_{\max}$. Additionally, when β = γ = 1 (Laplacian source and the absolute distortion measure), the SLB is tight for all D ([2], Example 4.3.2.1), which is attained by

for $0 < D \le D_{\max}$, where δ is Dirac’s delta function.
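The backward channel condition (11) for the two matched cases can be checked through characteristic functions, since the characteristic function of a sum of independent variables is the product of their characteristic functions. The sketch below uses its own parameterization: a Gaussian source with variance σ² reproduced as $Y + Z$ with $Y \sim N(0, \sigma^2 - D)$ and $Z \sim N(0, D)$, and a Laplacian source $p(x) = \tfrac{\alpha}{2}e^{-\alpha|x|}$ reproduced as $Y + Z$ with Laplacian noise of mean absolute value D and $q(y) = \alpha^2D^2\,\delta(y) + (1-\alpha^2D^2)\tfrac{\alpha}{2}e^{-\alpha|y|}$. This last form is recalled from Berger's example and should be read as an assumption that the check confirms, not as a transcription of the equation above.

```python
import math

def cf_laplace(t, scale):
    """Characteristic function of the density exp(-|z|/scale) / (2 scale)."""
    return 1.0 / (1.0 + (scale * t) ** 2)

def cf_gauss(t, var):
    """Characteristic function of N(0, var)."""
    return math.exp(-0.5 * var * t * t)

def laplace_gap(alpha, D, ts):
    """max_t |cf_Y(t) cf_Z(t) - cf_X(t)| for the Laplacian backward channel (0 < D <= 1/alpha)."""
    w = (alpha * D) ** 2                            # weight of the point mass at y = 0
    gaps = []
    for t in ts:
        cf_y = w + (1.0 - w) * cf_laplace(t, 1.0 / alpha)
        gaps.append(abs(cf_y * cf_laplace(t, D) - cf_laplace(t, 1.0 / alpha)))
    return max(gaps)

def gauss_gap(sigma2, D, ts):
    """Same check for the Gaussian backward channel (0 < D <= sigma2)."""
    return max(abs(cf_gauss(t, sigma2 - D) * cf_gauss(t, D) - cf_gauss(t, sigma2)) for t in ts)

ts = [0.1 * k for k in range(201)]                  # t in [0, 20]
print(laplace_gap(2.0, 0.3, ts), gauss_gap(1.0, 0.25, ts))
```

Both gaps are at the level of floating-point rounding for every admissible D, which is the backward channel condition in the transform domain.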

3.2. Tightness of the SLB

From Theorem 1, we immediately obtain the following corollary, which shows that the γ-generalized Gaussian source is the only source that can make the SLB tight at $D_{\max}$ under the γ-th power distortion measure (14).

Corollary 1.

The rate-distortion function of the ϵ-insensitive distortion measure,

$$d(x,y) = \max\{|x-y| - \epsilon,\ 0\}, \tag{17}$$

has been studied in [16]. It was proved that a necessary condition for at a slope parameter s is that also holds for . According to Theorem 1, this fact leads to a contradiction if there is a source that makes the SLB of the distortion measure (17) tight at $D_{\max}$. Thus, we have the following corollary.

Corollary 2.

Under the ϵ-insensitive distortion measure (17) with ϵ > 0, no source makes the SLB tight at $D_{\max}$.

4. Rate-Distortion Bounds for Mismatching Pairs

From Corollary 1, the SLB cannot be tight for all D if the distortion measure has a different exponent from that of the source (i.e., γ ≠ β). In this section, we show that even in such a case, accurate upper and lower bounds to $R(D)$ of the Laplacian and Gaussian sources can be derived from the fact that $R(D) = R_L(D)$ for β = γ = 1 and β = γ = 2.

We denote the rate-distortion function and bounds to it by indicating the parameters β and γ of the source and the distortion measure. More specifically, $R_{\beta,\gamma}(D)$ denotes the rate-distortion function for the source (16) with respect to the distortion measure (14), and $R^{L}_{\beta,\gamma}(D)$ denotes its SLB.

We first prove the following lemma:

Lemma 2.

If $R_{\beta,\beta}(D) = R^{L}_{\beta,\beta}(D)$ for all D, then

holds for $s > 0$, where $E_{q_s}$ denotes the expectation with respect to $p(x)q_s(y|x)$, and

is satisfied for the optimal conditional reproduction distribution $q_s(y|x)$ in (3).

Proof.

Let , which is equivalent to

The above lemma implies that $q_s$ achieving $R_{\beta,\beta}(D)$ has the expected γ-th power distortion,

with the rate $R_{\beta,\beta}(D)$ if $R_{\beta,\beta}(D) = R^{L}_{\beta,\beta}(D)$ for all D.

Thus, we obtain the following upper bound to $R_{\beta,\gamma}(D)$ if $R_{\beta,\beta}(D) = R^{L}_{\beta,\beta}(D)$.

We also have the SLB for $R_{\beta,\gamma}(D)$,

Therefore, we arrive at the following theorem:

Theorem 2.

Since the upper bound is tight at $D_{\max}$, it is the smallest upper bound that has a constant deviation from the SLB. In addition, the SLB is asymptotically tight in the limit $D \to 0$ for the γ-th power distortion measure in general [2,21], and the condition for the asymptotic tightness has been weakened recently [22]. These facts suggest that the rate-distortion function is near the SLB at low distortion levels and then approaches the upper bound as the average distortion D grows to $D_{\max}$. In terms of the distortion-rate function, the theorem also implies that an encoder designed for the β-th power distortion loses, due to the mismatch of the orders, at most a constant factor in the γ-th power distortion.
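The constant gap in Theorem 2 can be written in closed form under the following reading, which is a derivation from the definitions above rather than a transcription of the theorem. If the matched SLB is tight, the optimal backward channel is $X = Y + Z$ with Z independent of Y and distributed as the β-th order noise model, whose γ-th absolute moment is $\frac{\Gamma((\gamma+1)/\beta)}{\Gamma(1/\beta)}\,s^{-\gamma/\beta}$. Eliminating s shows that the resulting upper bound is $\frac{1}{\gamma}\log\frac{E|X|^{\gamma}}{D}$, while the SLB follows from (8) and (15); their difference depends on neither D nor the scale of the source. The function name `constant_gap` is hypothetical, and values are in nats.

```python
import math

def constant_gap(beta, gamma):
    """Upper bound minus SLB (nats) for a beta-th order generalized Gaussian source
    under the distortion |x - y|**gamma, assuming the matched SLB is tight.

    Derived from: upper bound (1/gamma) log(E|X|^gamma / D), SLB (8) with entropy (15).
    """
    lg = math.lgamma
    moment_ratio = (lg((gamma + 1.0) / beta) - lg(1.0 / beta)) / gamma  # E|X|^gamma vs E|X|^beta
    entropy_terms = -1.0 / beta + math.log(math.e * gamma) / gamma       # h(p) and h(g_s) parts
    normalisers = math.log(beta / gamma) + lg(1.0 / gamma) - lg(1.0 / beta)
    return moment_ratio + entropy_terms + normalisers

for beta, gamma in [(2.0, 1.0), (1.0, 2.0), (2.0, 2.0), (1.0, 1.0)]:
    g = constant_gap(beta, gamma)
    print(beta, gamma, round(g, 4), "nats =", round(g / math.log(2.0), 4), "bits")
```

Under this reading, β = 2, γ = 1 gives $\tfrac12\log(4e/\pi^2) \approx 0.048$ nats (about 0.070 bit), β = 1, γ = 2 gives $\tfrac12\log(\pi/e) \approx 0.072$ nats (about 0.104 bit), and matched pairs give zero.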

From Lemma 1 in Section 2.2, by examining the correspondence between the slope parameter and the distortion level D, we obtain the next theorem, which evaluates the m-projection of the source to the mixture family,

If the upper bound is replaced by the asymptotically tight upper bound [2,21], asymptotically tighter bounds to the m-projection are obtained.

Theorem 3.

Proof.

The first part of the theorem is a corollary of Theorem 2 and Lemma 1. The second part corresponds to the case of , since D monotonically decreases as s grows. Because yields , we have (22). It follows from (13) that .

Since for , the optimal reconstruction distribution is given by , (22) holds with equality. ☐

Since the SLB is tight for all D if β = γ = 1 or β = γ = 2, we have the following corollaries.

Corollary 3.

The rate-distortion function of the Laplacian source, $R_{1,\gamma}(D)$, is lower- and upper-bounded as

Corollary 4.

The rate-distortion function of the Gaussian source, $R_{2,\gamma}(D)$, is lower- and upper-bounded as

Example 1.

If we put γ = 1 in Corollary 4, we have

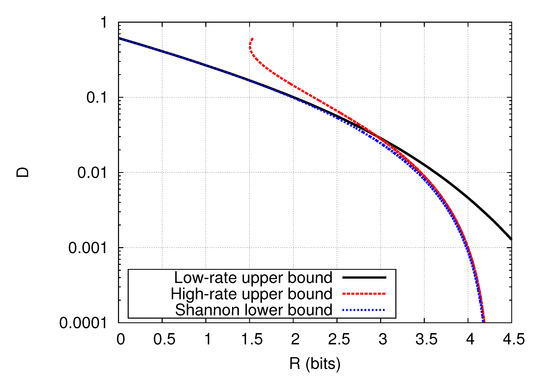

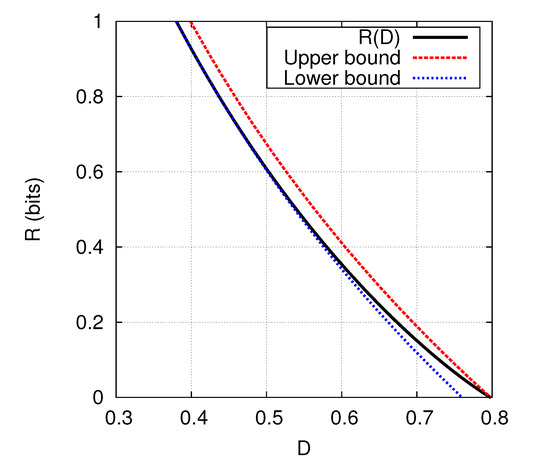

The explicit evaluation of $R_{2,1}(D)$ is obtained through a parametric form using the slope parameter s [6]. While the explicit parametric form requires evaluations of the cumulative distribution function of the Gaussian distribution, the bounds in (23) show that it is well approximated by an elementary function of D. In fact, the gap between the upper and lower bounds is a constant number of bits for all D. The bounds in (23) are compared with $R_{2,1}(D)$ in Figure 1.

Figure 1.

Rate-distortion function [6] and its lower and upper bounds in (23).

Example 2.

If we put γ = 2 in Corollary 3, we have

for the Laplacian source and the squared distortion measure. Again, the gap between the bounds is a constant number of bits.

The upper bound in Theorem 2 implies the following:

Corollary 5.

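Under the γ-th power distortion measure, if $R_{\gamma,\gamma}(D) = R^{L}_{\gamma,\gamma}(D)$ for all D, the γ-generalized Gaussian source has the greatest rate-distortion function among all β-generalized Gaussian sources with a fixed γ-th moment $E|X|^{\gamma}$, satisfying $R_{\beta,\gamma}(D) \le R_{\gamma,\gamma}(D)$ for all D.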

Proof.

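Since the upper bound in (19) depends on the source only through $E|X|^{\gamma}$, it is expressed as $\frac{1}{\gamma}\log\frac{E|X|^{\gamma}}{D}$, which is equal to the rate-distortion function of the γ-generalized Gaussian source with the same γ-th moment under the γ-th power distortion measure if its SLB is tight for all D. ☐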

The preceding corollary is well known in the case of the squared distortion measure, where the Gaussian source has the largest rate-distortion function not only among all β-generalized Gaussian sources but also among all sources with a fixed variance ([2], Theorem 4.3.3).

5. Distortion-Rate Bounds for ϵ-Insensitive Loss

As another example of a distortion measure that does not match the β-generalized Gaussian source in the sense of Theorem 1, we consider the following γ-th power ϵ-insensitive distortion measure generalizing (17),

$$d(x,y) = \big(\max\{|x-y| - \epsilon,\ 0\}\big)^{\gamma}, \tag{24}$$

where ϵ > 0 and γ > 0. Such distortion measures are used in support vector regression models [14,15].

In this section, we focus on the Laplacian source (β = 1), for which, similarly to Section 4, we can evaluate

where and . Such an explicit evaluation appears to be prohibitive for . The above expected distortion is achievable by $q_s$ with the rate $R_{1,1}(D)$, since $R_{1,1}(D) = R^{L}_{1,1}(D)$ holds for all D. Thus, we obtain the following upper bound, which has a closed form when expressed as a distortion-rate function.
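The closed form mentioned above can be sketched as follows, under assumptions made here for illustration: the Laplacian source has a (hypothetical) scale $b_X$, so that $R_{1,1}(D) = \log(b_X/D)$ nats under absolute error, and the matched optimal channel adds independent Laplacian noise with scale $b = b_X e^{-R}$. Because the exponential distribution is memoryless, $|Z| - \epsilon$ given $|Z| > \epsilon$ is again exponential with mean b, so $E[(\max\{|Z|-\epsilon,0\})^{\gamma}] = e^{-\epsilon/b}\,\Gamma(\gamma+1)\,b^{\gamma}$. Substituting b gives a distortion-rate upper bound in closed form; it is not guaranteed to coincide term by term with (25). At R = 0 it equals the distortion of the reconstruction y = 0.

```python
import math

def eps_moment(b, eps, gamma):
    """E[max(|Z| - eps, 0)**gamma] for Z with density exp(-|z|/b) / (2b)."""
    return math.exp(-eps / b) * math.gamma(gamma + 1.0) * b ** gamma

def low_rate_upper_bound(R, b_x, eps, gamma):
    """Distortion of the absolute-error optimal channel at rate R (nats)."""
    return eps_moment(b_x * math.exp(-R), eps, gamma)

def eps_moment_numeric(b, eps, gamma, n=200000, width=80.0):
    """Trapezoid check of the same expectation (both tails folded onto z > eps)."""
    h = width / n
    f = lambda z: (z - eps) ** gamma * math.exp(-z / b) / b   # 2 * density on z > 0
    return h * (0.5 * f(eps) + 0.5 * f(eps + width) + sum(f(eps + k * h) for k in range(1, n)))

b_x, eps = 1.0, 0.5
curve = [(R, low_rate_upper_bound(R, b_x, eps, 2.0)) for R in (0.0, 0.5, 1.0, 2.0)]
print(curve)
```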

Theorem 4.

The distortion-rate function of the Laplacian source under the γ-th power ϵ-insensitive distortion measure (24) is upper-bounded as

In addition, the upper bound is tight at R = 0; that is,

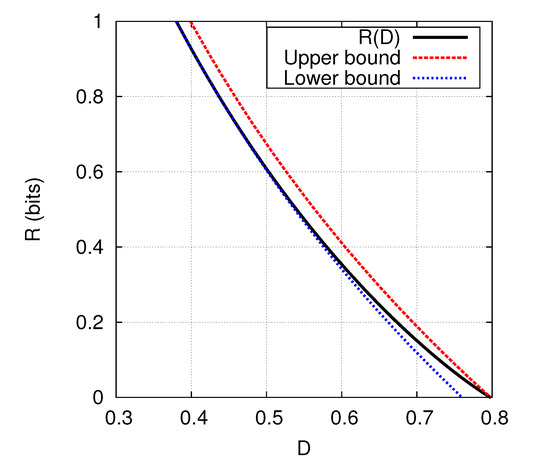

Upper and (Shannon) lower bounds which are asymptotically accurate at high rates have been obtained for the distortion measure (24) [16]. They are proved to have approximation error at most as . Combined with these bounds, the upper bound (25), being accurate at high distortion levels, provides a good approximation of the rate-distortion function for the entire range of D. This is demonstrated in Figure 2 for the case of , , and , where the upper bound (25) and that in [16] are referred to as low-rate and high-rate upper bounds because they are effective at low and high rates, respectively. Although the rate-distortion function of this case is still unknown, it lies between the upper bounds and the SLB. Hence, the figure implies that the SLB is accurate for all R, and the rate-distortion function is almost identified except for the region around (bits) where there is a relatively large gap between the upper bounds and the SLB.

6. Conclusions

We have shown that the generalized Gaussian distribution is the only source that can make the SLB tight for all D under the power distortion measure if the orders of the source and the distortion measure are matched. We have also derived an upper bound to the rate-distortion function for the cases when the orders are mismatched, which, together with the SLB, provides constant-width bounds sandwiching the rate-distortion function and hence evaluates the m-projection of the source to the mixture family associated with the distortion measure. The derived bounds demonstrate that the condition for the tightness of the SLB can yield knowledge about the behavior of the rate-distortion function under other distortion measures, for example, those defined by composition of functions. In fact, we have obtained an upper bound to the distortion-rate function of ϵ-insensitive distortion measures in the case of the Laplacian source. An important direction for future work is to investigate the geometric structure of the mixture family associated with the distortion measure and its relationship to the m-projection, that is, the optimal reconstruction distribution.

Acknowledgments

The author would like to thank the anonymous reviewers for their helpful comments and suggestions. This work was supported in part by JSPS grants 25120014, 15K16050, and 16H02825.

Conflicts of Interest

The author declares no conflict of interest.

References

- Shannon, C.E. A mathematical theory of communication. Bell Syst. Tech. J. 1948, 27, 623–656.

- Berger, T. Rate Distortion Theory: A Mathematical Basis for Data Compression; Prentice-Hall: Englewood Cliffs, NJ, USA, 1971.

- Buzo, A.; Kuhlmann, F.; Rivera, C. Rate-distortion bounds for quotient-based distortions with application to Itakura-Saito distortion measures. IEEE Trans. Inf. Theory 1986, 32, 141–147.

- Gerrish, A.; Schultheiss, P. Information rates of non-Gaussian processes. IEEE Trans. Inf. Theory 1964, 10, 265–271.

- Kostina, V. Data compression with low distortion and finite blocklength. IEEE Trans. Inf. Theory 2017, in press.

- Tan, H.H.; Yao, K. Evaluation of rate-distortion functions for a class of independent identically distributed sources under an absolute magnitude criterion. IEEE Trans. Inf. Theory 1975, 21, 59–64.

- Yao, K.; Tan, H.H. Absolute error rate-distortion functions for sources with constrained magnitudes. IEEE Trans. Inf. Theory 1978, 24, 499–503.

- Rose, K. A mapping approach to rate-distortion computation and analysis. IEEE Trans. Inf. Theory 1994, 40, 1939–1952.

- Watanabe, K.; Ikeda, S. Rate-Distortion functions for gamma-type sources under absolute-log distortion measure. IEEE Trans. Inf. Theory 2016, 62, 5496–5502.

- Amari, S.; Nagaoka, H. Methods of Information Geometry; Oxford University Press: Oxford, UK, 2000.

- Watanabe, K. Constant-width rate-distortion bounds for power distortion measures. In Proceedings of the 2016 IEEE Information Theory Workshop (ITW), Cambridge, UK, 11–14 September 2016; pp. 106–110.

- Fraysse, A.; Pesquet-Popescu, B.; Pesquet, J.C. Rate-distortion results for generalized Gaussian distributions. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing, Las Vegas, NV, USA, 31 March–4 April 2008; pp. 3753–3756.

- Fraysse, A.; Pesquet-Popescu, B.; Pesquet, J.C. On the uniform quantization of a class of sparse sources. IEEE Trans. Inf. Theory 2009, 55, 3243–3263.

- Steinwart, I.; Christmann, A. Support Vector Machines; Springer: Berlin/Heidelberg, Germany, 2008.

- Chu, W.; Keerthi, S.S.; Ong, C.J. Bayesian support vector regression using a unified loss function. IEEE Trans. Neural Netw. 2004, 15, 29–44.

- Watanabe, K. Rate-distortion bounds for ε-insensitive distortion measures. IEICE Trans. Fundam. 2016, E99-A, 370–377.

- Cover, T.M.; Thomas, J.A. Elements of Information Theory; Wiley Interscience: New York, NY, USA, 1991.

- Gray, R.M. Source Coding Theory; Kluwer Academic Publishers: Dordrecht, The Netherlands, 1990.

- Gray, R.M. Entropy and Information Theory, 2nd ed.; Springer: Berlin/Heidelberg, Germany, 2011.

- Nishiyama, Y.; Fukumizu, K. Characteristic kernels and infinitely divisible distributions. J. Mach. Learn. Res. 2016, 17, 1–28.

- Linder, T.; Zamir, R. On the asymptotic tightness of the Shannon lower bound. IEEE Trans. Inf. Theory 1994, 40, 2026–2031.

- Koch, T. The Shannon lower bound is asymptotically tight. IEEE Trans. Inf. Theory 2016, 62, 6155–6161.

© 2017 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).