Abstract

This work reviews and extends a family of log-determinant (log-det) divergences for symmetric positive definite (SPD) matrices and discusses their fundamental properties. We show how to use parameterized Alpha-Beta (AB) and Gamma log-det divergences to generate many well-known divergences. In particular, we consider Stein’s loss, the S-divergence (also called the Jensen-Bregman LogDet (JBLD) divergence), the LogDet Zero (Bhattacharyya) divergence, the Affine Invariant Riemannian Metric (AIRM), and other divergences. Moreover, we establish links and correspondences between log-det divergences and visualize them on an alpha-beta plane for various sets of parameters. We use this unifying framework to interpret and extend existing similarity measures for semidefinite covariance matrices in finite-dimensional Reproducing Kernel Hilbert Spaces (RKHS). This paper also shows how the Alpha-Beta family of log-det divergences relates to the divergences of multivariate and multilinear normal distributions. Closed-form formulas are derived for Gamma divergences of two multivariate Gaussian densities, and the special cases of the Kullback-Leibler, Bhattacharyya, Rényi, and Cauchy-Schwarz divergences are discussed. Symmetrized versions of log-det divergences are also considered and briefly reviewed. Finally, a class of divergences is extended to multiway divergences for separable covariance (or precision) matrices.

Keywords:

Similarity measures; generalized divergences for symmetric positive definite (covariance) matrices; Stein’s loss; Burg’s matrix divergence; Affine Invariant Riemannian Metric (AIRM); Riemannian metric; geodesic distance; Jensen-Bregman LogDet (JBLD); S-divergence; LogDet Zero divergence; Jeffreys KL divergence; symmetrized KL Density Metric (KLDM); Alpha-Beta Log-Det divergences; Gamma divergences; Hilbert projective metric and their extensions

1. Introduction

Divergences or (dis)similarity measures between symmetric positive definite (SPD) matrices underpin many applications, including Diffusion Tensor Imaging (DTI) segmentation, classification, clustering, pattern recognition, model selection, statistical inference, and data processing problems [1–3]. Furthermore, there is a close connection between divergences and the notions of entropy, information geometry, and statistical mean [2,4–7], while matrix divergences are closely related to the invariant geometrical properties of the manifold of probability distributions [4,8–10]. A wide class of parameterized divergences for positive measures is already well understood, and a unification and generalization of their properties can be found in [11–13].

The class of SPD matrices, especially covariance matrices, plays a key role in many areas of statistics, signal/image processing, DTI, pattern recognition, and the biological and social sciences [14–16]. For example, medical data produced by diffusion tensor magnetic resonance imaging (DTI-MRI) represent the covariance in a Brownian motion model of water diffusion. The diffusion tensors can be represented as SPD matrices, which are used to track the diffusion of water molecules in the human brain, with applications such as the diagnosis of mental disorders [14]. In array processing, covariance matrices capture both the variance and the correlation of multidimensional data and are often used to compute (dis)similarity measures, i.e., divergences. All this has led to an increasing interest in divergences for SPD (covariance) matrices [1,5,6,14,17–20].

The main aim of this paper is to review and extend log-determinant (log-det) divergences and to establish a link between log-det divergences and standard divergences, especially the Alpha, Beta, and Gamma divergences. Several forms of the log-det divergence exist in the literature, including the log-determinant α divergence, the Riemannian metric, Stein’s loss, the S-divergence (also called the Jensen-Bregman LogDet (JBLD) divergence), and the symmetrized Kullback-Leibler Density Metric (KLDM) or Jeffreys KL divergence [5,6,14,17–20]. Despite their numerous applications, the common theoretical properties of these divergences and the relationships between them have not been established. To this end, we propose and parameterize a wide class of log-det divergences that provide robust solutions and can even improve the accuracy for noisy data. We then review the fundamental properties of this class and provide relationships among its members. The advantages of some selected log-det divergences are also discussed; in particular, we consider their efficiency, their simplicity of calculation, and their resilience to noise and outliers [14]. The log-det divergences between two SPD matrices have also been shown to be robust to biases in composition, which can cause problems for other similarity measures.

The divergences discussed in this paper are flexible enough to generate several established divergences (for specific values of the tuning parameters). In addition, by adjusting the tuning parameters, one can optimize the cost functions of learning algorithms and estimate the desired model parameters in the presence of noise and outliers. In other words, the divergences discussed in this paper are robust with respect to outliers and noise if the tuning parameters α, β, and γ are chosen properly.

1.1. Preliminaries

We adopt the following notation: SPD matrices will be denoted by P ∈ ℝn×n and Q ∈ ℝn×n, and have positive eigenvalues λi (sorted in descending order); by log(P), det(P) = |P|, tr(P) we denote the logarithm, determinant, and trace of P, respectively.

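For any real parameter α ∈ ℝ and for a positive definite matrix P, the matrix Pα is defined using the symmetric eigenvalue decomposition as follows:

$$\mathbf{P}^{\alpha} = \mathbf{V}\boldsymbol{\Lambda}^{\alpha}\mathbf{V}^{T} = \mathbf{V}\,\mathrm{diag}\left(\lambda_1^{\alpha}, \ldots, \lambda_n^{\alpha}\right)\mathbf{V}^{T}, \tag{1}$$

where Λ is a diagonal matrix of the eigenvalues of P, and V ∈ ℝn×n is the orthogonal matrix of the corresponding eigenvectors. Similarly, we define

$$\log(\mathbf{P}) = \mathbf{V}\log(\boldsymbol{\Lambda})\mathbf{V}^{T}, \tag{2}$$

where log(Λ) is a diagonal matrix of logarithms of the eigenvalues of P. The basic operations for positive definite matrices are provided in Appendix A.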
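Definitions (1) and (2) translate directly into code: apply a scalar function to the eigenvalues and rotate back. The following is a minimal NumPy sketch. The helper names `spd_power` and `spd_log` and the test matrix are our own illustrative choices. The sketch also checks the identity log det(P) = tr log(P), which is used in the next section.

```python
import numpy as np


def spd_power(P, alpha):
    """Return P**alpha = V diag(lambda_i**alpha) V^T for a symmetric positive definite P."""
    lam, V = np.linalg.eigh(P)
    return (V * lam**alpha) @ V.T  # scales column i of V by lambda_i**alpha


def spd_log(P):
    """Return log(P) = V diag(log lambda_i) V^T for a symmetric positive definite P."""
    lam, V = np.linalg.eigh(P)
    return (V * np.log(lam)) @ V.T


P = np.array([[2.0, 0.5],
              [0.5, 1.0]])
R = spd_power(P, 0.5)
print(np.allclose(R @ R, P))                                       # square root squares back to P
print(np.allclose(spd_power(P, -1.0), np.linalg.inv(P)))           # alpha = -1 gives the inverse
print(np.isclose(np.trace(spd_log(P)), np.log(np.linalg.det(P))))  # tr log P = log det P
```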

The dissimilarity D(P ║ Q) between two SPD matrices is called a metric if the following conditions hold for all SPD matrices P, Q, and Z:

- D(P ║ Q) ≥ 0, where the equality holds if and only if P = Q (nonnegativity and positive definiteness).

- D(P ║ Q) = D(Q ║ P) (symmetry).

- D(P ║ Z) ≤ D(P ║ Q) + D(Q ║ Z) (subadditivity/triangle inequality).

Dissimilarities that satisfy only the first condition are not metrics and are referred to as (asymmetric) divergences.

2. Basic Alpha-Beta Log-Determinant Divergence

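For SPD matrices P ∈ ℝn×n and Q ∈ ℝn×n, consider a new dissimilarity measure, namely, the AB log-det divergence, given by

$$D_{AB}^{(\alpha,\beta)}(\mathbf{P}\|\mathbf{Q}) = \frac{1}{\alpha\beta}\log\det\left(\frac{\alpha(\mathbf{P}\mathbf{Q}^{-1})^{\beta} + \beta(\mathbf{Q}\mathbf{P}^{-1})^{\alpha}}{\alpha+\beta}\right), \qquad \alpha \neq 0,\ \beta \neq 0,\ \alpha+\beta \neq 0, \tag{3}$$

where the values of the parameters α and β can be chosen so as to guarantee the nonnegativity of the divergence, which vanishes if and only if P = Q (this issue is addressed later by Theorems 1 and 2). Observe that this divergence is not symmetric with respect to P and Q, except when α = β. Using the identity log det(P) = tr log(P), the divergence in (3) can be expressed as

$$D_{AB}^{(\alpha,\beta)}(\mathbf{P}\|\mathbf{Q}) = \frac{1}{\alpha\beta}\,\mathrm{tr}\log\left(\frac{\alpha(\mathbf{P}\mathbf{Q}^{-1})^{\beta} + \beta(\mathbf{Q}\mathbf{P}^{-1})^{\alpha}}{\alpha+\beta}\right). \tag{4}$$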

This divergence is related to the Alpha, Beta, and AB divergences discussed in our previous work, especially the Gamma divergences [11–13,21]. Furthermore, the divergence in (4) is related to the AB divergence for SPD matrices [1,12], which is defined by

$$\tilde{D}_{AB}^{(\alpha,\beta)}(\mathbf{P}\|\mathbf{Q}) = -\frac{1}{\alpha\beta}\,\mathrm{tr}\left(\mathbf{P}^{\alpha}\mathbf{Q}^{\beta} - \frac{\alpha}{\alpha+\beta}\mathbf{P}^{\alpha+\beta} - \frac{\beta}{\alpha+\beta}\mathbf{Q}^{\alpha+\beta}\right).$$

Note that α and β are chosen so that the divergence is nonnegative and equal to zero if P = Q. Moreover, such divergence functions can be evaluated without computing the inverses of the SPD matrices. Instead, they can be evaluated easily by computing the (positive) eigenvalues of the matrix PQ−1 or its inverse, i.e., by solving the generalized eigenvalue problem Pv = λQv. Since both matrices P and Q (and their inverses) are SPD matrices, their eigenvalues are positive. It can be shown that, even though PQ−1 is nonsymmetric, its eigenvalues are the same as those of the SPD matrix Q−1/2PQ−1/2; hence, its eigenvalues are always positive.

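Next, consider the eigenvalue decomposition:

$$\mathbf{P}\mathbf{Q}^{-1} = \mathbf{V}\boldsymbol{\Lambda}\mathbf{V}^{-1},$$

where V is a nonsingular matrix, and

$$\boldsymbol{\Lambda} = \mathrm{diag}\{\lambda_1, \lambda_2, \ldots, \lambda_n\}$$

is the diagonal matrix with the positive eigenvalues λi > 0, i = 1, 2, …, n, of PQ−1. Then, we can write

$$D_{AB}^{(\alpha,\beta)}(\mathbf{P}\|\mathbf{Q}) = \frac{1}{\alpha\beta}\log\det\left(\mathbf{V}\,\frac{\alpha\boldsymbol{\Lambda}^{\beta} + \beta\boldsymbol{\Lambda}^{-\alpha}}{\alpha+\beta}\,\mathbf{V}^{-1}\right),$$

which allows us to use simple algebraic manipulations to obtain

$$D_{AB}^{(\alpha,\beta)}(\mathbf{P}\|\mathbf{Q}) = \frac{1}{\alpha\beta}\log\prod_{i=1}^{n}\frac{\alpha\lambda_i^{\beta} + \beta\lambda_i^{-\alpha}}{\alpha+\beta} = \frac{1}{\alpha\beta}\sum_{i=1}^{n}\log\left(\frac{\alpha\lambda_i^{\beta} + \beta\lambda_i^{-\alpha}}{\alpha+\beta}\right). \tag{8}$$

It is straightforward to verify that

$$D_{AB}^{(\alpha,\beta)}(\mathbf{P}\|\mathbf{Q}) = 0$$

if P = Q. We will show later that this function is nonnegative for any SPD matrices if α and β are both positive or both negative.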

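For the singular cases α = 0 and/or β = 0 (and also α = −β), the AB log-det divergence in (3) is defined as a limit. In other words, to avoid indeterminacy or singularity for specific parameter values, the AB log-det divergence is extended by continuity, applying L’Hôpital’s rule to cover the singular values of α and β. This yields the explicit definition

$$D_{AB}^{(\alpha,\beta)}(\mathbf{P}\|\mathbf{Q}) = \begin{cases}
\dfrac{1}{\alpha\beta}\log\det\left(\dfrac{\alpha(\mathbf{P}\mathbf{Q}^{-1})^{\beta} + \beta(\mathbf{Q}\mathbf{P}^{-1})^{\alpha}}{\alpha+\beta}\right), & \alpha,\beta \neq 0,\ \alpha+\beta \neq 0,\\[2ex]
\dfrac{1}{\alpha^{2}}\left[\mathrm{tr}\left((\mathbf{Q}\mathbf{P}^{-1})^{\alpha} - \mathbf{I}\right) - \alpha\log\det(\mathbf{Q}\mathbf{P}^{-1})\right], & \alpha \neq 0,\ \beta = 0,\\[2ex]
\dfrac{1}{\beta^{2}}\left[\mathrm{tr}\left((\mathbf{P}\mathbf{Q}^{-1})^{\beta} - \mathbf{I}\right) - \beta\log\det(\mathbf{P}\mathbf{Q}^{-1})\right], & \alpha = 0,\ \beta \neq 0,\\[2ex]
\dfrac{1}{\alpha^{2}}\log\det\left((\mathbf{P}\mathbf{Q}^{-1})^{\alpha}\left(\mathbf{I} + \log(\mathbf{P}\mathbf{Q}^{-1})^{\alpha}\right)^{-1}\right), & \alpha = -\beta \neq 0,\\[2ex]
\dfrac{1}{2}\left\|\log(\mathbf{Q}^{-1/2}\mathbf{P}\mathbf{Q}^{-1/2})\right\|_F^{2}, & \alpha = \beta = 0.
\end{cases} \tag{9}$$

Equivalently, using standard matrix manipulations, the above formula can be expressed in terms of the eigenvalues of PQ−1, i.e., the generalized eigenvalues computed from λiQvi = Pvi (where vi (i = 1, 2, …, n) are the corresponding generalized eigenvectors), as follows:

$$D_{AB}^{(\alpha,\beta)}(\mathbf{P}\|\mathbf{Q}) = \begin{cases}
\dfrac{1}{\alpha\beta}\displaystyle\sum_{i=1}^{n}\log\left(\dfrac{\alpha\lambda_i^{\beta} + \beta\lambda_i^{-\alpha}}{\alpha+\beta}\right), & \alpha,\beta \neq 0,\ \alpha+\beta \neq 0,\\[2ex]
\dfrac{1}{\alpha^{2}}\displaystyle\sum_{i=1}^{n}\left(\lambda_i^{-\alpha} - \log\lambda_i^{-\alpha} - 1\right), & \alpha \neq 0,\ \beta = 0,\\[2ex]
\dfrac{1}{\beta^{2}}\displaystyle\sum_{i=1}^{n}\left(\lambda_i^{\beta} - \log\lambda_i^{\beta} - 1\right), & \alpha = 0,\ \beta \neq 0,\\[2ex]
\dfrac{1}{\alpha^{2}}\displaystyle\sum_{i=1}^{n}\log\left(\dfrac{\lambda_i^{\alpha}}{1 + \log\lambda_i^{\alpha}}\right), & \alpha = -\beta \neq 0,\\[2ex]
\dfrac{1}{2}\displaystyle\sum_{i=1}^{n}\log^{2}\lambda_i, & \alpha = \beta = 0.
\end{cases}$$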
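The eigenvalue form is the natural route to a numerical implementation. Below is a minimal NumPy sketch under a few assumptions of our own:

- The eigenvalues of PQ−1 are obtained from the SPD matrix Q−1/2PQ−1/2, as noted earlier.
- Each singular case of (9) is detected with an arbitrary tolerance `tol`.
- The value `inf` is returned when the bounds of Theorem 2 are violated.

The names `gen_eigvals` and `ab_logdet` are hypothetical helpers, not a published API.

```python
import numpy as np


def gen_eigvals(P, Q):
    """Eigenvalues of P Q^{-1}, computed from the SPD matrix Q^{-1/2} P Q^{-1/2}."""
    w, V = np.linalg.eigh(Q)
    Q_inv_sqrt = (V / np.sqrt(w)) @ V.T
    return np.linalg.eigvalsh(Q_inv_sqrt @ P @ Q_inv_sqrt)


def ab_logdet(P, Q, alpha, beta, tol=1e-12):
    """AB log-det divergence from eigenvalues, including the limiting cases of (9)."""
    lam = gen_eigvals(P, Q)
    a, b = alpha, beta
    if abs(a) < tol and abs(b) < tol:                 # alpha = beta = 0
        return 0.5 * np.sum(np.log(lam) ** 2)
    if abs(b) < tol:                                  # alpha != 0, beta = 0
        return np.sum(lam ** (-a) - 1.0 + a * np.log(lam)) / a**2
    if abs(a) < tol:                                  # alpha = 0, beta != 0
        return np.sum(lam ** b - 1.0 - b * np.log(lam)) / b**2
    if abs(a + b) < tol:                              # alpha = -beta != 0
        arg = 1.0 + a * np.log(lam)
        if np.any(arg <= 0):                          # limiting bound of Theorem 2 violated
            return np.inf
        return np.sum(a * np.log(lam) - np.log(arg)) / a**2
    arg = (a * lam ** b + b * lam ** (-a)) / (a + b)
    if np.any(arg <= 0):                              # bounds of Theorem 2 violated
        return np.inf
    return np.sum(np.log(arg)) / (a * b)


P = np.array([[2.0, 0.5], [0.5, 1.0]])
Q = np.array([[1.0, 0.2], [0.2, 1.5]])
for a, b in [(0.5, 0.5), (0.0, 0.0), (0.0, 1.0), (1.0, -1.0), (2.0, 3.0)]:
    print((a, b), ab_logdet(P, Q, a, b))
```

Branching on the parameters keeps every case in closed form. A smaller `tol` makes the generic formula cover more nearly singular parameter values, at the price of cancellation error close to the singular lines.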

Theorem 1. The function given by (3) is nonnegative for any SPD matrices with arbitrary positive eigenvalues if α ≥ 0 and β ≥ 0 or if α < 0 and β < 0. It is equal to zero if and only if P = Q.

Equivalently, if α and β have the same sign, the AB log-det divergence is nonnegative regardless of the distribution of the eigenvalues of PQ−1, and it vanishes if and only if all the eigenvalues are equal to one. However, if the eigenvalues are sufficiently close to one, the AB log-det divergence is also nonnegative when α and β have different signs. The precise conditions are given by the following theorem.

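Theorem 2. The function given by (9) is nonnegative if α > 0 and β < 0, or if α < 0 and β > 0, provided that all the eigenvalues of PQ−1 satisfy the following conditions:

$$\lambda_i > \left|\frac{\beta}{\alpha}\right|^{\frac{1}{\alpha+\beta}} \qquad \text{for } \alpha > 0 \text{ and } \beta < 0,$$

and

$$\lambda_i < \left|\frac{\beta}{\alpha}\right|^{\frac{1}{\alpha+\beta}} \qquad \text{for } \alpha < 0 \text{ and } \beta > 0.$$

If any of the eigenvalues violates these bounds, the value of the divergence is, by definition, infinite. Moreover, when α → −β, these bounds simplify to

$$\lambda_i > e^{-1/\alpha} \quad \text{for } \alpha > 0, \qquad \lambda_i < e^{-1/\alpha} \quad \text{for } \alpha < 0.$$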

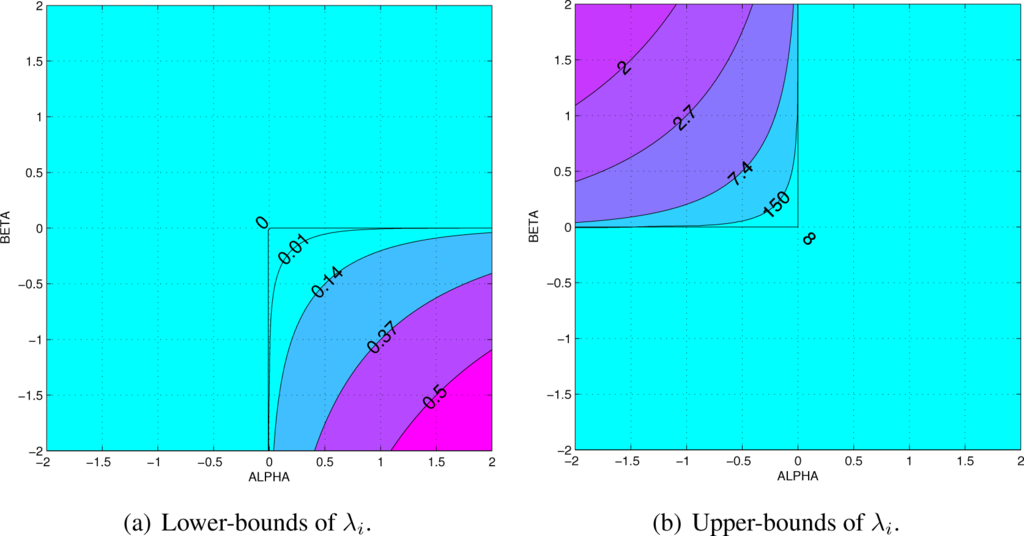

In the limit, when α → 0 or β → 0, the bounds disappear. A visual presentation of these bounds for different values of α and β is shown in Figure 1.
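The bounds of Theorem 2 are easy to tabulate, as in Figure 1. Here is a small sketch; the function name `eigenvalue_bound` is ours. It returns the threshold |β/α|^(1/(α+β)) and switches to the limit e^(−1/α) when α + β is numerically zero.

```python
import numpy as np


def eigenvalue_bound(alpha, beta, tol=1e-12):
    """Threshold on lambda_i from Theorem 2 (alpha and beta of opposite signs).

    alpha > 0 > beta: every lambda_i must exceed the returned value (lower bound).
    alpha < 0 < beta: every lambda_i must stay below it (upper bound).
    """
    if alpha * beta >= 0:
        raise ValueError("the bounds apply only when alpha and beta have opposite signs")
    if abs(alpha + beta) < tol:                        # limit alpha -> -beta
        return np.exp(-1.0 / alpha)
    return abs(beta / alpha) ** (1.0 / (alpha + beta))


print(eigenvalue_bound(1.0, -1.0))   # lower bound e^{-1}, as for the Beta-log-det divergence at beta = -1
print(eigenvalue_bound(2.0, -1.0))   # lower bound
print(eigenvalue_bound(-1.0, 2.0))   # upper bound
```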

Figure 1.

Shaded-contour plots of the bounds of λi that prevent $D_{AB}^{(\alpha,\beta)}(\mathbf{P}\|\mathbf{Q})$ from diverging to ∞. The positive lower bounds are shown in the lower-right quadrant of (a). The finite upper bounds are shown in the upper-left quadrant of (b).

Additionally, $D_{AB}^{(\alpha,\beta)}(\mathbf{P}\|\mathbf{Q}) = 0$ only if λi = 1 for all i = 1, …, n, i.e., when P = Q.

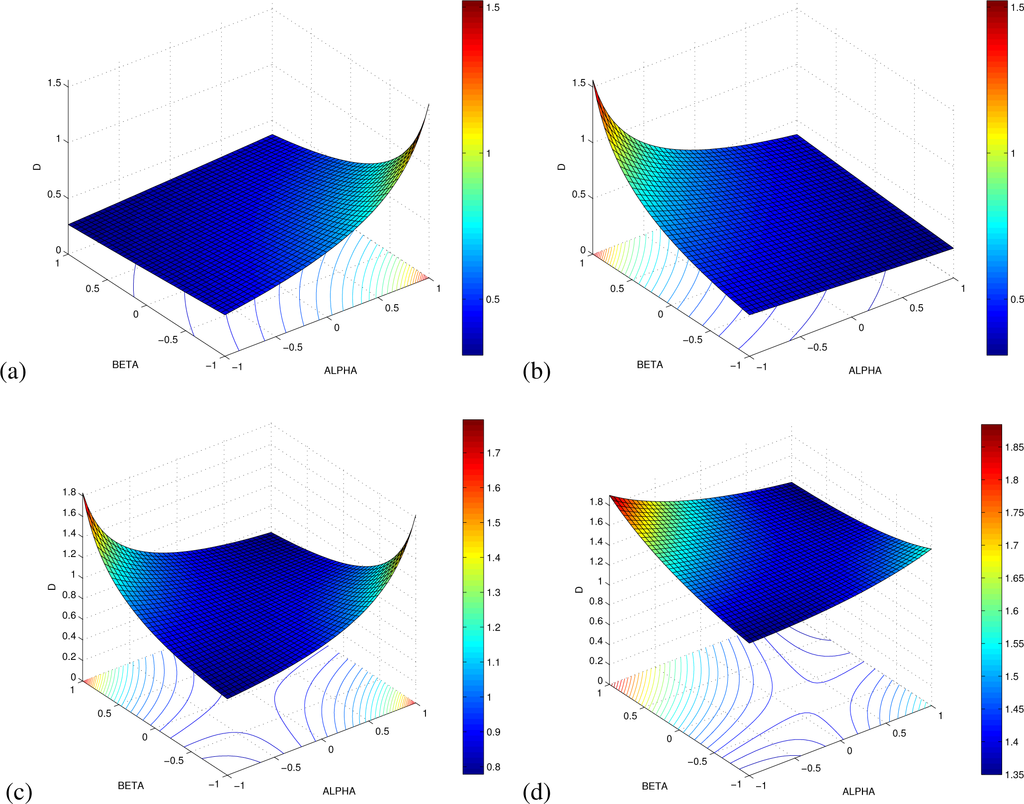

Figure 2 illustrates the typical shapes of the AB log-det divergence for different values of the eigenvalues for various choices of α and β.

Figure 2.

Two-dimensional plots of the AB log-det divergence for different eigenvalues: (a) λ = 0.4, (b) λ = 2.5, (c) λ1 = 0.4, λ2 = 2.5, (d) 10 eigenvalues uniformly randomly distributed in the range [0.5, 2].

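In general, the AB log-det divergence is not a metric distance, since the triangle inequality is not satisfied for all parameter values. However, in the special case α = β, a metric distance can be defined as the square root of the AB log-det divergence:

$$d_{AB}^{(\alpha)}(\mathbf{P}\|\mathbf{Q}) = \sqrt{D_{AB}^{(\alpha,\alpha)}(\mathbf{P}\|\mathbf{Q})} = \frac{1}{|\alpha|}\sqrt{\sum_{i=1}^{n}\log\left(\frac{\lambda_i^{\alpha} + \lambda_i^{-\alpha}}{2}\right)}.$$

Its symmetry follows from the fact that

$$D_{AB}^{(\alpha,\alpha)}(\mathbf{P}\|\mathbf{Q}) = D_{AB}^{(\alpha,\alpha)}(\mathbf{Q}\|\mathbf{P}),$$

and the triangle inequality for α = β is established in Section 4.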

Later, we will show that measures defined in this manner lead to many important and well-known divergences and metric distances, such as the LogDet Zero divergence, the Affine Invariant Riemannian Metric (AIRM), and the square root of Stein’s loss [5,6]. Moreover, new divergences can be generated, specifically the generalized Stein’s loss, the Beta-log-det divergence, and extended Hilbert metrics.

From the divergence $D_{AB}^{(\alpha,\beta)}(\mathbf{P}\|\mathbf{Q})$, a Riemannian metric and a pair of dually coupled affine connections are introduced in the manifold of positive definite matrices. Let dP be a small deviation of P, which belongs to the tangent space of the manifold at P. Calculating $D_{AB}^{(\alpha,\beta)}(\mathbf{P}\|\mathbf{P}+d\mathbf{P})$ and neglecting higher-order terms yields (see Appendix E)

$$D_{AB}^{(\alpha,\beta)}(\mathbf{P}\|\mathbf{P}+d\mathbf{P}) = \frac{1}{2}\,\mathrm{tr}\left(d\mathbf{P}\,\mathbf{P}^{-1}\,d\mathbf{P}\,\mathbf{P}^{-1}\right).$$

This gives a Riemannian metric that is common for all (α, β). Therefore, the Riemannian metric is the same for all AB log-det divergences, although the dual affine connections depend on α and β. The Riemannian metric is also the same as the Fisher information matrix of the manifold of multivariate Gaussian distributions with zero mean and covariance matrix P.
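The claim that the second-order term does not depend on (α, β) can be checked numerically. The sketch below perturbs P along an arbitrary symmetric direction dP (our own choice) and divides the divergence by ½ tr(dP P−1 dP P−1). The ratio should approach 1 for every parameter pair as the step shrinks. The helper is hypothetical. It covers only the regular case (α, β ≠ 0, α + β ≠ 0) plus α = β = 0.

```python
import numpy as np


def gen_eigvals(P, Q):
    """Eigenvalues of P Q^{-1}, obtained from the SPD matrix Q^{-1/2} P Q^{-1/2}."""
    w, V = np.linalg.eigh(Q)
    Q_inv_sqrt = (V / np.sqrt(w)) @ V.T
    return np.linalg.eigvalsh(Q_inv_sqrt @ P @ Q_inv_sqrt)


def ab_logdet_regular(P, Q, a, b):
    """AB log-det divergence for a, b != 0, a + b != 0 (plus the a = b = 0 case)."""
    lam = gen_eigvals(P, Q)
    if a == 0 and b == 0:
        return 0.5 * np.sum(np.log(lam) ** 2)
    return np.sum(np.log((a * lam**b + b * lam**(-a)) / (a + b))) / (a * b)


P = np.array([[2.0, 0.5], [0.5, 1.0]])
dP = np.array([[0.3, -0.1], [-0.1, 0.2]])   # arbitrary symmetric perturbation direction
eps = 1e-3
P_inv = np.linalg.inv(P)
metric = 0.5 * eps**2 * np.trace(dP @ P_inv @ dP @ P_inv)

params = [(1.0, 1.0), (0.5, 0.5), (2.0, -0.5), (-1.0, -3.0), (0.0, 0.0)]
for a, b in params:
    ratio = ab_logdet_regular(P, P + eps * dP, a, b) / metric
    print((a, b), ratio)
```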

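Interestingly, the Riemannian metric or geodesic distance is obtained from (9) for α = β = 0:

$$d_R(\mathbf{P}\|\mathbf{Q}) = \sqrt{2D_{AB}^{(0,0)}(\mathbf{P}\|\mathbf{Q})} = \left\|\log(\mathbf{Q}^{-1/2}\mathbf{P}\mathbf{Q}^{-1/2})\right\|_F = \sqrt{\sum_{i=1}^{n}\log^{2}(\lambda_i)}, \tag{19}$$

where λi are the eigenvalues of PQ−1.

This is also known as the AIRM. The AIRM has several important and useful theoretical properties and is probably one of the most widely used (dis)similarity measures for SPD (covariance) matrices [14,15].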
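Both expressions in (19) are straightforward to compute. The sketch below evaluates them with NumPy.

- The first uses the matrix logarithm of Q−1/2PQ−1/2.
- The second uses the (real, positive) eigenvalues of the nonsymmetric matrix PQ−1.

The sketch also checks symmetry and invariance under a congruence A(·)AT. The matrices and helper names are illustrative assumptions.

```python
import numpy as np


def spd_fun(S, f):
    """Apply a scalar function f to the eigenvalues of a symmetric matrix S."""
    lam, V = np.linalg.eigh(S)
    return (V * f(lam)) @ V.T


def airm(P, Q):
    """AIRM via the matrix logarithm: ||log(Q^{-1/2} P Q^{-1/2})||_F."""
    Q_inv_sqrt = spd_fun(Q, lambda w: 1.0 / np.sqrt(w))
    M = Q_inv_sqrt @ P @ Q_inv_sqrt
    M = 0.5 * (M + M.T)                                   # remove round-off asymmetry
    return np.linalg.norm(spd_fun(M, np.log), "fro")


def airm_from_eigs(P, Q):
    """Same distance from the eigenvalues of P Q^{-1}: sqrt(sum_i log^2 lambda_i)."""
    lam = np.linalg.eigvals(P @ np.linalg.inv(Q)).real    # real and positive in exact arithmetic
    return np.sqrt(np.sum(np.log(lam) ** 2))


P = np.array([[2.0, 0.5], [0.5, 1.0]])
Q = np.array([[1.0, 0.2], [0.2, 1.5]])
A = np.array([[1.0, 2.0], [0.0, 1.0]])                    # any nonsingular matrix
print(airm(P, Q), airm_from_eigs(P, Q))
print(airm(Q, P), airm(A @ P @ A.T, A @ Q @ A.T))
```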

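For α = β = 0.5 (and for α = β = −0.5), the recently defined and deeply analyzed S-divergence (JBLD) [6,14,15,17] is obtained:

$$D_{AB}^{(1/2,1/2)}(\mathbf{P}\|\mathbf{Q}) = 4\,D_S(\mathbf{P}\|\mathbf{Q}) = 4\left(\log\det\frac{\mathbf{P}+\mathbf{Q}}{2} - \frac{1}{2}\log\det(\mathbf{P}\mathbf{Q})\right) = 4\sum_{i=1}^{n}\log\frac{\lambda_i^{1/2} + \lambda_i^{-1/2}}{2}.$$

The S-divergence is not a metric distance. To make it a metric, we take its square root and obtain the LogDet Zero divergence, or Bhattacharyya distance [5,7,18]:

$$d_{Bh}(\mathbf{P}\|\mathbf{Q}) = \sqrt{D_{AB}^{(1/2,1/2)}(\mathbf{P}\|\mathbf{Q})} = 2\sqrt{\log\frac{\det\left(\frac{\mathbf{P}+\mathbf{Q}}{2}\right)}{\sqrt{\det(\mathbf{P})\det(\mathbf{Q})}}}. \tag{21}$$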

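Moreover, for α = 0, β ≠ 0 and for α ≠ 0, β = 0, we obtain divergences that generalize Stein’s loss (also called the Burg matrix divergence or simply the LogDet divergence):

$$D_{AB}^{(0,\beta)}(\mathbf{P}\|\mathbf{Q}) = \frac{1}{\beta^{2}}\left[\mathrm{tr}\left((\mathbf{P}\mathbf{Q}^{-1})^{\beta} - \mathbf{I}\right) - \beta\log\det(\mathbf{P}\mathbf{Q}^{-1})\right],$$

$$D_{AB}^{(\alpha,0)}(\mathbf{P}\|\mathbf{Q}) = \frac{1}{\alpha^{2}}\left[\mathrm{tr}\left((\mathbf{Q}\mathbf{P}^{-1})^{\alpha} - \mathbf{I}\right) - \alpha\log\det(\mathbf{Q}\mathbf{P}^{-1})\right].$$

For β = 1, the first reduces to Stein’s loss tr(PQ−1) − log det(PQ−1) − n; for α = 1, the second gives Stein’s loss with P and Q interchanged.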
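The special cases above can be cross-checked numerically against their determinant and trace forms. The following NumPy sketch uses example matrices of our choice and hypothetical helper names. It verifies three identities:

- D_AB^(1/2,1/2) = 4 D_S.
- The Bhattacharyya distance equals 2√D_S.
- D_AB^(0,1) equals Stein’s loss.

```python
import numpy as np


def gen_eigvals(P, Q):
    """Eigenvalues of P Q^{-1}, obtained from the SPD matrix Q^{-1/2} P Q^{-1/2}."""
    w, V = np.linalg.eigh(Q)
    Q_inv_sqrt = (V / np.sqrt(w)) @ V.T
    return np.linalg.eigvalsh(Q_inv_sqrt @ P @ Q_inv_sqrt)


def logdet(M):
    return np.linalg.slogdet(M)[1]   # M is SPD here, so the sign is +1


def s_divergence(P, Q):
    """S-divergence (JBLD): log det((P + Q)/2) - (1/2) log det(P Q)."""
    return logdet(0.5 * (P + Q)) - 0.5 * (logdet(P) + logdet(Q))


def stein_loss(P, Q):
    """Stein's loss: tr(P Q^{-1}) - log det(P Q^{-1}) - n."""
    n = P.shape[0]
    PQinv = P @ np.linalg.inv(Q)
    return np.trace(PQinv) - np.linalg.slogdet(PQinv)[1] - n


P = np.array([[2.0, 0.5], [0.5, 1.0]])
Q = np.array([[1.0, 0.2], [0.2, 1.5]])
lam = gen_eigvals(P, Q)
d_half = 4.0 * np.sum(np.log((np.sqrt(lam) + 1.0 / np.sqrt(lam)) / 2.0))  # D_AB^(1/2,1/2)
d_01 = np.sum(lam - 1.0 - np.log(lam))                                     # D_AB^(0,1)
print(d_half, 4.0 * s_divergence(P, Q))
print(np.sqrt(d_half))                  # LogDet Zero (Bhattacharyya) distance
print(d_01, stein_loss(P, Q))
```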

3. Special Cases of the AB Log-Det Divergence

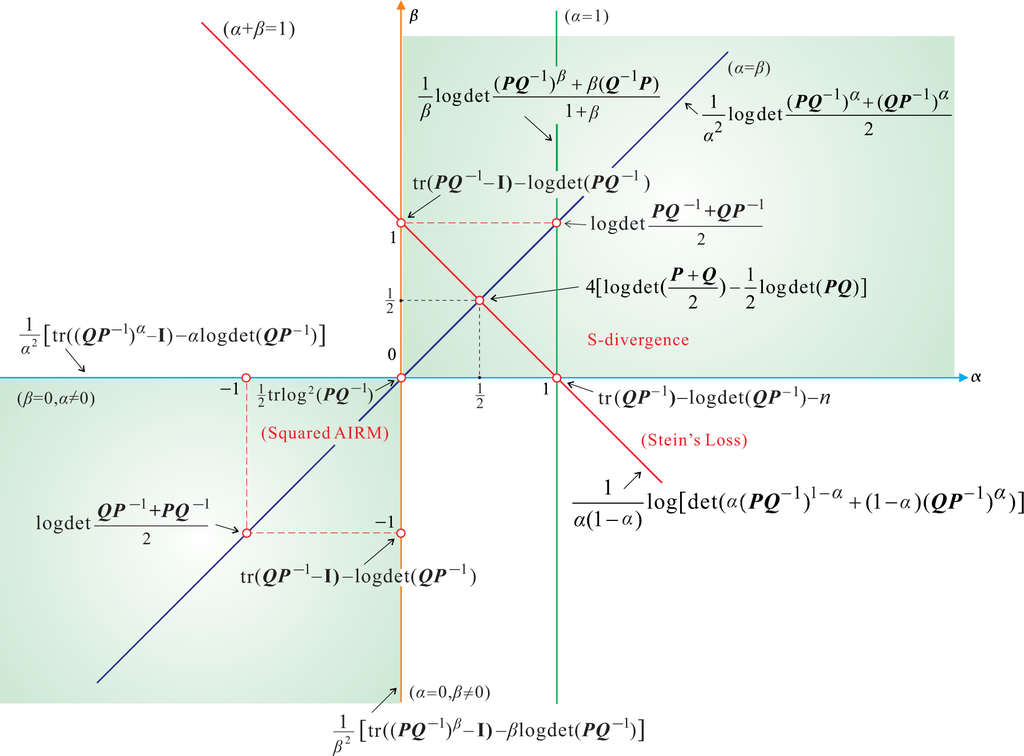

We now illustrate how a suitable choice of the (α, β) parameters simplifies the AB log-det divergence into other known divergences, such as the Alpha- and Beta-log-det divergences [5,11,18,23] (see Figure 3 and Table 1).

Figure 3.

Links between the fundamental, nonsymmetric, AB log-det divergences. On the α-β-plane, important divergences are indicated by points and lines, especially Stein’s loss and its generalization, the AIRM (Riemannian) distance, the S-divergence (JBLD), the Alpha-log-det divergence $D_A^{(\alpha)}$, and the Beta-log-det divergence $D_B^{(\beta)}$.

Table 1.

Fundamental Log-det Divergences and Distances

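When α + β = 1, the AB log-det divergence reduces to the Alpha-log-det divergence [5]:

$$D_A^{(\alpha)}(\mathbf{P}\|\mathbf{Q}) = D_{AB}^{(\alpha,1-\alpha)}(\mathbf{P}\|\mathbf{Q}) = \frac{1}{\alpha(1-\alpha)}\log\frac{\det\left(\alpha\mathbf{P} + (1-\alpha)\mathbf{Q}\right)}{\det(\mathbf{P})^{\alpha}\det(\mathbf{Q})^{1-\alpha}}.$$

On the other hand, when α = 1 and β ≥ 0, the AB log-det divergence reduces to the Beta-log-det divergence:

$$D_B^{(\beta)}(\mathbf{P}\|\mathbf{Q}) = D_{AB}^{(1,\beta)}(\mathbf{P}\|\mathbf{Q}) = \frac{1}{\beta}\log\det\left(\frac{(\mathbf{P}\mathbf{Q}^{-1})^{\beta} + \beta\,\mathbf{Q}\mathbf{P}^{-1}}{1+\beta}\right) = \frac{1}{\beta}\sum_{i=1}^{n}\log\left(\frac{\lambda_i^{\beta} + \beta\lambda_i^{-1}}{1+\beta}\right).$$

Note that

$$D_B^{(-1)}(\mathbf{P}\|\mathbf{Q}) = \sum_{i=1}^{n}\log\left(\frac{\lambda_i}{1 + \log\lambda_i}\right),$$

and the Beta-log-det divergence is well defined for β = −1 only if all the eigenvalues satisfy λi > e−1 ≈ 0.368 (e ≈ 2.72).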

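It is interesting to note that the Beta-log-det divergence for β → ∞ leads to a new divergence that is robust with respect to noise. This new divergence is given by

$$D_B^{(\infty)}(\mathbf{P}\|\mathbf{Q}) = \lim_{\beta\to\infty}D_B^{(\beta)}(\mathbf{P}\|\mathbf{Q}) = \sum_{i=1}^{n}\log\left(\max\{1, \lambda_i\}\right).$$

This can easily be shown by applying L’Hôpital’s rule. Let the set Ω = {i : λi > 1} gather the indices of those eigenvalues greater than one. We can then express this divergence more formally as

$$D_B^{(\infty)}(\mathbf{P}\|\mathbf{Q}) = \sum_{i\in\Omega}\log\lambda_i.$$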

The Alpha-log-det divergence gives the standard Stein’s losses (Burg matrix divergences) for α = 1 and α = 0, and the Beta-log-det divergence is equivalent to Stein’s loss for β = 0.

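Another important class of divergences is the Power log-det divergences, obtained for α = β ∈ ℝ:

$$D_P^{(\alpha)}(\mathbf{P}\|\mathbf{Q}) = D_{AB}^{(\alpha,\alpha)}(\mathbf{P}\|\mathbf{Q}) = \frac{1}{\alpha^{2}}\log\det\left(\frac{(\mathbf{P}\mathbf{Q}^{-1})^{\alpha} + (\mathbf{Q}\mathbf{P}^{-1})^{\alpha}}{2}\right) = \frac{1}{\alpha^{2}}\sum_{i=1}^{n}\log\left(\frac{\lambda_i^{\alpha} + \lambda_i^{-\alpha}}{2}\right).$$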
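Two of the reductions in this section are worth checking numerically. The first is the determinant form of the Alpha-log-det divergence. The second is the β → ∞ limit of the Beta-log-det divergence. For large β, the term λiβ overflows in floating point, so the sketch below evaluates log(λiβ + βλi−1) with `numpy.logaddexp`. This is a numerical precaution of ours, not part of the definition. The matrices and helper names are illustrative.

```python
import numpy as np


def gen_eigvals(P, Q):
    """Eigenvalues of P Q^{-1}, obtained from the SPD matrix Q^{-1/2} P Q^{-1/2}."""
    w, V = np.linalg.eigh(Q)
    Q_inv_sqrt = (V / np.sqrt(w)) @ V.T
    return np.linalg.eigvalsh(Q_inv_sqrt @ P @ Q_inv_sqrt)


def logdet(M):
    return np.linalg.slogdet(M)[1]   # M is SPD here, so the sign is +1


def ab_from_eigs(lam, a, b):
    """AB log-det divergence from eigenvalues (a, b != 0, a + b != 0, within Theorem 2 bounds)."""
    return np.sum(np.log((a * lam**b + b * lam**(-a)) / (a + b))) / (a * b)


def alpha_logdet(P, Q, a):
    """Determinant form of the Alpha-log-det divergence D_AB^(a, 1-a), 0 < a < 1."""
    return (logdet(a * P + (1 - a) * Q) - a * logdet(P) - (1 - a) * logdet(Q)) / (a * (1 - a))


def beta_logdet(lam, b):
    """Beta-log-det divergence D_AB^(1, b) for b > 0, evaluated without overflow."""
    # log(lam**b + b / lam) = logaddexp(b * log(lam), log(b) - log(lam))
    terms = np.logaddexp(b * np.log(lam), np.log(b) - np.log(lam)) - np.log1p(b)
    return np.sum(terms) / b


P = np.array([[2.0, 0.5], [0.5, 1.0]])
Q = np.array([[1.0, 0.2], [0.2, 1.5]])
lam = gen_eigvals(P, Q)
print(alpha_logdet(P, Q, 0.3), ab_from_eigs(lam, 0.3, 0.7))
for b in [1.0, 10.0, 1e3, 1e5]:
    print(b, beta_logdet(lam, b))
print("beta -> inf limit:", np.sum(np.log(np.maximum(1.0, lam))))
```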

4. Properties of the AB Log-Det Divergence

The AB log-det divergence has several important and useful theoretical properties for SPD matrices.

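- Nonnegativity; given by
$$D_{AB}^{(\alpha,\beta)}(\mathbf{P}\|\mathbf{Q}) \geq 0.$$

- Identity of indiscernibles (see Theorems 1 and 2); given by
$$D_{AB}^{(\alpha,\beta)}(\mathbf{P}\|\mathbf{Q}) = 0 \iff \mathbf{P} = \mathbf{Q}.$$

- Continuity and smoothness of $D_{AB}^{(\alpha,\beta)}(\mathbf{P}\|\mathbf{Q})$ as a function of α ∈ ℝ and β ∈ ℝ, including the singular cases α = 0 or β = 0, and α = −β (see Figure 2).

- The divergence can be expressed in terms of the diagonal matrix Λ = diag{λ1, λ2, …, λn} with the eigenvalues of PQ−1, in the form
$$D_{AB}^{(\alpha,\beta)}(\mathbf{P}\|\mathbf{Q}) = D_{AB}^{(\alpha,\beta)}(\boldsymbol{\Lambda}\|\mathbf{I}).$$

- Scaling invariance; given by
$$D_{AB}^{(\alpha,\beta)}(c\mathbf{P}\|c\mathbf{Q}) = D_{AB}^{(\alpha,\beta)}(\mathbf{P}\|\mathbf{Q})$$
for any c > 0.

- Relative invariance for scale transformation: for given α and β and a nonzero scaling factor ω ≠ 0, we have
$$D_{AB}^{(\omega\alpha,\omega\beta)}(\mathbf{P}\|\mathbf{Q}) = \frac{1}{\omega^{2}}\,D_{AB}^{(\alpha,\beta)}\left((\mathbf{Q}^{-1/2}\mathbf{P}\mathbf{Q}^{-1/2})^{\omega}\,\|\,\mathbf{I}\right).$$

- Dual-invariance under inversion (for ω = −1); given by
$$D_{AB}^{(-\alpha,-\beta)}(\mathbf{P}\|\mathbf{Q}) = D_{AB}^{(\alpha,\beta)}(\mathbf{P}^{-1}\|\mathbf{Q}^{-1}).$$

- Dual symmetry; given by
$$D_{AB}^{(\alpha,\beta)}(\mathbf{P}\|\mathbf{Q}) = D_{AB}^{(\beta,\alpha)}(\mathbf{Q}\|\mathbf{P}).$$

- Affine invariance (invariance under congruence transformations); given by
$$D_{AB}^{(\alpha,\beta)}(\mathbf{A}\mathbf{P}\mathbf{A}^{T}\|\mathbf{A}\mathbf{Q}\mathbf{A}^{T}) = D_{AB}^{(\alpha,\beta)}(\mathbf{P}\|\mathbf{Q})$$
for any nonsingular matrix A ∈ ℝn×n.

- Divergence lower bound; given by
$$D_{AB}^{(\alpha,\beta)}(\mathbf{X}^{T}\mathbf{P}\mathbf{X}\|\mathbf{X}^{T}\mathbf{Q}\mathbf{X}) \leq D_{AB}^{(\alpha,\beta)}(\mathbf{P}\|\mathbf{Q})$$
for any full-column-rank matrix X ∈ ℝn×m with m ≤ n.

- Scaling invariance under the Kronecker product; given by
$$D_{AB}^{(\alpha,\beta)}(\mathbf{Z}\otimes\mathbf{P}\|\mathbf{Z}\otimes\mathbf{Q}) = n\,D_{AB}^{(\alpha,\beta)}(\mathbf{P}\|\mathbf{Q})$$
for any symmetric and positive definite matrix Z of rank n.

- Double-sided orthogonal Procrustes property. Consider an orthogonal matrix Ω ∈ O(n) and two symmetric positive definite matrices P and Q, with respective eigenvalue matrices ΛP and ΛQ whose elements are sorted in descending order. The AB log-det divergence between ΩTPΩ and Q is globally minimized when their eigenspaces are aligned, i.e.,
$$\min_{\boldsymbol{\Omega}\in O(n)} D_{AB}^{(\alpha,\beta)}(\boldsymbol{\Omega}^{T}\mathbf{P}\boldsymbol{\Omega}\|\mathbf{Q}) = D_{AB}^{(\alpha,\beta)}(\boldsymbol{\Lambda}_P\|\boldsymbol{\Lambda}_Q).$$

- Triangle inequality (metric distance condition) for α = β ∈ ℝ. The previous property implies the validity of the triangle inequality for arbitrary positive definite matrices, i.e.,
$$\sqrt{D_{AB}^{(\alpha,\alpha)}(\mathbf{P}\|\mathbf{Q})} \leq \sqrt{D_{AB}^{(\alpha,\alpha)}(\mathbf{P}\|\mathbf{Z})} + \sqrt{D_{AB}^{(\alpha,\alpha)}(\mathbf{Z}\|\mathbf{Q})}.$$
The proof of this property exploits the metric characterization of the square root of the S-divergence, first proposed by S. Sra in [6,17] for arbitrary SPD matrices.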
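Several of the invariances listed above reduce to statements about the eigenvalues of PQ−1, so they are easy to spot-check. The sketch below does so for one regular parameter pair (α, β) = (0.4, 1.3), chosen arbitrarily, and for the properties of scaling, affine invariance, dual symmetry, dual-invariance under inversion, and Kronecker scaling. A numerical check of this kind illustrates the identities; it does not prove them.

```python
import numpy as np


def gen_eigvals(P, Q):
    """Eigenvalues of P Q^{-1}, obtained from the SPD matrix Q^{-1/2} P Q^{-1/2}."""
    w, V = np.linalg.eigh(Q)
    Q_inv_sqrt = (V / np.sqrt(w)) @ V.T
    return np.linalg.eigvalsh(Q_inv_sqrt @ P @ Q_inv_sqrt)


def ab_logdet(P, Q, a, b):
    """AB log-det divergence (3); assumes a, b != 0, a + b != 0 and same-sign a, b."""
    lam = gen_eigvals(P, Q)
    return np.sum(np.log((a * lam**b + b * lam**(-a)) / (a + b))) / (a * b)


P = np.array([[2.0, 0.5], [0.5, 1.0]])
Q = np.array([[1.0, 0.2], [0.2, 1.5]])
A = np.array([[1.0, 2.0], [0.0, 1.0]])      # nonsingular
Z = np.array([[3.0, 1.0], [1.0, 2.0]])      # SPD, rank 2
a, b = 0.4, 1.3
d = ab_logdet(P, Q, a, b)

checks = {
    "scaling": ab_logdet(3.0 * P, 3.0 * Q, a, b),
    "affine": ab_logdet(A @ P @ A.T, A @ Q @ A.T, a, b),
    "dual symmetry": ab_logdet(Q, P, b, a),
    "inversion": ab_logdet(np.linalg.inv(P), np.linalg.inv(Q), -a, -b),
    "Kronecker / n": ab_logdet(np.kron(Z, P), np.kron(Z, Q), a, b) / Z.shape[0],
}
for name, value in checks.items():
    print(f"{name:>14}: {value:.12f}  vs  {d:.12f}")
```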

Several of these properties have already been proved for the specific cases of α and β that lead to the S-divergence (α = β = 1/2) [6], the Alpha log-det divergence (0 ≤ α ≤ 1, β = 1 − α) [5], and the Riemannian metric (α = β = 0) [28, Chapter 6]. We refer the reader to Appendix F for their proofs when α, β ∈ ℝ.

5. Symmetrized AB Log-Det Divergences

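The basic AB log-det divergence is asymmetric; that is, $D_{AB}^{(\alpha,\beta)}(\mathbf{P}\|\mathbf{Q}) \neq D_{AB}^{(\alpha,\beta)}(\mathbf{Q}\|\mathbf{P})$, except in the special case α = β.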

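In general, there are several ways to symmetrize a divergence; for example, Type-1,

$$D_{S1}(\mathbf{P}\|\mathbf{Q}) = \frac{1}{2}\left[D(\mathbf{P}\|\mathbf{Q}) + D(\mathbf{Q}\|\mathbf{P})\right],$$

and Type-2, based on the Jensen-Shannon symmetrization (which is too complex for log-det divergences),

$$D_{S2}(\mathbf{P}\|\mathbf{Q}) = \frac{1}{2}\left[D\left(\mathbf{P}\,\Big\|\,\frac{\mathbf{P}+\mathbf{Q}}{2}\right) + D\left(\mathbf{Q}\,\Big\|\,\frac{\mathbf{P}+\mathbf{Q}}{2}\right)\right].$$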

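The Type-1 symmetric AB log-det divergence is defined as

$$D_{ABS}^{(\alpha,\beta)}(\mathbf{P}\|\mathbf{Q}) = \frac{1}{2}\left[D_{AB}^{(\alpha,\beta)}(\mathbf{P}\|\mathbf{Q}) + D_{AB}^{(\alpha,\beta)}(\mathbf{Q}\|\mathbf{P})\right].$$

Equivalently, this can be expressed in terms of the eigenvalues of PQ−1 in the form

$$D_{ABS}^{(\alpha,\beta)}(\mathbf{P}\|\mathbf{Q}) = \frac{1}{2\alpha\beta}\sum_{i=1}^{n}\log\left(\frac{(\alpha\lambda_i^{\beta} + \beta\lambda_i^{-\alpha})(\alpha\lambda_i^{-\beta} + \beta\lambda_i^{\alpha})}{(\alpha+\beta)^{2}}\right).$$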

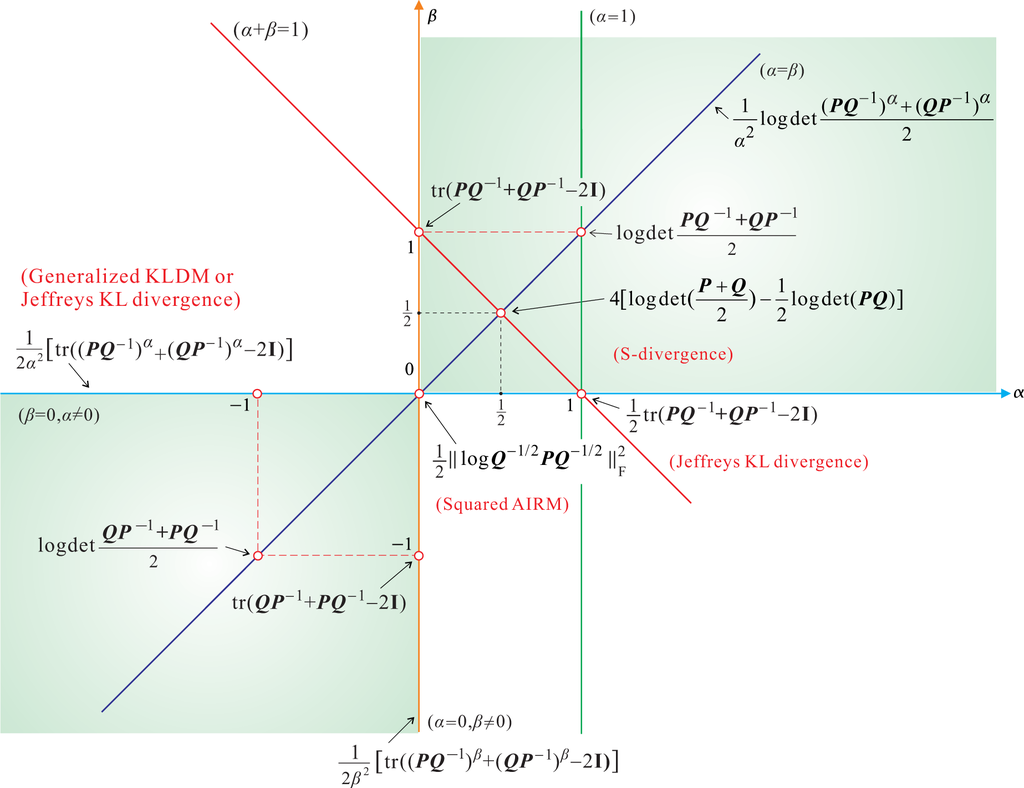

We consider several well-known symmetric log-det divergences (see Figure 4); in particular, we consider the following:

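- For α = 0 and β = ±1, or for β = 0 and α = ±1, we obtain the KLDM (symmetrized KL Density Metric), also known as the symmetric Stein’s loss or Jeffreys KL divergence [3]:
$$D_J(\mathbf{P}\|\mathbf{Q}) = \frac{1}{2}\,\mathrm{tr}\left(\mathbf{P}\mathbf{Q}^{-1} + \mathbf{Q}\mathbf{P}^{-1} - 2\mathbf{I}\right) = \frac{1}{2}\sum_{i=1}^{n}\left(\sqrt{\lambda_i} - \frac{1}{\sqrt{\lambda_i}}\right)^{2}.$$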
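The following sketch computes the Type-1 symmetrization from the eigenvalues of PQ−1. D(Q‖P) uses the reciprocal eigenvalues 1/λi, because the eigenvalues of QP−1 are the reciprocals of those of PQ−1. The averaging convention (factor 1/2) is our assumption. With that convention, the case α = 0, β = 1 coincides with ½ tr(PQ−1 + QP−1 − 2I). The helper names are hypothetical.

```python
import numpy as np


def gen_eigvals(P, Q):
    """Eigenvalues of P Q^{-1}, obtained from the SPD matrix Q^{-1/2} P Q^{-1/2}."""
    w, V = np.linalg.eigh(Q)
    Q_inv_sqrt = (V / np.sqrt(w)) @ V.T
    return np.linalg.eigvalsh(Q_inv_sqrt @ P @ Q_inv_sqrt)


def ab_from_eigs(lam, a, b):
    """AB log-det divergence from eigenvalues; a = 0 < b uses the Stein-type limit."""
    if a == 0:
        return np.sum(lam**b - 1.0 - b * np.log(lam)) / b**2
    return np.sum(np.log((a * lam**b + b * lam**(-a)) / (a + b))) / (a * b)


def ab_sym(P, Q, a, b):
    """Type-1 symmetrization: average of D(P||Q) and D(Q||P); the latter uses 1/lam."""
    lam = gen_eigvals(P, Q)
    return 0.5 * (ab_from_eigs(lam, a, b) + ab_from_eigs(1.0 / lam, a, b))


def kldm(P, Q):
    """Jeffreys KL / symmetric Stein's loss: (1/2) tr(P Q^{-1} + Q P^{-1} - 2 I)."""
    n = P.shape[0]
    return 0.5 * np.trace(P @ np.linalg.inv(Q) + Q @ np.linalg.inv(P) - 2.0 * np.eye(n))


P = np.array([[2.0, 0.5], [0.5, 1.0]])
Q = np.array([[1.0, 0.2], [0.2, 1.5]])
print(ab_sym(P, Q, 0.0, 1.0), kldm(P, Q))
print(ab_sym(P, Q, 0.5, 1.5), ab_sym(Q, P, 0.5, 1.5))   # symmetric in P and Q
```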

Figure 4.

Links between the fundamental symmetric AB log-det divergences. On the (α, β)-plane, the special cases of particular divergences are indicated by points: the Jeffreys KL divergence (KLDM) or symmetric Stein’s loss and its generalization, the S-divergence (JBLD), and the Power log-det divergence.

One important potential application of the AB log-det divergence is to generate conditionally positive definite kernels, which are widely applied to classification and clustering. For a specific set of parameters, the AB log-det divergence gives rise to a Hilbert space embedding in the form of a Radial Basis Function (RBF) kernel [22]. More specifically, the AB log-det kernel is defined by

$$K(\mathbf{P},\mathbf{Q}) = \exp\left(-\gamma\,D_{ABS}^{(\alpha,\beta)}(\mathbf{P}\|\mathbf{Q})\right)$$

for selected values of γ > 0 and α, β > 0 or α, β < 0 that make the kernel positive definite.
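Assembling such a kernel over a set of SPD matrices is a double loop over pairs. The sketch below uses the Type-1 symmetrized divergence so that the Gram matrix is symmetric. That choice, the parameter values, and the three example matrices are our assumptions. Positive definiteness is not guaranteed for arbitrary (α, β, γ), so the sketch only prints the spectrum for inspection.

```python
import numpy as np


def gen_eigvals(P, Q):
    """Eigenvalues of P Q^{-1}, obtained from the SPD matrix Q^{-1/2} P Q^{-1/2}."""
    w, V = np.linalg.eigh(Q)
    Q_inv_sqrt = (V / np.sqrt(w)) @ V.T
    return np.linalg.eigvalsh(Q_inv_sqrt @ P @ Q_inv_sqrt)


def ab_sym(P, Q, a, b):
    """Type-1 symmetrized AB log-det divergence for nonzero, same-sign a and b."""
    lam = gen_eigvals(P, Q)
    f = lambda x: np.sum(np.log((a * x**b + b * x**(-a)) / (a + b))) / (a * b)
    return 0.5 * (f(lam) + f(1.0 / lam))


def ab_kernel_matrix(mats, a, b, gamma):
    """Gram matrix K[i, j] = exp(-gamma * D_ABS(mats[i] || mats[j]))."""
    m = len(mats)
    K = np.empty((m, m))
    for i in range(m):
        for j in range(m):
            K[i, j] = np.exp(-gamma * ab_sym(mats[i], mats[j], a, b))
    return K


factors = [np.array([[1.0, 0.5], [0.0, 1.0]]),
           np.array([[0.3, -1.0], [0.8, 0.2]]),
           np.array([[2.0, 0.1], [0.1, 0.5]])]
mats = [X @ X.T + np.eye(2) for X in factors]      # SPD example matrices
K = ab_kernel_matrix(mats, 0.5, 1.0, gamma=0.5)
print(np.round(K, 4))
print(np.linalg.eigvalsh(K))   # inspect; positive definiteness depends on (alpha, beta, gamma)
```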

6. Similarity Measures for Semidefinite Covariance Matrices in Reproducing Kernel Hilbert Spaces

There are many practical applications for which the underlying covariance matrices are symmetric but only positive semidefinite, i.e., their columns do not span the whole space. For instance, in classification problems, assume two classes with sets of observation vectors {x1, …, xT} and {y1, …, yT} in ℝm; we may then wish to find a principled way to evaluate the ensemble similarity of the data from their sample similarity. The problem of modeling the similarity between two ensembles was studied by Zhou and Chellappa [32]. For this purpose, they proposed several probabilistic divergence measures between positive semidefinite covariance matrices in a reproducing kernel Hilbert space (RKHS) of finite dimensionality. Their strategy was later extended to image classification problems [33] and formalized for the Log-Hilbert-Schmidt metric between infinite-dimensional RKHS covariance operators [34].

In this section, we propose the unifying framework of the AB log-det divergences to reinterpret and extend the similarity measures obtained in [32,33] for semidefinite covariance matrices in the finite-dimensional RKHS.

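We shall assume that the nonlinear functions Φx : ℝm → ℝn and Φy : ℝm → ℝn (where n > m) respectively map the data from each of the classes into their higher-dimensional feature spaces. We implicitly define the feature matrices as

$$\boldsymbol{\Phi}_x = \left(\Phi_x(\mathbf{x}_1), \ldots, \Phi_x(\mathbf{x}_T)\right) \in \mathbb{R}^{n\times T}, \qquad \boldsymbol{\Phi}_y = \left(\Phi_y(\mathbf{y}_1), \ldots, \Phi_y(\mathbf{y}_T)\right) \in \mathbb{R}^{n\times T},$$

and the sample covariance matrices of the observations in the feature space as

$$\mathbf{C}_x = \frac{1}{T}\boldsymbol{\Phi}_x\mathbf{J}_T\boldsymbol{\Phi}_x^{T} \quad\text{and}\quad \mathbf{C}_y = \frac{1}{T}\boldsymbol{\Phi}_y\mathbf{J}_T\boldsymbol{\Phi}_y^{T},$$

where $\mathbf{J}_T = \mathbf{I}_T - \frac{1}{T}\mathbf{1}_T\mathbf{1}_T^{T}$ denotes the T × T centering matrix.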

In practice, it is common to consider low-rank approximations of sample covariance matrices. For a given basis Vx = (v1, …, vr) ∈ ℝT×r of the principal subspace of

, we can define the projection matrix

and redefine the covariance matrices as

Assuming the Gaussianity of the data in the feature space, the mean vector and covariance matrix are sufficient statistics and a natural measure of dissimilarity between Φx and Φy should be a function of the first and second order statistics of the features. Furthermore, in most practical problems the mean value should be ignored due to robustness considerations, and then the comparison reduces to the evaluation of a suitable dissimilarity measure between Cx and Cy.

The dimensionality n of the feature space is typically much larger than r, so the rank of the covariance matrices in (48) will be r ≪ n; therefore, both matrices are positive semidefinite. The AB log-det divergence is infinite when the range spaces of the covariance matrices Cx and Cy differ. This property is useful in applications that require an automatic constraint on the range of the estimates [22], but it prevents any practical comparison when the ranges of the covariance matrices differ. The next subsections present two different strategies to address this challenging problem.

6.1. Measuring the Dissimilarity with a Divergence Lower-Bound

One possible strategy is to use dissimilarity measures that ignore the contribution to the divergence caused by the rank deficiency of the covariance matrices. This is useful when the covariance matrices are compared after applying a congruence transformation that aligns their range spaces. It can be implemented by retaining only the finite and nonzero eigenvalues of the matrix pencil (Cx, Cy).

Let Ir denote the identity matrix of size r and (·)+ the Moore-Penrose pseudoinverse operator. Consider the eigenvalue decomposition of the symmetric matrix

where U is a semi-orthogonal matrix for which the columns are the eigenvectors associated with the positive eigenvalues of the matrix pencil and

is a diagonal matrix with the eigenvalues sorted in descending order.

Note that the tall matrix

diagonalizes the covariance matrices of the two classes

and compresses them to a common range space. The compression automatically discards the singular and infinite eigenvalues of the matrix pencil (Cx, Cy), while it retains the finite and positive eigenvalues. In this way, the following dissimilarity measures can be obtained:

Note, however, that these measures should not be understood as a strict comparison of the original covariance matrices, but rather as an indirect comparison through their respective compressed versions WTCxW and WTCyW.

With the help of the kernel trick, the next lemma shows that the evaluation of the dissimilarity measures

and

, does not require the explicit computation of the covariance matrices or of the feature vectors.

Lemma 1. Given the Gram matrix or kernel matrix of the input vectors

and the matrices Vx and Vy which respectively span the principal subspaces of Kxx and Kyy, the positive and finite eigenvalues of the matrix pencil can be expressed by

Proof. The proof of the lemma relies on the property that, for any pair of m × n matrices A and B, the nonzero eigenvalues of ABT and of BTA are the same (see [30, p. 11]). Then, there is an equality between the following matrices of positive eigenvalues

Taking into account the structure of the covariance matrices in (48), such eigenvalues can be explicitly obtained in terms of the kernel matrices
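The eigenvalue property used in this proof is what makes the kernel trick work. The nonzero eigenvalues of a feature-space covariance can be read off a small T × T matrix built from inner products. The sketch below checks this in the simplest setting, A = B = ΦJT. The random feature matrix Φ is a hypothetical stand-in for explicitly mapped data.

```python
import numpy as np

rng = np.random.default_rng(0)
n, T = 6, 4                                    # feature dimension and number of samples
Phi = rng.standard_normal((n, T))              # hypothetical feature matrix (columns = mapped samples)
J = np.eye(T) - np.ones((T, T)) / T            # T x T centering matrix
C = Phi @ J @ Phi.T / T                        # n x n covariance in feature space
K = J @ (Phi.T @ Phi) @ J / T                  # T x T centered Gram (kernel) matrix, same scaling

r = T - 1                                      # rank after centering (generic case)
ev_C = np.sort(np.linalg.eigvalsh(C))[::-1]
ev_K = np.sort(np.linalg.eigvalsh(K))[::-1]
print(ev_C[:r])
print(ev_K[:r])                                # the nonzero eigenvalues coincide
```

Only inner products Φ(xi)TΦ(xj) enter K, which is why kernel evaluations suffice in place of explicit feature vectors.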

6.2. Similarity Measures Between Regularized Covariance Descriptors

Several authors consider a completely different strategy, which consists of regularizing the original covariance matrices [32–34]. In this way, the null eigenvalues of the covariance matrices Cx and Cy are replaced by a small positive constant ρ > 0 to obtain the “regularized” positive definite matrices

and

, respectively. The modification can be illustrated by comparing the eigendecompositions

Then, the dissimilarity measure of the data in the feature space can be obtained just by measuring a divergence between the SPD matrices

and

. Again, the idea is to compute the value of the divergence without requiring the evaluation of the feature vectors but by using the available kernels.

Using the properties of the trace and the determinants, a practical formula for the log-det Alpha-divergence has been obtained in [32,33] for 0 < α < 1. The resulting expression

is a function of the principal eigenvalues of the kernels

and the matrix

where

The evaluation of the divergence outside the interval 0 < α < 1, or when β ≠ 1 − α, is not covered by this formula and, in general, requires knowledge of the eigenvalues of the matrix

. However, different analyses are necessary depending on the dimension of the intersection of the range spaces of the two covariance matrices Cx and Cy. In the following, we study the two most general scenarios.

Case (A) The range spaces of Cx and Cy are the same.

In this case

and the eigenvalues of the matrix

coincide with the nonzero eigenvalues of

except for (n − r) additional eigenvalues which are equal to 1. Then, using the equivalence between (57) and (60), the divergence reduces to the following form

Case (B) The range spaces of Cx and Cy are disjoint.

In practice, for n ≫ r this is the most probable scenario. In such a case, the r largest eigenvalues of the matrix

diverge as ρ tends to zero. Hence, these eigenvalues cannot be bounded from above, and for this reason it makes no sense to study the case sign(α) ≠ sign(β); in this section, we therefore assume that sign(α) = sign(β).
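A toy example makes Case (B) concrete. The two rank-2 covariances below are our own choice; they live on disjoint coordinate subspaces of ℝ4. The regularization replaces their null eigenvalues by ρ, as described above, and the generalized eigenvalues of the regularized pair are then 1/ρ, 2/ρ, ρ, and ρ/3. The two largest diverge as ρ → 0, and so does the AB log-det divergence (evaluated here for α = β = 1/2).

```python
import numpy as np


def gen_eigvals(P, Q):
    """Eigenvalues of P Q^{-1}, obtained from the SPD matrix Q^{-1/2} P Q^{-1/2}."""
    w, V = np.linalg.eigh(Q)
    Q_inv_sqrt = (V / np.sqrt(w)) @ V.T
    return np.linalg.eigvalsh(Q_inv_sqrt @ P @ Q_inv_sqrt)


def regularize(C, rho, tol=1e-12):
    """Replace the (numerically) null eigenvalues of a PSD matrix C by rho > 0."""
    lam, V = np.linalg.eigh(C)
    lam = np.where(lam > tol, lam, rho)
    return (V * lam) @ V.T


Cx = np.diag([1.0, 2.0, 0.0, 0.0])   # rank r = 2
Cy = np.diag([0.0, 0.0, 1.0, 3.0])   # rank r = 2, range disjoint from that of Cx

rhos = [1e-2, 1e-4, 1e-6]
d_values = []
for rho in rhos:
    lam = gen_eigvals(regularize(Cx, rho), regularize(Cy, rho))
    d_half = 4.0 * np.sum(np.log((np.sqrt(lam) + 1.0 / np.sqrt(lam)) / 2.0))  # D_AB^(1/2,1/2)
    d_values.append(d_half)
    print(rho, np.sort(lam)[::-1], d_half)
```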

Theorem 3. When the range spaces of Cx and Cy are disjoint, and for a sufficiently small value of ρ > 0, the AB log-det divergence is closely approximated by the formula

where (and respectively by interchanging x and y) denotes the matrix

The proof of the theorem is presented in the Appendix G. The eigenvalues of the matrices

and

, estimate the r largest eigenvalues of

and of its inverse

, respectively. The relative error in the estimation of these eigenvalues is of order O(ρ), i.e., it gradually improves as ρ tends to zero. The approximation is asymptotically exact, and

and

converge respectively to the conditional covariance matrices

while ρ I converges to the zero matrix.

In the limit, the value of the divergence is not very useful because it diverges to infinity as ρ → 0, though there are some practical ways to circumvent this limitation. For example, when α = 0 or β = 0, the divergence can be scaled by a suitable power of ρ to make it finite (see Section 3.3.1 in [32]). The scaled form of the divergence between the regularized covariance matrices is

Examples of scaled divergences are the following versions of Stein’s losses

as well as the Jeffreys KL family of symmetric divergences (cf. Equation (23) in [33])

In other cases, when the scaling is not sufficient to obtain a finite and practical dissimilarity measure, an affine transformation may be used. The idea is to identify the divergent part of

as ρ → 0 and use its value as a reference for the evaluation of the dissimilarity. For α, β ≥ 0, the relative AB log-det dissimilarity measure is the limiting value of the affine transformation

After its extension by continuity (including as special cases α = 0 or β = 0), the function

provides simple formulas to measure the relative dissimilarity between symmetric positive semidefinite matrices Cx and Cy. However, it should be taken into account that, as a consequence of its relative character, this function is not bounded below and can achieve negative values.

7. Modifications and Generalizations of AB Log-Det Divergences and Gamma Matrix Divergences

The divergence (3) discussed in the previous sections can be extended and modified in several ways. It is interesting to note that the positive eigenvalues of PQ−1 play a role similar to that of the ratios (pi/qi) and (qi/pi) in the wide class of standard discrete divergences (see, for example, [11,12]). Hence, we can apply such divergences to formulate a modified log-det divergence as a function of the eigenvalues λi.

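For example, consider the Itakura-Saito distance defined by

$$D_{IS}(\mathbf{p}\|\mathbf{q}) = \sum_{i}\left(\frac{p_i}{q_i} + \log\frac{q_i}{p_i} - 1\right).$$

It is worth noting that we can generate a large class of divergences or cost functions using Csiszár f-functions [13,24,25]. By replacing pi/qi with λi and qi/pi with λi−1, we obtain the log-det divergence for SPD matrices:

$$D_{IS}(\mathbf{P}\|\mathbf{Q}) = \sum_{i=1}^{n}\left(\lambda_i - \log\lambda_i - 1\right) = \mathrm{tr}(\mathbf{P}\mathbf{Q}^{-1}) - \log\det(\mathbf{P}\mathbf{Q}^{-1}) - n,$$

which is consistent with (24) and (26).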

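As another example, consider the discrete Gamma divergence [11,12] defined by

$$D_G^{(\alpha,\beta)}(\mathbf{p}\|\mathbf{q}) = \frac{1}{\beta(\alpha+\beta)}\log\sum_{i} p_i^{\alpha+\beta} + \frac{1}{\alpha(\alpha+\beta)}\log\sum_{i} q_i^{\alpha+\beta} - \frac{1}{\alpha\beta}\log\sum_{i} p_i^{\alpha}q_i^{\beta},$$

which, when α = 1 and β → −1, simplifies to the following form [11]:

$$D_G^{(1,-1)}(\mathbf{p}\|\mathbf{q}) = \log\left(\frac{1}{n}\sum_{i=1}^{n}\frac{p_i}{q_i}\right) - \frac{1}{n}\sum_{i=1}^{n}\log\frac{p_i}{q_i}.$$

Hence, by substituting pi/qi with λi, we derive a new Gamma matrix divergence for SPD matrices:

$$D_G^{(1,-1)}(\mathbf{P}\|\mathbf{Q}) = \log\frac{M_1\{\lambda_i\}}{M_0\{\lambda_i\}} = \log\frac{\frac{1}{n}\sum_{i=1}^{n}\lambda_i}{\left(\prod_{i=1}^{n}\lambda_i\right)^{1/n}}, \tag{86}$$

where M1 denotes the arithmetic mean, and M0 denotes the geometric mean.

Interestingly, (86) can be expressed equivalently as

$$D_G^{(1,-1)}(\mathbf{P}\|\mathbf{Q}) = \log\left(\frac{1}{n}\,\mathrm{tr}(\mathbf{P}\mathbf{Q}^{-1})\right) - \frac{1}{n}\log\det(\mathbf{P}\mathbf{Q}^{-1}).$$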

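Similarly, using the symmetric Gamma divergence defined in [11,12],

$$D_{GS}^{(\alpha,\beta)}(\mathbf{p}\|\mathbf{q}) = \frac{1}{\alpha\beta}\log\frac{\sum_{i} p_i^{\alpha+\beta}\,\sum_{i} q_i^{\alpha+\beta}}{\sum_{i} p_i^{\alpha}q_i^{\beta}\,\sum_{i} p_i^{\beta}q_i^{\alpha}},$$

for α = 1 and β → −1, and by substituting the ratios pi/qi with λi, we obtain a new Gamma matrix divergence as follows:

$$D_{GS}^{(1,-1)}(\mathbf{P}\|\mathbf{Q}) = \log\frac{M_1\{\lambda_i\}}{M_{-1}\{\lambda_i\}} = \log\left(\frac{1}{n}\,\mathrm{tr}(\mathbf{P}\mathbf{Q}^{-1})\cdot\frac{1}{n}\,\mathrm{tr}(\mathbf{Q}\mathbf{P}^{-1})\right),$$

where M−1{λi} denotes the harmonic mean.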

Note that for n → ∞, this formulated divergence can be expressed compactly as

where ui = {λi} and

.

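The basic means are defined as follows:

$$M_{\gamma}\{\lambda_i\} = \begin{cases}\left(\dfrac{1}{n}\displaystyle\sum_{i=1}^{n}\lambda_i^{\gamma}\right)^{1/\gamma}, & \gamma \neq 0,\\[2ex] \left(\displaystyle\prod_{i=1}^{n}\lambda_i\right)^{1/n}, & \gamma = 0,\end{cases}$$

with

$$\min_i\{\lambda_i\} = M_{-\infty} \leq M_{-1} \leq M_{0} \leq M_{1} \leq M_{2} \leq M_{\infty} = \max_i\{\lambda_i\},$$

where equality holds only if all λi are equal. By increasing the values of γ, more emphasis is put on large relative errors, i.e., on λi whose values are far from one. Depending on the value of γ, we obtain the minimum entry of the vector λ (for γ → −∞), its harmonic mean (γ = −1), the geometric mean (γ = 0), the arithmetic mean (γ = 1), the quadratic mean (γ = 2), and the maximum entry of the vector (γ → ∞).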
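The power means, and their ordering, are simple to compute. The sketch below implements Mγ, including the limits γ → ±∞ and the geometric mean at γ = 0. It then evaluates the log-ratio of two means, log(Mγ2/Mγ1), for γ2 > γ1. That ratio is nonnegative and vanishes only when all λi coincide. The eigenvalue vector is an arbitrary example, and `power_mean` is our own helper name.

```python
import numpy as np


def power_mean(lam, g):
    """Power (generalized) mean M_g of positive numbers; g = 0 is the geometric mean."""
    lam = np.asarray(lam, dtype=float)
    if np.isneginf(g):
        return lam.min()
    if np.isposinf(g):
        return lam.max()
    if g == 0:
        return np.exp(np.mean(np.log(lam)))
    return np.mean(lam**g) ** (1.0 / g)


lam = np.array([0.5, 1.0, 2.0, 4.0])
gammas = [-np.inf, -1, 0, 1, 2, np.inf]
means = [power_mean(lam, g) for g in gammas]
print(list(zip(gammas, np.round(means, 4))))
# log-ratio of two power means with gamma2 = 1 > gamma1 = 0: nonnegative
print(np.log(power_mean(lam, 1) / power_mean(lam, 0)))
```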

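Exploiting the above inequalities for the means, the divergences in (86) and (90) can be heuristically generalized (defined) as follows:

$$D_M^{(\gamma_1,\gamma_2)}(\mathbf{P}\|\mathbf{Q}) = \log\frac{M_{\gamma_2}\{\lambda_i\}}{M_{\gamma_1}\{\lambda_i\}}, \tag{94}$$

for γ2 > γ1.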

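The new divergence in (94) is quite general and flexible, and in the extreme case (γ1 → −∞, γ2 → ∞), it takes the following form:

$$d_H(\mathbf{P}\|\mathbf{Q}) = \log\frac{M_{\infty}\{\lambda_i\}}{M_{-\infty}\{\lambda_i\}} = \log\frac{\lambda_{\max}}{\lambda_{\min}}, \tag{95}$$

which is, in fact, the well-known Hilbert projective metric [6,26].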

The Hilbert projective metric is extremely simple and suitable for big data because it requires only the two extreme (minimum and maximum) eigenvalues of the matrix PQ−1.
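The following dense NumPy sketch of d_H(P‖Q) = log(λmax/λmin) is for illustration only. For large n, one would instead compute just the two extreme generalized eigenvalues with an iterative, Lanczos-type solver, such as `scipy.sparse.linalg.eigsh`. The sketch also spot-checks three properties listed below: invariance to scaling, symmetry, and invariance under inversion. The matrices are arbitrary examples.

```python
import numpy as np


def gen_eigvals(P, Q):
    """Eigenvalues of P Q^{-1}, obtained from the SPD matrix Q^{-1/2} P Q^{-1/2}."""
    w, V = np.linalg.eigh(Q)
    Q_inv_sqrt = (V / np.sqrt(w)) @ V.T
    return np.linalg.eigvalsh(Q_inv_sqrt @ P @ Q_inv_sqrt)


def hilbert_metric(P, Q):
    """Hilbert projective metric log(lambda_max / lambda_min) of P Q^{-1}."""
    lam = gen_eigvals(P, Q)
    return np.log(lam.max() / lam.min())


P = np.array([[2.0, 0.5], [0.5, 1.0]])
Q = np.array([[1.0, 0.2], [0.2, 1.5]])
d = hilbert_metric(P, Q)
print(d)
print(hilbert_metric(2.0 * P, 5.0 * Q),                        # invariance to scaling
      hilbert_metric(Q, P),                                    # symmetry
      hilbert_metric(np.linalg.inv(P), np.linalg.inv(Q)))      # invariance under inversion
print(hilbert_metric(P, 3.0 * P))   # approximately 0: P and 3P are proportional
```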

The Hilbert projective metric satisfies the following important properties [6,27]:

- Nonnegativity, dH(P ║ Q) ≥ 0, and definiteness, dH(P ║ Q) = 0, if and only if there exists a c > 0 such that Q = cP.

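- Invariance to scaling: $d_H(c_1\mathbf{P}\|c_2\mathbf{Q}) = d_H(\mathbf{P}\|\mathbf{Q})$ for any c1, c2 > 0.

- Symmetry: $d_H(\mathbf{P}\|\mathbf{Q}) = d_H(\mathbf{Q}\|\mathbf{P})$.

- Invariance under inversion: $d_H(\mathbf{P}^{-1}\|\mathbf{Q}^{-1}) = d_H(\mathbf{P}\|\mathbf{Q})$.

- Invariance under congruence transformations: $d_H(\mathbf{A}\mathbf{P}\mathbf{A}^{T}\|\mathbf{A}\mathbf{Q}\mathbf{A}^{T}) = d_H(\mathbf{P}\|\mathbf{Q})$ for any invertible matrix A.

- Separability of divergence for the Kronecker product of SPD matrices: $d_H(\mathbf{P}_1\otimes\mathbf{P}_2\|\mathbf{Q}_1\otimes\mathbf{Q}_2) = d_H(\mathbf{P}_1\|\mathbf{Q}_1) + d_H(\mathbf{P}_2\|\mathbf{Q}_2)$.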

- Scaling of power of SPD matrices:for any ω ≠ 0.Hence, for 0 < |ω1| ≤ 1 ≤ |ω2| we have

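- Scaling under the weighted geometric mean: $d_H(\mathbf{P}\#_u\mathbf{Q}\|\mathbf{P}\#_s\mathbf{Q}) = |s-u|\,d_H(\mathbf{P}\|\mathbf{Q})$ for any u, s ≠ 0, where $\mathbf{P}\#_u\mathbf{Q} = \mathbf{P}^{1/2}(\mathbf{P}^{-1/2}\mathbf{Q}\mathbf{P}^{-1/2})^{u}\mathbf{P}^{1/2}$.

- Triangular inequality: $d_H(\mathbf{P}\|\mathbf{Q}) \leq d_H(\mathbf{P}\|\mathbf{Z}) + d_H(\mathbf{Z}\|\mathbf{Q})$.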

These properties can easily be derived and verified. For example, the triangle inequality (the last property above) can be derived as follows [6,27]:
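One standard argument relies on the Rayleigh-quotient characterization of the extreme generalized eigenvalues; this is one possible derivation and not necessarily the one given in [6,27]. Here d_H(P‖Q) = log(λmax/λmin), computed from the eigenvalues of PQ−1, and P, Q, Z are arbitrary SPD matrices:

$$\lambda_{\max}(\mathbf{P}\mathbf{Q}^{-1}) = \max_{\mathbf{x}\neq\mathbf{0}}\frac{\mathbf{x}^{T}\mathbf{P}\mathbf{x}}{\mathbf{x}^{T}\mathbf{Q}\mathbf{x}} = \max_{\mathbf{x}\neq\mathbf{0}}\frac{\mathbf{x}^{T}\mathbf{P}\mathbf{x}}{\mathbf{x}^{T}\mathbf{Z}\mathbf{x}}\cdot\frac{\mathbf{x}^{T}\mathbf{Z}\mathbf{x}}{\mathbf{x}^{T}\mathbf{Q}\mathbf{x}} \leq \lambda_{\max}(\mathbf{P}\mathbf{Z}^{-1})\,\lambda_{\max}(\mathbf{Z}\mathbf{Q}^{-1}),$$

$$\lambda_{\min}(\mathbf{P}\mathbf{Q}^{-1}) \geq \lambda_{\min}(\mathbf{P}\mathbf{Z}^{-1})\,\lambda_{\min}(\mathbf{Z}\mathbf{Q}^{-1}).$$

Dividing the first inequality by the second and taking logarithms gives

$$d_H(\mathbf{P}\|\mathbf{Q}) = \log\frac{\lambda_{\max}(\mathbf{P}\mathbf{Q}^{-1})}{\lambda_{\min}(\mathbf{P}\mathbf{Q}^{-1})} \leq \log\frac{\lambda_{\max}(\mathbf{P}\mathbf{Z}^{-1})}{\lambda_{\min}(\mathbf{P}\mathbf{Z}^{-1})} + \log\frac{\lambda_{\max}(\mathbf{Z}\mathbf{Q}^{-1})}{\lambda_{\min}(\mathbf{Z}\mathbf{Q}^{-1})} = d_H(\mathbf{P}\|\mathbf{Z}) + d_H(\mathbf{Z}\|\mathbf{Q}).$$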

In Table 2, we summarize and compare some fundamental properties of three important metric distances: the Hilbert projective metric, the Riemannian metric, and the LogDet Zero (Bhattacharyya) distance. Some of these properties are new; for the others, we refer to [6,27,28].

Table 2.

Comparison of the fundamental properties of three basic metric distances: the Riemannian (geodesic) metric (19), the LogDet Zero (Bhattacharyya) divergence (21), and the Hilbert projective metric (95). Matrices P, Q, P1, P2, Q1, Q2, Z ∈ ℝn×n are SPD matrices, A ∈ ℝn×n is nonsingular, and the matrix X ∈ ℝn×r with r < n has full column rank. The scalars satisfy the following conditions: c > 0, c1, c2 > 0; 0 < ω ≤ 1; s, u ≠ 0; ψ = |s − u|. The geometric means are defined by P#uQ = P1/2(P−1/2QP−1/2)u P1/2 and P#Q = P#1/2Q = P1/2(P−1/2QP−1/2)1/2 P1/2. The Hadamard product of P and Q is denoted by P ○ Q (cf. [6]).

7.1. The AB Log-Det Divergence for Noisy and Ill-Conditioned Covariance Matrices

In real-world signal processing and machine learning applications, the sampled SPD matrices can be strongly corrupted by noise and extremely ill-conditioned. In such cases, the eigenvalues of the generalized eigenvalue (GEVD) problem Pvi = λiQvi can be divided into a signal subspace and a noise subspace. The signal subspace is usually represented by the largest eigenvalues (and corresponding eigenvectors), and the noise subspace by the smallest eigenvalues (and corresponding eigenvectors), which should be rejected. In other words, only the eigenvalues that represent the signal subspace should be taken into account in the evaluation of log-det divergences. The simplest approach is to retain the dominant eigenvalues by applying a suitable threshold τ > 0, i.e., to find an index r ≤ n for which λr+1 ≤ τ and to truncate the summation accordingly. For example, truncation reduces the summation in (8) to run from 1 to r (instead of 1 to n) [22]. The threshold parameter τ can be selected via cross-validation.

Recent studies suggest that the covariance matrices of the true signal subspace can be better represented by truncating the eigenvalues. A popular and relatively simple method applies a thresholding and shrinkage rule to the eigenvalues [35]:

where any eigenvalue smaller than a specified threshold is set to zero and the remaining eigenvalues are shrunk. Note that the smallest eigenvalues are shrunk more than the largest ones. For γ = 1, we obtain standard soft thresholding, and for γ → ∞, standard hard thresholding [36]. The optimal threshold τ > 0 can be estimated along with the parameter γ > 0 using cross-validation. However, a more practical and efficient method is the Generalized Stein Unbiased Risk Estimate (GSURE), which can be applied even if the noise variance is unknown (for more details, we refer to [35] and the references therein).
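The rule itself is not reproduced above. The sketch below assumes the adaptive form $\tilde\lambda_i = \lambda_i \max\{1 - (\tau/\lambda_i)^{\gamma}, 0\}$, which is consistent with the limits described in the text: soft thresholding for γ = 1 and hard thresholding as γ → ∞. The function name and the test values are illustrative.

```python
def shrink_eigenvalue(lam, tau, gamma):
    """Threshold-and-shrink rule (assumed form): lam * max(1 - (tau / lam)**gamma, 0)."""
    if lam <= tau:                 # at or below the threshold: set to zero
        return 0.0
    return lam * (1.0 - (tau / lam) ** gamma)


eigs = [5.0, 3.0, 1.5, 0.8]
tau = 1.0
soft = [shrink_eigenvalue(x, tau, 1.0) for x in eigs]    # lam - tau for lam > tau
hard = [shrink_eigenvalue(x, tau, 500.0) for x in eigs]  # essentially lam for lam > tau
mid = [shrink_eigenvalue(x, tau, 2.0) for x in eigs]     # intermediate behaviour
print(soft, hard, mid)
```

For γ = 2, the retained fraction $\tilde\lambda_i/\lambda_i$ is smallest for the smallest surviving eigenvalue, which illustrates the remark that small eigenvalues are shrunk more.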

In this paper, we propose an alternative approach in which the bias generated by noise is reduced by suitable choices of α and β [12]. Instead of using the eigenvalues λi of PQ−1 or of its inverse, we use regularized or shrunk eigenvalues [35–37]. For example, in light of (8), we can use the following shrunk eigenvalues:

which play a role similar to that of the ratios (pi/qi) (pi ≥ qi) used in the standard discrete divergences [11,12]. It should be noted that the equalities

, ∀i hold only if all λi of PQ−1 are equal to one, which occurs only if P = Q. For example, the new Gamma divergence in (94) can be formulated even more generally as

where γ2 > γ1, and

are the regularized or optimally shrunk eigenvalues.

8. Divergences of Multivariate Gaussian Densities and Differential Relative Entropies of Multivariate Normal Distributions

In this section, we show the relationships between a family of continuous Gamma divergences and AB log-det divergences for multivariate Gaussian densities.

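Consider the two multivariate Gaussian (normal) distributions:

$$p(x) = \frac{1}{\sqrt{(2\pi)^n \det P}}\exp\left(-\frac{1}{2}(x-\mu_1)^T P^{-1}(x-\mu_1)\right), \tag{110}$$

$$q(x) = \frac{1}{\sqrt{(2\pi)^n \det Q}}\exp\left(-\frac{1}{2}(x-\mu_2)^T Q^{-1}(x-\mu_2)\right), \tag{111}$$
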
where $\mu_1 \in \mathbb{R}^n$ and $\mu_2 \in \mathbb{R}^n$ are the mean vectors, and $P = \Sigma_1 \in \mathbb{R}^{n\times n}$ and $Q = \Sigma_2 \in \mathbb{R}^{n\times n}$ are the covariance matrices of p(x) and q(x), respectively.

Furthermore, consider the Gamma divergence for these distributions:

which generalizes a family of Gamma divergences [11,12].

Theorem 4. The Gamma divergence in (112) for multivariate Gaussian densities (110) and (111) can be expressed in closed form as follows:

for α > 0 and β > 0.

The proof is provided in Appendix H. Note that for α + β = 1, the first term on the right-hand side of (113) also simplifies to

Observe that Formula (113) consists of two terms: the first term is expressed via the AB log-det divergence, which measures the similarity between two covariance or precision matrices and is independent of the mean vectors, while the second term is a quadratic form expressed by the Mahalanobis distance, which represents the distance between the means (weighted by the covariance matrices) of the multivariate Gaussian distributions. The second term vanishes when the mean vectors µ1 and µ2 coincide.
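Because (112) and (113) are not reproduced in this version, the sketch below illustrates the two-term structure in the scalar case n = 1. It assumes that the Gamma divergence has the two-parameter form $D_\Gamma^{(\alpha,\beta)}(p\|q) = \frac{1}{\beta(\alpha+\beta)}\log\int p^{\alpha+\beta} + \frac{1}{\alpha(\alpha+\beta)}\log\int q^{\alpha+\beta} - \frac{1}{\alpha\beta}\log\int p^{\alpha}q^{\beta}$; this normalization is our assumption and may differ from (112) by constants. Under this assumption, only the last integral depends on the means, and the mean-dependent part equals $(\mu_1-\mu_2)^2/\left(2(\beta\sigma_1^2+\alpha\sigma_2^2)\right)$, a weighted squared distance between the means. Function names and parameter values are illustrative.

```python
import math


def log_int_pow(m1, v1, a, m2, v2, b):
    """log of the integral over R of N(x; m1, v1)**a * N(x; m2, v2)**b (v1, v2 are variances)."""
    prec = a / v1 + b / v2                 # combined precision, must be positive
    if prec <= 0:
        raise ValueError("the integral diverges")
    return (-0.5 * a * math.log(2 * math.pi * v1)
            - 0.5 * b * math.log(2 * math.pi * v2)
            + 0.5 * math.log(2 * math.pi / prec)
            - 0.5 * a * b * (m1 - m2) ** 2 / (a * v2 + b * v1))


def gamma_div_gauss1d(m1, v1, m2, v2, alpha, beta):
    """Assumed two-parameter Gamma divergence between N(m1, v1) and N(m2, v2); alpha, beta > 0."""
    s = alpha + beta
    return (log_int_pow(m1, v1, s, m2, v2, 0.0) / (beta * s)
            + log_int_pow(m2, v2, s, m1, v1, 0.0) / (alpha * s)
            - log_int_pow(m1, v1, alpha, m2, v2, beta) / (alpha * beta))


alpha, beta, v1, v2 = 0.7, 1.8, 1.5, 0.4
cov_part = gamma_div_gauss1d(0.0, v1, 0.0, v2, alpha, beta)   # equal means: covariance term only
total = gamma_div_gauss1d(1.0, v1, -0.5, v2, alpha, beta)     # mean difference 1.5
mean_part = total - cov_part
print(cov_part, mean_part, 1.5 ** 2 / (2 * (beta * v1 + alpha * v2)))
```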

Theorem 4 is a generalization of the following well-known results:

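- For α = 1 and β → 0, the Kullback-Leibler divergence is obtained [5,38]: $D_{KL}(p\,\|\,q) = \frac{1}{2}\left[\operatorname{tr}(PQ^{-1}) - \log\det(PQ^{-1}) - n\right] + \frac{1}{2}(\mu_1-\mu_2)^T Q^{-1}(\mu_1-\mu_2)$, where the last term represents the Mahalanobis distance, which vanishes when µ1 = µ2 (in particular, for zero-mean distributions µ1 = µ2 = 0).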

- For α = β = 0.5 we have the Bhattacharyya distance [5,39]

- For α + β = 1 and 0 < α < 1, the closed form expression for the Rényi divergence is obtained [5,32,40]:

- For α = β = 1, the Gamma divergences reduce to the Cauchy-Schwarz divergence:

Similar formulas can be derived for the symmetric Gamma divergence for two multivariate Gaussian distributions. Furthermore, analogous expressions can be derived for Elliptical Gamma distributions (EGD) [41], which facilitate more flexible modeling than standard multivariate Gaussian distributions.
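A quick numerical check of two of the special cases listed above, in the scalar case and under the same assumed two-parameter form of the Gamma divergence (our assumption about the normalization of (112)): for α = 1 and small β the value approaches the Kullback-Leibler divergence, and for α = β = 1 it coincides with the Cauchy-Schwarz divergence $-\log\left(\int pq \,\big/ \sqrt{\int p^2 \int q^2}\right)$. The comparison formulas are the standard one-dimensional Gaussian expressions; the helper names are illustrative.

```python
import math


def log_int_pow(m1, v1, a, m2, v2, b):
    """log of the integral over R of N(x; m1, v1)**a * N(x; m2, v2)**b (v1, v2 are variances)."""
    prec = a / v1 + b / v2
    if prec <= 0:
        raise ValueError("the integral diverges")
    return (-0.5 * a * math.log(2 * math.pi * v1) - 0.5 * b * math.log(2 * math.pi * v2)
            + 0.5 * math.log(2 * math.pi / prec)
            - 0.5 * a * b * (m1 - m2) ** 2 / (a * v2 + b * v1))


def gamma_div_gauss1d(m1, v1, m2, v2, alpha, beta):
    """Assumed two-parameter Gamma divergence between N(m1, v1) and N(m2, v2)."""
    s = alpha + beta
    return (log_int_pow(m1, v1, s, m2, v2, 0.0) / (beta * s)
            + log_int_pow(m2, v2, s, m1, v1, 0.0) / (alpha * s)
            - log_int_pow(m1, v1, alpha, m2, v2, beta) / (alpha * beta))


def kl_gauss1d(m1, v1, m2, v2):
    """Standard closed-form KL(N(m1, v1) || N(m2, v2))."""
    ratio = v1 / v2
    return 0.5 * (ratio - math.log(ratio) - 1.0) + 0.5 * (m1 - m2) ** 2 / v2


def cs_gauss1d(m1, v1, m2, v2):
    """Cauchy-Schwarz divergence -log(int pq / sqrt(int p^2 * int q^2)) for 1-D Gaussians."""
    log_pq = -0.5 * math.log(2 * math.pi * (v1 + v2)) - 0.5 * (m1 - m2) ** 2 / (v1 + v2)
    log_pp = -math.log(2.0 * math.sqrt(math.pi * v1))
    log_qq = -math.log(2.0 * math.sqrt(math.pi * v2))
    return -log_pq + 0.5 * (log_pp + log_qq)


m1, v1, m2, v2 = 0.4, 1.3, -0.2, 0.6
print(gamma_div_gauss1d(m1, v1, m2, v2, 1.0, 1e-6), kl_gauss1d(m1, v1, m2, v2))  # nearly equal
print(gamma_div_gauss1d(m1, v1, m2, v2, 1.0, 1.0), cs_gauss1d(m1, v1, m2, v2))   # equal up to rounding
```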

8.1. Multiway Divergences for Multivariate Normal Distributions with Separable Covariance Matrices

Recently, there has been growing interest in the analysis of tensors or multiway arrays [42–45]. One of the most important applications of multiway tensor analysis and multilinear distributions is magnetic resonance imaging (MRI) (we refer to [46] and the references therein). For multiway arrays, we often use multilinear (array or tensor) normal distributions that correspond to the multivariate normal (Gaussian) distributions in (110) and (111) with common means µ1 = µ2 and separable (Kronecker-structured) covariance matrices:

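$$P = P_1 \otimes P_2 \otimes \cdots \otimes P_K, \qquad Q = Q_1 \otimes Q_2 \otimes \cdots \otimes Q_K,$$

where the factors $P_k, Q_k \in \mathbb{R}^{n_k\times n_k}$, for k = 1, 2, …, K, are SPD matrices, usually normalized so that det Pk = det Qk = 1 for each k, and $n = \prod_{k=1}^{K} n_k$ [45].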

One of the main advantages of the separable Kronecker model is the significant reduction in the number of variance-covariance parameters [42]. Usually, such separable covariance matrices are sparse and very large-scale. The challenge is to design an efficient and relatively simple dissimilarity measure between two zero-mean multivariate (or multilinear) normal distributions ((110) and (111)) that is suitable for big data. Because of its unique properties, the Hilbert projective metric is a good candidate; in particular, for separable Kronecker-structured covariances, it can be expressed very simply as

where

and

are the (shrunk) maximum and minimum eigenvalues of the (relatively small) matrices

for k = 1, 2, …, K, respectively. We refer to this divergence as the multiway Hilbert metric. This metric has many attractive properties, especially invariance under multilinear transformations.
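The multiway Hilbert metric follows from the Kronecker eigenvalue property stated later in this section. The eigenvalues of the Kronecker pencil are all products of one eigenvalue per factor, so its largest (smallest) eigenvalue is the product of the factor-wise largest (smallest) eigenvalues, and $\log(\lambda_{\max}/\lambda_{\min})$ splits into a sum over k. The sketch below uses 2 × 2 factors, for which the generalized eigenvalues have a closed form, and compares the factor-wise sum with the value obtained from all product eigenvalues. No eigenvalue shrinkage is applied, and the matrices are arbitrary SPD examples.

```python
import math
from itertools import product


def gen_eigs_2x2(P, Q):
    """Generalized eigenvalues of a 2x2 SPD pencil (P, Q): roots of det(P - lam*Q) = 0."""
    (p11, p12), (_, p22) = P
    (q11, q12), (_, q22) = Q
    a = q11 * q22 - q12 ** 2                          # det Q
    b = -(p11 * q22 + p22 * q11 - 2.0 * p12 * q12)
    c = p11 * p22 - p12 ** 2                          # det P
    disc = math.sqrt(max(b * b - 4.0 * a * c, 0.0))   # roots are real for an SPD pencil
    return [(-b + disc) / (2.0 * a), (-b - disc) / (2.0 * a)]


def hilbert(eigs):
    """Hilbert projective metric from the eigenvalues of P Q^{-1}."""
    return math.log(max(eigs) / min(eigs))


def multiway_hilbert(pairs):
    """Sum of factor-wise Hilbert metrics for Kronecker-structured P and Q."""
    return sum(hilbert(gen_eigs_2x2(Pk, Qk)) for Pk, Qk in pairs)


def kron_pencil_eigs(pairs):
    """All eigenvalues of the Kronecker pencil: products of one eigenvalue per factor."""
    return [math.prod(c) for c in product(*(gen_eigs_2x2(Pk, Qk) for Pk, Qk in pairs))]


pairs = [([[2.0, 0.5], [0.5, 1.0]], [[1.0, 0.2], [0.2, 1.0]]),
         ([[3.0, 1.0], [1.0, 2.0]], [[1.0, 0.0], [0.0, 2.0]]),
         ([[1.0, -0.3], [-0.3, 0.5]], [[0.8, 0.1], [0.1, 1.2]])]
print(multiway_hilbert(pairs), hilbert(kron_pencil_eigs(pairs)))
```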

Using the fundamental properties of divergences and SPD matrices, we can derive other multiway log-det divergences. For example, the multiway Stein’s loss is obtained as

Note that under the constraint that det Pk = det Qk = 1, this simplifies to

which is different from the multiway Stein’s loss recently proposed by Gerard and Hoff [45].

Similarly, if det Pk = det Qk = 1 for each k = 1, 2, …, K, we can derive the multiway Riemannian metric as follows:

The above multiway divergences are derived using the following properties:

and the basic property: if {λi} and {θj} are the eigenvalues of the SPD matrices A and B, with corresponding eigenvectors {vi} and {uj}, respectively, then A ⊗ B has eigenvalues {λiθj} with corresponding eigenvectors {vi ⊗ uj}.
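Using this property, the sketch below evaluates the multiway Stein’s loss and the multiway Riemannian metric from the factor-wise eigenvalues of PkQk−1 and checks them against a brute-force evaluation over all product eigenvalues. The identities it relies on, $\operatorname{tr}(PQ^{-1}) = \prod_k \operatorname{tr}(P_kQ_k^{-1})$ and $\log\det(PQ^{-1}) = \sum_k (n/n_k)\log\det(P_kQ_k^{-1})$, are our own derivation from the property and may be arranged differently from the formulas in the text. The Riemannian version assumes det Pk = det Qk = 1, which makes the cross terms vanish. The eigenvalue lists are made-up examples.

```python
import math
from itertools import product


def stein_from_eigs(eigs):
    """Stein's loss tr(PQ^-1) - log det(PQ^-1) - n from the eigenvalues of PQ^-1."""
    return sum(l - math.log(l) - 1.0 for l in eigs)


def airm_sq_from_eigs(eigs):
    """Squared Riemannian (AIRM) distance: sum of squared log-eigenvalues."""
    return sum(math.log(l) ** 2 for l in eigs)


def kron_eigs(factor_eigs):
    """Eigenvalues of the Kronecker product: all products of one eigenvalue per factor."""
    return [math.prod(c) for c in product(*factor_eigs)]


def multiway_stein(factor_eigs):
    n = math.prod(len(e) for e in factor_eigs)
    trace_term = math.prod(sum(e) for e in factor_eigs)
    logdet_term = sum((n // len(e)) * sum(math.log(l) for l in e) for e in factor_eigs)
    return trace_term - logdet_term - n


def multiway_airm_unit_det(factor_eigs):
    """Valid only if det(Pk Qk^-1) = 1 for every factor (cross terms then vanish)."""
    n = math.prod(len(e) for e in factor_eigs)
    return math.sqrt(sum((n // len(e)) * airm_sq_from_eigs(e) for e in factor_eigs))


unit = [[2.0, 0.5], [4.0, 0.5, 0.5]]     # each factor's eigenvalues multiply to 1
general = [[3.0, 1.5], [0.7, 2.0, 1.1]]  # no normalization
print(multiway_stein(unit), stein_from_eigs(kron_eigs(unit)))
print(multiway_airm_unit_det(unit), math.sqrt(airm_sq_from_eigs(kron_eigs(unit))))
print(multiway_stein(general), stein_from_eigs(kron_eigs(general)))
```

With unit determinants, the log-det term is zero and the Stein’s loss reduces to the product of factor traces minus n (here 12.5 − 6 = 6.5).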

Other possible extensions of the AB and Gamma matrix divergences to separable multiway divergences for multilinear normal distributions under additional constraints and normalization conditions will be discussed in future works.

9. Conclusions

In this paper, we presented novel (dis)similarity measures; in particular, we considered the Alpha-Beta and Gamma log-det divergences (and/or their square roots) that smoothly connect or unify a wide class of existing divergences for SPD matrices. We derived numerous results that uncovered or unified theoretical properties and qualitative similarities between well-known divergences and new divergences. The scope of the results presented in this paper is broad, especially since the parameterized Alpha-Beta and Gamma log-det divergence functions include several efficient and useful divergences, including those based on relative entropies, the Riemannian metric (AIRM), S-divergence, generalized Jeffreys KL (KLDM), Stein’s loss, and Hilbert projective metric. Various links and relationships between divergences were also established. Furthermore, we proposed several multiway log-det divergences for tensor (array) normal distributions.

Acknowledgments

Part of this work was supported by the Spanish Government under MICINN projects TEC2014-53103, TEC2011-23559, and by the Regional Government of Andalusia under Grant TIC-7869.

Appendices

A. Basic operations for positive definite matrices

Functions of positive definite matrices frequently appear in many research areas; for an introduction, we refer the reader to Chapter 11 of [31]. Consider a positive definite matrix P of rank n with eigendecomposition $V\Lambda V^T$. The matrix function f(P) is defined as

where f(Λ) ≡ diag (f(λ1), …, f(λn)). With the help of this definition, the following list of well-known properties can be easily obtained:
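The definition f(P) = V f(Λ) VT can be illustrated without external libraries for a 2 × 2 SPD matrix, whose eigendecomposition has a closed form. This is only a sketch: for general n one would call a numerical symmetric eigensolver. The helper names are illustrative.

```python
import math


def eig_sym_2x2(A):
    """Closed-form eigendecomposition of a symmetric 2x2 matrix A = V diag(l1, l2) V^T."""
    (a, b), (_, d) = A
    m = 0.5 * (a + d)
    r = math.hypot(0.5 * (a - d), b)
    theta = 0.5 * math.atan2(2.0 * b, a - d)     # rotation angle of the eigenvectors
    c, s = math.cos(theta), math.sin(theta)
    return (m + r, m - r), ((c, -s), (s, c))     # eigenvalues, V (eigenvectors as columns)


def matfun_2x2(A, f):
    """Matrix function f(A) = V f(Lambda) V^T for a symmetric 2x2 matrix A."""
    (l1, l2), ((v11, v12), (v21, v22)) = eig_sym_2x2(A)
    f1, f2 = f(l1), f(l2)
    return [[v11 * v11 * f1 + v12 * v12 * f2, v11 * v21 * f1 + v12 * v22 * f2],
            [v21 * v11 * f1 + v22 * v12 * f2, v21 * v21 * f1 + v22 * v22 * f2]]


P = [[2.0, 0.5], [0.5, 1.0]]
S = matfun_2x2(P, math.sqrt)                         # principal square root P^{1/2}
E = matfun_2x2(matfun_2x2(P, math.log), math.exp)    # exp(log P) should return P
print(S, E)
```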

B. Extension of $D_{AB}^{(\alpha,\beta)}(P\|Q)$ for $(\alpha, \beta) \in \mathbb{R}^2$

Remark 1. Equation (3) is only well defined in the first and third quadrants of the (α, β)-plane. Outside these regions, where α and β have opposite signs (i.e., α > 0 and β < 0, or α < 0 and β > 0), the divergence can be complex-valued.

This undesirable behavior can be avoided with the help of the truncation operator

which prevents the arguments of the logarithms from being negative.

The new definition of the AB log-det divergence is

which is compatible with the previous definition in the first and third quadrants of the (α, β)-plane. It is also well defined in the second and fourth quadrants, except for the special cases α = 0, β = 0, and α + β = 0, where the formula is undefined. By enforcing continuity, we can explicitly define the AB log-det divergence on the entire (α, β)-plane as follows:
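The explicit piecewise formula is not reproduced in this version. As a hedged illustration, the sketch below works at the level of a single generalized eigenvalue λ and uses limits that we derived by continuity (our reconstruction, to be checked against the original): $\frac{1}{\alpha^2}(\lambda^{-\alpha}-1+\alpha\log\lambda)$ for β = 0, $\frac{1}{\beta^2}(\lambda^{\beta}-1-\beta\log\lambda)$ for α = 0, $\frac{1}{\alpha^2}\left(\alpha\log\lambda - \log(1+\alpha\log\lambda)\right)$ for α = −β ≠ 0, and $\frac{1}{2}\log^2\lambda$ for α = β = 0. A non-positive logarithm argument, which the truncation operator maps to log 0, is reported as an infinite divergence. The function names are illustrative.

```python
import math


def ab_term(lam, alpha, beta):
    """Per-eigenvalue AB log-det divergence on the whole (alpha, beta)-plane (reconstructed limits)."""
    L = math.log(lam)
    if alpha == 0 and beta == 0:
        return 0.5 * L * L
    if beta == 0:
        return (lam ** (-alpha) - 1.0 + alpha * L) / alpha ** 2
    if alpha == 0:
        return (lam ** beta - 1.0 - beta * L) / beta ** 2
    if alpha + beta == 0:
        arg = 1.0 + alpha * L
        return math.inf if arg <= 0 else (alpha * L - math.log(arg)) / alpha ** 2
    arg = (alpha * lam ** beta + beta * lam ** (-alpha)) / (alpha + beta)
    if arg <= 0:   # only possible when alpha * beta < 0; truncation gives log 0, i.e. +inf
        return math.inf
    return math.log(arg) / (alpha * beta)


def ab_logdet(eigs, alpha, beta):
    """AB log-det divergence as the sum of per-eigenvalue terms of P Q^{-1}."""
    return sum(ab_term(l, alpha, beta) for l in eigs)


print(ab_term(2.0, 0.7, 1e-6), ab_term(2.0, 0.7, 0.0))   # continuity across beta = 0
print(ab_term(0.1, 1.0, -0.5))                            # outside the finite domain: inf
```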

C. Eigenvalue Domain for Finite $D_{AB}^{(\alpha,\beta)}(P\|Q)$

In this section, we assume that λi, an eigenvalue of PQ−1, satisfies 0 ≤ λi ≤ ∞ for all i = 1, …, n. We will determine the bounds of the eigenvalues of PQ−1 that prevent the AB log-det divergence from being infinite. First, recall that

For the divergence to be finite, the arguments of the logarithms in the previous expression must be positive. This happens when

which is always true when α, β > 0 or when α, β < 0. In contrast, when sign(αβ) = −1, we have two cases. In the first case, when α > 0, we first solve for

and then for λi to obtain

In the second case when α < 0, we obtain

Using sign(αβ) = −1, we can solve for

, which yields

Solving again for λi, we see that

and

In the limit, when α → −β ≠ 0, these bounds simplify to

On the other hand, when α → 0 or when β → 0, the bounds disappear: the lower bounds converge to 0, while the upper bounds converge to ∞, leading to the trivial inequalities 0 < λi < ∞.

This concludes the determination of the domain of the eigenvalues that result in a finite divergence. Outside this domain, we expect

. A complete picture of bounds for different values of α and β is shown in Figure 1.
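The case analysis above can be summarized, in our own derivation for αβ < 0 and α + β ≠ 0, by the single threshold $b = (-\beta/\alpha)^{1/(\alpha+\beta)}$: the divergence is finite for λi > b when α > 0 and for λi < b when α < 0, and b → exp(−1/α) as α → −β. The sketch below compares this threshold with a direct test of the sign of the logarithm's argument; names and test points are illustrative.

```python
import math


def eig_bound(alpha, beta):
    """Threshold b on each eigenvalue for a finite divergence (None if unrestricted).

    alpha > 0: finite iff lambda > b;  alpha < 0: finite iff lambda < b  (alpha * beta < 0).
    """
    if alpha * beta >= 0:
        return None                        # first and third quadrants: no restriction
    if alpha + beta == 0:
        return math.exp(-1.0 / alpha)      # limiting value as alpha -> -beta
    return (-beta / alpha) ** (1.0 / (alpha + beta))


def is_finite(lam, alpha, beta):
    """Direct check that the argument of the logarithm is positive."""
    if alpha + beta == 0:
        return 1.0 + alpha * math.log(lam) > 0
    return (alpha * lam ** beta + beta * lam ** (-alpha)) / (alpha + beta) > 0


print(eig_bound(1.0, -0.5), eig_bound(-1.0, 0.5))   # lower bound 0.25, upper bound 4.0
print(eig_bound(0.8, -0.8 + 1e-6), math.exp(-1.25))  # approaches exp(-1/alpha)
```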

D. Proof of the Nonnegativity of $D_{AB}^{(\alpha,\beta)}(P\|Q)$

The AB log-det divergence is separable; it is the sum of the individual divergences of the eigenvalues from unity, i.e.,

where

We prove the nonnegativity of

by showing that the divergence of each of the eigenvalues

is nonnegative and minimal at λi = 1.

First, note that the only critical point of the criterion is obtained when λi = 1. This can be shown by setting the derivative of the criterion equal to zero, i.e.,

and solving for λi.

Next, we show that the sign of the derivative only changes at the critical point λi = 1. If we rewrite

and observe that the condition for the divergence to be finite enforces

then it follows that

Since the derivative is strictly negative for λi < 1 and strictly positive for λi > 1, the critical point at λi = 1 is the global minimum of

. From this result, the nonnegativity of the divergence

easily follows. Moreover,

only when λi = 1 for i = 1, …, n, which concludes the proof of Theorems 1 and 2.
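A numerical sanity check of the argument above, for a few (α, β) pairs with α, β, α + β ≠ 0 and eigenvalues inside the finite domain. The per-eigenvalue divergence is nonnegative and vanishes at λ = 1, and its derivative, which we write as $\lambda^{-\alpha-1}(\lambda^{\alpha+\beta}-1)/(\alpha\lambda^{\beta}+\beta\lambda^{-\alpha})$ (our own differentiation of the eigenvalue form), changes sign only at λ = 1. This illustrates the proof; it does not replace it.

```python
import math


def d(lam, alpha, beta):
    """Per-eigenvalue AB log-det divergence (alpha, beta, alpha + beta nonzero)."""
    return math.log((alpha * lam ** beta + beta * lam ** (-alpha)) / (alpha + beta)) / (alpha * beta)


def d_prime(lam, alpha, beta):
    """Analytic derivative of d with respect to lam."""
    num = lam ** (-alpha - 1.0) * (lam ** (alpha + beta) - 1.0)
    return num / (alpha * lam ** beta + beta * lam ** (-alpha))


params = [(0.5, 0.5), (2.0, 1.0), (-1.0, -3.0), (1.0, -0.5)]
grid = [0.3, 0.5, 0.9, 1.1, 2.0, 5.0]   # inside the finite domain (lambda > 0.25 for (1, -0.5))
for a, b in params:
    print((a, b), [round(d(x, a, b), 4) for x in grid],
          [math.copysign(1.0, d_prime(x, a, b)) for x in grid])
```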

E. Derivation of the Riemannian Metric

We calculate

using the Taylor expansion when dP is small, i.e.,

where

Similar calculations hold for $\beta[(P + dP)P^{-1}]^{-\alpha}$, and

where the first-order term of dZ disappears and the higher-order terms are neglected. Since

by taking its logarithm, we have

for any α and β.
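The derivation above states that, to second order, the divergence between P + dP and P does not depend on α and β. For a single eigenvalue λ = 1 + ε of (P + dP)P−1, this amounts to d(1 + ε) ≈ ε²/2, and summing over the eigenvalues gives ½ tr((dP P−1)²) (our reading of the lost formulas). The sketch below checks the scalar statement numerically for several (α, β) pairs; the step ε is an arbitrary small value.

```python
import math


def d(lam, alpha, beta):
    """Per-eigenvalue AB log-det divergence (alpha, beta, alpha + beta nonzero)."""
    return math.log((alpha * lam ** beta + beta * lam ** (-alpha)) / (alpha + beta)) / (alpha * beta)


eps = 1e-3
params = [(0.5, 0.5), (1.0, 2.0), (-0.7, -0.2), (2.0, -0.5), (-1.5, 0.4)]
ratios = {ab: d(1.0 + eps, *ab) / eps ** 2 for ab in params}
print(ratios)   # every ratio is close to 0.5, whatever (alpha, beta)
```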

F. Proof of the Properties of the AB Log-Det Divergence

Next, we provide proofs of the properties of the AB log-det divergence. Proofs are omitted only for those properties that can be readily verified from the definition of the divergence.

- Nonnegativity; given by $D_{AB}^{(\alpha,\beta)}(P\,\|\,Q) \ge 0$. The proof of this property is presented in Appendix D.

- Identity of indiscernibles; given by $D_{AB}^{(\alpha,\beta)}(P\,\|\,Q) = 0$ if and only if P = Q. See Appendix D for its proof.

- Continuity and smoothness of $D_{AB}^{(\alpha,\beta)}(P\,\|\,Q)$ as a function of α ∈ ℝ and β ∈ ℝ, including the singular cases when α = 0 or β = 0, and when α = −β (see Figure 2).

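- The divergence can be explicitly expressed in terms of Λ = diag{λ1, λ2, …, λn}, the diagonal matrix with the eigenvalues of $Q^{-1}P$, in the form (for α, β, α + β ≠ 0) $D_{AB}^{(\alpha,\beta)}(P\,\|\,Q) = \frac{1}{\alpha\beta}\log\det\frac{\alpha\Lambda^{\beta} + \beta\Lambda^{-\alpha}}{\alpha+\beta}$. Proof. From the definition of the divergence and taking into account the eigenvalue decomposition $PQ^{-1} = V\Lambda V^{-1}$, we can write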

- Scaling invariance; given by $D_{AB}^{(\alpha,\beta)}(cP\,\|\,cQ) = D_{AB}^{(\alpha,\beta)}(P\,\|\,Q)$ for any c > 0.

- For given α and β and a nonzero scaling factor ω ≠ 0, we have

Proof. From the definition of the divergence, we write

Hence, the following additional inequality is obtained for |ω| ≤ 1:

- Dual-invariance under inversion (for ω = −1); given by $D_{AB}^{(\alpha,\beta)}(P^{-1}\,\|\,Q^{-1}) = D_{AB}^{(-\alpha,-\beta)}(P\,\|\,Q)$.

- Dual symmetry; given by $D_{AB}^{(\alpha,\beta)}(P\,\|\,Q) = D_{AB}^{(\beta,\alpha)}(Q\,\|\,P)$.

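- Affine invariance (invariance under congruence transformations); given by $D_{AB}^{(\alpha,\beta)}(APA^T\,\|\,AQA^T) = D_{AB}^{(\alpha,\beta)}(P\,\|\,Q)$ for any nonsingular matrix $A \in \mathbb{R}^{n\times n}$.

Proof.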

- Divergence lower bound; given by $D_{AB}^{(\alpha,\beta)}(X^TPX\,\|\,X^TQX) \le D_{AB}^{(\alpha,\beta)}(P\,\|\,Q)$ for any full-column-rank matrix $X \in \mathbb{R}^{n\times m}$ with m ≤ n. This result has already been proved for some special cases of α and β, especially those that lead to the S-divergence and the Riemannian metric [6]. Next, we present a different argument that proves it for any α, β ∈ ℝ.

Proof. As already discussed, the divergence depends on the generalized eigenvalues of the matrix pencil (P, Q), which have been denoted by λi, i = 1, …, n. Similarly, the presumed lower bound is determined by µj, j = 1, …, m, the eigenvalues of the matrix pencil $(X^TPX, X^TQX)$. Assuming that both sets of eigenvalues are arranged in increasing order, the Cauchy interlacing inequalities [29] provide the following lower and upper bounds for µj in terms of the eigenvalues of the first matrix pencil:

$$\lambda_j \le \mu_j \le \lambda_{n-m+j}, \qquad j = 1, \ldots, m. \tag{178}$$

We classify the eigenvalues µj into three sets according to the sign of (µj − 1). By the affine invariance, we can write

where the eigenvalues µj = 1 have been excluded, since their contribution to the divergence is zero. With the help of (178), each eigenvalue µj < 1 is mapped one-to-one to its lower bound λj, and each eigenvalue µj > 1 to its upper bound λn−m+j. It is shown in Appendix D that the scalar divergence is strictly decreasing for λ < 1, zero for λ = 1, and strictly increasing for λ > 1. This allows one to upper-bound (180) as follows

obtaining the desired property.

- Scaling invariance under the Kronecker product; given by $D_{AB}^{(\alpha,\beta)}(Z\otimes P\,\|\,Z\otimes Q) = n\,D_{AB}^{(\alpha,\beta)}(P\,\|\,Q)$ for any symmetric positive definite matrix Z of rank n. Proof. This property was obtained in [6] for the S-divergence and the Riemannian metric. With the help of the properties of the Kronecker product of matrices, the desired equality is obtained:

- Double-sided orthogonal Procrustes property. Consider an orthogonal matrix Ω and two symmetric positive definite matrices P and Q, with respective eigenvalue matrices ΛP and ΛQ whose elements are sorted in descending order. The AB log-det divergence between ΩTPΩ and Q is globally minimized when their eigenspaces are aligned, i.e.,

$$\min_{\Omega} D_{AB}^{(\alpha,\beta)}(\Omega^TP\Omega\,\|\,Q) = D_{AB}^{(\alpha,\beta)}(\Lambda_P\,\|\,\Lambda_Q).$$

Proof. Let Λ denote the matrix of eigenvalues of ΩTPΩQ−1 with its elements sorted in descending order. We start by showing that, for ∆ = log Λ, the function

is convex. Its Hessian matrix is diagonal and positive definite, i.e., with positive diagonal elements

where

Since this function is strictly convex and nonnegative, the conditions of Corollary 6.15 in [47] are satisfied. This result states that for two symmetric positive definite matrices A and B, whose vectors of eigenvalues are respectively denoted by (when sorted in descending order) and (when sorted in ascending order), the function is submajorized by the corresponding function of these sorted eigenvalues. By choosing A = ΩTPΩ and B = Q−1 and applying the corollary, we obtain

where equality is reached only when the eigendecompositions of the matrices, ΩTPΩ = VΛPVT and Q = VΛQVT, share the same matrix of eigenvectors V.

- Triangle inequality (metric distance condition) for α = β ∈ ℝ; given by $\sqrt{D_{AB}^{(\alpha,\alpha)}(P\,\|\,Q)} \le \sqrt{D_{AB}^{(\alpha,\alpha)}(P\,\|\,Z)} + \sqrt{D_{AB}^{(\alpha,\alpha)}(Z\,\|\,Q)}$.

Proof. The proof of this property exploits the recent result that the square root of the S-divergence, $D_S(P\,\|\,Q) = \log\det\frac{P+Q}{2} - \frac{1}{2}\log\det(PQ)$, is a metric [17]. Given three arbitrary symmetric positive definite matrices P, Q, Z with common dimensions, consider the following eigenvalue decompositions

and assume that the diagonal matrices Λ1 and Λ2 have their eigenvalues sorted in descending order. For a given value of α in the divergence, we define ω = 2α ≠ 0 and use properties 6 and 9 (see Equations (168) and (175)) to obtain the equivalence

Since the S-divergence satisfies the triangle inequality for diagonal matrices [5,6,17],

from (196), this implies that

Similarly to the proof of the metric condition for the S-divergence [6], we can use property 12 to bound from above the first term on the right-hand side of the equation by

whereas the second term satisfies

After bounding the right-hand side of (198) with the help of (199) and (200), the divergence satisfies the desired triangle inequality (192) for α ≠ 0. On the other hand, as α → 0, $D_{AB}^{(\alpha,\alpha)}(P\,\|\,Q)$ converges to (one half of the square of) the Riemannian metric,

$$D_{AB}^{(0,0)}(P\,\|\,Q) = \frac{1}{2}\left\|\log\left(Q^{-1/2}PQ^{-1/2}\right)\right\|_F^2,$$

which concludes the proof of the metric condition of $\sqrt{D_{AB}^{(\alpha,\alpha)}(P\,\|\,Q)}$ for any α ∈ ℝ.
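Several of the properties above can be spot-checked on diagonal (hence commuting) SPD matrices, for which the eigenvalues of PQ−1 are simply the ratios pi/qi. The sketch below checks dual symmetry, dual invariance under inversion, scaling invariance, the relation $D_{AB}^{(1/2,1/2)} = 4D_S$ between the α = β = 1/2 case and the S-divergence (our own observation, checked here only on diagonal matrices), and the triangle inequality for the square root with α = β on random examples. Passing such checks is evidence, not proof; sizes, parameters, and seed are arbitrary.

```python
import math
import random


def d(lam, alpha, beta):
    """Per-eigenvalue AB log-det divergence (alpha, beta, alpha + beta nonzero)."""
    return math.log((alpha * lam ** beta + beta * lam ** (-alpha)) / (alpha + beta)) / (alpha * beta)


def D_diag(p, q, alpha, beta):
    """AB log-det divergence between diag(p) and diag(q)."""
    return sum(d(pi / qi, alpha, beta) for pi, qi in zip(p, q))


def S_diag(p, q):
    """S-divergence log det((P + Q)/2) - 0.5 log det(PQ) for diagonal P, Q."""
    return sum(math.log((pi + qi) / 2.0) - 0.5 * math.log(pi * qi) for pi, qi in zip(p, q))


def rand_diag(rng, n):
    return [math.exp(rng.uniform(-2.0, 2.0)) for _ in range(n)]


rng = random.Random(0)
p, q = rand_diag(rng, 4), rand_diag(rng, 4)
a, b, c = 0.8, 1.7, 3.7
checks = {
    "dual symmetry": (D_diag(p, q, a, b), D_diag(q, p, b, a)),
    "inversion": (D_diag([1 / x for x in p], [1 / x for x in q], a, b), D_diag(p, q, -a, -b)),
    "scaling": (D_diag([c * x for x in p], [c * x for x in q], a, b), D_diag(p, q, a, b)),
    "S-divergence": (D_diag(p, q, 0.5, 0.5), 4.0 * S_diag(p, q)),
}

violations = 0
for alpha in (0.25, 0.5, 1.0, 2.0):
    for _ in range(200):
        x, y, z = rand_diag(rng, 3), rand_diag(rng, 3), rand_diag(rng, 3)
        lhs = math.sqrt(D_diag(x, y, alpha, alpha))
        rhs = math.sqrt(D_diag(x, z, alpha, alpha)) + math.sqrt(D_diag(z, y, alpha, alpha))
        if lhs > rhs + 1e-12:
            violations += 1
print(checks, violations)
```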

G. Proof of Theorem 3

This theorem assumes that the range spaces of the symmetric positive semidefinite matrices Cx and Cy are disjoint, in the sense that they only intersect at the origin, which is the most probable situation for n ≫ r (where n is the size of the matrices while r is their common rank). For ρ > 0 the regularized versions

and

of these matrices are full rank.

Let

denote the diagonal matrix representing the n eigenvalues of the matrix pencil

. The AB log-det divergence between the regularized matrices is equal to the divergence between

and the identity matrix of size n, i.e.,

The positive eigenvalues of the matrix pencil satisfy

therefore, the divergence can be directly estimated from the eigenvalues of

. In order to simplify this matrix product, we first express

and

in terms of the auxiliary matrices

In this way, they are written as a scaled version of the identity matrix plus a symmetric term:

and

Next, using (207) and (208), we expand the product

and approximate the eigenvectors Uy → Ux of the residual matrix R to obtain the estimate

Hence, it is not difficult to see that the estimated residual is equal to

Let $\mathrm{Eig}_{\lessgtr 1}\{\cdot\}$ denote the ordered eigenvalues of the matrix argument after excluding those that are equal to 1. For convenience, we reformulate the property proved in [30], that for any pair of matrices $A, B \in \mathbb{R}^{m\times n}$ the nonzero eigenvalues of $AB^T$ and of $B^TA$ are the same, as the following proposition.

Proposition 1. For any pair of m × n matrices A and B, the eigenvalues of the matrices Im + ABT and In + BTA, which are not equal to 1, coincide.
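A quick numerical illustration of the fact behind Proposition 1: for random A, B ∈ ℝm×n, the traces tr((ABT)k) and tr((BTA)k) agree for every k ≥ 1. These are the power sums of the eigenvalues, so the nonzero eigenvalues of ABT and BTA coincide, and adding the identity shifts both sets by 1. The sketch uses plain lists to stay dependency-free; sizes and seed are arbitrary.

```python
import random


def matmul(X, Y):
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y))) for j in range(len(Y[0]))]
            for i in range(len(X))]


def transpose(X):
    return [list(row) for row in zip(*X)]


def trace(X):
    return sum(X[i][i] for i in range(len(X)))


def power_traces(M, kmax):
    """[tr(M), tr(M^2), ..., tr(M^kmax)]: power sums of the eigenvalues of M."""
    traces, Mk = [], M
    for _ in range(kmax):
        traces.append(trace(Mk))
        Mk = matmul(Mk, M)
    return traces


rng = random.Random(1)
m, n = 5, 3
A = [[rng.gauss(0, 1) for _ in range(n)] for _ in range(m)]
B = [[rng.gauss(0, 1) for _ in range(n)] for _ in range(m)]
big = power_traces(matmul(A, transpose(B)), 6)     # m x m matrix A B^T
small = power_traces(matmul(transpose(B), A), 6)   # n x n matrix B^T A
print(big, small)
```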

Since the range spaces of Cx and Cy only intersect at the origin, the approximation matrix

has r dominant eigenvalues of order $O(\rho^{-1})$ and (n − r) remaining eigenvalues equal to 1. Using Proposition 1, these r dominant eigenvalues are given by

Let

and

, respectively denote the diagonal submatrices of

with the r largest and with the r smallest eigenvalues. From the definitions in (66) and (206), one can recognize that

, while

, and substituting them in (214) we obtain the estimate of the r largest eigenvalues

The relative error between these eigenvalues and the r largest eigenvalues of

is of order O(ρ). This is a consequence of the fact that these eigenvalues are $O(\rho^{-1})$, while the Frobenius norm of the error matrix is $O(1)$. Then, the relative error between the dominant eigenvalues of the two matrices can be bounded above by

On the other hand, the r smallest eigenvalues of

are the reciprocal of the r dominant eigenvalues of the inverse matrix

, so we can estimate them using essentially the same procedure

For a sufficiently small value of ρ > 0, the dominant contribution to the AB log-det divergence comes from the r largest and r smallest eigenvalues of the matrix pencil (Ĉx, Ĉy), so we obtain the desired approximation

Moreover, as ρ → 0, the relative error of this approximation also tends to zero.

H. Gamma Divergence for Multivariate Gaussian Densities

Recall that for a given quadratic function

, where A is an SPD matrix, the integral of exp{f(x)} with respect to x is given by

This formula is obtained by evaluating the integral as follows:

assuming that A is an SPD matrix, which assures the convergence of the integral and the validity of (224).
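The quadratic function and (224) are not reproduced above. Assuming the usual form $f(x) = -\frac{1}{2}x^TAx + b^Tx + c$, the result reads $\int \exp\{f(x)\}\,dx = \sqrt{(2\pi)^n/\det A}\,\exp\left(\frac{1}{2}b^TA^{-1}b + c\right)$. The sketch below checks the scalar case n = 1 against a trapezoidal quadrature; helper names and test values are illustrative.

```python
import math


def gauss_integral_closed(a, b, c):
    """Closed form of the integral over R of exp(-a x^2 / 2 + b x + c), for a > 0."""
    return math.sqrt(2 * math.pi / a) * math.exp(b * b / (2 * a) + c)


def gauss_integral_numeric(a, b, c, half_width=40.0, n=20000):
    """Composite trapezoidal rule on an interval centred at the mode b / a."""
    x0 = b / a
    h = 2 * half_width / n
    total = 0.0
    for i in range(n + 1):
        x = x0 - half_width + i * h
        w = 0.5 if i in (0, n) else 1.0
        total += w * math.exp(-0.5 * a * x * x + b * x + c)
    return total * h


cases = [(2.0, 1.0, 0.3), (0.5, -0.7, 0.0), (4.0, 3.0, -1.0)]
for a, b, c in cases:
    print(a, b, c, gauss_integral_closed(a, b, c), gauss_integral_numeric(a, b, c))
```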

The Gamma divergence involves a product of (powers of) the densities. In the multivariate Gaussian case, this simplifies as

where

Integrating this product with the help of (224), we obtain

provided that αP−1 + βQ−1 is positive definite.

Rearranging the expression in terms of µ1 and µ2 yields

With the help of the Woodbury matrix identity, we simplify

and hence, arriving at the desired result:

This formula can easily be particularized to evaluate the integrals and

and

By substituting these integrals into the definition of the Gamma divergence and simplifying, we obtain a generalized closed-form formula:

which concludes the proof of Theorem 4.
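The intermediate expressions of this derivation are not reproduced above. Assuming that the mean-dependent part of the exponent has the form $\alpha\mu_1^TP^{-1}\mu_1 + \beta\mu_2^TQ^{-1}\mu_2 - b^T(\alpha P^{-1}+\beta Q^{-1})^{-1}b$ with $b = \alpha P^{-1}\mu_1 + \beta Q^{-1}\mu_2$, the Woodbury step reduces it to $(\mu_1-\mu_2)^T(\alpha^{-1}P + \beta^{-1}Q)^{-1}(\mu_1-\mu_2)$. The sketch below checks this identity for 2 × 2 matrices; the matrices, means, and helper names are illustrative.

```python
def inv2(M):
    """Inverse of a 2x2 matrix."""
    (a, b), (c, d) = M
    det = a * d - b * c
    return [[d / det, -b / det], [-c / det, a / det]]


def matvec(M, v):
    return [M[0][0] * v[0] + M[0][1] * v[1], M[1][0] * v[0] + M[1][1] * v[1]]


def dot(u, v):
    return u[0] * v[0] + u[1] * v[1]


def lincomb(a, X, b, Y):
    """a*X + b*Y for 2x2 matrices."""
    return [[a * X[i][j] + b * Y[i][j] for j in range(2)] for i in range(2)]


def mean_exponent_direct(P, Q, m1, m2, alpha, beta):
    """alpha m1' P^-1 m1 + beta m2' Q^-1 m2 - b' (alpha P^-1 + beta Q^-1)^-1 b."""
    Pi, Qi = inv2(P), inv2(Q)
    b = [alpha * x + beta * y for x, y in zip(matvec(Pi, m1), matvec(Qi, m2))]
    S = lincomb(alpha, Pi, beta, Qi)
    return alpha * dot(m1, matvec(Pi, m1)) + beta * dot(m2, matvec(Qi, m2)) - dot(b, matvec(inv2(S), b))


def mean_exponent_woodbury(P, Q, m1, m2, alpha, beta):
    """(m1 - m2)' (P / alpha + Q / beta)^-1 (m1 - m2)."""
    diff = [x - y for x, y in zip(m1, m2)]
    return dot(diff, matvec(inv2(lincomb(1.0 / alpha, P, 1.0 / beta, Q)), diff))


P = [[2.0, 0.3], [0.3, 1.0]]
Q = [[1.0, -0.2], [-0.2, 3.0]]
m1, m2 = [1.0, 2.0], [-0.5, 0.4]
print(mean_exponent_direct(P, Q, m1, m2, 0.7, 1.6), mean_exponent_woodbury(P, Q, m1, m2, 0.7, 1.6))
```

Under the same assumption, dividing by 2αβ gives the quadratic form $\frac{1}{2}(\mu_1-\mu_2)^T(\beta P + \alpha Q)^{-1}(\mu_1-\mu_2)$, which reduces to the Mahalanobis term of the KL divergence for α = 1, β → 0.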

Author Contributions

The first two authors contributed equally to this work. Andrzej Cichocki coordinated this study and wrote most of Sections 1–3 and 7–8. Sergio Cruces wrote most of Sections 4, 5, and 6; he also provided most of the final rigorous proofs presented in the Appendices. Shun-ichi Amari proved the fundamental property (16) that the Riemannian metric is the same for all AB log-det divergences and critically revised the paper by providing inspiring comments. All authors have read and approved the final manuscript.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Amari, S. Information geometry of positive measures and positive-definite matrices: Decomposable dually flat structure. Entropy 2014, 16, 2131–2145.

- Basseville, M. Divergence measures for statistical data processing—An annotated bibliography. Signal Process 2013, 93, 621–633.

- Moakher, M.; Batchelor, P.G. Symmetric Positive—Definite Matrices: From Geometry to Applications and Visualization. In Chapter 17 in the Book: Visualization and Processing of Tensor Fields; Weickert, J., Hagen, H., Eds.; Springer: Berlin/Heidelberg, Germany, 2006; pp. 285–298.

- Amari, S. Information geometry and its applications: Convex function and dually flat manifold. In Emerging Trends in Visual Computing; Nielsen, F., Ed.; Springer: Berlin/Heidelberg, Germany, 2009; pp. 75–102.

- Chebbi, Z.; Moakher, M. Means of Hermitian positive-definite matrices based on the log-determinant α-divergence function. Linear Algebra Appl 2012, 436, 1872–1889.

- Sra, S. Positive definite matrices and the S-divergence 2013. arXiv:1110.1773.

- Nielsen, F.; Bhatia, R. Matrix Information Geometry; Springer: Berlin/Heidelberg, Germany, 2013.

- Amari, S. Alpha-divergence is unique, belonging to both f-divergence and Bregman divergence classes. IEEE Trans. Inf. Theory 2009, 55, 4925–4931.

- Zhang, J. Divergence function, duality, and convex analysis. Neural Comput 2004, 16, 159–195.

- Amari, S.; Cichocki, A. Information geometry of divergence functions. Bull. Polish Acad. Sci 2010, 58, 183–195.

- Cichocki, A.; Amari, S. Families of Alpha- Beta- and Gamma- divergences: Flexible and robust measures of similarities. Entropy 2010, 12, 1532–1568.

- Cichocki, A.; Cruces, S.; Amari, S. Generalized alpha-beta divergences and their application to robust nonnegative matrix factorization. Entropy 2011, 13, 134–170.

- Cichocki, A.; Zdunek, R.; Phan, A.-H.; Amari, S. Nonnegative Matrix and Tensor Factorizations; John Wiley & Sons Ltd: Chichester, UK, 2009.

- Cherian, A.; Sra, S.; Banerjee, A.; Papanikolopoulos, N. Jensen-Bregman logdet divergence with application to efficient similarity search for covariance matrices. IEEE Trans. Pattern Anal. Mach. Intell 2013, 35, 2161–2174.

- Cherian, A.; Sra, S. Riemannian sparse coding for positive definite matrices. Proceedings of the Computer Vision—ECCV 2014—13th European Conference, Zurich, Switzerland, September 6–12 2014; 8691, pp. 299–314.

- Olszewski, D.; Ster, B. Asymmetric clustering using the alpha-beta divergence. Pattern Recognit 2014, 47, 2031–2041.

- Sra, S. A new metric on the manifold of kernel matrices with application to matrix geometric mean. Proceedings of the 26th Annual Conference on Neural Information Processing Systems 2012, Lake Tahoe, Nevada, USA, 3–6 December 2012; pp. 144–152.

- Nielsen, F.; Liu, M.; Vemuri, B. Jensen divergence-based means of SPD Matrices. In Matrix Information Geometry; Springer: Berlin/Heidelberg, Germany, 2013; pp. 111–122.

- Hsieh, C.; Sustik, M.A.; Dhillon, I.; Ravikumar, P.; Poldrack, R. BIG & QUIC: Sparse inverse covariance estimation for a million variables. Proceedings of the 27th Annual Conference on Neural Information Processing Systems 2013, Lake Tahoe, Nevada, USA, 5–8 December 2013; pp. 3165–3173.

- Nielsen, F.; Nock, R. A closed-form expression for the Sharma-Mittal entropy of exponential families. CoRR. 2011. arXiv:1112.4221v1 [cs.IT]. Available online: http://arxiv.org/abs/1112.4221 accessed on 4 May 2015.

- Fujisawa, H.; Eguchi, S. Robust parameter estimation with a small bias against heavy contamination. Multivar. Anal 2008, 99, 2053–2081.

- Kulis, B.; Sustik, M.; Dhillon, I. Learning low-rank kernel matrices. Proceedings of the Twenty-third International Conference on Machine Learning (ICML06), Pittsburgh, PA, USA, 25–29 July 2006; pp. 505–512.

- Cherian, A.; Sra, S.; Banerjee, A.; Papanikolopoulos, N. Efficient similarity search for covariance matrices via the jensen-bregman logdet divergence. Proceedings of the IEEE International Conference on Computer Vision, ICCV 2011, Barcelona, Spain, 6–13 November 2011; pp. 2399–2406.

- Österreicher, F. Csiszár’s f-divergences-basic properties. RGMIA Res. Rep. Collect. 2002. Available online: http://rgmia.vu.edu.au/monographs/csiszar.htm accessed on 6 May 2015.

- Cichocki, A.; Zdunek, R.; Amari, S. Csiszár’s divergences for nonnegative matrix factorization: Family of new algorithms. Independent Component Analysis and Blind Signal Separation, Proceedings of 6th International Conference on Independent Component Analysis and Blind Signal Separation (ICA 2006), Charleston, SC, USA, 5–8 March 2006; 3889, pp. 32–39.

- Reeb, D.; Kastoryano, M.J.; Wolf, M.M. Hilbert’s projective metric in quantum information theory. J. Math. Phys 2011, 52, 082201.

- Kim, S.; Kim, S.; Lee, H. Factorizations of invertible density matrices. Linear Algebra Appl 2014, 463, 190–204.

- Bhatia, R. Positive Definite Matrices; Princeton University Press: Princeton, NJ, USA, 2009.

- Li, R.-C. Rayleigh Quotient Based Optimization Methods For Eigenvalue Problems. In Summary of Lectures Delivered at Gene Golub SIAM Summer School 2013; Fudan University: Shanghai, China, 2013.

- De Moor, B.L.R. On the Structure and Geometry of the Product Singular Value Decomposition; Numerical Analysis Project NA-89-06; Stanford University: Stanford, CA, USA, 1989; pp. 1–52.

- Golub, G.H.; van Loan, C.F. Matrix Computations, 3rd ed; Johns Hopkins University Press: Baltimore, MD, USA, 1996; pp. 555–571.

- Zhou, S.K.; Chellappa, R. From Sample Similarity to Ensemble Similarity: Probabilistic Distance Measures in Reproducing Kernel Hilbert Space. IEEE Trans. Pattern Anal. Mach. Intell 2006, 28, 917–929.

- Harandi, M.; Salzmann, M.; Porikli, F. Bregman Divergences for Infinite Dimensional Covariance Matrices. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Columbus, OH, USA, 23–28 June 2014; pp. 1003–1010.

- Minh, H.Q.; Biagio, M.S.; Murino, V. Log-Hilbert-Schmidt metric between positive definite operators on Hilbert spaces. Adv. Neural Inf. Process. Syst 2014, 27, 388–396.

- Josse, J.; Sardy, S. Adaptive Shrinkage of singular values 2013. arXiv:1310.6602.

- Donoho, D.L.; Gavish, M.; Johnstone, I.M. Optimal Shrinkage of Eigenvalues in the Spiked Covariance Model 2013. arXiv:1311.0851.

- Gavish, M.; Donoho, D. Optimal shrinkage of singular values 2014. arXiv:1405.7511.

- Davis, J.; Dhillon, I. Differential entropic clustering of multivariate gaussians. Proceedings of the Twentieth Annual Conference on Neural Information Processing Systems, Vancouver, BC, Canada, 4–7 December 2006; pp. 337–344.

- Abou-Moustafa, K.; Ferrie, F. Modified divergences for Gaussian densities. Proceedings of the Structural, Syntactic, and Statistical Pattern Recognition, Hiroshima, Japan, 7–9 November 2012; pp. 426–436.

- Burbea, J.; Rao, C. Entropy differential metric, distance and divergence measures in probability spaces: A unified approach. J. Multi. Anal 1982, 12, 575–596.

- Hosseini, R.; Sra, S.; Theis, L.; Bethge, M. Statistical inference with the Elliptical Gamma Distribution 2014. arXiv:1410.4812.

- Manceur, A.; Dutilleul, P. Maximum likelihood estimation for the tensor normal distribution: Algorithm, minimum sample size, and empirical bias and dispersion. J. Comput. Appl. Math 2013, 239, 37–49.

- Akdemir, D.; Gupta, A. Array variate random variables with multiway Kronecker delta covariance matrix structure. J. Algebr. Stat 2011, 2, 98–112.

- Hoff, P.D. Separable covariance arrays via the Tucker product, with applications to multivariate relational data. Bayesian Anal 2011, 6, 179–196.

- Gerard, D.; Hoff, P. Equivariant minimax dominators of the MLE in the array normal model 2014. arXiv:1408.0424.

- Ohlson, M.; Ahmad, M.; von Rosen, D. The Multilinear Normal Distribution: Introduction and Some Basic Properties. J. Multivar. Anal 2013, 113, 37–47.

- Ando, T. Majorization, doubly stochastic matrices, and comparison of eigenvalues. Linear Algebra Appl 1989, 118, 163–248.

© 2015 by the authors; licensee MDPI, Basel, Switzerland This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/4.0/).