Abstract

We address the problem of non-parametric estimation of the recently proposed measures of statistical dispersion of positive continuous random variables. The measures are based on the concepts of differential entropy and Fisher information and describe the “spread” or “variability” of the random variable from a different point of view than the ubiquitously used concept of standard deviation. The maximum penalized likelihood estimation of the probability density function proposed by Good and Gaskins is applied and a complete methodology of how to estimate the dispersion measures with a single algorithm is presented. We illustrate the approach on three standard statistical models describing neuronal activity.

1. Introduction

Frequently, the dispersion (variability) of measured data needs to be described. Although the standard deviation is used ubiquitously for quantifying variability, such an approach has limitations. The dispersion of a probability distribution can be understood from different points of view: as “spread” with respect to the expected value, as “evenness” (“randomness”), or as “smoothness”. For example, highly variable data might not be random at all if they consist only of “extremely small” and “extremely large” measurements. Although the probability density function or its estimate provides a complete view, quantitative methods are needed in order to compare different models or experimental results.

In a series of recent studies [1,2] we proposed and justified alternative measures of dispersion. The effort was inspired by various information-based measures of signal regularity or randomness and their interpretations, which have gained significant popularity in various branches of science [3,4,5,6,7,8,9]. For convenience, in what follows we discuss only the relative dispersion coefficients (i.e., the data or the probability density function is first normalized to unit mean). Besides the coefficient of variation, $c_v$, which is the relative dispersion measure based on the standard deviation, we employ the entropy-based dispersion coefficient, $c_h$, and the Fisher information-based coefficient, $c_J$. The difference between these coefficients lies in the fact that the Fisher information-based coefficient, $c_J$, describes how “smooth” the distribution is and is sensitive to the modes of the probability density, while the entropy-based coefficient, $c_h$, describes how “even” it is, hence being sensitive to the overall spread of the probability density over the entire support. Since multimodal densities can be more evenly spread than unimodal ones, the behavior of $c_J$ cannot generally be deduced from $c_h$ (and vice versa).

If a complete description of the data is available, i.e., the probability density function is known, the values of the above-mentioned dispersion coefficients can be calculated analytically or numerically. However, estimating these coefficients from data is more problematic, and so far we have employed either the parametric approach [2] or non-parametric estimation of $c_h$ based on the popular Vasicek's estimator of differential entropy [10,11]. The goal of this paper is to provide a self-contained method of non-parametric estimation. We describe a method that can be used to estimate both $c_h$ and $c_J$ as a result of a single procedure.

2. Methods

2.1. Dispersion Coefficients

We briefly review the proposed dispersion measures; for more details see [2]. Let $T$ be a continuous positive random variable with probability density function $f(t)$ defined on $[0, \infty)$. By far the most common measure of dispersion of $T$ is the standard deviation, $\sigma$, defined as the square root of the second central moment of the distribution. The corresponding relative dispersion measure is known as the coefficient of variation, $c_v$,

$$c_v = \frac{\sigma}{E(T)}, \tag{1}$$

where $E(T)$ is the mean value of $T$.

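The entropy-based dispersion coefficient is defined as

$$c_h = \frac{\sigma_h}{E(T)} = \frac{\exp\{h(T) - 1\}}{E(T)}, \tag{2}$$

where $h(T)$ is the differential entropy [12],

$$h(T) = -\int_0^\infty f(t)\,\ln f(t)\,\mathrm{d}t. \tag{3}$$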

The numerator in (2), $\sigma_h = \exp\{h(T) - 1\}$, is the entropy-based dispersion, “analogous” to the standard deviation $\sigma$. The interpretation of $\sigma_h$ relies on the asymptotic equipartition property theorem [12]. Informally, the theorem states that almost any sequence of $n$ realizations of the random variable $T$ comes from a rather small subset (the typical set) of the $n$-dimensional space of all possible values. The volume of this subset is approximately $\exp\{n\,h(T)\}$, and the volume is larger for random variables that generate more diverse (or unpredictable) realizations. The values of $\sigma_h$ and $c_h$ quantify how “evenly” the probability is distributed over the entire support. From this point of view, $\sigma_h$ is more appropriate than $\sigma$ to describe the randomness of outcomes generated by the random variable $T$.

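The Fisher information-based dispersion coefficient, $c_J$, is defined as

$$c_J = \frac{1}{E(T)\sqrt{J(T)}}, \tag{4}$$

where

$$J(T) = \int_0^\infty \left[\frac{\partial \ln f(t)}{\partial t}\right]^2 f(t)\,\mathrm{d}t. \tag{5}$$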

The Fisher information is traditionally interpreted by means of the Cramér–Rao bound, i.e., the value of $1/J(T)$ is the lower bound on the variance of any unbiased estimator of a location parameter of the distribution. Due to the derivative in (5), certain regularity conditions are required on $f(t)$ in order for $J(T)$ to be interpreted according to the Cramér–Rao bound, namely continuous differentiability of $f(t)$ for all $t \in (0, \infty)$ and $f(0) = f(\infty) = 0$ [13]. However, the integral in (5) exists and is finite also, e.g., for an exponential distribution. Any locally steep slope or the presence of modes in the shape of $f(t)$ increases $J(T)$ [4].
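To make definitions (1)–(5) concrete, the following Python sketch evaluates $c_v$, $c_h$ and $c_J$ for a known density by numerical quadrature. It is an illustration only, assuming that the density is given as a callable that is finite on $[0, \text{upper}]$ and negligible beyond `upper`; the helper names are hypothetical, and $f'(t)$ is approximated by finite differences, using the equivalent form $J(T) = \int f'(t)^2/f(t)\,\mathrm{d}t$ of (5).

```python
import math


def _simpson(y, h):
    """Composite Simpson rule on equally spaced samples (odd number of points)."""
    if len(y) % 2 == 0:
        raise ValueError("Simpson's rule needs an odd number of samples")
    return h / 3 * (y[0] + y[-1] + 4 * sum(y[1:-1:2]) + 2 * sum(y[2:-1:2]))


def dispersion_coefficients(pdf, upper, n=20000):
    """Approximate (c_v, c_h, c_J) of a density f(t) on [0, upper].

    Assumes f is finite on [0, upper] and negligible beyond `upper`; n must be even.
    """
    h = upper / n
    t = [i * h for i in range(n + 1)]
    f = [pdf(x) for x in t]
    mean = _simpson([x * fx for x, fx in zip(t, f)], h)
    var = _simpson([(x - mean) ** 2 * fx for x, fx in zip(t, f)], h)
    # Differential entropy, Eq. (3); points with f = 0 contribute nothing
    ent = _simpson([-fx * math.log(fx) if fx > 0 else 0.0 for fx in f], h)
    # f'(t) by central differences, one-sided at the two ends of the grid
    df = [(f[1] - f[0]) / h]
    df += [(f[i + 1] - f[i - 1]) / (2 * h) for i in range(1, n)]
    df.append((f[n] - f[n - 1]) / h)
    # Eq. (5) in the equivalent form J(T) = int f'(t)^2 / f(t) dt
    fisher = _simpson([d * d / fx if fx > 0 else 0.0 for d, fx in zip(df, f)], h)
    c_v = math.sqrt(var) / mean            # Eq. (1)
    c_h = math.exp(ent - 1) / mean         # Eq. (2)
    c_J = 1 / (mean * math.sqrt(fisher))   # Eq. (4)
    return c_v, c_h, c_J


# Gamma density with shape 4 and scale 1/4, so that E(T) = 1 and c_v = 0.5
def gamma4(x):
    return 4 ** 4 / math.gamma(4) * x ** 3 * math.exp(-4 * x)


print(dispersion_coefficients(gamma4, upper=20.0))
```

For this gamma density the returned $c_J$ should be close to $0.5\sqrt{0.5} \approx 0.354$, and for the exponential density $e^{-t}$ all three coefficients should be close to 1.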

2.2. Methods of Non-Parametric Estimation

In the following we assume that $\{t_1, t_2, \dots, t_N\}$ are $N$ independent realizations of the random variable $T \sim f(t)$, defined on $[0, \infty)$.

The coefficient $c_v$ is most often estimated by the ratio of the sample standard deviation to the sample mean; however, this estimate may be considerably biased [14].

Estimation of $c_h$ relies on the estimate of the differential entropy $h(T)$, as follows from (2). The problem of differential entropy estimation is well explored in the literature [11,15,16]. It is preferable to avoid estimators based on data binning (histograms), because discretization affects the results. The most popular approaches are represented by the class of Kozachenko–Leonenko estimators [17,18] and by Vasicek's estimator [10]. Our experience shows that the simple Vasicek's estimator gives good results on a wide range of data [19,20,21]; thus, in this paper we employ it for comparison with the estimation method described further below. Given the ranked observations $t_{(1)} \le t_{(2)} \le \dots \le t_{(N)}$, Vasicek's estimator is defined as

$$\hat h(T) = \frac{1}{N}\sum_{i=1}^{N}\ln\left[\frac{N}{2m}\left(t_{(i+m)} - t_{(i-m)}\right)\right] + b(N, m), \tag{6}$$

where $t_{(i-m)} = t_{(1)}$ for $i - m < 1$ and $t_{(i+m)} = t_{(N)}$ for $i + m > N$. The integer parameter $m < N/2$ is set prior to the calculation; roughly, one may set $m$ to the integer part of $\sqrt{N}$. The bias-correcting factor is

$$b(N, m) = \ln\frac{2m}{N} - \left(1 - \frac{2m}{N}\right)\psi(2m) + \psi(N + 1) - \frac{2}{N}\sum_{i=1}^{m}\psi(i + m - 1), \tag{7}$$

where $\psi$ denotes the digamma function [22].
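Equations (6) and (7) translate directly into code. The sketch below is a plain-Python illustration, not the implementation used for the figures (the study used R); it assumes continuous data without ties, since a zero spacing makes the logarithm undefined, and it evaluates the digamma function only at positive integers through $\psi(n) = -\gamma_E + \sum_{k=1}^{n-1} 1/k$. The function names are hypothetical.

```python
import math
import random

EULER_GAMMA = 0.5772156649015329


def digamma_int(n):
    """psi(n) for a positive integer n: -gamma_E + sum_{k=1}^{n-1} 1/k."""
    return -EULER_GAMMA + sum(1.0 / k for k in range(1, n))


def vasicek_entropy(sample, m=None):
    """Bias-corrected Vasicek estimate of h(T), Eqs. (6) and (7).

    Assumes continuous data without ties (a zero spacing makes the log undefined).
    """
    t = sorted(sample)
    N = len(t)
    if m is None:
        m = max(1, int(math.sqrt(N)))  # rough rule: integer part of sqrt(N)
    total = 0.0
    for i in range(N):  # 0-based index i is rank i + 1
        upper = t[min(i + m, N - 1)]   # t_(i+m), clamped to t_(N)
        lower = t[max(i - m, 0)]       # t_(i-m), clamped to t_(1)
        total += math.log(N / (2 * m) * (upper - lower))
    bias = (math.log(2 * m / N)
            - (1 - 2 * m / N) * digamma_int(2 * m)
            + digamma_int(N + 1)
            - 2 / N * sum(digamma_int(i + m - 1) for i in range(1, m + 1)))
    return total / N + bias


def estimate_cv_ch(sample):
    """Plug-in estimates of c_v (sample sd / sample mean) and of c_h via Eq. (2)."""
    N = len(sample)
    mean = sum(sample) / N
    sd = math.sqrt(sum((x - mean) ** 2 for x in sample) / (N - 1))
    return sd / mean, math.exp(vasicek_entropy(sample) - 1) / mean


random.seed(1)
exp_sample = [random.expovariate(1.0) for _ in range(5000)]
print(estimate_cv_ch(exp_sample))  # both values should be close to 1
```

The correction (7) is the one that makes the estimator unbiased for uniformly distributed data, which gives a quick sanity check on any implementation.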

The estimation of $c_J$ requires the estimation of $J(T)$; this is more problematic and, as far as we know, no “standard” algorithms have been proposed.

Huber [23] showed that there exists a unique interpolation of the empirical cumulative distribution function such that the resulting Fisher information is minimized. In theory, one may use this method to estimate $J(T)$. Unfortunately, it is very complicated and probably not feasible even for moderate values of $N$.

Kernel density estimators are widely used for estimation of the density. They can be successfully used for the calculation of the differential entropy too [24,25]. However, in our experience they are unsuitable for estimation of the Fisher information, mainly due to the inability to control the “smoothness” of the ratio $f'(t)/f(t)$, which is the principal term in the integral (5). An inappropriate choice of the kernel and the bandwidth leads to many local extrema of the kernel density estimator, which substantially increases the Fisher information. Theoretically, the kernel should be “optimal” in the sense of minimizing the mean square error of the ratio $f'(t)/f(t)$, which is generally difficult to derive in closed form. One may also derive a Vasicek-like estimator of $J(T)$, based on the empirical cumulative distribution function and considering differences of higher order; however, we found this approach to be numerically unstable.

We found that the maximum penalized likelihood (MPL) method of Good and Gaskins [26] for probability density estimation offers a possible solution. The idea is to represent the whole probability density function using a suitable orthonormal basis of functions (Hermite functions); the “best” estimate of $f(t)$ is obtained by solving a set of equations for the basis coefficients given the sample $\{t_1, \dots, t_N\}$.

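In order to proceed, we first log-transform and normalize the sample,

$$x_i = \frac{\ln t_i - \mu}{\sigma}, \qquad i = 1, \dots, N, \tag{8}$$

where

$$\mu = \frac{1}{N}\sum_{i=1}^{N}\ln t_i, \qquad \sigma^2 = \frac{1}{N-1}\sum_{i=1}^{N}\left(\ln t_i - \mu\right)^2 \tag{9}$$

are the sample mean and variance of the log-transformed data (not of $T$ itself).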

The idea here is to obtain a new random variable $X = (\ln T - \mu)/\sigma$ whose density is closer to Gaussian in shape than the density of $T$, which is usually highly skewed. Furthermore, the restriction of the support of $T$ to the positive half-line may cause numerical difficulties, whereas $X$ is supported on the whole real line. The probability density function $g(x)$ of $X$ is expressed through the real probability amplitude $\gamma(x)$, so that $g(x) = \gamma^2(x)$. For the purpose of numerical implementation, $\gamma(x)$ is represented by the first $r$ Hermite functions, $\varphi_0, \dots, \varphi_{r-1}$ [22], as

$$\gamma(x) = \sum_{k=0}^{r-1} c_k\,\varphi_k(x), \tag{10}$$

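where

$$\varphi_k(x) = \frac{1}{\sqrt{2^k\,k!\,\sqrt{\pi}}}\,H_k(x)\,e^{-x^2/2}, \tag{11}$$

and $H_k$ denotes the Hermite polynomial $H_k(x) = (-1)^k e^{x^2}\dfrac{\mathrm{d}^k}{\mathrm{d}x^k}e^{-x^2}$. The functions (11) are orthonormal on the real line, so that $\int \gamma^2(x)\,\mathrm{d}x = \sum_k c_k^2$.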
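The Hermite functions (11) are best evaluated through their three-term recurrence rather than through $H_k$ and $k!$ separately, which overflow quickly for large $k$. A possible NumPy sketch (function names are hypothetical), which also evaluates the derivative needed later in the Fisher information estimator:

```python
import numpy as np


def hermite_functions(x, r):
    """Rows k = 0..r-1 hold phi_k(x) of Eq. (11), via a stable three-term recurrence.

    phi_{k+1} = sqrt(2/(k+1)) x phi_k - sqrt(k/(k+1)) phi_{k-1}, which avoids
    evaluating the fast-growing polynomials H_k and factorials separately.
    """
    x = np.atleast_1d(np.asarray(x, dtype=float))
    phi = np.zeros((r, x.size))
    phi[0] = np.pi ** -0.25 * np.exp(-x ** 2 / 2)
    if r > 1:
        phi[1] = np.sqrt(2.0) * x * phi[0]
    for k in range(1, r - 1):
        phi[k + 1] = np.sqrt(2.0 / (k + 1)) * x * phi[k] - np.sqrt(k / (k + 1)) * phi[k - 1]
    return phi


def hermite_derivatives(x, r):
    """Rows hold phi_k'(x) = sqrt(2k) phi_{k-1}(x) - x phi_k(x)."""
    x = np.atleast_1d(np.asarray(x, dtype=float))
    phi = hermite_functions(x, r)
    dphi = -x * phi
    for k in range(1, r):
        dphi[k] += np.sqrt(2.0 * k) * phi[k - 1]
    return dphi


grid = np.linspace(-20, 20, 8001)
dx = grid[1] - grid[0]
phi = hermite_functions(grid, 6)
print(np.round(phi @ phi.T * dx, 8))  # approximately the 6 x 6 identity
```

The printed Gram matrix illustrates the orthonormality that turns the normalization of $\gamma^2$ into the simple constraint on the coefficients.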

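The goal is to find $\gamma(x)$ for which the score $\omega$ is maximized,

$$\omega(\gamma) = L(\gamma) - \Phi(\gamma), \tag{12}$$

subject to the constraint (ensuring that $g(x) = \gamma^2(x)$ is a probability density function)

$$\int_{-\infty}^{\infty}\gamma^2(x)\,\mathrm{d}x = \sum_{k=0}^{r-1}c_k^2 = 1. \tag{13}$$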

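The term $L(\gamma)$ in (12) is the log-likelihood function,

$$L(\gamma) = \sum_{i=1}^{N}\ln\gamma^2(x_i), \tag{14}$$

and $\Phi$ is the roughness penalty proposed in [26], controlling the smoothness of the density of $X$ (and hence the smoothness of $f(t)$),

$$\Phi(\gamma) = 4\alpha\int_{-\infty}^{\infty}\left[\gamma'(x)\right]^2\mathrm{d}x + \beta\int_{-\infty}^{\infty}\left[\gamma''(x)\right]^2\mathrm{d}x. \tag{15}$$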

The two nonnegative parameters $\alpha$ and $\beta$ should be set prior to the calculation. Note that the first term in (15) is equal to $\alpha$ times the Fisher information $J(X)$: since $g = \gamma^2$, we have $[g'(x)]^2/g(x) = 4[\gamma'(x)]^2$.
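Because each derivative $\varphi_k'$ can again be written in the Hermite basis, $\varphi_k' = \sqrt{k/2}\,\varphi_{k-1} - \sqrt{(k+1)/2}\,\varphi_{k+1}$, the integrals in the penalty (15) reduce to quadratic forms in the coefficient vector $c = (c_0, \dots, c_{r-1})$, so no numerical integration is needed. This matrix formulation is our own way of organizing the computation, shown as a sketch with hypothetical names; the check at the end illustrates the remark that the first term of (15) equals $\alpha J(X)$.

```python
import numpy as np


def derivative_matrix(n):
    """(n+1) x n matrix D such that the coefficients of (sum_k c_k phi_k)' are D @ c.

    Uses phi_k' = sqrt(k/2) phi_{k-1} - sqrt((k+1)/2) phi_{k+1}.
    """
    D = np.zeros((n + 1, n))
    for k in range(n):
        if k > 0:
            D[k - 1, k] = np.sqrt(k / 2)
        D[k + 1, k] = -np.sqrt((k + 1) / 2)
    return D


def penalty_matrices(r):
    """A[j, k] = int phi_j' phi_k' dx and B[j, k] = int phi_j'' phi_k'' dx (exact)."""
    D1 = derivative_matrix(r)               # r -> r + 1 coefficients
    D2 = derivative_matrix(r + 1) @ D1      # r -> r + 2 coefficients
    return D1.T @ D1, D2.T @ D2             # orthonormality turns integrals into dot products


def roughness_penalty(c, alpha, beta):
    """Phi(gamma) of Eq. (15) for gamma = sum_k c_k phi_k."""
    c = np.asarray(c, dtype=float)
    A, B = penalty_matrices(c.size)
    return 4 * alpha * c @ A @ c + beta * c @ B @ c


# gamma = phi_0 gives g(x) = exp(-x^2)/sqrt(pi), a Gaussian with variance 1/2,
# whose Fisher information is 1/variance = 2.
print(roughness_penalty([1.0, 0.0, 0.0], alpha=1.0, beta=0.0))  # approximately 2
```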

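The system of equations for the coefficients $c_k$ that maximize (12) subject to (13) can be written as [26]

$$\lambda c_k + \sum_{j=0}^{r-1}\left(4\alpha A_{kj} + \beta B_{kj}\right)c_j = \sum_{i=1}^{N}\frac{\varphi_k(x_i)}{\gamma(x_i)}, \qquad A_{kj} = \int_{-\infty}^{\infty}\varphi_k'\varphi_j'\,\mathrm{d}x, \quad B_{kj} = \int_{-\infty}^{\infty}\varphi_k''\varphi_j''\,\mathrm{d}x, \tag{16}$$

for $k = 0, \dots, r-1$, where $\lambda$ is the Lagrange multiplier associated with (13). The system can be solved iteratively as follows. Initially, $c_0 = 1$ and $c_k = 0$ for $k = 1, \dots, r-1$, which gives the approximation of $X$ by a Gaussian random variable. Then, in each iteration step, the current values of $c_k$ are substituted into the right-hand side of (16), and the system is solved as a linear system with the unknowns $c_k$ appearing on the left-hand side of (16). Once the linear system is solved, the coefficients are normalized to satisfy (13). The corresponding Lagrange multiplier is calculated as

$$\lambda = N - \Phi(\gamma), \tag{17}$$

which follows from multiplying (16) by $c_k$, summing over $k$ and using (13).

The $r + 1$ variables ($r$ coefficients $c_k$ and the Lagrange multiplier $\lambda$) are computed iteratively. In accordance with [26], the algorithm is stopped when $\lambda$ does not change within the desired precision in subsequent iterations.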
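The iteration described above can be sketched in NumPy as follows. Several choices here are assumptions not taken from the text: the values $\alpha = \beta = 1$ and $r = 10$ are purely illustrative; the start is the projection of a unit-variance Gaussian amplitude onto the basis instead of $c_0 = 1$ (which corresponds to a Gaussian of variance 1/2, narrower than the standardized data); and each new iterate is averaged with the previous one, a damping step we add because, in our reading, the plain substitution can oscillate around the solution. A fixed point of the damped scheme still satisfies the stationarity equations and the formula for $\lambda$. All names are hypothetical.

```python
import numpy as np


def hermite_functions(x, r):
    # Orthonormal Hermite functions phi_0..phi_{r-1} via the three-term recurrence
    x = np.atleast_1d(np.asarray(x, dtype=float))
    phi = np.zeros((r, x.size))
    phi[0] = np.pi ** -0.25 * np.exp(-x ** 2 / 2)
    if r > 1:
        phi[1] = np.sqrt(2.0) * x * phi[0]
    for k in range(1, r - 1):
        phi[k + 1] = np.sqrt(2.0 / (k + 1)) * x * phi[k] - np.sqrt(k / (k + 1)) * phi[k - 1]
    return phi


def derivative_matrix(n):
    # Coefficient map for phi_k' = sqrt(k/2) phi_{k-1} - sqrt((k+1)/2) phi_{k+1}
    D = np.zeros((n + 1, n))
    for k in range(n):
        if k > 0:
            D[k - 1, k] = np.sqrt(k / 2)
        D[k + 1, k] = -np.sqrt((k + 1) / 2)
    return D


def mpl_fit(t, r=10, alpha=1.0, beta=1.0, tol=1e-8, max_iter=500):
    """Sketch of the MPL fit of gamma(x); returns (c, lam, mu, sigma)."""
    y = np.log(np.asarray(t, dtype=float))
    mu, sigma = y.mean(), y.std(ddof=1)                # Eq. (9)
    x = (y - mu) / sigma                               # Eq. (8)
    N = x.size
    D1 = derivative_matrix(r)
    D2 = derivative_matrix(r + 1) @ D1
    P = 4 * alpha * D1.T @ D1 + beta * D2.T @ D2       # Phi(gamma) = c^T P c, Eq. (15)
    basis = hermite_functions(x, r)                    # r x N values phi_k(x_i)
    # Assumed start: projection of the N(0, 1) amplitude (grid spacing cancels on normalizing)
    grid = np.linspace(-15, 15, 3001)
    c = hermite_functions(grid, r) @ ((2 * np.pi) ** -0.25 * np.exp(-grid ** 2 / 4))
    c /= np.linalg.norm(c)
    lam = N - c @ P @ c                                # Eq. (17)
    for _ in range(max_iter):
        rhs = basis @ (1.0 / (c @ basis))              # sum_i phi_k(x_i) / gamma(x_i)
        c_new = np.linalg.solve(lam * np.eye(r) + P, rhs)
        c_new /= np.linalg.norm(c_new)                 # constraint (13)
        c = c + c_new                                  # damping by averaging (assumption)
        c /= np.linalg.norm(c)
        lam_new = N - c @ P @ c
        converged = abs(lam_new - lam) < tol           # stopping rule on lambda
        lam = lam_new
        if converged:
            break
    return c, lam, mu, sigma
```

For a sample `t`, the call `c, lam, mu, sigma = mpl_fit(t)` returns the amplitude coefficients together with the quantities (9), which are all that the entropy and Fisher information estimators require.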

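After the score-maximizing coefficients $c_k$ are obtained, and thus the density of $X$ is estimated, the estimates of the dispersion coefficients in (2) and (4) are calculated from the following entropy and Fisher information estimators. The change of variables $T = \exp(\sigma X + \mu)$ gives

$$\hat h(T) = \hat h(X) + \mu + \ln\sigma + \sigma\,\hat E(X), \tag{18}$$

where $\hat h(X) = -\int \hat\gamma^2(x)\ln\hat\gamma^2(x)\,\mathrm{d}x$ is the estimator of the differential entropy of $X$, $\mu$ and $\sigma$ are given by (9), and $\hat E(X) = \int x\,\hat\gamma^2(x)\,\mathrm{d}x$ is the estimated expected value of $X$. (Numerically, $\hat E(X)$ is usually small and can be neglected. Its value is influenced by the number, $r$, of Hermite functions considered.)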

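Substituting $f(t) = \hat\gamma^2(x)/(\sigma t)$ with $x = (\ln t - \mu)/\sigma$ into (5), the Fisher information estimator follows, by an analogous change of variables, as

$$\hat J(T) = \frac{e^{-2\mu}}{\sigma^2}\int_{-\infty}^{\infty} e^{-2\sigma x}\left[2\hat\gamma'(x) - \sigma\hat\gamma(x)\right]^2\mathrm{d}x. \tag{19}$$

The calculation requires the first derivative of $\varphi_k$ [22],

$$\varphi_k'(x) = \sqrt{2k}\,\varphi_{k-1}(x) - x\,\varphi_k(x) = \sqrt{\frac{k}{2}}\,\varphi_{k-1}(x) - \sqrt{\frac{k+1}{2}}\,\varphi_{k+1}(x), \qquad \varphi_{-1} \equiv 0. \tag{20}$$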
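Given the fitted coefficients, (18) and (19) are one-dimensional integrals over $x$ that can be approximated on a fine grid. In this sketch (hypothetical names, grid limits chosen for illustration), $E(T)$ is computed from the fitted density, although the sample mean could be used instead. With $c = (1, 0, 0)$, i.e. $X \sim N(0, 1/2)$, the variable $T$ is lognormal and the output can be compared with closed-form values.

```python
import numpy as np


def hermite_functions(x, r):
    # Orthonormal Hermite functions phi_0..phi_{r-1} via the three-term recurrence
    x = np.atleast_1d(np.asarray(x, dtype=float))
    phi = np.zeros((r, x.size))
    phi[0] = np.pi ** -0.25 * np.exp(-x ** 2 / 2)
    if r > 1:
        phi[1] = np.sqrt(2.0) * x * phi[0]
    for k in range(1, r - 1):
        phi[k + 1] = np.sqrt(2.0 / (k + 1)) * x * phi[k] - np.sqrt(k / (k + 1)) * phi[k - 1]
    return phi


def mpl_entropy_fisher(c, mu, sigma, lo=-15.0, hi=15.0, n=30001):
    """Plug-in h(T) (Eq. 18), J(T) (Eq. 19) and E(T) from amplitude coefficients c."""
    c = np.asarray(c, dtype=float)
    x = np.linspace(lo, hi, n)
    dx = x[1] - x[0]
    phi = hermite_functions(x, c.size)
    dphi = -x * phi                                              # Eq. (20): -x phi_k ...
    dphi[1:] += np.sqrt(2.0 * np.arange(1, c.size))[:, None] * phi[:-1]  # ... + sqrt(2k) phi_{k-1}
    gam, dgam = c @ phi, c @ dphi
    g = gam ** 2
    pos = g > 0
    h_X = -np.sum(g[pos] * np.log(g[pos])) * dx
    E_X = np.sum(x * g) * dx
    h_T = h_X + mu + np.log(sigma) + sigma * E_X                 # Eq. (18)
    J_T = (np.exp(-2 * mu) / sigma ** 2
           * np.sum(np.exp(-2 * sigma * x) * (2 * dgam - sigma * gam) ** 2) * dx)  # Eq. (19)
    E_T = np.sum(np.exp(sigma * x + mu) * g) * dx                # mean of T = exp(sigma X + mu)
    return h_T, J_T, E_T


h_T, J_T, E_T = mpl_entropy_fisher([1.0, 0.0, 0.0], mu=0.2, sigma=0.8)
c_h = np.exp(h_T - 1) / E_T          # Eq. (2)
c_J = 1 / (E_T * np.sqrt(J_T))       # Eq. (4)
print(c_h, c_J)
```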

3. Results

Neurons communicate via the process of synaptic transmission, which is triggered by an electrical discharge called the action potential or spike. Since the time intervals between individual spikes are relatively large when compared to the spike duration, and since for any particular neuron the “shape” or character of a spike remains constant, the spikes are usually treated as point events in time. A spike train consists of the times of spike occurrences $\tau_0, \tau_1, \dots, \tau_n$, equivalently described by a set of $n$ interspike intervals (ISIs), $t_i = \tau_i - \tau_{i-1}$, $i = 1, \dots, n$, and these ISIs are treated as independent realizations of the random variable $T \sim f(t)$. The probabilistic description of spiking results from the fact that the positions of spikes cannot be predicted deterministically due to the presence of intrinsic noise; only the probability that a spike occurs can be given [27,28,29]. In real neuronal data, however, the non-renewal property of spike trains is often observed [30,31]. Taking the serial correlation of the ISIs, as well as any other statistical dependence, into account would result in a decrease of the entropy and hence of the value of $c_h$; see [12].

We compare exact and estimated values of the dispersion coefficients on three widely used statistical models of ISIs: gamma, inverse Gaussian and lognormal. Since only the relative coefficients $c_h$ and $c_J$ are discussed, we parameterize each distribution by its coefficient of variation $c_v$ while keeping $E(T) = 1$.

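The gamma distribution is one of the most frequent statistical descriptors of ISIs used in the analysis of experimental data [32]. The probability density function of the gamma distribution can be written as

$$f(t) = \frac{1}{\Gamma\!\left(1/c_v^2\right)\left(c_v^2\right)^{1/c_v^2}}\;t^{1/c_v^2 - 1}\exp\left(-\frac{t}{c_v^2}\right), \tag{21}$$

where $\Gamma$ is the gamma function [22]. The differential entropy is equal to [2]

$$h(T) = \ln\Gamma\!\left(\frac{1}{c_v^2}\right) + \left(1 - \frac{1}{c_v^2}\right)\psi\!\left(\frac{1}{c_v^2}\right) + \frac{1}{c_v^2} + \ln c_v^2, \tag{22}$$

where $\psi$ denotes the digamma function [22]. The Fisher information about the location parameter is

$$J(T) = \frac{1}{c_v^2\left(1 - 2c_v^2\right)}, \qquad c_v < \frac{1}{\sqrt{2}}. \tag{23}$$

The Fisher information diverges for $c_v \ge 1/\sqrt{2}$, with the exception of $c_v = 1$ (corresponding to the exponential distribution), where $J(T) = 1$; however, the Cramér–Rao-based interpretation of $J(T)$ does not hold in this case, since $f(0) \neq 0$; see [13] for details.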
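The closed forms (22) and (23) give the theoretical gamma curves of Figure 1. A minimal pure-Python sketch follows; the digamma routine is our own (upward recurrence followed by the standard asymptotic series), the names are hypothetical, and returning 0 where $J(T)$ diverges is a convention of this sketch.

```python
import math


def digamma(x):
    """psi(x) for x > 0: shift upward with psi(x) = psi(x + 1) - 1/x, then an asymptotic series."""
    result = 0.0
    while x < 10.0:
        result -= 1.0 / x
        x += 1.0
    inv2 = 1.0 / (x * x)
    return result + math.log(x) - 0.5 / x - inv2 * (1 / 12 - inv2 * (1 / 120 - inv2 / 252))


def gamma_entropy(cv):
    """Eq. (22): shape k = 1/cv^2 and scale cv^2, so that E(T) = 1."""
    k = 1.0 / cv ** 2
    return math.lgamma(k) + (1 - k) * digamma(k) + k + math.log(cv ** 2)


def gamma_ch(cv):
    return math.exp(gamma_entropy(cv) - 1)   # Eq. (2) with E(T) = 1


def gamma_cJ(cv):
    """Eq. (4) with Eq. (23); J(T) is infinite for cv >= 1/sqrt(2) except at cv = 1."""
    if cv < 1 / math.sqrt(2):
        return cv * math.sqrt(1 - 2 * cv ** 2)
    if cv == 1.0:
        return 1.0   # exponential case: J(T) = 1, but the Cramer-Rao interpretation fails
    return 0.0       # 1/sqrt(J) with J infinite (convention of this sketch)


for cv in (0.25, 0.5, 1.0, 1.5):
    print(cv, round(gamma_ch(cv), 4), round(gamma_cJ(cv), 4))
```

Since the exponential distribution maximizes entropy among positive variables with a given mean, `gamma_ch` should peak at exactly $c_v = 1$, consistent with Figure 1.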

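The inverse Gaussian distribution is often used to describe ISIs and is fitted to experimental data. It arises as a result of the spiking activity of a stochastic variant of the perfect integrate-and-fire neuronal model [33]. The density of this distribution is

$$f(t) = \frac{1}{\sqrt{2\pi c_v^2 t^3}}\exp\left[-\frac{(t - 1)^2}{2c_v^2\,t}\right]. \tag{24}$$

The differential entropy of this distribution is equal to

$$h(T) = \frac{1}{2}\ln\left(2\pi e\,c_v^2\right) + \frac{3}{2}\sqrt{\frac{2}{\pi c_v^2}}\;e^{1/c_v^2}\,K'_{-1/2}\!\left(\frac{1}{c_v^2}\right), \tag{25}$$

where $K'_{-1/2}$ denotes the first derivative of the modified Bessel function of the second kind with respect to its order [22], $K'_{-1/2}(z) = \partial K_\nu(z)/\partial\nu\,\big|_{\nu = -1/2}$. The Fisher information of the inverse Gaussian distribution results in

$$J(T) = \frac{1}{c_v^2} + \frac{9}{2} + \frac{21}{2}c_v^2 + \frac{21}{2}c_v^4. \tag{26}$$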
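The closed form (26) can be cross-checked numerically. The sketch below (hypothetical names) integrates (5) for the density (24) after the substitution $u = \ln t$, using the score $\partial \ln f/\partial t = -3/(2t) - (1 - t^{-2})/(2c_v^2)$ obtained by differentiating the logarithm of (24).

```python
import math


def ig_pdf(t, cv):
    """Inverse Gaussian density of Eq. (24): E(T) = 1, coefficient of variation cv."""
    return math.exp(-(t - 1) ** 2 / (2 * cv ** 2 * t)) / math.sqrt(2 * math.pi * cv ** 2 * t ** 3)


def ig_fisher(cv):
    """Closed form of Eq. (26)."""
    c2 = cv * cv
    return 1 / c2 + 4.5 + 10.5 * c2 + 10.5 * c2 * c2


def ig_cJ(cv):
    return 1 / math.sqrt(ig_fisher(cv))   # Eq. (4) with E(T) = 1


def ig_fisher_numeric(cv, lo=-20.0, hi=8.0, n=40000):
    """Eq. (5) by Simpson's rule in u = ln t (so dt = t du); n must be even."""
    h = (hi - lo) / n
    total = 0.0
    for i in range(n + 1):
        t = math.exp(lo + i * h)
        score = -1.5 / t - (1 - 1 / t ** 2) / (2 * cv ** 2)   # d ln f(t) / dt
        weight = 1 if i in (0, n) else (4 if i % 2 else 2)
        total += weight * score ** 2 * ig_pdf(t, cv) * t
    return total * h / 3


for cv in (0.3, 1.0, 2.0):
    print(cv, ig_fisher(cv), ig_fisher_numeric(cv))
```

The logarithmic substitution spreads the sharp peak near $t = 0$ (for large $c_v$) over many grid points, which is why an equally spaced grid in $u$ is used rather than one in $t$.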

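The lognormal distribution is rarely presented as a model distribution of ISIs. However, it represents a common descriptor in the analysis of experimental data [33], with density

$$f(t) = \frac{1}{t\sqrt{2\pi\ln(1 + c_v^2)}}\exp\left\{-\frac{\left[\ln t + \frac{1}{2}\ln(1 + c_v^2)\right]^2}{2\ln(1 + c_v^2)}\right\}. \tag{27}$$

The differential entropy of this distribution is equal to

$$h(T) = \frac{1}{2}\ln\left[2\pi e\ln(1 + c_v^2)\right] - \frac{1}{2}\ln(1 + c_v^2), \tag{28}$$

and the Fisher information is given by

$$J(T) = \left(1 + c_v^2\right)^3\left[1 + \frac{1}{\ln(1 + c_v^2)}\right]. \tag{29}$$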
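For the lognormal model, (28) and (29) are elementary, and the locations of the maxima can be checked directly. In this sketch (hypothetical names), $c_h$ peaks at $c_v = \sqrt{e - 1}$, which follows from maximizing $\frac{1}{2}\ln s^2 - \frac{1}{2}s^2$ over $s^2 = \ln(1 + c_v^2)$.

```python
import math


def lognormal_entropy(cv):
    """Eq. (28); s2 = ln(1 + cv^2) is the variance of ln T when E(T) = 1."""
    s2 = math.log1p(cv ** 2)
    return 0.5 * math.log(2 * math.pi * math.e * s2) - 0.5 * s2


def lognormal_fisher(cv):
    """Eq. (29)."""
    s2 = math.log1p(cv ** 2)
    return (1 + cv ** 2) ** 3 * (1 + 1 / s2)


def lognormal_ch(cv):
    return math.exp(lognormal_entropy(cv) - 1)       # Eq. (2), E(T) = 1


def lognormal_cJ(cv):
    return 1 / math.sqrt(lognormal_fisher(cv))       # Eq. (4), E(T) = 1


grid = [i / 1000 for i in range(10, 3001)]
print(max(grid, key=lognormal_ch), max(grid, key=lognormal_cJ))
```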

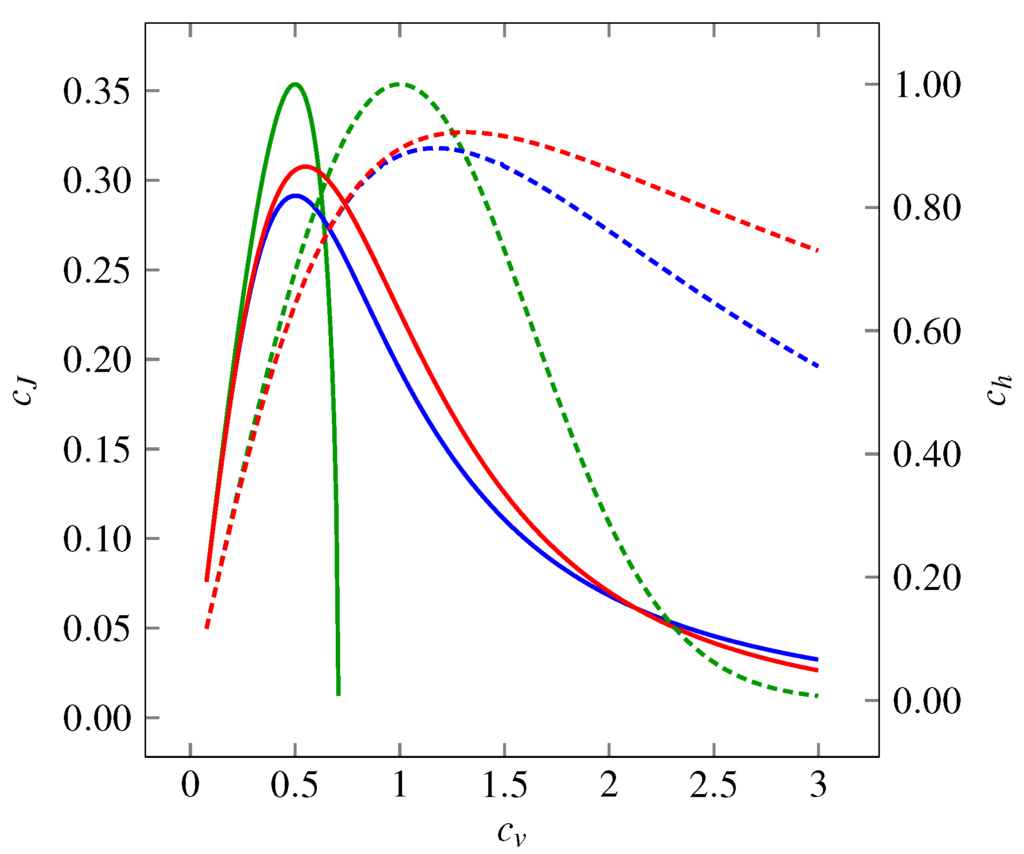

The theoretical values of the coefficients $c_h$ and $c_J$ as functions of $c_v$ are shown in Figure 1 for all three distributions mentioned above. The functions form hill-shaped curves with local maxima achieved at different values of $c_v$. Asymptotically, $c_h$ as well as $c_J$ tend to zero for $c_v \to 0$ or $c_v \to \infty$ for the three models.

Figure 1.

Variability represented by the coefficient of variation $c_v$, the entropy-based coefficient $c_h$ (dashed curves, right-hand-side axis) and the Fisher information-based coefficient $c_J$ (solid curves, left-hand-side axis) of three probability distributions: gamma (green curves), inverse Gaussian (blue curves) and lognormal (red curves). The entropy-based coefficient, $c_h$, expresses the evenness of the distribution. As a function of $c_v$, it shows a maximum at $c_v = 1$ for the gamma distribution (corresponding to the exponential distribution) and at values of $c_v$ somewhat above 1 for the inverse Gaussian and lognormal distributions. For all the distributions, $c_h \to 0$ as $c_v \to 0$ or $c_v \to \infty$. The Fisher information-based coefficient, $c_J$, grows as the distributions become “smoother”. Its overall dependence on $c_v$ shows a maximum around $c_v \approx 0.5$. Similarly to the $c_h$ dependencies, $c_J \to 0$ as $c_v \to 0$ or $c_v \to \infty$ (this does not hold for the gamma distribution, where $c_J$ can be calculated only for $c_v < 1/\sqrt{2}$).

To explore the accuracy of the estimators of the coefficients $c_h$ and $c_J$ when the MPL estimates of the densities are employed, we performed three separate simulation studies. All the simulations and calculations were performed in the free software package R [34]. For each model, with the probability distributions (21), (24) and (27), respectively, the coefficient of variation $c_v$ was varied over an equally spaced grid of values. For each value of $c_v$, one thousand samples, each consisting of 1000 random numbers (a common number of events in experimental records of neuronal firing), were drawn from each of the three distributions.
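The sampling part of this design can be mimicked with Python's standard `random` module (the study itself used R). This is a sketch with hypothetical helper names; it parameterizes each model by $c_v$ with $E(T) = 1$, as in (21), (24) and (27), and it draws inverse Gaussian variates with the transformation method of Michael, Schucany and Haas, a choice of ours that the text does not specify.

```python
import math
import random


def sample_unit_mean(model, cv, n, rng=random):
    """Draw n values with E(T) = 1 and coefficient of variation cv."""
    if model == "gamma":
        k = 1 / cv ** 2
        return [rng.gammavariate(k, cv ** 2) for _ in range(n)]   # mean = shape * scale = 1
    if model == "lognormal":
        s2 = math.log1p(cv ** 2)                                   # variance of ln T
        return [rng.lognormvariate(-s2 / 2, math.sqrt(s2)) for _ in range(n)]
    if model == "invgauss":
        lam = 1 / cv ** 2                                          # mean 1, shape 1/cv^2
        out = []
        for _ in range(n):
            y = rng.gauss(0.0, 1.0) ** 2
            x = 1 + y / (2 * lam) - math.sqrt(4 * lam * y + y * y) / (2 * lam)
            out.append(x if rng.random() <= 1 / (1 + x) else 1 / x)
        return out
    raise ValueError(f"unknown model: {model}")


def sample_cv(xs):
    """Sample standard deviation divided by sample mean."""
    m = sum(xs) / len(xs)
    return math.sqrt(sum((v - m) ** 2 for v in xs) / (len(xs) - 1)) / m


random.seed(3)
samples = {m: sample_unit_mean(m, 0.5, 1000) for m in ("gamma", "lognormal", "invgauss")}
print({m: round(sample_cv(xs), 3) for m, xs in samples.items()})
```

Repeating such draws for every value of $c_v$ on the grid and passing each sample to the estimators reproduces the structure of the study; the number of replicates and the grid itself are left to the user.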

The MPL method was employed on each generated sample to estimate the density. We kept the number $r$ of basis functions fixed; larger bases were examined too, with negligible differences in the estimates for the selected models. The values of the parameters $\alpha$ and $\beta$ in (15) were chosen in accordance with the suggestion of [26] (Appendix A).

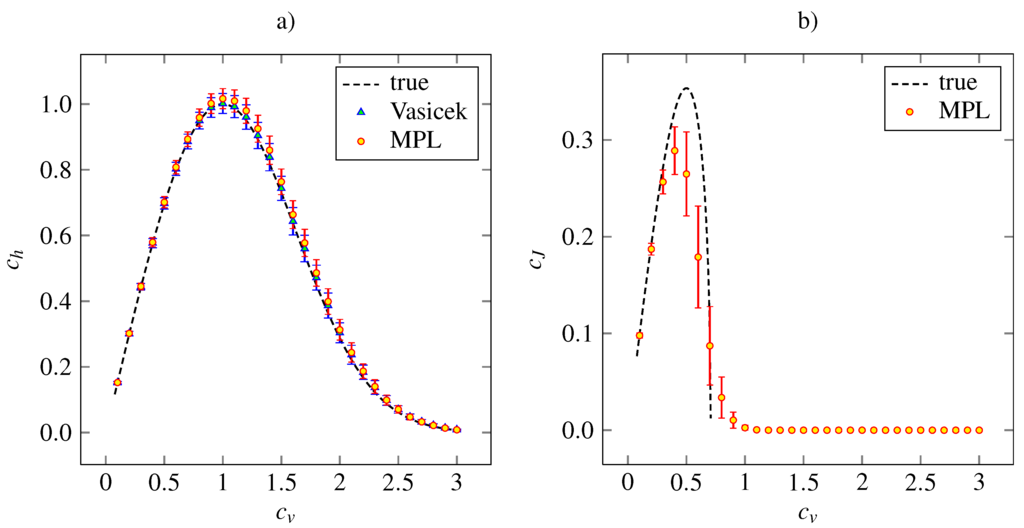

The outcome of the simulation study for the gamma density (21) is presented in Figure 2, where the theoretical and estimated values of $c_h$ and $c_J$ for given $c_v$ are plotted. In addition, the values of $c_h$ calculated by Vasicek's estimator are shown. The MPL method results in precise estimation of $c_h$ for low values of $c_v$ and in slightly overestimated values of $c_h$ for larger $c_v$. Vasicek's estimator gives slightly overestimated values too. Overall, the performances of Vasicek's and the MPL estimators are comparable. The maximum of the MPL estimator of $c_h$ is achieved at the theoretical value, $c_v = 1$. The standard deviation of the estimate grows as $c_v$ increases from zero and, for larger $c_v$, begins to decrease slowly.

We conclude that the MPL estimator of $c_J$ is accurate for low $c_v$. For larger $c_v$ it underestimates $c_J$, and the estimate tends to zero as $c_v \to 1/\sqrt{2}$, as do the theoretical values. The high bias of $\hat c_J$ for high $c_v$ is caused by an inappropriate choice of the parameters $\alpha$ and $\beta$, which were kept fixed in accordance with the suggestion of [26]. Nevertheless, the main shape of the dependence on $c_v$ is preserved, and the MPL estimates of $c_J$ achieve a local maximum at a value of $c_v$ slightly lower than the theoretical one, $c_v = 1/2$.

Figure 2.

The entropy-based variability coefficient $c_h$ (panel a) and the Fisher information-based variability coefficient $c_J$ (panel b), calculated nonparametrically from (18) and (19), respectively, for the gamma distribution (21). The mean values (red discs) accompanied by the standard error (red error bars) are plotted as functions of the coefficient of variation, $c_v$. The dashed lines are the theoretical curves. In panel a, the results obtained by estimator (6) are added (blue triangles indicate mean values and blue error bars the standard error). The results are based on 1000 trials of samples of size 1000 for each value of $c_v$.

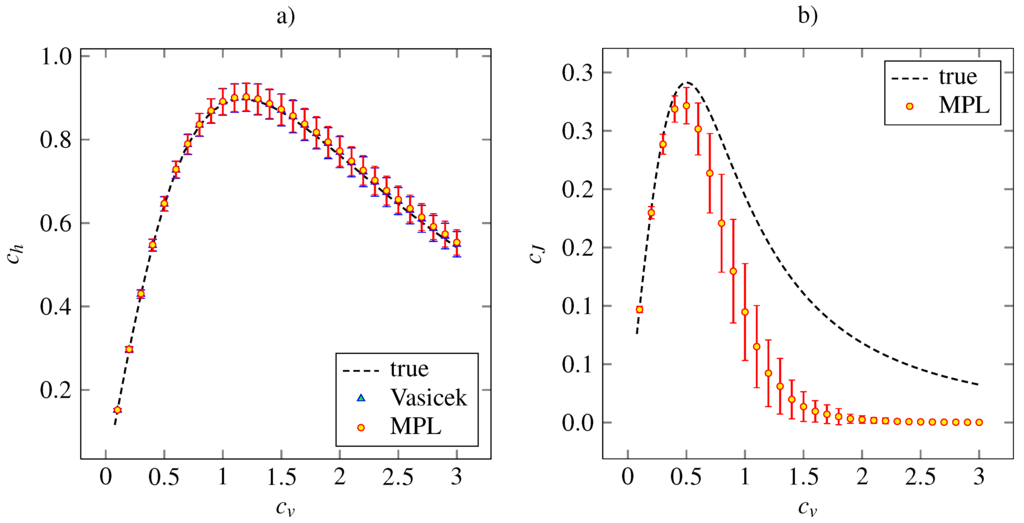

Figure 3 shows the corresponding results for the inverse Gaussian density. Vasicek's and the MPL estimates of $c_h$ are very close to the theoretical values. The estimator of $c_h$ based on the MPL method and that based on Vasicek's entropy estimator give the same mean values together with the same standard deviations. The MPL estimator of $c_J$ gives accurate and precise results for small $c_v$. For higher $c_v$, the estimated value is lower than the true one. The maximum of the estimated $c_J$ is achieved at the same point as the theoretical maximum. The asymptotic decrease of the estimated $c_J$ to zero is faster than that of the true dependence. In our experience, this can be improved by setting higher values of $\alpha$ and $\beta$, which gives a larger weight to the roughness penalty (15).

Figure 3.

Estimates of the variability coefficients $c_h$ (panel a) and $c_J$ (panel b) for the inverse Gaussian distribution (24). The notation and layout are the same as in Figure 2.

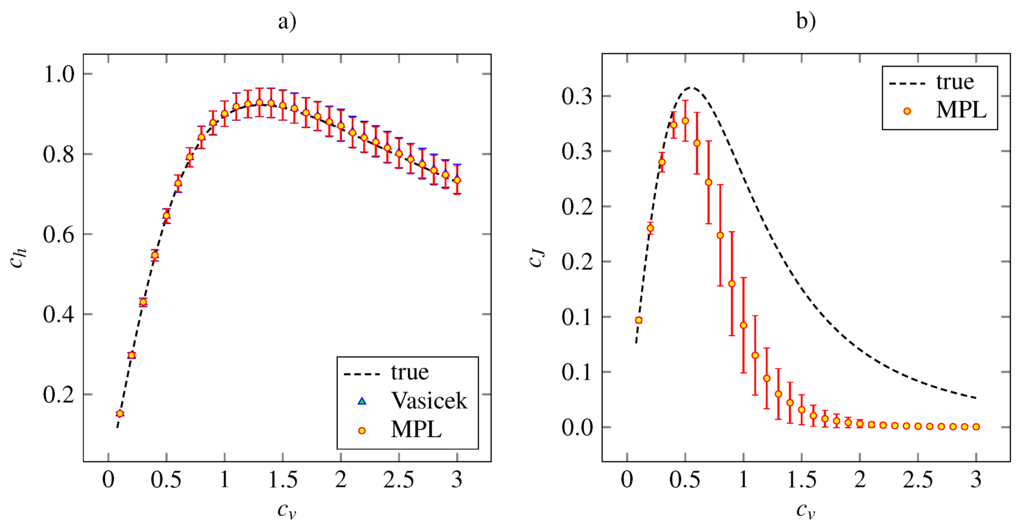

The results of the simulation study on the lognormal distribution with density (27) are plotted in Figure 4, together with the theoretical dependencies and the results for Vasicek's estimator of the entropy. The MPL estimators of both $c_h$ and $c_J$ show qualitatively the same accuracy and precision as the analogous estimators for the inverse Gaussian model.

Figure 4.

Estimates of the variability coefficients $c_h$ (panel a) and $c_J$ (panel b) for the lognormal distribution (27). The notation and layout are the same as in Figure 2.

4. Discussion and Conclusions

In proposing the dispersion measures based on entropy and Fisher information, we were motivated by the difference between the frequently confused notions of ISI variability and randomness, which represent two different concepts [1]. The proposed measures have so far been applied successfully, mainly to examine differences between various neuronal activity regimes, obtained either by simulation of neuronal models or from experimental measurements [32]. There, the comparison of neuronal spiking activity under different conditions plays a key role in resolving the question of neuronal coding. However, the methodology is not specific to research on neuronal coding; it is generally applicable whenever one needs to quantify additional properties of positive continuous random data.

In this paper, we used the MPL method of Good and Gaskins [26] to estimate the dispersion coefficients nonparametrically from data. We found that the method performs comparably with the classical Vasicek’s estimator [10] in the case of entropy-based dispersion.

The estimation of the Fisher information-based dispersion is more complicated, but we found that the MPL method gives reasonable results. In fact, so far the MPL method is the best option for estimating $c_J$ among the possibilities we tested (modified kernel methods, spline interpolation and approximation methods). The key parameters of the MPL method that affect the estimated value of $J(T)$ (and consequently of $c_J$) are the values of $\alpha$ and $\beta$ in (15). In this paper we employed the suggestion of [26]; however, we found that different settings may sometimes lead to a dramatic improvement in the estimation of $c_J$. We tested the performance of the estimation for sample sizes below 1000 and found that a significant and systematic improvement, resulting in low bias, can be reached if $\alpha$ and $\beta$ are allowed to depend on the sample size. In this sense, these parameters play a role similar to that of the parameter $m$ in Vasicek's estimator (6). We are currently working on a systematic approach to determine $\alpha$ and $\beta$ optimally, but their fine-tuning is a difficult numerical task. Nevertheless, even without fine-tuning, the performance of the entropy estimation is essentially the same as that of Vasicek's estimator.

The length of the neuronal record, and hence the sample size, is another issue related to the choice of these parameters. As emphasized, e.g., in [35,36], particularly short records can considerably distort the empirical distribution. This can be taken into account through the parameter values, by choosing whether the estimated distribution should closely fit the data or should rather be robust.

Acknowledgements

This work was supported by the Institute of Physiology RVO: 67985823, the Centre for Neuroscience P304/12/G069 and by the Grant Agency of the Czech Republic projects P103/11/0282 and P103/12/P558. We thank Petr Lansky for helpful discussion.

References

- Kostal, L.; Lansky, P.; Rospars, J.P. Review: Neuronal coding and spiking randomness. Eur. J. Neurosci. 2007, 26, 2693–2701.

- Kostal, L.; Lansky, P.; Pokora, O. Variability measures of positive random variables. PLoS One 2011, 6, e21998.

- Bercher, J.F.; Vignat, C. On minimum Fisher information distributions with restricted support and fixed variance. Inf. Sci. 2009, 179, 3832–3842.

- Frieden, B.R. Physics from Fisher Information: A Unification; Cambridge University Press: New York, NY, USA, 1998.

- Berger, A.L.; Della Pietra, V.J.; Della Pietra, S.A. A maximum entropy approach to natural language processing. Comput. Linguist. 1996, 22, 39–71.

- Della Pietra, S.A.; Della Pietra, V.J.; Lafferty, J. Inducing features of random fields. IEEE Trans. Pattern Anal. Mach. Intell. 1997, 19, 380–393.

- Di Crescenzo, A.; Longobardi, M. Entropy-based measure of uncertainty in past lifetime distributions. J. Appl. Probab. 2002, 39, 434–440.

- Ramírez-Pacheco, J.; Torres-Román, D.; Rizo-Dominguez, L.; Trejo-Sanchez, J.; Manzano-Pinzón, F. Wavelet Fisher’s information measure of 1/fα signals. Entropy 2011, 13, 1648–1663.

- Pennini, F.; Ferri, G.; Plastino, A. Fisher information and semiclassical treatments. Entropy 2009, 11, 972–992.

- Vasicek, O. A test for normality based on sample entropy. J. Roy. Stat. Soc. B 1976, 38, 54–59.

- Tsybakov, A.B.; van der Meulen, E.C. Root-n consistent estimators of entropy for densities with unbounded support. Scand. J. Statist. 1994, 23, 75–83.

- Cover, T.M.; Thomas, J.A. Elements of Information Theory; John Wiley and Sons, Inc.: New York, NY, USA, 1991.

- Pitman, E.J.G. Some Basic Theory for Statistical Inference; John Wiley and Sons, Inc.: New York, NY, USA, 1979.

- Ditlevsen, S.; Lansky, P. Firing variability is higher than deduced from the empirical coefficient of variation. Neural Comput. 2011, 23, 1944–1966.

- Beirlant, J.; Dudewicz, E.J.; Gyorfi, L.; van der Meulen, E.C. Nonparametric entropy estimation: An overview. Int. J. Math. Stat. Sci. 1997, 6, 17–39.

- Gupta, M.; Srivastava, S. Parametric Bayesian estimation of differential entropy and relative entropy. Entropy 2010, 12, 818–843.

- Kozachenko, L.F.; Leonenko, N.N. Sample estimate of the entropy of a random vector. Prob. Inform. Trans. 1987, 23, 95–101.

- Kraskov, A.; Stögbauer, H.; Grassberger, P. Estimating mutual information. Phys. Rev. E 2004, 69, 066138.

- Esteban, M.D.; Castellanos, M.E.; Morales, D.; Vajda, I. Monte Carlo comparison of four normality tests using different entropy estimates. Comm. Stat. Simulat. Comput. 2001, 30, 761–785.

- Miller, E.G.; Fisher, J.W. ICA using spacings estimates of entropy. J. Mach. Learn. Res. 2003, 4, 1271–1295.

- Kostal, L.; Lansky, P. Similarity of interspike interval distributions and information gain in a stationary neuronal firing. Biol. Cybern. 2006, 94, 157–167.

- Abramowitz, M.; Stegun, I.A. Handbook of Mathematical Functions, with Formulas, Graphs, and Mathematical Tables; Dover: New York, NY, USA, 1965.

- Huber, P.J. Fisher information and spline interpolation. Ann. Stat. 1974, 2, 1029–1033.

- Hall, P.; Morton, S.C. On the estimation of the entropy. Ann. Inst. Statist. Math. 1993, 45, 69–88.

- Eggermont, P.B.; LaRiccia, V.N. Best asymptotic normality of the kernel density entropy estimator for smooth densities. IEEE Trans. Inform. Theor. 1999, 45, 1321–1326.

- Good, I.J.; Gaskins, R.A. Nonparametric roughness penalties for probability densities. Biometrika 1971, 58, 255–277.

- Gerstner, W.; Kistler, W.M. Spiking Neuron Models: Single Neurons, Populations, Plasticity; Cambridge University Press: Cambridge, UK, 2002.

- Stein, R.; Gossen, E.; Jones, K. Neuronal variability: Noise or part of the signal? Nat. Rev. Neurosci. 2005, 6, 389–397.

- Shinomoto, S.; Shima, K.; Tanji, J. Differences in spiking patterns among cortical neurons. Neural Comput. 2003, 15, 2823–2842.

- Avila-Akerberg, O.; Chacron, M.J. Nonrenewal spike train statistics: Cause and functional consequences on neural coding. Exp. Brain Res. 2011, 210, 353–371.

- Farkhooi, F.; Strube, M.; Nawrot, M.P. Serial correlation in neural spike trains: Experimental evidence, stochastic modelling and single neuron variability. Phys. Rev. E 2009, 79, 021905.

- Duchamp-Viret, P.; Kostal, L.; Chaput, M.; Lansky, P.; Rospars, J.P. Patterns of spontaneous activity in single rat olfactory receptor neurons are different in normally breathing and tracheotomized animals. J. Neurobiol. 2005, 65, 97–114.

- Pouzat, C.; Chaffiol, A. Automatic spike train analysis and report generation. An implementation with R, R2HTML and STAR. J. Neurosci. Meth. 2009, 181, 119–144.

- R Development Core Team. R: A Language and Environment for Statistical Computing; R Foundation for Statistical Computing: Vienna, Austria, 2009.

- Nawrot, M.P.; Boucsein, C.; Rodriguez-Molina, V.; Riehle, A.; Aertsen, A.; Rotter, S. Measurement of variability dynamics in cortical spike trains. J. Neurosci. Meth. 2008, 169, 374–390.

- Pawlas, Z.; Lansky, P. Distribution of interspike intervals estimated from multiple spike trains observed in a short time window. Phys. Rev. E 2011, 83, 011910.

© 2012 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/3.0/).