An Improved Boundary-Aware U-Net for Ore Image Semantic Segmentation

Abstract

1. Introduction

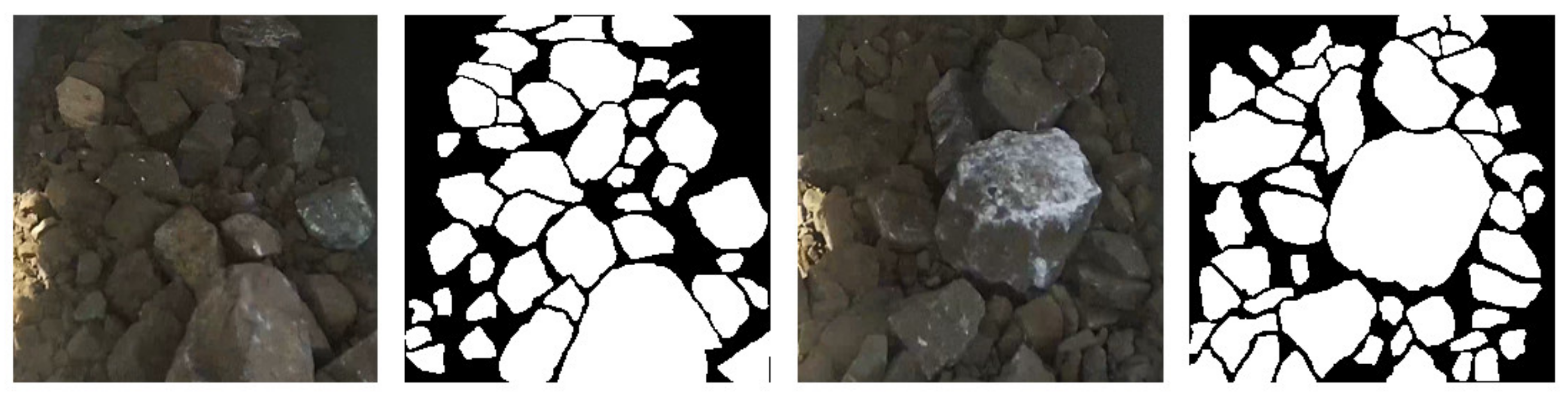

2. Dataset Acquisition and Preprocessing Methods

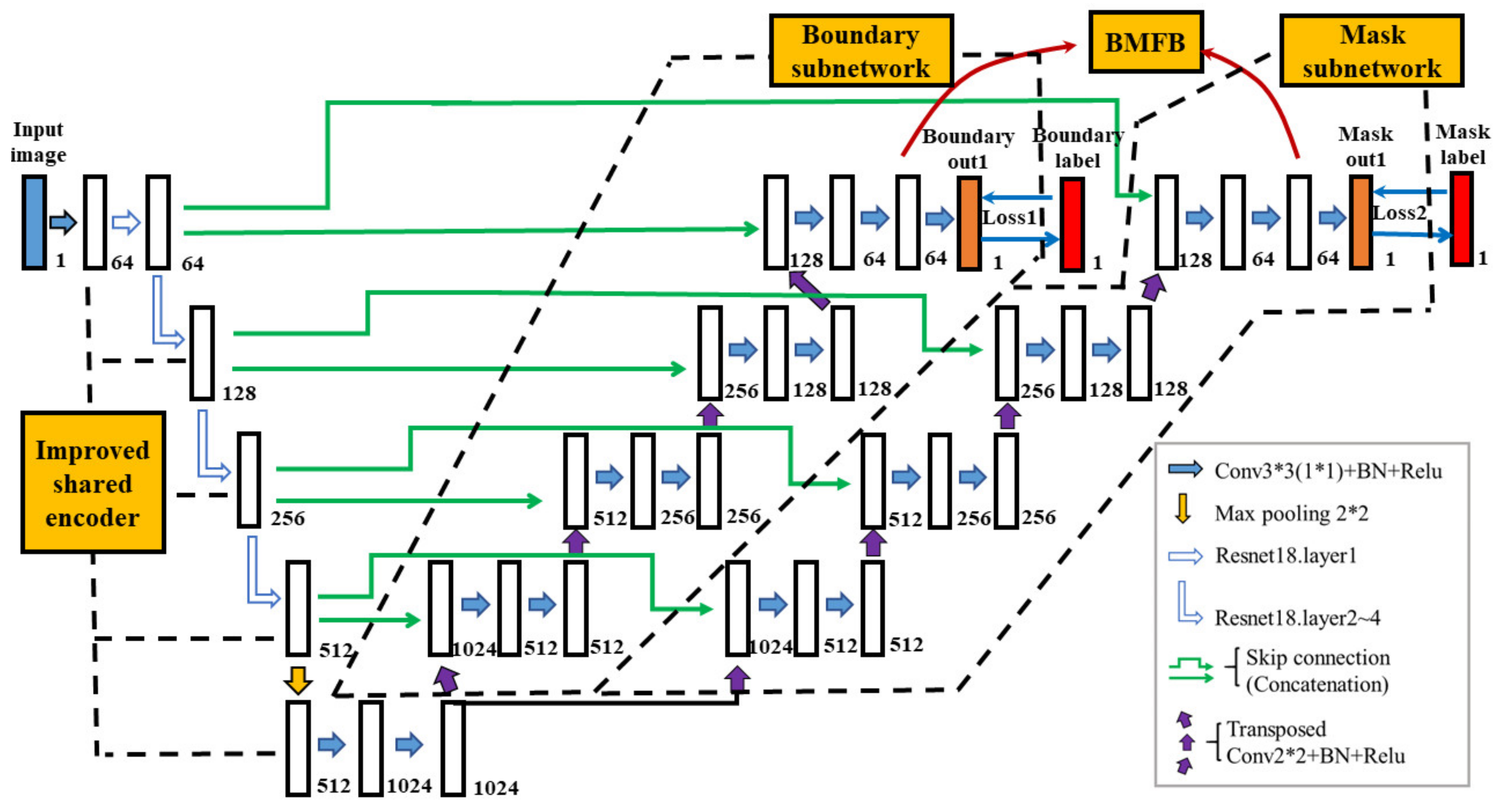

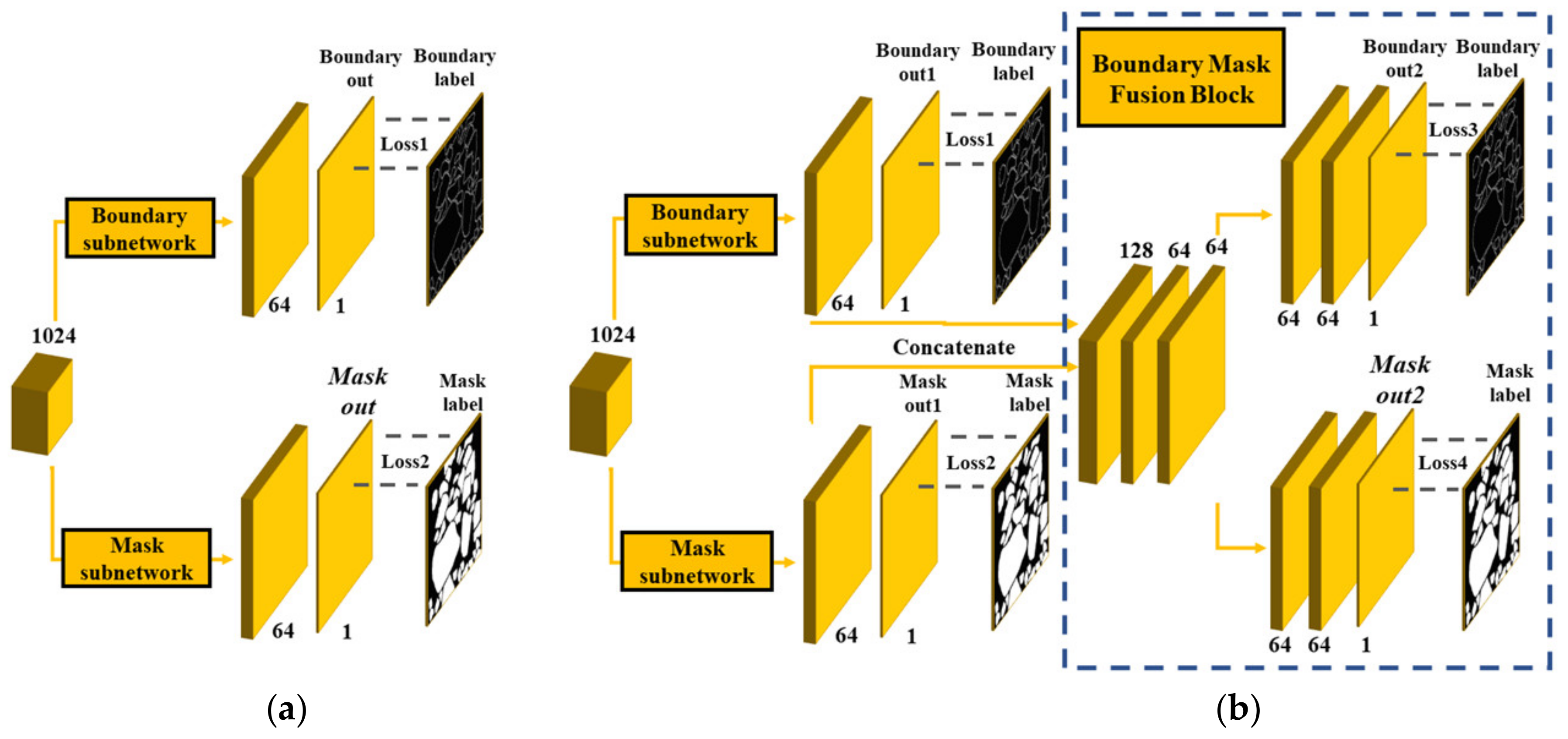

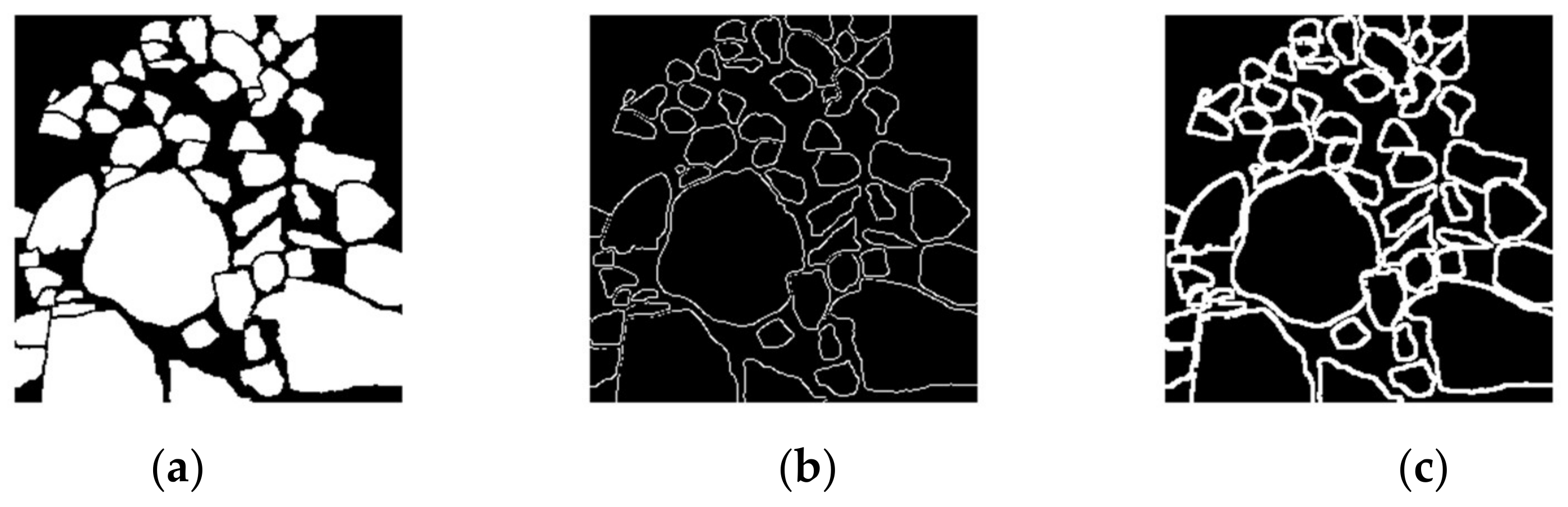

3. Boundary-Aware U-Net

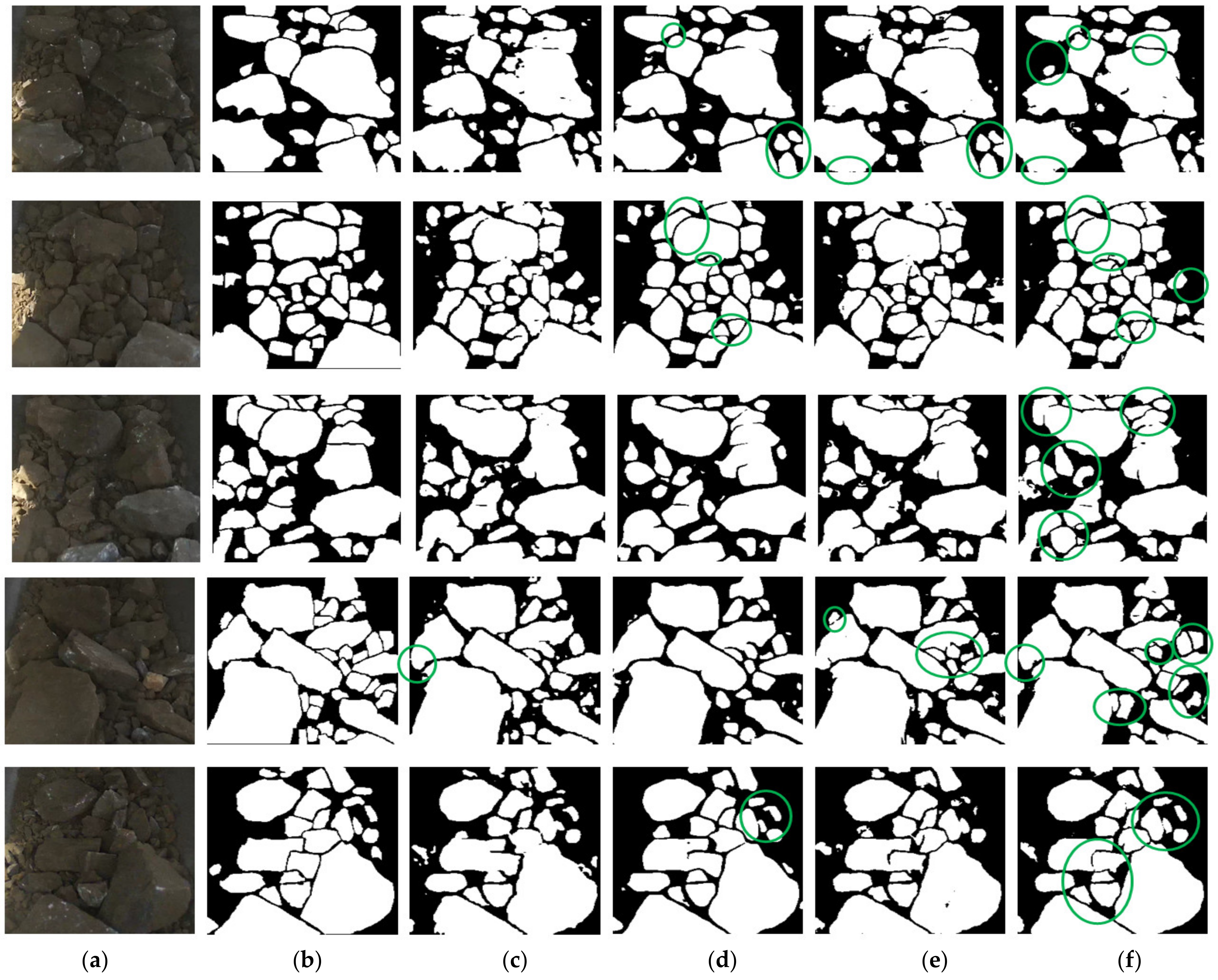

4. Experimental Results

4.1. Dataset and Preprocessing

4.2. Experimental Details

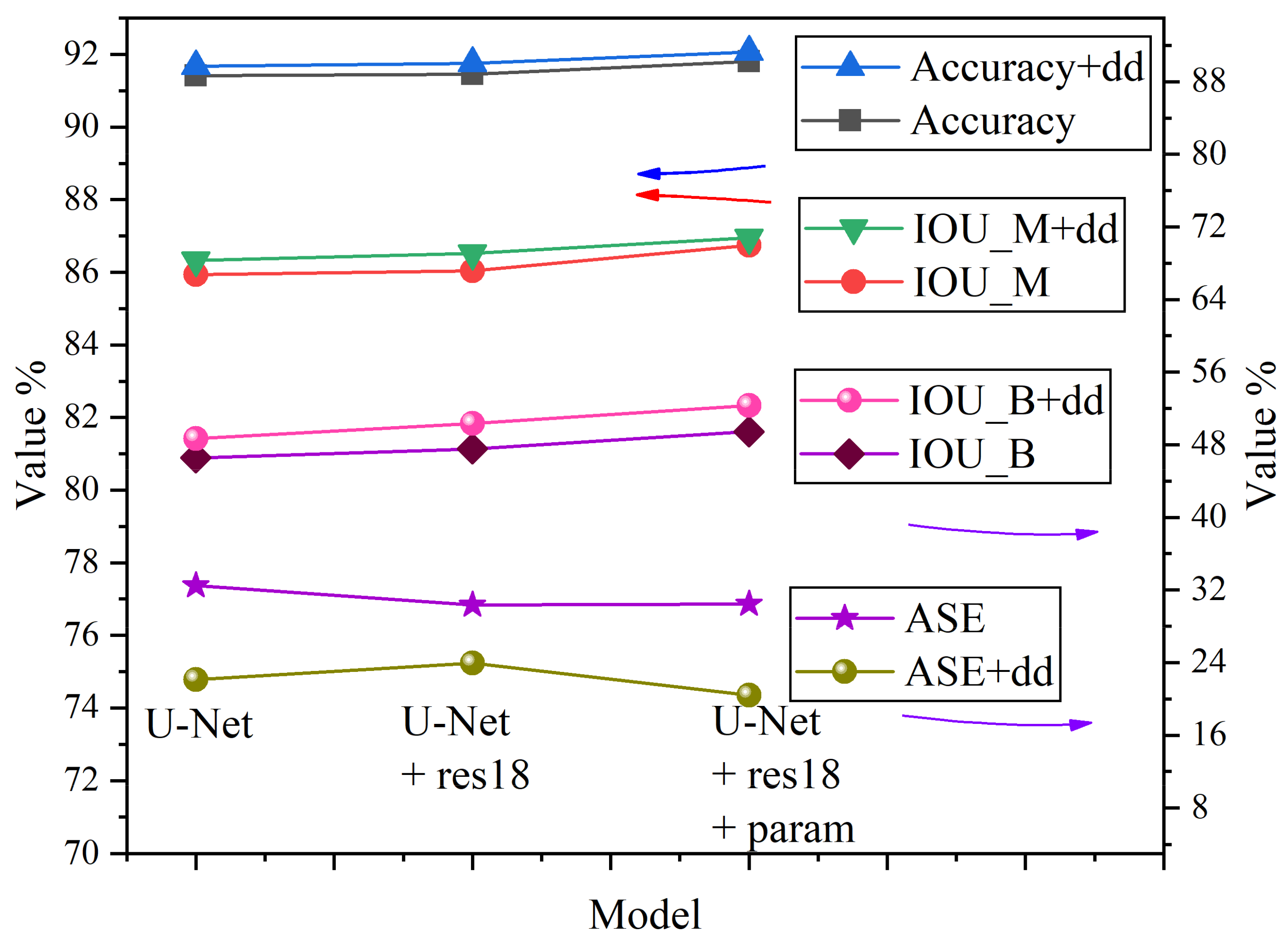

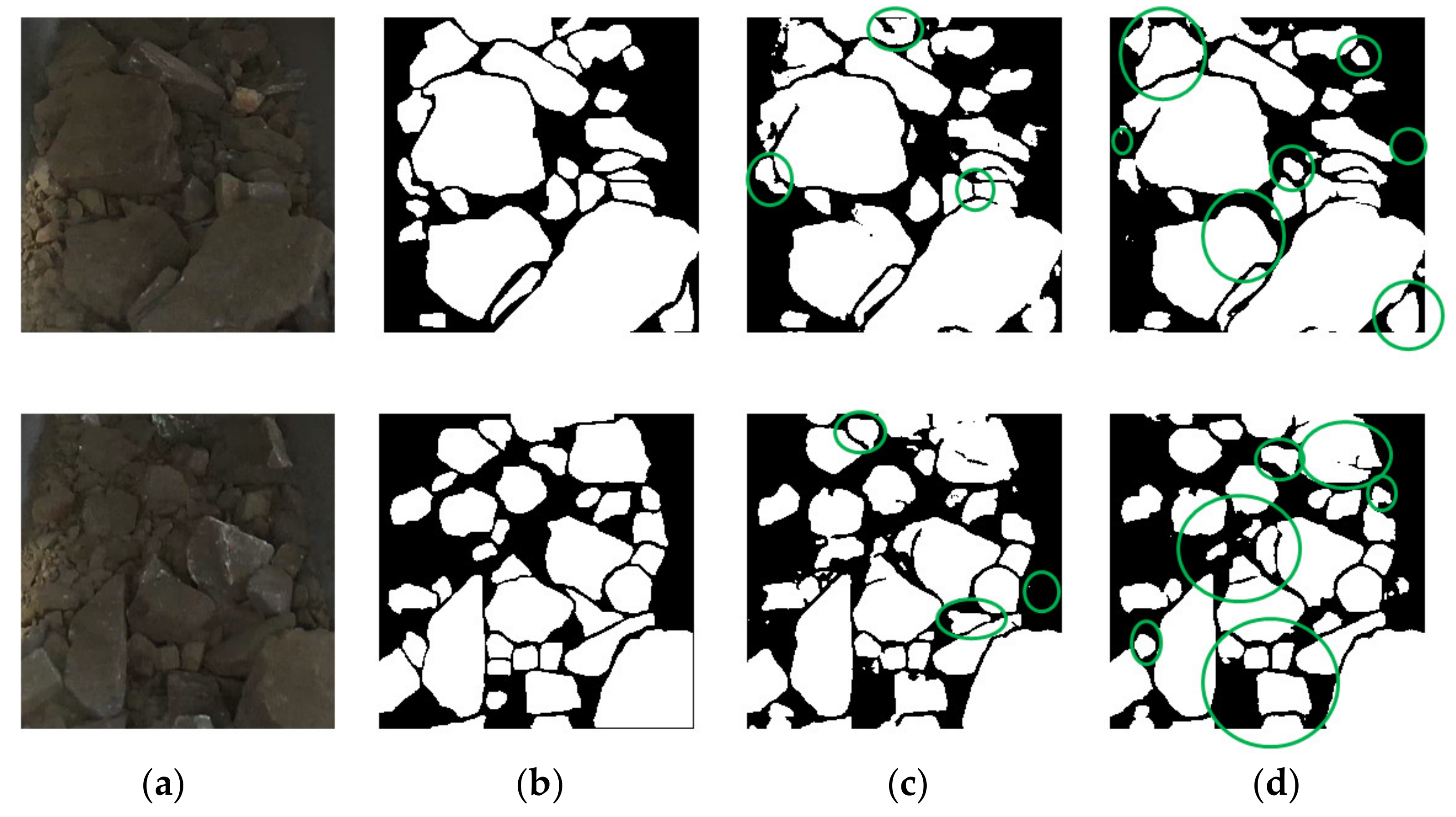

4.3. Result Analysis

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Zhang, W.; Jiang, D. The marker-based watershed segmentation algorithm of ore image. In Proceedings of the IEEE International Conference on Communication Software and Networks, Xian, China, 27–29 May 2011; pp. 472–474.

2. Zhang, G.; Liu, G.; Zhu, H. Segmentation algorithm of complex ore images based on templates transformation and reconstruction. Int. J. Min. Met. Mater. 2011, 18, 385–389.

3. Dong, K.; Jiang, D. Ore image segmentation algorithm based on improved watershed transform. Comput. Eng. Des. 2011, 34, 899–903. (In Chinese)

4. Jin, X.; Zhang, G. Ore impurities detection based on marker-watershed segmentation algorithm. Comput. Sci. Appl. 2018, 8, 21–29. (In Chinese)

5. Zhan, Y.; Zhang, G. An improved OTSU algorithm using histogram accumulation moment for ore segmentation. Symmetry 2019, 11, 431.

6. Zhang, J.; Sun, S.; Qin, S. Ore image segmentation based on optimal threshold segmentation based on genetic algorithm. Sci. Technol. Eng. 2019, 19, 105–109. (In Chinese)

7. Yang, G.; Wang, H.; Xu, W.; Li, P.; Wang, Z. Ore particle image region segmentation based on multilevel strategy. Chin. J. Anal. Lab. 2014, 35, 202–204. (In Chinese)

8. Krizhevsky, A.; Sutskever, I.; Hinton, G. ImageNet classification with deep convolutional neural networks. In Proceedings of the International Conference on Neural Information Processing Systems, Lake Tahoe, NV, USA, 3–6 December 2012; pp. 1097–1105.

9. Russakovsky, O.; Deng, J.; Su, H.; Krause, J.; Satheesh, S. ImageNet large scale visual recognition challenge. Int. J. Comput. Vision 2015, 115, 211–252.

10. He, K.; Zhang, X.; Ren, S.; Sun, J. Delving deep into rectifiers: Surpassing human-level performance on ImageNet classification. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 13–16 December 2015; pp. 1026–1034.

11. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE T. Pattern. Anal. 2017, 39, 1137–1149.

12. Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional networks for biomedical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Munich, Germany, 5–9 October 2015; pp. 234–241.

13. Zhao, H.; Shi, J.; Qi, X.; Wang, X.; Jia, J. Pyramid scene parsing network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Hawaii, USA, 21–26 July 2017; pp. 2881–2890.

14. Chen, L.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-decoder with atrous separable convolution for semantic image segmentation. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; pp. 833–851.

15. Yuan, L.; Duan, Y. A method of ore image segmentation based on deep learning. In Proceedings of the International Conference on Intelligent Computing, Wuhan, China, 15–18 August 2018; pp. 508–519.

16. Liu, X.; Zhang, Y.; Jing, H.; Wang, L.; Zhao, S. Ore image segmentation method using U-Net and Res_Unet convolutional networks. RSC Adv. 2020, 10, 9396–9406.

17. Xia, X.; Kulis, B. W-Net: A deep model for fully unsupervised image segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Hawaii, USA, 21–26 July 2017.

18. Li, H.; Pan, C.; Chen, Z.; Wulamu, A.; Yang, A. Ore image segmentation method based on U-Net and watershed. CMC Comput. Mater. Con. 2020, 65, 563–578.

19. Suprunenko, V. Ore particles segmentation using deep learning methods. J. Phys. Conf. Ser. 2020, 1679, 042089.

20. Xiao, D.; Liu, X.; Le, B.; Ji, Z.; Sun, X. An ore image segmentation method based on RDU-Net model. Sensors 2020, 20, 4979.

21. Dai, J.; Qi, H.; Xiong, Y.; Li, Y.; Zhang, G. Deformable convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Hawaii, USA, 21–26 July 2017.

22. Yang, H.; Huang, C.; Wang, L.; Luo, X. An improved encoder-decoder network for ore image segmentation. IEEE Sens. J. 2020, 1, 99–105.

23. Iglovikov, V.; Shvets, A. TernausNet: U-Net with VGG11 encoder pre-trained on ImageNet for image segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–21 June 2018.

24. Oktay, O.; Schlemper, J.; Folgoc, L. Attention U-Net: Learning where to look for the pancreas. In Proceedings of the 1st Conference on Medical Imaging with Deep Learning, Amsterdam, The Netherlands, 4–6 July 2018.

25. Ma, X.; Zhang, P.; Man, X.; Ou, L. A new belt ore image segmentation method based on the convolutional neural network and the image-processing technology. Minerals 2020, 10, 1115.

26. Chen, H.; Qi, X.; Yu, L.; Heng, P. DCAN: Deep contour-aware networks for accurate gland segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016.

27. Shen, H.; Wang, R.; Zhang, J.; McKenna, S. Boundary-aware fully convolutional network for brain tumor segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Quebec City, QC, Canada, 10–14 September 2017; pp. 433–441.

28. Oda, H.; Roth, H.; Chiba, K.; Sokolic, J. BESNet: Boundary-enhanced segmentation of cells in histopathological images. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Granada, Spain, 16–20 September 2018; pp. 228–236.

29. Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. IEEE T. Pattern. Anal. 2017, 39, 640–651.

30. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016.

31. Versaci, M.; Morabito, F. Image edge detection: A new approach based on fuzzy entropy and fuzzy divergence. Int. J. Fuzzy Syst. 2021.

32. Zhou, Y.; Dou, Q.; Chen, H.; Heng, P. CIA-Net: Robust nuclei instance segmentation with contour-aware information aggregation. In Proceedings of the International Conference on Information Processing in Medical Imaging, Hong Kong, China, 2–7 June 2019; pp. 682–693.

33. Cheng, T.; Wang, X.; Huang, L.; Liu, W. Boundary-preserving mask R-CNN. In Proceedings of the European Conference on Computer Vision, online, 23–28 August 2020; pp. 660–676.

34. Kingma, D.; Ba, J. Adam: A method for stochastic optimization. In Proceedings of the International Conference for Learning Representations, San Diego, CA, USA, 7–9 May 2015.

35. Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. In Proceedings of the International Conference on Learning Representations, San Diego, CA, USA, 7–9 May 2015.

| Layer Name | Output Size of the 3 Encoders [n × n] | Our Improved Encoder | Original ResNet18 | Original U-Net Encoder |
|---|---|---|---|---|
| Layer1_0 | 256/64/--- | 3 × 3, 64, stride 1 | 7 × 7, 64, stride 2; 3 × 3 max pool, stride 2 | - |
| Layer1 | 256/64/256 | 3 × 3, 64; 3 × 3, 64 | | |
| Layer2 | 128/32/128 | 2 × 2 max pool, stride 2; 3 × 3, 128; 3 × 3, 128 | | |
| Layer3 | 64/16/64 | 2 × 2 max pool, stride 2; 3 × 3, 256; 3 × 3, 256 | | |
| Layer4 | 32/8/32 | 2 × 2 max pool, stride 2; 3 × 3, 512; 3 × 3, 512 | | |
| Layer5 | 16/---/16 | 2 × 2 max pool, stride 2; 3 × 3, 1024; 3 × 3, 1024 | fc layer | 2 × 2 max pool, stride 2; 3 × 3, 1024; 3 × 3, 1024 |
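The output sizes in the encoder table above follow from the strides alone. The short sketch below reproduces them under two assumptions that the table implies but does not state: a 256 × 256 input, and size-preserving padding in every convolution, so that only strided convolutions and pooling change the resolution. The function name `feature_map_sizes` and the per-stage stride lists are illustrative and not taken from the paper.

```python
def feature_map_sizes(input_size, stage_strides):
    """Return the spatial size after each stage, given each stage's total stride."""
    sizes = []
    size = input_size
    for stride in stage_strides:
        # Size-preserving padding is assumed, so only the stride shrinks the map.
        size //= stride
        sizes.append(size)
    return sizes


INPUT_SIZE = 256  # assumption inferred from the first row of the table

# Improved encoder: Layer1_0 uses stride 1, Layer1 has no pooling,
# and Layer2 to Layer5 each begin with a 2 x 2 max pool of stride 2.
improved = feature_map_sizes(INPUT_SIZE, [1, 1, 2, 2, 2, 2])

# Original ResNet18: the 7 x 7 stride-2 convolution plus the stride-2 max pool
# give a total stride of 4 in Layer1_0; Layer2 to Layer4 each halve the size.
resnet18 = feature_map_sizes(INPUT_SIZE, [4, 1, 2, 2, 2])

# Original U-Net encoder: Layer1 keeps the input size, Layer2 to Layer5 halve it.
unet = feature_map_sizes(INPUT_SIZE, [1, 2, 2, 2, 2])

print("improved:", improved)
print("resnet18:", resnet18)
print("u-net:   ", unet)
```

Read this way, the improved encoder keeps four times the side length of the original ResNet18 at every shared layer (for example 256 versus 64 at Layer1) and matches the original U-Net encoder from Layer1 onward.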

| Model | Acc | F1 | IOU_M | Precision | Recall | Under_seg | Over_seg | IOU_B | ASE |
|---|---|---|---|---|---|---|---|---|---|
| U-Net | 91.41 | 92.42 | 85.94 | 92.91 | 92.04 | 9.00 | 7.96 | 46.54 | 32.49 |
| U-Net+res18 | 91.46 | 92.48 | 86.04 | 92.91 | 92.16 | 9.19 | 7.80 | 47.54 | 30.33 |
| U-Net+res18+param | 91.81 | 92.88 | 86.74 | 91.94 | 93.90 | 10.84 | 6.09 | 49.44 | 30.43 |
| U-Net+dd | 91.67 | 92.63 | 86.33 | 93.55 | 91.83 | 8.23 | 8.16 | 48.86 | 22.13 |
| U-Net+res18+dd | 91.76 | 92.75 | 86.52 | 93.59 | 92.03 | 8.32 | 7.96 | 50.36 | 23.93 |
| U-Net+res18+param+dd | 92.07 | 93.00 | 86.95 | 93.46 | 92.64 | 8.50 | 7.36 | 52.32 | 20.38 |

| Model | Acc | F1 | IOU_M | Precision | Recall | Under_seg | Over_seg | IOU_B | ASE |
|---|---|---|---|---|---|---|---|---|---|
| U-Net | 91.41 | 92.42 | 85.94 | 92.91 | 92.04 | 9.00 | 7.96 | 46.54 | 32.49 |
| U-Net+VGG16 [35] | 91.41 | 92.20 | 85.56 | 95.58 | 89.14 | 5.40 | 10.86 | 48.78 | 17.21 |
| U-Net+Res18 [30] | 91.14 | 92.07 | 85.33 | 94.12 | 90.20 | 7.46 | 9.79 | 46.18 | 22.37 |
| U-Net+dd | 91.67 | 92.63 | 86.33 | 93.55 | 91.83 | 8.23 | 8.16 | 48.60 | 22.13 |
| U-Net+res18 (ours) | 92.07 | 93.00 | 86.95 | 93.46 | 92.64 | 8.50 | 7.36 | 52.32 | 20.38 |
| U-Net+res34 (ours) | 92.07 | 93.00 | 86.94 | 93.48 | 92.61 | 8.43 | 7.38 | 52.72 | 23.22 |

| Model | Acc | F1 | IOU_M | Precision | Recall | Under_seg | Over_seg | IOU_B | ASE |
|---|---|---|---|---|---|---|---|---|---|
| U-Net | 91.41 | 92.42 | 85.94 | 92.91 | 92.04 | 9.00 | 7.96 | 46.54 | 32.49 |
| U-Net_DCAN [26] | 91.04 | 92.14 | 85.46 | 92.28 | 92.11 | 10.16 | 7.80 | 46.30 | 37.17 |
| U-Net+dd | 91.67 | 92.63 | 86.33 | 93.55 | 91.83 | 8.23 | 8.16 | 48.60 | 22.13 |

| Model | Acc | F1 | IOU_M | Precision | Recall | Under_seg | Over_seg | IOU_B | ASE |
|---|---|---|---|---|---|---|---|---|---|
| U-Net | 91.41 | 92.42 | 85.94 | 92.91 | 92.04 | 9.00 | 7.96 | 46.54 | 32.49 |
| U-Net+res18+BA [27] | 91.57 | 92.68 | 86.41 | 91.89 | 93.58 | 10.91 | 6.41 | 51.60 | 33.35 |
| U-Net+res18+dd | 92.07 | 93.00 | 86.95 | 93.46 | 92.64 | 8.50 | 7.36 | 52.72 | 20.38 |
| U-Net+res18+dd+loss3_4 | 91.61 | 92.58 | 86.23 | 93.05 | 92.21 | 9.08 | 7.70 | 50.35 | 32.29 |
| U-Net+res18+dd+mask | 91.68 | 92.52 | 86.13 | 94.35 | 90.84 | 7.10 | 9.10 | 48.98 | 26.01 |

| Model | Acc | F1 | IOU_M | Precision | Recall | Under_seg | Over_seg | IOU_B | ASE |
|---|---|---|---|---|---|---|---|---|---|
| U-Net | 91.41 | 92.42 | 85.94 | 92.91 | 92.04 | 9.00 | 7.96 | 46.54 | 32.49 |
| U-Net+dd_canny | 91.67 | 92.63 | 86.33 | 93.55 | 91.83 | 8.23 | 8.16 | 48.60 | 22.13 |
| U-Net+dd_dilate | 91.53 | 92.52 | 86.11 | 93.46 | 91.70 | 8.50 | 8.30 | 48.59 | 26.59 |

| Model | Acc | F1 | IOU_M | Precision | Recall | Under_seg | Over_seg | IOU_B | ASE | Inference Time (ms) |
|---|---|---|---|---|---|---|---|---|---|---|
| U-Net | 91.41 | 92.42 | 85.94 | 92.91 | 92.04 | 9.00 | 7.96 | 46.54 | 32.49 | 44.65 |
| FCN8 | 90.73 | 91.84 | 84.94 | 91.88 | 91.91 | 10.60 | 8.08 | 37.36 | 46.33 | 22.81 |
| PSP | 90.48 | 91.61 | 84.54 | 92.39 | 90.90 | 9.97 | 9.09 | 34.18 | 28.16 | 38.33 |
| Ours | 92.07 | 93.00 | 86.95 | 93.46 | 92.64 | 8.50 | 7.36 | 52.32 | 20.38 | 105.31 |
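For readers who want to relate the Acc, Precision, Recall, F1, and IoU columns to pixel counts, the following is a minimal sketch of the standard pixel-wise definitions for a binary ore/background mask. It is not the authors' evaluation code: the function names are illustrative, the masks are plain nested lists of 0/1, and the exact definitions behind IOU_M, IOU_B, Under_seg, Over_seg, and ASE (for example per-image averaging or boundary extraction) are not given in this section, so they are left out.

```python
def confusion_counts(pred, truth):
    """Count TP, FP, FN, TN over two equally sized binary masks (nested lists of 0/1)."""
    tp = fp = fn = tn = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            if p and t:
                tp += 1
            elif p:
                fp += 1
            elif t:
                fn += 1
            else:
                tn += 1
    return tp, fp, fn, tn


def safe_div(numerator, denominator):
    """Return numerator / denominator, or 0.0 when the denominator is zero."""
    return numerator / denominator if denominator else 0.0


def pixel_metrics(pred, truth):
    """Standard pixel-wise metrics for the foreground class, as fractions in [0, 1]."""
    tp, fp, fn, tn = confusion_counts(pred, truth)
    precision = safe_div(tp, tp + fp)
    recall = safe_div(tp, tp + fn)
    return {
        "acc": safe_div(tp + tn, tp + fp + fn + tn),
        "precision": precision,
        "recall": recall,
        "f1": safe_div(2 * precision * recall, precision + recall),
        "iou": safe_div(tp, tp + fp + fn),
    }


# Toy 2 x 3 example (hypothetical data, not from the ore dataset).
pred = [[1, 1, 0],
        [0, 1, 0]]
truth = [[1, 0, 0],
         [0, 1, 1]]
metrics = pixel_metrics(pred, truth)
print({name: round(value, 4) for name, value in metrics.items()})
```

Multiplying each value by 100 gives percentages on the same scale as the tables, assuming the reported numbers are percentages.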

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wang, W.; Li, Q.; Xiao, C.; Zhang, D.; Miao, L.; Wang, L. An Improved Boundary-Aware U-Net for Ore Image Semantic Segmentation. Sensors 2021, 21, 2615. https://doi.org/10.3390/s21082615
