Modeling Consumer Reactions to AI-Generated Content on E-Commerce Platforms: A Trust–Risk Dual Pathway Framework with Ethical and Platform Responsibility Moderators

Abstract

1. Introduction

2. Literature Review

2.1. Research Progress on AI-Generated Content in E-Commerce

2.2. Formation Mechanisms of Perceived Risk and Trust

2.3. Platform Responsibility and Ethical Concern

3. Research Methods

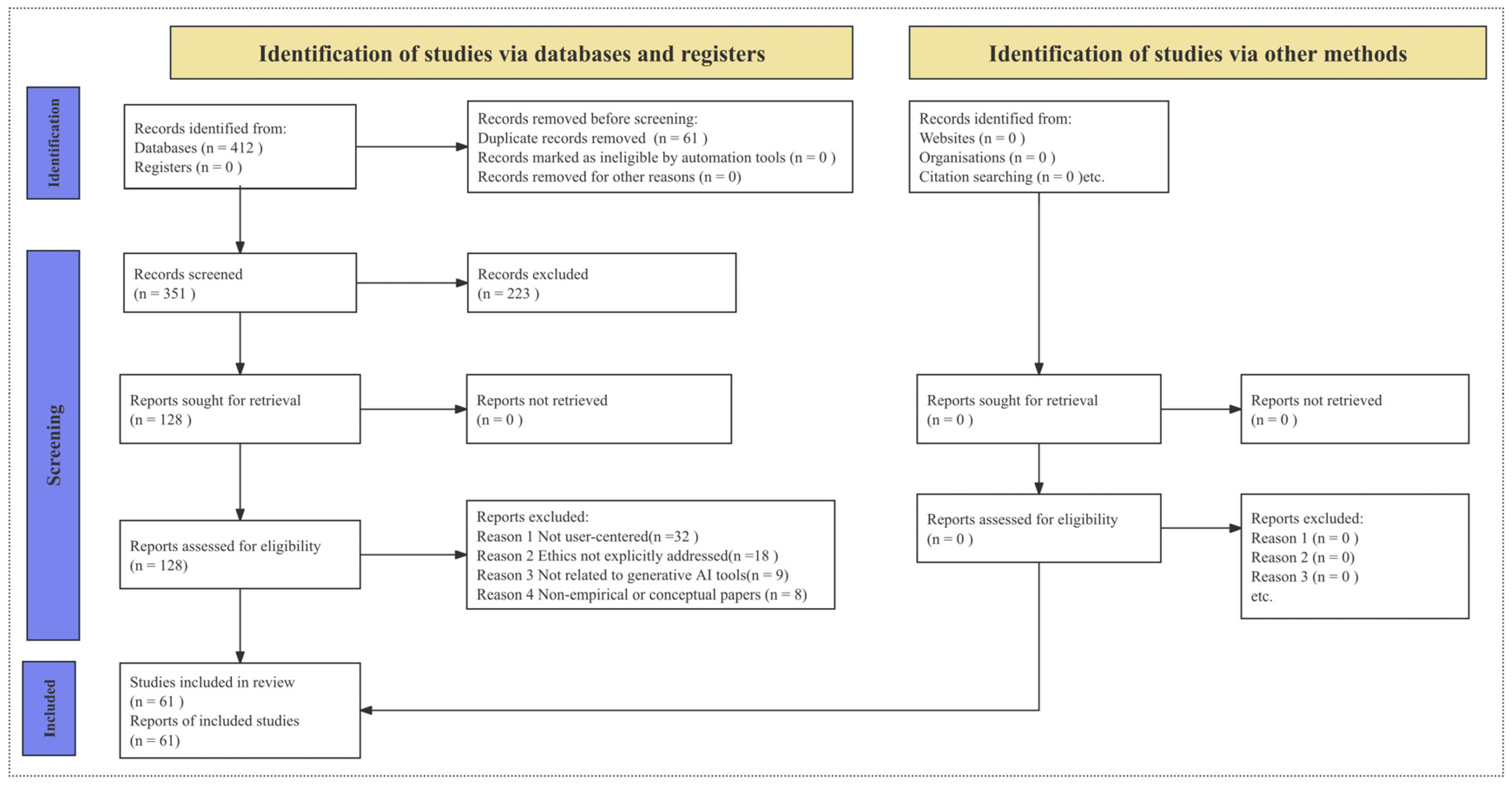

3.1. Systematic Literature Review (SLR) Process

3.2. Expert Interview Design and Analysis

3.3. Questionnaire Design and Data Collection Strategy

4. Results

4.1. Phase I: Construct Identification

4.1.1. Findings from Systematic Literature Review

4.1.2. Findings from Expert Interviews

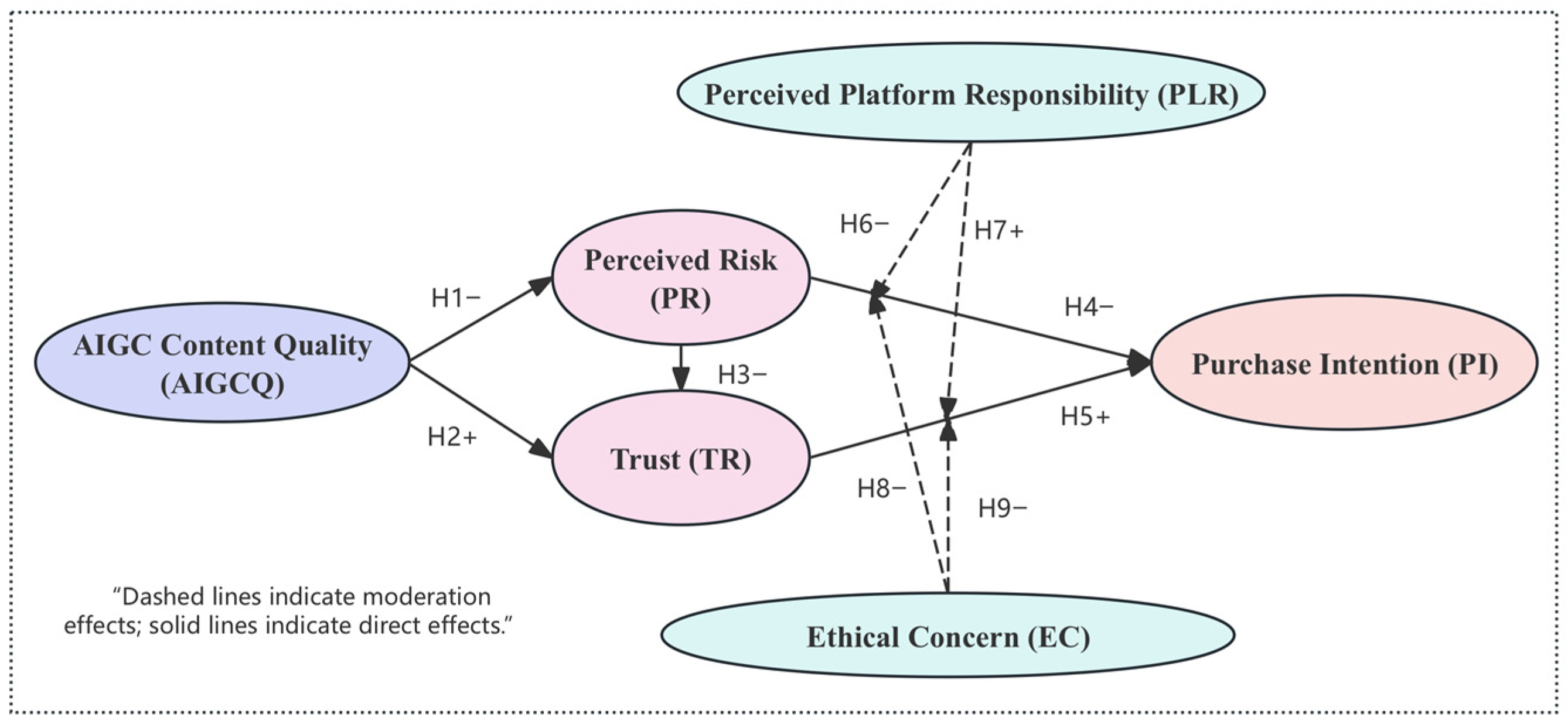

5. Variable Analysis and Hypotheses Development

5.1. Theoretical Basis for Hypotheses

5.1.1. Effects of AIGC Content Quality on User Cognition

5.1.2. Transmission Mechanism of PR and TR Toward PI

5.1.3. Moderating Role of PLR

5.1.4. Moderating Role of EC

5.2. Research Model Structure

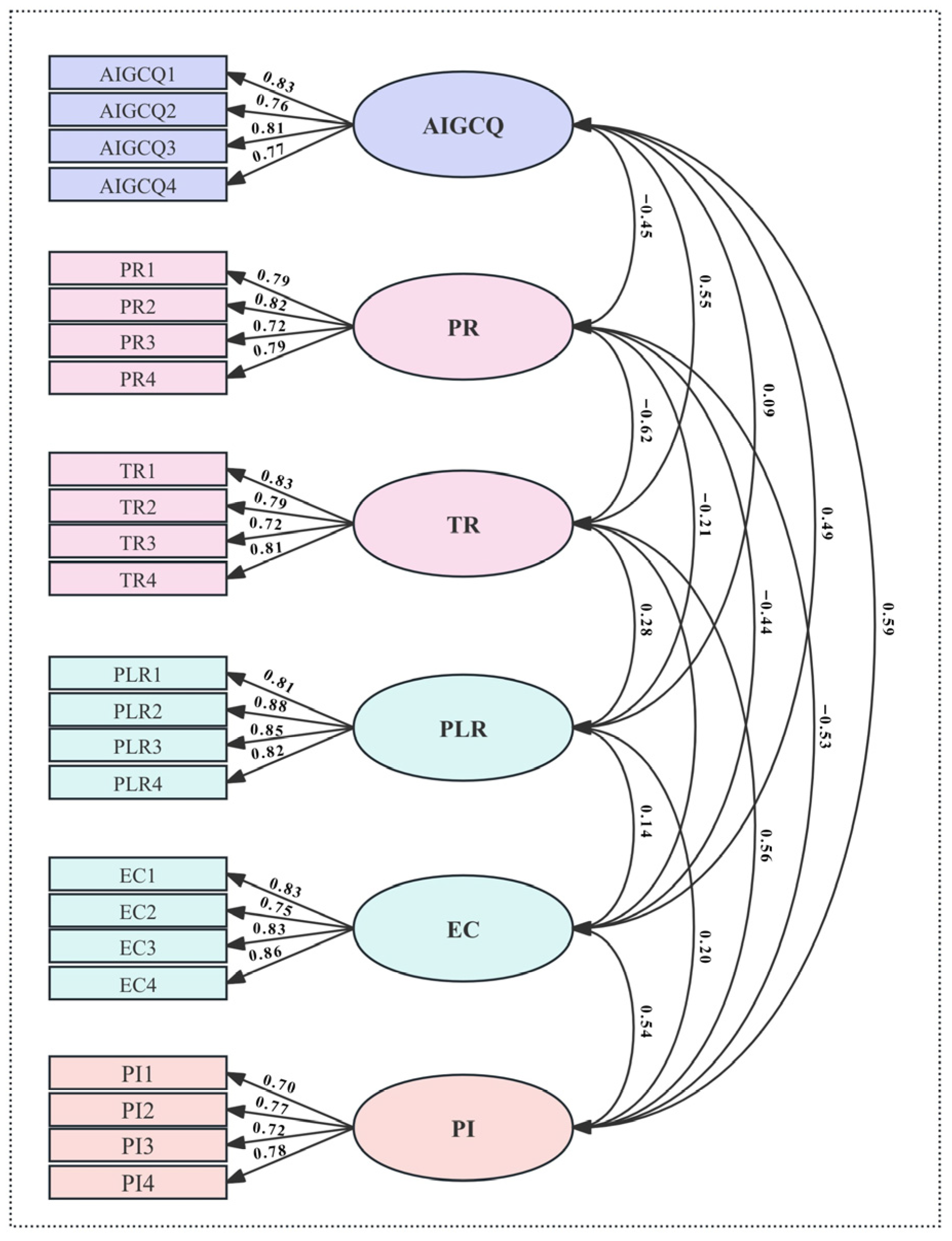

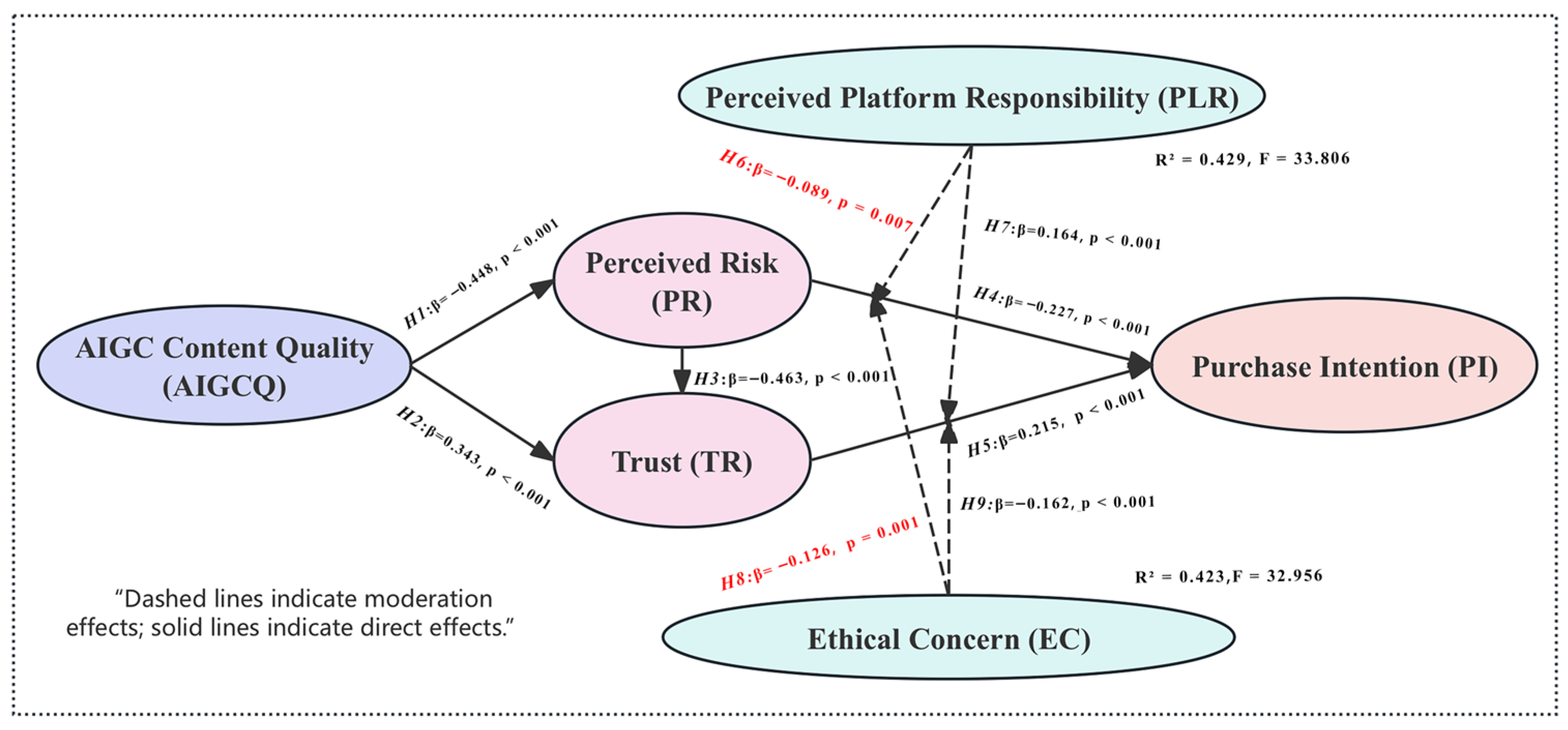

5.3. Phase II: Variable Analysis and Hypothesis Testing

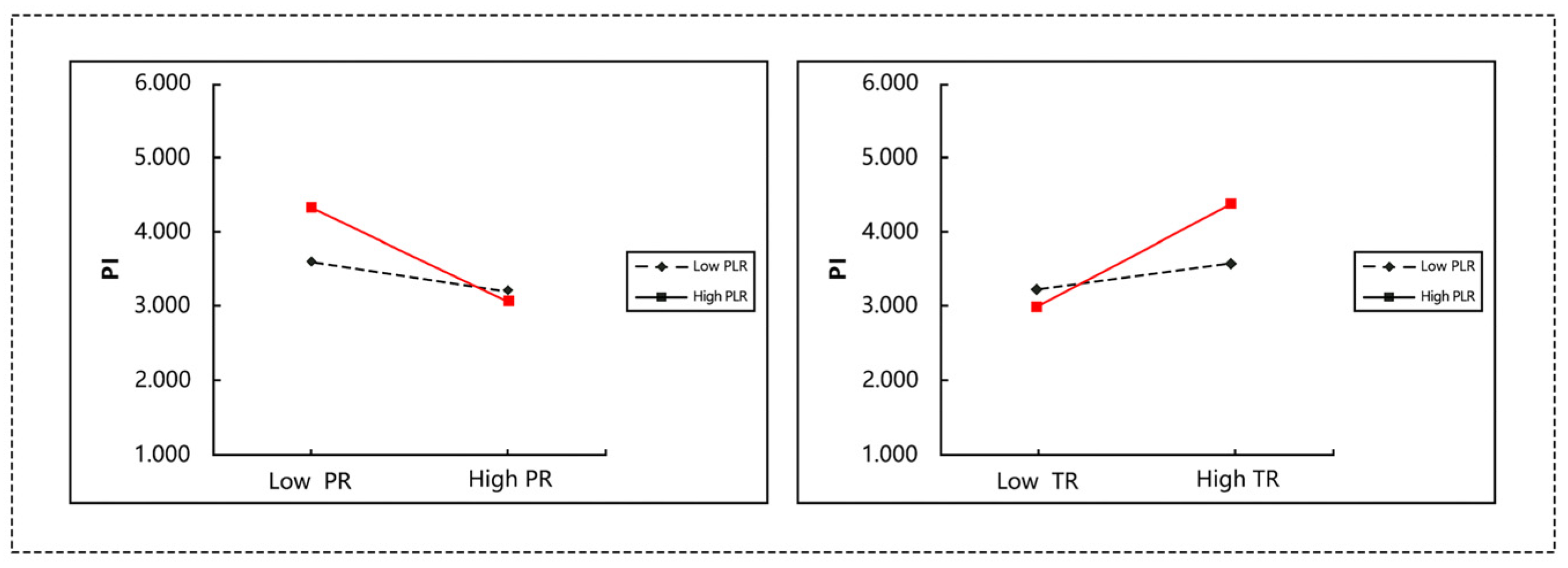

- PR → PI: β = −0.202 (p < 0.001); PLR × PR = −0.089 (p = 0.007), indicating that PLR strengthens the negative effect.

- PR → PI: β = −0.137 (p = 0.001); EC × PR = −0.126 (p = 0.001), indicating that EC strengthens the negative effect, because a negative interaction term makes an already negative slope steeper as EC increases.
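The two moderation results above can be read through conditional (simple) slopes. The following is a minimal sketch, assuming a standard product-term specification in which the slope of PR on PI at a given moderator level z is β_PR + β_interaction · z, and assuming the moderators are standardized so that z = ±1 corresponds to one standard deviation below or above the mean. Neither assumption is stated in this section, and the names `simple_slope`, `MODELS`, and `slopes_at` are illustrative.

```python
# Conditional slope of PR -> PI at a chosen moderator level.
# Assumption: PI = b0 + b1*PR + b2*M + b3*(PR*M), with M standardized.

def simple_slope(beta_main: float, beta_interaction: float, moderator_z: float) -> float:
    """Slope of PR on PI when the moderator equals moderator_z."""
    return beta_main + beta_interaction * moderator_z

# Coefficients as reported in the two bullets above:
# (main effect of PR, interaction with the moderator).
MODELS = {
    "PLR": (-0.202, -0.089),
    "EC": (-0.137, -0.126),
}

def slopes_at(levels=(-1.0, 0.0, 1.0)):
    """Simple slopes for each moderator at low, mean, and high levels."""
    return {
        name: {z: round(simple_slope(b, bi, z), 3) for z in levels}
        for name, (b, bi) in MODELS.items()
    }

if __name__ == "__main__":
    for name, slopes in slopes_at().items():
        print(name, slopes)
```

Under these assumptions, the slope of PR on PI becomes more negative as either moderator rises from one standard deviation below the mean to one above it.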

6. Discussion

6.1. Summary of Key Findings

6.2. Main Pathway Discussion: Content Quality–Trust/Risk–Intention

6.3. Moderation Effects Discussion: Platform Responsibility and Ethical Concern

6.4. Theoretical Contributions and Practical Implications

6.5. Future Research Directions and Limitations

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A. Quantitative Survey Questionnaire Items

| Variable | Item | Statement | References |
|---|---|---|---|
| AI-Generated Content Quality (AIGCQ) | AIGCQ1 | The AI-generated content on e-commerce platforms is very clear in its information expression. | [6,30,31] |
| | AIGCQ2 | I find that the overall structure of AI-generated product information is well organized. | |
| | AIGCQ3 | The descriptions generated by AI demonstrate a certain degree of professionalism and credibility. | |
| | AIGCQ4 | Compared with user-generated content, AI-generated content performs well in terms of expression quality. | |
| Perceived Risk (PR) | PR1 | I am concerned that AI-generated product information may contain errors or be misleading. | [41,42,75] |
| | PR2 | AI-generated content may obscure the true condition of the product. | |
| | PR3 | I feel that AI-generated content lacks full transparency. | |
| | PR4 | I feel uneasy when making decisions based on AI-generated content. | |
| Trust (TR) | TR1 | I consider AI-generated product information to be trustworthy. | [10,34,56] |
| | TR2 | I believe the platform is capable of managing the quality and norms of AI-generated content. | |
| | TR3 | Even knowing the content is generated by AI, I am still willing to use it. | |
| | TR4 | Overall, I trust the AI-assisted content services provided by the platform. | |
| Perceived Platform Responsibility (PLR) | PLR1 | The platform has the responsibility to clearly inform users which content is generated by AI. | [59,77,78] |
| | PLR2 | The platform should establish mechanisms to monitor the accuracy and applicability of AI content. | |
| | PLR3 | When issues arise due to AI-generated content, the platform should proactively provide explanations. | |
| | PLR4 | Whether the platform takes responsibility affects my acceptance of AI-generated content. | |
| Ethical Concern (EC) | EC1 | I believe replacing human creation with AI poses certain ethical problems. | [69,79,99] |
| | EC2 | I am concerned that AI-generated content may infringe upon expression rights or originality. | |
| | EC3 | If AI-generated content is not clearly labeled, it triggers conflicts with my personal values. | |
| | EC4 | I have ethical concerns about AI-generated content that is not explicitly disclosed as such. | |
| Purchase Intention (PI) | PI1 | I am willing to make purchase decisions based on AI-generated content. | [9,75,91] |
| | PI2 | Even if the content is not human-written, I am still willing to purchase as long as the quality is high. | |
| | PI3 | If AI-generated content provides useful information, I am willing to consider it when choosing products. | |
| | PI4 | When facing AI-generated content, I will decide whether to purchase based on its perceived reliability. | |
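The item codes in this appendix map onto six four-item constructs, which suggests a simple scoring scheme. The following is a minimal sketch, assuming each item is answered on a numeric Likert-type scale (the scale range is not stated in this section) and that a construct score is the unweighted mean of its four items. The function names `construct_scores` and `cronbach_alpha` are illustrative and are not taken from the paper.

```python
from statistics import mean, variance

# Item codes per construct, following the appendix: AIGCQ1..AIGCQ4, PR1..PR4, etc.
CONSTRUCTS = {
    code: [f"{code}{i}" for i in range(1, 5)]
    for code in ("AIGCQ", "PR", "TR", "PLR", "EC", "PI")
}

def construct_scores(response: dict) -> dict:
    """Average the four item ratings of each construct for one respondent.

    Assumption: unweighted mean scoring; the paper may score differently.
    """
    return {
        code: mean(response[item] for item in items)
        for code, items in CONSTRUCTS.items()
    }

def cronbach_alpha(rows: list) -> float:
    """Cronbach's alpha for one construct.

    rows holds one list of item ratings per respondent (at least two
    respondents, and the summed scores must not all be identical).
    """
    k = len(rows[0])
    item_variances = [variance(column) for column in zip(*rows)]
    total_variance = variance([sum(row) for row in rows])
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)

if __name__ == "__main__":
    # Hypothetical respondent who answers 4 on every item.
    respondent = {item: 4 for items in CONSTRUCTS.values() for item in items}
    print(construct_scores(respondent))
```

The alpha function uses the usual formula k/(k−1) · (1 − Σ item variances / variance of the summed score), applied separately to each construct's four items.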

References

- Bansal, G.; Nawal, A.; Chamola, V.; Herencsar, N. Revolutionizing Visuals: The Role of Generative AI in Modern Image Generation. ACM Trans. Multimedia Comput. Commun. Appl. 2024, 20, 356.

- Kimura, T. Exploring the Frontier: Generative AI Applications in Online Consumer Behavior Analytics. Cuad. Gest. 2025, 25, 57–70.

- Dai, J.; Mao, X.; Wu, P.; Zhou, H.; Cao, L. Revolutionizing Cross-Border e-Commerce: A Deep Dive into AI and Big Data-Driven Innovations for the Straw Hat Industry. PLoS ONE 2024, 19, e0305639.

- Artificial Intelligence (AI) in e-Commerce. Available online: https://www.statista.com/study/146530/artificial-intelligence-ai-and-extended-reality-xr-in-e-commerce/ (accessed on 23 April 2025).

- Sinha, A.R. Revolutionizing Retail User Experience: Leveraging Generative AI for Data Summarization, Chatbot Integration, and AI-Driven Sentiment Analysis. Int. Sci. J. Eng. Manag. 2023, 2, 1–6.

- Olmedilla, M.; Romero, J.C.; Martínez-Torres, R.; Galván, N.R.; Toral, S. Evaluating Coherence in AI-Generated Text. In Proceedings of the 6th International Conference on Advanced Research Methods and Analytics (CARMA 2024), Valencia, Spain, 26–28 June 2024; pp. 149–156.

- Ding, L.; Antonucci, G.; Venditti, M. Unveiling User Responses to AI-Powered Personalised Recommendations: A Qualitative Study of Consumer Engagement Dynamics on Douyin. Qual. Mark. Res. Int. J. 2025, 28, 234–255.

- Shareef, M.; Dwivedi, Y.K.; Kumar, V.; Davies, G.; Rana, N.P.; Baabdullah, A. Purchase Intention in an Electronic Commerce Environment: A Trade-off between Controlling Measures and Operational Performance. Inf. Technol. People 2019, 32, 1345–1375.

- Sipos, D. The Effects of AI-Powered Personalization on Consumer Trust, Satisfaction, and Purchase Intent. Eur. J. Appl. Sci. Eng. Technol. 2025, 3, 14–24.

- Gefen, D.; Karahanna, E.; Straub, D.W. Trust and TAM in Online Shopping: An Integrated Model. MIS Q. 2003, 27, 51–90.

- Yang, Y.; Jia, Q.; Zhao, Y. The Impact of Platform Social Responsibility on Consumer Trust. In Proceedings of the International Conference on Electronic Business, Nanjing, China, 3–7 December 2021.

- Sharma, S.; Chaitanya, K.; Jawad, A.B.; Premkumar, I.; Mehta, J.V.; Hajoary, D. Ethical Considerations in AI-Based Marketing: Balancing Profit and Consumer Trust. Tuijin Jishu/J. Propuls. Technol. 2023, 44, 1301–1309.

- Cyberspace Administration of China; National Development and Reform Commission; Ministry of Education; Ministry of Science and Technology; Ministry of Industry and Information Technology; Ministry of Public Security; National Radio and Television Administration. Interim Measures for the Administration of Generative Artificial Intelligence Services; 2023. Available online: https://www.cac.gov.cn/2023-07/13/c_1690898327029107.htm (accessed on 23 April 2025).

- EU AI Act: First Regulation on Artificial Intelligence. Available online: https://www.europarl.europa.eu/topics/en/article/20230601STO93804/eu-ai-act-first-regulation-on-artificial-intelligence (accessed on 23 April 2025).

- Jobin, A.; Ienca, M.; Vayena, E. The Global Landscape of AI Ethics Guidelines. Nat. Mach. Intell. 2019, 1, 389–399.

- Hua, Y.; Niu, S.; Cai, J.; Chilton, L.B.; Heuer, H.; Wohn, D.Y. Generative AI in User-Generated Content. In Proceedings of the Extended Abstracts of the CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 11–16 May 2024; pp. 1–7.

- Chua, T.-S. Towards Generative Search and Recommendation: A Keynote at RecSys 2023. ACM SIGIR Forum 2023, 57, 1–14.

- Wang, Y.; Luo, H.; Liu, H. Research on the Application of AIGC Technology in E-commerce Platforms Advertising. Int. J. Asian Soc. Sci. Res. 2025, 2, 32–41.

- Zhang, X.; Guo, F.; Chen, T.; Pan, L.; Beliakov, G.; Wu, J. A Brief Survey of Machine Learning and Deep Learning Techniques for E-Commerce Research. J. Theor. Appl. Electron. Commer. Res. 2023, 18, 2188–2216.

- Wasilewski, A. Harnessing Generative AI for Personalized E-Commerce Product Descriptions: A Framework and Practical Insights. Comput. Stand. Interfaces 2025, 94, 104012.

- Wasilewski, A.; Chawla, Y.; Pralat, E. Enhanced E-Commerce Personalization through AI-Powered Content Generation Tools. IEEE Access 2025, 13, 48083–48095.

- Malikireddy, S.K.R. Revolutionizing Product Recommendations with Generative AI: Context-Aware Personalization at Scale. IJSREM 2024, 8, 1–8.

- Generative AI and the Future of Interactive and Immersive Advertising. Semantic Scholar. Available online: https://www.semanticscholar.org/paper/Generative-AI-and-the-Future-of-Interactive-and-Gujar-Paliwal/4d14e8b7f56066963cbd28a49dbdc22b0be19979?utm_source=consensus (accessed on 23 April 2025).

- Balamurugan, M. AI-Driven Adaptive Content Marketing: Automating Strategy Adjustments for Enhanced Consumer Engagement. Int. J. Multidiscip. Res. 2024, 6, 27940.

- Teepapal, T. AI-Driven Personalization: Unraveling Consumer Perceptions in Social Media Engagement. Comput. Hum. Behav. 2025, 165, 108549.

- Rae, I. The Effects of Perceived AI Use on Content Perceptions. In Proceedings of the 2024 CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 11–16 May 2024; pp. 1–14.

- Kirk, C.P.; Givi, J. The AI-Authorship Effect: Understanding Authenticity, Moral Disgust, and Consumer Responses to AI-Generated Marketing Communications. J. Bus. Res. 2025, 186, 114984.

- Pandey, P.; Rai, A.K. Modeling Consequences of Brand Authenticity in Anthropomorphized AI-Assistants: A Human-Robot Interaction Perspective. PURUSHARTHA-J. Manag. Ethics Spiritual. 2025, 17, 116–135.

- Cao, Y.; Li, S.; Liu, Y.; Yan, Z.; Dai, Y.; Yu, P.S.; Sun, L. A Comprehensive Survey of AI-Generated Content (AIGC): A History of Generative AI from GAN to ChatGPT. arXiv 2023, arXiv:2303.04226.

- Fang, X.; Chen, P.; Wang, M.; Wang, S. Examining the Role of Compression in Influencing AI-Generated Image Authenticity. Sci. Rep. 2025, 15, 12192.

- Eisner, J.; Holub, S.; Goller, F.; Steiner, E. Revolutionizing Product Descriptions: The Impact of AI Generated Product Descriptions on Product Quality Perception and Purchase Intention. In Proceedings of the AMS World Marketing Congress, Bel Ombre, Mauritius, 25–29 June 2024.

- Kishnani, D. The Uncanny Valley: An Empirical Study on Human Perceptions of AI-Generated Text and Images. Master’s Thesis, Massachusetts Institute of Technology, Cambridge, MA, USA, 2025.

- Dietvorst, B.J.; Simmons, J.P.; Massey, C. Algorithm Aversion: People Erroneously Avoid Algorithms after Seeing Them Err. J. Exp. Psychol. Gen. 2014, 144, 114–126.

- Zhou, T.; Lu, H. The Effect of Trust on User Adoption of AI-Generated Content. Electron. Libr. 2025, 43, 61–76.

- Mayer, R.C.; Davis, J.H.; Schoorman, F.D. An Integrative Model of Organizational Trust. Acad. Manag. Rev. 1995, 20, 709–734.

- Bigman, Y.E.; Gray, K. People Are Averse to Machines Making Moral Decisions. Cognition 2018, 181, 21–34.

- Lee, J.D.; See, K.A. Trust in Automation: Designing for Appropriate Reliance. Hum. Factors 2004, 46, 50–80.

- Hoffman, R.R.; Johnson, M.; Bradshaw, J.M.; Underbrink, A. Trust in Automation. IEEE Intell. Syst. 2013, 28, 84–88.

- Sundar, S.S. Rise of Machine Agency: A Framework for Studying the Psychology of Human–AI Interaction (HAII). J. Comput.-Mediat. Commun. 2020, 25, 74–88.

- Zhang, B.; Dafoe, A. Artificial Intelligence: American Attitudes and Trends; Center for the Governance of AI, Future of Humanity Institute, University of Oxford: Oxford, UK, 2019.

- Featherman, M.S.; Pavlou, P.A. Predicting E-Services Adoption: A Perceived Risk Facets Perspective. Int. J. Hum.-Comput. Stud. 2003, 59, 451–474.

- Forsythe, S.; Liu, C.; Shannon, D.; Gardner, L.C. Development of a Scale to Measure the Perceived Benefits and Risks of Online Shopping. J. Interact. Mark. 2006, 20, 55–75.

- Shin, D. The Effects of Explainability and Causability on Perception, Trust, and Acceptance: Implications for Explainable AI. Int. J. Hum.-Comput. Stud. 2021, 146, 102551.

- McKnight, D.H.; Choudhury, V.; Kacmar, C. The Impact of Initial Consumer Trust on Intentions to Transact with a Web Site: A Trust Building Model. J. Strateg. Inf. Syst. 2002, 11, 297–323.

- Pavlou, P.A. Consumer Acceptance of Electronic Commerce: Integrating Trust and Risk with the Technology Acceptance Model. Int. J. Electron. Commer. 2003, 7, 101–134.

- Kim, H.-W.; Kankanhalli, A. Investigating User Resistance to Information Systems Implementation: A Status Quo Bias Perspective. MIS Q. 2009, 33, 567–582.

- Nicolaou, A.I.; McKnight, D.H. Perceived Information Quality in Data Exchanges: Effects on Risk, Trust, and Intention to Use. Inf. Syst. Res. 2006, 17, 332–351.

- Li, F.; Yang, Y.; Yu, G. Nudging Perceived Credibility: The Impact of AIGC Labeling on User Distinction of AI-Generated Content. Emerg. Media 2025, 3, 275–304.

- Dezao, T. Enhancing Transparency in AI-Powered Customer Engagement. J. AI Robot. Workplace Autom. 2024, 3, 134.

- Reeves, B.; Nass, C. The Media Equation: How People Treat Computers, Television, and New Media like Real People and Places; Cambridge University Press: Cambridge, UK, 1996.

- Dietvorst, B.J.; Simmons, J.P.; Massey, C. Overcoming Algorithm Aversion: People Will Use Imperfect Algorithms If They Can (Even Slightly) Modify Them. Manag. Sci. 2018, 64, 1155–1170.

- Logg, J.M.; Minson, J.A.; Moore, D.A. Algorithm Appreciation: People Prefer Algorithmic to Human Judgment. Organ. Behav. Hum. Decis. Process. 2019, 151, 90–103.

- Mori, M.; MacDorman, K.; Kageki, N. The Uncanny Valley [from the Field]. IEEE Robot. Autom. Mag. 2012, 19, 98–100.

- Agarwal, R.; Prasad, J. A Conceptual and Operational Definition of Personal Innovativeness in the Domain of Information Technology. Inf. Syst. Res. 1998, 9, 204–215.

- Venkatesh, V. Determinants of Perceived Ease of Use: Integrating Control, Intrinsic Motivation, and Emotion into the Technology Acceptance Model. Inf. Syst. Res. 2000, 11, 342–365.

- McKnight, D.H.; Choudhury, V.; Kacmar, C. Developing and Validating Trust Measures for E-Commerce: An Integrative Typology. Inf. Syst. Res. 2002, 13, 334–359.

- Zhang, B.; Anderljung, M.; Kahn, L.; Dreksler, N.; Horowitz, M.C.; Dafoe, A. Ethics and Governance of Artificial Intelligence: Evidence from a Survey of Machine Learning Researchers. J. Artif. Intell. Res. 2021, 71, 591–666.

- Shin, D.; Park, Y.J. Role of Fairness, Accountability, and Transparency in Algorithmic Affordance. Comput. Hum. Behav. 2019, 98, 277–284.

- Stanaland, A.J.S.; Lwin, M.O.; Murphy, P.E. Consumer Perceptions of the Antecedents and Consequences of Corporate Social Responsibility. J. Bus. Ethics 2011, 102, 47–55.

- Gillath, O.; Ai, T.; Branicky, M.S.; Keshmiri, S.; Davison, R.B.; Spaulding, R. Attachment and Trust in Artificial Intelligence. Comput. Hum. Behav. 2021, 115, 106607.

- Xu, S.; Mou, Y.; Ding, Z. The More Open, the Better? Research on the Influence of Subject Diversity on Trust of Tourism Platforms. Mark. Intell. Plan. 2023, 41, 1213–1235.

- Ahn, J.; Kim, J.; Sung, Y. The Role of Perceived Freewill in Crises of Human-AI Interaction: The Mediating Role of Ethical Responsibility of AI. Int. J. Advert. 2024, 43, 847–873.

- Belanche, D.; Casaló, L.V.; Flavián, C.; Schepers, J. Trust Transfer in the Continued Usage of Public E-Services. Inf. Manag. 2014, 51, 627–640.

- Vasir, G.; Huh-Yoo, J. Characterizing the Flaws of Image-Based AI-Generated Content. In Proceedings of the Extended Abstracts of the CHI Conference on Human Factors in Computing Systems, Yokohama, Japan, 26 April–1 May 2025; Association for Computing Machinery: New York, NY, USA, 2025; pp. 1–7.

- Du, D.; Zhang, Y.; Ge, J. Effect of AI Generated Content Advertising on Consumer Engagement; Nah, F., Siau, K., Eds.; Springer Nature: Cham, Switzerland, 2023; Volume 14039, pp. 121–129.

- Franzoni, V. From Black Box to Glass Box: Advancing Transparency in Artificial Intelligence Systems for Ethical and Trustworthy AI; Gervasi, O., Murgante, B., Rocha, A.M.A.C., Garau, C., Scorza, F., Karaca, Y., Torre, C.M., Eds.; Springer Nature: Cham, Switzerland, 2023; Volume 14107, pp. 118–130.

- Kumar, R. Ethics of Artificial Intelligence and Automation: Balancing Innovation and Responsibility. J. Comput. Signal Syst. Res. 2024, 1, 1–8. Available online: https://www.researchgate.net/publication/386537846_Ethics_of_Artificial_Intelligence_and_Automation_Balancing_Innovation_and_Responsibility (accessed on 23 April 2025).

- Sundar, S.S.; Kim, J. Machine Heuristic: When We Trust Computers More than Humans with Our Personal Information. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems, Glasgow, UK, 4–9 May 2019; Association for Computing Machinery: New York, NY, USA, 2019; pp. 1–9.

- Choung, H.; David, P.; Ross, A. Trust and Ethics in AI. AI Soc. 2023, 38, 733–745.

- Lopes, E.L.; Yunes, L.Z.; Bandeira De Lamônica Freire, O.; Herrero, E.; Contreras Pinochet, L.H. The Role of Ethical Problems Related to a Brand in the Purchasing Decision Process: An Analysis of the Moderating Effect of Complexity of Purchase and Mediation of Perceived Social Risk. J. Retail. Consum. Serv. 2020, 53, 101970.

- Cabrera, D.; Cabrera, L.L. The Steps to Doing a Systems Literature Review (SLR). J. Syst. Think. Prepr. 2023, 3, 1–27.

- Sarkis-Onofre, R.; Catalá-López, F.; Aromataris, E.; Lockwood, C. How to Properly Use the PRISMA Statement. Syst. Rev. 2021, 10, 117.

- Dorussen, H.; Lenz, H.; Blavoukos, S. Assessing the Reliability and Validity of Expert Interviews. Eur. Union Politics 2005, 6, 315–337.

- Liang, J.; Guo, J.; Liu, Z.; Tang, J. “Ask Everyone?” Understanding How Social Q&A Feedback Quality Influences Consumers’ Purchase Intentions. In Proceedings of the Eighteenth Wuhan International Conference on E-Social Network and Commerce, Wuhan, China, 24–26 May 2019.

- Kim, D.J.; Ferrin, D.L.; Rao, H.R. A Trust-Based Consumer Decision-Making Model in Electronic Commerce: The Role of Trust, Perceived Risk, and Their Antecedents. Decis. Support Syst. 2008, 44, 544–564.

- Amin, S.; Mahasan, S.S. Relationship between Consumers Perceived Risks and Consumer Trust: A Study of Sainsbury Store. Middle-East J. Sci. Res. 2014, 19, 647–655.

- Yang, F.; Abedin, M.Z.; Qiao, Y.; Ye, L. Toward Trustworthy Governance of AI-Generated Content (AIGC): A Blockchain-Driven Regulatory Framework for Secure Digital Ecosystems. IEEE Trans. Eng. Manag. 2024, 71, 14945–14962.

- David, P.; Choung, H.; Seberger, J.S. Who Is Responsible? US Public Perceptions of AI Governance through the Lenses of Trust and Ethics. Public Underst. Sci. 2024, 33, 654–672.

- Adanyin, A. Ethical AI in Retail: Consumer Privacy and Fairness. Eur. J. Comput. Sci. Inf. Technol. 2024, 12, 21–35.

- Brunk, K.H. Consumer Perceived Ethicality: An Impression Formation Perspective. In Proceedings of the EMAC Annual Conference 2010, Nantes, France, 1 June 2010.

- Kuder, G.F.; Richardson, M.W. The Theory of the Estimation of Test Reliability. Psychometrika 1937, 2, 151–160.

- dos Santos, P.M.; Cirillo, M.Â. Construction of the Average Variance Extracted Index for Construct Validation in Structural Equation Models with Adaptive Regressions. Commun. Stat.-Simul. Comput. 2023, 52, 1639–1650.

- Ullman, J.B.; Bentler, P.M. Structural Equation Modeling. In Handbook of Psychology, 2nd ed.; John Wiley & Sons, Ltd.: Hoboken, NJ, USA, 2012; ISBN 978-1-118-13388-0.

- Preacher, K.J.; Rucker, D.D.; Hayes, A.F. Addressing Moderated Mediation Hypotheses: Theory, Methods, and Prescriptions. Multivar. Behav. Res. 2007, 42, 185–227.

- Wen, Z.; Ye, B. Mediation Effect Analysis: Methods and Model Development. Adv. Psychol. Sci. 2014, 22, 731.

- Filieri, R.; McLeay, F.; Tsui, B.; Lin, Z. Consumer Perceptions of Information Helpfulness and Determinants of Purchase Intention in Online Consumer Reviews of Services. Inf. Manag. 2018, 55, 956–970.

- Alamyar, I.H. The Role of User-Generated Content in Shaping Consumer Trust: A Communication Psychology Approach to E-Commerce. Medium 2025, 12, 175–191.

- Jiang, X.; Wu, Z.; Yu, F. Constructing Consumer Trust through Artificial Intelligence Generated Content. Acad. J. Bus. Manag. 2024, 6, 263–272.

- Gu, C.; Jia, S.; Lai, J.; Chen, R.; Chang, X. Exploring Consumer Acceptance of AI-Generated Advertisements: From the Perspectives of Perceived Eeriness and Perceived Intelligence. J. Theor. Appl. Electron. Commer. Res. 2024, 19, 2218–2238.

- Suryaningsih, I.B.; Hadiwidjojo, D.; Rohman, F.; Sumiati, S. A Theoretical Framework: The Role of Trust and Perceived Risks in Purchased Decision. Res. Bus. Manag. 2014, 1, 103.

- Lăzăroiu, G.; Neguriţă, O.; Grecu, I.; Grecu, G.; Mitran, P.C. Consumers’ Decision-Making Process on Social Commerce Platforms: Online Trust, Perceived Risk, and Purchase Intentions. Front. Psychol. 2020, 11, 890.

- Ou, M.; Zheng, H.; Zeng, Y.; Hansen, P. Trust It or Not: Understanding Users’ Motivations and Strategies for Assessing the Credibility of AI-Generated Information. New Media Soc. 2024, 14614448241293154.

- Gonçalves, A.R.; Pinto, D.C.; Rita, P.; Pires, T. Artificial Intelligence and Its Ethical Implications for Marketing. Emerg. Sci. J. 2023, 7, 313–327.

- Liu, W.; Wang, C.; Ding, L.; Wang, C. Research on the Influence Mechanism of Platform Corporate Social Responsibility on Customer Extra-Role Behavior. Discrete Dyn. Nat. Soc. 2021, 2021, 1895598.

- Paulssen, M.; Roulet, R.; Wilke, S. Risk as Moderator of the Trust-Loyalty Relationship. Eur. J. Mark. 2014, 48, 964–981.

- Tang, Y.; Su, L. Graduate Education in China Meets AI: Key Factors for Adopting AI-Generated Content Tools. Libri 2025, 75, 81–96.

- Riquelme, I.P.; Román, S. The Relationships among Consumers’ Ethical Ideology, Risk Aversion and Ethically-Based Distrust of Online Retailers and the Moderating Role of Consumers’ Need for Personal Interaction. Ethics Inf. Technol. 2014, 16, 135–155.

- Peukert, C.; Kloker, S. Trustworthy AI: How Ethicswashing Undermines Consumer Trust. In Proceedings of the 15th International Conference on Wirtschaftsinformatik (WI), Potsdam, Germany, 8–11 March 2020; GITO Verlag: Berlin, Germany, 2020; pp. 1100–1115.

- Ferhataj, A.; Memaj, F.; Sahatcija, R.; Ora, A.; Koka, E. Ethical Concerns in AI Development: Analyzing Students’ Perspectives on Robotics and Society. J. Inf. Commun. Ethics Soc. 2025, 23, 165–187.

- Aldulaimi, S.; Soni, S.; Kampoowale, I.; Krishnan, G.; Yajid, M.S.A.; Khatibi, A.; Minhas, D.; Khurana, M. Customer Perceived Ethicality and Electronic Word of Mouth Approach to Customer Loyalty: The Mediating Role of Customer Trust. Int. J. Ethics Syst. 2024, 41, 258–278.

- Gabriela, S. Ethical Consumerism in the 21st Century. Ovidius Univ. Ann. Econ. Sci. Ser. 2010, X, 1327–1331.

- Wang, X.; Tajvidi, M.; Lin, X.; Hajli, N. Towards an Ethical and Trustworthy Social Commerce Community for Brand Value Co-Creation: A Trust-Commitment Perspective. J. Bus. Ethics 2020, 167, 137–152.

- Cheung, C.M.K.; Lee, M.K.O.; Rabjohn, N. The Impact of Electronic Word-of-mouth. Internet Res. 2008, 18, 229–247.

- Wang, S.-F.; Chen, C.-C. Exploring Designer Trust in Artificial Intelligence-Generated Content: TAM/TPB Model Study. Appl. Sci. 2024, 14, 6902.

- Liu, Z.; Chen, Z. Cross-Cultural Perspectives on Artificial Intelligence Generated Content (AIGC): A Comparative Study of Attitudes and Acceptance among Global Products. In Cross-Cultural Design, Proceedings of the 16th International Conference, CCD 2024, Held as Part of the 26th HCI International Conference, HCII 2024, Washington, DC, USA, 29 June–4 July 2024; Rau, P.-L.P., Ed.; Springer Nature: Cham, Switzerland, 2024; pp. 287–298.

| Content Type | Description | Example Platforms or Tools |
|---|---|---|
| Product Information Generation | Automatically writes product titles, selling points, specifications, and functional descriptions | Taobao “Smart Copywriting,” Amazon Auto Title Generation, JD AI Graphic Assistant |
| User Review and Q&A Generation | Synthesizes review summaries, simulates authentic user reviews, and auto-completes FAQs | E-commerce AI Customer Service, Review Summary Generators, Shopee Auto Reply |
| Marketing Copy Generation | Generates personalized recommendation phrases, discount prompts, ad slogans, and SMS content | JD Advertising AI System, Pinduoduo Push Message Generator |
| Image/Video Content Generation | Generates product images, virtual model try-on, promotional short videos, and livestream personas | Aliyun Visual AIGC, Runway, Midjourney, Luma |
| Customer Service and Interaction Content Generation | Generates smart customer service responses, auto-guidance phrases, and scenario-based dialogues | JD Cloud Smart Customer Service, Coupang Auto Response System |
| User Recommendation and Personalized Content | Generates personalized product descriptions in recommendation sections based on user behavior | Amazon Personalized Product Descriptions, TikTok E-commerce Content Recommendations |

| Category | Content/Keyword | Frequency (n) | Proportion (%) |
|---|---|---|---|
| Research Topic Keywords | Trust | 38 | 62.3% |
| | Risk Perception | 35 | 57.4% |
| | AIGC | 24 | 39.3% |
| | Platform Responsibility | 12 | 19.7% |
| | Ethical Concern | 9 | 14.8% |
| | Purchase Intention | 47 | 77.0% |
| Theoretical Frameworks | Trust–Risk Framework | 21 | 34.4% |
| | TAM/UTAUT | 18 | 29.5% |
| | No Explicit Theoretical Framework | 13 | 21.3% |
| Research Methods | Quantitative (Survey) | 42 | 68.9% |
| | Qualitative (Interview/Content Analysis) | 9 | 14.8% |
| | Mixed Methods | 10 | 16.3% |
| Application Scenarios | E-commerce Platforms | 41 | 67.2% |
| | Social Media Content Recommendation | 12 | 19.7% |
| | Enterprise-Generated Content Systems | 8 | 13.1% |

| ID | Professional Role | Industry/Organization | Relevant Expertise |
|---|---|---|---|
| E1 | AI Product Manager | Domestic E-commerce Platform A | AI Copywriting Generation, Content System Deployment |
| E2 | Director of Content Operations | International E-commerce Platform B | Product Content Quality Management, Automated Comment Review |
| E3 | UX Design Researcher | Research Institute / UX Lab | Consumer Behavior Analysis, A/B Testing |
| E4 | Technology Ethics Scholar | University Philosophy & Social Research Center | AIGC Ethical Review, Platform Policy Consultation |
| E5 | Digital Marketing Consultant | Independent Consulting Firm | AI Recommendation Strategies, E-commerce Operation Optimization |
| E6 | Platform Content Review Specialist | E-commerce Platform C | User Report Handling, Violation Content Review |
| E7 | AI Writing System Development Engineer | AI Tech Company D | Text Generation Model Design, Content Credibility Optimization |
| E8 | Postdoctoral Researcher in Social Sciences (Platform Governance) | Public Policy Research Institute | Platform Responsibility Mechanisms, User Trust Crisis Management |

| ID | Original Statement (Excerpt) | Initial Code | Thematic Category | Mapped Variable |
|---|---|---|---|---|
| E2-01 | “This review is too polished; it doesn’t look like a real person wrote it.” | Highly Uniform Expression Style | Authenticity Judgment | AIGCQ |
| E4-02 | “AI content is acceptable, but the platform should tell me if it’s machine-written.” | Lack of Source Disclosure | Expectation of Transparency | PLR |
| E3-03 | “I’m afraid AI-generated reviews might be manipulated to look overly positive.” | Concern about Manipulated Reviews | Risk Trigger | PR |
| E6-01 | “Most user reports target those that seem human-written but sound weird.” | Difficulty in Identifying Suspicious Content | Risk Identification Mechanism | PR |
| E1-04 | “I don’t mind AI copywriting, but not in emotionally sensitive product categories.” | Boundary of Content Applicability | Moral Fit Assessment | EC |
| E5-02 | “A platform can’t profit from AI but deny responsibility when things go wrong.” | Attribution of Platform Responsibility | Rejection of Responsibility Shifting | PLR |
| E7-03 | “Whether users trust AI content depends largely on their trust in the platform.” | Platform-Driven Trust Mechanism | Proxy Trust Structure | TR |
| E8-01 | “The worst thing about AI content is errors with no accountability; that uncertainty creates anxiety.” | Unclear Consequences of Errors | Opaque Technical Risks | PR |

| ID | Thematic Category | Descriptive Keywords (Examples) | Mapped Construct |
|---|---|---|---|
| T1 | Content Quality | Natural, logically clear, overly standardized, lacking authenticity | AIGCQ |
| T2 | Risk Salience | Untrustworthy, manipulated reviews, vague sources, information overload, obvious AI traces | PR |
| T3 | Rebuilding Trust | Trust in platform, distrust in author, sense of system control, rule transparency, brand reputation | TR |
| T4 | Ethical Boundary Sensitivity | Feeling deceived, moral ambiguity, inappropriate in certain domains, blurred right to expression, information manipulation | EC |
| T5 | Responsibility Expectation | Should be labeled, who is responsible for errors, lack of knowledge leads to distrust, platforms must not evade responsibility | PLR |

| Component | Initial Eigenvalues: Total | Initial Eigenvalues: % of Variance | Initial Eigenvalues: Cumulative % | Extraction Sums of Squared Loadings: Total | Extraction Sums of Squared Loadings: % of Variance | Extraction Sums of Squared Loadings: Cumulative % |
|---|---|---|---|---|---|---|
| 1 | 8.255 | 34.395 | 34.395 | 8.255 | 34.395 | 34.395 |
| 2 | 3.007 | 12.530 | 46.925 | 3.007 | 12.530 | 46.925 |
| 3 | 1.861 | 7.753 | 54.678 | 1.861 | 7.753 | 54.678 |
| 4 | 1.697 | 7.069 | 61.747 | 1.697 | 7.069 | 61.747 |
| 5 | 1.383 | 5.764 | 67.511 | 1.383 | 5.764 | 67.511 |
| 6 | 1.249 | 5.206 | 72.716 | 1.249 | 5.206 | 72.716 |
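
The percentage columns in the table above can be recovered from the eigenvalues alone. The sketch below assumes the components were extracted from the correlation matrix of 24 items (6 constructs with 4 items each, as listed in the reliability table), so that each eigenvalue divided by 24 gives the share of variance. The item count and the function name `variance_explained` are assumptions made for illustration, not details stated in this section.

```python
# Eigenvalues of the six retained components, copied from the table above.
eigenvalues = [8.255, 3.007, 1.861, 1.697, 1.383, 1.249]

# Assumption: 24 standardized items entered the analysis (6 constructs x 4 items),
# so the total variance to be explained equals 24.
N_ITEMS = 24


def variance_explained(eigs, n_items):
    """Return a list of (percent, cumulative percent) pairs, one per component."""
    rows = []
    cumulative = 0.0
    for eig in eigs:
        pct = 100.0 * eig / n_items
        cumulative += pct
        rows.append((pct, cumulative))
    return rows


table = variance_explained(eigenvalues, N_ITEMS)
for i, (pct, cum) in enumerate(table, start=1):
    print(f"Component {i}: {pct:.3f}% of variance, {cum:.3f}% cumulative")
```

Small differences in the third decimal place relative to the table are expected, because the eigenvalues themselves are reported to three decimals.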

| Item | Option | Frequency | Percentage |
|---|---|---|---|
| Gender | Male | 269 | 53.06% |
| | Female | 238 | 46.94% |
| Age | Under 18 | 22 | 4.34% |
| | 19–25 | 151 | 29.78% |
| | 26–35 | 154 | 30.37% |
| | 36–45 | 116 | 22.88% |
| | Over 46 | 64 | 12.62% |
| Education | High school or below | 99 | 19.53% |
| | Bachelor’s degree | 262 | 51.68% |
| | Master’s degree or above | 146 | 28.80% |
| Shopping frequency per month | ≤1 time | 81 | 15.98% |
| | 2–3 times | 156 | 30.77% |
| | 4–6 times | 169 | 33.33% |
| | ≥7 times | 101 | 19.92% |
| Frequency of encountering AI-generated content | Frequently | 162 | 31.95% |
| | Occasionally | 139 | 27.42% |
| | Rarely | 125 | 24.65% |
| | Not sure | 81 | 15.98% |

| Dimension | Number of Items | Cronbach’s Alpha |
|---|---|---|
| AIGCQ | 4 | 0.872 |
| PR | 4 | 0.858 |
| TR | 4 | 0.867 |
| PLR | 4 | 0.903 |
| EC | 4 | 0.888 |
| PI | 4 | 0.831 |
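
For readers who wish to reproduce a reliability coefficient of this kind on their own item-level data, the following is a minimal sketch of the standard Cronbach's alpha formula, k/(k−1) × (1 − Σ item variances / variance of the summed score). The raw responses of this study are not reproduced here, so `toy_items` is a hypothetical five-respondent example used only to show the calculation; it is not the study's data.

```python
from statistics import variance


def cronbach_alpha(items):
    """Cronbach's alpha for a scale.

    items: list of columns, one list of scores per item, all of equal length.
    """
    k = len(items)
    # Summed scale score for each respondent.
    totals = [sum(scores) for scores in zip(*items)]
    item_variance_sum = sum(variance(column) for column in items)
    return (k / (k - 1)) * (1 - item_variance_sum / variance(totals))


# Hypothetical responses of five people to a four-item scale (illustration only).
toy_items = [
    [5, 4, 2, 3, 1],
    [4, 4, 2, 3, 2],
    [5, 3, 1, 3, 1],
    [4, 5, 2, 2, 1],
]

alpha = cronbach_alpha(toy_items)
print(f"alpha = {alpha:.3f}")
```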

| Fit Index | Criterion | Actual Value | Fit Result |
|---|---|---|---|
| CMIN/DF | <3 | 1.658 | Excellent |
| GFI | >0.80 | 0.940 | Excellent |
| AGFI | >0.80 | 0.925 | Excellent |
| RMSEA | <0.08 | 0.036 | Excellent |
| NFI | >0.9 | 0.945 | Excellent |
| IFI | >0.9 | 0.977 | Excellent |
| TLI | >0.9 | 0.974 | Excellent |
| CFI | >0.9 | 0.977 | Excellent |
| PNFI | >0.5 | 0.811 | Excellent |
| PCFI | >0.5 | 0.839 | Excellent |

| Dimension | Observed Variable | Factor Loading | S.E. | C.R. | p | Composite Reliability (CR) | AVE |
|---|---|---|---|---|---|---|---|
| AIGCQ | AIGCQ1 | 0.828 | | | | 0.873 | 0.631 |
| | AIGCQ2 | 0.762 | 0.049 | 18.608 | *** | | |
| | AIGCQ3 | 0.813 | 0.048 | 20.180 | *** | | |
| | AIGCQ4 | 0.773 | 0.053 | 18.958 | *** | | |
| PR | PR1 | 0.788 | | | | 0.860 | 0.606 |
| | PR2 | 0.815 | 0.056 | 18.742 | *** | | |
| | PR3 | 0.716 | 0.061 | 16.248 | *** | | |
| | PR4 | 0.790 | 0.063 | 18.144 | *** | | |
| TR | TR1 | 0.825 | | | | 0.867 | 0.621 |
| | TR2 | 0.794 | 0.049 | 19.575 | *** | | |
| | TR3 | 0.718 | 0.050 | 17.248 | *** | | |
| | TR4 | 0.810 | 0.049 | 20.059 | *** | | |
| PLR | PLR1 | 0.806 | | | | 0.904 | 0.703 |
| | PLR2 | 0.877 | 0.053 | 22.391 | *** | | |
| | PLR3 | 0.849 | 0.053 | 21.534 | *** | | |
| | PLR4 | 0.820 | 0.046 | 20.566 | *** | | |
| EC | EC1 | 0.830 | | | | 0.890 | 0.669 |
| | EC2 | 0.750 | 0.053 | 18.711 | *** | | |
| | EC3 | 0.829 | 0.051 | 21.420 | *** | | |
| | EC4 | 0.859 | 0.049 | 22.395 | *** | | |
| PI | PI1 | 0.704 | | | | 0.833 | 0.555 |
| | PI2 | 0.770 | 0.071 | 15.237 | *** | | |
| | PI3 | 0.720 | 0.070 | 14.399 | *** | | |
| | PI4 | 0.783 | 0.071 | 15.454 | *** | | |
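
The composite reliability and AVE columns can be checked directly from the standardized loadings. The sketch below uses the conventional formulas, AVE = mean of squared loadings and CR = (Σλ)² / [(Σλ)² + Σ(1 − λ²)], and assumes uncorrelated measurement errors; whether the authors used exactly this computation is not stated in this section, and the function names are illustrative.

```python
# Standardized factor loadings copied from the table above.
loadings = {
    "AIGCQ": [0.828, 0.762, 0.813, 0.773],
    "PR": [0.788, 0.815, 0.716, 0.790],
    "TR": [0.825, 0.794, 0.718, 0.810],
    "PLR": [0.806, 0.877, 0.849, 0.820],
    "EC": [0.830, 0.750, 0.829, 0.859],
    "PI": [0.704, 0.770, 0.720, 0.783],
}


def average_variance_extracted(lams):
    """AVE: mean of the squared standardized loadings."""
    return sum(lam * lam for lam in lams) / len(lams)


def composite_reliability(lams):
    """CR, assuming uncorrelated errors with variance 1 - loading^2."""
    squared_sum = sum(lams) ** 2
    error_sum = sum(1.0 - lam * lam for lam in lams)
    return squared_sum / (squared_sum + error_sum)


results = {
    name: (composite_reliability(lams), average_variance_extracted(lams))
    for name, lams in loadings.items()
}
for name, (cr, ave) in results.items():
    print(f"{name}: CR = {cr:.3f}, AVE = {ave:.3f}")
```

The recomputed values agree with the table to within rounding of the reported loadings.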

| | AIGCQ | PR | TR | PLR | EC | PI |
|---|---|---|---|---|---|---|
| AIGCQ | 0.795 | | | | | |
| PR | −0.447 | 0.778 | | | | |
| TR | 0.550 | −0.616 | 0.788 | | | |
| PLR | 0.086 | −0.206 | 0.278 | 0.839 | | |
| EC | 0.493 | −0.442 | 0.493 | 0.139 | 0.818 | |
| PI | 0.589 | −0.526 | 0.559 | 0.199 | 0.543 | 0.745 |
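
The diagonal entries of the matrix above correspond closely to the square roots of the AVE values reported in the factor-loading table, which is the layout used for the Fornell–Larcker criterion. Assuming that reading, the sketch below checks that every inter-construct correlation is smaller in absolute value than the square root of the AVE of both constructs involved. The variable names are illustrative.

```python
import math

# AVE values copied from the factor-loading table.
ave = {"AIGCQ": 0.631, "PR": 0.606, "TR": 0.621,
       "PLR": 0.703, "EC": 0.669, "PI": 0.555}

# Off-diagonal correlations copied from the matrix above.
correlations = {
    ("PR", "AIGCQ"): -0.447,
    ("TR", "AIGCQ"): 0.550, ("TR", "PR"): -0.616,
    ("PLR", "AIGCQ"): 0.086, ("PLR", "PR"): -0.206, ("PLR", "TR"): 0.278,
    ("EC", "AIGCQ"): 0.493, ("EC", "PR"): -0.442, ("EC", "TR"): 0.493,
    ("EC", "PLR"): 0.139,
    ("PI", "AIGCQ"): 0.589, ("PI", "PR"): -0.526, ("PI", "TR"): 0.559,
    ("PI", "PLR"): 0.199, ("PI", "EC"): 0.543,
}


def fornell_larcker_violations(ave_values, corr):
    """Return pairs whose |correlation| reaches the smaller sqrt(AVE) of the pair."""
    violations = []
    for (a, b), r in corr.items():
        threshold = min(math.sqrt(ave_values[a]), math.sqrt(ave_values[b]))
        if abs(r) >= threshold:
            violations.append((a, b, r))
    return violations


violations = fornell_larcker_violations(ave, correlations)
print("Violations:", violations)
```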

| | AIGCQ | PR | TR | PLR | EC | PI |
|---|---|---|---|---|---|---|
| AIGCQ | | | | | | |
| PR | 0.444 | | | | | |
| TR | 0.546 | 0.615 | | | | |
| PLR | 0.084 | 0.204 | 0.271 | | | |
| EC | 0.496 | 0.449 | 0.489 | 0.137 | | |
| PI | 0.594 | 0.529 | 0.562 | 0.200 | 0.548 | |

| Fit Index | Criterion | Actual Value | Fit Result |
|---|---|---|---|
| CMIN/DF | <3 | 1.888 | Excellent |
| GFI | >0.80 | 0.958 | Excellent |
| AGFI | >0.80 | 0.941 | Excellent |
| RMSEA | <0.08 | 0.042 | Excellent |
| NFI | >0.9 | 0.957 | Excellent |
| IFI | >0.9 | 0.979 | Excellent |
| TLI | >0.9 | 0.974 | Excellent |
| CFI | >0.9 | 0.979 | Excellent |
| PNFI | >0.5 | 0.781 | Excellent |
| PCFI | >0.5 | 0.800 | Excellent |
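
The two fit tables apply the same cut-offs, so the comparison can be expressed as a small lookup. In the sketch below the first table is labelled `measurement_model` and the second `structural_model`; these labels are an assumption based on where the tables appear (before the factor loadings and before the path coefficients, respectively), and the helper `failed_indices` is illustrative.

```python
import operator

# Cut-offs as listed in the "Criterion" column of both fit tables.
CRITERIA = {
    "CMIN/DF": ("<", 3.0),
    "GFI": (">", 0.80),
    "AGFI": (">", 0.80),
    "RMSEA": ("<", 0.08),
    "NFI": (">", 0.9),
    "IFI": (">", 0.9),
    "TLI": (">", 0.9),
    "CFI": (">", 0.9),
    "PNFI": (">", 0.5),
    "PCFI": (">", 0.5),
}

# Assumed labels: first fit table = measurement model, second = structural model.
measurement_model = {
    "CMIN/DF": 1.658, "GFI": 0.940, "AGFI": 0.925, "RMSEA": 0.036, "NFI": 0.945,
    "IFI": 0.977, "TLI": 0.974, "CFI": 0.977, "PNFI": 0.811, "PCFI": 0.839,
}
structural_model = {
    "CMIN/DF": 1.888, "GFI": 0.958, "AGFI": 0.941, "RMSEA": 0.042, "NFI": 0.957,
    "IFI": 0.979, "TLI": 0.974, "CFI": 0.979, "PNFI": 0.781, "PCFI": 0.800,
}

_OPS = {"<": operator.lt, ">": operator.gt}


def failed_indices(values):
    """Return the names of fit indices that do not satisfy their criterion."""
    return [
        name
        for name, (op, cutoff) in CRITERIA.items()
        if not _OPS[op](values[name], cutoff)
    ]


print("Measurement model failures:", failed_indices(measurement_model))
print("Structural model failures:", failed_indices(structural_model))
```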

| Path | Path Coefficient | S.E. | C.R. | p |
|---|---|---|---|---|
| AIGCQ → PR | −0.448 | 0.054 | −8.718 | *** |
| AIGCQ → TR | 0.343 | 0.049 | 7.023 | *** |
| PR → TR | −0.463 | 0.049 | −8.999 | *** |
| AIGCQ → PI | 0.369 | 0.051 | 6.462 | *** |
| PR → PI | −0.227 | 0.051 | −3.797 | *** |
| TR → PI | 0.215 | 0.057 | 3.338 | *** |

| Parameter | Estimate | SE | Lower | Upper | p |
|---|---|---|---|---|---|
| AIGCQ → PR → PI (Indirect Effect) | 0.101 | 0.036 | 0.034 | 0.178 | 0.005 |
| AIGCQ → TR → PI (Indirect Effect) | 0.074 | 0.029 | 0.027 | 0.143 | 0.003 |
| AIGCQ → PR → TR → PI (Indirect Effect) | 0.045 | 0.019 | 0.018 | 0.093 | 0.003 |
| AIGCQ → PI (Direct Effect) | 0.369 | 0.068 | 0.238 | 0.505 | 0.000 |
| AIGCQ → PI (Total Effect) | 0.589 | 0.051 | 0.482 | 0.688 | 0.000 |
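
The point estimates of the indirect effects follow from the path coefficients by the product-of-coefficients rule, and the total effect is the direct effect plus the three indirect effects. The sketch below reproduces only these point estimates; the standard errors and interval bounds in the table come from the authors' own estimation procedure and are not recomputed here. Variable names are illustrative.

```python
# Path coefficients copied from the path-coefficient table.
aigcq_to_pr = -0.448
aigcq_to_tr = 0.343
pr_to_tr = -0.463
aigcq_to_pi = 0.369  # direct effect
pr_to_pi = -0.227
tr_to_pi = 0.215

# Each indirect effect is the product of the coefficients along its path.
indirect = {
    "AIGCQ -> PR -> PI": aigcq_to_pr * pr_to_pi,
    "AIGCQ -> TR -> PI": aigcq_to_tr * tr_to_pi,
    "AIGCQ -> PR -> TR -> PI": aigcq_to_pr * pr_to_tr * tr_to_pi,
}

# Total effect = direct effect + sum of all indirect effects.
total_effect = aigcq_to_pi + sum(indirect.values())

for path, value in indirect.items():
    print(f"{path}: {value:.3f}")
print(f"Total effect: {total_effect:.3f}")
```

The products match the tabled estimates to within rounding of the three-decimal coefficients.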

| Variable | B | SE | t | p |
|---|---|---|---|---|
| (Constant) | 2.668 | 0.236 | 11.318 | 0.000 |
| Gender | 0.112 | 0.068 | 1.653 | 0.099 |
| Age | 0.018 | 0.031 | 0.586 | 0.558 |
| Highest Educational Attainment | −0.114 | 0.049 | −2.334 | 0.020 |
| Occupation | −0.038 | 0.032 | −1.180 | 0.239 |
| Shopping Frequency | −0.025 | 0.035 | −0.717 | 0.474 |
| AIGCQ | 0.280 | 0.040 | 7.071 | 0.000 |
| PR | −0.202 | 0.041 | −4.955 | 0.000 |
| TR | 0.198 | 0.044 | 4.483 | 0.000 |
| PLR | 0.131 | 0.033 | 4.013 | 0.000 |
| PLR × PR | −0.089 | 0.033 | −2.698 | 0.007 |
| PLR × TR | 0.164 | 0.033 | 4.946 | 0.000 |
| R² | 0.429 | | | |
| F | 33.806 | | | |
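
An interaction coefficient is easiest to read as a change in the conditional slope of the predictor. The sketch below evaluates the slopes of PR and TR on PI at three values of PLR using the coefficients in the table above. It assumes that PLR was mean-centered before the product terms were formed and that a one-unit shift is a meaningful contrast; neither the centering nor the scale of PLR is stated in this section, so the moderator values −1, 0, and +1 are purely hypothetical.

```python
def conditional_slope(b_main, b_interaction, moderator_value):
    """Slope of the predictor on the outcome at a given moderator value."""
    return b_main + b_interaction * moderator_value


# Coefficients copied from the PLR moderation table.
B_PR, B_TR = -0.202, 0.198
B_PLR_X_PR, B_PLR_X_TR = -0.089, 0.164

# Hypothetical moderator values (assumes mean-centered PLR; units are illustrative).
for plr in (-1.0, 0.0, 1.0):
    pr_slope = conditional_slope(B_PR, B_PLR_X_PR, plr)
    tr_slope = conditional_slope(B_TR, B_PLR_X_TR, plr)
    print(f"PLR = {plr:+.1f}: PR -> PI slope = {pr_slope:.3f}, "
          f"TR -> PI slope = {tr_slope:.3f}")
```

Under these assumptions the PR slope becomes more negative and the TR slope more positive as PLR increases, which is the pattern implied by the signs of the two interaction terms.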

| Variable | B | SE | t | p |
|---|---|---|---|---|
| (Constant) | 2.873 | 0.244 | 11.767 | 0.000 |
| Gender | 0.064 | 0.068 | 0.941 | 0.347 |
| Age | 0.015 | 0.031 | 0.478 | 0.633 |
| Highest Educational Attainment | −0.076 | 0.049 | −1.537 | 0.125 |
| Occupation | −0.036 | 0.032 | −1.132 | 0.258 |
| Shopping Frequency | −0.013 | 0.035 | −0.379 | 0.705 |
| AIGCQ | 0.237 | 0.042 | 5.579 | 0.000 |
| PR | −0.137 | 0.042 | −3.245 | 0.001 |
| TR | 0.202 | 0.045 | 4.448 | 0.000 |
| EC | 0.203 | 0.039 | 5.247 | 0.000 |
| EC × PR | −0.126 | 0.037 | −3.449 | 0.001 |
| EC × TR | −0.162 | 0.038 | −4.222 | 0.000 |
| R² | 0.423 | | | |
| F | 32.956 | | | |
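
As a consistency check on the two moderation regressions, the overall F statistic can be recovered from R² with F = (R²/k) / [(1 − R²)/(n − k − 1)]. The sketch below assumes n = 507 (the sum of the male and female respondents in the sample table) and k = 11 predictors (five controls, three main effects, the moderator, and two interaction terms); both counts are inferred from the tables rather than stated explicitly.

```python
def f_statistic(r_squared, n_obs, n_predictors):
    """Overall F test of a linear regression, computed from R-squared."""
    df_model = n_predictors
    df_residual = n_obs - n_predictors - 1
    return (r_squared / df_model) / ((1.0 - r_squared) / df_residual)


N_OBS = 507        # assumption: 269 + 238 respondents from the sample table
N_PREDICTORS = 11  # assumption: 5 controls + 3 main effects + moderator + 2 interactions

f_plr = f_statistic(0.429, N_OBS, N_PREDICTORS)  # PLR moderation model
f_ec = f_statistic(0.423, N_OBS, N_PREDICTORS)   # EC moderation model

print(f"PLR model: F = {f_plr:.2f} (reported 33.806)")
print(f"EC model:  F = {f_ec:.2f} (reported 32.956)")
```

The small remaining gaps are consistent with R² being reported to only three decimals.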


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Yu, T.; Pan, Y.; Jang, W. Modeling Consumer Reactions to AI-Generated Content on E-Commerce Platforms: A Trust–Risk Dual Pathway Framework with Ethical and Platform Responsibility Moderators. J. Theor. Appl. Electron. Commer. Res. 2025, 20, 257. https://doi.org/10.3390/jtaer20040257
