1. Introduction

With breakthroughs in generative AI, communicating with intelligent agents in natural human language has become a common activity [1]. Conversational agents (CAs) have evolved from single-task services (e.g., customer service responses) into intelligent agents capable of complex interactions [2]. This breakthrough lies in their ability to generate contextually coherent and appropriate responses through algorithmic processes, enhancing the realism of AI-driven interactions [3]. According to industry experts, by 2025, generative AI will be embedded in 80% of conversational AI solutions, leading more companies to actively use AI CAs to enhance customer satisfaction and retention rates [4]. Recent advancements in multimodal AI, such as integration with visual and auditory inputs, further amplify their potential to deliver immersive experiences [5].

Globally, platforms such as ChatGPT and DeepSeek have emerged as widely adopted AI conversation systems. In China, social platforms like Soul have introduced projects such as “Dialogue with Great Souls,” which integrates conversations with visual scenarios to enable immersive interactions with virtualized historical figures such as Einstein and Aristotle [6]. These multimodal interaction scenarios reflect a shift in the core competitiveness of AI systems from functional implementation to experience optimization, with AI CA systems increasingly used to meet users’ personalized demands [7]. By leveraging personalized interactions and improved conversation management, these systems enhance overall user experiences across applications such as online education [8,9,10], social platforms [11,12], healthcare [8,13], and e-commerce [14,15,16,17].

In recent years, academic research on the use of CAs has gained significant attention. Scholars have primarily employed technology adoption models and theoretical frameworks, such as the Technology Acceptance Model [18,19], trust theory [19], and perceived interactivity theory [18,20], to investigate factors influencing user experiences. Among various design features, interactivity has been shown to significantly affect user experience and behavioural decisions [18,21]. It encompasses technical attributes, such as conversational agency and response efficiency, as well as emotional dimensions, such as social needs [19,22] and psychological ownership [18]. This underscores the importance of adopting a multifaceted perspective that integrates both technological and social factors in studying AI CAs.

However, the existing literature on AI CAs still exhibits three primary gaps. First, prior studies often treat perceived interactivity as a monolithic construct [16,21,23] and lack systematic integration of its multiple dimensions, particularly as modern systems increasingly incorporate emerging features like entertainment [24] and personalized customization [25]. Second, while extensive research focuses on improving user behavioural intentions through technical enhancements, few studies analyse how users’ social attributes and psychological factors influence sustained usage [26]. Although system efficacy remains critical, users’ emotional resonance and trust in AI CAs during interactions are increasingly recognized as vital to behavioural outcomes [27,28,29]. Yet research on the relationships between interactivity, social presence, and trust in the AI CA context remains scarce. Third, despite the growing adoption of AI CAs, studies reveal that most users eventually reduce or discontinue usage, with initial enthusiasm for novel technologies diminishing over time [18]. While recent research has introduced social presence theory to explain AI-driven services [26,30], the psychological mechanisms through which perceived interactivity affects long-term usage behavior remain underexplored. Consequently, understanding how multidimensional interactivity variables shape users’ continuance intentions through affective pathways constitutes the core focus of this study.

To address these gaps, this study aims to investigate how perceived interactivity influences users’ continuance intention to use AI conversational agents, with a focus on the mediating roles of social presence and trust. The primary objective is to develop a comprehensive model that integrates multidimensional interactivity variables and psychological factors to explain sustained usage behavior. By employing a two-stage hybrid PLS-SEM and ANN approach, this study seeks to uncover both linear and nonlinear relationships, providing a nuanced understanding of user behavior in AI CA interactions. This hybrid approach is particularly suited to the dynamic nature of AI CAs, where user perceptions and behaviors are influenced by both structured and emergent factors. Furthermore, the study extends the application of perceived interactivity theory by incorporating playfulness and personalization as critical dimensions, offering new insights into user engagement in emerging AI applications such as virtual assistants and social platforms.

To provide actionable insights for designing and developing AI CAs, this study poses three research questions:

- (1) How does perceived interactivity influence users’ continuance intention to use artificial intelligence conversational agents?

- (2) How do social presence and trust mediate the relationship between interactivity and continuance intention?

- (3) How can insights into perceived interactivity inform targeted strategies for improving user experiences?

The remainder of this paper is structured as follows: Section 2 introduces the theoretical foundation and hypothesis development. Section 3 details the research method, followed by data analysis results in Section 4. Section 5 discusses key findings, while Section 6 elaborates on theoretical and practical implications. Finally, limitations and future research directions are presented.

3. Methods

3.1. Research Method Design

To investigate the influence of perceived interactivity on users’ continuance intention to use AI CAs, this study adopts a two-stage hybrid methodology combining Partial Least Squares Structural Equation Modeling (PLS-SEM) and Artificial Neural Networks (ANN). In the first stage, PLS-SEM is employed to test the hypothesized relationships among perceived interactivity variables, social presence, trust, and continuance intention. PLS-SEM is suitable for this study due to its ability to handle complex models with latent variables and smaller sample sizes, as supported by Hair et al. [109]. In the second stage, ANN is used to capture nonlinear relationships and validate the predictive accuracy of the PLS-SEM results, leveraging its strength in modeling complex, non-compensatory interactions [110]. This hybrid approach ensures a robust analysis of both linear and nonlinear dynamics in user behavior, aligning with the dynamic nature of AI CA interactions.

The experimental platform selected for this study is the Dialogue with Great Souls project hosted on Soul APP (https://www.soulapp.cn/en) (accessed on 11 January 2025), jointly developed by Soul APP, the EchoVerse APP (Version 1.42.0), and the China Academy of Art. The project includes both an offline digital art interactive exhibition and an online platform experience. It leverages AI technology to integrate Soul APP with its subsidiary EchoVerse APP, and features eight AI-based virtual “great souls.” These AI CAs are modeled after influential historical figures, including Charles Darwin, Zhuangzi, Albert Einstein, Aristotle, Georg Wilhelm Friedrich Hegel, Arthur Schopenhauer, Friedrich Nietzsche, and Mark Twain.

At the physical exhibition site, visitors can interact with these eight figures through various interactive installations, enabling diverse and engaging exchanges. Online users can participate in the experience via the EchoVerse APP, where they engage in “cross-temporal” conversations with the great souls (see Figure 2).

Selecting an appropriate platform is critical for exploring user experiences with AI CAs. The decision to use this platform was based on the following four considerations:

Advanced technology: The platform uses advanced AI language models to process user queries and provide timely responses, and the CAs on the platform draw on a rich knowledge graph to keep their answers relevant.

Communicative richness: Each “great soul” is endowed with visual representations and matching voice profiles, enabling users to feel as though they are engaging in real-time conversation with a human interlocutor.

Playfulness: The offline exhibition enhances enjoyment through digital human displays and interactive installations, while the online experience offers diverse functions—such as the “Book of Answers,” which provides responses from the great souls through a randomized draw.

Personalization: The eight figures represent distinguished contributors across various domains of human knowledge. Users can inquire about emotional, academic, or professional topics, or engage in deep philosophical discussions within the respective expert domains of these historical figures.

3.2. Questionnaire Design

In the first part of the questionnaire, participants were provided with a brief introduction to Soul APP’s Dialogue with Great Souls project to ensure they had a clear understanding of the experimental context. A download link to the app was included, and participants were instructed to engage with the project for a minimum of 10 min, during which they were encouraged to interact with multiple AI representations of the great historical figures.

The second part of the questionnaire collected respondents’ demographic information, including gender, age, education level, familiarity with AI CAs, and frequency of use.

The third part consisted of 25 items measuring eight constructs: control (CON), responsiveness (RES), communication (COM), playfulness (PLA), personalization (PER), social presence (SP), trust (TR), and continuance intention (CI). All items were measured using a 7-point Likert scale (1 = strongly disagree, 7 = strongly agree). To ensure content validity, the items for each variable were adapted from the established literature and contextualized for AI CAs. Specifically, items for control, responsiveness, and communication were based on the work of Park et al. [46] and Shi et al. [111]; items for playfulness and social presence were adapted from Anubhav et al. [69,112]; the personalization scale was drawn from Lee et al. [105,113]; trust items were adapted from Fernandes et al. [114] and Gao [115]; and continuance intention was measured using the scale developed by Bhattacherjee et al. [103] and Ashfaq et al. [112]. A detailed itemization is provided in Table 1.

As most of the original measurement scales were developed in English and the study aimed to collect data from participants in China, two professional translators were employed to translate the questionnaire into Chinese to ensure accuracy. Prior to finalizing the survey, three researchers with over one year of experience using AI CAs and one university professor were invited to review the translated version for clarity and accuracy. From an ethical standpoint, informed consent was obtained from all participants prior to their participation. Respondents were assured that their privacy would be strictly protected and that all data collected would be used solely for academic research purposes, with no commercial intent.

3.3. Data Collection and Analysis

Data for this study were collected using Questionnaire Star (www.wjx.cn) (accessed on 19 January 2025), a professional online survey platform widely used in China. The questionnaire link and corresponding QR code generated on the platform were distributed via social media channels including WeChat (version 8.0.54), QQ (version 9.0.90.614), and others. To optimize sample diversity, the research team initially disseminated the survey through WeChat and QQ group chats and encouraged respondents to recommend potential participants from various backgrounds. This approach aimed to mitigate the clustering bias typically associated with snowball sampling methods [116]. To enhance participation, an incentive mechanism was implemented. Respondents who completed the survey were entered into a random draw to win small prizes, including WeChat cash red packets (valued at 1 or 5 RMB) and an electronic thank-you letter. Participants were instructed to complete the questionnaire immediately after their interaction with the Dialogue with Great Souls project to ensure the timeliness and accuracy of their feedback. All participation was voluntary, and no conflict of interest was present throughout the study.

The sample selection process was designed to ensure relevance to the study’s objectives. Participants were selected based on two primary criteria: (1) they had actively engaged with the Dialogue with Great Souls platform (via the EchoVerse APP or offline exhibition) at least once within the past three months, ensuring recent and relevant interaction with AI CAs; and (2) they were active users of social media platforms (WeChat or QQ), facilitating survey distribution and response collection. To address the sample size requirements of the two-stage hybrid methodology (PLS-SEM and ANN), a minimum sample size was determined a priori using two rules of thumb. For PLS-SEM, the sample should be at least ten times the number of observed variables attached to a single latent variable, following Hill et al. [117]. For ANN, the sample size should be at least ten times the number of weights in the network [118]. Following these guidelines, 300 valid responses were targeted to ensure robust predictive accuracy. A sample of this size conforms to general norms in the social sciences and is adequate for the planned analyses.

The survey was conducted over a one-month period, from 19 January to 19 February 2025. A total of 327 responses were collected. After thorough screening by three researchers, 22 invalid responses were excluded, resulting in 305 valid samples for data analysis. This final sample size exceeds the minimum requirements for both PLS-SEM and ANN, enhancing the reliability and generalizability of the findings. The screening process involved cross-checking responses for completion and consistency, with inter-rater agreement among researchers exceeding 90% to ensure data quality. Descriptive statistics for demographic characteristics are presented in Table 2.

4. Results

4.1. Model Fit

To enhance the reliability and validity of the research findings, this study first assessed model fit. With the theoretical framework estimated using Partial Least Squares Structural Equation Modeling (PLS-SEM), fit was evaluated using a residual-based index and an incremental fit index.

Specifically, two key indices were employed to assess model fit: the Standardized Root Mean Square Residual (SRMR) and the Normed Fit Index (NFI). SRMR summarizes the discrepancy between the observed correlation matrix and the model-implied correlation matrix, with SRMR ≤ 0.08 indicating a good model fit [119]. NFI is an incremental fit index, where values closer to 1 suggest better model fit [120]. The SRMR for this study was 0.036 and the NFI was 0.867, indicating a relatively good model fit. These results, reported in Table 3, support the adequacy of the model.
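As a rough illustration of how an SRMR value is obtained, the sketch below computes the index from an observed and a model-implied correlation matrix. The function name `srmr` and the toy matrices are hypothetical, and the code assumes the common formulation (root mean square of the residuals over the lower triangle, diagonal included) rather than the exact routine of the PLS-SEM software used in this study.

```python
import math

def srmr(observed, implied):
    """Root mean square of residual correlations over the lower triangle (diagonal included)."""
    p = len(observed)
    squared = [
        (observed[i][j] - implied[i][j]) ** 2
        for i in range(p)
        for j in range(i + 1)
    ]
    return math.sqrt(sum(squared) / len(squared))

# Toy 3x3 correlation matrices (illustrative values, not data from this study).
observed = [[1.00, 0.42, 0.35],
            [0.42, 1.00, 0.51],
            [0.35, 0.51, 1.00]]
implied = [[1.00, 0.40, 0.38],
           [0.40, 1.00, 0.47],
           [0.38, 0.47, 1.00]]

value = srmr(observed, implied)
print(f"SRMR = {value:.3f}; within the 0.08 cutoff: {value <= 0.08}")
```

For these toy matrices the residuals are small, so the index lands well under the 0.08 cutoff cited above.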

4.2. Common Method Bias

In survey-based research, common method bias (CMB) is a critical concern as a potential source of systematic error. The Variance Inflation Factor (VIF), widely used in formative measurement models, serves as a diagnostic tool for assessing multicollinearity among constructs. This metric quantifies the degree of variance inflation due to collinearity among variables, with higher values indicating greater risk of multicollinearity. In empirical research, a VIF threshold of 3.0 is commonly used as a conservative benchmark [121].

Data analysis in this study revealed that the VIF values for all measured items ranged from 1.152 to 1.362, which are well below the critical threshold of 3.0 (see Table 4). This provides statistical evidence that multicollinearity is not a concern and that the measurement items do not suffer from significant common method bias.
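To make the VIF diagnostic concrete, the following minimal sketch derives VIF values from a predictor correlation matrix, using the identity that each VIF equals the matching diagonal entry of the inverse correlation matrix. The helper names (`invert`, `vif`) and the toy correlations are assumptions for illustration; the values reported in Table 4 come from the study's own software, not from this code.

```python
def invert(matrix):
    """Invert a small square matrix by Gauss-Jordan elimination with partial pivoting."""
    n = len(matrix)
    aug = [list(row) + [1.0 if i == j else 0.0 for j in range(n)]
           for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        scale = aug[col][col]
        aug[col] = [v / scale for v in aug[col]]
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                aug[r] = [v - factor * p for v, p in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]

def vif(corr):
    """VIF of each predictor = matching diagonal entry of the inverse correlation matrix."""
    inverse = invert(corr)
    return [inverse[j][j] for j in range(len(corr))]

# Toy correlations among three predictors (illustrative values only).
corr = [[1.00, 0.30, 0.20],
        [0.30, 1.00, 0.25],
        [0.20, 0.25, 1.00]]
values = vif(corr)
print([round(v, 3) for v in values], all(v < 3.0 for v in values))
```

With only two predictors correlated at r, the same function reduces to the textbook form 1 / (1 - r²), which is a convenient sanity check.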

4.3. Reliability and Validity Analysis

To evaluate the reliability and validity of the questionnaire, this study employed PLS-SEM for a systematic assessment of the measurement instruments. Specifically, Cronbach’s alpha (α), Composite Reliability (CR), and Average Variance Extracted (AVE) were calculated.

Cronbach’s alpha assesses the internal consistency and stability of scale items. When both α and CR values exceed 0.7, the measurement tool is considered acceptably reliable; values above 0.8 indicate high reliability [122]. Factor loadings reflect the correlation between observed variables and their corresponding latent constructs, indicating the extent to which the construct explains variance in the observed item. Loadings greater than 0.7 are generally considered strong, indicating a high degree of relevance between items and constructs [123].

In this study, all factor loadings and CR values exceeded the 0.7 threshold, and AVE values for all constructs were above 0.5, suggesting that the measurement model demonstrates adequate convergent validity [124]. Empirical results (see Table 5) show that the Cronbach’s alpha coefficients and composite reliability values (rho_A and rho_C) for all latent variables exceeded 0.8. Regarding convergent validity, the AVE values for each construct ranged between 0.80 and 1.00, and all standardized factor loadings were above the 0.7 threshold. These findings collectively indicate strong internal consistency, reliability, and convergent validity of the measurement model.
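For readers less familiar with these indices, the sketch below shows how composite reliability and AVE can be derived from a construct's standardized loadings. It assumes the standard formulas (CR as the squared sum of loadings over that quantity plus the summed error variances, AVE as the mean squared loading); the function names and the three loadings are hypothetical and are not taken from Table 5.

```python
def composite_reliability(loadings):
    """CR = (sum of loadings)^2 / ((sum of loadings)^2 + sum of error variances)."""
    total = sum(loadings)
    error = sum(1 - l ** 2 for l in loadings)
    return total ** 2 / (total ** 2 + error)

def average_variance_extracted(loadings):
    """AVE = mean of the squared standardized loadings."""
    return sum(l ** 2 for l in loadings) / len(loadings)

# Hypothetical standardized loadings for one three-item construct.
loadings = [0.80, 0.85, 0.90]
cr = composite_reliability(loadings)
ave = average_variance_extracted(loadings)
print(f"CR = {cr:.3f} (> 0.7: {cr > 0.7}), AVE = {ave:.3f} (> 0.5: {ave > 0.5})")
```

In this toy case both indices clear the thresholds quoted above, with CR near 0.89 and AVE near 0.72.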

For validity testing, we further assessed discriminant validity using the Fornell–Larcker criterion, Heterotrait–Monotrait ratio (HTMT), and cross-loadings. As shown in Table 6, the square root of each construct’s AVE was greater than its correlations with other constructs, indicating adequate discriminant validity [122]. The HTMT values ranged from 0.206 to 0.397, well below the recommended threshold of 0.85 (see Table 7), further supporting the presence of strong discriminant validity [122]. Additionally, each observed variable demonstrated a higher factor loading on its corresponding latent construct than on any other construct (see Table 8), further confirming satisfactory construct differentiation [122].
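The Fornell–Larcker comparison described above can be expressed as a simple check. The sketch below is illustrative only: the function name `fornell_larcker_ok`, the AVE values, and the inter-construct correlations are hypothetical placeholders, not the figures in Table 6.

```python
import math

def fornell_larcker_ok(ave, corr):
    """True if sqrt(AVE) of every construct exceeds its correlations with all others."""
    names = list(ave)
    for a in names:
        root = math.sqrt(ave[a])
        for b in names:
            if a != b and root <= abs(corr[frozenset((a, b))]):
                return False
    return True

# Hypothetical AVE values and inter-construct correlations.
ave = {"SP": 0.72, "TR": 0.70, "CI": 0.75}
corr = {
    frozenset(("SP", "TR")): 0.35,
    frozenset(("SP", "CI")): 0.40,
    frozenset(("TR", "CI")): 0.38,
}
print(fornell_larcker_ok(ave, corr))
```

Keying the correlations by unordered pairs keeps the check symmetric, so each pair only needs to be listed once.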

4.4. Hypothesis Testing

Based on 305 valid responses, path analysis was conducted using the bootstrap resampling method with 5000 iterations to estimate the parameters of the theoretical model. The PLS-SEM results (see Figure 3 and Table 9) indicate that 12 out of 13 hypothesized paths were statistically significant, while one was not supported.

Specifically, control (β = 0.139, p = 0.010), responsiveness (β = 0.110, p < 0.050), communication (β = 0.182, p < 0.010), playfulness (β = 0.163, p < 0.050), and personalization (β = 0.195, p < 0.010) had significant positive effects on social presence. These results support hypotheses H1a–e, confirming that perceived interactivity dimensions significantly influence users’ sense of social presence.

In terms of trust, significant positive effects were observed for control (β = 0.179, p < 0.010), responsiveness (β = 0.157, p < 0.010), communication (β = 0.133, p < 0.050), and playfulness (β = 0.141, p < 0.050), supporting hypotheses H2a–d. However, personalization (β = 0.087, p = 0.116) did not have a significant effect on trust, thus hypothesis H2e was not supported.

Finally, the study found that social presence positively influenced trust (β = 0.133, p < 0.050), and both social presence (β = 0.272, p < 0.010) and trust (β = 0.209, p < 0.010) significantly predicted users’ continuance intention to use AI-based CAs. These findings provide empirical support for hypotheses H3 through H5.

4.5. Mediation Analysis

Following the guidelines proposed by Preacher et al. [125], a mediation effect is considered statistically significant when the 95% bootstrap confidence interval does not include zero. In this study, the 95% confidence intervals for the indirect effects of CON, RES, COM, and PLA all excluded zero, indicating significant mediation effects through SP and TR (see Table 10). However, the confidence interval for the indirect path from perceived personalization (PER) through trust included zero, suggesting a non-significant mediating effect.
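The bootstrap logic behind this test can be sketched as follows. This is a simplified illustration under stated assumptions: it uses ordinary least squares on a single X → M → Y chain with synthetic data rather than the PLS-SEM estimation used in the study, draws 1000 resamples instead of 5000 to keep it quick, and all names (`indirect_effect`, `bootstrap_ci`) are hypothetical.

```python
import random

def mean(v):
    return sum(v) / len(v)

def cov(u, v):
    mu, mv = mean(u), mean(v)
    return sum((a - mu) * (b - mv) for a, b in zip(u, v)) / (len(u) - 1)

def indirect_effect(x, m, y):
    """a*b for X -> M -> Y, with b estimated while controlling for X."""
    sxx, smm, sxm = cov(x, x), cov(m, m), cov(x, m)
    sxy, smy = cov(x, y), cov(m, y)
    a = sxm / sxx                                         # slope of M on X
    b = (smy * sxx - sxm * sxy) / (smm * sxx - sxm ** 2)  # partial slope of Y on M
    return a * b

def bootstrap_ci(x, m, y, n_boot=1000, alpha=0.05, seed=0):
    """Percentile confidence interval for the indirect effect."""
    rng = random.Random(seed)
    n = len(x)
    estimates = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]  # resample cases with replacement
        estimates.append(indirect_effect([x[i] for i in idx],
                                         [m[i] for i in idx],
                                         [y[i] for i in idx]))
    estimates.sort()
    lower = estimates[int((alpha / 2) * n_boot)]
    upper = estimates[int((1 - alpha / 2) * n_boot) - 1]
    return lower, upper

# Synthetic data with a built-in indirect effect (illustrative only).
rng = random.Random(42)
x = [rng.gauss(0, 1) for _ in range(150)]
m = [0.5 * xi + rng.gauss(0, 0.5) for xi in x]
y = [0.5 * mi + rng.gauss(0, 0.5) for mi in m]

lower, upper = bootstrap_ci(x, m, y)
print(f"95% CI for a*b: [{lower:.3f}, {upper:.3f}]; excludes zero: {lower > 0 or upper < 0}")
```

The decision rule is the one stated above: mediation is treated as significant only when the interval does not straddle zero.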

To further assess the strength of these mediating effects, the Variance Accounted For (VAF) metric was used in the PLS-SEM analysis. VAF is calculated as the ratio of the indirect effect to the total effect (VAF = ab/c, where ab is the indirect effect and c is the total effect), and is used to evaluate the magnitude of mediation. A VAF value between 20% and 80% indicates a partial mediation effect, while a value greater than 80% suggests full mediation.
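As a small worked illustration of the VAF rule, the sketch below computes the ratio and applies the thresholds quoted above. It assumes the total effect is the sum of the indirect and direct effects and that both share the same sign; the function names and the example coefficients are hypothetical, and the label for values under 20% reflects the conventional reading rather than a rule stated in this section.

```python
def vaf(indirect, direct):
    """Share of the total effect (indirect + direct) carried by the indirect path."""
    total = indirect + direct
    return indirect / total

def classify(value):
    """Map a VAF value to the mediation categories used in the text."""
    if value > 0.80:
        return "full mediation"
    if value >= 0.20:
        return "partial mediation"
    # Below 20%: conventional reading, not a rule stated in the text above.
    return "no meaningful mediation"

# Hypothetical path coefficients: indirect effect a*b and direct effect c'.
share = vaf(indirect=0.05, direct=0.05)
print(f"VAF = {share:.0%} -> {classify(share)}")
```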

According to the results, social presence and trust accounted for 46.83% and 48.10%, respectively, of the total effect of control, indicating partial mediation. Responsiveness exhibited partial mediation through trust, which accounted for 50% of the total effect. Communication demonstrated partial mediation through social presence, which accounted for 59.76% of the total effect. Playfulness also showed partial mediation, with 56.41% of the effect mediated through social presence and 36.70% through trust. Finally, social presence mediated the relationship to trust only weakly, with a VAF of 10.23%, which falls below the 20% lower bound for partial mediation.

4.6. Artificial Neural Network (ANN) Analysis

This study adopted a two-stage modeling strategy by incorporating ANN analysis alongside PLS-SEM to better capture nonlinear relationships and enhance predictive accuracy. While SEM is well-suited for evaluating linear associations and compensatory effects among constructs, it has limitations in modeling complex nonlinear interactions [126]. In contrast, ANN is highly capable of identifying non-normal distributions and nonlinear dependencies between exogenous and endogenous variables [127].

ANN also demonstrates strong tolerance to data noise, outliers, and relatively small sample sizes. Moreover, it is applicable to non-compensatory models, where a deficiency in one factor does not necessarily need to be offset by an increase in another [128]. Therefore, ANN was integrated into this study as an extended analytical tool to more systematically identify and explain the key drivers influencing users’ continued adoption of AI-based CAs.

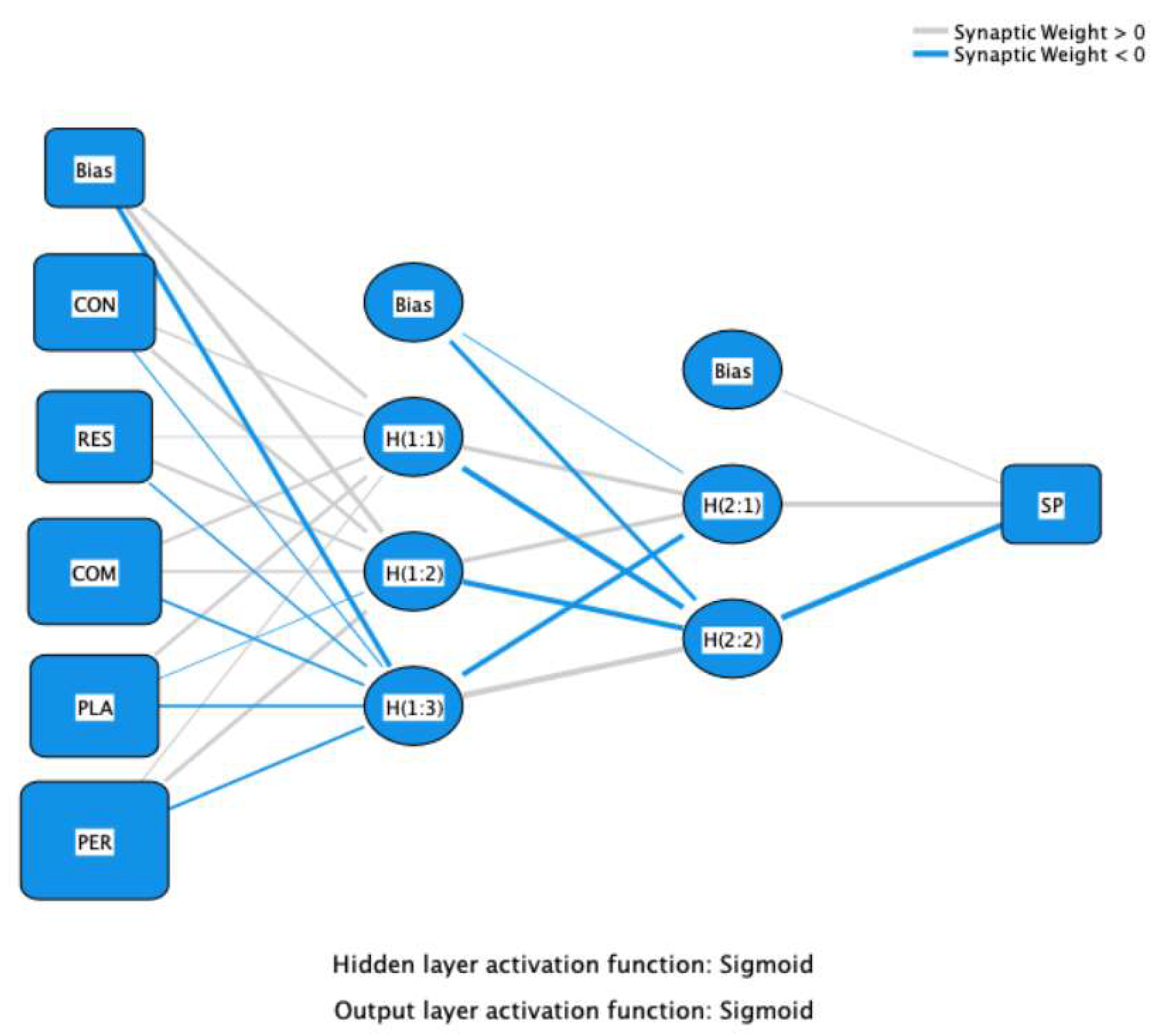

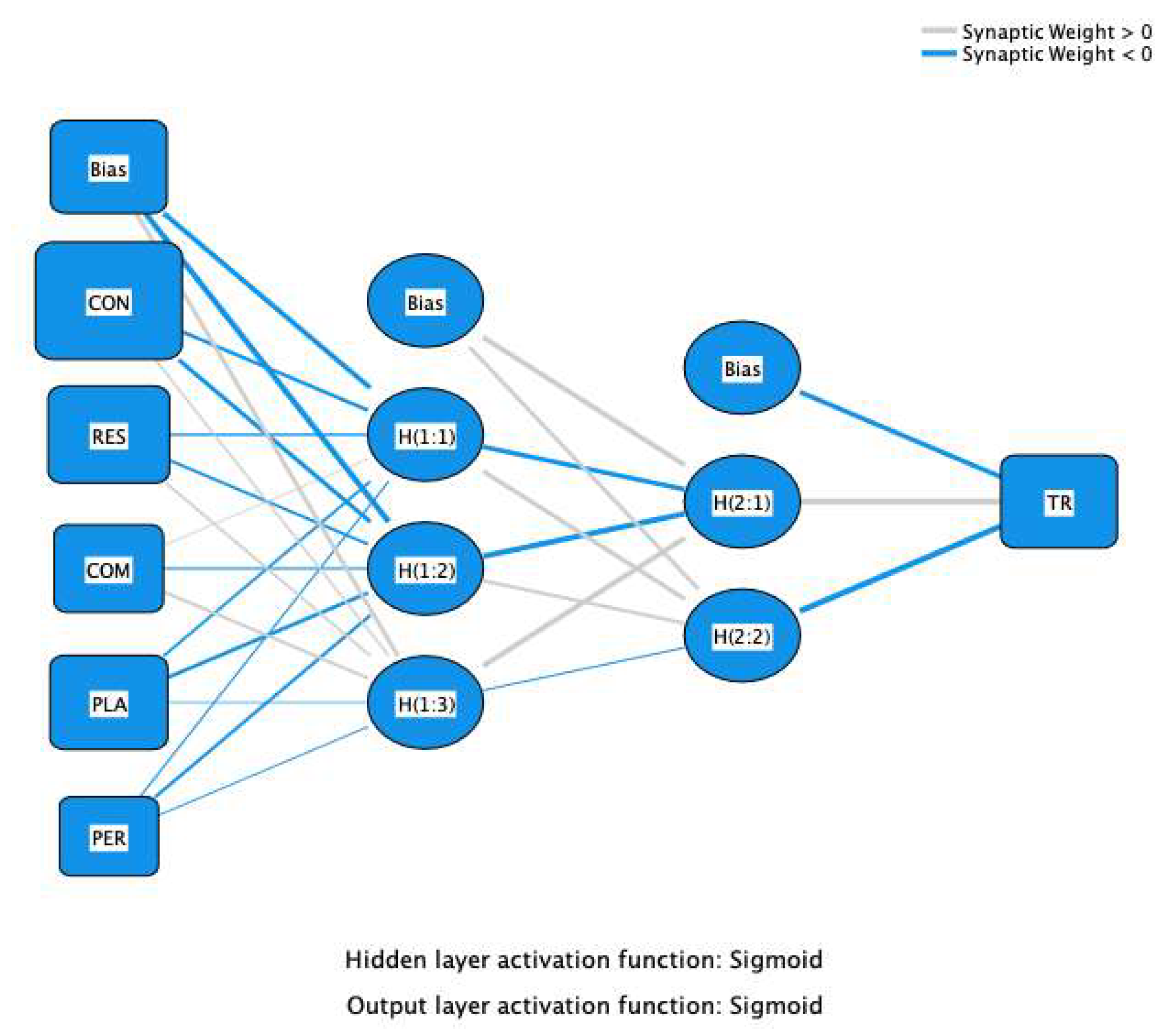

4.6.1. Structure of ANN Model

Using SPSS 26, neural network modeling was conducted with a Multilayer Perceptron (MLP) architecture, which includes an input layer, one or more hidden layers, and an output layer. During model training, the feedforward–backpropagation (FFBP) algorithm was employed. In this method, inputs are processed in the forward pass, while the estimation error is propagated backward through the network to update weights and improve predictive performance [129].
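To illustrate the feedforward–backpropagation idea in general terms, the sketch below trains a tiny one-hidden-layer MLP by full-batch gradient descent. It is not the SPSS implementation used in this study: the network size (five inputs, three hidden nodes), the learning rate, the synthetic data, and the function names are all assumptions chosen for brevity.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(x, w_hidden, w_out):
    """Forward pass: inputs -> sigmoid hidden layer -> sigmoid output (bias is the last weight)."""
    hidden = [sigmoid(sum(w * v for w, v in zip(ws[:-1], x)) + ws[-1]) for ws in w_hidden]
    output = sigmoid(sum(w * h for w, h in zip(w_out[:-1], hidden)) + w_out[-1])
    return hidden, output

def train_epoch(data, w_hidden, w_out, lr=0.5):
    """One full-batch backpropagation step; returns the mean squared error before the update."""
    grad_hidden = [[0.0] * len(ws) for ws in w_hidden]
    grad_out = [0.0] * len(w_out)
    sq_error = 0.0
    for x, target in data:
        hidden, output = forward(x, w_hidden, w_out)
        error = output - target
        sq_error += error ** 2
        delta_out = error * output * (1 - output)         # error signal at the output node
        for j, h in enumerate(hidden):
            grad_out[j] += delta_out * h
            delta_h = delta_out * w_out[j] * h * (1 - h)  # error propagated back to hidden node j
            for i, v in enumerate(x):
                grad_hidden[j][i] += delta_h * v
            grad_hidden[j][-1] += delta_h
        grad_out[-1] += delta_out
    n = len(data)
    for j in range(len(w_hidden)):
        w_hidden[j] = [w - lr * g / n for w, g in zip(w_hidden[j], grad_hidden[j])]
    w_out[:] = [w - lr * g / n for w, g in zip(w_out, grad_out)]
    return sq_error / n

rng = random.Random(0)
# Synthetic data: five inputs in [0, 1]; the target is their mean (purely illustrative).
data = []
for _ in range(40):
    x = [rng.random() for _ in range(5)]
    data.append((x, sum(x) / 5))

w_hidden = [[rng.uniform(-0.5, 0.5) for _ in range(6)] for _ in range(3)]
w_out = [rng.uniform(-0.5, 0.5) for _ in range(4)]
losses = [train_epoch(data, w_hidden, w_out) for _ in range(300)]
print(f"MSE: {losses[0]:.4f} -> {losses[-1]:.4f}")
```

Each epoch runs the forward pass for every case, accumulates the backward error signals, and then adjusts all weights once, which is the loop the FFBP description above refers to.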

Statistically significant variables identified through the SEM analysis were transformed into input neurons in the neural network. Social presence, trust, and continuance intention were used as output nodes in three separate ANN models, respectively. The corresponding neural network structures are illustrated in Figure 4, Figure 5 and Figure 6.

4.6.2. Result of ANN

According to the methodology proposed by Guo et al. [127], this study utilized 90% of the sample data for the training phase and 10% for testing. Both the hidden and output layers adopted the sigmoid activation function to handle nonlinear relationships. All input and output variables were normalized to a scale of 0 to 1 to improve computational efficiency [130]. To prevent overfitting, a 10-fold cross-validation procedure was applied, and Root Mean Square Error (RMSE) was used as the evaluation metric. The iterative learning process was designed to minimize error and enhance predictive accuracy [131].
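The preprocessing and evaluation steps described above can be sketched as follows. How the 90/10 split and the 10-fold procedure were combined is not detailed in the text, so the code assumes each fold holds out roughly 10% of the cases for testing; the function names and the toy values are hypothetical.

```python
import math
import random

def min_max(values):
    """Rescale values linearly to the [0, 1] range."""
    lo, hi = min(values), max(values)
    return [(v - lo) / (hi - lo) for v in values]

def rmse(actual, predicted):
    """Root mean square error between two equally long sequences."""
    return math.sqrt(sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual))

def k_fold_indices(n, k=10, seed=0):
    """Yield (train, test) index lists; each fold holds out roughly 1/k of the cases."""
    order = list(range(n))
    random.Random(seed).shuffle(order)
    for fold in range(k):
        test = order[fold::k]
        held_out = set(test)
        train = [i for i in order if i not in held_out]
        yield train, test

# 7-point Likert responses rescaled to [0, 1] (toy values).
scaled = min_max([1, 4, 7])
print(scaled)

# Fold sizes for a sample of 305 cases, matching the valid sample size reported above.
folds = list(k_fold_indices(305))
print([(len(train), len(test)) for train, test in folds][:3])

# RMSE for a toy set of predictions.
print(round(rmse([0.0, 0.5, 1.0], [0.0, 0.5, 0.5]), 4))
```

In a full analysis, the network would be trained on each `train` index set and its RMSE recorded on both partitions, then averaged across the ten folds.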

Experimental results (see Table 11) showed relatively low RMSE values for both training and testing phases, indicating strong predictive performance and good model fit [128]. The average training RMSE values for Models A, B, and C were 0.168, 0.173, and 0.178, respectively, while the average testing RMSE values were 0.142, 0.166, and 0.177, respectively.

In addition, a sensitivity analysis (Table 10) was conducted to determine the normalized relative importance of the input neurons [128]. In ANN Model A, COM emerged as the most critical predictor of SP, with a normalized importance of 90.78%, followed by PER at 84.98%, PLA at 63.55%, CON at 57.41%, and RES at 45.83%. In ANN Model B, control was the most influential predictor of TR with a normalized importance of 95.66%, followed by responsiveness at 76.45%, playfulness at 69.41%, and communication at 63.64%. In ANN Model C, social presence was the strongest predictor of CI, with a normalized importance of 93.9%, followed by TR at 84.66%.
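Normalized importance is typically obtained by dividing each predictor's relative importance by the largest importance in the same network. The sketch below shows that calculation and, as an assumption about how figures below 100% for the top predictor might arise, averages the normalized values over several networks. The function names and the importance values are hypothetical.

```python
def normalized_importance(importance):
    """Express each predictor's importance as a percentage of the largest one."""
    top = max(importance.values())
    return {name: 100 * value / top for name, value in importance.items()}

def average_normalized_importance(runs):
    """Average the normalized importance of each predictor across several networks."""
    normalized = [normalized_importance(run) for run in runs]
    return {name: sum(run[name] for run in normalized) / len(normalized)
            for name in runs[0]}

# Hypothetical relative importances from two trained networks.
runs = [
    {"COM": 0.40, "PER": 0.20},
    {"COM": 0.50, "PER": 0.50},
]
averaged = average_normalized_importance(runs)
print({name: round(value, 1) for name, value in averaged.items()})
```

Ranking predictors by these averaged percentages gives the ordering that is then compared against the PLS-SEM path coefficients.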

Finally, a comparison between the PLS-SEM and ANN results was conducted to examine differences in predictor rankings (see Table 11). In ANN Model A, the ranking of predictors differed slightly from that in the SEM model. While the SEM model identified personalization (β = 0.195, p = 0.001) as the strongest predictor of social presence, followed by communication (β = 0.182, p < 0.001), the ANN model indicated that communication (90.78%) was the most important predictor, followed by personalization (84.98%). However, in Models B and C, the ranking of predictors was consistent across both PLS-SEM and ANN methods.

5. Discussion

Grounded in perceived interactivity theory, this study investigates how the interactive features of AI CAs influence users’ experience and their continuance intention. By employing a two-stage modeling approach that combines PLS-SEM and ANN, the study confirms several hypothesized relationships while also revealing notable and unexpected insights.

First, the structural equation model supported hypotheses H1a to H1e: CON, RES, COM, PLA, and PER all exerted significant positive effects on users’ perceived SP within AI CAs. Among these, personalization emerged as the strongest predictor of social presence, followed closely by communication. This is consistent with findings from Hsieh et al. [105] and Wang et al. [63]. A possible explanation is that social presence may be more strongly driven by dimensions related to contextual and personality construction, such as communication and personalization. When CAs adapt to user characteristics and demonstrate fluent, natural interaction abilities, users may feel a heightened sense of “real presence,” as if they are conversing with a human.

Interestingly, ANN analysis yielded a different ranking of predictor importance: communication was found to be more critical than personalization. This discrepancy can be attributed to methodological differences: PLS-SEM emphasizes linear and significance-based paths, whereas ANN captures more complex nonlinear interactions and synergistic effects [132]. It can be inferred that personalization may exert a more direct and perceptible influence, while communication potentially forms stronger interactions with other predictors, thus demonstrating higher predictive power overall. Moreover, playfulness was confirmed as a significant factor. CAs that incorporate humor and enjoyable interactions enhance user perceptions of realism and satisfaction, in line with findings by Xie et al. [133].

Conversely, the effects of control and responsiveness on social presence were relatively weaker, which contrasts with Zhao et al.’s findings [134]. One possible reason is that control mainly concerns the user’s ability to guide dialogue direction or interface operations, while responsiveness reflects system speed and accuracy, basic functions often taken for granted by users. These technical features may exhibit diminishing marginal utility: once fundamental interaction needs are met, their incremental contribution to social presence becomes limited. This interpretation is consistent with the ANN results, where control and responsiveness were ranked lowest in importance.

Second, the study found that control, responsiveness, communication, and playfulness all positively influenced trust (supporting H2a–d). Among them, control was the most influential, consistent with Li et al.’s research [135]. In AI interaction contexts, perceived control fosters trust by reassuring users that their intentions are correctly understood and promptly addressed. Responsiveness ranked second, reinforcing the finding of Wang et al. [136] that timely feedback not only ensures interactional coherence but also reduces uncertainty, thereby enhancing perceived system reliability [45]. Playfulness, through lighthearted and engaging interactions, helps ease user apprehension and increase affinity, a finding echoed by Kim et al. [86]. Similarly, communication had a positive impact on trust owing to its natural and human-like qualities, in line with Hamacher et al. [85].

However, personalization did not significantly influence trust (H2e not supported), diverging from findings by Foroughi et al. [132]. This could be due to users’ ambivalent perceptions of personalized interaction [85]. While customization may improve satisfaction, it may also raise privacy or algorithmic manipulation concerns, as Knote et al. have suggested [137].

Third, the study confirmed that both social presence and trust significantly predict users’ continuance intention (supporting H4 and H5). Social presence transforms technology from a functional medium into a social entity during interaction, evoking emotional projections that increase engagement and stickiness. Trust, in turn, stabilizes user behavior by reducing perceived uncertainty and technological risk, findings consistent with Attar et al. [104]. The ANN results further corroborated this, with social presence ranking as the most important predictor, followed by trust. This may indicate that in highly anthropomorphic environments, users prioritize “social functionality” over basic technical reliability.

Last but not least, the study revealed a positive mediating role of social presence in fostering trust, which subsequently promotes continuance intention, consistent with findings by Lina et al. [19]. When AI CAs exhibit high social presence (e.g., human-like communication, continuity of memory, emotional bonding), users are more likely to perceive them as socially capable and trustworthy entities. This forms a dual-path mechanism driving continuance intention through both emotional connection and trust formation.

7. Limitations and Future Work

Despite its contributions, this study has several limitations that should be acknowledged. First, data collection was limited to users within a single country, which may constrain the generalizability of the findings. Cultural differences can significantly influence users’ familiarity with and acceptance of AI technologies. Future research should include more diverse cultural and regional populations to test the robustness of the proposed interactivity mechanism and develop culturally adaptive design frameworks.

Second, due to research constraints, this study mainly focuses on general-purpose AI CAs. However, the interactivity requirements of agents in vertical fields such as medical consultation and educational companionship may differ significantly. For example, educational agents may rely more heavily on playfulness, while medical agents must enhance the credibility of communication. Additionally, the unique interaction needs of special user groups, such as children with autism or older adults with cognitive impairments, were not considered in this study. Future research should explore customized CAs for these populations and develop tailored evaluation tools to investigate the boundaries and optimization paths of interactivity dimensions across use cases.

Third, this study did not examine the integration of Natural Language Processing (NLP) techniques, which are pivotal in conversational agents for analyzing and responding to user sentiment [142]. Understanding the emotional state of users during interactions can greatly enhance the agent’s effectiveness and user engagement [143]. NLP enables AI CAs to process and understand human language in a more natural way, facilitating tasks such as intent recognition, entity extraction, and dialogue management, which are crucial for creating personalized and context-aware responses. By incorporating NLP, CAs can improve user satisfaction through empathetic and adaptive interactions, ultimately transforming human–computer communication [142]. Therefore, future research should emphasize incorporating NLP to detect and adapt to user emotions, thereby addressing this gap and improving the interactivity framework.