Abstract

As AI recommendation systems become increasingly important in consumer decision-making, leveraging sound cues to optimize the user interaction experience has become a key research topic. Grounded in perceptual contagion theory, this study examines how sound cues in AI recommendation scenarios affect consumer choice and choice satisfaction, and it probes the underlying psychological mechanisms. Study 1 (hotel recommendation, N = 140) demonstrated that embedding sound cues into recommendation interfaces significantly increased consumer choice and choice satisfaction. Study 2 (laptop recommendation, N = 155) further revealed that this effect was mediated by preference fluency. Contrary to expectations, AI literacy did not moderate these effects, suggesting that sound cues exert influence across user groups regardless of technological expertise. Theoretically, this study (1) introduces perceptual contagion theory into human–AI interaction research; (2) identifies preference fluency as the core mediating mechanism; and (3) challenges traditional assumptions about the role of AI literacy. Practically, this study proposes a low-cost and highly adaptable design strategy, providing a new direction for recommendation systems to shift from content-driven to experience-driven design. These findings enrich the understanding of sensory influences in digital contexts and offer practical insights for optimizing the design of AI platforms.

1. Introduction

With the rapid development of artificial intelligence (AI), AI-driven recommendation and search functions have been deeply integrated into consumers’ daily shopping experiences [1,2,3]. Taobao, one of the largest online shopping platforms in China, took the lead in introducing the Deep-Thinking function for shopping search. This trend indicates that AI is not only promoting technological upgrading but also reshaping digital consumption scenarios [4,5]. However, improvements in algorithmic accuracy do not always translate into better consumer experiences. Faced with large volumes of recommendations and limited attention, consumers increasingly rely on the design of recommendation systems to facilitate efficient decision-making [6]. Consequently, scholars have emphasized that system effectiveness depends not only on accuracy but also on users’ sensory experiences during interactions [7,8].

Sensory marketing research has confirmed that external sensory stimuli, such as visual [10,11,12], linguistic [13,14,15,16], and tactile cues [17,18], affect consumers’ perceptions, judgments, and behaviors [9]. As a sensory channel characterized by high contextual adaptability and subconscious influence, auditory stimuli have been especially influential [19]. When examining the impact of auditory input on customers, existing studies have primarily focused on two types of sound cues: linguistic content features (i.e., text-based speech styles) and non-linguistic auditory features (i.e., purely acoustic characteristics of sound) [20,21,22]. While the majority of prior research has centered on linguistic content features [23,24], there remains a lack of theoretical development and empirical evidence regarding how nonverbal auditory features, such as acoustic and perceptual attributes, affect decision-making processes [23]. Although these nonverbal sounds do not convey explicit semantic information, they may serve psychological functions such as providing contextual cues or evoking familiarity, thereby exerting unconscious influences on consumer choice and choice satisfaction. In particular, within AI recommendation systems, nonverbal sound cues, such as task-related ambient sounds familiar to users, have not been systematically examined in terms of their underlying psychological mechanisms and behavioral consequences. Accordingly, this study poses the core question: In AI recommendation systems, can non-linguistic auditory features enhance users’ choices and choice satisfaction?

To address this question, we adopt the perceptual contagion theory as the theoretical foundation. The theory suggests that individuals may subconsciously project attributes of sensory stimuli onto associated objects, thereby shaping evaluations [25]. Existing studies have shown this in food and health perceptions [26,27], and in brand impressions shaped by product packaging [28,29]. In fact, there have long been signs of the impact of sound on consumer behavior in real life. For example, studies have shown that background music in catering environments can change consumers’ ordering preferences [30,31,32], and ambient sounds in retail scenarios can increase customers’ dwell time and purchase intention [33,34]. In a similar way, sound cues in digital shopping platforms may “transfer” attributes to AI systems, enhancing processing fluency and influencing user behavior [35]. Furthermore, considering that individuals’ understanding and psychological expectations during AI interactions may vary significantly [36], this study includes users’ AI literacy as a moderating variable in the analytical model to examine its role in shaping the effect of nonverbal sound cues.

In sum, this study comprises two online experiments that (1) investigate whether AI-generated recommendations embedded with sound cues influence consumers’ choice and choice satisfaction; and (2) explore the underlying mediating mechanisms and boundary conditions. The study contributes to both theory and practice. Theoretically, it is the first to systematically apply perceptual contagion theory to AI recommendation contexts, revealing how sound cues shape users’ evaluations through subconscious sensory associations. Practically, the findings provide empirical support for low-cost, low-disruption auditory design strategies that can be widely adopted in AI recommendation systems. These insights offer systematic guidance for intelligent recommendation, cross-platform user experience optimization, and personalized interface design.

2. Theoretical Background

2.1. Sound Cues

Sound is one of the most socially meaningful human sensory channels, and its processing is highly automatic and difficult to block out [19]. As a highly social signal, sound can quickly attract attention, activate situational processing mechanisms, and in turn influence attitudes and behavioral judgments [37]. Non-verbal auditory features fall into two basic types: acoustic and perceptual. Acoustic features refer to the physical characteristics of the sound itself [38], while perceptual features refer to how the listener perceives and interprets the sound [39]. In consumer behavior research, a large number of studies have shown that sound cues can significantly affect consumers’ cognition and behavior. For example, the rhythm and volume of music can affect consumers’ perception [32,40], shopping pace [41], dwell time [42,43], and spending [33]; music genre can also change consumers’ choices [30,31] and purchase intentions [34]. These studies show that even when sound carries no explicit semantic information, it can affect consumers’ judgments and choices. In contrast, in the fields of AI and human–computer interaction, existing studies have focused more on linguistic content features or acoustic features. For example, Efthymiou et al. (2024), Guha et al. (2023), and Natale & Cooke (2021) explored how speech content and style affect users’ attitudes toward and trust in AI assistants [35,44,45]; Efthymiou et al. found that acoustic features such as intonation and speaking style affect people’s evaluations of and attitudes towards robots [35,46]. Notably, sound cues at the level of perceptual features have received little research attention in the context of AI interaction. However, such perceptual features also play a key role in users’ cognition and judgment, because they are the channel through which sensory cues “infect” users’ psychology [47]. Table 1 shows an overview of sound influences in consumer behavior.

Table 1.

Overview of Sound Influences in Consumer Behavior.

2.2. Perceptual Contagion Theory

Perceptual contagion theory describes the transfer of attributes from one object to another through brief or superficial contact [25]. It holds that the attributes carried by an external stimulus can be transferred to another object during a brief sensory presentation, thereby influencing the perceiver’s judgments and decisions. In a consumption context, this means that individuals may attribute the characteristics of sensory cues to the products or services they are evaluating. For example, in an auditory scenario, if the background sound has a soft and warm tone, users may unconsciously project this gentle attribute onto the product itself, believing it to be more reliable or trustworthy; conversely, if the sound is sharp or rapid, users may attribute this “tense” characteristic to the product [33]. In the context of AI recommendation systems, users often cannot directly infer the reliability or credibility of the system based solely on the content [59]. Therefore, they rely more on peripheral sensory cues to form an overall perception [8]. Even without explicit semantic meaning, sound cues can automatically trigger associations and “project” their implied qualities onto the system, shaping perceptions of its reliability, thoroughness, or trustworthiness [31].

Other theories, such as embodied cognition theory, also link sensory input to cognition and behavior. Embodied cognition theory emphasizes that cognition is grounded in bodily experience: mental processes are shaped by physical sensations, motor actions, or interactions with the environment [8]. By contrast, perceptual contagion theory directly explains how sound, as a highly social sensory stimulus, attaches psychological attributes to AI systems, thereby affecting users’ choices and satisfaction. This kind of psychological projection does not stem from the recommended content itself but is driven by the psychological impressions evoked by sensory stimuli. It reflects the core mechanism of perceptual contagion theory, namely how external stimuli shape internal projection [60].

3. Hypothesis Development

3.1. Sound Cues as Main Effect

Non-linguistic sound cues can subtly influence consumers’ decision-making behaviors in traditional consumption contexts [31]. These cues often function without users’ awareness but can significantly affect individuals’ perceptions and preferences [30,61]. According to perceptual contagion theory, external sensory inputs can activate individuals’ existing psychological associations, and the psychological meanings of these associations are then projected onto relevant objects, thereby influencing cognitive evaluations and behaviors [25,62]. Extending this theoretical perspective to the AI-driven human–computer interaction environment, sounds in the interface also have significant psychological activation functions [23]. On the one hand, such sound cues can indicate the current operational status or interaction progress of the system, thereby reducing users’ cognitive uncertainty when facing complex algorithms; on the other hand, sound cues may activate users’ social psychological associations and so improve their overall satisfaction with the system [20]. This effect does not depend on changes in the recommended content itself but is driven by the perceptual contagion mechanism activated by sound cues. Even if the recommended content remains unchanged, sound cues can act as perceptual triggers that affect users’ choices and satisfaction. In a complex and opaque algorithmic environment, it is difficult for users to directly evaluate the system’s reasoning process. In such situations, sound cues serve as an alternative source of information and provide users with potential decision-making cues, thereby influencing their choice behaviors and their evaluations of system recommendations. Based on this, this paper puts forward the following research hypothesis:

H1.

Embedding (vs. omitting) sound cues in AI recommendation systems will lead to better decision outcomes, namely higher consumer choice and choice satisfaction.

3.2. Preference Fluency as Mediating Effect

Preference fluency refers to the subjective feeling of psychological ease experienced by individuals during the formation of preferences and decision-making. It manifests as rapid judgments, decisive choices, and reduced cognitive load [63]. Previous research has demonstrated that the sensation of “rightness” experienced during decision-making often stems from the fluency of cognitive processing [64]. The more smoothly users can process the information they receive, the higher their willingness to accept it [65,66], and the more favorable their evaluation [67]. Whether the stimulus is a visual advertising element [68] or music [69], the probability of choice increases when the information is processed more fluently. This indicates that sensory cues can enhance individual choices through fluency [70]. We believe that sound cues can trigger a specific type of fluency, namely preference fluency, which arises when choosing among options feels easy or requires little effort [67,71]. In AI recommendation interactions, non-verbal sound cues, as a kind of sensory input with situational suggestive effects, may unconsciously enhance the fluency of users’ information processing. Specifically, when users receive auditory stimuli in the interface, they may interpret this sensory experience as a positive cue for the product type, thereby triggering a metacognitive experience that the choice is easy (i.e., preference fluency), which in turn influences the decision-making process. Although this experience may not be explicitly perceived by users, it can still simplify the judgment process, reduce cognitive load, and enhance preference certainty, thus manifesting as a higher level of preference fluency.

From the perspective of perceptual contagion theory, the enhancement of processing fluency does not occur in isolation but is often triggered and regulated by external sensory inputs. Sound, as an initial sensory input, activates individuals’ perceptual processing system, thereby eliciting positive psychological associations [72]. These associations are projected onto the interactive scenario, enhancing the fluency of individuals’ judgments. When users experience clear preferences and easy choices, their willingness to adopt recommendations increases, while the likelihood of hesitation and rejection decreases. Sound cues may therefore influence decision outcomes not only directly but also indirectly, by improving preference fluency. Based on this, the following hypothesis is proposed:

H2.

Preference fluency mediates the effect of sound cues on decision outcomes. Sound cues enhance consumers’ preference fluency during the interaction process, which in turn increases their consumer choice and choice satisfaction.

3.3. AI Literacy as Moderator Effect

AI literacy reflects an individual’s cognitive understanding, usage ability, and psychological acceptance of AI technology [73]. When consumers possess higher AI literacy, they generally have a deeper understanding of the AI system’s operating mechanisms, response logic, and interaction design [36]. This understanding leads them to interpret system behaviors and interface elements from a functional or instrumental perspective. For instance, they may perceive sound cues as design elements intended to enhance user experience rather than as social or emotional interaction signals. Consequently, sound cues are less likely to evoke embodied contextual associations in their cognitive processing, resulting in a relatively weaker effect on preference fluency. In contrast, individuals with lower AI literacy tend to be less familiar with the structure and logic of AI systems and lack stable knowledge frameworks to rationally analyze system performance. During the decision-making process, these users are more likely to rely on intuitive, concrete sensory cues to interpret and evaluate the AI system’s capability or attitude [36]. Due to insufficient understanding of AI recommendation mechanisms and limited systematic processing ability, they are more susceptible to interference from non-core information, which reduces preference fluency and weakens the coherence and confidence of their judgments. Therefore, users’ AI literacy levels may moderate the effect of sound cues on preference fluency. Specifically, individuals with lower AI literacy are more vulnerable to contagion by the contextual associations triggered by sound cues, thereby disrupting the fluency of their cognitive processing. Conversely, under higher AI literacy conditions, individuals show reduced sensitivity to sound cues, weakening the influence of the contagion mechanism. Based on this reasoning, the following hypothesis is proposed:

H3.

AI literacy moderates the effect of sound cues on decision outcomes. Specifically, when AI literacy is high (vs. low), the effect of sound cues will be weakened.

3.4. Research Framework

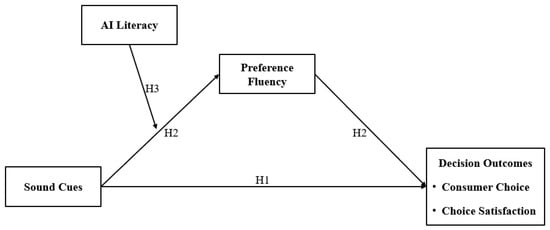

Our experiments use an operational sound with strong task symbolism, the mechanical keyboard typing sound, as the concrete manipulation. Although such sounds are often overlooked in digital interfaces, they carry strong social signaling functions that may subconsciously evoke positive associations toward the system, thereby influencing consumer choice and choice satisfaction. Unlike melodic background music, the mechanical keyboard sound lacks rhythmic and emotional qualities, making it unlikely to directly affect consumer attitudes through emotional pathways. Furthermore, this sound does not induce moods or create ambiance, which rules out pleasure-driven behavioral preferences as an explanation. Figure 1 illustrates the complete research framework. The following sections present two scenario-based experiments designed to test the theoretical hypotheses. Experiment 1 employs a two-group design to compare the impact of sound cues on consumer decision outcomes (H1). Experiment 2 examines the mediating role of preference fluency and the moderating role of AI literacy (H2/H3). All experimental protocols are provided in Appendix A.

Figure 1.

Research Framework.

4. Study 1

4.1. Overview

Study 1 aims to investigate whether embedding mechanical keyboard sound cues in AI recommendation systems significantly affects consumer choice and choice satisfaction.

4.2. Method

4.2.1. Participants

We used G*Power 3.1 software to calculate the minimum required sample size [74]. The parameters were set as follows: effect size = 0.25, α = 0.05, and power = 0.80, resulting in a minimum sample size of 128 participants across two groups. A total of 160 online users with prior experience using AI voluntarily signed up and participated in the study. Recruiting participants with prior AI experience ensured that they had sufficient familiarity with AI-based systems, which allowed them to provide more valid and contextually relevant evaluations of the experimental scenarios. To ensure data quality, we first conducted a manipulation check to verify whether participants noticed the sound cues. Participants who failed the manipulation check, as well as those with excessively short response times or who did not follow experimental instructions, were excluded. In total, 20 participants were removed, resulting in a final valid sample of 140 participants (Mage = 33.92, SD = 11.96; 57.86% female). Study 1 employed a single-factor (sound cues: presence vs. control) between-subjects design. Participants were randomly assigned to two groups (Npresence = 73, Ncontrol = 67). No significant differences in gender, age, or academic background were found between the groups (p > 0.05).
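The sample-size figure above can be approximated without G*Power. The sketch below is only an approximation under stated assumptions: it treats the reported effect size of 0.25 as Cohen's f for a two-group comparison (so that d = 2f), uses a normal approximation with Guenther's small-sample correction instead of the noncentral distribution that G*Power evaluates, and the function name `total_sample_size` is ours.

```python
import math
from statistics import NormalDist


def total_sample_size(cohens_f: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate total N for a two-sided, two-group comparison with equal group sizes."""
    # Assumption: with two equally sized groups, Cohen's d equals 2 * f.
    d = 2.0 * cohens_f
    z_alpha = NormalDist().inv_cdf(1.0 - alpha / 2.0)  # critical value for the two-sided test
    z_power = NormalDist().inv_cdf(power)              # quantile matching the target power
    # Normal-approximation n per group, plus Guenther's correction term z_alpha^2 / 4.
    n_per_group = 2.0 * (z_alpha + z_power) ** 2 / d ** 2 + z_alpha ** 2 / 4.0
    return 2 * math.ceil(n_per_group)


print(total_sample_size(0.25))  # prints 128
```

Under these assumptions the approximation reproduces the minimum of 128 participants (64 per group) reported above.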

4.2.2. Procedure and Measures

This study adopted a single-factor, two-level (sound cues: presence vs. control) between-subjects experimental design, referencing the design by Schindler et al. (2024) [63]. All experimental materials were approved by the Ethics Committee of Liaoning University of Technology (No. 20250630). Two senior scholars and one industry expert conducted a rigorous review and approved the materials. Before the experiment, each participant was fully informed of the study’s purpose. The experiment officially began after participants signed informed consent forms. Participants were randomly assigned to one of two conditions: the sound cues group or the control group. They were instructed to imagine that they intended to have an AI recommendation system suggest suitable hotels for travel and accommodation. Next, participants watched a pre-recorded video simulating the AI recommendation system suggesting hotels based on various attributes such as price, star rating, and location. To enhance ecological validity, each participant viewed two rounds of hotel recommendation content. Both groups received identical recommendation content; the only difference was the presence or absence of sound cues. To ensure the standardization of experimental stimuli, the video playback duration was fixed at 15 s, the volume of sound cues was uniformly calibrated, and background noise was kept at a negligible level to avoid interfering with participants’ perception. The mechanical keyboard typing sound was used as the specific manipulation of the sound cues. In the sound cues group, participants saw the AI system continuously outputting recommendation results accompanied by ongoing mechanical keyboard typing sounds, conveying the perception that the “system is carefully processing information.” In the control group, the sound cues were completely removed, and participants only saw the AI system outputting recommendation results.

After watching the video, the manipulation check was conducted by asking participants: “Did you notice the mechanical keyboard typing sound during the AI recommendation video?” (binary choice: 0 = No, 1 = Yes). Subsequently, following prior research in human–computer interaction, consumer choice was measured using a single-item, seven-point Likert scale (i.e., “How likely are you to choose this product?”) [28]. Choice satisfaction was assessed via a three-item, seven-point Likert scale (1 = strongly disagree, 7 = strongly agree), including items such as “I am satisfied with my choice” (Cronbach’s α = 0.85) [63,75]. Considering prior research indicating that domain-specific expertise may influence judgments and choices, we included a single-item seven-point Likert scale measuring participants’ expertise as a control variable [76,77]. Finally, demographic information such as gender, age, and education level was collected, and the experiment concluded.
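For readers less familiar with the reliability coefficient reported for the three-item satisfaction scale, the following minimal sketch shows how Cronbach’s α is computed from item-level responses. The ratings are hypothetical illustration data, not the study’s data, and `cronbach_alpha` is our own helper name.

```python
from statistics import variance


def cronbach_alpha(items):
    """items: list of k lists, each holding one item's scores for the same respondents."""
    k = len(items)
    # Scale total per respondent (sum across the k items).
    totals = [sum(scores) for scores in zip(*items)]
    # Sum of the sample variances of the individual items.
    item_var_sum = sum(variance(col) for col in items)
    return k / (k - 1) * (1.0 - item_var_sum / variance(totals))


# Hypothetical seven-point ratings from five participants on three satisfaction items.
ratings = [
    [5, 6, 4, 7, 3],
    [5, 7, 4, 6, 3],
    [6, 6, 5, 7, 2],
]
print(round(cronbach_alpha(ratings), 2))
```

Values closer to 1 indicate that the items vary together, which is the sense in which the reported α values signal acceptable internal consistency.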

4.3. Result and Discussion

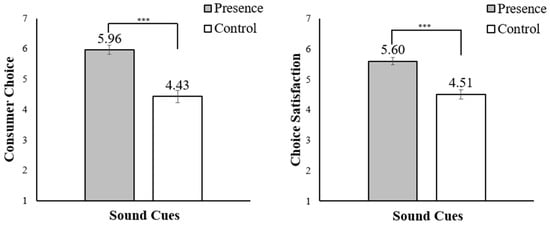

We conducted ANCOVA analyses with sound cues as the independent variable, consumer choice and choice satisfaction as dependent variables, and participants’ expertise as a covariate. The results indicated that, after controlling for expertise, the presence of sound cues had a significant effect on both consumer choice and choice satisfaction. Specifically, compared to AI recommendations without sound cues, users showed a stronger preference for AI recommendations with sound cues (Mpresence = 5.96, SD = 1.07 vs. Mcontrol = 4.43, SD = 1.62; F(1, 138) = 31.41, p < 0.001, η² = 0.44), and choice satisfaction was also significantly higher (Mpresence = 5.60, SD = 1.03 vs. Mcontrol = 4.51, SD = 1.25; F(1, 138) = 20.56, p < 0.001, η² = 0.49). In both models, the covariate expertise did not have a significant effect on the dependent variables (p > 0.05). This suggests that participants’ level of domain knowledge had minimal influence on the outcomes, and the main effects observed in the model are unlikely to be confounded by this variable. These findings support Hypothesis 1. The effects of sound cues on consumer choice and choice satisfaction are illustrated in Figure 2.

Figure 2.

Impact of sound cues on choice and choice satisfaction. (Notes: * p < 0.05, ** p < 0.01, *** p < 0.001).
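As an illustration of the kind of analysis used here, the sketch below computes a one-covariate ANCOVA F statistic by comparing a covariate-only model with a model that adds the group factor. It assumes a common covariate slope across groups, uses made-up toy data, and is not the statistical software used in the study; `ancova_f` and `_sums` are hypothetical helper names.

```python
def _sums(x, y):
    """Return centered sums of squares and cross-products (Sxx, Sxy, Syy)."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxx = sum((a - mx) ** 2 for a in x)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    syy = sum((b - my) ** 2 for b in y)
    return sxx, sxy, syy


def ancova_f(groups):
    """groups: list of (covariate, outcome) list pairs, one pair per condition.

    Returns (F, df_effect, df_error) for the covariate-adjusted group effect.
    """
    k = len(groups)
    n = sum(len(x) for x, _ in groups)
    # Full model: separate intercept per group, one pooled covariate slope.
    within = [_sums(x, y) for x, y in groups]
    wxx = sum(s[0] for s in within)
    wxy = sum(s[1] for s in within)
    wyy = sum(s[2] for s in within)
    sse_full = wyy - wxy ** 2 / wxx
    # Reduced model: covariate only, ignoring group membership.
    all_x = [v for x, _ in groups for v in x]
    all_y = [v for _, y in groups for v in y]
    txx, txy, tyy = _sums(all_x, all_y)
    sse_reduced = tyy - txy ** 2 / txx
    df_effect, df_error = k - 1, n - k - 1
    f = ((sse_reduced - sse_full) / df_effect) / (sse_full / df_error)
    return f, df_effect, df_error


# Toy data (hypothetical): expertise as covariate, choice rating as outcome.
sound = ([3, 4, 5, 6], [5, 6, 6, 7])
control = ([3, 4, 5, 6], [4, 4, 5, 5])
print(ancova_f([sound, control]))
```

The F ratio asks how much residual variance the group factor removes beyond what the covariate already explains, which is the logic behind "after controlling for expertise".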

Study 1 found that, compared to AI assistants without sound cues, those with sound cues increased both consumer choice and choice satisfaction, confirming that sound cues affect user decision outcomes and supporting Hypothesis 1. Next, we investigate the mediating and moderating mechanisms underlying this effect.

5. Study 2

5.1. Overview

This experiment aims to test whether the effect of sound cues on decision outcomes is mediated by preference fluency and moderated by AI literacy.

5.2. Method

5.2.1. Participants

The method of Study 2 was similar to that of Study 1, differing only in the contextual setting of the experimental materials. A new set of 197 online users voluntarily participated in this study. As in Study 1, the participants were drawn from a broad range of ordinary consumers. Consistent with Study 1, we employed the same manipulation check items to ensure the validity of the experimental treatment. After excluding 42 participants who failed the manipulation check, provided excessively short responses, or did not follow the experimental instructions, the final valid sample comprised 155 participants (Mage = 34.57, SD = 13.35; 51.60% female). Study 2 employed a single-factor between-subjects design, with participants randomly assigned to two groups (Npresence = 85, Ncontrol = 70). No significant differences in gender, age, or academic background were found between the groups (p > 0.05).

5.2.2. Procedure and Measures

Participants in this study watched a pre-recorded video of an AI recommendation system suggesting configurations for laptops. After completing the manipulation check, participants were asked to complete the same self-report measures of consumer choice and choice satisfaction as in Study 1 (Cronbach’s α = 0.84). Unlike Study 1, participants were then required to complete measures for the mediator and moderator variables. Preference fluency was assessed using a three-item, seven-point Likert scale adapted from Schindler et al. (2024) [63] and Novemsky et al. (2007) [71] (e.g., “It was easy for me to evaluate different options,” Cronbach’s α = 0.89). AI literacy was measured using an 11-item, seven-point Likert scale adapted from Wang et al. (2023) [73] (e.g., “I can identify the AI technologies used in the applications and products I use,” Cronbach’s α = 0.96). Additionally, the same control variables as in Study 1 were included. Finally, demographic information was collected, and the experiment concluded.

5.3. Result

5.3.1. Main Effect Analysis

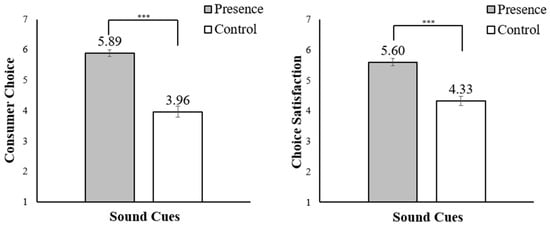

We conducted ANCOVA analyses with sound cues as the independent variable, consumer choice and choice satisfaction as dependent variables, and participants’ expertise as a covariate. As shown in Figure 3, after controlling for expertise, sound cues had a significant effect on consumer choice (Mpresence = 5.89, SD = 1.16 vs. Mcontrol = 3.96, SD = 1.42; F(1, 153) = 65.53, p < 0.001, η² = 0.52). Choice satisfaction was also significantly higher in the sound cues group compared to the control group (Mpresence = 5.60, SD = 1.28 vs. Mcontrol = 4.33, SD = 1.21; F(1, 153) = 21.76, p < 0.001, η² = 0.55). In both models, the covariate expertise did not have a significant effect on the dependent variables (p > 0.05). This result is consistent with Study 1, indicating that the effect of sound cues does not change due to differences in users’ professional proficiency. Thus, the results provide further support for Hypothesis 1.

Figure 3.

Impact of sound cues on choice and choice satisfaction. (Notes: * p < 0.05, ** p < 0.01, *** p < 0.001).

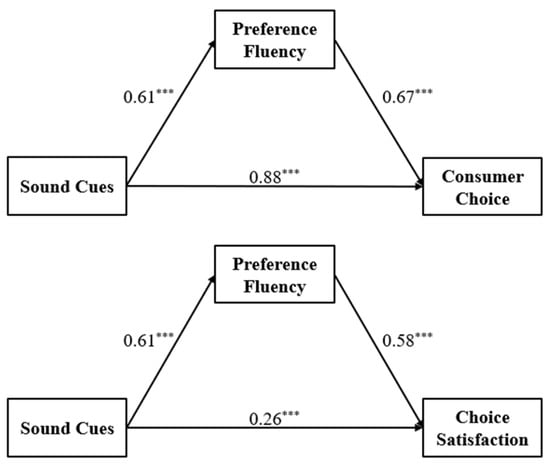

5.3.2. Mediation Effect Analysis

To test the mediating role of preference fluency between sound cues and decision outcomes, we first analyzed the effect of sound cues on preference fluency. The results showed a significant effect of sound cues on preference fluency (Mpresence = 4.98, SD = 1.31 vs. Mcontrol = 3.86, SD = 1.46; F(1, 195) = 42.78, p < 0.001, η² = 0.15). Subsequently, a mediation analysis was conducted using 5000 bootstrapped samples (PROCESS, Model 4) [78]. The results indicated that sound cues influenced consumer choice and choice satisfaction through preference fluency (β = 0.61; BootSE = 0.14; CI95% = [0.34, 0.89]). Given the significant direct effect, preference fluency partially mediated the impact of sound cues on consumer choice and choice satisfaction, as illustrated in Figure 4. These findings collectively supported Hypothesis 2.

Figure 4.

Mediation effect on choice and choice satisfaction. (Notes: * p < 0.05, ** p < 0.01, *** p < 0.001).
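The logic of the bootstrapped indirect effect can be sketched as follows. This is a simplified stand-in for the simple-mediation analysis, not the PROCESS macro itself: it estimates the a path (mediator regressed on condition) and the b path (outcome regressed on condition and mediator) by ordinary least squares, omits covariates, and builds a percentile confidence interval. The data are synthetic, and the function names and effect sizes are assumptions chosen for illustration only.

```python
import random


def _indirect(x, m, y):
    """a*b indirect effect: a from M ~ X, b from Y ~ X + M (closed-form OLS)."""
    n = len(x)
    mx, mm, my = sum(x) / n, sum(m) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    smm = sum((mi - mm) ** 2 for mi in m)
    sxm = sum((xi - mx) * (mi - mm) for xi, mi in zip(x, m))
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    smy = sum((mi - mm) * (yi - my) for mi, yi in zip(m, y))
    a = sxm / sxx                                             # X -> M
    b = (sxx * smy - sxm * sxy) / (sxx * smm - sxm ** 2)      # M -> Y, controlling for X
    return a * b


def bootstrap_indirect(x, m, y, n_boot=5000, seed=0):
    """Return (point estimate, lower, upper) with a 95% percentile bootstrap CI."""
    rng = random.Random(seed)
    n = len(x)
    estimates = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]            # resample cases with replacement
        estimates.append(_indirect([x[i] for i in idx],
                                   [m[i] for i in idx],
                                   [y[i] for i in idx]))
    estimates.sort()
    lower = estimates[int(0.025 * n_boot)]
    upper = estimates[int(0.975 * n_boot) - 1]
    return _indirect(x, m, y), lower, upper


# Synthetic data (hypothetical): 0 = control, 1 = sound cue.
gen = random.Random(42)
x = [i % 2 for i in range(200)]
m = [2.0 * xi + gen.gauss(0, 1) for xi in x]                  # "fluency"
y = [1.0 * mi + 0.5 * xi + gen.gauss(0, 1) for xi, mi in zip(x, m)]  # "choice"
print(bootstrap_indirect(x, m, y, n_boot=2000))
```

Mediation is inferred when the bootstrap interval for a*b excludes zero, which is the criterion applied to the interval reported above.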

5.3.3. Moderator Effect Analysis

A bootstrap method was used to test the moderating effect of AI literacy (PROCESS, Model 7) [78]. In this model, sound cues served as the independent variable, decision outcomes as the dependent variable, preference fluency as the mediator, and AI literacy as the moderator. The results indicated that the interaction between sound cues and AI literacy did not significantly affect preference fluency (β = 0.05; BootSE = 0.08; CI95% = [−0.11, 0.21]; p = 0.54). Examination of the conditional indirect effects showed that the indirect effect increased slightly at higher levels of AI literacy (−1 SD: β = 0.13, BootSE = 0.16, CI95% = [−0.20, 0.43]; M: β = 0.17, BootSE = 0.10, CI95% = [−0.02, 0.38]; +1 SD: β = 0.22, BootSE = 0.12, CI95% = [0.15, 0.48]). Although the impact of sound cues on consumers’ choices and choice satisfaction differs slightly across levels of AI literacy, the non-significant interaction indicates that AI literacy does not significantly moderate this impact. Thus, Hypothesis 3 was not supported.
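In a first-stage moderated mediation model of this kind, the conditional indirect effect at moderator value W is (a1 + a3·W)·b, where a1 is the effect of the condition on the mediator, a3 is the condition × moderator interaction, and b is the effect of the mediator on the outcome. The sketch below evaluates that expression at the mean and ±1 SD of a mean-centered moderator. All coefficient values are hypothetical placeholders, not the study’s estimates, and the function names are ours.

```python
def conditional_indirect(a1: float, a3: float, b: float, w: float) -> float:
    """Indirect effect of X on Y through M at moderator value w."""
    return (a1 + a3 * w) * b


def index_of_moderated_mediation(a3: float, b: float) -> float:
    """Change in the indirect effect per one-unit increase in the moderator."""
    return a3 * b


# Hypothetical coefficients and a mean-centered moderator with SD = 1.
a1, a3, b = 0.40, 0.05, 0.50
w_sd = 1.0
for label, w in (("-1 SD", -w_sd), ("Mean", 0.0), ("+1 SD", w_sd)):
    print(label, round(conditional_indirect(a1, a3, b, w), 3))
print("Index:", round(index_of_moderated_mediation(a3, b), 3))
```

When the interaction coefficient a3 is close to zero, the three conditional effects are nearly identical, which corresponds to the absence of moderation reported above.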

5.4. Discussion

Study 2 focused on the mediating and moderating mechanisms of sound cues in the user decision-making process. The results demonstrated that sound cues significantly enhanced participants’ consumer choice behavior and choice satisfaction, thereby providing further support for Hypothesis 1. Subsequent analyses revealed that sound cues partially influenced these outcomes through the enhancement of preference fluency, confirming Hypothesis 2.

However, Hypothesis 3, which proposed that AI literacy would moderate the effect of sound cues on preference fluency, was not statistically supported: no significant moderating effect was observed. This finding suggests that in the current interaction context, non-linguistic auditory features of sound cues may influence cognitive processing in an automatic manner, such that even users with higher AI literacy find it difficult to fully filter out the psychological effects of these non-linguistic sensory inputs. This implies that the influence of non-linguistic sound cues may generalize across individual differences, with their impact pathway being less susceptible to modulation by cognitive ability or knowledge background. Overall, Study 2 validated the pathway through which sound cues affect user decision-making via preference fluency, while revealing the limited moderating role of AI literacy.

6. General Discussion

6.1. Summary and Interpretation of Key Findings

Using perceptual contagion theory as its core framework, this study explores the psychological mechanisms and behavioral outcomes of nonverbal sound cues in AI recommendation systems. Unlike existing research that mainly focuses on salient cues such as interface language and recommendation transparency [20,59], this paper proposes a pathway to optimize users’ psychological experiences through sensory inputs, highlighting the perceptual basis for the transformation of AI systems from mere functional tools to experiential platforms. This interdisciplinary perspective not only extends sensory marketing to digital contexts but also brings a user-experience-centered sensory strategy into the design philosophy of AI recommendation systems. Through two controlled experiments, the study empirically tests three core research questions, aiming to provide theoretical support and practical insights for the interactive design of intelligent recommendation systems.

First, this study introduces perceptual contagion theory into the field of human–computer interaction. The results of Study 1 demonstrate that, compared with recommendation interfaces without auditory prompts, AI recommendation systems incorporating sound cues based on non-linguistic auditory features significantly increase consumer choice and choice satisfaction. This finding supports the notion that sensory inputs influence AI interactions not only through informational content but also via subtle guidance from the external sensory environment [79]. In contrast to embodied cognition theory [8], which emphasizes effects arising from bodily sensations, the sound cues in our study still influenced consumer preferences despite being completely independent of any physical association. Furthermore, these cues contained no distinctive semantic or symbolic content, yet they elicited positive responses to AI recommendations. Together, these results imply that, in AI-mediated contexts, perceptual contagion operates through automatic cognitive penetration rather than bodily simulation or sound symbolism, underscoring its potential as a broadly generalizable mechanism.

Second, sound cues affect choice and satisfaction indirectly, by enhancing preference fluency. Study 2 further reveals that sound cues reshape users’ subjective experience of the recommendation process in a contextualized and perceptual manner, facilitating faster formation of preference judgments and behavioral decisions. The findings suggest that subtle sensory inputs can activate fluency mechanisms within the user’s information processing system without being explicitly noticed, thus triggering a feeling of “rightness” [63], which in turn increases both acceptance of and satisfaction with the recommendation. This result expands the explanatory scope of perceptual contagion theory. Prior research has primarily focused on the direct transmission of affective cues, such as pleasure [80], warmth [81], or anger [82], through emotional projection or mimicry [83,84]. In contrast, the present study proposes a more cognitively grounded form of indirect contagion: sound cues enhance the fluency of information processing, leading users to form more favorable perceptions of the recommendation system and thereby eliciting clearer and quicker preference formation. This adds a new cognitive dimension to perceptual contagion theory, indicating that contagion does not necessarily require strong emotional or semantic content; its subtle influence can be realized through perceived fluency in cognitive processing.

Finally, this study found no significant moderating effect of AI literacy. It is generally believed that users with high AI literacy possess stronger abilities in information filtering and rational control [36], and thus are less likely to be influenced by sensory cues. In the interaction context established in this study, however, sound cues may have entered users’ cognitive processing pathways automatically, thereby exhibiting a generalizable effect across different levels of cognitive ability. Even when users have superior skills in recognizing and processing information, sensory inputs can still permeate their cognitive systems and exert influence. This counterintuitive finding extends the applicability of perceptual contagion theory to AI-mediated contexts and offers theoretical insight into how sensory inputs may bypass individual difference variables. It also prompts the human–AI interaction field to reconsider how technological literacy is conceptualized and evaluated.
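The indirect pathway summarized in this subsection (sound cue → preference fluency → choice satisfaction) is the kind of effect that is commonly quantified as a product of two regression coefficients with a bootstrap confidence interval. The following Python sketch illustrates that logic under stated assumptions; it is not the authors' analysis code. The helper names (`coefs`, `indirect_effect`, `bootstrap_ci`), the percentile bootstrap, the number of resamples, and the simulated data are all illustrative choices.

```python
import numpy as np

def coefs(predictors, y):
    """OLS coefficients for y on an intercept plus the given predictor columns."""
    X = np.column_stack([np.ones(len(y))] + list(predictors))
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    return beta

def indirect_effect(cue, fluency, satisfaction):
    """Product-of-coefficients estimate a * b of the mediated effect."""
    a = coefs([cue], fluency)[1]                 # path a: cue -> fluency
    b = coefs([cue, fluency], satisfaction)[2]   # path b: fluency -> outcome, controlling for cue
    return a * b

def bootstrap_ci(cue, fluency, satisfaction, n_boot=2000, seed=0):
    """95% percentile bootstrap interval for the indirect effect."""
    rng = np.random.default_rng(seed)
    n = len(cue)
    estimates = np.empty(n_boot)
    for i in range(n_boot):
        idx = rng.integers(0, n, size=n)         # resample participants with replacement
        estimates[i] = indirect_effect(cue[idx], fluency[idx], satisfaction[idx])
    lower, upper = np.percentile(estimates, [2.5, 97.5])
    return lower, upper

# Simulated data (an assumption for illustration, NOT the study's data).
rng = np.random.default_rng(1)
n = 155
cue = rng.integers(0, 2, size=n).astype(float)   # 0 = no sound, 1 = sound cue
fluency = 4.0 + 0.6 * cue + rng.normal(0.0, 1.0, size=n)
satisfaction = 3.0 + 0.5 * fluency + rng.normal(0.0, 1.0, size=n)

point = indirect_effect(cue, fluency, satisfaction)
ci = bootstrap_ci(cue, fluency, satisfaction)
print(point, ci)
```

In this framing, a bootstrap interval that excludes zero is read as evidence of mediation; resampling is used because the sampling distribution of a product of coefficients is generally not normal.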

6.2. Theoretical Contributions and Implications

This study offers a systematic theoretical foundation for the application of AI technologies in digital marketing contexts. First, this study expands the applicable boundary of perceptual contagion theory in the field of human–computer interaction. Existing research has mostly focused on linguistic auditory features [23] or has been limited to acoustic attributes directly related to products [35], while systematic investigations into non-linguistic sounds with no clear semantics remain scarce. At the same time, prior studies on non-linguistic sounds have mainly centered on traditional consumption contexts, such as dining or retail environments [30,33], with little attention paid to the digital interaction context of AI recommendation. By introducing sound cues into AI recommendation system experiments, this study verifies their positive effects on choice intention and satisfaction, thereby responding to the call by Anglada-Tort et al. (2022) [34] that “non-semantic sounds need to be validated in more decision-making scenarios.” Accordingly, this study not only fills the research gap concerning non-linguistic auditory features in AI contexts but also extends perceptual contagion theory from traditional product or environmental cues to digital scenarios.

Second, this study refines the cognitive pathway explanation of perceptual contagion theory. Previous research on the mechanisms of sound cues has largely emphasized emotional mediation [30,31], while giving insufficient attention to cognitive mediators. Even when cognitive mechanisms were considered, they often remained at a general level [65] without focusing on the critical stage of preference formation. Although Schindler et al. (2024) [63] highlighted the link between preference fluency and choice satisfaction, they did not examine its connection with non-linguistic sound cues. Through experimental evidence, this study demonstrates that preference fluency serves as the core mediator in the effect of sound cues on decision-making: sound enhances individuals’ psychological ease in forming preferences and making choices, thereby indirectly improving decision outcomes. This finding responds to the call by Novemsky et al. (2007) [71] for more evidence on the external triggers of preference fluency and supplements the explanatory power of perceptual contagion theory from a cognitive perspective.

Third, this study revisits the moderating hypothesis regarding AI literacy in the context of sensory cue effects. Previous research has suggested that individuals with high AI literacy are better able to rationally filter peripheral cues [36,44]. However, our findings indicate that AI literacy does not significantly moderate the pathway through which auditory cues influence decision outcomes, suggesting that such auditory effects are automatic and difficult to filter even for highly literate individuals. This result not only fills the empirical gap in understanding the interaction between AI literacy and sensory cues but also challenges the mainstream assumption that “higher literacy weakens the effect of peripheral cues.” It further responds to the speculation by Hermann et al. (2024) [85] that “sensory cues may bypass cognitive control.” At the same time, this study demonstrates that perceptual contagion effects hold across individuals in digital interactions, thereby extending the theoretical boundaries of the concept and offering practical implications for recommendation system design.

6.3. Implications for Practice

This study offers innovative and practical implications for the design of AI recommendation systems and digital marketing practices. First, the study highlights that optimizing digital interfaces should go beyond merely improving the accuracy of recommendation content, shifting instead toward enhancing user experience. Our findings demonstrate that sound cues, as part of a “micro-sensory design” within the interface, can reinforce the sense of interactional fluency. Compared to more complex or highly anthropomorphic system interactions, non-verbal sound cues provide a “low-disruption, high-context adaptability” advantage, making them suitable for a wide range of AI recommendation scenarios. Notably, while non-verbal sound cues show promise, their effectiveness may be constrained by specific contextual factors. Additionally, while the present study’s sound cues were designed to be culturally neutral, culturally specific sound associations could introduce unintended effects: for example, a frequency modulation that is perceived as neutral in one culture might carry negative connotations in another, potentially triggering adverse user responses. Thus, when deploying sound cues across global or culturally diverse user groups, pre-testing for cultural compatibility remains necessary to avoid counterproductive outcomes.

Second, the study further reveals that the effectiveness of the non-linguistic auditory features of sound cues is not moderated by users’ levels of AI literacy. This finding carries important implications for real-world system deployment: interface designers can implement a unified, standardized sound-cue strategy without differentiated adaptation based on users’ technological proficiency, thereby reducing design complexity and development costs. Such a design alleviates the adaptation burden caused by individual cognitive differences and enhances the stability and sustainability of recommendation systems across diverse user environments.

6.4. Limitations and Future Research Directions

This study has several limitations. First, it focuses on a single type of non-linguistic sound cue and on immediate decision-making contexts. Future research could improve the design by considering variability in audio delivery, long-term effects, and alternative mediators. Second, although we recruited a diverse sample of ordinary consumers online, online data collection has limitations, including self-selection bias, differences in testing environments, and variations in attention or engagement. Future research could combine online studies with controlled laboratory experiments or field research to improve validity. Finally, user differences such as AI literacy may interact with culturally specific sound symbolism, and cross-modal designs could offer a more comprehensive understanding.

Author Contributions

Conceptualization, Validation and Methodology, X.Y.; Formal Analysis, Data Curation and Writing—Original Draft Preparation, Q.G.; Writing—Review & Editing and Supervision, X.Y. and X.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the 2024 Liaoning University of Technology Doctoral Research Startup Fund (Project No.: XB2024012).

Institutional Review Board Statement

This research obtained ethical approval from Liaoning University of Technology (Approval No.: 20250630).

Informed Consent Statement

Informed consent was obtained from all participants involved in the study.

Data Availability Statement

The data that support the findings of this study are available from the corresponding author upon reasonable request.

Conflicts of Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Appendix A

All experimental protocols were registered at (https://os.psych.ac.cn/preregisterdetail/202507.00014, accessed on 2 September 2025). These materials are available on the Open Science Framework and ensure the transparency and reproducibility of the research process.

References

1. Belanche, D.; Casaló, L.V.; Flavián, C. Frontline Robots in Tourism and Hospitality: Service Enhancement or Cost Reduction? Electron. Mark. 2021, 31, 477–492.

2. Mengucci, C.; Ferranti, P.; Romano, A.; Masi, P.; Picone, G.; Capozzi, F. Food Structure, Function and Artificial Intelligence. Trends Food Sci. Technol. 2022, 123, 251–263.

3. Zhang, Q.; Lu, J.; Jin, Y. Artificial Intelligence in Recommender Systems. Complex Intell. Syst. 2021, 7, 439–457.

4. Chi, O.H.; Chi, C.G.; Gursoy, D.; Nunkoo, R. Customers’ Acceptance of Artificially Intelligent Service Robots: The Influence of Trust and Culture. Int. J. Inf. Manag. 2023, 70, 102623–102638.

5. Hoyer, W.; Kroschke, M.; Schmitt, B.; Kraume, K.; Shankar, V. Transforming the Customer Experience through New Technologies. J. Interact. Mark. 2020, 51, 57–71.

6. Gupta, S.; Modgil, S.; Bhattacharyya, S.; Bose, I. Artificial Intelligence for Decision Support Systems in the Field of Operations Research: Review and Future Scope of Research. Ann. Oper. Res. 2022, 308, 215–274.

7. Petit, O.; Cheok, A.D.; Spence, C.; Velasco, C.; Karunanayaka, K.T. Sensory Marketing in Light of New Technologies. In Proceedings of the 12th International Conference on Advances in Computer Entertainment Technology, Iskandar Malaysia, 16 November 2015; pp. 1–4.

8. Petit, O.; Velasco, C.; Spence, C. Digital Sensory Marketing: Integrating New Technologies into Multisensory Online Experience. J. Interact. Mark. 2019, 45, 42–61.

9. Krishna, A. An Integrative Review of Sensory Marketing: Engaging the Senses to Affect Perception, Judgment and Behavior. J. Consum. Psychol. 2012, 22, 332–351.

10. Einola, K.; Khoreva, V.; Tienari, J. A Colleague Named Max: A Critical Inquiry into Affects When an Anthropomorphised AI (Ro)Bot Enters the Workplace. Hum. Relat. 2023, 77, 1620–1649.

11. Elyoseph, Z.; Refoua, E.; Asraf, K.; Lvovsky, M.; Shimoni, Y.; Hadar-Shoval, D. Capacity of Generative AI to Interpret Human Emotions from Visual and Textual Data: Pilot Evaluation Study. JMIR Ment. Health 2024, 11, e54369.

12. Zhou, X.; Yan, X.; Jiang, Y. Making Sense? The Sensory-Specific Nature of Virtual Influencer Effectiveness. J. Mark. 2024, 88, 84–106.

13. Bergner, A.; Hildebrand, C.; Häubl, G. Machine Talk: How Verbal Embodiment in Conversational AI Shapes Consumer-Brand Relationships. J. Consum. Res. 2023, 50, 742–764.

14. Han, E.; Yin, D.; Zhang, H. Bots with Feelings: Should AI Agents Express Positive Emotion in Customer Service? Inf. Syst. Res. 2023, 34, 1296–1311.

15. Schuetzler, R.; Grimes, G.; Giboney, J. The Impact of Chatbot Conversational Skill on Engagement and Perceived Humanness. J. Manag. Inf. Syst. 2020, 37, 875–900.

16. Tsekouras, D.; Gutt, D.; Heimbach, I. The Robo Bias in Conversational Reviews: How the Solicitation Medium Anthropomorphism Affects Product Rating Valence and Review Helpfulness. J. Acad. Mark. Sci. 2024, 52, 1651–1672.

17. Hampton, W.H.; Hildebrand, C. Haptic Rewards: How Mobile Vibrations Shape Reward Response and Consumer Choice. J. Consum. Res. 2025, ucaf025.

18. Wu, J.; Zhu, Y.; Fang, X.; Banerjee, P. Touch or Click? The Effect of Direct and Indirect Human-Computer Interaction on Consumer Responses. J. Mark. Theory Pract. 2024, 32, 158–173.

19. Wiener, H.J.D.; Chartrand, T.L. The Effect of Voice Quality on Ad Efficacy. Psychol. Mark. 2014, 31, 509–517.

20. Barcelos, R.H.; Dantas, D.C.; Sénécal, S. Watch Your Tone: How a Brand’s Tone of Voice on Social Media Influences Consumer Responses. J. Interact. Mark. 2018, 41, 60–80.

21. Javornik, A.; Filieri, R.; Gumann, R. “Don’t Forget That Others Are Watching, Too!” The Effect of Conversational Human Voice and Reply Length on Observers’ Perceptions of Complaint Handling in Social Media. J. Interact. Mark. 2020, 50, 100–119.

22. Krestar, M.L.; McLennan, C.T. Examining the Effects of Variation in Emotional Tone of Voice on Spoken Word Recognition. Q. J. Exp. Psychol. 2013, 66, 1793–1802.

23. Huo, J.; Gong, L.; Xi, Y.; Chen, Y.; Chen, D.; Yang, Q. Mind the Voice! The Effect of Service Robot Voice Vividness on Service Failure Tolerance. J. Travel Tour. Mark. 2025, 42, 1–19.

24. Xu, X.; Liu, J. Artificial Intelligence Humor in Service Recovery. Ann. Tour. Res. 2022, 95, 103439.

25. Rozin, P.; Millman, L.; Nemeroff, C. Operation of the Laws of Sympathetic Magic in Disgust and Other Domains. J. Pers. Soc. Psychol. 1986, 50, 703–712.

26. Li, Y.; Heuvinck, N.; Pandelaere, M.; Park, Y.; Lee, D.; Park, S.; Moon, J.; Li, Y.; Heuvinck, N.; Pandelaere, M.; et al. Make It Hot? How Food Temperature (Mis)Guides Product Judgments. J. Consum. Res. 2020, 47, 523–543.

27. Haws, K.L.; Reczek, R.W.; Sample, K.L.; Paul, I.; Mohanty, S.; Parker, J.R. The Impact of Green Energy Production on Healthiness Perceptions and Preferences. J. Consum. Res. 2025, ucaf024.

28. Labrecque, L.I.; Sohn, S.; Seegebarth, B.; Ashley, C. Color Me Effective: The Impact of Color Saturation on Perceptions of Potency and Product Efficacy. J. Mark. 2025, 89, 120–139.

29. Buechel, E.; Townsend, C. Buying Beauty for the Long Run: (Mis)Predicting Liking of Product Aesthetics. J. Consum. Res. 2018, 45, 275–297.

30. Motoki, K.; Takahashi, N.; Velasco, C.; Spence, C. Is Classical Music Sweeter than Jazz? Crossmodal Influences of Background Music and Taste/Flavour on Healthy and Indulgent Food Preferences. Food Qual. Prefer. 2022, 96, 104380.

31. Peng-Li, D.; Andersen, T.; Finlayson, G.; Byrne, D.; Wang, Q. The Impact of Environmental Sounds on Food Reward. Physiol. Behav. 2022, 245, 113689.

32. Huang, X.; Labroo, A. Cueing Morality: The Effect of High-Pitched Music on Healthy Choice. J. Mark. 2020, 84, 130–143.

33. Yang, S.; Chang, X.; Chen, S.; Lin, S.; Ross, W. Does Music Really Work? The Two-Stage Audiovisual Cross-Modal Correspondence Effect on Consumers’ Shopping Behavior. Mark. Lett. 2022, 33, 251–276.

34. Anglada-Tort, M.; Schofield, K.; Trahan, T.; Müllensiefen, D. I’ve Heard That Brand before: The Role of Music Recognition on Consumer Choice. Int. J. Advert. 2022, 41, 1567–1587.

35. Efthymiou, F.; Hildebrand, C.; de Bellis, E.; Hampton, W. The Power of AI-Generated Voices: How Digital Vocal Tract Length Shapes Product Congruency and Ad Performance. J. Interact. Mark. 2024, 59, 117–134.

36. Tully, S.; Longoni, C.; Appel, G. Lower Artificial Intelligence Literacy Predicts Greater AI Receptivity. J. Mark. 2025, 89, 1–20.

37. Horowitz, S.S. The Universal Sense: How Hearing Shapes the Mind; Bloomsbury Publishing: New York, NY, USA, 2012; ISBN 978-1-60819-090-4.

38. Hildebrand, C.; Efthymiou, F.; Busquet, F.; Hampton, W.; Hoffman, D.; Novak, T. Voice Analytics in Business Research: Conceptual Foundations, Acoustic Feature Extraction, and Applications. J. Bus. Res. 2020, 121, 364–374.

39. Bruder, C.; Poeppel, D.; Larrouy-Maestri, P. Perceptual (but Not Acoustic) Features Predict Singing Voice Preferences. Sci. Rep. 2024, 14, 1–15.

40. Daunfeldt, S.; Moradi, J.; Rudholm, N.; Öberg, C. Effects of Employees’ Opportunities to Influence in-Store Music on Sales: Evidence from a Field Experiment. J. Retail. Consum. Serv. 2021, 59, 102417.

41. Douce, L.; Adams, C.; Petit, O.; Nijholt, A. Crossmodal Congruency between Background Music and the Online Store Environment: The Moderating Role of Shopping Goals. Front. Psychol. 2022, 13, 883920.

42. Feng, S.; Suri, R.; Bell, M. Does Classical Music Relieve Math Anxiety? Role of Tempo on Price Computation Avoidance. Psychol. Mark. 2014, 31, 489–499.

43. Peng-Li, D.; Chan, R.C.K.; Byrne, D.V.; Wang, Q.J. The Effects of Ethnically Congruent Music on Eye Movements and Food Choice-a Cross-Cultural Comparison between Danish and Chinese Consumers. Foods 2020, 9, 1109.

44. Guha, A.; Bressgott, T.; Grewal, D.; Mahr, D.; Wetzels, M.; Schweiger, E. How Artificiality and Intelligence Affect Voice Assistant Evaluations. J. Acad. Mark. Sci. 2023, 51, 843–866.

45. Natale, S.; Cooke, H. Browsing with Alexa: Interrogating the Impact of Voice Assistants as Web Interfaces. Media Cult. Soc. 2021, 43, 1000–1016.

46. Efthymiou, F.; Hildebrand, C. Empathy by Design: The Influence of Trembling AI Voices on Prosocial Behavior. IEEE Trans. Affect. Comput. 2024, 15, 1253–1263.

47. Zierau, N.; Hildebrand, C.; Bergner, A.; Busquet, F.; Schmitt, A.; Leimeister, J. Voice Bots on the Frontline: Voice-Based Interfaces Enhance Flow-like Consumer Experiences & Boost Service Outcomes. J. Acad. Mark. Sci. 2023, 51, 823–842.

48. Liu, Y.; Ye, T.; Yu, C. Virtual Voices in Hospitality: Assessing Narrative Styles of Digital Influencers in Hotel Advertising. J. Hosp. Tour. Manag. 2024, 61, 281–298.

49. Peng-Li, D.; Mathiesen, S.; Chan, R.; Byrne, D.; Wang, Q. Sounds Healthy: Modelling Sound-Evoked Consumer Food Choice through Visual Attention. Appetite 2021, 164, 105264.

50. Van Zant, A.; Berger, J. How the Voice Persuades. J. Pers. Soc. Psychol. 2020, 118, 661–682.

51. Ketron, S.; Spears, N. Sounds like a Heuristic! Investigating the Effect of Sound-Symbolic Correspondences between Store Names and Sizes on Consumer Willingness-to-Pay. J. Retail. Consum. Serv. 2019, 51, 285–292.

52. Ziv, N. Musical Flavor: The Effect of Background Music and Presentation Order on Taste. Eur. J. Mark. 2018, 52, 1485–1504.

53. Spendrup, S.; Hunter, E.; Isgren, E. Exploring the Relationship between Nature Sounds, Connectedness to Nature, Mood and Willingness to Buy Sustainable Food: A Retail Field Experiment. Appetite 2016, 100, 133–141.

54. North, A.; Sheridan, L.; Areni, C. Music Congruity Effects on Product Memory, Perception, and Choice. J. Retail. 2016, 92, 83–95.

55. Vermeulen, I.; Beukeboom, C. Effects of Music in Advertising: Three Experiments Replicating Single-Exposure Musical Conditioning of Consumer Choice (Gorn 1982) in an Individual Setting. J. Advert. 2016, 45, 53–61.

56. Brodsky, W. Developing a Functional Method to Apply Music in Branding: Design Language-Generated Music. Psychol. Music 2011, 39, 261–283.

57. Redker, C.; Gibson, B. Music as an Unconditioned Stimulus: Positive and Negative Effects of Country Music on Implicit Attitudes, Explicit Attitudes, and Brand Choice. J. Appl. Soc. Psychol. 2009, 39, 2689–2705.

58. Hahn, M.; Hwang, I. Effects of Tempo and Familiarity of Background Music on Message Processing in TV Advertising: A Resource-Matching Perspective. Psychol. Mark. 1999, 16, 659–675.

59. Bauer, K.; von Zahn, M.; Hinz, O. Expl(AI)Ned: The Impact of Explainable Artificial Intelligence on Users’ Information Processing. Inf. Syst. Res. 2023, 34, 1582–1602.

60. Meng, L.M.; Duan, S.; Zhao, Y.; Lu, K.; Chen, S. The Impact of Online Celebrity in Livestreaming E-Commerce on Purchase Intention from the Perspective of Emotional Contagion. J. Retail. Consum. Serv. 2021, 63, 102733.

61. Galmarini, M.; Paz, R.; Choquehuanca, D.; Zamora, M.; Mesz, B. Impact of Music on the Dynamic Perception of Coffee and Evoked Emotions Evaluated by Temporal Dominance of Sensations (TDS) and Emotions (TDE). Food Res. Int. 2021, 150, 110795.

62. Morales, A.C.; Fitzsimons, G.J. Product Contagion: Changing Consumer Evaluations through Physical Contact with “Disgusting” Products. J. Mark. Res. 2007, 44, 272–283.

63. Schindler, D.; Maiberger, T.; Koschate-Fischer, N.; Hoyer, W. How Speaking versus Writing to Conversational Agents Shapes Consumers’ Choice and Choice Satisfaction. J. Acad. Mark. Sci. 2024, 52, 634–652.

64. Humphreys, A.; Isaac, M.S.; Wang, R.J.-H. Construal Matching in Online Search: Applying Text Analysis to Illuminate the Consumer Decision Journey. J. Mark. Res. 2021, 58, 1101–1119.

65. Reber, R.; Schwarz, N.; Winkielman, P. Processing Fluency and Aesthetic Pleasure: Is Beauty in the Perceiver’s Processing Experience? Pers. Soc. Psychol. Rev. 2004, 8, 364–382.

66. Mosteller, J.; Donthu, N.; Eroglu, S. The Fluent Online Shopping Experience. J. Bus. Res. 2014, 67, 2486–2493.

67. Nielsen, J.; Escalas, J. Easier Is Not Always Better: The Moderating Role of Processing Type on Preference Fluency. J. Consum. Psychol. 2010, 20, 295–305.

68. Shapiro, S.; Nielsen, J. What the Blind Eye Sees: Incidental Change Detection as a Source of Perceptual Fluency. J. Consum. Res. 2013, 39, 1202–1218.

69. Mungan, E.; Akan, M.; Bilge, M. Tracking Familiarity, Recognition, and Liking Increases with Repeated Exposures to Nontonal Music: Revisiting MEE-Revisited. New Ideas Psychol. 2019, 54, 63–75.

70. Janiszewski, C.; Kuo, A.; Tavassoli, N. The Influence of Selective Attention and Inattention to Products on Subsequent Choice. J. Consum. Res. 2013, 39, 1258–1274.

71. Novemsky, N.; Dhar, R.; Schwarz, N.; Simonson, I. Preference Fluency in Choice. J. Mark. Res. 2007, 44, 347–356.

72. Seaborn, K.; Miyake, N.; Pennefather, P.; Otake-Matsuura, M. Voice in Human-Agent Interaction: A Survey. ACM Comput. Surv. 2021, 54, 1–43.

73. Wang, B.; Rau, P.-L.P.; Yuan, T. Measuring User Competence in Using Artificial Intelligence: Validity and Reliability of Artificial Intelligence Literacy Scale. Behav. Inf. Technol. 2023, 42, 1324–1337.

74. Faul, F.; Erdfelder, E.; Lang, A.-G.; Buchner, A. G*power 3: A Flexible Statistical Power Analysis Program for the Social, Behavioral, and Biomedical Sciences. Behav. Res. Methods 2007, 39, 175–191.

75. Zhang, S.; Fitzsimons, G.J. Choice-Process Satisfaction: The Influence of Attribute Alignability and Option Limitation. Organ. Behav. Hum. Decis. Process. 1999, 77, 192–214.

76. Chinchanachokchai, S.; Thontirawong, P.; Chinchanachokchai, P. A Tale of Two Recommender Systems: The Moderating Role of Consumer Expertise on Artificial Intelligence Based Product Recommendations. J. Retail. Consum. Serv. 2021, 61, 102528.

77. Mitchell, A.A.; Dacin, P.A. The Assessment of Alternative Measures of Consumer Expertise. J. Consum. Res. 1996, 23, 219.

78. Hayes, A.F. Introduction to Mediation, Moderation, and Conditional Process Analysis: A Regression-Based Approach, 2nd ed.; Guilford Publications: New York, NY, USA, 2017; ISBN 978-1-4625-3466-1.

79. Laukkanen, T.; Xi, N.; Hallikainen, H.; Ruusunen, N.; Hamari, J. Virtual Technologies in Supporting Sustainable Consumption: From a Single-Sensory Stimulus to a Multi-Sensory Experience. Int. J. Inf. Manag. 2022, 63, 102455.

80. Gelbrich, K.; Hagel, J.; Orsingher, C. Emotional Support from a Digital Assistant in Technology-Mediated Services: Effects on Customer Satisfaction and Behavioral Persistence. Int. J. Res. Mark. 2021, 38, 176–193.

81. Yu, S.; Zhao, L. Emojifying Chatbot Interactions: An Exploration of Emoji Utilization in Human-Chatbot Communications. Telemat. Inform. 2024, 86, 102071–102083.

82. Crolic, C.; Thomaz, F.; Hadi, R.; Stephen, A.T. Blame the Bot: Anthropomorphism and Anger in Customer–Chatbot Interactions. J. Mark. 2022, 86, 132–148.

83. Zhang, J.; Chen, Q.; Lu, J.; Wang, X.; Liu, L.; Feng, Y. Emotional Expression by Artificial Intelligence Chatbots to Improve Customer Satisfaction: Underlying Mechanism and Boundary Conditions. Tour. Manag. 2024, 100, 104835–104854.

84. Zhang, J.; Wang, X.; Lu, J.; Liu, L.; Feng, Y. The Impact of Emotional Expression by Artificial Intelligence Recommendation Chatbots on Perceived Humanness and Social Interactivity. Decis. Support Syst. 2024, 187, 114347–114358.

85. Hermann, E.; Williams, G.Y.; Puntoni, S. Deploying Artificial Intelligence in Services to AID Vulnerable Consumers. J. Acad. Mark. Sci. 2024, 52, 1431–1451.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).