Abstract

In the sentiment analysis of online comments, all comments are generally considered as a whole, with little attention paid to the inevitable emotional fluctuations in comments caused by changes in product value. In this study, we analyzed the online comments related to apple sales on Chinese e-commerce platforms, and combined topic models, sentiment analysis, and transfer learning to investigate the impact of product value on emotional fluctuations in online comments. We found that as product value changes, the sentiment of online comments undergoes significant fluctuations. Among the prominent negative sentiments, the proportion of topics influenced by product value significantly increases as product value decreases. This study reveals the correlation between changes in product value and sentiment fluctuations in online comments, and demonstrates the necessity of classifying online comments based on product value as an indicator. This study offers a novel perspective for enhancing sentiment analysis by incorporating product value dynamics.

1. Introduction

With the advancement of technology, the online shopping industry has experienced rapid growth. Online shopping has become a significant component of the modern economy. However, when engaging in online shopping, consumers cannot physically interact with products, and thus, purchasing decisions based solely on the information provided by online stores are difficult [1,2]. As a result, online comments from other consumers have become a crucial reference for online purchasing decisions [3,4,5]. Research on online comments has thus emerged as an important topic for promoting industrial development.

Current research on online comments primarily focuses on two aspects: comment usefulness and sentiment analysis. Comment usefulness, or comment helpfulness, is defined as the evaluation of a product that facilitates the consumer decision-making process [6]. Studies on comment usefulness help reduce consumer uncertainty and promote rational decision-making [7,8]. The primary goal of sentiment analysis in online comments is to help businesses quickly understand consumer feedback to improve their products [9,10]. With the widespread application of sentiment analysis, it has become a current research hotspot [11,12]. Researchers have applied sentiment analysis methods to various domains, including public opinion monitoring [13,14], consumer behavior [15,16], municipal management [17], financial market forecasting [18], and sarcasm detection [19]. Related to our study, Liu et al. focused on product quality features in online comments [20]. Other scholars have concentrated on improving the accuracy of sentiment analysis, conducting in-depth research on sentiment polarity [21]. The sentiment analysis of online comments has also yielded practical applications. For instance, Gan et al. identified five key attributes in consumer comments on restaurants [22]. The impact of online comments on sales has also garnered significant attention, revealing the interplay between numerical ratings and textual comments in influencing product sales [23]. However, most of these studies focus on specific attributes of online comments, aiming to identify key factors influencing consumer sentiment while overlooking the shifts in consumer sentiment caused by changes in product value.

At present, classification research on online comments remains preliminary and mostly addresses how to classify comments, including model improvements (e.g., multimodal [24] and BERT [25] models) and applications to false comment detection [26] and semantic analysis [27]. However, these studies mainly consider the characteristics of the comments themselves without taking into account product-specific attributes, such as the emotional changes in online comments caused by changes in value. Research on the inherent characteristics of products, such as that by Dambros et al. [28], is limited; although the process of product value change has been discussed in detail, no further classification research has been conducted to derive more general patterns. For products with highly volatile values, it is particularly important to classify online comments by product value in order to formulate more adaptive sales strategies. For instance, for agricultural products such as vegetables and fruits, whose quality deteriorates rapidly, sellers must reassess the managerial significance of various online comments to optimize decision-making. Similarly, for seasonal tourism-related products (hotels, tourist spots, etc.), distinct management mechanisms are required to account for the varying implications of online comments across different seasons.

To explore the relationship between product value fluctuations and sentiment changes in online comments, in this paper, we introduce a temporal dimension to analyze sentiment fluctuations during the online sales process, aiming to uncover patterns in consumer sentiments as product values change. This study addresses the following two questions: (1) Do online comment sentiments change as product values fluctuate? (2) If online comment sentiments exhibit inconsistency, what are the characteristics of these fluctuations?

2. Literature Review

2.1. Research on Product Value

Product value is a core factor in measuring market performance and consumer acceptance, encompassing multiple dimensions such as product functionality, quality, emotional value, and social recognition [29]. In value engineering theory, it is defined as the ratio of product functionality to its cost over the product lifecycle [30]. Customer perspectives on value can be a subjective assessment of the overall excellence of the product [31], and therefore, product value is influenced by various factors, including environmental quality [32], social factors [33], and technological risks [34]. Many scholars have conducted research in this area. For example, Sonderegger et al. found that consumers from different cultural backgrounds have significantly different evaluations of the same product’s value [35]. Other studies have shown that product novelty, technological dependency, customer demand selection, and market environment also impact product value creation [36,37]. Past methods have often relied on mathematical models to improve performance, typically considering only one or two factors. The advent of machine learning has changed this, enabling researchers to consider more factors in data prediction tasks [38]. Du et al. developed a reinforcement learning-based product value prediction model that incorporates various market information for dynamic pricing, significantly improving prediction accuracy [39]. Similarly, Cong et al. applied reinforcement learning to price cloud services based on user-perceived value [40]. Additionally, Wang et al. proposed a product value prediction model based on data fluctuation networks, introducing time series into product value research using deep learning methods, which holds significant importance [41].

Most of the existing literature focuses on the impact of social dimensions on product value, such as functional value and social identity, but neglects the role of consumers’ own emotions. For consumers, whether or not they purchase a product is not only influenced by its value, but is also (and more often) driven by their own emotions. So far, the relationship between emotional fluctuations and product value has not been fully studied. Therefore, the aim of this article is to explore the relationship between changes in product value and consumer emotions.

2.2. Research on Online Comments

Online comments represent consumer opinions about products. With the rapid development of the Internet, online comments play an increasingly important role in online shopping [42]. Current research on online comments primarily follows two main lines: usefulness and sentiment analysis.

Research on the usefulness of online comments helps consumers identify which comments are more helpful in making decisions, thereby improving satisfaction and purchase efficiency. Therefore, for online shopping platforms, accurately assessing the usefulness of online comments and developing corresponding ranking strategies is crucial [43]. However, the usefulness of online comments is a multidimensional concept influenced by various factors with different mechanisms [44]. Scholars have approached this topic from multiple angles, including specificity and diversity [45], visual cues [46], topics [47], social media features [48], and reviewer avatars [49]. However, despite the depth of these studies, research on the usefulness of reviews is still limited to the characteristics of the reviews themselves. Some other factors, such as product value, can better influence consumer behavior through emotions. As a result, the sentiment analysis of online comments has become a growing research focus.

Sentiment analysis is a method for determining the emotional tendency of customers based on a given text [50]. It primarily employs lexicon-based and machine learning methods [51]. For example, Dang et al. developed a lexicon-enhanced method that achieves more accurate sentiment classification [52]. Other improvements based on machine learning methods have also led to effective extraction of sentiment features [53,54]. These methods have varying effects on different types of text comments. Additionally, the most discussed topics in sentiment analysis research include sentiment lexicons and knowledge bases, aspect-based sentiment analysis, and social network analysis [55]. Khoo et al. developed a new general-purpose sentiment lexicon and validated its superiority in non-comment texts [56]. Other researchers have used binary and six-level difficulty strategies to study the difficulty of aspect-based sentiment analysis, demonstrating that these strategies can improve model performance while minimizing resource consumption [57]. Beyond methodological research, scholars have presented numerous results in the application of sentiment analysis. For example, it has been found that investor sentiment is significantly related to stock price fluctuations [58], the introduction of augmented reality positively affects consumer perception [59], the combination of quantitative information and topic sentiment features provides valuable references for sales performance prediction [60], and hotel room cleanliness and location are the most critical factors influencing customer ratings [61]. These findings collectively demonstrate the utility of sentiment analysis methods.

Despite the depth of research on sentiment analysis, the impact of product value fluctuations on consumer sentiment has largely been overlooked. Changes in product attributes often drive shifts in consumer sentiment; therefore, in this study, we aim to introduce a temporal dimension, combined with sentiment analysis models, in order to identify and analyze trends in sentiment fluctuations in online comments.

3. Research Methods

3.1. Research Design

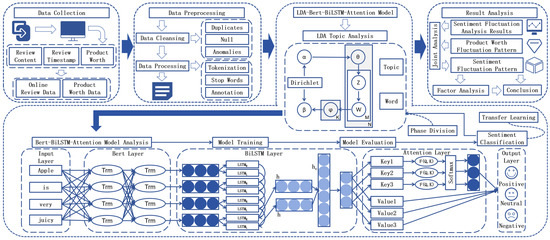

In this study, we employed multiple machine learning methods (topic models, sentiment analysis, and transfer learning) to conduct sentiment analysis on product comments from online shopping platforms. The sentiment analysis results were then combined with product value to explore the relationship between product value changes and consumer sentiment variations. The research process was divided into four parts: data collection, data processing, sentiment analysis, and result analysis. The research framework is illustrated in Figure 1.

Figure 1. Research framework.

- (1) Data Collection

The value of most products can be influenced by unexpected factors. For instance, the outbreak of the COVID-19 pandemic altered public sentiment toward masks, breakthroughs in 5G technologies have diminished interest in 4G devices, and public opinion shifts have led to a decline in the reputation of certain branded products. In the agricultural sector, product value is particularly susceptible to fluctuations due to storage duration. We selected apples as the research subject because they are produced and sold on a very large scale and are characterized by post-harvest storage and a year-long sales cycle [28]. Apples are among the most widely cultivated fruits globally, favored by consumers for their high nutritional value and distinctive flavor [62]. As the world’s largest producer and consumer of apples, China accounted for 54.07% of global production and 67.17% of global consumption in 2022 [63]. Therefore, in this study, we focused on apples in the Chinese market, a representative case among online retail products, to analyze the impact of product value changes on online comments.

Furthermore, considering factors such as sales volume and market coverage, in this study, we selected all major fruit-related e-commerce platforms in China (e.g., JD, Taobao, Dingdong Maicai, NetEase Yanxuan, Suning, Vipshop, and PuPu Supermarket) as data sources for online comments. A Python-based web crawler (Octoparse 8.7.2) was employed to collect review data for apple products with sales durations exceeding one year. The collected data attributes include the username, the comment content, and the comment timestamp.

- (2) Data Processing

Python-based data processing techniques were applied to remove duplicates, null values, and outliers from the raw data. Subsequently, the Jieba word segmentation tool was used to tokenize the text [64], after which stop words were removed and synonyms were merged in preparation for model training. The stop word list was the Harbin Institute of Technology Stop Word List, published by the Information Retrieval Research Center of Harbin Institute of Technology, and the synonym dictionary was the Harbin Institute of Technology Synonym Forest.
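The cleaning steps described above can be sketched roughly as follows. This is only an illustration under stated assumptions: the function name `preprocess`, the toy stop word set, and the toy synonym map are hypothetical placeholders (not the Harbin Institute of Technology resources), outlier removal is omitted, and a whitespace tokenizer stands in for Jieba so that the sketch runs with the standard library alone.

```python
from __future__ import annotations

from typing import Callable, Iterable, Optional


def preprocess(
    comments: Iterable[Optional[str]],
    tokenize: Callable[[str], list[str]],
    stop_words: set[str],
    synonyms: dict[str, str],
) -> list[list[str]]:
    """Drop null and duplicate comments, tokenize, remove stop words, merge synonyms."""
    seen: set[str] = set()
    cleaned: list[list[str]] = []
    for raw in comments:
        text = (raw or "").strip()
        # Skip null/empty comments and exact duplicates.
        if not text or text in seen:
            continue
        seen.add(text)
        tokens: list[str] = []
        for tok in tokenize(text):
            tok = tok.strip()
            if not tok or tok in stop_words:
                continue
            # Replace each token by its canonical synonym if one is defined.
            tokens.append(synonyms.get(tok, tok))
        if tokens:
            cleaned.append(tokens)
    return cleaned


# Hypothetical placeholders for the stop word list and synonym dictionary.
STOP_WORDS = {"the", "is", "and"}
SYNONYMS = {"yummy": "tasty", "delicious": "tasty"}

docs = preprocess(
    ["the apple is yummy", "the apple is yummy", None, "delicious and sweet"],
    tokenize=str.split,  # for Chinese text, jieba.lcut could be passed instead
    stop_words=STOP_WORDS,
    synonyms=SYNONYMS,
)
print(docs)  # [['apple', 'tasty'], ['tasty', 'sweet']]
```

Passing the tokenizer as an argument keeps the cleaning logic independent of the segmentation tool, so the same function could be reused with Jieba's `lcut` on the Chinese comments.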

- (3) Sentiment Analysis

First, a topic model was applied to analyze online comments and identify key consumer concerns. Next, a deep learning model was trained on the labeled data, and transfer learning was employed to classify sentiment in unlabeled reviews. Finally, the sentiment classification results were integrated with product value fluctuation characteristics for further analysis (Figure 1).

- (4) Result Analysis

Conclusions and recommendations were proposed based on the observed patterns of consumer sentiment changes and product value fluctuations.

3.2. Model Design for Sentiment Analysis

The sentiment analysis in the third part of Figure 1 requires multiple models, the main structures of which are depicted in the lower section of Figure 1. Sentiment analysis is a subfield of NLP (natural language processing) aimed at extracting and analyzing knowledge from subjective information posted online [65]. Since sentiment analysis requires contextual semantic representations from large-scale text data, pre-trained models such as BERT (Bidirectional Encoder Representations from Transformers) and GPT (Generative Pre-trained Transformer) are commonly used in practice. However, in specialized domains, pre-trained models may underperform due to their inability to recognize domain-specific terminology, prompting researchers to combine multiple approaches. For example, integrating an attention-based BiLSTM (Bidirectional Long Short-Term Memory) model with transfer learning can compensate for the shortcomings of pre-trained models in aspect-level sentiment classification [66], while word augmentation techniques can enhance model generalization [67].

In this study, the deep learning model LBBA (LDA-BERT-BiLSTM-Attention) consisted of two components: an LDA (Latent Dirichlet Allocation) topic model and a BERT-BiLSTM-Attention sentiment classification model. From a data requirement perspective, both the LDA and BERT-BiLSTM-Attention models require a sufficiently large text dataset, which the online comments used in this study provide. Analytically, both models extract semantic features for analysis, consistent with this study’s objectives. Additionally, the LDA model focuses on text content identification, whereas the BERT-BiLSTM-Attention model emphasizes sentiment classification in sequential data, corresponding to the product value fluctuations in this study. Hence, the LBBA model was selected for the research methodology.

- (1) LDA Topic Model

LDA is a widely used topic modeling approach that categorizes words in texts into different themes to better understand textual information [68]. A fundamental principle of LDA is that people tend to use similar words when discussing the same topic, leading to repeated occurrences of related terms in texts on the same subject [69].

A key advantage of the LDA model is its ability to identify latent themes in large-scale text data without predefined topic labels while maintaining analytical depth and quality [70]. This feature contributes to its popularity in text analysis. Moreover, LDA does not require term selection to construct a document–term matrix, making it highly adaptable for diverse tasks [71].

- (2) BERT-BiLSTM-Attention Model

The BERT-BiLSTM-Attention model demonstrates strong performance in sentiment classification tasks for sequential text data. This model comprises five layers: an input layer, a BERT layer, a BiLSTM layer, an attention layer, and an output layer (Figure 1). First, the pre-trained BERT model extracts contextual features from review texts using its bidirectional Transformer architecture, generating word embeddings that capture contextual information. Next, the extracted textual features are fed into the BiLSTM layer to identify sentiment characteristics in consumer comments, with an attention mechanism applied to assign varying weights to different words. Finally, following Na et al., a classifier categorizes the weighted features [68].

The BERT model employs bidirectional Transformers to obtain bidirectional semantic representations, jointly conditioning on left and right context in all layers, which strengthens feature extraction and contextual fusion. The BiLSTM model, with its bidirectional LSTM structure, learns contextual information from both directions of the sequence and outperforms traditional machine learning methods in sentiment analysis tasks. The attention mechanism addresses long-term dependency and term importance issues, improving the model’s ability to capture word dependencies and emphasize key terms, thereby enhancing classification accuracy.
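To make the role of the attention layer concrete, the following is a minimal sketch of attention pooling in plain Python: per-token relevance scores are normalized with a softmax, and the token vectors are summed with those weights. It is not the model used in this study; the function name `attention_pool` and all numbers are hypothetical, and in a trained network the scores would be computed from the BiLSTM outputs by learned parameters rather than supplied by hand.

```python
import math


def attention_pool(hidden_states, scores):
    """Return softmax weights over tokens and the weighted sum of their vectors."""
    if len(hidden_states) != len(scores) or not scores:
        raise ValueError("need one score per token and at least one token")
    # Subtract the maximum score for numerical stability before exponentiating.
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    dim = len(hidden_states[0])
    # Context vector: weighted sum of token vectors, one dimension at a time.
    context = [
        sum(w * h[d] for w, h in zip(weights, hidden_states))
        for d in range(dim)
    ]
    return weights, context


# Three tokens with made-up 2-dimensional hidden states; the second token
# receives the highest relevance score and therefore the largest weight.
hidden = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
weights, context = attention_pool(hidden, scores=[0.1, 2.0, 0.1])
print([round(w, 3) for w in weights])
```

The resulting context vector is what a downstream classifier would receive, so tokens with larger weights contribute more to the predicted sentiment.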

Thus, in this study, we adopted an architecture combining three components: a pre-trained BERT, a BiLSTM, and an attention mechanism. This design is well suited to processing sequential text data, aligns with product value fluctuation patterns, and strengthens the extraction of key sentiment features.

4. Research Results

4.1. Data Collection and Preprocessing

Online comments contain rich emotional information, which can help other consumers mitigate potential risks when shopping [72]. For this study, we collected online comments about apple products generated by consumers across the major fruit-related e-commerce platforms in China as the dataset.

During the data collection phase, web crawlers were employed to gather online comments for 112 apple products with sales records spanning over one year (2016–2024) from multiple platforms. For each product, the first 100 pages of comments were collected, resulting in a total of 69,263 comments. Table 1 presents examples of the collected data. Here, “Sentiment Orientation” indicates the emotional tendency of the comment, which, following Zhang et al., is categorized into three types: positive, neutral, and negative [73].

Table 1. Examples of online apple comments.

In the data preprocessing stage, the following steps were taken: (1) removal of null and duplicate values, resulting in 66,193 valid reviews, and (2) elimination of stop words and merging of synonyms. The synonym lexicon was constructed based on the Harbin Institute of Technology Synonym Thesaurus (Extended Edition).

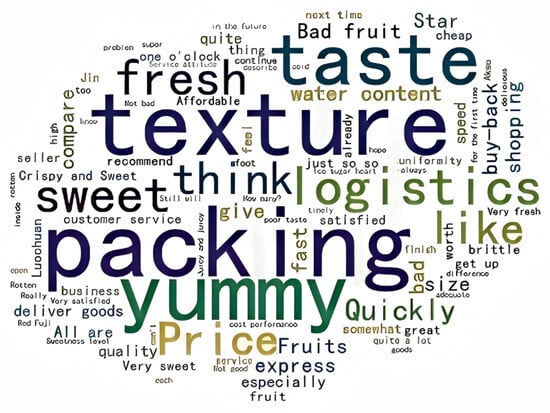

Subsequently, a word frequency table was generated through text mining, and a word cloud was created (Figure 2). The word cloud shows that consumers prioritize “texture,” with frequent mentions of terms such as “taste,” “yummy,” and “sweet.” Other keywords such as “packaging,” “logistics,” and “price” reflect consumer concerns in other dimensions, prompting further exploration of customer sentiment.

Figure 2. Word cloud of online apple comments.

4.2. Topics and Sentiment Analysis

After preprocessing, the LBBA model was employed for sentiment analysis. First, the LDA topic model was used to extract the topics discussed in the comments. Next, the BERT-BiLSTM-Attention model was trained, and the optimized parameters were applied to prediction tasks. Finally, the LDA model was reused to analyze sentiment dynamics.

- (1) Overall LDA Topic Analysis

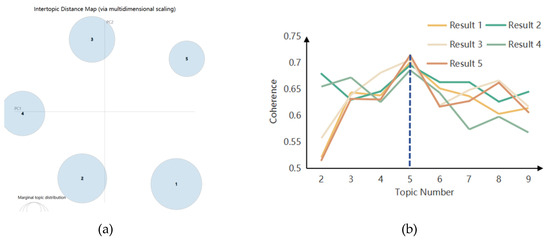

In the LDA topic analysis, each topic model is iterated thirty times, and the bubble size in the visualization represents the frequency of the corresponding topic [74]. Setting the number of topics to seven or eight resulted in overlapping topic bubbles, whereas reducing the topic count to five yielded independent, non-overlapping bubbles. Figure 3a illustrates this visualization-based determination of the optimal topic count. Determining the number of topics through topic coherence is also a common method. Topic coherence reflects the ability of the model to extract semantically related words that support topic quality [75], with higher values being better [76]. Figure 3b shows how topic coherence changes with the number of topics. Because LDA is a probabilistic model whose results vary between runs, multiple experiments were conducted to reduce this variation. Topic coherence reaches its maximum at five topics and decreases thereafter. Therefore, the final number of topics for the LDA model was set to five.

Figure 3. Determination of number of LDA topics: (a) Topic quantities via LDA visualization. (b) Change in topic coherence.
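The coherence-based selection can be sketched as follows: coherence scores from repeated runs are averaged per candidate topic count, and the count with the highest average is chosen. The scores below are invented for illustration and are not the values behind Figure 3b; the function name `best_topic_count` is hypothetical. In practice, the per-run scores might come from a library such as gensim (for example its `CoherenceModel`), which is an assumption rather than something stated in this study.

```python
from statistics import mean


def best_topic_count(coherence_runs):
    """Average coherence over repeated runs and return the best topic count."""
    averaged = {k: mean(scores) for k, scores in coherence_runs.items()}
    best = max(averaged, key=averaged.get)
    return best, averaged


# Made-up coherence scores from three repeated LDA runs per topic count.
runs = {
    3: [0.41, 0.43, 0.42],
    4: [0.45, 0.44, 0.46],
    5: [0.50, 0.49, 0.51],
    6: [0.47, 0.46, 0.48],
    7: [0.44, 0.45, 0.43],
}
k, averaged = best_topic_count(runs)
print(k)  # 5
```

Averaging over runs is one simple way to damp run-to-run variation before comparing topic counts.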

Five major topics were identified from consumer comments about apples, and keywords were assigned to each topic for labeling. The results revealed that consumers primarily focused on five aspects: sales service, mouthfeel, size, sweetness, and blemishes. As shown in Table 2, the weights of these topics were 0.251, 0.203, 0.232, 0.191, and 0.123, respectively, indicating consumer priorities in descending order of weight as follows: sales service > size > mouthfeel > sweetness > blemishes.

Table 2. Topic distribution and keywords in apple comments.

- (2) BERT-BiLSTM-Attention Sentiment Analysis

Based on the topic analysis results in Table 2, the BERT-BiLSTM-Attention model (developed using PyTorch) was used for sentiment classification. Of the 66,193 valid samples, 40,653 were selected for training and testing (28,457 for training and 12,196 for testing).

The hardware setup comprised an Intel Xeon Platinum 8352V CPU, an NVIDIA RTX 4090 GPU, and 24 GB of RAM (Micron). The loss function was sparse categorical cross-entropy. The optimizer was AdamW, a variant of Adam that decouples weight decay from the gradient-based update [77]. The learning rate was set to 1 × 10⁻⁵, the batch size to 32, the output size to three (for three sentiment classes), the hidden size (BERT-aligned) to 768, and the number of epochs to 50.
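For reference, the reported settings can be collected in a small configuration object, as in the sketch below. The class name `TrainConfig` and the helper `split_counts` are hypothetical. The 70/30 split fraction is an inference rather than something stated above; it is used here only because it reproduces the reported training and test counts.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainConfig:
    """Hyperparameters as reported in the text."""
    learning_rate: float = 1e-5
    batch_size: int = 32
    num_classes: int = 3      # positive, neutral, negative
    hidden_size: int = 768    # matches the BERT hidden size
    epochs: int = 50
    optimizer: str = "AdamW"


def split_counts(n_samples, train_fraction):
    """Return (train, test) sizes for a given training fraction."""
    n_train = round(n_samples * train_fraction)
    return n_train, n_samples - n_train


cfg = TrainConfig()
# Assumed 70/30 split; it yields the reported 28,457 / 12,196 samples.
n_train, n_test = split_counts(40_653, 0.7)
print(cfg.learning_rate, n_train, n_test)
```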

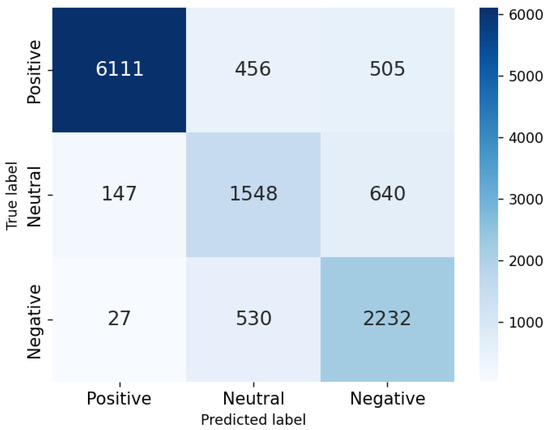

The confusion matrix (Figure 4) summarizes the classification results on the test set. For instance, the element 6111 is the number of correctly classified positive reviews, while 1548 is the number of correctly classified neutral reviews. The evaluation metrics (test accuracy, test recall, test precision, and F1-score) of the BERT-BiLSTM-Attention model can be calculated from the confusion matrix in Figure 4. The test accuracy is 0.811, indicating that the model correctly classified 81.1% of the test samples. With three classes, recall and precision are presumably averaged across classes: the test recall is 0.776, meaning that on average 77.6% of the samples belonging to a class were identified as such, while the test precision is 0.748, meaning that on average 74.8% of the samples assigned to a class actually belonged to it. The F1-score is 0.758, suggesting that the model achieves a reasonable balance between precision and recall and accomplishes the classification task.

Figure 4. Confusion matrix of BERT-BiLSTM-Attention model.
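The calculation of these metrics from a confusion matrix can be sketched as follows. The matrix below is a made-up three-class example, not the one in Figure 4, and macro-averaging (an unweighted mean over classes) is an assumption, since the averaging scheme is not stated. The function name `macro_metrics` is hypothetical.

```python
from statistics import mean


def macro_metrics(cm):
    """Accuracy and macro-averaged precision, recall, and F1.

    cm[i][j] is the number of samples of true class i predicted as class j.
    """
    n = len(cm)
    total = sum(sum(row) for row in cm)
    accuracy = sum(cm[i][i] for i in range(n)) / total
    precisions, recalls, f1s = [], [], []
    for i in range(n):
        tp = cm[i][i]
        predicted = sum(cm[r][i] for r in range(n))  # column sum
        actual = sum(cm[i])                          # row sum
        p = tp / predicted if predicted else 0.0
        r = tp / actual if actual else 0.0
        f1 = 2 * p * r / (p + r) if (p + r) else 0.0
        precisions.append(p)
        recalls.append(r)
        f1s.append(f1)
    return accuracy, mean(precisions), mean(recalls), mean(f1s)


# Illustrative matrix (rows: true class, columns: predicted class).
toy_cm = [
    [8, 1, 1],
    [2, 6, 2],
    [1, 1, 8],
]
accuracy, precision, recall, f1 = macro_metrics(toy_cm)
print(round(accuracy, 3), round(precision, 3), round(recall, 3), round(f1, 3))
```

Note that macro F1 computed this way is the mean of the per-class F1 values, which generally differs slightly from the harmonic mean of macro precision and macro recall.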

In addition, to verify the classification ability of the BERT-BiLSTM-Attention model, comparative experiments were conducted against a machine learning model (SVM) and two deep learning models (BERT and BERT-BiLSTM). Table 3 shows the experimental results. The BERT-BiLSTM-Attention model achieves higher accuracy, F1-score, recall, and precision than the SVM, indicating that the deep learning model is more suitable for this type of task. It also outperforms the BERT and BERT-BiLSTM models, suggesting that the added attention mechanism helps capture the key points in a sentence. Overall, the BERT-BiLSTM-Attention model has good classification ability and is adequate for the aim of this study.

Table 3. Performance comparison between the BERT-BiLSTM-Attention model and baseline models.

The trained BERT-BiLSTM-Attention model was applied to predict sentiment labels in consumer comments via transfer learning. Out of 66,193 valid online comments, 43,915 were positive, 10,301 were neutral, and 11,977 were negative. The sentiment analysis based on product value is discussed in detail in the subsequent sections.

4.3. Analysis of Product Value and Sentiment Fluctuations in Comments

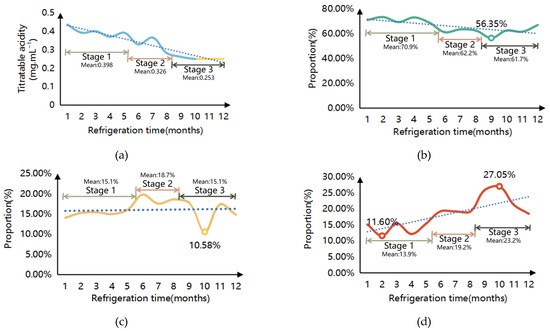

To analyze the value of apples in this study, we referred to the research by Dambros et al., where apple quality was represented by titratable acidity [28]. As shown in Figure 5a, the horizontal axis indicates the post-harvest cold storage duration (months), while the vertical axis represents titratable acidity. The blue curve is derived from the data in Dambros et al.’s study, whereas the yellow curve extends the trend to 12 months by assuming that the data for the last three months (the 10th, 11th, and 12th months) remain the same as in the 9th month. This assumption is based on the premise that apple quality does not improve with prolonged storage [78].

Figure 5. Changes in product value and the proportion of online comment sentiments: (a) Changes in the value of apples. (b) The proportion of comments with positive sentiments. (c) The proportion of comments with neutral sentiments. (d) The proportion of comments with negative sentiments.

Furthermore, Figure 5a reveals that apple value remains relatively stable in the first 5 months (Stage 1), experiences significant fluctuations from the 6th to the 8th month (Stage 2), and gradually declines before stabilizing from the 9th to the 12th month (Stage 3). Accordingly, we categorized the annual changes in apple value into three stages and conducted a one-way analysis of variance (ANOVA) on product value and each sentiment proportion to verify the rationality of this classification. The p-values, F-statistics, means, and other parameters of the one-way ANOVA across stages are presented in Table 4. The p-values for apple value, positive emotion, neutral emotion, and negative emotion are all less than 0.05, indicating significant differences between stages and supporting this classification.

Table 4. ANOVA (analysis of variance) at different stages.
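The stage construction and the one-way ANOVA F-statistic can be sketched as below. The acidity readings are invented placeholders and not the values from Dambros et al. [28]; the function names are hypothetical. The sketch extends a nine-month series to twelve months by repeating the last value, groups the months into the three stages, and computes F as the ratio of between-group to within-group mean squares. A p-value would additionally require the F distribution, for which a library routine such as `scipy.stats.f_oneway` could be used.

```python
from statistics import mean


def extend_flat(values, target_length):
    """Pad a series to target_length by repeating its last observed value."""
    if not values:
        raise ValueError("need at least one observation")
    padding = [values[-1]] * max(0, target_length - len(values))
    return list(values) + padding


def stage_of(month):
    """Map a storage month (1-12) to Stage 1 (1-5), 2 (6-8), or 3 (9-12)."""
    if not 1 <= month <= 12:
        raise ValueError("month must be in 1..12")
    if month <= 5:
        return 1
    if month <= 8:
        return 2
    return 3


def one_way_anova_f(groups):
    """F = (SS_between / (k - 1)) / (SS_within / (N - k))."""
    values = [x for g in groups for x in g]
    k, n = len(groups), len(values)
    grand = mean(values)
    means = [mean(g) for g in groups]
    ss_between = sum(len(g) * (m - grand) ** 2 for g, m in zip(groups, means))
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    return (ss_between / (k - 1)) / (ss_within / (n - k))


# Placeholder acidity readings for months 1-9 (illustrative only).
acidity_9 = [0.40, 0.39, 0.39, 0.38, 0.38, 0.33, 0.36, 0.30, 0.27]
acidity_12 = extend_flat(acidity_9, 12)

groups = [[], [], []]
for month, value in enumerate(acidity_12, start=1):
    groups[stage_of(month) - 1].append(value)

f_stat = one_way_anova_f(groups)
print([len(g) for g in groups], round(f_stat, 2))
```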

By correlating the results of sentiment analysis with the apple value stages, Figure 5b–d illustrates the proportion of each sentiment over time (horizontal axis: post-harvest storage duration; vertical axis: the proportion of comments with different sentiments). Key observations include the following:

- Positive and neutral sentiments exhibit minor fluctuations in Stages 1 and 2 but become more volatile in Stage 3, often reaching their lowest proportions at certain points. For instance, positive sentiment drops to its lowest level (56.35%) in the 9th month, while neutral sentiment hits its lowest (10.58%) in the 10th month (Figure 5b,c). Additionally, the overall proportion of positive sentiment tends to decline across stages.

- Negative sentiment remains low in Stage 1, rises slightly in Stage 2, and peaks in Stage 3 before receding. Specifically, negative sentiment reaches its minimum (11.6%) in the 2nd month, gradually increases, and peaks at 27.05% in the 10th month (Figure 5d). Thus, negative sentiment generally increases with the progression of stages.
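The monthly proportions plotted in Figure 5b–d can be computed along the following lines. This is a sketch with invented records; it assumes that each comment has already been assigned a post-harvest storage month and a predicted sentiment label, and the function name `monthly_proportions` is hypothetical.

```python
from collections import Counter, defaultdict

LABELS = ("positive", "neutral", "negative")


def monthly_proportions(records):
    """records: iterable of (storage_month, label) -> {month: {label: share}}."""
    counts = defaultdict(Counter)
    for month, label in records:
        counts[month][label] += 1
    result = {}
    for month in sorted(counts):
        total = sum(counts[month].values())
        # Labels that never occur in a month get a share of 0.0.
        result[month] = {lab: counts[month][lab] / total for lab in LABELS}
    return result


# Made-up records for two storage months.
records = (
    [(2, "positive")] * 8 + [(2, "neutral")] + [(2, "negative")]
    + [(10, "positive")] * 6 + [(10, "neutral")] + [(10, "negative")] * 3
)
props = monthly_proportions(records)
print(props[2]["negative"], props[10]["negative"])
```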

In summary, consumer sentiment undergoes significant shifts in response to product value changes. To systematically investigate the distinct collective dynamics exhibited by online comments across different stages in Figure 5, we focused on the most pronounced fluctuations—particularly in negative sentiment.

4.4. Analysis of Negative Reviews Based on Product Value

Negative comments often exert a stronger diagnostic influence on consumer decisions than positive ones [79,80]. To examine whether the fluctuation of negative comments is associated with changes in product value, we conducted a correlation analysis between product value and comment sentiment. The results are shown in Table 5, where “titratable_acidity” represents product quality, “negative_sentiments” represents the proportion of negative emotions, and “neutral_sentiments” and “positive_sentiments” represent the proportions of neutral and positive emotions, respectively. The correlation between the proportion of negative emotions and product quality is −0.778, which is consistent with the earlier observation that negative emotions increase as product quality decreases.

Table 5. Pair-wise Pearson correlations of non-dummy variables.
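A Pearson correlation of this kind can be computed as in the sketch below. The two monthly series are invented for illustration and are not the data behind Table 5; the function name `pearson_r` is hypothetical. A negative coefficient means that the proportion of negative comments tends to rise as acidity falls; it does not by itself establish causation.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / (sx * sy)


# Made-up monthly series: acidity declines while negative share rises.
acidity = [0.40, 0.39, 0.38, 0.36, 0.33, 0.30, 0.27]
negative_share = [0.12, 0.13, 0.12, 0.15, 0.18, 0.22, 0.26]
r = pearson_r(acidity, negative_share)
print(round(r, 3))
```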

In addition, to examine sentiment fluctuations in relation to product value, we applied the LDA model to analyze topics in negative comments. The optimal number of topics was determined based on topic overlap.

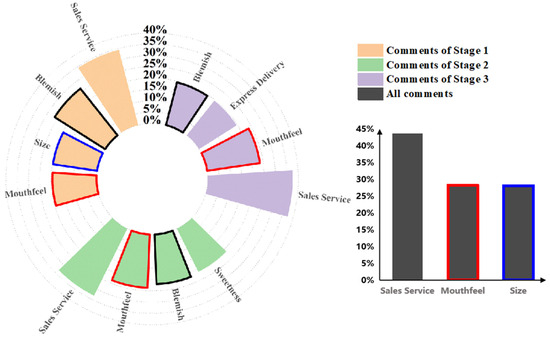

First, following prior methodologies, we conducted LDA topic modeling on all negative reviews (“All comments” in Figure 6). Key topics included sales service (post-purchase support), mouthfeel (textural sensations during and after consumption), and size (physical dimensions).

Figure 6. LDA analysis results of classified and unclassified negative comments.

Existing research typically recommends that companies prioritize improving these factors. However, consumer sentiment toward certain attributes (e.g., mouthfeel) may shift with product value degradation [78]. Thus, we further analyzed negative reviews by stage to uncover sentiment dynamics.

In Figure 6, “Comments of Stage 1,” “Comments of Stage 2,” and “Comments of Stage 3” show the LDA results for the negative reviews of Stages 1, 2, and 3, respectively.

Key findings include the following:

- Stage-specific relevance of factors: Some globally significant topics (e.g., size) only dominate in certain stages (Stage 1), indicating that aggregate analyses may overlook stage-specific issues.

- Emergence of stage-critical factors: Topics absent in the aggregate analysis (e.g., blemish) appear prominently in specific stages, underscoring the need for stage-wise examination.

- Dynamic topic rankings: The prominence of certain topics (e.g., mouthfeel) shifts across stages, suggesting that their impact is closely tied to product value fluctuations.

This analysis indicates that consumer sentiment evolves with product value, necessitating adaptive sales strategies. Whether or not online comments are classified by stage, sales service remains one of the main causes of negative sentiment among consumers. For other factors, companies should fully consider the relationship between product value and the sentiment of online comments when formulating sales strategies.
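The three findings above amount to a set comparison between the aggregate topic list and the per-stage topic lists. The sketch below illustrates that comparison; the function name `compare_stage_topics` is hypothetical, and the assignment of topics to stages is assumed for illustration (in particular, the stages in which “blemish” appears are not taken from Figure 6).

```python
def compare_stage_topics(aggregate, stages):
    """Compare aggregate topics with per-stage topics.

    Returns:
      stage_only: topics missing from the aggregate list, mapped to their stages
      partial:    aggregate topics that appear in only some stages
      persistent: aggregate topics present in every stage
    """
    aggregate = set(aggregate)
    stage_sets = {name: set(topics) for name, topics in stages.items()}
    all_stage_topics = set().union(*stage_sets.values())

    stage_only = {
        t: sorted(n for n, s in stage_sets.items() if t in s)
        for t in sorted(all_stage_topics - aggregate)
    }
    partial, persistent = {}, set()
    for t in sorted(aggregate):
        present = sorted(n for n, s in stage_sets.items() if t in s)
        if len(present) == len(stage_sets):
            persistent.add(t)
        else:
            partial[t] = present
    return stage_only, partial, persistent


# Hypothetical topic lists for illustration only.
aggregate = ["sales service", "mouthfeel", "size"]
stages = {
    "Stage 1": ["sales service", "size", "mouthfeel"],
    "Stage 2": ["sales service", "mouthfeel", "blemish"],
    "Stage 3": ["sales service", "blemish", "mouthfeel"],
}
stage_only, partial, persistent = compare_stage_topics(aggregate, stages)
print(stage_only, partial, persistent)
```

This comparison captures only the presence or absence of a topic; tracking shifts in topic rank across stages would additionally require the per-stage topic weights.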

5. Discussion

5.1. Does the Sentiment of Online Comments Shift with Fluctuations in Product Value?

In this study, we found that the sentiment of online comments changes alongside variations in product value. This shift may, to some extent, be directly related to fluctuations in product value. For instance, a decline in product quality during sales leads consumers to express more negative sentiments. Similarly, some scholars have found a correlation between the quality of online comments and the price range of products [81]. Therefore, companies must closely monitor product quality during production to ensure consistency. For products where quality deterioration is inevitable (e.g., perishable goods such as fruits), companies should develop targeted marketing strategies to mitigate adverse effects through proactive efforts.

Additionally, these sentiment shifts may also correlate with evolving societal values and trends. For example, growing environmental concerns have heightened consumer preference for green products [82], while public scrutiny has cast doubt on food with additives [83]. To address such issues, companies should strengthen public relations while upholding social responsibilities. To further examine whether direct relationships exist between review sentiment shifts and specific factors, correlation analysis methods such as general correlation analysis [84], regularized canonical correlation analysis [85], and sparse weighted canonical correlation analysis [86] can be employed.

5.2. If Online Comment Sentiment Exhibits Inconsistency, What Are the Characteristics of This Variation?

At different stages of product value, online comments reflect varying degrees of concern toward different factors. This diverges from prior research, which predominantly focused on the sentiment analysis of all reviews collectively while overlooking dynamic sentiment shifts across product value stages [68]. By analyzing reviews at distinct product value phases, this study identifies patterns in sentiment variation. Such granular analysis enables companies to implement stage-specific improvements. For example, factors prominent in aggregate analysis might only appear in certain stages, allowing companies to allocate resources efficiently. Conversely, factors negligible in overall analysis but persistent across stages may indicate chronic issues requiring long-term resolution. Special attention should be paid to factors that are highly sensitive to changes in product value, as they may reflect consumers’ perceived value [30]. In addition, according to Festinger’s theory of cognitive dissonance, consumers may experience negative post-purchase emotions such as doubt, anxiety, and regret, which can lead to dissatisfaction behavior [87]. Enterprises should take measures in advance to help consumers form accurate expectations and an accurate perceived value of the product, thereby reducing or eliminating dissatisfaction behavior.

In summary, companies should adopt multifaceted management strategies to address these dynamics. For instance, integrating an enhanced Bass model for sales forecasting across value stages can inform production planning [88]. Alternatively, customer relationship management can guide tailored strategies at each stage. Such approaches enhance customer service and market precision [89].

6. Conclusions and Implications

6.1. Conclusions

In this study, we employed multiple machine learning methods to analyze the sentiment of online comments from a product value perspective, revealing shifts in consumer sentiment with product value changes. Further investigation of negative sentiment uncovered a pattern: among the prominent negative sentiments, the proportion of topics influenced by product value increases as product value declines. These findings hold significant theoretical and practical implications. By introducing the perspective of product value, online reviews can be divided into different stages, which promotes sentiment analysis research and inspires future research on product value and online reviews, such as the relationship between product value and the usefulness of online reviews. Additionally, this study offers e-commerce platforms and firms a new framework for comment management that recognizes that consumer sentiment varies by product value and emphasizes monitoring sentiment fluctuations for timely interventions. While the conclusions of this study cannot be directly generalized to the management of online comments for all value-volatile products, the research framework and findings provide important insights for other product categories. The proposed methodology can be adapted to other contexts, such as classifying online comments for agricultural products based on intervals of quality deterioration, conducting seasonal classification analyses of online comments for tourism products, or categorizing consumer electronics comments according to market acceptance of technological features. Such studies will enable sellers to analyze more objectively the underlying product value factors reflected in diverse consumer perspectives.

6.2. Theoretical Implications

The findings of this study expand our theoretical understanding of online comment management. The product value-based sentiment analysis model for online comments can more objectively reflect consumer attitudes. Existing studies often analyze online comments as an aggregated whole to extract the diverse sentiments expressed by consumers within these data. While this research paradigm holds significant value for products with relatively stable value, it may prove inadequate for products experiencing rapid value fluctuations.

The first theoretical contribution of this paper lies in examining the phased fluctuation characteristics of online comments in response to product value changes, thereby revealing the objective existence of this correlation. Conventional approaches that treat all online comments as a unified entity inevitably overlook the critical issue that “consumer sentiment may shift as product value declines.”

Secondly, phased sentiment analysis enables precise identification of how product value changes influence online comments, providing a theoretical foundation for accurately interpreting consumers’ online purchasing behaviors. This study also carries implications for NLP, as developing NLP models based on product value fluctuations can enhance the real-world explanatory power of data mining outcomes.

Furthermore, when constructing theoretical models of online consumption behavior, scholars may incorporate product value as a control variable to analyze discrepancies between product value and consumers’ perceived value, thereby explaining cognitive dissonance in consumers’ evaluation of products versus their online comments. This study sheds light on how product value fluctuations relate to sentiment variations in online comments, extending the product value variable to theoretical domains such as sentiment analysis, NLP, and consumer behavior research.

6.3. Managerial Implications

Regarding the managerial implications, both e-commerce platforms and sellers can benefit from the results. E-commerce platforms can gain a clearer understanding of the phased fluctuation characteristics of online comments, thereby developing more reasonable recommendation algorithms or ranking display mechanisms. When an e-commerce platform detects collective sentiment fluctuations in a product’s online comments, it should analyze whether the underlying cause stems from shifts in product value. If product value volatility is identified as the primary driver, the platform’s intervention and moderation mechanisms should be designed based on the comment characteristics observed across comparable value cycles—rather than reacting solely to short-term changes in positive or negative ratings. Furthermore, the platform’s recommendation algorithms and personalized systems ought to incorporate product value dynamics as a control variable, enabling the generation of consumer-centric recommendations that account for value-induced sentiment patterns. A value cycle-based product ranking or classification system could also enhance transparency, allowing consumers to intuitively assess a product’s current market positioning.

For sellers, grounded in the objective reality of product value fluctuations, more effective strategies for managing online comments and product quality can be formulated. Sellers need to establish product-specific value-tracking systems. For high-value products, a surge in negative comments should trigger immediate intervention. However, when a product transitions from high to low value, the decision to intervene must be informed by comparative analysis against comments from analogous value cycles: corrective action is warranted only if negativity exceeds historical norms for that value phase. Aligning with this study’s findings, real-time sentiment monitoring systems should evaluate not only contemporaneous comment sentiments but also their divergence from patterns in matched value periods. Such systems would optimize customer relationship management strategies and inform data-driven product quality interventions.
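The phase-matched intervention rule described above can be sketched as a simple threshold check. This is an illustrative sketch, not a procedure used in this study: the function name `should_intervene`, the tolerance `k` (in standard deviations above the historical mean), and all numbers are hypothetical assumptions.

```python
from statistics import mean, stdev


def should_intervene(current_negative_share, matched_history, k=2.0):
    """Flag intervention when negativity exceeds the norm for the matched value phase.

    current_negative_share: share of negative comments in the current window (0..1).
    matched_history: negative shares observed in the same value phase of earlier cycles.
    k: hypothetical tolerance, in standard deviations above the historical mean.
    """
    if len(matched_history) < 2:
        raise ValueError("need at least two historical observations")
    threshold = mean(matched_history) + k * stdev(matched_history)
    return current_negative_share > threshold


# Illustrative numbers only: a low-value phase where higher negativity is normal.
low_value_history = [0.18, 0.22, 0.20, 0.21]
flag_normal = should_intervene(0.23, low_value_history)  # within the phase norm
flag_excess = should_intervene(0.35, low_value_history)  # well above the phase norm
print(flag_normal, flag_excess)
```

Comparing against the matched phase rather than a global baseline keeps expected late-stage negativity from triggering unnecessary interventions, while still flagging unusual surges.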

6.4. Limitations and Future Research

While this study provides valuable insights into the impact of product value on sentiment fluctuations in online comments, it has several limitations pertaining to dataset constraints, model limitations, and potential variables.

First, the analysis is confined to a single product category dataset. Although many products exhibit typical value fluctuation patterns, this study selected only the most representative product data due to observed variations in value fluctuation cycles, market scales, and significant disparities in online comment volumes across different products during preliminary data screening. Potential biases inherent in this data may compromise the validity of the findings. Future research should incorporate cross-category comparative studies to examine sentiment evolution characteristics across diverse product types, which would help determine whether the product value–comment sentiment relationship remains consistent across different products.

Additionally, while this study primarily employs the LBBA model and provides comparative analyses with four alternative models in Table 3, computational discrepancies among different models may exist, potentially limiting the generalizability of the proposed framework. The current model is restricted to textual data analysis and operates within predefined product value metrics, both of which may influence research conclusions. Subsequent studies could develop a multimodal intelligent sentiment analysis model for online comments from a dynamic product value perspective, integrating textual, visual, and audio/video comments to automatically identify phased fluctuation patterns. Such models could significantly enhance the accuracy and scalability of staged sentiment analysis.

A final limitation involves unaddressed potential variables such as promotional effects and regional economic disparities. Consequently, the conclusions may exhibit reduced validity in specific market contexts. Future investigations should examine how product value influences comment sentiment across different markets or cultural backgrounds, thereby strengthening the theoretical foundation for understanding the product value–online comment relationship.

Author Contributions

Conceptualization, J.L. and J.S.; methodology, J.S.; software, J.S.; validation, J.L.; formal analysis, J.L.; investigation, J.L.; resources, J.S.; data curation, J.S.; writing—original draft preparation, J.S.; writing—review and editing, J.L.; visualization, J.S.; supervision, J.L.; project administration, J.L.; funding acquisition, J.L. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Natural Science Foundation of China [grant number 72171121] and the Postgraduate Research & Practice Innovation Program of Jiangsu Province [grant number KYCX25_1270].

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The datasets used and/or analyzed during the current study are available from the corresponding author upon reasonable request.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Ghasemaghaei, M.; Eslami, S.P.; Deal, K.; Hassanein, K. Reviews’ length and sentiment as correlates of online reviews’ ratings. Internet Res. 2018, 28, 544–563.

- Xiao, L.; Ni, Y.; Wang, C. Research on the pricing strategy of e-commerce agricultural products: Should presale be adopted? Electron. Commer. Res. 2025, 25, 2039–2066.

- Davis, J.M.; Agrawal, D. Understanding the role of interpersonal identification in online review evaluation: An information processing perspective. Int. J. Inf. Manag. 2018, 38, 140–149.

- Fang, C.; Ma, S. Home is best: Review source and cross-border online shopping. Electron. Commer. Res. Appl. 2024, 68, 101457.

- Hu, N.; Pavlou, P.A.; Zhang, J. On Self-Selection Biases in Online Product Reviews. MIS Q. 2017, 41, 449–471.

- Mudambi, S.M.; Schuff, D. Research Note: What Makes a Helpful Online Review? A Study of Customer Reviews on Amazon.com. MIS Q. 2010, 34, 185.

- Deng, Y.; Zheng, J.; Khern-am-nuai, W.; Kannan, K. More than the Quantity: The Value of Editorial Reviews for a User-Generated Content Platform. Manag. Sci. 2022, 68, 6865–6888.

- Khern-am-nuai, W.; Kannan, K.; Ghasemkhani, H. Extrinsic versus Intrinsic Rewards for Contributing Reviews in an Online Platform. Inf. Syst. Res. 2018, 29, 871–892.

- Huang, J.; Xue, Y.; Hu, X.; Jin, H.; Lu, X.; Liu, Z. Sentiment analysis of Chinese online reviews using ensemble learning framework. Clust. Comput. 2019, 22, 3043–3058.

- Zhou, H.; Wu, J.; Chen, T.; Xia, M. Synergy or substitution? Interactive effects of user-generated cues and seller-generated cues on consumer purchase behavior toward fresh agricultural products. Electron. Commer. Res. 2025.

- Almeida, C.; Castro, C.; Leiva, V.; Braga, A.C.; Freitas, A. Optimizing Sentiment Analysis Models for Customer Support: Methodology and Case Study in the Portuguese Retail Sector. J. Theor. Appl. Electron. Commer. Res. 2024, 19, 1493–1516.

- Hussain, A.; Cambria, E. Semi-supervised learning for big social data analysis. Neurocomputing 2018, 275, 1662–1673.

- Lyu, J.C.; Han, E.L.; Luli, G.K. COVID-19 Vaccine–Related Discussion on Twitter: Topic Modeling and Sentiment Analysis. J. Med. Internet Res. 2021, 23, e24435.

- Shapiro, A.H.; Sudhof, M.; Wilson, D.J. Measuring news sentiment. J. Econom. 2022, 228, 221–243.

- Ji, F.; Cao, Q.; Li, H.; Fujita, H.; Liang, C.; Wu, J. An online reviews-driven large-scale group decision making approach for evaluating user satisfaction of sharing accommodation. Expert Syst. Appl. 2023, 213, 118875.

- Robertson, C.E.; Pröllochs, N.; Schwarzenegger, K.; Pärnamets, P.; Van Bavel, J.J.; Feuerriegel, S. Negativity drives online news consumption. Nat. Hum. Behav. 2023, 7, 812–822.

- Huai, S.; Van De Voorde, T. Which environmental features contribute to positive and negative perceptions of urban parks? A cross-cultural comparison using online reviews and Natural Language Processing methods. Landsc. Urban Plan. 2022, 218, 104307.

- Zhao, Y.; Yang, G. Deep Learning-based Integrated Framework for stock price movement prediction. Appl. Soft Comput. 2023, 133, 109921.

- Savini, E.; Caragea, C. Intermediate-Task Transfer Learning with BERT for Sarcasm Detection. Mathematics 2022, 10, 844.

- Liu, H.; Wu, S.; Zhong, C.; Liu, Y. The effects of customer online reviews on sales performance: The role of mobile phone’s quality characteristics. Electron. Commer. Res. Appl. 2023, 57, 101229.

- Tang, F.; Fu, L.; Yao, B.; Xu, W. Aspect based fine-grained sentiment analysis for online reviews. Inf. Sci. 2019, 488, 190–204.

- Gan, Q.; Ferns, B.H.; Yu, Y.; Jin, L. A Text Mining and Multidimensional Sentiment Analysis of Online Restaurant Reviews. J. Qual. Assur. Hosp. Tour. 2017, 18, 465–492.

- Li, X.; Wu, C.; Mai, F. The effect of online reviews on product sales: A joint sentiment-topic analysis. Inf. Manag. 2019, 56, 172–184.

- Liu, J.; Sun, Y.; Zhang, Y.; Lu, C. Research on Online Review Information Classification Based on Multimodal Deep Learning. Appl. Sci. 2024, 14, 3801.

- Chen, X.; Zou, D.; Xie, H.; Cheng, G.; Li, Z.; Wang, F. Automatic Classification of Online Learner Reviews Via Fine-Tuned BERTs. Int. Rev. Res. Open Distrib. Learn. 2025, 26, 57–79.

- Pan, Y.; Xu, L. Detecting fake online reviews: An unsupervised detection method with a novel performance evaluation. Int. J. Electron. Commer. 2024, 28, 84–107.

- Kalabikhina, I.; Moshkin, V.; Kolotusha, A.; Kashin, M.; Klimenko, G.; Kazbekova, Z. Advancing Semantic Classification: A Comprehensive Examination of Machine Learning Techniques in Analyzing Russian-Language Patient Reviews. Mathematics 2024, 12, 566.

- Dambros, J.I.; Storch, T.T.; Pegoraro, C.; Crizel, G.R.; Gonçalves, B.X.; Quecini, V.; Fialho, F.B.; Rombaldi, C.V.; Girardi, C.L. Physicochemical properties and transcriptional changes underlying the quality of ‘Gala’ apples (Malus × domestica Borkh.) under atmosphere manipulation in long-term storage. J. Sci. Food Agric. 2023, 103, 576–589.

- Lin, Y.; Wang, X. Does establishing regional brands of agricultural products improve the reputation of e-commerce? Evidence from China. Electron. Commer. Res. 2023.

- Maisenbacher, S.; Fürtbauer, D.; Behncke, F.; Lindemann, U. Integrated value engineering-implementation of value optimization potentials. In DS 84, Proceedings of the DESIGN 2016 14th International Design Conference, Cavtat, Dubrovnik, Croatia, 16–19 May 2016; Faculty of Mechanical Engineering and Naval Architecture, University of Zagreb, The Design Society: Glasgow, Scotland, UK, 2016; pp. 361–370.

- Zeithaml, V.A. Consumer Perceptions of Price, Quality, & Value: A Means-End Model and Synthesis of Evidence. J. Mark. 1988, 52, 2–22.

- Toivonen, R.M. Product quality and value from consumer perspective—An application to wooden products. J. For. Econ. 2012, 18, 157–173.

- Hou, C.; Jo, M.-S.; Sarigöllü, E. Feelings of satiation as a mediator between a product’s perceived value and replacement intentions. J. Clean. Prod. 2020, 258, 120637.

- Browning, T.R.; Deyst, J.J.; Eppinger, S.D.; Whitney, D.E. Adding value in product development by creating information and reducing risk. IEEE Trans. Eng. Manag. 2002, 49, 443–458.

- Sonderegger, A.; Sauer, J. The influence of socio-cultural background and product value in usability testing. Appl. Ergon. 2013, 44, 341–349.

- Barney, S.; Aurum, A.; Wohlin, C. A product management challenge: Creating software product value through requirements selection. J. Syst. Archit. 2008, 54, 576–593.

- Yan, T.; Wagner, S.M. Do what and with whom? Value creation and appropriation in inter-organizational new product development projects. Int. J. Prod. Econ. 2017, 191, 1–14.

- Chowdhery, S.A.; Bertoni, M. Modeling resale value of road compaction equipment: A data mining approach. IFAC-PapersOnLine 2018, 51, 1101–1106.

- Du, Y.; Jin, X.; Han, H.; Wang, L. Reusable electronic products value prediction based on reinforcement learning. Sci. China Technol. Sci. 2022, 65, 1578–1586.

- Cong, P.; Zhou, J.; Chen, M.; Wei, T. Personality-Guided Cloud Pricing via Reinforcement Learning. IEEE Trans. Cloud Comput. 2022, 10, 925–943.

- Wang, M.; Tian, L.; Zhou, P. A novel approach for oil price forecasting based on data fluctuation network. Energy Econ. 2018, 71, 201–212.

- Luo, L.; Duan, S.; Shang, S.; Pan, Y. What makes a helpful online review? Empirical evidence on the effects of review and reviewer characteristics. Online Inf. Rev. 2021, 45, 614–632.

- Zhang, Z.; Chen, G.; Zhang, J.; Guo, X.; Wei, Q. Providing Consistent Opinions from Online Reviews: A Heuristic Stepwise Optimization Approach. Inf. J. Comput. 2016, 28, 236–250.

- Qin, J.; Zheng, P.; Wang, X. Comprehensive helpfulness of online reviews: A dynamic strategy for ranking reviews by intrinsic and extrinsic helpfulness. Decis. Support Syst. 2022, 163, 113859.

- Shao, Y.; Ji, X.; Cai, L.; Akter, S. Determinants of online clothing review helpfulness: The roles of review concreteness, variance and valence. Ind. Textila 2021, 72, 639–644.

- Karimi, S.; Wang, F. Online review helpfulness: Impact of reviewer profile image. Decis. Support Syst. 2017, 96, 39–48.

- Feng, Y.; Yin, Y.; Wang, D.; Dhamotharan, L.; Ignatius, J.; Kumar, A. Diabetic patient review helpfulness: Unpacking online drug treatment reviews by text analytics and design science approach. Ann. Oper. Res. 2023, 328, 387–418.

- Pu, Z.; Li, S.; Wu, J.; Liu, Y. Social Network Integration and Online Review Helpfulness: An Empirical Investigation. J. Assoc. Inf. Syst. 2024, 25, 1563–1584.

- Chen, M.-Y.; Teng, C.-I.; Chiou, K.-W. The helpfulness of online reviews: Images in review content and the facial expressions of reviewers’ avatars. Online Inf. Rev. 2019, 44, 90–113.

- Zeng, D.; Dai, Y.; Li, F.; Wang, J.; Sangaiah, A.K. Aspect based sentiment analysis by a linguistically regularized CNN with gated mechanism. J. Intell. Fuzzy Syst. 2019, 36, 3971–3980.

- Gong, B.; Liu, R.; Zhang, X.; Chang, C.-T.; Liu, Z. Sentiment analysis of online reviews for electric vehicles using the SMAA-2 method and interval type-2 fuzzy sets. Appl. Soft Comput. 2023, 147, 110745.

- Dang, Y.; Zhang, Y.; Chen, H. A Lexicon-Enhanced Method for Sentiment Classification: An Experiment on Online Product Reviews. IEEE Intell. Syst. 2010, 25, 46–53.

- Ghiassi, M.; Zimbra, D.; Lee, S. Targeted Twitter Sentiment Analysis for Brands Using Supervised Feature Engineering and the Dynamic Architecture for Artificial Neural Networks. J. Manag. Inf. Syst. 2016, 33, 1034–1058.

- Yan, C.; Liu, J.; Liu, W.; Liu, X. Sentiment Analysis and Topic Mining Using a Novel Deep Attention-Based Parallel Dual-Channel Model for Online Course Reviews. Cogn. Comput. 2023, 15, 304–322.

- Chen, X.; Xie, H. A Structural Topic Modeling-Based Bibliometric Study of Sentiment Analysis Literature. Cogn. Comput. 2020, 12, 1097–1129.

- Khoo, C.S.; Johnkhan, S.B. Lexicon-based sentiment analysis: Comparative evaluation of six sentiment lexicons. J. Inf. Sci. 2018, 44, 491–511.

- Chifu, A.-G.; Fournier, S. Sentiment Difficulty in Aspect-Based Sentiment Analysis. Mathematics 2023, 11, 4647.

- Wu, D.D.; Zheng, L.; Olson, D.L. A Decision Support Approach for Online Stock Forum Sentiment Analysis. IEEE Trans. Syst. Man Cybern. Syst. 2014, 44, 1077–1087.

- Dong, X.; Hu, C.; Heller, J.; Deng, N. Does using augmented reality in online shopping affect post-purchase product perceptions? A mixed design using machine-learning based sentiment analysis, lab experiments, & focus groups. Int. J. Inf. Manag. 2025, 82, 102872.

- Yuan, H.; Xu, W.; Li, Q.; Lau, R. Topic sentiment mining for sales performance prediction in e-commerce. Ann. Oper. Res. 2018, 270, 553–576.

- Chen, Y.; Zhong, Y.; Yu, S.; Xiao, Y.; Chen, S. Exploring Bidirectional Performance of Hotel Attributes through Online Reviews Based on Sentiment Analysis and Kano-IPA Model. Appl. Sci. 2022, 12, 692.

- Han, Y.; Du, J.; Bao, J.; Wang, W. Effect of apple texture properties and pectin structure on the methanol production in apple wine. LWT 2025, 216, 117369.

- Cheng, J.; Yu, J.; Tan, D.; Wang, Q.; Zhao, Z. Life cycle assessment of apple production and consumption under different sales models in China. Sustain. Prod. Consum. 2025, 55, 100–116.

- Zhu, X.; Zhang, Q.; Ma, B. Predicting and interpreting digital platform survival: An interpretable machine learning approach. Electron. Commer. Res. Appl. 2024, 67, 101423.

- Basiri, M.E.; Nemati, S.; Abdar, M.; Cambria, E.; Acharya, U.R. ABCDM: An Attention-based Bidirectional CNN-RNN Deep Model for sentiment analysis. Future Gener. Comput. Syst. 2021, 115, 279–294.

- Xu, G.; Zhang, Z.; Zhang, T.; Yu, S.; Meng, Y.; Chen, S. Aspect-level sentiment classification based on attention-BiLSTM model and transfer learning. Knowl.-Based Syst. 2022, 245, 108586.

- Feng, Z.; Zhou, H.; Zhu, Z.; Mao, K. Tailored text augmentation for sentiment analysis. Expert Syst. Appl. 2022, 205, 117605.

- Na, J.; Long, R.; Chen, H.; Ma, W.; Huang, H.; Wu, M.; Yang, S. Sentiment analysis of online reviews of energy-saving products based on transfer learning and LBBA model. J. Environ. Manag. 2024, 360, 121083.

- Schwarz, C. Ldagibbs: A Command for Topic Modeling in Stata Using Latent Dirichlet Allocation. Stata J. Promot. Commun. Stat. Stata 2018, 18, 101–117.

- Kukreja, V. Comic exploration and Insights: Recent trends in LDA-Based recognition studies. Expert Syst. Appl. 2024, 255, 124732.

- Yang, H.; Li, J.; Chen, S. TopicRefiner: Coherence-Guided Steerable LDA for Visual Topic Enhancement. IEEE Trans. Vis. Comput. Graph. 2024, 30, 4542–4557.

- Fan, W.; Liu, Y.; Li, H.; Tuunainen, V.K.; Lin, Y. Quantifying the effects of online review content structures on hotel review helpfulness. Internet Res. 2022, 32, 202–227.

- Zhang, M.; Sun, L.; Wang, G.A.; Li, Y.; He, S. Using neutral sentiment reviews to improve customer requirement identification and product design strategies. Int. J. Prod. Econ. 2022, 254, 108641.

- Wu, M.; Long, R.; Chen, F.; Chen, H.; Bai, Y.; Cheng, K.; Huang, H. Spatio-temporal difference analysis in climate change topics and sentiment orientation: Based on LDA and BiLSTM model. Resour. Conserv. Recycl. 2023, 188, 106697.

- Ihou, K.E.; Amayri, M.; Bouguila, N. Stochastic variational optimization of a hierarchical dirichlet process latent Beta-Liouville topic model. ACM Trans. Knowl. Discov. Data (TKDD) 2022, 16, 1–48.

- Osmani, A.; Mohasefi, J.B.; Gharehchopogh, F.S. Enriched latent dirichlet allocation for sentiment analysis. Expert Syst. 2020, 37, e12527.

- Loshchilov, I.; Hutter, F. Decoupled weight decay regularization. arXiv 2017, arXiv:1711.05101.

- Hasan, M.U.; Singh, Z.; Shah, H.M.S.; Kaur, J.; Woodward, A. Water Loss: A Postharvest Quality Marker in Apple Storage. Food Bioprocess Technol. 2024, 17, 2155–2180.

- Cui, G.; Lui, H.K.; Guo, X. The Effect of Online Consumer Reviews on New Product Sales. Int. J. Electron. Commer. 2012, 17, 3–58.

- Wan, Y.; Zhang, Y.; Wang, F.; Yuan, Y. Retailer response to negative online consumer reviews: How can damaged trust be effectively repaired? Inf. Technol. Manag. 2023, 24, 37–53.

- Wang, X.; Lv, T.; Cai, R.; Deng, X. Research on content analysis and quality evaluation for online reviews of new energy vehicles. Inf. Dev. 2024.

- Suki, N.M. Consumption values and consumer environmental concern regarding green products. Int. J. Sustain. Dev. World Ecol. 2015, 22, 269–278.

- Li, H.; Luo, J.; Li, H.; Han, S.; Fang, S.; Li, L.; Han, X.; Wu, Y. Consumer Cognition Analysis of Food Additives Based on Internet Public Opinion in China. Foods 2022, 11, 2070.

- Zhou, Y.; Zhang, Q.; Singh, V.P.; Xiao, M. General correlation analysis: A new algorithm and application. Stoch. Environ. Res. Risk Assess. 2015, 29, 665–677.

- Zhou, X.; Shen, H. Regularized canonical correlation analysis with unlabeled data. J. Zhejiang Univ.-Sci. A 2009, 10, 504–511.

- Min, W.; Liu, J.; Zhang, S. Sparse Weighted Canonical Correlation Analysis. Chin. J. Electron. 2018, 27, 459–466.

- Festinger, L. A Theory of Cognitive Dissonance; Row, Peterson: Evanston, IL, USA, 1957.

- Bian, Y.; Shan, D.; Yan, X.; Zhang, J. New energy vehicle demand forecasting via an improved Bass model with perceived quality identified from online reviews. Ann. Oper. Res. 2024.

- Nilashi, M.; Abumalloh, R.A.; Ahmadi, H.; Samad, S.; Alrizq, M.; Abosaq, H.; Alghamdi, A. The nexus between quality of customer relationship management systems and customers’ satisfaction: Evidence from online customers’ reviews. Heliyon 2023, 9, e21828.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).