Abstract

As smart voice assistants (SVAs) become increasingly integrated into digital commerce, understanding the psychological factors that drive their adoption or resistance is essential. While prior research has addressed the impact of privacy concerns, few studies have explored the competing forces that shape user decisions. Drawing on Behavioral Reasoning Theory (BRT), this study investigates the dual role of privacy cynicism as a context-specific belief that influences both trust (a reason for) and perceived creepiness (a reason against), which in turn affect attitudes, behavioral intentions, and resistance toward SVA usage. Data were gathered from 250 Turkish consumers aged 18–35 through an online survey using convenience sampling, and the research model was analyzed using PLS-SEM. The findings revealed that perceived creepiness increases resistance intention but does not significantly affect attitudes toward using SVAs. Privacy cynicism was found to positively influence perceived trust, and perceived trust, in turn, increased both behavioral intentions and attitudes toward using SVAs. Furthermore, attitudes toward SVA usage decreased resistance intention and increased behavioral intention. The results highlight the role of consumer trust and skepticism in AI-driven marketing. The study offers theoretical contributions by extending BRT with a novel dual-path conceptualization of privacy cynicism, as well as practical implications for developers aiming to boost SVA adoption through trust-building and privacy assurance strategies.

1. Introduction

The swift integration of artificial intelligence (AI) into business environments is transforming the operational landscape of modern organizations and prompting fundamental shifts in strategic direction [1]. Within this rapidly evolving context, AI-powered smart voice assistants (SVAs) have emerged as intelligent software systems that use natural language processing (NLP) to recognize, interpret, and respond to human speech in a natural, human-like manner [2]. Advancements in NLP and voice recognition technologies have significantly enhanced SVAs’ ability to process human language, resulting in more fluid, intuitive, and lifelike interactions between consumers and machines [3].

As a result of these technological developments, SVAs have evolved into key marketing tools, reshaping traditional models of consumer–company interaction [4]. Increasingly, consumers prefer fast, hands-free, and frictionless solutions—needs that SVAs are uniquely positioned to meet [5]. These assistants now play a central role in facilitating everyday activities, such as online shopping, information retrieval, and smart home control, all through voice commands [6,7]. In response to growing consumer expectations for speed, ease of use, and convenience, SVAs have become among the most widely adopted AI applications in day-to-day life.

The American Marketing Association has identified SVAs as the future of marketing, suggesting that these technologies may ultimately replace traditional mobile shopping apps. Their integration with popular messaging platforms such as Facebook, WhatsApp, and Skype enables consumers to interact with brands through conversational interfaces, creating seamless and intuitive shopping experiences [8]. Market forecasts echo this enthusiasm. The Market Research Future Report [9] estimates that the global voice agent market will exceed USD 24.98 billion by 2030, with a projected compound annual growth rate (CAGR) of 24.2%. Similarly, Future Market Insights [10] predicts that the conversational commerce sector will reach USD 26.3 billion by 2032.

Despite these optimistic projections and the technical capabilities of SVAs, their real-world adoption has been less robust than anticipated. Some recent reports even point to stagnation or a decline in usage rates among both consumers and retailers [11]. This disconnect between technological promise and behavioral reality underscores a critical need to understand not only the motivators but also the psychological and perceptual barriers to SVA adoption. Existing research has primarily focused on overall SVA adoption [5,12,13,14,15], using well-established frameworks such as the “technology acceptance model” (TAM) [16], the “Unified Theory of Acceptance and Use of Technology” (UTAUT) [17], and the “service robot acceptance model” (SRAM) [18]. However, given the increasing complexity of smart technologies and the emotional and cognitive tensions they provoke, there is a growing need for more comprehensive frameworks that also account for resistance behaviors alongside adoption intentions [6].

To address this research gap, the present study investigates the following question: “What are the motivators and barriers influencing consumers’ use of SVAs for online shopping?” Drawing on insights from consumer behavior and SVA literature, this study applies Behavioral Reasoning Theory (hereafter BRT) as a theoretical foundation. BRT examines the relationships among individual beliefs, values, reasons (both for and against behavior), global motives, intentions, and actual behavior. Unlike traditional consumer behavior theories that emphasize unidirectional influences, BRT enables researchers to capture the competing motivations behind adoption and resistance simultaneously [19].

BRT plays a critical role in this study by offering a structured dual-pathway model for understanding both positive and negative behavioral intentions toward SVAs. Within this framework, perceived trust serves as a “reason for” adoption, while perceived creepiness functions as a “reason against”, both shaped by privacy cynicism, which we conceptualize as a core value-based belief. The model also includes attitude as a global motive mediating the effects of trust and creepiness on consumers’ adoption and resistance intentions. This dual-path approach allows for a more comprehensive view of consumer behavior by accounting for the internal tensions and ambivalence often associated with AI-driven technologies. By doing so, it not only explains why consumers adopt SVAs but also why they may resist them, thereby offering marketers and developers actionable insights for improving user experience and reducing psychological barriers to adoption.

Given the increasing relevance of privacy in digital commerce, this study places special emphasis on privacy-related constructs. According to a recent PwC report [20], consumer concerns around data collection and sharing are among the most prominent reasons for resistance to SVAs (p. 4). In this context, we examine how privacy cynicism, perceived creepiness, trust, and attitude collectively shape consumers’ behavioral intentions toward SVA usage. Data were collected via a convenience sample of Turkish consumers aged 18–35 years, all of whom have experience using SVAs. The responses (n = 250) were analyzed using partial least squares structural equation modeling (PLS-SEM) to explore the proposed relationships and assess the model’s explanatory power.

This study contributes to the literature in three key ways. First, it extends the BRT framework by addressing Sahu et al.’s [21] call for integrating constructs such as privacy cynicism and perceived creepiness into AI-focused marketing research. Second, it advances our understanding of consumer behavior by identifying privacy cynicism as a foundational belief that influences how users perceive and engage with AI technologies. This perspective reflects the growing consumer skepticism around data privacy and the trade-offs it entails. Third, it enhances the literature on SVAs by empirically validating trust and creepiness as psychological factors that explain the ambivalence many users feel toward voice-based AI systems. In an era of accelerating AI integration, understanding how consumers navigate tensions between trust and privacy is essential for both academic inquiry and practical application.

The remainder of this study is organized as follows: Section 2 discusses the theoretical background and develops the hypotheses. Section 3 describes the sampling technique and the measures. Section 4 presents the results of the data analysis. The subsequent sections discuss the findings, elaborate on the theoretical contributions and managerial implications, and address the limitations and directions for future research.

2. Literature Review and Hypothesis Development

2.1. Smart Voice Assistants

SVAs are advanced software applications that leverage AI and NLP to comprehend and interpret human speech, enabling them to generate responses that simulate natural, human-like conversation [2]. These technologies have become nearly ubiquitous in modern society [6,22], with increasing integration into everyday life. They are compatible with a diverse array of devices, including smartphones, smart speakers, computers (desktop/laptop), tablets, wearables, gaming consoles, TV sets, VR headsets, cars, and various Internet of Things (IoT) devices [23]. Voice assistants can understand spoken commands, allowing them to perform a wide variety of tasks using natural, everyday language. These tasks range from providing news updates and weather forecasts to facilitating online shopping, managing playlists, or making reservations [24]. In the academic literature, these systems are referred to by various terms, including voice-based conversational agents [7], smart voice assistants [12], AI-powered conversational digital assistants [23], and voice-activated digital assistants [14].

The existing body of literature underscores the growing importance of research on SVAs and indicates a pressing need for further academic investigation [25,26]. Research in this domain has moved beyond traditional technology adoption frameworks to a broader exploration of factors related to SVA use, including the determinants of purchase intention [27], continued usage [28,29], consumer loyalty and involvement [30], and effects on consumer well-being [31]. Technology adoption studies draw on prominent theories such as the TAM [32], the UTAUT [33], and the service robot acceptance model [24] to explain the adoption of SVAs. These theories focus mainly on how users’ perceptions of and attitudes toward a technology influence their intention to use it, whereas BRT, a relatively new approach, offers a broader account of how people weigh different reasons (both positive and negative) when deciding whether to engage in a behavior, including adopting or resisting a technology [34]. Therefore, this study examines SVA usage for online shopping within the BRT framework.

2.2. Behavioral Reasoning Theory (BRT)

BRT, introduced by Westaby [34] in 2005, is a framework used to understand the cognitive processes that influence people’s decisions to engage in specific behaviors. It builds on earlier models such as the Theory of Planned Behavior [35] and the Theory of Reasoned Action [36] but addresses their limitations by incorporating both facilitating and inhibiting factors. It suggests that behavior is driven not only by attitudes or intentions but also by the reasons individuals perceive for taking or not taking an action. These reasons can be positive (supporting the behavior) or negative (hindering it), and they directly affect the individual’s intentions and subsequent actions. Positive reasons are referred to as “reasons for”, while negative reasons are called “reasons against” [34]. BRT has been applied in various domains, such as consumer behavior [37], health interventions [38], and technology adoption [39], where it enables researchers to analyze how opposing motivational forces influence intentions and behaviors in context-specific ways. BRT provides valuable insights into decision-making, offering a deeper understanding of how people evaluate the justifications for their actions and the barriers they face in carrying them out [34].

Previous studies have applied BRT to explore adoption of and resistance to electric vehicles [40], sustainable purchasing intentions [41], webrooming behaviors [42], organic food buying behavior [43], sustainable clothing [44], and IoT-based wearables [45]. However, research on SVA adoption within the BRT framework remains limited. For instance, Jan et al. [7] employed an extended version of BRT to study adoption and resistance intentions toward AI-powered conversational agents for shopping purposes. The study found that the technology readiness motivators (optimism, innovativeness) were positively associated with reasons for usage, and the inhibitors (discomfort, insecurity) positively affected reasons against usage. Reasons for usage (perceived ease of use, perceived usefulness, perceived trendiness, perceived innovativeness) were positively related to attitudes toward usage and usage intention, while reasons against usage (perceived image barrier, perceived functional risk barrier, perceived intrusiveness) had a significant positive effect on resistance intention. The study also found that attitude toward usage was positively associated with usage intention and negatively associated with resistance intention.

Choudhary et al. [13] assessed the factors that influence customers’ adoption of AI-based voice assistants, using BRT to examine the relationships between enablers and inhibitors of adoption. Hedonic motivation, performance expectancy, social influence, price value, facilitating conditions, and effort expectancy were modeled as “reasons for” constructs, whereas perceived risk, the image barrier, and the value barrier were modeled as “reasons against” constructs. The study highlighted that “reasons for” (enablers) are far more influential than “reasons against” (inhibitors) in forming attitudes and generating intentions to adopt voice assistants.

In this study, BRT is used to investigate the factors affecting behavioral and resistance intentions toward SVAs for online shopping purposes. Sahu et al. [21] recently conducted a systematic literature review on BRT and suggested enhancing the existing framework by incorporating new context-specific exogenous variables. In response to this call, perceived trust and perceived creepiness are integrated into the framework as reason-for and reason-against constructs, respectively, both of which predict attitude. In addition, privacy cynicism is integrated into the model as an antecedent of both the reason-for and reason-against constructs. In this way, the BRT framework is extended with new context-specific constructs in the context of SVA adoption, as suggested by Sahu et al. [21].

2.3. Perceived Cynicism and Reasons for/Against Usage

Reasons for and against are the justifications individuals use for the behaviors they decide to engage in, and they play a crucial role in individual decision-making. In the BRT framework, reasons for and against usage are predicted by context-specific values or beliefs [34]. In this study, privacy cynicism is examined as a belief that predicts perceived creepiness (a reason against usage) and perceived trust (a reason for usage).

Cynicism refers to negative attitudes and feelings toward individuals or issues [46,47]. It usually arises when individuals face difficulties, hopelessness, or disappointment, especially when their expectations are unmet [47]. Cynicism has mainly been studied in psychology and organizational contexts, where research has often focused on dyadic relationships. Research shows that cynicism is negatively related to trust in various contexts (e.g., [48,49]). In the realm of data capitalism, privacy cynicism is a new concept that reflects doubt, helplessness, and distrust toward online services regarding personal data protection [50]. Privacy cynicism is a mindset that consumers develop to set aside privacy concerns while using the internet without putting much effort into protecting their privacy [51] (p. 1168).

Privacy cynicism can be defined as a belief or attitude characterized by frustration, hopelessness, and disillusionment regarding privacy protection efforts, particularly in the context of data collection practices by powerful institutions such as tech companies [52] (p. 149). This belief arises from feelings of powerlessness and resignation toward data handling, whereby individuals perceive privacy protection as futile because surveillance seems inevitable and they lack control over their personal information [50] (p. 5). According to Lutz et al. [51], cynicism relates to users’ feelings of uncertainty and helplessness when interacting with new technologies such as chatbots and SVAs. As users engage with these technologies, they often feel a loss of control over their personal data, leading to discomfort or creepiness. Creepiness is an emotional response of consumers to privacy issues that leads to resistance to AI agents. Rajaobelina et al. [53] showed that perceived creepiness leads to resistance intention toward smart home devices. Yip et al. [54] found that a lack of control is a key factor contributing to children’s perceptions of creepiness. Hoffmann et al. [50] highlighted that consumers tend to adopt a cynical attitude when they experience such a lack of control. In the context of online shopping with SVAs, consumers may feel uneasy about sharing their personal and financial data, which could foster privacy cynicism and, ultimately, increase perceived creepiness, a reason against using SVAs for shopping purposes. Moreover, privacy cynicism is expected to inhibit perceived trust, a reason for using SVAs for shopping purposes. Therefore, we posit the following hypotheses:

H1:

Perceived cynicism positively affects perceived creepiness toward SVA usage for online shopping purposes.

H2:

Perceived cynicism negatively affects perceived trust toward SVA usage for online shopping purposes.

2.4. Reasons for/Against Usage and Attitudes

Attitudes represent a customer’s evaluative judgment of a specific object, such as a product, service, or brand, categorizing it as “preferred or non-preferred”, liked or disliked, “favorable or unfavorable”, or “good or bad” [55,56]. Pennington and Hastie [57] suggest that in the decision-making process, consumers tend to form positive perceptions of adoption when the supporting reasons are stronger, whereas negative perceptions emerge when the reasons against adoption are more compelling. Studies based on BRT have shown that reasons for adoption positively influence attitudes toward adoption, while reasons against negatively affect these attitudes [7,19]. Raff et al. [58] revealed that perceived creepiness is a negative perception arising from privacy intrusions, unpredictability, and violations of social norms in the context of smart home devices. Moreover, privacy concerns, such as fears of eavesdropping or data misuse, and the unpredictable behavior of smart technologies heighten perceived creepiness, which leads users to avoid SVAs. Accordingly, perceived creepiness is expected to play a crucial role in shaping negative attitudes toward SVA usage. Conversely, perceived trust is a positive perception that shapes positive attitudes and intentions in the context of smart systems [56]. Thus, perceived trust is expected to positively affect attitudes toward SVA usage for online shopping. Based on this evidence, the following hypotheses are proposed:

H3:

Perceived creepiness negatively affects attitude toward SVA usage for online shopping purposes.

H4:

Perceived trust positively affects attitude toward SVA usage for online shopping purposes.

2.5. Perceived Creepiness—Resistance Intention

The concept of innovation resistance was first introduced by Jagdish Sheth, referring to consumers’ reluctance to embrace changes brought about by innovation [59]. Various psychological theories discuss resistance to change, suggesting that consumers tend to prefer maintaining their current state rather than adopting innovations that may disrupt their routines [60,61]. Thus, resistance can be seen as a typical consumer response to changes induced by innovation [62]. Ram [63] developed the innovation resistance model based on the innovation diffusion theory, which posits that higher resistance leads to slower acceptance. Resistance to innovative products often stems from individual differences or negative beliefs about the product, manifesting as either passive or active resistance. Active resistance, in particular, is driven by negative perceptions of the innovation, such as feelings of creepiness [59]. Perceived creepiness refers to discomfort caused by the unexpected use of personal data or by intrusive technology, leading to emotions such as fear and doubt [64,65]. Studies show that these negative feelings increase resistance to new technologies, including artificial intelligence apps [66]. For example, smart home assistants can trigger perceptions of creepiness, contributing to consumer resistance [58]. Therefore, in this study, perceived creepiness is treated as a barrier to the adoption of SVAs for shopping purposes. The hypothesis is posited as follows:

H5:

Perceived creepiness positively affects resistance intentions toward SVA usage for shopping purposes.

2.6. Perceived Trust—Behavioral Intention

Trust denotes the readiness of consumers to rely on the systems and applications they engage with [67] (p. 82). Perceived trust is the belief that a new technological system functions reliably [56]. A system fosters trust in the consumer when it operates effectively during use [18]. Perceived trust is one of the key factors in the acceptance of new technologies. Consumer distrust of virtual algorithms often arises from concerns about the misuse of personal data [23]. Perceived trust boosts intentions by increasing confidence and reducing perceived risks [68]. Studies on voice-based devices indicate that trust, driven by social and functional factors, influences behavioral intentions, with these devices being seen as social entities [18,69]. Alagarsamy and Mehrolia [70] found that trust in AI explains 11% of the variance in consumers’ intention, while Kasilingam [8] showed that perceived trust directly affects chatbot usage within the TAM. These findings suggest that when voice assistant systems build trust, consumers are more likely to adopt them for online shopping. Therefore, we expect that behavioral intention is positively influenced by perceived trust in the context of SVAs. The hypothesis is posited as follows:

H6:

Perceived trust has a positive influence on behavioral intention toward SVA usage for shopping purposes.

2.7. Attitudes—Behavioral Intention—Resistance Intention

Behavioral intention denotes the probability or eagerness of an individual to participate in a particular behavior [71], such as adopting or using a new technology [16]. The relationship between attitude and behavioral intention is central to technology acceptance research [72], highlighted by models like the TAM, Theory of Reasoned Action (TRA), and UTAUT. According to the TAM, user attitudes are crucial for explaining adoption behavior. Previous studies have shown that attitude is a key predictor of intention in various contexts, such as travel intention [55], mobile technologies [73], autonomous vehicles [74], and AI-based voice assistants [7,8,75]. Recent research using the BRT framework has explored the effect of attitude on the intention to use AI-based technologies [43,76] and voice assistants [30,69]. Jan et al. [7] found that attitude positively influenced usage intention and negatively affected resistance intention toward AI-powered conversational agents. Based on these findings, it is expected that attitudes toward SVAs will positively impact usage intention and negatively influence resistance intention. Therefore, we posit the following hypotheses:

H7:

Attitude toward SVAs negatively affects resistance intention toward SVA usage for shopping purposes.

H8:

Attitude toward SVAs positively affects behavioral intention toward SVA usage for shopping purposes.

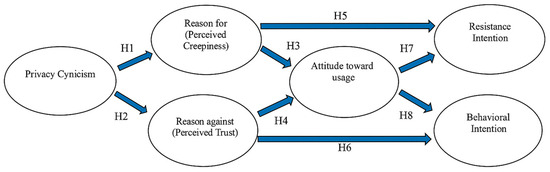

The research model, grounded in BRT, was developed based on insights from consumer behavior and technology adoption literature, as illustrated in Figure 1.

Figure 1.

Research model.
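As a compact complement to Figure 1, the hypothesized paths (H1 to H8) can be written as linear structural equations among standardized latent variables. This is a sketch for orientation only: the abbreviations (CYN = privacy cynicism, CRP = perceived creepiness, TRU = perceived trust, ATT = attitude toward SVA usage, RI = resistance intention, BI = behavioral intention), the coefficient symbols, and the error terms ζ are our own notation, and the equations list only the paths stated in the hypotheses.

```latex
\begin{aligned}
\text{CRP} &= \gamma_{1}\,\text{CYN} + \zeta_{1}, && \text{H1: } \gamma_{1} > 0\\
\text{TRU} &= \gamma_{2}\,\text{CYN} + \zeta_{2}, && \text{H2: } \gamma_{2} < 0\\
\text{ATT} &= \beta_{3}\,\text{CRP} + \beta_{4}\,\text{TRU} + \zeta_{3}, && \text{H3: } \beta_{3} < 0,\ \text{H4: } \beta_{4} > 0\\
\text{RI}  &= \beta_{5}\,\text{CRP} + \beta_{7}\,\text{ATT} + \zeta_{4}, && \text{H5: } \beta_{5} > 0,\ \text{H7: } \beta_{7} < 0\\
\text{BI}  &= \beta_{6}\,\text{TRU} + \beta_{8}\,\text{ATT} + \zeta_{5}, && \text{H6: } \beta_{6} > 0,\ \text{H8: } \beta_{8} > 0
\end{aligned}
```

Written this way, each of the five endogenous constructs has its own R², and each coefficient corresponds to one hypothesis.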

3. Methodology

3.1. Sampling

The target population for this study comprises Turkish users of smart voice assistants (SVAs), with the sample consisting of 250 individuals aged between 18 and 35 years. This age group was selected due to its higher likelihood of adopting and engaging with emerging technologies. The sample size was determined based on the widely accepted “ten times the number of items” rule, commonly applied in confirmatory factor analysis to ensure adequate statistical power [77]. A convenience sampling technique was utilized [1,56,71], and data were collected via an online survey, a method recognized for its efficiency and cost-effectiveness in terms of both time and resources [78].

The questionnaire was created using Google Forms and disseminated through digital platforms such as WhatsApp and email. Data collection occurred over a one-month period beginning on 21 May 2024. Before participating in the study, all individuals received a comprehensive explanation outlining the research objectives. They were explicitly informed that their participation was entirely voluntary, that they could withdraw at any time without consequence, and that their responses would be treated with strict confidentiality and used solely for academic purposes [72]. The survey, designed exclusively for scholarly research, required approximately 6 to 7 min to complete. A total of 262 responses were initially collected; however, 12 were excluded due to incomplete or inconsistent data, resulting in a final dataset of 250 valid responses for analysis.

3.2. Measures

The survey used in this study consists of two sections, preceded by an information paragraph about the research. The first section includes questions on participants’ demographic characteristics. The second section contains scales measuring privacy cynicism, perceived creepiness, perceived trust, resistance intention, attitude, and behavioral intention. All constructs were assessed using validated and reliable scales from the existing literature.

Perceived creepiness was measured using a seven-item scale developed by Raff et al. [58] and adapted to the study context. Attitude was assessed with four items from Jan et al. [7]. Resistance intention was assessed with three items adapted from Handrich [66]. Privacy cynicism items were derived from Acikgoz and Vega [79]. Perceived trust was measured with two items adapted from Fernandes and Oliveira [24]. Behavioral intention items were based on the scale developed by Joo and Sang [80]. All items were rated on a five-point Likert scale (1 = “strongly disagree”, 5 = “strongly agree”). To ensure both linguistic accuracy and content validity, the measurement scales used in the study were first translated into Turkish using the translation and back-translation method by two academic experts. The initial Turkish versions were subsequently evaluated by faculty members from the Department of Business Administration and the Department of Technology Education at Hatay Mustafa Kemal University. Following this review, the Turkish text was retranslated into English by an academician affiliated with the Center for Foreign Languages. A comparison between the retranslated version and the original scales revealed no significant semantic discrepancies.

To further enhance the linguistic precision of the Turkish version, the questionnaire was collaboratively reviewed by scholars from the Department of Turkish Language Teaching. Necessary adjustments were made to improve clarity and grammatical accuracy. The revised instrument was then subjected to expert evaluation by academics with experience in scale development and validation. In an effort to optimize data quality, a pilot study was conducted with a sample of 65 participants. Based on the feedback obtained, revisions were made to items that could potentially cause misunderstanding. Following these refinements, the final version of the questionnaire was completed and subsequently used in the main phase of data collection. A total of 250 completed questionnaires were analyzed to test the proposed hypotheses.

4. Analyses and Findings

First, the characteristics of the sample were described. Second, construct validity was assessed. Finally, the study hypotheses were tested with partial least squares structural equation modeling (PLS-SEM) using SmartPLS 4 (Version 4.1.0.5), which is well suited to small-to-medium samples [81].

4.1. Descriptive Statistics of the Sample

Table 1 shows the descriptive statistics of the participants: 49.20% are male and 50.80% are female. The majority of participants were aged between eighteen and twenty-five. Most participants use SVAs once or twice a week. Common purposes for using SVAs include setting alarms (29.20%), checking the weather forecast (26%), and online shopping (16%).

Table 1.

Demographic characteristics of the sample (n = 250).

4.2. Measurement Model Assessment

First, a “confirmatory factor analysis” (CFA) was conducted to assess the measurement model. In this process, reliability, convergent validity, and discriminant validity were examined. Each factor loading exceeded 0.70, and the “Average Variance Extracted” (AVE) values surpassed the recommended threshold of 0.50, confirming convergent validity (see Table 2) [82].

Table 2.

Results of the measurement model.

Reliability was evaluated using Cronbach’s alpha (CA) and Composite Reliability (CR), alongside the AVE scores. Cronbach’s alpha values ranged from 0.741 to 0.955, CR values exceeded 0.70, and AVE values exceeded 0.50, indicating that all scales demonstrated adequate reliability [83]. The results of the measurement model are presented in Table 2.
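The reliability and convergent validity criteria above can be computed directly from standardized outer loadings and raw item scores. The following Python sketch is illustrative only: the function names are hypothetical, the composite reliability formula is the ρ_c variant that assumes uncorrelated indicator errors, and the example loadings are made up rather than taken from Table 2.

```python
import numpy as np


def average_variance_extracted(loadings):
    """AVE: mean of the squared standardized outer loadings of one construct."""
    lam = np.asarray(loadings, dtype=float)
    return float(np.mean(lam ** 2))


def composite_reliability(loadings):
    """Composite reliability (rho_c) from standardized loadings.

    The error variance of each indicator is taken as 1 - loading**2,
    i.e. indicator errors are assumed to be uncorrelated.
    """
    lam = np.asarray(loadings, dtype=float)
    num = lam.sum() ** 2
    return float(num / (num + np.sum(1.0 - lam ** 2)))


def cronbach_alpha(items):
    """Cronbach's alpha for an (n_respondents, k_items) score matrix."""
    x = np.asarray(items, dtype=float)
    k = x.shape[1]
    item_var = x.var(axis=0, ddof=1).sum()      # sum of item variances
    total_var = x.sum(axis=1).var(ddof=1)       # variance of the summed scale
    return float(k / (k - 1) * (1.0 - item_var / total_var))


if __name__ == "__main__":
    loadings = [0.78, 0.82, 0.85]  # hypothetical loadings, not from Table 2
    ave = average_variance_extracted(loadings)
    cr = composite_reliability(loadings)
    print(f"AVE = {ave:.3f} (criterion > 0.50), CR = {cr:.3f} (criterion > 0.70)")
```

Because the thresholds differ (0.50 for AVE, 0.70 for CR and alpha), computing them side by side makes it easy to check that each criterion is applied to the right statistic.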

Discriminant validity was checked with heterotrait–monotrait (HTMT) ratios, which are more effective than traditional approaches such as the Fornell–Larcker criterion and cross-loadings for variance-based SEM [81] (p. 128). Discriminant validity is established when the HTMT ratio for each pair of constructs is below 0.90. All HTMT ratios were lower than 0.90, indicating that discriminant validity was achieved (see Table 3).
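For readers unfamiliar with the HTMT criterion, the sketch below computes it from an item correlation matrix as the mean correlation between the items of two constructs divided by the geometric mean of the average within-construct item correlations. It is a minimal illustration that assumes items are coded so that correlations within each construct are positive and that each construct has at least two items; the function name and the example matrix are hypothetical.

```python
import numpy as np


def htmt(corr, idx_i, idx_j):
    """Heterotrait-monotrait ratio for two constructs.

    corr  : item-level correlation matrix (p x p)
    idx_i : column indices of the items of construct i (at least two)
    idx_j : column indices of the items of construct j (at least two)
    """
    r = np.asarray(corr, dtype=float)
    # Mean heterotrait correlation: every item of i against every item of j.
    hetero = r[np.ix_(idx_i, idx_j)].mean()

    def mono(idx):
        # Mean of the off-diagonal correlations among one construct's own items.
        block = r[np.ix_(idx, idx)]
        k = len(idx)
        return (block.sum() - np.trace(block)) / (k * (k - 1))

    return float(hetero / np.sqrt(mono(idx_i) * mono(idx_j)))


if __name__ == "__main__":
    # Hypothetical 4-item example: items 0-1 form construct A, items 2-3 construct B.
    corr = [
        [1.0, 0.6, 0.3, 0.3],
        [0.6, 1.0, 0.3, 0.3],
        [0.3, 0.3, 1.0, 0.8],
        [0.3, 0.3, 0.8, 1.0],
    ]
    value = htmt(corr, [0, 1], [2, 3])
    print(f"HTMT = {value:.3f}; below 0.90: {value < 0.90}")
```

In a full analysis this ratio would be computed for every pair of constructs and compared against the 0.90 cut-off.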

Table 3.

HTMT ratios of the measurement model.

Finally, model fit was checked. As shown in Table 4, the model had acceptable fit indices (SRMR < 0.10, NFI ≥ 0.80) [84]. This completed the assessment of validity and reliability, establishing the construct validity of the measurement model.

Table 4.

Model fit.

Common method variance (CMV) was assessed using both Harman’s single-factor test [85] and a full collinearity assessment approach [86]. The results of the single-factor test indicated that no single factor emerged and that the first unrotated factor accounted for only 28% of the total variance, which is below the commonly accepted threshold of 50%, suggesting that common method bias is unlikely to be a concern [85]. Furthermore, the full collinearity assessment showed that all variance inflation factor (VIF) values were below the conservative threshold of 3.3, indicating the absence of multicollinearity and supporting the conclusion that the data are not affected by common method bias [86,87].
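Both common method variance checks can be approximated in a few lines of Python. This sketch makes simplifying assumptions: Harman’s test is approximated by the first unrotated principal component of the item correlation matrix (the exact extraction method used in the study is not stated), and the full collinearity VIFs are obtained by regressing each latent variable score on all the others, which for standardized scores equals the diagonal of the inverse correlation matrix. The function names are hypothetical.

```python
import numpy as np


def harman_first_factor_share(corr):
    """Share of total variance captured by the first unrotated principal component.

    Largest eigenvalue of the item correlation matrix divided by the number
    of items (a PCA approximation of Harman's single-factor test).
    """
    r = np.asarray(corr, dtype=float)
    eigenvalues = np.linalg.eigvalsh(r)  # ascending order for symmetric matrices
    return float(eigenvalues[-1] / r.shape[0])


def vifs_from_corr(corr):
    """VIF_j = 1 / (1 - R_j^2) = j-th diagonal element of the inverse correlation matrix."""
    return np.diag(np.linalg.inv(np.asarray(corr, dtype=float)))


def full_collinearity_vifs(scores):
    """Full collinearity VIFs for an (n_respondents, k_latent_variables) score matrix."""
    r = np.corrcoef(np.asarray(scores, dtype=float), rowvar=False)
    return vifs_from_corr(r)


if __name__ == "__main__":
    # Hypothetical 4-item correlation matrix with uniform 0.5 inter-item correlations.
    corr = np.full((4, 4), 0.5)
    np.fill_diagonal(corr, 1.0)
    share = harman_first_factor_share(corr)
    print(f"first factor share = {share:.1%} (concern if > 50%)")
    print("VIFs:", np.round(vifs_from_corr([[1.0, 0.5], [0.5, 1.0]]), 3))
```

Each VIF is then compared with the 3.3 threshold, and the first-factor share with the 50% threshold.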

VIF (multicollinearity), R² (explanatory power), f² (effect size), and Q² (predictive relevance) were assessed to evaluate the structural model of this study (see Table 5). The inner VIF values for all constructs remained below the threshold of 5 [88] (p. 194), indicating the absence of multicollinearity issues. The R² values for the endogenous variables were 0.662 for behavioral intention, 0.572 for attitude toward SVA usage, 0.128 for resistance intention, 0.060 for perceived creepiness, and 0.046 for perceived trust, indicating that the model explains 66% of the variance in behavioral intention, 57% in attitude toward SVA usage, 13% in resistance intention, 6% in perceived creepiness, and 5% in perceived trust. Cohen [89] suggested that f² values of 0.02, 0.15, and 0.35 or higher represent small, medium, and large effect sizes, respectively. In the model, the effect sizes of perceived creepiness on resistance intention (f² = 0.123, R² = 0.128) and of perceived cynicism on perceived creepiness (f² = 0.068, R² = 0.060) are both small.
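Cohen’s f² compares the R² of an endogenous construct with and without a given predictor: f² = (R²_included − R²_excluded) / (1 − R²_included). The Python sketch below applies that formula together with the thresholds cited above. As an illustrative check, it uses the shortcut that for a construct with a single standardized predictor R² equals the squared path coefficient, so excluding the predictor leaves R² = 0; the β values are those reported for H1 and H2, while the function names are hypothetical.

```python
def f_squared(r2_included, r2_excluded):
    """Cohen's f^2 for dropping one predictor from a structural equation."""
    return (r2_included - r2_excluded) / (1.0 - r2_included)


def cohen_label(f2):
    """Classify an f^2 value with Cohen's thresholds (0.02, 0.15, 0.35)."""
    if f2 >= 0.35:
        return "large"
    if f2 >= 0.15:
        return "medium"
    if f2 >= 0.02:
        return "small"
    return "negligible"


def f_squared_single_predictor(beta):
    """With one standardized predictor, R^2 = beta^2; removing it leaves R^2 = 0."""
    return f_squared(beta ** 2, 0.0)


if __name__ == "__main__":
    # Path coefficients reported for H1 and H2 (single-predictor equations).
    for name, beta in [("cynicism -> creepiness", 0.253), ("cynicism -> trust", 0.224)]:
        f2 = f_squared_single_predictor(beta)
        print(f"{name}: f2 = {f2:.3f} ({cohen_label(f2)})")
```

Run as a script, it prints f² values of about 0.068 and 0.053 for these two paths, matching the values reported for them, and classifies both as small.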

Table 5. Measurement model results.

The effect of attitude toward SVA usage on resistance intention is small (f2 = 0.027, R2 = 0.128). The effect of perceived trust on behavioral intention (f2 = 0.103, R2 = 0.662) is small, whereas the effect of attitude toward SVA usage on behavioral intention (f2 = 0.423, R2 = 0.662) is large. The effect of perceived cynicism on perceived trust (f2 = 0.053, R2 = 0.046) is small. The effect of perceived creepiness on attitude toward SVA usage (f2 = 0.001, R2 = 0.572) is negligible, falling below the 0.02 threshold, whereas the effect of perceived trust on attitude toward SVA usage (f2 = 1.350, R2 = 0.572) is large. The predictive relevance of the latent variables was assessed with Q2; a Q2 greater than 0 indicates that the latent variables in the research model have predictive relevance [88]. As shown in Table 5, the Q2 values are positive, suggesting that the latent constructs in our model have predictive relevance.
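The f2 effect size follows the usual definition, which compares the R2 of an endogenous construct with and without a given predictor: f2 = (R2_included − R2_excluded) / (1 − R2_included). The sketch below, with hypothetical helper names, computes f2 from a pair of R2 values and labels it with Cohen’s benchmarks of 0.02, 0.15, and 0.35. The R2 pair in the example is hypothetical; the sketch does not re-estimate this study’s model.

```python
def cohens_f2(r2_included: float, r2_excluded: float) -> float:
    """f2 = (R2_included - R2_excluded) / (1 - R2_included)."""
    return (r2_included - r2_excluded) / (1.0 - r2_included)


def classify_f2(f2: float) -> str:
    """Label an f2 value using Cohen's benchmarks (0.02, 0.15, 0.35)."""
    if f2 >= 0.35:
        return "large"
    if f2 >= 0.15:
        return "medium"
    if f2 >= 0.02:
        return "small"
    return "negligible"


# Hypothetical R2 of one endogenous construct with and without a predictor
f2 = cohens_f2(r2_included=0.662, r2_excluded=0.600)
print(f"f2 = {f2:.3f} ({classify_f2(f2)})")
```

Because the denominator is the unexplained variance of the full model, the same drop in R2 yields a larger f2 when the full model already explains most of the variance.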

4.3. Hypothesis Testing

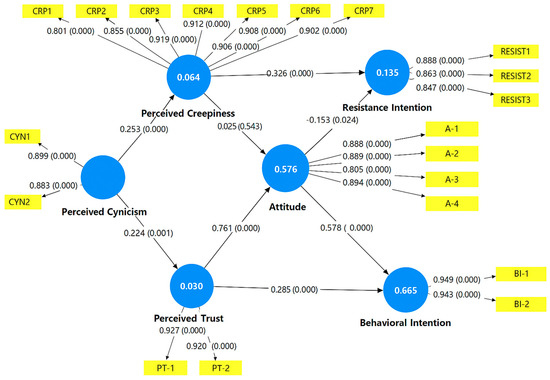

The structural model hypotheses were tested using SmartPLS 4. Bootstrapping with 10,000 resamples was used to test the significance of the path coefficients and loadings [90]. The results of the structural model analysis are shown in Figure 2 and Table 6.
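To illustrate what the bootstrapping step does, the sketch below resamples respondents with replacement 10,000 times and recomputes a path coefficient in each resample. As a simplifying assumption, the path is the standardized slope between two simulated composite scores (equal to their Pearson correlation), not a full PLS-SEM estimate. The p-value uses a two-tailed normal approximation to t = β / SE_boot, and a 95% percentile interval is reported alongside. All names and data are hypothetical.

```python
import math

import numpy as np


def standardized_slope(x: np.ndarray, y: np.ndarray) -> float:
    """With a single predictor, the standardized slope equals Pearson's r."""
    return float(np.corrcoef(x, y)[0, 1])


def bootstrap_path(x, y, n_boot=10_000, seed=0):
    """Bootstrap SE, t, p, and 95% percentile CI for one standardized path."""
    rng = np.random.default_rng(seed)
    n = len(x)
    beta = standardized_slope(x, y)
    boot = np.empty(n_boot)
    for b in range(n_boot):
        idx = rng.integers(0, n, size=n)  # draw n cases with replacement
        boot[b] = standardized_slope(x[idx], y[idx])
    se = float(boot.std(ddof=1))  # bootstrap standard error
    t = beta / se
    p = math.erfc(abs(t) / math.sqrt(2))  # two-tailed p, normal approximation
    lo, hi = np.percentile(boot, [2.5, 97.5])
    return beta, se, t, p, (float(lo), float(hi))


# Hypothetical example: two simulated composite scores for n = 250 respondents
rng = np.random.default_rng(42)
x = rng.normal(size=250)
y = 0.25 * x + rng.normal(size=250)
beta, se, t, p, (lo, hi) = bootstrap_path(x, y)
print(f"beta = {beta:.3f}, SE = {se:.3f}, t = {t:.2f}, p = {p:.4f}, "
      f"95% CI = [{lo:.3f}, {hi:.3f}]")
```

Resampling whole cases keeps each respondent’s scores together, so the bootstrap distribution reflects sampling variability without assuming normally distributed scores.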

Figure 2. Structural model analyses results.

Table 6. Structural model results.

Perceived cynicism positively affects perceived creepiness (β = 0.253, p < 0.001); H1 is supported. The effect of perceived cynicism on perceived trust is positive and significant (β = 0.224, p = 0.001), contrary to the hypothesized direction; H2 is therefore not supported. The effect of perceived creepiness on attitude toward SVA usage is positive but not significant (β = 0.25, p = 0.544); H3 is not supported. Perceived trust positively affects attitude toward SVA usage (β = 0.761, p < 0.001); H4 is supported. Perceived creepiness positively affects resistance intention (β = 0.326, p < 0.001); H5 is supported. Perceived trust positively affects behavioral intention (β = 0.285, p < 0.001); H6 is supported. Attitude toward SVA usage negatively affects resistance intention (β = −0.153, p = 0.024); H7 is supported. Attitude toward SVA usage positively affects behavioral intention (β = 0.578, p < 0.001); H8 is supported.

5. Discussion

This study explores how privacy cynicism, perceived creepiness, and perceived trust shape consumer attitudes and behavioral intentions toward SVA usage for online shopping, drawing on BRT. BRT provides a valuable theoretical lens for understanding how individuals form attitudes and behavioral intentions based on reasons for and reasons against a given behavior. In this context, privacy cynicism was conceptualized as a context-specific belief expected to inhibit adoption, while perceived creepiness functioned as a reason against adoption and perceived trust as a reason for adoption. The findings offer significant theoretical and practical implications regarding the psychological mechanisms underlying SVA adoption.

The results confirmed Hypothesis 1, demonstrating that privacy cynicism positively influences perceived creepiness, suggesting that consumers who harbor skepticism about corporate data handling and digital surveillance are more likely to feel discomfort and unease when interacting with SVAs. From a BRT perspective, this underscores how enduring belief systems (privacy cynicism) reinforce affective resistance (creepiness), leading to psychological barriers to adoption. These findings are consistent with previous work (e.g., Rajaobelina et al. [53]), which highlights that privacy cynicism heightens feelings of uncertainty, loss of control, and vulnerability—hallmarks of the creepiness response.

However, contrary to Hypothesis 2, the study found a positive and statistically significant relationship between privacy cynicism and perceived trust. While initially surprising, this paradox aligns with emerging research on privacy resignation and functional trust [47,50,51], which suggests that users may continue to trust and engage with digital tools despite harboring privacy concerns. Rather than actively resisting technology, users may develop a form of resigned trust—a rational adaptation where the perceived inevitability of data collection leads to functional engagement, especially if the platform is familiar or perceived as indispensable.

This finding also resonates with the privacy paradox [91,92], where consumers’ expressed concerns about privacy coexist with continued technology use. Our results offer a novel extension to this discussion, suggesting not merely behavioral inconsistency but attitudinal ambivalence. That is, consumers may experience discomfort (creepiness) and trust simultaneously, reflecting cognitive dissonance (Festinger [93]). One possible resolution mechanism is compartmentalization, where users transfer trust to specific brands or technologies—perceived as more secure or user-friendly—while remaining skeptical of the broader data ecosystem.

This dual-path explanation helps reconcile the coexistence of privacy cynicism’s positive influence on both trust and creepiness, offering deeper insight into how consumers navigate the complex trade-offs in AI-enabled commerce. As suggested by van Ooijen et al. [52] and Wang and Herrando [94], brand familiarity, positive prior experience, and habitual use may act as buffers that reduce the disruptive effect of privacy concerns on trust. While not directly measured in this study, these variables represent important moderators for future research.

The findings regarding perceived creepiness and attitude (H3) revealed no statistically significant relationship, suggesting that discomfort alone may not be sufficient to reduce favorable attitudes toward SVAs. This result challenges the conventional assumption that negative affect automatically translates into unfavorable evaluations [95,96]. Within BRT, it is plausible that users, especially digital natives, have developed a tolerance for certain privacy-related discomforts in exchange for convenience, functionality, or entertainment. In other words, consumers may recognize the benefits of SVAs despite their discomfort, weighing emotional unease against practical benefits, which results in an ambivalent attitude rather than outright rejection.

This study confirms that perceived trust has a strong positive effect on attitudes toward SVA usage (H4), reinforcing its role as a key reason for adoption within the BRT framework. Consumers who perceive SVAs as reliable and secure develop more favorable attitudes, which, in turn, increase their willingness to engage with the technology [56,72]. Trust has long been recognized as a key driver of acceptance in AI and technology adoption models (e.g., TAM, UTAUT). This underscores the centrality of trust in shaping consumer acceptance of AI-driven shopping tools and highlights the importance of establishing transparent data policies and reliable security measures [97] to foster positive attitudes. This finding is consistent with the existing literature [98,99].

As expected, perceived creepiness positively influences resistance intentions (H5), indicating that the discomfort and unease associated with SVAs lead consumers to actively resist their adoption. This finding is consistent with the literature [53,66] and aligns with BRT’s notion that strong reasons against a behavior can significantly contribute to avoidance or resistance [7,34]. Unlike attitudes, which may be shaped by multiple competing factors, resistance intentions appear to be more directly influenced by negative affective responses. Practically, this finding calls for minimizing uncanny, intrusive, or overly anthropomorphic elements in voice assistant design to mitigate negative reactions.

Finally, the findings support the hypothesis that perceived trust positively affects behavioral intentions toward SVA usage (H6), further validating trust as a critical reason for adoption. This finding is consistent with the broader TAM literature, emphasizing that trust not only shapes attitudes but also directly translates into behavioral engagement [24,79,99,100]. Within the BRT framework, this suggests that strong reasons for adoption (trust) can facilitate consumer willingness to adopt AI tools even in the presence of underlying privacy skepticism.

Taken together, these findings provide a more holistic view of consumer ambivalence, especially among younger users such as Generation Z. As noted by Bunea et al. [101], Gen Z consumers tend to report high awareness of privacy issues while simultaneously embracing AI technologies for their utility and personalization. Our study builds on this by showing that privacy cynicism does not necessarily act as a universal inhibitor. Instead, its effects are channeled through two psychological mechanisms, creepiness and trust, which operate in parallel rather than in opposition. This layered interpretation of consumer reasoning deepens the explanatory power of BRT and offers a valuable extension beyond linear acceptance models. It suggests that adoption and resistance can coexist in user decision-making, driven by a balance of cognitive, affective, and contextual factors, particularly in AI-driven commerce, where digital skepticism and dependency are increasingly intertwined.

From a model performance perspective, the framework shows strong explanatory power for adoption, accounting for 66.2% of the variance in behavioral intention and 57.2% in attitude toward SVA usage, although only 12.8% of the variance in resistance intention. The explanatory power for behavioral intention exceeds typical outcomes in TAM-based SVA research (e.g., [79]: R2 = 0.41). This reinforces the utility of BRT, especially when expanded to include dual-path mechanisms, in capturing the complexity of contemporary technology adoption behavior.

6. Implications and Limitations

6.1. Theoretical Implications

This study makes several important theoretical contributions by extending the BRT framework into the domain of AI-enabled commerce, with a specific focus on SVA usage. While BRT has previously been used to explain intention formation through the interaction of beliefs, reasons, attitudes, and behavioral outcomes, our model introduces a novel conceptualization of privacy cynicism as a dual-path antecedent—shaping both “reasons for” and “reasons against” SVA adoption. Specifically, privacy cynicism was found to positively influence perceived trust (a reason for) and perceived creepiness (a reason against), demonstrating that a single belief construct can simultaneously activate opposing psychological responses within the same decision-making framework.

This dual-path approach departs from the traditional linearity of many technology acceptance models (e.g., TAM, UTAUT), which typically emphasize utilitarian or hedonic predictors such as performance expectancy or perceived ease of use. While these models effectively capture functional evaluations of technology, they often overlook the emotional and value-based inhibitors that increasingly influence user responses to AI-driven systems. In contrast, our BRT-based model integrates both rational–cognitive reasoning (e.g., trust rooted in functional reliability) and emotional–affective reactions (e.g., discomfort stemming from perceived surveillance), offering a more holistic and psychologically grounded understanding of consumer ambivalence.

Furthermore, the study contributes to the literature on the privacy paradox [91,92] and privacy resignation [50,51], two key concepts explaining why individuals continue to engage with digital platforms despite harboring privacy concerns. Our findings suggest that privacy cynicism does not uniformly inhibit trust. Instead, it may give rise to what we conceptualize as resigned trust—a cognitive adaptation where users rationalize continued engagement based on familiarity, routine, or perceived brand credibility. This insight adds nuance to the privacy paradox literature by shifting the focus from behavioral inconsistency to attitudinal dualism, revealing that trust and skepticism may coexist and jointly shape AI technology adoption.

Importantly, our work distinguishes itself from prior studies such as that of Acikgoz and Vega [79], who explored the role of privacy cynicism in SVA adoption through the TAM framework. While their findings highlighted its negative impact on usage intention, they did not account for the competing psychological mechanisms—such as emotional discomfort and rationalized trust—that are central to BRT. Nor did they examine resistance intention as a distinct outcome. While we do not claim to be the first to link privacy cynicism to SVA usage, our contribution lies in embedding this belief as a context-specific antecedent within BRT, enabling the simultaneous prediction of both adoption and resistance.

Finally, this study proposes a theoretical refinement of BRT by demonstrating that belief constructs may influence different reasoning processes through distinct psychological mechanisms. For instance, privacy cynicism may foster trust via rational adaptation or habitual usage (a cognitive route), while simultaneously triggering creepiness through emotional discomfort and perceived surveillance (an affective route). This asymmetric reasoning process helps explain why discomfort does not always lead to less favorable attitudes, and why trust can coexist with unease, an insight particularly relevant in AI-mediated environments, where emotional and rational evaluations often diverge. Our enriched model offers a foundation for future research on psychological ambivalence, trust–risk trade-offs, and value-based reasoning in algorithmic and data-intensive technological contexts.

6.2. Practical Implications

From a practical perspective, this study offers several important insights for developers, marketers, and policymakers aiming to increase the adoption of SVAs while mitigating resistance. Given that perceived trust emerged as a pivotal driver of SVA adoption, it is imperative for developers to embed trust-building mechanisms throughout the SVA design and user experience. This includes offering transparent communication about data usage, establishing clear and accessible privacy policies, and assuring users of the security and ethical handling of their personal information [99]. To operationalize these strategies, firms should implement granular privacy controls that allow users to customize the types of data they share and manage consent on an ongoing basis. Embedding user education features that inform individuals about their data rights and privacy options—without overwhelming them—can enhance perceptions of control and increase trust. Moreover, leveraging third-party certifications (e.g., trustmarks or GDPR compliance badges) may signal credibility and adherence to recognized privacy standards, further alleviating skepticism.

Another key recommendation is to adopt permission transparency and opt-in (rather than default) data sharing. Consumers should be invited, rather than defaulted, into data-sharing arrangements, with context-sensitive prompts that clearly state what data are being collected, how they will be used, and for what purpose. This aligns not only with ethical AI principles but also with rising consumer expectations for autonomy in digital environments.

The design of the voice interface itself also plays a role in reducing perceptions of creepiness. Human–computer interaction (HCI) research and AI ethics studies increasingly highlight that design features—such as tone of voice, speech pace, gender choice, and conversational style—can significantly shape emotional responses to AI. Allowing users to personalize the assistant’s voice and interaction style can enhance perceived comfort and reduce the feeling of being surveilled or manipulated. In turn, these features may mitigate negative affective responses such as discomfort or unease.

In addition, co-creating privacy policies with users—through participatory design approaches or user-feedback loops—can increase perceptions of procedural justice, ownership, and transparency. Particularly for digital-native and privacy-aware generations, such as Gen Z, involving users in the governance of their data can reinforce trust and foster long-term loyalty. Efforts should also focus on reducing creepiness by making SVA interactions feel more natural, familiar, and non-intrusive. Marketing campaigns could showcase how SVAs simplify routine tasks while respecting privacy, helping consumers see these tools as supportive rather than invasive. Communicating the convenience-enhancing potential of SVAs—such as time savings, multitasking capabilities, and seamless integration into daily life—can help balance any residual discomfort or skepticism.

Furthermore, this study highlights the need for firms to openly acknowledge and address privacy cynicism. Marketers should clearly explain data protection measures, reinforce ethical standards, and emphasize user control features. As prior research suggests [52,102,103], offering transparent messaging and control options not only fosters confidence but may also reduce emotional reactions associated with loss of control or surveillance anxiety.

Finally, this study has direct policy relevance. Our findings support aligning product development with the transparency obligations of the European Union’s AI Act (e.g., Article 50, which requires that users be informed when they interact with an AI system, and Article 13 on the transparency of high-risk systems) and with the information duties of the GDPR, which require that the purposes of data processing be communicated clearly. These frameworks also call for systems to be designed for explainability and accountability. Complying with them not only fulfills regulatory obligations but also supports trust-building and reduces consumer resistance, showing that ethical and legal alignment can yield both compliance and competitive advantages.

By implementing these actionable strategies—grounded in behavioral theory and regulatory alignment—developers and marketers can more effectively address the emotional, ethical, and cognitive concerns associated with SVA use. Doing so will be essential to fostering greater user adoption and minimizing resistance in an increasingly AI-driven marketplace.

6.3. Limitations and Recommendations for Future Research

This study has some limitations that offer valuable directions for future research. First, the use of a convenience sampling method and the demographic skew toward digitally native consumers—especially those aged 18–25 (63.6% of the sample)—limit the generalizability of the findings. Although this age group is most likely to interact with SVAs for online shopping, the results may not capture the perspectives of older or less digitally literate users. Future research should employ probability-based or stratified sampling strategies to ensure more representative coverage across age groups, socio-economic statuses, and cultural settings. Relatedly, the study’s geographic and demographic scope restricts the transferability of findings to broader populations. As prior research suggests, privacy concerns, trust formation, and technology readiness can differ across generations and cultural contexts. Therefore, future studies should examine these variables using multi-group comparisons or moderation analyses, enabling deeper insights into whether psychological constructs such as privacy cynicism, trust, and creepiness function consistently across diverse cohorts. Comparative cross-cultural studies could also shed light on how regulatory environments (e.g., GDPR-compliant vs. non-compliant regions) and societal norms shape user perceptions of AI technologies.

Second, the study’s cross-sectional design prevents examination of how consumer attitudes, trust, and resistance evolve over time. As AI technologies such as ChatGPT 4.0 and other generative tools evolve rapidly, longitudinal or panel-based research will be essential to capture shifting consumer expectations, especially in response to changes in AI regulation, data governance, and ethical standards.

Third, from a theoretical perspective, while the study was grounded in the BRT, there is merit in integrating complementary models in future research. For example, constructs from UTAUT2 (e.g., performance expectancy) or the TAM (e.g., perceived usefulness) can enrich BRT’s reason-based structure. Combining cognitive evaluations (e.g., usefulness, ease of use), emotional responses (e.g., creepiness), and value-laden beliefs (e.g., privacy cynicism) would provide a more holistic explanatory model. Moreover, applying frameworks such as the privacy calculus or cognitive dissonance theory could uncover how users reconcile contradictory attitudes—for example, distrusting data systems while continuing to use them.

Fourth, due to sample size constraints (n = 250), the present study focused on direct relationships for model parsimony. Future research with larger and more diverse samples should explore mediated mechanisms using advanced techniques such as Hayes’ PROCESS macro. Constructs such as privacy fatigue or perceived manipulative intent (e.g., Yu et al. [102]) could serve as sequential mediators, uncovering the psychological processes driving trust in or resistance to AI. In addition, several control and moderating variables, while conceptually discussed, were not empirically tested. Factors such as prior experience with SVAs, online shopping frequency, and technological literacy likely influence trust and privacy perceptions. Similarly, technology readiness (including optimism, discomfort, and insecurity) may moderate key relationships, particularly those involving privacy cynicism. Including such variables in future research would offer a more granular and behaviorally nuanced understanding of SVA usage.

Fifth, the reliance on self-reported survey data introduces the possibility of social desirability bias, especially regarding attitudes toward AI privacy and ethics. As public awareness of these issues grows, participants may overstate their privacy consciousness or trust in AI. Future studies should complement surveys with objective behavioral data, such as app analytics, system logs, or actual usage metrics, to validate and enrich self-reported findings.

Sixth, although prior work (e.g., Acikgoz and Vega [79]) has explored privacy cynicism in relation to SVA adoption through the TAM framework, our study advances the literature by embedding this construct within BRT as a dual-path belief—influencing both perceived trust (reason for) and perceived creepiness (reason against). This structure enables simultaneous examination of adoption and resistance behaviors, a contribution not fully leveraged in earlier research. Although we did not empirically test the dynamic privacy cynicism model proposed by Lutz et al. [51], we recognize this as a valuable theoretical extension for future inquiry.

Finally, the national context of Turkey may shape user responses to AI and data privacy, particularly given differences in digital governance, cultural attitudes toward authority, and exposure to privacy regulation. To improve external validity, future research should conduct cross-national comparisons that account for policy frameworks, such as the GDPR in Europe, and cultural dimensions influencing AI trust, risk perception, and technology adoption.

7. Conclusions

This study provides valuable insights into the motivators and demotivators influencing consumers’ use of SVAs for online shopping, employing an extended BRT framework. The findings highlight the complex interplay between trust, skepticism, and resistance, emphasizing the central role of perceived trust in shaping both attitudes and behavioral intentions. While perceived creepiness heightened resistance, it did not significantly affect attitudes, suggesting that privacy-related discomfort may not always deter favorable perceptions. Interestingly, privacy cynicism was found to enhance trust, pointing to a paradoxical relationship that warrants further exploration. These results underscore the importance of fostering trust and addressing privacy concerns to encourage the broader adoption of SVAs in e-commerce. From a practical standpoint, the study offers strategic recommendations for marketers, developers, and policymakers aiming to promote the adoption of voice-assisted shopping technologies. By investing in transparent data practices, privacy protection features, and user education, companies can reduce consumer resistance and strengthen trust. Furthermore, tailored approaches that consider generational, psychological, and technological factors will be essential in enhancing user experience and acceptance. Overall, this research contributes to a growing body of literature on AI-driven consumer behavior and sets the stage for more nuanced investigations into the dynamics of emerging digital technologies.

Author Contributions

Conceptualization, M.G.; methodology, M.G. and K.M.; software, M.G.; validation, M.G. and K.M.; formal analysis, M.G.; investigation, M.G.; resources, M.G. and K.M.; data curation, M.G.; writing—original draft preparation, M.G.; writing—review and editing, K.M.; visualization, K.M.; supervision, K.M.; project administration, M.G.; funding acquisition, K.M. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

As this research utilized an anonymous questionnaire, approval from an ethics committee was deemed unnecessary, in line with applicable local regulations.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

All data supporting this study are included within the article.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Almokdad, E.; Lee, C.H. Service robots in the workplace: Fostering sustainable collaboration by alleviating perceived burdensomeness. Sustainability 2024, 16, 9518. [Google Scholar] [CrossRef]

- Mishra, A.; Shukla, A.; Sharma, S.K. Psychological Determinants of Users’ Adoption and Word-of-Mouth Recommendations of Smart Voice Assistants. Int. J. Inf. Manag. 2022, 67, 102413. [Google Scholar] [CrossRef]

- Mo, L.; Zhang, L.; Sun, X.; Zhou, Z. Unlock Happy Interactions: Voice Assistants Enable Autonomy and Timeliness. J. Theor. Appl. Electron. Commer. Res. 2024, 19, 1013–1033. [Google Scholar] [CrossRef]

- Mou, Y.; Meng, X. Alexa, It Is Creeping over Me–Exploring the Impact of Privacy Concerns on Consumer Resistance to Intelligent Voice Assistants. Asia Pac. J. Mark. Logist. 2024, 36, 261–292. [Google Scholar] [CrossRef]

- Cao, D.; Sun, Y.; Goh, E.; Wang, R.; Kuiavska, K. Adoption of Smart Voice Assistants Technology among Airbnb Guests: A Revised Self-Efficacy-Based Value Adoption Model (SVAM). Int. J. Hosp. Manag. 2022, 101, 103124. [Google Scholar] [CrossRef]

- Jayasingh, S.; Sivakumar, A.; Vanathaiyan, A.A. Artificial Intelligence Influencers’ Credibility Effect on Consumer Engagement and Purchase Intention. J. Theor. Appl. Electron. Commer. Res. 2025, 20, 17. [Google Scholar] [CrossRef]

- Jan, I.U.; Ji, S.; Kim, C. What (De)Motivates Customers to Use AI-Powered Conversational Agents for Shopping? The Extended Behavioural Reasoning Perspective. J. Retail. Consum. Serv. 2023, 75, 103440. [Google Scholar] [CrossRef]

- Kasilingam, D.L. Understanding the Attitude and Intention to Use Smartphone Chatbots for Shopping. Technol. Soc. 2020, 62, 101280. [Google Scholar] [CrossRef]

- Market Research Future. Chatbots Market Size to Surpass USD 24.98 Billion at a 24.2% CAGR by 2030—Report by Market Research Future (MRFR). 2022. Available online: https://www.globenewswire.com/en/news-release/2022/09/29/2525394/0/en/Chatbots-Market-Size-to-Surpass-USD-24-98-Billion-at-a-24-2-CAGR-by-2030-Report-by-Market-Research-Future-MRFR.html (accessed on 31 December 2024).

- Future Market Insights. With 15.6% CAGR, Conversational Commerce Market Size to Hit US$26,301.8 Million by 2032. 2022. Available online: https://www.globenewswire.com/en/news-release/2022/08/22/2502010/0/en/With-15-6-CAGR-Conversational-Commerce-Market-Size-to-Hit-US-26-301-8-Million-by-2032-Future-Market-Insights-Inc.html (accessed on 31 December 2024).

- He, J.; Liang, X.; Xue, J. Unraveling the Influential Mechanisms of Smart Interactions on Stickiness Intention: A Privacy Calculus Perspective. J. Theor. Appl. Electron. Commer. Res. 2024, 19, 2582–2604. [Google Scholar] [CrossRef]

- Al-Fraihat, D.; Alzaidi, M.; Joy, M. Why Do Consumers Adopt Smart Voice Assistants for Shopping Purposes? A Perspective from Complexity Theory. Intell. Syst. Appl. 2023, 18, 200230. [Google Scholar] [CrossRef]

- Choudhary, S.; Kaushik, N.; Sivathanu, B.; Rana, N.P. Assessing Factors Influencing Customers’ Adoption of AI-Based Voice Assistants. J. Comput. Inf. Syst. 2024, 1–18. [Google Scholar] [CrossRef]

- Singh, C.; Dash, M.K.; Sahu, R.; Kumar, A. Investigating the Acceptance Intentions of Online Shopping Assistants in E-Commerce Interactions: Mediating Role of Trust and Effects of Consumer Demographics. Heliyon 2024, 10, e25031. [Google Scholar] [CrossRef] [PubMed]

- Vimalkumar, M.; Sharma, S.K.; Singh, J.B.; Dwivedi, Y.K. ‘Okay Google, What about My Privacy?’: User’s Privacy Perceptions and Acceptance of Voice-Based Digital Assistants. Comput. Hum. Behav. 2021, 120, 106763. [Google Scholar] [CrossRef]

- Davis, F.D. Perceived Usefulness, Perceived Ease of Use, and User Acceptance of Information Technology. MIS Q. 1989, 13, 319–340. [Google Scholar] [CrossRef]

- Venkatesh, V.; Davis, F.D. A Theoretical Extension of the Technology Acceptance Model: Four Longitudinal Field Studies. Manag. Sci. 2000, 46, 186–204. [Google Scholar] [CrossRef]

- Wirtz, J.; Patterson, P.G.; Kunz, W.H.; Gruber, T.; Lu, V.N.; Paluch, S.; Martins, A. Brave New World: Service Robots in the Frontline. J. Serv. Manag. 2018, 29, 907–931. [Google Scholar] [CrossRef]

- Claudy, M.C.; Garcia, R.; O’Driscoll, A. Consumer Resistance to Innovation—A Behavioural Reasoning Perspective. J. Acad. Mark. Sci. 2015, 43, 528–544. [Google Scholar] [CrossRef]

- PwC. Prepare for the Voice Revolution: An In-Depth Look at Consumer Adoption and Usage of Voice Assistants, and How Companies Can Earn Their Trust—And Their Business; PwC USA: New York, NY, USA, 2018; Available online: https://www.pwc.com/us/en/services/consulting/library/consumer-intelligence-series/voice-assistants.html (accessed on 25 December 2024).

- Sahu, A.K.; Padhy, R.K.; Dhir, A. Envisioning the Future of Behavioural Decision-Making: A Systematic Literature Review of Behavioural Reasoning Theory. Australas. Mark. J. 2020, 28, 145–159. [Google Scholar] [CrossRef]

- Kaplan, A.M.; Haenlein, M. Siri, Siri, in My Hand: Who’s the Fairest in the Land? On the Interpretations, Illustrations, and Implications of Artificial Intelligence. Bus. Horiz. 2019, 62, 15–25. [Google Scholar] [CrossRef]

- Chattaraman, V.; Kwon, W.S.; Gilbert, J.E.; Ross, K. Should AI-Based, Conversational Digital Assistants Employ Social- or Task-Oriented Interaction Style? A Task-Competency and Reciprocity Perspective for Older Adults. Comput. Hum. Behav. 2019, 90, 315–330. [Google Scholar] [CrossRef]

- Fernandes, T.; Oliveira, E. Understanding Consumers’ Acceptance of Automated Technologies in Service Encounters: Drivers of Digital Voice Assistants Adoption. J. Bus. Res. 2021, 122, 180–191. [Google Scholar] [CrossRef]

- Arnold, A.; Kolody, S.; Comeau, A.; Miguel Cruz, A. What Does the Literature Say about the Use of Personal Voice Assistants in Older Adults? A Scoping Review. Disabil. Rehabil. Assist. Technol. 2024, 19, 100–111. [Google Scholar] [CrossRef] [PubMed]

- Chahal, H.; Mahajan, M. Voice Unbound: The Impact of Localization and Experience on Continuous Personal Voice Assistant Usage and Its Drivers. Int. J. Hum.-Comput. Interact. 2024, 1–18.

- Kautish, P.; Purohit, S.; Filieri, R.; Dwivedi, Y.K. Examining the Role of Consumer Motivations to Use Voice Assistants for Fashion Shopping: The Mediating Role of Awe Experience and eWOM. Technol. Forecast. Soc. Change 2023, 190, 122407.

- Jo, H. Interaction, Novelty, Voice, and Discomfort in the Use of Artificial Intelligence Voice Assistant. Univ. Access Inf. Soc. 2025, 1–14.

- Liébana-Cabanillas, F.J.; Higueras-Castillo, E.; Alonso-Palomo, R.; Japutra, A. Exploring the Determinants of Continued Use of Virtual Voice Assistants: A UTAUT2 and Privacy Calculus Approach. Acad. Rev. Latinoam. Adm. 2025, 38, 156–182.

- Moriuchi, E. Okay, Google!: An Empirical Study on Voice Assistants on Consumer Engagement and Loyalty. Psychol. Mark. 2019, 36, 489–501.

- Kang, W.; Shao, B. The Impact of Voice Assistants’ Intelligent Attributes on Consumer Well-Being: Findings from PLS-SEM and fsQCA. J. Retail. Consum. Serv. 2023, 70, 103130.

- Kowalczuk, P. Consumer Acceptance of Smart Speakers: A Mixed Methods Approach. J. Res. Interact. Mark. 2018, 12, 418–431.

- Venkatesh, V.; Thong, J.Y.L.; Xu, X. Consumer Acceptance and Use of Information Technology: Extending the Unified Theory of Acceptance and Use of Technology. MIS Q. 2012, 36, 157–178.

- Westaby, J.D. Behavioral Reasoning Theory: Identifying New Linkages Underlying Intentions and Behavior. Organ. Behav. Hum. Decis. Process. 2005, 98, 97–120.

- Ajzen, I. The Theory of Planned Behavior. Organ. Behav. Hum. Decis. Process. 1991, 50, 179–211.

- Fishbein, M.; Ajzen, I. Belief, Attitude, Intention, and Behavior: An Introduction to Theory and Research; Addison-Wesley: Reading, MA, USA, 1975.

- Sivathanu, B. Adoption of Online Subscription Beauty Boxes: A Behavioural Reasoning Theory (BRT) Perspective. In Research Anthology on E-Commerce Adoption, Models, and Applications for Modern Business; Information Resources Management Association, Ed.; IGI Global: Hershey, PA, USA, 2021; pp. 958–983.

- Lee, J.C.; Chen, L.; Zhang, H. Exploring the Adoption Decisions of Mobile Health Service Users: A Behavioral Reasoning Theory Perspective. Ind. Manag. Data Syst. 2023, 123, 2241–2266.

- Gupta, A.; Arora, N. Consumer Adoption of M-Banking: A Behavioral Reasoning Theory Perspective. Int. J. Bank Mark. 2017, 35, 733–747.

- Uddin, S.F.; Sabir, L.B.; Kirmani, M.D.; Kautish, P.; Roubaud, D.; Grebinevych, O. Driving Change: Understanding Consumers’ Reasons Influencing Electric Vehicle Adoption from the Lens of Behavioural Reasoning Theory. J. Environ. Manag. 2024, 369, 122277.

- Zafar, A.U.; Sajjad, A.; Agarwal, R.; Lamprinakos, G.; Yaqub, M.Z. Digital transformation portrays a play or ploy for brands: Exploring the impact of green gamification as a digital marketing strategy. Int. Mark. Rev. 2025, ahead of print.

- Zafar, S.; Badghish, S.; Yaqub, R.M.S.; Yaqub, M.Z. The Agency of Consumer Value and Behavioral Reasoning Patterns in Shaping Webrooming Behaviors in Omnichannel Retail Environments. Sustainability 2023, 15, 14852.

- Tandon, A.; Dhir, A.; Kaur, P.; Kushwah, S.; Salo, J. Behavioral Reasoning Perspectives on Organic Food Purchase. Appetite 2020, 154, 104786.

- Diddi, S.; Yan, R.N.; Bloodhart, B.; Bajtelsmit, V.; McShane, K. Exploring Young Adult Consumers’ Sustainable Clothing Consumption Intention-Behavior Gap: A Behavioral Reasoning Theory Perspective. Sustain. Prod. Consum. 2019, 18, 200–209.

- Sivathanu, B. Adoption of internet of things (IOT) based wearables for healthcare of older adults – A behavioural reasoning theory (BRT) approach. J. Enabling Technol. 2018, 12, 169–185.

- Andersson, L.M. Employee Cynicism: An Examination Using a Contract Violation Framework. Hum. Relat. 1996, 49, 1395–1418.

- Choi, H.; Jung, Y. Online users’ cynical attitudes towards privacy protection: Examining privacy cynicism. Asia Pac. J. Inf. Syst. 2020, 30, 547–567.

- Boush, D.M.; Kim, C.H.; Kahle, L.R.; Batra, R. Cynicism and Conformity as Correlates of Trust in Product Information Sources. J. Curr. Issues Res. Advert. 1993, 15, 71–79.

- Pugh, S.D.; Skarlicki, D.P.; Passell, B.S. After the Fall: Layoff Victims’ Trust and Cynicism in Re-Employment. J. Occup. Organ. Psychol. 2003, 76, 201–212.

- Hoffmann, C.P.; Lutz, C.; Ranzini, G. Privacy Cynicism: A New Approach to the Privacy Paradox. Cyberpsychology 2016, 10, 7.

- Lutz, C.; Hoffmann, C.P.; Ranzini, G. Data Capitalism and the User: An Exploration of Privacy Cynicism in Germany. New Media Soc. 2020, 22, 1168–1187.

- van Ooijen, I.; Segijn, C.M.; Opree, S.J. Privacy Cynicism and Its Role in Privacy Decision-Making. Commun. Res. 2024, 51, 146–177.

- Rajaobelina, L.; Prom Tep, S.; Arcand, M.; Ricard, L. Creepiness: Its Antecedents and Impact on Loyalty When Interacting with a Chatbot. Psychol. Mark. 2021, 38, 2339–2356.

- Yip, J.C.; Sobel, K.; Gao, X.; Hishikawa, A.M.; Lim, A.; Meng, L.; Ofiana, R.F.; Park, J.; Hiniker, A. Laughing Is Scary, but Farting Is Cute: A Conceptual Model of Children’s Perspectives of Creepy Technologies. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems, Glasgow, UK, 4–9 May 2019; pp. 1–15.

- Almokdad, E.; Kiatkawsin, K.; Kaseem, M. The role of COVID-19 vaccine perception, hope, and fear on the travel bubble program. Int. J. Environ. Res. Public Health 2022, 19, 8714.

- Mouloudj, K.; Aprile, M.C.; Bouarar, A.C.; Njoku, A.; Evans, M.A.; Oanh, L.V.L.; Asanza, D.M.; Mouloudj, S. Investigating antecedents of intention to use green agri-food delivery apps: Merging TPB with trust and electronic word of mouth. Sustainability 2025, 17, 3717.

- Pennington, N.; Hastie, R. Explanation-Based Decision Making: Effects of Memory Structure on Judgment. J. Exp. Psychol. Learn. Mem. Cogn. 1988, 14, 521–533.

- Raff, S.; Rose, S.; Huynh, T. Perceived Creepiness in Response to Smart Home Assistants: A Multi-Method Study. Int. J. Inf. Manag. 2024, 74, 102720.

- Ram, S.; Sheth, J.N. Consumer Resistance to Innovations: The Marketing Problem and Its Solutions. J. Consum. Mark. 1989, 6, 5–14.

- Osgood, C.E.; Tannenbaum, P.H. The Principle of Congruity in the Prediction of Attitude Change. Psychol. Rev. 1955, 62, 42–55.

- Wang, D.; Choi, H. The Effect of Consumer Resistance and Trust on the Intention to Accept Fully Autonomous Vehicles. Mob. Inf. Syst. 2023, 2023, 3620148.

- Zaltman, G.; Wallendorf, M. Consumer Behavior, Basic Findings and Management Implications; Wiley: Hoboken, NJ, USA, 1979.

- Ram, S. A Model of Innovation Resistance. Adv. Consum. Res. 1987, 14, 208–212.

- Stevens, A.M. Antecedents and Outcomes of Perceived Creepiness in Online Personalized Communications. Ph.D. Thesis, Case Western Reserve University, Cleveland, OH, USA, 2016. Available online: http://rave.ohiolink.edu/etdc/view?acc_num=case1459413626 (accessed on 12 January 2025).

- Sohn, K.; Kwon, O. Technology Acceptance Theories and Factors Influencing Artificial Intelligence-Based Intelligent Products. Telemat. Inform. 2020, 47, 101324.

- Handrich, M. Alexa, You Freak Me Out: Identifying Drivers of Innovation Resistance and Adoption of Intelligent Personal Assistants. In Proceedings of the Forty-Second International Conference on Information Systems, Austin, TX, USA, 12–15 December 2021.

- Moorman, C.; Deshpande, R.; Zaltman, G. Factors Affecting Trust in Market Research Relationships. J. Mark. 1993, 57, 81–101.

- Lăzăroiu, G.; Neguriţă, O.; Grecu, I.; Grecu, G.; Mitran, P.C. Consumers’ Decision-Making Process on Social Commerce Platforms: Online Trust, Perceived Risk, and Purchase Intentions. Front. Psychol. 2020, 11, 890.

- Pal, D.; Roy, P.; Arpnikanondt, C.; Thapliyal, H. The Effect of Trust and Its Antecedents Towards Determining Users’ Behavioral Intention with Voice-Based Consumer Electronic Devices. Heliyon 2022, 8, e09271.

- Alagarsamy, S.; Mehrolia, S. Exploring Chatbot Trust: Antecedents and Behavioural Outcomes. Heliyon 2023, 9, e16074.

- Njoku, A.; Mouloudj, K.; Bouarar, A.C.; Evans, M.A.; Asanza, D.M.; Mouloudj, S.; Bouarar, A. Intentions to create green start-ups for collection of unwanted drugs: An empirical study. Sustainability 2024, 16, 2797.

- Erokhin, V.; Mouloudj, K.; Bouarar, A.C.; Mouloudj, S.; Gao, T. Investigating Farmers’ Intentions to Reduce Water Waste through Water-Smart Farming Technologies. Sustainability 2024, 16, 4638.

- McLean, G.; Osei-Frimpong, K. Hey Alexa… Examine the Variables Influencing the Use of Artificial Intelligent In-Home Voice Assistants. Comput. Hum. Behav. 2019, 99, 28–37.

- Huang, T. Psychological Factors Affecting Potential Users’ Intention to Use Autonomous Vehicles. PLoS ONE 2023, 18, e0282915.

- Anayat, S.; Rasool, G.; Pathania, A. Examining the Context-Specific Reasons and Adoption of Artificial Intelligence-Based Voice Assistants: A Behavioural Reasoning Theory Approach. Int. J. Consum. Stud. 2023, 47, 1885–1910.

- Park, M.; Cho, H.; Johnson, K.; Yurchisin, J. Use of Behavioral Reasoning Theory to Examine the Role of Social Responsibility in Attitudes Toward Apparel Donation. Int. J. Consum. Stud. 2017, 41, 333–339.

- Bentler, P.M.; Chou, C.P. Practical Issues in Structural Modeling. Sociol. Methods Res. 1987, 16, 78–117.

- Lefever, S.; Dal, M.; Matthíasdóttir, Á. Online data collection in academic research: Advantages and limitations. Br. J. Educ. Technol. 2007, 38, 574–582.

- Acikgoz, F.; Vega, R.P. The Role of Privacy Cynicism in Consumer Habits with Voice Assistants: A Technology Acceptance Model Perspective. Int. J. Hum.-Comput. Interact. 2022, 38, 1138–1152.

- Joo, J.; Sang, Y. Exploring Koreans’ Smartphone Usage: An Integrated Model of the Technology Acceptance Model and Uses and Gratifications Theory. Comput. Hum. Behav. 2013, 29, 2512–2518.

- Henseler, J.; Ringle, C.M.; Sarstedt, M. A New Criterion for Assessing Discriminant Validity in Variance-Based Structural Equation Modeling. J. Acad. Mark. Sci. 2015, 43, 115–135.

- Bagozzi, R.P.; Yi, Y. On the Evaluation of Structural Equation Models. J. Acad. Mark. Sci. 1988, 16, 74–94.

- Fornell, C.; Larcker, D.F. Evaluating Structural Equation Models with Unobservable Variables and Measurement Error. J. Mark. Res. 1981, 18, 39–50.

- Hooper, D.; Coughlan, J.; Mullen, M.R. Structural Equation Modelling: Guidelines for Determining Model Fit. Electron. J. Bus. Res. Methods 2008, 6, 53–60.

- Podsakoff, P.M.; MacKenzie, S.B.; Lee, J.Y.; Podsakoff, N.P. Common method biases in behavioral research: A critical review of the literature and recommended remedies. J. Appl. Psychol. 2003, 88, 879–903.

- Kock, N. Common Method Bias in PLS-SEM: A Full Collinearity Assessment Approach. Int. J. e-Collab. 2015, 11, 1–10.

- Klein, S.P.; Spieth, P.; Heidenreich, S. Facilitating business model innovation: The influence of sustainability and the mediating role of strategic orientations. J. Prod. Innov. Manag. 2021, 38, 271–288.

- Hair, J.F.; Sarstedt, M.; Ringle, C.M.; Gudergan, S.P. Advanced Issues in Partial Least Squares Structural Equation Modeling (PLS-SEM); Sage: Thousand Oaks, CA, USA, 2018.

- Cohen, J. A Power Primer. Psychol. Bull. 1992, 112, 155–159.

- Hair, J.F.; Hult, G.T.M.; Ringle, C.M.; Sarstedt, M. A Primer on Partial Least Squares Structural Equation Modeling (PLS-SEM), 3rd ed.; Sage: Thousand Oaks, CA, USA, 2022.

- Dinev, T.; Hart, P. An extended privacy calculus model for e-commerce transactions. Inf. Syst. Res. 2006, 17, 61–80.

- Barth, S.; De Jong, M.D. The privacy paradox – Investigating discrepancies between expressed privacy concerns and actual online behavior – A systematic literature review. Telemat. Inform. 2017, 34, 1038–1058.

- Festinger, L. A Theory of Cognitive Dissonance; Stanford University Press: Stanford, CA, USA, 1957.

- Wang, Y.; Herrando, C. Does Privacy Assurance on Social Commerce Sites Matter to Millennials? Int. J. Inf. Manag. 2019, 44, 164–177.

- Gilly, M.C.; Celsi, M.W.; Schau, H.J. It Don’t Come Easy: Overcoming Obstacles to Technology Use within a Resistant Consumer Group. J. Consum. Aff. 2012, 46, 62–89.