The Role of Product Type in Online Review Generation and Perception: Implications for Consumer Decision-Making

Abstract

1. Introduction

2. Literature Review and Hypothesis Development

2.1. Theoretical Foundation: Product Classification Theory and Review Behavior

2.2. Online Review Behavior and Review Features

2.3. Hypothesis Development

2.3.1. Review Generation for Different Product Types

2.3.2. Perceived Helpfulness of Reviews by Product Type

2.3.3. Perceived Helpfulness of Review Characteristics

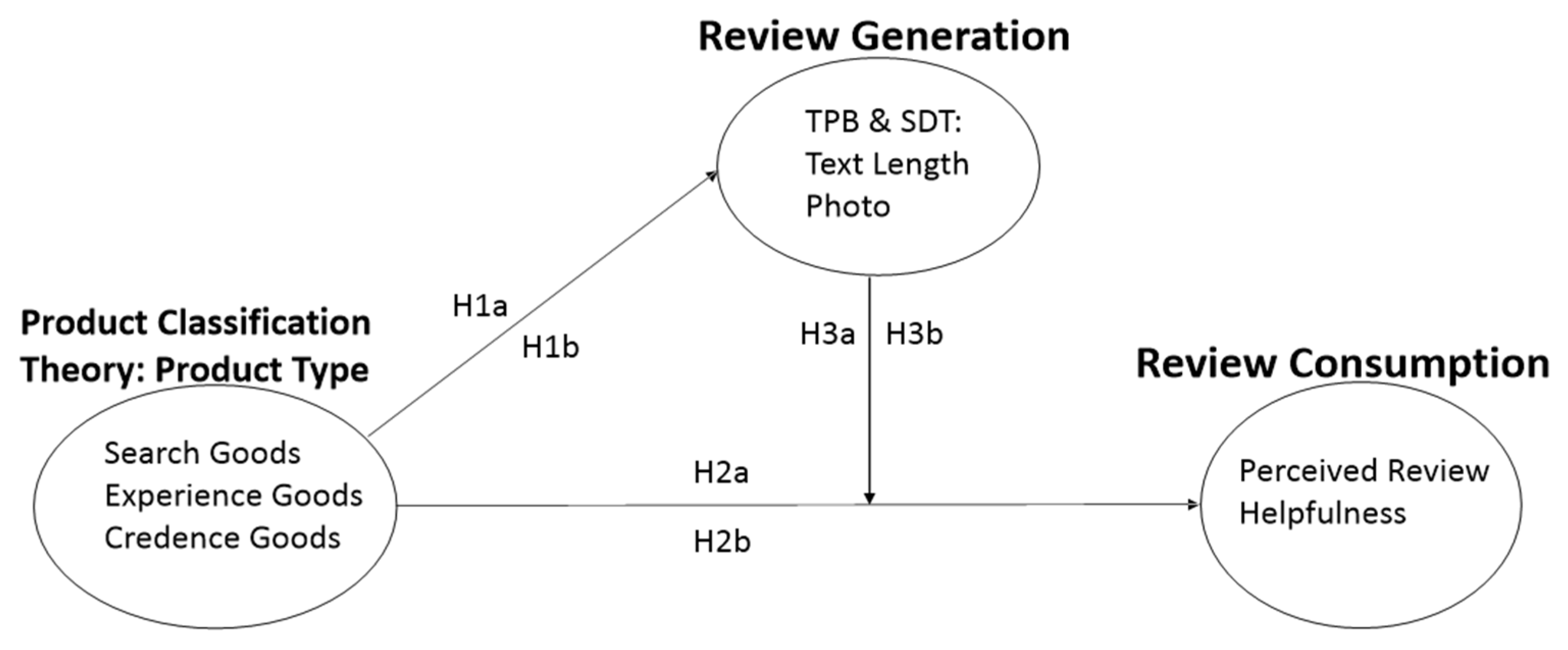

2.4. Research Framework

3. Data and Methodology

3.1. Methodology

3.2. Data Collection and Variable Description

3.3. Descriptive Statistics

4. Results

4.1. Review Generation

4.2. Perceived Helpfulness of Reviews

5. Discussion and Implications

5.1. Discussion

5.2. Theoretical Implications

5.3. Practical Implications

6. Conclusions

7. Limitations and Future Research

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Variable | Description |
|---|---|
| Search Goods (sg.) | Products whose quality can be evaluated before purchase using available information. |
| Experience Goods (eg.) | Products whose quality can be evaluated only after consumption, based on personal experience. |
| Credence Goods (cg.) | Products whose quality is difficult to evaluate even after consumption, requiring expert validation or long-term use; consumers therefore rely on electronic word-of-mouth (eWOM). In this study, credence goods serve as the reference category for the product-type dummies. |
| Rating | Star rating of the review (1–5), treated as a continuous variable. |
| Rating2 | Squared rating, capturing non-linear effects of rating. |
| Extremely Positive Rating (expos) | Dummy equal to 1 for reviews with a 5-star rating (extremely positive). |
| Extremely Negative Rating (exneg) | Dummy equal to 1 for reviews with a 1- or 2-star rating (extremely negative). |
| log photo | Logarithm of the number of photos in a review, capturing visual content. |
| log word count (log wc) | Logarithm of the word count of a review, capturing text length. |
| log helpfulness (log helpful) | Logarithm of the helpfulness score, based on the number of helpful votes the review received. |
| Search Goods × log photo (sg. photo) | Interaction between ‘Search Goods’ and ‘log photo’. |
| Experience Goods × log photo (eg. photo) | Interaction between ‘Experience Goods’ and ‘log photo’. |
| Search Goods × log word count (sg. wc) | Interaction between ‘Search Goods’ and ‘log word count’. |
| Experience Goods × log word count (eg. wc) | Interaction between ‘Experience Goods’ and ‘log word count’. |
| Product Price (price) | Price of the product, included to capture its effect on consumer decision-making. |
| Monthly_dummy | Month in which the review was posted (1–12). |
| Yearly_dummy | Year in which the review was posted (2008–2024). |
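The variable definitions above can be made concrete with a short construction sketch. This is a minimal illustration, not the authors' code: the `Review` record, its field names, and the use of `log(1 + x)` for counts are assumptions (the table only says "logarithm", but the minimum of 0 reported for log photo and log wc suggests that zero counts map to 0). Credence goods are encoded as the omitted category, so a credence review has both `sg` and `eg` equal to 0.

```python
import math
from dataclasses import dataclass


@dataclass
class Review:
    """Hypothetical raw review record; field names are illustrative only."""
    product_type: str   # "search", "experience" or "credence"
    rating: int         # star rating, 1-5
    n_photos: int
    n_words: int
    helpful_votes: int
    price: float


def build_features(r: Review) -> dict:
    """Build regression variables in the spirit of the variable table.

    Assumes log(1 + x) for counts; credence goods are the reference category.
    """
    if r.product_type not in ("search", "experience", "credence"):
        raise ValueError(f"unknown product type: {r.product_type}")
    sg = 1 if r.product_type == "search" else 0
    eg = 1 if r.product_type == "experience" else 0
    log_photo = math.log1p(r.n_photos)
    log_wc = math.log1p(r.n_words)
    return {
        "sg": sg,
        "eg": eg,
        "rating": r.rating,
        "rating2": r.rating ** 2,                  # quadratic term
        "expos": 1 if r.rating == 5 else 0,        # extremely positive
        "exneg": 1 if r.rating <= 2 else 0,        # extremely negative (1-2 stars)
        "log_photo": log_photo,
        "log_wc": log_wc,
        "log_helpful": math.log1p(r.helpful_votes),
        "sg_photo": sg * log_photo,                # interaction terms
        "eg_photo": eg * log_photo,
        "sg_wc": sg * log_wc,
        "eg_wc": eg * log_wc,
        "price": r.price,
    }


if __name__ == "__main__":
    print(build_features(Review("search", 5, 0, 120, 3, 49.99)))
```

Encoding the interactions as plain products means they are zero for every review outside the named product type, which is what lets the main-effect coefficients be read as credence-goods slopes.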

| Variable | Obs | Mean | Std. Dev. | Min | Max |
|---|---|---|---|---|---|
| sg | 23,250 | 0.244 | 0.43 | 0 | 1 |
| eg | 23,250 | 0.426 | 0.495 | 0 | 1 |
| cg | 23,250 | 0.33 | 0.47 | 0 | 1 |
| rating | 23,250 | 3.386 | 1.602 | 1 | 5 |
| rating2 | 23,250 | 14.028 | 9.969 | 1 | 25 |
| expos | 23,250 | 0.391 | 0.488 | 0 | 1 |
| exneg | 23,250 | 0.331 | 0.471 | 0 | 1 |
| log photo | 23,250 | 0.079 | 0.364 | 0 | 4.127 |
| log wc | 23,250 | 3.287 | 1.131 | 0 | 7.41 |
| log helpful | 23,250 | 0.541 | 0.810 | 0 | 6.396 |
| sg photo | 23,250 | 0.029 | 0.225 | 0 | 4.127 |
| eg photo | 23,250 | 0.036 | 0.249 | 0 | 3.258 |
| sg wc | 23,250 | 0.884 | 1.662 | 0 | 7.209 |
| eg wc | 23,250 | 1.342 | 1.717 | 0 | 7.41 |
| price | 23,250 | 150.373 | 259.225 | 6.99 | 2399 |
| yearly dummy | 23,250 | 2021.769 | 2.385 | 2008 | 2024 |
| monthly dummy | 23,250 | 6.253 | 3.341 | 1 | 12 |
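Several entries in the descriptive statistics can be cross-checked with simple arithmetic: a 0/1 variable with mean p has standard deviation √(p(1 − p)), the three product-type shares should sum to 1, and the mean of rating2 should equal Var(rating) + mean(rating)². The sketch below uses only the reported means and standard deviations; it applies the population formula, which with 23,250 observations differs negligibly from the sample version. The dictionary name `BINARY` is just a label for this illustration.

```python
import math


def dummy_sd(p: float) -> float:
    """Standard deviation of a 0/1 variable with mean p (population formula)."""
    return math.sqrt(p * (1 - p))


def mean_of_square(mu: float, sd: float) -> float:
    """E[X^2] = Var(X) + (E[X])^2, using population variance sd**2."""
    return sd ** 2 + mu ** 2


# (mean, reported std. dev.) for the binary variables in the table
BINARY = {
    "sg": (0.244, 0.43),
    "eg": (0.426, 0.495),
    "cg": (0.33, 0.47),
    "expos": (0.391, 0.488),
    "exneg": (0.331, 0.471),
}

for name, (p, reported) in BINARY.items():
    print(f"{name}: implied sd {dummy_sd(p):.3f}, reported {reported}")

share_total = sum(BINARY[k][0] for k in ("sg", "eg", "cg"))
print(f"product-type shares sum to {share_total:.3f}")
print(f"implied mean of rating2: {mean_of_square(3.386, 1.602):.3f}")
```

Each implied value agrees with the reported one to within rounding, which suggests the binary variables and rating2 were constructed as described in the variable table.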

| | (1) | (2) | (3) | (4) | (5) | (6) | (7) | (8) | (9) | (10) | (11) | (12) | (13) | (14) | (15) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| (1) sg | 1.00 | | | | | | | | | | | | | | |
| (2) eg | −0.490 | 1.00 | | | | | | | | | | | | | |
| (3) cg | −0.398 | −0.605 | 1.00 | | | | | | | | | | | | |
| (4) rating | −0.026 | 0.138 | −0.121 | 1.00 | | | | | | | | | | | |
| (5) rating2 | −0.012 | 0.134 | −0.130 | 0.987 | 1.00 | | | | | | | | | | |
| (6) expos | 0.023 | 0.106 | −0.132 | 0.808 | 0.882 | 1.00 | | | | | | | | | |
| (7) exneg | 0.043 | −0.124 | 0.091 | −0.902 | −0.849 | −0.564 | 1.00 | | | | | | | | |
| (8) log photo | 0.058 | 0.010 | −0.064 | −0.023 | −0.017 | −0.005 | 0.024 | 1.00 | | | | | | | |
| (9) log wc | 0.169 | −0.106 | −0.043 | −0.208 | −0.225 | −0.233 | 0.160 | 0.135 | 1.00 | | | | | | |
| (10) log helpful | 0.165 | −0.001 | −0.150 | −0.135 | −0.126 | −0.101 | 0.116 | 0.151 | 0.431 | 1.00 | | | | | |
| (11) sg photo | 0.223 | −0.109 | −0.089 | 0.013 | 0.016 | 0.015 | −0.014 | 0.600 | 0.129 | 0.113 | 1.00 | | | | |
| (12) eg photo | −0.082 | 0.167 | −0.101 | −0.022 | −0.016 | −0.002 | 0.025 | 0.666 | 0.052 | 0.107 | −0.018 | 1.00 | | | |
| (13) sg wc | 0.937 | −0.459 | −0.373 | −0.066 | −0.056 | −0.026 | 0.072 | 0.093 | 0.339 | 0.246 | 0.272 | −0.076 | 1.00 | | |
| (14) eg wc | −0.444 | 0.907 | −0.548 | 0.077 | 0.067 | 0.034 | −0.079 | 0.041 | 0.174 | 0.108 | −0.099 | 0.197 | −0.416 | 1.00 | |
| (15) price | 0.578 | −0.195 | −0.323 | 0.086 | 0.102 | 0.128 | −0.052 | 0.062 | 0.061 | 0.136 | 0.161 | 0.026 | 0.534 | 0.193 | 1.00 |
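Because sg, eg and cg are mutually exclusive, any two terms that can be nonzero only for different product types (for example sg wc and eg wc, or sg photo and eg photo) have a product that is identically zero. Their covariance is then −E[A]E[B], so their correlation is fixed by the means and standard deviations alone and must be negative. The sketch below encodes that identity and the matching closed form for two exclusive dummies; the six-review data set in it is hypothetical and only serves to confirm that the closed forms agree with a direct Pearson computation.

```python
import math
from statistics import mean, pstdev


def corr_exclusive_dummies(p1: float, p2: float) -> float:
    """Correlation between two 0/1 indicators that are never both 1 (means p1, p2)."""
    return -math.sqrt(p1 * p2 / ((1 - p1) * (1 - p2)))


def corr_zero_product(mean_a, sd_a, mean_b, sd_b):
    """Correlation of A and B when A * B == 0 for every observation.

    cov(A, B) = E[AB] - E[A]E[B] = -E[A]E[B], since E[AB] = 0.
    """
    return -(mean_a * mean_b) / (sd_a * sd_b)


def pearson(xs, ys):
    """Pearson correlation using population moments."""
    mx, my = mean(xs), mean(ys)
    cov = mean([x * y for x, y in zip(xs, ys)]) - mx * my
    return cov / (pstdev(xs) * pstdev(ys))


# Hypothetical toy data: two search, two experience and two credence reviews.
sg = [1, 1, 0, 0, 0, 0]
eg = [0, 0, 1, 1, 0, 0]
log_wc = [2.0, 3.0, 1.0, 4.0, 2.5, 0.5]
sg_wc = [s * w for s, w in zip(sg, log_wc)]
eg_wc = [e * w for e, w in zip(eg, log_wc)]

direct = pearson(sg_wc, eg_wc)
closed_form = corr_zero_product(mean(sg_wc), pstdev(sg_wc), mean(eg_wc), pstdev(eg_wc))
print(f"toy data: direct {direct:.4f}, closed form {closed_form:.4f}")

# Values implied by the means and standard deviations in the descriptive table
print(round(corr_zero_product(0.884, 1.662, 1.342, 1.717), 3))  # sg wc vs eg wc
print(round(corr_exclusive_dummies(0.244, 0.426), 3))            # sg vs eg
```

Plugging in the reported moments gives about −0.416 for sg wc and eg wc and about −0.490 for sg and eg, so the magnitudes in the correlation matrix can be reproduced directly from the descriptive statistics.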

| | (1) | (2) | (3) | (4) | (5) | (6) |
|---|---|---|---|---|---|---|
| Variables | log_photo | log_photo | log_photo | log_wc | log_wc | log_wc |
| sg. | 0.043 *** | 0.043 *** | 0.043 *** | 0.484 *** | 0.491 *** | 0.486 *** |
| | (0.001) | (0.001) | (0.001) | (0.001) | (0.001) | (0.001) |
| eg. | 0.054 *** | 0.053 *** | 0.054 *** | 0.022 | 0.042 ** | 0.039 ** |
| | (0.001) | (0.001) | (0.001) | (0.198) | (0.015) | (0.023) |
| rating | −0.008 *** | −0.033 *** | −0.019 *** | −0.141 *** | 0.436 *** | 0.006 |
| | (0.001) | (0.001) | (0.001) | (0.001) | (0.001) | (0.748) |
| rating2 | Not included | 0.004 *** | Not included | Not included | −0.094 *** | Not included |
| | | (0.004) | | | (0.001) | |
| expos | Not included | Not included | 0.023 ** | Not included | Not included | −0.510 *** |
| | | | (0.032) | | | (0.001) |
| exneg | Not included | Not included | −0.021 | Not included | Not included | 0.086 ** |
| | | | (0.120) | | | (0.045) |
| price | 0.001 *** | 0.001 *** | 0.001 *** | 0.001 ** | 0.001 | 0.001 |
| | (0.001) | (0.001) | (0.001) | (0.032) | (0.530) | (0.415) |
| yearly dummy | Included | Included | Included | Included | Included | Included |
| monthly dummy | Included | Included | Included | Included | Included | Included |
| Constant | −0.029 *** | 0.004 | 0.017 | 5.772 *** | 3.450 *** | 5.165 *** |
| | (0.001) | (0.781) | (0.476) | (0.001) | (0.001) | (0.001) |
| Observations | 23,250 | 23,250 | 23,250 | 23,250 | 23,250 | 23,250 |
| R-squared | 1.3% | 1.3% | 1.3% | 7.9% | 9.7% | 9.3% |
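In the columns that include both rating and rating2, the fitted rating effect is quadratic, so its shape is easiest to read through the turning point r* = −β₁/(2β₂) and the marginal effect β₁ + 2β₂r. The sketch below applies these formulas to the point estimates for log_wc (column 5) and log_photo (column 2). It ignores sampling uncertainty, so the turning points are rough locations rather than estimates with confidence intervals; the helper names are illustrative.

```python
def turning_point(b_linear: float, b_quad: float) -> float:
    """Rating r* where b_linear*r + b_quad*r**2 has its extremum."""
    if b_quad == 0:
        raise ValueError("no curvature: the rating effect is linear")
    return -b_linear / (2 * b_quad)


def marginal_effect(b_linear: float, b_quad: float, rating: float) -> float:
    """Derivative of b_linear*r + b_quad*r**2 with respect to r."""
    return b_linear + 2 * b_quad * rating


# Point estimates (rating, rating2) from the review-generation table
MODELS = {
    "log_wc, column (5)": (0.436, -0.094),     # b_quad < 0: inverted U (maximum)
    "log_photo, column (2)": (-0.033, 0.004),  # b_quad > 0: U shape (minimum)
}

for label, (b1, b2) in MODELS.items():
    kind = "maximum" if b2 < 0 else "minimum"
    print(f"{label}: {kind} at {turning_point(b1, b2):.2f} stars; "
          f"slope at 1 star {marginal_effect(b1, b2, 1):+.3f}, "
          f"at 5 stars {marginal_effect(b1, b2, 5):+.3f}")
```

With these estimates, log word count is predicted to peak at about 2.3 stars and log photo count to reach its minimum at about 4.1 stars. Applying the same formula to column (2) of the helpfulness table (−0.264 and 0.037) places the minimum of predicted helpfulness near 3.6 stars.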

| | (1) | (2) | (3) | (4) | (5) | (6) |
|---|---|---|---|---|---|---|
| Variables | log_helpful | log_helpful | log_helpful | log_helpful | log_helpful | log_helpful |
| sg | −0.133 *** | −0.136 *** | −0.137 *** | −0.133 *** | −0.136 *** | −0.137 *** |
| | (0.002) | (0.001) | (0.001) | (0.002) | (0.001) | (0.001) |
| eg | 0.068 ** | 0.059 * | 0.060 * | 0.068 ** | 0.059 * | 0.060 * |
| | (0.031) | (0.062) | (0.056) | (0.031) | (0.062) | (0.056) |
| rating | −0.036 *** | −0.264 *** | −0.126 *** | −0.036 *** | −0.264 *** | −0.126 *** |
| | (0.001) | (0.001) | (0.001) | (0.001) | (0.001) | (0.001) |
| rating2 | Not included | 0.037 *** | Not included | Not included | 0.037 *** | Not included |
| | | (0.001) | | | (0.001) | |
| expos | Not included | Not included | 0.195 *** | Not included | Not included | 0.195 *** |
| | | | (0.001) | | | (0.001) |
| exneg | Not included | Not included | −0.164 *** | Not included | Not included | −0.164 *** |
| | | | (0.001) | | | (0.001) |
| log photo | 0.113 *** | 0.108 *** | 0.112 *** | 0.113 *** | 0.108 *** | 0.112 *** |
| | (0.001) | (0.001) | (0.001) | (0.001) | (0.001) | (0.001) |
| log wc | 0.245 *** | 0.253 *** | 0.249 *** | 0.245 *** | 0.253 *** | 0.249 *** |
| | (0.001) | (0.001) | (0.001) | (0.001) | (0.001) | (0.001) |
| sg photo | 0.002 | 0.005 | 0.002 | 0.002 | 0.005 | 0.001 |
| | (0.969) | (0.914) | (0.973) | (0.969) | (0.914) | (0.973) |
| eg photo | 0.143 *** | 0.137 *** | 0.138 *** | 0.143 *** | 0.137 *** | 0.138 *** |
| | (0.001) | (0.002) | (0.001) | (0.001) | (0.002) | (0.002) |
| sg wc | 0.096 *** | 0.095 *** | 0.097 *** | 0.096 *** | 0.095 *** | 0.097 *** |
| | (0.001) | (0.001) | (0.001) | (0.001) | (0.001) | (0.001) |
| eg wc | 0.027 ** | 0.0275 ** | 0.028 ** | 0.027 ** | 0.028 ** | 0.028 ** |
| | (0.016) | (0.013) | (0.012) | (0.016) | (0.013) | (0.011) |
| price | 0.001 *** | 0.001 *** | 0.001 *** | 0.001 *** | 0.001 *** | 0.001 *** |
| | (0.001) | (0.001) | (0.001) | (0.001) | (0.001) | (0.001) |
| yearly dummy | Included | Included | Included | Included | Included | Included |
| monthly dummy | Included | Included | Included | Included | Included | Included |
| Constant | −0.668 *** | −0.388 *** | −0.321 *** | −0.347 *** | −0.133 *** | −0.080 |
| | (0.001) | (0.001) | (0.001) | (0.001) | (0.001) | (0.142) |
| Observations | 23,250 | 23,250 | 23,250 | 23,250 | 23,250 | 23,250 |
| R-squared | 23.4% | 23.9% | 23.7% | - | - | - |
| AIC | - | - | - | 2.154507 | 2.147648 | 2.15117 |
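Because credence goods are the reference category, the coefficient on log photo or log wc in the helpfulness models is the slope for credence goods, and each interaction term shifts that slope for search or experience goods. The sketch below combines the column (1) point estimates in that way and picks the specification with the lowest AIC among columns (4) to (6). It uses point estimates only: sg photo, for instance, is far from significant (p = 0.969), so the search-goods photo slope should not be read as different from the credence-goods one. The names `COEF`, `AIC` and `slope` are illustrative.

```python
# Column (1) point estimates from the helpfulness table.
# Credence goods are the reference category, so the main effects are their slopes.
COEF = {
    "log_photo": 0.113, "log_wc": 0.245,
    "sg_photo": 0.002, "eg_photo": 0.143,
    "sg_wc": 0.096, "eg_wc": 0.027,
}
PREFIX = {"search": "sg_", "experience": "eg_"}
SUFFIX = {"log_photo": "photo", "log_wc": "wc"}


def slope(feature: str, product_type: str) -> float:
    """Marginal effect of log_photo or log_wc on log_helpful for one product type."""
    base = COEF[feature]
    if product_type == "credence":
        return base
    # Main effect plus the matching interaction coefficient
    return base + COEF[PREFIX[product_type] + SUFFIX[feature]]


# AIC values reported for columns (4) to (6); lower is better.
AIC = {"(4)": 2.154507, "(5)": 2.147648, "(6)": 2.15117}
best = min(AIC, key=AIC.get)

for feature in ("log_wc", "log_photo"):
    for ptype in ("credence", "search", "experience"):
        print(f"{feature} slope for {ptype} goods: {slope(feature, ptype):.3f}")
print("lowest AIC:", best)
```

On these estimates, the word-count slope is largest for search goods (about 0.341 versus 0.245 for credence goods), the photo slope is largest for experience goods (about 0.256), and the quadratic-rating specification in column (5) has the lowest AIC.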

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Dong, H.; Park, K.K.-c.; Kim, J.M. The Role of Product Type in Online Review Generation and Perception: Implications for Consumer Decision-Making. J. Theor. Appl. Electron. Commer. Res. 2025, 20, 135. https://doi.org/10.3390/jtaer20020135


Dong, Hang, Keeyeon Ki-cheon Park, and Jong Min Kim. 2025. "The Role of Product Type in Online Review Generation and Perception: Implications for Consumer Decision-Making." Journal of Theoretical and Applied Electronic Commerce Research 20, no. 2: 135. https://doi.org/10.3390/jtaer20020135
