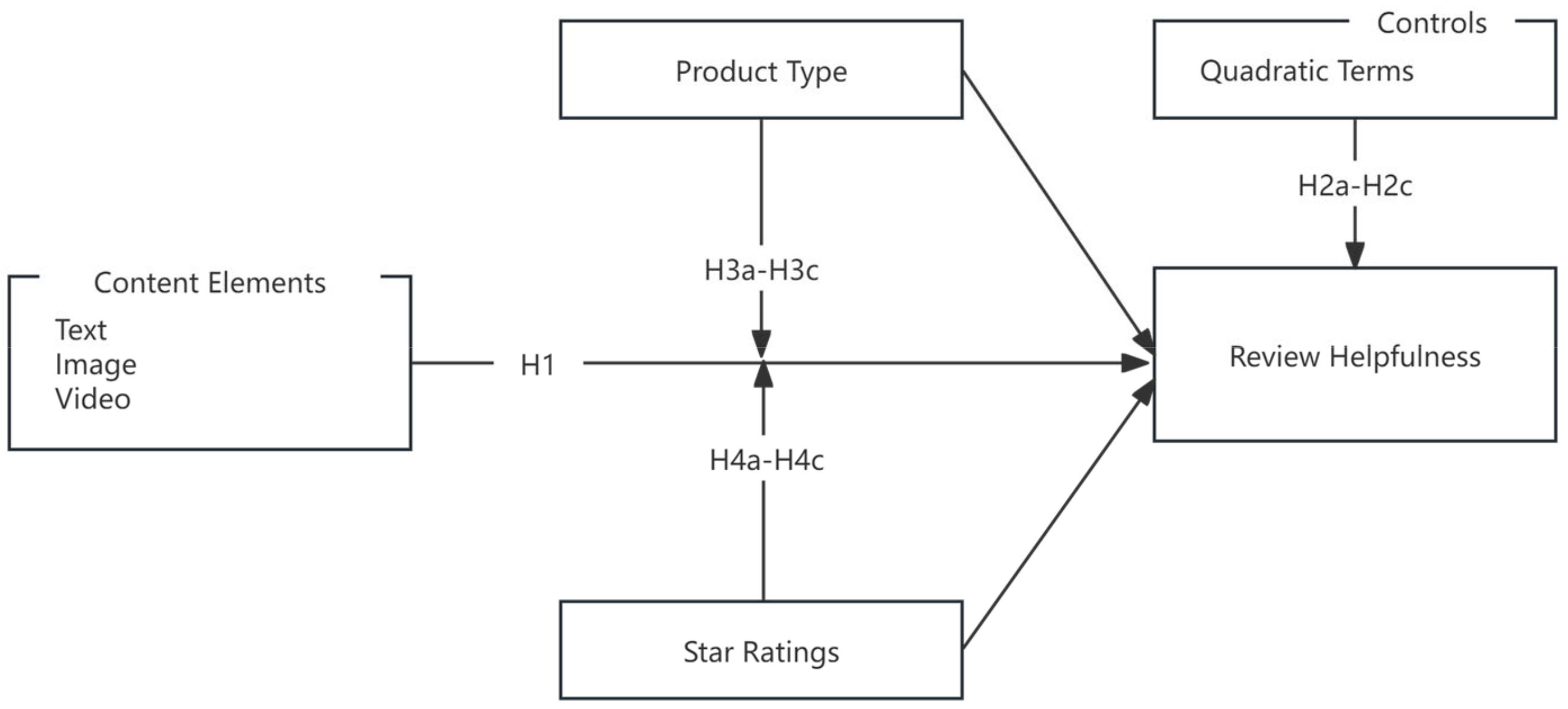

Online Review Helpfulness and Information Overload: The Roles of Text, Image, and Video Elements

Abstract

1. Introduction

2. Literature Review

2.1. Elements of Online Review Content and Online Review Helpfulness

2.2. Information Overload in Online Consumer Decisions

3. Theoretical Background and Hypotheses

3.1. Cognitive Load Theory and Dual-Coding Theory

3.2. Information Overload and Underload in Hybrid Content Elements

3.3. Product Types

3.4. Star Ratings

4. Research Methodology

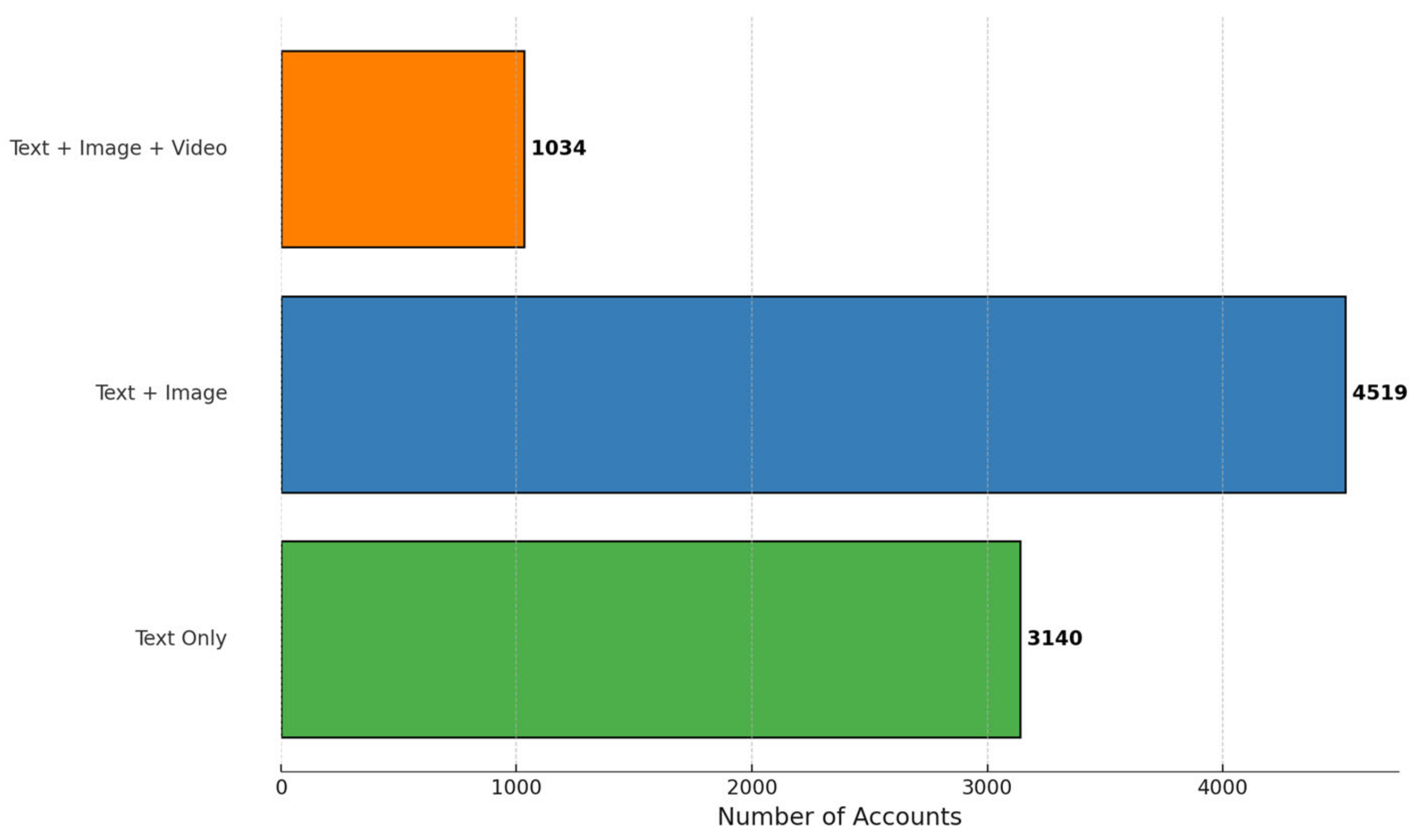

4.1. Data Collection

4.2. Variables

4.3. Analysis Method

5. Results and Robustness Checks

5.1. Main Results

5.2. Robustness Checks

6. Discussion

6.1. Impact of Image Quantity on Other Content Elements

6.2. Impact of Presentation Formats on Review Helpfulness

7. Conclusions

7.1. Theoretical Contributions

7.2. Practical Contributions

7.3. Limitations and Future Research

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Alzate, M.; Arce-Urriza, M.; Cebollada, J. Online Reviews and Product Sales: The Role of Review Visibility. J. Theor. Appl. Electron. Commer. Res. 2021, 16, 638–669.

2. Zhai, M. The Importance of Online Customer Reviews Characteristics on Remanufactured Product Sales: Evidence from the Mobile Phone Market on Amazon.Com. J. Retail. Consum. Serv. 2024, 77, 103677.

3. Xia, L.; Bechwati, N.N. Word of Mouse: The Role of Cognitive Personalization in Online Consumer Reviews. J. Interact. Advert. 2008, 9, 3–13.

4. Wang, Y.; Ngai, E.W.T.; Li, K. The Effect of Review Content Richness on Product Review Helpfulness: The Moderating Role of Rating Inconsistency. Electron. Commer. Res. Appl. 2023, 61, 101290.

5. Li, H.; Wang, C.R.; Meng, F.; Zhang, Z. Making Restaurant Reviews Useful and/or Enjoyable? The Impacts of Temporal, Explanatory, and Sensory Cues. Int. J. Hosp. Manag. 2019, 83, 257–265.

6. Pauwels, K.; Leeflang, P.S.H.; Teerling, M.L.; Huizingh, K.R.E. Does Online Information Drive Offline Revenues? Only for Specific Products and Consumer Segments! J. Retail. 2011, 87, 1–17.

7. Li, C.; Liu, Y.; Du, R. The Effects of Review Presentation Formats on Consumers’ Purchase Intention. J. Glob. Inf. Manag. 2021, 29, 1–20.

8. Xu, P.; Chen, L.; Santhanam, R. Will Video Be the Next Generation of E-Commerce Product Reviews? Presentation Format and the Role of Product Type. Decis. Support Syst. 2015, 73, 85–96.

9. Choi, H.S.; Leon, S. An Empirical Investigation of Online Review Helpfulness: A Big Data Perspective. Decis. Support Syst. 2020, 139, 113403.

10. Filieri, R.; Raguseo, E.; Vitari, C. When Are Extreme Ratings More Helpful? Empirical Evidence on the Moderating Effects of Review Characteristics and Product Type. Comput. Hum. Behav. 2018, 88, 134–142.

11. Filieri, R.; Raguseo, E.; Vitari, C. What Moderates the Influence of Extremely Negative Ratings? The Role of Review and Reviewer Characteristics. Int. J. Hosp. Manag. 2019, 77, 333–341.

12. Mudambi, S.M.; Schuff, D. Research Note: What Makes a Helpful Online Review? A Study of Customer Reviews on Amazon.Com. MIS Q. 2010, 34, 185–200.

13. Chevalier, J.A.; Mayzlin, D. The Effect of Word of Mouth on Sales: Online Book Reviews. J. Mark. Res. 2006, 43, 345–354.

14. Godes, D.; Mayzlin, D. Firm-Created Word-of-Mouth Communication: Evidence from a Field Test. Mark. Sci. 2009, 28, 721–739.

15. Jiang, Z.; Benbasat, I. The Effects of Presentation Formats and Task Complexity on Online Consumers’ Product Understanding. MIS Q. 2007, 31, 475–500.

16. Poria, S.; Cambria, E.; Bajpai, R.; Hussain, A. A Review of Affective Computing: From Unimodal Analysis to Multimodal Fusion. Inf. Fusion 2017, 37, 98–125.

17. Li, J.; Ensafjoo, M. Media Format Matters: User Engagement with Audio, Text and Video Tweets. J. Radio Audio Media 2024, 1–21.

18. Bettman, J.R.; Kakkar, P. Effects of Information Presentation Format on Consumer Information Acquisition Strategies. J. Consum. Res. 1977, 3, 233–240.

19. Townsend, C.; Kahn, B.E. The “Visual Preference Heuristic”: The Influence of Visual versus Verbal Depiction on Assortment Processing, Perceived Variety, and Choice Overload. J. Consum. Res. 2014, 40, 993–1015.

20. Ganguly, B.; Sengupta, P.; Biswas, B. What Are the Significant Determinants of Helpfulness of Online Review? An Exploration Across Product-Types. J. Retail. Consum. Serv. 2024, 78, 103748.

21. Zinko, R.; Stolk, P.; Furner, Z.; Almond, B. A Picture Is Worth a Thousand Words: How Images Influence Information Quality and Information Load in Online Reviews. Electron. Mark. 2020, 30, 775–789.

22. Cui, Y.; Wang, X. Investigating the Role of Review Presentation Format in Affecting the Helpfulness of Online Reviews. Electron. Commer. Res. 2022, 1–20.

23. Ceylan, G.; Diehl, K.; Proserpio, D. Words Meet Photos: When and Why Photos Increase Review Helpfulness. J. Mark. Res. 2024, 61, 5–26.

24. Grewal, R.; Gupta, S.; Hamilton, R. Marketing Insights from Multimedia Data: Text, Image, Audio, and Video. J. Mark. Res. 2021, 58, 1025–1033.

25. Tavassoli, N.T.; Lee, Y.H. The Differential Interaction of Auditory and Visual Advertising Elements with Chinese and English. J. Mark. Res. 2003, 40, 468–480.

26. Eppler, M.J.; Mengis, J. The Concept of Information Overload: A Review of Literature from Organization Science, Accounting, Marketing, MIS, and Related Disciplines. Inf. Soc. 2004, 20, 325–344.

27. Glatz, T.; Lippold, M.A. Is More Information Always Better? Associations among Parents’ Online Information Searching, Information Overload, and Self-Efficacy. Int. J. Behav. Dev. 2023, 47, 444–453.

28. Jacoby, J.; Speller, D.E.; Kohn, C.A. Brand Choice Behavior as a Function of Information Load. J. Mark. Res. 1974, 11, 63–69.

29. Hu, H.; Krishen, A.S. When Is Enough, Enough? Investigating Product Reviews and Information Overload from a Consumer Empowerment Perspective. J. Bus. Res. 2019, 100, 27–37.

30. Jabr, W.; Rahman, M. Online Reviews and Information Overload: The Role of Selective, Parsimonious, and Concordant Top Reviews. MIS Q. 2022, 46, 1517–1550.

31. Furner, C.P.; Zinko, R.A. The Influence of Information Overload on the Development of Trust and Purchase Intention Based on Online Product Reviews in a Mobile vs. Web Environment: An Empirical Investigation. Electron. Mark. 2017, 27, 211–224.

32. Sweller, J. Cognitive Load Theory, Learning Difficulty, and Instructional Design. Learn. Instr. 1994, 4, 295–312.

33. Wirzberger, M.; Esmaeili Bijarsari, S.; Rey, G.D. Embedded Interruptions and Task Complexity Influence Schema-Related Cognitive Load Progression in an Abstract Learning Task. Acta Psychol. 2017, 179, 30–41.

34. Loaiza, V.M.; Oftinger, A.-L.; Camos, V. How Does Working Memory Promote Traces in Episodic Memory? J. Cogn. 2023, 6, 4.

35. Zukić, M.; Đapo, N.; Husremović, D. Construct and Predictive Validity of an Instrument for Measuring Intrinsic, Extraneous and Germane Cognitive Load. Univers. J. Psychol. 2016, 4, 242–248.

36. Paas, F.; Renkl, A.; Sweller, J. Cognitive Load Theory and Instructional Design: Recent Developments. Educ. Psychol. 2003, 38, 1–4.

37. Paivio, A. Imagery and Verbal Processes; Psychology Press: New York, NY, USA, 1979.

38. Lee, A.Y.; Aaker, J.L. Bringing the Frame into Focus: The Influence of Regulatory Fit on Processing Fluency and Persuasion. J. Pers. Soc. Psychol. 2004, 86, 205–218.

39. Dang, A.; Nichols, B.S. The Effects of Size Referents in User-Generated Photos on Online Review Helpfulness. J. Consum. Behav. 2023, 23, 1493–1511.

40. Chen, T.; Rao, R.R. Audio-Visual Integration in Multimodal Communication. Proc. IEEE 1998, 86, 837–852.

41. Lutz, B.; Pröllochs, N.; Neumann, D. Are Longer Reviews Always More Helpful? Disentangling the Interplay between Review Length and Line of Argumentation. J. Bus. Res. 2022, 144, 888–901.

42. Kemper, S.; Herman, R.E. Age Differences in Memory-Load Interference Effects in Syntactic Processing. J. Gerontol. Ser. B 2006, 61, 327–332.

43. Laposhina, A.N.; Lebedeva, M.Y.; Khenis, A.A.B. Word Frequency and Text Complexity: An Eye-Tracking Study of Young Russian Readers. Russ. J. Linguist. 2022, 26, 493–514.

44. Ullman, S.; Vidal-Naquet, M.; Sali, E. Visual Features of Intermediate Complexity and Their Use in Classification. Nat. Neurosci. 2002, 5, 682–687.

45. Ghose, A.; Ipeirotis, P.G. Estimating the Helpfulness and Economic Impact of Product Reviews: Mining Text and Reviewer Characteristics. IEEE Trans. Knowl. Data Eng. 2011, 23, 1498–1512.

46. Sun, L.; Yamasaki, T.; Aizawa, K. Photo Aesthetic Quality Estimation Using Visual Complexity Features. Multimed. Tools Appl. 2018, 77, 5189–5213.

47. Hughes, C.; Costley, J.; Lange, C. The Effects of Multimedia Video Lectures on Extraneous Load. Distance Educ. 2019, 40, 54–75.

48. Lackmann, S.; Léger, P.-M.; Charland, P.; Aubé, C.; Talbot, J. The Influence of Video Format on Engagement and Performance in Online Learning. Brain Sci. 2021, 11, 128.

49. Kübler, R.V.; Lobschat, L.; Welke, L.; van der Meij, H. The Effect of Review Images on Review Helpfulness: A Contingency Approach. J. Retail. 2023, 100, 5–23.

50. Yang, Y.; Wang, Y.; Zhao, J. Effect of User-Generated Image on Review Helpfulness: Perspectives from Object Detection. Electron. Commer. Res. Appl. 2023, 57, 101232.

51. Li, Y.; Xie, Y. Is a Picture Worth a Thousand Words? An Empirical Study of Image Content and Social Media Engagement. J. Mark. Res. 2020, 57, 1–19.

52. Song, J.; Tang, T.; Hu, G. The Duration Threshold of Video Content Observation: An Experimental Investigation of Visual Perception Efficiency. Comput. Sci. Inf. Syst. 2023, 20, 879–892.

53. Dhar, R.; Wertenbroch, K. Consumer Choice between Hedonic and Utilitarian Goods. J. Mark. Res. 2000, 37, 60–71.

54. Chen, Y.; Xie, J. Online Consumer Review: Word-of-Mouth as a New Element of Marketing Communication Mix. Manag. Sci. 2008, 54, 477–491.

55. Nelson, P. Information and Consumer Behavior. J. Polit. Econ. 1970, 78, 311–329.

56. Nelson, P. Advertising as Information. J. Polit. Econ. 1974, 82, 729–754.

57. Park, D.-H.; Lee, J.; Han, I. The Effect of On-Line Consumer Reviews on Consumer Purchasing Intention: The Moderating Role of Involvement. Int. J. Electron. Commer. 2007, 11, 125–148.

58. Koh, N.S.; Hu, N.; Clemons, E.K. Do Online Reviews Reflect a Product’s True Perceived Quality? An Investigation of Online Movie Reviews across Cultures. Electron. Commer. Res. Appl. 2010, 9, 374–385.

59. Altab, H.M.; Mu, Y.; Sajjad, H.M.; Frimpong, A.N.K.; Frempong, M.F.; Adu-Yeboah, S.S. Understanding Online Consumer Textual Reviews and Rating: Review Length with Moderated Multiple Regression Analysis Approach. Sage Open 2022, 12, 2158244022110480.

60. Yin, D.; Mitra, S.; Zhang, H. Research Note—When Do Consumers Value Positive vs. Negative Reviews? An Empirical Investigation of Confirmation Bias in Online Word of Mouth. Inf. Syst. Res. 2016, 27, 131–144.

61. Horton, N.J. Multilevel and Longitudinal Modeling Using Stata. Am. Stat. 2006, 60, 293–294.

62. Li, B.; Lingsma, H.F.; Steyerberg, E.W.; Lesaffre, E. Logistic Random Effects Regression Models: A Comparison of Statistical Packages for Binary and Ordinal Outcomes. BMC Med. Res. Methodol. 2011, 11, 77.

63. Chen, L.; Shen, H.; Liu, Q.; Rao, C.; Li, J.; Goh, M. Joint Optimization on Green Investment and Contract Design for Sustainable Supply Chains with Fairness Concern. Ann. Oper. Res. 2024, 1–39.

64. Liu, Q.; Ma, Y.; Chen, L.; Pedrycz, W.; Skibniewski, M.; Chen, Z. Artificial Intelligence for Production, Operations and Logistics Management in Modular Construction Industry: A Systematic Literature Review. Inf. Fusion 2024, 109, 102423.

| Reference | Year | Key Findings | Gaps Identified |
|---|---|---|---|
| Mudambi and Schuff [12] | 2010 | Review depth affects helpfulness ratings; the relationship between review length and perceived helpfulness is complex | Did not explore multimodal content (text, images, video) |
| Chevalier and Mayzlin [13] | 2006 | Online book reviews significantly influence sales | Limited to books; did not consider the mix of media elements in reviews |
| Townsend and Kahn [19] | 2014 | Visual depictions can increase perceived variety but may also cause overload and complicate decision-making | Need to incorporate mixed media elements beyond visual and verbal formats |
| Xu et al. [8] | 2015 | Presentation format and product type significantly affect the impact of reviews on purchase intention | Need to explore other combinations of review content elements in real-world settings |
| Zinko et al. [21] | 2020 | Images in reviews can offset the effects of lengthy textual content | Did not fully explore video or interactive elements in reviews |
| Cui and Wang [22] | 2022 | Presentation format affects review helpfulness, and this effect is influenced by word count and response count | Need to explore authentic online shopping environments in which hybrid review elements coexist and interact |
| Ceylan et al. [23] | 2024 | Greater similarity between review text and photos increases review helpfulness because it eases processing | Did not explore the effect of video or interactive elements in reviews |
| Jabr and Rahman [30] | 2022 | Top reviews play a crucial role in mitigating information overload; their effectiveness varies with review volume, parsimony, concordance, and product popularity | Did not specifically address the impact of multimedia elements (images, videos) on online review helpfulness |
| Furner and Zinko [31] | 2017 | Information overload negatively affects trust and purchase intentions based on online product reviews | Did not explore the impact of multimedia elements such as images or videos in reviews |

| Review Type | SK-II [New Year Coupon] Star Luxury Skincare Experience Set | Wuliangye 8th Generation 52 Degrees Strong Aroma Chinese Spirit | He Feng Yu Men’s Perfume Gift Box | Huawei HUAWEI P40 (5G) 8G+128G | Canon PowerShot G7 | Lenovo Xiaoxin 16 2023 Ultra-thin 16, i5-13500H 16G 512G Standard Edition IPS Full Screen | Total |
|---|---|---|---|---|---|---|---|
| Product type | Experience product | Experience product | Experience product | Search product | Search product | Search product | |
| Textual elements | 1615 | 982 | 2459 | 1089 | 1450 | 1098 | 8693 |
| Imagery elements | 993 | 710 | 1057 | 850 | 910 | 1025 | 5545 |
| Video elements | 28 | 20 | 228 | 311 | 38 | 409 | 1034 |
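As a quick consistency check on the sample table, the sketch below re-adds the per-product counts and expresses image and video reviews as shares of each product group. It assumes, as the matching 8693 observations in the regression tables suggest, that every sampled review contains text, so the textual count doubles as the number of reviews per product; the dictionary layout and variable names are illustrative only.

```python
# Per-product review counts transcribed from the sample table above.
# Order: SK-II, Wuliangye, He Feng Yu (experience); Huawei P40, Canon G7, Lenovo Xiaoxin 16 (search).
COUNTS = {
    "text": [1615, 982, 2459, 1089, 1450, 1098],
    "image": [993, 710, 1057, 850, 910, 1025],
    "video": [28, 20, 228, 311, 38, 409],
}
REPORTED_TOTALS = {"text": 8693, "image": 5545, "video": 1034}
EXPERIENCE = slice(0, 3)  # first three products are experience goods
SEARCH = slice(3, 6)      # last three products are search goods


def summarize(counts):
    """Return column totals and, per product type, the share of reviews with images or video.

    Assumption: the text count equals the number of reviews for each product.
    """
    totals = {kind: sum(values) for kind, values in counts.items()}
    shares = {}
    for label, idx in (("experience", EXPERIENCE), ("search", SEARCH)):
        n_reviews = sum(counts["text"][idx])
        shares[label] = {
            kind: sum(counts[kind][idx]) / n_reviews for kind in ("image", "video")
        }
    return totals, shares


totals, shares = summarize(COUNTS)
for kind, total in totals.items():
    print(f"{kind}: {total} (reported {REPORTED_TOTALS[kind]})")
for label, s in shares.items():
    print(f"{label}: {s['image']:.1%} with images, {s['video']:.1%} with video")
```

On these counts the recomputed totals match the Total column, and image and video elements are proportionally more common among the three search products (roughly 77% and 21% of reviews) than among the three experience products (roughly 55% and 5%).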

| Variable | Mean | S.D. | Min | Max |
|---|---|---|---|---|
| RLength | 62.02519 | 51.28203 | 0 | 500 |
| NImage | 2.017255 | 1.999983 | 0 | 9 |
| DVideo | 1.158089 | 3.309312 | 0 | 15 |
| PType | 0.5816174 | 0.493322 | 0 | 1 |
| SRating | 3.817554 | 1.660865 | 1 | 5 |
| RResponse | 0.8141033 | 1.969498 | 0 | 96 |
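Because PType is a 0/1 variable, its mean is the share of observations coded 1. The sketch below compares that mean, and the matching sample standard deviation, with the share of the 8693 reviews that come from the three experience products in the sample table. The close agreement suggests, although the table itself does not say so, that PType = 1 denotes experience products; the constant names are illustrative and the counts are transcribed from the sample table.

```python
# Text-review counts per product, transcribed from the sample table.
EXPERIENCE_REVIEWS = [1615, 982, 2459]  # SK-II, Wuliangye, He Feng Yu
SEARCH_REVIEWS = [1089, 1450, 1098]     # Huawei P40, Canon G7, Lenovo Xiaoxin 16
PTYPE_MEAN = 0.5816174                  # reported mean of PType
PTYPE_SD = 0.493322                     # reported S.D. of PType

n_total = sum(EXPERIENCE_REVIEWS) + sum(SEARCH_REVIEWS)
share_experience = sum(EXPERIENCE_REVIEWS) / n_total

# For a 0/1 variable with share p, the sample S.D. is sqrt(p * (1 - p) * n / (n - 1)).
implied_sd = (share_experience * (1 - share_experience) * n_total / (n_total - 1)) ** 0.5

print(f"n = {n_total}")
print(f"experience share = {share_experience:.7f}, reported PType mean = {PTYPE_MEAN}")
print(f"implied S.D. = {implied_sd:.6f}, reported S.D. = {PTYPE_SD}")
```

The same reasoning would not identify the coding if the two product groups were of similar size; here 5056 versus 3637 reviews makes the two readings easy to tell apart.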

| Variable | Model 1 | Model 2 | Model 3 | Model 4 | Model 5 |
|---|---|---|---|---|---|
| | Baseline Model | Effects of Three Types of Reviews | Quadratic Terms of Three Types of Reviews | Interaction Terms of Product Types and Three Types of Reviews | Interaction Terms of Star Ratings and Three Types of Reviews |
| | −0.0094512 (0.0353704) | −0.5604907 *** (0.0461091) | −0.5148615 *** (0.0508109) | −0.5857195 *** (0.0519262) | −0.4930427 *** (0.0656872) |
| PType | −0.374899 (0.668288) | −0.1652225 (0.8420286) | −0.1223541 (0.8428844) | −0.37136 (0.7070186) | −0.4278527 (0.7197488) |
| RResponse | 1.14453 *** (0.0747517) | 1.034944 *** (0.0794695) | 1.04114 *** (0.0798164) | 1.17875 *** (0.0832794) | 1.183912 *** (0.0836325) |
| RLength | - | 0.2520958 *** (0.0321143) | 0.5600407 *** (0.0767043) | 0.4586954 *** (0.0859834) | 0.4747597 *** (0.0836756) |
| NImage | - | 0.6736385 *** (0.0431683) | 0.3077441 *** (0.1127373) | 0.1773168 (0.1170098) | 0.1779609 (0.1182195) |
| DVideo | - | 0.2474221 *** (0.032336) | 0.2244095 *** (0.0355803) | −0.1295235 ** (0.0540558) | −0.1634889 ** (0.0665812) |
| | - | - | −0.3179691 *** (0.0732998) | −0.2828878 *** (0.0741595) | −0.3036335 *** (0.0718357) |
| | - | - | 0.287492 *** (0.0920753) | 0.2015993 ** (0.0907878) | 0.1133691 (0.0984913) |
| | - | - | 0.0686756 * (0.0354769) | 0.1198493 *** (0.036711) | 0.1194758 *** (0.0368086) |
| PType × RLength | - | - | - | 0.1725752 *** (0.0664521) | 0.1962015 *** (0.0649207) |
| PType × NImage | - | - | - | 0.3376643 *** (0.0708082) | 0.3732293 *** (0.0714518) |
| PType × DVideo | - | - | - | 0.704002 *** (0.0708605) | 0.7325866 *** (0.0718867) |
| SRating × RLength | - | - | - | - | 0.1185116 *** (0.0306011) |
| SRating × NImage | - | - | - | - | 0.1526409 ** (0.0685903) |
| SRating × DVideo | - | - | - | - | 0.0299873 (0.0610099) |
| Intercept | −1.352547 *** (0.4727249) | −1.587855 *** (0.5957433) | −1.61709 *** (0.5963793) | −1.344825 *** (0.5002534) | −1.456717 *** (0.5108506) |
| Category-specific random effects | ✓ | ✓ | ✓ | ✓ | ✓ |
| Product-type-specific random effects | ✓ | ✓ | ✓ | ✓ | ✓ |
| Observations | 8693 | 8693 | 8693 | 8693 | 8693 |
| AIC | 7293.222 | 6733.375 | 6709.319 | 6510.865 | 6494.367 |
| Log-likelihood | −3640.611 | −3357.688 | −3334.8877 | −3239.7858 | −3229.1835 |
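AIC and the log-likelihood are linked by AIC = 2k − 2 ln L, where k is the number of estimated parameters, so the last two rows of the main-results table can be cross-checked by solving for k. The sketch below assumes the standard AIC definition, which the table does not spell out, and that each random effect contributes one variance parameter. Under those assumptions Model 1 should have k = 6: four fixed coefficients (the first, unlabeled row, PType, RResponse, and the intercept) plus two random-effect variances.

```python
# (AIC, log-likelihood) pairs as reported in the main-results table.
REPORTED = {
    "Model 1": (7293.222, -3640.611),
    "Model 2": (6733.375, -3357.688),
    "Model 3": (6709.319, -3334.8877),
    "Model 4": (6510.865, -3239.7858),
    "Model 5": (6494.367, -3229.1835),
}


def implied_k(aic, loglik):
    """Solve AIC = 2k - 2*lnL for k, the number of estimated parameters."""
    return (aic + 2 * loglik) / 2


for name, (aic, ll) in REPORTED.items():
    k = implied_k(aic, ll)
    note = "" if abs(k - round(k)) < 0.01 else "  (not a whole number)"
    print(f"{name}: implied k = {k:.3f}{note}")
```

Models 1, 2 and 5 return whole numbers (6, 9 and 18), which matches adding three linear terms in Model 2 and nine further terms by Model 5. The reported pairs for Models 3 and 4 do not yield whole numbers, so those two fit statistics are worth reading with some care when comparing models.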

| Variable | Model 6 | Model 7 | Model 8 |
|---|---|---|---|
| | Interaction Term of Response and Product Type | Dummy Product Categories | Ordered Logistic Regression |
| | −0.480597 *** (0.0652739) | −0.4588905 *** (0.0661166) | −0.5965664 *** (0.0551167) |
| PType | −0.4164621 (0.7217275) | −0.2068887 (0.2341358) | −0.6700705 (0.8038127) |
| RResponse | 1.001158 *** (0.1003105) | 1.058021 *** (0.0810086) | 0.7270809 *** (0.0413358) |
| RLength | 0.4953353 *** (0.0839767) | 0.8273235 *** (0.1191163) | 0.5629864 *** (0.0757548) |
| NImage | 0.1836417 (0.1176959) | 0.1160616 (0.1346521) | 0.3174738 *** (0.1077916) |
| DVideo | −0.1625694 ** (0.0661902) | 0.7428473 *** (0.135011) | −0.1758015 *** (0.0588212) |
| | −0.3162264 *** (0.0720411) | −0.3468706 *** (0.0749603) | −0.3038904 *** (0.0650162) |
| | 0.0939385 (0.0981905) | 0.1825292 (0.1034378) | 0.0664214 (0.0845221) |
| | 0.1194046 *** (0.0367394) | 0.0496003 (0.0359453) | 0.1183577 *** (0.0358613) |
| PType × RLength | 0.1974928 *** (0.0649661) | −0.0740211 *** (0.023857) | 0.0369258 (0.060756) |
| PType × NImage | 0.4266552 *** (0.0738749) | 0.0699344 *** (0.025906) | 0.3915435 *** (0.0635056) |
| PType × DVideo | 0.7424756 *** (0.0719275) | −0.1071241 *** (0.027712) | 0.6770692 *** (0.0671368) |
| SRating × RLength | 0.1192885 *** (0.0305453) | 0.0582222 * (0.0308609) | 0.1327962 *** (0.0267572) |
| SRating × NImage | 0.1720769 ** (0.0681431) | 0.1228174 ** (0.0701131) | 0.2547917 *** (0.0590973) |
| SRating × DVideo | 0.0247345 (0.0609282) | −0.0864279 (0.0642071) | −0.0146173 (0.0494329) |
| RResponse × PType | 0.4568665 *** (0.1579479) | - | - |
| Intercept | −1.475673 *** (0.5122107) | −1.0055 (0.9121967) | - |
| Category-specific random effects | ✓ | ✓ | ✓ |
| Product-type-specific random effects | ✓ | ✓ | ✓ |
| Observations | 8693 | 8693 | 8693 |
| AIC | 6488.005 | 6689.169 | 13315.79 |
| Log-likelihood | −3225.003 | −3326.584 | −6566.896 |
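Each cell reports a coefficient with its standard error in parentheses, so the significance stars can be reproduced from a Wald statistic z = β/SE and a two-sided normal p-value. The tables do not state their cut-offs; the sketch assumes the common convention *** p < 0.01, ** p < 0.05, * p < 0.1, and takes a few Model 7 cells as input. The function names are illustrative.

```python
import math

# (coefficient, standard error, stars as printed) for selected Model 7 cells.
MODEL7_CELLS = {
    "DVideo": (0.7428473, 0.135011, "***"),
    "PType x RLength": (-0.0740211, 0.023857, "***"),
    "SRating x RLength": (0.0582222, 0.0308609, "*"),
    "SRating x NImage": (0.1228174, 0.0701131, "**"),
}


def wald_p(coef, se):
    """Two-sided p-value for H0: coef = 0 under a normal approximation."""
    z = coef / se
    return math.erfc(abs(z) / math.sqrt(2))


def stars(p):
    """Map a p-value to stars; the thresholds are an assumed convention."""
    if p < 0.01:
        return "***"
    if p < 0.05:
        return "**"
    if p < 0.1:
        return "*"
    return ""


for name, (coef, se, printed) in MODEL7_CELLS.items():
    p = wald_p(coef, se)
    print(f"{name}: z = {coef / se:.2f}, p = {p:.4f}, "
          f"recomputed = {stars(p) or 'none'}, printed = {printed}")
```

Under these assumed cut-offs the recomputed stars agree with the printed ones for the first three cells. For SRating × NImage in Model 7 (z ≈ 1.75, p ≈ 0.08) they give * rather than the printed **, so that cell either follows a different convention or carries a typo.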

| Variable | Model 9 | Model 10 |
|---|---|---|
| | Image Quantity and Its Moderators | Interaction Term of Response and Product Type |
| | −0.4837298 *** (0.0661919) | −0.4695552 *** (0.0657944) |
| PType | −0.4281081 (0.7240757) | −0.4162143 (0.726257) |
| RResponse | 1.189342 *** (0.0837727) | 0.9989255 *** (0.1001109) |
| RLength | 0.4637037 *** (0.0824031) | 0.4847465 *** (0.0827195) |
| NImage | 0.1110063 (0.1205665) | 0.1149707 (0.1199841) |
| DVideo | −0.112208 (0.0687552) | −0.1096264 (0.0683078) |
| | −0.2850631 *** (0.0695438) | −0.2969254 *** (0.0698416) |
| | 0.2035386 ** (0.1026454) | 0.1851388 * (0.1022554) |
| | 0.1231488 *** (0.0364154) | 0.1233512 *** (0.0363315) |
| PType × RLength | 0.2059165 *** (0.0638397) | 0.207092 *** (0.063874) |
| PType × NImage | 0.3712098 *** (0.0710646) | 0.4272585 *** (0.0735465) |
| PType × DVideo | 0.7476366 *** (0.0730458) | 0.7594635 *** (0.0731057) |
| SRating × RLength | 0.1674511 *** (0.0363951) | 0.1696441 *** (0.0363623) |
| SRating × NImage | 0.1484898 ** (0.0687052) | 0.1690226 ** (0.0682775) |
| SRating × DVideo | 0.1009002 (0.0683825) | 0.0991415 (0.0682961) |
| RLength × NImage | −0.0826407 ** (0.0339903) | −0.0845091 ** (0.0338529) |
| DVideo × NImage | −0.0983329 ** (0.0418905) | −0.102918 ** (0.0419894) |
| RResponse × PType | - | 0.4779957 *** (0.1582596) |
| Intercept | −1.424818 *** (0.5139615) | −1.443889 *** (0.5154571) |
| Category-specific random effects | ✓ | ✓ |
| Product-type-specific random effects | ✓ | ✓ |
| Observations | 8693 | 8693 |
| AIC | 6487.624 | 6480.507 |
| Log-likelihood | −3223.812 | −3219.253 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wang, L.; Che, G.; Hu, J.; Chen, L. Online Review Helpfulness and Information Overload: The Roles of Text, Image, and Video Elements. J. Theor. Appl. Electron. Commer. Res. 2024, 19, 1243-1266. https://doi.org/10.3390/jtaer19020064
