Understanding Antecedents That Affect Customer Evaluations of Head-Mounted Display VR Devices through Text Mining and Deep Neural Network

Abstract

1. Introduction

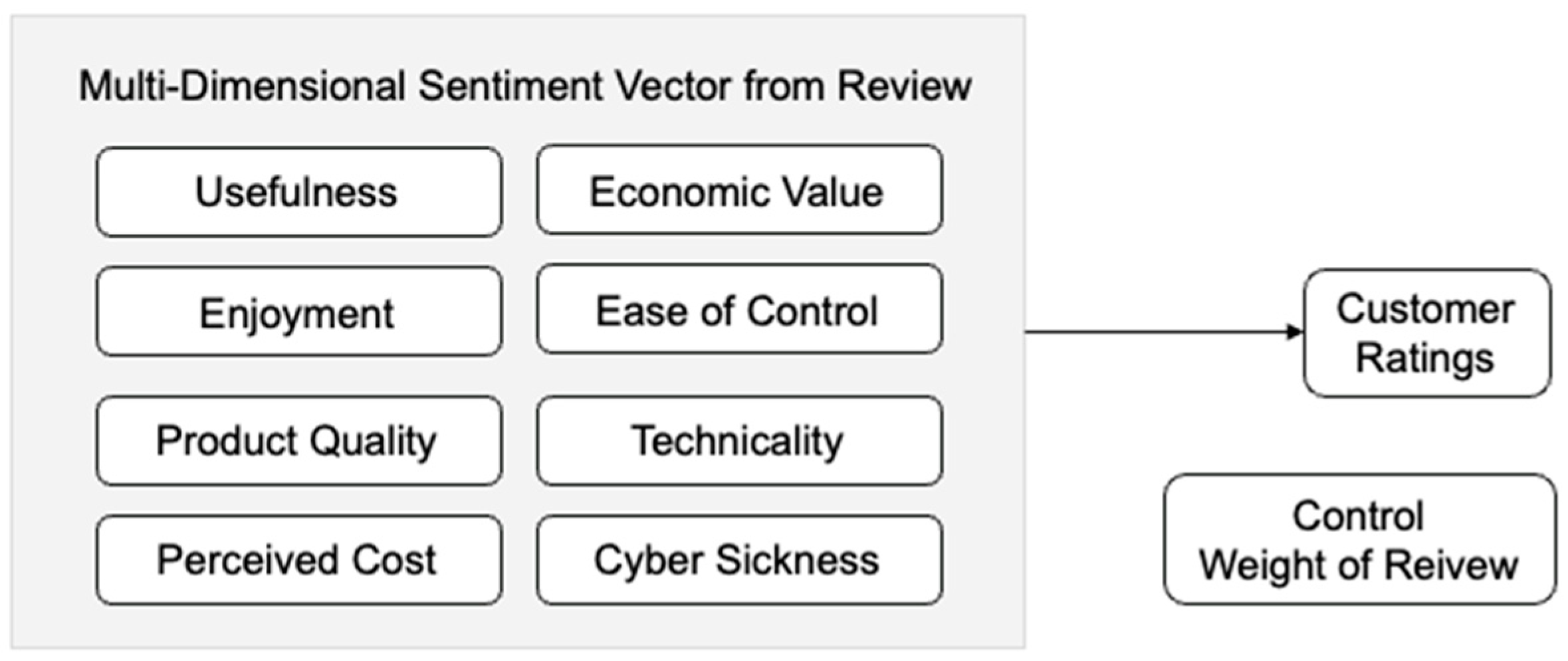

- Research question: Can multi-dimensional sentiment scoring of online user reviews predict and explain review ratings?

2. Conceptual Background

2.1. HMD VR Device

2.2. Customer Reviews and Ratings in E-Commerce

2.3. Text Mining and Sentiment Analysis: A Big Data Perspective

3. Methods

3.1. Research Design

3.2. Research Process

3.3. Exploratory Feature Selection

3.3.1. Value-Based Adoption Model

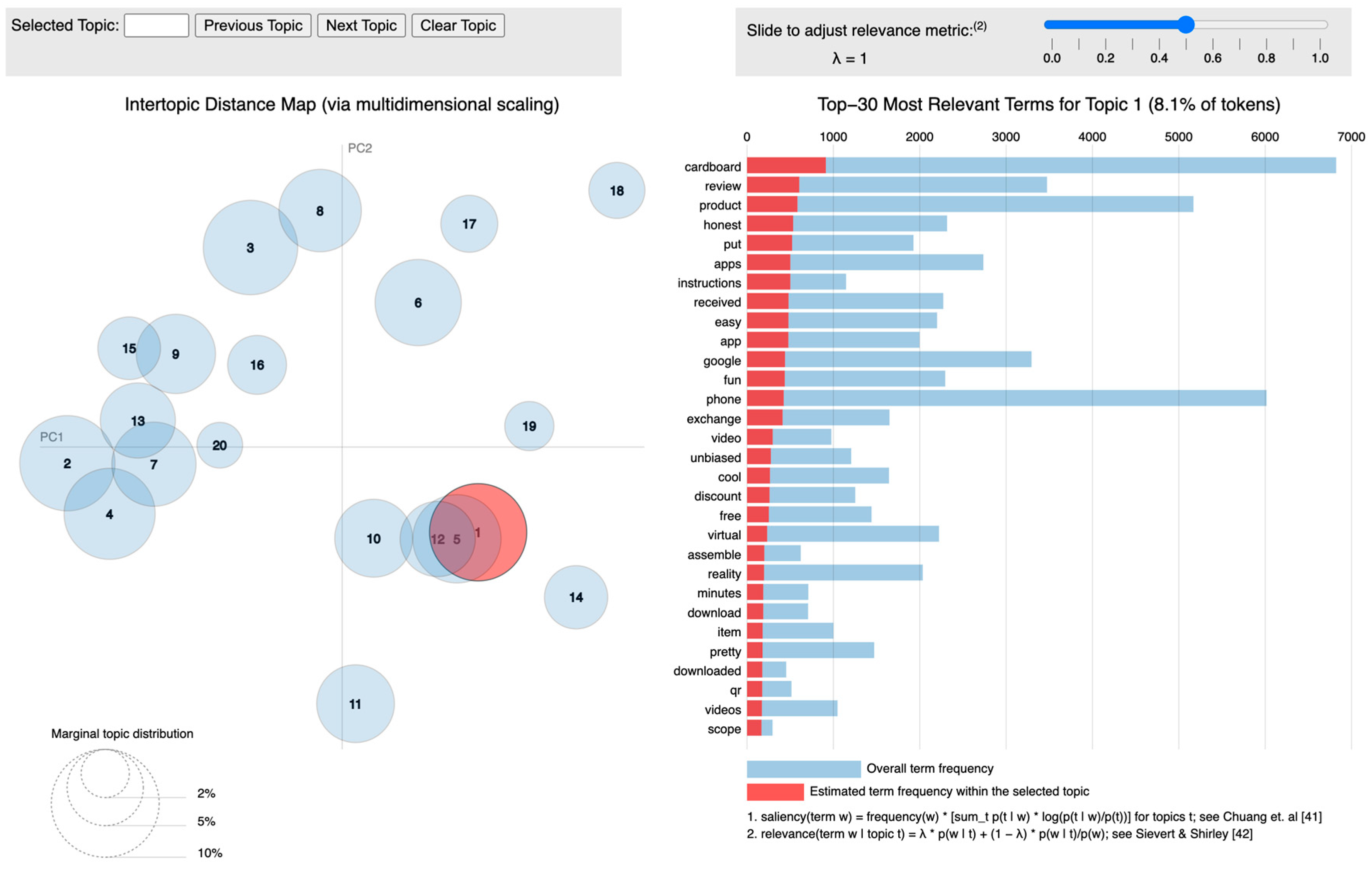

3.3.2. Topic Modeling

3.3.3. In-Depth Interview for Keyword Filtering

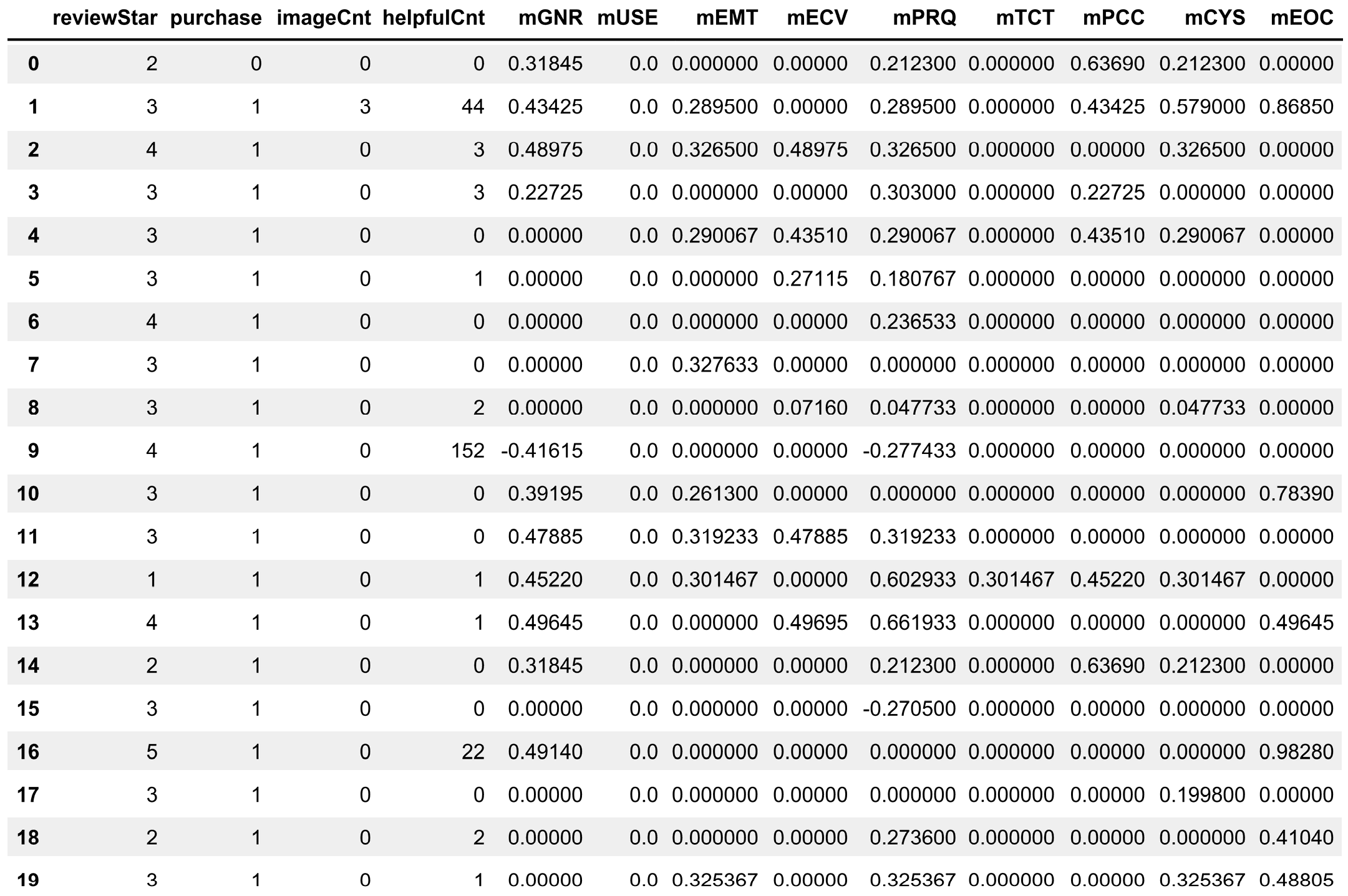

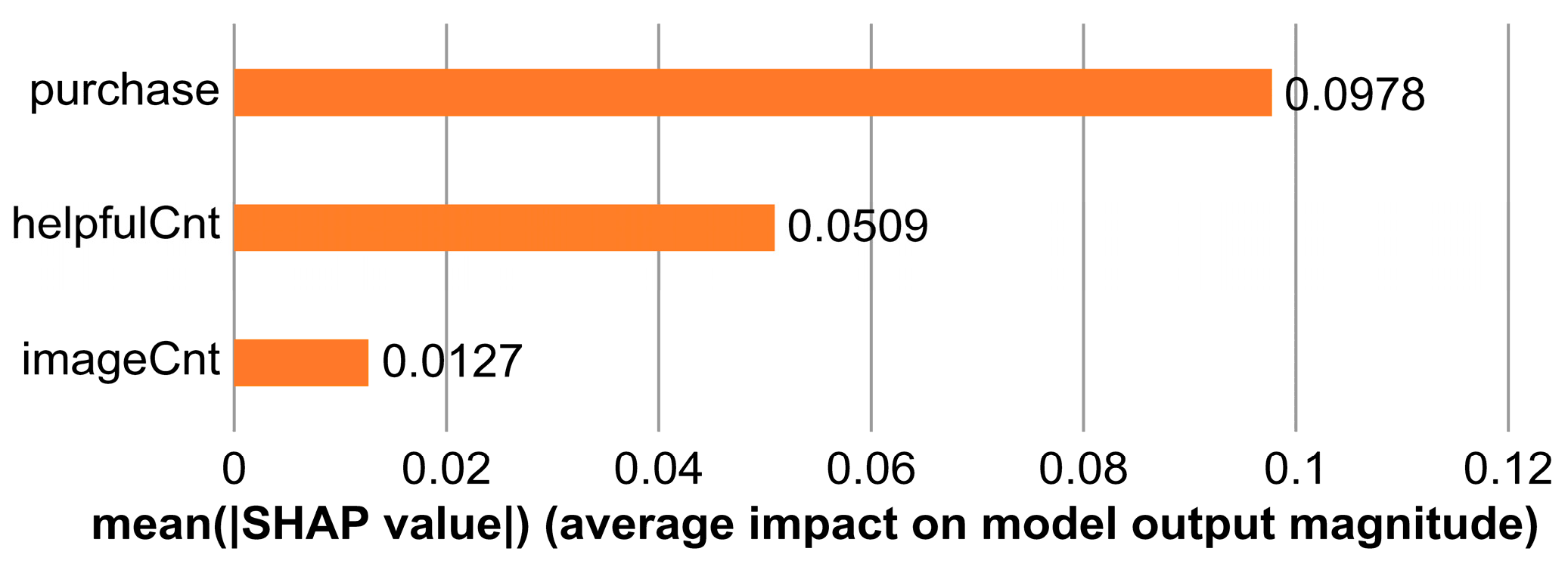

3.3.4. Weight of Review

3.4. Sentiment Score Using NLTK

3.5. Multi-Dimensional Sentiment Vector (MDSV)

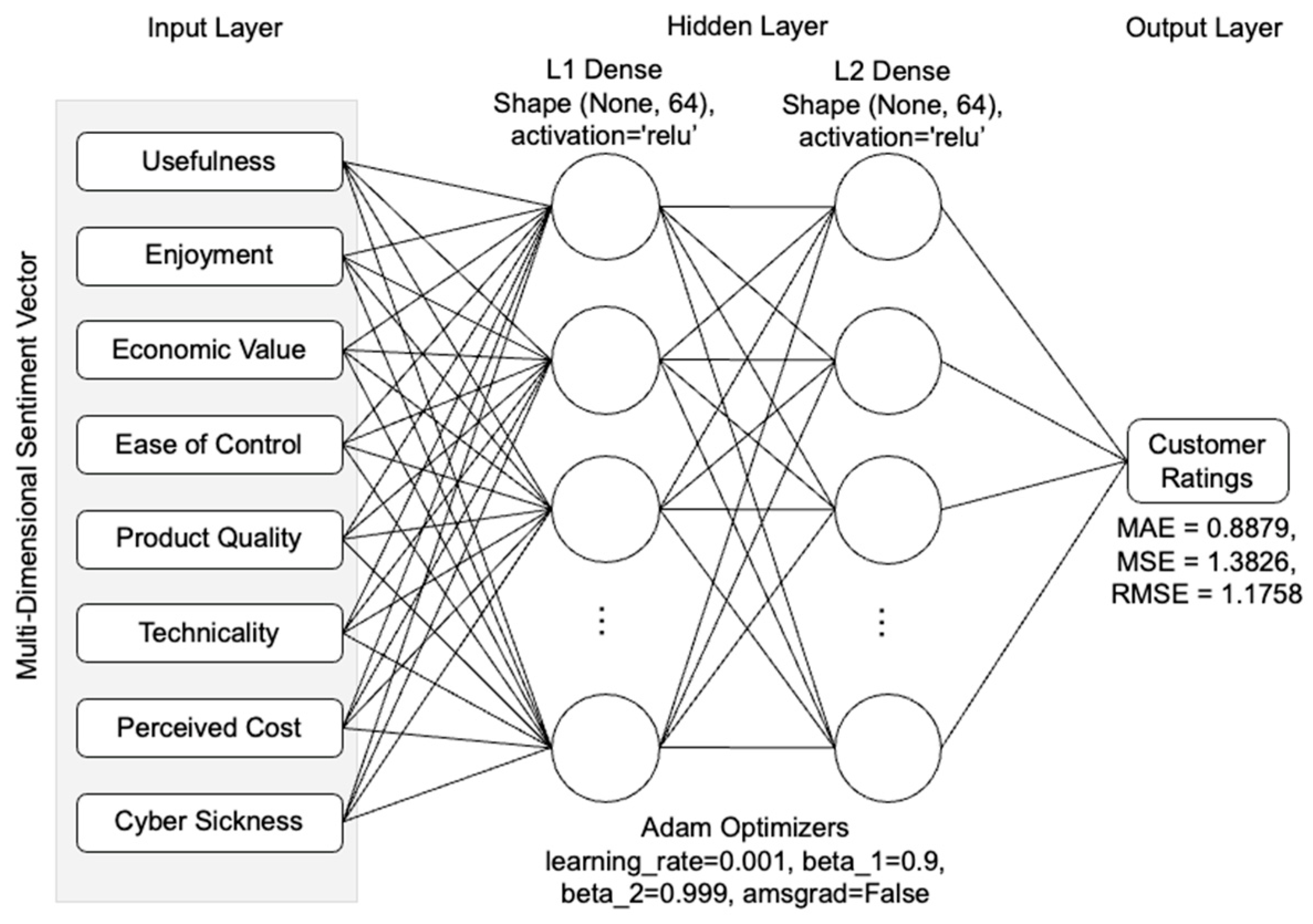

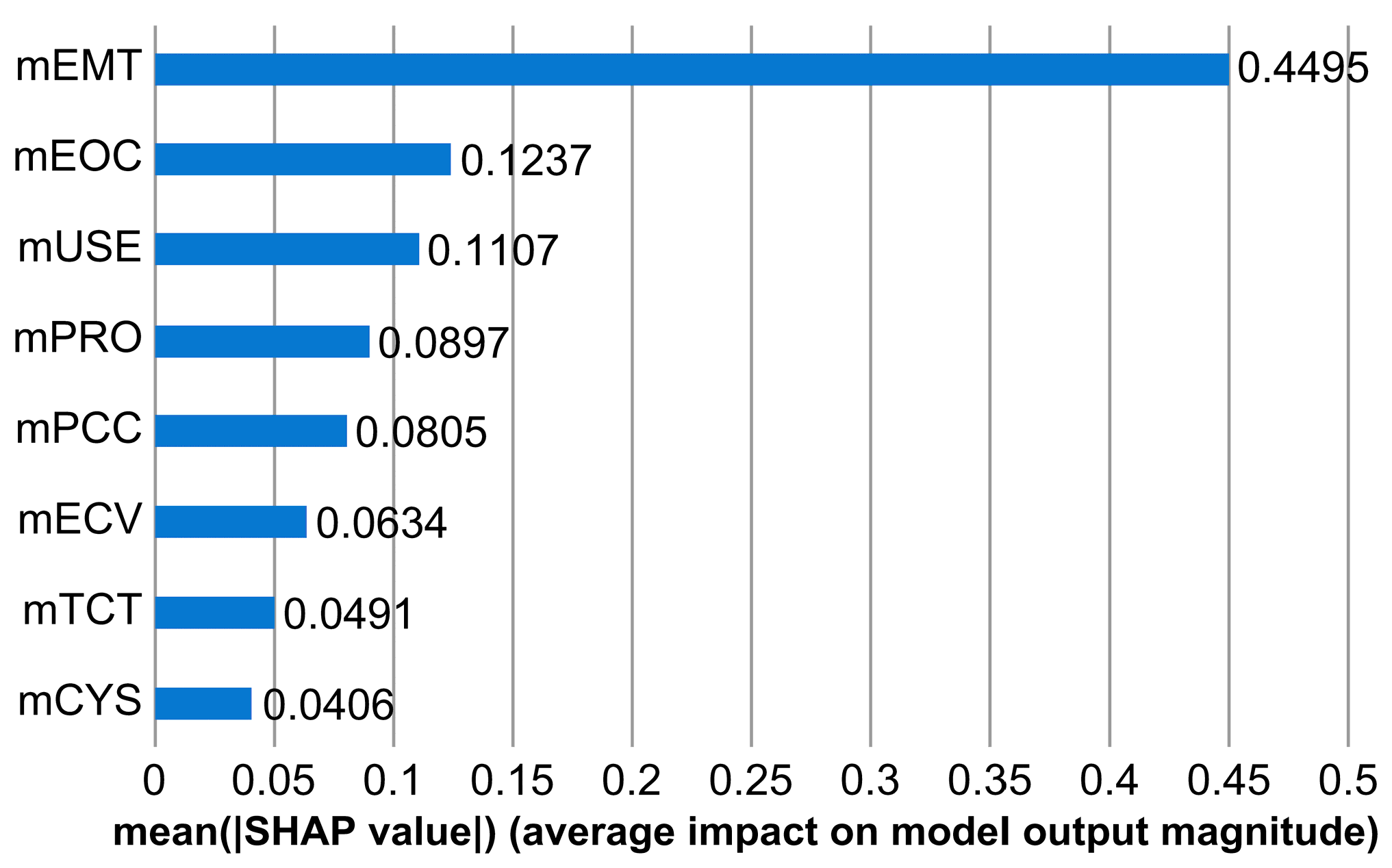

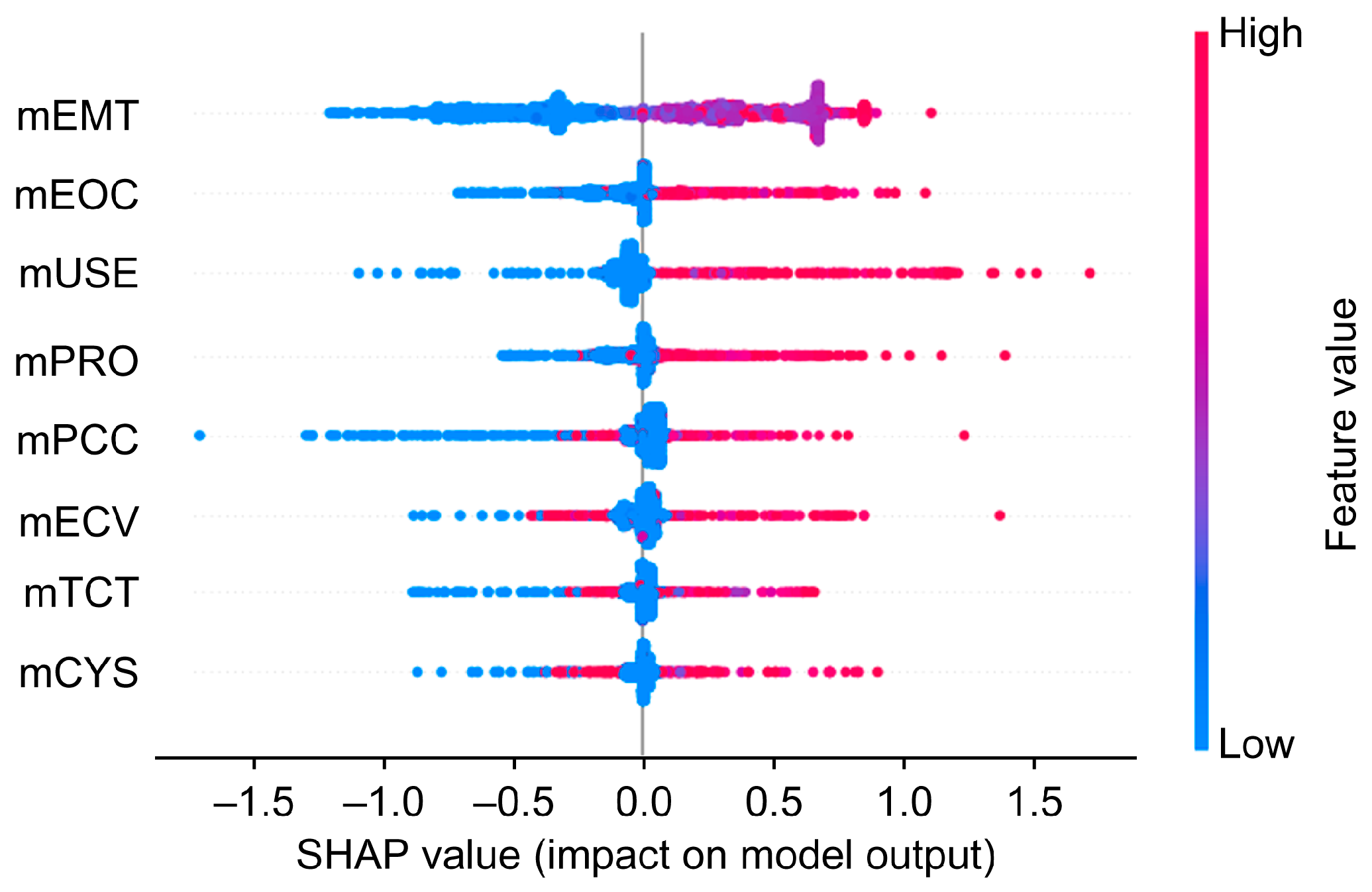

3.6. Deep Learning Model with SHAP

4. Experiment

4.1. Data Collection

4.2. Data Pre-Processing

4.3. Result of Feature Selection

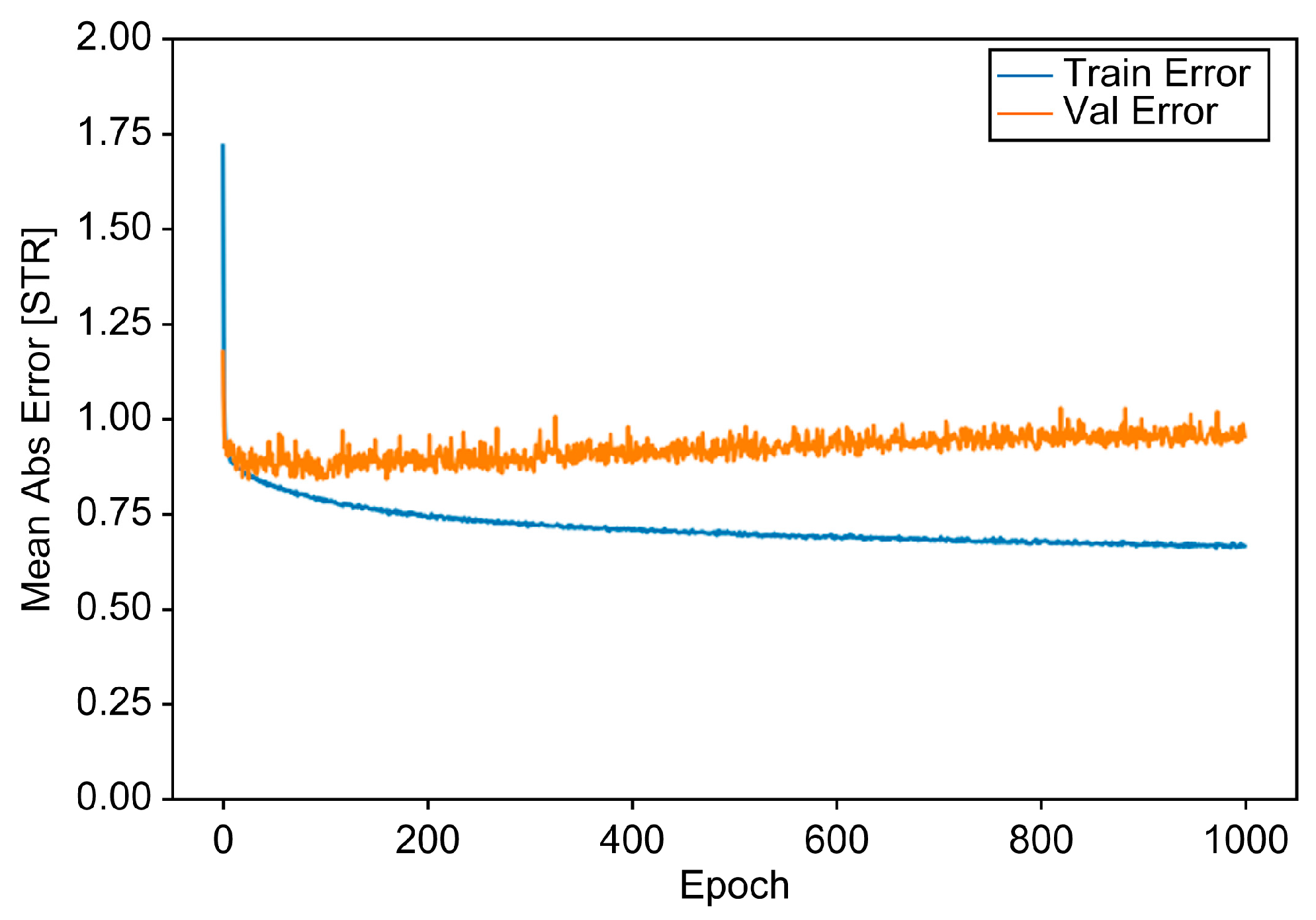

4.4. Result of Deep Neural Network Analysis

5. Discussion and Limitations

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Bloomberg. Virtual Reality Market Size Worth $87.0 Billion by 2030: Grand View Research, Inc. 2022. Available online: https://www.bloomberg.com/press-releases/2022-07-07/virtual-reality-market-size-worth-87-0-billion-by-2030-grand-view-research-inc (accessed on 15 March 2023).

2. Ahmed, N.; De Aguiar, E.; Theobalt, C.; Magnor, M.; Seidel, H.-P. Automatic generation of personalized human avatars from multi-view video. In Proceedings of the ACM Symposium on Virtual Reality Software and Technology, Monterey, CA, USA, 7–9 November 2005; pp. 257–260.

3. Hee Lee, J.; Shvetsova, O.A. The impact of VR application on student’s competency development: A comparative study of regular and VR engineering classes with similar competency scopes. Sustainability 2019, 11, 2221.

4. Horváth, I. An analysis of personalized learning opportunities in 3D VR. Front. Comput. Sci. 2021, 3, 673826.

5. Boas, Y. Overview of Virtual Reality Technologies. Available online: https://static1.squarespace.com/static/537bd8c9e4b0c89881877356/t/5383bc16e4b0bc0d91a758a6/1401142294892/yavb1g12_25879847_finalpaper.pdf (accessed on 1 March 2022).

6. Park, M.J.; Lee, B.J. The features of VR (virtual reality) communication and the aspects of its experience. J. Commun. Res. 2004, 41, 29–60.

7. John, J. Global (VR) Virtual Reality Market Size Forecast to Reach. Available online: https://www.globenewswire.com/news-release/2018/05/01/1494026/0/en/Global-VR-Virtual-Reality-Market-Size-Forecast-to-Reach-USD-26-89-Billion-by-2022.html (accessed on 27 February 2023).

8. Badenhausen, K. Apple and Microsoft Head the World’s Most Valuable Brands 2015. Available online: http://www.forbes.com/sites/kurtbadenhausen/2015/05/13/apple-and-microsoft-head-the-worlds-most-valuable-brands-2015/#2d0ce65a2875 (accessed on 1 March 2022).

9. Rohan. Virtual Reality Market Worth $15.89 Billion by 2020. Available online: https://www.marketsandmarkets.com/PressReleases/ar-market.asp (accessed on 27 February 2023).

10. Korolov, M. 98% of VR Headsets Sold This Year Are for Mobile Phones–Hypergrid Business. 2016. Available online: https://www.hypergridbusiness.com/2016/11/report-98-of-vr-headsets-sold-this-year-are-for-mobile-phones/ (accessed on 27 February 2023).

11. Mudambi, S.M.; Schuff, D. Research note: What makes a helpful online review? A study of customer reviews on Amazon.com. MIS Q. 2010, 34, 185–200.

12. Burt, S.; Sparks, L. E-commerce and the retail process: A review. J. Retail. Consum. Serv. 2003, 10, 275–286.

13. Sagiroglu, S.; Sinanc, D. Big data: A review. In Proceedings of the 2013 International Conference on Collaboration Technologies and Systems (CTS), San Diego, CA, USA, 20–24 May 2013; pp. 42–47.

14. Tan, A.-H. Text mining: The state of the art and the challenges. In Proceedings of the PAKDD 1999 Workshop on Knowledge Discovery from Advanced Databases, Beijing, China, 26–28 April 1999; pp. 65–70.

15. Mishev, K.; Gjorgjevikj, A.; Vodenska, I.; Chitkushev, L.T.; Trajanov, D. Evaluation of sentiment analysis in finance: From lexicons to transformers. IEEE Access 2020, 8, 131662–131682.

16. Ferreira, F.G.D.C.; Gandomi, A.H.; Cardoso, R.T.N. Artificial intelligence applied to stock market trading: A review. IEEE Access 2021, 9, 30898–30917.

17. Imran, A.S.; Daudpota, S.M.; Kastrati, Z.; Batra, R. Cross-cultural polarity and emotion detection using sentiment analysis and deep learning on COVID-19 related tweets. IEEE Access 2020, 8, 181074–181090.

18. Jianqiang, Z.; Xiaolin, G. Comparison research on text pre-processing methods on twitter sentiment analysis. IEEE Access 2017, 5, 2870–2879.

19. Zhao, Z.; Hao, Z.; Wang, G.; Mao, D.; Zhang, B.; Zuo, M.; Yen, J.; Tu, G. Sentiment Analysis of Review Data Using Blockchain and LSTM to Improve Regulation for a Sustainable Market. J. Theor. Appl. Electron. Commer. Res. 2022, 17, 1–19.

20. Pezoa-Fuentes, C.; García-Rivera, D.; Matamoros-Rojas, S. Sentiment and Emotion on Twitter: The Case of the Global Consumer Electronics Industry. J. Theor. Appl. Electron. Commer. Res. 2023, 18, 39.

21. Jo, Y.; Oh, A.H. Aspect and sentiment unification model for online review analysis. In Proceedings of the Fourth ACM International Conference on Web Search and Data Mining, Hong Kong, China, 9–12 February 2011; pp. 815–824.

22. Zaki Ahmed, A.; Rodríguez-Díaz, M. Analyzing the online reputation and positioning of airlines. Sustainability 2020, 12, 1184.

23. Lucini, F.R.; Tonetto, L.M.; Fogliatto, F.S.; Anzanello, M.J. Text mining approach to explore dimensions of airline customer satisfaction using online customer reviews. J. Air Transp. Manag. 2020, 83, 101760.

24. Kuyucuk, T.; Çallı, L. Using Multi-Label Classification Methods to Analyze Complaints Against Cargo Services during the COVID-19 Outbreak: Comparing Survey-Based and Word-Based Labeling. Sak. Univ. J. Comput. Inf. Sci. 2022, 5, 371–384.

25. Çallı, L. Exploring mobile banking adoption and service quality features through user-generated content: The application of a topic modeling approach to Google Play Store reviews. Int. J. Bank Mark. 2023, 41, 428–454.

26. Çallı, L.; Çallı, F. Understanding airline passengers during COVID-19 outbreak to improve service quality: Topic modeling approach to complaints with latent Dirichlet allocation algorithm. Transp. Res. Rec. 2023, 2677, 656–673.

27. Kim, H.W.; Chan, H.C.; Gupta, S. Value-based Adoption of Mobile Internet: An empirical investigation. Decis. Support Syst. 2007, 43, 111–126.

28. Zeithaml, V.A. Consumer Perceptions of Price, Quality, and Value: A Means-End Model and Synthesis of Evidence. J. Mark. 1988, 52, 2–22.

29. Blei, D.M.; Ng, A.Y.; Jordan, M.I. Latent Dirichlet allocation. J. Mach. Learn. Res. 2003, 3, 993–1022.

30. Griffiths, T.; Steyvers, M. Finding scientific topics. Proc. Natl. Acad. Sci. USA 2004, 101, 9.

31. Yang, C.; Wu, L.; Tan, K.; Yu, C.; Zhou, Y.; Tao, Y.; Song, Y. Online user review analysis for product evaluation and improvement. J. Theor. Appl. Electron. Commer. Res. 2021, 16, 90.

32. Yang, Y.; Ma, Y.; Wu, G.; Guo, Q.; Xu, H. The Insights, “Comfort” Effect and Bottleneck Breakthrough of “E-Commerce Temperature” during the COVID-19 Pandemic. J. Theor. Appl. Electron. Commer. Res. 2022, 17, 1493–1511.

33. Legard, R.; Keegan, J.; Ward, K. In-depth interviews. Qual. Res. Pract. A Guide Soc. Sci. Stud. Res. 2003, 6, 138–169.

34. Nadeem, W.; Juntunen, M.; Shirazi, F.; Hajli, N. Consumers’ value co-creation in sharing economy: The role of social support, consumers’ ethical perceptions and relationship quality. Technol. Forecast. Soc. Chang. 2020, 151, 119786.

35. Kim, G. Developing Theory-Based Text Mining Framework to Evaluate Service Quality: In the Context of Hotel Customers’ Online Reviews. Master’s Thesis, Yonsei University, Seoul, Republic of Korea, 2016.

36. Choi, J. Analysis of the Impact of Sentiment Level of Service Quality Indicators on Hospital Evaluation: Using Text Mining Techniques. Master’s Thesis, Yonsei University, Seoul, Republic of Korea, 2016.

37. Lundberg, S.M.; Lee, S.I. A Unified Approach to Interpreting Model Predictions. Adv. Neural Inf. Process. Syst. 2017, 30, 4765–4774.

38. Google Cloud. Best Practices for Creating Tabular Training Data | Vertex AI. Available online: https://cloud.google.com/vertex-ai/docs/tabular-data/bp-tabular#how-many-rows (accessed on 1 March 2023).

39. Suh, K.S.; Chang, S. User interfaces and consumer perceptions of online stores: The role of telepresence. Behav. Inf. Technol. 2006, 25, 99–113.

40. Venkatesh, V. Determinants of perceived ease of use: Integrating control, intrinsic motivation, and emotion into the technology acceptance model. Inf. Syst. Res. 2000, 11, 342–365.

41. Sievert, C.; Shirley, K.E. LDAvis: A method for visualizing and interpreting topics. In Proceedings of the Workshop on Interactive Language Learning, Visualization, and Interfaces, Baltimore, MD, USA, 27 June 2014; pp. 63–70.

42. Chuang, J.; Manning, C.D.; Heer, J. Termite: Visualization techniques for assessing textual topic models. In Proceedings of the International Working Conference on Advanced Visual Interfaces, Capri Island, Italy, 21–25 May 2012; pp. 74–77.

43. Lee, C.; Yun, H.; Lee, C.; Lee, C.C. Factors Affecting Continuous Intention to Use Mobile Wallet: Based on Value-based Adoption Model. J. Soc. e-Bus. Stud. 2015, 20, 117–135.

44. Suh, K.S.; Lee, Y.E. The effects of virtual reality on consumer learning: An empirical investigation. MIS Q. 2005, 29, 673–697.

45. LaViola, J.J., Jr. A discussion of cybersickness in virtual environments. ACM SIGCHI Bull. 2000, 32, 47–56.

46. McCauley, M.E.; Sharkey, T.J. Cybersickness: Perception of Self-Motion in Virtual Environments. Presence Teleoperators Virtual Environ. 1992, 1, 311–318.

47. Simons, D.J.; Chabris, C.F. Gorillas in our midst: Sustained inattentional blindness for dynamic events. Perception 1999, 28, 1059–1074.

48. Cohen, M.A.; Botch, T.L.; Robertson, C.E. The limits of color awareness during active, real-world vision. Proc. Natl. Acad. Sci. USA 2020, 117, 13821–13827.

49. Lee, S.; Shvetsova, O.A. Optimization of the technology transfer process using Gantt charts and critical path analysis flow diagrams: Case study of the Korean automobile industry. Processes 2019, 7, 917.

50. Balakrishnan, V.; Shi, Z.; Law, C.L.; Lim, R.; Teh, L.L.; Fan, Y. A deep learning approach in predicting products’ sentiment ratings: A comparative analysis. J. Supercomput. 2022, 78, 7206–7226.

51. Liu, Z. Yelp Review Rating Prediction: Machine Learning and Deep Learning Models. arXiv 2020, arXiv:2012.06690.

52. Conneau, A.; Schwenk, H.; Barrault, L.; LeCun, Y. Very deep convolutional networks for text classification. arXiv 2016, arXiv:1606.01781.

53. Kim, Y. Convolutional Neural Networks for Sentence Classification. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP), Doha, Qatar, 26–28 October 2014; pp. 1746–1751.

54. Sharma, A.K.; Chaurasia, S.; Srivastava, D.K. Sentimental Short Sentences Classification by Using CNN Deep Learning Model with Fine Tuned Word2Vec. Procedia Comput. Sci. 2020, 167, 1139–1147.

55. Khan, Z.Y.; Niu, Z.; Sandiwarno, S.; Prince, R. Deep learning techniques for rating prediction: A survey of the state-of-the-art. Artif. Intell. Rev. 2021, 54, 95–135.

56. Ullah, K.; Rashad, A.; Khan, M.; Ghadi, Y.; Aljuaid, H.; Nawaz, Z. A Deep Neural Network-Based Approach for Sentiment Analysis of Movie Reviews. Complexity 2022, 2022, 5217491.

57. Shirani-mehr, H. Applications of Deep Learning to Sentiment Analysis of Movie Reviews. Technical Report; Stanford University: Stanford, CA, USA, 2014.

58. Abarja, R.A.; Wibowo, A. Movie rating prediction using convolutional neural network based on historical values. Int. J. 2020, 8, 2156–2164.

59. Ning, X.; Yao, L.; Wang, X.; Benatallah, B.; Dong, M.; Zhang, S. Rating prediction via generative convolutional neural networks based regression. Pattern Recognit. Lett. 2020, 132, 12–20.

60. Pouransari, H.; Ghili, S. Deep Learning for Sentiment Analysis of Movie Reviews. CS224N Project Report; pp. 1–8. Available online: https://cs224d.stanford.edu/reports/PouransariHadi.pdf (accessed on 1 March 2022).

61. Gogineni, S.; Pimpalshende, A. Predicting IMDB Movie Rating Using Deep Learning. In Proceedings of the 2020 5th International Conference on Communication and Electronics Systems (ICCES), Coimbatore, India, 10–12 June 2020; pp. 1139–1144.

| Year | Study | Objective | Method | Data and Sample | Findings |
|---|---|---|---|---|---|
| 2010 | Mudambi, S.M. & Schuff, D. [11] | Identify the factors that make online customer reviews helpful to consumers. | A model derived from information economics, tested with Tobit regression | 1587 customer reviews of six products on Amazon.com | Review extremity, review depth, and product type influenced review helpfulness. Review depth had a greater effect for search goods than for experience goods, while reviews with extreme ratings were less helpful for experience goods. |
| 2011 | Jo, Y. & Oh, A.H. [21] | Automatically identify the aspects evaluated in user-generated reviews and the sentiments associated with them. | Sentence-LDA (SLDA) and the Aspect and Sentiment Unification Model (ASUM) | 24,184 reviews of electronic devices and 27,458 reviews of restaurants from Amazon and Yelp | ASUM performed well in sentiment classification, approaching the effectiveness of supervised classification methods without requiring any sentiment labels. |
| 2020 | Zaki Ahmed, A. & Rodríguez-Díaz, M. [22] | Assess airlines’ online reputation and customer-perceived service quality and value. | Sentiment analysis and machine learning applied to TripAdvisor tags | TripAdvisor reviews of airlines from Europe, the USA, Canada, and other countries in the Americas | Service quality variables differed significantly across geographical areas and depending on whether an airline follows a low-cost strategy; 295 key tags linked to overall ratings were identified. |
| 2020 | Lucini et al. [23] | Use text mining to identify dimensions of airline customer satisfaction in online customer reviews. | Latent Dirichlet Allocation, logistic regression | Over 55,000 online customer reviews (OCRs) covering more than 400 airlines and passengers from 170 countries | The three most important factors for predicting airline recommendation are ‘cabin personnel’, ‘onboard service’, and ‘value for money’. |
| 2022 | Kuyucuk, T. & Çallı, L. [24] | Explore how cargo companies managed last-mile activities during the COVID-19 outbreak and suggest remedies for adverse outcomes. | Multi-label classification algorithms and a word-based labeling method for categorizing complaints | 104,313 complaints about cargo companies posted on sikayetvar.com between February 2020 and September 2021 | The most common complaints concerned parcel delivery, primarily delays or non-delivery. Despite the pandemic, hygiene-related complaints were the least common. The study also shows where leading cargo companies in Turkey performed well and where they struggled, and emphasizes real-time complaint review and issue discovery as key to customer satisfaction. |
| 2023 | Çallı, L. [25] | Analyze online user reviews of mobile banking services with text mining and machine learning to identify key themes affecting customer satisfaction. | Latent Dirichlet Allocation (LDA) and predictive algorithms such as Random Forest and Naive Bayes | 21,526 Google Play Store reviews from customers of Turkish private and state banks | Eleven key topics emerged; perceived usefulness, convenience, and time saving were crucial for app ratings. Seven topics concerned technical and security issues. |
| 2023 | Çallı, L. & Çallı, F. [26] | Understand the main triggers of customer complaints during the COVID-19 pandemic and identify significant service failures in the airline industry. | Latent Dirichlet Allocation | 10,594 complaints against full-service and low-cost airlines | Identifies the primary service failures that triggered customer complaints during the COVID-19 pandemic and proposes a decision support system for detecting significant service failures from passenger complaints. |

| Reason | Excluded Keywords |
|---|---|
| Fewer than 10 reviews (the minimum required for manual classification) | attractive, amusing, diversity, diverse, varied, buy buy one get one, bundle, UI, UX, user interface, convenient sw, handy sw, controller, convenient hw, handy hw, easy hw, handy device, moisture, humidity, earphone jack, appearance, peculiar, uncanny, unnatural, tiredness, exhaust, exhausted, composing, shortage instruction, lack guide, shortage guide, compatibleness, unpleasant, offensive, uninteresting, hz |
| Judged irrelevant (score of 1–3) in the relevancy survey of the in-depth interview group | AR, variety, various, event, durability, odd |
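The two exclusion rules in the table above amount to a simple keyword filter. The sketch below is a minimal illustration, assuming that a review count per keyword and a single relevancy score per keyword (for example, the interview group's average) are already available. The threshold of 10 reviews and the 1–3 irrelevance band come from the table; the function name `filter_keywords`, the input dictionaries, and all sample numbers are hypothetical and are not the study's data.

```python
from typing import Dict, List, Tuple

MIN_REVIEWS = 10          # keywords with fewer reviews were excluded (from the table)
IRRELEVANT_MAX_SCORE = 3  # relevancy scores of 1-3 were treated as irrelevant (from the table)


def filter_keywords(
    review_counts: Dict[str, int],
    relevancy: Dict[str, float],
) -> Tuple[List[str], Dict[str, str]]:
    """Split candidate keywords into kept keywords and excluded ones with a reason.

    review_counts: number of reviews mentioning each keyword (hypothetical input).
    relevancy: interview-group relevancy score per keyword (hypothetical input);
    keywords without a score are not excluded on relevancy grounds.
    """
    kept: List[str] = []
    excluded: Dict[str, str] = {}
    for kw, count in review_counts.items():
        if count < MIN_REVIEWS:
            # Rule 1: too few reviews for manual classification.
            excluded[kw] = "fewer than 10 reviews"
        elif kw in relevancy and relevancy[kw] <= IRRELEVANT_MAX_SCORE:
            # Rule 2: judged irrelevant by the interview group.
            excluded[kw] = "irrelevant (score 1-3)"
        else:
            kept.append(kw)
    return kept, excluded


if __name__ == "__main__":
    # Invented counts and scores, for illustration only (not the study's data).
    counts = {"motion sickness": 120, "hz": 4, "AR": 35, "gift": 58}
    scores = {"motion sickness": 6.5, "AR": 2.0, "gift": 5.0}
    kept, excluded = filter_keywords(counts, scores)
    print(kept)      # ['motion sickness', 'gift']
    print(excluded)  # {'hz': 'fewer than 10 reviews', 'AR': 'irrelevant (score 1-3)'}
```

Applying the review-count rule first mirrors the order of the table rows; a keyword that fails both rules is reported only under the first.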

| Classification | Measure Item | Feature ID | Item Definition | Source |
|---|---|---|---|---|
| Usefulness | Present | USE01 | Value of the VR device as a gift | [43] |
| | AR function | USE02 | Usefulness of the VR device as a supplementary AR function | [43] |
| Enjoyment | Surprise of VR experience | EMT01 | Surprise from the VR experience | [44] |
| | Interest of VR experience | EMT02 | Interest and excitement from the VR experience | [44] |
| | Diversity of VR content | EMT03 | Variety and amount of content users can experience with the VR device | eliminated [35,43] |
| Economic Value | Low cost | ECV01 | Degree of affordability purchasers perceive in the VR device | [28] |
| | Discount cost and event | ECV02 | Higher value consumers perceive from discounted prices or promotional events | [28] |
| Ease of Control | UI/UX convenience | EOC01 | Ease of use users perceive when controlling the device through software (SW) | [39,40] |
| | HW operation convenience | EOC02 | Ease of use users perceive when controlling the device through hardware (HW) | [40] |
| Product Quality | Internal completeness | PRQ01 | Internal completeness of the product with respect to heat, moisture, the earphone jack, or light leakage | [28] |
| | External completeness | PRQ02 | External completeness of the product, including appearance when worn, finish, and durability | [28] |
| | Wearable | PRQ03 | Degree of comfort as a head-mounted device, including temporary fit, weight, and fatigue | [28] |
| Technicality | Installed level | TCT01 | Difficulty experienced from delivery to switching on and using the device, including HW assembly for assembly-type devices and VR app installation for smartphone-based devices | [43] |
| | Lack of explanation | TCT02 | User response to a lack of explanation, via a user guide or instructional video, on how to use the VR device | [43] |
| | Compatibility problem | TCT03 | Problems users experience when the VR device is not compatible with their platform | [43] |
| Perceived Cost | High cost | PCC01 | Perception that the VR device is inappropriately priced | [28] |
| | Disappointment | PCC02 | Disappointment and discomfort from the VR experience | [28] |
| Cybersickness * | Motion sickness | CYS01 | Sickness and nausea caused by the disparity between virtual and real sensations, often occurring with low-spec devices | [45,46] |
| | Limited display | CYS02 | Display limitations, including visibility, display resolution, and scanning rate (scans per second) | [45,46] |
| | Limited field of view | CYS03 | Physical limits of the visual function provided by the lenses of the HMD VR device, including the optical power of the lenses, adjustability of the interpupillary distance, wearability with eyeglasses, and field of view | [45,46] |
| Weight of Review | Status of purchase | MET01 | Purchase status: ‘Verified Purchase’, ‘Not Verified’, or ‘Vine Customer Review of Free Product’ | Self-developed |
| | Helpful votes | MET02 | Number of customers who voted the review ‘helpful’ | Self-developed |
| | Number of images | MET03 | Number of images attached to the review | Self-developed |
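The feature table above implies a fixed-length input per review: one sentiment dimension per retained measure item plus the three Weight of Review items. The sketch below assembles such a vector under stated assumptions: each review already has a sentiment score per item (for example, from the NLTK step in Section 3.4), items a review does not mention default to 0.0, MET01 is one-hot encoded, and MET02 and MET03 are passed through as raw counts. The function name `build_feature_vector`, the zero default, and the encoding are hypothetical choices for illustration, not the authors' documented procedure.

```python
from typing import Dict, List

# Measure-item IDs from the feature table; EMT03 is omitted because the table marks it as eliminated.
SENTIMENT_ITEMS: List[str] = [
    "USE01", "USE02",
    "EMT01", "EMT02",
    "ECV01", "ECV02",
    "EOC01", "EOC02",
    "PRQ01", "PRQ02", "PRQ03",
    "TCT01", "TCT02", "TCT03",
    "PCC01", "PCC02",
    "CYS01", "CYS02", "CYS03",
]

# MET01 categories as listed in the table; one-hot encoding them is an assumption.
PURCHASE_STATUSES: List[str] = [
    "Verified Purchase",
    "Not Verified",
    "Vine Customer Review of Free Product",
]


def build_feature_vector(
    item_scores: Dict[str, float],
    purchase_status: str,
    helpful_votes: int,
    num_images: int,
) -> List[float]:
    """Return one review's features: 19 sentiment dimensions + 3 one-hot + 2 counts."""
    if purchase_status not in PURCHASE_STATUSES:
        raise ValueError(f"unknown purchase status: {purchase_status!r}")
    # Items the review does not mention get a neutral 0.0 (hypothetical default).
    vector = [float(item_scores.get(item, 0.0)) for item in SENTIMENT_ITEMS]
    # MET01: one-hot encoding of the purchase status.
    vector += [1.0 if purchase_status == s else 0.0 for s in PURCHASE_STATUSES]
    # MET02 and MET03: raw counts (any scaling is left to the model pipeline).
    vector += [float(helpful_votes), float(num_images)]
    return vector


if __name__ == "__main__":
    # Hypothetical review: positive enjoyment, negative motion sickness.
    features = build_feature_vector(
        {"EMT02": 0.8, "CYS01": -0.6},
        purchase_status="Verified Purchase",
        helpful_votes=3,
        num_images=1,
    )
    print(len(features))  # 24
```

Keeping the item order fixed in `SENTIMENT_ITEMS` means every review maps to the same column layout, which is what a tabular deep learning model and a per-feature attribution method such as SHAP both expect.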

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Maeng, Y.; Lee, C.C.; Yun, H. Understanding Antecedents That Affect Customer Evaluations of Head-Mounted Display VR Devices through Text Mining and Deep Neural Network. J. Theor. Appl. Electron. Commer. Res. 2023, 18, 1238-1256. https://doi.org/10.3390/jtaer18030063
