Explaining Policyholders’ Chatbot Acceptance with an Unified Technology Acceptance and Use of Technology-Based Model

Abstract

1. Introduction

2. A Unified Technology Acceptance and Use of Technology-Based Model to Assess Behavioral Intention of Policyholders to Use Chatbots

2.1. Initial Considerations

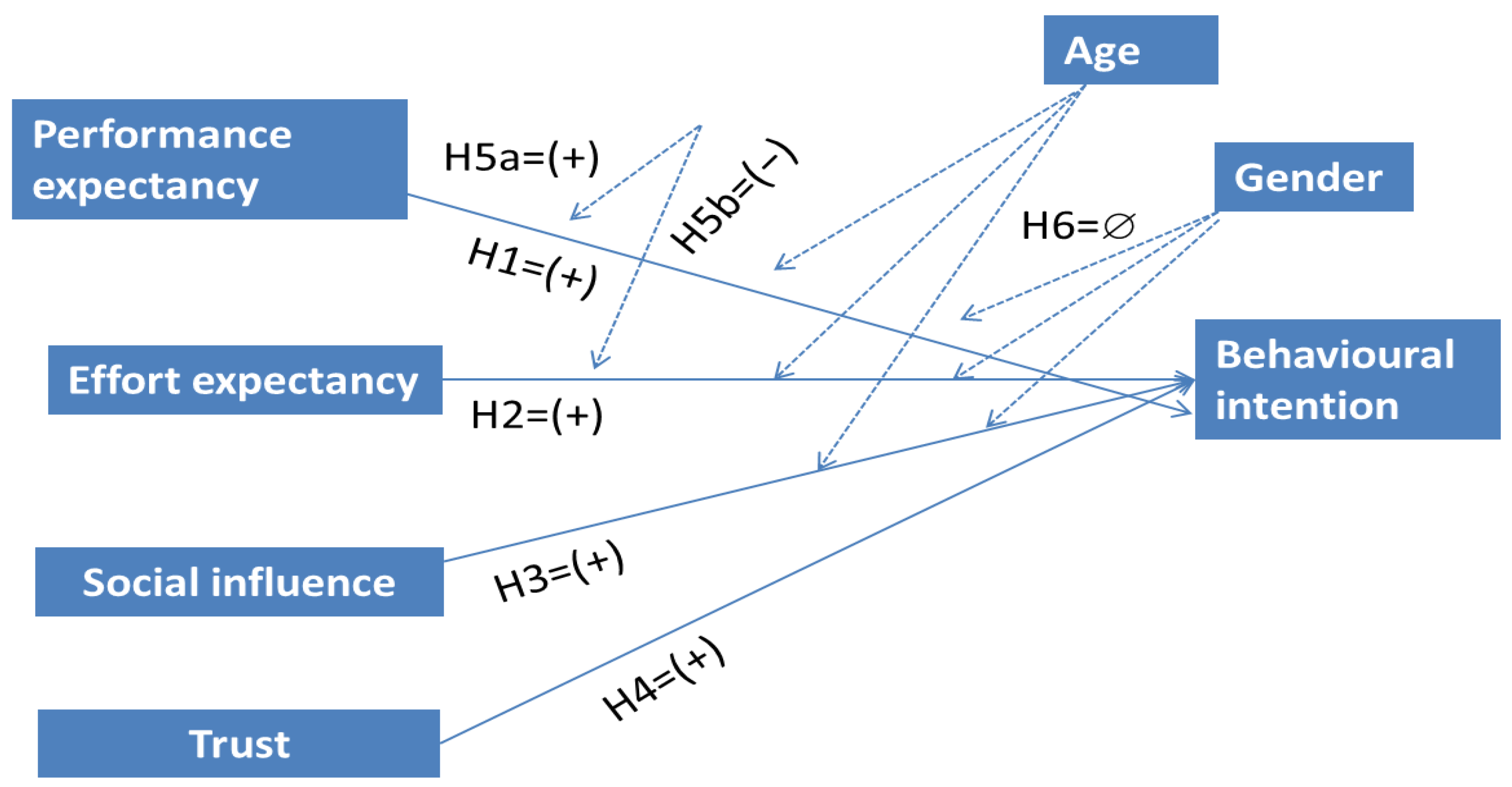

2.2. Direct Effects of Performance Expectancy, Effort Expectancy, Social Influence and Trust on Behavioral Intention

2.3. The Moderating Effects of Insurance Literacy, Gender, and Age

3. Materials and Methods

3.1. Materials

| Item | Foundation |
|---|---|
| Behavioral intention (BI) | Grounded in [20,25] |
| BI1. I agree to interact with a bot to carry out procedures with my insurer. | |
| BI2. I believe that I will employ conversational bots to interact with the insurer regarding my policies. | |
| BI3. I will opt to manage existing policies with conversational robots. | |
| Performance expectancy (PE) | Grounded in [20,24] and used in [48] to assess AI acceptance |
| PE1. Chatbots make procedures with the insurance company easier. | |
| PE2. Chatbots allow for a faster resolution of issues with my policies. | |
| PE3. Chatbots allow procedures with the insurance company to be carried out with less effort. | |
| Effort expectancy (EE) | Based on [20,48,59] |
| EE1. Using chatbots to communicate with the insurer is easy. | |
| EE2. Managing claims and other procedures with the insurer through chatbots is clear and understandable. | |
| EE3. The help of chatbots in managing policies and claims is accessible and less prone to errors. | |
| EE4. It is easy to use the communication channels of the insurer smoothly through chatbots. | |
| Social influence (SI) | Grounded in [20] and used by [48,59] |
| SI1. People who are important to me think that chatbots make insurance procedures easier. | |
| SI2. The people who influence me feel that, if there is a choice of channel, it is better to interact with bots. | |
| SI3. People whose opinions I value feel that carrying out insurance procedures with bots is a step forward. | |
| Trust (TRUST) | Based on [40], which was grounded in [83]; used in [57] in a chatbot context |
| TRUST1. I feel that conversational bots are reliable. | |
| TRUST2. The use of chatbots enables the insurance company to fulfil its commitments and obligations. | |
| TRUST3. The use of chatbots to interact with the insurer considers the interests of policyholders. | |
| Insurance literacy (IL) | Based on [84] |
| IL1. I have a good level of knowledge about insurance matters. | |
| IL2. I have a high ability to apply my knowledge about insurance in practice. | |

3.2. Data Analysis

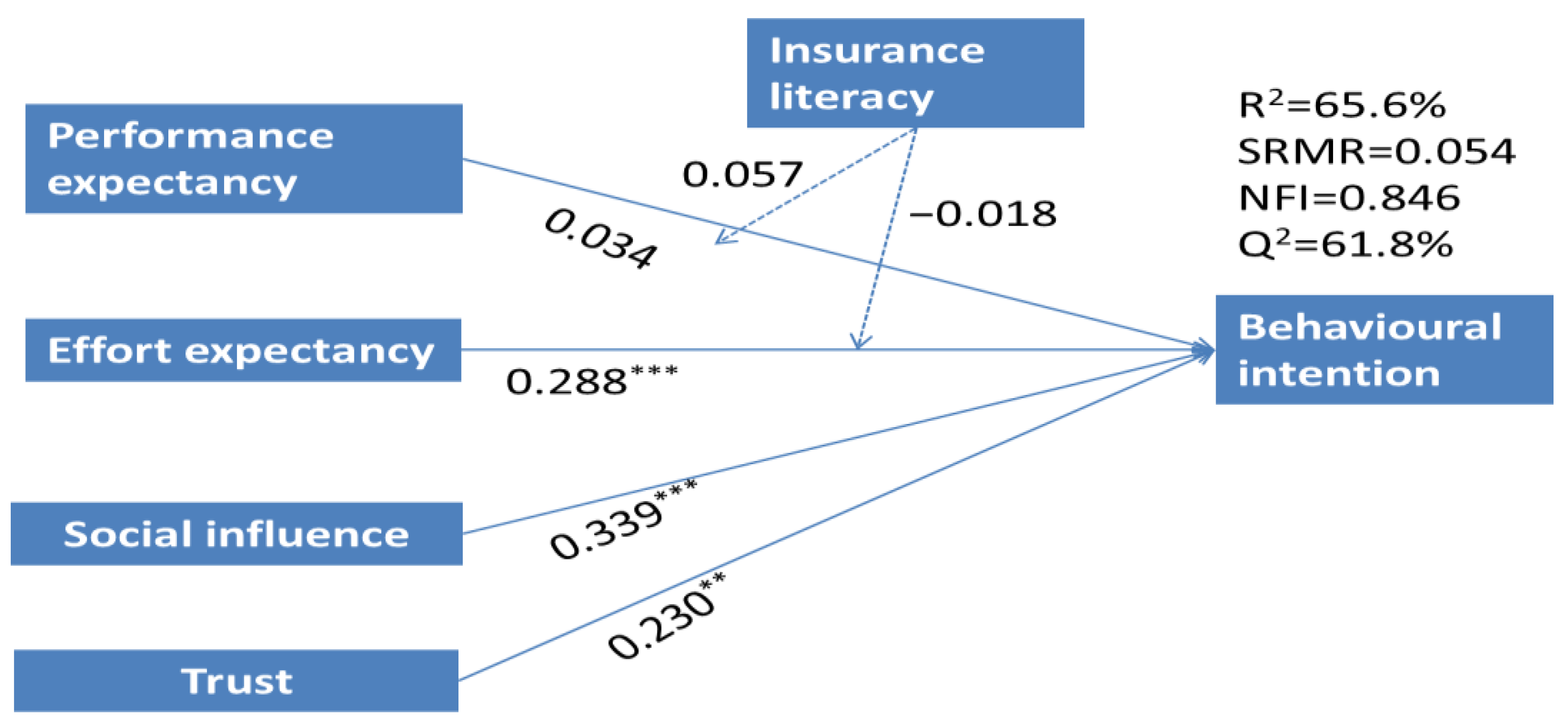

4. Results

5. Discussion and Implications

5.1. Discussion

5.2. Theoretical and Practical Implications

6. Conclusions, Limitations, and Future Research Directions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Barbu, C.M.; Florea, D.L.; Dabija, D.-C.; Barbu, M.C.R. Customer Experience in Fintech. J. Theor. Appl. Electron. Commer. Res. 2021, 16, 1415–1433.

- Tello-Gamarra, J.; Campos-Teixeira, D.; Longaray, A.A.; Reis, J.; Hernani-Merino, M. Fintechs and Institutions: A Systematic Literature Review and Future Research Agenda. J. Theor. Appl. Electron. Commer. Res. 2022, 17, 722–750.

- Stoeckli, E.; Dremel, C.; Uebernickel, F. Exploring characteristics and transformational capabilities of InsurTech innovations to understand insurance value creation in a digital world. Electron. Mark. 2018, 28, 287–305.

- Yan, T.C.; Schulte, P.; Chuen, D.L.K. InsurTech and FinTech: Banking and Insurance Enablement. In Handbook of Blockchain, Digital Finance, and Inclusion; Academic Press: Cambridge, MA, USA, 2018; Volume 1, pp. 249–281.

- Bohnert, A.; Fritzsche, A.; Gregor, S. Digital agendas in the insurance industry: The importance of comprehensive approaches. Geneva Pap. Risk Insur.-Issues Pract. 2019, 44, 1–19.

- Sosa, I.; Montes, Ó. Understanding the InsurTech dynamics in the transformation of the insurance sector. Risk Manag. Insur. Rev. 2022, 25, 35–68.

- Lanfranchi, D.; Grassi, L. Examining insurance companies’ use of technology for innovation. Geneva Pap. Risk Insur.-Issues Pract. 2022, 47, 520–537.

- Yıldız, E.; Güngör Şen, C.; Işık, E.E. A Hyper-Personalized Product Recommendation System Focused on Customer Segmentation: An Application in the Fashion Retail Industry. J. Theor. Appl. Electron. Commer. Res. 2023, 18, 571–596.

- Rodríguez-Cardona, D.; Werth, O.; Schönborn, S.; Breitner, M.H. A mixed methods analysis of the adoption and diffusion of Chatbot Technology in the German insurance sector. In Proceedings of the Twenty-Fifth Americas Conference on Information Systems, Cancun, Mexico, 15–17 August 2019.

- Joshi, H. Perception and Adoption of Customer Service Chatbots among Millennials: An Empirical Validation in the Indian Context. In Proceedings of the 17th International Conference on Web Information Systems and Technologies–WEBIST 2021, online, 26–28 October 2021; pp. 197–208.

- Xu, Y.; Zhang, J.; Deng, G. Enhancing customer satisfaction with chatbots: The influence of communication styles and consumer attachment anxiety. Front. Psychol. 2022, 13, 4266.

- Nirala, K.K.; Singh, N.K.; Purani, V.S. A survey on providing customer and public administration based services using AI: Chatbot. Multimed. Tools Appl. 2022, 81, 22215–22246.

- Fotheringham, D.; Wiles, M.A. The effect of implementing chatbot customer service on stock returns: An event study analysis. J. Acad. Mark. Sci. 2022, 51, 802–822.

- DeAndrade, I.M.; Tumelero, C. Increasing customer service efficiency through artificial intelligence chatbot. Rev. Gestão 2022, 29, 238–251.

- Riikkinen, M.; Saarijärvi, H.; Sarlin, P.; Lähteenmäki, I. Using artificial intelligence to create value in insurance. Int. J. Bank Mark. 2018, 36, 1145–1168.

- Warwick, K.; Shah, H. Can machines think? A report on Turing test experiments at the Royal Society. J. Exp. Theor. Artif. Intell. 2016, 28, 989–1007.

- de Sá Siqueira, M.A.; Müller, B.C.N.; Bosse, T. When Do We Accept Mistakes from Chatbots? The Impact of Human-Like Communication on User Experience in Chatbots That Make Mistakes. Int. J. Hum.–Comput. Interact. 2023.

- Vassilakopoulou, P.; Haug, A.; Salvesen, L.M.; Pappas, I.O. Developing human/AI interactions for chat-based customer services: Lessons learned from the Norwegian government. Eur. J. Inf. Syst. 2023, 32, 10–22.

- PromTep, S.P.; Arcand, M.; Rajaobelina, L.; Ricard, L. From What Is Promised to What Is Experienced with Intelligent Bots. In Advances in Information and Communication, Proceedings of the 2021 Future of Information and Communication Conference (FICC), Virtual, 29–30 April 2021; Arai, K., Ed.; Springer International Publishing: Cham, Switzerland, 2021; Volume 1, pp. 560–565.

- Venkatesh, V.; Morris, M.G.; Davis, G.B.; Davis, F.D. User acceptance of information technology: Toward a unified view. MIS Q. 2003, 27, 425–478.

- Andrés-Sánchez, J.; González-Vila Puchades, L.; Arias-Oliva, M. Factors influencing policyholders’ acceptance of life settlements: A technology acceptance model. Geneva Pap. Risk Insur.-Issues Pract. 2021.

- Mostafa, R.B.; Kasamani, T. Antecedents and consequences of chatbot initial trust. Eur. J. Mark. 2022, 56, 1748–1771.

- Zarifis, A.; Cheng, X. A model of trust in Fintech and trust in Insurtech: How Artificial Intelligence and the context influence it. J. Behav. Exp. Financ. 2022, 36, 100739.

- Venkatesh, V.; Thong, J.Y.L.; Xu, X. Consumer acceptance and use of information technology: Extending the unified theory of acceptance and use of technology. MIS Q. 2012, 36, 157–178.

- Davis, F.D. Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Q. 1989, 13, 319–340.

- Venkatesh, V.; Bala, H. Technology acceptance model 3 and a research agenda on interventions. Decis. Sci. 2008, 39, 273–315.

- Ajzen, I. The theory of planned behavior. Organ. Behav. Hum. Decis. Proces. 1991, 50, 179–211.

- Koetter, F.; Blohm, M.; Drawehn, J.; Kochanowski, M.; Goetzer, J.; Graziotin, D.; Wagner, S. Conversational agents for insurance companies: From theory to practice. In Agents and Artificial Intelligence: 11th International Conference, ICAART 2019, Prague, Czech Republic, 19–21 February 2019; Van den Herik, J., Rocha, A.P., Steels, L., Eds.; Revised Selected Papers 11; Springer International Publishing: Cham, Switzerland, 2019; pp. 338–362.

- Guiso, L. Trust and insurance. Geneva Pap. Risk Insur.-Issues Pract. 2021, 46, 509–512.

- Alalwan, A.A.; Dwivedi, Y.K.; Rana, N.P.; Williams, M.D. Consumer adoption of mobile banking in Jordan: Examining the role of usefulness, ease of use, perceived risk and self-efficacy. J. Enterp. Inf. Manag. 2017, 30, 522–550.

- Zhu, Y.; Zhang, R.; Zou, Y.; Jin, D. Investigating customers’ responses to artificial intelligence chatbots in online travel agencies: The moderating role of product familiarity. J. Hosp. Tour. Technol. 2023, 14, 208–224.

- Standaert, W.; Muylle, S. Framework for open insurance strategy: Insights from a European study. Geneva Pap. Risk Insur.-Issues Pract. 2022, 47, 643–668.

- Gené-Albesa, J. Interaction channel choice in a multichannel environment, an empirical study. Int. J. Bank Mark. 2007, 25, 490–506.

- LMI Group. The Psychology of Claims. 2022. Available online: https://lmigroup.io/the-psychology-of-claims/ (accessed on 12 December 2022).

- Albayati, H.; Kim, S.K.; Rho, J.J. Accepting financial transactions using blockchain technology and cryptocurrency: A customer perspective approach. Technol. Soc. 2020, 62, 101320.

- Nuryyev, G.; Wang, Y.-P.; Achyldurdyyeva, J.; Jaw, B.-S.; Yeh, Y.-S.; Lin, H.-T.; Wu, L.-F. Blockchain Technology Adoption Behavior and Sustainability of the Business in Tourism and Hospitality SMEs: An Empirical Study. Sustainability 2020, 12, 1256.

- Sheel, A.; Nath, V. Blockchain technology adoption in the supply chain (UTAUT2 with risk)–evidence from Indian supply chains. Int. J. Appl. Manag. Sci. 2020, 12, 324–346.

- Palos-Sánchez, P.; Saura, J.R.; Ayestaran, R. An Exploratory Approach to the Adoption Process of Bitcoin by Business Executives. Mathematics 2021, 9, 355.

- Bashir, I.; Madhavaiah, C. Consumer attitude and behavioral intention toward Internet banking adoption in India. J. Indian Bus. Res. 2015, 7, 67–102.

- Farah, M.F.; Hasni, M.J.S.; Abbas, A.K. Mobile-banking adoption: Empirical evidence from the banking sector in Pakistan. Int. J. Bank Mark. 2018, 36, 1386–1413.

- Sánchez-Torres, J.A.; Canada, F.-J.A.; Sandoval, A.V.; Alzate, J.-A.S. E-banking in Colombia: Factors favoring its acceptance, online trust and government support. Int. J. Bank Mark. 2018, 36, 170–183.

- Warsame, M.H.; Ireri, E.M. Moderation effect on mobile microfinance services in Kenya: An extended UTAUT model. J. Behav. Exp. Financ. 2018, 18, 67–75.

- Hussain, M.; Mollik, A.T.; Johns, R.; Rahman, M.S. M-payment adoption for bottom of pyramid segment: An empirical investigation. Int. J. Bank Mark. 2019, 37, 362–381.

- Huang, W.S.; Chang, C.T.; Sia, W.Y. An empirical study on the consumers’ willingness to insure online. Pol. J. Manag. Stud. 2019, 20, 202–212.

- Eeuwen, M.V. Mobile Conversational Commerce: Messenger Chatbots as the Next Interface between Businesses and Consumers. Master’s Thesis, University of Twente, Enschede, The Netherlands, 2017.

- Kuberkar, S.; Singhal, T.K. Factors influencing adoption intention of AI powered chatbot for public transport services within a smart city. Int. J. Emerg. Technol. 2020, 11, 948–958.

- Brachten, F.; Kissmer, T.; Stieglitz, S. The acceptance of chatbots in an enterprise context-A survey study. Int. J. Inf. Manag. 2021, 60, 102375.

- Gansser, O.A.; Reich, C.S. A new acceptance model for artificial intelligence with extensions to UTAUT2: An empirical study in three segments of application. Technol. Soc. 2021, 65, 101535.

- Melián-González, S.; Gutiérrez-Taño, D.; Bulchand-Gidumal, J. Predicting the intentions to use chatbots for travel and tourism. Curr. Issues Tour. 2021, 24, 192–210.

- Balakrishnan, J.; Abed, S.S.; Jones, P. The role of meta-UTAUT factors, perceived anthropomorphism, perceived intelligence, and social self-efficacy in chatbot-based services? Technol. Forecast. Soc. Chang. 2022, 180, 121692.

- Lee, S.; Oh, J.; Moon, W.-K. Adopting Voice Assistants in Online Shopping: Examining the Role of Social Presence, Performance Risk, and Machine Heuristic. Int. J. Hum.–Comput. Interact. 2022.

- Pawlik, V.P. Design Matters! How Visual Gendered Anthropomorphic Design Cues Moderate the Determinants of the Behavioral Intention Toward Using Chatbots. In Chatbot Research and Design, Proceedings of the 5th International Workshop, CONVERSATIONS 2021, Virtual Event, 23–24 November 2021; Følstad, A., Araujo, T., Papadopoulos, S., Law, E.L.-C., Luger, E., Goodwin, M., Brandtzaeg, P.B., Eds.; Revised Selected Papers; Springer: Cham, Switzerland, 2022.

- Silva, F.A.; Shojaei, A.S.; Barbosa, B. Chatbot-Based Services: A Study on Customers’ Reuse Intention. J. Theor. Appl. Electron. Commer. Res. 2023, 18, 457–474.

- Xie, C.; Wang, Y.; Cheng, Y. Does Artificial Intelligence Satisfy You? A Meta-Analysis of User Gratification and User Satisfaction with AI-Powered Chatbots. Int. J. Hum.–Comput. Interact. 2022.

- Rajaobelina, L.; PromTep, S.P.; Arcand, M.; Ricard, L. Creepiness: Its antecedents and impact on loyalty when interacting with a chatbot. Psychol. Mark. 2021, 38, 2339–2356.

- Xing, X.; Song, M.; Duan, Y.; Mou, J. Effects of different service failure types and recovery strategies on the consumer response mechanism of chatbots. Technol. Soc. 2022, 70, 102049.

- Kasilingam, D.L. Understanding the attitude and intention to use smartphone chatbots for shopping. Technol. Soc. 2020, 62, 101280.

- Van Pinxteren, M.M.; Pluymaekers, M.; Lemmink, J.G. Human-like communication in conversational agents: A literature review research agenda. J. Serv. Manag. 2020, 31, 203–225.

- Makanyeza, C.; Mutambayashata, S. Consumers’ acceptance and use of plastic money in Harare, Zimbabwe: Application of the unified theory of acceptance and use of technology 2. Int. J. Bank Mark. 2018, 36, 379–392.

- Fanea-Ivanovici, M.; Baber, H. The role of entrepreneurial intentions, perceived risk and perceived trust in crowdfunding intentions. Eng. Econ. 2021, 32, 433–445.

- Balasubramanian, R.; Libarikian, A.; McElhaney, D. Insurance 2030-the Impact of AI on the Future of Insurance. McKinsey & Company. 2018. Available online: https://www.mckinsey.com/industries/financial-services/our-insights/insurance-2030-the-impact-of-ai-on-the-future-of-insurance#/ (accessed on 12 February 2023).

- Kovacs, O. The dark corners of industry 4.0–Grounding economic governance 2.0. Technol. Soc. 2018, 55, 140–145.

- Stahl, B.C. (Ed.) Perspectives in Artificial Intelligence. In Artificial Intelligence for a Better Future: An Ecosystem Perspective on the Ethics of AI and Emerging Digital Technologies; Springer Nature: Berlin/Heidelberg, Germany, 2021; pp. 7–17.

- Tomić, N.; Kalinić, Z.; Todorović, V. Using the UTAUT model to analyze user intention to accept electronic payment systems in Serbia. Port. Econ. J. 2023, 22, 251–270.

- Glikson, E.; Woolley, A.W. Human trust in artificial intelligence: Review of empirical research. Acad. Manag. Ann. 2020, 14, 627–660.

- Baabdullah, A.M.; Alalwan, A.A.; Algharabat, R.S.; Metri, B.; Rana, N.P. Virtual agents and flow experience: An empirical examination of AI-powered chatbots. Technol. Forecast. Soc. Chang. 2022, 181, 121772.

- De Cicco, R.; Iacobucci, S.; Aquino, A.; Romana Alparone, F.; Palumbo, R. Understanding Users’ Acceptance of Chatbots: An Extended TAM Approach. In Chatbot Research and Design, Proceedings of the 5th International Workshop, CONVERSATIONS 2021, Virtual Event, 23–24 November 2021; Følstad, A., Araujo, T., Papadopoulos, S., Law, E.L.-C., Luger, E., Goodwin, M., Brandtzaeg, P.B., Eds.; Revised Selected Papers; Springer: Cham, Switzerland, 2022.

- Arias-Oliva, M.; de Andrés-Sánchez, J.; Pelegrín-Borondo, J. Fuzzy set qualitative comparative analysis of factors influencing the use of cryptocurrencies in Spanish households. Mathematics 2021, 9, 324.

- Akbar, Y.R.; Zainal, H.; Basriani, A.; Zainal, R. Moderate Effect of Financial Literacy during the COVID-19 Pandemic in Technology Acceptance Model on the Adoption of Online Banking Services. Bp. Int. Res. Crit. Inst. J. 2021, 4, 11904–11915.

- Hsieh, P.J.; Lai, H.M. Exploring people’s intentions to use the health passbook in self-management: An extension of the technology acceptance and health behavior theoretical perspectives in health literacy. Technol. Forecast. Soc. Chang. 2020, 161, 120328.

- Stolper, O.A.; Walter, A. Financial literacy, financial advice, and financial behavior. J. Bus. Econ. 2017, 87, 581–643.

- Sanjeewa, W.S.; Hongbing, O. Consumers’ insurance literacy: Literature review, conceptual definition, and approach for a measurement instrument. Eur. J. Bus. Manag. 2019, 11, 49–65.

- Ullah, S.; Kiani, U.S.; Raza, B.; Mustafa, A. Consumers’ Intention to Adopt m-payment/m-banking: The Role of Their Financial Skills and Digital Literacy. Front. Psychol. 2022, 13, 873708.

- Grable, J.E.; Rabbani, A. The Moderating Effect of Financial Knowledge on Financial Risk Tolerance. J. Risk Financ. Manag. 2023, 16, 137.

- Nomi, M.; Sabbir, M.M. Investigating the factors of consumers’ purchase intention towards life insurance in Bangladesh: An application of the theory of reasoned action. Asian Acad. Manag. J. 2020, 25, 135–165.

- Weedige, S.S.; Ouyang, H.; Gao, Y.; Liu, Y. Decision making in personal insurance: Impact of insurance literacy. Sustainability 2019, 11, 6795.

- Onay, C.; Aydin, G.; Kohen, S. Overcoming resistance barriers in mobile banking through financial literacy. Int. J. Mob. Commun. 2023, 21, 341–364.

- Bisquerra Alzina, R.; Pérez Escoda, N. Can Likert scales increase in sensitivity? REIRE 2015, 8, 129–147.

- Pelegrin-Borondo, J.; Reinares-Lara, E.; Olarte-Pascual, C. Assessing the acceptance of technological implants (the cyborg): Evidences and challenges. Comput. Hum. Behav. 2017, 70, 104–112.

- Andrés-Sanchez, J.; de Torres-Burgos, F.; Arias-Oliva, M. Why disruptive sport competition technologies are used by amateur athletes? An analysis of Nike Vaporfly shoes. J. Sport Health Res. 2023, 15, 197–214.

- Alesanco-Llorente, M.; Reinares-Lara, E.; Pelegrín-Borondo, J.; Olarte-Pascual, C. Mobile-assisted showrooming behavior and the (r) evolution of retail: The moderating effect of gender on the adoption of mobile augmented reality. Technol. Forecast. Soc. Chang. 2023, 191, 122514.

- Reinares-Lara, E.; Pelegrín-Borondo, J.; Olarte-Pascual, C.; Oruezabala, G. The role of cultural identity in acceptance of wine innovations in wine regions. Br. Food J. 2023, 125, 869–885.

- Morgan, R.M.; Hunt, S.D. The commitment-trust theory of relationship marketing. J. Mark. 1994, 58, 20–38.

- Hastings, J.S.; Madrian, B.C.; Skimmyhorn, B. Financial literacy, financial education, and economic outcomes. Annu. Rev. Econ. 2013, 5, 347–375.

- Hair, J.F.; Risher, J.J.; Sarstedt, M.; Ringle, C.M. When to use and how to report the results of PLS-SEM. Eur. Bus. Rev. 2019, 31, 2–24.

- Kock, N.; Hadaya, P. Minimum sample size estimation in PLS-SEM: The inverse square root and gamma-exponential methods. Inf. Syst. J. 2018, 28, 227–261.

- Dijkstra, T.K.; Henseler, J. Consistent partial least squares path modeling. MIS Q. 2015, 39, 297–316. Available online: https://www.jstor.org/stable/26628355 (accessed on 20 May 2023).

- Fornell, C.; Larcker, D.F. Evaluating structural equation models with unobservable variables and measurement error. J. Mark. Res. 1981, 18, 39–50.

- Henseler, J.; Ringle, C.M.; Sarstedt, M. A new criterion for assessing discriminant validity in variance-based structural equation modeling. J. Acad. Mark. Sci. 2015, 43, 115–135.

- Liengaard, B.D.; Sharma, P.N.; Hult, G.T.M.; Jensen, M.B.; Sarstedt, M.; Hair, J.F.; Ringle, C.M. Prediction: Coveted, yet forsaken? Introducing a cross-validated predictive ability test in partial least squares path modeling. Decis. Sci. 2021, 52, 362–392.

- Sarstedt, M.; Ringle, C.M.; Smith, D.; Reams, R.; Hair, J.F., Jr. Partial least squares structural equation modeling (PLS-SEM): A useful tool for family business researchers. J. Fam. Bus. Strategy 2014, 5, 105–115.

- Mahfuz, M.A.; Khanam, L.; Mutharasu, S.A. The influence of website quality on m-banking services adoption in Bangladesh: Applying the UTAUT2 model using PLS. In Proceedings of the 2016 International Conference on Electrical, Electronics, and Optimization Techniques, Chennai, India, 3–5 March 2016; pp. 2329–2335.

- Moon, Y.; Hwang, J. Crowdfunding as an alternative means for funding sustainable appropriate technology: Acceptance determinants of backers. Sustainability 2018, 10, 1456.

- Milanović, N.; Milosavljević, M.; Benković, S.; Starčević, D.; Spasenić, Ž. An Acceptance Approach for Novel Technologies in Car Insurance. Sustainability 2020, 12, 10331.

- Rimban, E. Challenges and Limitations of ChatGPT and Other Large Language Models Challenges. Available online: https://ssrn.com/abstract=4454441 (accessed on 20 May 2023).

- Andres-Sanchez, J.; Almahameed, A.A.; Arias-Oliva, M.; Pelegrin-Borondo, J. Correlational and Configurational Analysis of Factors Influencing Potential Patients’ Attitudes toward Surgical Robots: A Study in the Jordan University Community. Mathematics 2022, 10, 4319.

- Andrés-Sánchez, J.; Gené-Albesa, J. Assessing Attitude and Behavioral Intention toward Chatbots in an Insurance Setting: A Mixed Method Approach. Int. J. Hum.–Comput. Interact. 2023.

- Rampton, J. The Advantages and Disadvantages of ChatGPT. 2023. Available online: https://www.entrepreneur.com/growth-strategies/the-advantages-and-disadvantages-of-chatgpt/450268 (accessed on 6 June 2023).

- Deng, J.; Lin, Y. The Benefits and Challenges of ChatGPT: An Overview. Front. Comput. Intell. Syst. 2022, 2, 81–83.

| Variable | Category | Share |
|---|---|---|
| Gender | Male | 52.03% |
| Gender | Women | 45.53% |
| Gender | Other/NA | 2.44% |
| Age | ≤40 years | 14.37% |
| Age | >40 years and <55 years | 53.89% |
| Age | >55 years | 29.94% |
| Age | NA | 1.80% |
| Academic degree | At least graduate | 88.62% |
| Academic degree | Undergraduate | 11.38% |
| Income | ≥EUR 3000 | 34.55% |
| Income | ≥EUR 1750 and <EUR 3000 | 35.77% |
| Income | <EUR 1750 | 29.67% |
| Number of policies | >4 contracts | 52.03% |
| Number of policies | ≥2 and <4 | 47.97% |

| Items | Mean | Median | Std. Deviation | Factor Loading | Cronbach’s α | ρA | CR | AVE |
|---|---|---|---|---|---|---|---|---|
| BI | | | | | 0.891 | 0.895 | 0.932 | 0.822 |
| BI1 | 1.27 | 0 | 1.87 | 0.921 | | | | |
| BI2 | 2.24 | 1 | 2.70 | 0.861 | | | | |
| BI3 | 1.38 | 0 | 2.06 | 0.935 | | | | |
| PE | | | | | 0.918 | 0.921 | 0.948 | 0.859 |
| PE1 | 2.71 | 2 | 2.66 | 0.929 | | | | |
| PE2 | 2.57 | 2 | 2.58 | 0.925 | | | | |
| PE3 | 2.46 | 2 | 2.61 | 0.926 | | | | |
| EE | | | | | 0.928 | 0.932 | 0.949 | 0.823 |
| EE1 | 2.88 | 2.5 | 2.82 | 0.869 | | | | |
| EE2 | 2.69 | 2 | 2.61 | 0.942 | | | | |
| EE3 | 2.16 | 2 | 2.27 | 0.906 | | | | |
| EE4 | 2.64 | 2 | 2.64 | 0.910 | | | | |
| SI | | | | | 0.927 | 0.929 | 0.953 | 0.872 |
| SI1 | 1.75 | 1 | 1.94 | 0.922 | | | | |
| SI2 | 1.61 | 1 | 2.05 | 0.953 | | | | |
| SI3 | 2.03 | 1 | 2.15 | 0.927 | | | | |
| TRUST | | | | | 0.83 | 0.865 | 0.897 | 0.745 |
| TRUST1 | 2.07 | 1 | 2.50 | 0.912 | | | | |
| TRUST2 | 3.46 | 3 | 3.04 | 0.836 | | | | |
| TRUST3 | 2.08 | 2 | 2.18 | 0.839 | | | | |
| IL | | | | | 0.969 | 0.988 | 0.985 | 0.971 |
| IL1 | 5.3 | 5 | 2.78 | 0.988 | | | | |
| IL2 | 5.05 | 5 | 2.88 | 0.987 | | | | |
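The link between the item loadings and the construct-level indices can be illustrated with a minimal Python sketch. It assumes the usual textbook definitions, with AVE as the mean squared standardized loading and composite reliability (CR) as the squared sum of loadings over that quantity plus the summed error variances. The function names are illustrative and are not taken from the authors' software. For BI, PE, EE, SI, and TRUST, the results agree with the reported AVE and CR values up to rounding of the published loadings.

```python
# Standardized factor loadings copied from the measurement table above.
LOADINGS = {
    "BI": [0.921, 0.861, 0.935],
    "PE": [0.929, 0.925, 0.926],
    "EE": [0.869, 0.942, 0.906, 0.910],
    "SI": [0.922, 0.953, 0.927],
    "TRUST": [0.912, 0.836, 0.839],
}


def average_variance_extracted(loadings):
    """AVE: mean of the squared standardized loadings."""
    return sum(l * l for l in loadings) / len(loadings)


def composite_reliability(loadings):
    """CR: (sum of loadings)^2 / ((sum of loadings)^2 + sum of error variances)."""
    total = sum(loadings)
    error_variance = sum(1.0 - l * l for l in loadings)
    return total * total / (total * total + error_variance)


for construct, loadings in LOADINGS.items():
    ave = average_variance_extracted(loadings)
    cr = composite_reliability(loadings)
    print(f"{construct}: AVE={ave:.3f}, CR={cr:.3f}")
```

Because the published loadings are rounded to three decimals, small third-decimal differences from the reported indices are expected.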

| | BI | PE | EE | SI | TRUST | IL |
|---|---|---|---|---|---|---|
| BI | 0.906 | 0.769 | 0.791 | 0.782 | 0.836 | 0.168 |
| PE | 0.724 | 0.909 | 0.838 | 0.685 | 0.948 | 0.194 |
| EE | 0.723 | 0.799 | 0.907 | 0.75 | 0.888 | 0.226 |
| SI | 0.713 | 0.701 | 0.641 | 0.934 | 0.786 | 0.054 |
| TRUST | 0.734 | 0.841 | 0.794 | 0.69 | 0.863 | 0.268 |
| IL | 0.158 | 0.183 | 0.215 | 0.045 | 0.246 | 0.985 |
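A Fornell–Larcker check [88] on this matrix can be sketched in a few lines of Python. The sketch assumes that the diagonal holds the square root of each construct's AVE and that the entries below the diagonal are inter-construct correlations. That reading is an assumption made for illustration, since the table itself carries no legend. The helper name `fornell_larcker_violations` is hypothetical.

```python
CONSTRUCTS = ["BI", "PE", "EE", "SI", "TRUST", "IL"]

# Lower triangle of the matrix above, diagonal included.
# Assumption: diagonal = sqrt(AVE), off-diagonal = correlations.
LOWER = [
    [0.906],
    [0.724, 0.909],
    [0.723, 0.799, 0.907],
    [0.713, 0.701, 0.641, 0.934],
    [0.734, 0.841, 0.794, 0.690, 0.863],
    [0.158, 0.183, 0.215, 0.045, 0.246, 0.985],
]


def fornell_larcker_violations(names, lower):
    """Return pairs whose correlation reaches either construct's diagonal value."""
    violations = []
    for i, row in enumerate(lower):
        for j in range(i):
            correlation = row[j]
            if correlation >= lower[i][i] or correlation >= lower[j][j]:
                violations.append((names[i], names[j], correlation))
    return violations


violations = fornell_larcker_violations(CONSTRUCTS, LOWER)
print(violations or "No Fornell-Larcker violations in the lower triangle")
```

Under this reading, every correlation stays below the diagonal values of both constructs involved, so the list of violations is empty.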

| Effect | β | Student’s t (p value) |
|---|---|---|
| Direct effects | | |
| PE→BI | −0.036 | 0.223 (0.823) |
| EE→BI | 0.401 | 2.556 (0.011) |
| SI→BI | 0.222 | 1.982 (0.047) |
| TRUST→BI | 0.267 | 2.749 (0.006) |
| Moderating effects | | |
| PE × IL→BI | 0.042 | 0.457 (0.647) |
| EE × IL→BI | −0.001 | 0.007 (0.995) |
| PE × gender→BI | −0.065 | 0.391 (0.696) |
| EE × gender→BI | −0.022 | 0.126 (0.901) |
| SI × gender→BI | 0.102 | 0.629 (0.529) |
| PE × age→BI | 0.159 | 0.979 (0.328) |
| EE × age→BI | −0.187 | 1.121 (0.262) |
| SI × age→BI | 0.074 | 0.498 (0.618) |
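The values in parentheses behave like two-tailed p values for the Student's t statistics. A minimal Python sketch of that conversion follows, assuming a standard normal approximation. The authors' software may instead use a t distribution or bootstrap percentiles, so the exact procedure here is an assumption, and `two_tailed_p` is an illustrative name.

```python
import math


def two_tailed_p(t_stat):
    """Two-tailed p value of a test statistic under the standard normal."""
    return math.erfc(abs(t_stat) / math.sqrt(2.0))


# Student's t statistics of the direct effects, copied from the table above.
DIRECT_EFFECTS = {
    "PE->BI": 0.223,
    "EE->BI": 2.556,
    "SI->BI": 1.982,
    "TRUST->BI": 2.749,
}

for effect, t_stat in DIRECT_EFFECTS.items():
    print(f"{effect}: t={t_stat:.3f}, p={two_tailed_p(t_stat):.3f}")
```

With this approximation, the direct-effect p values come out within about 0.001 of those reported in the table, which suggests a large-sample reference distribution behind the published figures.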

| Effect | β | Student’s t | Decision on the Hypothesis |
|---|---|---|---|
| PE→BI | 0.034 | 0.407 | H1: Reject |
| EE→BI | 0.288 | 3.586 *** | H2: Accept |
| SI→BI | 0.339 | 4.764 *** | H3: Accept |
| TRUST→BI | 0.23 | 2.441 ** | H4: Accept |
| PE × IL→BI | 0.057 | 0.635 | H5a: Reject |
| EE × IL→BI | −0.018 | 0.193 | H5b: Reject |

| | Q² | RMSE | MAE | ALD (model vs. average) | p value (model vs. average) | ALD (model vs. linear) | p value (model vs. linear) |
|---|---|---|---|---|---|---|---|
| BI | 0.618 | 0.627 | 0.456 | −2.477 | <0.01 | 0.102 | 0.463 |
| Overall model | | | | −2.477 | <0.01 | 0.102 | 0.463 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

de Andrés-Sánchez, J.; Gené-Albesa, J. Explaining Policyholders’ Chatbot Acceptance with an Unified Technology Acceptance and Use of Technology-Based Model. J. Theor. Appl. Electron. Commer. Res. 2023, 18, 1217-1237. https://doi.org/10.3390/jtaer18030062
