Dynamic Emotion Recognition and Expression Imitation in Neurotypical Adults and Their Associations with Autistic Traits

Abstract

1. Introduction

2. Materials and Methods

2.1. Participants

2.2. The Autism-Spectrum Quotient Assessment

2.3. The Prosopagnosia-Index 20 Items (PI20)

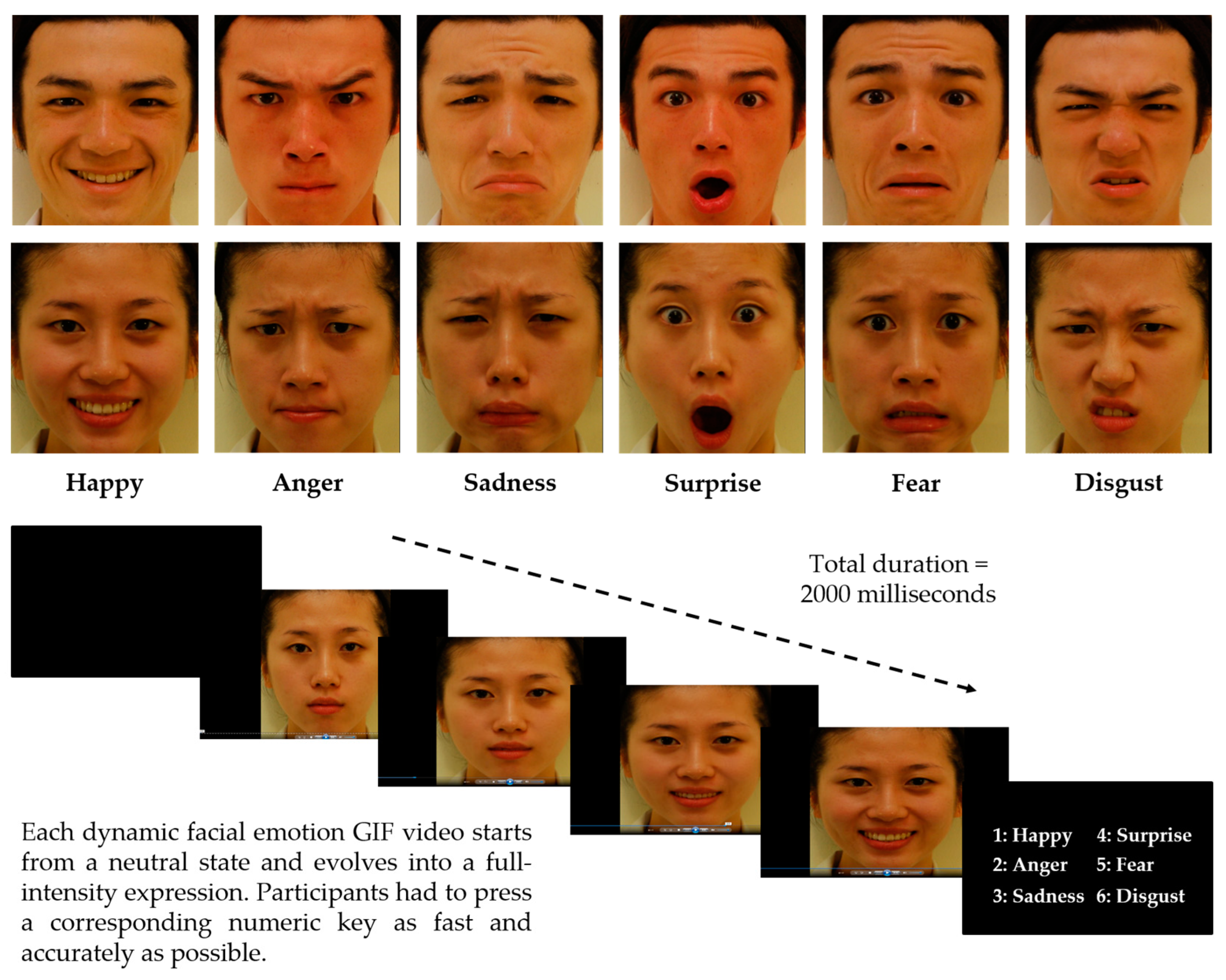

2.4. Dynamic Facial Emotion Recognition Task

2.4.1. Apparatus and Stimuli

2.4.2. Procedures

2.5. Expression Imitation Task

2.5.1. Apparatus and Stimuli

2.5.2. Procedures

3. Results

3.1. Chinese AQ and PI20

3.2. Dynamic Facial Emotion Recognition

3.3. Expression Imitation

3.4. Correlations Among AQ, PI20, and Task Performances

3.4.1. AQ, PI20, and Dynamic Emotion Recognition

3.4.2. AQ, PI20, and Expression Imitation

3.4.3. Emotion Recognition and Expression Imitation

4. Discussion

5. Conclusions, Limitations, and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- American Psychiatric Association. Diagnostic and Statistical Manual of Mental Disorders, 5th ed.; American Psychiatric Association: Arlington, VA, USA, 2013.

- Kanner, L. Autistic disturbances of affective contact. Nerv. Child 1943, 2, 217–250.

- Behrmann, M.; Thomas, C.; Humphreys, K. Seeing it differently: Visual processing in autism. Trends Cogn. Sci. 2006, 10, 258–264.

- Tanaka, J.W.; Sung, A. The “eye avoidance” hypothesis of autism face processing. J. Autism Dev. Disord. 2016, 46, 1538–1552.

- Chien, S.H.-L.; Wang, L.-H.; Chen, C.-C.; Chen, T.-Y.; Chen, H.-S. Autistic children do not exhibit an own-race advantage as compared to typically developing children. Res. Autism Spectr. Disord. 2014, 8, 1544–1551.

- Dawson, G.; Carver, L.; Meltzoff, A.N.; Panagiotides, H.; McPartland, J.; Webb, S.J. Neural correlates of face and object recognition in young children with autism spectrum disorder, developmental delay, and typical development. Child Dev. 2002, 73, 700–717.

- Dawson, G.; Webb, S.J.; McPartland, J. Understanding the nature of face processing impairment in autism: Insights from behavioral and electrophysiological studies. Dev. Neuropsychol. 2005, 27, 403–424.

- Gross, T.F. The perception of four basic emotions in human and nonhuman faces by children with autism and other developmental disabilities. J. Abnorm. Child Psychol. 2004, 32, 469–480.

- Buitelaar, J.K.; Van der Wees, M.; Swaab-Barneveld, H.; Van der Gaag, R.J. Theory of mind and emotion-recognition functioning in autistic spectrum disorders and in psychiatric control and normal children. Dev. Psychopathol. 1999, 11, 39–58.

- Crespi, B.; Badcock, C. Psychosis and autism as diametrical disorders of the social brain. Behav. Brain Sci. 2008, 31, 241–261.

- Frith, U. Autism: Explaining the Enigma, 2nd ed.; Blackwell Publishing: Malden, MA, USA, 2003.

- Loveland, K.A.; Tunali-Kotoski, B.; Pearson, D.A.; Brelsford, K.A.; Ortegon, J.; Chen, R. Imitation and expression of facial affect in autism. Dev. Psychopathol. 1994, 6, 433–444.

- Drimalla, H.; Baskow, I.; Behnia, B.; Roepke, S.; Dziobek, I. Imitation and recognition of facial emotions in autism: A computer vision approach. Mol. Autism 2021, 12, 27.

- Oberman, L.M.; Winkielman, P.; Ramachandran, V.S. Slow echo: Facial EMG evidence for the delay of spontaneous, but not voluntary, emotional mimicry in children with autism spectrum disorders. Dev. Sci. 2009, 12, 510–520.

- McIntosh, D.N.; Reichmann-Decker, A.; Winkielman, P.; Wilbarger, J.L. When the social mirror breaks: Deficits in automatic, but not voluntary, mimicry of emotional facial expressions in autism. Dev. Sci. 2006, 9, 295–302.

- Yoshimura, S.; Sato, W.; Uono, S.; Toichi, M. Impaired overt facial mimicry in response to dynamic facial expressions in high-functioning autism spectrum disorders. J. Autism Dev. Disord. 2015, 45, 1318–1328.

- Weiss, E.M.; Rominger, C.; Hofer, E.; Fink, A.; Papousek, I. Less differentiated facial responses to naturalistic films of another person’s emotional expressions in adolescents and adults with High-Functioning Autism Spectrum Disorder. Prog. Neuro-Psychopharmacol. Biol. Psychiatry 2019, 89, 341–346.

- Morton, J.; Johnson, M.H. CONSPEC and CONLERN: A two-process theory of infant face recognition. Psychol. Rev. 1991, 98, 164–181.

- Chien, S.H.-L. No more top-heavy bias: Infants and adults prefer upright faces but not top-heavy geometric or face-like patterns. J. Vis. 2011, 11, 13.

- Chien, S.H.-L.; Hsu, H.Y. No more top-heavy bias: On early specialization process for face and race in infants. Chin. J. Clin. Psychol. 2012, 54, 95–114.

- Barrera, M.E.; Maurer, D. The perception of facial expressions by the three-month-old. Child Dev. 1981, 52, 203–206.

- Walker-Andrews, A.S. Infants’ perception of expressive behaviors: Differentiation of multimodal information. Psychol. Bull. 1997, 121, 437–456.

- Denham, S.A. Social and Emotional Learning, Early Childhood. In Encyclopedia of Primary Prevention and Health Promotion; Gullotta, T.P., Bloom, M., Kotch, J., Blakely, C., Bond, L., Adams, G., Browne, C., Klein, W., Ramos, J., Eds.; Springer: Boston, MA, USA, 2003; pp. 1009–1018.

- Herba, C.; Phillips, M. Annotation: Development of facial expression recognition from childhood to adolescence: Behavioural and neurological perspectives. J. Child Psychol. Psychiatry 2004, 45, 1185–1198.

- Herba, C.M.; Landau, S.; Russell, T.; Ecker, C.; Phillips, M.L. The development of emotion-processing in children: Effects of age, emotion, and intensity. J. Child Psychol. Psychiatry 2006, 47, 1098–1106.

- Richoz, A.-R.; Lao, J.; Pascalis, O.; Caldara, R. Tracking the recognition of static and dynamic facial expressions of emotion across the life span. J. Vis. 2018, 18, 5.

- Kolb, B.; Wilson, B.; Taylor, L. Developmental changes in the recognition and comprehension of facial expression: Implications for frontal lobe function. Brain Cogn. 1992, 20, 74–84.

- Ekman, P.; Friesen, W.V. Constants across cultures in the face and emotion. J. Pers. Soc. Psychol. 1971, 17, 124–129.

- Izard, C.E. Emotional intelligence or adaptive emotions? Emotion 2001, 1, 249–257.

- Lawrence, K.; Campbell, R.; Skuse, D. Age, gender, and puberty influence the development of facial emotion recognition. Front. Psychol. 2015, 6, 761.

- Chiang, Y.-C.; Chien, S.H.-L.; Lyu, J.-L.; Chang, C.-K. Recognition of dynamic emotional expressions in children and adults and its associations with empathy. Sensors 2024, 24, 4674.

- Thomas, L.A.; De Bellis, M.D.; Graham, R.; LaBar, K.S. Development of emotional facial recognition in late childhood and adolescence. Dev. Sci. 2007, 10, 547–558.

- Riddell, C.; Nikolić, M.; Dusseldorp, E.; Kret, M.E. Age-related changes in emotion recognition across childhood: A meta-analytic review. Psychol. Bull. 2024, 150, 1094–1117.

- Meltzoff, A.N.; Moore, M.K. Imitation of facial and manual gestures by human neonates. Science 1977, 198, 75–78.

- Meltzoff, A.N.; Moore, M.K. Newborn infants imitate adult facial gestures. Child Dev. 1983, 54, 702–709.

- Meltzoff, A.N.; Marshall, P.J. Human infant imitation as a social survival circuit. Curr. Opin. Behav. Sci. 2018, 24, 130–136.

- Field, T.M.; Walden, T.A. Production and discrimination of facial expressions by preschool children. Child Dev. 1982, 53, 1299–1311.

- Grossard, C.; Chaby, L.; Hun, S.; Pellerin, H.; Bourgeois, J.; Dapogny, A.; Ding, H.; Serret, S.; Foulon, P.; Chetouani, M. Children facial expression production: Influence of age, gender, emotion subtype, elicitation condition and culture. Front. Psychol. 2018, 9, 446.

- Dimberg, U. Facial reactions to facial expressions. Psychophysiology 1982, 19, 643–647.

- Blairy, S.; Herrera, P.; Hess, U. Mimicry and the judgment of emotional facial expressions. J. Nonverbal Behav. 1999, 23, 5–41.

- Wallbott, H.G. Recognition of emotion from facial expression via imitation? Some indirect evidence for an old theory. Br. J. Soc. Psychol. 1991, 30, 207–219.

- Oberman, L.M.; Winkielman, P.; Ramachandran, V.S. Face to face: Blocking facial mimicry can selectively impair recognition of emotional expressions. Soc. Neurosci. 2007, 2, 167–178.

- Rychlowska, M.; Cañadas, E.; Wood, A.; Krumhuber, E.G.; Fischer, A.; Niedenthal, P.M. Blocking mimicry makes true and false smiles look the same. PLoS ONE 2014, 9, e90876.

- Wood, A.; Rychlowska, M.; Korb, S.; Niedenthal, P. Fashioning the face: Sensorimotor simulation contributes to facial expression recognition. Trends Cogn. Sci. 2016, 20, 227–240.

- Piven, J.; Palmer, P.; Jacobi, D.; Childress, D.; Arndt, S. Broader autism phenotype: Evidence from a family history study of multiple-incidence autism families. Am. J. Psychiatry 1997, 154, 185–190.

- Poljac, E.; Poljac, E.; Wagemans, J. Reduced accuracy and sensitivity in the perception of emotional facial expressions in individuals with high autism spectrum traits. Autism 2013, 17, 668–680.

- Fujimori, M.; Okanoya, K. An ERP study of autistic traits and emotional recognition in non-clinical adolescence. Psychology 2013, 4, 515–519.

- Kerr-Gaffney, J.; Mason, L.; Jones, E.; Hayward, H.; Ahmad, J.; Harrison, A.; Loth, E.; Murphy, D.; Tchanturia, K. Emotion recognition abilities in adults with anorexia nervosa are associated with autistic traits. J. Clin. Med. 2020, 9, 1057.

- Kowallik, A.E.; Pohl, M.; Schweinberger, S.R. Facial imitation improves emotion recognition in adults with different levels of sub-clinical autistic traits. J. Intell. 2021, 9, 4.

- Faul, F.; Erdfelder, E.; Buchner, A.; Lang, A.-G. Statistical power analyses using G*Power 3.1: Tests for correlation and regression analyses. Behav. Res. Methods 2009, 41, 1149–1160.

- Ethical Principles of Psychologists and Code of Conduct. American Psychological Association. Available online: https://www.apa.org/ethics/code/ (accessed on 10 July 2020).

- Liu, M. Screening adults for Asperger syndrome and high-functioning autism by using the Autism-Spectrum Quotient (AQ) (Mandarin Version). J. Spec. Educ. 2008, 33, 73–92.

- Baron-Cohen, S.; Wheelwright, S.; Skinner, R.; Martin, J.; Clubley, E. The autism-spectrum quotient (AQ): Evidence from Asperger syndrome/high-functioning autism, males and females, scientists and mathematicians. J. Autism Dev. Disord. 2001, 31, 5–17.

- Shah, P.; Gaule, A.; Sowden, S.; Bird, G.; Cook, R. The 20-item prosopagnosia index (PI20): A self-report instrument for identifying developmental prosopagnosia. R. Soc. Open Sci. 2015, 2, 140343.

- Tsantani, M.; Vestner, T.; Cook, R. The Twenty Item Prosopagnosia Index (PI20) provides meaningful evidence of face recognition impairment. R. Soc. Open Sci. 2021, 8, 202062.

- Taiwanese Facial Expression Image Database. Institute of Brain Science, National Yang-Ming University. Brain Mapping Laboratory, Taipei. Available online: https://bmlab.web.nycu.edu.tw/%e8%b3%87%e6%ba%90%e9%80%a3%e7%b5%90/ (accessed on 1 August 2018).

- Chien, S.H.-L.; Tai, C.-L.; Yang, S.-F. The development of the own-race advantage in school-age children: A morphing face paradigm. PLoS ONE 2018, 13, e0195020.

- Chen, C.; Yang, S.; Chien, S. Exploring the own-race face encoding advantage and the other-race face categorization bias in Taiwanese adults: Using a morphing face paradigm. Chin. J. Clin. Psychol. 2016, 58, 39–53.

- Ho, M.W.-R.; Chien, S.H.-L.; Lu, M.-K.; Chen, J.-C.; Aoh, Y.; Chen, C.-M.; Lane, H.-Y.; Tsai, C.-H. Impairments in face discrimination and emotion recognition are related to aging and cognitive dysfunctions in Parkinson’s disease with dementia. Sci. Rep. 2020, 10, 4367.

- Boraston, Z.; Blakemore, S.-J.; Chilvers, R.; Skuse, D. Impaired sadness recognition is linked to social interaction deficit in autism. Neuropsychologia 2007, 45, 1501–1510.

- Stantić, M.; Ichijo, E.; Catmur, C.; Bird, G. Face memory and face perception in autism. Autism 2022, 26, 276–280.

- Sun, W.; Wang, Y.; Wang, J.; Luo, F. Psychometric Properties of the Chinese version of the 20-item Prosopagnosia Index (PI20). In Proceedings of the E3S Web of Conferences, Semarang, Indonesia, 4–5 August 2021; Volume 271, p. 01036.

- Russell, J.A. A circumplex model of affect. J. Pers. Soc. Psychol. 1980, 39, 1161–1178.

- Du, S.; Tao, Y.; Martinez, A.M. Compound facial expressions of emotion. Proc. Natl. Acad. Sci. USA 2014, 111, E1454–E1462.

- Liu, H.; Zhou, Q.; Zhang, C.; Zhu, J.; Liu, T.; Zhang, Z.; Li, Y.-F. MMATrans: Muscle movement aware representation learning for facial expression recognition via transformers. IEEE Trans. Ind. Inform. 2024, 20, 13753–13764.

- Dupré, D.; Andelic, N.; Morrison, G.; McKeown, G. Accuracy of three commercial automatic emotion recognition systems across different individuals and their facial expressions. In Proceedings of the 2018 IEEE International Conference on Pervasive Computing and Communications Workshops (PerCom Workshops), Athens, Greece, 19–23 March 2018; pp. 627–632.

- Noordewier, M.K.; Breugelmans, S.M. On the valence of surprise. Cogn. Emot. 2013, 27, 1326–1334.

- Reisenzein, R.; Horstmann, G.; Schützwohl, A. The cognitive-evolutionary model of surprise: A review of the evidence. Top. Cogn. Sci. 2019, 11, 50–74.

- Foster, M.I.; Keane, M.T. Why some surprises are more surprising than others: Surprise as a metacognitive sense of explanatory difficulty. Cogn. Psychol. 2015, 81, 74–116.

- Lipps, T. Das Wissen von fremden Ichen. Psychologische Untersuchungen 1905, 4, 694.

- Holland, A.C.; O’Connell, G.; Dziobek, I. Facial mimicry, empathy, and emotion recognition: A meta-analysis of correlations. Cogn. Emot. 2021, 35, 150–168.

- Ekman, P.; Friesen, W.V. Facial Action Coding System: A Technique for the Measurement of Facial Movement; Consulting Psychologists Press: Palo Alto, CA, USA, 1978.

- Russell, J.A. Is there universal recognition of emotion from facial expression? A review of the cross-cultural studies. Psychol. Bull. 1994, 115, 102.

- Pochedly, J.T.; Widen, S.C.; Russell, J.A. What emotion does the “facial expression of disgust” express? Emotion 2012, 12, 1315.

- Bourke, C.; Douglas, K.; Porter, R. Processing of facial emotion expression in major depression: A review. Aust. N. Z. J. Psychiatry 2010, 44, 681–696.

- Kulke, L.; Feyerabend, D.; Schacht, A. A comparison of the Affectiva iMotions Facial Expression Analysis Software with EMG for identifying facial expressions of emotion. Front. Psychol. 2020, 11, 329.

| | Females (n = 16) | Males (n = 16) | All (n = 32) |
|---|---|---|---|
| Age (years) | 22.6 (3.7) | 23.2 (2.7) | 22.9 (3.2) |
| AQ score | 18.6 (6.3) | 20.4 (4.6) | 19.5 (5.5) |
| Social skill | 3.3 (3.0) | 4.3 (2.6) | 3.8 (2.8) |
| Attention switching | 5.3 (1.8) | 5.1 (2.0) | 5.2 (1.9) |
| Attention to detail | 5.3 (1.5) | 4.6 (2.5) | 4.9 (2.1) |
| Communication | 2.3 (2.0) | 3.3 (1.7) | 2.8 (1.9) |
| Imagination | 2.6 (1.5) | 3.3 (1.5) | 2.9 (1.5) |
| PI20 score | 49.1 (12.7) | 48.9 (16.3) | 49.0 (14.3) |

Column groups: Autism-Spectrum Quotient (AQ), from AQ Score to Imagination; Dynamic Facial Emotion Recognition Task, from HA_ACC to Emotion_ACC; PI20.

| | AQ Score ¹ | Social Skill | Attention Switching | Attention to Detail | Communication | Imagination | HA_ACC ² | AN_ACC ³ | SA_ACC ⁴ | SU_ACC ⁵ | FE_ACC ⁶ | DI_ACC ⁷ | Emotion_ACC | PI20 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Social skill | 0.763 (0.000) ** | 1 | 0.254 (0.080) | −0.411 (0.010) ** | 0.680 (0.000) ** | 0.336 (0.030) * | 0.118 (0.260) | 0.032 (0.431) | −0.171 (0.175) | −0.035 (0.426) | −0.183 (0.157) | −0.050 (0.393) | −0.144 (0.216) | 0.478 (0.003) ** |
| Attention switching | 0.633 (0.000) ** | - | 1 | −0.062 (0.368) | 0.484 (0.003) ** | 0.072 (0.347) | −0.113 (0.270) | −0.149 (0.207) | −0.219 (0.114) | −0.013 (0.473) | 0.067 (0.357) | −0.183 (0.158) | −0.193 (0.145) | 0.212 (0.122) |
| Attention to detail | −0.001 (0.497) | - | - | 1 | −0.306 (0.044) * | −0.148 (0.209) | −0.012 (0.474) | −0.014 (0.469) | 0.263 (0.073) | −0.064 (0.363) | −0.038 (0.418) | −0.163 (0.186) | −0.031 (0.433) | −0.422 (0.008) ** |
| Communication | 0.786 (0.000) ** | - | - | - | 1 | 0.178 (0.165) | 0.039 (0.416) | −0.048 (0.397) | −0.292 (0.053) | −0.096 (0.301) | −0.255 (0.079) | −0.219 (0.114) | −0.339 (0.029) * | 0.546 (0.001) ** |
| Imagination | 0.471 (0.003) ** | - | - | - | - | 1 | −0.011 (0.476) | −0.140 (0.223) | −0.330 (0.033) * | −0.208 (0.127) | −0.041 (0.412) | −0.128 (0.242) | −0.253 (0.081) | 0.157 (0.195) |
| HA_ACC ² | 0.025 (0.445) | - | - | - | - | - | 1 | −0.256 (0.078) | 0.094 (0.304) | −0.098 (0.298) | −0.192 (0.146) | 0.094 (0.305) | −0.086 (0.320) | 0.091 (0.309) |
| AN_ACC ³ | −0.095 (0.303) | - | - | - | - | - | - | 1 | −0.124 (0.250) | −0.133 (0.234) | −0.195 (0.143) | 0.128 (0.242) | 0.343 (0.027) * | −0.220 (0.113) |
| SA_ACC ⁴ | −0.257 (0.078) | - | - | - | - | - | - | - | 1 | 0.505 (0.002) ** | 0.084 (0.325) | 0.221 (0.112) | 0.432 (0.007) ** | −0.074 (0.344) |
| SU_ACC ⁵ | −0.137 (0.227) | - | - | - | - | - | - | - | - | 1 | 0.135 (0.230) | 0.092 (0.309) | 0.269 (0.068) | −0.040 (0.414) |
| FE_ACC ⁶ | −0.179 (0.163) | - | - | - | - | - | - | - | - | - | 1 | 0.397 (0.012) * | 0.631 (0.000) ** | −0.029 (0.437) |
| DI_ACC ⁷ | −0.262 (0.074) | - | - | - | - | - | - | - | - | - | - | 1 | 0.811 (0.000) ** | 0.003 (0.493) |
| Emotion_ACC | −0.337 (0.030) * | - | - | - | - | - | - | - | - | - | - | - | 1 | −0.120 (0.256) |
| PI20 | 0.382 (0.016) * | - | - | - | - | - | - | - | - | - | - | - | - | 1 |
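The correlation tables give Pearson r with p-values in parentheses, but the surviving text does not say how those p-values were obtained. If every cell uses all 32 participants (df = 30, an assumption), the tabled values look consistent with one-tailed tests. For AQ score and PI20, r = 0.382 gives t = r√(n − 2)/√(1 − r²) ≈ 2.26 and a one-tailed p of about 0.015, which matches the reported 0.016 once the rounding of r is allowed for. A two-tailed p would be roughly 0.031. The Python sketch below is a reader's check under those assumptions, not the authors' analysis code, and its function names are hypothetical. It uses only the standard library and integrates the Student's t density with Simpson's rule.

```python
import math


def t_pdf(t: float, df: int) -> float:
    """Density of Student's t distribution with `df` degrees of freedom."""
    # Normalising constant computed in log space (lgamma) to avoid overflow.
    log_c = (math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
             - 0.5 * math.log(df * math.pi))
    return math.exp(log_c) * (1 + t * t / df) ** (-(df + 1) / 2)


def t_upper_tail(t: float, df: int, steps: int = 2000) -> float:
    """Return P(T > |t|): by symmetry, 0.5 minus the density's area on [0, |t|]."""
    t = abs(t)
    if t == 0.0:
        return 0.5
    if steps % 2:
        steps += 1  # Simpson's rule needs an even number of intervals
    h = t / steps
    area = t_pdf(0.0, df) + t_pdf(t, df)
    for i in range(1, steps):
        area += (4 if i % 2 else 2) * t_pdf(i * h, df)
    return 0.5 - area * h / 3


def one_tailed_p(r: float, n: int) -> float:
    """One-tailed p for a Pearson r from n pairs, in the direction of the observed r."""
    if not -1.0 < r < 1.0:
        raise ValueError("r must lie strictly between -1 and 1")
    df = n - 2
    t = r * math.sqrt(df) / math.sqrt(1 - r * r)
    return t_upper_tail(t, df)


if __name__ == "__main__":
    # r values copied from the correlation tables; n = 32 is an assumption.
    checks = [("AQ score vs PI20", 0.382), ("AN_ACC vs Emotion_ACC", 0.343)]
    for label, r in checks:
        p_one = one_tailed_p(r, 32)
        print(f"{label}: one-tailed p = {p_one:.4f}, two-tailed p = {2 * p_one:.4f}")
```

The same check can be run on any other cell. A result far from the tabled value would point to a different n (for example, because of missing data) or a different test.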

Column groups: Autism-Spectrum Quotient (AQ), from AQ Score to Imagination; Facial Emotion Imitation Task, from HA_Max to Emotion_Max; PI20.

| | AQ Score ¹ | Social Skill | Attention Switching | Attention to Detail | Communication | Imagination | HA_Max ² | AN_Max ³ | SA_Max ⁴ | SU_Max ⁵ | FE_Max ⁶ | DI_Max ⁷ | Emotion_Max | PI20 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Social skill | 0.763 (0.000) ** | 1 | 0.254 (0.080) | −0.411 (0.010) ** | 0.680 (0.000) ** | 0.336 (0.030) * | 0.260 (0.079) | 0.118 (0.264) | 0.066 (0.361) | 0.098 (0.299) | −0.320 (0.040) * | −0.265 (0.075) | −0.045 (0.405) | 0.478 (0.003) ** |
| Attention switching | 0.633 (0.000) ** | - | 1 | −0.062 (0.368) | 0.484 (0.003) ** | 0.072 (0.347) | 0.237 (0.100) | −0.035 (0.427) | −0.246 (0.091) | 0.133 (0.238) | −0.189 (0.154) | −0.106 (0.286) | −0.144 (0.219) | 0.212 (0.122) |
| Attention to detail | −0.001 (0.497) | - | - | 1 | −0.306 (0.044) * | −0.148 (0.209) | −0.001 (0.497) | −0.144 (0.219) | −0.239 (0.097) | −0.209 (0.129) | −0.047 (0.402) | −0.012 (0.475) | −0.185 (0.160) | −0.422 (0.008) ** |
| Communication | 0.786 (0.000) ** | - | - | - | 1 | 0.178 (0.165) | 0.228 (0.109) | 0.011 (0.477) | −0.047 (0.401) | 0.336 (0.032) * | −0.150 (0.211) | −0.087 (0.321) | −0.025 (0.448) | 0.546 (0.001) ** |
| Imagination | 0.471 (0.003) ** | - | - | - | - | 1 | −0.101 (0.294) | 0.187 (0.156) | 0.033 (0.430) | 0.303 (0.049) * | 0.134 (0.236) | 0.183 (0.162) | 0.181 (0.165) | 0.157 (0.195) |
| HA_Max ² | 0.258 (0.081) | - | - | - | - | - | 1 | −0.237 (0.099) | 0.100 (0.296) | −0.143 (0.222) | 0.088 (0.318) | −0.051 (0.392) | 0.177 (0.170) | 0.162 (0.192) |
| AN_Max ³ | 0.042 (0.411) | - | - | - | - | - | - | 1 | 0.114 (0.271) | 0.305 (0.048) * | 0.416 (0.010) ** | 0.151 (0.208) | 0.675 (0.000) ** | 0.121 (0.258) |
| SA_Max ⁴ | −0.150 (0.211) | - | - | - | - | - | - | - | 1 | 0.132 (0.240) | 0.267 (0.074) | −0.003 (0.494) | 0.554 (0.001) ** | 0.255 (0.083) |
| SU_Max ⁵ | 0.208 (0.131) | - | - | - | - | - | - | - | - | 1 | 0.329 (0.035) * | 0.029 (0.439) | 0.354 (0.025) * | 0.473 (0.004) ** |
| FE_Max ⁶ | −0.257 (0.081) | - | - | - | - | - | - | - | - | - | 1 | 0.357 (0.024) * | 0.827 (0.000) ** | −0.030 (0.436) |
| DI_Max ⁷ | −0.159 (0.197) | - | - | - | - | - | - | - | - | - | - | 1 | 0.390 (0.015) * | −0.189 (0.154) |
| Emotion_Max | −0.105 (0.286) | - | - | - | - | - | - | - | - | - | - | - | 1 | 0.160 (0.195) |
| PI20 | 0.382 (0.016) * | - | - | - | - | - | - | - | - | - | - | - | - | 1 |

Column groups: Dynamic Facial Emotion Recognition Task, from HA_ACC to Emotion_ACC; Facial Emotion Imitation Task, from HA_Max to Emotion_Max.

| | HA_ACC ¹ | AN_ACC ² | SA_ACC ³ | SU_ACC ⁴ | FE_ACC ⁵ | DI_ACC ⁶ | Emotion_ACC | HA_Max ⁷ | AN_Max ⁸ | SA_Max ⁹ | SU_Max ¹⁰ | FE_Max ¹¹ | DI_Max ¹² | Emotion_Max |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| HA_ACC ¹ | 1 | −0.256 (0.078) | 0.094 (0.304) | −0.098 (0.298) | −0.192 (0.146) | 0.094 (0.305) | −0.086 (0.320) | −0.068 (0.357) | 0.126 (0.250) | −0.013 (0.472) | −0.134 (0.236) | 0.033 (0.430) | −0.116 (0.267) | 0.026 (0.444) |
| AN_ACC ² | - | 1 | −0.124 (0.250) | −0.133 (0.234) | −0.195 (0.143) | 0.128 (0.242) | 0.343 (0.027) * | 0.209 (0.130) | 0.070 (0.354) | −0.022 (0.453) | −0.136 (0.233) | 0.137 (0.231) | 0.164 (0.189) | 0.155 (0.203) |
| SA_ACC ³ | - | - | 1 | 0.505 (0.002) ** | 0.084 (0.325) | 0.221 (0.112) | 0.432 (0.007) ** | −0.115 (0.270) | 0.321 (0.039) * | −0.033 (0.430) | −0.138 (0.229) | −0.124 (0.253) | −0.180 (0.166) | 0.018 (0.461) |
| SU_ACC ⁴ | - | - | - | 1 | 0.135 (0.230) | 0.092 (0.309) | 0.269 (0.068) | 0.236 (0.100) | −0.018 (0.461) | −0.121 (0.258) | −0.194 (0.148) | −0.132 (0.240) | 0.015 (0.469) | −0.062 (0.369) |
| FE_ACC ⁵ | - | - | - | - | 1 | 0.397 (0.012) * | 0.631 (0.000) ** | −0.074 (0.347) | −0.012 (0.475) | 0.235 (0.102) | 0.029 (0.439) | 0.054 (0.386) | −0.098 (0.301) | 0.069 (0.356) |
| DI_ACC ⁶ | - | - | - | - | - | 1 | 0.811 (0.000) ** | 0.009 (0.480) | 0.375 (0.019) * | 0.050 (0.394) | −0.104 (0.289) | 0.094 (0.308) | −0.030 (0.436) | 0.226 (0.111) |
| Emotion_ACC | - | - | - | - | - | - | 1 | 0.033 (0.431) | 0.311 (0.044) * | 0.125 (0.252) | −0.144 (0.220) | 0.085 (0.324) | −0.045 (0.405) | 0.219 (0.118) |
| HA_Max ⁷ | - | - | - | - | - | - | - | 1 | −0.237 (0.099) | 0.100 (0.296) | −0.143 (0.222) | 0.088 (0.318) | −0.051 (0.392) | 0.177 (0.170) |
| AN_Max ⁸ | - | - | - | - | - | - | - | - | 1 | 0.114 (0.271) | 0.305 (0.048) * | 0.416 (0.010) ** | 0.151 (0.208) | 0.675 (0.000) ** |
| SA_Max ⁹ | - | - | - | - | - | - | - | - | - | 1 | 0.132 (0.240) | 0.267 (0.074) | −0.003 (0.494) | 0.554 (0.001) ** |
| SU_Max ¹⁰ | - | - | - | - | - | - | - | - | - | - | 1 | 0.329 (0.035) * | 0.029 (0.439) | 0.354 (0.025) * |
| FE_Max ¹¹ | - | - | - | - | - | - | - | - | - | - | - | 1 | 0.357 (0.024) * | 0.827 (0.000) ** |
| DI_Max ¹² | - | - | - | - | - | - | - | - | - | - | - | - | 1 | 0.390 (0.015) * |
| Emotion_Max | - | - | - | - | - | - | - | - | - | - | - | - | - | 1 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wang, H.-T.; Lyu, J.-L.; Chien, S.H.-L. Dynamic Emotion Recognition and Expression Imitation in Neurotypical Adults and Their Associations with Autistic Traits. Sensors 2024, 24, 8133. https://doi.org/10.3390/s24248133

Wang H-T, Lyu J-L, Chien SH-L. Dynamic Emotion Recognition and Expression Imitation in Neurotypical Adults and Their Associations with Autistic Traits. Sensors. 2024; 24(24):8133. https://doi.org/10.3390/s24248133

Chicago/Turabian Style: Wang, Hai-Ting, Jia-Ling Lyu, and Sarina Hui-Lin Chien. 2024. "Dynamic Emotion Recognition and Expression Imitation in Neurotypical Adults and Their Associations with Autistic Traits" Sensors 24, no. 24: 8133. https://doi.org/10.3390/s24248133

APA Style: Wang, H.-T., Lyu, J.-L., & Chien, S. H.-L. (2024). Dynamic Emotion Recognition and Expression Imitation in Neurotypical Adults and Their Associations with Autistic Traits. Sensors, 24(24), 8133. https://doi.org/10.3390/s24248133