1. Introduction

With the development of computer technology, smart cameras are now widely used in navigation, positioning, tracking, obstacle avoidance, monitoring and other applications [1,2]. Among these, visual measurement has become a research hotspot. In visual measurement, a camera captures a static single-frame image or a dynamic image sequence of the target [3,4], and image processing and analysis techniques are then used to measure the structural and motion parameters of the target. The approach is non-contact, responds quickly to dynamic changes and is highly efficient [5], so it has been widely used for industrial pose measurement. Intelligent agricultural machinery is one of the key technologies of modern agriculture, and the working width of such machines has gradually increased. During field operations, the attitude angles of wide agricultural machines, especially the roll angle, affect the tillage depth and compaction strength of the machine and thus the tillage quality [6,7]. An accurate roll angle of the implement could therefore make the control of automatically navigated agricultural vehicles more precise [8]. It would also help warn of a possible rollover before climbing or descending, effectively helping to reduce loss of life and property [9]. There are many ways to obtain the roll angle of agricultural machinery [10,11]. Cameras are inexpensive and provide rich visual information, and hardware computing capability has developed rapidly, so image processing has become a popular method for acquiring the roll angle.

At present, there are many methods for obtaining the attitude angle of an object based on image processing [12,13]. One type works by extracting geometric features of the object. For example, Arakawa et al. implemented an attitude estimation system for unmanned aerial vehicles (UAVs) that uses the visible horizon in the images. The system finds the horizon in the image and then estimates attitude from it, detecting the horizon with morphological smoothing, a Sobel filter and the Hough transform [12]. Timotheatos et al. proposed a vehicle attitude estimation method based on horizon detection. It detects the horizon line with a Canny edge detector and a Hough detector, and then refines the result in an optimization step performed by a particle swarm optimization (PSO) algorithm. The roll angle of the vehicle is determined from the slope angle of the horizon, and the pitch angle is computed from the current and initial horizon positions [14]. Another type of method uses the correspondence between model points of the target and their image points to obtain the attitude angle of the object. For example, Wang presented a method for the perspective-n-point (PnP) problem, i.e. determining the position and orientation of a calibrated camera from known reference points. The method transforms pose estimation into an optimization problem that requires solving only a seventh-order and a fourth-order univariate polynomial, which makes the process easier to understand and significantly improves performance [15]. Zhang et al. proposed a method for measuring attitude angles from a single captured image to assist the landing of small fixed-wing UAVs. Its advantage is that the attitude angles are obtained from one image containing five coded landmarks. This reduces solution time compared with most methods, which require more than one image [16]. Methods of this kind require manually placed landmarks to solve the correspondence problem. In actual experiments, the number of target points is large. Placing control points manually or solving the correspondence problem by computer therefore introduces some error, and solving the correspondence problem also requires a large amount of computation.
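To illustrate the horizon-based idea of [12,14], the sketch below computes the tilt of a horizon segment from its two end points. It assumes the segment has already been detected, for example by a Hough transform. The function name and the sign convention are our own assumptions. The sketch also assumes a camera rigidly fixed to the vehicle with its optical axis close to horizontal. Under that assumption, the roll angle has the same magnitude as this tilt, and its sign depends on the convention chosen.

```python
import math


def horizon_tilt_deg(p_left, p_right):
    """Tilt of a detected horizon segment in degrees (hypothetical helper).

    p_left, p_right: (x, y) pixel coordinates of the segment's ends, with the
    image y axis pointing down. The y difference is negated so that a positive
    result means the horizon rises towards the right of the image.
    """
    (x1, y1), (x2, y2) = p_left, p_right
    return math.degrees(math.atan2(-(y2 - y1), x2 - x1))
```

For a level horizon the tilt is 0°. A horizon that runs from the lower-left to the upper-right corner of a square region gives +45°.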

In addition, attitude acquisition based on SLAM (simultaneous localization and mapping) has been a research hotspot in recent years. SLAM usually refers to a robot or other moving rigid body that is equipped with specific sensors and, without prior information, estimates its own motion and builds a model (some form of description) of the surrounding environment [17]. SLAM-based home service robots are now widely used, and some researchers also study SLAM-based autonomous driving and drone navigation [18,19]. However, few of these studies have been applied in complex field environments. The method in this paper and the visual odometry in SLAM both use image feature points for registration. Because the field environment is complex, however, no map is built in this paper.

Both types of image-based methods described above need to extract object features, so the feature extraction and matching methods directly affect the applicability and accuracy of the algorithm. Efficient registration of visual images has therefore become an important topic in visual image research. In this paper, an image registration algorithm is used to obtain the rotation angle of the object. Different feature detection algorithms for image registration are also compared, namely the FAST corner [20], SURF [21] and MSER [22] detection algorithms. The proposed method avoids the strict requirements on object shape that earlier methods impose when calculating the rotation angle. It requires neither camera calibration nor manually placed markers, and the rotation angle is obtained directly while the images are matched.

Section 2 introduces our method in detail. In Section 3, different field feature point registration models are compared. Then, based on a farmland feature point registration model, an attitude angle acquisition model for agricultural machinery is proposed. Section 4 presents the field test results and analyses. Finally, Section 5 draws the conclusions.

2. Theory and Method of Feature Point Registration

2.1. Theory of Image Registration

The core of image registration is to find a mathematical model that explains the relationship between corresponding pixels in two images. The images may be captured from different viewpoints and positions in a scene, or by different sensors, in different states or at different times. Even so, a suitable registration approach can be found based on their features.

Image registration involves both a spatial transformation and a grayscale transformation between two images. When each image is expressed as a two-dimensional matrix, the relationship between the images to be registered, $P_{i-1}$ and $P_i$, is

$$P_i(x, y) = g\left(P_{i-1}\left(f(x, y)\right)\right)$$

where $P_{i-1}(x, y)$ and $P_i(x, y)$ are the grayscale values of the pixel at $(x, y)$, $f$ is a two-dimensional geometric spatial transformation function and $g$ is a linear grayscale transformation function.
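To make the model concrete, the sketch below synthesizes $P_i$ from $P_{i-1}$ under assumed forms of $f$ and $g$. Here $f$ is a rotation about the image origin followed by a translation, and $g(v) = av + b$. The function name `apply_registration_model` and these specific forms are illustrative assumptions, not the paper's implementation. Images are plain nested lists of gray values indexed `img[y][x]`, and nearest-neighbour sampling is used.

```python
import math


def apply_registration_model(prev, theta_deg, tx, ty, a=1.0, b=0.0):
    """Build P_i(x, y) = g(P_{i-1}(f(x, y))) for assumed f and g.

    f: rotation by theta_deg about the origin plus translation (tx, ty),
       mapping each target pixel back to a location in the previous image.
    g: linear gray-level change g(v) = a * v + b.
    Pixels whose source location falls outside the image are set to 0.
    """
    h, w = len(prev), len(prev[0])
    c = math.cos(math.radians(theta_deg))
    s = math.sin(math.radians(theta_deg))
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            # f(x, y): where this target pixel comes from in P_{i-1}
            xs = c * x - s * y + tx
            ys = s * x + c * y + ty
            xi, yi = round(xs), round(ys)  # nearest-neighbour sampling
            if 0 <= xi < w and 0 <= yi < h:
                out[y][x] = a * prev[yi][xi] + b  # g applied to the sample
    return out
```

With `theta_deg=0`, `tx=1` and `ty=0`, every pixel takes the value of its right-hand neighbour, and the last column falls outside the image and becomes 0.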

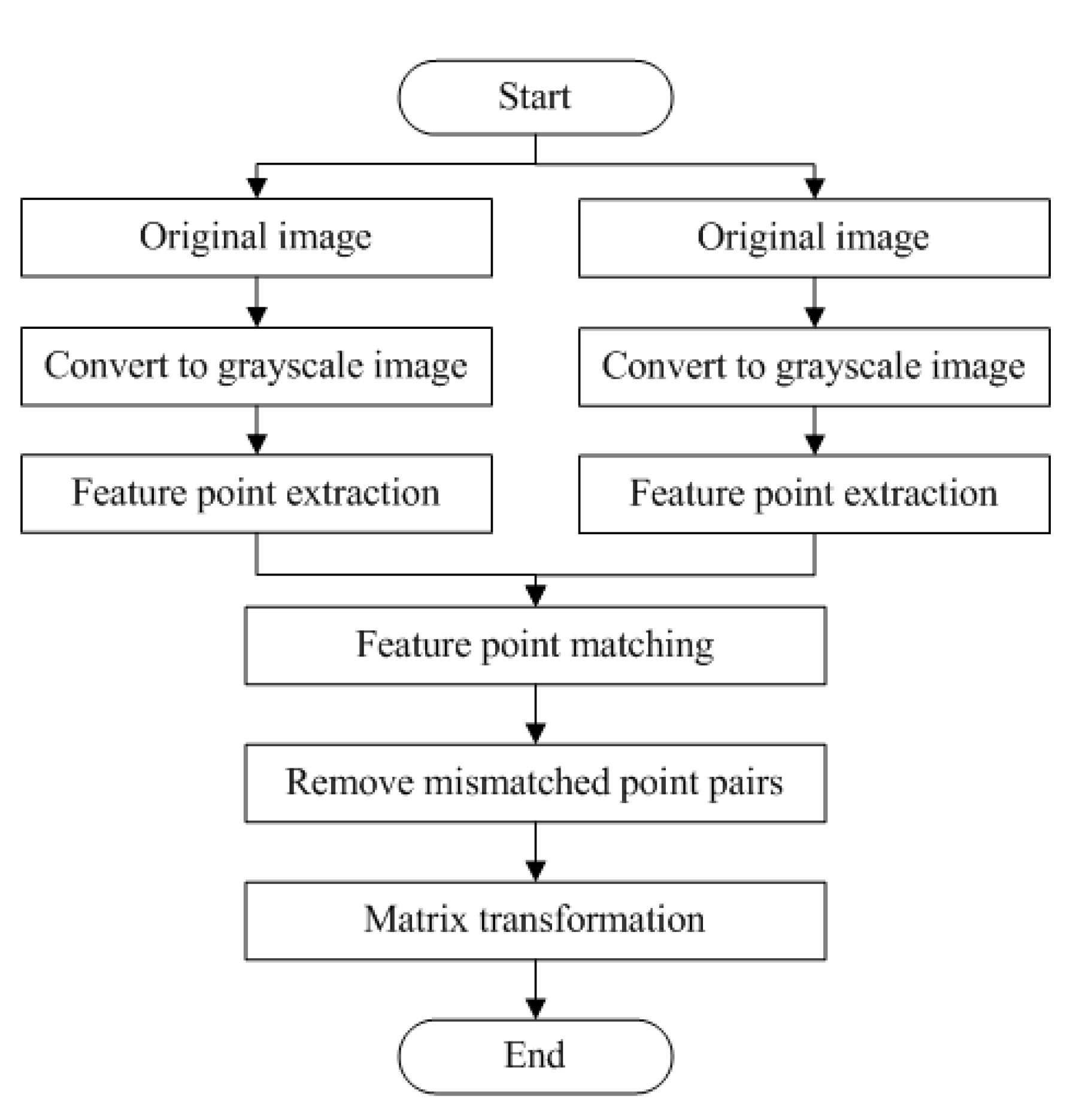

2.2. Image Registration Procedure

Figure 1 shows the procedure of the image registration algorithm. First, two consecutive images $P_{i-1}$ and $P_i$ are taken. $P_{i-1}$ is referred to as the original image and $P_i$ as the target image. The two images are then converted to grayscale, and a feature extraction algorithm locates the feature points on each grayscale image. Next, a feature descriptor (including scale and orientation information) and the location are extracted for each feature point, which yields the local features of each image. In the feature point registration step, the original image serves as the reference, and the target image is matched against it using the feature descriptors. Mismatches are then eliminated. Finally, a transformation matrix is calculated from the locations of the matched feature point pairs, and the rotation angle between the two consecutive images is derived from this matrix.
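The last step above, recovering the angle from the transformation matrix, can be sketched as follows. Assume the estimated transform is a similarity transform (rotation θ, scale s, translation) written in column-vector form. Its upper-left 2×2 block is then $s\begin{bmatrix}\cos\theta & -\sin\theta\\ \sin\theta & \cos\theta\end{bmatrix}$, so θ = atan2(`m[1][0]`, `m[0][0]`) for any s > 0. The helper names are hypothetical. In image coordinates, where y points down, a positive θ appears as a clockwise rotation on screen. MATLAB's `affine2d` stores the transposed, row-vector form, so there the sine term is `T(1,2)`.

```python
import math


def similarity_matrix(theta_deg, scale=1.0, tx=0.0, ty=0.0):
    """2x3 matrix of x' = s * R(theta) * x + t (column-vector convention)."""
    c = scale * math.cos(math.radians(theta_deg))
    s = scale * math.sin(math.radians(theta_deg))
    return [[c, -s, tx], [s, c, ty]]


def rotation_angle_deg(m):
    """Rotation angle in degrees from the 2x2 block of a 2x3 similarity matrix.

    atan2(s*sin(theta), s*cos(theta)) equals theta for any scale s > 0,
    so the scale factor does not affect the recovered angle.
    """
    return math.degrees(math.atan2(m[1][0], m[0][0]))
```

For example, `rotation_angle_deg(similarity_matrix(2.5, 1.1, 3.0, -4.0))` returns 2.5 up to floating-point rounding.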

In this method, the Euclidean distance between feature descriptors is used to measure the registration between two images. Suppose the luminance distribution differs little across several regions of the image. A feature point in the original image may then match several feature points in one region of the target image, and these candidates may not be correct registration points. In addition, a feature point in the original image may have no detected counterpart in the target image. In that case, the point with the closest Euclidean distance is a mismatch. This paper therefore uses the ratio of the closest distance to the second-closest distance and keeps only the most distinctive matches within a set threshold, which reduces such cases. Assume that images $P_{i-1}$ and $P_i$ contain the feature point sets $M_1$ and $M_2$, respectively. For any feature point $m_1$ in $M_1$, let $m_2$ and $m_2^*$ be the two feature points in $M_2$ closest to $m_1$, at Euclidean distances $d_{ij}$ and $d_{ij}^*$ ($d_{ij} \le d_{ij}^*$). If $d_{ij} \le \alpha \, d_{ij}^*$ (α = 0.6 in this paper), then $m_1$ and $m_2$ are taken as a registration feature point pair. This criterion is applied to all feature points in $P_{i-1}$ and $P_i$ to find all possible registration pairs.
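A minimal sketch of the distance-ratio criterion above follows, with α = 0.6 as in the text. Descriptors are assumed to be plain numeric tuples, and matching is brute force. The function name is hypothetical, and a practical implementation would normally use an optimized nearest-neighbour search.

```python
import math


def ratio_test_matches(desc1, desc2, alpha=0.6):
    """Nearest/second-nearest Euclidean distance ratio test.

    desc1, desc2: sequences of equal-length numeric descriptors from
    P_{i-1} and P_i. A descriptor in desc1 is paired with its nearest
    neighbour in desc2 only if d <= alpha * d*, where d* is the distance
    to the second-nearest neighbour. Returns (index_in_desc1, index_in_desc2).
    """
    matches = []
    if len(desc2) < 2:
        return matches  # the ratio needs at least two candidates
    for i, d1 in enumerate(desc1):
        dists = sorted((math.dist(d1, d2), j) for j, d2 in enumerate(desc2))
        (d_best, j_best), (d_second, _) = dists[0], dists[1]
        if d_best <= alpha * d_second:
            matches.append((i, j_best))
    return matches
```

In the usage below, two distinctive points are matched. The ambiguous query, whose two nearest candidates are almost equally close (distances 1.0 and 1.1), is rejected.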

2.3. Method of Image Registration

Feature-based image registration mainly consists of feature extraction, feature description and feature registration. If the proportion of correctly matched feature pairs exceeds a certain level, the M-estimator sample consensus (MSAC) algorithm [22] is applied to identify these pairs. The parameters of the geometric transformation model between the two images are then calculated. Feature extraction is therefore the first step to be carried out in image registration.
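The MSAC step can be illustrated with a short sketch that estimates a similarity transform (rotation, uniform scale and translation) from matched point pairs. Points are encoded as complex numbers, so the transform is $w = az + b$ with $a = s e^{i\theta}$. Two correspondences determine a candidate model, and each candidate is scored with the MSAC cost $\sum_k \min(r_k^2, T^2)$. The similarity model, the threshold T, the iteration count and the function names are assumptions for illustration. The paper does not state which transform family its MATLAB implementation estimated.

```python
import cmath
import math
import random


def _fit_similarity(src, dst):
    """Least-squares fit of w = a*z + b over complex point pairs."""
    n = len(src)
    zm, wm = sum(src) / n, sum(dst) / n
    num = sum((w - wm) * (z - zm).conjugate() for z, w in zip(src, dst))
    den = sum(abs(z - zm) ** 2 for z in src)
    a = num / den
    return a, wm - a * zm


def msac_similarity(src, dst, thresh=1.0, iters=500, seed=0):
    """Estimate w = a*z + b with MSAC; src, dst are lists of x + 1j*y.

    Unlike plain RANSAC, which counts inliers, MSAC charges each point
    min(r^2, thresh^2), so well-fitting inliers lower the cost further.
    Returns (a, b, inlier_mask).
    """
    rng = random.Random(seed)
    best_cost, best_model = math.inf, None
    for _ in range(iters):
        i, j = rng.sample(range(len(src)), 2)  # minimal sample: two pairs
        if src[i] == src[j]:
            continue  # degenerate sample, the model is undefined
        a = (dst[j] - dst[i]) / (src[j] - src[i])
        b = dst[i] - a * src[i]
        cost = sum(min(abs(a * z + b - w) ** 2, thresh ** 2)
                   for z, w in zip(src, dst))
        if cost < best_cost:
            best_cost, best_model = cost, (a, b)
    if best_model is None:
        raise ValueError("no valid minimal sample found")
    a, b = best_model
    inliers = [abs(a * z + b - w) < thresh for z, w in zip(src, dst)]
    # Refine the best model by least squares on its inliers only.
    a, b = _fit_similarity([z for z, k in zip(src, inliers) if k],
                           [w for w, k in zip(dst, inliers) if k])
    return a, b, inliers


def rotation_deg(a):
    """Rotation angle in degrees carried by the coefficient a = s*e^{i*theta}."""
    return math.degrees(cmath.phase(a))
```

The complex-number encoding keeps the minimal solver to two lines. Because $|a|$ is the scale, a scale change between frames does not bias the recovered angle.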

Feature extraction mainly includes feature detection and feature description. Feature detection algorithms fall into two classes: feature-point-based and feature-area-based detection algorithms. Commonly used feature point detection algorithms include the Harris, SUSAN and FAST corner detectors, as well as SURF and the scale-invariant feature transform (SIFT) [23]. FAST examines the 16 pixels on a circle around a candidate pixel p. If n contiguous pixels on this circle are all brighter than p, or all darker than p, by more than a threshold, then p is classified as a corner. SURF is an improved and accelerated version of SIFT. SIFT detects extrema in scale space to locate feature points at different scales and computes the orientation of each keypoint. SURF operates on the integral image, in which the value at each pixel is the sum of all pixels of the original image above and to the left of that position. The sum over any rectangular box can then be obtained with only four lookups, which accelerates the computation. FAST corner detection is fast, and SURF is faster than SIFT while maintaining high accuracy, so these two algorithms were chosen as representatives for comparison.
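Both ideas can be sketched in a few lines. The FAST segment test below uses the standard 16-pixel circle of radius 3. Like the original FAST detector, it also uses an intensity threshold `t`, with `t = 20` and `n = 9` as assumed defaults. The integral image is padded with a zero row and column so that any box sum needs exactly four lookups. The function names are illustrative, and images are nested lists indexed `img[y][x]`.

```python
# 16 offsets (dx, dy) of the radius-3 Bresenham circle, in circular order.
CIRCLE = [(0, -3), (1, -3), (2, -2), (3, -1), (3, 0), (3, 1), (2, 2), (1, 3),
          (0, 3), (-1, 3), (-2, 2), (-3, 1), (-3, 0), (-3, -1), (-2, -2), (-1, -3)]


def is_fast_corner(img, x, y, t=20, n=9):
    """FAST segment test at (x, y); the point needs a 3-pixel border."""
    p = img[y][x]
    # +1: brighter than p + t, -1: darker than p - t, 0: similar to p
    states = [1 if img[y + dy][x + dx] > p + t else
              -1 if img[y + dy][x + dx] < p - t else 0
              for dx, dy in CIRCLE]
    for target in (1, -1):
        run = 0
        for s in states + states:  # traverse twice so runs can wrap around
            run = run + 1 if s == target else 0
            if run >= n:
                return True
    return False


def integral_image(img):
    """ii[y][x] = sum of img over rows < y and columns < x (zero-padded)."""
    h, w = len(img), len(img[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += img[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def box_sum(ii, x0, y0, x1, y1):
    """Sum of img[y][x] for x0 <= x < x1 and y0 <= y < y1, via four lookups."""
    return ii[y1][x1] - ii[y0][x1] - ii[y1][x0] + ii[y0][x0]
```

A bright quadrant whose corner lies at the test pixel passes the segment test (11 contiguous darker pixels). A straight vertical edge does not pass, because it yields only 7 contiguous darker pixels.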

Feature-area-based detection methods find feature points together with their surrounding regions, which contain the feature points and other information [24]. The maximally stable extremal regions (MSER) method detects a set of distinguished regions in a grayscale image. It is well suited to detecting regions of consistent gray level with strongly discriminative boundaries, as well as structured and textured images. Compared with other region detection operators, MSER also performs better under changes in light intensity [25].
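MSER's notion of stability can be illustrated on a single region. The sketch grows the dark region that contains a seed pixel at each gray threshold t. It then scores the region with the standard MSER stability measure $q(t) = (|Q_{t+\Delta}| - |Q_{t-\Delta}|)/|Q_t|$, and the threshold with the smallest q is the most stable. This is a simplified illustration built on several assumptions: one seed, dark regions only, 4-connectivity, 8-bit gray levels and Δ = 5. It is not a full MSER detector, which enumerates all extremal regions, typically with a union-find structure.

```python
from collections import deque


def component_area(img, seed, level):
    """Area of the 4-connected region of pixels <= level containing seed."""
    h, w = len(img), len(img[0])
    sx, sy = seed
    if img[sy][sx] > level:
        return 0
    seen = {(sx, sy)}
    queue = deque([(sx, sy)])
    while queue:  # breadth-first flood fill
        x, y = queue.popleft()
        for nx, ny in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
            if (0 <= nx < w and 0 <= ny < h and (nx, ny) not in seen
                    and img[ny][nx] <= level):
                seen.add((nx, ny))
                queue.append((nx, ny))
    return len(seen)


def most_stable_level(img, seed, delta=5, levels=range(256)):
    """Return (level, q) minimising q(t) for the region grown around seed."""
    best = None
    for t in levels:
        if t - delta < 0 or t + delta > 255:
            continue  # q(t) needs both neighbouring thresholds
        area = component_area(img, seed, t)
        if area == 0:
            continue  # the seed is not yet inside any region at this level
        q = (component_area(img, seed, t + delta)
             - component_area(img, seed, t - delta)) / area
        if best is None or q < best[1]:
            best = (t, q)
    return best
```

For a 4×4 dark square (gray level 20) on a bright background (200), the region area stays at 16 pixels over a wide range of thresholds. The stability score therefore reaches 0, which is exactly the kind of region MSER reports.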

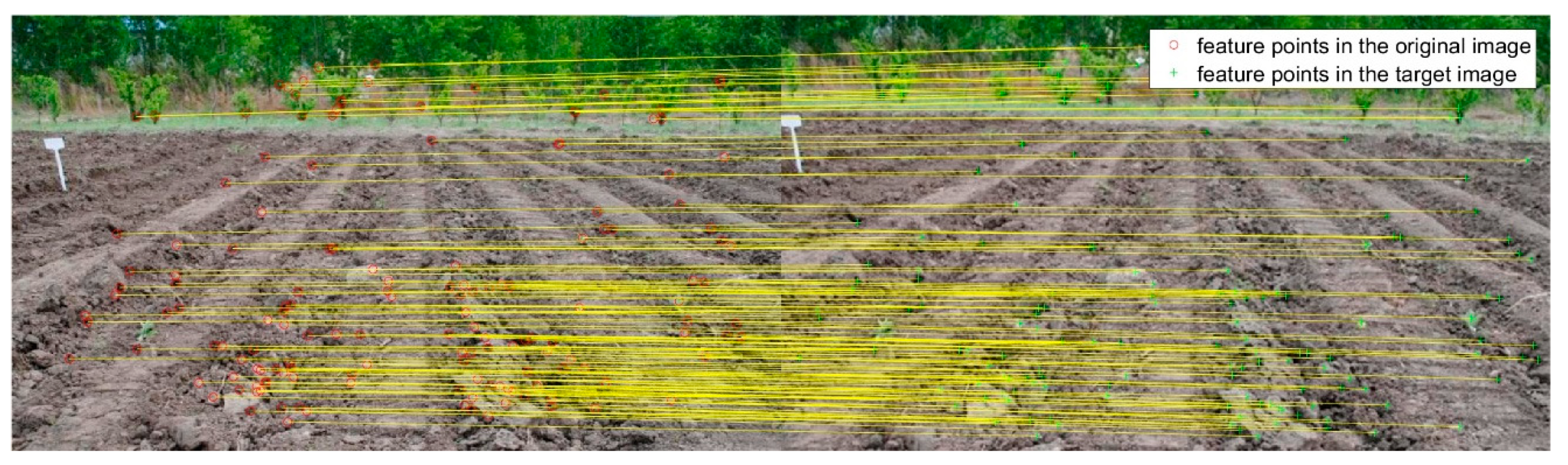

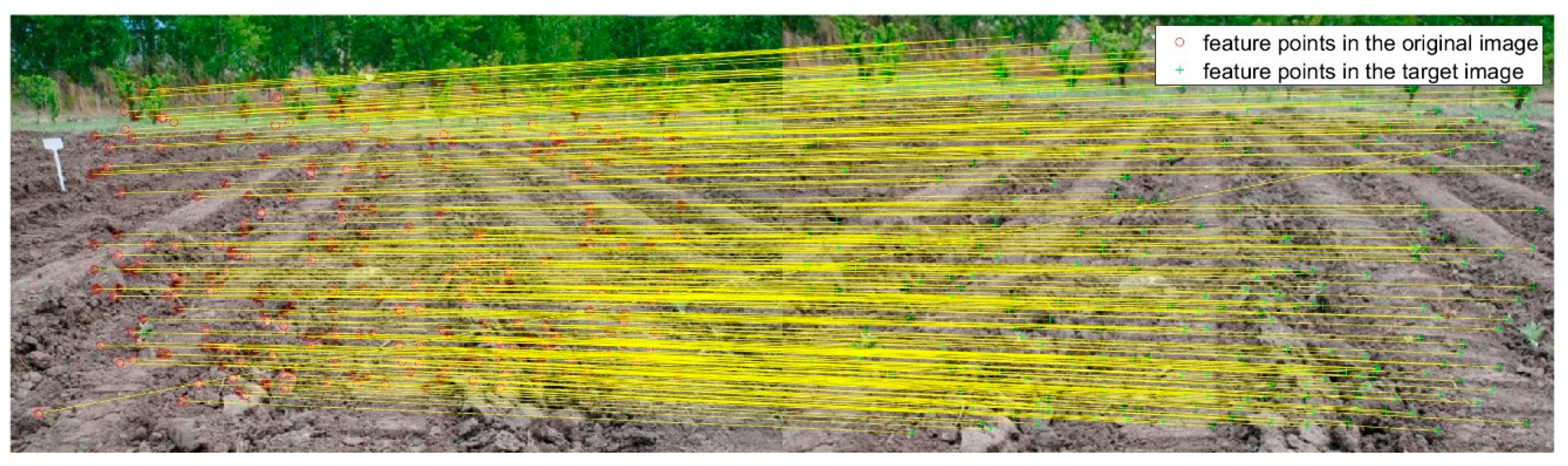

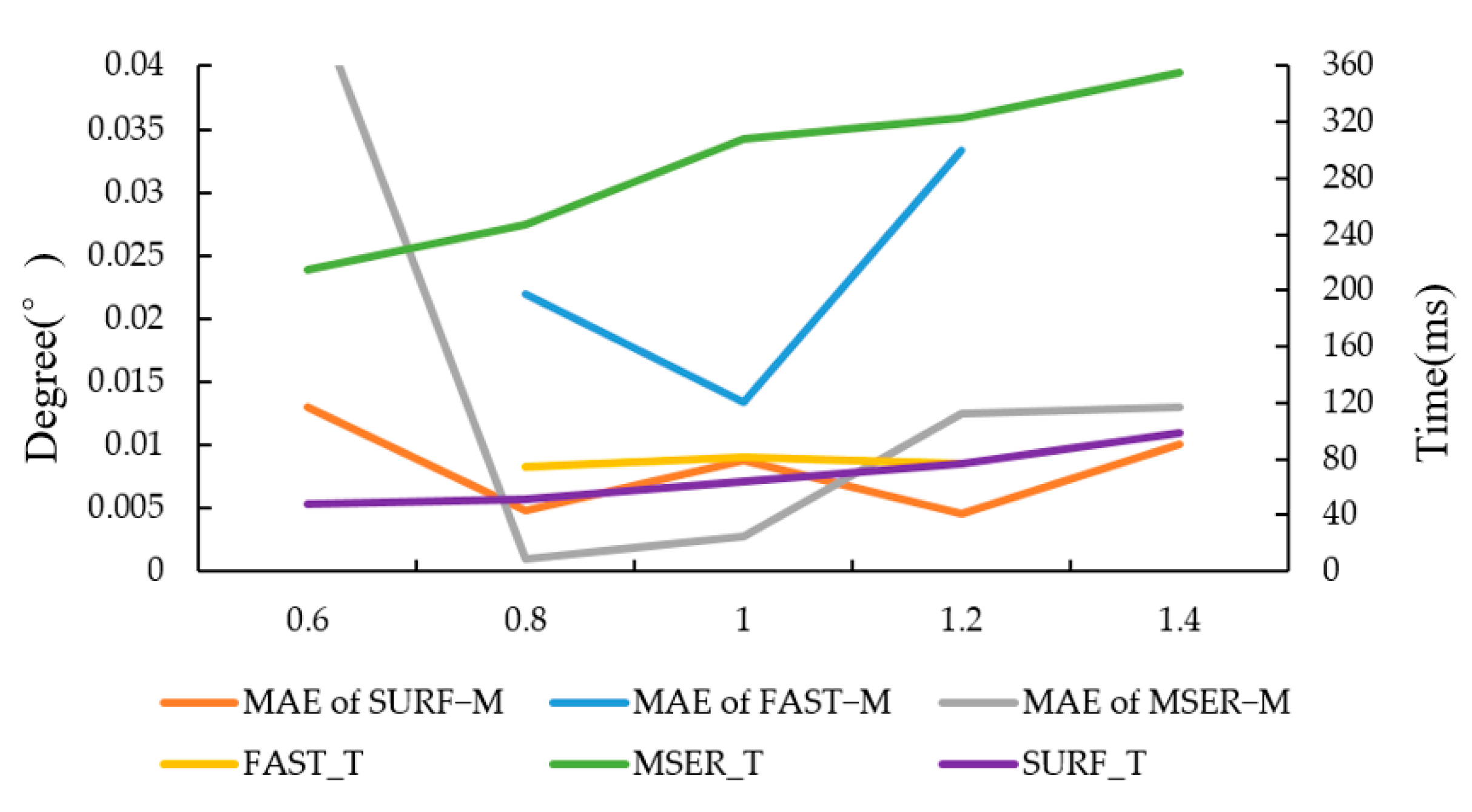

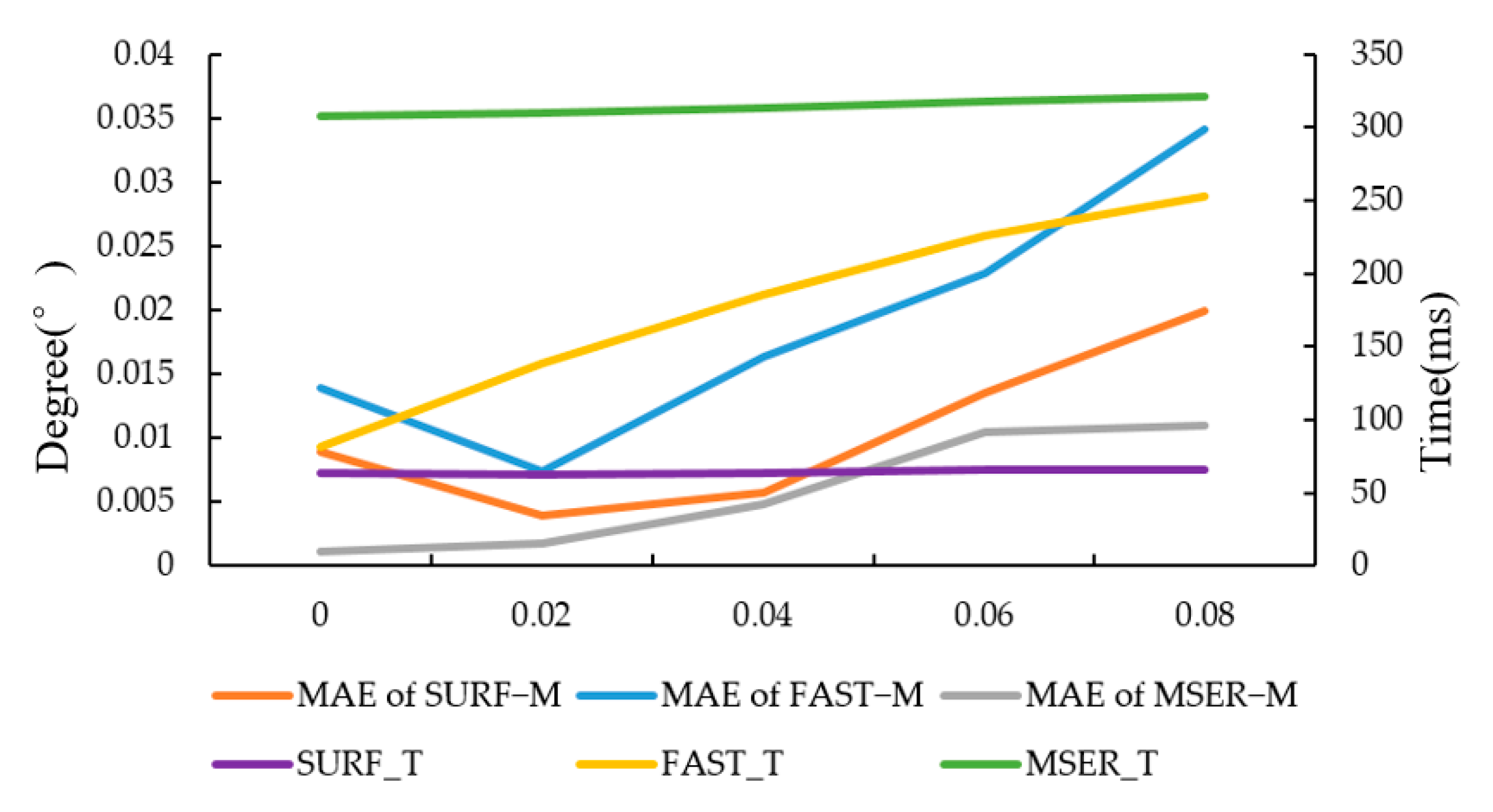

Image registration algorithms were built on FAST corners (hereafter, FAST−M), SURF (hereafter, SURF−M) and MSER (hereafter, MSER−M). To choose the most suitable algorithm for the field feature point image registration model, images of two typical field operations were tested in MATLAB. The software environment was Windows 10, MATLAB R2014a and Microsoft Visual C++ 2015. The hardware was an Intel Core i7-8700 CPU running at 3.2 GHz with 16.0 GB of RAM.

4. Field Test and Analyses

To verify the feasibility of the attitude acquisition system, a field test was conducted in Boli County, Heilongjiang Province, China.

The experimental devices included a 2BGD-6 soybean variable-rate fertilization seeder, an 88.2 kW CASE IH PUMA 210 tractor, a monocular camera, a digital level ruler (DEVON 9409.1, CHERVON Trading Co., Ltd., Nanjing, China) and the monitoring terminal running the attitude angle acquisition model. The display accuracy of the digital level ruler is ±0.05°.

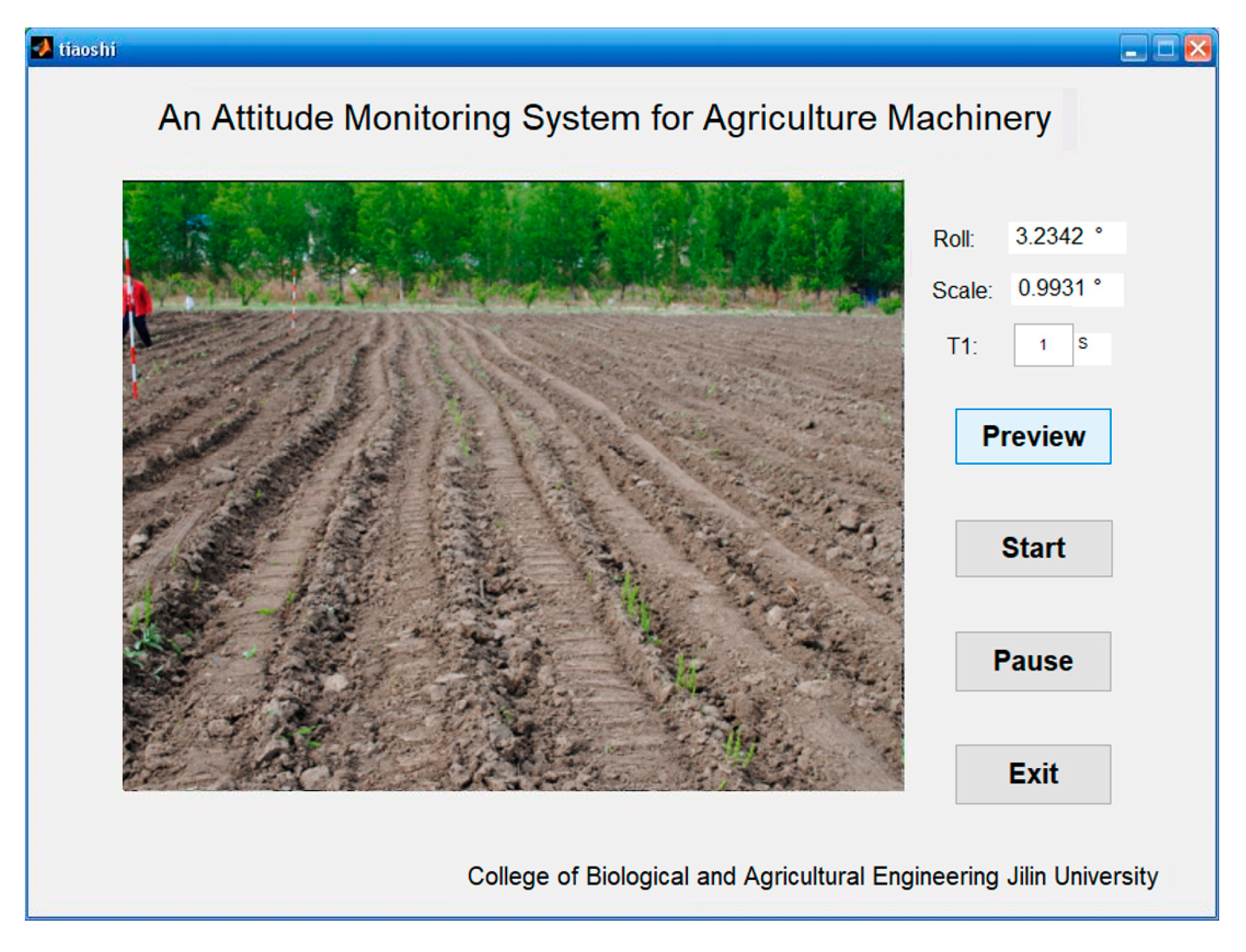

During the test, the equipment was installed as shown in Figure 11. The camera faced downward at an angle of 15° to the horizontal, so most of each captured image showed the field. In the dynamic comparison test, the tractor traveled in a straight line at 3.6 km/h, and monitoring started once the tractor speed was stable. The attitude monitoring frequency was 1 Hz. Marking points were placed every 1 m along the ridge, in the driving direction of the seeder, using a tape measure. At each marking point, the angle of the agricultural machine was measured with the digital level ruler and taken as the true value. At the same time, the monitoring terminal captured an image at each marking point, and the measured value was displayed and saved in real time. The interface of the monitoring system is shown in Figure 12. The performance of the image feature point registration algorithm for roll angle measurement was evaluated by comparing the two values.
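A quick consistency check of these settings, using simple arithmetic on the values above, gives the travel between consecutive measurements:

$$v = 3.6\ \mathrm{km/h} = \frac{3600\ \mathrm{m}}{3600\ \mathrm{s}} = 1\ \mathrm{m/s}, \qquad \Delta s = \frac{v}{f_s} = \frac{1\ \mathrm{m/s}}{1\ \mathrm{Hz}} = 1\ \mathrm{m}$$

This matches the 1 m spacing of the marking points, so each 1 Hz measurement falls approximately at one marking point.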

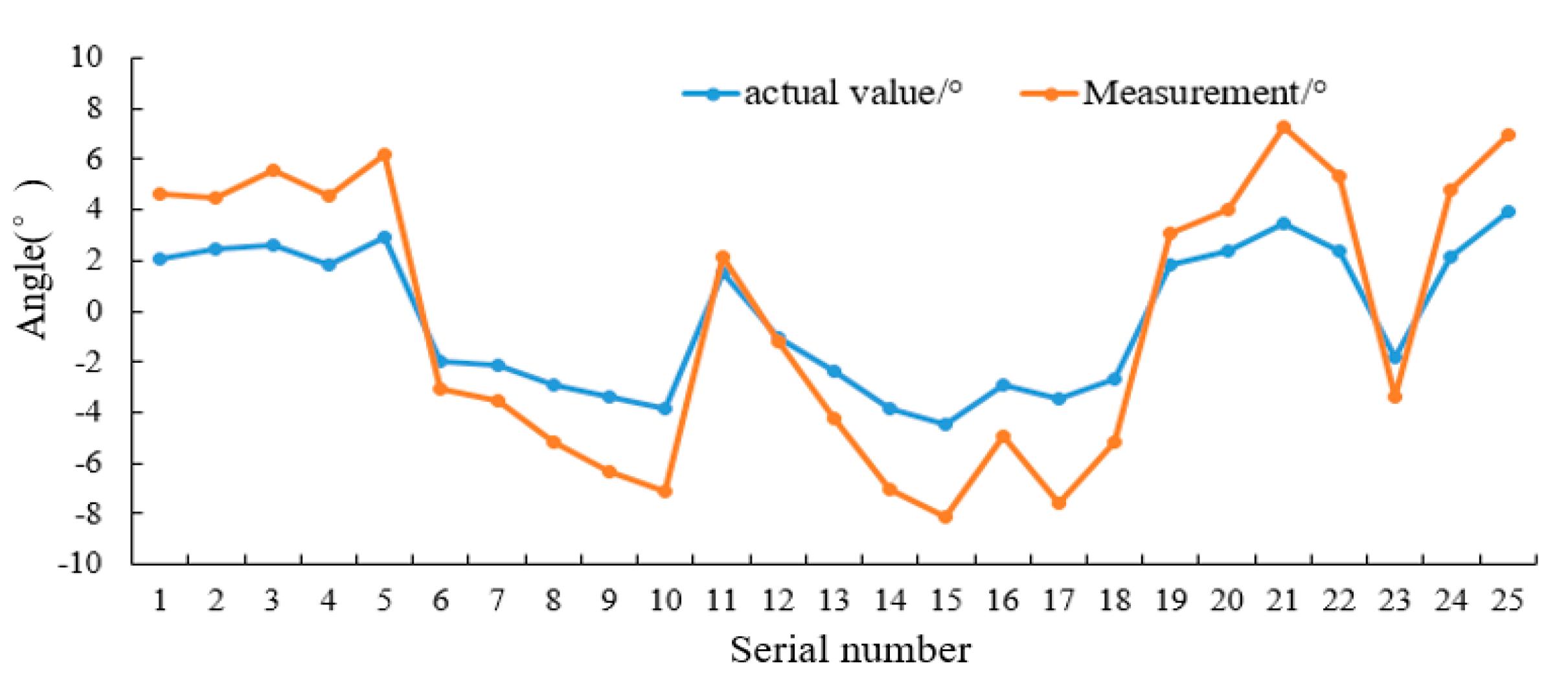

Table 1 and Figure 13 compare the angle calculated by the attitude monitoring algorithm with the angle measured by the digital level ruler. The maximum, minimum and average absolute errors of the attitude monitoring algorithm were 0.97°, 0.08° and 0.61°, respectively.
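The error statistics reported above can be computed as follows. The function name is hypothetical. The sample values in the usage note are made up for illustration and are not the field data of Table 1.

```python
def abs_error_stats(measured, reference):
    """Max, min and mean absolute error between paired angle readings (degrees)."""
    if len(measured) != len(reference) or not measured:
        raise ValueError("need two non-empty sequences of equal length")
    errors = [abs(m - r) for m, r in zip(measured, reference)]
    return max(errors), min(errors), sum(errors) / len(errors)
```

For example, `abs_error_stats([1.25, -0.5, 2.0], [1.0, 0.0, 2.0])` returns `(0.5, 0.0, 0.25)`.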

The test results show that, when the agricultural machinery was working in the field and its attitude changed, the established model could accurately perceive the trend of the attitude change and obtain the rotation angle of the machinery. However, the attitude angle obtained by the system contained some error. The error arises mainly because the camera was mounted on the agricultural machine. Vibration of the machine during operation shook the camera and slightly shifted objects in the captured images, which introduced error into the rotation angle. In this study, features change little between two images captured a short time apart, so the rotation angle between the two images could be obtained. From these angles, the rotation angle of the camera at successive times, and hence the roll angle of the agricultural machinery, could be derived. Compared with SLAM, the algorithm in this paper offers less functionality, but it is more concise and has lower complexity.
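One plausible reading of the chaining step above is that the camera roll at each time equals a known initial roll plus the running sum of the inter-frame rotation angles. This reading is an assumption here; the source does not spell out the formula. The sketch below implements that reading. The function name is hypothetical, and all angles are assumed to share one sign convention. The sum also accumulates any per-pair error, such as error caused by vibration, so the estimate can drift unless it is periodically re-referenced.

```python
from itertools import accumulate


def roll_track(initial_roll_deg, interframe_deg):
    """Absolute roll at each frame from per-pair registration angles.

    interframe_deg[k] is the rotation between frames k and k+1. The result
    starts with the initial roll and has one more entry than interframe_deg.
    """
    return list(accumulate(interframe_deg, initial=initial_roll_deg))
```

For example, `roll_track(0.0, [0.5, -0.25, 1.0])` returns `[0.0, 0.5, 0.25, 1.25]`.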

5. Conclusions

This paper presents a method for obtaining the attitude angle of agricultural machinery by registering feature points in images from a monocular camera. Image registration algorithms based on common feature detection algorithms, namely FAST, SURF and MSER, were studied. The running time and accuracy of the three algorithms were compared. The results showed that the FAST−M algorithm was more sensitive to noise and scale transformation than the other two. In terms of running time, MSER−M was the slowest, followed by SURF−M, and FAST−M was the fastest. In terms of accuracy, the errors of all three algorithms were less than 0.1°. Based on these results, the SURF−M algorithm was selected for field feature point registration.

In this study, a model for obtaining the attitude angle based on a monocular camera was built. In the field test, the average error of the roll angle was 0.61° and the minimum error was 0.08°. The field experiments indicated that the model could accurately track the attitude change trend of agricultural machinery. Because of machine vibration, the attitude angle obtained by the system contained some error. Future work should investigate in depth how to eliminate the influence of vibration on the accuracy of attitude angle acquisition.