An Overview of Artificial Intelligence Applications in Liver and Pancreatic Imaging

Simple Summary

Abstract

1. Introduction

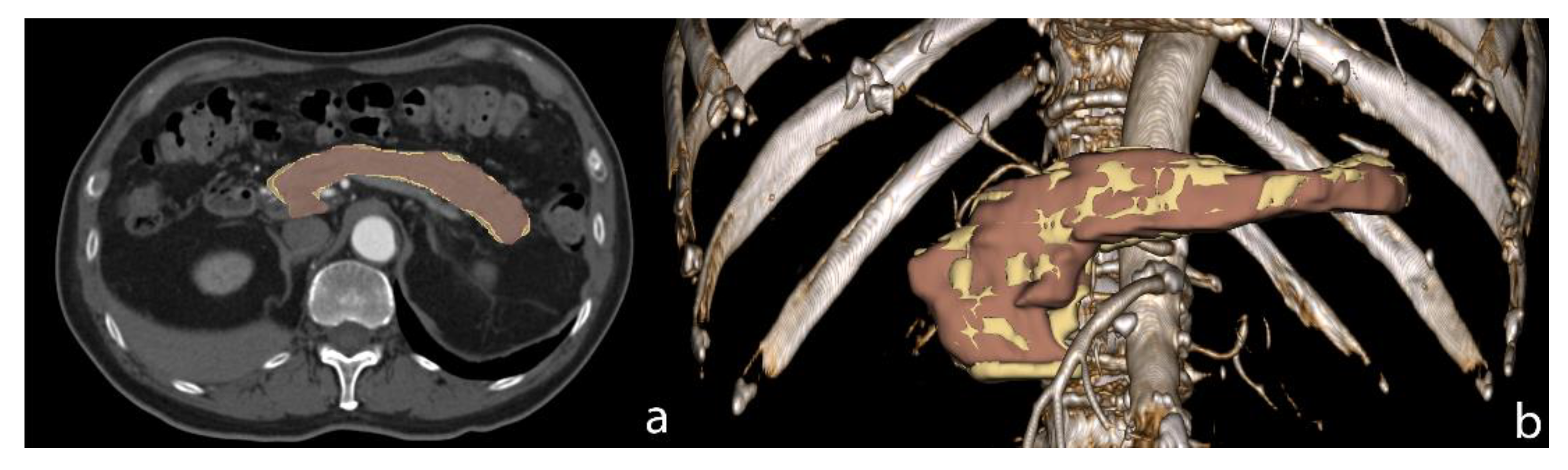

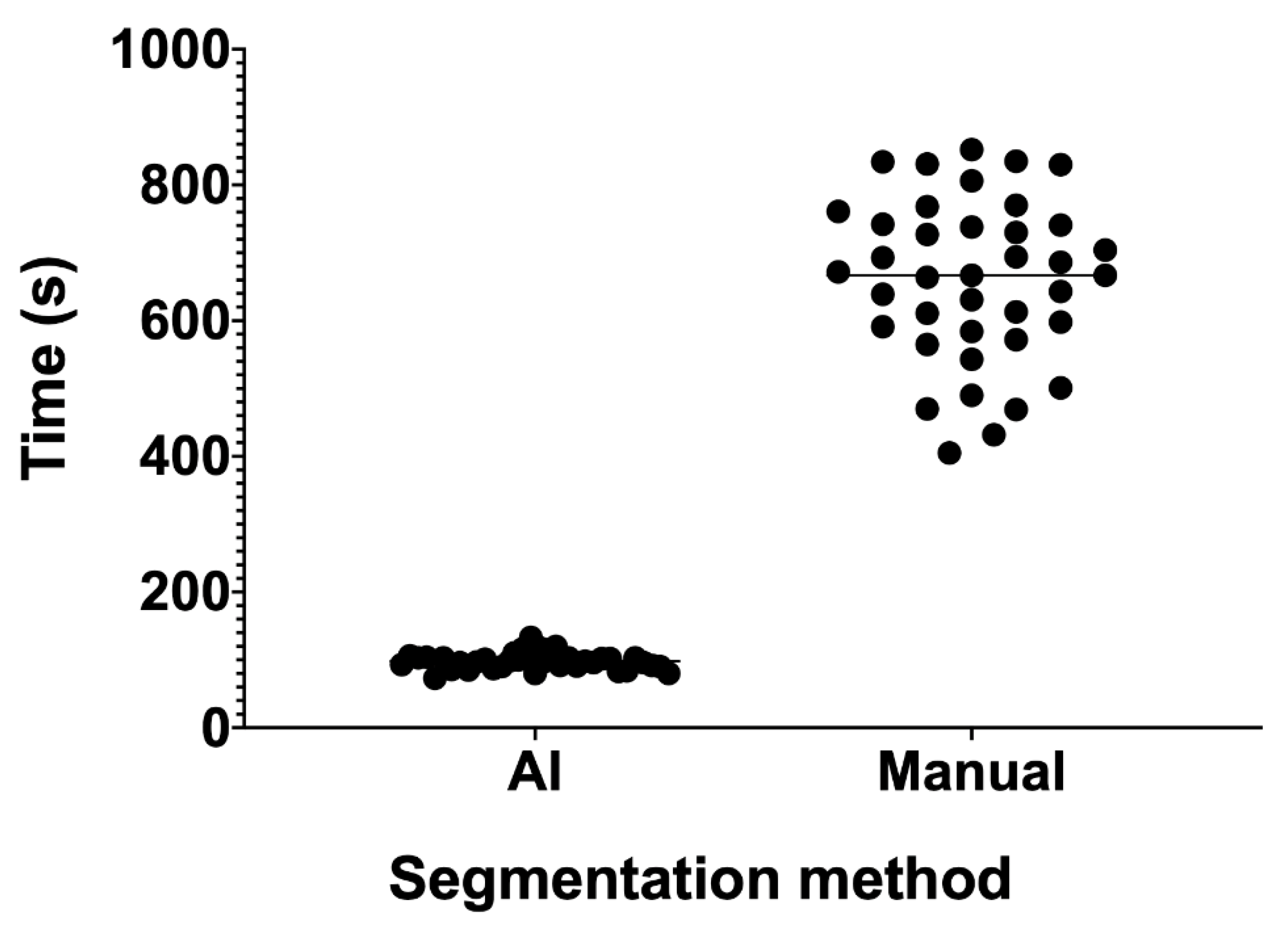

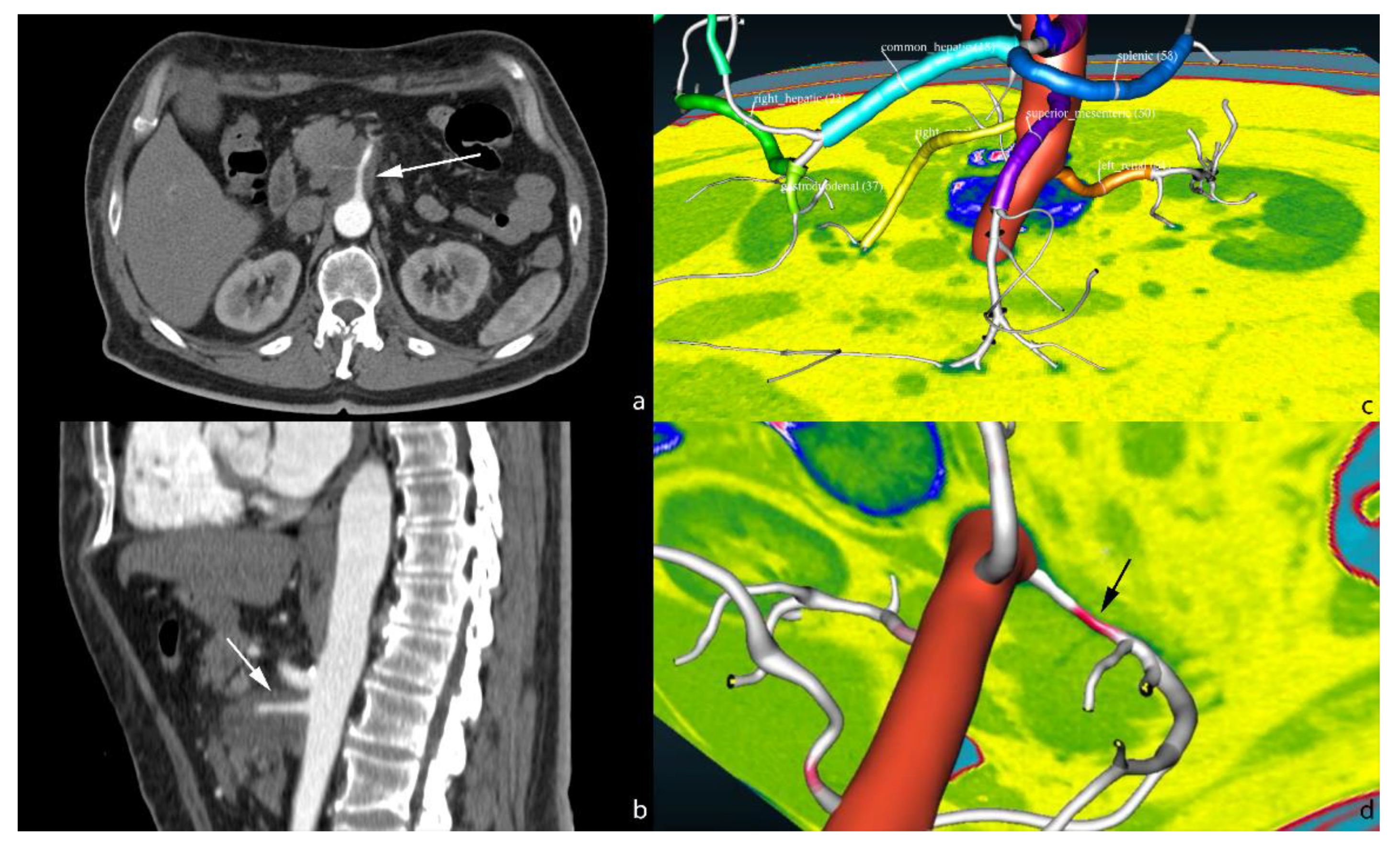

2. Segmentation

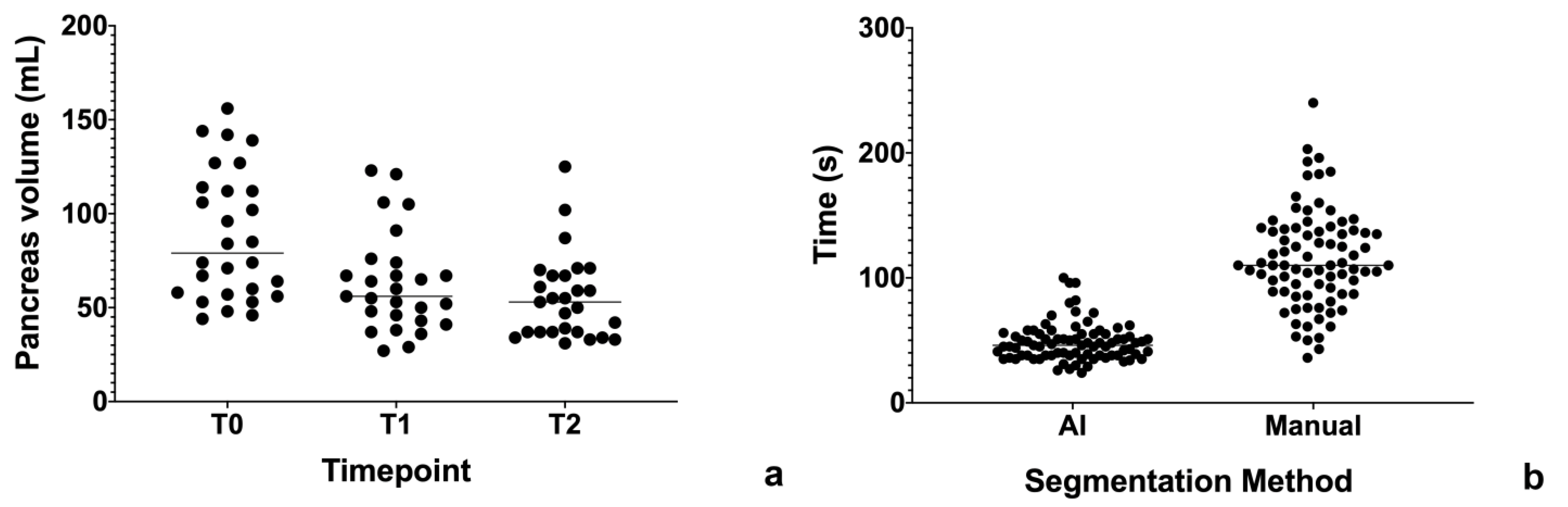

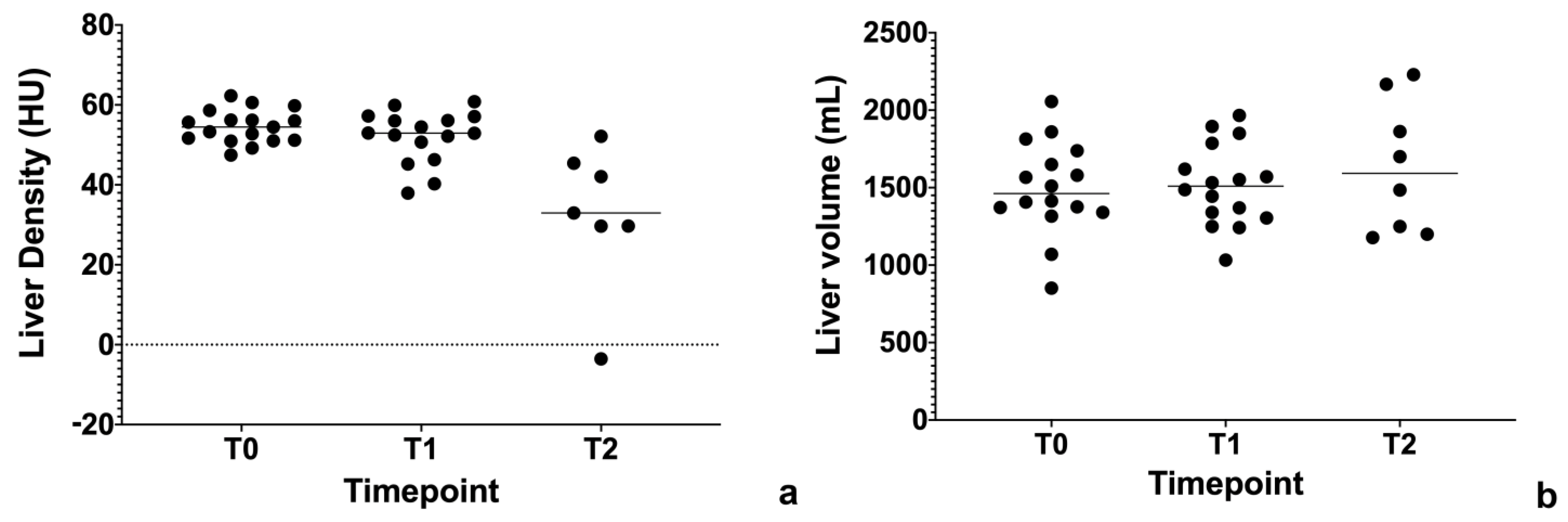

3. Quantification

4. Characterization and Diagnosis

5. Reconstruction and Image Quality Improvement

6. Limitations

7. Future Perspectives

8. Conclusions

Funding

Conflicts of Interest

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Cardobi, N.; Dal Palù, A.; Pedrini, F.; Beleù, A.; Nocini, R.; De Robertis, R.; Ruzzenente, A.; Salvia, R.; Montemezzi, S.; D’Onofrio, M. An Overview of Artificial Intelligence Applications in Liver and Pancreatic Imaging. Cancers 2021, 13, 2162. https://doi.org/10.3390/cancers13092162
