1. Introduction

In many fields, such as engineering, science, and economics, a large number of complex optimization problems arise, including large-scale production scheduling, complex system control, and nonlinear function optimization [1,2,3,4,5]. These problems are often highly nonlinear, multimodal, and constrained. Traditional optimization algorithms struggle with them: they tend to become trapped in local optima and are computationally inefficient, which makes it difficult to meet practical needs. More effective optimization methods are therefore required. Studies of biological evolution and collective behavior in nature have shown that organisms develop efficient adaptation and optimization mechanisms over the course of long-term evolution [6,7,8,9,10]. For example, inheritance, variation, and natural selection enable species to adapt continuously to environmental change and evolve in a better direction; ant colonies find short paths from the nest to a food source by depositing and following pheromones while foraging; and bird flocks achieve efficient group flight through cooperation and information sharing between individuals [11,12,13]. These natural phenomena provide a rich source of inspiration for designing intelligent optimization algorithms.

Swarm intelligence algorithms have developed rapidly since the beginning of the 21st century [14,15,16,17]. On the one hand, different intelligent optimization algorithms have been integrated with one another, for instance, by combining the genetic algorithm (GA) with particle swarm optimization (PSO), or the ant colony algorithm with other methods. Such hybrids exploit the respective strengths of their components and yield more powerful optimization algorithms [18,19]. Pan et al. developed a surrogate-assisted hybrid optimization (SAHO) algorithm to tackle computationally expensive optimization problems [20]. SAHO combines teaching–learning-based optimization with differential evolution, which gives it stronger solving capabilities. Sangeetha et al. introduced a sentiment analysis method called the Taylor–Harris hawk optimization-driven bidirectional long short-term memory network (THHO-BiLSTM) [21]. THHO-BiLSTM incorporates the Taylor series into Harris hawk optimization (HHO) and improves the performance of the BiLSTM classifier by selecting optimal weights for its hidden layers. Yıldız et al. used a new hybrid optimizer, AOA-NM (Arithmetic Optimization Algorithm–Nelder–Mead), to solve engineering design and manufacturing problems [22]. To counter the AOA’s tendency to become trapped in local optima and to improve solution quality, they incorporated the Nelder–Mead local search method into the basic AOA framework, which improved the balance between exploration and exploitation during the search.

On the other hand, these algorithms have been combined with techniques from other fields: intelligent optimization algorithms have been applied to model selection and hyperparameter optimization in machine learning and combined with deep learning, big data processing, and other technologies, promoting the development of these fields [23,24,25]. Zheng et al. proposed a path prediction model, GA-ACO-BP, that integrates the GA, the ant colony algorithm (ACO), and a BP neural network [26]. The model first performs thorough preprocessing of the raw AIS data and uses the BP neural network as the core prediction model. Exploiting the complementary nature of the GA and ACO, the GA determines the initial pheromone concentration of the ant colony, which improves the convergence speed and performance of the traditional BP neural network. Cheng et al. proposed a tool wear monitoring approach in which the firefly algorithm (FA) adjusts the weights and thresholds of a BP neural network to improve the accuracy of online tool wear prediction [27]. Their experimental results validated the accuracy and reliability of this method.

In addition, new intelligent optimization algorithms continue to emerge, such as the artificial bee colony algorithm, the bat algorithm, the firefly algorithm, and the moth–flame optimization algorithm, further enriching the family of intelligent optimization algorithms [28,29,30]. The starfish optimization algorithm (SFOA) is an intelligent optimization algorithm based on the foraging behavior of starfish [31]. It is designed to solve a wide range of optimization problems, especially complex problems that are difficult to handle with traditional methods. The SFOA simulates the wide-ranging foraging of starfish, which lets the algorithm search extensively across the entire solution space, increasing the chance of finding the global optimum and reducing the risk of falling into a local optimum. During the search, the movement strategy of each starfish can be adjusted adaptively according to the search progress: in the early stages, larger steps allow different regions to be explored quickly, whereas near the optimal region the step size can decrease for a more refined search. Although the SFOA performs well, it still has shortcomings, such as insufficient local search capability and slow convergence, and its global search capability needs to be improved. Therefore, this study modifies the SFOA with a joint strategy of sine chaotic mapping, t-distribution mutation, and logarithmic spiral opposition-based learning to obtain an enhanced starfish optimization algorithm (SFOAL). Compared with the SFOA, the SFOAL is found to have stronger local and global search capabilities, faster convergence, and greater stability.

The remainder of this study is organized as follows. In Section 2, the principles underlying the SFOA and SFOAL are described in detail. In Section 3, the algorithms are evaluated, and the experimental results are analyzed. In Section 4, the application of the algorithms to engineering problems is explored. Finally, the key findings of this study are summarized, and directions for future research are suggested, highlighting potential areas for further exploration and improvement.

3. SFOAL

The SFOAL modifies the SFOA with a multi-strategy scheme: sine chaotic map initialization of the population, t-distribution mutation, and logarithmic spiral opposition-based learning for position updates. Multiple strategies explore the search space from different angles, increasing the likelihood that the algorithm finds the global optimum. An algorithm that relies on a single strategy easily falls into a local optimum and cannot escape, so its final result may not be the global optimum. A multi-strategy modification gives the algorithm more opportunities to escape local optima by switching between strategies.

3.1. Initialization with Sine Chaotic Map

The sine chaotic map is highly sensitive to initial conditions: even the slightest change in the initial value can produce a completely different chaotic sequence. This property can be used to initialize multiple distinct populations or search directions in intelligent optimization algorithms, thereby increasing population diversity. For complex multimodal optimization problems, different initial conditions let the algorithm start searching from different points, increasing the probability of finding the global optimum. In the initialization stage of the SFOA, the positions of the starfish are produced randomly. xij denotes the position of the ith starfish in the jth dimension, and the positions are arranged as a matrix of size n × d, where n represents the population size and d represents the dimension of the design variables.

Sine chaotic mapping is a classic one-dimensional map, which the SFOAL uses to generate the initial starfish population. Its mathematical expression is shown in Equation (1), as follows:

where δ is a constant between 0 and 4. When δ is greater than 3.8, the map is in a chaotic state, and the closer δ is to 4, the more pronounced the chaos. Here, δ is equal to four. ubj and lbj are the upper and lower bounds of the jth variable, respectively. xn and xn+1 represent the current position of the starfish and its position after sine chaotic mapping, respectively.
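The sine-map initialization and fitness evaluation can be sketched in Python as follows. This is a minimal illustration, not the authors' code: since Equation (1) is not reproduced here, it assumes the common form z(k+1) = (δ/4)·sin(π·z(k)) with z in [0, 1], one independent chaotic sequence per dimension, and linear scaling of each chaotic value into [lbj, ubj]. The helper names `sine_map_population` and `evaluate` are hypothetical.

```python
import math
import random


def sine_map_population(n, lb, ub, delta=4.0, seed=None):
    """Build an n x d population from sine chaotic sequences (hypothetical helper).

    Assumed map: z_{k+1} = (delta / 4) * sin(pi * z_k), z in [0, 1], delta in (0, 4].
    Each chaotic value z is scaled linearly into [lb_j, ub_j].
    """
    rng = random.Random(seed)
    d = len(lb)
    pop = [[0.0] * d for _ in range(n)]
    for j in range(d):
        z = rng.uniform(0.0, 1.0)  # independent starting value for dimension j
        for i in range(n):
            z = (delta / 4.0) * math.sin(math.pi * z)  # stays inside [0, 1]
            pop[i][j] = lb[j] + z * (ub[j] - lb[j])
    return pop


def evaluate(pop, f):
    """Fitness vector F (length n): one objective value per starfish."""
    return [f(x) for x in pop]


if __name__ == "__main__":
    sphere = lambda x: sum(v * v for v in x)
    X = sine_map_population(n=5, lb=[-100.0] * 3, ub=[100.0] * 3, seed=1)
    F = evaluate(X, sphere)
    print(len(X), len(X[0]), len(F))  # 5 3 5
```

Because sin(π·z) maps [0, 1] into [0, 1], every scaled position already lies inside the bounds, so no repair step is needed at initialization.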

After initialization, the positions of all starfish are evaluated to obtain the fitness value of each starfish; these values are stored and updated in the vector F of size n × 1, as shown in Equation (2):

3.2. Exploration

After position initialization, Gp is compared with a random number in the range (0, 1); when the random number is not greater than Gp, the exploration phase begins. The dimension d is then checked: if d is greater than five, the starfish moves its five arms to explore the environment and updates its movement using the best position information in the population. The model for this stage is presented in Equation (3), as follows:

where the three position terms denote, respectively, the updated position, the current position, and the current best position in dimension p, and p denotes one of five dimensions randomly selected from the d dimensions.

a1 and θ are obtained using Equations (4) and (5), respectively, as follows:

where t and tmax represent the current and maximum iteration numbers, respectively. During the exploration phase, a1 is randomly generated and used in the position update, whereas θ varies with the iteration number. Together, these two parameters scale the influence of the distance between the best position and the current position in the selected update dimension. If an updated position falls outside the boundary, the previous position is kept instead of moving to the updated position, as shown in Equation (6):

where p denotes the update dimension, and lb and ub denote the lower and upper bounds of the design variables, respectively.
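A sketch of the five-arm exploration move (d > 5) together with the boundary rule of Equation (6) follows. The exact forms of Equations (3) to (5) are not reproduced in this text, so the sketch assumes a1 = (2r − 1)π, θ = (π/2)·t/tmax, and a cosine- or sine-weighted step toward the best position, chosen with equal probability; these forms and the helper name `explore_high_dim` are assumptions.

```python
import math
import random


def explore_high_dim(x, best, lb, ub, t, t_max, rng=random):
    """Five-arm exploration move for one starfish when d > 5 (hypothetical helper).

    Assumed forms, not reproduced in the text:
      a1    = (2r - 1) * pi                            random coefficient, Eq. (4)
      theta = (pi / 2) * t / t_max                     grows with iterations, Eq. (5)
      y_p   = x_p + a1 * (best_p - x_p) * cos(theta)   if r <= 0.5
      y_p   = x_p - a1 * (best_p - x_p) * sin(theta)   otherwise
    A coordinate that would leave [lb_p, ub_p] keeps its previous value (Eq. (6)).
    """
    y = list(x)
    theta = (math.pi / 2.0) * t / t_max
    for p in rng.sample(range(len(x)), 5):  # five distinct dimensions, one per arm
        a1 = (2.0 * rng.random() - 1.0) * math.pi
        step = a1 * (best[p] - x[p])
        if rng.random() <= 0.5:
            cand = x[p] + step * math.cos(theta)
        else:
            cand = x[p] - step * math.sin(theta)
        if lb[p] <= cand <= ub[p]:
            y[p] = cand  # accept in-bounds moves only
    return y


if __name__ == "__main__":
    rng = random.Random(0)
    d = 10
    x, best = [1.0] * d, [0.0] * d
    y = explore_high_dim(x, best, [-10.0] * d, [10.0] * d, t=3, t_max=100, rng=rng)
    print(sum(a != b for a, b in zip(x, y)), "coordinates changed")  # at most 5
```

Rejecting an out-of-bounds coordinate, rather than clipping it, mirrors the rule described for Equation (6).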

If d ≤ 5, one arm of the starfish moves to search for food sources using the position information of other starfish. The position update is given in Equation (7), as follows:

where the two position terms are the pth-dimension positions of two selected starfish, b1 and b2 are two random numbers in the range (−1, 1), and p is a dimension randomly chosen from the d dimensions. Et is the energy of the starfish, obtained via Equation (8), as follows:

θ is calculated via Equation (5), and out-of-bounds positions are handled in the same way as before.
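For d ≤ 5, the one-arm move of Equations (7) and (8) can be sketched as below. The sketch assumes Et = ((tmax − t)/tmax)·cos θ and a position update of the form Et·x_p + b1·(x_k1,p − x_p) + b2·(x_k2,p − x_p); these forms, the helper name `explore_low_dim`, and the requirement of at least three starfish are assumptions, not taken from the text.

```python
import math
import random


def explore_low_dim(pop, i, lb, ub, t, t_max, rng=random):
    """One-arm exploration move for starfish i when d <= 5 (hypothetical helper).

    Assumed forms, not reproduced in the text:
      theta = (pi / 2) * t / t_max
      E_t   = ((t_max - t) / t_max) * cos(theta)
      y_p   = E_t * x_p + b1 * (x_k1,p - x_p) + b2 * (x_k2,p - x_p)
    with b1, b2 ~ U(-1, 1), k1 and k2 distinct and different from i,
    and a single random dimension p. Out-of-bounds values keep the old coordinate.
    """
    x = pop[i]
    y = list(x)  # copy, so the input population is not modified
    theta = (math.pi / 2.0) * t / t_max
    energy = (t_max - t) / t_max * math.cos(theta)  # E_t decays toward 0
    k1, k2 = rng.sample([k for k in range(len(pop)) if k != i], 2)
    p = rng.randrange(len(x))
    b1, b2 = rng.uniform(-1.0, 1.0), rng.uniform(-1.0, 1.0)
    cand = energy * x[p] + b1 * (pop[k1][p] - x[p]) + b2 * (pop[k2][p] - x[p])
    if lb[p] <= cand <= ub[p]:
        y[p] = cand
    return y
```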

3.3. Exploitation

During the exploitation phase, two update strategies are used in the SFOA. The first is a parallel bidirectional search strategy that uses information from other starfish and the current best position in the population. First, the distances between the best position and five other starfish are calculated; then two of these distances are randomly selected, and the position of each starfish is updated using the parallel bidirectional search strategy. The distances are obtained via Equation (9), as follows:

where do is the distance between the best position and the other starfish, and op denotes the five randomly chosen starfish.

The position update rule for each starfish during predation is therefore given by Equation (10), as follows:

where r1 and r2 are random numbers between 0 and 1, and do1 and do2 are randomly selected from do.
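The parallel bidirectional search of Equations (9) and (10) can be sketched as follows, assuming the update x_i + r1·do1 + r2·do2 implied by the definitions above and a population of at least five starfish. The helper name `exploit` is hypothetical, and clipping to [lb, ub] stands in for the boundary rule of Equation (12), whose form is not reproduced here.

```python
import random


def exploit(pop, i, best, lb, ub, rng=random):
    """Parallel bidirectional search for starfish i (hypothetical helper).

    d_o = best - x_op for five randomly chosen starfish op         (Eq. (9))
    y   = x_i + r1 * d_o1 + r2 * d_o2,  r1, r2 ~ U(0, 1)            (assumed Eq. (10))
    Clipping to [lb, ub] is an assumption about the boundary rule.
    """
    d = len(best)
    ops = rng.sample(range(len(pop)), 5)  # five randomly chosen starfish
    dists = [[best[j] - pop[o][j] for j in range(d)] for o in ops]
    d1, d2 = rng.sample(dists, 2)  # two of the five distances, picked at random
    r1, r2 = rng.random(), rng.random()
    y = [pop[i][j] + r1 * d1[j] + r2 * d2[j] for j in range(d)]
    return [min(max(v, lo), hi) for v, lo, hi in zip(y, lb, ub)]
```

If every selected starfish already sits at the best position, both distances are zero and the starfish stays where it is, which is the expected fixed point of this rule.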

In addition, starfish are vulnerable to attacks from other predators while foraging. When a predator attempts to catch a starfish, the starfish may sever and discard one of its arms to escape. In the starfish optimization algorithm, this behavior is modeled by a regeneration phase, which is applied only to the last starfish in the population. The position update for this regeneration phase is given in Equation (11), as follows:

For out-of-bounds positions, the following update rules are used (Equation (12)):
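The regeneration step applied to the last starfish might look like the sketch below. Equation (11) is not reproduced in this text; the sketch assumes a shrinking factor exp(−t·n/tmax) applied to the current position and reads Equation (12) as clipping to the bounds. Both forms and the helper name `regenerate` are assumptions.

```python
import math


def regenerate(x, t, t_max, n, lb, ub):
    """Regeneration move for the last starfish (hypothetical helper).

    Assumed form of Eq. (11): y = exp(-t * n / t_max) * x, which scales the
    position toward the origin more strongly as t grows. Clipping to
    [lb, ub] is an assumed reading of Eq. (12).
    """
    shrink = math.exp(-t * n / t_max)
    return [min(max(shrink * v, lo), hi) for v, lo, hi in zip(x, lb, ub)]


if __name__ == "__main__":
    # At t = 0 the factor is 1, so the position is unchanged.
    print(regenerate([2.0, -4.0], t=0, t_max=100, n=30, lb=[-5, -5], ub=[5, 5]))
    # prints [2.0, -4.0]
```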

3.4. T-Distribution Mutation

Updating the population positions with t-distribution mutation introduces perturbations drawn from the t-distribution, which adds a degree of randomness and robustness to the search process. The SFOAL applies t-distribution mutation after each position update of the starfish, which accelerates the convergence of the SFOA and improves its stability and accuracy. The position update is defined in Equations (13) and (14), as follows:

where the symbols in Equations (13) and (14) denote, in turn, the new position after the t-distribution mutation disturbance, the best position found so far by the current discoverer, a random variable following the t-distribution whose degrees of freedom equal the current iteration number, and the position of the ith starfish; Fnew represents the fitness value of the new position, and Fi represents the fitness value of the ith starfish. When Fnew < Fi, the new position obtained by the t-distribution mutation disturbance is better than the current starfish position, and the starfish is moved to the new position.
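The t-distribution mutation with greedy acceptance (Equations (13) and (14)) can be sketched as follows. Python's standard library has no t-distribution sampler, so `student_t` builds one from a normal draw and a chi-square (gamma) draw. The mutation form best + best·t(iter), the independent draw per dimension, the clipping to bounds, and both helper names are assumptions.

```python
import math
import random


def student_t(nu, rng=random):
    """Draw from a t-distribution with nu degrees of freedom.

    t = Z / sqrt(V / nu), Z ~ N(0, 1), V ~ chi-square(nu) = Gamma(shape nu/2, scale 2).
    """
    z = rng.gauss(0.0, 1.0)
    v = rng.gammavariate(nu / 2.0, 2.0)
    return z / math.sqrt(v / nu)


def t_mutation(pop, fit, best, f, t, lb, ub, rng=random):
    """Greedy t-distribution mutation around the best position (hypothetical helper).

    Assumed Eq. (13): x_new = best + best * t(iter), one draw per dimension,
    with degrees of freedom equal to the iteration number t >= 1.
    Eq. (14): starfish i moves to x_new only if F_new < F_i.
    Clipping x_new to [lb, ub] is an extra assumption.
    """
    for i in range(len(pop)):
        cand = [b + b * student_t(t, rng) for b in best]
        cand = [min(max(v, lo), hi) for v, lo, hi in zip(cand, lb, ub)]
        f_new = f(cand)
        if f_new < fit[i]:  # greedy acceptance
            pop[i], fit[i] = cand, f_new
    return pop, fit
```

With one degree of freedom the t-distribution is the Cauchy distribution, whose heavy tails produce occasional large jumps; as the degrees of freedom grow it approaches the standard normal distribution, so tying them to the iteration number makes the perturbations progressively more local.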

3.5. Logarithmic Spiral Opposition-Based Learning

Logarithmic spiral opposition-based learning (LSOBL) focuses specifically on the opposite solution of the best individual within the boundaries. As the iterations progress, the position of the best individual keeps changing, and the search space covered by basic opposition-based learning (OBL) shrinks. LSOBL, by contrast, operates on individuals that move within a smaller region (especially those close to the best solution), which is particularly valuable in later iterations, when population diversity has decreased. This helps prevent premature convergence and reduces the risk of missing the global optimum. The LSOBL model is defined in Equations (15) and (16), as follows:

where the new position term is the position obtained by logarithmic spiral opposition-based learning, and l is a random number between −1 and 1. Flowcharts of the SFOA and SFOAL are presented in Figure 1.
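A possible reading of the LSOBL step is sketched below. Equations (15) and (16) are not reproduced here, so the sketch assumes a basic opposite point lb + ub − best, perturbed along a logarithmic spiral exp(b·l)·cos(2πl) with l drawn from (−1, 1) and b = 1, and accepted only if it improves on the best solution. This is one plausible form, not necessarily the authors'; the helper name `lsobl` is hypothetical.

```python
import math
import random


def lsobl(best, f_best, f, lb, ub, b=1.0, rng=random):
    """Logarithmic spiral opposition step on the best individual (hypothetical helper).

    Assumed forms (Eqs. (15)-(16) are not reproduced in the text):
      opp  = lb + ub - best                                      basic opposite point
      cand = |best - opp| * exp(b * l) * cos(2 * pi * l) + best,  l ~ U(-1, 1)
    The spiral constant b = 1 and the clipping to [lb, ub] are assumptions.
    The candidate replaces the best solution only if it is fitter.
    """
    opp = [lo + hi - x for x, lo, hi in zip(best, lb, ub)]
    ell = rng.uniform(-1.0, 1.0)
    factor = math.exp(b * ell) * math.cos(2.0 * math.pi * ell)
    cand = [abs(x - o) * factor + x for x, o in zip(best, opp)]
    cand = [min(max(v, lo), hi) for v, lo, hi in zip(cand, lb, ub)]
    f_cand = f(cand)
    if f_cand < f_best:
        return cand, f_cand
    return list(best), f_best
```

In this reading, the perturbation radius is the distance between the best point and its opposite, and the spiral factor decides both its scale and its direction along each axis.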

6. Conclusions and Future Work

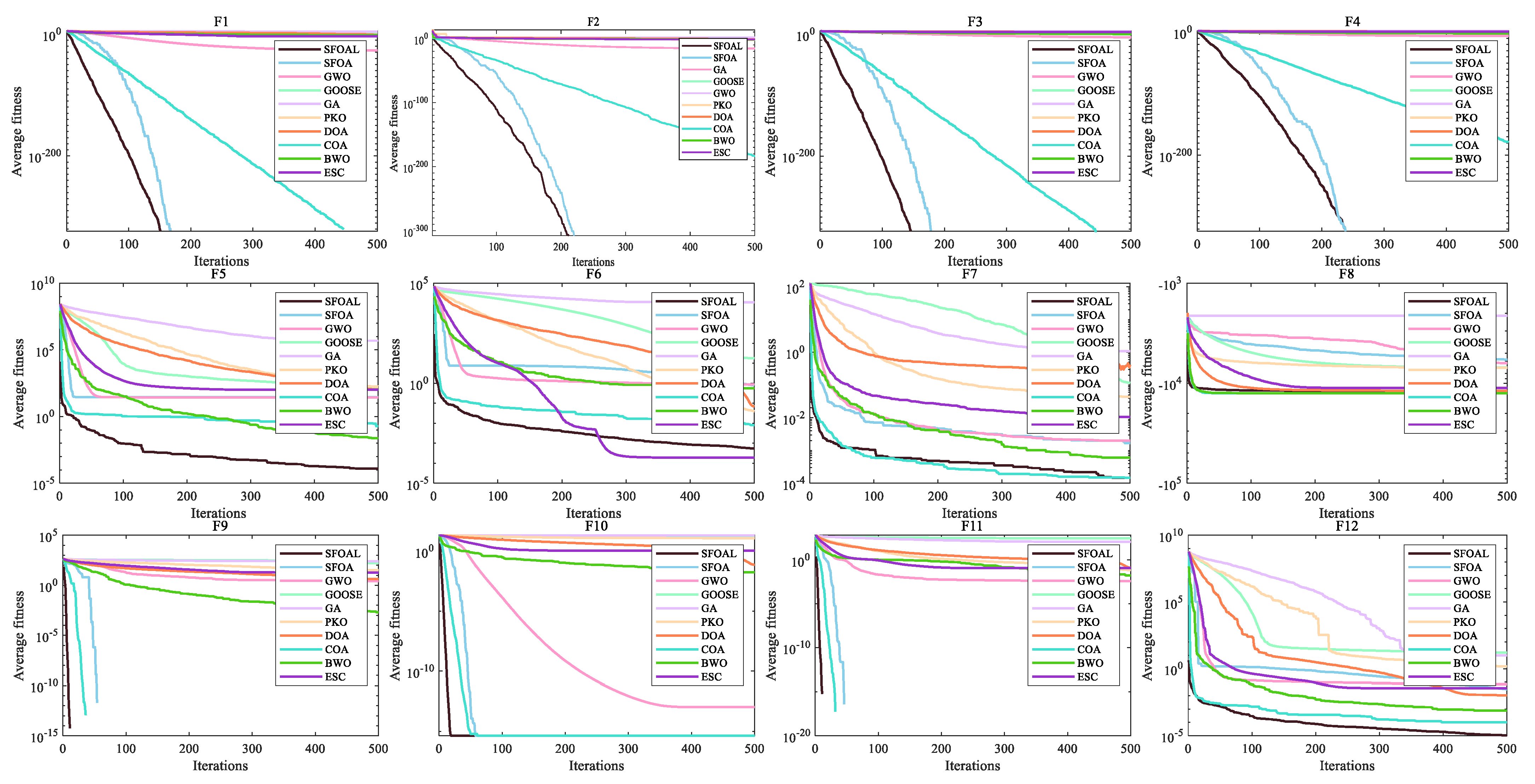

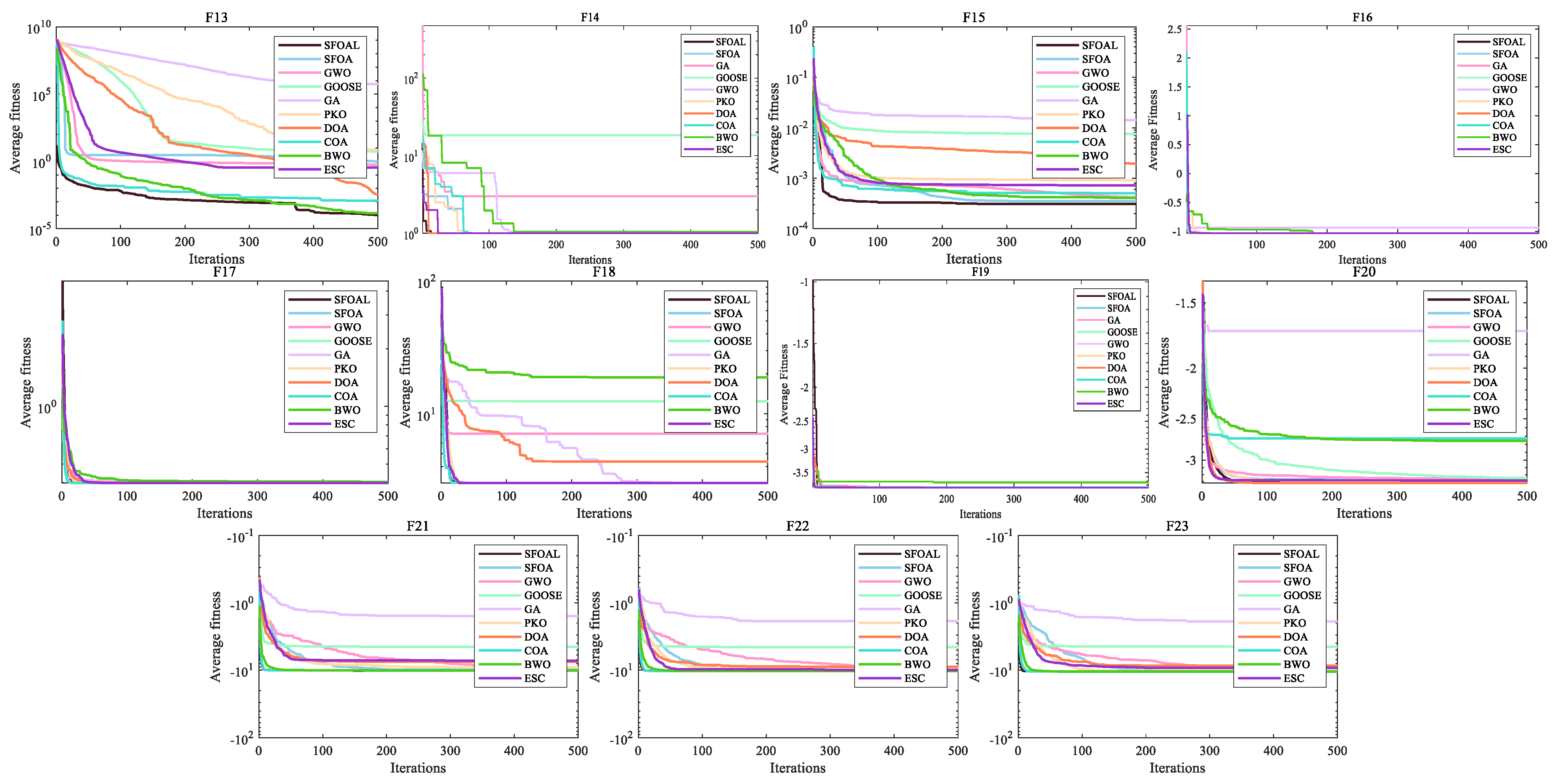

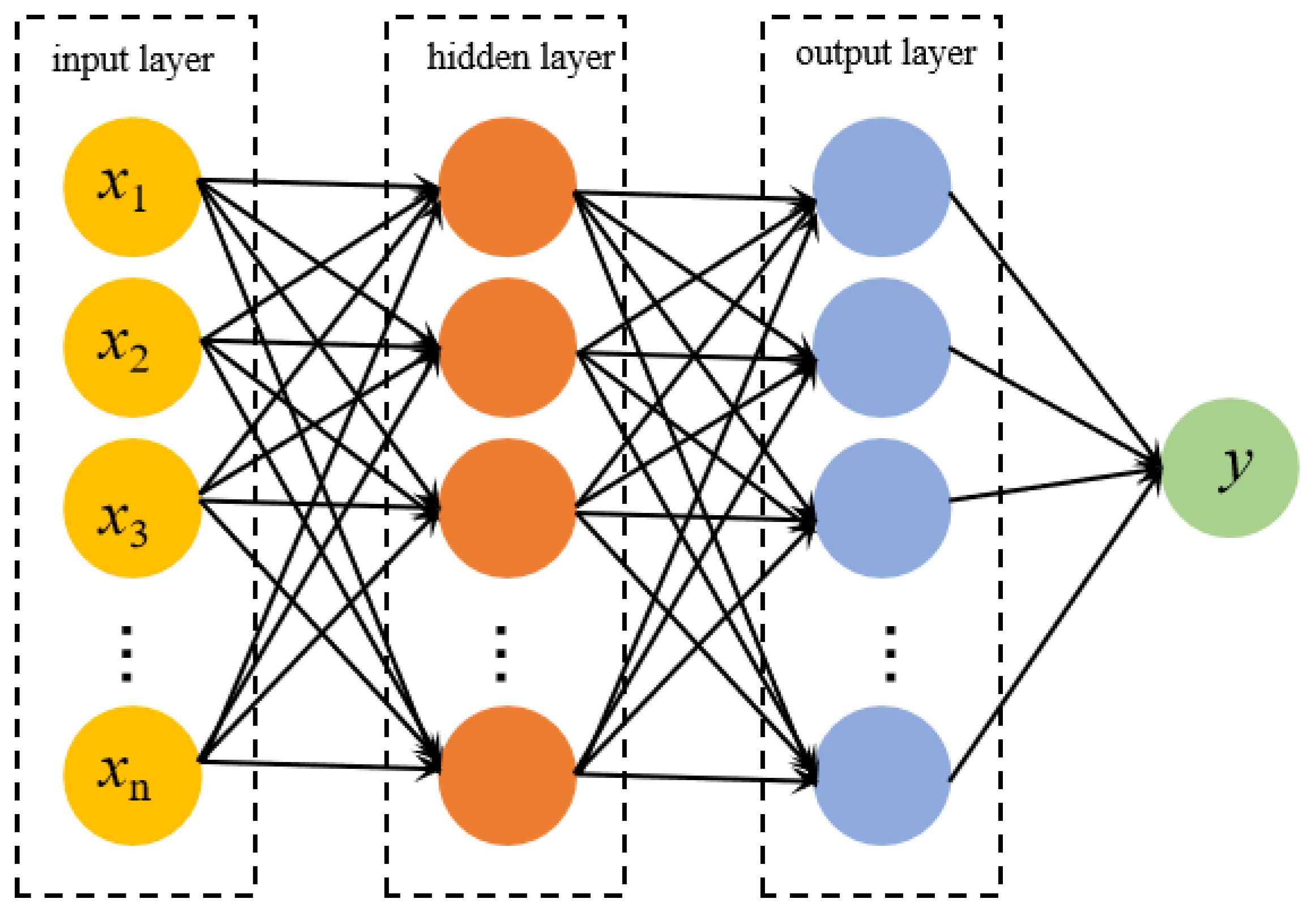

In summary, this study modifies the SFOA by combining sine chaotic mapping, t-distribution mutation, and logarithmic spiral opposition-based learning to achieve strong global optimization ability and stability. A total of 23 benchmark functions, the CEC2021 function set, and an ultra-wideband (UWB) indoor positioning problem are used to evaluate the exploitation, exploration, and convergence abilities of the SFOAL. Tests on the unimodal functions show that the joint strategy enhances the local optimization ability of the algorithm, and the SFOAL converges fastest compared with the SFOA and the other algorithms. Tests on the multimodal and fixed-dimension multimodal functions clearly show that the SFOAL has stronger global optimization ability and a smaller standard deviation than the other algorithms, indicating that the joint strategy prevents the SFOAL from converging prematurely to local optima and effectively improves stability. In the UWB line-of-sight positioning problem, the SFOAL-BP neural network achieves the smallest average position error compared with the randomly initialized BP neural network and the SFOA-BP neural network, showing that it can be used to solve practical application problems.

In future studies, hybridizing the SFOA with other algorithms may further improve its global optimization ability, help avoid premature convergence, improve its stability, and extend it to a wider range of functions. The enhanced SFOAL can also be applied to artificial intelligence and machine learning. Combined with reinforcement learning algorithms, it could explore the number of network layers, the number of neurons, the convolution kernel size, and other architectural parameters to find the best-performing neural network architecture for specific tasks (such as image classification and speech recognition), reducing the time and effort required to design network architectures manually.