Advances in the Automated Identification of Individual Tree Species: A Systematic Review of Drone- and AI-Based Methods in Forest Environments

Abstract

1. Introduction

2. Materials and Methods

- RQ1: What types of instruments were used to collect data? (What type of data is collected?)

- RQ2: What type of AI algorithms were used for tree identification/classification?

- RQ3: How effective are current identification/classification proposals?

2.1. Search Strategy

| Search string |
|---|
| ("tree detection" OR "tree classification" OR "forest classification") |
| AND ("forest" OR "woods") |
| AND ("image" OR "high resolution" OR "RGB" OR "multispectral" OR "hyperspectral" OR "LiDAR") |
| AND ("drone" OR "UAV" OR "Unmanned aerial vehicle") |
| AND ("artificial intelligence" OR "machine learning" OR "deep learning" OR "neural networks") |
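The search string is a conjunction (AND) of five disjunctive (OR) term groups. The sketch below illustrates that logic only; it is not the authors' tooling. It encodes the groups as Python lists and checks whether a title or abstract satisfies the query using plain case-insensitive substring matching. Bibliographic databases apply their own tokenization, stemming, and field rules, so real hit counts will differ. The names `QUERY_GROUPS`, `matches_query`, and `build_query_string` are hypothetical.

```python
# Hypothetical sketch: the search string above as an AND of OR-groups,
# matched with simple case-insensitive substring tests.
QUERY_GROUPS: list[list[str]] = [
    ["tree detection", "tree classification", "forest classification"],
    ["forest", "woods"],
    ["image", "high resolution", "RGB", "multispectral", "hyperspectral", "LiDAR"],
    ["drone", "UAV", "Unmanned aerial vehicle"],
    ["artificial intelligence", "machine learning", "deep learning", "neural networks"],
]


def matches_query(text: str, groups: list[list[str]] = QUERY_GROUPS) -> bool:
    """True if every OR-group has at least one term occurring in the text."""
    lowered = text.lower()
    return all(any(term.lower() in lowered for term in group) for group in groups)


def build_query_string(groups: list[list[str]] = QUERY_GROUPS) -> str:
    """Render the groups back into a database-style Boolean string."""
    clauses = ["(" + " OR ".join(f'"{term}"' for term in group) + ")" for group in groups]
    return " AND ".join(clauses)
```

Substring matching is deliberately loose: "image" also matches "images" and "forest" matches "forests", which roughly mimics the truncation many databases apply, but it can also produce false hits inside longer words.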

2.2. Eligibility Criteria

Studies were excluded if they met any of the following criteria:

1. The study focuses on an algorithm for the general segmentation of trees but does not include species classification or identification.
2. The study focuses on the detection of generic tree parts (trunk, canopy, etc.).
3. The study focuses on the identification or counting of trees in single-species plantations.
4. The study focuses on the classification of types of tree-covered areas.
5. The study proposes an algorithm for estimating tree parameters (height, trunk thickness, etc.).
6. The study is conducted in urban areas or uses satellite images.
7. The manuscript is a conference paper or a thesis.
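Applying these criteria is a reviewer judgment, not an automated step. The decision rule itself can still be written down explicitly. The hypothetical sketch below assumes each candidate record has already been tagged by a reviewer with the numbers of any criteria that apply. A record is kept only if no criterion applies, and exclusions are tallied by the lowest-numbered criterion, which is one possible convention for reporting exclusion reasons. `Record`, `screen`, and the short criterion labels are illustrative names, not taken from the review.

```python
from dataclasses import dataclass, field

# Hypothetical short labels for the seven exclusion criteria above.
EXCLUSION_CRITERIA = {
    1: "segmentation only, no species classification",
    2: "detection of generic tree parts",
    3: "single-species plantation",
    4: "classification of tree-covered area types",
    5: "tree parameter estimation",
    6: "urban area or satellite imagery",
    7: "conference paper or thesis",
}


@dataclass
class Record:
    title: str
    # Criterion numbers a reviewer judged to apply to this record.
    flagged: set[int] = field(default_factory=set)


def screen(records: list[Record]) -> tuple[list[Record], dict[int, int]]:
    """Keep unflagged records; count exclusions by lowest flagged criterion."""
    included: list[Record] = []
    reasons: dict[int, int] = {}
    for rec in records:
        unknown = rec.flagged - set(EXCLUSION_CRITERIA)
        if unknown:
            raise ValueError(f"unknown criteria {sorted(unknown)} for {rec.title!r}")
        if rec.flagged:
            first = min(rec.flagged)
            reasons[first] = reasons.get(first, 0) + 1
        else:
            included.append(rec)
    return included, reasons
```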

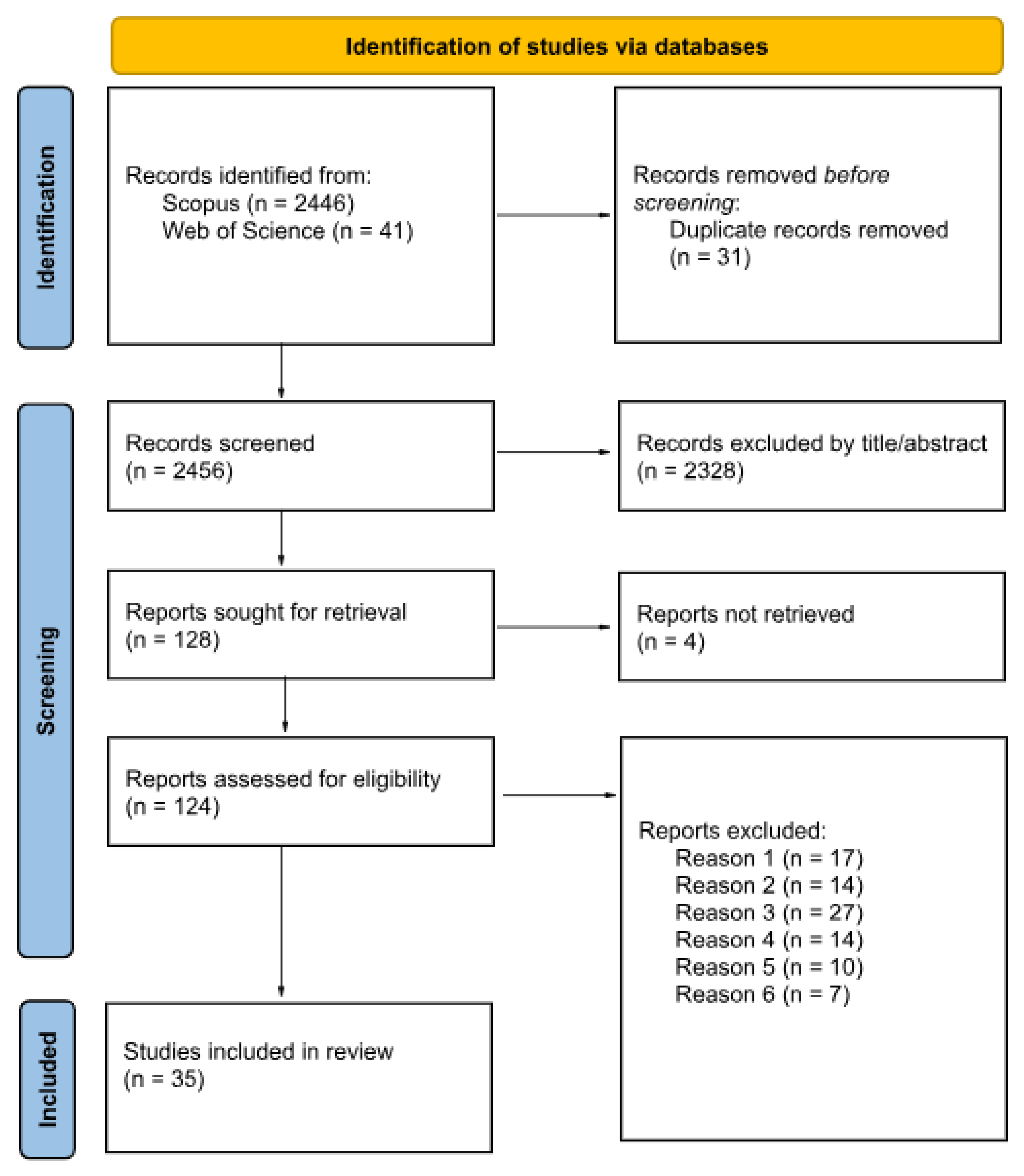

2.3. Article Selection

3. Results

3.1. Studies by Year

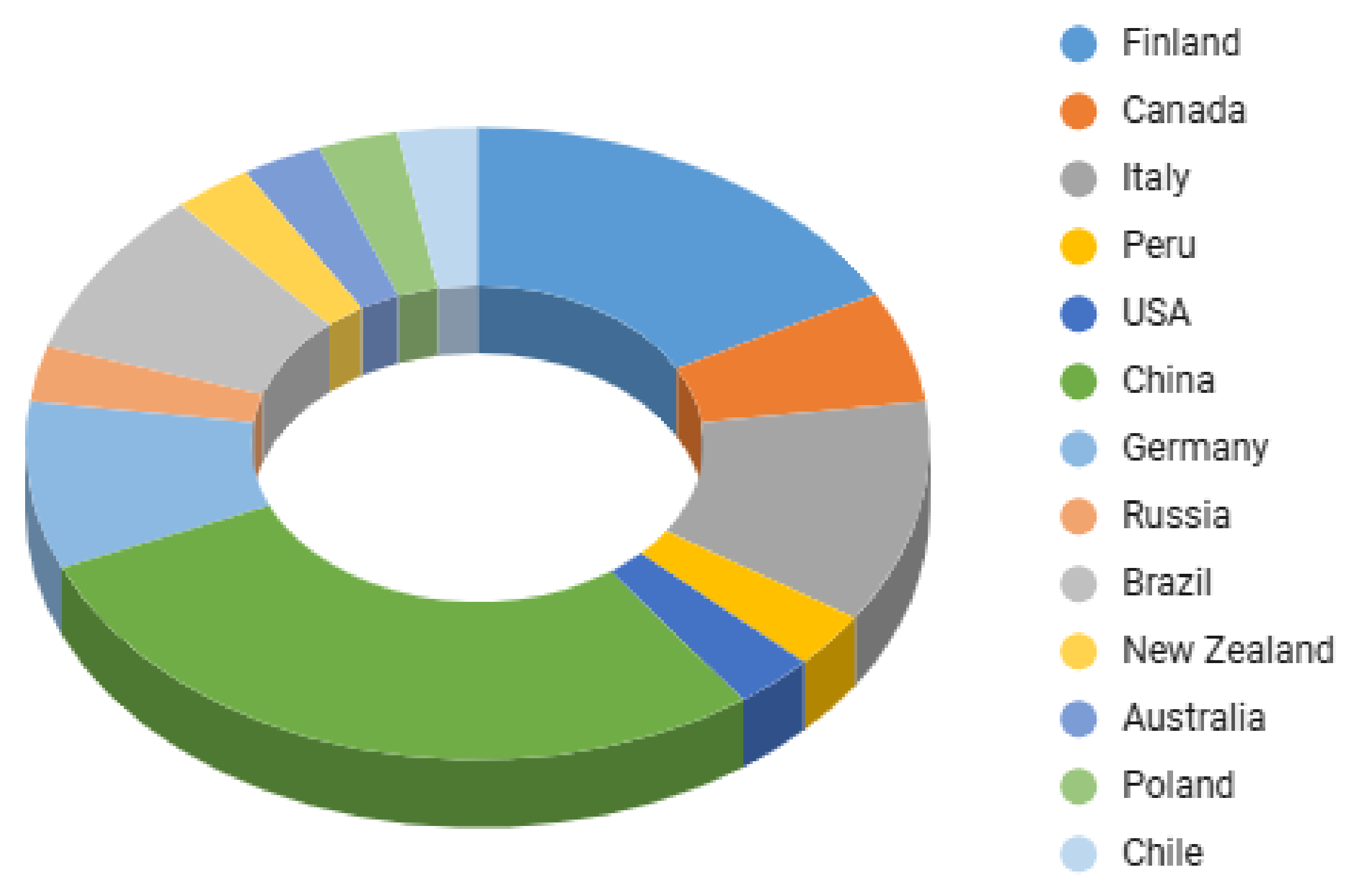

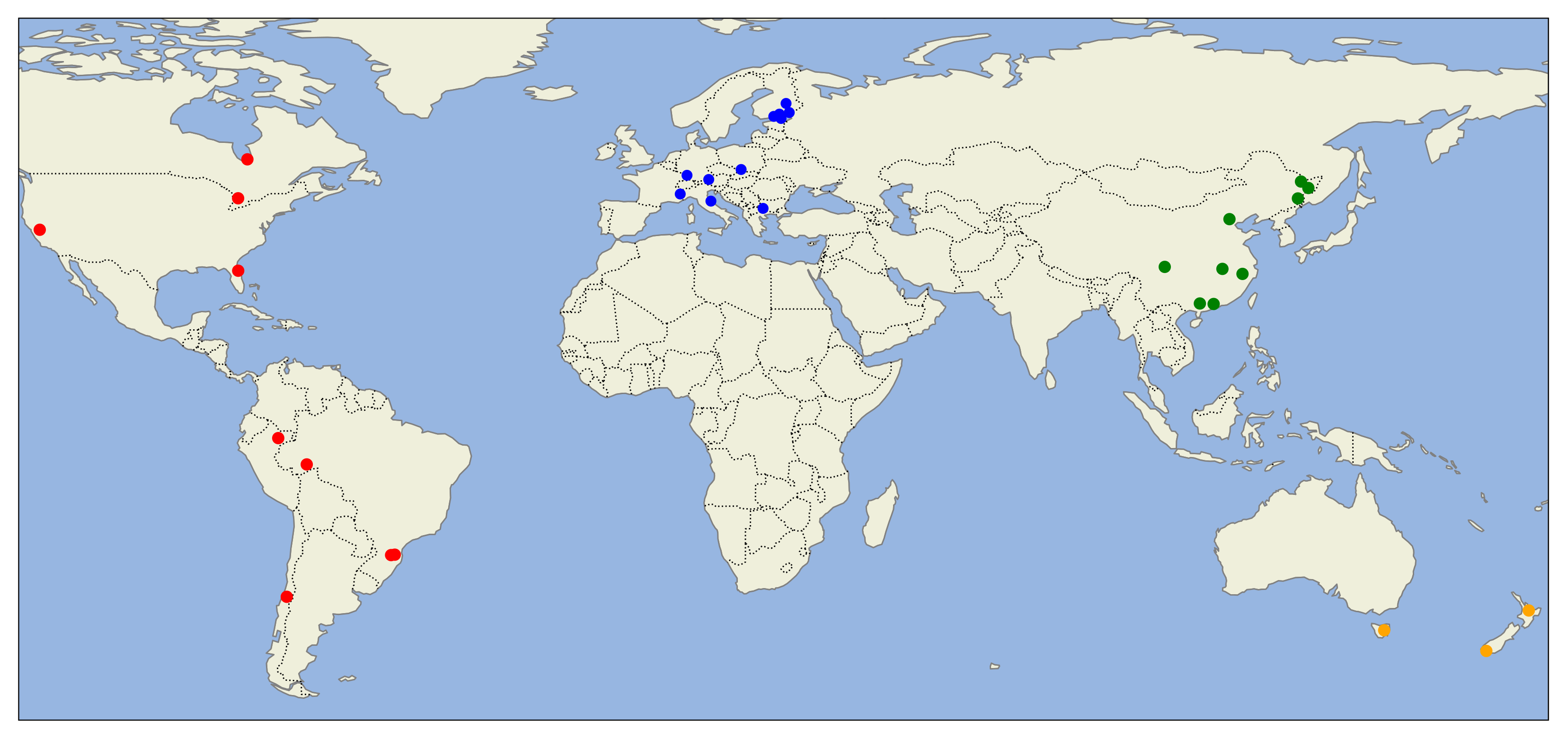

3.2. Areas of Study

- Among individual countries, China leads in the number of contributing authors (28%), reflecting the country's increasing focus on technological solutions to forestry challenges. This aligns with China's extensive forested regions and rapid advances in AI and drone technologies.

- European countries together account for 37% of contributing authors, with Finland, Germany, Poland, and Italy especially active. This reflects Europe's commitment to sustainable forestry and biodiversity protection, supported by policies and initiatives aimed at environmental conservation.

- In the Americas (23% of contributing authors), contributions come predominantly from Brazil, Canada, and the United States. Diverse and ecologically rich forests, such as the Amazon rainforest and the temperate forests of North America, drive research interest in these regions.

- By study location, China (9 studies) is the most frequent single country, with sites such as the Mao’ershan Experimental Forest Farm [15], Haizhu National Wetland Park [16], and Hongya Forestry Farm [17]. This reflects China's efforts to apply advanced technologies to the management of its extensive and diverse forest resources.

- Study sites in the Americas (8 studies) are widely dispersed, including locations in Brazil (e.g., Embrapa Forest [18], Ponte Branca Forest [19]), Peru (e.g., Iquitos [20]), and Canada (e.g., Ontario). These regions span a variety of ecosystems, from the tropical rainforests of South America to the mixed forests of North America.

- Europe (12 studies) covers locations in Germany, Italy, Poland, and Finland. In Germany, studies were conducted in the Black Forest and Kranzberg Forest [21]; Italy contributes studies in the Alps and the Marche Region [22]; and Poland adds research in Bielsko-Biała [23]. Finland features prominently, with studies carried out in Evo [24], Kouvola [25], Eastern Finland [26], and Vesijako Forest [27]. Together, these studies provide data from managed and natural forests in temperate and boreal ecosystems across Europe.

3.3. Tree Identification Tasks Workflow

- LiDAR (Light Detection and Ranging);

- GPS for positioning, with optional RTK (Real-Time Kinematic) correction for enhanced accuracy;

- Imaging cameras: RGB, multispectral (MSI), and/or hyperspectral (HSI).

3.4. Instruments

3.4.1. Drones

3.4.2. Data Acquisition Instruments

3.5. Preprocessing Algorithms

3.6. Tree Segmentation

3.7. Classification Techniques

4. Discussion

4.1. RQ1—What Types of Instruments Were Used to Collect Data? (What Type of Data Is Collected?)

4.2. RQ2—What Type of AI Algorithms Were Used for Tree Identification/Classification?

4.3. RQ3—How Effective Are Current Identification/Classification Proposals?

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| CFS | Correlation-based Feature Selection |
| CHM | Canopy Height Model |
| CNN | Convolutional Neural Network |
| DL | Deep Learning |
| DSM | Digital Surface Model |
| EASA | European Union Aviation Safety Agency |
| ECOC | Error-Correcting Output Codes |
| GIS | Geographic Information System |
| GSD | Ground Sampling Distance |
| HSI | Hyperspectral Imaging |
| LiDAR | Light Detection and Ranging |
| MDPI | Multidisciplinary Digital Publishing Institute |
| ML | Machine Learning |
| MLP | Multi-Layer Perceptron |
| MRS | Multi-Resolution Segmentation |
| MSI | Multispectral Imaging |
| NIR | Near-Infrared |
| OBIA | Object-Based Image Analysis |
| PCA | Principal Component Analysis |
| PPC | Photogrammetric Point Cloud |
| PRISMA | Preferred Reporting Items for Systematic Reviews and Meta-Analyses |
| RE | Red Edge |
| RF | Random Forest |
| RFECV | Recursive Feature Elimination with Cross-Validation |
| ROI | Region of Interest |
| RTK | Real-Time Kinematic |
| SFFS | Sequential Forward Floating Selection |
| SfM | Structure from Motion |
| SVM | Support Vector Machine |
| SWIR | Shortwave Infrared |
| UAV | Unmanned Aerial Vehicle |
| ViT | Vision Transformer |
| VNIR | Visible and Near-Infrared |

References

- United Nations. Forests | Department of Economic and Social Affairs. 2019. Available online: https://sdgs.un.org/topics/forests (accessed on 4 December 2024).

- Titus, B.D.; Brown, K.; Helmisaari, H.S.; Vanguelova, E.; Stupak, I.; Evans, A.; Clarke, N.; Guidi, C.; Bruckman, V.J.; Varnagiryte-Kabasinskiene, I.; et al. Sustainable forest biomass: A review of current residue harvesting guidelines. Energy Sustain. Soc. 2021, 11, 10.

- Li, H.; Yang, W.; Zhang, Y. Application of High-Resolution Remote Sensing Image for Individual Tree Identification of Pinus sylvestris and Pinus tabulaeformis. Wirel. Commun. Mob. Comput. 2021, 2021, 7672762.

- Liu, B.; Hao, Y.; Huang, H.; Chen, S.; Li, Z.; Chen, E.; Tian, X.; Ren, M. TSCMDL: Multimodal Deep Learning Framework for Classifying Tree Species Using Fusion of 2-D and 3-D Features. IEEE Trans. Geosci. Remote Sens. 2023, 61, 4402711.

- Liu, H. Classification of tree species using UAV-based multi-spectral and multi-seasonal images: A multi-feature-based approach. New For. 2024, 55, 173–196.

- Sankararao, A.; Pachamuthu, R.; Choudhary, S. UC-HSI: UAV Based Crop Hyperspectral Imaging Datasets and Machine Learning Benchmark Results. IEEE Geosci. Remote Sens. Lett. 2024, 21, 1.

- Sarma, A.S.; Nidamanuri, R.R. Optimal band selection and transfer in drone-based hyperspectral images for plant-level vegetable crops identification using statistical-swarm intelligence (SSI) hybrid algorithms. Ecol. Inform. 2025, 86, 103051.

- Kourounioti, O.; Temenos, A.; Temenos, N.; Oikonomou, E.; Doulamis, A.; Doulamis, N. UAVINE-XAI: Explainable AI-Based Spectral Band Selection for Vineyard Monitoring Using UAV Hyperspectral Data. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2025, 18, 10095–10104.

- Niu, B.; Feng, Q.; Chen, B.; Ou, C.; Liu, Y.; Yang, J. HSI-TransUNet: A transformer based semantic segmentation model for crop mapping from UAV hyperspectral imagery. Comput. Electron. Agric. 2022, 201, 107297.

- Santos, A.A.d.; Marcato Junior, J.; Araújo, M.S.; Di Martini, D.R.; Tetila, E.C.; Siqueira, H.L.; Aoki, C.; Eltner, A.; Matsubara, E.T.; Pistori, H.; et al. Assessment of CNN-Based Methods for Individual Tree Detection on Images Captured by RGB Cameras Attached to UAVs. Sensors 2019, 19, 3595.

- Lei, L.; Yin, T.; Chai, G.; Li, Y.; Wang, Y.; Jia, X.; Zhang, X. A novel algorithm of individual tree crowns segmentation considering three-dimensional canopy attributes using UAV oblique photos. Int. J. Appl. Earth Obs. Geoinf. 2022, 112, 102893.

- Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. PLoS Med. 2021, 18, e1003583.

- Santos-Gago, J.M.; Ramos-Merino, M.; Valladares-Rodriguez, S.; Álvarez Sabucedo, L.M.; Fernández-Iglesias, M.J.; García-Soidán, J.L. Innovative Use of Wrist-Worn Wearable Devices in the Sports Domain: A Systematic Review. Electronics 2019, 8, 1257.

- Lopez-Barreiro, J.; Garcia-Soidan, J.L.; Alvarez-Sabucedo, L.; Santos-Gago, J.M. Artificial Intelligence-Powered Recommender Systems for Promoting Healthy Habits and Active Aging: A Systematic Review. Appl. Sci. 2024, 14, 10220.

- Zhong, H.; Zhang, Z.; Liu, H.; Wu, J.; Lin, W. Individual Tree Species Identification for Complex Coniferous and Broad-Leaved Mixed Forests Based on Deep Learning Combined with UAV LiDAR Data and RGB Images. Forests 2024, 15, 293.

- Sun, Y.; Huang, J.; Ao, Z.; Lao, D.; Xin, Q. Deep Learning Approaches for the Mapping of Tree Species Diversity in a Tropical Wetland Using Airborne LiDAR and High-Spatial-Resolution Remote Sensing Images. Forests 2019, 10, 1047.

- Huang, H.; Li, F.; Fan, P.; Chen, M.; Yang, X.; Lu, M.; Sheng, X.; Pu, H.; Zhu, P. AMDNet: A Modern UAV RGB Remote-Sensing Tree Species Image Segmentation Model Based on Dual-Attention Residual and Structure Re-Parameterization. Forests 2023, 14, 549.

- Veras, H.F.P.; Ferreira, M.P.; da Cunha Neto, E.M.; Figueiredo, E.O.; Corte, A.P.D.; Sanquetta, C.R. Fusing multi-season UAS images with convolutional neural networks to map tree species in Amazonian forests. Ecol. Inform. 2022, 71, 101815.

- Pereira Martins-Neto, R.; Garcia Tommaselli, A.M.; Imai, N.N.; Honkavaara, E.; Miltiadou, M.; Saito Moriya, E.A.; David, H.C. Tree Species Classification in a Complex Brazilian Tropical Forest Using Hyperspectral and LiDAR Data. Forests 2023, 14, 945.

- Morales, G.; Kemper, G.; Sevillano, G.; Arteaga, D.; Ortega, I.; Telles, J. Automatic Segmentation of Mauritia flexuosa in Unmanned Aerial Vehicle (UAV) Imagery Using Deep Learning. Forests 2018, 9, 736.

- Schiefer, F.; Kattenborn, T.; Frick, A.; Frey, J.; Schall, P.; Koch, B.; Schmidtlein, S. Mapping forest tree species in high resolution UAV-based RGB-imagery by means of convolutional neural networks. ISPRS J. Photogramm. Remote Sens. 2020, 170, 205–215.

- Pierdicca, R.; Nepi, L.; Mancini, A.; Malinverni, E.S.; Balestra, M. UAV4TREE: Deep Learning-based system for automatic classification of tree species using RGB optical images obtained by an unmanned aerial vehicle. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2023, X-1/W1-2023, 1089–1096.

- Michałowska, M.; Rapiński, J.; Janicka, J. Tree species classification on images from airborne mobile mapping using ML.NET. Eur. J. Remote Sens. 2023, 56, 2271651.

- Hakula, A.; Ruoppa, L.; Lehtomäki, M.; Yu, X.; Kukko, A.; Kaartinen, H.; Taher, J.; Matikainen, L.; Hyyppä, E.; Luoma, V.; et al. Individual tree segmentation and species classification using high-density close-range multispectral laser scanning data. ISPRS Open J. Photogramm. Remote Sens. 2023, 9, 100039.

- Tuominen, S.; Näsi, R.; Honkavaara, E.; Balazs, A.; Hakala, T.; Viljanen, N.; Pölönen, I.; Saari, H.; Ojanen, H. Assessment of Classifiers and Remote Sensing Features of Hyperspectral Imagery and Stereo-Photogrammetric Point Clouds for Recognition of Tree Species in a Forest Area of High Species Diversity. Remote Sens. 2018, 10, 714.

- Kukkonen, M.; Lähivaara, T.; Packalen, P. Combination of Lidar Intensity and Texture Features Enable Accurate Prediction of Common Boreal Tree Species With Single Sensor UAS Data. IEEE Trans. Geosci. Remote Sens. 2024, 62, 4401508.

- Nevalainen, O.; Honkavaara, E.; Tuominen, S.; Viljanen, N.; Hakala, T.; Yu, X.; Hyyppä, J.; Saari, H.; Pölönen, I.; Imai, N.N.; et al. Individual Tree Detection and Classification with UAV-Based Photogrammetric Point Clouds and Hyperspectral Imaging. Remote Sens. 2017, 9, 185.

- Sivanandam, P.; Lucieer, A. Tree Detection and Species Classification in a Mixed Species Forest Using Unoccupied Aircraft System (UAS) RGB and Multispectral Imagery. Remote Sens. 2022, 14, 4963.

- Pearse, G.D.; Watt, M.S.; Soewarto, J.; Tan, A.Y.S. Deep Learning and Phenology Enhance Large-Scale Tree Species Classification in Aerial Imagery during a Biosecurity Response. Remote Sens. 2021, 13, 1789.

- Fricker, G.A.; Ventura, J.D.; Wolf, J.A.; North, M.P.; Davis, F.W.; Franklin, J. A Convolutional Neural Network Classifier Identifies Tree Species in Mixed-Conifer Forest from Hyperspectral Imagery. Remote Sens. 2019, 11, 2326.

- National Oceanic and Atmospheric Administration (NOAA) Coastal Services Center. Lidar 101: An Introduction to Lidar Technology, Data, and Applications. 2012. Available online: https://coast.noaa.gov/digitalcoast/training/lidar-101.html (accessed on 10 February 2024).

- Desta Ekaso, F.N.; Kerle, N. Accuracy assessment of real-time kinematics (RTK) measurements on unmanned aerial vehicles (UAV) for direct geo-referencing. Geo-Spat. Inf. Sci. 2020, 23, 165–181.

- Kior, A.; Yudina, L.; Zolin, Y.; Sukhov, V.; Sukhova, E. RGB Imaging as a Tool for Remote Sensing of Characteristics of Terrestrial Plants: A Review. Plants 2024, 13, 1262.

- Prey, L.; Von Bloh, M.; Schmidhalter, U. Evaluating RGB Imaging and Multispectral Active and Hyperspectral Passive Sensing for Assessing Early Plant Vigor in Winter Wheat. Sensors 2018, 18, 2931.

- Martín-Rodríguez, F.; Álvarez Sabucedo, L.M.; Santos-Gago, J.M.; Fernández-Barciela, M. Enhanced Satellite Analytics for Mussel Platform Census Using a Machine-Learning Based Approach. Electronics 2024, 13, 2782.

- Stuart, M.B.; McGonigle, A.J.S.; Willmott, J.R. Hyperspectral Imaging in Environmental Monitoring: A Review of Recent Developments and Technological Advances in Compact Field Deployable Systems. Sensors 2019, 19, 3071.

- Schönberger, J.L.; Frahm, J.M. Structure-from-Motion Revisited. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 4104–4113.

- Westoby, M.; Brasington, J.; Glasser, N.; Hambrey, M.; Reynolds, J. ‘Structure-from-Motion’ photogrammetry: A low-cost, effective tool for geoscience applications. Geomorphology 2012, 179, 300–314.

- Ma, Y.; Zhao, Y.; Im, J.; Zhao, Y.; Zhen, Z. A deep-learning-based tree species classification for natural secondary forests using unmanned aerial vehicle hyperspectral images and LiDAR. Ecol. Indic. 2024, 159, 111608.

- Zhong, H.; Lin, W.; Liu, H.; Ma, N.; Liu, K.; Cao, R.; Wang, T.; Ren, Z. Identification of tree species based on the fusion of UAV hyperspectral image and LiDAR data in a coniferous and broad-leaved mixed forest in Northeast China. Front. Plant Sci. 2022, 13, 964769.

- Sarker, I.H. Machine Learning: Algorithms, Real-World Applications and Research Directions. SN Comput. Sci. 2021, 2, 160.

- Kuzmin, A.; Korhonen, L.; Kivinen, S.; Hurskainen, P.; Korpelainen, P.; Tanhuanpää, T.; Maltamo, M.; Vihervaara, P.; Kumpula, T. Detection of European Aspen (Populus tremula L.) Based on an Unmanned Aerial Vehicle Approach in Boreal Forests. Remote Sens. 2021, 13, 1723.

- Safonova, A.; Hamad, Y.; Dmitriev, E.; Georgiev, G.; Trenkin, V.; Georgieva, M.; Dimitrov, S.; Iliev, M. Individual Tree Crown Delineation for the Species Classification and Assessment of Vital Status of Forest Stands from UAV Images. Drones 2021, 5, 77.

- Franklin, S.; Ahmed, O.; Williams, G. Northern Conifer Forest Species Classification Using Multispectral Data Acquired from an Unmanned Aerial Vehicle. Photogramm. Eng. Remote Sens. 2017, 83, 501–507.

- Zhang, C.; Xia, K.; Feng, H.; Yang, Y.; Du, X. Tree species classification using deep learning and RGB optical images obtained by an unmanned aerial vehicle. J. For. Res. 2021, 32, 1879–1888.

- Cabrera-Ariza, A.M.; Peralta-Aguilera, M.; Henríquez-Hernández, P.V.; Santelices-Moya, R. Using UAVs and Machine Learning for Nothofagus alessandrii Species Identification in Mediterranean Forests. Drones 2023, 7, 668.

- Dersch, S.; Schöttl, A.; Krzystek, P.; Heurich, M. Novel single tree detection by transformers using UAV-based multispectral imagery. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2022, XLIII-B2-2022, 981–988.

- Zhang, C.; Zhou, J.; Wang, H.; Tan, T.; Cui, M.; Huang, Z.; Wang, P.; Zhang, L. Multi-Species Individual Tree Segmentation and Identification Based on Improved Mask R-CNN and UAV Imagery in Mixed Forests. Remote Sens. 2022, 14, 874.

- Belcore, E.; Pittarello, M.; Lingua, A.M.; Lonati, M. Mapping Riparian Habitats of Natura 2000 Network (91E0*, 3240) at Individual Tree Level Using UAV Multi-Temporal and Multi-Spectral Data. Remote Sens. 2021, 13, 1756.

- Franklin, S.E. Pixel- and object-based multispectral classification of forest tree species from small unmanned aerial vehicles. J. Unmanned Veh. Syst. 2018, 6, 195–211.

- Kattenborn, T.; Eichel, J.; Wiser, S.; Burrows, L.; Fassnacht, F.; Schmidtlein, S. Convolutional Neural Networks accurately predict cover fractions of plant species and communities in Unmanned Aerial Vehicle imagery. Remote Sens. Ecol. Conserv. 2020, 6, 472–486.

- Gini, R.; Sona, G.; Ronchetti, G.; Passoni, D.; Pinto, L. Improving Tree Species Classification Using UAS Multispectral Images and Texture Measures. ISPRS Int. J. Geo-Inf. 2018, 7, 315.

- La Rosa, L.E.C.; Sothe, C.; Feitosa, R.Q.; de Almeida, C.M.; Schimalski, M.B.; Oliveira, D.A.B. Multi-task fully convolutional network for tree species mapping in dense forests using small training hyperspectral data. ISPRS J. Photogramm. Remote Sens. 2021, 179, 35–49.

- Nezami, S.; Khoramshahi, E.; Nevalainen, O.; Pölönen, I.; Honkavaara, E. Tree Species Classification of Drone Hyperspectral and RGB Imagery with Deep Learning Convolutional Neural Networks. Remote Sens. 2020, 12, 1070.

- European Union Aviation Safety Agency. Easy Access Rules for Unmanned Aircraft Systems (Regulation (EU) 2019/947 and 2019/945). 2023. Available online: https://www.easa.europa.eu/sites/default/files/dfu/D0593E_2024-07-10_06.26.37_EAR-for-Unmanned-Aircraft-Systems.pdf (accessed on 10 February 2024).

- Liao, L.; Cao, L.; Xie, Y.; Luo, J.; Wang, G. Phenotypic Traits Extraction and Genetic Characteristics Assessment of Eucalyptus Trials Based on UAV-Borne LiDAR and RGB Images. Remote Sens. 2022, 14, 765.

- Zhao, D.; Pang, Y.; Liu, L.; Li, Z. Individual Tree Classification Using Airborne LiDAR and Hyperspectral Data in a Natural Mixed Forest of Northeast China. Forests 2020, 11, 303.

- Wang, C. At-Sensor Radiometric Correction of a Multispectral Camera (RedEdge) for sUAS Vegetation Mapping. Sensors 2021, 21, 8224.

- Rajab Pourrahmati, M.; Baghdadi, N.; Fayad, I. Comparison of GEDI LiDAR Data Capability for Forest Canopy Height Estimation over Broadleaf and Needleleaf Forests. Remote Sens. 2023, 15, 1522.

- Zhong, L.; Dai, Z.; Fang, P.; Cao, Y.; Wang, L. A Review: Tree Species Classification Based on Remote Sensing Data and Classic Deep Learning-Based Methods. Forests 2024, 15, 852.

- Ghosh, A.; Fassnacht, F.E.; Joshi, P.; Koch, B. A framework for mapping tree species combining hyperspectral and LiDAR data: Role of selected classifiers and sensor across three spatial scales. Int. J. Appl. Earth Obs. Geoinf. 2014, 26, 49–63.

- Weinstein, B.G.; Marconi, S.; Bohlman, S.A.; Zare, A.; White, E.P. Cross-site learning in deep learning RGB tree crown detection. Ecol. Inform. 2020, 56, 101061.

| Drone | Type | EASA Class | Weight | Paper |
|---|---|---|---|---|
| eBee Plus RTK | Fixed Wing | C2 | 1.1 kg | [42] |
| JOUAV CW-15 | Fixed Wing | C3 | 14 kg | [5] |
| SenseFly eBee Series | Fixed Wing | C2 | 1–1.5 kg | [42,43,44] |
| Aeryon SkyRanger | Quadcopter | C2 | 2.5 kg | [20] |
| Avartek Boxer | Quadcopter | C3 | 25 kg | [26] |
| DJI Inspire 2 | Quadcopter | C2 | 4 kg | [45] |
| DJI Matrice 200/210 | Quadcopter | C3 | 4 kg | [42] |
| DJI Matrice 300 RTK | Quadcopter | C3 | 6.3 kg | [15,40,46] |
| DJI Matrice 600 Pro | Hexacopter | C3 | 10 kg | [39,47] |
| DJI Mavic Series | Quadcopter | C1 | 0.7–0.9 kg | [20,48] |
| DJI Phantom 4 Series | Quadcopter | C2 | 1.4 kg | [4,17,18,21,28,42,43,49] |
| ING Robotic Responder | Quadcopter | C3 | 3 kg | [50] |
| Multirotor GV2000 | Quadcopter | C3 | – | [4] |
| Okto-XL | Octocopter | C3 | – | [21,51] |
| Pegasus D200 | Hexacopter | C3 | – | [40] |
| Tarot 960 | Hexacopter | C3 | 5 kg | [25,27] |
| TurboAce Matrix-E | Quadcopter | C3 | 2.2 kg | [20] |
| Generic UAV | – | – | – | [19,22,52,53,54] |
| Helicopter | – | – | – | [23,24] |
| Small Airplane | – | – | – | [16,30] |

| RGB | MSI | HSI | LiDAR | Articles |
|---|---|---|---|---|
| ✓ | | | | [17,18,20,22,29,45,46,48,51] |
| ✓ | ✓ | | | [25,28,42,43,44,49,50,52] |
| ✓ | | ✓ | | [27,53,54] |
| ✓ | | | ✓ | [4,15,16,21,23] |
| ✓ | | ✓ | ✓ | [56] |
| | ✓ | | | [5,24,47] |
| | | ✓ | ✓ | [19,30,39,40,57] |
| | | | ✓ | [26] |

| Instrument | Paper | Resolution | Weight |
|---|---|---|---|
| Canon 100D (Canon Inc., Tokyo, Japan) | [51] | 18 MP (5184 × 3456 pixels) | 575 g |
| FC6310R RGB (DJI, Shenzhen, China) | [4] | 20 MP (5472 × 3648 pixels) | 200 g |
| FC7303 RGB (DJI, Shenzhen, China) | [22] | 12 MP (4000 × 3000 pixels) | 200 g |
| Nikon J1 Camera (Nikon Corporation, Tokyo, Japan) | [52] | 10.1 MP (3872 × 2592 pixels) | 277 g |
| PhaseOne iXU 100 MP (Phase One, Copenhagen, Denmark) | [23] | 100 MP (11,664 × 8750 pixels) | 1200 g |
| Samsung NX1000 (Samsung Elec. Co., Suwon, South Korea) | [54] | 20.3 MP (5472 × 3648 pixels) | 222 g |
| Samsung NX300 (Samsung Elec. Co., Suwon, South Korea) | [25] | 20.3 MP (5472 × 3648 pixels) | 331 g |
| Sony Alpha 7R (Sony Corporation, Tokyo, Japan) | [21] | 36.4 MP (7360 × 4912 pixels) | 465 g |
| Sony DSC-WX220 (Sony Corporation, Tokyo, Japan) | [44,50] | 18.2 MP (4896 × 3672 pixels) | 121 g |
| Sony Nex-7 CMOS (Sony Corporation, Tokyo, Japan) | [20] | 24.3 MP (6000 × 4000 pixels) | 291 g |
| Zenmuse P1 (DJI, Shenzhen, China) | [46] | 45 MP (8192 × 5460 pixels) | 900 g |
| Zenmuse X5S (DJI, Shenzhen, China) | [45] | 20.8 MP (5280 × 3956 pixels) | 430 g |
| MT9F002 (ON Semiconductor, Phoenix, USA) | [20] | 14 MP (4384 × 3288 pixels) | 150 g |
| Generic RGB Camera | [16,17,18,27,28,29,43,48,49,53,57] | – | – |
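Image resolution translates into ground detail through the ground sampling distance (GSD). For a nadir frame camera over flat terrain, GSD = (sensor width × flight altitude) / (focal length × image width in pixels). The sketch below computes it in cm/pixel. The image width of 5472 px matches the FC6310R row above. The 13.2 mm sensor width and 8.8 mm focal length are assumed values typical of a 1-inch sensor; they are not given in the table, so check the manufacturer's datasheet. The function name is hypothetical.

```python
def ground_sampling_distance_cm(
    sensor_width_mm: float,
    focal_length_mm: float,
    image_width_px: int,
    altitude_m: float,
) -> float:
    """Nadir GSD in cm/pixel for a frame camera over flat terrain (hypothetical helper)."""
    if min(sensor_width_mm, focal_length_mm, image_width_px, altitude_m) <= 0:
        raise ValueError("all inputs must be positive")
    # (mm * m) / (mm * px) gives metres per pixel; multiply by 100 for cm/px.
    return (sensor_width_mm * altitude_m) / (focal_length_mm * image_width_px) * 100.0


# Assumed 1-inch sensor values (13.2 mm width, 8.8 mm focal length) at 100 m altitude.
gsd = ground_sampling_distance_cm(13.2, 8.8, 5472, 100.0)  # about 2.74 cm/pixel
```

Because GSD scales linearly with altitude, halving the flight height halves the pixel footprint, at the cost of more images and longer flights to cover the same area.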

| Instrument | Paper | Bands | Weight |
|---|---|---|---|
| Tetracam MiniCam MCA6 (Tetracam Inc., CA, USA) | [50] | RGB, NIR | 700 g |
| Tetracam ADC Lite (Tetracam Inc., CA, USA) | [52] | RG, NIR | 200 g |
| MicaSense RedEdge-MX Dual (MicaSense Inc., WA, USA) | [47] | RGB, RE, NIR, plus 5 bands | 510 g |
| Parrot Sequoia (Parrot SA, Paris, France) | [42,43,44,50] | G, R, RE, NIR | 110 g |
| MicaSense RedEdge-MX (MicaSense Inc., WA, USA) | [5,28,42] | RGB, RE, NIR | 230 g |
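Red, red-edge, and NIR bands like these are commonly combined into normalized-difference vegetation indices. Two examples are NDVI = (NIR − Red) / (NIR + Red) and NDRE = (NIR − RE) / (NIR + RE). The sketch below assumes the bands are co-registered reflectance arrays of equal shape. It is a generic illustration and does not say which indices the reviewed studies computed. Function names are hypothetical.

```python
import numpy as np


def normalized_difference(a: np.ndarray, b: np.ndarray, eps: float = 1e-9) -> np.ndarray:
    """(a - b) / (a + b) per pixel; pixels with a near-zero denominator become NaN."""
    a = np.asarray(a, dtype=np.float64)
    b = np.asarray(b, dtype=np.float64)
    if a.shape != b.shape:
        raise ValueError("bands must share the same shape")
    denom = a + b
    out = np.full(a.shape, np.nan)
    valid = np.abs(denom) > eps
    out[valid] = (a[valid] - b[valid]) / denom[valid]
    return out


def ndvi(nir: np.ndarray, red: np.ndarray) -> np.ndarray:
    """Normalized Difference Vegetation Index from NIR and red reflectance."""
    return normalized_difference(nir, red)


def ndre(nir: np.ndarray, red_edge: np.ndarray) -> np.ndarray:
    """Normalized Difference Red Edge index from NIR and red-edge reflectance."""
    return normalized_difference(nir, red_edge)
```

Averaging such index rasters within each delineated crown is one simple way to turn them into per-tree classifier features.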

| Instrument | Paper | Spectral Range/Bands | Weight |
|---|---|---|---|
| AVIRIS Hyperspectral NG (NASA, CA, USA) | [30] | 380–2510 nm, 425 bands | 100 kg |
| CASI-1500 (ITRES Research Ltd., Alberta, Canada) | [57] | 380–1050 nm, 288 bands | 15 kg |
| Fabry-Perot FPI (VTT Research Centre, Espoo, Finland) | [53,54] | 400–1000 nm | 2–3 kg |
| Hyperspectral Camera (FPI) | [27,39] | – | 2–3 kg |
| NEON Imaging Spectrometer (NEON, CO, USA) | [56] | 380–2500 nm, 426 bands | 20 kg |
| Resonon Pika L (Resonon Inc., MT, USA) | [40] | 400–1000 nm, 281 bands | 1.5 kg |
| Rikola FPI-based (Rikola Ltd., Oulu, Finland) | [25] | VNIR 400–1000 nm, 40 bands | 2–3 kg |
| Rikola Hyperspectral (Rikola Ltd., Oulu, Finland) | [19] | VNIR 500–900 nm, 50 bands | 2–3 kg |
| Xenics Bobcat-1.7-320 (Xenics NV, Leuven, Belgium) | [25] | 900–1700 nm | 0.5 kg |
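With hundreds of contiguous bands (e.g., 281 for the Pika L or 425 for AVIRIS-NG), neighbouring bands are highly correlated. Principal component analysis (PCA, listed among this review's abbreviations) is one common way to compress them before classification. The sketch below reshapes a (rows, cols, bands) cube into a pixel matrix, centres it, and projects it onto the leading principal axes obtained from an SVD. It is a generic illustration, not the preprocessing of any specific study, and `pca_reduce` is a hypothetical name.

```python
import numpy as np


def pca_reduce(cube: np.ndarray, n_components: int) -> tuple[np.ndarray, np.ndarray]:
    """Project a (rows, cols, bands) cube onto its first principal components.

    Returns the reduced cube (rows, cols, n_components) and the explained
    variance ratio of each kept component.
    """
    rows, cols, bands = cube.shape
    if not 0 < n_components <= min(rows * cols, bands):
        raise ValueError("n_components must be in 1..min(pixels, bands)")
    pixels = cube.reshape(-1, bands).astype(np.float64)
    centered = pixels - pixels.mean(axis=0)
    # SVD of the centred pixel matrix: rows of vt are principal axes,
    # sorted by decreasing singular value.
    _, s, vt = np.linalg.svd(centered, full_matrices=False)
    variance = s**2
    ratio = variance[:n_components] / variance.sum()
    scores = centered @ vt[:n_components].T
    return scores.reshape(rows, cols, n_components), ratio
```

PCA maximizes retained variance, not class separability, so some studies prefer supervised band or feature selection (e.g., the CFS, SFFS, or RFECV methods in the abbreviations list) when the goal is species discrimination.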

| Instrument | Paper | Wavelength | Weight |
|---|---|---|---|
| Riegl mini VUX-3UAV (RIEGL GmbH, Horn, Austria) | [24] | 905 nm | 1.55 kg |
| Riegl VQ-840-G (RIEGL GmbH, Horn, Austria) | [24] | 532 nm | 12 kg |
| Riegl VUX-1HA (RIEGL GmbH, Horn, Austria) | [24] | NIR 1550 nm | 3.5 kg |
| Riegl VUX-1 (RIEGL GmbH, Horn, Austria) | [26] | NIR 1550 nm | 3.5 kg |
| Riegl VUX-240 (RIEGL GmbH, Horn, Austria) | [23] | NIR 1550 nm | 4.3 kg |
| RIEGL VUX-1LR (RIEGL GmbH, Horn, Austria) | [4] | NIR 1550 nm | 3.5 kg |
| Trimble Harrier 68i Scanner (Trimble Inc., CA, USA) | [16] | 1064 nm | 3 kg |
| RIEGL LMS-Q680i LiDAR (RIEGL GmbH, Horn, Austria) | [19] | NIR 1550 nm | 17.5 kg |
| LiDAR Gemini Mapper (3D Laser Mapping Ltd., Nottingham, UK) | [56] | 1550 nm | 87 kg |
| LiteMapper 5600 (RIEGL and IGI mbH, Kreuztal, Germany) | [57] | 1550 nm | 16 kg |
| Riegl mini VUX-1UAV (RIEGL GmbH, Horn, Austria) | [39,40] | 905 nm | 1.55 kg |
| Zenmuse L1 (DJI, Shenzhen, China) | [15] | 905 nm NIR + RGB | 1 kg |
| Generic LiDAR | [21,30] | – | – |
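A canopy height model (CHM) is commonly derived from LiDAR or photogrammetric point clouds. Both a DSM and a digital terrain model (DTM, a bare-ground surface) are gridded on the same raster, and the CHM is their difference: CHM = DSM − DTM. The sketch below assumes the two rasters are already co-registered arrays in metres. It raises small negative differences (interpolation noise) to a floor value. The function name and the clipping choice are illustrative assumptions.

```python
import numpy as np


def canopy_height_model(dsm: np.ndarray, dtm: np.ndarray, min_height: float = 0.0) -> np.ndarray:
    """CHM = DSM - DTM on co-registered rasters; values below min_height are raised to it.

    NaN cells (no data) in either input stay NaN in the output.
    """
    if dsm.shape != dtm.shape:
        raise ValueError("DSM and DTM must share the same grid")
    chm = dsm.astype(np.float64) - dtm.astype(np.float64)
    return np.maximum(chm, min_height)
```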

| Category | Software | Papers |
|---|---|---|
| Image Alignment & Orthomosaic | Agisoft Metashape, PhotoScan, Pix4Dmapper, Pix4UAV, Agisoft | [5,18,25,27,28,42,44,50,52] |
| Specialized LiDAR Software | LAStools, LiDAR360, FUSION, DASOS | [15,16,19,28,40] |
| GIS & Remote Sensing Tools | ENVI, ArcGIS, ArcGIS Pro, QGIS, eCognition | [5,15,16,17,21,27,28,40,43,44,46,48,49,50,52,57] |
| Image Annotation & Preprocessing | LabelImg, Capture One Pro, IrfanView | [15,23] |
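Before indices or spectra are compared across flights, raw digital numbers (DN) are usually converted to reflectance. The simplest, tool-independent variant uses one calibration panel of known reflectance: reflectance ≈ DN × (panel reflectance / mean panel DN). This assumes a linear sensor response with zero dark offset and stable illumination between the panel shot and the flight. The reviewed studies may have relied on vendor pipelines or more complete at-sensor corrections instead. The sketch and its name `panel_calibrate` are hypothetical.

```python
import numpy as np


def panel_calibrate(band_dn: np.ndarray, panel_dn: np.ndarray, panel_reflectance: float) -> np.ndarray:
    """Single-panel conversion of raw DN to reflectance (zero-offset linear model)."""
    if not 0.0 < panel_reflectance <= 1.0:
        raise ValueError("panel_reflectance must be in (0, 1]")
    panel_mean = float(np.mean(panel_dn))
    if panel_mean <= 0:
        raise ValueError("panel pixels must have a positive mean DN")
    # One gain per band: known reflectance divided by the panel's mean DN.
    gain = panel_reflectance / panel_mean
    return band_dn.astype(np.float64) * gain
```

With two or more panels of different reflectance, the same idea extends to an empirical line fit with both gain and offset.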

| Category | Segmentation Algorithm | Papers |
|---|---|---|
| Traditional Algorithms | Local maxima (CHM-based) | [16,25,27,42] |
| | Multi-resolution segmentation | [21,28,44,49,50] |
| | Individual tree crown (ITC) delineation | [18,56] |
| | Watershed and distance-based clustering | [26,40,53,57] |
| Classic Machine Learning | RF with segmentation features | [24,28,44,46,50] |
| | Fuzzy k-nearest neighbors (FkNN) | [24] |
| | Simple linear iterative clustering (SLIC) | [19,39] |
| Deep Learning | U-Net | [21,48] |
| | U-Net variations | [20,39] |
| | Post-processed YOLOv4 + CHM | [47] |
| | YOLOv8 + gather-and-distribute mechanism | [15] |
| | DeepLabv3+ | [18] |
| | Dual-attention residual network (AMDNet) | [17] |
| | Mask R-CNN | [48] |
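The CHM-based local-maxima approach treats a raster cell as a candidate treetop when it is the highest value in its moving-window neighbourhood and exceeds a minimum height. The sketch below uses a fixed square window and a 2 m threshold. Both values are assumptions; published variants often scale the window with canopy height and smooth the CHM first. Cells tied within a window are all reported, and NaN cells should be filled beforehand because they suppress neighbouring peaks. `local_maxima_treetops` is a hypothetical name.

```python
import numpy as np


def local_maxima_treetops(
    chm: np.ndarray, window: int = 3, min_height: float = 2.0
) -> list[tuple[int, int]]:
    """Return (row, col) cells that are maxima of their window x window neighbourhood."""
    if window < 1 or window % 2 == 0:
        raise ValueError("window must be a positive odd integer")
    r = window // 2
    # Pad with -inf so edge cells are compared only against real neighbours.
    padded = np.pad(chm.astype(np.float64), r, mode="constant", constant_values=-np.inf)
    rows, cols = chm.shape
    tops: list[tuple[int, int]] = []
    for i in range(rows):
        for j in range(cols):
            value = padded[i + r, j + r]
            if not value >= min_height:  # also skips NaN cells
                continue
            neighbourhood = padded[i : i + window, j : j + window]
            if value >= neighbourhood.max():
                tops.append((i, j))
    return tops
```

The detected treetops typically serve as markers for a subsequent crown-delineation step such as marker-controlled watershed.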

| Category | Classification Algorithm | Accuracy | Papers |
|---|---|---|---|
| Classic ML | RF | 80–95% | [5,19,24,25,26,27,28,40,44,46,49,50] |
| | SVM | 85–97% | [40,42,43,56,57] |
| | Maximum Likelihood | 55–87% | [50,52] |
| DL | YOLOv8 | 81% | [15] |
| | ResNet-50 | 92–97% | [23,29,45] |
| | DenseNet161 | 72% | [22] |
| | VGG16 | 73% | [16] |
| | PointMLP | 98.52% | [4] |
| | AMDNet | 93.8% | [17] |
| | 1D-CNN, CBAM | 83% | [39] |
| | 3D-CNN | 97–99% | [54] |
| | Generic CNN | 87% | [30,51] |
| | InceptionV3 | 82.9% | [23] |
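Accuracy figures like these are typically summaries of a confusion matrix. Overall accuracy is the share of correctly classified samples. Cohen's kappa corrects that share for the agreement expected by chance from the row and column totals. The sketch below computes both from a square matrix with reference classes in rows and predictions in columns. It is a generic illustration; which metric each reviewed study reported is not specified here, and the function name is hypothetical.

```python
import math


def overall_accuracy_and_kappa(confusion: list[list[int]]) -> tuple[float, float]:
    """Overall accuracy and Cohen's kappa from a square confusion matrix.

    Rows are reference classes, columns are predicted classes.
    """
    n = len(confusion)
    if n == 0 or any(len(row) != n for row in confusion):
        raise ValueError("confusion matrix must be square and non-empty")
    total = sum(sum(row) for row in confusion)
    if total == 0:
        raise ValueError("confusion matrix contains no samples")
    observed = sum(confusion[i][i] for i in range(n)) / total
    row_sums = [sum(row) for row in confusion]
    col_sums = [sum(confusion[i][j] for i in range(n)) for j in range(n)]
    # Chance agreement: sum over classes of (row share * column share).
    expected = sum(r * c for r, c in zip(row_sums, col_sums)) / total**2
    # Kappa is undefined when chance agreement is 1 (e.g., a single class).
    kappa = (observed - expected) / (1 - expected) if expected < 1 else math.nan
    return observed, kappa


oa, kappa = overall_accuracy_and_kappa([[45, 5], [10, 40]])  # 0.85 and about 0.7
```

Because both values depend on the number of species, class balance, and validation design, a higher percentage in one study does not by itself imply a better method than a lower one in another.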

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Abreu-Dias, R.; Santos-Gago, J.M.; Martín-Rodríguez, F.; Álvarez-Sabucedo, L.M. Advances in the Automated Identification of Individual Tree Species: A Systematic Review of Drone- and AI-Based Methods in Forest Environments. Technologies 2025, 13, 187. https://doi.org/10.3390/technologies13050187

Abreu-Dias R, Santos-Gago JM, Martín-Rodríguez F, Álvarez-Sabucedo LM. Advances in the Automated Identification of Individual Tree Species: A Systematic Review of Drone- and AI-Based Methods in Forest Environments. Technologies. 2025; 13(5):187. https://doi.org/10.3390/technologies13050187

Chicago/Turabian Style: Abreu-Dias, Ricardo, Juan M. Santos-Gago, Fernando Martín-Rodríguez, and Luis M. Álvarez-Sabucedo. 2025. "Advances in the Automated Identification of Individual Tree Species: A Systematic Review of Drone- and AI-Based Methods in Forest Environments" Technologies 13, no. 5: 187. https://doi.org/10.3390/technologies13050187

APA Style: Abreu-Dias, R., Santos-Gago, J. M., Martín-Rodríguez, F., & Álvarez-Sabucedo, L. M. (2025). Advances in the Automated Identification of Individual Tree Species: A Systematic Review of Drone- and AI-Based Methods in Forest Environments. Technologies, 13(5), 187. https://doi.org/10.3390/technologies13050187