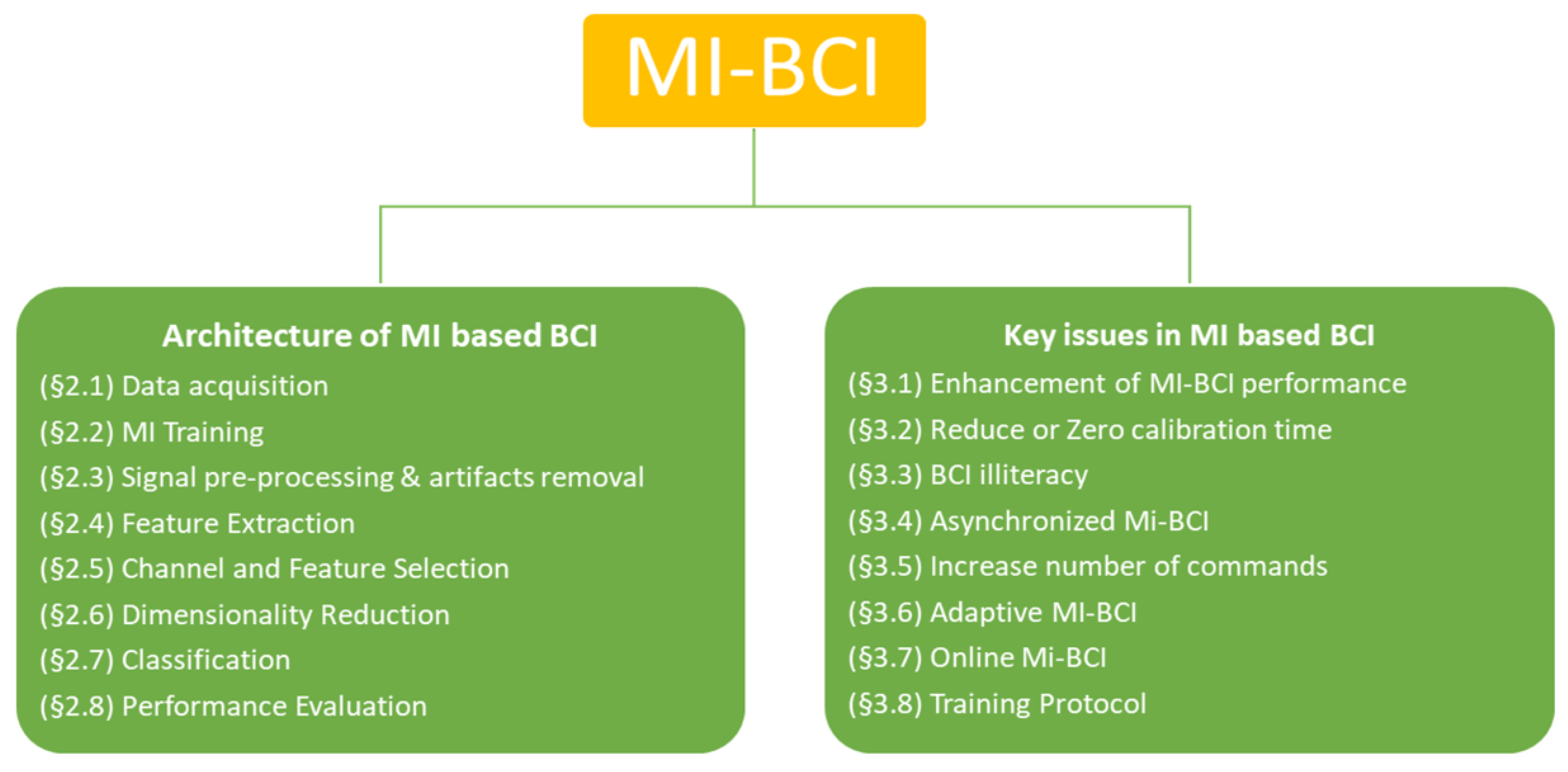

A Comprehensive Review on Critical Issues and Possible Solutions of Motor Imagery Based Electroencephalography Brain-Computer Interface

Abstract

:1. Introduction

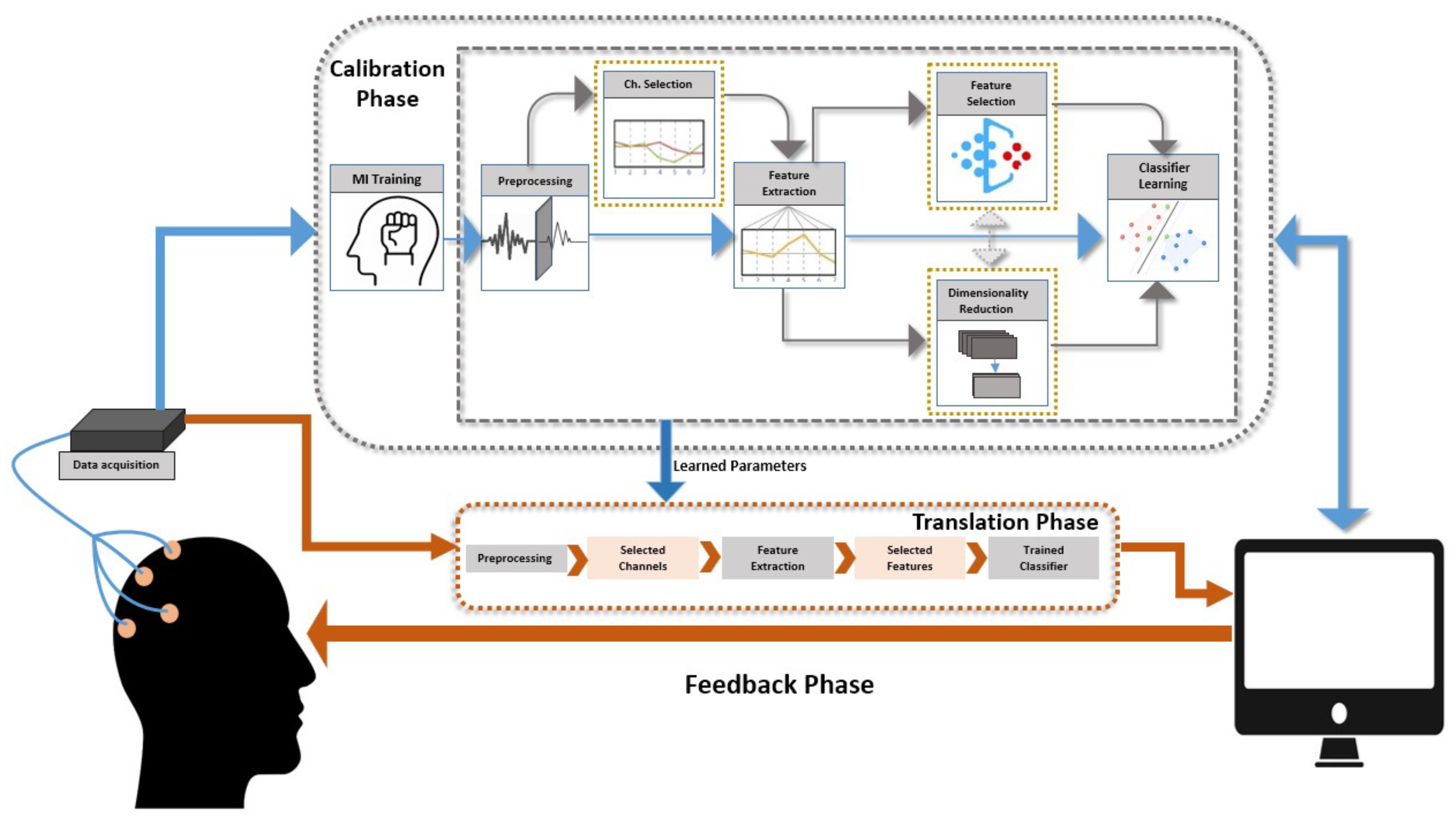

2. Architecture of MI Based BCI

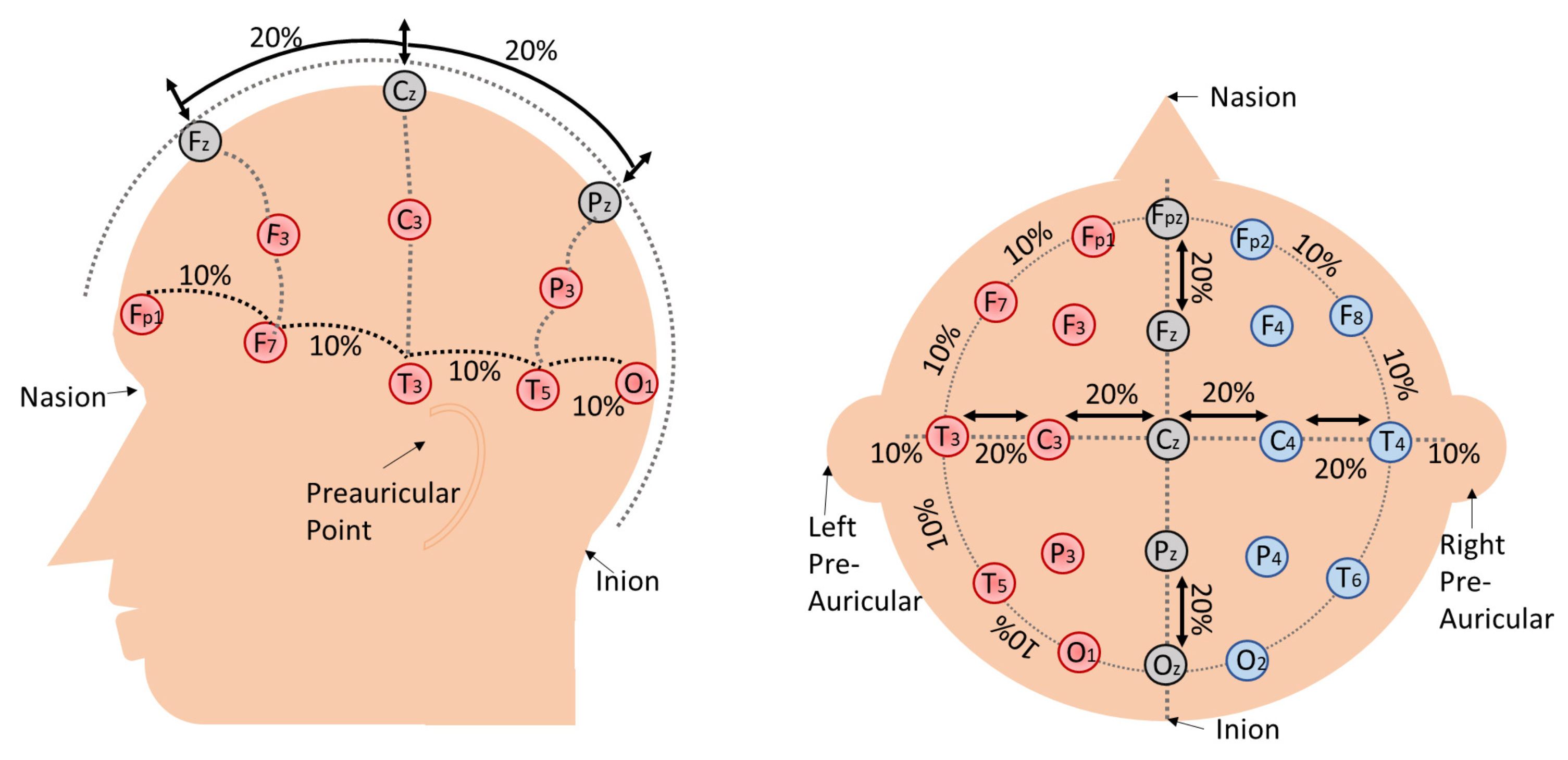

2.1. Data Acquisition

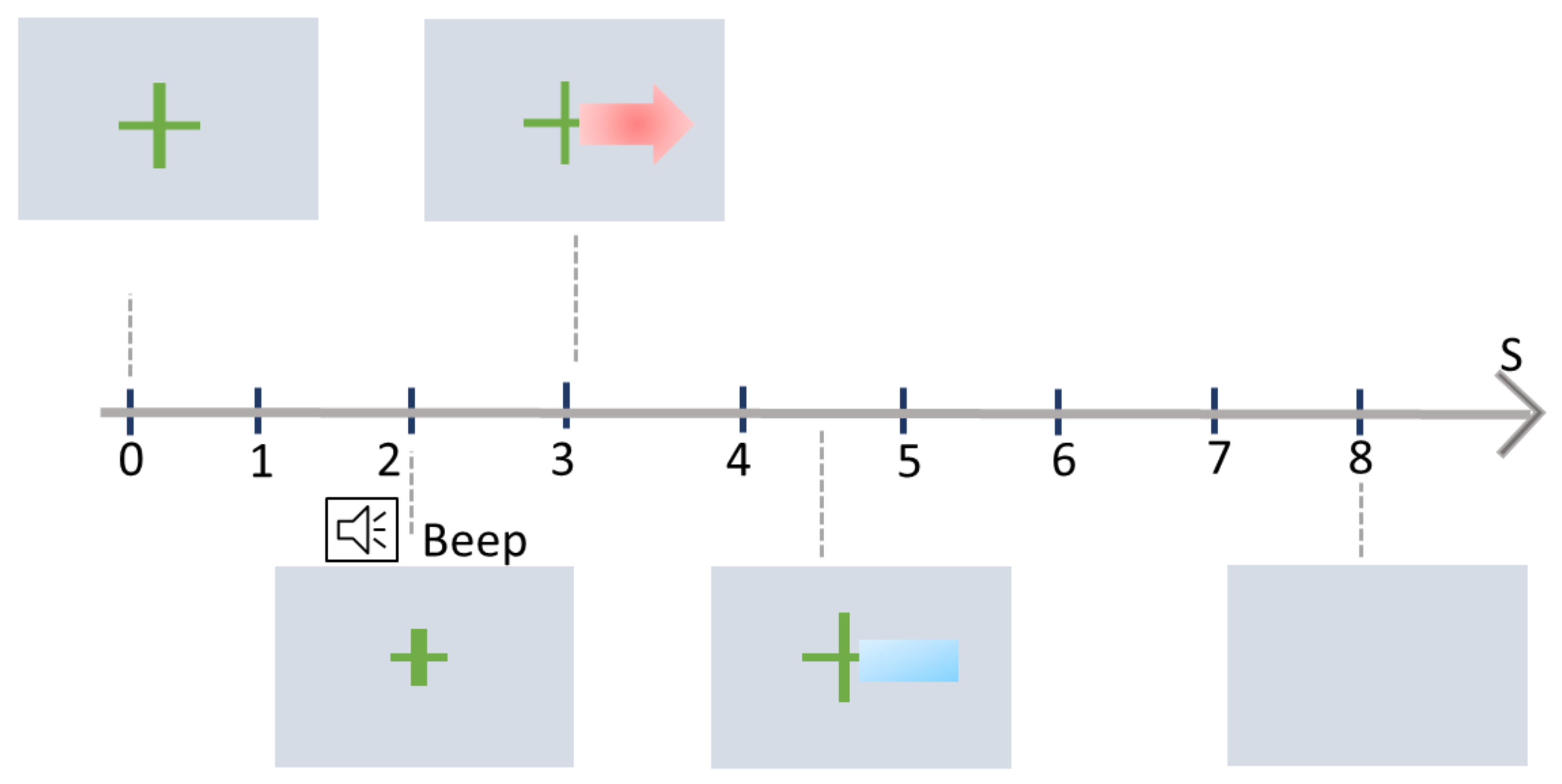

2.2. MI Training

2.3. Signal Pre-Processing and Artifacts Removal

2.4. Feature Extraction

2.4.1. Time Domain Methods

2.4.2. Spectral Domain Methods

2.4.3. Time-Frequency Methods

2.4.4. Spatial Domain Methods

2.4.5. Spatio-Temporal and Spatio-Spectral Methods

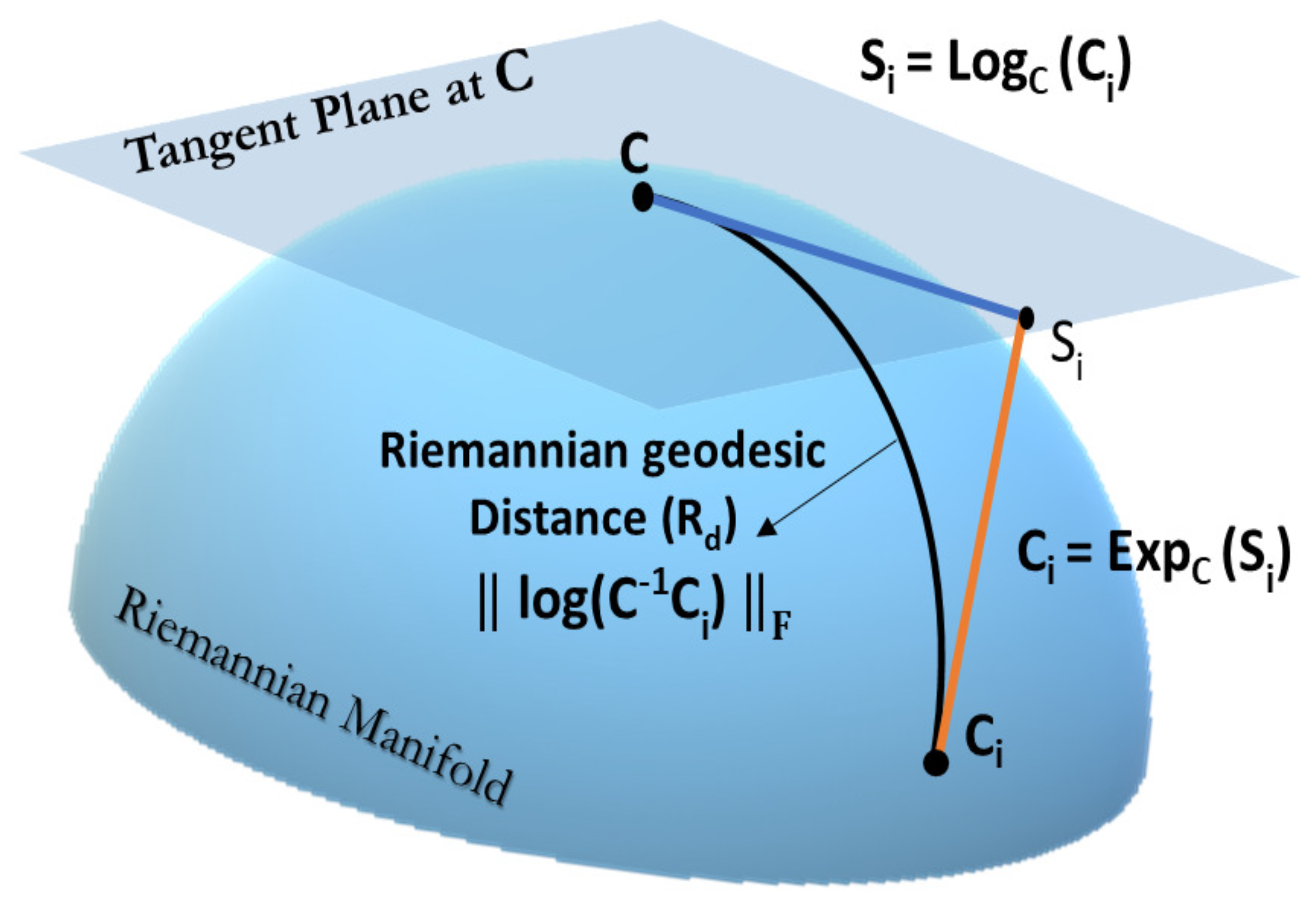

2.4.6. Riemannian Geometry Based Methods

2.5. Channel and Feature Selection

2.5.1. Filter Approach

2.5.2. Wrapper Approach

2.6. Dimensionality Reduction

2.7. Classification

2.7.1. Euclidean Space Methods

2.7.2. Riemannian Space Methods

2.8. Performance Evaluation

3. Key Issues in MI Based BCI

3.1. Enhancement of MI-BCI Performance

3.1.1. Enhancement of MI-BCI Performance Using Preprocessing

3.1.2. Enhancement of MI-BCI Performance Using Channel Selection

3.1.3. Enhancement of MI-BCI Performance Using Feature Selection

3.1.4. Enhancement of MI-BCI Performance Using Dimensionality Reduction

3.1.5. Enhancement of MI-BCI Performance with Combination of All

3.2. Reduce or Zero Calibration Time

3.2.1. Subject-Specific Methods

3.2.2. Transfer Learning Methods

3.2.3. Subject Independent Methods

3.3. BCI Illiteracy

3.4. Asynchronised MI-BCI

3.5. Increase Number of Commands

3.6. Adaptive BCI

3.7. Online MI-BCI

3.8. Training Protocol

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Singh, A.; Lal, S.; Guesgen, H.W. Architectural Review of Co-Adaptive Brain Computer Interface. In Proceedings of the 2017 4th Asia-Pacific World Congress on Computer Science and Engineering (APWC on CSE), Mana Island, Fiji, 11–13 December 2017; pp. 200–207. [Google Scholar] [CrossRef]

- Bashashati, H.; Ward, R.K.; Bashashati, A.; Mohamed, A. Neural Network Conditional Random Fields for Self-Paced Brain Computer Interfaces. In Proceedings of the 2016 15th IEEE International Conference on Machine Learning and Applications (ICMLA), Anaheim, CA, USA, 18–20 December 2016; pp. 939–943. [Google Scholar] [CrossRef]

- Rashid, M.; Sulaiman, N.; Abdul Majeed, A.P.P.; Musa, R.M.; Ab. Nasir, A.F.; Bari, B.S.; Khatun, S. Current Status, Challenges, and Possible Solutions of EEG-Based Brain-Computer Interface: A Comprehensive Review. Front. Neurorobot. 2020, 14, 25. [Google Scholar] [CrossRef] [PubMed]

- Ramadan, R.A.; Vasilakos, A.V. Brain computer interface: Control signals review. Neurocomputing 2017, 223, 26–44. [Google Scholar] [CrossRef]

- Martini, M.L.; Oermann, E.K.; Opie, N.L.; Panov, F.; Oxley, T.; Yaeger, K. Sensor Modalities for Brain-Computer Interface Technology: A Comprehensive Literature Review. Neurosurgery 2020, 86, E108–E117. [Google Scholar] [CrossRef] [PubMed]

- Bucci, P.; Galderisi, S. Physiologic Basis of the EEG Signal. In Standard Electroencephalography in Clinical Psychiatry; John Wiley and Sons, Ltd.: Hoboken, NJ, USA, 2011; Chapter 2; pp. 7–12. [Google Scholar] [CrossRef]

- Farnsworth, B. EEG (Electroencephalography): The Complete Pocket Guide; IMotions, Global HQ: Copenhagen, Denmark, 2019. [Google Scholar]

- Pfurtscheller, G.; Neuper, C. Motor imagery and direct brain-computer communication. Proc. IEEE 2001, 89, 1123–1134. [Google Scholar] [CrossRef]

- Otaiby, T.; Abd El-Samie, F.; Alshebeili, S.; Ahmad, I. A review of channel selection algorithms for EEG signal processing. EURASIP J. Adv. Signal Process. 2015, 2015, 1–21. [Google Scholar] [CrossRef] [Green Version]

- Wan, X.; Zhang, K.; Ramkumar, S.; Deny, J.; Emayavaramban, G.; Siva Ramkumar, M.; Hussein, A.F. A Review on Electroencephalogram Based Brain Computer Interface for Elderly Disabled. IEEE Access 2019, 7, 36380–36387. [Google Scholar] [CrossRef]

- Jeunet, C.; Jahanpour, E.; Lotte, F. Why standard brain-computer interface (BCI) training protocols should be changed: An experimental study. J. Neural Eng. 2016, 13. [Google Scholar] [CrossRef] [Green Version]

- McCreadie, K.A.; Coyle, D.H.; Prasad, G. Is Sensorimotor BCI Performance Influenced Differently by Mono, Stereo, or 3-D Auditory Feedback? IEEE Trans. Neural Syst. Rehabil. Eng. 2014, 22, 431–440. [Google Scholar] [CrossRef]

- Cincotti, F.; Kauhanen, L.; Aloise, F.; Palomäki, T.; Caporusso, N.; Jylänki, P.; Mattia, D.; Babiloni, F.; Vanacker, G.; Nuttin, M.; et al. Vibrotactile Feedback for Brain-Computer Interface Operation. Comput. Intell. Neurosci. 2007, 2007, 48937. [Google Scholar] [CrossRef] [Green Version]

- Lotte, F.; Faller, J.; Guger, C.; Renard, Y.; Pfurtscheller, G.; Lécuyer, A.; Leeb, R. Combining BCI with Virtual Reality: Towards New Applications and Improved BCI. In Towards Practical Brain-Computer Interfaces; Springer: Berlin/Heidelberg, Germany, 2012; pp. 197–220. [Google Scholar] [CrossRef] [Green Version]

- Leeb, R.; Lee, F.; Keinrath, C.; Scherer, R.; Bischof, H.; Pfurtscheller, G. Brain–Computer Communication: Motivation, Aim, and Impact of Exploring a Virtual Apartment. IEEE Trans. Neural Syst. Rehabil. Eng. 2007, 15, 473–482. [Google Scholar] [CrossRef]

- Islam, M.K.; Rastegarnia, A.; Yang, Z. Methods for artifact detection and removal from scalp EEG: A review. Neurophysiol. Clin. Neurophysiol. 2016, 46, 287–305. [Google Scholar] [CrossRef]

- Uribe, L.F.S.; Filho, C.A.S.; de Oliveira, V.A.; da Silva Costa, T.B.; Rodrigues, P.G.; Soriano, D.C.; Boccato, L.; Castellano, G.; Attux, R. A correntropy-based classifier for motor imagery brain-computer interfaces. Biomed. Phys. Eng. Express 2019, 5, 065026. [Google Scholar] [CrossRef]

- Xu, B.; Zhang, L.; Song, A.; Wu, C.; Li, W.; Zhang, D.; Xu, G.; Li, H.; Zeng, H. Wavelet Transform Time-Frequency Image and Convolutional Network-Based Motor Imagery EEG Classification. IEEE Access 2019, 7, 6084–6093. [Google Scholar] [CrossRef]

- Samuel, O.W.; Geng, Y.; Li, X.; Li, G. Towards Efficient Decoding of Multiple Classes of Motor Imagery Limb Movements Based on EEG Spectral and Time Domain Descriptors. J. Med. Syst. 2017, 41, 194. [Google Scholar] [CrossRef] [PubMed]

- Hamedi, M.; Salleh, S.; Noor, A.M.; Mohammad-Rezazadeh, I. Neural network-based three-class motor imagery classification using time-domain features for BCI applications. In Proceedings of the 2014 IEEE REGION 10 SYMPOSIUM, Kuala Lumpur, Malaysia, 14–16 April 2014; pp. 204–207. [Google Scholar] [CrossRef]

- Rodríguez-Bermúdez, G.; García-Laencina, P.J. Automatic and Adaptive Classification of Electroencephalographic Signals for Brain Computer Interfaces. J. Med. Syst. 2012, 36, 51–63. [Google Scholar] [CrossRef] [PubMed]

- Güçlü, U.; Güçlütürk, Y.; Loo, C.K. Evaluation of fractal dimension estimation methods for feature extraction in motor imagery based brain computer interface. Procedia Comput. Sci. 2011, 3, 589–594. [Google Scholar] [CrossRef] [Green Version]

- Adam, A.; Ibrahim, Z.; Mokhtar, N.; Shapiai, M.I.; Cumming, P.; Mubin, M. Evaluation of different time domain peak models using extreme learning machine-based peak detection for EEG signal. SpringerPlus 2016, 5, 1–14. [Google Scholar] [CrossRef] [Green Version]

- Yilmaz, C.M.; Kose, C.; Hatipoglu, B. A Quasi-probabilistic distribution model for EEG Signal classification by using 2-D signal representation. Comput. Methods Programs Biomed. 2018, 162, 187–196. [Google Scholar] [CrossRef]

- Kee, C.Y.; Ponnambalam, S.G.; Loo, C.K. Binary and multi-class motor imagery using Renyi entropy for feature extraction. Neural Comput. Appl. 2016, 28, 2051–2062. [Google Scholar] [CrossRef]

- Chen, S.; Luo, Z.; Gan, H. An entropy fusion method for feature extraction of EEG. Neural Comput. Appl. 2016, 29, 857–863. [Google Scholar] [CrossRef]

- Batres-Mendoza, P.; Montoro-Sanjose, C.R.; Guerra-Hernandez, E.I.; Almanza-Ojeda, D.L.; Rostro-Gonzalez, H.; Romero-Troncoso, R.J.; Ibarra-Manzano, M.A. Quaternion-Based Signal Analysis for Motor Imagery Classification from Electroencephalographic Signals. Sensors 2016, 16, 336. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Aggarwal, S.; Chugh, N. Signal processing techniques for motor imagery brain computer interface: A review. Array 2019, 1, 100003. [Google Scholar] [CrossRef]

- Gao, Z.; Wang, Z.; Ma, C.; Dang, W.; Zhang, K. A Wavelet Time-Frequency Representation Based Complex Network Method for Characterizing Brain Activities Underlying Motor Imagery Signals. IEEE Access 2018, 6, 65796–65802. [Google Scholar] [CrossRef]

- Ortiz, M.; Iáñez, E.; Contreras-Vidal, J.L.; Azorín, J.M. Analysis of the EEG Rhythms Based on the Empirical Mode Decomposition During Motor Imagery When Using a Lower-Limb Exoskeleton. A Case Study. Front. Neurorobot. 2020, 14, 48. [Google Scholar] [CrossRef]

- Lotte, F.; Guan, C. Regularizing Common Spatial Patterns to Improve BCI Designs: Unified Theory and New Algorithms. IEEE Trans. Biomed. Eng. 2011, 58, 355–362. [Google Scholar] [CrossRef] [Green Version]

- Li, M.; Zhang, C.; Jia, S.; Sun, Y. Classification of Motor Imagery Tasks in Source Domain. In Proceedings of the 2018 IEEE International Conference on Mechatronics and Automation (ICMA), Changchun, China, 5–8 August 2018; pp. 83–88. [Google Scholar] [CrossRef]

- Rejer, I.; Górski, P. EEG Classification for MI-BCI with Independent Component Analysis. In Proceedings of the 10th International Conference on Computer Recognition Systems CORES 2017; Kurzynski, M., Wozniak, M., Burduk, R., Eds.; Springer: Cham, Switzerland, 2018; pp. 393–402. [Google Scholar]

- Barachant, A.; Bonnet, S.; Congedo, M.; Jutten, C. Classification of covariance matricies using a Riemannian-based kernel for BCI applications. Neurocomputing 2013, 112, 172–178. [Google Scholar] [CrossRef] [Green Version]

- Suma, D.; Meng, J.; Edelman, B.J.; He, B. Spatial-temporal aspects of continuous EEG-based neurorobotic control. J. Neural Eng. 2020, 17, 066006. [Google Scholar] [CrossRef]

- Stefano Filho, C.A.; Attux, R.; Castellano, G. Can graph metrics be used for EEG-BCIs based on hand motor imagery? Biomed. Signal Process. Control 2018, 40, 359–365. [Google Scholar] [CrossRef]

- Schirrmeister, R.T.; Springenberg, J.T.; Fiederer, L.D.J.; Glasstetter, M.; Eggensperger, K.; Tangermann, M.; Hutter, F.; Burgard, W.; Ball, T. Deep learning with convolutional neural networks for EEG decoding and visualization. Hum. Brain Mapp. 2017, 38, 5391–5420. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Lawhern, V.J.; Solon, A.J.; Waytowich, N.R.; Gordon, S.M.; Hung, C.P.; Lance, B.J. EEGNet: A compact convolutional neural network for EEG-based brain-computer interfaces. J. Neural Eng. 2018. [Google Scholar] [CrossRef] [Green Version]

- Wang, P.; Jiang, A.; Liu, X.; Shang, J.; Zhang, L. LSTM-Based EEG Classification in Motor Imagery Tasks. IEEE Trans. Neural Syst. Rehabil. Eng. 2018, 26, 2086–2095. [Google Scholar] [CrossRef] [PubMed]

- Rashkov, G.; Bobe, A.; Fastovets, D.; Komarova, M. Natural image reconstruction from brain waves: A novel visual BCI system with native feedback. bioRxiv 2019, 1–15. [Google Scholar] [CrossRef]

- Virgilio G., C.D.; Sossa A., J.H.; Antelis, J.M.; Falcón, L.E. Spiking Neural Networks applied to the classification of motor tasks in EEG signals. Neural Netw. 2020, 122, 130–143. [Google Scholar] [CrossRef]

- Lee, S.B.; Kim, H.J.; Kim, H.; Jeong, J.H.; Lee, S.W.; Kim, D.J. Comparative analysis of features extracted from EEG spatial, spectral and temporal domains for binary and multiclass motor imagery classification. Inf. Sci. 2019, 502, 190–200. [Google Scholar] [CrossRef]

- Chu, Y.; Zhao, X.; Zou, Y.; Xu, W.; Han, J.; Zhao, Y. A Decoding Scheme for Incomplete Motor Imagery EEG With Deep Belief Network. Front. Neurosci. 2018, 12, 680. [Google Scholar] [CrossRef]

- Zhang, R.; Xu, P.; Chen, R.; Li, F.; Guo, L.; Li, P.; Zhang, T.; Yao, D. Predicting Inter-session Performance of SMR-Based Brain–Computer Interface Using the Spectral Entropy of Resting-State EEG. Brain Topogr. 2015, 28, 680–690. [Google Scholar] [CrossRef]

- Guo, X.; Wu, X.; Zhang, D. Motor imagery EEG detection by empirical mode decomposition. In Proceedings of the 2008 IEEE International Joint Conference on Neural Networks (IEEE World Congress on Computational Intelligence), Hong Kong, China, 1–8 June 2008; pp. 2619–2622. [Google Scholar] [CrossRef]

- Ortiz-Echeverri, C.J.; Salazar-Colores, S.; Rodríguez-Reséndiz, J.; Gómez-Loenzo, R.A. A New Approach for Motor Imagery Classification Based on Sorted Blind Source Separation, Continuous Wavelet Transform, and Convolutional Neural Network. Sensors 2019, 19, 4541. [Google Scholar] [CrossRef] [Green Version]

- Ang, K.K.; Chin, Z.Y.; Zhang, H.; Guan, C. Filter Bank Common Spatial Pattern (FBCSP) in Brain-Computer Interface. In Proceedings of the 2008 IEEE International Joint Conference on Neural Networks (IEEE World Congress on Computational Intelligence), Hong Kong, China, 1–8 June 2008; pp. 2390–2397. [Google Scholar] [CrossRef]

- Thomas, K.P.; Guan, C.; Lau, C.T.; Vinod, A.; Ang, K. A New Discriminative Common Spatial Pattern Method for Motor Imagery Brain-Computer Interface. IEEE Trans. Biomed. Eng. 2009, 56, 2730–2733. [Google Scholar] [CrossRef]

- Li, Y.; Zhang, X.; Zhang, B.; Lei, M.; Cui, W.; Guo, Y. A Channel-Projection Mixed-Scale Convolutional Neural Network for Motor Imagery EEG Decoding. IEEE Trans. Neural Syst. Rehabil. Eng. 2019, 27, 1170–1180. [Google Scholar] [CrossRef]

- Yang, J.; Yao, S.; Wang, J. Deep Fusion Feature Learning Network for MI-EEG Classification. IEEE Access 2018, 6, 79050–79059. [Google Scholar] [CrossRef]

- Wu, W.; Gao, X.; Hong, B.; Gao, S. Classifying Single-Trial EEG During Motor Imagery by Iterative Spatio-Spectral Patterns Learning (ISSPL). IEEE Trans. Biomed. Eng. 2008, 55, 1733–1743. [Google Scholar] [CrossRef] [PubMed]

- Suk, H.; Lee, S. A probabilistic approach to spatio-spectral filters optimization in Brain-Computer Interface. In Proceedings of the 2011 IEEE International Conference on Systems, Man, and Cybernetics, Anchorage, AK, USA, 9–12 October 2011; pp. 19–24. [Google Scholar] [CrossRef]

- Zhang, P.; Wang, X.; Zhang, W.; Chen, J. Learning Spatial–Spectral–Temporal EEG Features with Recurrent 3D Convolutional Neural Networks for Cross-Task Mental Workload Assessment. IEEE Trans. Neural Syst. Rehabil. Eng. 2019, 27, 31–42. [Google Scholar] [CrossRef] [PubMed]

- Bang, J.S.; Lee, M.H.; Fazli, S.; Guan, C.; Lee, S.W. Spatio-Spectral Feature Representation for Motor Imagery Classification Using Convolutional Neural Networks. IEEE Trans. Neural Netw. Learn. Syst. 2021, 1–12. [Google Scholar] [CrossRef] [PubMed]

- Horev, I.; Yger, F.; Sugiyama, M. Geometry-aware principal component analysis for symmetric positive definite matrices. Mach. Learn. 2017, 106, 493–522. [Google Scholar] [CrossRef] [Green Version]

- Venkatesh, B.; Anuradha, J. A Review of Feature Selection and Its Methods. Cybern. Inf. Technol. 2019, 19, 3–26. [Google Scholar] [CrossRef] [Green Version]

- Battiti, R. Using Mutual Information for Selecting Features in Supervised Neural Net Learning. IEEE Trans. Neural Netw. 1994, 5, 537–550. [Google Scholar] [CrossRef] [Green Version]

- Homri, I.; Yacoub, S. A hybrid cascade method for EEG classification. Pattern Anal. Appl. 2019, 22, 1505–1516. [Google Scholar] [CrossRef]

- Ramos, A.C.; Hernandex, R.G.; Vellasco, M. Feature Selection Methods Applied to Motor Imagery Task Classification. In Proceedings of the 2016 IEEE Latin American Conference on Computational Intelligence (LA-CCI), Cartagena, Colombia, 2–4 November 2016. [Google Scholar] [CrossRef]

- Kashef, S.; Nezamabadi-pour, H.; Nikpour, B. Multilabel feature selection: A comprehensive review and guiding experiments. WIREs Data Min. Knowl. Discov. 2018, 8, e1240. [Google Scholar] [CrossRef]

- Atyabi, A.; Shic, F.; Naples, A. Mixture of autoregressive modeling orders and its implication on single trial EEG classification. Expert Syst. Appl. 2016, 65, 164–180. [Google Scholar] [CrossRef] [Green Version]

- Baig, M.Z.; Aslam, N.; Shum, H.P.; Zhang, L. Differential evolution algorithm as a tool for optimal feature subset selection in motor imagery EEG. Expert Syst. Appl. 2017, 90, 184–195. [Google Scholar] [CrossRef]

- Das, S.; Suganthan, P.N. Differential evolution: A survey of the state-of-the-art. IEEE Trans. Evol. Comput. 2011, 15, 4–31. [Google Scholar] [CrossRef]

- Karaboga, D. An Idea Based on Honey Bee Swarm for Numerical Optimization; Technical Report-tr06; Erciyes University, Engineering Faculty, Computer Engineering Department: Kayseri, Turkey, 2005; Volume 200, pp. 1–10. [Google Scholar]

- Rakshit, P.; Bhattacharyya, S.; Konar, A.; Khasnobish, A.; Tibarewala, D.; Janarthanan, R. Artificial Bee Colony Based Feature Selection for Motor Imagery EEG Data. In Proceedings of the Seventh International Conference on Bio-Inspired Computing: Theories and Applications (BIC-TA 2012); Springer: New Delhi, India, 2012; pp. 127–138. [Google Scholar] [CrossRef]

- van der Maaten, L.; Postma, E.; Herik, H. Dimensionality Reduction: A Comparative Review. J. Mach. Learn. Res. JMLR 2007, 10, 13. [Google Scholar]

- Gupta, A.; Agrawal, R.K.; Kaur, B. Performance enhancement of mental task classification using EEG signal: A study of multivariate feature selection methods. Soft Comput. 2015, 19, 2799–2812. [Google Scholar] [CrossRef]

- Jusas, V.; Samuvel, S.G. Classification of Motor Imagery Using a Combination of User-Specific Band and Subject-Specific Band for Brain-Computer Interface. Appl. Sci. 2019, 9, 4990. [Google Scholar] [CrossRef] [Green Version]

- Ayesha, S.; Hanif, M.K.; Talib, R. Overview and comparative study of dimensionality reduction techniques for high dimensional data. Inf. Fusion 2020, 59, 44–58. [Google Scholar] [CrossRef]

- Scholkopf, B.; Smola, A.; Muller, K.R. Nonlinear Component Analysis as a Kernel Eigenvalue Problem. Neural Comput. 1996, 10, 1299–1319. [Google Scholar] [CrossRef] [Green Version]

- Pei, D.; Burns, M.; Chandramouli, R.; Vinjamuri, R. Decoding Asynchronous Reaching in Electroencephalography Using Stacked Autoencoders. IEEE Access 2018, 6, 52889–52898. [Google Scholar] [CrossRef]

- Tenenbaum, J.; Silva, V.; Langford, J. A Global Geometric Framework for Nonlinear Dimensionality Reduction. Science 2000, 290, 2319–2323. [Google Scholar] [CrossRef]

- Iturralde, P.; Patrone, M.; Lecumberry, F.; Fernández, A. Motor Intention Recognition in EEG: In Pursuit of a Relevant Feature Set. In Proceedings of the Pattern Recognition, Image Analysis, Computer Vision, and Applications, Buenos Aires, Argentina, 3–6 September 2012; pp. 551–558. [Google Scholar] [CrossRef] [Green Version]

- Gramfort, A.; Clerc, M. Low Dimensional Representations of MEG/EEG Data Using Laplacian Eigenmaps. In Proceedings of the 2007 Joint Meeting of the 6th International Symposium on Noninvasive Functional Source Imaging of the Brain and Heart and the International Conference on Functional Biomedical Imaging, Hangzhou, China, 12–14 October 2007; pp. 169–172. [Google Scholar] [CrossRef] [Green Version]

- Lafon, S.; Lee, A.B. Diffusion maps and coarse-graining: A unified framework for dimensionality reduction, graph partitioning, and data set parameterization. IEEE Trans. Pattern Anal. Mach. Intell. 2006, 28, 1393–1403. [Google Scholar] [CrossRef] [Green Version]

- Lee, F.; Scherer, R.; Leeb, R.; Schlögl, A.; Bischof, H.; Pfurtscheller, G. Feature Mapping using PCA, Locally Linear Embedding and Isometric Feature Mapping for EEG-based Brain Computer Interface. In Proceedings of the 28th Workshop of the Austrian Association for Pattern Recognition, Hagenberg, Austria, 17–18 June 2004; pp. 189–196. [Google Scholar]

- Li, M.; Luo, X.; Yang, J.; Sun, Y. Applying a Locally Linear Embedding Algorithm for Feature Extraction and Visualization of MI-EEG. J. Sens. 2016, 2016, 1–9. [Google Scholar] [CrossRef]

- Xie, X.; Yu, Z.L.; Lu, H.; Gu, Z.; Li, Y. Motor Imagery Classification Based on Bilinear Sub-Manifold Learning of Symmetric Positive-Definite Matrices. IEEE Trans. Neural Syst. Rehabil. Eng. 2017, 25, 504–516. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Davoudi, A.; Ghidary, S.S.; Sadatnejad, K. Dimensionality reduction based on distance preservation to local mean for symmetric positive definite matrices and its application in brain–computer interfaces. J. Neural Eng. 2017, 14, 036019. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Tanaka, T.; Uehara, T.; Tanaka, Y. Dimensionality reduction of sample covariance matrices by graph fourier transform for motor imagery brain-machine interface. In Proceedings of the 2016 IEEE Statistical Signal Processing Workshop (SSP), Palma de Mallorca, Spain, 26–29 June 2016; pp. 1–5. [Google Scholar] [CrossRef]

- Roy, S.; Rathee, D.; Chowdhury, A.; Prasad, G. Assessing impact of channel selection on decoding of motor and cognitive imagery from MEG data. J. Neural Eng. 2020, 17, 1–15. [Google Scholar] [CrossRef] [PubMed]

- Lotte, F.; Bougrain, L.; Cichocki, A.; Clerc, M.; Congedo, M.; Rakotomamonjy, A.; Yger, F. A review of classification algorithms for EEG-based brain-computer interfaces: A 10 year update. J. Neural Eng. 2018, 15, 031005. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Duda, R.O.; Hart, P.E.; Stork, D.G. Pattern Classification, 2nd ed.; John Wiley & Sons Inc.: Hoboken, NJ, USA, 2001. [Google Scholar] [CrossRef]

- Thomas, E.; Dyson, M.; Clerc, M. An analysis of performance evaluation for motor-imagery based BCI. J. Neural Eng. 2013, 10, 031001. [Google Scholar] [CrossRef]

- Schlögl, A.; Kronegg, J.; Huggins, J.; Mason, S. Evaluation Criteria for BCI Research. In Toward Brain-Computer Interfacing; MIT Press: Cambridge, UK, 2007; Volume 1, pp. 327–342. [Google Scholar]

- Wolpaw, J.R.; Ramoser, H.; McFarland, D.J.; Pfurtscheller, G. EEG-based communication: Improved accuracy by response verification. IEEE Trans. Rehabil. Eng. 1998, 6, 326–333. [Google Scholar] [CrossRef]

- Nykopp, T. Statistical Modelling Issues for the Adaptive Brain Interface. Ph.D. Thesis, Helsinki University of Technology, Espoo, Finland, 2001. [Google Scholar]

- Lotte, F.; Jeunet, C. Defining and quantifying users’ mental imagery-based BCI skills: A first step. J. Neural Eng. 2018, 15, 046030. [Google Scholar] [CrossRef] [Green Version]

- Solé-Casals, J.; Caiafa, C.; Zhao, Q.; Cichocki, A. Brain-Computer Interface with Corrupted EEG Data: A Tensor Completion Approach. Cognit. Comput. 2018. [Google Scholar] [CrossRef] [Green Version]

- Gaur, P.; Pachori, R.B.; Wang, H.; Prasad, G. An Automatic Subject Specific Intrinsic Mode Function Selection for Enhancing Two-Class EEG-Based Motor Imagery-Brain Computer Interface. IEEE Sens. J. 2019, 19, 6938–6947. [Google Scholar] [CrossRef]

- Togha, M.M.; Salehi, M.R.; Abiri, E. Improving the performance of the motor imagery-based brain-computer interfaces using local activities estimation. Biomed. Signal Process. Control 2019, 50, 52–61. [Google Scholar] [CrossRef]

- Sampanna, R.; Mitaim, S. Noise benefits in the array of brain-computer interface classification systems. Inform. Med. Unlocked 2018, 12, 88–97. [Google Scholar] [CrossRef]

- Kumar, S.; Sharma, A. A new parameter tuning approach for enhanced motor imagery EEG signal classification. Med. Biol. Eng. Comput. 2018, 56. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Kim, C.S.; Sun, J.; Liu, D.; Wang, Q.; Paek, S.G. Removal of ocular artifacts using ICA and adaptive filter for motor imagery-based BCI. IEEE/CAA J. Autom. Sin. 2017, 1–8. [Google Scholar] [CrossRef]

- Sun, L.; Feng, Z.; Chen, B.; Lu, N. A contralateral channel guided model for EEG based motor imagery classification. Biomed. Signal Process. Control 2018, 41, 1–9. [Google Scholar] [CrossRef]

- Sagha, H.; Perdikis, S.; Millán, J.d.R.; Chavarriaga, R. Quantifying Electrode Reliability During Brain–Computer Interface Operation. IEEE Trans. Biomed. Eng. 2015, 62, 858–864. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Feng, J.K.; Jin, J.; Daly, I.; Zhou, J.; Niu, Y.; Wang, X.; Cichocki, A. An Optimized Channel Selection Method Based on Multifrequency CSP-Rank for Motor Imagery-Based BCI System. Comput. Intell. Neurosci. 2019, 2019. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Ramakrishnan, A.; Satyanarayana, J. Reconstruction of EEG from limited channel acquisition using estimated signal correlation. Biomed. Signal Process. Control 2016, 27, 164–173. [Google Scholar] [CrossRef]

- Yang, Y.; Bloch, I.; Chevallier, S.; Wiart, J. Subject-Specific Channel Selection Using Time Information for Motor Imagery Brain–Computer Interfaces. Cognit. Comput. 2016, 8, 505–518. [Google Scholar] [CrossRef]

- Ruan, J.; Wu, X.; Zhou, B.; Guo, X.; Lv, Z. An Automatic Channel Selection Approach for ICA-Based Motor Imagery Brain Computer Interface. J. Med. Syst. 2018, 42, 253. [Google Scholar] [CrossRef]

- Park, S.M.; Kim, J.Y.; Sim, K.B. EEG electrode selection method based on BPSO with channel impact factor for acquisition of significant brain signal. Optik 2018, 155, 89–96. [Google Scholar] [CrossRef]

- Jin, J.; Miao, Y.; Daly, I.; Zuo, C.; Hu, D.; Cichocki, A. Correlation-based channel selection and regularized feature optimization for MI-based BCI. Neural Netw. 2019, 118, 262–270. [Google Scholar] [CrossRef]

- Yu, X.Y.; Yu, J.H.; Sim, K.B. Fruit Fly Optimization based EEG Channel Selection Method for BCI. J. Inst. Control Robot. Syst. 2016, 22, 199–203. [Google Scholar] [CrossRef]

- Masood, N.; Farooq, H.; Mustafa, I. Selection of EEG channels based on Spatial filter weights. In Proceedings of the 2017 International Conference on Communication, Computing and Digital Systems (C-CODE 2017), Islamabad, Pakistan, 8–9 March 2017; pp. 341–345. [Google Scholar] [CrossRef]

- Yang, Y.; Chevallier, S.; Wiart, J.; Bloch, I. Subject-specific time-frequency selection for multi-class motor imagery-based BCIs using few Laplacian EEG channels. Biomed. Signal Process. Control 2017, 38, 302–311. [Google Scholar] [CrossRef]

- Rajan, R.; Thekkan Devassy, S. Improving Classification Performance by Combining Feature Vectors with a Boosting Approach for Brain Computer Interface (BCI). In Intelligent Human Computer Interaction; Horain, P., Achard, C., Mallem, M., Eds.; Springer: Cham, Switzerland, 2017; pp. 73–85. [Google Scholar]

- Shahsavari Baboukani, P.; Mohammadi, S.; Azemi, G. Classifying Single-Trial EEG During Motor Imagery Using a Multivariate Mutual Information Based Phase Synchrony Measure. In Proceedings of the 2017 24th National and 2nd International Iranian Conference on Biomedical Engineering (ICBME), Tehran, Iran, 30 November–1 December 2017; pp. 1–4. [Google Scholar] [CrossRef]

- Wang, J.; Feng, Z.; Lu, N.; Sun, L.; Luo, J. An information fusion scheme based common spatial pattern method for classification of motor imagery tasks. Biomed. Signal Process. Control 2018, 46, 10–17. [Google Scholar] [CrossRef]

- Liu, A.; Chen, K.; Liu, Q.; Ai, Q.; Xie, Y.; Chen, A. Feature Selection for Motor Imagery EEG Classification Based on Firefly Algorithm and Learning Automata. Sensors 2017, 17, 2576. [Google Scholar] [CrossRef] [Green Version]

- Kumar, S.; Sharma, A.; Tsunoda, T. An improved discriminative filter bank selection approach for motor imagery EEG signal classification using mutual information. BMC Bioinform. 2017, 18, 125–137. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Samanta, K.; Chatterjee, S.; Bose, R. Cross Subject Motor Imagery Tasks EEG Signal Classification Employing Multiplex Weighted Visibility Graph and Deep Feature Extraction. IEEE Sens. Lett. 2019, 4, 1–4. [Google Scholar] [CrossRef]

- Xie, X.; Yu, Z.L.; Gu, Z.; Zhang, J.; Cen, L.; Li, Y. Bilinear Regularized Locality Preserving Learning on Riemannian Graph for Motor Imagery BCI. IEEE Trans. Neural Syst. Rehabil. Eng. 2018, 26, 698–708. [Google Scholar] [CrossRef] [PubMed]

- She, Q.; Gan, H.; Ma, Y.; Luo, Z.; Potter, T.; Zhang, Y. Scale-Dependent Signal Identification in Low-Dimensional Subspace: Motor Imagery Task Classification. Neural Plast. 2016, 2016, 1–15. [Google Scholar] [CrossRef] [Green Version]

- Özdenizci, O.; Erdoğmuş, D. Information Theoretic Feature Transformation Learning for Brain Interfaces. IEEE Trans. Biomed. Eng. 2020, 67, 69–78. [Google Scholar] [CrossRef] [Green Version]

- Razzak, I.; Hameed, I.A.; Xu, G. Robust Sparse Representation and Multiclass Support Matrix Machines for the Classification of Motor Imagery EEG Signals. IEEE J. Transl. Eng. Health Med. 2019, 7, 1–8. [Google Scholar] [CrossRef]

- Harandi, M.T.; Salzmann, M.; Hartley, R. From Manifold to Manifold: Geometry-Aware Dimensionality Reduction for SPD Matrices. In Computer Vision–ECCV 2014; Fleet, D., Pajdla, T., Schiele, B., Tuytelaars, T., Eds.; Springer: Cham, Switzerland, 2014; pp. 17–32. [Google Scholar]

- Li, M.; Han, J.; Duan, L. A novel MI-EEG imaging with the location information of electrodes. IEEE Access 2019, 8, 3197–3211. [Google Scholar] [CrossRef]

- Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv 2015, arXiv:1409.1556. [Google Scholar]

- Wang, H.T.; Li, T.; Huang, H.; He, Y.B.; Liu, X.C. A motor imagery analysis algorithm based on spatio-temporal-frequency joint selection and relevance vector machine. Kongzhi Lilun Yu Yingyong/Control Theory Appl. 2017, 34, 1403–1408. [Google Scholar] [CrossRef]

- Sadiq, M.T.; Yu, X.; Yuan, Z.; Fan, Z.; Rehman, A.U.; Li, G.; Xiao, G. Motor Imagery EEG Signals Classification Based on Mode Amplitude and Frequency Components Using Empirical Wavelet Transform. IEEE Access 2019, 7, 127678–127692. [Google Scholar] [CrossRef]

- Selim, S.; Tantawi, M.M.; Shedeed, H.A.; Badr, A. A CSP AM-BA-SVM Approach for Motor Imagery BCI System. IEEE Access 2018, 6, 49192–49208. [Google Scholar] [CrossRef]

- Athif, M.; Ren, H. WaveCSP: A robust motor imagery classifier for consumer EEG devices. Australas. Phys. Eng. Sci. Med. 2019, 42, 1–10. [Google Scholar] [CrossRef]

- Li, X.; Guan, C.; Zhang, H.; Ang, K.K. A Unified Fisher’s Ratio Learning Method for Spatial Filter Optimization. IEEE Trans. Neural Netw. Learn. Syst. 2017, 28, 2727–2737. [Google Scholar] [CrossRef] [PubMed]

- Li, L.; Xu, G.; Zhang, F.; Xie, J.; Li, M. Relevant Feature Integration and Extraction for Single-Trial Motor Imagery Classification. Front. Neurosci. 2017, 11, 371. [Google Scholar] [CrossRef]

- Liu, Y.; Zhang, H.; Chen, M.; Zhang, L. A Boosting-Based Spatial-Spectral Model for Stroke Patients’ EEG Analysis in Rehabilitation Training. IEEE Trans. Neural Syst. Rehabil. Eng. 2016, 24, 169–179. [Google Scholar] [CrossRef]

- Cachón, A.; Vázquez, R.A. Tuning the parameters of an integrate and fire neuron via a genetic algorithm for solving pattern recognition problems. Neurocomputing 2015, 148, 187–197. [Google Scholar] [CrossRef]

- Salazar-Varas, R.; Vazquez, R.A. Evaluating spiking neural models in the classification of motor imagery EEG signals using short calibration sessions. Appl. Soft Comput. 2018, 67, 232–244. [Google Scholar] [CrossRef]

- Zhao, X.; Zhang, H.; Zhu, G.; You, F.; Kuang, S.; Sun, L. A Multi-Branch 3D Convolutional Neural Network for EEG-Based Motor Imagery Classification. IEEE Trans. Neural Syst. Rehabil. Eng. 2019, 27, 2164–2177. [Google Scholar] [CrossRef] [PubMed]

- Park, Y.; Chung, W. Frequency-Optimized Local Region Common Spatial Pattern Approach for Motor Imagery Classification. IEEE Trans. Neural Syst. Rehabil. Eng. 2019, 27, 1378–1388. [Google Scholar] [CrossRef] [PubMed]

- Ma, Y.; Ding, X.; She, Q.; Luo, Z.; Potter, T.; Zhang, Y. Classification of Motor Imagery EEG Signals with Support Vector Machines and Particle Swarm Optimization. Comput. Math. Methods Med. 2016, 2016, 1–8. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Costa, A.; Møller, J.; Iversen, H.; Puthusserypady, S. An adaptive CSP filter to investigate user independence in a 3-class MI-BCI paradigm. Comput. Biol. Med. 2018, 103, 24–33. [Google Scholar] [CrossRef] [PubMed]

- Lotte, F.; Guan, C. Spatially Regularized Common Spatial Patterns for EEG Classification. In Proceedings of the 2010 20th International Conference on Pattern Recognition, Istanbul, Turkey, 23–26 August 2010; pp. 3712–3715. [Google Scholar] [CrossRef] [Green Version]

- Singh, A.; Lal, S.; Guesgen, H.W. Reduce Calibration Time in Motor Imagery Using Spatially Regularized Symmetric Positives-Definite Matrices Based Classification. Sensors 2019, 19, 379. [Google Scholar] [CrossRef] [Green Version]

- Saha, S.; Ahmed, K.I.U.; Mostafa, R.; Hadjileontiadis, L.; Khandoker, A. Evidence of Variabilities in EEG Dynamics During Motor Imagery-Based Multiclass Brain–Computer Interface. IEEE Trans. Neural Syst. Rehabil. Eng. 2018, 26, 371–382. [Google Scholar] [CrossRef]

- He, H.; Wu, D. Transfer Learning for Brain-Computer Interfaces: An Euclidean Space Data Alignment Approach. IEEE Trans. Biomed. Eng. 2019, 67, 399–410. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Hossain, I.; Khosravi, A.; Hettiarachchi, I.T.; Nahavandhi, S. Informative instance transfer learning with subject specific frequency responses for motor imagery brain computer interface. In Proceedings of the 2017 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Banff, AB, Canada, 5–8 October 2017; pp. 252–257. [Google Scholar] [CrossRef]

- Dai, M.; Wang, S.; Zheng, D.; Na, R.; Zhang, S. Domain Transfer Multiple Kernel Boosting for Classification of EEG Motor Imagery Signals. IEEE Access 2019, 7, 49951–49960. [Google Scholar] [CrossRef]

- Park, S.; Lee, D.; Lee, S. Filter Bank Regularized Common Spatial Pattern Ensemble for Small Sample Motor Imagery Classification. IEEE Trans. Neural Syst. Rehabil. Eng. 2018, 26, 498–505. [Google Scholar] [CrossRef]

- Azab, A.M.; Mihaylova, L.; Ang, K.K.; Arvaneh, M. Weighted Transfer Learning for Improving Motor Imagery-Based Brain–Computer Interface. IEEE Trans. Neural Syst. Rehabil. Eng. 2019, 27, 1352–1359. [Google Scholar] [CrossRef] [PubMed]

- Singh, A.; Lal, S.; Guesgen, H.W. Motor Imagery Classification Based on Subject to Subject Transfer in Riemannian Manifold. In Proceedings of the 2019 7th International Winter Conference on Brain-Computer Interface (BCI), Gangwon, Korea, 18–20 February 2019; pp. 1–6. [Google Scholar] [CrossRef]

- Singh, A.; Lal, S.; Guesgen, H.W. Small Sample Motor Imagery Classification Using Regularized Riemannian Features. IEEE Access 2019, 7, 46858–46869. [Google Scholar] [CrossRef]

- Jiao, Y.; Zhang, Y.; Chen, X.; Yin, E.; Jin, J.; Wang, X.; Cichocki, A. Sparse Group Representation Model for Motor Imagery EEG Classification. IEEE J. Biomed. Health Inform. 2019, 23, 631–641. [Google Scholar] [CrossRef] [PubMed]

- Rodrigues, P.L.C.; Jutten, C.; Congedo, M. Riemannian Procrustes Analysis: Transfer Learning for Brain–Computer Interfaces. IEEE Trans. Biomed. Eng. 2019, 66, 2390–2401. [Google Scholar] [CrossRef] [Green Version]

- Zhu, X.; Li, P.; Li, C.; Yao, D.; Zhang, R.; Xu, P. Separated channel convolutional neural network to realize the training free motor imagery BCI systems. Biomed. Signal Process. Control 2019, 49, 396–403. [Google Scholar] [CrossRef]

- Joadder, M.; Siuly, S.; Kabir, E.; Wang, H.; Zhang, Y. A New Design of Mental State Classification for Subject Independent BCI Systems. IRBM 2019, 40, 297–305. [Google Scholar] [CrossRef]

- Zhao, X.; Zhao, J.; Cai, W.; Wu, S. Transferring Common Spatial Filters With Semi-Supervised Learning for Zero-Training Motor Imagery Brain-Computer Interface. IEEE Access 2019, 7, 58120–58130. [Google Scholar] [CrossRef]

- Kwon, O.; Lee, M.; Guan, C.; Lee, S. Subject-Independent Brain-Computer Interfaces Based on Deep Convolutional Neural Networks. IEEE Trans. Neural Netw. Learn. Syst. 2019, 31, 3839–3852. [Google Scholar] [CrossRef]

- Yao, L.; Sheng, X.; Zhang, D.; Jiang, N.; Mrachacz-Kersting, N.; Zhu, X.; Farina, D. A Stimulus-Independent Hybrid BCI Based on Motor Imagery and Somatosensory Attentional Orientation. IEEE Trans. Neural Syst. Rehabil. Eng. 2017, 25, 1674–1682. [Google Scholar] [CrossRef]

- Shu, X.; Chen, S.; Yao, L.; Sheng, X.; Zhang, D.; Jiang, N.; Jia, J.; Zhu, X. Fast Recognition of BCI-Inefficient Users Using Physiological Features from EEG Signals: A Screening Study of Stroke Patients. Front. Neurosci. 2018, 12, 93. [Google Scholar] [CrossRef] [Green Version]

- Acqualagna, L.; Botrel, L.; Vidaurre, C.; Kübler, A.; Blankertz, B. Large-scale assessment of a fully automatic co-adaptive motor imagery-based brain computer interface. PLoS ONE 2016, 11, e0148886. [Google Scholar] [CrossRef] [Green Version]

- Shu, X.; Chen, S.; Chai, G.; Sheng, X.; Jia, J.; Zhu, X. Neural Modulation by Repetitive Transcranial Magnetic Stimulation (rTMS) for BCI Enhancement in Stroke Patients. In Proceedings of the Annual International Conference of the IEEE Engineering in Medicine and Biology Society, EMBS, Honolulu, HI, USA, 17–21 July 2018; pp. 2272–2275. [Google Scholar] [CrossRef]

- Sannelli, C.; Vidaurre, C.; Müller, K.R.; Blankertz, B. A large scale screening study with a SMR-based BCI: Categorization of BCI users and differences in their SMR activity. PLoS ONE 2019, 14, e0207351. [Google Scholar] [CrossRef] [Green Version]

- Zhang, R.; Li, X.; Wang, Y.; Liu, B.; Shi, L.; Chen, M.; Zhang, L.; Hu, Y. Using Brain Network Features to Increase the Classification Accuracy of MI-BCI Inefficiency Subject. IEEE Access 2019, 7, 74490–74499. [Google Scholar] [CrossRef]

- Ahn, M.; Cho, H.; Ahn, S.; Jun, S.C. User’s Self-Prediction of Performance in Motor Imagery Brain–Computer Interface. Front. Hum. Neurosci. 2018, 12, 59. [Google Scholar] [CrossRef] [Green Version]

- Darvishi, S.; Gharabaghi, A.; Ridding, M.C.; Abbott, D.; Baumert, M. Reaction Time Predicts Brain–Computer Interface Aptitude. IEEE J. Transl. Eng. Health Med. 2018, 6, 1–11. [Google Scholar] [CrossRef]

- Müller, J.; Vidaurre, C.; Schreuder, M.; Meinecke, F.; von Bünau, P.; Müller, K.R. A mathematical model for the two-learners problem. J. Neural Eng. 2017, 14. [Google Scholar] [CrossRef]

- Vidaurre, C.; Sannelli, C.; Müller, K.R.; Blankertz, B. Machine-learning-based coadaptive calibration for Brain-computer interfaces. Neural Comput. 2011, 23, 791–816. [Google Scholar] [CrossRef] [PubMed]

- Lee, M.H.; Kwon, O.Y.; Kim, Y.J.; Kim, H.K.; Lee, Y.E.; Williamson, J.; Fazli, S.; Lee, S.W. EEG dataset and OpenBMI toolbox for three BCI paradigms: An investigation into BCI illiteracy. GigaScience 2019, 8, giz002. [Google Scholar] [CrossRef] [PubMed]

- Sannelli, C.; Vidaurre, C.; Müller, K.R.; Blankertz, B. Ensembles of adaptive spatial filters increase BCI performance: An online evaluation. J. Neural Eng. 2016, 13, 46003. [Google Scholar] [CrossRef] [PubMed]

- Vidaurre, C.; Murguialday, A.R.; Haufe, S.; Gómez, M.; Müller, K.R.; Nikulin, V. Enhancing sensorimotor BCI performance with assistive afferent activity: An online evaluation. NeuroImage 2019, 199, 375–386. [Google Scholar] [CrossRef]

- Yu, Y.; Zhou, Z.; Yin, E.; Jiang, J.; Tang, J.; Liu, Y.; Hu, D. Toward brain-actuated car applications: Self-paced control with a motor imagery-based brain-computer interface. Comput. Biol. Med. 2016, 77, 148–155. [Google Scholar] [CrossRef] [PubMed]

- Cheng, P.; Autthasan, P.; Pijarana, B.; Chuangsuwanich, E.; Wilaiprasitporn, T. Towards Asynchronous Motor Imagery-Based Brain-Computer Interfaces: A joint training scheme using deep learning. In Proceedings of the 2018 IEEE Region 10 Conference (TENCON 2018), Jeju, Korea, 28–31 October 2018; pp. 1994–1998. [Google Scholar] [CrossRef] [Green Version]

- Sanchez-Ante, G.; Antelis, J.; Gudiño-Mendoza, B.; Falcon, L.; Sossa, H. Dendrite morphological neural networks for motor task recognition from electroencephalographic signals. Biomed. Signal Process. Control 2018, 44, 12–24. [Google Scholar] [CrossRef]

- Jiang, Y.; Hau, N.T.; Chung, W. Semiasynchronous BCI Using Wearable Two-Channel EEG. IEEE Trans. Cognit. Dev. Syst. 2018, 10, 681–686. [Google Scholar] [CrossRef]

- Sun, Y.; Feng, Z.; Zhang, J.; Zhou, Q.; Luo, J. Asynchronous motor imagery detection based on a target guided sub-band filter using wavelet packets. In Proceedings of the 2017 29th Chinese Control And Decision Conference (CCDC), Chongqing, China, 28–30 May 2017; pp. 4850–4855. [Google Scholar] [CrossRef]

- He, S.; Zhou, Y.; Yu, T.; Zhang, R.; Huang, Q.; Chuai, L.; Madah-Ul-Mustafa; Gu, Z.; Yu, Z.L.; Tan, H.; et al. EEG- and EOG-based Asynchronous Hybrid BCI: A System Integrating a Speller, a Web Browser, an E-mail Client, and a File Explorer. IEEE Trans. Neural Syst. Rehabil. Eng. 2019, 1. [Google Scholar] [CrossRef]

- Yu, Y.; Liu, Y.; Jiang, J.; Yin, E.; Zhou, Z.; Hu, D. An Asynchronous Control Paradigm Based on Sequential Motor Imagery and Its Application in Wheelchair Navigation. IEEE Trans. Neural Syst. Rehabil. Eng. 2018, 26, 2367–2375. [Google Scholar] [CrossRef]

- An, H.; Kim, J.; Lee, S. Design of an asynchronous brain-computer interface for control of a virtual Avatar. In Proceedings of the 2016 4th International Winter Conference on Brain-Computer Interface (BCI), Gangwon, Korea, 22–24 February 2016; pp. 1–2. [Google Scholar] [CrossRef]

- Jiang, Y.; He, J.; Li, D.; Jin, J.; Shen, Y. Signal classification algorithm in motor imagery based on asynchronous brain-computer interface. In Proceedings of the 2019 IEEE International Instrumentation and Measurement Technology Conference (I2MTC), Auckland, New Zealand, 20–23 May 2019; pp. 1–5. [Google Scholar] [CrossRef]

- Yousefi, R.; Rezazadeh, A.; Chau, T. Development of a robust asynchronous brain-switch using ErrP-based error correction. J. Neural Eng. 2019, 16. [Google Scholar] [CrossRef]

- Yu, Y.; Zhou, Z.; Liu, Y.; Jiang, J.; Yin, E.; Zhang, N.; Wang, Z.; Liu, Y.; Wu, X.; Hu, D. Self-Paced Operation of a Wheelchair Based on a Hybrid Brain-Computer Interface Combining Motor Imagery and P300 Potential. IEEE Trans. Neural Syst. Rehabil. Eng. 2017, 25, 2516–2526. [Google Scholar] [CrossRef]

- Wang, L.; Wu, X. Classification of Four-Class Motor Imagery EEG Data Using Spatial Filtering. In Proceedings of the 2008 2nd International Conference on Bioinformatics and Biomedical Engineering, Shanghai, China, 16–18 May 2008; pp. 2153–2156. [Google Scholar] [CrossRef]

- Grosse-Wentrup, M.; Buss, M. Multiclass Common Spatial Patterns and Information Theoretic Feature Extraction. IEEE Trans. Biomed. Eng. 2008, 55, 1991–2000. [Google Scholar] [CrossRef] [PubMed]

- Christensen, S.M.; Holm, N.S.; Puthusserypady, S. An Improved Five Class MI Based BCI Scheme for Drone Control Using Filter Bank CSP. In Proceedings of the 2019 7th International Winter Conference on Brain-Computer Interface (BCI), Gangwon, Korea, 18–20 February 2019; pp. 1–6. [Google Scholar] [CrossRef] [Green Version]

- Razzak, I.; Blumenstein, M.; Xu, G. Multiclass Support Matrix Machines by Maximizing the Inter-Class Margin for Single Trial EEG Classification. IEEE Trans. Neural Syst. Rehabil. Eng. 2019, 27, 1117–1127. [Google Scholar] [CrossRef]

- Barachant, A.; Bonnet, S.; Congedo, M.; Jutten, C. Multiclass Brain Computer Interface Classification by Riemannian Geometry. IEEE Trans. Biomed. Eng. 2012, 59, 920–928. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Faiz, M.Z.A.; Al-Hamadani, A.A. Online Brain Computer Interface Based Five Classes EEG To Control Humanoid Robotic Hand. In Proceedings of the 2019 42nd International Conference on Telecommunications and Signal Processing (TSP), Budapest, Hungary, 1–3 July 2019; pp. 406–410. [Google Scholar] [CrossRef]

- Aliakbaryhosseinabadi, S.; Kamavuako, E.N.; Jiang, N.; Farina, D.; Mrachacz-Kersting, N. Classification of Movement Preparation Between Attended and Distracted Self-Paced Motor Tasks. IEEE Trans. Biomed. Eng. 2019, 66, 3060–3071. [Google Scholar] [CrossRef] [PubMed]

- Dagaev, N.; Volkova, K.; Ossadtchi, A. Latent variable method for automatic adaptation to background states in motor imagery BCI. J. Neural Eng. 2017, 15, 016004. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Mondini, V.; Mangia, A.; Cappello, A. EEG-Based BCI System Using Adaptive Features Extraction and Classification Procedures. Comput. Intell. Neurosci. 2016, 2016, 1–14. [Google Scholar] [CrossRef] [Green Version]

- Schwarz, A.; Brandstetter, J.; Pereira, J.; Muller-Putz, G.R. Direct comparison of supervised and semi-supervised retraining approaches for co-adaptive BCIs. Med. Biol. Eng. Comput. 2019, 57, 2347–2357. [Google Scholar] [CrossRef] [Green Version]

- Saeedi, S.; Chavarriaga, R.; Millán, J.d.R. Long-Term Stable Control of Motor-Imagery BCI by a Locked-In User Through Adaptive Assistance. IEEE Trans. Neural Syst. Rehabil. Eng. 2017, 25, 380–391. [Google Scholar] [CrossRef] [Green Version]

- Perdikis, S.; Leeb, R.; Millán, J.d.R. Context-aware adaptive spelling in motor imagery BCI. J. Neural Eng. 2016, 13, 036018. [Google Scholar] [CrossRef]

- Faller, J.; Vidaurre, C.; Solis-Escalante, T.; Neuper, C.; Scherer, R. Autocalibration and Recurrent Adaptation: Towards a Plug and Play Online ERD-BCI. IEEE Trans. Neural Syst. Rehabil. Eng. 2012, 20, 313–319. [Google Scholar] [CrossRef]

- Raza, H.; Rathee, D.; Zhou, S.M.; Cecotti, H.; Prasad, G. Covariate shift estimation based adaptive ensemble learning for handling non-stationarity in motor imagery related EEG-based brain-computer interface. Neurocomputing 2019, 343, 154–166. [Google Scholar] [CrossRef]

- Rong, H.; Li, C.; Bao, R.; Chen, B. Incremental Adaptive EEG Classification of Motor Imagery-based BCI. In Proceedings of the 2018 International Joint Conference on Neural Networks (IJCNN), Rio de Janeiro, Brazil, 8–13 July 2018; pp. 1–7. [Google Scholar] [CrossRef]

- Sharghian, V.; Rezaii, T.Y.; Farzamnia, A.; Tinati, M.A. Online Dictionary Learning for Sparse Representation-Based Classification of Motor Imagery EEG. In Proceedings of the 2019 27th Iranian Conference on Electrical Engineering (ICEE), Yazd, Iran, 30 April–2 May 2019; pp. 1793–1797. [Google Scholar] [CrossRef]

- Zhang, Z.; Foong, R.; Phua, K.S.; Wang, C.; Ang, K.K. Modeling EEG-based Motor Imagery with Session to Session Online Adaptation. In Proceedings of the 2018 40th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Honolulu, HI, USA, 18–21 July 2018; pp. 1988–1991. [Google Scholar] [CrossRef]

- Andreu-Perez, J.; Cao, F.; Hagras, H.; Yang, G. A Self-Adaptive Online Brain–Machine Interface of a Humanoid Robot Through a General Type-2 Fuzzy Inference System. IEEE Trans. Fuzzy Syst. 2018, 26, 101–116. [Google Scholar] [CrossRef] [Green Version]

- Ang, K.K.; Guan, C. EEG-Based Strategies to Detect Motor Imagery for Control and Rehabilitation. IEEE Trans. Neural Syst. Rehabil. Eng. 2017, 25, 392–401. [Google Scholar] [CrossRef]

- Abdalsalam, E.; Yusoff, M.Z.; Malik, A.; Kamel, N.; Mahmoud, D. Modulation of sensorimotor rhythms for brain-computer interface using motor imagery with online feedback. Signal Image Video Process. 2017. [Google Scholar] [CrossRef]

- Ron-Angevin, R.; Díaz-Estrella, A. Brain–computer interface: Changes in performance using virtual reality techniques. Neurosci. Lett. 2009, 449, 123–127. [Google Scholar] [CrossRef]

- Achanccaray, D.; Pacheco, K.; Carranza, E.; Hayashibe, M. Immersive Virtual Reality Feedback in a Brain Computer Interface for Upper Limb Rehabilitation. In Proceedings of the 2018 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Miyazaki, Japan, 7–10 October 2018; pp. 1006–1010. [Google Scholar] [CrossRef]

- Alchalabi, B.; Faubert, J. A Comparison between BCI Simulation and Neurofeedback for Forward/Backward Navigation in Virtual Reality. Comput. Intell. Neurosci. 2019, 2019, 1–12. [Google Scholar] [CrossRef] [Green Version]

- Asensio-Cubero, J.; Gan, J.; Palaniappan, R. Multiresolution Analysis over Graphs for a Motor Imagery Based Online BCI Game. Comput. Biol. Med. 2015, 68. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Jianjun, M.; He, B. Exploring Training Effect in 42 Human Subjects Using a Non-invasive Sensorimotor Rhythm Based Online BCI. Front. Hum. Neurosci. 2019, 13, 128. [Google Scholar] [CrossRef] [Green Version]

- Kim, S.; Lee, M.; Lee, S. Self-paced training on motor imagery-based BCI for minimal calibration time. In Proceedings of the 2017 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Banff, AB, Canada, 5–8 October 2017; pp. 2297–2301. [Google Scholar] [CrossRef]

- Jeunet, C.; N’Kaoua, B.; Lotte, F. Advances in user-training for mental-imagery-based BCI control: Psychological and cognitive factors and their neural correlates. In Brain-Computer Interfaces: Lab Experiments to Real-World Applications; Progress in Brain Research; Coyle, D., Ed.; Elsevier: Amsterdam, The Netherlands, 2016; Chapter 1; Volume 228, pp. 3–35. [Google Scholar] [CrossRef]

- Zhang, H.; Sun, Y.; Li, J.; Wang, F.; Wang, Z. Covert Verb Reading Contributes to Signal Classification of Motor Imagery in BCI. IEEE Trans. Neural Syst. Rehabil. Eng. 2018, 26, 45–50. [Google Scholar] [CrossRef]

- Wang, L.; Liu, X.; Liang, Z.; Yang, Z.; Hu, X. Analysis and classification of hybrid BCI based on motor imagery and speech imagery. Measurement 2019, 147, 106842. [Google Scholar] [CrossRef]

- Wang, Z.; Zhou, Y.; Chen, L.; Gu, B.; Liu, S.; Xu, M.; Qi, H.; He, F.; Ming, D. A BCI based visual-haptic neurofeedback training improves cortical activations and classification performance during motor imagery. J. Neural Eng. 2019, 16, 066012. [Google Scholar] [CrossRef]

- Liburkina, S.; Vasilyev, A.; Yakovlev, L.; Gordleeva, S.; Kaplan, A. Motor imagery based brain computer interface with vibrotactile interaction. Zhurnal Vysshei Nervnoi Deyatelnosti Imeni I.P. Pavlova 2017, 67, 414–429. [Google Scholar] [CrossRef]

- Pillette, L.; Jeunet, C.; Mansencal, B.; N’Kambou, R.; N’Kaoua, B.; Lotte, F. A physical learning companion for Mental-Imagery BCI User Training. Int. J. Hum.-Comput. Stud. 2020, 136, 102380. [Google Scholar] [CrossRef] [Green Version]

- Škola, F.; Tinková, S.; Liarokapis, F. Progressive Training for Motor Imagery Brain-Computer Interfaces Using Gamification and Virtual Reality Embodiment. Front. Hum. Neurosci. 2019, 13, 329. [Google Scholar] [CrossRef] [PubMed]

| A Summary of Feature Extraction Methods | ||

|---|---|---|

| Temporal methods | Statistical Features [19,20] | , |

| Hijorth features [21] | ||

| RMS [20] | ||

| IEEG [20] | ||

| Fractal Dimension [22] | ||

| Autoregressive modeling [21] | where {a for i = 1,…, p} are AR model coefficients and p is the model order | |

| Peak-Valley modeling [23,24] | Cosine angles, Euclidean distance between neighbouring peak and valley points | |

| Entropy [25,26] | ||

| Quaternion modeling [27] | ||

| Spectral methods | Band power [19] | |

| Spectral Entropy [26] | , P(f) is PSD of signal | |

| Spectral statistical Features [19] | Mean Peak Frequency, Mean Power, Variance of Central Frequency etc. | |

| Time-frequency Methods | STFT [28] | |

| Wavelet transform [29] | ||

| EMD [30] | ||

| Spatial Methods | CSP [31] | |

| BSS [32,33] | Approaches like ICA, CCD estimate | |

| Spatio-temporal methods | Sample covariance matrices [34] | Where is covariance matrix of single trial |

| Mapping Function | Objective Function | Min/Max Algorithm | |

|---|---|---|---|

| DT | Gain impurity, information gain | greedy algorithm | |

| LDA | Eigen value solver | ||

| SVM | Quadratic Programming | ||

| R-SVM | |||

| MLP | MSE, Cross entropy, Hinge | SGD, Adam | |

| CNN | |||

| MDRM | Averaging approaches |

| Prediction | |||||

|---|---|---|---|---|---|

| Target | |||||

| − | − | − | − | − | |

| Metrics | Two Class | Multi Class (N-Class) | |

|---|---|---|---|

| BCI decoding capabilty | Accuracy | , where | |

| Kappa | , | ||

| sensitivity | , where | ||

| ITR | |||

| User encoding capability | Stability | ||

| Distinct | |||

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Singh, A.; Hussain, A.A.; Lal, S.; Guesgen, H.W. A Comprehensive Review on Critical Issues and Possible Solutions of Motor Imagery Based Electroencephalography Brain-Computer Interface. Sensors 2021, 21, 2173. https://doi.org/10.3390/s21062173

Singh A, Hussain AA, Lal S, Guesgen HW. A Comprehensive Review on Critical Issues and Possible Solutions of Motor Imagery Based Electroencephalography Brain-Computer Interface. Sensors. 2021; 21(6):2173. https://doi.org/10.3390/s21062173

Chicago/Turabian StyleSingh, Amardeep, Ali Abdul Hussain, Sunil Lal, and Hans W. Guesgen. 2021. "A Comprehensive Review on Critical Issues and Possible Solutions of Motor Imagery Based Electroencephalography Brain-Computer Interface" Sensors 21, no. 6: 2173. https://doi.org/10.3390/s21062173