Axle box bearings, a key component of the running gear of rail vehicles, are used to transform the rotational motion of the wheelsets into the longitudinal motion of the car body along the track. Any fault in an axle box bearing poses a serious threat to the running safety of rail vehicles [1]. Unlike other kinds of bearings, axle box bearings operate under harsh conditions and are heavily loaded by the wheel–rail contact force, the dynamic vibration generated by the car body and bogie frame, the meshing excitation of the gears in the gearbox power transmission, and the dynamic load excited by the bearing itself [2]. It is therefore necessary to monitor the health condition of these bearings to ensure the running safety of rail vehicles.

At present, condition monitoring and health diagnosis methods for bearings in railway applications fall into two main groups: on-board monitoring based on vibration or temperature, and trackside monitoring based on acoustics. On-board monitoring requires additional detection equipment to be installed on the bogie, which greatly increases manufacturing cost, while the acoustic signals measured by trackside monitoring are seriously affected by ambient noise [3,4]. To reduce monitoring cost and further improve monitoring accuracy and reliability, a new bearing monitoring method is needed.

In contrast, many technologies developed for structural health monitoring could potentially be applied to bearing condition monitoring. Structural health monitoring is widely used to assess the performance and safety state of structures by monitoring, analyzing, and identifying the loads acting on them and their structural responses [5,6,7]. Displacement is an important index for evaluating structural state and performance [6] because it can be converted into corresponding physical indices for structural safety assessment. Static and dynamic characteristics of a structure, such as bearing capacity [8], deflection [9], deformation [10], load distribution [11], load input [12], influence line [13], influence surface [14], and modal parameters [15,16], can thus be reflected by its displacement. Among displacement measurement techniques, computer-vision-based structural displacement monitoring has attracted increasing attention because of its many advantages, e.g., it is non-contact, highly accurate, time- and cost-saving, and capable of multi-point monitoring [17]. Computer vision methods for measuring structural displacement have been applied to many bridge health monitoring tasks. Yoon et al. [18] used a UAV (unmanned aerial vehicle) carrying a 4K camera to monitor the displacement of a steel truss bridge and obtained the absolute displacement of the structure free from the influence of UAV movement. Ye et al. [19] used a computer-controlled programmable industrial camera to monitor the behavior of an arch bridge under vehicle load, obtained the influence line of its structural displacement, and realized real-time online displacement monitoring of multiple bridges. Tian et al. [20] combined an acceleration sensor with a visual displacement measurement method to carry out an impact test on a pedestrian bridge, constructing the frequency response function of the structure to estimate its modes, mode shapes, damping, and modal scale factors and to monitor its impact displacement. Beyond bridge health monitoring, many other engineering applications also use this approach to monitor and identify structural displacements. For example, Chang et al. combined computer-vision-based structural displacement monitoring, feature extraction, and a support vector machine into vibration monitoring systems for the on-site diagnosis and performance evaluation of industrial motors, and carried out preventive maintenance experiments [21]. Liu studied a track displacement monitoring system in which a fixed trackside camera captured images and a digital image processing algorithm then calculated the actual displacement of the track, achieving accurate non-contact measurement of track displacement [22]. These studies across different engineering applications show that monitoring structural states by detecting displacement signals with computer vision is effective, convenient, and accurate.

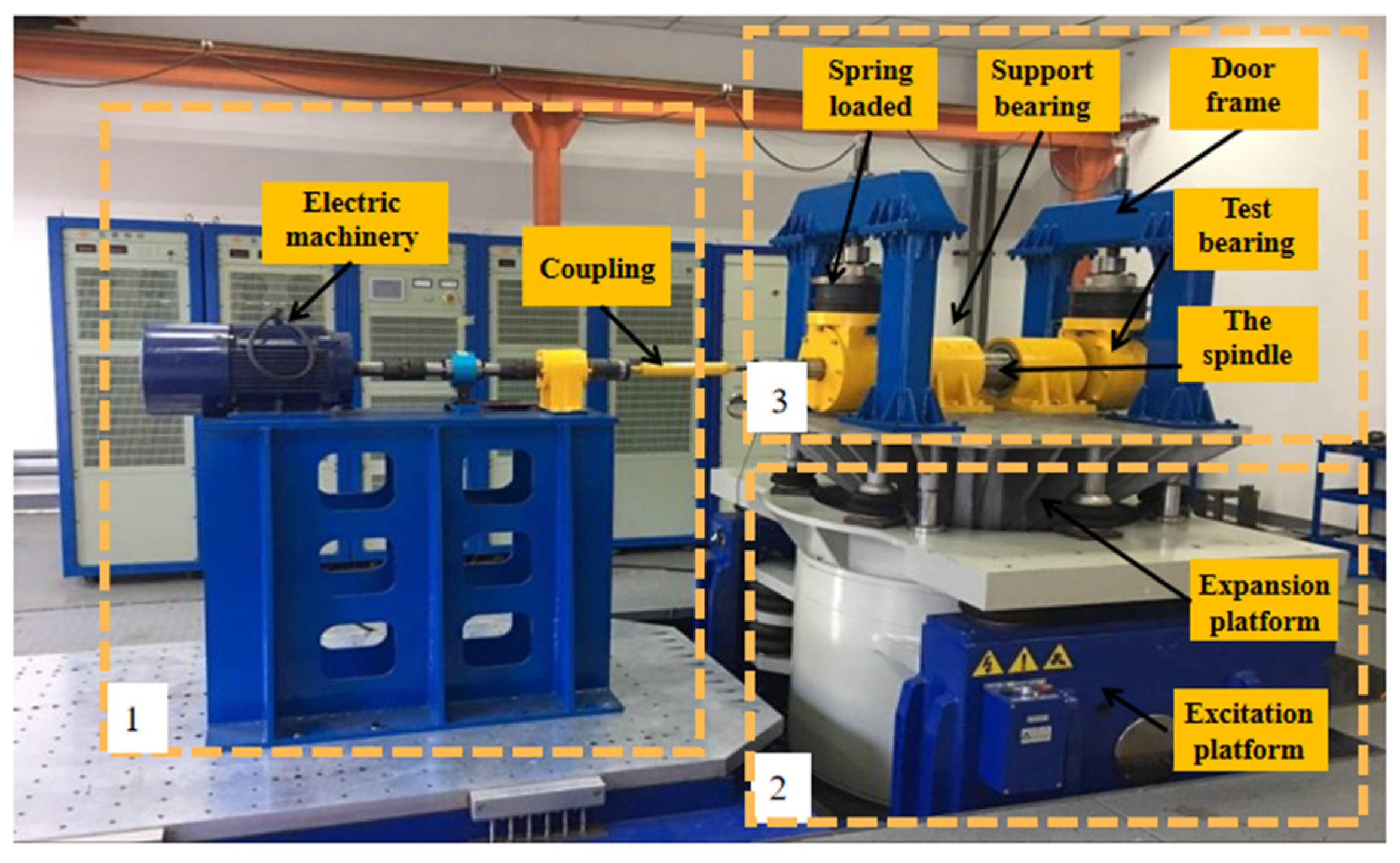

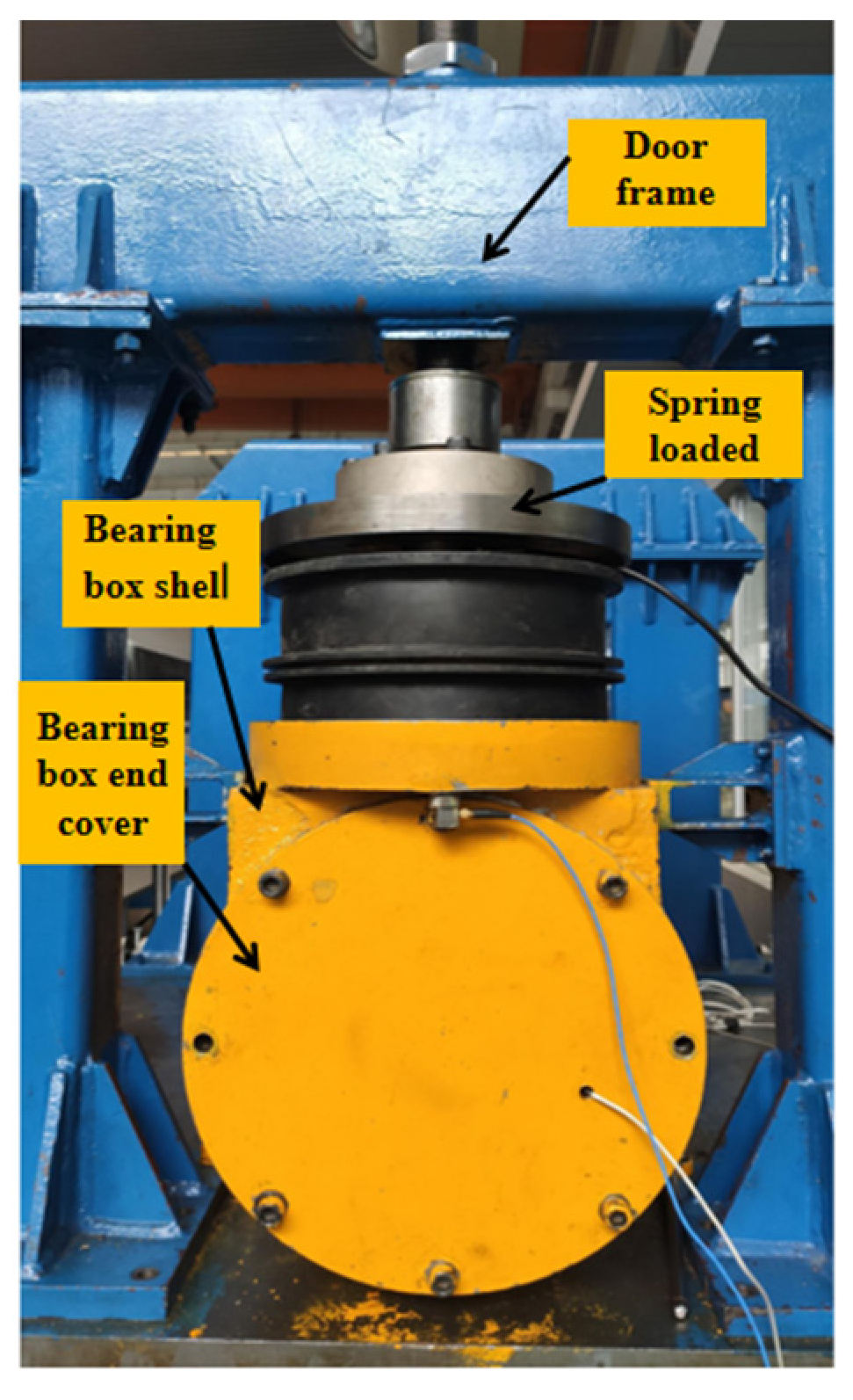

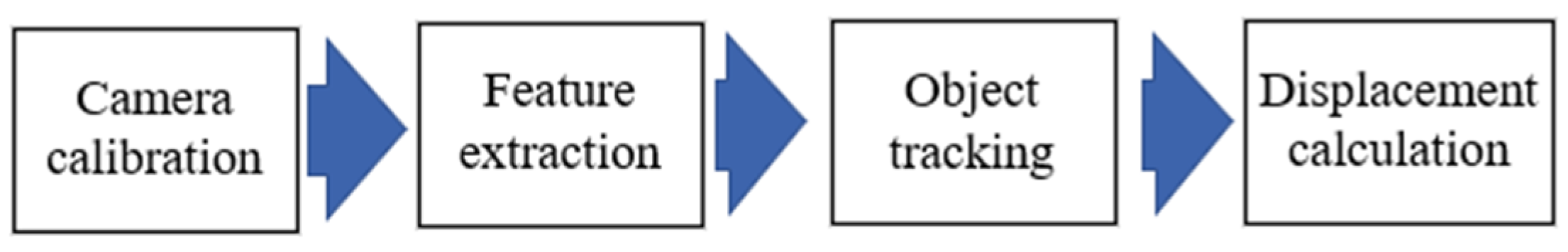

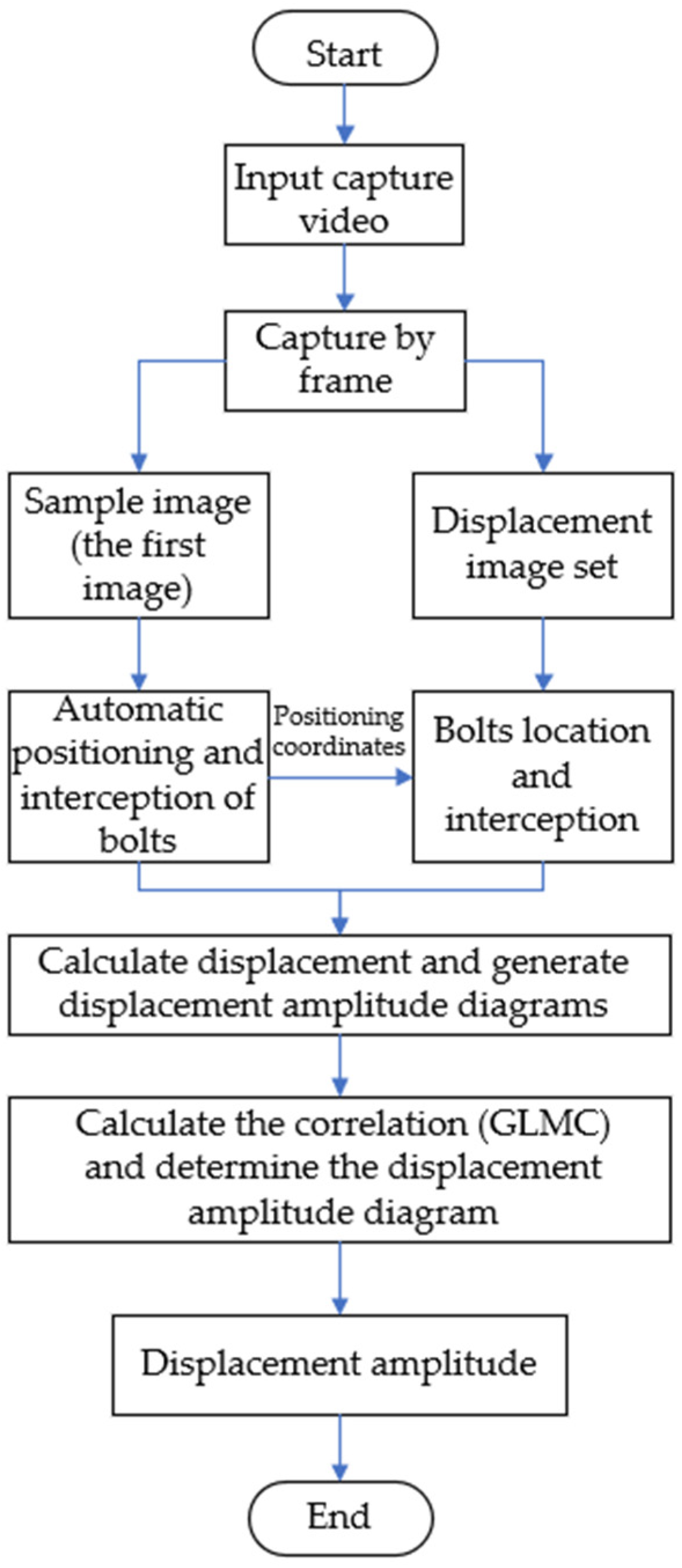

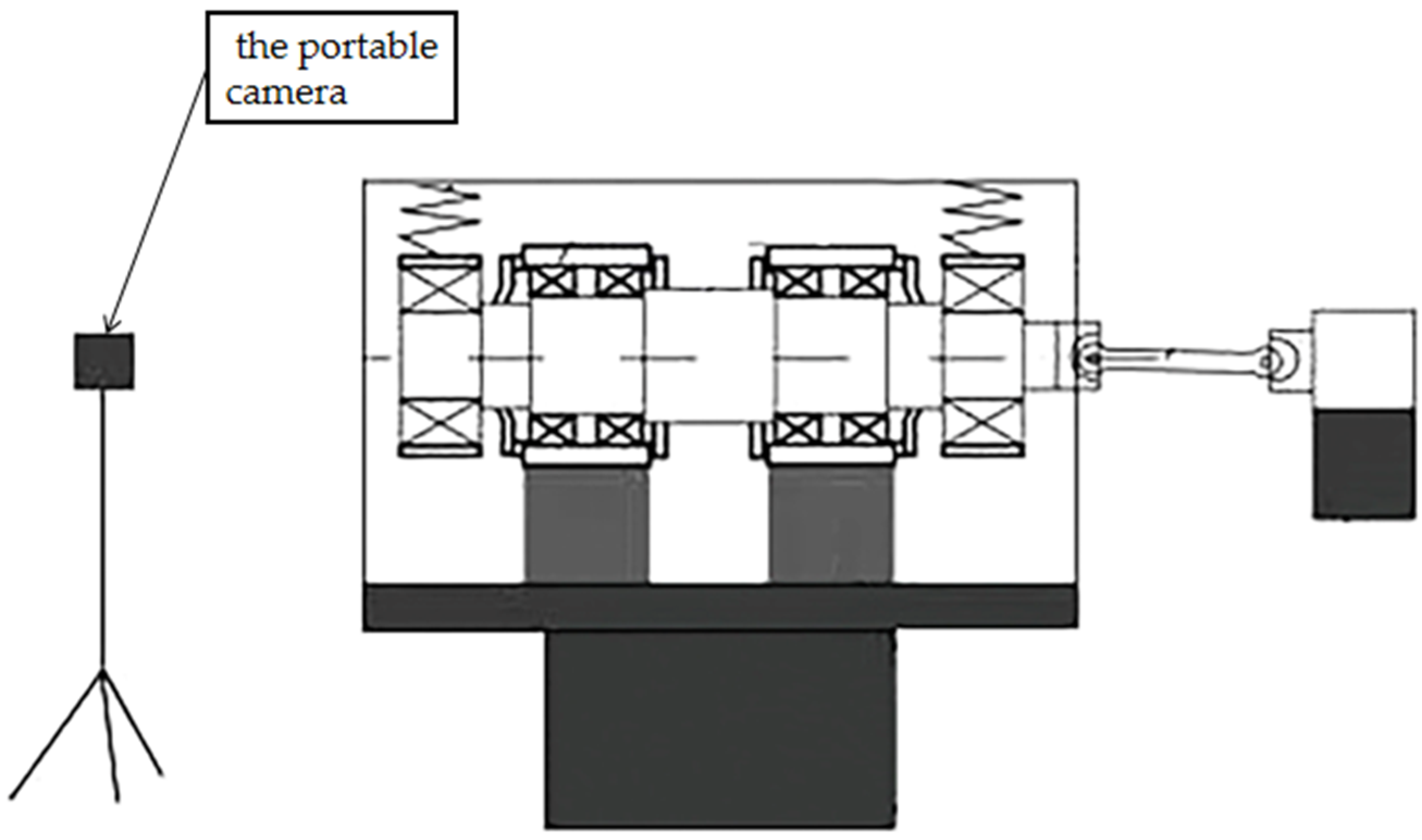

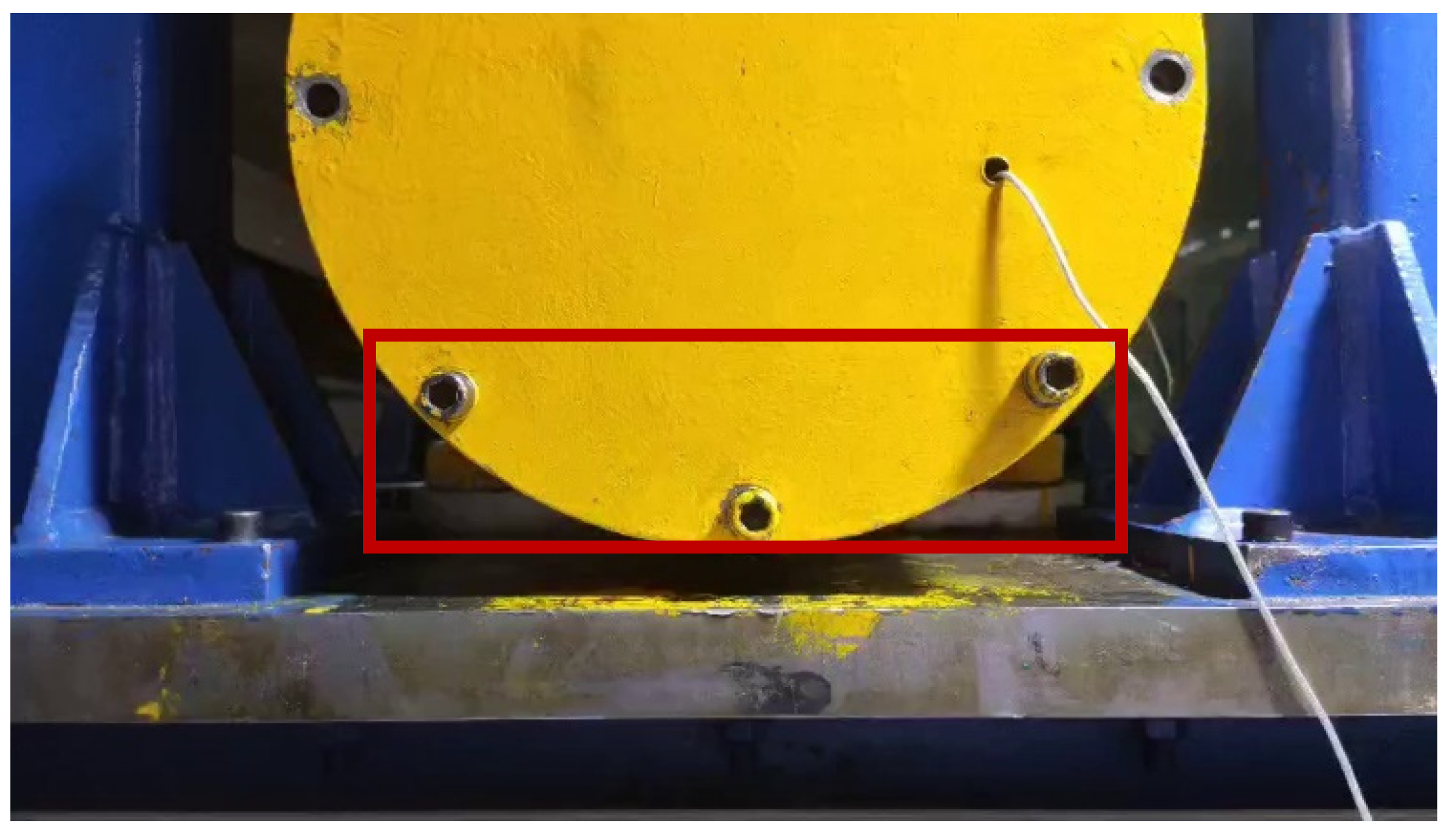

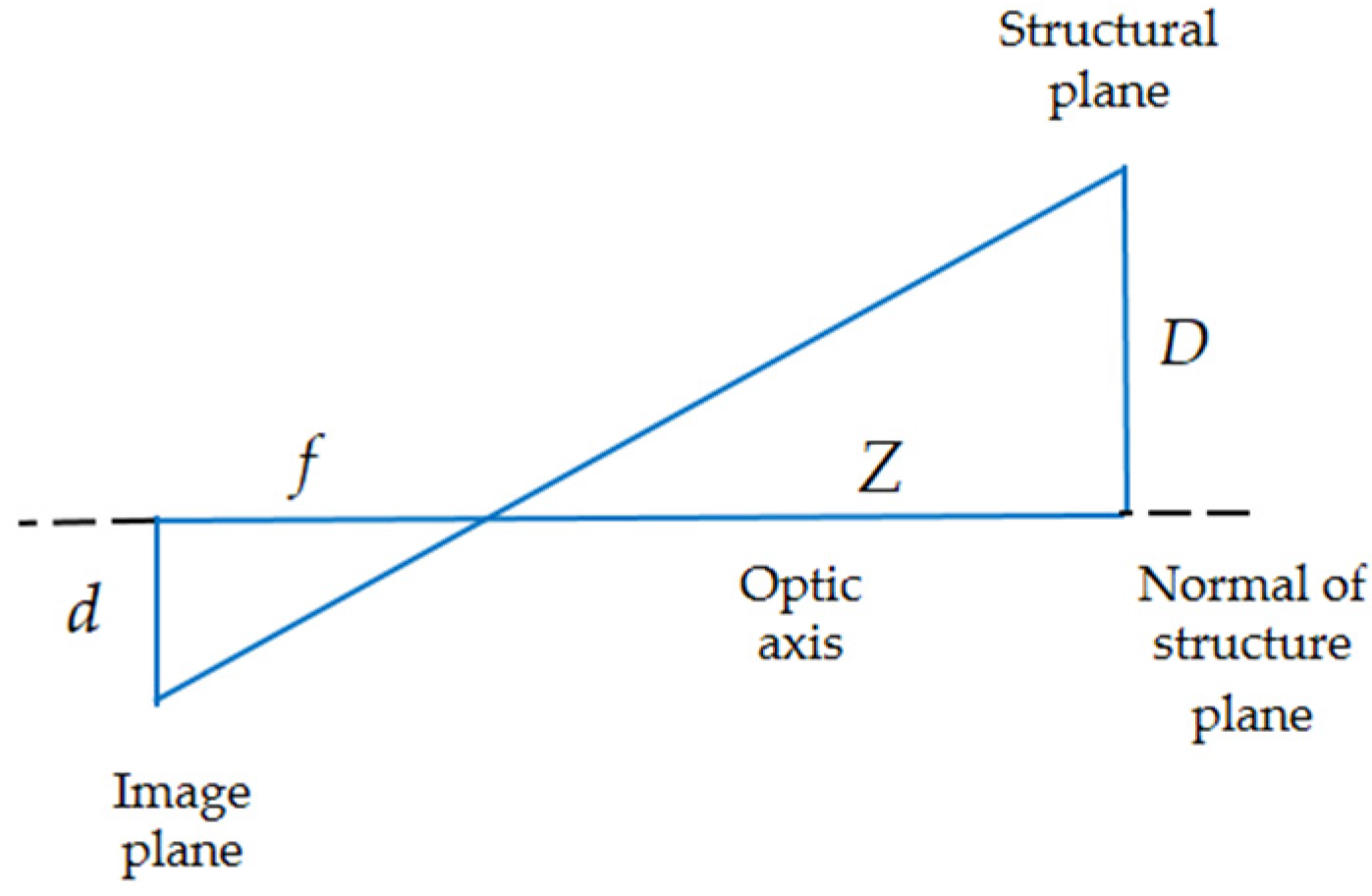

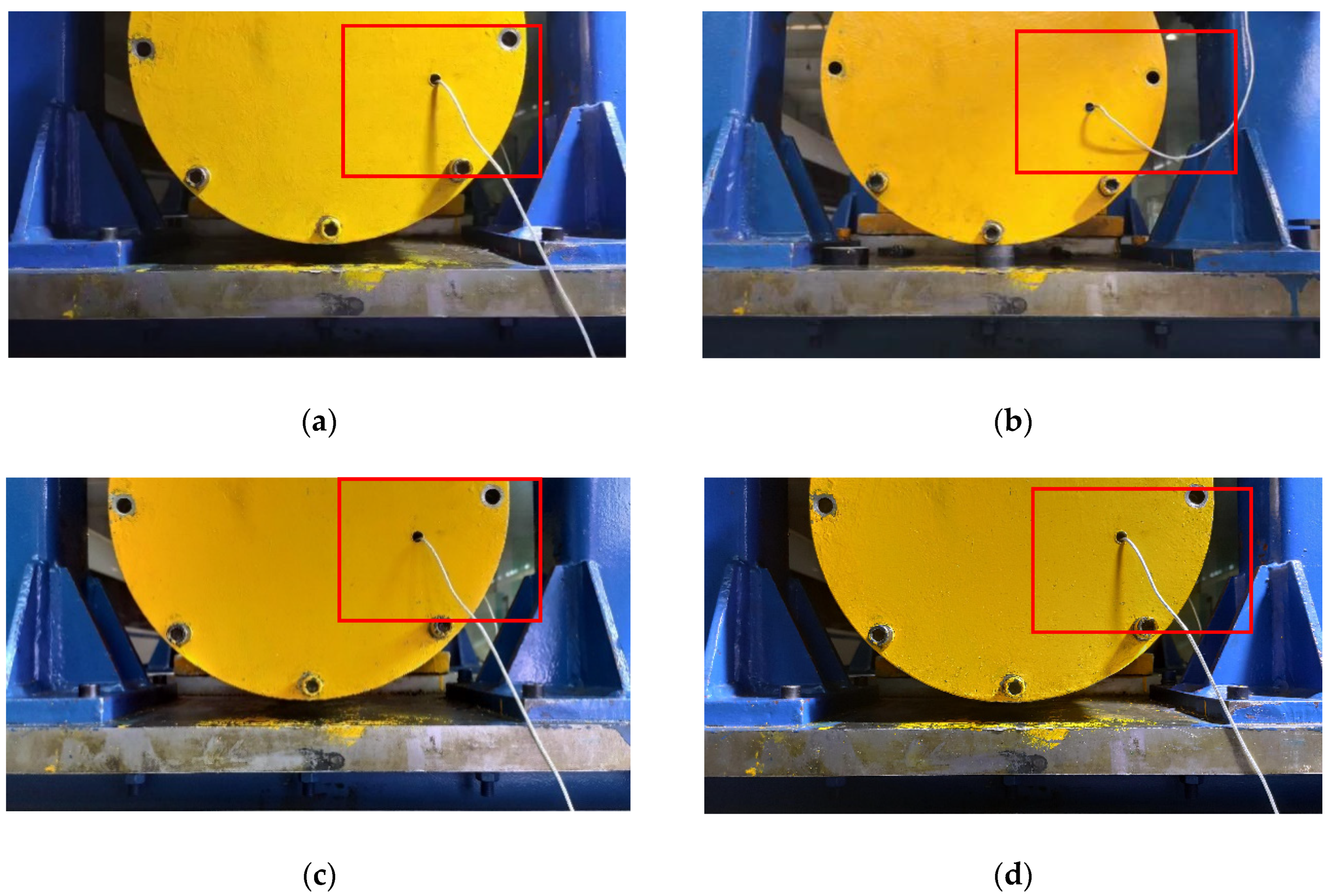

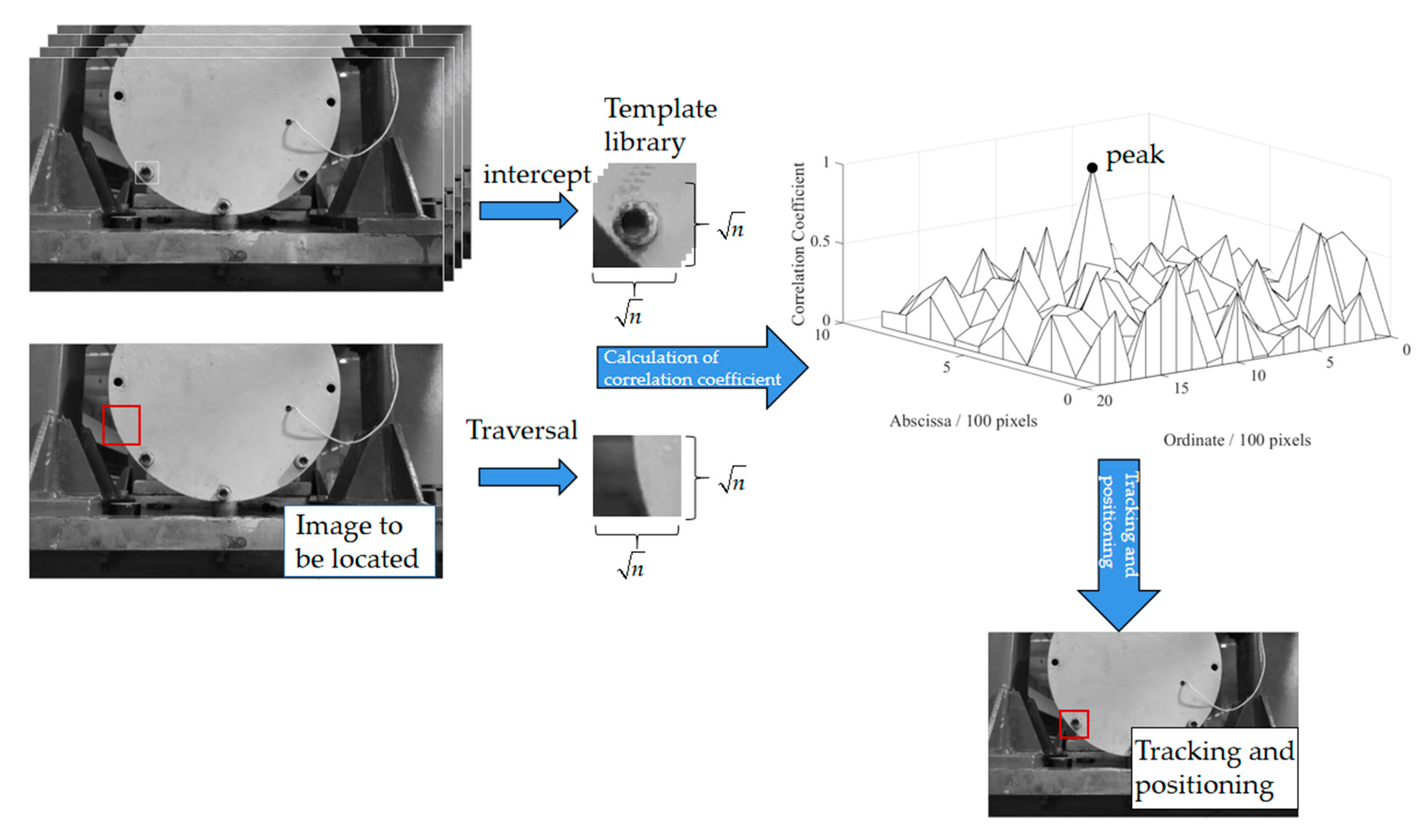

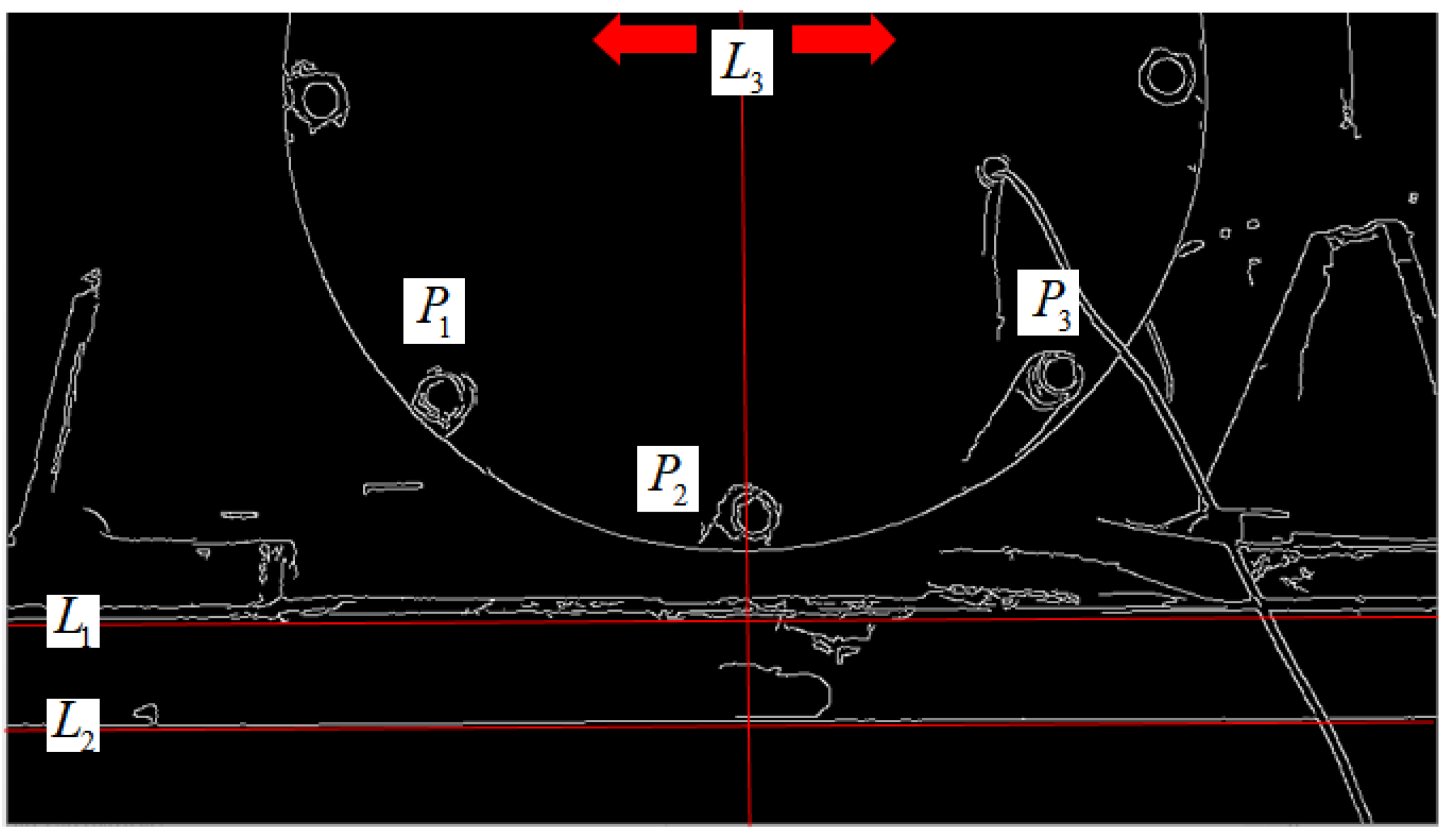

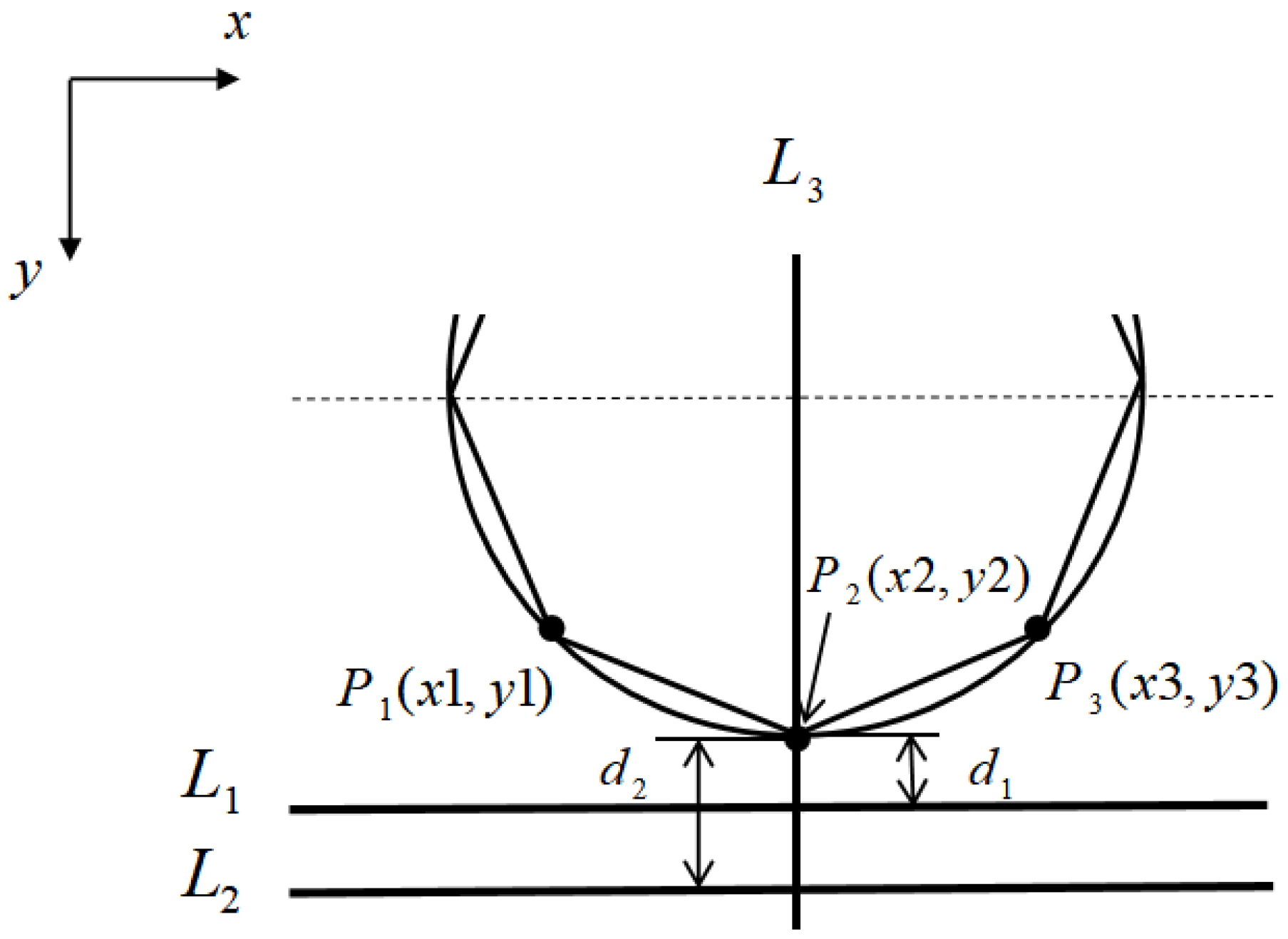

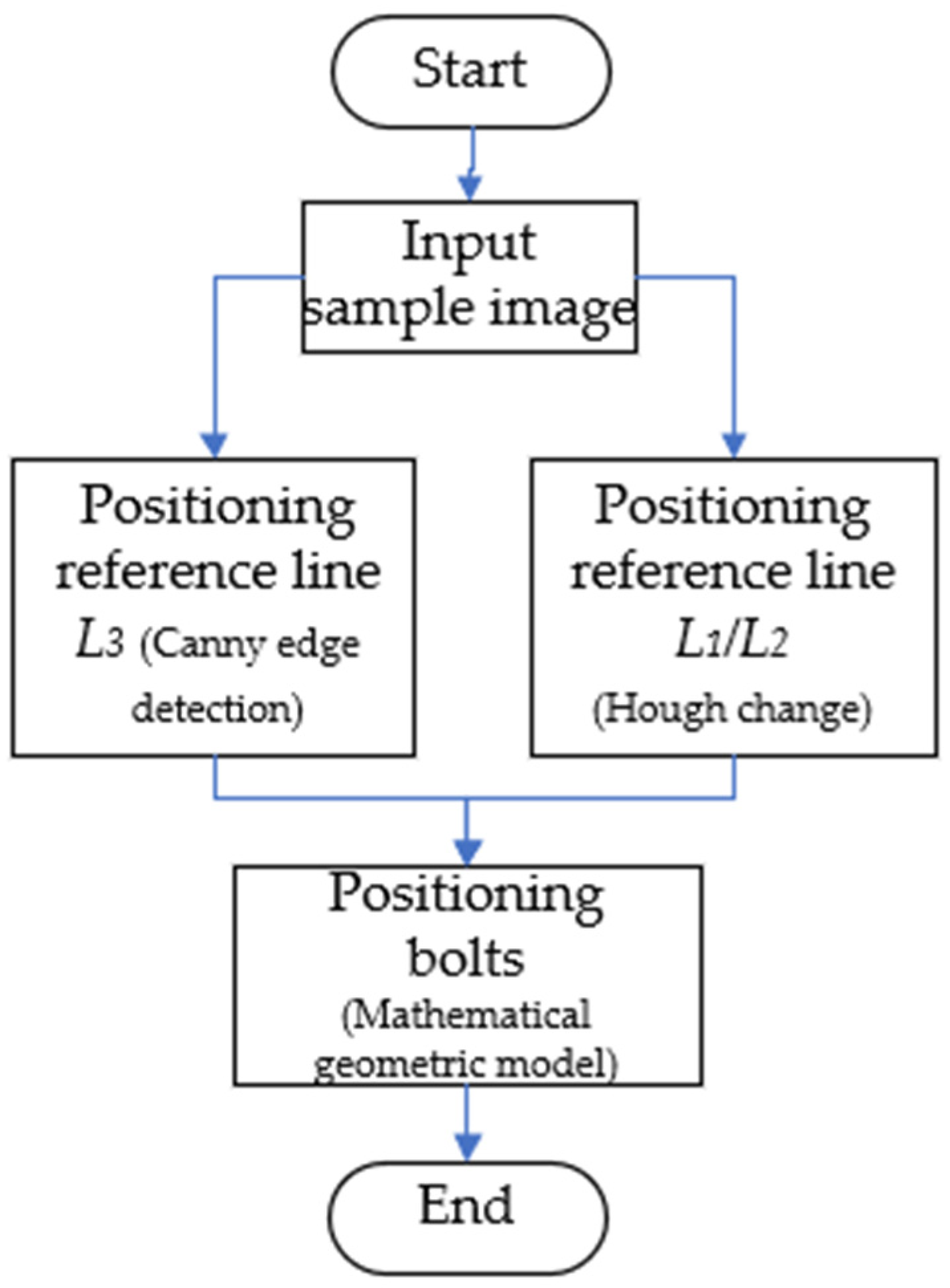

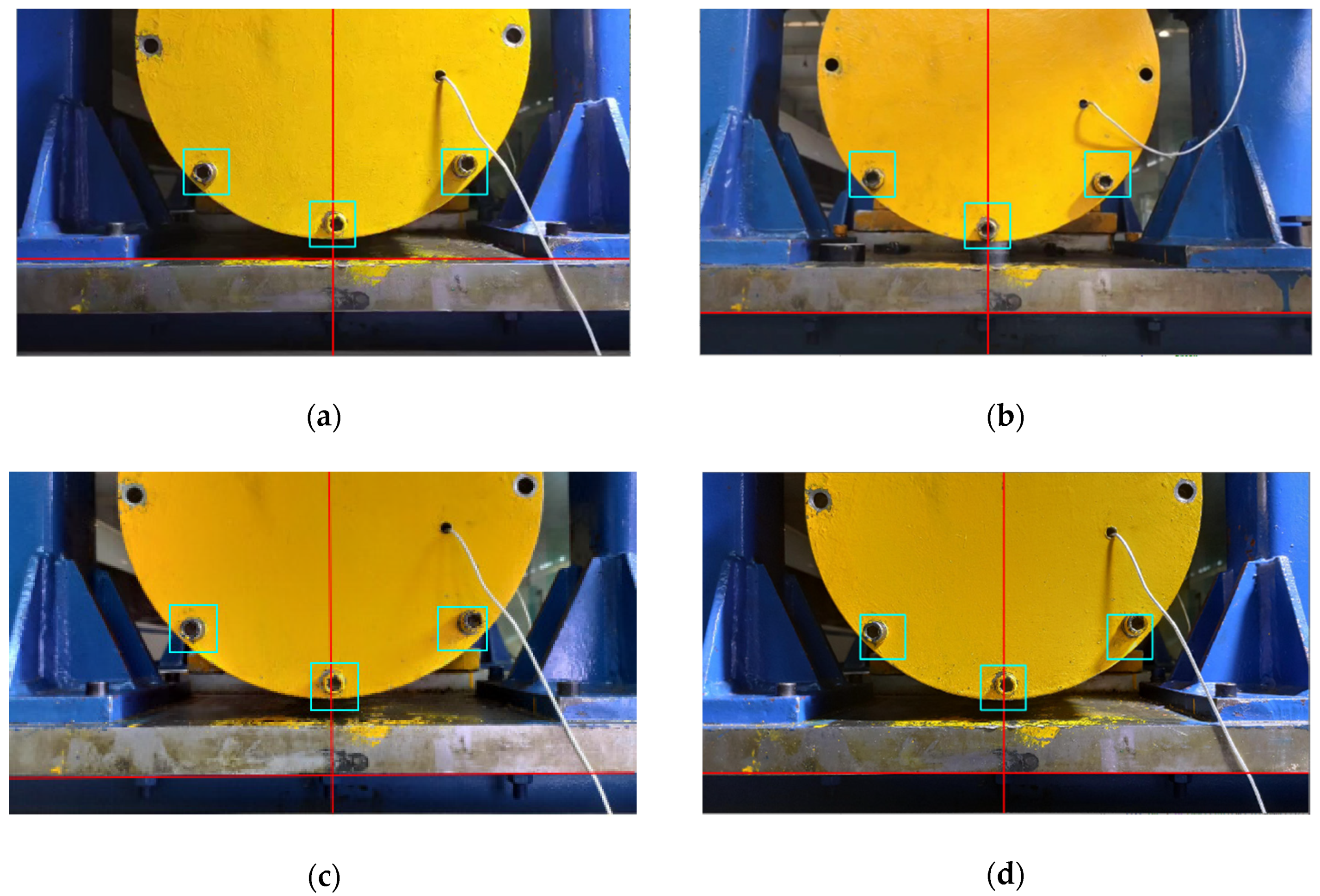

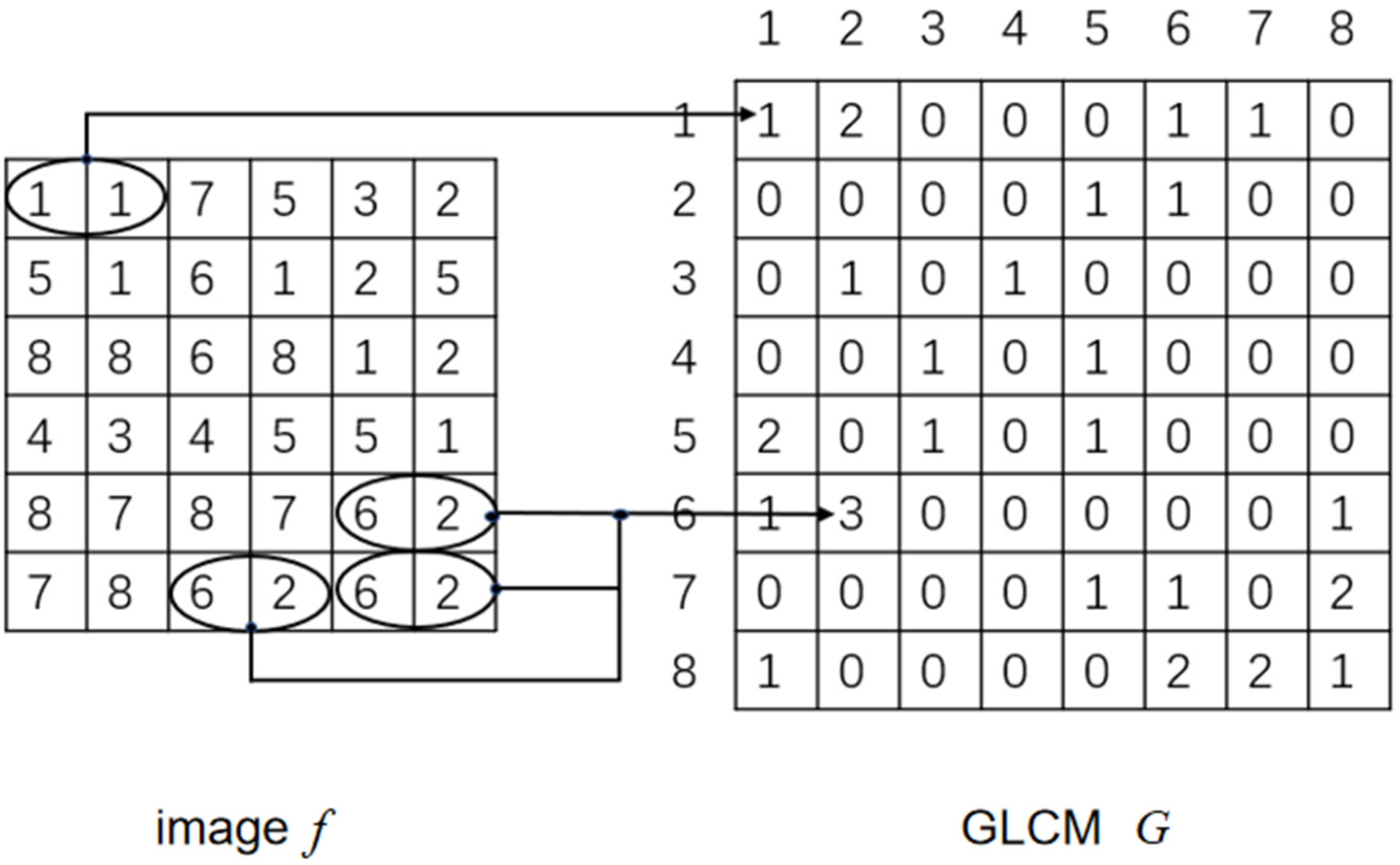

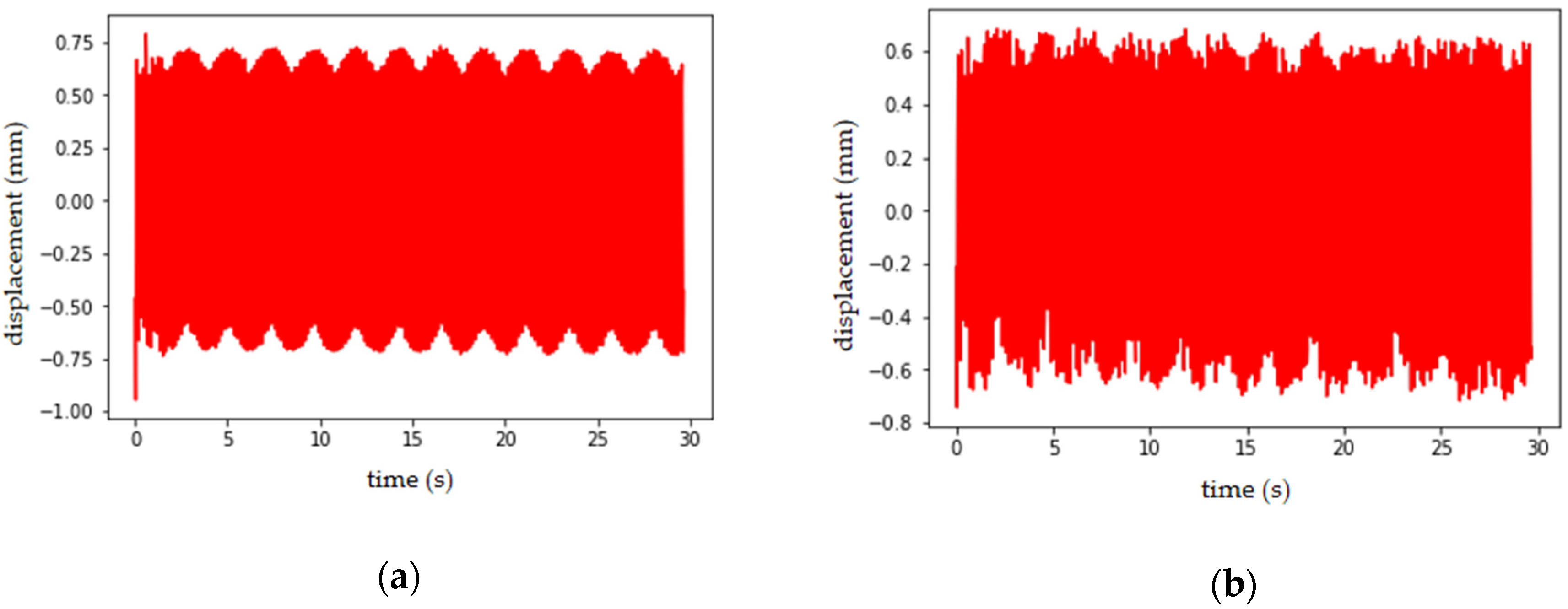

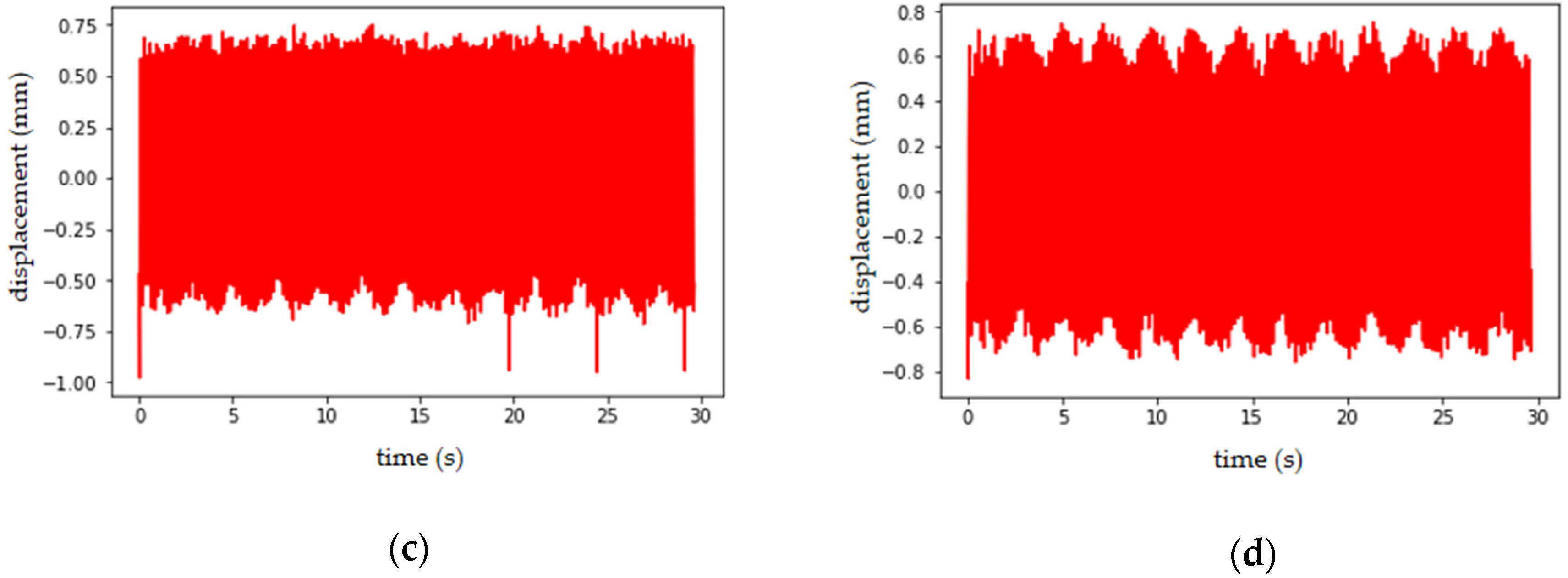

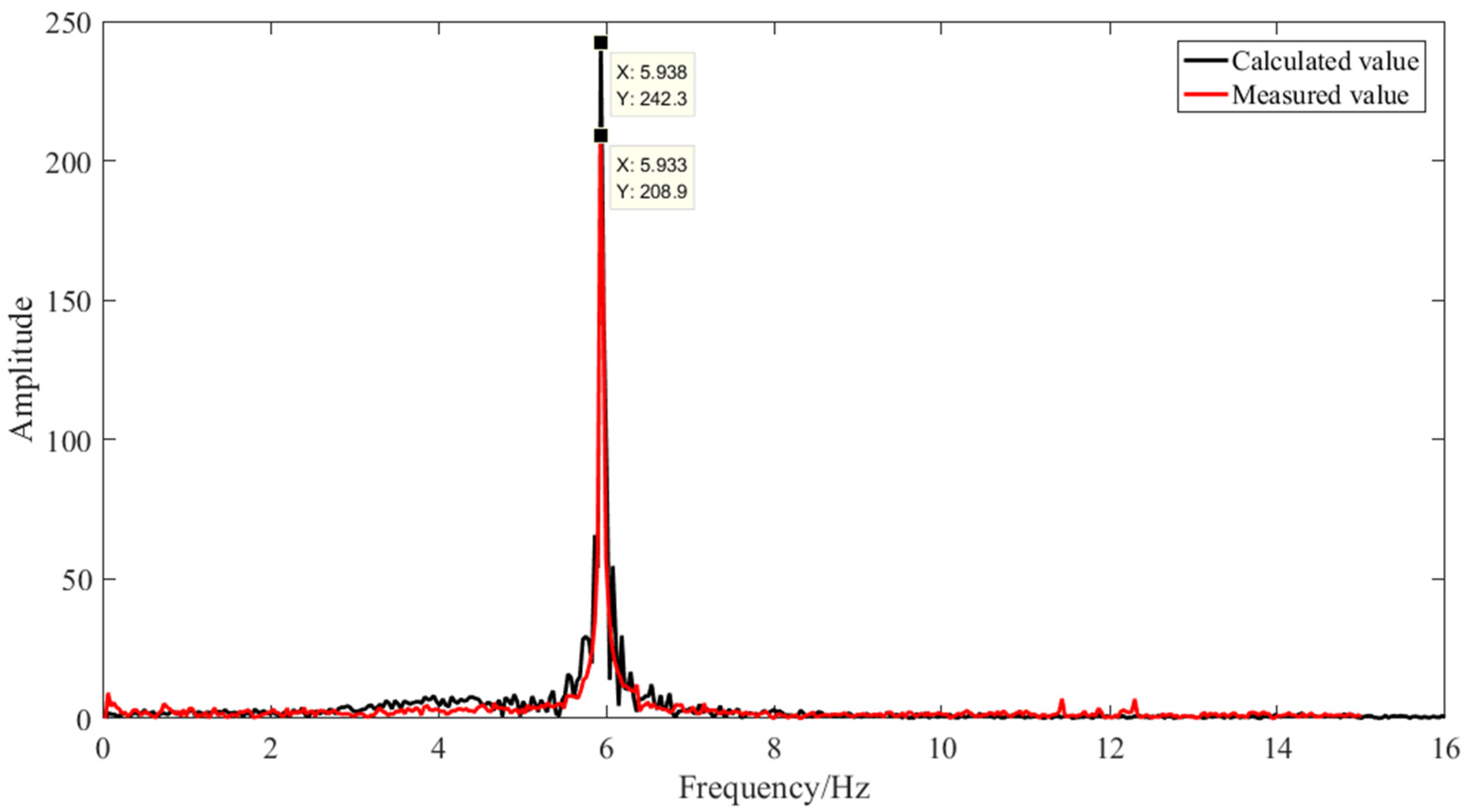

Traditional bearing monitoring methods have several shortcomings: on-board monitoring requires additional sensing devices on the bogie, which increases manufacturing cost, and trackside monitoring is susceptible to ambient noise. In this article, a computer-vision-based displacement monitoring method is proposed to monitor the vertical displacement of the axle box bearing of rail vehicles under simulated real working conditions, with the aim of achieving non-contact, high-accuracy condition monitoring of axle box bearings and, ultimately, axle box bearing fault diagnosis and preventive maintenance. First, a portable camera images the test platform to detect the displacement amplitude of the bolts, from which the state of the bearing is calculated using the phase correlation method. Next, the vertical displacement amplitudes of the bearing system are derived by comparing the correlation of the images' gray-level co-occurrence matrices (GLCM). Finally, for verification, the measured displacement is checked against measurements from a laser displacement sensor. In the following sections, the proposed approach is used to monitor the displacement of the platform in several sets of tests under different working conditions, and the experimental results are analyzed. The associated open research challenges are then discussed.
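The phase correlation step can be illustrated with a minimal sketch. It assumes two grayscale frames of the same region of interest (for example, a window around one bolt) stored as NumPy arrays, and estimates the integer-pixel translation between them from the peak of the normalized cross-power spectrum. The function name `phase_correlation_shift` and the synthetic test frames are hypothetical, this is a textbook phase correlation rather than the authors' implementation, and the sub-pixel refinement and pixel-to-millimetre calibration that a real measurement would need are omitted.

```python
import numpy as np


def phase_correlation_shift(ref: np.ndarray, cur: np.ndarray) -> tuple[int, int]:
    """Estimate the integer (dy, dx) translation that maps `ref` onto `cur`.

    Hypothetical helper: a textbook phase correlation, not the paper's code.
    """
    F_ref = np.fft.fft2(ref.astype(float))
    F_cur = np.fft.fft2(cur.astype(float))
    # Normalized cross-power spectrum: keep only the phase difference.
    cross = F_cur * np.conj(F_ref)
    cross /= np.abs(cross) + 1e-12  # small epsilon avoids division by zero
    # The inverse FFT is (ideally) a delta function located at the shift.
    corr = np.abs(np.fft.ifft2(cross))
    peak = np.unravel_index(np.argmax(corr), corr.shape)
    # Peak indices past the midpoint correspond to negative (wrapped) shifts.
    shifts = [int(p) if p <= n // 2 else int(p) - n
              for p, n in zip(peak, corr.shape)]
    return shifts[0], shifts[1]


# Usage: shift a random texture 3 px down and 5 px left, then recover it.
rng = np.random.default_rng(0)
frame0 = rng.random((64, 64))
frame1 = np.roll(frame0, shift=(3, -5), axis=(0, 1))
dy, dx = phase_correlation_shift(frame0, frame1)
print(dy, dx)  # 3 -5
```

Only `dy` matters for vertical axle box motion; multiplying it by a calibrated scale factor (mm per pixel, an assumption here) would convert it to a physical displacement. OpenCV's `cv2.phaseCorrelate` provides a sub-pixel variant of the same idea.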
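The GLCM correlation feature mentioned above can be sketched with NumPy alone. How the correlations are compared to derive displacement is not specified in this section, so the sketch only shows how a symmetric, normalized GLCM and its Haralick correlation could be computed for one image; the helper names `glcm` and `glcm_correlation`, the quantization to `levels` gray levels, and the single pixel `offset` are all assumptions made for illustration.

```python
import numpy as np


def glcm(image: np.ndarray, levels: int = 8,
         offset: tuple[int, int] = (1, 0)) -> np.ndarray:
    """Symmetric, normalized GLCM for one non-negative pixel offset (dy, dx).

    Hypothetical helper; `levels` and `offset` are illustrative choices.
    """
    img = np.asarray(image, dtype=float)
    lo, hi = img.min(), img.max()
    # Quantize intensities into integer gray levels 0 .. levels-1.
    if hi == lo:
        q = np.zeros(img.shape, dtype=int)
    else:
        q = np.floor((img - lo) / (hi - lo) * (levels - 1) + 0.5).astype(int)
    dy, dx = offset
    h, w = q.shape
    a = q[: h - dy, : w - dx]  # reference pixels
    b = q[dy:, dx:]            # neighbours displaced by (dy, dx)
    m = np.zeros((levels, levels))
    np.add.at(m, (a.ravel(), b.ravel()), 1)  # count co-occurring level pairs
    m = m + m.T  # symmetric: count each pair in both directions
    return m / m.sum()


def glcm_correlation(p: np.ndarray) -> float:
    """Haralick correlation of a normalized GLCM `p` (range -1 .. 1)."""
    i, j = np.indices(p.shape)
    mu_i, mu_j = (i * p).sum(), (j * p).sum()
    sd_i = np.sqrt((((i - mu_i) ** 2) * p).sum())
    sd_j = np.sqrt((((j - mu_j) ** 2) * p).sum())
    if sd_i == 0 or sd_j == 0:
        return 1.0  # constant image: treated as 1 in this sketch
    return float(((i - mu_i) * (j - mu_j) * p).sum() / (sd_i * sd_j))


# Usage: horizontal stripes are anti-correlated vertically, correlated horizontally.
stripes = np.tile(np.array([[0.0], [1.0]]), (8, 8))  # 16 x 8, rows alternate 0/1
print(glcm_correlation(glcm(stripes, levels=2, offset=(1, 0))))  # -1.0
print(glcm_correlation(glcm(stripes, levels=2, offset=(0, 1))))  # 1.0
```

One plausible use, again an assumption, is to compute this feature for the same bolt region in successive frames and track how it changes as the region moves vertically.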