Deep Learning on Oral Squamous Cell Carcinoma Ex Vivo Fluorescent Confocal Microscopy Data: A Feasibility Study

Abstract

1. Introduction

2. Materials and Methods

2.1. Patient Cohort

2.2. Tissue Processing and Ex Vivo FCM

2.3. Tissue Annotation

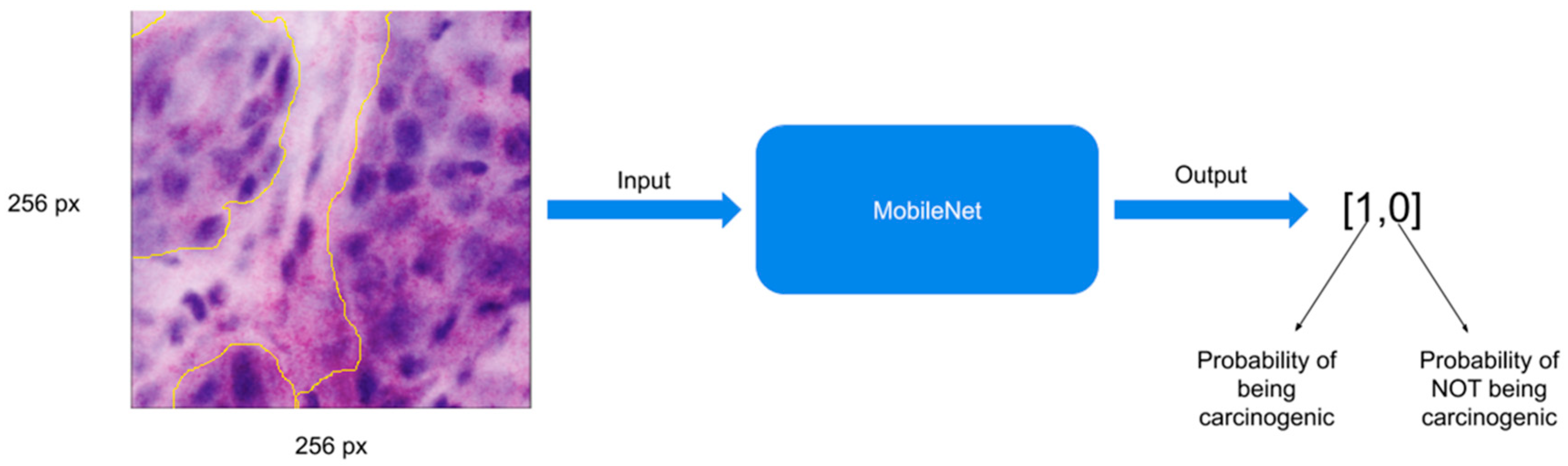

2.4. Image Pre-Processing and Convolutional Neural Networks

2.5. Training the MobileNet Model

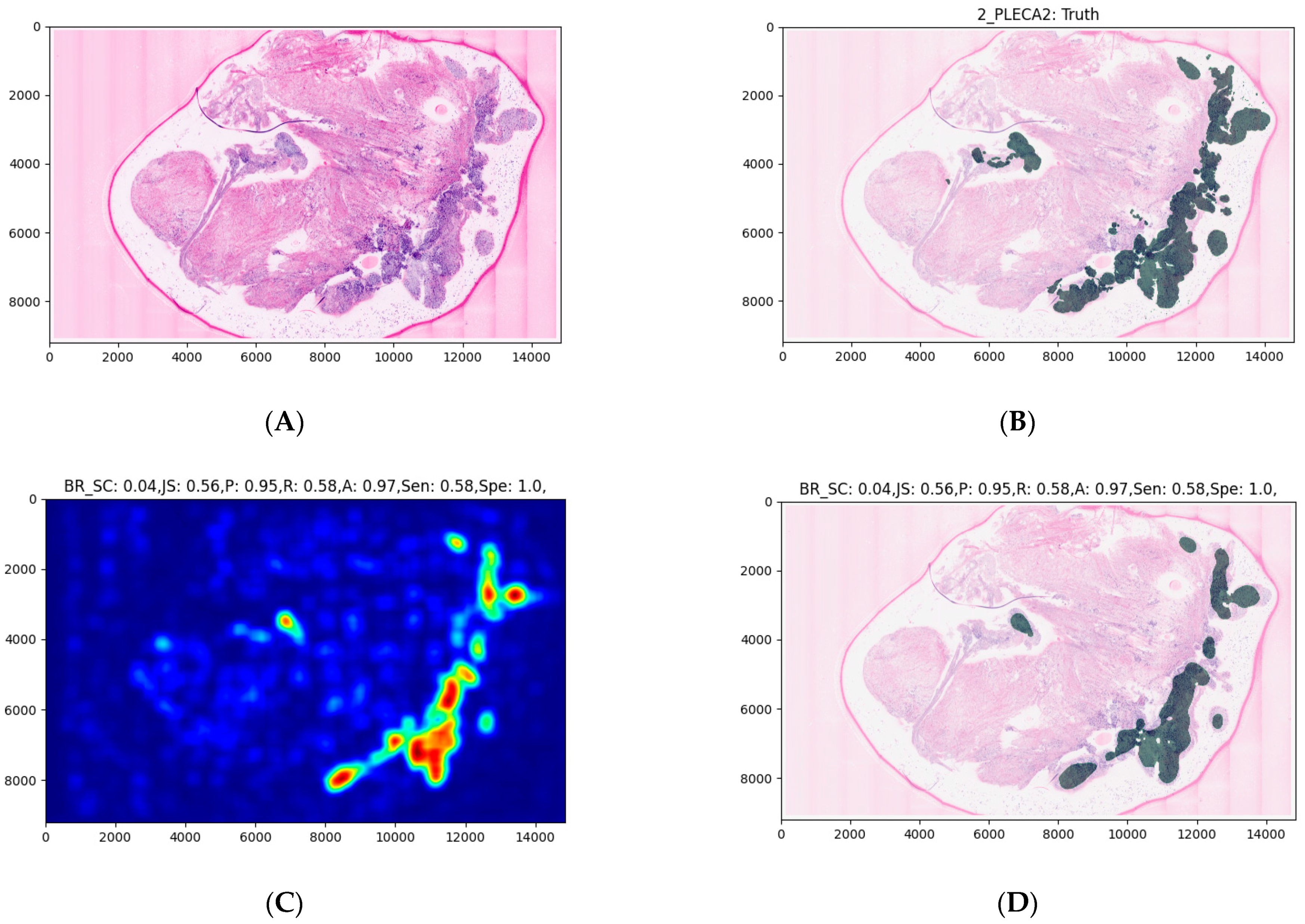

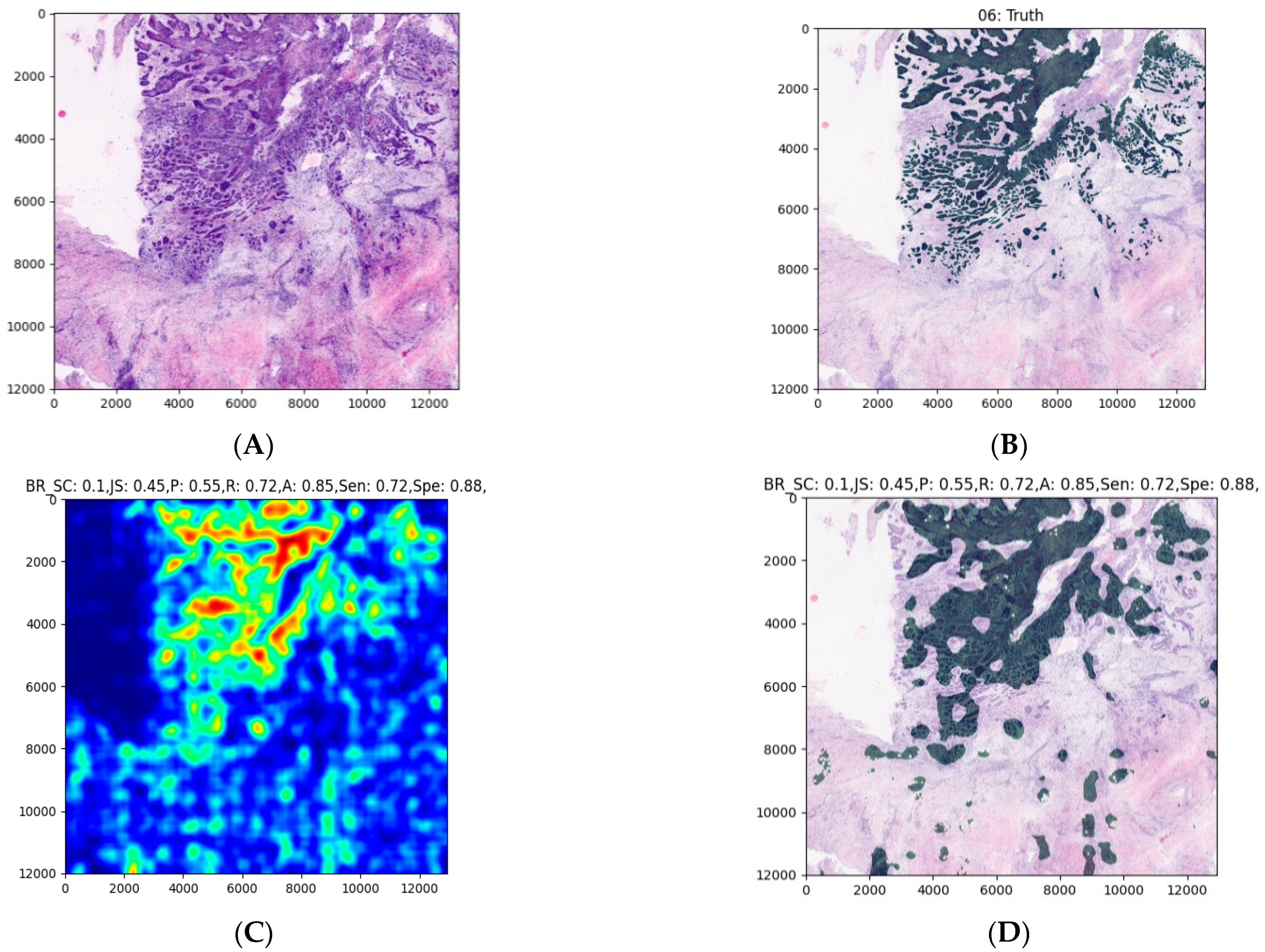

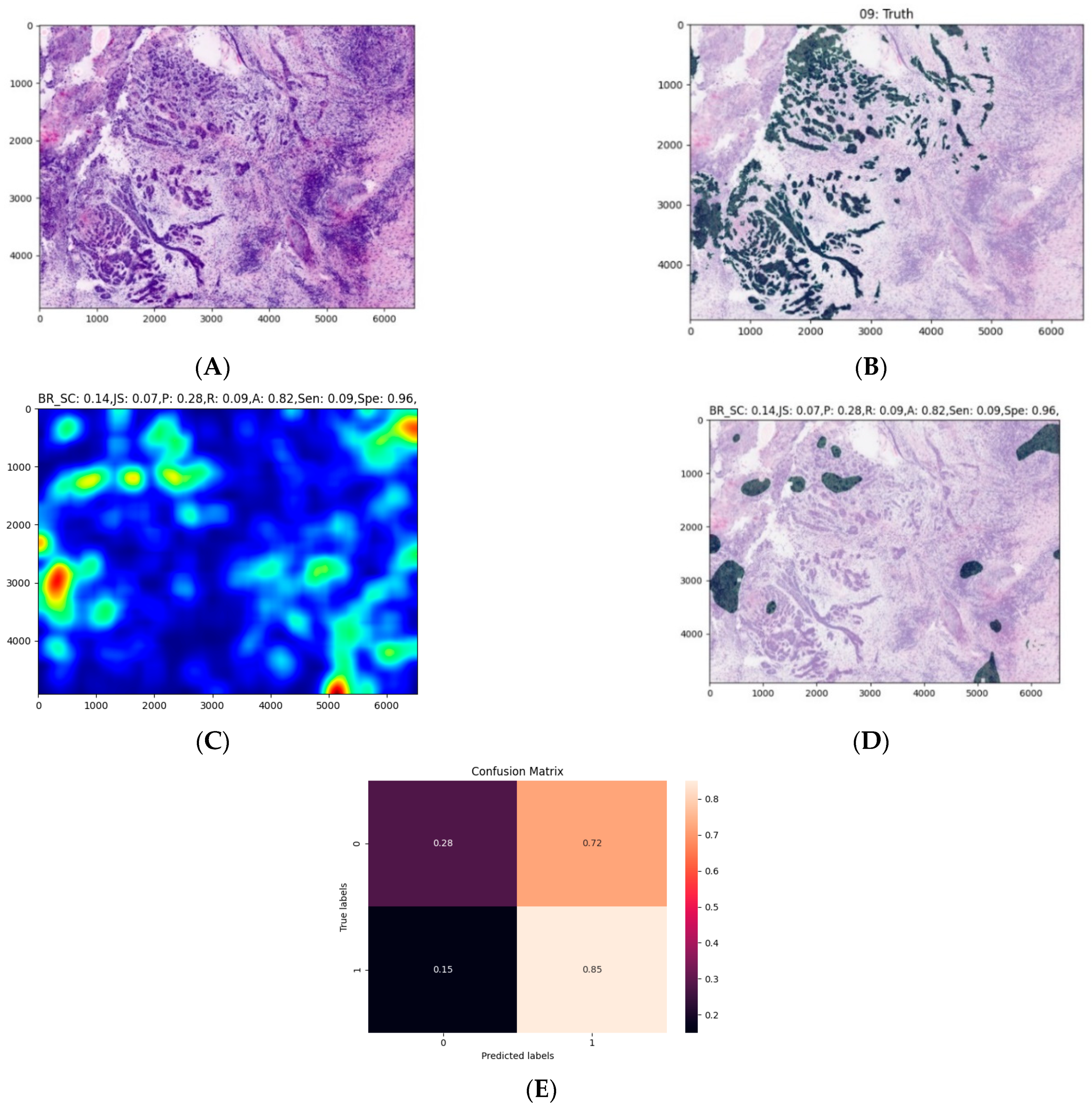

2.6. Expanding the MobileNet and Evaluation on the Validation Dataset

- Applying a threshold of 0.5 (transform every pixel that has a probability lower than 0.5 to 0 and the rest to 1).

- Erosion (the goal of this operation is to exclude isolated pixels).

- Dilation (after the operation of erosion, the entire mask is slightly thinner, and this operation reverts this property).
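The three post-processing steps above can be sketched as follows. This is a minimal illustration, not the authors' implementation: the text does not state the structuring element, so a 3 × 3 square window is assumed here, pixels outside the image are treated as background, and the function names (`threshold`, `erode`, `dilate`, `postprocess`) are hypothetical. Plain Python lists are used to keep the sketch self-contained; in practice a library routine such as `scipy.ndimage.binary_erosion` would normally be preferred.

```python
from typing import List

Mask = List[List[int]]


def threshold(prob: List[List[float]], t: float = 0.5) -> Mask:
    """Set pixels with probability lower than t to 0 and the rest to 1."""
    return [[0 if p < t else 1 for p in row] for row in prob]


def _window(mask: Mask, r: int, c: int) -> List[int]:
    """Values in the 3x3 window centred on (r, c).

    Assumption: positions outside the image count as background (0).
    """
    h, w = len(mask), len(mask[0])
    return [
        mask[rr][cc] if 0 <= rr < h and 0 <= cc < w else 0
        for rr in range(r - 1, r + 2)
        for cc in range(c - 1, c + 2)
    ]


def erode(mask: Mask) -> Mask:
    """Keep a pixel only if its whole 3x3 window is foreground.

    Isolated pixels and thin structures disappear.
    """
    return [
        [1 if all(_window(mask, r, c)) else 0 for c in range(len(mask[0]))]
        for r in range(len(mask))
    ]


def dilate(mask: Mask) -> Mask:
    """Set a pixel if any pixel in its 3x3 window is foreground.

    This grows the eroded mask back towards its original extent.
    """
    return [
        [1 if any(_window(mask, r, c)) else 0 for c in range(len(mask[0]))]
        for r in range(len(mask))
    ]


def postprocess(prob: List[List[float]], t: float = 0.5) -> Mask:
    """Threshold, then erode, then dilate (a morphological opening)."""
    return dilate(erode(threshold(prob, t)))
```

Applied to a probability map containing a solid 3 × 3 region and a single isolated high-probability pixel, this sketch keeps the region and discards the isolated pixel. Erosion followed by dilation with the same structuring element is commonly called a morphological opening.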

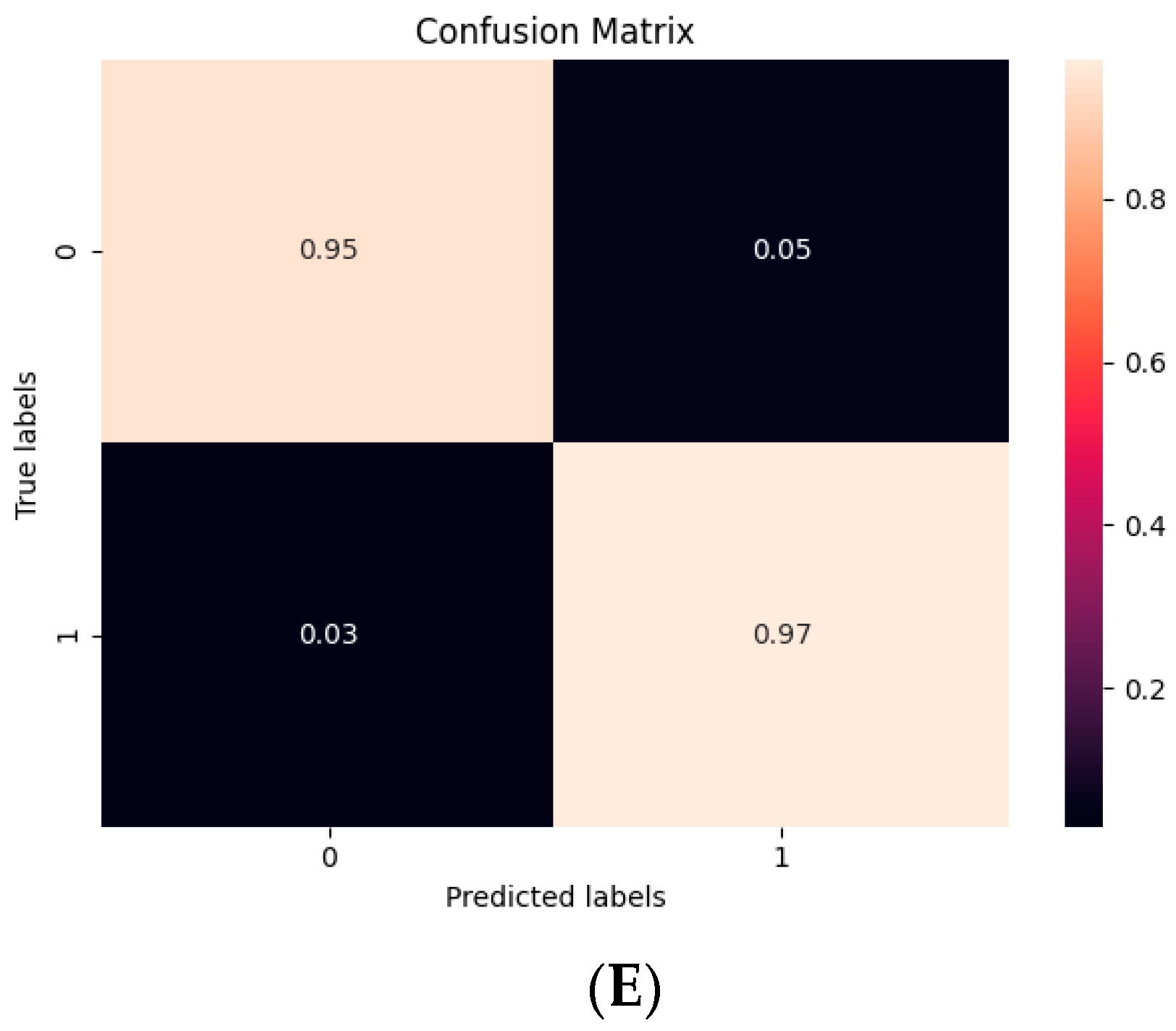

2.7. Statistical Analysis

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


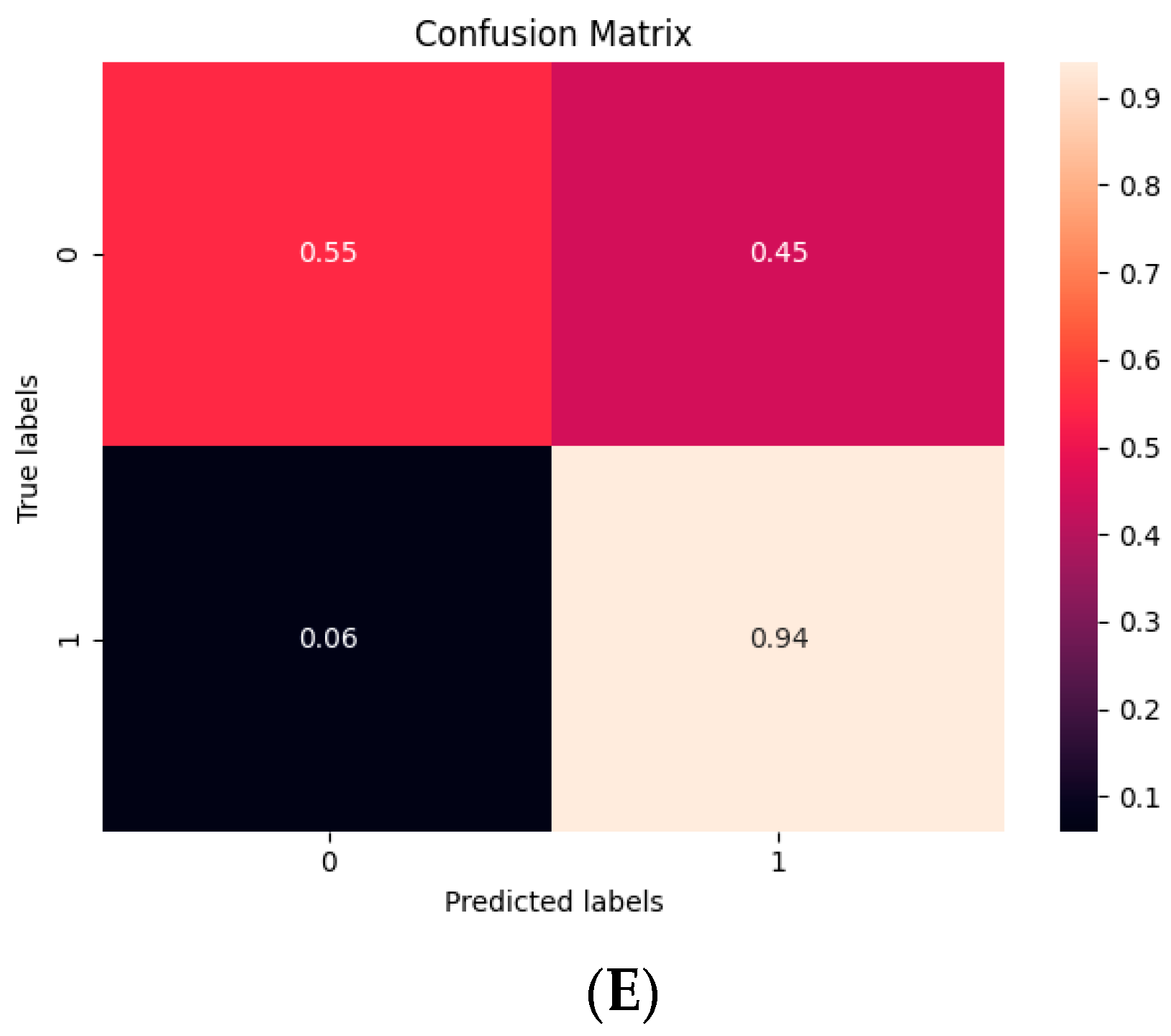

| Metric | 10 Fold (n = 20, t = 0.3) |
|---|---|
| Sensitivity | 0.47 |
| Specificity | 0.96 |
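For reference, sensitivity and specificity follow the standard definitions TP / (TP + FN) and TN / (TN + FP). The sketch below computes them pixel-wise from a predicted binary mask and a ground-truth mask. Whether the study evaluated per pixel, per patch, or per image is not stated in this section, so the pixel-wise comparison is an assumption, and the function names are hypothetical.

```python
from typing import List, Tuple

Mask = List[List[int]]


def confusion_counts(pred: Mask, truth: Mask) -> Tuple[int, int, int, int]:
    """Count (TP, FP, TN, FN) over all pixels of two equally sized masks."""
    tp = fp = tn = fn = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            if p and t:
                tp += 1
            elif p and not t:
                fp += 1
            elif not p and not t:
                tn += 1
            else:
                fn += 1
    return tp, fp, tn, fn


def sensitivity_specificity(pred: Mask, truth: Mask) -> Tuple[float, float]:
    """Return (sensitivity, specificity); NaN if a denominator is zero."""
    tp, fp, tn, fn = confusion_counts(pred, truth)
    sensitivity = tp / (tp + fn) if (tp + fn) else float("nan")
    specificity = tn / (tn + fp) if (tn + fp) else float("nan")
    return sensitivity, specificity
```

A model that misses much of the annotated tumour area but rarely marks healthy tissue as tumour would show the pattern reported in the table: low sensitivity together with high specificity.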


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Shavlokhova, V.; Sandhu, S.; Flechtenmacher, C.; Koveshazi, I.; Neumeier, F.; Padrón-Laso, V.; Jonke, Ž.; Saravi, B.; Vollmer, M.; Vollmer, A.; et al. Deep Learning on Oral Squamous Cell Carcinoma Ex Vivo Fluorescent Confocal Microscopy Data: A Feasibility Study. J. Clin. Med. 2021, 10, 5326. https://doi.org/10.3390/jcm10225326