Dog Identification Method Based on Muzzle Pattern Image

Abstract

1. Introduction

2. Related Work

3. Materials and Methods

3.1. Dataset

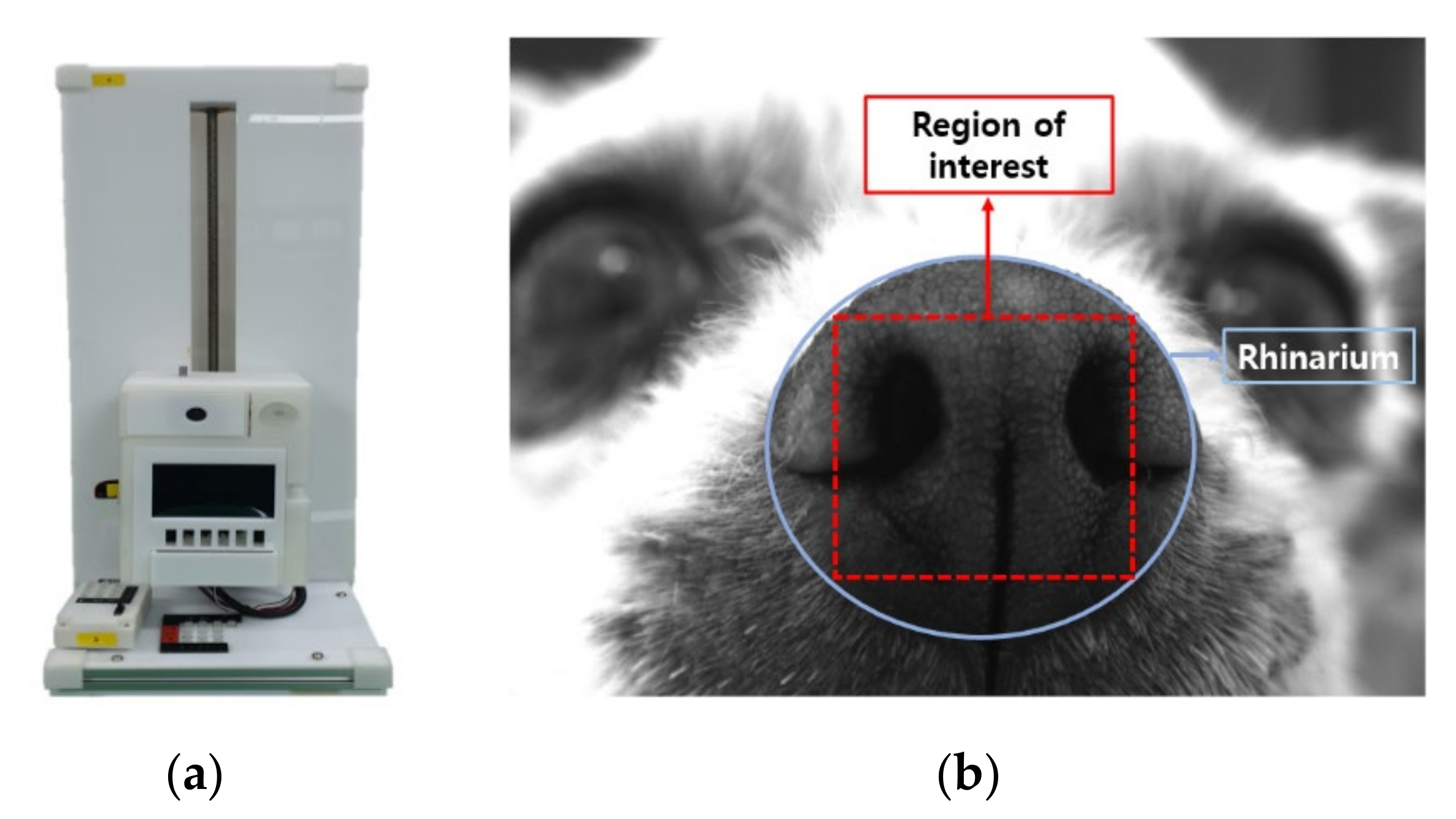

3.1.1. Data Acquisition

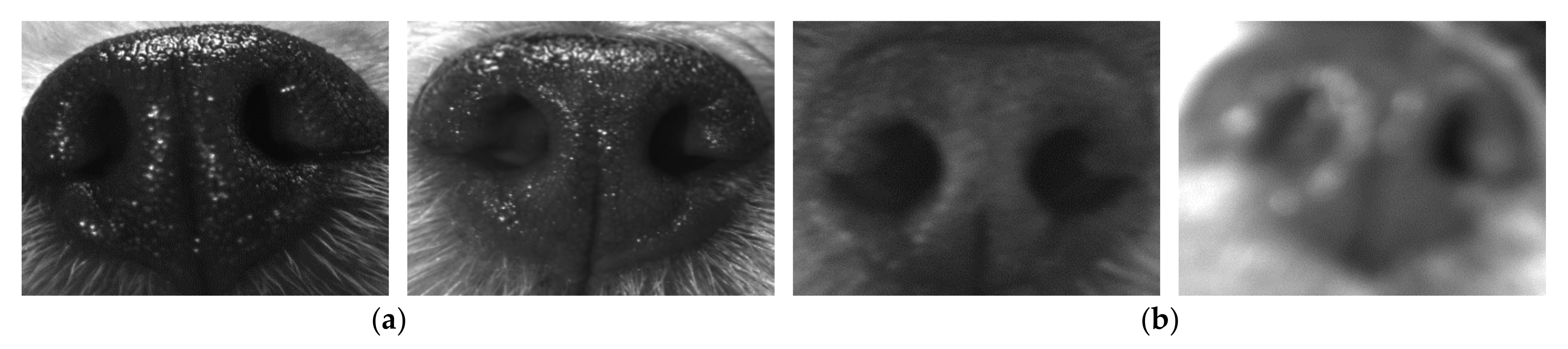

3.1.2. Data Screening

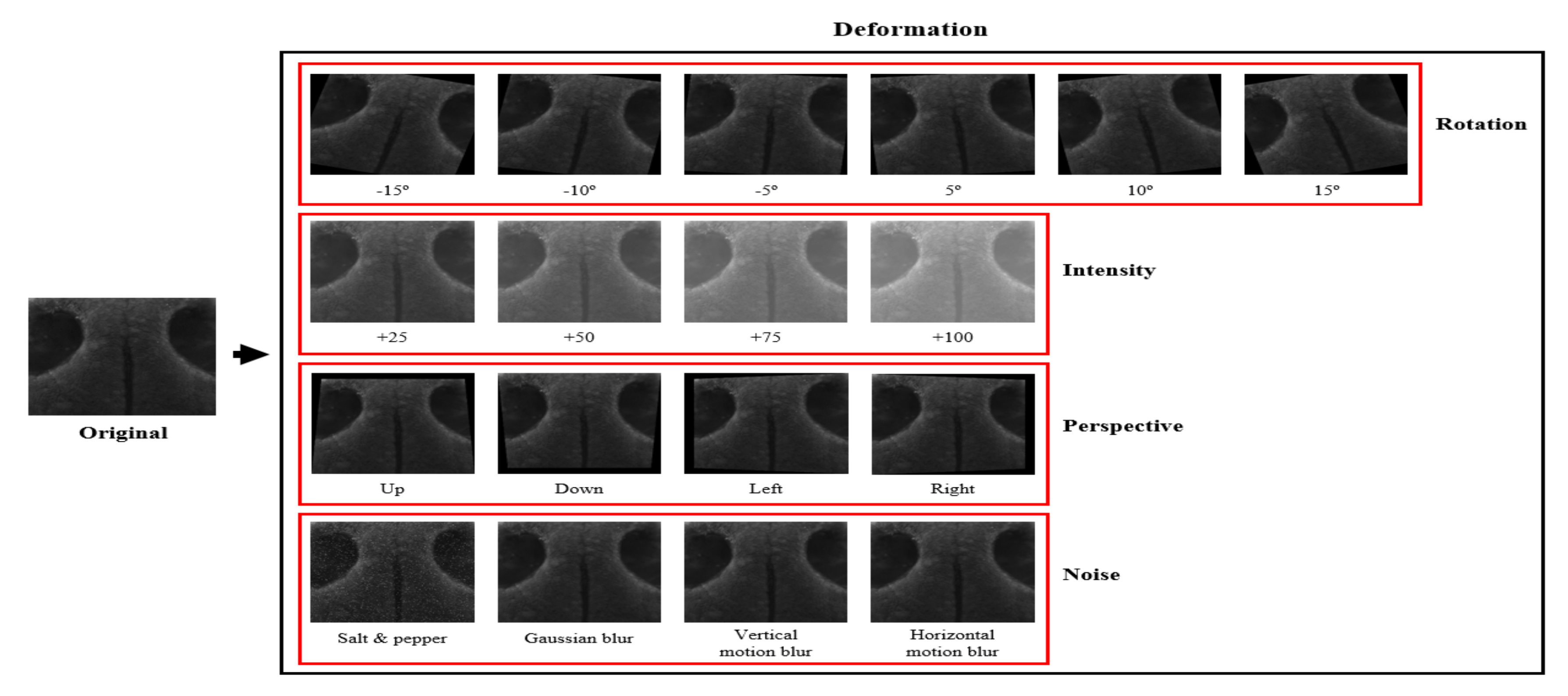

3.1.3. Data Augmentation

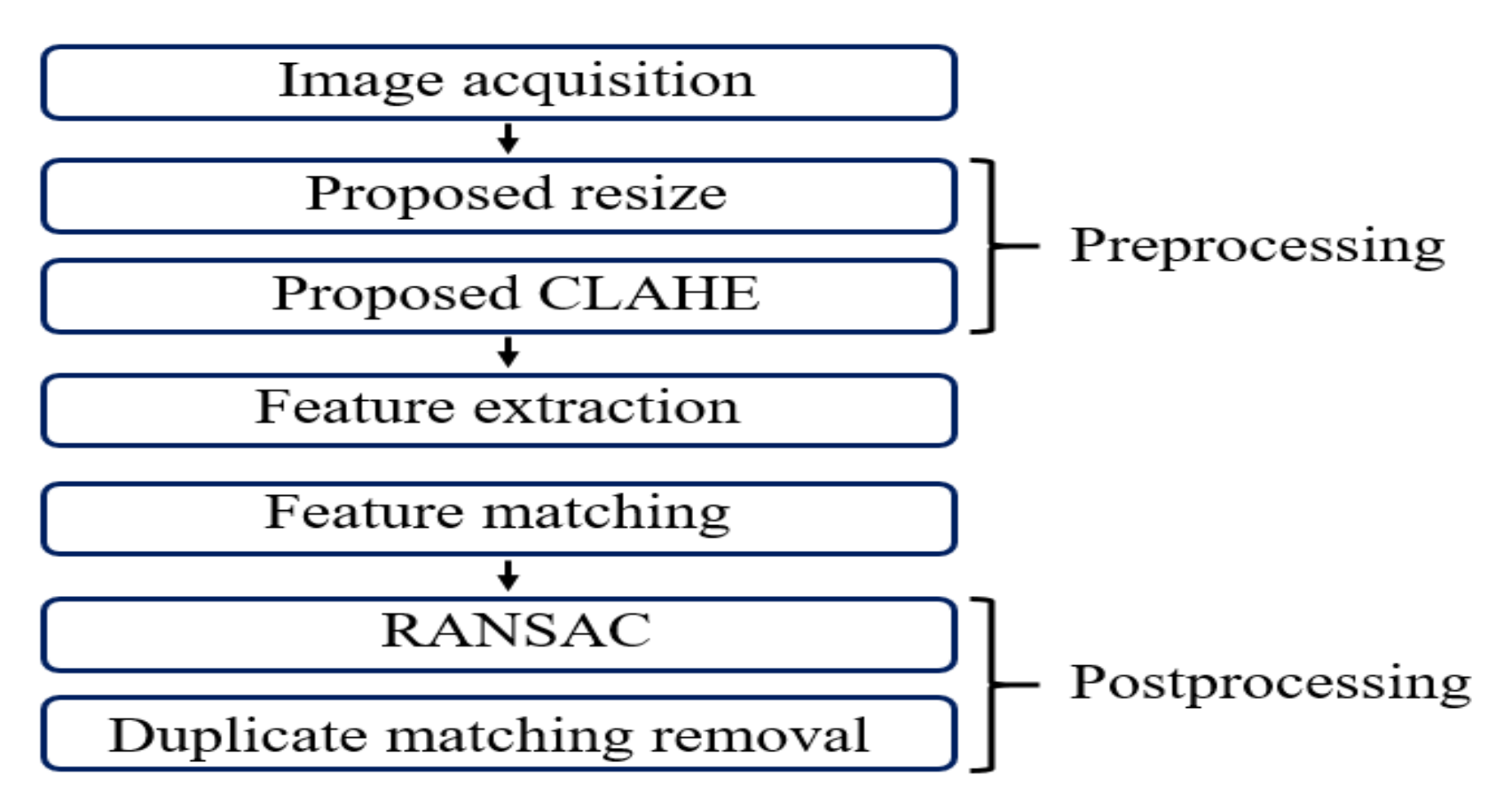

3.2. Proposed Method

3.2.1. Image Resize

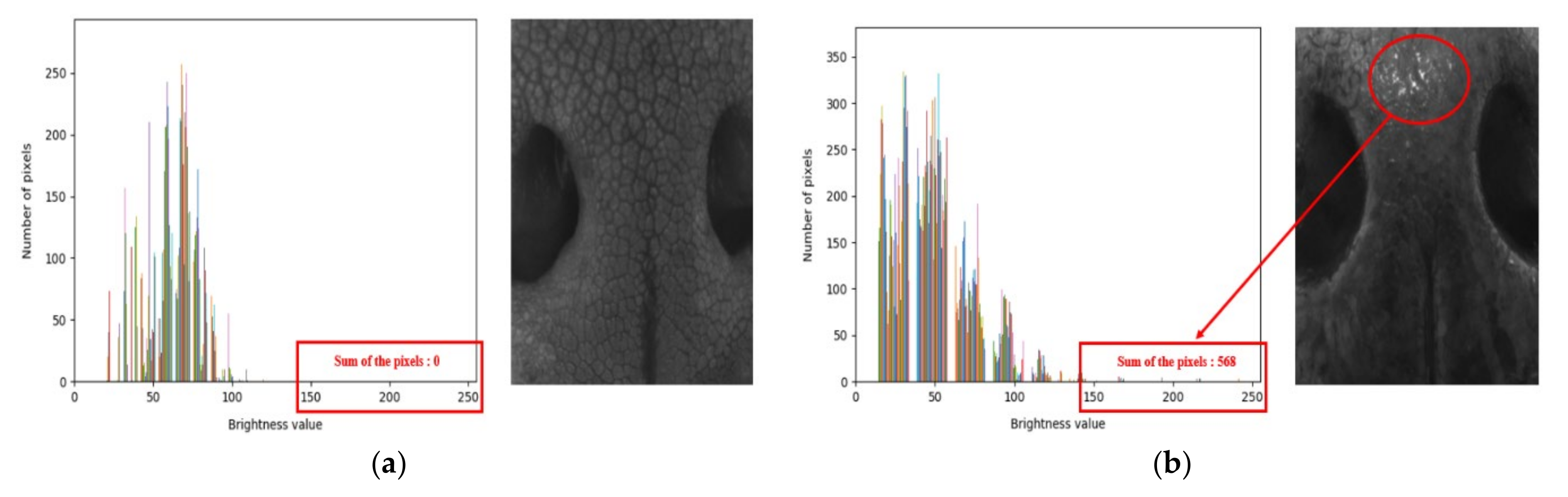

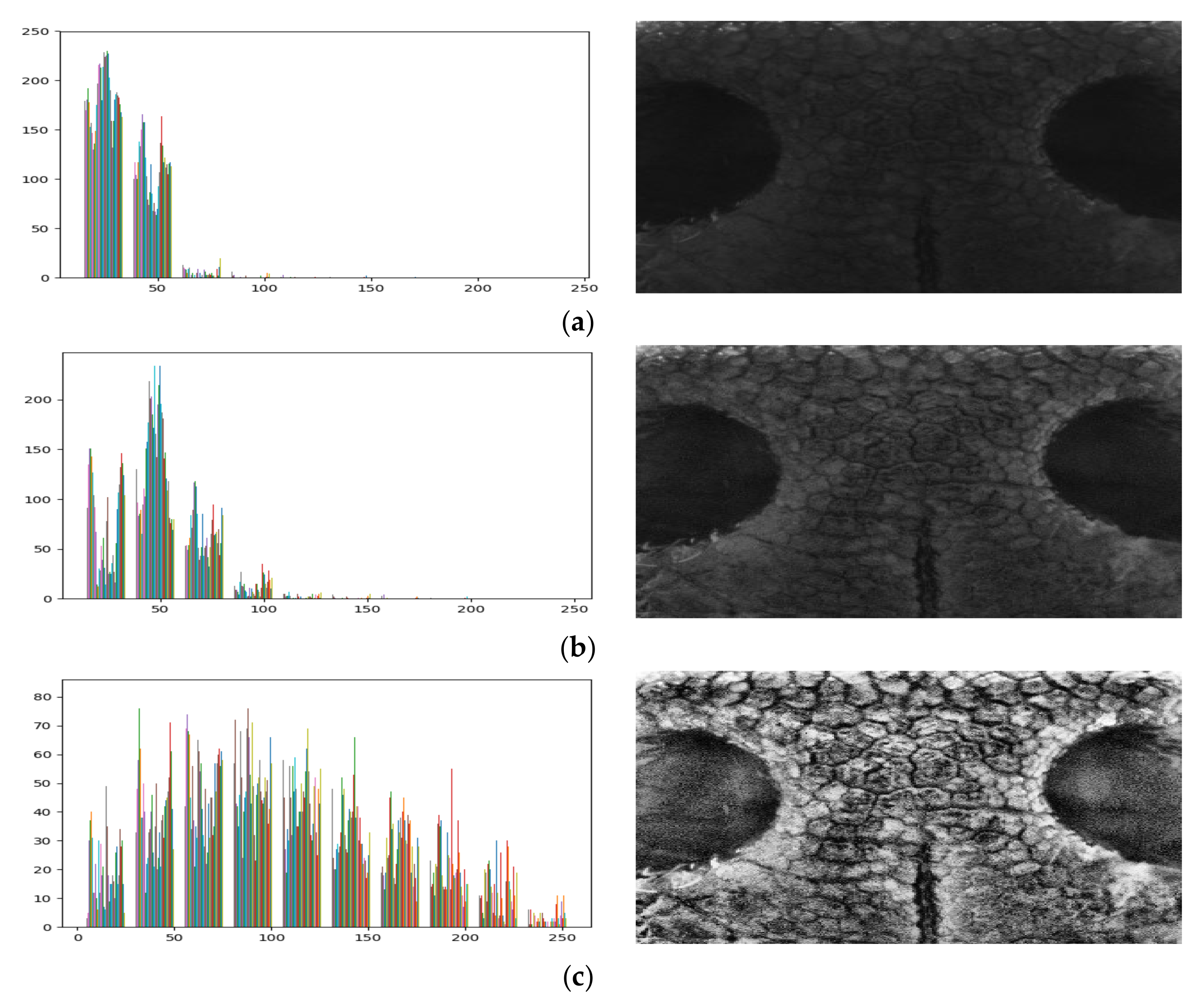
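
The resize comparison table contrasts fixed square sizes (250 × 250, 300 × 300, 350 × 350) with a "Ratio of original size" setting labelled "250/300/350 for smaller". One reading of that label, assumed here, is that the smaller image side is scaled to N pixels while the aspect ratio is kept. The sketch below computes the target dimensions under that assumption. `aspect_preserving_size` is a hypothetical helper, and the actual resampling is left to an image library.

```python
from typing import Tuple


def aspect_preserving_size(width: int, height: int,
                           target_short: int = 300) -> Tuple[int, int]:
    """Return (new_width, new_height) so that the shorter side equals target_short.

    Assumption: the proposed resize scales the smaller side to target_short and
    keeps the original aspect ratio (read from the "N for smaller" table rows).
    """
    if width <= 0 or height <= 0:
        raise ValueError("image dimensions must be positive")
    scale = target_short / min(width, height)
    # round() keeps the longer side as close as possible to the original ratio
    return max(1, round(width * scale)), max(1, round(height * scale))


print(aspect_preserving_size(640, 480, 300))  # prints: (400, 300)
```

With OpenCV, the returned pair would be passed as `dsize=(width, height)` to `cv2.resize`. Unlike a fixed square size, this avoids stretching the muzzle pattern when the photo is not square.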

3.2.2. Contrast Limited Adaptive Histogram Equalization (CLAHE)

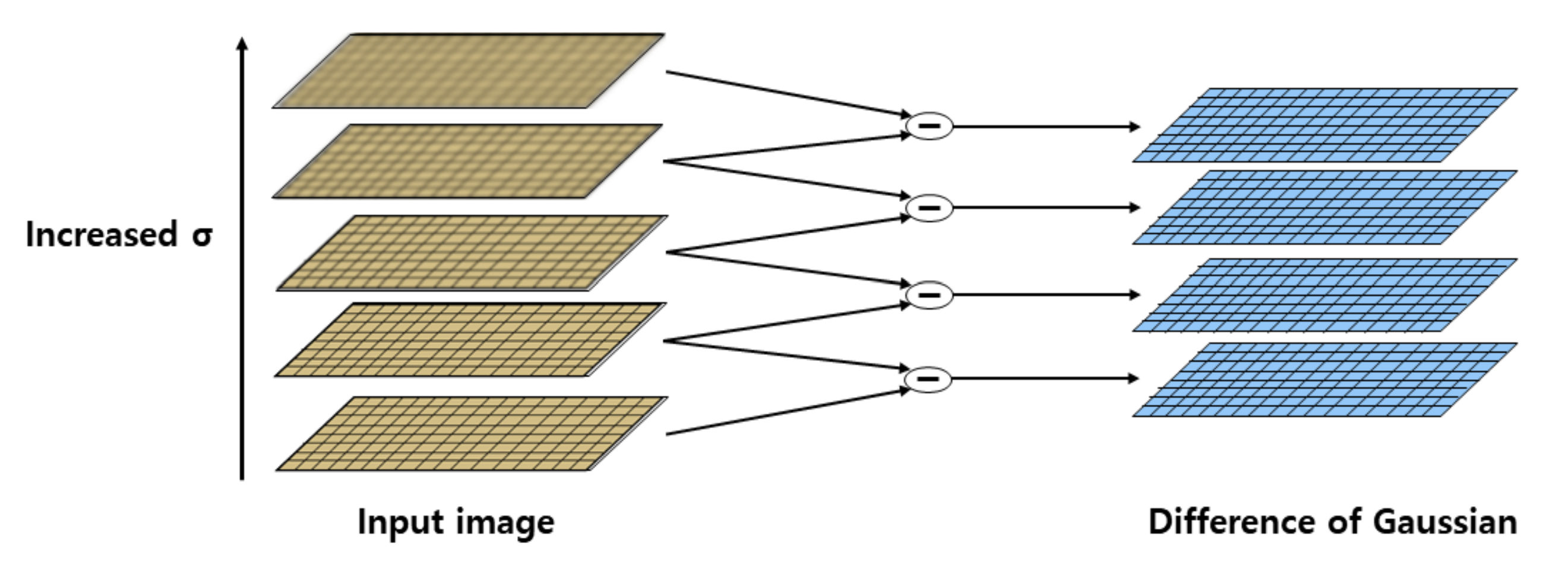
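
CLAHE (see Pisano et al. in the reference list) equalizes the histogram of each small tile separately. Before equalizing, it clips every histogram bin at a limit, which caps how strongly any single gray level can be stretched. The sketch below shows only this per-tile, contrast-limited step on a flat list of 8-bit values. It omits the tiling and the bilinear blending between neighbouring tiles that full CLAHE performs. The clip limit and the function name `clipped_equalization_lut` are illustrative assumptions, not the authors' settings.

```python
from typing import Iterable, List


def clipped_equalization_lut(pixels: Iterable[int], clip_limit: int,
                             levels: int = 256) -> List[int]:
    """Contrast-limited equalization lookup table for a single tile.

    pixels: gray levels in [0, levels). clip_limit: max count per histogram bin.
    Full CLAHE builds one table per tile and blends neighbouring tables
    bilinearly; that step is omitted here.
    """
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1
    n = sum(hist)
    if n == 0:
        return list(range(levels))  # empty tile: identity mapping

    # 1. Clip each bin at clip_limit and count the excess.
    excess = 0
    for i, count in enumerate(hist):
        if count > clip_limit:
            excess += count - clip_limit
            hist[i] = clip_limit

    # 2. Redistribute the excess evenly (one pass; the remainder goes to the lowest bins).
    share, remainder = divmod(excess, levels)
    for i in range(levels):
        hist[i] += share + (1 if i < remainder else 0)

    # 3. Map each level through the normalized cumulative histogram.
    lut, cumulative = [], 0
    for count in hist:
        cumulative += count
        lut.append(round((levels - 1) * cumulative / n))
    return lut


tile = [50] * 60 + [60] * 4
plain = clipped_equalization_lut(tile, clip_limit=64)    # no bin exceeds 64: ordinary equalization
limited = clipped_equalization_lut(tile, clip_limit=8)   # bin 50 is clipped from 60 to 8
print(plain[49], plain[50], limited[49], limited[50])    # prints: 0 239 199 235
```

Without clipping, the dominant level 50 jumps from 0 to 239 in the output. With the clip limit, the step is reduced to 36 gray levels. In practice, full CLAHE is available in OpenCV as `cv2.createCLAHE(clipLimit=..., tileGridSize=...)` followed by `.apply(gray_image)`. The parameter values used in the paper are not given in this section.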

3.2.3. Feature Extraction Algorithm

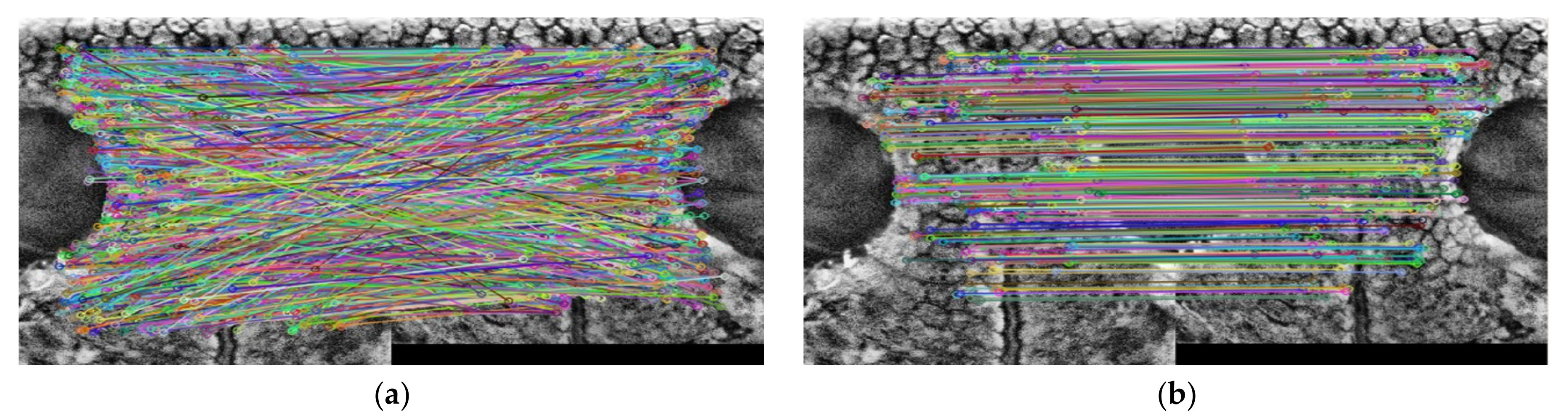

3.2.4. Matching

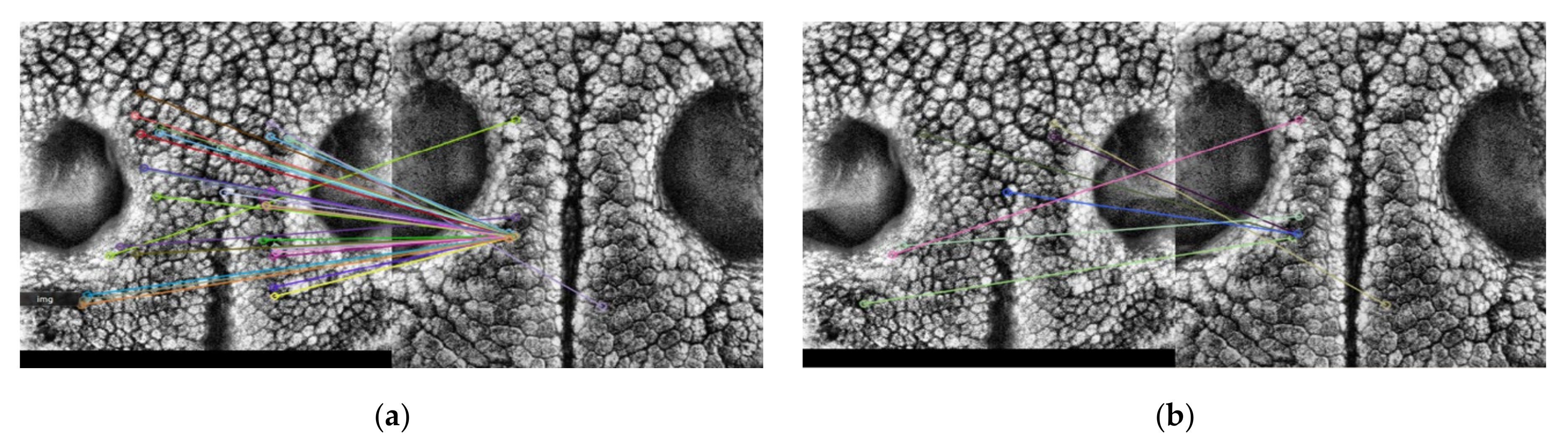
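
The section does not say which matcher is applied to the SIFT, SURF, BRISK and ORB descriptors. A common choice, assumed here, is brute-force 2-nearest-neighbour matching filtered by Lowe's ratio test (Lowe, in the reference list). Euclidean distance is the usual metric for float descriptors such as SIFT and SURF, and Hamming distance for binary ones such as ORB and BRISK. The ratio of 0.75 is an assumption; Lowe's paper used 0.8. All function names are hypothetical.

```python
import math
from typing import Callable, List, Sequence, Tuple, TypeVar

D = TypeVar("D")
Match = Tuple[int, int, float]  # (query index, train index, distance)


def l2(a: Sequence[float], b: Sequence[float]) -> float:
    """Euclidean distance, suited to float descriptors such as SIFT or SURF."""
    return math.dist(a, b)


def hamming(a: int, b: int) -> int:
    """Bit difference count, suited to binary descriptors (ORB, BRISK) packed into ints."""
    return bin(a ^ b).count("1")


def ratio_test_match(query: Sequence[D], train: Sequence[D],
                     distance: Callable[[D, D], float],
                     ratio: float = 0.75) -> List[Match]:
    """Brute-force 2-nearest-neighbour matching filtered by Lowe's ratio test."""
    if len(train) < 2:
        return []  # the ratio test needs a second-nearest neighbour
    matches: List[Match] = []
    for qi, q in enumerate(query):
        ranked = sorted((distance(q, t), ti) for ti, t in enumerate(train))
        (d1, best), (d2, _) = ranked[0], ranked[1]
        if d1 < ratio * d2:  # keep only clearly unambiguous nearest neighbours
            matches.append((qi, best, float(d1)))
    return matches


train = [0b1111_0001, 0b0000_1111, 0b1010_1010]
query = [0b1111_0000, 0b0101_0101]
print(ratio_test_match(query, train, hamming))  # prints: [(0, 0, 1.0)]
```

The second query descriptor is rejected because its two nearest neighbours (distances 3 and 4) are too similar to tell apart. With OpenCV, the equivalent neighbour search is `cv2.BFMatcher(cv2.NORM_HAMMING).knnMatch(des1, des2, k=2)` for binary descriptors, or `cv2.NORM_L2` for SIFT and SURF.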

3.2.5. Random Sample Consensus (RANSAC)
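
RANSAC (Fischler and Bolles, in the reference list) repeatedly fits a geometric model to a randomly drawn minimal subset of the matches. It keeps the model that the most matches agree with (the inliers) and discards the remaining matches as false. The section does not state which model the authors fit, so the sketch below makes a simplifying assumption: a pure 2-D translation between the two muzzle images, for which a single correspondence is a minimal sample. `ransac_translation`, the 3-pixel inlier threshold and the iteration count are illustrative choices, not values from the paper.

```python
import random
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def ransac_translation(src: Sequence[Point], dst: Sequence[Point],
                       threshold: float = 3.0, iterations: int = 100,
                       seed: int = 0) -> List[int]:
    """Return indices of correspondences consistent with the best translation.

    Assumption for illustration: the geometric model is a pure 2-D translation,
    so one correspondence is a minimal sample.
    """
    if len(src) != len(dst):
        raise ValueError("src and dst must have the same length")
    if not src:
        return []
    rng = random.Random(seed)
    best_inliers: List[int] = []
    for _ in range(iterations):
        k = rng.randrange(len(src))  # minimal sample: one correspondence
        tx, ty = dst[k][0] - src[k][0], dst[k][1] - src[k][1]
        # Consensus set: matches whose translated source lies near the target.
        inliers = [i for i, ((sx, sy), (dx, dy)) in enumerate(zip(src, dst))
                   if (sx + tx - dx) ** 2 + (sy + ty - dy) ** 2 <= threshold ** 2]
        if len(inliers) > len(best_inliers):
            best_inliers = inliers
    return best_inliers


# Eight true correspondences shifted by (10, -5), plus three gross mismatches.
src = [(10.0 * i, 7.0 * i) for i in range(8)] + [(5.0, 5.0), (50.0, 20.0), (30.0, 90.0)]
dst = [(x + 10.0, y - 5.0) for x, y in src[:8]] + [(100.0, 0.0), (0.0, 0.0), (70.0, 10.0)]
print(ransac_translation(src, dst))  # prints: [0, 1, 2, 3, 4, 5, 6, 7]
```

For a full projective model, OpenCV's `cv2.findHomography(src_pts, dst_pts, cv2.RANSAC, 5.0)` returns the estimated homography together with an inlier mask.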

3.2.6. Duplicate Matching Removal (DMR)
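
This section does not describe how DMR works. Purely for illustration, we assume one plausible reading of "duplicate matching removal": keep at most one match per keypoint in the second image, namely the one with the smallest descriptor distance. Under this rule, several query keypoints cannot all count toward the same target point. Both the rule and the name `remove_duplicate_matches` are hypothetical and may differ from the authors' method.

```python
from typing import Dict, List, Tuple

Match = Tuple[int, int, float]  # (query keypoint index, train keypoint index, distance)


def remove_duplicate_matches(matches: List[Match]) -> List[Match]:
    """Hypothetical DMR rule: keep only the closest match per train keypoint."""
    best: Dict[int, Match] = {}
    for m in matches:
        _, train_idx, dist = m
        # On ties the earlier match is kept, so the result is deterministic.
        if train_idx not in best or dist < best[train_idx][2]:
            best[train_idx] = m
    # Return the survivors in query order for readability.
    return sorted(best.values(), key=lambda m: m[0])


matches = [(0, 5, 12.0), (1, 5, 7.0), (2, 9, 3.0), (3, 9, 3.0)]
print(remove_duplicate_matches(matches))  # prints: [(1, 5, 7.0), (2, 9, 3.0)]
```

OpenCV's `cv2.BFMatcher(normType, crossCheck=True)` provides a related but stricter filter: it keeps a match only when the two keypoints are each other's nearest neighbours.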

4. Results and Discussion

4.1. Performance Evaluation
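
The result tables report per-pair match counts for genuine pairs (images of the same dog) and imposter pairs (images of different dogs), together with an "Optimal threshold" and the EER. In the usual biometric sense (cf. the fingerprint-evaluation works in the reference list), the EER is the error rate at the threshold where the false acceptance rate (FAR) equals the false rejection rate (FRR). The sketch below assumes that a pair is accepted when its match count is at least the threshold, and that thresholds are swept over midpoints between observed scores. The authors' exact sweep is not stated here, and `equal_error_rate` is a hypothetical name.

```python
from typing import Sequence, Tuple


def equal_error_rate(genuine: Sequence[float],
                     imposter: Sequence[float]) -> Tuple[float, float]:
    """Return (eer, threshold); a pair is accepted when its score >= threshold.

    FAR = share of imposter scores accepted, FRR = share of genuine scores
    rejected. The EER is approximated at the candidate threshold where
    |FAR - FRR| is smallest, as the mean of the two rates there.
    """
    if not genuine or not imposter:
        raise ValueError("need at least one genuine and one imposter score")
    levels = sorted(set(genuine) | set(imposter))
    # Candidates: midpoints between neighbouring observed scores, plus one
    # threshold below and one above every score.
    candidates = ([levels[0] - 1]
                  + [(a + b) / 2 for a, b in zip(levels, levels[1:])]
                  + [levels[-1] + 1])
    best = None  # (|FAR - FRR|, EER estimate, threshold)
    for t in candidates:
        far = sum(s >= t for s in imposter) / len(imposter)
        frr = sum(s < t for s in genuine) / len(genuine)
        gap = abs(far - frr)
        if best is None or gap < best[0]:
            best = (gap, (far + frr) / 2, t)
    return best[1], best[2]


# Matches per image pair (illustrative numbers, not data from the paper).
genuine_counts = [5, 8, 9, 12]
imposter_counts = [1, 3, 6, 7]
print(equal_error_rate(genuine_counts, imposter_counts))  # prints: (0.25, 6.5)
```

Multiplying the first value by 100 gives a figure comparable to the "EER (%)" rows. When genuine and imposter counts do not overlap, the function returns an EER of 0, as in several of the proposed-method columns.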

4.2. Effectiveness of the Proposed Methods

4.2.1. Basic Method

4.2.2. Duplicate Matching Removal (DMR)

4.2.3. Proposed CLAHE

4.2.4. Proposed Resize

4.2.5. Processing Time

4.3. Evaluation of the Robustness of the Proposed Method

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Ministry for Food, Agriculture, Forestry and Fisheries. A Survey on the Protection and Welfare of Pets in 2019; Ministry for Food, Agriculture, Forestry and Fisheries: Gwacheon, Korea, 2020.

- Jain, A.; Ross, A.; Prabhakar, S. An Introduction to Biometric Recognition. IEEE Trans. Circuits Syst. Video Technol. 2004, 14, 4–20.

- Ng, R.Y.F.; Tay, Y.H.; Mok, K.M. A review of iris recognition algorithms. In Proceedings of the 2008 International Symposium on Information Technology, Kuala Lumpur, Malaysia, 26–28 August 2008; Volume 2, pp. 1–7.

- Yang, W.; Wang, S.; Hu, J.; Zheng, G.; Valli, C. Security and Accuracy of Fingerprint-Based Biometrics: A Review. Symmetry 2019, 11, 141.

- Tolba, A.S.; El-Baz, A.H.; El-Harby, A.A. Face recognition: A literature review. Int. J. Signal Process. 2006, 2, 88–103.

- Liu, T.T.; Wu, D.F.; Wang, L.Y. Development process of animal image recognition technology and its application in modern cow and pig industry. IOP Conf. Ser.: Earth Environ. Sci. 2020, 512, 012090.

- Sun, S.; Yang, S.; Zhao, L. Noncooperative bovine iris recognition via SIFT. Neurocomputing 2013, 120, 310–317.

- Lu, Y.; He, X.; Wen, Y.; Wang, P.S. A new cow identification system based on iris analysis and recognition. Int. J. Biom. 2014, 6, 18.

- Trokielewicz, M.; Szadkowski, M. Iris and periocular recognition in Arabian race horses using deep convolutional neural networks. In Proceedings of the 2017 IEEE International Joint Conference on Biometrics (IJCB), Denver, CO, USA, 1–4 October 2017; pp. 510–516.

- Kumar, S.; Singh, S.K. Biometric Recognition for Pet Animal. J. Softw. Eng. Appl. 2014, 7, 470–482.

- Petersen, W. The Identification of the Bovine by Means of Nose-Prints. J. Dairy Sci. 1922, 5, 249–258.

- Baranov, A.S.; Graml, R.; Pirchner, F.; Schmid, D.O. Breed differences and intra-breed genetic variability of dermatoglyphic pattern of cattle. J. Anim. Breed. Genet. 1993, 110, 385–392.

- Minagawa, H.; Fujimura, T.; Ichiyanagi, M.; Tanaka, K.; Fangquan, M. Identification of beef cattle by analyzing images of their muzzle patterns lifted on paper. Publ. Jpn. Soc. Agric. Inform. 2002, 8, 596–600.

- Barry, B.; Gonzales-Barron, U.; McDonnell, K.; Butler, F.; Ward, S. Using Muzzle Pattern Recognition as a Biometric Approach for Cattle Identification. Trans. ASABE 2007, 50, 1073–1080.

- Noviyanto, A.; Arymurthy, A.M. Beef cattle identification based on muzzle pattern using a matching refinement technique in the SIFT method. Comput. Electron. Agric. 2013, 99, 77–84.

- Tharwat, A.; Gaber, T.; Hassanien, A.E.; Hassanien, H.A.; Tolba, M.F. Cattle Identification Using Muzzle Print Images Based on Texture Features Approach. In IOP Conference Series: Materials Science and Engineering, Proceedings of the 6th International Conference on Electrical Engineering, Control and Robotics, Xiamen, China, 10–12 January 2020; IOP Science: Bristol, UK, 2020; Volume 853, p. 853.

- Awad, A.I.; Zawbaa, H.M.; Mahmoud, H.A.; Nabi, E.H.H.A.; Fayed, R.H.; Hassanien, A.E. A robust cattle identification scheme using muzzle print images. In Proceedings of the 2013 Federated Conference on Computer Science and Information Systems, Krakow, Poland, 8–11 September 2013; pp. 529–534.

- Noviyanto, A.; Arymurthy, A.M. Automatic cattle identification based on muzzle photo using speed-up robust features approach. In Proceedings of the 3rd European conference of computer science, ECCS, Paris, France, 2–4 December 2012; Volume 110, p. 114.

- Tong, H.; Li, M.; Zhang, H.; Zhang, C. Blur detection for digital images using wavelet transform. In Proceedings of the 2004 IEEE International Conference on Multimedia and Expo (ICME) (IEEE Cat. No.04TH8763), Taipei, Taiwan, 27–30 June 2004; Volume 1, pp. 17–20.

- Rybak, Ł.; Dudczyk, J. A Geometrical Divide of Data Particle in Gravitational Classification of Moons and Circles Data Sets. Entropy 2020, 22, 1088.

- Pisano, E.D.; Zong, S.; Hemminger, B.M.; DeLuca, M.; Johnston, R.E.; Muller, K.; Braeuning, M.P.; Pizer, S.M. Contrast Limited Adaptive Histogram Equalization image processing to improve the detection of simulated spiculations in dense mammograms. J. Digit. Imaging 1998, 11, 193–200.

- Lowe, D.G. Distinctive Image Features from Scale-Invariant Keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

- Tareen, S.A.K.; Saleem, Z. A comparative analysis of SIFT, SURF, KAZE, AKAZE, ORB, and BRISK. In Proceedings of the 2018 International Conference on Computing, Mathematics and Engineering Technologies (iCoMET), Sukkur, Pakistan, 3–4 March 2018; pp. 1–10.

- Bay, H.; Tuytelaars, T.; Van Gool, L. SURF: Speeded Up Robust Features. In Computer Vision—ECCV 2006, Proceedings of the 9th European Conference on Computer Vision, Graz, Austria, 7–13 May 2006; Springer: Berlin/Heidelberg, Germany, 2006; pp. 404–417.

- Rublee, E.; Rabaud, V.; Konolige, K.; Bradski, G. ORB: An efficient alternative to SIFT or SURF. In Proceedings of the 2011 International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 2564–2571.

- Leutenegger, S.; Chli, M.; Siegwart, R.Y. BRISK: Binary Robust Invariant Scalable Keypoints. In Proceedings of the 2011 International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 2548–2555.

- Fischler, M.A.; Bolles, R.C. Random sample consensus: A paradigm for model fitting with applications to image analysis and automated cartography. Commun. ACM 1981, 24, 381–395.

- Cappelli, R.; Maio, D.; Maltoni, D.; Wayman, J.L.; Jain, A.K. Performance evaluation of fingerprint verification systems. IEEE Trans. Pattern Anal. Mach. Intell. 2005, 28, 3–18.

- Poh, N.; Bengio, S. Evidences of Equal Error Rate Reduction in Biometric Authentication Fusion; IDIAP: Martigny, Switzerland, 2004.

- Maio, D.; Maltoni, D.; Cappelli, R.; Wayman, J.L.; Jain, A.K. FVC2000: Fingerprint verification competition. IEEE Trans. Pattern Anal. Mach. Intell. 2002, 24, 402–412.

| Method | Evaluation Item | SIFT Genuine | SIFT Imposter | SURF Genuine | SURF Imposter | BRISK Genuine | BRISK Imposter | ORB Genuine | ORB Imposter |
|---|---|---|---|---|---|---|---|---|---|
| Basic Method | Min | 4 | 0 | 4 | 0 | 29 | 10 | 12 | 8 |
| | Max | 751 | 12 | 686 | 34 | 5791 | 622 | 2661 | 88 |
| | Average | 132 | 4 | 96 | 6 | 1206 | 68 | 408 | 21 |
| | False matching | 7 | 14 | 12 | 161 | 16 | 199 | 17 | 207 |
| | Optimal threshold | 7.5 | | 8.1 | | 109.9 | | 29.7 | |
| | EER (%) | 3.1 | | 11.2 | | 14.6 | | 15.5 | |
| Proposed Method 1 | Min | 2 | 0 | 1 | 0 | 2 | 0 | 2 | 0 |
| | Max | 581 | 6 | 578 | 6 | 4203 | 19 | 1214 | 14 |
| | Average | 107 | 3 | 78 | 3 | 895 | 6 | 222 | 4 |
| | False matching | 2 | 4 | 12 | 5 | 12 | 134 | 11 | 7 |
| | Optimal threshold | 4.8 | | 4.6 | | 8.8 | | 9.8 | |
| | EER (%) | 1.6 | | 5.8 | | 10.9 | | 5.9 | |
| Proposed Method 2 | Min | 7 | 1 | 2 | 0 | 1 | 1 | 3 | 0 |
| | Max | 889 | 6 | 822 | 6 | 3787 | 29 | 2180 | 21 |
| | Average | 181 | 4 | 120 | 3 | 709 | 6 | 685 | 6 |
| | False matching | 0 | 0 | 7 | 18 | 14 | 193 | 2 | 28 |
| | Optimal threshold | 6 | | 4.7 | | 8.3 | | 12.3 | |
| | EER (%) | 0 | | 5.8 | | 12.7 | | 1.8 | |
| Proposed Method 3 | Min | 8 | 0 | 4 | 1 | 9 | 0 | 82 | 0 |
| | Max | 670 | 8 | 311 | 6 | 1061 | 26 | 1740 | 25 |
| | Average | 189 | 4 | 76 | 3 | 346 | 5 | 725 | 6 |
| | False matching | 0 | 0 | 1 | 0 | 1 | 12 | 0 | 0 |
| | Optimal threshold | 8 | | 5.7 | | 18 | | 25 | |
| | EER (%) | 0 | | 0.9 | | 0.9 | | 0 | |

Optimal threshold and EER are single values per algorithm and apply to both its Genuine and Imposter columns.

| Method | Size (pixels) | Algorithm | Genuine Average | Genuine Min | Imposter Average | Imposter Max | GAP (Genuine Min − Imposter Max) |
|---|---|---|---|---|---|---|---|
| Fixed size | 250 × 250 | SIFT | 146 | 7 | 4 | 6 | 1 |
| | | SURF | 44 | 3 | 4 | 6 | −3 |
| | | BRISK | 212 | 3 | 5 | 33 | −30 |
| | | ORB | 529 | 4 | 7 | 36 | −32 |
| | 300 × 300 | SIFT | 168 | 4 | 4 | 6 | −2 |
| | | SURF | 63 | 2 | 4 | 7 | −5 |
| | | BRISK | 289 | 4 | 5 | 24 | −20 |
| | | ORB | 680 | 27 | 7 | 39 | −12 |
| | 350 × 350 | SIFT | 180 | 6 | 4 | 6 | 0 |
| | | SURF | 79 | 3 | 3 | 6 | −3 |
| | | BRISK | 435 | 2 | 5 | 35 | −33 |
| | | ORB | 719 | 24 | 6 | 28 | −4 |
| Ratio of original size (proposed method) | 250 for smaller | SIFT | 163 | 4 | 4 | 6 | −2 |
| | | SURF | 54 | 3 | 4 | 6 | −3 |
| | | BRISK | 249 | 21 | 6 | 28 | −7 |
| | | ORB | 600 | 81 | 7 | 34 | 47 |
| | 300 for smaller | SIFT | 189 | 8 | 4 | 8 | 1 |
| | | SURF | 76 | 4 | 3 | 6 | −2 |
| | | BRISK | 346 | 9 | 5 | 32 | −17 |
| | | ORB | 725 | 82 | 6 | 25 | 57 |
| | 350 for smaller | SIFT | 193 | 6 | 4 | 7 | −1 |
| | | SURF | 93 | 4 | 3 | 6 | −2 |
| | | BRISK | 479 | 7 | 5 | 22 | −15 |
| | | ORB | 765 | 48 | 6 | 24 | 24 |

| Time (ms) | SIFT Genuine | SIFT Imposter | SURF Genuine | SURF Imposter | BRISK Genuine | BRISK Imposter | ORB Genuine | ORB Imposter |
|---|---|---|---|---|---|---|---|---|
| Min | 213 | 194 | 65 | 68 | 555 | 530 | 172 | 108 |
| Max | 647 | 514 | 232 | 193 | 1400 | 790 | 3328 | 210 |
| Average | 348 | 306 | 99 | 116 | 751 | 639 | 834 | 159 |

| Algorithm | Evaluation Item | Rotation Genuine | Rotation Imposter | Intensity Genuine | Intensity Imposter | Perspective Genuine | Perspective Imposter | Noise Genuine | Noise Imposter | Total Genuine | Total Imposter |
|---|---|---|---|---|---|---|---|---|---|---|---|
| SIFT | Min | 3 | 0 | 5 | 0 | 3 | 0 | 0 | 0 | 0 | 0 |
| | Max | 1367 | 7 | 1578 | 7 | 1207 | 7 | 1403 | 7 | 1578 | 8 |
| | Average | 290 | 4 | 338 | 4 | 266 | 4 | 218 | 4 | 266 | 4 |
| | False matching | 21 | 24 | 1 | 7 | 11 | 30 | 26 | 420 | 394 | 123 |
| | Optimal threshold | 5.9 | | 6 | | 5.9 | | 5.2 | | 5.6 | |
| | EER (%) | 0.3 | | 0.0 | | 0.3 | | 0.9 | | 0.66 | |
| SURF | Min | 1 | 0 | 2 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| | Max | 619 | 8 | 961 | 8 | 405 | 9 | 821 | 7 | 961 | 8 |
| | Average | 91 | 3 | 160 | 4 | 85 | 3 | 102 | 4 | 92 | 4 |
| | False matching | 154 | 75 | 55 | 956 | 108 | 59 | 150 | 902 | 1845 | 13062 |
| | Optimal threshold | 5.6 | | 5.3 | | 5.9 | | 4.9 | | 4.9 | |
| | EER (%) | 1.4 | | 1.9 | | 4.0 | | 4.4 | | 3.70 | |
| BRISK | Min | 2 | 0 | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 0 |
| | Max | 2183 | 43 | 7467 | 48 | 2099 | 40 | 2945 | 33 | 7467 | 51 |
| | Average | 458 | 5 | 973 | 6 | 439 | 5 | 430 | 5 | 446 | 5 |
| | False matching | 66 | 655 | 78 | 859 | 47 | 445 | 136 | 1305 | 1552 | 17095 |
| | Optimal threshold | 15 | | 17.4 | | 16.8 | | 9.9 | | 11.4 | |
| | EER (%) | 1.0 | | 2.4 | | 1.4 | | 4.0 | | 3.10 | |
| ORB | Min | 2 | 0 | 4 | 0 | 2 | 0 | 4 | 0 | 0 | 0 |
| | Max | 2332 | 37 | 4857 | 36 | 2116 | 49 | 3271 | 35 | 4857 | 51 |
| | Average | 750 | 6 | 1112 | 6 | 730 | 6 | 793 | 6 | 731 | 6 |
| | False matching | 7 | 94 | 2 | 49 | 9 | 113 | 12 | 149 | 175 | 1856 |
| | Optimal threshold | 23 | | 22 | | 23 | | 19 | | 19.5 | |
| | EER (%) | 0.1 | | 0.1 | | 0.3 | | 0.4 | | 0.35 | |

Optimal threshold and EER are single values per condition and apply to both its Genuine and Imposter columns.


© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Jang, D.-H.; Kwon, K.-S.; Kim, J.-K.; Yang, K.-Y.; Kim, J.-B. Dog Identification Method Based on Muzzle Pattern Image. Appl. Sci. 2020, 10, 8994. https://doi.org/10.3390/app10248994
