Deep Neural Network for Visual Stimulus-Based Reaction Time Estimation Using the Periodogram of Single-Trial EEG

Abstract

1. Introduction

2. Background

3. Materials and Methods

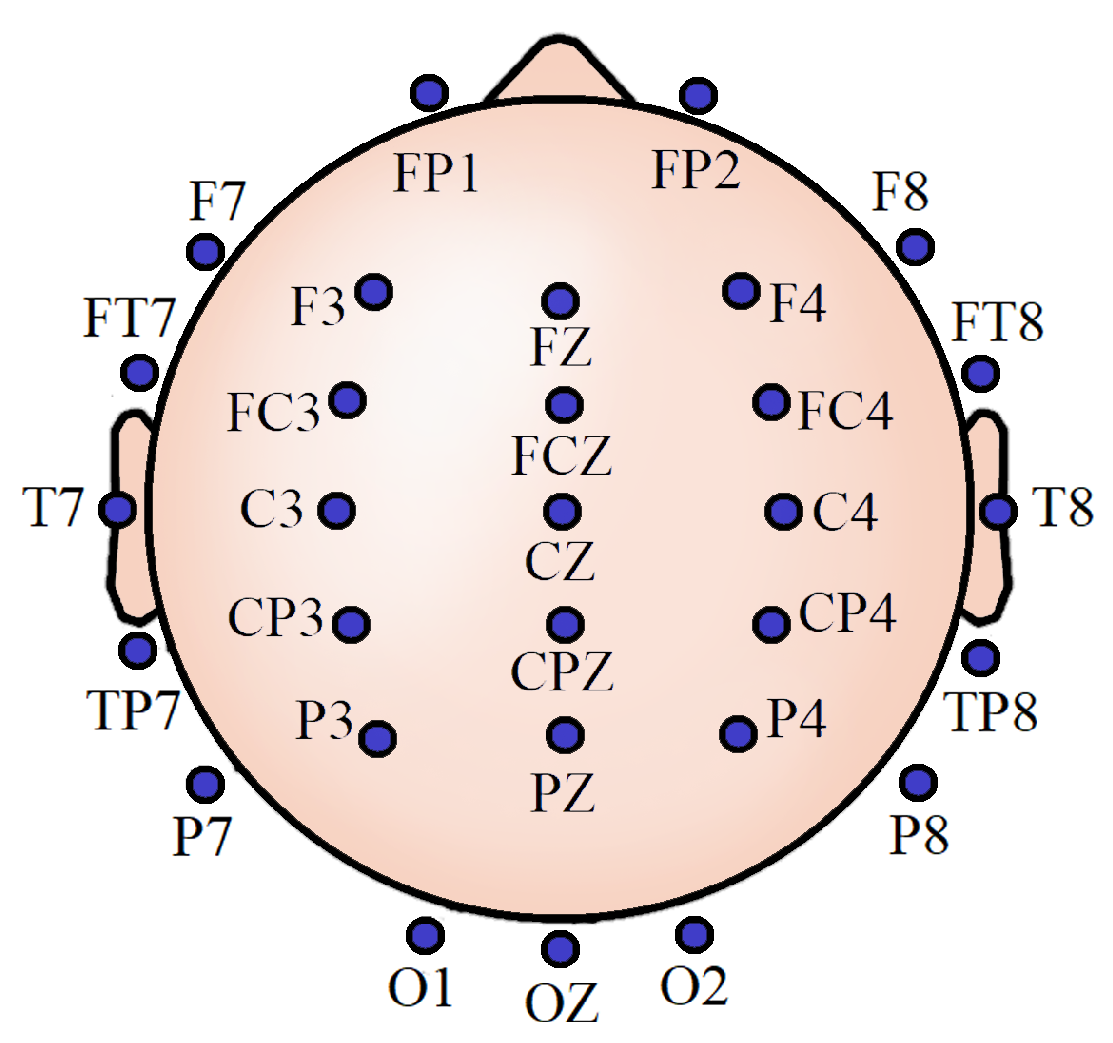

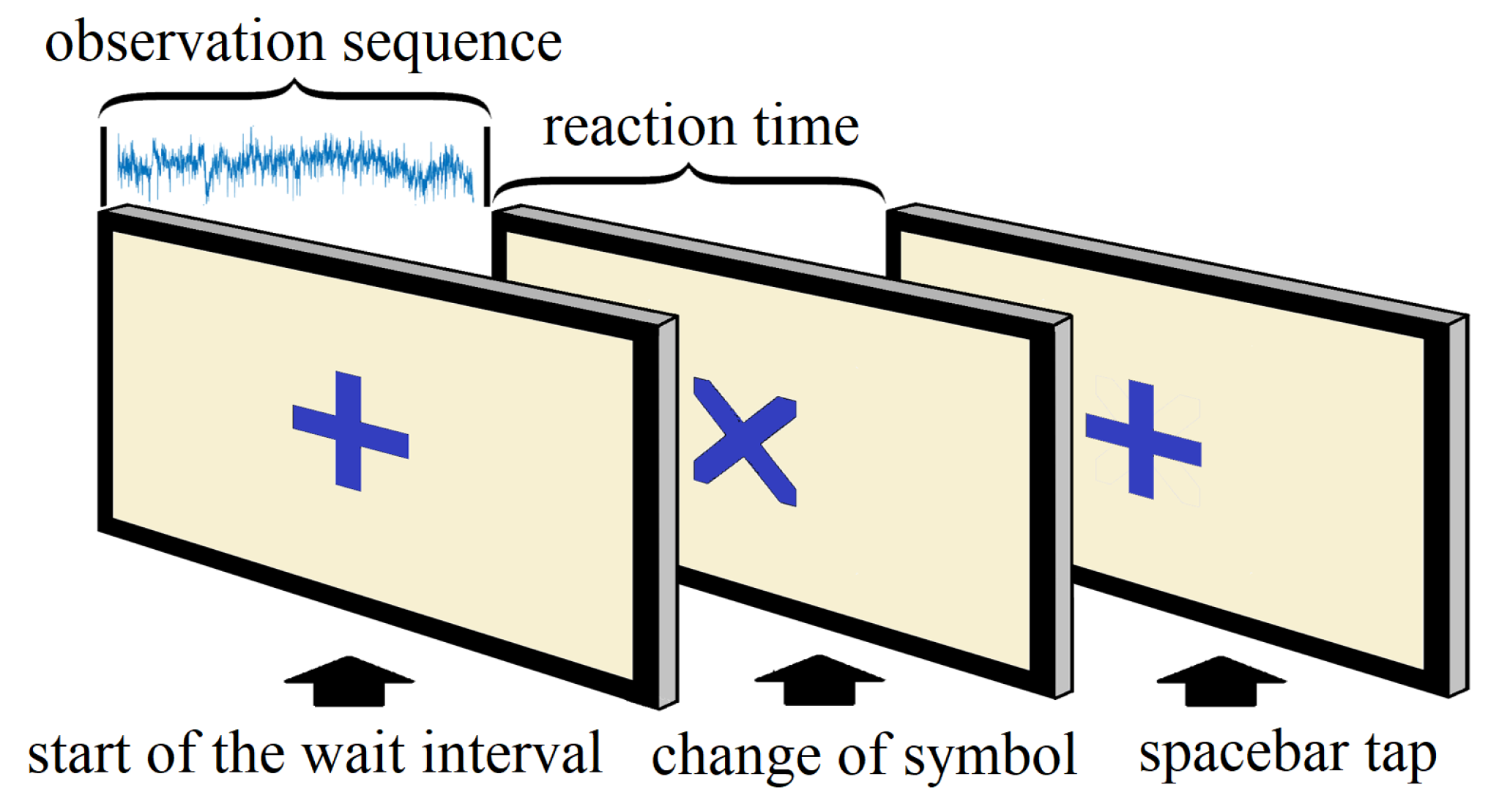

3.1. Experiment Details

3.2. Data Handling and Preprocessing

1. Beginning of the trial (+)
2. Change of symbol (×)
3. Response of the subject (space bar tap)
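If reaction time is the interval between the change of symbol (×) and the space-bar tap, as the marker list suggests, it can be computed per trial from the marker positions. The sketch below assumes the markers are stored as sample indices on a common clock with sampling rate `fs`. The function name and the example values are hypothetical.

```python
import numpy as np

def reaction_times_ms(change_samples, response_samples, fs):
    """Per-trial reaction time in milliseconds.

    Assumption: RT runs from the change-of-symbol marker to the response
    marker, both given as sample indices recorded at sampling rate fs (Hz).
    """
    change = np.asarray(change_samples, dtype=float)
    response = np.asarray(response_samples, dtype=float)
    rt_ms = (response - change) / fs * 1000.0
    if np.any(rt_ms <= 0):
        raise ValueError("each response must follow its symbol change")
    return rt_ms

# Hypothetical markers for two trials recorded at 1000 Hz
rts = reaction_times_ms([1000, 5000], [1350, 5600], fs=1000.0)
```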

3.3. Periodogram

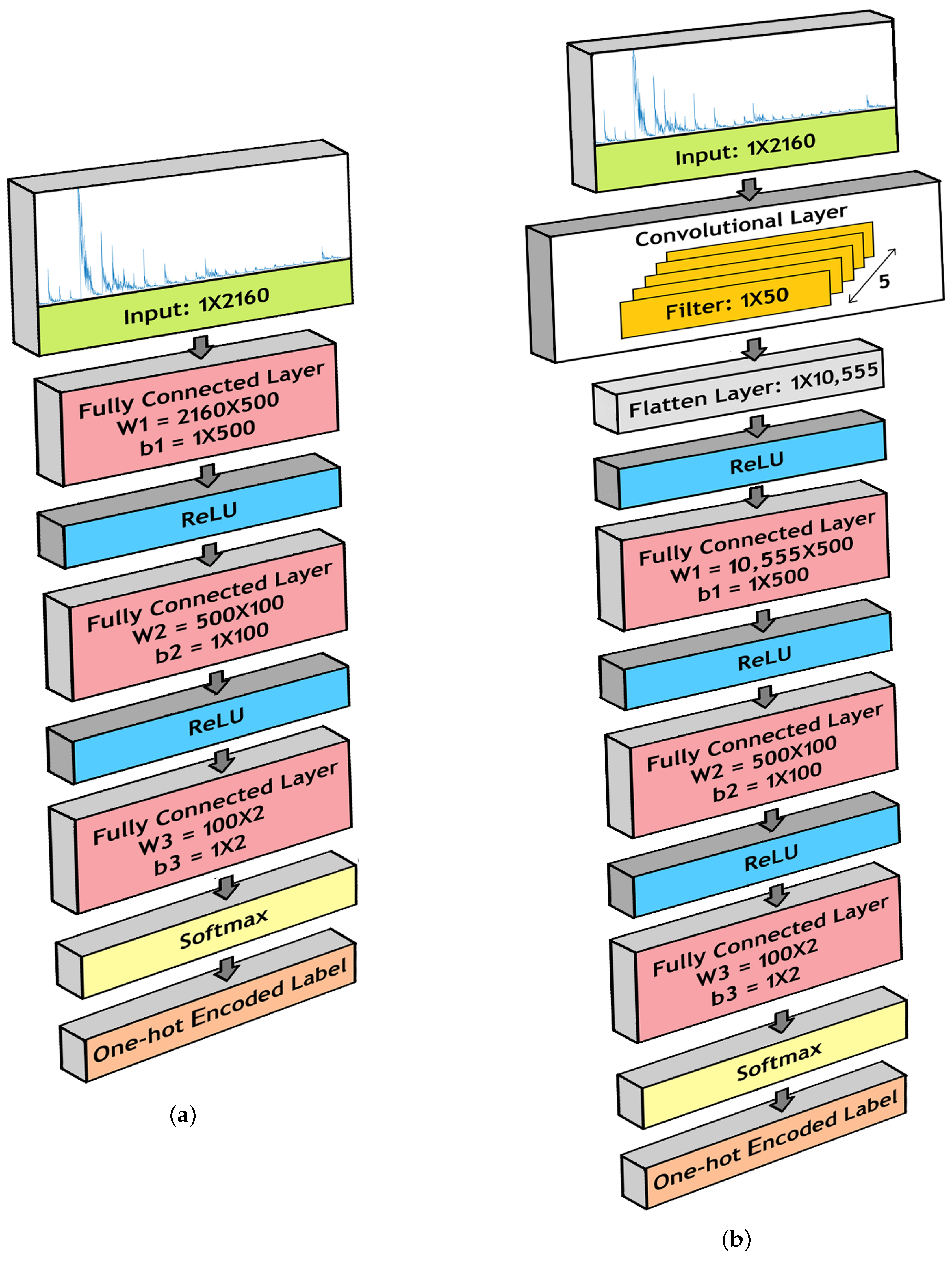
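As a reference point for this section, a minimal one-sided periodogram of a single trial can be written with NumPy's FFT: the squared DFT magnitude scaled by 1/(fs·N), with every bin except DC and Nyquist doubled. This is a sketch, not the authors' code; the rectangular (boxcar) window, the mean removal, and the 250 Hz example rate are assumptions. Under those choices it should agree with `scipy.signal.periodogram(x, fs)` called with default arguments.

```python
import numpy as np

def periodogram(x, fs):
    """One-sided periodogram PSD estimate along the last axis.

    x: array of shape (..., n_samples), e.g. (n_channels, n_samples) for one trial.
    Returns (freqs in Hz, psd in units^2/Hz).
    """
    x = np.asarray(x, dtype=float)
    x = x - x.mean(axis=-1, keepdims=True)  # remove DC offset (constant detrend)
    n = x.shape[-1]
    spectrum = np.fft.rfft(x, axis=-1)      # no taper, i.e. a boxcar window
    psd = np.abs(spectrum) ** 2 / (fs * n)
    # Fold negative frequencies onto positive ones; DC and Nyquist appear once.
    if n % 2 == 0:
        psd[..., 1:-1] *= 2.0
    else:
        psd[..., 1:] *= 2.0
    freqs = np.fft.rfftfreq(n, d=1.0 / fs)
    return freqs, psd

# Example: a 10 Hz sine sampled at a hypothetical 250 Hz for one second
fs = 250.0
t = np.arange(250) / fs
freqs, psd = periodogram(np.sin(2 * np.pi * 10.0 * t), fs)
```

Because the one-sided bins carry all of the signal's power, summing `psd` and multiplying by the bin width `fs / N` recovers the variance of the trial (Parseval's theorem), which is a convenient sanity check.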

3.4. Deep Neural Network Models

3.4.1. Fully Connected Neural Network (FCNN)

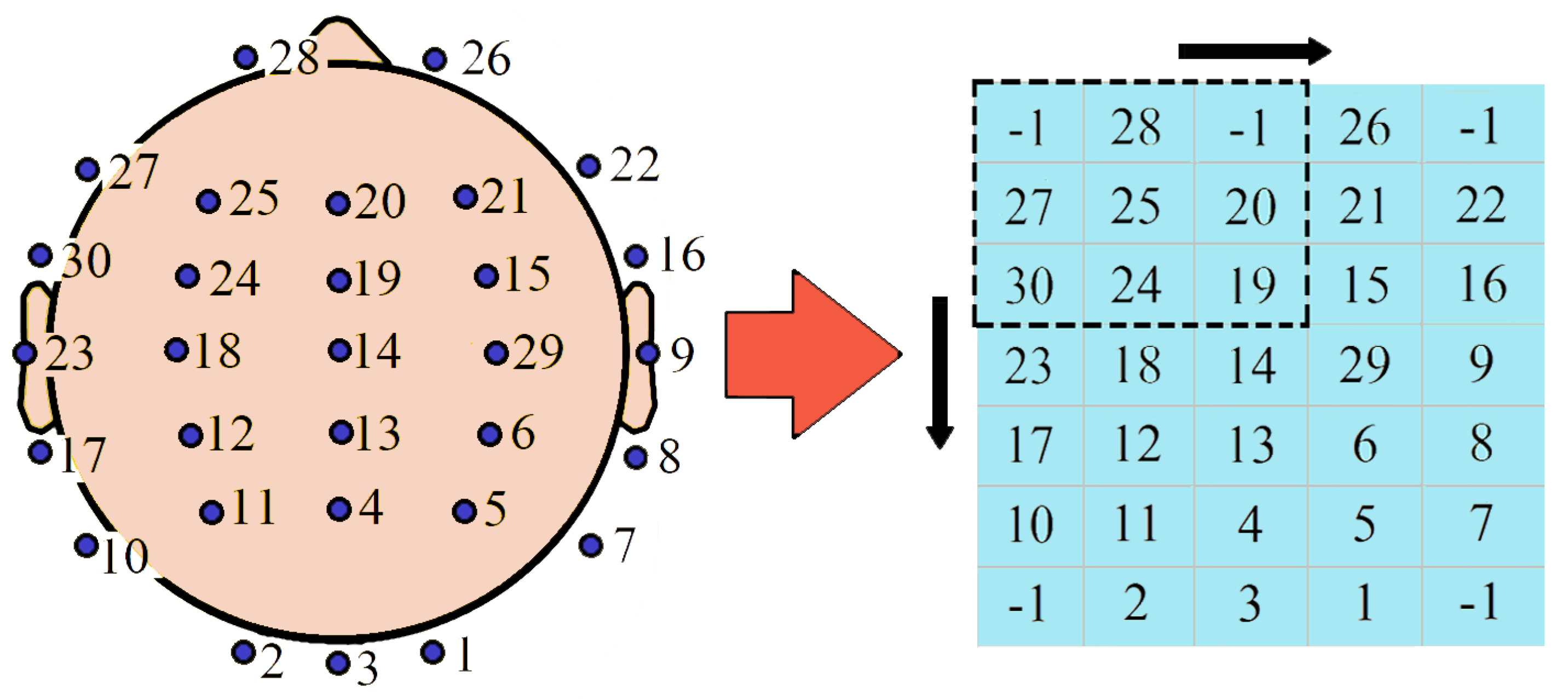

3.4.2. Convolutional Neural Network (CNN)

3.5. Binary Classification

1. Fast RT (RT ≤ 500 ms)
2. Slow RT (RT > 500 ms)

The fully connected layers have the following weight (W) and bias (b) dimensions:

1. Fully Connected Layer 1:
   - (a) $W_1$: 2160 × 500
   - (b) $b_1$: 1 × 500
2. Fully Connected Layer 2:
   - (a) $W_2$: 500 × 100
   - (b) $b_2$: 1 × 100
3. Fully Connected Layer 3:
   - (a) $W_3$: 100 × 2
   - (b) $b_3$: 1 × 2
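The listed shapes fix the parameter count of the three fully connected layers at 1,130,802 (1,080,500 + 50,100 + 202). The NumPy sketch below wires them into a forward pass over a 2160-dimensional input. The ReLU hidden activations, the softmax output, and the He-normal initialization are assumptions, since the list gives only shapes; this excerpt also does not say how the 2160 input features are assembled.

```python
import numpy as np

# (fan_in, fan_out) for each fully connected layer, as listed above
LAYER_SHAPES = [(2160, 500), (500, 100), (100, 2)]

def init_params(rng):
    """Hypothetical initialization: He-normal weights, zero biases of shape (1, fan_out)."""
    params = []
    for fan_in, fan_out in LAYER_SHAPES:
        W = rng.normal(0.0, np.sqrt(2.0 / fan_in), size=(fan_in, fan_out))
        b = np.zeros((1, fan_out))
        params.append((W, b))
    return params

def forward(x, params):
    """ReLU after layers 1 and 2 (assumed), softmax over the 2 classes (assumed)."""
    h = x
    for i, (W, b) in enumerate(params):
        h = h @ W + b                      # (batch, fan_in) @ (fan_in, fan_out) + (1, fan_out)
        if i < len(params) - 1:
            h = np.maximum(h, 0.0)
    h = h - h.max(axis=1, keepdims=True)   # subtract row max for numerical stability
    e = np.exp(h)
    return e / e.sum(axis=1, keepdims=True)

rng = np.random.default_rng(0)
params = init_params(rng)
x = rng.normal(size=(4, 2160))             # 4 hypothetical input vectors
probs = forward(x, params)                 # shape (4, 2): class probabilities
n_params = sum(W.size + b.size for W, b in params)
```

Storing each weight matrix as (fan_in, fan_out) matches the orientation of the list, and the 1 × k bias shapes broadcast across the batch dimension without reshaping.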

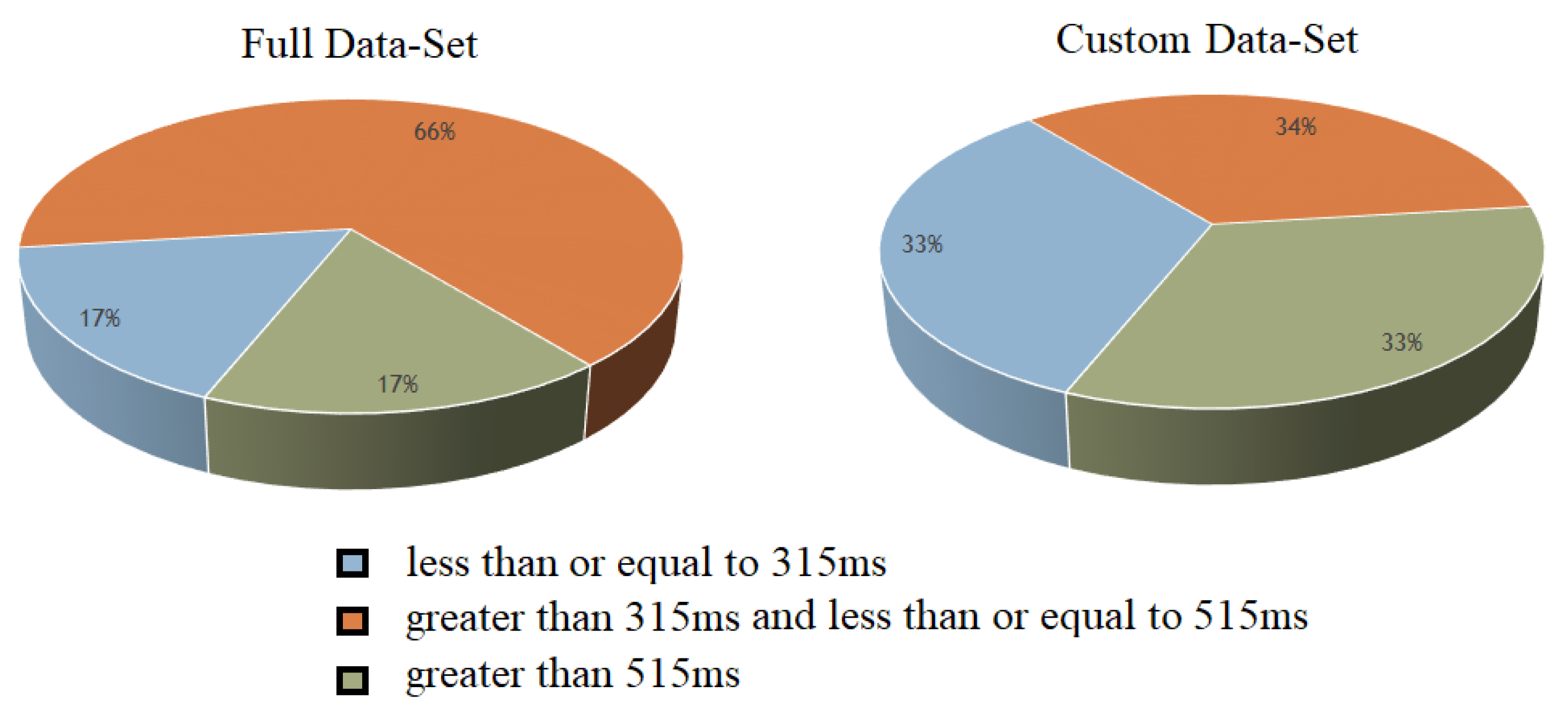

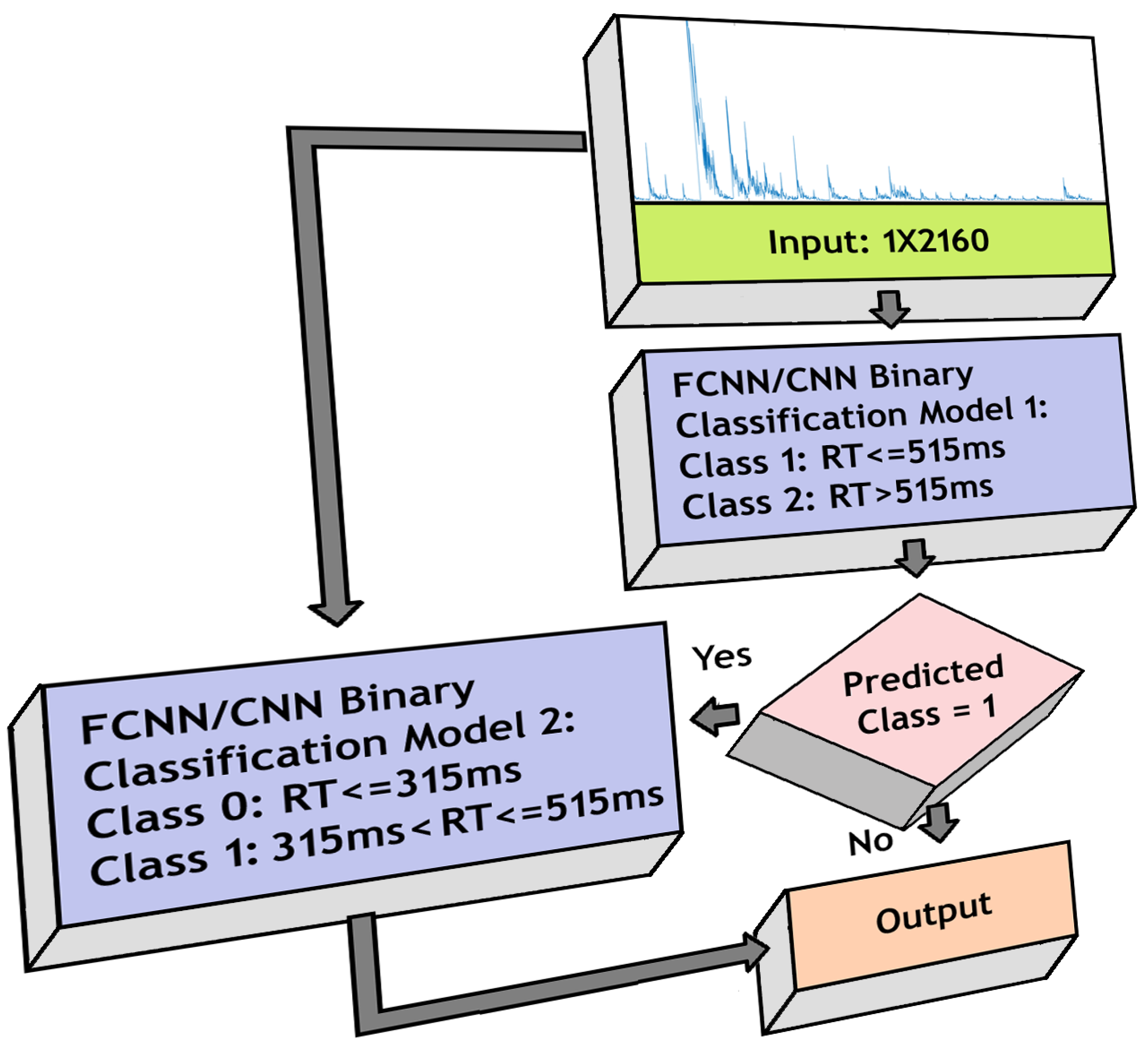

3.6. Three-Class Classification

1. Fast RT (RT ≤ 315 ms)
2. Medium RT (315 ms < RT ≤ 515 ms)
3. Slow RT (RT > 515 ms)
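Both labeling schemes, the 500 ms split of Section 3.5 and the 315/515 ms split above, amount to binning each RT against sorted thresholds with the upper edge inclusive. A minimal NumPy sketch follows; the constant and function names are hypothetical.

```python
import numpy as np

BINARY_EDGES_MS = (500.0,)               # 0 = fast, 1 = slow
THREE_CLASS_EDGES_MS = (315.0, 515.0)    # 0 = fast, 1 = medium, 2 = slow

def rt_to_class(rt_ms, edges_ms):
    """Map reaction times (ms) to integer class labels.

    With right=True, np.digitize returns i such that edges[i-1] < rt <= edges[i],
    matching the "RT <= threshold" convention of the class definitions.
    """
    return np.digitize(np.asarray(rt_ms, dtype=float), edges_ms, right=True)

three_class = rt_to_class([300, 315, 316, 515, 516], THREE_CLASS_EDGES_MS)
binary = rt_to_class([500, 501], BINARY_EDGES_MS)
```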

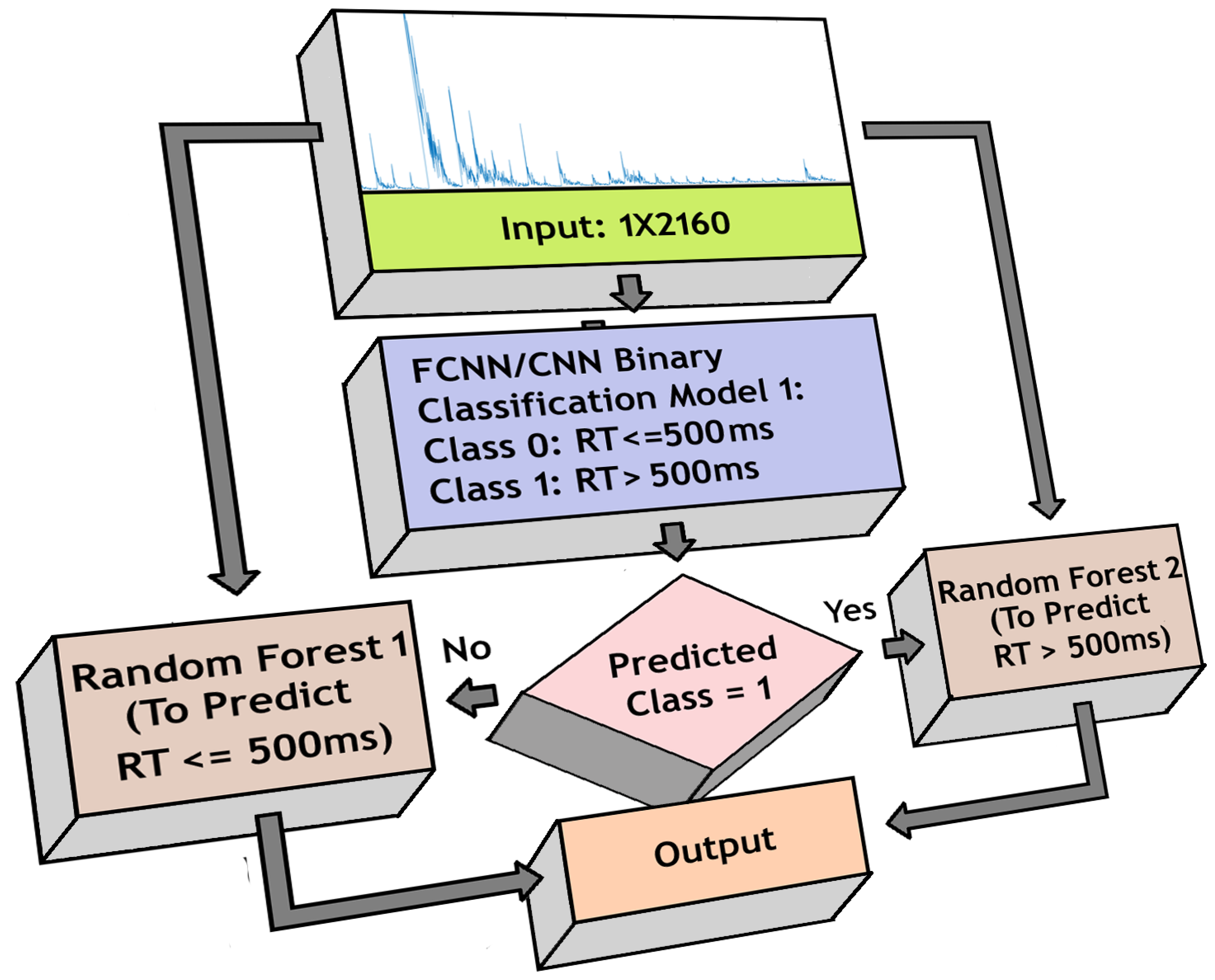

3.7. Regression

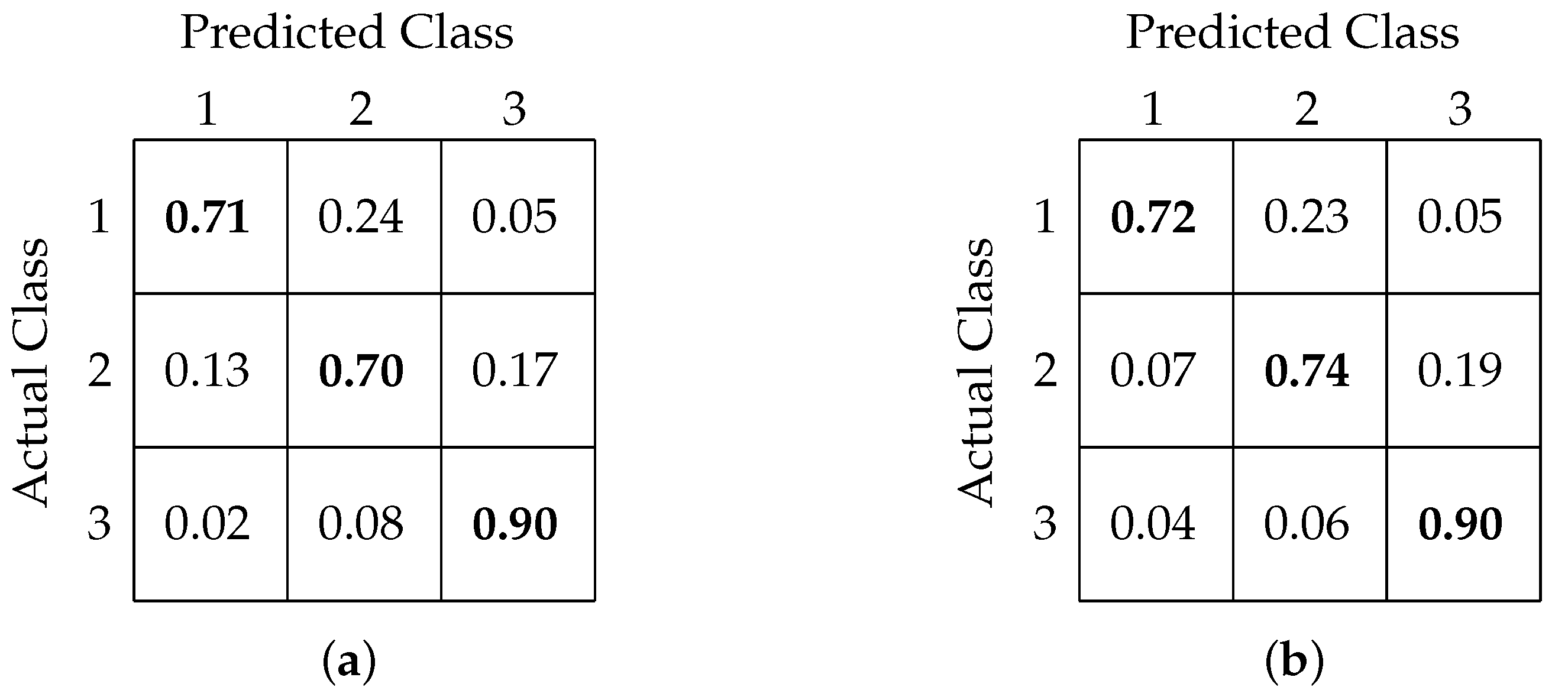

3.8. Important Channels Isolation

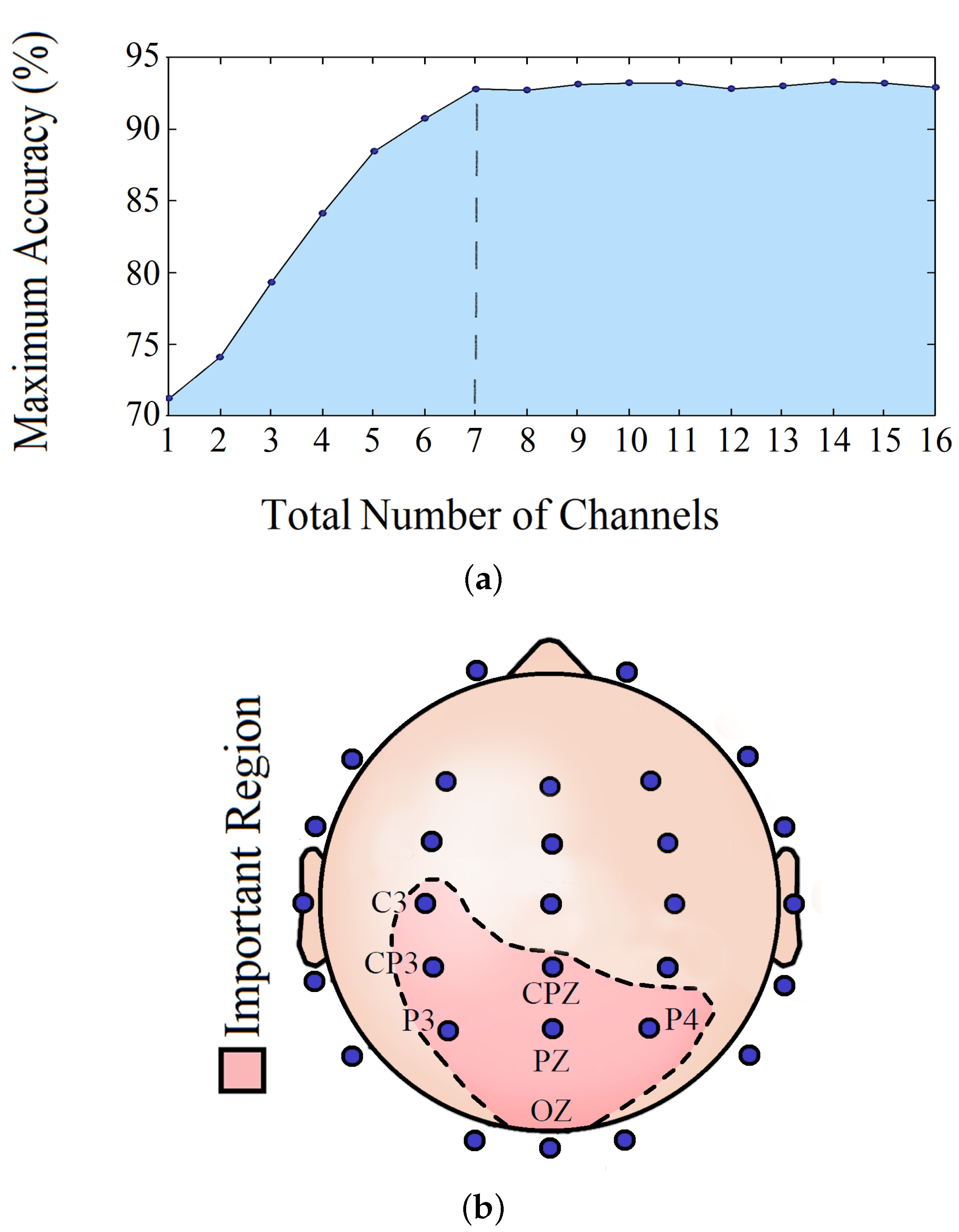

3.9. Important Frequency Band Isolation

4. Results

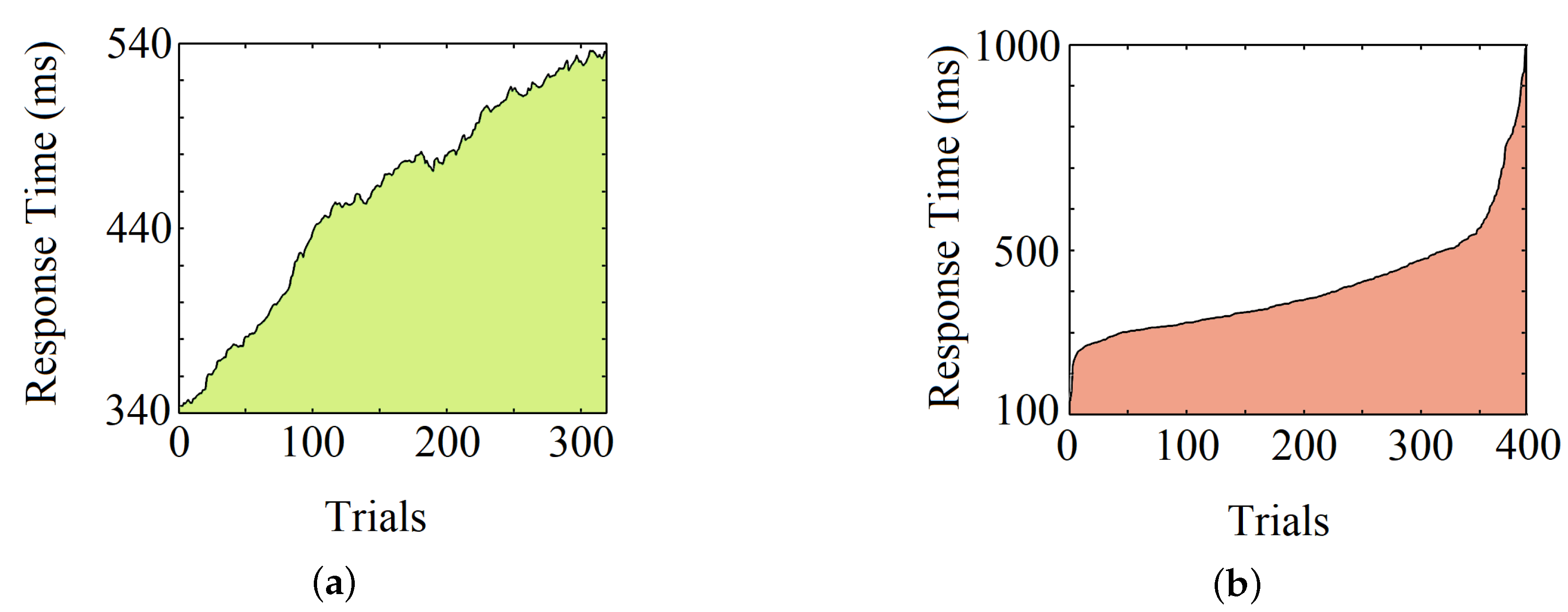

4.1. Individual Subject Analysis

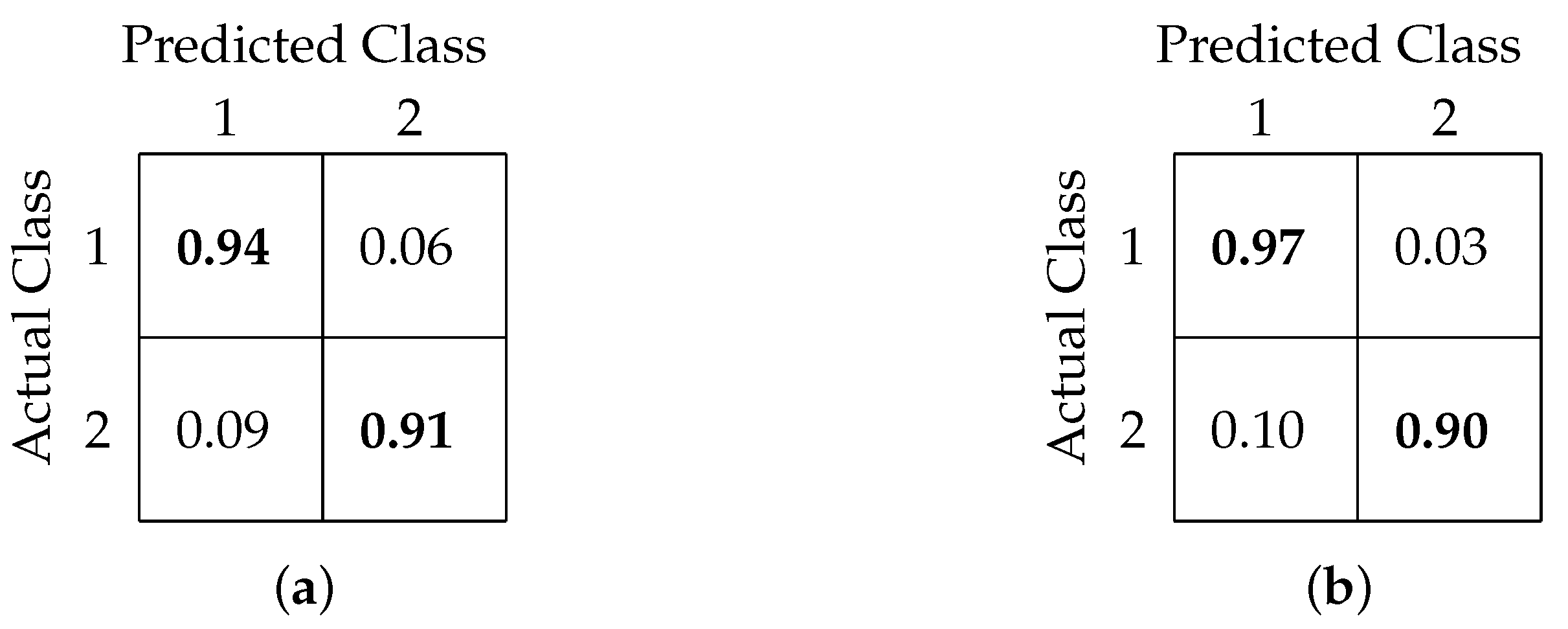

4.2. Binary Classification

4.3. Three-Class Classification

4.4. Regression

4.5. Important Channels Isolation

4.6. Important Frequency Band Isolation

5. Discussion

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

Ethical Statements


| Frequency Band | Frequency Range (Hz) |
|---|---|
| Delta (δ) | 1–4 |
| Theta (θ) | 4–8 |
| Alpha (α) | 8–12 |
| Beta (β) | 12–35 |
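With the band edges in the table, band power can be obtained by summing a PSD estimate (for example, a periodogram) over each band's frequency bins and multiplying by the bin width. The sketch assumes half-open intervals [low, high), so that shared edges such as 4 Hz are counted once, and a uniformly spaced frequency axis. Whether the study used absolute band power, relative power, or the raw bins is not stated in this excerpt.

```python
import numpy as np

# Band edges in Hz from the table; half-open [low, high) is an assumption
BANDS_HZ = {
    "delta": (1.0, 4.0),
    "theta": (4.0, 8.0),
    "alpha": (8.0, 12.0),
    "beta": (12.0, 35.0),
}

def band_powers(freqs, psd, bands=BANDS_HZ):
    """Integrate a PSD over each band.

    freqs: uniformly spaced frequency axis (Hz).
    psd: array of shape (..., len(freqs)), e.g. (n_channels, n_freqs).
    Returns {band_name: power with shape psd.shape[:-1]}.
    """
    freqs = np.asarray(freqs, dtype=float)
    psd = np.asarray(psd, dtype=float)
    df = freqs[1] - freqs[0]
    out = {}
    for name, (low, high) in bands.items():
        mask = (freqs >= low) & (freqs < high)
        out[name] = psd[..., mask].sum(axis=-1) * df
    return out

# Flat toy spectrum at 1 Hz resolution
powers = band_powers(np.arange(41.0), np.ones(41))
```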

| Algorithm | Accuracy (%) | Precision | Recall |
|---|---|---|---|
| Linear Regression | 67 | 0.67 | 0.67 |
| Decision Tree | 63 | 0.63 | 0.63 |
| Support Vector Classifier (SVC) | 73 | 0.72 | 0.73 |
| Stochastic Gradient Descent (SGD) + SVC | 63 | 0.63 | 0.63 |
| Random Forest | 79 | 0.79 | 0.78 |
| FCNN | 93 | 0.92 | 0.93 |
| CNN | 94 | 0.94 | 0.93 |

| Algorithm | Accuracy (%) | Precision | Recall |
|---|---|---|---|
| Linear Regression | 52 | 0.54 | 0.53 |
| Decision Tree | 56 | 0.55 | 0.56 |
| Support Vector Classifier (SVC) | 70 | 0.54 | 0.59 |
| Stochastic Gradient Descent (SGD) + SVC | 59 | 0.56 | 0.56 |
| Random Forest | 72 | 0.71 | 0.70 |
| FCNN | 76 | 0.75 | 0.72 |
| CNN | 78 | 0.75 | 0.74 |
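The accuracy, precision, and recall columns in the two classification tables above can be computed from true and predicted labels as follows. The excerpt does not say how per-class precision and recall were averaged; this sketch assumes a macro average (the unweighted mean over classes). `sklearn.metrics.precision_score` and `recall_score` with `average="macro"` compute the same two quantities.

```python
import numpy as np

def classification_metrics(y_true, y_pred, n_classes):
    """Accuracy (%) plus macro-averaged precision and recall (averaging is an assumption)."""
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)
    accuracy = 100.0 * np.mean(y_true == y_pred)
    precision, recall = [], []
    for c in range(n_classes):
        tp = np.sum((y_pred == c) & (y_true == c))  # predicted c, truly c
        fp = np.sum((y_pred == c) & (y_true != c))  # predicted c, truly another class
        fn = np.sum((y_pred != c) & (y_true == c))  # missed a true c
        precision.append(tp / (tp + fp) if tp + fp > 0 else 0.0)
        recall.append(tp / (tp + fn) if tp + fn > 0 else 0.0)
    return float(accuracy), float(np.mean(precision)), float(np.mean(recall))

acc, prec, rec = classification_metrics([0, 0, 1, 1], [0, 1, 1, 1], n_classes=2)
```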

| Algorithm | Correlation Coefficient (CC) | RMSE (ms) |
|---|---|---|
| Linear Regression | 0.56 | 158.7 |
| Ridge Regression | 0.56 | 157.6 |
| Support Vector Regression (SVR) | 0.60 | 136.7 |
| Extra Tree Regression | 0.73 | 114.4 |
| Random Forest Regression | 0.74 | 111.2 |
| FCNN + Random Forest | 0.78 | 110.4 |
| CNN + Random Forest | 0.80 | 108.6 |
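The two regression metrics can be sketched as below, assuming CC denotes the Pearson correlation coefficient between measured and predicted RTs and RMSE is the root-mean-square error in milliseconds. The function name is hypothetical.

```python
import numpy as np

def regression_metrics(rt_true_ms, rt_pred_ms):
    """Pearson correlation coefficient (CC) and RMSE (ms) between true and predicted RTs."""
    y = np.asarray(rt_true_ms, dtype=float)
    y_hat = np.asarray(rt_pred_ms, dtype=float)
    cc = np.corrcoef(y, y_hat)[0, 1]           # off-diagonal entry of the 2x2 correlation matrix
    rmse = np.sqrt(np.mean((y - y_hat) ** 2))  # error in the same unit as the RTs
    return float(cc), float(rmse)

cc, rmse = regression_metrics([300, 400, 500], [310, 390, 520])
```

CC is insensitive to a constant offset or scale error in the predictions, while RMSE penalizes both, which is why the two are usually reported together.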

| Channel/Channels | Accuracy (%) |
|---|---|
| CP3 | 72.2 |
| CP3, C3 | 73.8 |
| CP3, C3, P3 | 79.1 |
| CP3, C3, P3, OZ | 84.1 |
| CP3, C3, P3, OZ, P4 | 88.3 |
| CP3, C3, P3, OZ, P4, PZ | 90.8 |
| CP3, C3, P3, OZ, P4, PZ, CPZ | 92.7 |
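Each row of the channel table scores a nested subset: the first k channels of a fixed ordering. The sketch below reproduces that structure for any scoring routine. How the ordering was chosen is not stated in this excerpt, and `toy_score` is a hypothetical placeholder for training and evaluating a model on the selected channels.

```python
from typing import Callable, List, Sequence, Tuple

def cumulative_subset_scores(
    ordered_channels: Sequence[str],
    score_fn: Callable[[List[str]], float],
) -> List[Tuple[List[str], float]]:
    """Score nested channel subsets: the first 1 channel, the first 2, ..., all of them."""
    results = []
    for k in range(1, len(ordered_channels) + 1):
        subset = list(ordered_channels[:k])
        results.append((subset, score_fn(subset)))
    return results

# Channel order taken from the table rows
ORDER = ["CP3", "C3", "P3", "OZ", "P4", "PZ", "CPZ"]

def toy_score(subset: List[str]) -> float:
    # Hypothetical stand-in for "fit a model on these channels, return test accuracy"
    return float(len(subset))

rows = cumulative_subset_scores(ORDER, toy_score)
```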

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Chowdhury, M.S.N.; Dutta, A.; Robison, M.K.; Blais, C.; Brewer, G.A.; Bliss, D.W. Deep Neural Network for Visual Stimulus-Based Reaction Time Estimation Using the Periodogram of Single-Trial EEG. Sensors 2020, 20, 6090. https://doi.org/10.3390/s20216090
