A Novel Approach for Reliable Classification of Marine Low Cloud Morphologies with Vision–Language Models

Abstract

1. Introduction

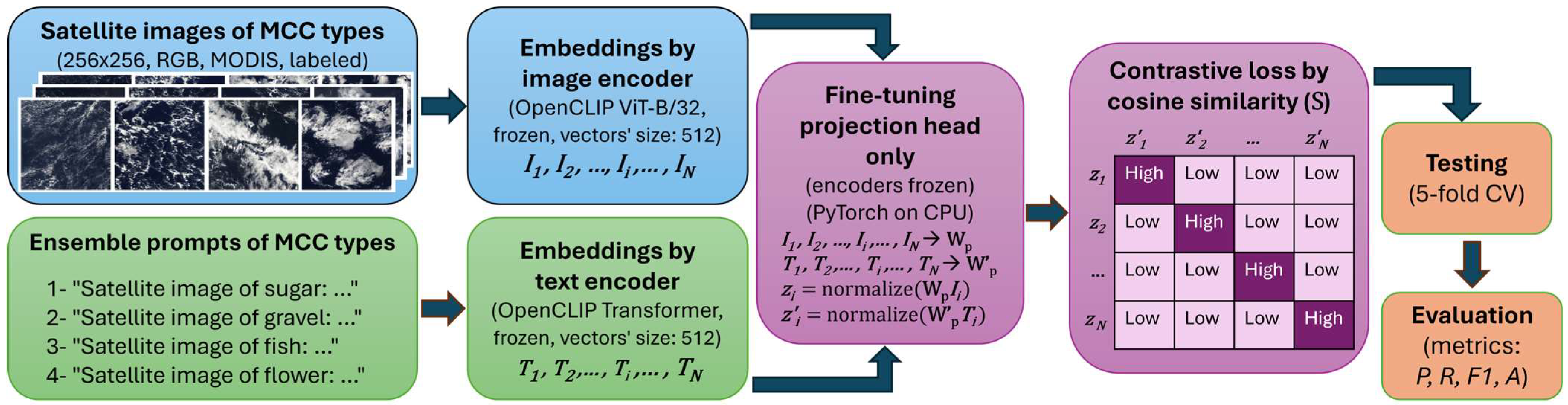

2. Methodology

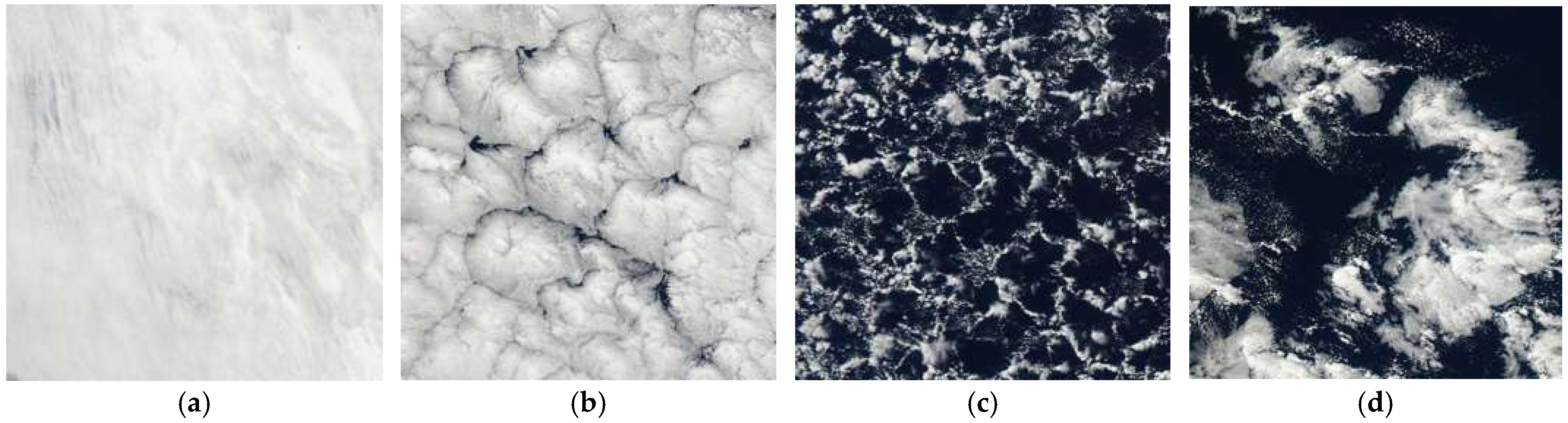

2.1. Data

2.2. Model Framework

2.3. Training Procedure

2.4. Testing the Model and Cross-Validation Strategy

2.5. Evaluation Metrics

3. Results

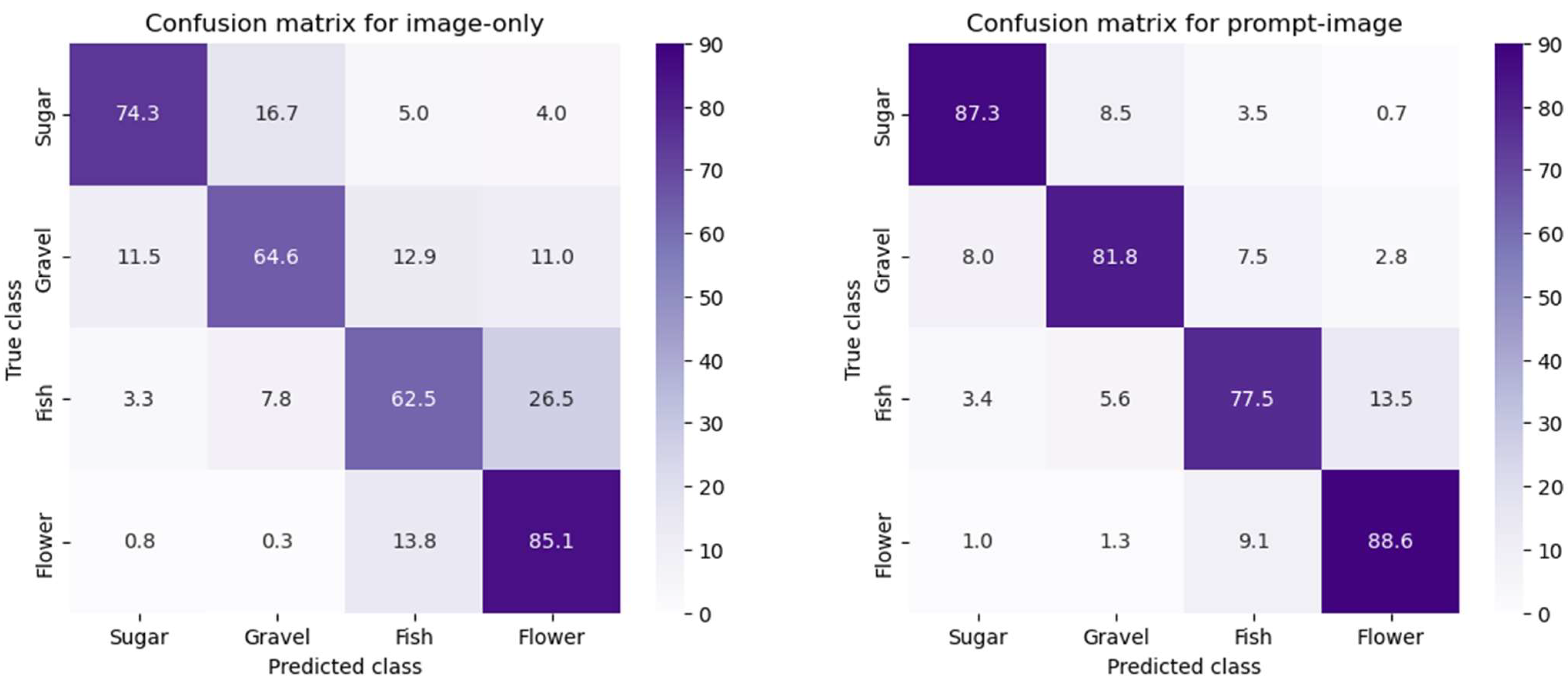

3.1. Model Development for SGFF Cloud Types

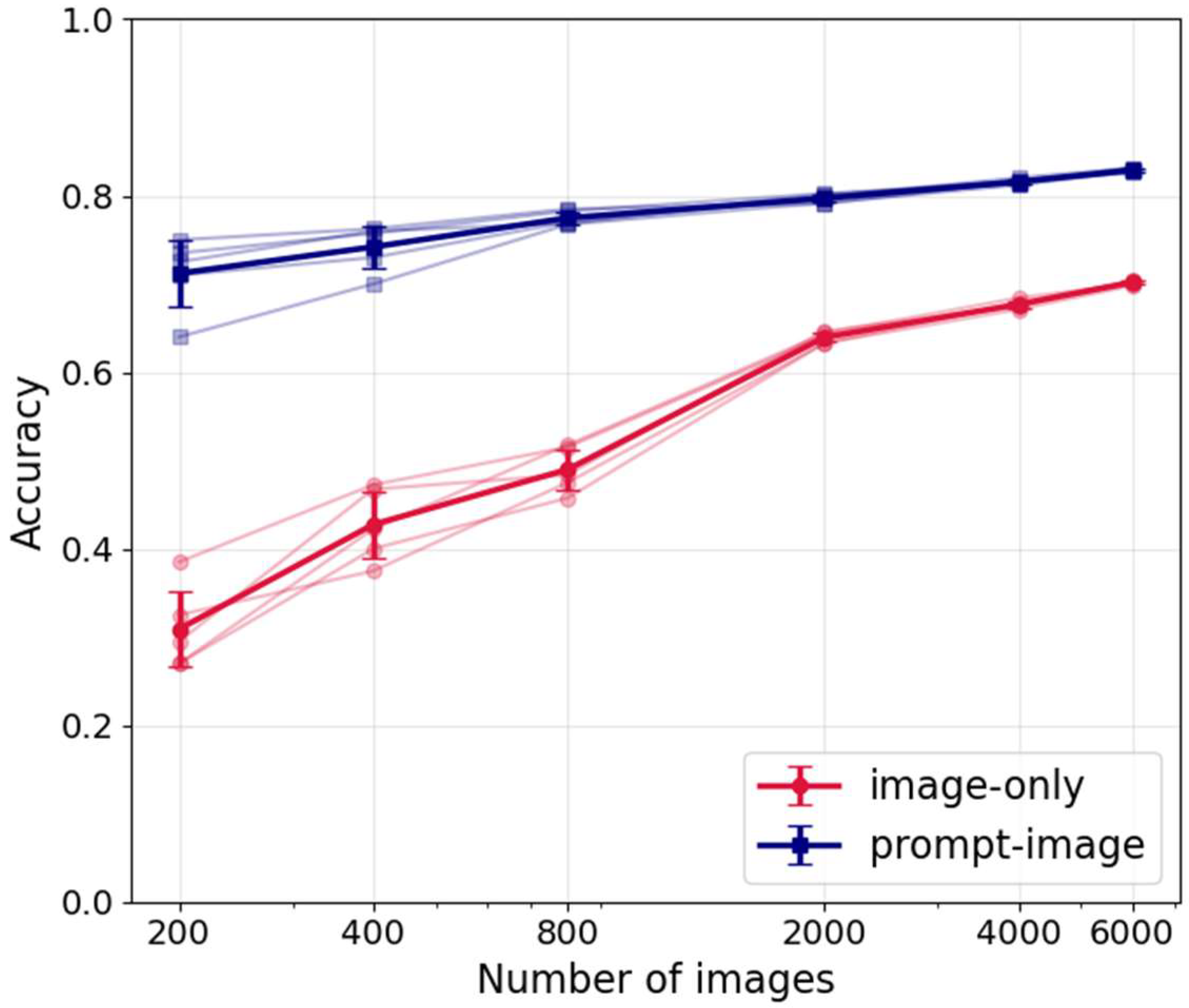

3.2. VLM Performance Under Limited Samples

3.3. Model Development for Marine Sc Cloud Types

4. Conclusions

1. Facilitate rapid development of new pattern-recognition algorithms without retraining entire models, by modifying natural-language descriptions rather than neural-network weights.

2. Provide interpretable, knowledge-guided classifications that link morphological patterns to concepts described in the text prompts.

3. Enable integration with other modalities (e.g., model output and radar data) through the shared image–text embedding space, creating a natural pathway for fusing satellite, radar, and simulation datasets within a unified framework. This positions VLMs as part of the broader shift in atmospheric artificial intelligence from black-box benchmarking toward physically informed, knowledge-driven analysis.

4. Lower the computational barriers to applying advanced AI methods in climate research, making it feasible to replicate or extend such analyses on modest hardware.
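The first conclusion above rests on how contrastive VLMs such as CLIP classify images. An image embedding is compared with one text-prompt embedding per class in the shared image–text space, so adding or rewording a class changes only the prompt set, not the network weights. The following is a minimal, standard-library-only Python sketch of that zero-shot step, not the authors' implementation. The three-dimensional vectors are hypothetical stand-ins for real encoder outputs, and the logit scale of 100 follows CLIP's convention rather than a value reported in this study.

```python
import math

def normalize(vec):
    """Scale a vector to unit L2 norm so dot products equal cosine similarities."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]

def zero_shot_classify(image_emb, prompt_embs, logit_scale=100.0):
    """Return (best_label, probabilities) by comparing one image embedding
    with one text-prompt embedding per class in a shared embedding space."""
    img = normalize(image_emb)
    labels = list(prompt_embs)
    # Scaled cosine similarity between the image and each class prompt.
    logits = [
        logit_scale * sum(a * b for a, b in zip(img, normalize(prompt_embs[k])))
        for k in labels
    ]
    # Numerically stable softmax over the class logits.
    top = max(logits)
    exps = [math.exp(z - top) for z in logits]
    total = sum(exps)
    probs = {k: e / total for k, e in zip(labels, exps)}
    return max(probs, key=probs.get), probs

# Hypothetical 3-D stand-ins for text-encoder outputs of class prompts
# such as "a satellite image of open-cell stratocumulus".
prompt_embs = {
    "open cells": [1.0, 0.1, 0.0],
    "closed cells": [0.1, 1.0, 0.0],
    "stratus": [0.0, 0.1, 1.0],
}
image_emb = [0.9, 0.2, 0.1]  # hypothetical image-encoder output

label, probs = zero_shot_classify(image_emb, prompt_embs)

# Adding a class only adds a prompt embedding; no network weights change.
prompt_embs["other cells"] = [0.7, 0.7, 0.0]
label_4, probs_4 = zero_shot_classify(image_emb, prompt_embs)
print(label, label_4, round(probs_4["open cells"], 4))
```

With a real model, the toy vectors would be replaced by encoder outputs (for example, from the `encode_image` and `encode_text` methods of an OpenCLIP model). The classification step itself would be unchanged.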

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


Evaluation Metrics for Image-Only (SGFF Cloud Types)

| Cloud Type | Precision | Recall | F1-Score | Accuracy | Sample Size |
|---|---|---|---|---|---|
| Sugar | 0.827 | 0.743 | 0.782 | n/a | 2200 |
| Gravel | 0.723 | 0.646 | 0.683 | n/a | 2200 |
| Fish | 0.664 | 0.625 | 0.644 | n/a | 2200 |
| Flower | 0.672 | 0.851 | 0.751 | n/a | 2200 |
| Total | 0.721 | 0.716 | 0.715 | 0.716 | 8800 |

Evaluation Metrics for Prompt-Image (SGFF Cloud Types)

| Cloud Type | Precision | Recall | F1-Score | Accuracy | Sample Size |
|---|---|---|---|---|---|
| Sugar | 0.877 | 0.873 | 0.875 | n/a | 2200 |
| Gravel | 0.841 | 0.818 | 0.829 | n/a | 2200 |
| Fish | 0.794 | 0.775 | 0.784 | n/a | 2200 |
| Flower | 0.839 | 0.886 | 0.862 | n/a | 2200 |
| Total | 0.838 | 0.838 | 0.838 | 0.838 | 8800 |
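The Total rows in the two SGFF tables above appear to be unweighted (macro) averages of the per-class rows. Because every class has 2200 samples, macro and sample-weighted averages coincide. The short Python check below uses only values copied from those tables. It recomputes each mean and tests whether it matches the reported Total to within 0.001, a tolerance chosen because the per-class entries are themselves rounded to three decimals.

```python
# Per-class rows (Sugar, Gravel, Fish, Flower) and reported Total rows,
# copied from the two SGFF tables.
PER_CLASS = {
    "image-only": {
        "precision": [0.827, 0.723, 0.664, 0.672],
        "recall": [0.743, 0.646, 0.625, 0.851],
        "f1": [0.782, 0.683, 0.644, 0.751],
    },
    "prompt-image": {
        "precision": [0.877, 0.841, 0.794, 0.839],
        "recall": [0.873, 0.818, 0.775, 0.886],
        "f1": [0.875, 0.829, 0.784, 0.862],
    },
}
REPORTED_TOTAL = {
    "image-only": {"precision": 0.721, "recall": 0.716, "f1": 0.715},
    "prompt-image": {"precision": 0.838, "recall": 0.838, "f1": 0.838},
}

def macro_average(values):
    """Unweighted mean over classes (equal to the sample-weighted mean here,
    since every class has the same number of samples)."""
    return sum(values) / len(values)

def compare_totals(tol=0.001):
    """Map (model, metric) -> (recomputed mean, reported total, within tol)."""
    results = {}
    for model, metrics in PER_CLASS.items():
        for metric, values in metrics.items():
            mean = macro_average(values)
            reported = REPORTED_TOTAL[model][metric]
            results[(model, metric)] = (mean, reported, abs(mean - reported) <= tol)
    return results

for (model, metric), (mean, reported, ok) in compare_totals().items():
    print(f"{model:12s} {metric:9s} mean={mean:.4f} reported={reported:.3f} match={ok}")
```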

Evaluation Metrics for Image-Only (Marine Sc Cloud Types)

| Cloud Type | Precision | Recall | F1-Score | Accuracy | Sample Size |
|---|---|---|---|---|---|
| Open cells | 0.375 | 0.185 | 0.247 | n/a | 65 |
| Closed cells | 0.351 | 0.523 | 0.420 | n/a | 65 |
| Stratus | 0.449 | 0.477 | 0.463 | n/a | 65 |
| Other cells | 0.516 | 0.492 | 0.504 | n/a | 65 |
| Total | 0.423 | 0.419 | 0.408 | 0.419 | 260 |

Evaluation Metrics for Prompt-Image (Marine Sc Cloud Types)

| Cloud Type | Precision | Recall | F1-Score | Accuracy | Sample Size |
|---|---|---|---|---|---|
| Open cells | 0.809 | 0.846 | 0.827 | n/a | 65 |
| Closed cells | 0.984 | 0.938 | 0.961 | n/a | 65 |
| Stratus | 0.883 | 0.815 | 0.848 | n/a | 65 |
| Other cells | 0.786 | 0.846 | 0.815 | n/a | 65 |
| Total | 0.865 | 0.862 | 0.863 | 0.862 | 260 |
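In all four tables, Accuracy equals the Total recall. This follows from the balanced test sets. With n samples in each of k classes, accuracy = ΣTP_i / (k·n) = (1/k) Σ (TP_i / n), which is exactly the macro-averaged recall. The sketch below computes per-class precision, recall, and F1 (the harmonic mean of precision and recall), their macro averages, and overall accuracy from a confusion matrix. The 3-class matrix is a hypothetical toy example, not data from this study.

```python
def metrics_from_confusion(cm):
    """cm[i][j] counts samples of true class i predicted as class j.
    Returns per-class (precision, recall, f1), their macro averages, and accuracy."""
    k = len(cm)
    per_class = []
    for c in range(k):
        tp = cm[c][c]
        predicted = sum(cm[r][c] for r in range(k))  # column sum: predicted as c
        actual = sum(cm[c])                          # row sum: truly class c
        precision = tp / predicted if predicted else 0.0
        recall = tp / actual if actual else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0  # harmonic mean
        per_class.append((precision, recall, f1))
    macro = tuple(sum(row[i] for row in per_class) / k for i in range(3))
    accuracy = sum(cm[c][c] for c in range(k)) / sum(sum(row) for row in cm)
    return per_class, macro, accuracy

# Hypothetical, balanced 3-class example (10 test samples per class).
TOY_CM = [
    [8, 1, 1],
    [2, 7, 1],
    [0, 1, 9],
]
per_class, (macro_p, macro_r, macro_f1), accuracy = metrics_from_confusion(TOY_CM)
# The two agree because every class has the same number of samples.
print(f"macro recall={macro_r:.3f}  accuracy={accuracy:.3f}")
```

The same arithmetic ties each recall in the marine Sc tables to an integer count out of 65. For example, the image-only recall of 0.185 for open cells corresponds to 12/65 ≈ 0.1846.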

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Cite This Article

Erfani, E.; Hosseinpour, F. A Novel Approach for Reliable Classification of Marine Low Cloud Morphologies with Vision–Language Models. Atmosphere 2025, 16, 1252. https://doi.org/10.3390/atmos16111252
