Research on a Real-Time Tunnel Vehicle Speed Detection System Based on YOLOv8 and DeepSORT Algorithms

Abstract

1. Introduction

2. Methods

2.1. Overview

2.2. YOLOv8

2.2.1. Classification and Prediction

2.2.2. Loss Function

2.2.3. Non-Maximum Suppression Mechanism

2.3. DeepSORT

2.3.1. State Space Model

2.3.2. Data Association Strategy

2.4. Perspective Transformation and Speed Estimation Module

2.4.1. Principle of Perspective Transformation

2.4.2. Principle of Vehicle Speed Estimation

2.4.3. Speeding Detection Mechanism

2.5. Bounding Box Smoothing and Stabilization

3. Results

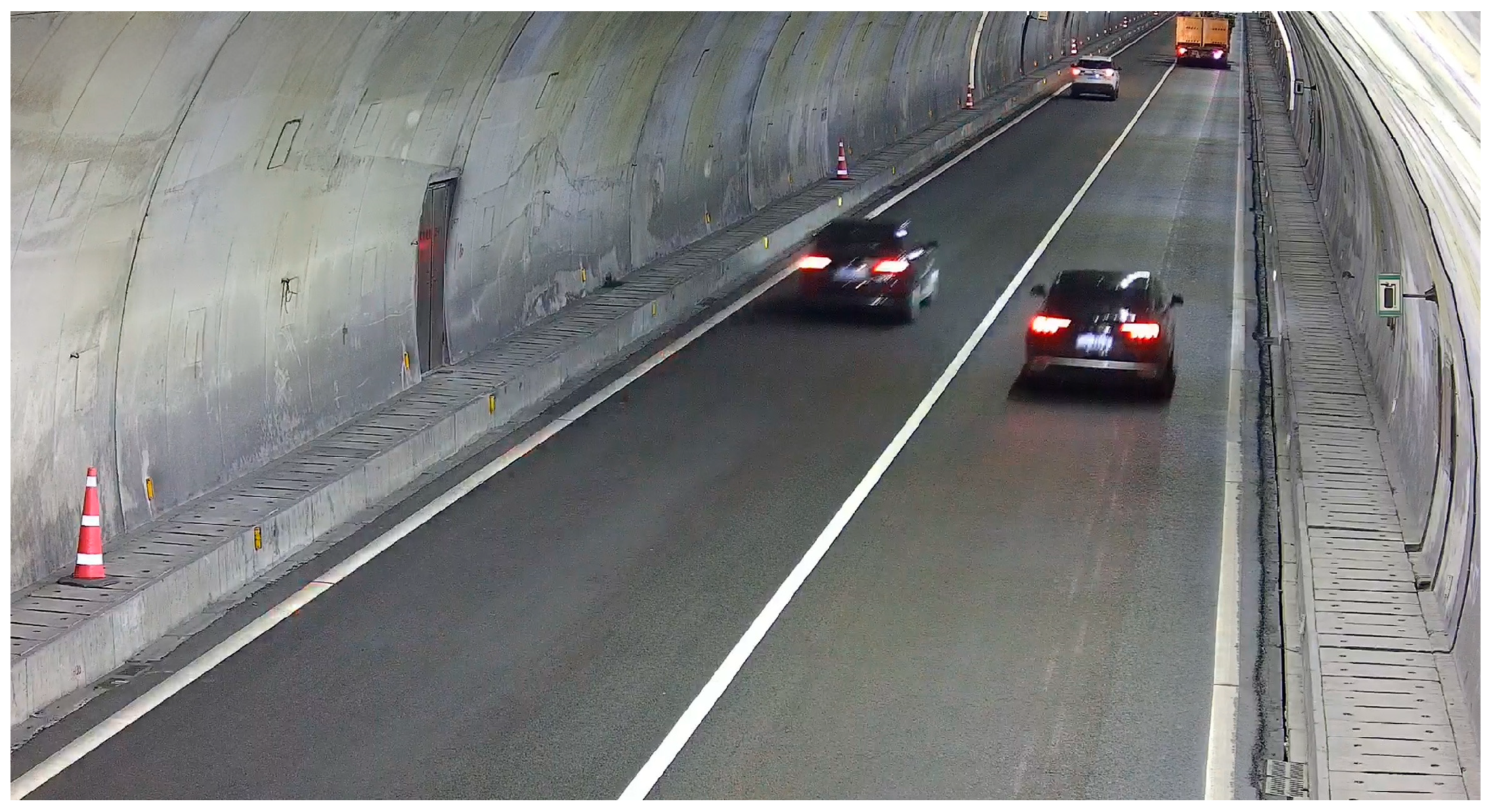

3.1. Experimental Dataset and Environment

3.2. Accuracy Evaluation

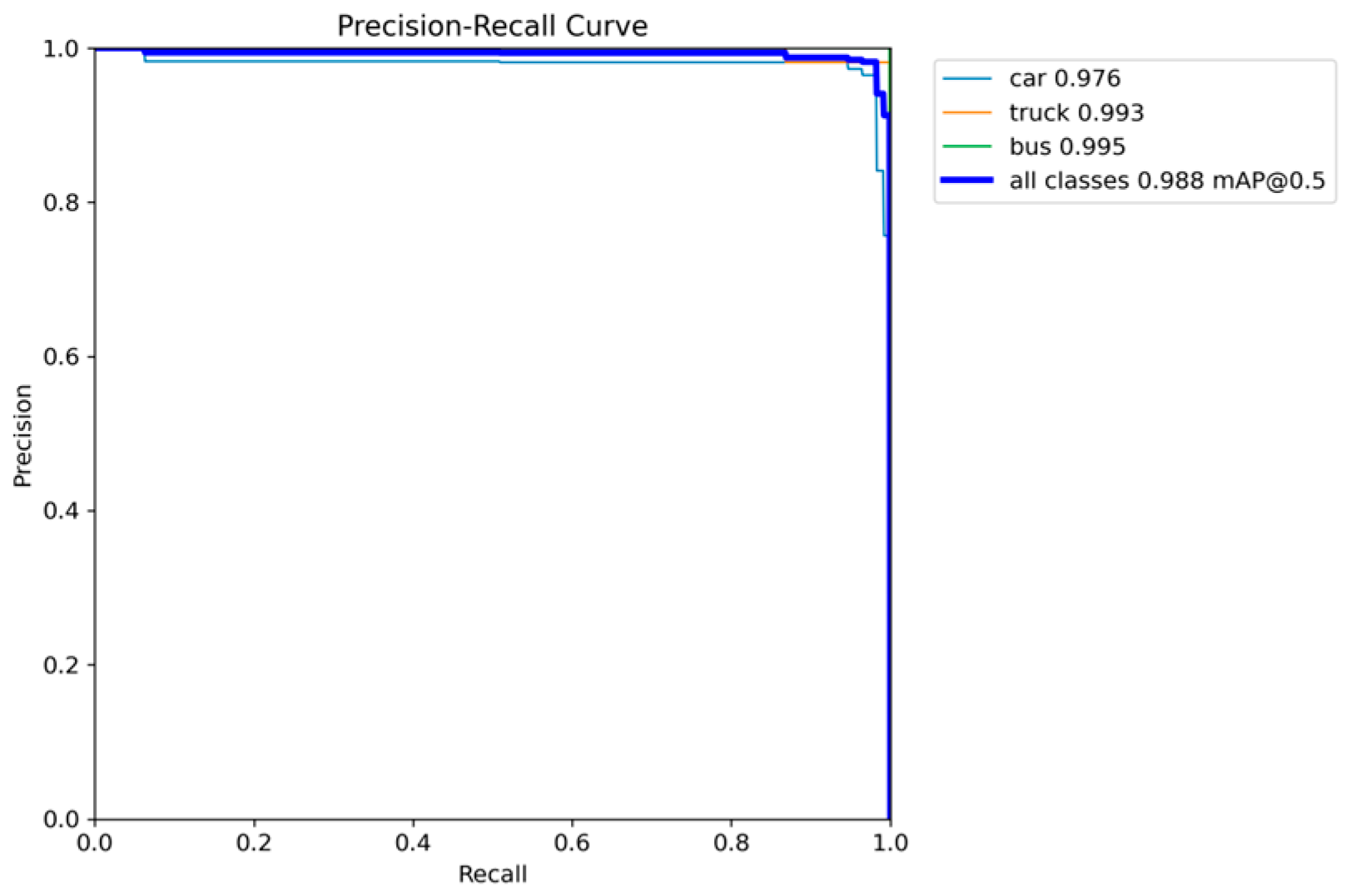

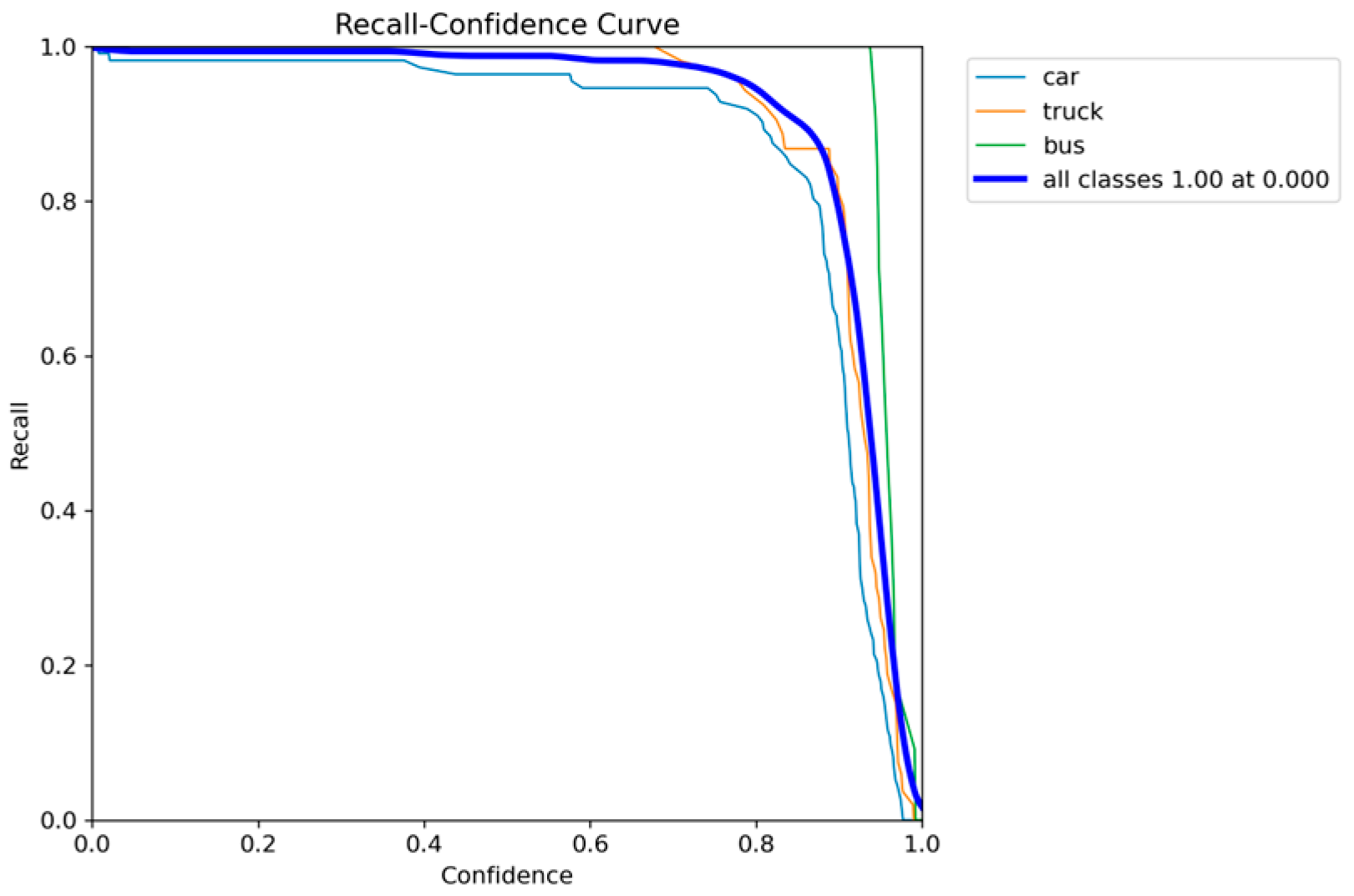

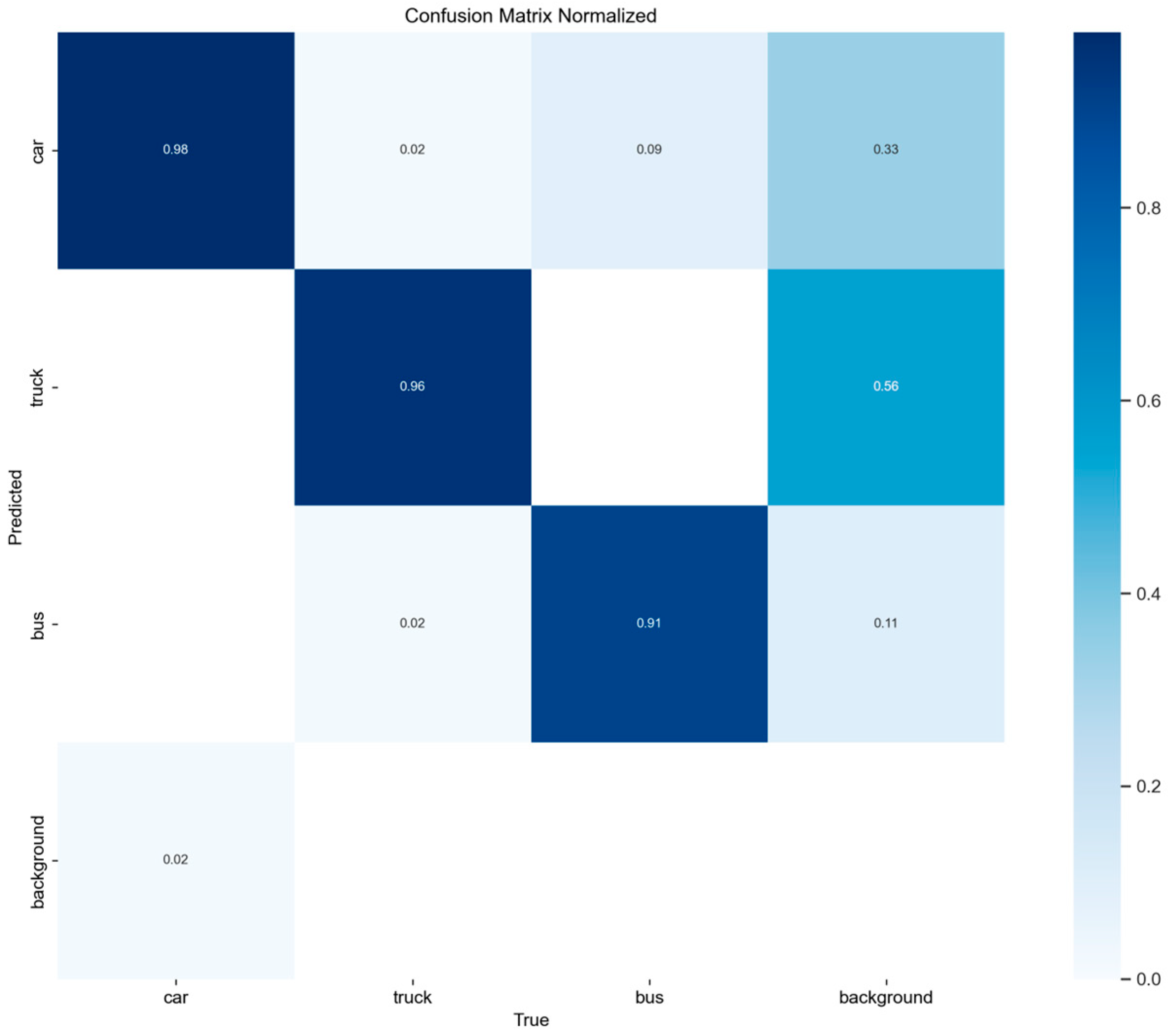

3.2.1. Classification Accuracy

3.2.2. Vehicle Speed Detection Accuracy

3.2.3. Detection Timing Analysis

3.2.4. Ablation Study

3.2.5. Algorithm Performance Comparison

3.3. Analysis of Tunnel Traffic Operation Results

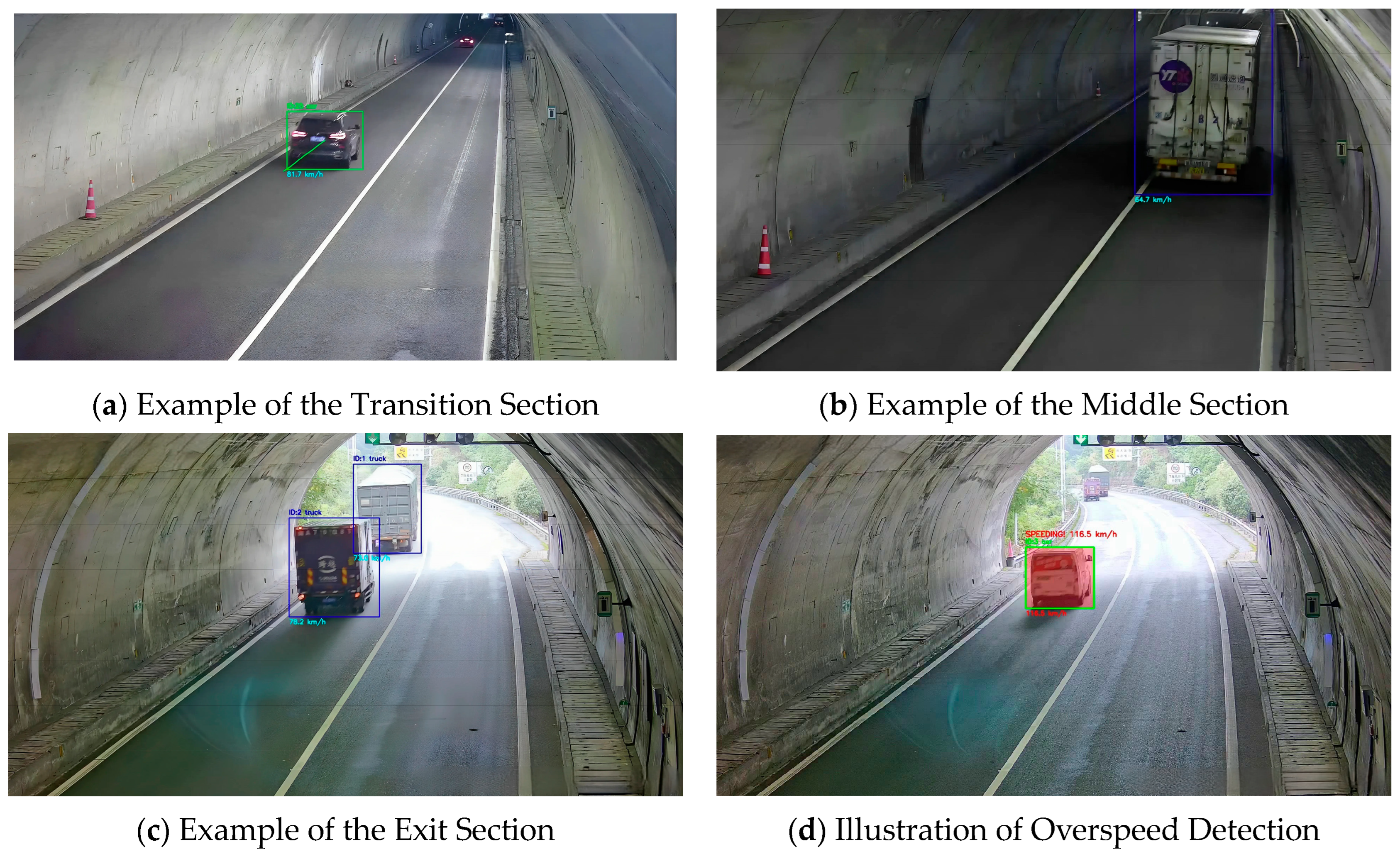

3.3.1. Detection Results

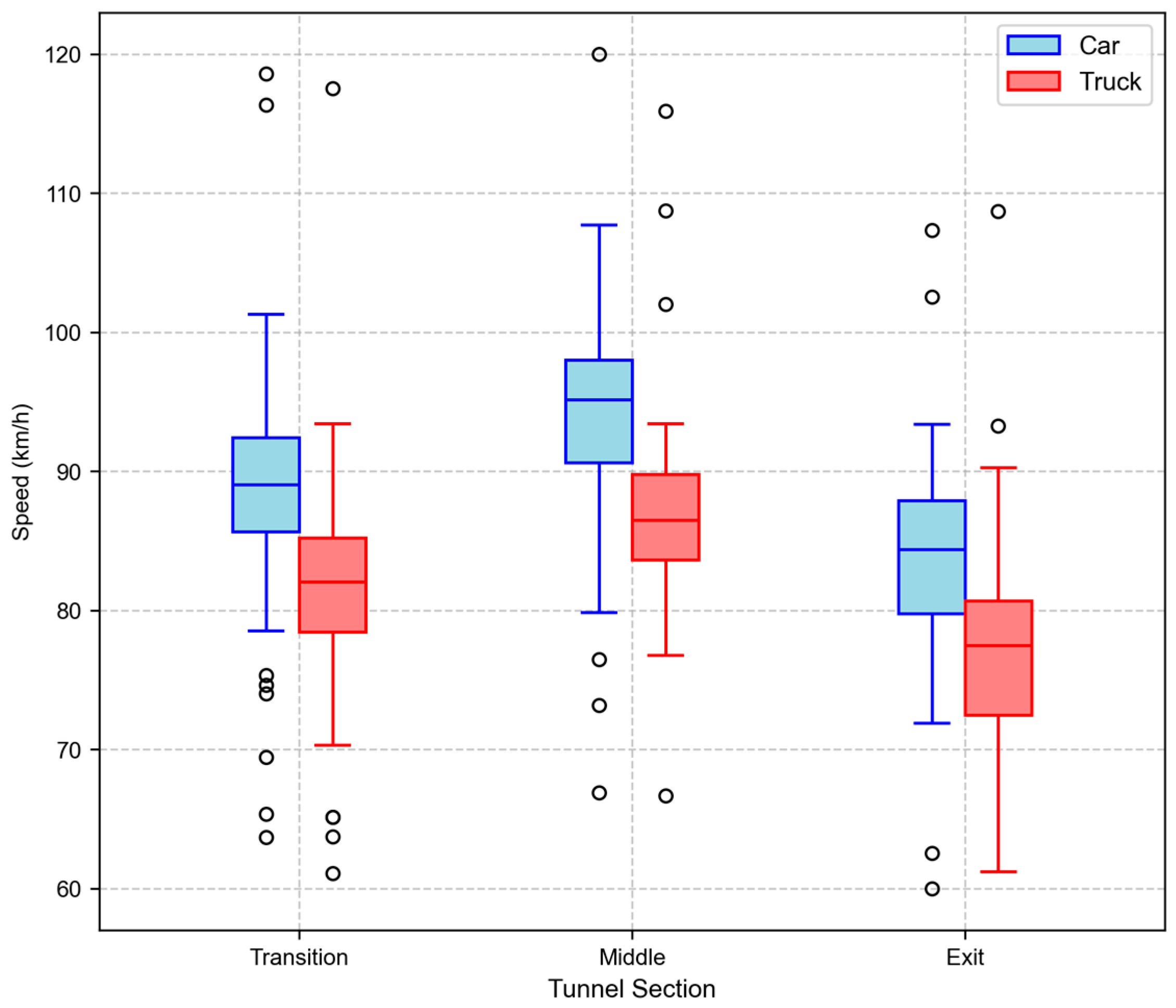

3.3.2. Speed Characteristics in Different Tunnel Sections

3.3.3. Speed Analysis Results and Discussion

4. Discussion

4.1. System Performance and Technical Advantages

4.2. Analysis of Multi-Vehicle Speed Behavior Characteristics

4.3. Practical Application Value and Limitation

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Krajewski, R.; Bock, J.; Kloeker, L.; Eckstein, L. The highD dataset: A drone dataset of naturalistic vehicle trajectories on German highways for validation of highly automated driving systems. In Proceedings of the 2018 21st International Conference on Intelligent Transportation Systems (ITSC), Honolulu, HI, USA, 4–7 November 2018; pp. 2118–2125.

2. Hu, W.; Chen, J.; Tian, Q.; Wang, C.; Zhang, Y. Analysis and Application of Highway Tunnel Risk Factors Based on Traffic Accident Data. In Proceedings of the 2024 7th International Symposium on Traffic Transportation and Civil Architecture (ISTTCA 2024), Tianjin, China, 21–23 June 2024; pp. 836–849.

3. Hu, Y.; Liu, H.; Zhu, T. Influence of spatial visual conditions in tunnel on driver behavior: Considering the route familiarity of drivers. Adv. Mech. Eng. 2019, 11, 1–9.

4. He, S.; Du, Z.; Mei, J.; Han, L. Driving behavior inertia in urban tunnel diverging areas: New findings based on task-switching perspective. Transp. Res. Part F Traffic Psychol. Behav. 2025, 109, 1007–1023.

5. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 779–788.

6. Bilakeri, S.; Kotegar, K.A. A Review: Recent Advancements in Online Private Mode Multi-Object Tracking. IEEE Trans. Artif. Intell. 2025. Early Access.

7. Abdullah, A.; Amran, G.A.; Tahmid, S.A.; Alabrah, A.; AL-Bakhrani, A.A.; Ali, A. A deep-learning-based model for the detection of diseased tomato leaves. Agronomy 2024, 14, 1593.

8. He, Y.; Che, J.; Wu, J. Pedestrian multi-target tracking method based on YOLOv5 and person re-identification. Chin. J. Liq. Cryst. Disp. 2022, 37, 880–890.

9. Xu, H.; Chang, M.; Chen, Y.; Hao, D. Research on Influence of Vehicle Type on Traffic Flow Speed Under Target Detection. J. Comput. Eng. Appl. 2024, 60, 314–321. (In Chinese)

10. Costa, L.R.; Rauen, M.S.; Fronza, A.B. Car speed estimation based on image scale factor. Forensic Sci. Int. 2020, 310, 110229.

11. Tayeb, A.A.; Aldhaheri, R.W.; Hanif, M.S. Vehicle speed estimation using Gaussian mixture model and Kalman filter. Int. J. Comput. Commun. Control 2021, 16, 4211.

12. Ou, J.; Zeng, W.; Chen, S.; Lu, S. YOLO-DeepSORT Empowered UAV-based Traffic Behavior Analysis: A Novel Trajectory Extraction Tool for Traffic Moving Objects at Intersections. In Proceedings of the 2025 IEEE International Annual Conference on Complex Systems and Intelligent Science (CSIS-IAC), Shenzhen, China, 16–18 May 2025; pp. 1–6.

13. Kiran, M.J.; Roy, K. A video surveillance system for speed detection in vehicles. Int. J. Eng. Trends Technol. (IJETT) 2013, 4, 1437–1441.

14. Tang, J.; Wang, W. Vehicle trajectory extraction and integration from multi-direction video on urban intersection. Displays 2024, 85, 102834.

15. Kaif, A.; Santosh, T.S.; AshokKumar, C.; Kumar, C.J. YOLOv8-Powered Driver Monitoring: A Scalable and Efficient Approach for Real-Time Distraction Detection. In Proceedings of the 2025 International Conference on Inventive Computation Technologies (ICICT), Honolulu, HI, USA, 14–16 March 2025; pp. 338–345.

16. Bewley, A.; Ge, Z.; Ott, L.; Ramos, F.; Upcroft, B. Simple online and realtime tracking. In Proceedings of the 2016 IEEE International Conference on Image Processing (ICIP), Phoenix, AZ, USA, 25–28 September 2016; pp. 3464–3468.

17. Luo, Z.; Bi, Y.; Yang, X.; Li, Y.; Yu, S.; Wu, M.; Ye, Q. Enhanced YOLOv5s+ DeepSORT method for highway vehicle speed detection and multi-sensor verification. Front. Phys. 2024, 12, 1371320.

18. Sangsuwan, K.; Ekpanyapong, M. Video-based vehicle speed estimation using speed measurement metrics. IEEE Access 2024, 12, 4845–4858.

19. Shaqib, S.; Alo, A.P.; Ramit, S.S.; Rupak, A.U.H.; Khan, S.S.; Rahman, M.S. Vehicle Speed Detection System Utilizing YOLOv8: Enhancing Road Safety and Traffic Management for Metropolitan Areas. arXiv 2024, arXiv:2406.07710.

20. Arriffin, M.N.; Mostafa, S.A.; Khattak, U.F.; Jaber, M.M.; Baharum, Z.; Gusman, T. Vehicles Speed Estimation Model from Video Streams for Automatic Traffic Flow Analysis Systems. JOIV Int. J. Inform. Vis. 2023, 7, 295–300.

21. Cvijetić, A.; Djukanović, S.; Peruničić, A. Deep learning-based vehicle speed estimation using the YOLO detector and 1D-CNN. In Proceedings of the 2023 27th International Conference on Information Technology (IT), Žabljak, Montenegro, 15–18 February 2023; pp. 1–4.

22. Kim, J. Vehicle detection using deep learning technique in tunnel road environments. Symmetry 2020, 12, 2012.

23. Mosa, A.H.; Kyamakya, K.; Junghans, R.; Ali, M.; Al Machot, F.; Gutmann, M. Soft Radial Basis Cellular Neural Network (SRB-CNN) based robust low-cost truck detection using a single presence detection sensor. Transp. Res. Part C Emerg. Technol. 2016, 73, 105–127.

24. Geetha, A.S. Comparing YOLOv5 variants for vehicle detection: A performance analysis. arXiv 2024, arXiv:2408.12550.

25. Xu, J.; Lin, W.; Wang, X.; Shao, Y.-M. Acceleration and deceleration calibration of operating speed prediction models for two-lane mountain highways. J. Transp. Eng. Part A Syst. 2017, 143, 04017024.

26. Kim, D.-G.; Lee, C.; Park, B.-J. Use of digital tachograph data to provide traffic safety education and evaluate effects on bus driver behavior. Transp. Res. Rec. 2016, 2585, 77–84.

27. Xiao, J.; Liang, B.; Niu, J.a.; Qin, C. Study on the Glare Phenomenon and Time-Varying Characteristics of Luminance in the Access Zone of the East–West Oriented Tunnel. Appl. Sci. 2024, 14, 2147.

28. Abdulghani, A.; Lee, C. Differential variable speed limits to improve performance and safety of car-truck mixed traffic on freeways. J. Traffic Transp. Eng. (Engl. Ed.) 2022, 9, 1003–1016.

29. Kim, J.-R.; Yoo, H.-S.; Kwon, S.-W.; Cho, M.-Y. Integrated tunnel monitoring system using wireless automated data collection technology. In Proceedings of the 25th International Symposium on Automation and Robotics in Construction (ISARC2008), Vilnius, Lithuania, 26–29 June 2008; pp. 26–29.

30. Berberan, A.; Machado, M.; Batista, S. Automatic multi total station monitoring of a tunnel. Surv. Rev. 2007, 39, 203–211.

31. Thatikonda, M. An Enhanced Real-Time Object Detection of Helmets and License Plates Using a Lightweight YOLOv8 Deep Learning Model. Master’s Thesis, Wright State University, Dayton, OH, USA, 2024.

32. Fernández Llorca, D.; Hernández Martínez, A.; García Daza, I. Vision-based vehicle speed estimation: A survey. IET Intell. Transp. Syst. 2021, 15, 987–1005.

| No. | System-Detected Speed (km/h) | Radar-Measured Speed (km/h) | Absolute Difference (km/h) | ASD (km/h) | SDR (%) |
|---|---|---|---|---|---|
| 1 | 87.6 | 84.9 | 2.7 | 1.92 (Nos. 1–5) | 2.21 (Nos. 1–5) |
| 2 | 87.5 | 88.6 | 1.1 | | |
| 3 | 87.4 | 90.3 | 2.9 | | |
| 4 | 88.9 | 90.2 | 1.3 | | |
| 5 | 81.8 | 83.4 | 1.6 | | |
| 6 | 79.4 | 76.3 | 3.1 | 2.4 (Nos. 6–10) | 2.86 (Nos. 6–10) |
| 7 | 78.6 | 81.3 | 2.7 | | |
| 8 | 84.5 | 86.1 | 1.6 | | |
| 9 | 90.1 | 92.4 | 2.3 | | |
| 10 | 86.6 | 88.9 | 2.3 | | |
| … | … | … | … | … | … |
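
The per-group ASD and SDR values in the table above can be checked against the ten listed rows. The sketch below is a minimal illustration under stated assumptions, not the authors' implementation: it assumes ASD is the mean absolute difference between system and radar speeds, and SDR is the mean absolute difference relative to the radar reading. The function names `mean_abs_deviation` and `mean_relative_deviation` are hypothetical. Under these assumptions the ASD values are reproduced (1.92 and 2.4 km/h), and the SDR values agree with the tabulated ones to within about 0.02 percentage points.

```python
# Rows 1-10 of the table: (system-detected speed, radar-measured speed) in km/h.
rows = [
    (87.6, 84.9), (87.5, 88.6), (87.4, 90.3), (88.9, 90.2), (81.8, 83.4),
    (79.4, 76.3), (78.6, 81.3), (84.5, 86.1), (90.1, 92.4), (86.6, 88.9),
]


def mean_abs_deviation(pairs):
    """Mean absolute difference in km/h (assumed definition of ASD)."""
    return sum(abs(system - radar) for system, radar in pairs) / len(pairs)


def mean_relative_deviation(pairs):
    """Mean absolute difference as a percentage of the radar speed
    (assumed definition of SDR; the reference speed is an assumption)."""
    return 100 * sum(abs(system - radar) / radar for system, radar in pairs) / len(pairs)


# The table reports one ASD/SDR pair per block of five vehicles.
group_1, group_2 = rows[:5], rows[5:]

for label, group in (("Nos. 1-5", group_1), ("Nos. 6-10", group_2)):
    asd = mean_abs_deviation(group)
    sdr = mean_relative_deviation(group)
    print(f"{label}: ASD = {asd:.2f} km/h, SDR = {sdr:.2f}%")
```

Using the radar reading as the denominator is a choice made here for illustration; dividing by the system-detected speed instead shifts the percentages slightly.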

| Indicator | ASD (km/h) | DDVS | VST | SDR (%) |
|---|---|---|---|---|
| Result | 2.54 | 3.12 | 1.22 | 2.9 |

| Configuration | Detection mAP | ASD (km/h) | DDVS |
|---|---|---|---|
| Full system (YOLOv8s + DeepSORT + Perspective Transform + Sliding Window) | 98.8% | 2.54 | 3.12 |
| YOLOv8s + Simple IoU tracking | 98.8% | 5.87 | 7.45 |
| YOLOv5s + DeepSORT (baseline) | 94.6% | 3.21 | 4.08 |
| YOLOv8s + DeepSORT (without sliding window) | 98.8% | 4.12 | 5.34 |
| YOLOv8s + DeepSORT (without perspective transform) | 98.8% | 6.89 | 8.92 |
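
To make the ablation results easier to compare, the ASD of each reduced configuration can be expressed as a relative increase over the full system. The snippet below is a small illustrative calculation using only the ASD values listed in the table; the helper name `relative_increase` is hypothetical.

```python
# ASD values (km/h) copied from the ablation table.
FULL_SYSTEM_ASD = 2.54
ablation_asd = {
    "YOLOv8s + Simple IoU tracking": 5.87,
    "YOLOv5s + DeepSORT (baseline)": 3.21,
    "YOLOv8s + DeepSORT (without sliding window)": 4.12,
    "YOLOv8s + DeepSORT (without perspective transform)": 6.89,
}


def relative_increase(value, reference):
    """Percentage increase of `value` over `reference`."""
    return 100 * (value - reference) / reference


# List configurations from the largest ASD degradation to the smallest.
for name, asd in sorted(ablation_asd.items(), key=lambda item: -item[1]):
    print(f"{name}: ASD {asd:.2f} km/h "
          f"(+{relative_increase(asd, FULL_SYSTEM_ASD):.0f}% vs. full system)")
```

On these figures, dropping the perspective transform produces the largest ASD increase, followed by replacing DeepSORT with simple IoU tracking.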

| Method | Detection Algorithm | Tracking Algorithm | Test Environment | Speed Accuracy Metrics | Year |
|---|---|---|---|---|---|
| Enhanced YOLOv5s + DeepSORT [17] | YOLOv5s + Swin Transformer | DeepSORT | Highway/Tunnel | Absolute error: 1–8 km/h, RMSE: 2.06–9.28 km/h | 2024 |
| YOLOv3 + DeepSORT [18] | YOLOv3 | DeepSORT + Optical Flow | Road traffic | MAE: 3.38 km/h, RMSE: 4.69 km/h | 2024 |
| YOLOv8 Speed Detection [19] | YOLOv8 | Tracking algorithm | Urban traffic | MAE: 3.5 km/h, RMSE: 4.22 km/h | 2024 |
| Video Stream Estimation [20] | Feature-based detection | Video tracking | Automatic traffic flow | Speed estimation error: 20.86%, average accuracy: 79.14% | 2023 |
| YOLO + 1D-CNN [21] | YOLO detector | 1D-CNN with CBBA features | Road traffic | Average speed error: 2.76 km/h | 2023 |
| Proposed method (YOLOv8s + DeepSORT) | YOLOv8s | DeepSORT | Expressway tunnel | ASD: 2.54 km/h, DDVS: 3.12, VST: 1.22, SDR: 2.9% | 2025 |

| Non-Parametric Test | Grouping | Statistic | p |
|---|---|---|---|
| Mann–Whitney U | Transition (Car vs. Truck) | 5291 | <0.01 |
| Mann–Whitney U | Middle (Car vs. Truck) | 4286 | <0.01 |
| Mann–Whitney U | Exit (Car vs. Truck) | 4848 | <0.01 |
| Kruskal–Wallis | Car (Transition vs. Middle vs. Exit) | 111.8581 | <0.01 |
| Kruskal–Wallis | Truck (Transition vs. Middle vs. Exit) | 52.75877 | <0.01 |
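
For readers unfamiliar with the Mann–Whitney U statistic reported in the table above, a minimal pure-Python sketch follows. The speed samples are hypothetical placeholders, not the study's data, and the function name `mann_whitney_u` is illustrative. The table does not state which of the two U values is reported or which software was used, so the sketch returns both and computes no p-value.

```python
def mann_whitney_u(sample_a, sample_b):
    """Return (U_a, U_b) for two independent samples, using average ranks for ties."""
    # Pool the observations, remembering which sample each one came from.
    pooled = sorted([(v, 0) for v in sample_a] + [(v, 1) for v in sample_b])
    ranks = [0.0] * len(pooled)

    # Assign 1-based ranks; tied values share the average of their positions.
    i = 0
    while i < len(pooled):
        j = i
        while j + 1 < len(pooled) and pooled[j + 1][0] == pooled[i][0]:
            j += 1
        average_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[k] = average_rank
        i = j + 1

    n_a, n_b = len(sample_a), len(sample_b)
    rank_sum_a = sum(r for r, (_, group) in zip(ranks, pooled) if group == 0)
    u_a = rank_sum_a - n_a * (n_a + 1) / 2
    u_b = n_a * n_b - u_a
    return u_a, u_b


# Hypothetical speeds in km/h, for illustration only.
car_speeds = [80, 85, 90]
truck_speeds = [60, 65, 70, 85]
u_car, u_truck = mann_whitney_u(car_speeds, truck_speeds)
print(f"U(car) = {u_car}, U(truck) = {u_truck}")
```

The two values always sum to the product of the sample sizes, which is a quick sanity check when comparing against statistical software output.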

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Mu, H.; Wang, X.; Tian, J.; Yang, Y. Research on a Real-Time Tunnel Vehicle Speed Detection System Based on YOLOv8 and DeepSORT Algorithms. Intell. Infrastruct. Constr. 2025, 1, 10. https://doi.org/10.3390/iic1030010